Abstract

We have developed a real-time video analytics system for measuring colonoscopy performance. The system estimates the total visible surface area and gives the endoscopist real-time feedback on visible surface area, video clarity, preparation, and distention conditions. Our lab experiments show that the correlations between the computer and an experienced colonoscopist are 76% in visible surface area estimation, 83.9% in clarity evaluation, 90% in preparation condition assessment, and 67.9% in distention condition evaluation. Our algorithm appears to respond faster to dynamic scope movements than a 3D scope positioning device. In addition, a clinical experiment shows that the system detected an unexpected scope malfunction event in real time.

1 Introduction

A colonoscopy is a therapeutic screening method for detecting precancerous polyps, colon cancers, and many other diseases. It involves a manually controlled endoscopic camera connected to a computer and a display. Currently, the procedures are performed based on visual observation and experience. A field survey found that the miss rate for polyps present in the patient can reach as high as 28% [1, 2]. The scope moving speed, operator fatigue, and vigilance each play a significant role in the detection of polyps. In addition, the clarity of the colonoscopy video, the patient’s preparation, and the colon distention status are measurable factors. The most critical factor, however, is where the scope is aimed. A colon can be simplified as a cylinder that has four quadrants, and a well-trained colonoscopist makes sure that all quadrants are thoroughly inspected. The visible surface area is therefore an essential measurement for assessing whether the operator has missed any quadrants during screening, which normally takes place during scope withdrawal. To detect polyps, the scope first needs to be aimed in the right direction. This matters for future AI systems as well: no AI system can reliably detect polyps from the video unless the scope sweeps through all surface areas. Furthermore, it is beneficial to evaluate the performance of the colonoscopy procedure in real time, while the colonoscope is still inside the patient’s body, so that the doctor can go back to examine the missed area.

For over a decade, researchers have developed quantitative methods to evaluate the quality of exam (QoE) for colonoscopy procedures [3,4,5,6,7], including measurements of the scope moving speed [3], scope rotational patterns, video clarity, and preparation conditions. However, visible surface measurement and comprehensive data visualization are still missing from most of the literature.

Recently, the author’s lab has developed a computer-assisted system that measures the quality of colonoscopy procedure performance from live colonoscopy videos [8, 9]. The system provides an objective assessment of the colonoscopy and can be integrated into existing colonoscopy systems without modification. It also provides real-time feedback so that operators can reassess areas of potentially decreased visibility. The system can be used for quality assurance, training, and improvement of the polyp detection rate. In this study, we focus on laboratory experiments that compare the measurements of an experienced colonoscopist with those of the computer. We also present case studies in the clinical environment to assess the accuracy of the proposed method, including a comparison of the computer’s result with Olympus’s 3D scope position sensor, ScopeGuide™, and the system’s response to an anomalous scope event.

2 Overview of the System

In this study, we divide the wall of the colon into four quadrants: top left (TL), top right (TR), bottom left (BL), and bottom right (BR) (see Fig. 1). The system calculates the measurements per section of a given length, currently 5 cm. The right side of Fig. 1 shows the display of the measurement results. First, we measure the camera orientation by detecting the vanishing point of the scope view to determine which quadrant the scope is aimed at. Then, we calculate the coverage percentage for the current section, update the accumulated measurements for the section, and repeat until the colon exit is detected.
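As a rough illustration of the quadrant lookup, the sketch below reports which quarter of the frame contains a detected vanishing point. The function name `image_quadrant` and the coordinate convention (origin at the top-left pixel) are assumptions made for this example. The text does not specify how the vanishing point location translates into the wall quadrant in view, so this covers only the geometric lookup, not the orientation algorithm itself.

```python
def image_quadrant(x, y, width, height):
    """Return 'TL', 'TR', 'BL', or 'BR' for a point in image coordinates.

    Assumes the origin is the top-left pixel, with x growing rightward
    and y growing downward (the usual image convention).
    """
    if not (0 <= x < width and 0 <= y < height):
        raise ValueError("point lies outside the frame")
    vertical = "T" if y < height / 2 else "B"
    horizontal = "L" if x < width / 2 else "R"
    return vertical + horizontal
```

For a 640 × 480 frame, a vanishing point at (600, 400) falls in the bottom-right quarter of the image.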

The scope travel distance can be obtained over time with optical flow and epipolar plane projection. Based on the estimation of the scope’s orientation and travel distance, we can calculate the total percentage of the examined surface. We visualize the surface in 4 × n cells, where the 4 rows represent the 4 quadrants and the n columns represent n segments of the colon wall, each a ring 5 or 10 cm wide. The number of columns varies, depending on the length of the colon. Each cell has a counter that records the number of frames in which that quadrant (e.g. top left) of the segment was visible in the video. For example, if we set the minimal number of frames for full coverage of any 10 cm segment to 500, then a count of 250 would amount to 50% coverage. We then map the percentage value to a color heat map. For example, if the coverage is zero, the cell color is black. If the coverage is 100%, the color is white or green (e.g. with an intensity level of 255).
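The counting scheme described above can be sketched as follows. This is a minimal illustration, not the system's implementation: the class name `CoverageGrid`, its methods, and the linear mapping from coverage to an 8-bit intensity are assumptions made for the example. Only the 500-frame threshold and the 0 to 255 intensity range come from the text.

```python
QUADRANTS = ("TL", "TR", "BL", "BR")


class CoverageGrid:
    """4 x n grid of frame counters, one column per colon segment."""

    def __init__(self, frames_for_full_coverage=500):
        # Frame count that is treated as 100% coverage of a cell.
        self.frames_for_full_coverage = frames_for_full_coverage
        self.columns = []  # one dict of counters per segment

    def record_frame(self, segment_index, quadrant):
        """Count one frame in which `quadrant` of the segment was visible."""
        # Grow the grid on demand, since the colon length is not known upfront.
        while len(self.columns) <= segment_index:
            self.columns.append({q: 0 for q in QUADRANTS})
        self.columns[segment_index][quadrant] += 1

    def coverage(self, segment_index, quadrant):
        """Coverage of a cell as a fraction between 0.0 and 1.0."""
        count = self.columns[segment_index][quadrant]
        return min(1.0, count / self.frames_for_full_coverage)

    def intensity(self, segment_index, quadrant):
        """Heat-map intensity: 0 for no coverage, 255 for full coverage."""
        return int(255 * self.coverage(segment_index, quadrant))
```

With the threshold set to 500, recording 250 frames for the top-left cell of a segment gives a coverage of 0.5, matching the 50% example in the text.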

The visualization panel also includes scores for clarity, preparation conditions, and distention level. Clarity is a measurement of the image quality of the video, which measures blurriness with a Gaussian function. Preparation is a measurement of the presence of food, colored drink, or stool, which can be recognized by a color vision model. Distention is a measurement of the expansion of the diameter of the colon when it is filled with pumped air, which is described by a shape model. These measurements can be mapped into additional grid cells below the surface map, with the same scope location cursor and columns. The measurement maps can be updated in real time and exported to an XML log file after the procedure.
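The text does not describe the layout of the XML log, so the sketch below uses a purely hypothetical schema (a `procedure` root with one `segment` element per column) to show how per-segment scores might be serialized with Python's standard library. The element names and the function `measurements_to_xml` are assumptions made for this example.

```python
import xml.etree.ElementTree as ET


def measurements_to_xml(segments):
    """Serialize a list of per-segment score dicts to an XML string.

    Hypothetical schema: <procedure><segment index="0"><clarity>..</clarity>
    ...</segment></procedure>. Scores are written with one decimal place.
    """
    root = ET.Element("procedure")
    for index, scores in enumerate(segments):
        segment = ET.SubElement(root, "segment", index=str(index))
        for name, value in scores.items():
            ET.SubElement(segment, name).text = f"{value:.1f}"
    return ET.tostring(root, encoding="unicode")
```

A call such as `measurements_to_xml([{"clarity": 90.0, "preparation": 100.0, "distention": 60.0}])` produces one `segment` element with three child scores. The values here are illustrative only.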

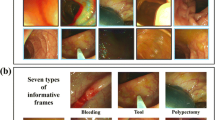

In this study, we collected over 60 videos for testing. Twenty-two videos were prepared for surface area evaluation, including videos with good, suboptimal, and bad surface area coverage. For distention, we prepared 17 videos, including good distention (i.e. a fully opened colon) and bad distention (i.e. a closed colon). To evaluate the preparation of the colon, we prepared 15 videos, some with stool and some without. For clarity, 8 videos were used in our evaluation.

3 Visible Surface Area Measurement

In the surface area evaluation, the software calculates the visibility results for the four quadrants every 5 cm. Figures 2 and 3 show the overall average surface area evaluation of the colonoscopist versus that of the computer. Here, we found a correlation of around 76%. When we asked the colonoscopist to repeat the evaluation, we found that the computer’s results appear to be more consistent than the human’s.
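The text does not state which correlation measure was used. Assuming it is Pearson's correlation coefficient between the colonoscopist's scores and the computer's scores for the same videos, the computation can be sketched as below. The function name `pearson` is chosen for this example.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations, and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)
```

Under this assumption, a reported correlation of 76% would correspond to a coefficient of about 0.76 computed over the paired per-video scores.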

4 Clarity Evaluation

Clarity is evaluated by comparing the video quality scores given by the doctor with those given by the computer. Eight videos were used to evaluate the software on clarity, and the correlation between the doctor and the computer was 83.9%.

5 Preparation Condition Evaluation

In this experiment, the preparation condition refers to the detection of stool in a given frame. The videos were scored from 0% to 100%. We found a correlation of around 90%. The computer’s results are consistent with the expert’s assessment (Fig. 4).

6 Distention Evaluation

The distention calculation is based upon the shape of the cross-section of folds in a given part of the colon. The correlation is 67.9%. The results show that there is room for improvement in the shape classification algorithm, especially in some anomalous cases. A prime example is the “bad” video segment, where the doctor’s score is only 1 but the computer’s score is 6 (Fig. 5).

7 Comparison with 3D Position Sensor

We compared the computer’s distance estimation result with the Olympus 3D position sensor ScopeGuide™ in real time and recorded the data from both systems (Fig. 6).

We found that our system has a much faster tracking response time (30 frames per second) than the ScopeGuide™ (less than 1 frame per second). Therefore, our system can reveal more dynamic information than the existing equipment. In addition, our system can provide the scope orientation at the quadrant level, but the ScopeGuide™ cannot.

8 Performance Evaluation in Clinical Environment

We tested the system at an endoscopy laboratory. Figure 7 shows a screenshot of the system under normal operating conditions. The visualization shows that the colonoscopy procedure was going well and most scores were perfect. Figure 8 shows an anomalous situation in which the scope cable broke down and the scope could no longer tilt its head. The visible surface area measurement shows a high percentage of missed area.

This kind of incident happens once a year at most, so encountering one during the test was a fortunate chance to demonstrate the effectiveness of our system.

9 Conclusions

We have developed a real-time video analytics system for measuring colonoscopy performance. The quality of exam evaluation includes the estimation of total visible surface area per segment, real-time feedback to the endoscopist on the areas visualized, and a color-coded display that shows exam quality in real time for clarity, preparation conditions, and distention conditions. Our lab experiments show that the correlations between the computer and the experienced colonoscopist are 76% in visible surface area estimation, 83.9% in clarity evaluation, 90% in preparation condition assessment, and 67.9% in distention condition evaluation. Our algorithm appears to respond faster to dynamic scope movements than a 3D scope positioning device. In addition, the clinical experiment shows that the system detected an unexpected scope malfunction event in real time.

References

1. Leufkens, A.M., van Oijen, M.G., Vleggaar, F.P., Siersema, P.D.: Factors influencing the miss rate of polyps in a back to back colonoscopy study. Endoscopy 44, 470–475 (2012)

2. Rex, D.K., et al.: Colonoscopic miss rates of adenomas determined by back to back colonoscopies. Gastroenterology 112, 24–28 (1997)

3. US Patent 7,894,648: Colonoscopy video processing for quality metrics determination, 22 February 2011

4. Oh, J.H., Rajbal, M.A., Muthukudage, J.K., Tavanapong, W., Wong, J., de Groen, P.C.: Real-time phase boundary detection in colonoscopy videos. In: Proceedings of 6th International Symposium on Image and Signal Processing and Analysis (2009)

5. Rex, D.K.: Looking over your shoulder during colonoscopy: potential roles for video recording colonoscopy withdrawals. Gastrointest. Endosc. 75(1), 134–137 (2012)

6. Oh, J.H., Hwang, S., Cao, Y., Tavanapong, W., Liu, D., Wong, J., de Groen, P.C.: Measuring objective quality of colonoscopy. IEEE Trans. Biomed. Eng. 56(9), 2190–2196 (2009)

7. Kaminski, et al.: Quality indicators for colonoscopy and the risk of interval cancer. New Engl. J. Med. 362, 1795–1803 (2010)

8. Cai, Y., Thakkar, S.: US Provisional Patent Application, 62/919,942: Real-Time Measurement of Visible Surface Area from Colonoscopy Video, 5 April 2019

9. Cai, Y.: Real-time measurement of visible surface area from an endoscopic video. In: Proceedings of International Conference on Computational Science. Springer (2020)

Acknowledgements

The authors would like to thank Dawit Weldeghergish for data analysis and Dr. Shyam Thakkar for data collection and the quality of exam evaluation. The project is sponsored by Innovation Works, CMU, and the DHTI program.

Copyright information

© 2020 The Editor(s) (if applicable) and The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Cai, Y. (2020). Real-Time Video Analytics for Measuring Colonoscopy Performance. In: Kalra, J., Lightner, N. (eds) Advances in Human Factors and Ergonomics in Healthcare and Medical Devices. AHFE 2020. Advances in Intelligent Systems and Computing, vol 1205. Springer, Cham. https://doi.org/10.1007/978-3-030-50838-8_22

DOI: https://doi.org/10.1007/978-3-030-50838-8_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-50837-1

Online ISBN: 978-3-030-50838-8

eBook Packages: Engineering, Engineering (R0)