Abstract

This presentation introduces the theory leading to solution methods for differential algebraic equations (DAEs) under interval uncertainty, in which the uncertainty is in the initial conditions of the differential equation and/or in the entries of the coefficients of the differential equation and the algebraic restrictions. While we restrict these uncertainties to be intervals, other types of uncertainty, such as generalized uncertainties like fuzzy intervals, can be handled in a similar manner, albeit with more complex analyses. Linear constant coefficient DAEs, and then interval linear constant coefficient problems, will illustrate both the theoretical challenges and the solution approaches. The way the interval uncertainty is handled is novel and serves as a basis for more general uncertainty analysis.


1 Introduction

This presentation introduces interval differential-algebraic equations. To our knowledge, the publication closest to our theoretical approach is [11], in which an interval arithmetic approach, which the authors call ValEncIA, is used to analyze interval DAEs. What is new here is that we solve the interval DAE problem using the constraint interval representation (see [6, 7]) to encode all interval initial conditions and/or interval coefficients. It is shown that this representation has theoretical advantages not afforded by the usual interval representation. For this presentation, the coefficients and initial values are constant, to keep the article relatively short. However, the approach extends readily to variable coefficients and/or initial values, and this is pointed out where relevant. Since we transform (variable) interval coefficients into (variable) interval initial values, the extension is, in theory, straightforward, albeit more complex.
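The advantage claimed for the constraint interval representation can be seen in a small sketch, assuming the representation \([a,b]=a+\lambda (b-a)\), \(0\le \lambda \le 1\), that is used later in the text. The helper names `standard_sub`, `ci` and `ci_range` are hypothetical, and the grid search over \(\lambda \) is only an illustration, not a validated method. In standard interval arithmetic, [1, 2] − [1, 2] = [−1, 1], because the two occurrences are treated as independent; in the constraint representation both occurrences share one parameter \(\lambda \), so the difference is exactly 0.

```python
from itertools import product


def standard_sub(x, y):
    """Usual interval subtraction: [a, b] - [c, d] = [a - d, b - c]."""
    return (x[0] - y[1], x[1] - y[0])


def ci(interval, lam):
    """Constraint interval representation: a + lam * (b - a), 0 <= lam <= 1."""
    a, b = interval
    return a + lam * (b - a)


def ci_range(expr, n_params, n_grid=11):
    """Range of expr over [0, 1]^n_params, estimated on a uniform grid.

    This is an inner estimate of the range, not a validated enclosure."""
    grid = [k / (n_grid - 1) for k in range(n_grid)]
    values = [expr(lams) for lams in product(grid, repeat=n_params)]
    return (min(values), max(values))


x = (1.0, 2.0)
# Standard arithmetic treats the two occurrences of x as independent.
print(standard_sub(x, x))  # prints (-1.0, 1.0)
# Constraint intervals: the same parameter lam is used for both occurrences.
print(ci_range(lambda l: ci(x, l[0]) - ci(x, l[0]), 1))  # prints (0.0, 0.0)
# Two genuinely independent intervals need two separate parameters.
print(ci_range(lambda l: ci(x, l[0]) - ci(x, l[1]), 2))  # prints (-1.0, 1.0)
```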

The constraint interval representation leads to useful numerical methods, as will be demonstrated. Space limitations prevent the full development of the methods here; only the initial steps are indicated. What is presented here does not deal directly with what are called validated methods (see [9, 10], for example). However, when the processes developed here are carried out in a system that accounts for numerical errors, using outward-directed rounding as in INTLAB or C-XSC, for example, the results will be validated. We restrict ourselves to what are called semi-explicit DAEs (see below); that is, all problems are assumed to have been transformed to the semi-explicit form. However, our approach applies more broadly.

The general form of the semi-explicit DAE is
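For reference, a commonly used statement of the semi-explicit form is shown below. The symbols (differential variable y, algebraic variable z, functions f and g) follow the standard literature (e.g., [1, 2]) and are assumptions; they may differ from the authors' notation in (1), (2).

```latex
\begin{aligned}
y^{\prime}(t) &= f\big(y(t), z(t), t\big), \qquad y(t_0) = y_0, \\
0 &= g\big(y(t), z(t), t\big).
\end{aligned}
```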

While the focus is on incorporating (constant) interval uncertainties in (1) and (2), generalized uncertainties as developed in [8] can be analyzed in a similar fashion. Note that a variable (or constant) interval coefficient or initial value is a type of generalized uncertainty and fits perfectly in the theory that is presented.

2 Definition and Properties

One can also think of the implicit ODE with an algebraic constraint,

as a semi-explicit DAE as follows. Let

This will increase the number of variables. However, this will not be the approach for this presentation. Our general form will assume that the DAE is in the semi-explicit form (1), (2).

Given an implicit differential equation (3), when \(\frac{\partial F}{\partial y^{\prime }}\) is not invertible, that is, when we do not have, at least theoretically, an explicit differential equation in the form (1), (2), we can differentiate (4) to obtain

If \(\frac{\partial G}{\partial y}(y,t)\) is non-singular, then (5) can be solved explicitly for \(y^{\prime }\) as follows.

and (6), (7), and (8) are in the form (1), (2); the DAE is then called an index-1 DAE.
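Spelled out, and assuming (as the surrounding text suggests) that (4) is the constraint \(G(y,t)=0\), the single differentiation step and its explicit solution read as follows; nonsingularity of \(\frac{\partial G}{\partial y}\) is exactly what makes the last step possible.

```latex
\frac{d}{dt}G\big(y(t),t\big)
  = \frac{\partial G}{\partial y}\big(y(t),t\big)\,y^{\prime}(t)
  + \frac{\partial G}{\partial t}\big(y(t),t\big) = 0
\quad\Longrightarrow\quad
y^{\prime}(t) = -\left[\frac{\partial G}{\partial y}\big(y(t),t\big)\right]^{-1}
  \frac{\partial G}{\partial t}\big(y(t),t\big).
```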

If this is not the case, that is, if \(\frac{\partial G}{\partial y}(y(t),t)\) is singular, then we have the form

which can be written as

and we again take the partial derivative with respect to \(y^{\prime }\) and test it for singularity. If this partial derivative of H is nonsingular, so that H can be solved for \(y^{\prime }\), then we have an index-2 DAE. This process can be continued, in principle, until (hopefully) \(y^{\prime }\) is found as an explicit function of y and t.
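The singularity test in this procedure can be sketched numerically at a single point (y, t). This is a minimal illustration under assumptions: \(\frac{\partial G}{\partial y}\) is approximated by central differences, a pivot below a relative tolerance in Gaussian elimination is taken to mean "singular", and the helper names and the two sample constraints `G1`, `G2` are hypothetical. A floating-point check like this is only indicative; it does not determine the index rigorously.

```python
def jacobian_y(G, y, t, h=1e-6):
    """Central-difference approximation of dG/dy at (y, t).

    G maps (list y, float t) to a list; the result J has J[i][j] ~ dG_i/dy_j."""
    n = len(y)
    cols = []
    for j in range(n):
        yp, ym = list(y), list(y)
        yp[j] += h
        ym[j] -= h
        gp, gm = G(yp, t), G(ym, t)
        cols.append([(gp[i] - gm[i]) / (2 * h) for i in range(len(gp))])
    # Transpose the list of columns into a list of rows.
    return [[cols[j][i] for j in range(n)] for i in range(len(cols[0]))]


def is_numerically_singular(M, tol=1e-6):
    """Gaussian elimination with partial pivoting on a square matrix M.

    Returns True if some pivot is below tol times the largest |entry|."""
    A = [row[:] for row in M]
    n = len(A)
    scale = max(abs(v) for row in A for v in row) or 1.0
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(A[i][k]))
        if abs(A[p][k]) < tol * scale:
            return True
        A[k], A[p] = A[p], A[k]
        for i in range(k + 1, n):
            m = A[i][k] / A[k][k]
            for j in range(k, n):
                A[i][j] -= m * A[k][j]
    return False


# Hypothetical constraints G(y, t) = 0 with y = (y1, y2).
def G1(y, t):
    return [y[0] + y[1] - t, y[0] - y[1]]


def G2(y, t):
    return [y[0] + y[1] - t, 2 * y[0] + 2 * y[1] - 2 * t]


for name, G in (("G1", G1), ("G2", G2)):
    J = jacobian_y(G, [0.3, 0.7], 1.0)
    print(name, "dG/dy singular:", is_numerically_singular(J))
```

Here `G1` has the nonsingular Jacobian [[1, 1], [1, −1]], while the second component of `G2` is twice the first, so its Jacobian is singular and a further differentiation would be needed.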

DAEs arise in many applications. We present two types of problems in which DAEs arise, the simple pendulum and unconstrained optimal control, that illustrate the main issues associated with DAEs. We then show interval uncertainty in DAEs using some of these examples. Our solution methods for interval DAEs are based on what is developed for these examples.

3 Linear Constant Coefficient DAEs

Linear constant coefficient DAEs arise naturally in electrical engineering circuit problems as well as in some control theory problems. A portion of this theory, sufficient to understand our solution methods, is presented next. The linear constant coefficient DAE is defined as

where A and B are \(m\times m\) matrices, x(t) is an \(m\times 1\) vector “state function”, f is an \(m\times 1\) vector function, and

so that

Note that this is indeed a semi-explicit DAE, where the differential part is

where the matrix A is assumed to be invertible, and the algebraic part is

Example 1

Consider the linear constant coefficient DAE \(Ax^{\prime }(t)+Bx(t)=f\) where

The ODE part is

and the algebraic part is

Integrating the ODE part (10), we get

Solving this together with the algebraic part simultaneously, we get

Example 2

Consider the linear constant coefficient DAE

The ODE part is

and the algebraic constraint is

Putting (16) into (15) and solving for \(x_{1}^{\prime }\), we have

Remark 1

1) When, for the \(m\)-variable problem, the algebraic constraint can be substituted into the differential equation and the resulting linear differential equation is integrated, there are m equations in m unknowns. Interval uncertainty enters when the matrices A and/or B and/or the initial condition \(y_{0}\) are intervals, \(A\in [A],B\in [B],y_{0}\in [y_{0}].\) This is illustrated in Sect. 5.

4 Illustrative Examples

Two DAE examples, beginning with the simple pendulum, are presented next.

4.1 The Simple Pendulum

Consider the following problem (see [4]) arising from a simple pendulum,

where g is the acceleration due to gravity, \(\gamma \) is the unknown tension in the string, and \(L=1\) is the length of the pendulum string. In this example, we consider the unknown tension to be an interval \(\left[ \gamma \right] =\left[ \underline{\gamma },\overline{\gamma }\right] \) to model the uncertainty in the value of the tension. Moreover, we assume that the initial values are also intervals. We restate (17) as a first-order system, keeping the mechanical constraint and omitting the energy constraint, as follows:

Note that the uncertain parameter \(\gamma \) is treated as part of the differential equation: it is a constant state (\(u_{5}\)) whose initial condition is an interval. This is, in general, the way constant interval (generalized) uncertainty in the parameters is handled. If the coefficient were instead variable, then \(u_{5}^{\prime }(t)\ne 0\), and \(u_{5}\) would satisfy a differential equation of its own, for example,

where h(t) defines how the coefficient varies with respect to time. Equation (18) is a standard semi-explicit real DAE, in this case with interval initial conditions. How to solve such a system is presented in Sect. 5.
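The device described above, carrying the uncertain coefficient as an extra state with zero derivative, can be sketched as follows. This is only an illustration under assumptions: the pendulum is taken in the standard Cartesian form \(x^{\prime \prime }=-\gamma x\), \(y^{\prime \prime }=-\gamma y-g\) with \(L=1\) (the paper's systems (17), (18) may differ in detail), the numerical values and function names are hypothetical, the algebraic constraint \(x^{2}+y^{2}=1\) is only monitored rather than enforced, and sampling the constraint parameter at a few points gives an inner estimate, not a validated enclosure.

```python
GRAVITY = 9.81  # acceleration due to gravity (assumed value)


def pendulum_rhs(t, u, h=None):
    """Assumed augmented first-order pendulum system with L = 1.

    u = [x, x', y, y', gamma]. The uncertain tension gamma is carried as a
    fifth state with gamma' = 0, or gamma' = h(t) if a rate function h is given.
    The constraint x**2 + y**2 = 1 is NOT enforced here."""
    x, vx, y, vy, gamma = u
    dgamma = 0.0 if h is None else h(t)
    return [vx, -gamma * x, vy, -gamma * y - GRAVITY, dgamma]


def rk4(rhs, u0, t0, t1, steps=1000):
    """Classical fixed-step fourth-order Runge-Kutta (no error control, not validated)."""
    dt = (t1 - t0) / steps
    t, u = t0, list(u0)
    for _ in range(steps):
        k1 = rhs(t, u)
        k2 = rhs(t + dt / 2, [ui + dt / 2 * ki for ui, ki in zip(u, k1)])
        k3 = rhs(t + dt / 2, [ui + dt / 2 * ki for ui, ki in zip(u, k2)])
        k4 = rhs(t + dt, [ui + dt * ki for ui, ki in zip(u, k3)])
        u = [ui + dt / 6 * (p + 2 * q + 2 * r + s)
             for ui, p, q, r, s in zip(u, k1, k2, k3, k4)]
        t += dt
    return u


gamma_lo, gamma_hi = 9.7, 9.9    # hypothetical tension interval [gamma]
state0 = [0.0, 0.0, -1.0, 0.0]   # hypothetical (x, x', y, y') at t = 0
for lam in (0.0, 0.5, 1.0):      # gamma = gamma_lo + lam * (gamma_hi - gamma_lo)
    gamma0 = gamma_lo + lam * (gamma_hi - gamma_lo)
    u_end = rk4(pendulum_rhs, state0 + [gamma0], 0.0, 1.0)
    residual = u_end[0] ** 2 + u_end[2] ** 2 - 1.0
    print(f"gamma={gamma0:.2f}  y(1)={u_end[2]:.5f}  x^2+y^2-1={residual:.2e}")
```

The printed residual of \(x^{2}+y^{2}-1\) indicates how far each sampled trajectory departs from the mechanical constraint, since this sketch does not enforce it. Passing `h` to `pendulum_rhs` covers the variable-coefficient case \(u_{5}^{\prime }=h(t)\).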

4.2 Unconstrained Optimal Control

We next present the transformation of unconstrained optimal control problems into DAEs. The general unconstrained optimal control problem has the following form.

When \(\varOmega \) is the set of all square integrable functions, then the problem becomes unconstrained. In this case we denote the constraint set \(\varOmega _{0}.\) The Pontryagin Maximization Principle utilizes the Hamiltonian function, which is defined for (19) as

The function \(\lambda (t)\) is called the co-state function and is a row vector \((1\times n).\) The co-state function can be thought of as the dynamic optimization analogue of the Lagrange multiplier and is defined by the following differential equation:

Under suitable conditions (see [5]), the Pontryagin Maximization Principle (PMP) states that if v(t) is an optimal control, that is, \(J\left[ v\right] \ge J\left[ u\right] ,\forall u\in \varOmega ,\) then v maximizes the Hamiltonian with respect to the control, which, in the case of unconstrained optimal control, means that

where (23) is the algebraic constraint. Thus, the unconstrained optimal control problem (19), together with the differential equation of the co-state (22) and the PMP condition (23), results in a boundary-value DAE as follows:
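Assuming that (19) maximizes \(J[u]=\int _{t_0}^{T}f_0(x,u,t)\,dt\) subject to \(x^{\prime }=f(x,u,t)\), \(x(t_0)=x_0\), on a fixed horizon with free terminal state (a common setting; the symbols \(f_0\), f, T and the terminal condition \(\lambda (T)=0\) are illustrative and may differ from the authors'), the Hamiltonian is \(H=f_0+\lambda f\) and the boundary-value DAE reads:

```latex
\begin{aligned}
x^{\prime}(t) &= f\big(x, u, t\big), & x(t_0) &= x_0,\\
\lambda^{\prime}(t) &= -\frac{\partial H}{\partial x}\big(x, u, \lambda, t\big), & \lambda(T) &= 0,\\
0 &= \frac{\partial H}{\partial u}\big(x, u, \lambda, t\big).
\end{aligned}
```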

One example that is well studied is the linear quadratic optimal control problem (LQP), which is defined for the unconstrained optimal control problem as

where \(A_{n\times n}\) is an \(n\times n\) real matrix, \(B_{n\times m}\) is an \(n\times m\) real matrix, \(Q_{n\times n}\) is a real symmetric positive definite matrix, and \(R_{m\times m}\) is a real invertible positive semi-definite matrix. For the LQP

remembering that \(\lambda \) is a row vector. The optimal control is obtained by solving

which, when put into the differential equations, yields

This results in the system
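Under an assumed sign convention (not stated in the text above) in which the LQP maximizes \(J[u]=-\frac{1}{2}\int (x^{T}Qx+u^{T}Ru)\,dt\) subject to \(x^{\prime }=Ax+Bu\), so that \(H=-\frac{1}{2}(x^{T}Qx+u^{T}Ru)+\lambda (Ax+Bu)\), and with Q and R symmetric, the optimality condition, the co-state equation and the resulting linear system specialize as follows; other conventions change the signs of the blocks.

```latex
\begin{aligned}
\frac{\partial H}{\partial u} &= -u^{T}R + \lambda B = 0
  \quad\Longrightarrow\quad u = R^{-1}B^{T}\lambda^{T},\\
\lambda^{\prime} &= -\frac{\partial H}{\partial x} = x^{T}Q - \lambda A,\\
\begin{bmatrix} x \\ \lambda^{T} \end{bmatrix}^{\prime}
  &= \begin{bmatrix} A & BR^{-1}B^{T} \\ Q & -A^{T} \end{bmatrix}
     \begin{bmatrix} x \\ \lambda^{T} \end{bmatrix}.
\end{aligned}
```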

The next section will consider DAEs with interval uncertainty in the coefficients and in the initial conditions.

5 Interval Uncertainty in DAEs

This section develops an interval solution method. For nonlinear equations, the interval problem treats interval coefficients as additional variables whose derivative is zero and whose initial condition is the respective interval. For linear problems, it is sometimes advantageous to deal with the interval coefficients directly, as illustrated next.

5.1 An Interval Linear Constant Coefficient DAE

Given the linear constant coefficient DAE \(Ax^{\prime }(t)+Bx(t)=f\), suppose that the coefficient matrices are interval matrices \(\left[ A\right] \) and \(\left[ B\right] \). That is,

Example 3

Consider Example 1, except we have interval entries

where \(\left[ A_{1}\right] =\left[ A_{2}\right] =\left[ B_{4}\right] =\left[ 0.9,1.1\right] ,\left[ A_{3}\right] =\left[ A_{4}\right] =\left[ B_{1}\right] =\left[ B_{2}\right] =\left[ 0,0\right] ,\) and \(\left[ B_{3}\right] =\left[ 1.9,2.1\right] .\) The ODE part is

and the algebraic part is

Integrating (25) we have

which together with (26) forms the interval linear system

Using the constraint interval representation (see [8], where any interval [a, b] is represented as \([a,b]=a+\lambda (b-a)\), \(0\le \lambda \le 1\)), we obtain

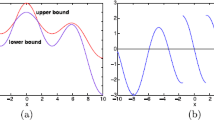

where \(\overrightarrow{\lambda }=(\lambda _{11},\lambda _{12},\lambda _{21},\lambda _{22}).\) Any instantiation of \(\lambda _{ij}\in [0,1]\) yields a valid solution given the associated uncertainty. However, to extract the interval vector enclosing \(\left[ \begin{array} [c]{c} x_{1}(\overrightarrow{\lambda })\\ x_{2}(\overrightarrow{\lambda }) \end{array} \right] \) over all admissible \(\overrightarrow{\lambda }\), each component must be globally minimized and maximized over \(0\le \lambda _{ij}\le 1\).
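A minimal sketch of this procedure follows. It assumes the structure implied by the listed entries (ODE part \([A_{1}]x_{1}^{\prime }+[A_{2}]x_{2}^{\prime }=f_{1}\), algebraic part \([B_{3}]x_{1}+[B_{4}]x_{2}=f_{2}\)), a hypothetical assignment of \(\lambda _{11},\lambda _{12},\lambda _{21},\lambda _{22}\) to \(A_{1},A_{2},B_{3},B_{4}\), and hypothetical right-hand sides `r1`, `r2` standing for the integrated ODE part and \(f_{2}\) at one fixed time. The grid search is a simple stand-in for the global min/max, not a validated enclosure.

```python
from itertools import product

# Interval entries of Example 3; A3 = A4 = B1 = B2 = [0, 0] drop out.
A1 = A2 = B4 = (0.9, 1.1)
B3 = (1.9, 2.1)


def ci(interval, lam):
    """Constraint interval representation a + lam * (b - a), lam in [0, 1]."""
    a, b = interval
    return a + lam * (b - a)


def solve_instance(lams, r1, r2):
    """Solve a1*x1 + a2*x2 = r1 and b3*x1 + b4*x2 = r2 by Cramer's rule for
    one choice lams = (l11, l12, l21, l22) in [0, 1]^4 (assumed to index
    A1, A2, B3, B4 in that order)."""
    l11, l12, l21, l22 = lams
    a1, a2, b3, b4 = ci(A1, l11), ci(A2, l12), ci(B3, l21), ci(B4, l22)
    det = a1 * b4 - a2 * b3  # lies in [-1.5, -0.5] for these intervals, never zero
    return ((r1 * b4 - a2 * r2) / det, (a1 * r2 - b3 * r1) / det)


def hull_by_grid(r1, r2, n=11):
    """Componentwise min/max of (x1, x2) over a uniform grid on [0, 1]^4."""
    grid = [k / (n - 1) for k in range(n)]
    sols = [solve_instance(lams, r1, r2) for lams in product(grid, repeat=4)]
    return [(min(s[i] for s in sols), max(s[i] for s in sols)) for i in range(2)]


# Hypothetical right-hand sides at one fixed time t.
print(solve_instance((0.5, 0.5, 0.5, 0.5), 1.0, 1.0))  # midpoint coefficients
print(hull_by_grid(1.0, 1.0))
```

For this particular system, each component is a ratio of expressions that are linear in each single coefficient, with a denominator that never vanishes, so it is monotone in each \(\lambda _{ij}\) separately and its extremes occur at vertices of \([0,1]^{4}\), which the grid includes. That shortcut does not carry over to general problems, where a genuine global optimizer (or a validated method) is needed.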

Example 4

Consider the linear quadratic problem with interval initial condition for x,

For this problem,

Thus

This implies that

and with the initial conditions
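For a scalar instance, the propagation of an interval initial condition through the LQP can be sketched as below. Assumptions, none of which come from the paper: scalar coefficients with q > 0, r > 0 and b ≠ 0; the cost convention \(J[u]=-\frac{1}{2}\int _{0}^{T}(qx^{2}+ru^{2})\,dt\); a free terminal state, so that the co-state p (playing the role of \(\lambda ^{T}\)) satisfies p(T) = 0; hypothetical function names and data. Because x(t) depends linearly on \(x_{0}\), evaluating the two endpoints of the constraint parameter gives the exact range in exact arithmetic; in floating point it is an approximation, not a validated enclosure.

```python
import math


def lqp_state(a, b, q, r, x0, t, T):
    """x(t) for the assumed scalar LQP Hamiltonian system

        x' = a x + (b**2 / r) p,   p' = q x - a p,   x(0) = x0,   p(T) = 0.

    With M = [[a, b**2/r], [q, -a]] one has M*M = w**2 * I, so
    exp(M s) = cosh(w s) I + sinh(w s)/w * M. Requires w > 0."""
    w = math.sqrt(a * a + q * b * b / r)

    def phi(s):
        c, sw = math.cosh(w * s), math.sinh(w * s) / w
        return [[c + sw * a, sw * b * b / r], [sw * q, c - sw * a]]

    PT = phi(T)
    p0 = -PT[1][0] / PT[1][1] * x0  # choose p(0) so that p(T) = 0
    Pt = phi(t)
    return Pt[0][0] * x0 + Pt[0][1] * p0


def lqp_state_interval(a, b, q, r, x0_lo, x0_hi, t, T):
    """Constraint-interval evaluation with x0 = x0_lo + mu * (x0_hi - x0_lo).

    x(t) is linear in x0, hence in mu, so its extremes over mu in [0, 1]
    occur at mu = 0 and mu = 1 (up to floating-point rounding)."""
    ends = [lqp_state(a, b, q, r, x0_lo + mu * (x0_hi - x0_lo), t, T)
            for mu in (0.0, 1.0)]
    return (min(ends), max(ends))


# Hypothetical data: a = 0, b = q = r = 1, horizon T = 1, x0 in [0.9, 1.1].
print(lqp_state_interval(0.0, 1.0, 1.0, 1.0, 0.9, 1.1, 0.5, 1.0))
```

For these hypothetical data the closed form is \(x(t)=x_{0}\cosh (T-t)/\cosh (T)\), so the printed pair should be 0.9 and 1.1 times \(\cosh (0.5)/\cosh (1)\).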

6 Conclusion

This study introduced a method to incorporate interval uncertainty in differential algebraic problems. Two examples of where DAEs under uncertainty arise were presented. Solution methods under interval uncertainty were shown for the linear constant coefficient problem and for the linear quadratic unconstrained optimal control problem. Unconstrained optimal control problems lead to interval boundary-value problems, which subsequent research will address. Moreover, more general uncertainties, such as generalized uncertainties (see [8]), probability distributions, and fuzzy intervals, are the next steps in the development of a theory of DAEs under generalized uncertainties.

References

[1] Ascher, U.M., Petzold, L.R.: Computer Methods for Ordinary Differential Equations and Differential-Algebraic Equations. SIAM, Philadelphia (1998)

[2] Brenan, K.E., Campbell, S.L., Petzold, L.R.: Numerical Solution of Initial-Value Problems in Differential-Algebraic Equations. SIAM, Philadelphia (1996)

[3] Corliss, G.F., Lodwick, W.A.: Correct computation of solutions of differential algebraic control equations. Zeitschrift für Angewandte Mathematik und Mechanik (ZAMM), 37–40 (1996). Special Issue “Numerical Analysis, Scientific Computing, Computer Science”

[4] Corliss, G.F., Lodwick, W.A.: Role of constraints in validated solution of DAEs. Marquette University Technical Report No. 430, March 1996

[5] Lodwick, W.A.: Two numerical methods for the solution of optimal control problems with computed error bounds using the maximum principle of Pontryagin. Ph.D. thesis, Oregon State University (1980)

[6] Lodwick, W.A.: Constrained interval arithmetic. CCM Report 138, February 1999

[7] Lodwick, W.A.: Interval and fuzzy analysis: a unified approach. In: Hawkes, P.W. (ed.) Advances in Imaging and Electron Physics, vol. 148, pp. 75–192. Academic Press, Cambridge (2007)

[8] Lodwick, W.A., Thipwiwatpotjana, P.: Flexible and Generalized Uncertainty Optimization. SCI, vol. 696. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-51107-8. ISBN 978-3-319-51105-4

[9] Nedialkov, N.: Interval tools for ODEs and DAEs. In: 12th GAMM-IMACS International Symposium on Scientific Computing, Computer Arithmetic, and Validated Numerics (SCAN 2006) (2006)

[10] Nedialkov, N., Pryce, J.: Solving DAEs by Taylor series (1), computing Taylor coefficients. BIT Numer. Math. 45(3), 561–591 (2005)

[11] Rauh, A., Brill, M., Günther, C.: A novel interval arithmetic approach for solving differential-algebraic equations with ValEncIA-IVP. Int. J. Appl. Math. Comput. Sci. 19(3), 381–397 (2009)


Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Lodwick, W.A., Mizukoshi, M.T. (2020). An Introduction to Differential Algebraic Equations Under Interval Uncertainty: A First Step Toward Generalized Uncertainty DAEs. In: Lesot, MJ., et al. Information Processing and Management of Uncertainty in Knowledge-Based Systems. IPMU 2020. Communications in Computer and Information Science, vol 1238. Springer, Cham. https://doi.org/10.1007/978-3-030-50143-3_1


DOI: https://doi.org/10.1007/978-3-030-50143-3_1


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-50142-6

Online ISBN: 978-3-030-50143-3

eBook Packages: Computer Science, Computer Science (R0)