Abstract

Storytelling is an integral part of the narratives we use to relate daily events, news, personal experiences, and fantasies. While humans have long narrated their stories, the media they use to do so have evolved with technological developments: storytelling was initially solely oral, written forms were then added, and now, with new media, narratives have also begun to employ photography and video. These new media tools are themselves continuing to expand and develop. Among those attracting the most attention today are tools using Virtual Reality (VR) technologies, which allow users to experience being-in-the-virtual-environment, with the possibility of becoming entirely immersed in it. Experiencing an environment with three-dimensional features enhances the experience sensorially, through simultaneous stimulation of the user’s visual and auditory systems. The aim of this study is to gain a better understanding of what exactly the user experiences through VR storytelling. To this end, we conducted an experimental study examining the immersive experience in VR, which constructs the feeling of presence. The experiment was designed to study the effects on forty users. These participants used the HTC Vive head-mounted display to experience the story “Allumette” (designed by Penrose Studios). User behaviors were recorded and observed with tools that collected data from both the physical world and the virtual environment: users’ physical movements were documented as coordinate data, while their behavioral reflections in the virtual environment were recorded as video. Following the VR session, users answered a questionnaire measuring their responses to the VR storytelling experience.
User experience was finally measured by analyzing both the behavioral outputs of the subjects and the questionnaire responses. The “Cinemetrics” methodology was used to analyze the camera movements, which were treated as the users’ behavioral reflections in VR. The results, based on analyzing behaviors and reactions to visual and aural stimuli in the VR environment, lead to a clearer understanding of VR storytelling and are used to propose a design guide for VR storytelling.

1 Introduction

Man has always used storytelling to relate his daily events, news, personal experiences, or fantasies. According to Benjamin (2014), the story is one of the oldest forms of communication, and it differs from information, which passes on accounts of real events. Storytellers draw the story from their own experiences, or from experiences that have been narrated to them, and through their narration make that experience also the experience of those who listen to them (Benjamin 2014). The storyteller uses different environments as storytelling tools and, by doing so, creates original experiences that can trigger the imagination of his or her audience.

This story experience involves four elements of narrative theory: characters, time, space, and the storyteller. As Dervişcemaloğlu (2014) has explained, narrative theory studies have focused mostly on time. Drawing on Lessing and on Sabine Buchholz and Manfred Jahn, Dervişcemaloğlu notes that, especially before the 19th century, narration functioned more as a tool than as a purpose, and space was used primarily as a background or décor (Dervişcemaloğlu 2014). Space was therefore less valuable than time in the construction of the plot. This situation changed with advances in such spatial arts as cinema, theater, and the virtual arts. Now, developments in virtual environments allow the audience to be stimulated sensorially, so space has become a more critical element than it is in other story media. In this study, space was explored to understand the spatial sensory experience within storytelling.

Virtual reality (VR) can be defined as a medium in which reality is reconstructed by computers (computer simulation). Baudrillard (2011) describes simulation as a hyperreality in which reality is reproduced through models. The human spectator builds his own reality, and this helps him develop ways of understanding his own world. As Ökten (2012) has stated, Heidegger’s notion of “being-in-the-world” describes the individual who is conscious of “being-here,” or “human existence.” Dasein (see Footnote 1), in Baudrillard’s hyperreality, is in turn reinterpreted as “being-in-the-virtual-world” (Coyne 1994). In this study, virtual Dasein is defined as the virtual individual: the individual who finds himself or herself in the virtual world and performs his or her spatial movements there.

VR creates an immersive experience by producing a sense of virtual presence and by stimulating both visual and aural perception. Its being-in-the-virtual-world features create experiences that resemble real life. Being inside the story and experiencing it through body movements, such as turning around 360 degrees and watching 3D characters within 3D environments, can change how the whole story is perceived. The three-dimensional possibilities of virtual reality allow the individual to become immersed in the medium in a different way.

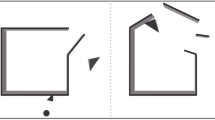

While humans use binocular vision to sense the physical world, VR uses a head-mounted display (HMD) to create a form of vision that exists in the physical environment while extending it into the virtual environment through stereoscopic augmentation. The HTC Vive HMD was set up in a defined tracked area, a configuration called room-scale (see Fig. 1). The 360-degree vision and the spatial navigation that the HMD provides through six-degrees-of-freedom (6DoF) tracking parallel those of physical reality. Virtual space thus becomes a tool that allows us to measure physical behaviors and orientations while the user watches the story unfold.

This study aims to understand how VR affects the user’s experience of a story. The forty participants in this experiment were given a VR HMD as the medium for a fifteen-minute exposure to a story. Their behaviors while watching Allumette through the HMD were recorded during the session. For the purposes of this study, we defined user experience in terms of user behaviors, explorations, and the ability to remember the storyline when it is presented in the VR medium. This allowed us to collect meaningful data that can inform the design of virtual reality storytelling spaces that construct a three-dimensional user experience. Measuring the users’ movements while they wore the VR head-mounted display, and recording what they saw while watching the VR storytelling content, gave us a better understanding of space perception and memory.

This study pursued four fundamental goals. The first was to understand how the role of architectural space changes when storytelling is integrated with VR technology. Understanding the role played by space within the story shows how narrative approaches can be affected and leads to a clearer understanding of how story space can be optimally designed. The second goal was to better understand how users explore the space in which they are immersed, and to ask whether this exploration affects perception. The third goal was to investigate the relation between the content of the visuals, the audio, and memory of details. The fourth was to learn more about the effects on memory when VR is used as the storytelling medium, and to understand which narrative details participants did or did not remember. Essential to this is understanding the degree to which exploring VR space affects memory.

2 Storytelling Mediums

Since the beginning of history, storytelling media have advanced in parallel with man’s technological advancements. Stories were first narrated in the form of paintings and hieroglyphs; then oral storytelling traditions took hold, followed by stories transmitted in written form. Now, with the emergence of what we term “new media,” the range has widened to include photography and cinema. This proliferation of new media has given rise to mixed media. Blumenthal (2016) outlines this development, citing Henry Jenkins, author of the book Convergence Culture, who created a model he calls “transmedia storytelling.”

Today VR, a still-developing technology, is fast becoming another storytelling medium. This is to be expected: storytelling has always been an immersive experience, and VR, which is based on sensory immersion, naturally emerges as a successful and preferred environment for narration.

2.1 Conventional Storytelling

Extant cave paintings and certain religious rituals show that man has always felt the need to transmit his stories, and that this need even predates the invention of writing, or even of speech. By conventional storytelling we mean oral and written storytelling. We learn about a culture’s history through the contents and forms of the storytelling it employed. Its people memorized these stories and taught them again, passing them down through the culture’s history and sustaining and revealing its heroic figures and events (Leber 2017). Oral storytelling traditions thus function as conventional media: they are means of communication that, through narrative, construct conceptions of reality for the Dasein.

The new media, which include such forms as photography, movies, multimedia, and computer games, have now become storytelling media in their own right. While movies are passive forms of narration, computer games have evolved into a medium that integrates the player into the narrative. As Zona (2014) notes, citing Stephen Heath’s 1976 article, narrative space, a theoretical term, is considered to function as an element of novels, films, video games, and everyday media. She explains that his approach is grounded in the passive film viewer and the rules of Renaissance perspective, and that he defines narrative space as an element that controls the action of the story from the vantage point of the audience (Zona 2014). In this view, the story creates its own narrative space, which Heath says is based on the observer’s perspective and is transformed into a reconstruction of the space in the minds of its audience (Heath 1976). Because the audience experiences a story together with its space, it can be argued that a story includes an architectural space even when it is transmitted only in written form.

2.2 Virtual Reality Storytelling

As Heim (1993) defines it, virtual reality is a broad term because it can be applied to all kinds of activities in our daily lives. VR, however, creates an embodied effect that emerges as a new reality of its own, one with potential for the future of film, theater, and literature. As Ryan (1999) notes, citing the ideas of Pimentel and Teixeira, the real question is not whether this creation embodies a true physical space, but whether the user can believe it is true for at least some of the time. In this sense, it is similar to getting inside a good novel or being captivated by a video game. Virtual reality creates a multi-sensory and unique experience. VR technology offers an immersive experience with three-dimensional (3D) features and interactivity. It lets users watch and move inside the scene using an HMD, while headphones deliver the audio and isolate the audience from the outside world. Users see the virtual world through the headset and are not exposed to outside sounds, so nothing external to the VR storytelling experience can distract them. This full isolation and the notion of being-in-the-virtual-world provide a new kind of experience for storytelling.

The three-dimensional story space of VR allows users to navigate freely and provides opportunities for non-linear storytelling; it is therefore the user who decides the storyline (Lau and Chen 2009). VR also enables non-static storytelling with characters and objects, so that the story becomes active and dynamic in response to the user’s behavior (Lau and Chen 2009). The last potential they describe is that, through non-linear storytelling, the user can choose the flow of the story (Lau and Chen 2009).

As Ryan (2001) defines it, the VR medium is a kind of digital wonderland: an extension of the physical world whose sensory stimulation creates the illusion of leaving the body behind. The VR user becomes a new person, one who can experience the presented, non-materialized reality through such senses as touch and hearing. The user engages with the medium through gestures or commands, which provide such freedoms as visualizing imagined thoughts without these thoughts becoming physically materialized (Ryan 2001). Within such a “digital wonderland,” this personalization, together with watching and interacting with the content in three-dimensional space, transforms the user into the protagonist of the story. One of the very first examples is Disney’s Aladdin, which was designed as a VR storytelling experience. The team recorded the experiences of 45,000 users, which led to valuable early findings. They found that, regardless of gender and age, all of their viewers adapted to the reality of the simulated space and were able to set aside the fact that they were not truly in that space. The research also found that the content of the experience is vital to the perception of immersion (Pausch et al. 1996).

Before VR, media designed to represent three-dimensional space, such as animations or motion pictures, were viewed in the physical world. Because of the limits of a two-dimensional screen, the audience could only see the story on a flat surface, which produced a two-dimensional perception. A 360-degree canvas changes the user’s passive role of watching a narrative inside a frame and offers the chance of a truly immersive experience, although such an experience is hard to design (Anderson 2016). The linear storyline experienced with conventional storytelling methods changes when presented in VR. The storyline develops within a three-dimensional space that offers the opportunity to explore, so every member of the audience can focus on the story from his or her own perspective within that 3D space (see Fig. 2). Such a personal perspective fractures the linear storyline and leads each audience member to create a personal storyline.

Morgan says that VR has “frame-less scenes,” which must be designed to capture the user’s attention and lead the user through the whole story as an experience (Morgan 2017). He adds that VR experiences have no limits or borders, which means the user does not have to follow the story. User experience is thus the element that draws the user into the story, creating an experience that differs from that provided by film or theater (Morgan 2017).

Cotting says that the story narrated in a movie progresses within a passive environment: the audience has no power to influence what it is watching and becomes a passive observer of what is presented. During a VR experience, however, the user becomes an active participant in the story and decides where to look, what to do, and what or whom to follow. In this way, the user creates a unique individual experience (Cotting 2016). A well-designed VR experience therefore encourages the user to get involved in the story and create a personal experience; it is not about telling the story passively (Cotting 2016).

3 Experiencing the Story in Virtual Reality

In this study, the experiences and reactions of each participant were analyzed during a fifteen-minute VR narrative exposure. At the end of the exposure, participants were asked to fill in a questionnaire that took approximately ten minutes. The study began with 18 pilot sessions, which both helped develop the questionnaire and revealed which measurements would best lead to an understanding of VR storytelling spaces. Following this pilot study and the resulting modifications to the methodology, the experiences of forty participants were measured as they watched the Allumette story with the HTC Vive HMD. Movement data were collected through the headset’s positional tracking, and a total of forty videos recorded both the physical and the virtual environments.

3.1 Pilot Experiments

The pilot study showed that participants were curious about the experience and tended to wander around the space, which made us realize that the scope of our questions needed to be widened. Pilot participants also tended to explore every place, demonstrating the need to measure spatial movements. When participants were concerned about the physical space around them, they tended to restrict their movements, which revealed that participants needed to acquire some familiarity with the HMD itself. More experienced participants were better able to locate and react to spatial sounds. Some participants explored the story space more expansively, and these participants were observed to be more likely to create their own vantage points on the story.

3.2 Application of the VR Storytelling Experiment

The pilot experiments demonstrated the need for positional data, which led us to use the Brekel OpenVR Recorder software (Brekel OpenVR Recorder 2008). We also used Open Broadcaster Software (OBS Studio) during the experiment to record video of the virtual environment.

Each session started with a briefing. Before putting on the headset, participants were told what kind of experience they would have and how they should move. Both the Brekel OpenVR Recorder and the OBS Studio screen recorder were then started at the same time to keep the data consistent across participants: if positional recording did not start at the same moment for each participant, the starting points of the recordings would not match.

3.3 Data Analysis of VR Experiment

The video recordings, movement data, and questionnaire were analyzed to understand the VR storytelling experience. To this end, several methods, namely audio analysis, visual analysis, movement data analysis, and “Cinemetrics” (McGrath and Gardner 2007), were adapted to this methodology. Finally, the analyses were superposed to show all the information together.
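The paper does not state how the analyses were superposed. As a minimal sketch, assuming every layer can be reduced to one value per second, the audio sound-lines (ten per second), one head position per second from the tracking data, and a hand-annotated table of attractor start times can be merged into a single timeline. The function name `superpose` and the `{name: second}` annotation format are hypothetical; `math.dist` requires Python 3.8 or later.

```python
import math


def superpose(sound_lines, positions, attractors, lines_per_second=10):
    """Merge audio, movement and attractor layers into one per-second timeline.

    sound_lines: peak audio levels, `lines_per_second` values per second
    positions:   one (x, y, z) head position per second of the session
    attractors:  hypothetical annotation format, {attractor name: start second}

    Returns rows of (second, peak sound level, head displacement since the
    previous second, attractor name or "").
    """
    at_second = {sec: name for name, sec in attractors.items()}
    # Only seconds covered by both the audio and the position log are kept.
    n_seconds = min(len(sound_lines) // lines_per_second, len(positions))
    timeline = []
    for s in range(n_seconds):
        window = sound_lines[s * lines_per_second:(s + 1) * lines_per_second]
        moved = math.dist(positions[s - 1], positions[s]) if s > 0 else 0.0
        timeline.append((s, max(window), moved, at_second.get(s, "")))
    return timeline
```

Taking the peak of the ten sound-lines in each second is only one way to match the audio rate to one-per-second position data; an average would be equally defensible.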

The first analysis was conducted on the video recordings of the virtual environment. Every user’s individual screen recording was examined to detect the attractor points, and then the audio of the recording was analyzed. After that, the recordings were analyzed based on the visuals. Following this, “Cinemetrics” methodology, which is detailed below, was adapted to the VR storytelling analysis.

Definition of Attractor Points

Allumette is a 15-min experience (Chung 2016). We determined which scenes functioned as attractor points, which permitted a reliable analysis of the user experience. The screen recordings were watched scene by scene to detect reactions showing that the participant was gripped by the story, as evidenced by his or her attempts to explore the environment in search of motion. Attractor points were identified as moments when highlighted actions occurred in the story, such as the explosion of the ship; these points could be reinforced by sounds, by images, or by both. Fifteen attractor points, whether image only, sound only, or a combination of image and sound, were detected from the screen recordings.

One of the attractor points was the scene with “the interior” (see Fig. 3): while the participant was looking around the environment, one side of the flying ship opened, revealing its interior. When the second scene began, the sounds of a flying ship came from the left, and then the ship arrived and docked at the floating settlement. The mother took control of the flying ship to stop it from falling onto the crowd in the town square, and the ship exploded while she was flying it. This explosion constitutes the fourteenth attractor point: “the explosion” (see Fig. 3).

In the last, dark scene, as the girl stood near the bridge, the participant heard the sound of a cough. Here, the user was stimulated by sound. This was the fifteenth and last attractor point: “the cough” (see Fig. 3).

Audio Analysis

The audio of Allumette consists of one background musical piece together with the sounds of the characters, their gestures, and story elements. Because some of the attractors function as aural stimuli, the story audio plays an essential role in the user experience. In some instances, these sounds increased users’ reactions to the attractor points.

The audio analysis began by converting the raw data of the “.wav” (Waveform Audio File Format) file into numerical data with Python code. Drawing the sound waves with a script made it possible to scale them appropriately. The calculated sound waves were divided into ten “sound-lines” per second, that is, one sound-line for every 100 milliseconds (see Fig. 4).
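A minimal sketch of the WAV-to-sound-line conversion, using only the standard-library `wave` and `array` modules. It assumes 16-bit PCM audio and assumes that each sound-line stores the peak amplitude of its 100 ms window, normalized to 0.0 to 1.0; the paper does not say which measure was used, and the function names are hypothetical.

```python
import array
import sys
import wave


def read_wav_samples(path):
    """Read a 16-bit PCM WAV file and return (samples, frame_rate, n_channels)."""
    with wave.open(path, "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM audio")
        n_channels = wav.getnchannels()
        rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())
    samples = array.array("h", raw)  # interleaved signed 16-bit integers
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is stored little-endian
    return samples, rate, n_channels


def sound_lines(samples, rate, n_channels=1, lines_per_second=10):
    """Collapse raw samples into one peak level (0.0 to 1.0) per time window.

    With lines_per_second=10, each sound-line covers 100 ms of audio.
    Peak amplitude is an assumed measure; RMS would be a reasonable alternative.
    """
    window = (rate // lines_per_second) * n_channels  # samples per sound-line
    lines = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        lines.append(max(abs(s) for s in chunk) / 32768)
    return lines
```

For example, `sound_lines(*read_wav_samples("story.wav"))` would return one level per 100 ms (the file name is a placeholder). Keeping file reading separate from windowing lets the windowing step be checked without an audio file.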

Drawing the sound-lines allowed us to see which line marks the start of each attractor point. We then determined the sound-lines for every attractor point, which revealed large sound-lines at the attractor points. This proved valuable because it allowed us to recover the actual sound level from the scaled sound-lines and to compare it with the visuals and the user’s movement at that attractor. We found that analyzing the sound waves and crosschecking them with the movements, visual stimuli, and reactions was essential to understanding aural stimuli in VR storytelling (Fig. 9). Because the results showed that users were strongly affected by the audio, VR designers should carefully consider essential audio parameters such as the position and the volume of sounds.
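To illustrate how the sound-lines at an attractor point might be compared with the surrounding audio, the sketch below finds the sound-line at which each attractor starts and relates the peak level that follows to the mean level of the preceding two seconds. The baseline-ratio measure, the two-second window, and the attractor timing table are assumptions for illustration; the paper does not specify how this comparison was quantified.

```python
from statistics import mean


def attractor_sound_profile(lines, attractor_times, lines_per_second=10, context_s=2.0):
    """Compare the audio at each attractor point with the audio just before it.

    lines:           sound-line levels, `lines_per_second` per second
    attractor_times: hypothetical {attractor name: start time in seconds}
    context_s:       assumed length of the before/after comparison windows
    """
    span = int(context_s * lines_per_second)  # sound-lines per context window
    profile = {}
    for name, t in attractor_times.items():
        idx = int(t * lines_per_second)  # sound-line at which the attractor starts
        before = lines[max(0, idx - span):idx] or [0.0]
        after = lines[idx:idx + span] or [0.0]
        baseline = mean(before)
        peak = max(after)
        # How much louder the attractor is than the preceding context.
        ratio = peak / baseline if baseline > 0 else float("inf")
        profile[name] = {"line": idx, "peak": peak, "ratio": ratio}
    return profile
```

A high ratio flags an attractor that stands out aurally; the flagged seconds can then be checked against the participant's movement and the visuals at the same moment.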

The “Cinemetrics”

Cinemetrics methodology was applied to the screen recordings to gain a detailed understanding of user behaviors. McGrath and Gardner (2007) designed the method to read scenes from films and create architectural drawings from them. It originates in Gilles Deleuze’s studies of cinema.

Cinemetrics analyzes a scene by using a metric system to map the movements of the camera. As Heath (1976) tells us, “The movements of the camera create the narrative space.” Deleuze (1986) cites Bergson’s thesis that movement is distinct from the space covered: the space covered belongs to the past and is divisible, whereas movement belongs to the present and is indivisible (Deleuze 1986). This leads to a more complex idea: the “spaces covered” are all parts of a single, identical, homogeneous space, whereas “movements” are heterogeneous and irreducible to one another (Deleuze 1986).

Assembling, comprehending, and combining images are commonplace in daily life and work (McGrath and Gardner 2007). These researchers describe daily life as a series of such events, from getting out of bed to returning to unfinished drawings: not waking up slowly or thinking about anything else, just finishing the job that inspires us (McGrath and Gardner 2007). This narrative presents the morning as a spatial form of “movement images,” equivalent to the regular progress of the day: waking up and going to work (McGrath and Gardner 2007). With this narrative approach, “continuous spatial moments are sequenced in time according to personal storytelling style” (McGrath and Gardner 2007).

McGrath and Gardner (2007) explain that the Cinemetrics drawing system was created to develop a personal architectural approach that responds to the dynamic nature of reality. They argue that linear thinking in architectural drawing is based on the perspective developed in Renaissance Italy, beginning with the architect and mechanical engineer Filippo Brunelleschi.

This approach continued to advance and spread with Johannes Gutenberg’s invention of the printing press (McGrath and Gardner 2007). Today, however, this kind of linear thinking must change if it is to adapt to “cybernetic” instruments, which calls for new methodologies (McGrath and Gardner 2007). Their research focused on how architects have extended existing tools so that topological architectural forms can be moved and manipulated with the help of computers. These tools emerged to correspond to a new “sensorimotor schema” based on how we sense and act. Cinemetrics was developed to serve as this new “sensorimotor schema” and to widen the architectural field through tools and applications based on it (McGrath and Gardner 2007).

This methodology was developed to meet the new needs that computer-generated systems create for architectural drawing. Architectural drawing systems are explanatory in nature and serve as abstractions of tangible objects such as buildings. New technological developments, however, are extending the use of these abstractions beyond still images and tangible objects. Advanced technology has widened the meaning of the word image beyond that of a passive visual, and movement in and of itself has become an important feature. Advanced drawing methods have been developed to meet this new requirement. In the Cinemetrics method, film and architectural drawing now overlap: movement makes films more comprehensible, and when combined with architectural drawing methods, the camera can make a scene more readable.

In VR applications, the HMD serves as the camera, and the user selects the scene’s vantage points. User behavior therefore also functions as camera movement. Consequently, adapting the Cinemetrics methodology to space and movement in virtual reality, and drawing the scenes as one would those of a film, helps to reveal user behaviors.

In this study, we adapted this methodology to the virtual reality medium. Scenes of the three-dimensional environment were created with panoramic image-processing programs. After a panoramic image was generated from the user’s HMD screen-recording video, the movements were processed at one image per second. When the story reached the attractor point, users tended to turn to the right because of the dynamic motion (the Flying Boat) (see Fig. 5). The user then followed the boat with three more “pan right” movements, then tended to tilt down, and finally tilted up.
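The Cinemetrics reading above labels the user's camera (head) movements, such as “pan right” and “tilt down,” at one sample per second. Assuming per-second yaw and pitch angles in degrees are available (for example from the HMD orientation; note that the positional analysis in this study did not use the Euler angles), a minimal sketch of that labeling follows. The 5-degree threshold, the sign conventions, and the function names are hypothetical.

```python
from itertools import groupby


def wrap_degrees(delta):
    """Map an angle difference into [-180, 180) so that 350 -> 10 reads as +20."""
    return (delta + 180.0) % 360.0 - 180.0


def label_camera_moves(yaw, pitch, threshold=5.0):
    """Label each one-second step of head rotation as a Cinemetrics-style move.

    yaw, pitch: per-second head angles in degrees (assumed: yaw grows when
    the user turns right, pitch grows when the user looks up).
    threshold:  hypothetical minimum change, in degrees, that counts as a move.
    """
    labels = []
    for i in range(1, len(yaw)):
        d_yaw = wrap_degrees(yaw[i] - yaw[i - 1])
        d_pitch = pitch[i] - pitch[i - 1]
        moves = []
        if abs(d_yaw) >= threshold:
            moves.append("pan right" if d_yaw > 0 else "pan left")
        if abs(d_pitch) >= threshold:
            moves.append("tilt up" if d_pitch > 0 else "tilt down")
        labels.append(" + ".join(moves) if moves else "static")
    return labels


def summarize(labels):
    """Collapse consecutive identical labels into (label, seconds) runs."""
    return [(label, len(list(run))) for label, run in groupby(labels)]
```

With illustrative (not measured) angles, a sequence of four rightward turns followed by a downward and then an upward look summarizes to `[("pan right", 4), ("tilt down", 1), ("tilt up", 1)]`, the kind of run that the drawings describe.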

This method showed that movement inside VR storytelling can be used to verify whether the design of the content works as intended. If the story content is intended to enhance the user experience, such movement analysis can reveal whether it produces the expected results.

3.4 Movement Data Visualization

The data were collected from the recording software, which generated two outputs. The first was the raw positional data file, with the ‘.txt’ (Text File) extension. The second was an animation of the head-mounted display, in a file with the ‘.fbx’ (Filmbox) extension. Positional data were used to understand such user behaviors as how users reacted and how much they explored, and to measure their total path, vertical path, and horizontal path.

The positional recordings contained a six-column data set for every second, of which only three columns, the positional coordinates (posX, posY, posZ), were used for the analysis; the Euler angles (rotX, rotY, rotZ) were not used. The positional data were converted to “.csv” (comma-separated values) files so that they could be imported into the analysis software.
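A minimal sketch of the .txt-to-.csv conversion, assuming whitespace-separated rows in the order posX, posY, posZ, rotX, rotY, rotZ, one row per second. The actual layout of the Brekel OpenVR Recorder export is not documented in this paper, so the column order, the header handling, and the added `second` column are assumptions.

```python
import csv
import io


def positions_from_txt(lines):
    """Keep only posX, posY, posZ from six-column positional records.

    Assumes whitespace-separated rows ordered posX posY posZ rotX rotY rotZ;
    rows that are not six numbers (headers, blank lines) are skipped.
    """
    rows = []
    for line in lines:
        fields = line.split()
        if len(fields) != 6:
            continue
        try:
            values = [float(f) for f in fields]
        except ValueError:
            continue  # header or comment row
        rows.append(values[:3])  # Euler angles (rotX, rotY, rotZ) are dropped
    return rows


def positions_to_csv(rows):
    """Serialize positions as CSV, numbering rows as seconds (one row per second)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["second", "posX", "posY", "posZ"])
    for second, (x, y, z) in enumerate(rows):
        writer.writerow([second, x, y, z])
    return buffer.getvalue()
```

In use, the text file would be read line by line, e.g. `with open(path) as src: csv_text = positions_to_csv(positions_from_txt(src))`, and `csv_text` written to a .csv file; `path` is a placeholder.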

Software was also used to generate the movement paths, movement graphs, the horizontal and vertical movement quantities, and the total path quantity (see Fig. 6). Movement paths were generated and movement quantities calculated for each of the forty participants. After all the paths were generated, they were superimposed to show the total exploration (see Fig. 7).
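The total, horizontal, and vertical path quantities, and the superimposed exploration map, can be sketched as follows. This assumes positions in metres with y as the vertical axis (OpenVR's y-up convention), treats the horizontal path as movement in the x-z floor plane, and treats the vertical path as accumulated change in head height. The 0.25 m grid cell used to superimpose participants is a hypothetical choice, not a value from the study.

```python
import math
from collections import Counter


def path_quantities(positions):
    """Total, horizontal and vertical path lengths for one participant.

    positions: per-second (x, y, z) head positions in metres, with y assumed
    to be the vertical axis (OpenVR's y-up convention).
    """
    total = horizontal = vertical = 0.0
    for (x0, y0, z0), (x1, y1, z1) in zip(positions, positions[1:]):
        dx, dy, dz = x1 - x0, y1 - y0, z1 - z0
        total += math.sqrt(dx * dx + dy * dy + dz * dz)  # 3D step length
        horizontal += math.hypot(dx, dz)  # movement across the floor plane
        vertical += abs(dy)  # crouching, standing up, leaning down
    return {"total": total, "horizontal": horizontal, "vertical": vertical}


def exploration_grid(all_positions, cell=0.25):
    """Superimpose participants' floor paths as counts per grid cell.

    cell: hypothetical grid size in metres. Each participant adds at most
    one to a cell, so a count is the number of participants who visited it.
    """
    visits = Counter()
    for positions in all_positions:
        cells = {(math.floor(x / cell), math.floor(z / cell)) for x, _, z in positions}
        visits.update(cells)
    return visits
```

Because each step's total length is Euclidean, a participant's total path is generally shorter than the horizontal and vertical paths added together.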

3.5 Results

This experiment was conducted to understand the “user experience” of VR storytelling. The study made it possible to categorize some estimations of user behaviors/reactions and memory. It demonstrated that behaviors were affected by both visual and aural stimulation, and memory was assessed in terms of details, space, and story.

The behaviors/reactions of users inside VR were examined, and this examination showed that users tended to react to the motions, sounds, and space of the story. When a scene contained more than one motion, most users followed the more dynamic one. Users who tracked the story reacted most to stimuli such as the attractors. Their tracking was affected by the speed of the motions: when the story became slow and monotonous, users looked around for other motions, and when a motion more dynamic than the story scene was present, users were attracted to it instead of watching the story.

The behavioral data revealed that users who were attracted to the space of the story tended to react more weakly to motions and sounds. Also, when there was more than one trackable motion, users were attracted by the more animated one and tracked it.

Moreover, most participants tended to wander. Although most of them wandered through the space, some users who were not comfortable with the VR headset simply watched the story or moved only a little. The wanderers tended to look around and wanted to see all the building details of the story space. This is an essential consideration when designing and modeling a story space and writing a story script.

Another interpretation is that when the current scene was slower than the previous one, users waited, looked for more, and tended to wander around. Wandering was thus triggered by two different elements: first, the feeling of presence in the being-in-the-virtual-world of the story drew people to wander; second, users who failed to catch the motion of the scene showed an increased tendency to wander.

Furthermore, some of the attractor points, such as the “walk”, were aural stimulants. These attractions were defined by the sounds of gestures, such as the little girl walking on the snow. The background music and the sounds of story elements, such as the flying ship’s engine and the explosion of the ship, were all defined as aural stimulants; the latter sounds were distinguished from the background music. We noted three possible reactions to an aural stimulus: some users simply noticed the sound, some looked about trying to locate its source, and some did not react at all, showing no interest in either the sound itself or its origin.
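As a hypothetical illustration only (not the procedure used in the study, where behavior was observed in the recordings), the three reaction types could be operationalized from head-yaw data recorded after a sound starts. The sketch assumes the azimuth of the sound source is known in the same angular frame as the head yaw, and the thresholds `turn_threshold` and `source_tolerance` are made-up values chosen for illustration.

```python
def angular_difference(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees (0 to 180)."""
    d = (a - b) % 360.0
    return min(d, 360.0 - d)


def classify_sound_reaction(
    yaw_after_onset: list[float],
    source_azimuth: float,
    turn_threshold: float = 15.0,    # hypothetical: smallest head turn counted as a reaction
    source_tolerance: float = 20.0,  # hypothetical: how close the gaze must get to the source
) -> str:
    """Label a reaction to a sound from head-yaw samples (degrees) recorded after its onset.

    Returns "no reaction", "noticed", or "located source", mirroring the three
    reaction types described in the text.
    """
    if not yaw_after_onset:
        raise ValueError("need at least one yaw sample")
    start = yaw_after_onset[0]
    # How far the head turned away from its orientation at sound onset.
    max_turn = max(angular_difference(y, start) for y in yaw_after_onset)
    if max_turn < turn_threshold:
        return "no reaction"
    # Did the gaze come close to the direction of the sound source at any point?
    closest = min(angular_difference(y, source_azimuth) for y in yaw_after_onset)
    return "located source" if closest <= source_tolerance else "noticed"


if __name__ == "__main__":
    # A user who turns from 0° toward a source at 90°.
    print(classify_sound_reaction([0.0, 30.0, 60.0, 85.0], source_azimuth=90.0))  # located source
```

Separating "did the head move at all" from "did it move toward the source" keeps the first category (merely noticing) distinct from active localization; in practice the thresholds would have to be calibrated against the observed recordings.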

Memory and recall of details, space, and storyline were essential elements of this study. The colors of the matches were a small detail that most participants could not recall correctly: although the matches were red, yellow, and pink/purple, the most common answer was blue, which was the color of the blind man’s suit in the same scene. Thus, even users who followed the story did not necessarily pay enough attention to remember details such as the colors of the matches. This suggests that highlighted components, such as the blind man, are remembered more easily, because the story space contains many distracting elements.

In general, users’ distance perception differed between the two levels, the upper floating ground and the lower floating ground (see Fig. 7). Users perceived the upper level better because it served as their vantage point. For the lower floating ground, however, their perceptions became fragmented and lost objectivity. One reason for this fragmentation is that users could not approach the lower level directly and could only watch it from a distance. Cross-checking these answers against the users’ vertical movements also helps explain the fragmented perception of distance on the lower level: although most users had a high total path length, their vertical path measurement was low. It can be inferred that allowing users to approach the lower level could yield better distance perception and more vertical movement (Fig. 8).

The questionnaire about the space included questions about the architectural styles of the space and the house. These questions had a high rate of correct answers: very few wrong answers were given to the questions about architectural styles and specific space details. For example, one question asked about the architectural type of the bridge, and 99% of the users correctly answered that it was an arched bridge. Likewise, 90% of the users answered the question regarding the low-rise buildings correctly. This suggests that virtual individuals remember general features better, while small details prove more difficult to recall.

Remembering the storyline was the last part of the analysis. The story summaries provided by the users were examined against their movements in the VR space (Fig. 9). Users who did not track the story gave insufficient summaries, and users who explored a great deal missed details of the storyline.

The final step was to cross-check and discuss the behavioral and memory examinations. These estimations may serve as a simple guide to what the designer should do to elicit specific reactions, and they can support design decisions when designing storytelling inside the VR environment. For example, if the designer aims to attract the user to a story element, he/she can highlight that element to achieve the intended reaction.

4 Conclusion

The behavioral analysis and the other measurement analyses were conducted to understand the user experience of VR storytelling by measuring space perception and memory recall of a story. The methods used were audio analysis, visual analysis, Cinemetrics, and movement data analysis.

Quantitative and qualitative data were collected and analyzed, along with data on the participants’ personal perceptual characteristics, which affect the experience. With this simple guide, a VR designer can predict what will happen whether or not the user engages with the story, and can then direct the user through specific motions. For example, if certain motions are meaningful for the designer, the designer can highlight them to attract the user’s attention and lead the user to track them. One finding of this study was that motions have a hierarchical order of influence on the user: the more dynamic a motion is, the more attention it receives.

Users who followed the story displayed a greater reaction to both visual and aural stimulants. Users who were wandering about the space and/or not watching the story were not affected by the stimulations provided. The audio analysis demonstrated how audio changes affect the user and revealed whether or not each user reacted to the sounds. The results showed that most users did react to sounds, such as the “walk” attractor point. When a sound started, users tended to look about, trying to determine its source, and they also identified the distance and location of the sound source. This study determined that aural stimuli play a significant role in VR storytelling: when designing VR storytelling content, designers can easily stimulate the user through spatial aural features. Visual analysis was used to understand how vantage points affect the experience. A comparison of the 40 participants’ screen recordings showed that the outcomes differed from one another, meaning that every participant created a unique experience. The user thus becomes the director, and perhaps even the screenwriter.

Adapting the Cinemetrics methodology to VR storytelling is an experimental process. The original Cinemetrics offers conventional film analysis of camera moves and frame structure (McGrath and Gardner 2007). When the method is adapted to VR storytelling, the user’s vantage point functions as the camera and the standing point chosen by the user becomes the frame. Applied to VR, the method revealed the points toward which users tended to look, thus unveiling detailed information about design needs. Because the VR medium gives the user the opportunity to navigate within a three-dimensional story space, the designer should decide on the balance between story dominance and space dominance in VR storytelling.
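The adaptation described above, with the user’s vantage point acting as the camera, can be sketched as a small computation over tracked head poses that tallies which attractor is “in frame”. This is a hypothetical illustration, not the study’s procedure. It assumes each sample provides a head position and a forward direction vector, treats an attractor as in frame when it lies within an assumed viewing cone (`half_fov_deg`, loosely based on the roughly 110° field of view of headsets such as the HTC Vive), and uses made-up attractor names and positions.

```python
import math
from collections import Counter

Vec3 = tuple[float, float, float]


def angle_between(u: Vec3, v: Vec3) -> float:
    """Angle between two non-zero vectors, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def count_framed_attractors(
    frames: list[tuple[Vec3, Vec3]],  # per sample: (head position, forward direction)
    attractors: dict[str, Vec3],      # hypothetical attractor name -> world position
    half_fov_deg: float = 55.0,       # assumed half-angle of the user's viewing cone
) -> Counter:
    """Count the samples in which each attractor is "in frame" for the user's camera.

    If several attractors are inside the viewing cone, only the one closest to
    the view axis is counted for that sample.
    """
    counts: Counter = Counter()
    for position, forward in frames:
        best_name, best_angle = None, half_fov_deg
        for name, target in attractors.items():
            offset = tuple(t - p for t, p in zip(target, position))
            if not any(offset):
                continue  # the user stands exactly at the attractor; no viewing direction
            angle = angle_between(forward, offset)
            if angle <= best_angle:
                best_name, best_angle = name, angle
        if best_name is not None:
            counts[best_name] += 1
    return counts


if __name__ == "__main__":
    attractors = {"walk": (0.0, 0.0, 5.0), "ship": (5.0, 3.0, 0.0)}  # hypothetical positions
    head = (0.0, 0.0, 0.0)
    frames = [(head, (0.0, 0.0, 1.0)), (head, (0.0, 0.0, 1.0)),
              (head, (1.0, 0.0, 0.0)), (head, (0.0, 0.0, -1.0))]
    print(count_framed_attractors(frames, attractors))  # Counter({'walk': 2, 'ship': 1})
```

Counting only the attractor nearest the view axis is one simple way to approximate a single “frame subject” per sample; a fuller adaptation might weight samples by duration or use eye tracking instead of head direction.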

The movement data were evaluated together with all the other data, such as the audio and visual analyses, to gain a more complete understanding of the user experience. A longer total movement path correlated with a higher degree of user exploration. Similarly, when the degree of vertical movement was low, the degree of “one-point view” tended to be very high.
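The path measurements discussed here can be derived from the recorded coordinate data. The following is a minimal sketch, assuming that path totals are Euclidean distances summed over consecutive head-position samples and that y is the vertical axis; both are assumptions rather than details reported in the study. The `vertical_share` ratio is an illustrative addition that expresses “high total path, low vertical path” as a single number.

```python
import math

Point = tuple[float, float, float]  # (x, y, z) in metres; y is assumed to be the vertical axis


def path_metrics(samples: list[Point]) -> dict[str, float]:
    """Summarize a head-position trace as total, horizontal and vertical path lengths."""
    total = horizontal = vertical = 0.0
    # Sum the distances between consecutive position samples.
    for (x0, y0, z0), (x1, y1, z1) in zip(samples, samples[1:]):
        dx, dy, dz = x1 - x0, y1 - y0, z1 - z0
        total += math.sqrt(dx * dx + dy * dy + dz * dz)
        horizontal += math.hypot(dx, dz)
        vertical += abs(dy)
    return {
        "total": total,
        "horizontal": horizontal,
        "vertical": vertical,
        # Vertical movement relative to the whole 3D path (0 if the user never moved).
        "vertical_share": vertical / total if total > 0 else 0.0,
    }


if __name__ == "__main__":
    # Walk 5 m across the floor, then crouch by 0.6 m.
    trace = [(0.0, 1.6, 0.0), (3.0, 1.6, 4.0), (3.0, 1.0, 4.0)]
    print({k: round(v, 3) for k, v in path_metrics(trace).items()})
    # {'total': 5.6, 'horizontal': 5.0, 'vertical': 0.6, 'vertical_share': 0.107}
```

Because each segment’s vertical component can never exceed its 3D length, `vertical_share` always lies between 0 and 1, which makes traces of different lengths comparable.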

Designing storytelling for the VR environment requires a new way of thinking, because the three-dimensional space and the “undefined frame” through which the story is watched change what constitutes a “scene.” In contrast, traditional movie design, whether animation or live-action footage, bases design decisions on a selected frame.

All these approaches demonstrate that the user, now serving as a virtual individual within the story, becomes an actor in VR storytelling: s/he is an active element of the story. Therefore, a user experience designer who aims to craft VR storytelling properly needs to consider how the user may respond to the story. This study was conducted to address these concerns, and its simple guide to understanding user behaviors inside VR storytelling can support user experience design.

Notes

1. Dasein means “being there” or “presence”, from the German ‘da’ (“there”) and ‘sein’ (“being”). The term is often translated into English as “existence”. It is a fundamental concept in Martin Heidegger’s existential philosophy and in his work ‘Being and Time’.

References

Anderson, A.T.: VR Storytelling: 5 Explorers Defining the Next Generation of Narrative. Ceros Blog - Interactive Content Marketing & Design Tips. https://www.ceros.com/blog/vr-storytelling-5-explorers-defining-next-generation-narrative. Accessed 30 Aug 2016

Baudrillard, J.: Simulacra and Simulation, 3rd edn. (2011)

Benjamin, W.: Son bakışta aşk: Walter Benjamin’den seçme yazılar, pp. 81, 171. Metis Publications, İstanbul (2014)

Blumenthal, H.: Storyscape, a New Medium of Media. Georgia Institute of Technology, Atlanta (2016)

Chung, E.: Allumette. Penrose Studios (2016)

Cotting, D.: Storytelling vs. Story Enabling: Crafting Experiences in the New Medium of Virtual Reality. https://medium.com/shockoe/storytelling-vs-story-enabling-crafting-experiences-in-the-new-medium-of-virtual-reality-8b982359d3b8. Accessed 02 Nov 2019

Coyne, R.: Heidegger and virtual reality: the implications of Heidegger’s thinking for computer representations. Leonardo 27(1), 65 (1994)

Deleuze, G.: Cinema 1: The Movement-Image. Tomlinson, H., Habberjam, B. (trans.). University of Minnesota Press, Minneapolis (1986)

Dervişcemaloğlu, B.: Anlatıbilime Giriş, 1st edn. Dergah Yayınları, Istanbul (2014)

Heath, S.: Narrative space. Screen 17(3), 68–112 (1976)

Heim, M.: The Metaphysics of Virtual Reality. Oxford University Press, New York (1993)

Lau, S.Y., Chen, C.J.: Designing a virtual reality (VR) storytelling system for educational purposes. In: Iskander, M., Kapila, V., Karim, M. (eds.) Technological Developments in Education and Automation, pp. 135–138. Springer, Heidelberg (2009). https://doi.org/10.1007/978-90-481-3656-8_26

Leber, R.: The History of Storytelling: Part I: The Invention of Story. IndiePen Ink. http://indiepenink.com/research-a-torium/the-history-of-storytelling-part-1-the-invention-of-story/. Accessed 21 Apr 2018

McGrath, B., Gardner, J.: Cinemetrics. Wiley-Academy, Chichester (2007)

Morgan, A.: Virtual Reality: The User Experience of Story. Adobe Blog. https://theblog.adobe.com/virtual-reality-the-user-experience-of-story/. Accessed 28 Apr 2018

Ökten, K.H.: Heidegger’e Giriş. Agora Kitaplığı, İstanbul (2012)

Pausch, R., Snoddy, J., Taylor, R., Watson, S., Haseltine, E.: Disney’s Aladdin: first steps toward storytelling in virtual reality. In: SIGGRAPH 1996 Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques, pp. 193–203. Association for Computing Machinery, Inc., New York (1996)

Ryan, M.-L.: Immersion vs. interactivity: virtual reality and literary theory. SubStance 28(2), 110 (1999)

Ryan, M.-L.: Narrative as Virtual Reality: Immersion and Interactivity in Literature and Electronic Media. The Johns Hopkins University Press, Baltimore (2001)

Zona, M.: Narrative Space? https://mineofgod.wordpress.com/2014/01/08/narrative-space/. Accessed 20 Mar 2018

Acknowledgments

This research was conducted as part of a master’s thesis in the Istanbul Technical University Architectural Design Computing Program. The study was supported by the Istanbul Technical University Rectorate, which supplied the HTC Vive head-mounted display. We would like to thank our vice-rector Prof. Dr. Alper Ünal, Scientific Research Project Coordinator Behzat Şentürk, and Mehmet Kara.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Dumlu, B.N., Demir, Y. (2020). Analyzing the User Experience of Virtual Reality Storytelling with Visual and Aural Stimuli. In: Marcus, A., Rosenzweig, E. (eds) Design, User Experience, and Usability. Design for Contemporary Interactive Environments. HCII 2020. Lecture Notes in Computer Science, vol. 12201. Springer, Cham. https://doi.org/10.1007/978-3-030-49760-6_29

DOI: https://doi.org/10.1007/978-3-030-49760-6_29

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-49759-0

Online ISBN: 978-3-030-49760-6

eBook Packages: Computer Science, Computer Science (R0)