Abstract

The historical rise of vacuum electronics (VE) was enabled by the availability of electrical power and by improved vacuum techniques, but its further progress relied on improved electron sources and their control. The development of VE has been pushed by several technological waves or cycles, starting with incandescent lamps, continuing with the radio tube era and followed by cathode-ray tubes. Yet vacuum electronics is still alive and has specific advantages in the high-power, high-frequency domain. The improvement trends of cathodes over time, related to specific and also advanced application requirements, will be addressed.

1.1 The Foundations of Vacuum Electronics

1.1.1 The Availability of Electric Power

One of the prerequisites for vacuum electronics is, of course, the availability of electric power generators and, later on, of a distribution grid for the power supply. Hence the advances in this field took place some decades before the rise of vacuum electronics began [1,2,3,4]. Their development follows a (limiting) growth curve similar to those found in other fields of technology.

Already in antiquity, around 585 B.C., the first concepts of magnetic and electric forces were described by Thales of Miletus: electron is the Greek word for amber, from which charges could be generated by rubbing, and magnetic forces were manifested in magnetic ores found in Magnesia [3, 5]. Yet these remained more or less curiosities and did not trigger any applications. An exception could be the so-called battery of Baghdad from the time of the Parthian Empire after 247 B.C., which was capable of delivering 250 µA at 0.25 V when a saline solution was added; it may have been used for electroplating [6].

In modern times, Otto von Guericke was not only a pioneer in vacuum technology with the Magdeburg half-spheres experiment in 1654, demonstrating that 16 horses could not draw two evacuated metal half-spheres apart [2, 7, 8]. Guericke also built an electrization machine in 1663 (see Fig. 1.1), using a sulfur sphere and friction [3, 7,8,9,10,11]. With this sulfur sphere, Gottfried Wilhelm Leibniz discovered electrical sparks in 1672. In 1745 the German cleric Ewald von Kleist and the Dutch scientist Pieter van Musschenbroek from Leyden both found that charges generated by friction could be stored and accumulated in a Kleist jar, more commonly known as the “Leyden jar” [9]. Spark experiments using Leyden jars typically involved very high voltages and rather low discharge currents (with powers of the order of 30–50 W, see Ayrton [11]). In 1799/1800 the Italian Alessandro Volta produced continuous electrical power for the first time (as opposed to a spark or static electricity) from a stack of 40 cells of silver and zinc plates, with felt soaked in salt solution between the electrodes. This battery lasted for several days [3, 9, 12]. From present-day knowledge, it had an electromotive force of 62.8 V and should have been capable of delivering about 20 W through the conversion of chemical to electrical energy in a redox reaction. In a replica experiment that can be viewed on YouTube [13], one can see that the voltage of a stack of 22 Zn–Cu elements, a combination Volta also used, is about 24 V and the power of the order of 8 W. Of course, one can increase the current by using several parallel cells or larger cells, and one can increase the voltage by further stacking. Such upscaling was indeed realized in the following years. The introduction of the horizontal trough battery by William Cruickshank in 1802, in one version consisting of 60 Zn–Ag pairs with an estimated power of 17 W, increased battery life to several weeks.
For all these galvanic cells the achievable current was limited by the electrode area, the usual current density being about a factor of 5 lower than the maximum current density of about 50 mA/cm² [14, 15]. Humphry Davy used three different batteries with powers ranging from about 170 to 260 W for his chemical experiments, with which he first isolated alkali metals and also demonstrated arc discharges between carbon electrodes. Based on these improved cells, William Pepys started in 1808 to construct one of the strongest batteries, with 2000 plate pairs in a trough configuration and a total plate area of 82.6 m² (103 cm² single plate surface area), for the Royal Society of London [3, 16, 17], financed by a subscription [17]. It was a plunge-type battery in a nitrous and sulfuric acid solution of higher conductivity, finally installed in 1810 with an estimated power of about 7 kW at 1.7–2.2 kV (see Fig. 1.2).

The 1663 electrization machine of Otto von Guericke, using a sulfur sphere and friction. The figure is based on [7], “The Electrical Experimenter”, Sept. 1915, p. 198

The great Battery of London (ca. 1810), figure from [16], Louis Figuier, “Les Merveilles de la Science”, Paris 1867 (Fig. 346, p. 673), reproduced by G. Gaertner

A battery of 600 Zn–Cu galvanic cells with a single electrode surface area of 900 cm² was constructed in Paris in 1813, funded by Napoleon I, with an estimated power of 4.6 kW. Both of these approaches, however, had already been surpassed by Vasily Petrov in St. Petersburg in 1802/1803 with a huge, also horizontal battery of 4 troughs with 4200 Zn–Cu galvanic cells (491 cm² single plate area) and an estimated power of 17 kW. Yet he was still using a salt solution (as Volta did) of lower conductivity. He also demonstrated the first continuous arc discharge between two carbon electrodes in 1802/3, but unfortunately published it only in Russian [18].

The main improvement trend was to extend the rather short life of these galvanic cells by using different and improved materials. In this context, John F. Daniell (UK) in 1836 introduced a porous diaphragm between the Zn–Cu electrodes and two fluids in order to overcome polarization [3, 9]. In 1854 the German Wilhelm Josef Sinsteden invented the lead accumulator by replacing copper with lead and using sulfuric acid as the fluid. It was strongly improved in 1859 by the Frenchman Gaston Planté, who made the first secondary (rechargeable) battery, with electrodes of Pb and PbO₂ and an electrolyte of sulfuric acid, technically feasible [9]. A predecessor of this accumulator had been invented by J. W. Ritter in 1802 [9]. In Germany the physician Carl Gassner developed the first dry cell in 1887.

It should be noted that for the devices of that time, in most cases no performance data were given, even in the later literature, since metrology was still in its infancy. The author estimated the performance from material and geometrical data and present-day knowledge, making use of the work of Ayrton [11] and King [19]. Of course, the estimated power given for the different batteries up to 1825 has to be reduced if one sets a minimum requirement of about 1000 h for the operational time.

These electrical power sources were also very valuable for establishing the laws of electricity and magnetism in the years to come, as done by the Dane Hans Christian Oersted and the Frenchman André-Marie Ampère in 1820 and later by the German Georg Simon Ohm (1826), the Englishman Michael Faraday (1829, 1852) and finally James Clerk Maxwell (1865) [3].

Another approach to supplying electrical power was based on the conversion of mechanical to electrical energy by moving magnets and induced currents. The first usable machine was built by the Frenchman Hippolyte Pixii in 1832, who turned a permanent magnet in front of a pair of coils, producing an alternating current. In a second machine built in the same year, he introduced a commutator and obtained an undulating direct current [9, 12]. A commercial application arose when a power supply for the electrical arc lamps of lighthouses was needed. In 1857–1858 Prof. Frederick Holmes constructed a magneto-electric machine for the South Foreland lighthouse in the UK. The test version had 36 permanent magnets on 6 wheels, weighed 2000 kg and gave a DC output of 1.8 kW [3] (only 700 W according to [20]). In the final version, two machines with 60 stationary permanent magnets were delivered, now with iron frames instead of wood, weighing 5500 kg. The two wheels with coils were driven by a steam engine through a belt drive [3, 19]. Judging by their weight, these machines were not very efficient.

Werner Siemens in Germany showed, based on an idea of Henry Wilde, that permanent magnets were not necessary to convert mechanical to electrical energy, and in 1866 built his first technically convincing dynamo-electric machine (“Elektrodynamische Maschine”) of table size, which could deliver 26–29 W. Thus the efficiency could be greatly improved, and in the following years more powerful machines were built, for instance the Siemens dynamo of 1877 with a commutator, delivering 20 A at 50 V (1 kW DC) [3, 5, 9, 20]. The Pearl Street power station installed by T. A. Edison in 1882 generated an electric power of 100 kW [3, 4]. In 1883 the company Siemens Brothers installed a dynamo machine with single-phase alternators in London with a power of 250 kW. In 1900 the same company showed a 1.57 MW machine at the Paris Exhibition [3]. As an example of its worldwide activities, Siemens & Halske designed and built the first public power plant of about 4 MW near the coal mines of Brakpan close to Johannesburg in South Africa, which went into operation in 1897 [21]. Each of the three-phase generators delivered 975 kW at 700 V. The power was transmitted at 10 kV to various gold mines. A picture of the machine room is shown in Fig. 1.3.

Machine room in 1897 of the power station Brakpan in South Africa, equipped with Siemens & Halske three-phase generators (975 kW at 700 V), coupled directly to the steam engines [21]. Courtesy of Siemens Historical Institute

Between 1890 and 1910, both the number of power stations and their maximum power increased strongly. At that time DC was dominant, and in order to store energy for low-load intervals, accumulator stations were added, which could store about 15% of the total electrical energy [4]. At the beginning of the twentieth century, the majority of new power stations supplied either single-phase or three-phase alternating current. It has to be pointed out that steam-electric power stations generally need a fuel such as coal for steam generation; hence, chemical energy is converted via thermal and mechanical energy into electrical energy. For a 100 MW turbo generator the amount of coal needed is enormous: 150 tons of brown coal and 350 tons of steam per hour. An early example of such a power plant was Golpa-Zschornewitz in Germany with 45 MW in 1915 (180 MW by 1918), which was later topped by Boxberg (German Democratic Republic) with 3.52 GW in 1966 [9].
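
The coal figure can be put into perspective with a rough energy balance. The sketch below converts the quoted feed of 150 t of brown coal per hour into a thermal input power and an implied overall efficiency. The heating value of about 9 MJ/kg is an assumption (lignite varies strongly with its moisture content) and is not taken from the sources cited here.

```python
# Rough energy balance for a 100 MW turbo generator burning brown coal.
# ASSUMPTION: lower heating value of brown coal (lignite) of about 9 MJ/kg;
# real values depend strongly on the moisture content.

COAL_FEED_T_PER_H = 150.0      # tons of brown coal per hour (from the text)
ELECTRIC_OUTPUT_MW = 100.0     # electrical output of the turbo generator (from the text)
HEATING_VALUE_MJ_PER_KG = 9.0  # assumed value, see comment above


def thermal_input_mw(feed_t_per_h: float, heating_value_mj_per_kg: float) -> float:
    """Chemical power fed into the boiler in MW (1 MW = 1 MJ/s)."""
    kg_per_s = feed_t_per_h * 1000.0 / 3600.0
    return kg_per_s * heating_value_mj_per_kg


thermal = thermal_input_mw(COAL_FEED_T_PER_H, HEATING_VALUE_MJ_PER_KG)
efficiency = ELECTRIC_OUTPUT_MW / thermal
print(f"thermal input ~{thermal:.0f} MW, overall efficiency ~{efficiency:.0%}")
```

With an assumed heating value of 8 or 11 MJ/kg instead, the implied efficiency moves to roughly 30% or 22%, so the sketch only indicates the order of magnitude of the conversion chain from chemical to electrical energy.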

The first large hydroelectric power station was built by Tesla and Westinghouse in 1895 at Niagara Falls, comprising 3 turbine sets with two-phase dynamo machines of 4 MW each [5]. In 1924/25 the Walchensee power station went into operation in Germany, with four three-phase generators delivering 72 MW in total and two single-phase generators of 52 MW [5].

From the power stations the electrical energy was fed into a power grid distributing it to the end users, but DC transmission was limited to shorter distances. The first successful long-distance three-phase transmission took place in 1891 over 175 km from the Lauffen power station to the Frankfurt Electricity Exhibition and was realized by Michail von Doliwo-Dobrowolski of AEG (born in St. Petersburg in 1862) [4]. In 1925 long-distance transmission started using three-phase (rotating) current and high voltages of 110 kV or 220 kV because of the lower losses [4].

The first pioneering nuclear power station, Obninsk near Moscow, was built in the Soviet Union in 1954 and supplied 5 MW. Calder Hall (two reactors) was built in the UK in 1956 with an output of 69 MW (later 180 MW); it was followed by Shippingport in the US in 1957 with 100 MW [10]. In 1974 Biblis A in Germany generated 1.2 GW of electrical power [9], see Fig. 1.4. Today the Kashiwazaki-Kariwa Nuclear Power Plant in Japan, with 7 boiling water reactors and a rated power of 8.2 GW, is the largest in the world. It was completed in 1997 [22]. Nuclear fission, of course, releases far more energy per unit mass than the burning of coal: 1 atom of U235 supplies 200 MeV of energy, compared with 4 eV from the reaction of 1 atom of C12 with O2, which means that 1 g of U235 delivers about 2.5 million times more energy than 1 g of coal. Here the nuclear reactor supplies the thermal energy for the steam-electric power plant and replaces the firing of coal [4]. A drastic intermediate improvement step could be nuclear reactors using fast neutrons, with higher reactor temperatures and much more efficient use of nuclear fuel, which would also strongly reduce the amount and decay times of nuclear waste. Another promising alternative is the molten salt thorium reactor, which is currently being discussed worldwide. Such a reactor was tested by Weinberg and his team in 1965–69 in the USA (based on U233); it relies on the conversion of Th232 to U233 by neutron capture. It has several advantages over the reactor types used so far: Th232 is 4 times more abundant than U238; the reactor type is inherently safer and more efficient; it produces less radioactive waste, which decays faster; and it is not useful for nuclear weapons, although the initial radioactivity of the waste can be higher [23]. A further approach, with about 60% higher efficiency than conventional nuclear reactors, is the fast breeder reactor, which already has a longer history of research and development.
In 2016 the BN-800 fast breeder reactor in Belojarsk in Russia went into operation with a power of 800 MW. Its predecessor, the BN-600, started in 1980. The next reactor of this type there, the BN-1200, is under construction [24]. A still more efficient and safer approach would be nuclear fusion reactors. The first prototype, the International Thermonuclear Experimental Reactor (ITER) in Cadarache (France), is planned to demonstrate feasibility with a gain factor Q = 10 (ratio of fusion power to the heating power injected into the plasma) around 2035 [25]. An international collaboration such as the ITER project is needed, since the problems of maintaining a high-density plasma at temperatures higher than in the interior of the sun for extended periods are tremendous [25].
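
The factor of about 2.5 million quoted above for U235 versus coal follows from the per-atom energies and the molar masses. A minimal check, idealizing coal as pure carbon (an assumption: real coal contains moisture and ash and usually releases less energy per gram than pure carbon, so the ratio for actual coal is somewhat larger):

```python
# Energy released per gram: fission of U-235 vs. combustion of carbon (C + O2 -> CO2).
# Per-atom energies are the values quoted in the text; molar masses are rounded
# mass numbers, and coal is idealized as pure carbon (assumption).

FISSION_ENERGY_EV = 200e6     # ~200 MeV per U-235 fission
COMBUSTION_ENERGY_EV = 4.0    # ~4 eV per C-12 atom oxidized to CO2
MASS_U235 = 235.0             # g/mol
MASS_C12 = 12.0               # g/mol


def energy_per_gram_ev(energy_per_atom_ev: float, molar_mass_g: float) -> float:
    """Energy per gram divided by Avogadro's number (the constant cancels in the ratio)."""
    return energy_per_atom_ev / molar_mass_g


ratio = energy_per_gram_ev(FISSION_ENERGY_EV, MASS_U235) / energy_per_gram_ev(
    COMBUSTION_ENERGY_EV, MASS_C12
)
print(f"U-235 / carbon energy per gram: {ratio:.2e}")
```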

Nuclear power plant Biblis in Germany 1974 [26]; Courtesy of Siemens Historical Institute

One should keep in mind that the large majority of all power plants (86%!) are steam-electric: coal, nuclear, geothermal and solar thermal power plants, waste incineration plants and many natural gas power plants. It should also be mentioned, however, that hydropower stations have meanwhile surpassed the largest nuclear power stations: the Itaipu Dam hydropower plant (Brazil, Paraguay) has delivered 14.2 GW of electric power since 1991 [5, 27], with the dam having created an artificial lake of 1350 km² (total cost 20 billion US$). Besides the flooding of river valleys, there are many more risks associated with the dam itself, as can be seen from the Sayano-Shushenskaya Dam and power plant in Russia (6.4 GW, 1985), where several accidents have already happened. Incidentally, the new Niagara Falls power station has delivered 2.5 GW since 1961. The current world record, however, is held by the Three Gorges Dam hydropower plant in China (2008), with 22.5 GW and an artificial lake of 1000 km² [27].

Figure 1.5 shows the maximum available electrical power per generator/power station as a function of time. After a slow increase in the seventeenth and eighteenth centuries, a steep increase starts in the nineteenth century with the industrial revolution. Before 1820, the red squares are power estimates based partly on rebuilt devices and present-day knowledge. The blue triangles from 1826 on (after the formulation of Ohm’s law) are based on measurements (data based on [3,4,5, 9, 20, 21, 26, 27]). The steeper slope of the blue dashed line is the limiting curve for the final conversion of mechanical to electrical energy based on thermal, hydropower or nuclear power sources. The learning curve for a new technology such as galvanic cells or Siemens generators may be steeper, but initially starts lower. The availability of electrical power is, of course, a prerequisite for the start of vacuum electronics, together with the development of vacuum technology. The increasing demand for electric energy at the end of the nineteenth century was driven by the demand for lighting, followed by motors and electric transportation (tramways).

Availability of electrical power versus time (per power generator/power plant): the red lines show the slow increase in available electrical power until 1800, when it was generated via friction or the conversion of chemical to electrical energy. The steeper slope of the blue dashed line is the limiting curve for the final conversion of mechanical to electrical energy based on thermal, hydropower or nuclear power sources. The learning curve for a new technology such as galvanic cells or Siemens generators may be steeper, but initially starts lower.

In this context we briefly mention alternative sources of energy that are less risky. They did not play a role in the initial advancement of the electrical energy supply, but have become important today. Strictly speaking, the term “renewable energies” is wrong, since physicists are well familiar with the conservation of energy: it should at least be “renewable” or “sustainable energy sources”, meaning permanently available energy supplies such as wind energy or sunlight. The maximum rated power of single offshore wind turbines now reaches 8 MW [28,29,30,31], with blade lengths of up to 80 m; onshore, values of 2–4 MW are typical. Photovoltaic power plants have been realized up to 1 GW peak (166 MW peak Solarkomplex Senftenberg in Germany; 850 MW peak solar plant near Longyangxia in China) [32]. Yet the electric energy supplied fluctuates strongly, the average level is much lower than the peak rating, the problem of storage is not solved, and their expansion is also linked to a strongly increasing area consumption. The strong fluctuations still imply the need for conventional power plants for the baseload [33–35].

It is instructive to look at the following comparison. The area consumption of different kinds of power plants is not only a question of the net ground area used, but also of the required surrounding infrastructure. Take the Biblis nuclear power plant as an example: the total electric power available was about 2.35 GW; had the planned Blocks C and D been realized, 4.7 GW would have been available from an area of about 0.3 km² on the banks of the River Rhine (see Wikipedia [36]). For a comparison with the area requirements of wind power, we refer, for example, to the statistical evaluation of Denholm et al. from the US Department of Energy [37]. In their report they derived an average permanent direct impact area (including permanent clearing area) of 0.3 ha/MW (plus a temporary impact area of 0.7 ha/MW), but a total wind park project area of 34 ha/MW. This larger area arises because the wind turbines must be spaced apart to avoid the turbulent flow that other turbines create in the initially laminar flow. From the first value for the direct impact, one would calculate an area consumption of 14.1 km² for 4.7 GW of wind power capacity, but from the spacing requirement the area consumption is 1598 km², which is already 62% of the German federal state of Saarland. If one takes into account that offshore at best 20% of the nominal power can be realized, the required area reaches about 8000 km², half the area of Thüringen. This should not rule out wind energy as a renewable energy source in the energy mix, but its risks, such as killing flying animals, reducing forest area, changing airflow patterns and an observed drying effect on soil, should be taken into account [31, 37].
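
The area figures in this comparison follow directly from the per-MW values of Denholm et al. [37]. The sketch below reproduces the arithmetic; the area of Saarland (about 2570 km²) is an assumed reference value, and the 20% utilization factor is the one stated in the text.

```python
# Land-area comparison: 4.7 GW nuclear (Biblis incl. planned Blocks C and D)
# vs. the same nominal wind capacity, using the per-MW area figures of [37].

CAPACITY_MW = 4700.0
NUCLEAR_SITE_KM2 = 0.3
DIRECT_IMPACT_HA_PER_MW = 0.3   # permanent direct impact area
PROJECT_AREA_HA_PER_MW = 34.0   # total wind park project area
CAPACITY_FACTOR = 0.20          # "at best 20% of the nominal power" (from the text)
SAARLAND_KM2 = 2570.0           # approximate area of Saarland (assumption)
HA_PER_KM2 = 100.0

direct_km2 = CAPACITY_MW * DIRECT_IMPACT_HA_PER_MW / HA_PER_KM2
project_km2 = CAPACITY_MW * PROJECT_AREA_HA_PER_MW / HA_PER_KM2
energy_equivalent_km2 = project_km2 / CAPACITY_FACTOR

print(f"direct impact area:         {direct_km2:7.1f} km^2")
print(f"total project area:         {project_km2:7.1f} km^2 "
      f"({project_km2 / SAARLAND_KM2:.0%} of Saarland)")
print(f"scaled for 20% utilization: {energy_equivalent_km2:7.0f} km^2")
print(f"nuclear site for comparison:{NUCLEAR_SITE_KM2:7.1f} km^2")
```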

1.1.2 Milestones in Vacuum Technology

The second basic condition for the rise of vacuum electronics is the availability of vacuum and hence of vacuum technology. Already in antiquity, Greek philosophers, especially Demokritos (460–370 B.C.), speculated whether an absolutely empty space might exist, in contrast to matter (made up of indivisible atoms). It was Aristotle (384–322 B.C.) who claimed that nature does not allow total emptiness and that there is a “horror vacui”, which also became a dogmatic belief of the Catholic Church [38, 39].

Only at the beginning of modern times, with a weakening belief in dogmas, could Gasparo Berti and Vincenzo Viviani in Italy explain, in 1641–1643, why suction pumps cannot pump water higher than about 10 m, namely because of atmospheric pressure. Berti first measured this pressure with a water column and demonstrated a vacuum above the column. In 1643/1644 Viviani and Evangelista Torricelli then replaced water with mercury in a thin column and invented the mercury manometer (barometer) [5, 38, 40].
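
The 10 m limit follows from the hydrostatic balance p₀ = ρgh. A short sketch with standard values (standard atmosphere, standard gravity, water at about 1000 kg/m³, mercury at 0 °C) also shows why mercury allowed a compact column of about 760 mm; the historical conditions, such as local air pressure and temperature, differed somewhat.

```python
# Height of a liquid column supported by atmospheric pressure: h = p0 / (rho * g).
# Standard reference values are assumed; historical conditions differed somewhat.

P0_PA = 101_325.0        # standard atmosphere, Pa
G = 9.80665              # standard gravity, m/s^2
RHO_WATER = 1000.0       # kg/m^3
RHO_MERCURY = 13_595.1   # kg/m^3 at 0 °C


def column_height_m(pressure_pa: float, density_kg_m3: float) -> float:
    """Maximum height of a liquid column that the given pressure can support."""
    return pressure_pa / (density_kg_m3 * G)


h_water = column_height_m(P0_PA, RHO_WATER)
h_mercury = column_height_m(P0_PA, RHO_MERCURY)
print(f"water: {h_water:.2f} m, mercury: {h_mercury * 1000:.0f} mm")
```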

In his experiments with the first air pump in 1641, Otto von Guericke initially could not remove water from a wooden barrel by pumping, because the barrel was, of course, not airtight. He then replaced the barrel with two iron half-spheres that fitted exactly onto each other, but they withstood the air pressure only when he used thicker material, and then he was able to evacuate them. This was arguably the first vacuum chamber. In 1654 and 1656 he showed experiments with evacuated half-spheres at the Imperial Diets (Reichstage) in Regensburg and Würzburg. In 1657 he conducted his famous Magdeburg half-spheres experiment (now with copper half-spheres), also using a solid piston pump for evacuation and reaching a rough vacuum of an estimated 13 mbar. Sixteen horses could not pull the evacuated half-spheres apart. Guericke’s work was first reported in the books of Caspar Schott (professor in Würzburg, to whom Guericke had sent his results) in 1657 and 1664, and in an extended version by Guericke himself in 1672 [8, 38, 39, 41].
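
Why the horses failed can be estimated from the pressure difference acting on the cross-section of the sphere. The text gives no dimensions, so the sketch below assumes a hypothetical diameter of 0.5 m together with the estimated residual pressure of 13 mbar; the result scales with the square of the diameter.

```python
import math

# Force holding two evacuated hemispheres together: F = (p_out - p_in) * pi * r^2.
# ASSUMPTION: diameter 0.5 m (hypothetical, not stated in the text);
# residual pressure 13 mbar as estimated in the text; outside pressure 1013 mbar.

P_OUTSIDE_MBAR = 1013.0
P_INSIDE_MBAR = 13.0
DIAMETER_M = 0.5          # hypothetical illustrative value
MBAR_TO_PA = 100.0
G = 9.81                  # m/s^2

delta_p_pa = (P_OUTSIDE_MBAR - P_INSIDE_MBAR) * MBAR_TO_PA
area_m2 = math.pi * (DIAMETER_M / 2) ** 2   # projected cross-section, not the curved surface
force_n = delta_p_pa * area_m2
print(f"holding force ~{force_n / 1000:.1f} kN (weight of ~{force_n / G / 1000:.1f} t)")
```

For this assumed size the holding force is of the order of 20 kN, comparable to the weight of about two tonnes.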

In 1660 Robert Boyle, with the help of Robert Hooke, improved the solid piston (air) pump, added a U-tube Hg manometer to the vessel and thus reached 8 mbar. Such Hg manometers are capable of measuring vacuum down to 1 mbar and, with later improvements, down to 0.1 mbar [40]. Despite many new insights, for example the Boyle–Mariotte law for ideal gases, the attainable vacuum improved only slowly during the next 200 years, as can be seen in Fig. 1.6. Only the replacement of the solid piston vacuum pumps used by von Guericke by mercury piston pumps, first used by the glassblower Heinrich Geissler from Bonn (Germany) in 1855/56 and reaching 0.13 mbar, initiated an accelerated improvement of the ultimate vacuum [38, 40].

In 1865 Sprengel [40] devised a pump in which a train of mercury droplets trapped packets of gas in a glass tube and carried the gas away, thus reaching 1.3 × 10⁻² mbar. This type of pump was continuously improved in the following decades by several researchers such as William Crookes and finally G. Kahlbaum, who reached 5 × 10⁻⁶ mbar in 1894 [40].

This progress also required improved pressure measurement, which was achieved by McLeod in 1874. The McLeod gauge permits pressure measurements down to 10⁻⁶ mbar. It is based on compressing the gas with a mercury column to an easily measured higher pressure and using Boyle’s law to calculate the original pressure [40].
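
The Boyle’s-law evaluation can be sketched as follows, assuming the common “quadratic scale” reading of a McLeod gauge: gas of volume V at the unknown pressure p is compressed into a closed capillary of cross-section a until the mercury in the reference capillary stands level with the closed end, so that the trapped gas occupies a length h at a pressure p + h (in mmHg). Boyle’s law pV = (p + h)ah then gives p = ah²/(V − ah). The bulb volume and capillary bore below are hypothetical values chosen only for illustration.

```python
import math

# McLeod gauge, "quadratic scale" reading (assumed mode of operation):
# Boyle's law p * V = (p + h) * a * h  =>  p = a * h^2 / (V - a * h),
# with pressures in mmHg (Torr) and lengths in mm. Geometry is hypothetical.

TORR_TO_MBAR = 1.33322


def mcleod_pressure_torr(h_mm: float, bulb_volume_mm3: float, capillary_area_mm2: float) -> float:
    """Original pressure in Torr from the length h (mm) of the compressed gas column."""
    compressed_volume = capillary_area_mm2 * h_mm
    return capillary_area_mm2 * h_mm**2 / (bulb_volume_mm3 - compressed_volume)


bulb_volume = 500_000.0                # 500 cm^3 bulb, hypothetical
capillary_area = math.pi * 0.25**2     # 0.5 mm bore capillary, hypothetical
for h in (1.0, 10.0, 100.0):
    p = mcleod_pressure_torr(h, bulb_volume, capillary_area)
    print(f"h = {h:5.1f} mm -> p = {p * TORR_TO_MBAR:.2e} mbar")
```

Because p grows with h², one instrument can span several decades of pressure, which helps explain the wide measuring range of this gauge type.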

At the beginning of the twentieth century, the German physicist Wolfgang Gaede developed several new types of vacuum pumps that revolutionized vacuum technology [38]. His inventions were initially triggered by the need for better vacuum for investigations of metal surfaces. It started with the development of the motor-driven rotary oil pump (fore pump), reaching 2 × 10⁻² mbar, which was manufactured by the Leybold company from 1907 on. The next step was the invention of the Hg rotary vane pump, also in 1905, capable of reaching about 2 × 10⁻⁶ mbar with a fore pump as above. Gaede introduced the molecular drag pump in 1913, achieving 4 × 10⁻⁷ mbar, and the mercury diffusion pump in 1915 [40, 42]. In 1916 I. Langmuir further increased the pumping speed of the Hg diffusion pump, as needed for industrial applications, and in 1918 R. Sherwood reached 2.7 × 10⁻⁸ mbar with an improved version [42].

For such low pressures, the hot-filament ionization gauge was invented by O. E. Buckley in 1916, measuring down to 1.3 × 10⁻⁸ mbar [43]. In the next 30 years, only slow progress was made concerning the ultimate vacuum. In 1937 Hunt described methods to reach ultrahigh vacuum (UHV, ranging from 10⁻⁷ to 10⁻¹¹ mbar), including baking and the use of getters, but the sensitivity of gauges was not sufficient for pressures lower than 10⁻⁸ mbar. From 1935 to 1950, various getters were introduced which, after sealing and activation, helped to pump down the tubes further during operation. It was finally recognized that the limited sensitivity of the gauges was related to the creation of soft X-rays at the collector and a superimposed photoelectron current [42, 44]. A breakthrough in pressure measurement sensitivity was the Bayard–Alpert gauge, which lowered the X-ray limit drastically by reducing the surface area of the collector. It was invented by R. Bayard and D. Alpert and is able to measure down to 10⁻¹¹ mbar [44]. Improvements in pumping soon followed. Gaede’s molecular pump was developed further into a multistage turbo-molecular pump by W. Becker of the company Pfeiffer Vakuum in 1958, with attainable vacua now in the range of 10⁻¹⁰ mbar [45]. Also in 1958, L. Hall of the company Varian introduced the ion getter pump, which is capable of reaching 10⁻¹⁰ mbar after first pumping down with a high vacuum pump and baking the vacuum chamber [46]. Further improvements of the Bayard–Alpert design reduced the X-ray limit further and allowed pressures below 10⁻¹² mbar to be measured. The years from 1950 to 1970 were very fruitful for vacuum science and technology. Residual gas analysis was introduced by W. Paul through the application of quadrupole mass filters. The ultimate vacuum was further reduced. Hobson [47] had already reported 1 × 10⁻¹⁴ mbar in a small glass system cooled to 4.2 K, measured with a Bayard–Alpert gauge. This value was reached again by W. Thompson and S. Hanrahan in 1977. Extreme high vacuum (XHV) results of 4 × 10⁻¹⁴ mbar were obtained by C. Benvenuti in 1977 and 1993 [44, 48]. In 1989 H. Ishimaru of Japan obtained 5 × 10⁻¹³ mbar by using a turbo-molecular pump for pumping down, carefully baking the Al chamber and maintaining XHV with two ion getter pumps and a titanium sublimation pump. This is the lowest value for chambers at room temperature. For the measurement, he used a point collector gauge [49].

The lowest pressure reported so far, 6.7 × 10⁻¹⁷ mbar, was determined indirectly from the storage of antiprotons in a helium-cooled Penning trap by G. Gabrielse et al. in 1990 at CERN [50]. Using cooled Paul ion traps, top results were also achieved more recently by Schwarz et al. in 2012 [52] and Micke et al. in 2019 [51], both claiming a pressure range of 1 × 10⁻¹⁵ to 1 × 10⁻¹⁴ mbar (1 × 10⁻¹³ to 1 × 10⁻¹² Pa). For accelerator applications, a new pump type has also been introduced, namely linear non-evaporable getter (NEG) pumps, as explained in the review by Benvenuti [53].

The ultimate vacuum reached as a function of time is shown in Fig. 1.6, which is an update by G. Gaertner (see [2]) based on [2, 42, 44, 48, 51, 52]. It is a typical development function with some prominent milestones, showing a slow decrease of the ultimate vacuum reached (logarithmic scale) from 1650 to 1850 (blue broken line), followed by a much steeper decrease from then on (red broken line, consistent with an exponential fit), partly motivated by the improvement drive of incandescent lamps and later of radio tubes and CRTs. There are indications that progress is currently slowing down, since all pressure values below 10⁻¹³ mbar were obtained with cryo-cooled ion traps.
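
An exponential decrease of the ultimate pressure corresponds to a straight line in log₁₀(p) versus year. As an illustrative sketch only (not the data set behind Fig. 1.6), the code below fits such a line by ordinary least squares to a subset of the milestone values quoted in this section, from Geissler’s pump onwards and excluding the cryogenic ion-trap results. The choice of points is an assumption and changes the fitted slope.

```python
import math

# Exponential trend of the ultimate vacuum, log10(p) = intercept + slope * year,
# fitted by ordinary least squares to milestone values quoted in this section
# (1855 onwards, cryogenic ion-trap results excluded). Illustrative subset only.

MILESTONES = [          # (year, ultimate pressure in mbar)
    (1855, 0.13),       # Geissler, Hg piston pump
    (1865, 1.3e-2),     # Sprengel pump
    (1894, 5e-6),       # Kahlbaum
    (1913, 4e-7),       # Gaede, molecular drag pump
    (1918, 2.7e-8),     # Sherwood, improved Hg diffusion pump
    (1958, 1e-10),      # turbo-molecular and ion getter pumps
    (1989, 5e-13),      # Ishimaru, room-temperature Al chamber
    (1993, 4e-14),      # Benvenuti
]


def fit_log_linear(points):
    """Least-squares fit of log10(p) against year; returns (intercept, slope)."""
    xs = [year for year, _ in points]
    ys = [math.log10(p) for _, p in points]
    n = len(points)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope


intercept, slope = fit_log_linear(MILESTONES)
print(f"slope: {slope:.3f} decades/year, i.e. one decade every {-1 / slope:.0f} years")
```

The reciprocal of the slope gives the number of years needed per decade of pressure reduction for the chosen points.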

1.2 Historical Development of Vacuum Electron Tubes

1.2.1 The Rise of Incandescent Lamps

The development of vacuum tubes was first driven by lamp applications, although the early lamps were not yet suited for practical use. In 1840 William Robert Grove from the UK invented the incandescent lamp, which was further improved by J. W. Starr from the US in 1845 (US patent) and by Joseph Swan from the UK in the years 1860–1878, who obtained a UK patent on it in 1878 [5]. In 1854 the German mechanic Heinrich Goebel built incandescent lamps using carbonized bamboo fibers in his shop in New York, with a claimed lifetime of up to 400 h. The industrial prospects of the practical application culminated in a lawsuit in which the inventors Edison and Swan were also involved. Goebel finally obtained a US patent on his lamp in 1893, shortly before he died [5, 9]. Edison, however, had pioneered the industrial application and was already selling light bulbs for 50 cents in 1883; industrial production of incandescent lamps then started and experienced a continuous rise in production numbers in the following years. The competition and the patent suits between Swan and Edison eventually led to the foundation of the Edison and Swan Electric Light Company [9].

Figure 1.7 shows the annual production rate of incandescent lamps and electron tubes in the US from 1880 to 1940, as given by P. A. Redhead in his instructive review [54]. Since the luminous efficacy of incandescent lamps was doubled to 10 lm/W by replacing carbon filaments with tungsten filaments, they became the dominant lamp type from 1910 on. We also see that electron tube production started slowly in 1905 and made use of the existing vacuum technology for the mass production of incandescent lamps, which were still produced as vacuum lamps. This is addressed in the next section. The technological cycle of incandescent lamps continued for the next hundred years and only recently started to decline owing to the advent of energy-saving LED lamps [2].

1.2.2 The Early History of Vacuum Tubes and the Radio Tube Era

The development of the first vacuum electron tubes started somewhat later than lamp development. In 1854 the glassblower Heinrich Geissler from Bonn in Germany invented the Geissler tubes with platinum wire feedthroughs, which he evacuated with his mercury pump to a residual gas pressure of a few mbar. Using such a Geissler tube with 2 electrodes and a Ruehmkorff inductor, the German physicist Julius Pluecker invented the gas discharge lamp [5, 9]. In 1859 Pluecker, later together with Hittorf, experimented with invisible cathode rays, which he called “glow rays” or “Glimmstrahlen” [5]. The name “cathode rays” or “Kathodenstrahlen” was first introduced by the German physicist Eugen Goldstein in 1876. In 1878 the Englishman Sir William Crookes was the first to confirm the existence of cathode rays by displaying them, with his invention of the Crookes tube, a crude prototype of all future cathode-ray tubes [5, 55]. In 1897 J. J. Thomson proposed that the cathode rays were composed of negatively charged fragments of atoms, which he called “corpuscles” and which we call electrons [56].

In 1883 Thomas Alva Edison from the USA discovered current flow to a collector plate in a light bulb and obtained a patent on such a diode for voltage regulation. From then on, progress accelerated with a view to industrial applications [54].

While experimenting with focused high-energy cathode rays, Wilhelm Conrad Röntgen discovered X-rays in 1895. The modern form of the X-ray tube was pioneered by William Coolidge (USA) in 1908, who used a thermionic tungsten wire cathode surrounded by a Wehnelt cylinder as the electron source [5, 9].

In 1897 the German Karl Ferdinand Braun invented the CRT oscilloscope using a sideways anode, deflection plates, a magnetic focusing field and a phosphor screen—the Braun Tube was the forerunner of today’s television and radar tubes [5, 9].

In 1904 J. A. Fleming first introduced diodes for the detection and rectification of high-frequency electromagnetic waves in telegraph receiver stations in the UK, in parallel with A. Wehnelt’s rectifier tubes in Germany. This detection method turned out to be much more efficient than the “coherer” used before. The next step was the triode, introduced by the addition of a grid in 1906 by Lee de Forest in the USA, in parallel with Robert von Lieben in Austria; it was used to amplify weak electrical signals by modulating a much larger electron current. The tetrode with two grids was first suggested by the German Walter Schottky in 1916. The pentode was patented by Bernard Tellegen of Philips in the Netherlands in 1926 [57]. In 1920 medium-wave radio transmission started in the USA. Now, after a long phase of basic inventions, industrial applications began to take off [54]. The number of grids was further increased, and tubes with up to nine grids were built. Besides amplification, tubes could also be used as oscillators, as first shown by A. Meissner in 1913 [9].

Tube applications expanded from valves in receivers for wireless telegraphy and telephony and from telephone repeaters to radio receivers and radio transmitters.

An interesting development was the Loewe multiple tubes (“Loewe-Mehrfachröhren”), in which several (up to three) tubes were put into one bulb together with passive components such as resistors and capacitors [2]. As the first integrated tubes they were forerunners of integrated circuits, but they were not commercially successful. Double triodes or double pentodes in one bulb, however, were quite common. In the late 1930s, several thousand different types of radio tubes were manufactured in Europe and the US by various companies. During World War II, radar applications (also using RF magnetrons) became very important.

Figure 1.7 shows the annual production rate of incandescent lamps and electron tubes in the US from 1880 to 1940 as given by P. A. Redhead in his instructive review [2, 54]. Electron tube production started slowly in 1905 and made use of the existing vacuum technology for the mass production of incandescent lamps. At the beginning of the twentieth century, tube developments were dominated by radio tubes, which were used as multi-grid tubes in transmitter and receiver sets. During World War I, they received their first boost through military applications. In 1917, half a million tubes were produced in the US, mostly used in stationary amplifiers for wire telephony. In France, 300,000 such tubes were manufactured in 1918. By 1932, Philips alone had produced over 100 million tubes (about 15 million in 1932) and 1 million radio receivers, and at that time was the world’s largest manufacturer of radios and Europe’s largest manufacturer of radio tubes [58, 59]. In the years before, Philips had acquired Valvo in Germany (1925) and 50% of Mullard in the UK (1924) [60]. Other important tube companies were, for example, RCA, Westinghouse, GE and Western Electric in the US, GEC in the UK, AEG Telefunken and Siemens in Germany, Tungsram in Hungary and Tokyo Electric (Toshiba) in Japan. Figure 1.8 illustrates the dimensional range of Philips pentodes from 1937 (which were more efficient than tetrodes) used for transmission, ranging from 15 W (left) to 15 kW (PA 12/15, right, with a length of 61 cm), for frequencies ≤20 MHz. An even larger Philips transmitting tube was the TA 20/250, rated at 250 kW, with a length of 1.4 m including the water cooler [61]. In World War II radar applications became decisive. Radio tubes reached their culmination in the 1940s–1960s, with about 1.2 billion tubes produced per year around 1950 [1, 55, 57, 62]. Receiving tubes found a new application in television sets.
The history of radio tubes is presented in more detail in the books by G. E. J. Tyne (“Saga of the Vacuum Tube” [62]), J. W. Stokes (“70 Years of Radio Valves and Tubes” [63]) and S. Okamura (“History of Electron Tubes” [57]). Unfortunately, Tyne’s book only covers the period until 1930, but it gives a good overview of the types of tubes produced and of tube company history, with detailed references. Stokes’s book is mainly centered on the USA and the UK, including the major companies, and covers the whole radio tube era with detailed references. Okamura’s overview mainly deals with Japan and the USA, includes electron tubes in general and also gives some commercial information, but only a few references.

Series of Philips pentodes from 1937 with outputs from 15 W (the smallest) to 15 kW (water-cooled PA 12/15 to the right, the length of which is about 61 cm). From H. G. Boumeester, Philips Technical Review 2/4 (1937) 115–121, Fig. 6 [61].

During the radio tube era, the cathodes were also improved. Since tube pressures in the early years were at best in the range of rough (pre-) to high vacuum, tungsten and later thoriated tungsten were primarily used as thermionic cathodes, as they are less sensitive to emission poisoning by residual gases. With further improvements of vacuum techniques by W. Gaede, I. Langmuir and others, electron tubes with oxide cathodes, first introduced by A. Wehnelt in 1904 [2, 64, 65], became feasible. From 1920 on, the commercial application of oxide cathodes spread rapidly owing to their lower operating temperature (“dull emitters” instead of the W and Th/W “bright emitters”), further enabled by the introduction of getters and the use of alkaline earth carbonates. This led to an oxide-cathode monopoly in radio-receiving valves, but oxide cathodes could not be used for high-voltage applications (>5 kV) due to arcing. The emission efficiency of a directly heated oxide cathode, about 40–100 mA/W, was much higher than that of thoriated tungsten (6–30 mA/W) and tungsten cathodes (1.7–4 mA/W) in commercial receiver and transmitter valves in the 1930s. The efficiency values of course depend on, for example, rated life, emitter geometry and emission capability, especially for the improved later cathode versions. Boumeester of Philips in 1937 gives values of 3–8 mA/W for tungsten cathodes, 80 mA/W for thoriated tungsten and 200–300 mA/W for (alkaline earth) oxide cathodes [61]. Cathode and materials knowledge at the end of the radio tube era was summarized in the books by Fred Rosebury (“Handbook of electron tube and vacuum techniques”, American Institute of Physics 1993, originally MIT 1964 [60]) and by Walter H. Kohl (“Materials and techniques for vacuum devices”, Reinhold Publishing Corp., New York 1967 [64]).
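To see why the “dull emitters” displaced tungsten so quickly, the efficiency figures can be inverted into the heating power needed for a given emission current, P = I/η. The short Python sketch below does this for a hypothetical 10 mA receiver-valve emission current, taking the upper ends of the 1930s ranges quoted above; the current value and the function names are illustrative assumptions, not data from the cited sources.

```python
# Heating power needed for a given emission current: P_heat = I_e / eta,
# with the emission efficiency eta in mA/W (upper ends of the 1930s ranges above).
EFFICIENCY_MA_PER_W = {
    "tungsten": 4.0,             # quoted range: 1.7-4 mA/W
    "thoriated tungsten": 30.0,  # quoted range: 6-30 mA/W
    "oxide": 100.0,              # quoted range: 40-100 mA/W
}


def heating_power_w(emission_ma: float, efficiency_ma_per_w: float) -> float:
    """Return the heating power (W) needed to obtain emission_ma (mA)."""
    if efficiency_ma_per_w <= 0:
        raise ValueError("efficiency must be positive")
    return emission_ma / efficiency_ma_per_w


if __name__ == "__main__":
    target_ma = 10.0  # hypothetical emission current of a receiver valve
    for cathode, eta in EFFICIENCY_MA_PER_W.items():
        print(f"{cathode:>18}: {heating_power_w(target_ma, eta):.2f} W")
```

Under these assumptions tungsten needs about 2.5 W of heating power, thoriated tungsten about 0.33 W and the oxide cathode about 0.1 W, a factor of 25 between tungsten and oxide.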

In the 1960s, with the development of Si technology and integrated circuits at Texas Instruments and Bell Labs and their commercialization, electron tubes were gradually replaced by transistors, and the era of radio valves approached its end (Fig. 1.9). The first computers were still based on tube technology and were quite bulky, but tubes offered no path toward miniaturization.

Historical trends/technological waves in vacuum electronics and neighboring fields according to [2].

1.2.3 The Technological Cycle of Cathode Ray Tubes

During the zenith and decline of radio tubes, the production of cathode-ray tubes (CRTs) for displays started to rise. This is illustrated in Fig. 1.9, where CRTs appear as the second technological vacuum-tube wave [2]. The picture tube was the basis of conventional television from its beginning around 1939 until about 2007 and experienced a continuous increase in production numbers after 1945. It was a technological extension of radio tube technology, based on the multi-grid electron gun, to which magnetic deflection of the electron beam was added, with a fast horizontal sweep and vertical stepping, the beam spot landing on the phosphor-dot screen. Soon new features were introduced, such as color (using three guns for red, green and blue phosphor dots), larger screen sizes, changes in format, and higher brightness and resolution [1, 66,67,68,69,70, 59]. Figure 1.10 shows a schematic cross-section of a television tube (TVT). The essential parts are the electron gun with the cathodes (three cathodes in the case of a color TVT, for red, green and blue), the magnetic yoke (coils) for beam deflection, the shadow mask and the phosphor screen, on which the pictures are displayed in a 50–100 Hz sequence. The cathodes used in CRTs were mainly oxide cathodes; the remaining approximately 10% were impregnated cathodes used for higher loads. At the zenith of CRT production, Philips alone produced about 200 million oxide cathode units (0.65 W units) per year. The electron gun part could be tested separately in the form of dummy tubes and is essentially based on radio tube technology. In the competition with flat displays, the last improvements were super-flat screens and super-slim tubes with a shorter electron gun, e.g. by LG.Philips Displays. High-definition television was also realized in prototypes at Philips and other companies as early as 1996. The additional peak in Fig. 1.9 after 1990 was due to the application as computer monitors, culminating in 260 million CRTs sold in 1999 [2, 58]. Before the onset of color monitor tube (CMT) production, the seven largest CRT and color TV (CTV) set producers in the world in 1987 were Philips (the Netherlands, 8.6 million sets/year), Thomson (France, 6.8 million sets/year), Matsushita (Japan, 4.7 million sets/year), Sony (Japan, 3.8 million sets/year), Toshiba (Japan, 3.2 million sets/year), Hitachi (Japan, 3.1 million sets/year) and Samsung (South Korea, 2.5 million sets/year) [70]. Philips had acquired Sylvania/GTE from the USA in 1980. In 1987 Thomson had taken over the radio and TV business of GE and the former RCA. In 1999 Zenith (USA) was bought by LG from South Korea. Due to these concentrations and the rise of Chinese producers, the picture had changed somewhat by 1999: in television CRTs, Philips (17.5% by value) and Thomson (12.8%) still led in market share, followed by Sony (12.5%), Matsushita (12%), Samsung (11%) and LG (7.8%). In monitor CRTs, however, Samsung had taken the lead (19% by value), together with Chunghwa Picture Tubes (CPT, 17.5%) and LG (15%), followed by Sony (9.5%), Philips (8.5%) and Hitachi (7.5%) [67]. The decline began in 2001, when color monitor tubes (CMTs) were phased out and replaced by flat and thin liquid-crystal displays (LCDs). Unlike at the end of the radio tube era, when production sites could, for example, be switched to TV tube production because of the similar technology, LCD technology was disruptive and, moreover, too expensive for production in Europe or the US. The CRT companies therefore used several strategies for survival in a shrinking market. Many cross-license agreements already existed. At that time the largest TVT producers were the newly founded LG.Philips Displays with a market share of about 24% (by number of CRTs), Samsung SDI with 21%, and Matsushita, Sony and TTD (former Thomson) with about 9% each.
Concerning CMTs, Samsung SDI was leading with about 27%, followed by LG.Philips Displays with 24% and Chunghwa Picture Tubes (CPT) with 20% [68, 69]. In the years after 2000, CRTs experienced severe price erosion of up to 30% for CMTs and up to 15% for TVTs, exerting strong cost-down pressure. Factories had to be closed down or shifted to countries with lower wages. This was especially a problem for factories and producers in Europe, where many workers lost their jobs, since LCDs were also much cheaper to fabricate in East Asia. Hitachi stopped its CMT production in 2001 and its TVT production in 2002. Former rivals merged their businesses: after LG and Philips, Matsushita and Toshiba followed in 2002. LG.Philips Displays went bankrupt in 2006 and its factories in Europe were closed down. In this scenario of a dying industry, the post-mortem antitrust fine of the EU Commission in 2012 [71] was incommensurate: it was not linked to the damage and hence to the profits of the cartel (but instead to turnover, with the cartel already starting in 1996/1997), it did not help the people in Europe who had lost their jobs years before, and it may have helped to shrink the European company Philips further. Of course, cartel formation cannot be tolerated, but with respect to CRTs after 2000 it resembled passengers monitoring the water level in a sinking boat. In the end the cartel was inefficient, did not stick to its “agreements” and could not stop the inevitable loss of profitability. Ironically, one of the founders of the cartel, who became the whistleblower, was not fined but was sentenced in the US over a separate LCD cartel. Only companies with sizeable LCD production could remain in the TV and color display business.

Despite the lower price of television tubes, CRTs were replaced in the following years by flat-screen devices such as LCDs and plasma panels, and LCDs became dominant in 2007. New features such as high- and super-high-definition television, much larger screen sizes and three-dimensional television were introduced.

1.2.4 The Continuous Progress of Other Noncyclic Vacuum Electron Tubes

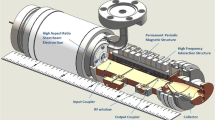

Not affected by the decline of radio tubes and later of cathode-ray tubes (CRTs) were other types of vacuum tubes that experienced continuous progress, such as X-ray tubes, microwave tubes at high powers and high frequencies, electron beam devices for materials characterization and processing, ion propulsion systems, particle accelerators and thermionic converters. Most of these are described in more detail in the book “Vacuum electronics” edited by Eichmeier and Thumm [1] and in [2]. Importantly, vacuum electron devices (VEDs) are superior to solid-state devices in the high-power and high-frequency domain, also with respect to reliability and life, as pointed out in [2, 56], and hence there is no threat of replacement. In [72, 73] J. H. Booske gives a limit of the “power density” p_avg × f² = p_avg × c²/λ² (used as a figure of merit; f² = (c/λ)² serves as a measure of the inverse interaction area) with respect to cost and reliability between commercial solid-state amplifiers and vacuum electronic amplifiers of

Above this line, vacuum electronic devices (VEDs) are superior in the high-frequency, high-power region. There are three reasons for this. First, in solid-state devices (SSDs) the electron current generates heat via collisions in the solid, which is not the case for an electron beam in vacuum. Second, voltages in an SSD are limited to avoid breakdown, whereas these voltage limits are much higher for VEDs. Third, VEDs can be operated at higher temperatures than SSDs. There is also a limit in the scaled “power density” for vacuum electronic devices, according to J. H. Booske, of

which is more than 7 orders of magnitude higher than the corresponding relation for SSDs. High-magnetic-field gyrotrons and free-electron lasers (FELs) approach this line, as is best shown in a double-logarithmic plot of power versus frequency such as that given by Booske [72, 73]. This figure of merit of VEDs has also improved over time, but mainly due to the introduction of new tube concepts.
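The figure of merit itself is easy to evaluate. The minimal Python sketch below computes p_avg·f² in W·GHz², either from the frequency or from the free-space wavelength via f = c/λ, and applies it to the 100 W, 200 GHz design case discussed later in this chapter. The function names are hypothetical, and the numerical SSD and VED limit lines from [72, 73] are deliberately not reproduced here.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def pf2_w_ghz2(p_avg_w: float, f_ghz: float) -> float:
    """Figure of merit p_avg * f^2 in W*GHz^2."""
    return p_avg_w * f_ghz ** 2


def pf2_from_wavelength(p_avg_w: float, wavelength_m: float) -> float:
    """Same figure of merit from the free-space wavelength, using f = c / lambda."""
    f_ghz = SPEED_OF_LIGHT_M_S / wavelength_m / 1e9
    return pf2_w_ghz2(p_avg_w, f_ghz)


if __name__ == "__main__":
    # 100 W CW at 200 GHz, the terahertz design case discussed later in the chapter
    print(f"{pf2_w_ghz2(100.0, 200.0):.2e} W GHz^2")  # 4.00e+06
    wavelength_m = SPEED_OF_LIGHT_M_S / 200e9          # about 1.5 mm
    print(f"{pf2_from_wavelength(100.0, wavelength_m):.2e} W GHz^2")
```

For that case p_avg·f² = 4 × 10⁶ W·GHz²; such a value can then be compared with the limit lines given in [72, 73].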

In Fig. 1.11, total annual sales (in billions of €) are plotted versus time for three important vacuum tube types, namely microwave tubes, X-ray tubes and lamps (fluorescent/CFL and incandescent; converted update of [1]). The phasing out of incandescent lamps has started due to national energy-saving legislation. The rise of LEDs is also shown; these data exclude fixtures, controls and automotive applications [74,75,76,77,78]. One can also see that the world markets for microwave tubes and X-ray tubes remain nearly constant over time.

Total annual sales (in billions of €) versus time for three important vacuum tube types, namely microwave tubes, X-ray tubes and lamps (fluorescent/CFL and incandescent, compare [1]). The phasing out of incandescent lamps has started due to national energy-saving legislation. The rise of LEDs is also shown; the data exclude fixtures, controls and automotive applications (for the lighting world market see [74,75,76,77,78]).

Besides the boost that vacuum and cathode technology received during the radio tube and CRT eras, these baseline applications also require, and will continue to trigger, further improvements. Advanced requirements on cathodes arise not only in the high-power/high-frequency region of mm-wave devices, e.g. for terahertz imaging, but also in electron beam lithography, particle accelerators and thermionic converters, which we will discuss in the next section.

1.3 Historical Development and Improvement Directions of Modern Vacuum Electron Sources

During the progress of vacuum electronics, we also see a continuous improvement of the emission capability of thermionic cathodes from 1910 to 2020, as shown in Fig. 1.12. In the last century, practically all cathodes used were thermionic cathodes, which have been reviewed in [1, 79,80,81,82,83,84]. The leveling-off after 2004, especially of oxide cathode improvement, is mainly due to the reduction of research efforts after the decline of the CRT era. Since tube pressures were at best in the range of rough (pre-) to high vacuum (10⁻³ to 10⁻⁷ mbar), tungsten and later thoriated tungsten (Th-W) were the thermionic cathodes used for more than a decade at the beginning of the twentieth century, as they are less sensitive to emission poisoning by residual gases; this holds especially for tungsten. This is the main reason why tungsten is still used in X-ray tubes (see Chap. 5 by R. Behling). Th-W emission could also be increased by improved vacuum and controlled carburization of the tungsten base before activation [1, 80].

Historical development of thermionic cathode emission capabilities: an update. The plot shows on the vertical axis the maximum current density achieved for specific cathodes versus the year of achievement on the horizontal axis. The lower red line shows the evolution of DC emission of oxide cathodes (red circles). The upper blue line interpolates the DC emission of metal matrix-based cathodes including Ba dispenser cathodes (blue squares) and Ba scandate cathodes (violet diamonds). (The condition is an operational lifetime of ≥20,000 h at the given DC emission current density jdc.) The open symbols are pulsed emission data for different cathodes including Ba scandate cathodes, for which the life requirement is ≥4000 h; compare [1, 2]. The abbreviations are explained in the text.

With further improvements of vacuum techniques by W. Gaede, I. Langmuir and others, electron tubes with oxide cathodes became feasible in the 1930s [65]. They continued to be used in CRTs because they are rather cheap to produce, being prepared by spray coating of the carbonates onto indirectly heated Ni caps. Although they have a very low work function of about 1.5 eV and hence a much lower operating temperature than W and Th-W, they are only suitable for lower DC current densities because of their low electrical conductivity as semiconductor-based materials. Nevertheless, their DC loadability has also been increased steadily over time, as can be seen from the lower red line in Fig. 1.12. This improvement in performance was not only due to improved vacuum, but was achieved by different forms of doping with rare earth oxides (SO and SF cathodes), especially with Eu2O3, Y2O3 and Sc2O3, and also by the addition of Ni particles to the porous BaO·SrO coating (oxide plus cathode of Philips). Besides increased emission and life, the main improvement direction is a further increase in DC loadability. A further modification was the reservoir oxide cathode investigated by Lemmens and Zalm [85] and later by G. Medicus and others [1], which did not really become competitive. More details on oxide cathodes can be found in Chap. 4.

The next boost in emission current density came with the introduction of reservoir and impregnated Ba dispenser cathodes in the 1950s, which could be used in tubes with ultra-high vacuum. This innovation in the cathode field was mainly achieved by Philips (linked to CRT research and development), as can be seen from the invention of the L-cathode (reservoir Ba dispenser cathode) in 1949 by H. Lemmens et al. and of the first impregnated (I) Ba dispenser cathodes (S, also B cathode) by H. Levi in 1955 [1]. In 1966 P. Zalm et al. introduced the so-called magic (M) cathode with an Os/Ru top layer on a tungsten base impregnated with Ba–Ca–aluminate [1]. Similar improvements were obtained with top layers of Ir and, to a lesser extent, of Re, and also with alloys of the elements Os, Ir, Re and W in the form of a coating or as constituents of the base matrix [80]. These Ba dispenser cathodes are the subject of Chap. 2 of this book and are mostly used in long-life microwave tubes for space applications. The performance data of different types of thermionic cathodes applied in commercial tubes are listed in Table 1.1 in the order of their introduction. Lifetime is specified for the DC current densities given, which are the limiting condition especially for oxide cathodes. The lifetimes given in Table 1.1 depend not only on DC load and temperature, but also on the design, for Ba dispenser cathodes especially on the pellet thickness (impregnant reservoir), see [86]. These data are partly also the basis for Fig. 1.12.

Other variants are the so-called metal alloy dispenser cathodes such as Re2Th, Pt5Ba, Pd5Ba, Ir2La and Ir5Ce, which were pioneered by B. Djubua and colleagues in Russia [88]; they rely on monolayer films of Th, Ba, La or Ce, respectively, which form on the surface of the alloy and reduce its work function. In particular, Ir2La and Ir5Ce, with work functions of about 2.2 eV, are capable of delivering 100 A/cm² pulsed for 1000 h and 10 A/cm² pulsed for 10,000 h [88].

A variant of the Ba dispenser cathode promising still higher emission capability is the scandate cathode, first introduced by L. Figner in 1967 in Russia in the form of the pressed scandate cathode and followed by the impregnated scandate, mixed-matrix and top-layer scandate cathodes investigated mainly by Philips and Hitachi [1]. Philips Research continuously improved scandate cathodes to space-charge-limited emission current densities higher than 360 A/cm² (pulsed) by introducing top-layer scandate cathodes prepared by laser ablation deposition (LAD) [89], but never introduced them in its CRTs for cost, lifetime and other reasons, which will be discussed in Chap. 3 of this book. Yet there is a need for thermionic cathodes with such very high emission capability in advanced new applications such as high-power gyrotrons, terahertz imaging devices and electron beam lithography. In Beijing the group of Yiman Wang has continued research on scandate cathodes, especially nanometer-size scandia particle doped Ba dispenser cathodes (SDD) [90, 91], and now reaches DC current densities of up to 40 A/cm² with a lifetime of more than 10,000 h (test still continuing) [87], as can also be seen in Fig. 1.12. It should be pointed out that in this figure the quoted DC current density is always linked to a minimum lifetime of >10,000 h (for CRTs ≥20,000 h) and the pulsed current density to ≥4000 h. For space applications, 130 kh at 10 A/cm² have been achieved with specially designed Ba dispenser cathodes, as shown in Chap. 2. The progress in lifetime at a given emission level is not addressed here and would require a different diagram.

Most of these improvements are due to the introduction of cathodes with lower work function and hence higher emission current density je, as can be seen from the Richardson–Dushman equation (for a derivation, see [1]), which is usually written in the form

$$ j_s = A_{th}\, T^2 \exp\left(-\frac{e\Phi}{kT}\right) \qquad (1.3) $$

where Ath = 120.4 A cm⁻² K⁻² is the thermionic constant for the ideal case, eΦ the work function, T the absolute temperature, k Boltzmann’s constant and js the saturated emission current density, valid for zero extraction field at the surface (hence it is also called zero-field thermionic emission). In reality the thermionic constant in (1.3) is replaced by the phenomenological Richardson constant AR, which in most cases is smaller or much smaller than Ath and is characteristic of a certain type of cathode. For values je < js, the space charge field of the electron cloud in front of the surface is not compensated by the external extraction field (i.e. the Laplace field between cathode and anode), and the Child–Langmuir equation for space-charge-limited emission holds, which to a first approximation is independent of temperature but depends on the Laplace field strength Ua/D:

$$ j_e = K\, U_a^{3/2} \qquad (1.4) $$

The geometry factor K = 2.33 × 10⁻⁶/D² is given in units of A/(cm² × V^(3/2)), where D is the cathode-to-anode distance in cm and Ua the anode voltage in V. Further corrections to space-charge-limited emission arise when the electron velocity distribution is taken into account; these modify (1.4) (see [1]) but will not be discussed here.
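Equations (1.3) and (1.4) can be combined into a simple idealized picture of a planar diode, in which the emitted current density is set by whichever of the two limits is smaller. The Python sketch below assumes the ideal constant Ath = 120.4 A cm⁻² K⁻² (real Richardson constants AR are smaller, as noted above), ignores the velocity-distribution corrections, and uses hypothetical operating points; the function names are illustrative.

```python
import math

K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K
A_TH = 120.4                   # ideal thermionic constant (A cm^-2 K^-2), as quoted in the text


def saturated_js(temperature_k: float, work_function_ev: float, a_const: float = A_TH) -> float:
    """Zero-field saturated current density js in A/cm^2, Eq. (1.3)."""
    return a_const * temperature_k ** 2 * math.exp(-work_function_ev / (K_B_EV_PER_K * temperature_k))


def child_langmuir_j(anode_voltage_v: float, gap_cm: float) -> float:
    """Space-charge-limited current density in A/cm^2, Eq. (1.4) with K = 2.33e-6 / D^2."""
    k_geometry = 2.33e-6 / gap_cm ** 2
    return k_geometry * anode_voltage_v ** 1.5


def emitted_j(temperature_k: float, work_function_ev: float,
              anode_voltage_v: float, gap_cm: float) -> float:
    """Idealized planar diode: the smaller of the two limits sets the current density."""
    return min(saturated_js(temperature_k, work_function_ev),
               child_langmuir_j(anode_voltage_v, gap_cm))


if __name__ == "__main__":
    js_w = saturated_js(2500.0, 4.5)        # hypothetical tungsten operating point
    j_cl = child_langmuir_j(10_000.0, 0.1)  # hypothetical 10 kV across a 1 mm gap
    print(f"js(W, 2500 K)     = {js_w:.2f} A/cm^2")
    print(f"j_CL(10 kV, 1 mm) = {j_cl:.0f} A/cm^2")
    print("temperature limited" if js_w < j_cl else "space-charge limited")
```

For tungsten at 2500 K the ideal saturated current density comes out at roughly 0.6 A/cm², far below the Child–Langmuir value of about 233 A/cm² for a 1 mm gap at 10 kV, so this hypothetical diode would be temperature limited.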

Besides a reduction of the work function of thermionic cathodes and hence increased usable space charge limited current density, increased lifetime at the operating temperature and an increased emission uniformity over the emitting surface are further requirements. Since lower work function in most cases implies a lower operating temperature and a higher gas-poisoning sensitivity, ultra-high vacuum is needed in the electron tubes. In meeting these aims, thermionic cathode research concentrates on modification of known materials by additions, on investigation of new materials, on high-resolution characterization and modification of cathode surfaces including modern thin-film deposition methods, and design of resupply reservoirs for long life.
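The link between work function and operating temperature can be made explicit by inverting (1.3) numerically. The Python sketch below solves js(T) = 1 A/cm² by bisection for the tungsten work function (4.5 eV), the 2.2 eV quoted above for Ir2La and Ir5Ce, and about 1.5 eV for oxide cathodes. Using the ideal constant Ath instead of a measured AR, the target current density and the helper names are all simplifying assumptions.

```python
import math

K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K
A_TH = 120.4                   # ideal thermionic constant in A cm^-2 K^-2


def saturated_js(temperature_k: float, work_function_ev: float, a_const: float = A_TH) -> float:
    """Richardson-Dushman saturated current density js in A/cm^2."""
    return a_const * temperature_k ** 2 * math.exp(-work_function_ev / (K_B_EV_PER_K * temperature_k))


def temperature_for_js(target_a_cm2: float, work_function_ev: float, a_const: float = A_TH,
                       t_lo: float = 300.0, t_hi: float = 4000.0, tol_k: float = 1e-3) -> float:
    """Find T with js(T) = target by bisection; js increases monotonically with T."""
    if not (saturated_js(t_lo, work_function_ev, a_const) < target_a_cm2
            < saturated_js(t_hi, work_function_ev, a_const)):
        raise ValueError("target not bracketed by [t_lo, t_hi]")
    while t_hi - t_lo > tol_k:
        t_mid = 0.5 * (t_lo + t_hi)
        if saturated_js(t_mid, work_function_ev, a_const) < target_a_cm2:
            t_lo = t_mid  # too cold: the solution lies above t_mid
        else:
            t_hi = t_mid  # hot enough: the solution lies at or below t_mid
    return 0.5 * (t_lo + t_hi)


if __name__ == "__main__":
    target = 1.0  # hypothetical target current density in A/cm^2
    for label, phi in (("W", 4.5), ("Ir2La/Ir5Ce", 2.2), ("oxide", 1.5)):
        t = temperature_for_js(target, phi)
        print(f"{label:>12}: phi = {phi:.1f} eV -> T = {t:.0f} K")
```

With these assumptions tungsten needs roughly 2550 K, while the lower work functions bring the required temperature down by more than 1000 K.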

For cathode applications, for example in microwave tubes, it is instructive to know that the achievable output power Pout of, e.g., a klystron is proportional to the electron emission current Ie times a factor that depends on anode voltage and magnetic field. Interestingly, the cost per tube then scales to a good approximation with Pout, the factor being in the range of 1.4–3 $ per watt [2]. For space applications of microwave tubes, a cathode lifetime on the order of 10 years or more at the operating current density is needed. Cathodes used for these applications are typically Ba dispenser cathodes. Yet the higher the current density and hence the temperature, the shorter the lifetime. Here, too, Ba dispenser cathodes with lower work function are needed. Booske [73] has shown that vacuum electron generators are needed to close the terahertz gap between about 200 GHz and 3 THz. This need is most pronounced for compact mobile generators. In a design study based on scaling laws for 100 W CW power at 200 GHz, a cathode with at least 160 A/cm² DC would be required, assuming that the other parameters are optimized. Booske sees beam compression as the only possibility, since long life is also needed; however, compression has the disadvantage of nonuniformity over the beam diameter. In this respect, sheet beams for further compression from scandia-doped dispenser (SDD) cathodes with lateral source dimensions of 1 mm × 50 µm have been realized by Y. Wang and coworkers with current densities of up to 100 A/cm² [90, 91], which will be described in Chap. 3.
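The numbers in this paragraph can be cross-checked with simple arithmetic. The Python sketch below computes the current of the 1 mm × 50 µm SDD sheet-beam source at 100 A/cm², the minimum area compression needed to go from the 40 A/cm² DC quoted earlier for SDD cathodes to the required 160 A/cm², and a cost bracket from the 1.4–3 $/W scaling for a hypothetical 1 kW klystron. The compression estimate ignores all beam-optics losses, and the helper names are illustrative.

```python
def beam_current_a(j_a_cm2: float, width_cm: float, height_cm: float) -> float:
    """Current of a rectangular emitter of the given size at uniform current density."""
    return j_a_cm2 * width_cm * height_cm


def area_compression_factor(j_required_a_cm2: float, j_cathode_a_cm2: float) -> float:
    """Minimum ratio of cathode area to beam cross-section, ignoring optics losses."""
    return j_required_a_cm2 / j_cathode_a_cm2


def tube_cost_range_usd(p_out_w: float, low_usd_per_w: float = 1.4,
                        high_usd_per_w: float = 3.0) -> tuple:
    """Rough cost bracket from the 1.4-3 $/W scaling quoted in the text."""
    return low_usd_per_w * p_out_w, high_usd_per_w * p_out_w


if __name__ == "__main__":
    # SDD sheet-beam source: 1 mm x 50 um = 0.1 cm x 0.005 cm at 100 A/cm^2
    print(f"sheet-beam current: {beam_current_a(100.0, 0.1, 0.005) * 1e3:.0f} mA")
    # 160 A/cm^2 required (design study) versus 40 A/cm^2 DC from SDD cathodes
    print(f"area compression  : {area_compression_factor(160.0, 40.0):.0f}x")
    low, high = tube_cost_range_usd(1000.0)  # hypothetical 1 kW klystron
    print(f"cost bracket      : {low:.0f}-{high:.0f} $")
```

The sheet-beam source thus delivers about 50 mA, and at least a fourfold area compression would be needed under these simplifying assumptions.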

Other requirements concern the tolerable base pressure. Here poisoning sensitivity and robustness with respect to ion bombardment are the properties to be improved.

For high-resolution electron beams in microscope applications, high-brightness cathodes are required [92], as discussed in Chap. 6 by P. Kruit. Here the emission current density is not decisive, but rather the reduced differential brightness Br, which is given as

$$ B_r = \frac{1}{U}\,\frac{\partial^2 I}{\partial A_s\,\partial \Omega} $$

Here I is the beam current, As the virtual source area, Ω the solid angle of the beam and U the beam voltage.

In the practical brightness definition, the differential quantities are replaced by the current I, the beam area at half-width and Ω = πα² (α being the beam half-angle), and the resulting values are hence 1.44 times higher than the differential brightness. Typical emitters are tungsten tips, LaB6 hairpin tips and, above all, a specific Schottky emitter consisting of a (100)-oriented tungsten single-crystal tip coated with a Zr–O surface dipole, which reduces the work function from 4.5 to 2.9 eV. This Schottky emitter has a reduced brightness of Br = 1–2 × 10⁸ A/(m² sr V) with a current limit of 10 nA; LaB6 exhibits Br = 2 × 10⁵ A/(m² sr V), tungsten reaches 5 × 10⁸ A/(m² sr V) and carbon nanotubes reach 3 × 10⁹ A/(m² sr V).
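The practical reduced brightness can be estimated from a handful of beam parameters. The Python sketch below follows the practical definition described above, taking the source area as a disk whose diameter is the half-width, Ω = πα² and Br = I/(A Ω U); the input values (1 nA, 1 µm, 1 mrad, 1 kV) are purely hypothetical and do not describe any particular emitter.

```python
import math


def practical_reduced_brightness(current_a: float, half_width_diameter_m: float,
                                 half_angle_rad: float, beam_voltage_v: float) -> float:
    """Br = I / (A * Omega * U) in A/(m^2 sr V), with A = pi*(d/2)^2 and Omega = pi*alpha^2."""
    area_m2 = math.pi * (half_width_diameter_m / 2.0) ** 2
    solid_angle_sr = math.pi * half_angle_rad ** 2
    return current_a / (area_m2 * solid_angle_sr * beam_voltage_v)


def differential_from_practical(b_practical: float) -> float:
    """Convert practical to differential reduced brightness (factor 1.44 quoted in the text)."""
    return b_practical / 1.44


if __name__ == "__main__":
    b_prac = practical_reduced_brightness(1e-9, 1e-6, 1e-3, 1e3)  # hypothetical inputs
    print(f"practical Br    = {b_prac:.2e} A/(m^2 sr V)")
    print(f"differential Br = {differential_from_practical(b_prac):.2e} A/(m^2 sr V)")
```

These inputs give about 4 × 10⁵ A/(m² sr V), i.e. in the LaB6 range quoted above; dividing by 1.44 converts the result to the differential value.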

For electron microscopy the current of a single beam may be sufficient, but lithography needs arrays of thousands of parallel beams with a total beam current on the order of 200 µA on the wafer and uniform beam currents of, e.g., 13 nA. Taking into account the total losses in the optical column, a thermionic emission current of 130 mA has to be supplied. The company Mapper has shown [92] that impregnated cathodes as used for CRTs fulfill these requirements. With Os/Ru-I cathodes, Br = 10⁶ A/(m² sr V) is reached. Scandate cathodes should exhibit a reduced brightness of Br = 10⁷ A/(m² sr V) and could be the next step in maskless electron beam lithography. Yet current stability, current density uniformity and sufficient life (>1000 h) still need to be proven for this application.
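The lithography figures quoted above imply a beam count and an overall column transmission. A few lines of Python make this arithmetic explicit; the helper names are hypothetical.

```python
def number_of_beams(total_current_a: float, current_per_beam_a: float) -> int:
    """Beams needed to deliver total_current_a when each beam carries current_per_beam_a."""
    return round(total_current_a / current_per_beam_a)


def column_transmission(current_on_wafer_a: float, emission_current_a: float) -> float:
    """Fraction of the emitted current that reaches the wafer."""
    return current_on_wafer_a / emission_current_a


if __name__ == "__main__":
    beams = number_of_beams(200e-6, 13e-9)                 # 200 uA total, 13 nA per beam
    transmission = column_transmission(200e-6, 130e-3)     # 200 uA out of 130 mA emitted
    print(f"beams needed: {beams}")
    print(f"column transmission: {transmission:.2%}")
```

About 15,000 beams of 13 nA are needed for 200 µA on the wafer, and only about 0.15% of the 130 mA emission current reaches the wafer.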

Another type of cathode is the photocathode, which relies on Einstein’s photoelectric effect of 1905. Photocathodes are used in photodetectors, e.g. in night vision devices and photomultipliers, and as electron injectors in particle accelerators or free-electron masers. The classical materials are pure metals such as Cu, which have a low quantum efficiency (QE), and semiconductors such as Cs2Te, Cs3Sb, K2CsSb and others with higher QE. Field emitters with superimposed laser photoemission are also used [93]. Improvement directions are high peak current densities of >10⁵ A/cm² in the pulsed beam and high beam currents of more than 1 mA, which will be discussed in detail in Chap. 7 on photocathodes.
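Quantum efficiency translates directly into photocurrent via the photon flux, I = QE · e · P/(hν) with hν = hc/λ. The Python sketch below uses the exact SI values of h, c and e; the 266 nm wavelength and the QE values are hypothetical illustrations, not data from [93] or Chap. 7.

```python
# Exact SI values of the defining constants
PLANCK_J_S = 6.62607015e-34
SPEED_OF_LIGHT_M_S = 299_792_458.0
ELEMENTARY_CHARGE_C = 1.602176634e-19


def photocurrent_a(quantum_efficiency: float, laser_power_w: float, wavelength_m: float) -> float:
    """Average photocurrent I = QE * e * P / (h * nu) for a given average laser power."""
    photon_energy_j = PLANCK_J_S * SPEED_OF_LIGHT_M_S / wavelength_m
    photons_per_s = laser_power_w / photon_energy_j
    return quantum_efficiency * photons_per_s * ELEMENTARY_CHARGE_C


def laser_power_for_current_w(target_current_a: float, quantum_efficiency: float,
                              wavelength_m: float) -> float:
    """Average laser power needed for a given average photocurrent (linear in P)."""
    return target_current_a / photocurrent_a(quantum_efficiency, 1.0, wavelength_m)


if __name__ == "__main__":
    wavelength = 266e-9  # hypothetical UV drive laser
    for qe in (1e-2, 1e-5):  # hypothetical semiconductor-like and metal-like QE values
        p = laser_power_for_current_w(1e-3, qe, wavelength)
        print(f"QE = {qe:.0e}: {p:.3g} W of laser power for 1 mA")
```

With a QE of 1% at 266 nm, about 0.47 W of average laser power yields the 1 mA beam current mentioned above; a QE of 10⁻⁵ would require roughly 470 W, which illustrates why higher-QE materials are sought.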

Finally, a general trend is the attempt to replace thermionic cathodes with cold cathodes, essentially field emitters [94,95,96,97,98,99]. Here a general trend of improvement over time cannot be shown in a plot of current density versus time, since field emitters already exhibit current densities much higher than those of thermionic cathodes, which, however, largely rely on very small emitting areas. Hence their natural applications are electron beam devices. For other applications requiring higher currents, practical measures are increasing the emitting area by using bunches or arrays of emitters and increasing the stable DC current from field emitter arrays (FEAs) over a larger area. A graphical overview of the most important cold cathode types, including tungsten tips, different types of field emitters and their arrays, and pn emitters (also known as “reverse biased junction cold cathodes” [2, 100]), is given in Fig. 1.13 as a plot of emission current density versus emitting area, first presented in this form by Gaertner [2]. Here the improvement direction over time follows trajectories more nearly perpendicular to the lines of equal current (10 µA, 1 mA, 100 mA), toward higher DC currents. In 2000 Zhirnov [97] first tried to identify standardization criteria for FE measurements, since in many cases results from different experimenters are not comparable. He also stated that the figure of merit is the total emitted current divided by the entire cathode area, and showed a plot of current density versus area partly based on Spindt emitter arrays. His diagram corresponds to the 1 mA line in the lower half of Gaertner’s diagram. Charbonnier [98] also discussed these questions, but instead of a similar diagram concluded that the maximum DC current is 3 mA and the maximum pulsed current is limited to about 120 mA.

Plot of field emission (cold emission) current density versus emitter area (including passive parts) based on the literature data for very sharp W tips, Spindt arrays, CNTs and CNT dot arrays, Si FEAs and pn emitters according to [2] and Chap. 12 (see Tables and Fig. 12.18). Lines of equal current are shown for 10 µA, 1 and 100 mA.
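The lines of equal current in such a plot follow from I = j·A: on double-logarithmic axes each is a straight line of slope −1. The short Python sketch below tabulates points on the 10 µA, 1 mA and 100 mA lines for a few emitting areas; the chosen areas and the helper names are assumptions for illustration only.

```python
import math


def current_density_a_cm2(current_a: float, area_cm2: float) -> float:
    """Mean current density over the emitting area (passive parts included)."""
    return current_a / area_cm2


def loglog_slope(current_a: float, area1_cm2: float, area2_cm2: float) -> float:
    """Slope of a line of equal current on log(j) versus log(A) axes."""
    j1 = current_density_a_cm2(current_a, area1_cm2)
    j2 = current_density_a_cm2(current_a, area2_cm2)
    return (math.log10(j2) - math.log10(j1)) / (math.log10(area2_cm2) - math.log10(area1_cm2))


if __name__ == "__main__":
    for current in (10e-6, 1e-3, 100e-3):      # the three lines of equal current
        for area in (1e-10, 1e-6, 1e-2, 1.0):  # illustrative emitting areas in cm^2
            j = current_density_a_cm2(current, area)
            print(f"I = {current:.0e} A, A = {area:.0e} cm^2 -> j = {j:.1e} A/cm^2")
```

For example, 1 mA drawn from 1 mm² (0.01 cm²) corresponds to 0.1 A/cm², whereas the same current from 10⁻¹⁰ cm² corresponds to 10⁷ A/cm²; moving toward higher currents means crossing these lines.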

Wenger et al. [99] have pointed out that field emitter arrays have not proven their usefulness in practical applications because of their short life and inherent sensitivity to ion bombardment and arcing. They also showed that, when the current of CNT emitters is increased by raising the voltage, the Fowler–Nordheim behavior at lower voltages is followed by a limited FE region, in which the limitation is caused by space charge and by the resistance of the contact, the substrate and the emitter. On further increase of the voltage, a normal glow discharge starts. Hence an FE-ignited glow discharge can be controlled and used as a plasma electron source. This topic will not be covered in this book.
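Whether measured data are still in the Fowler–Nordheim regime is commonly judged from a Fowler–Nordheim plot: in the simplified form I = a·V²·exp(−b/V), the quantity ln(I/V²) is linear in 1/V, so the onset of a limited FE region shows up as points falling below the straight line extrapolated from low voltages. The Python sketch below illustrates this check. The constants a and b, the 800 V transition and the merely linear current growth above it are hypothetical, chosen only as a crude stand-in for space-charge and series-resistance limitation; they are not taken from Wenger et al. [99].

```python
"""Detecting the departure from Fowler-Nordheim (FN) behavior in I-V data.

Illustrative sketch: the simplified FN form I = a * V**2 * exp(-b / V) and
all numerical values below are hypothetical, not measured data.
"""
import math


def fn_coordinates(voltages, currents):
    """Map (V, I) pairs to FN-plot coordinates (1/V, ln(I/V^2))."""
    return [(1.0 / v, math.log(i / v**2)) for v, i in zip(voltages, currents)]


def fit_line(points):
    """Ordinary least-squares fit y = m*x + c; returns (m, c)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fn_current(v, a=1e-6, b=2000.0):
    """Simplified FN current; a and b are hypothetical constants."""
    return a * v**2 * math.exp(-b / v)


def first_departure(voltages, currents, n_fit=4, tol=0.05):
    """Fit the n_fit lowest-voltage points (voltages sorted ascending) on the
    FN plot and return the first voltage whose ln(I/V^2) lies more than tol
    below the extrapolated line, or None if all points stay on it."""
    points = fn_coordinates(voltages, currents)
    slope, intercept = fit_line(points[:n_fit])
    for v, (x, y) in zip(voltages, points):
        if (slope * x + intercept) - y > tol:
            return v
    return None


if __name__ == "__main__":
    volts = list(range(400, 1300, 100))
    pure_fn = [fn_current(v) for v in volts]
    # Hypothetical limited region: above 800 V the current only grows linearly.
    limited = [fn_current(v) if v <= 800 else fn_current(800) * v / 800 for v in volts]
    print("pure FN, departure at:", first_departure(volts, pure_fn))
    print("limited, departure at:", first_departure(volts, limited), "V")
```

For ideal FN data the fitted slope equals −b, so the plot also yields the field-enhancement-dependent constant b; any systematic sag below the line at high voltage indicates that a mechanism other than the tunneling barrier is limiting the current.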

Field emission cathode features and requirements with respect to specific applications will be discussed in more detail in Chap. 10 by N. Egorov and E. Sheshin.

1.4 Future Requirements

In conclusion, we have identified several improvement directions of cathodes for future requirements, depending on their application. For thermionic cathodes these are a lower work function, a lower operating temperature, a higher electron emission current density, a longer life and a higher robustness against poisoning and ion bombardment. Certain trade-offs will depend on the priorities set by the application. Currently the most advanced research concentrates on Ba scandate cathodes, since they promise to meet the high requirements, e.g. for terahertz imaging. For high-resolution electron beams in microscopy and lithography applications, high-brightness cathodes are required, but also with increased beam current; here, too, Ba scandate cathodes are promising candidates. For photocathodes, improvement is directed towards high peak current densities in the pulsed beam of >10⁵ A/cm² and towards high beam currents of more than 1 mA. For laser-excited photocathodes, fast response times and high brightness are needed. Improvement options are a higher quantum efficiency, achieved via a lower work function and surface coatings on suitable metals or semiconductors.
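The link between quantum efficiency, work function and the beam current targets mentioned above can be made concrete with the photon balance I = QE·e·P/(hν): each incident photon of energy hν = hc/λ releases on average QE electrons, and no electrons are emitted unless hν exceeds the work function. The Python sketch below is a simplified model that treats QE as a fixed input rather than a function of photon energy; it estimates the average laser power needed for a 1 mA beam. The quantum efficiencies, the 266 nm wavelength and the work function used are hypothetical illustrative values.

```python
"""Average photocurrent from laser power and quantum efficiency (QE).

Simplified sketch: QE is treated as a given constant (in reality it depends
on photon energy, material and surface state), and all example numbers are
hypothetical.
"""

HC_EV_NM = 1239.84198  # h*c/e in eV*nm, so E_photon[eV] = HC_EV_NM / lambda[nm]


def photon_energy_ev(wavelength_nm: float) -> float:
    return HC_EV_NM / wavelength_nm


def photocurrent_a(qe: float, laser_power_w: float,
                   wavelength_nm: float, work_function_ev: float) -> float:
    """I = QE * e * P / (h*nu); returns 0 if the photon energy does not
    exceed the work function (no photoemission in this threshold model)."""
    e_ph = photon_energy_ev(wavelength_nm)
    if e_ph <= work_function_ev:
        return 0.0
    # Photon rate P / (e * E_ph[eV]) times QE times e -> QE * P / E_ph[eV] amperes.
    return qe * laser_power_w / e_ph


def laser_power_for_current_w(target_a: float, qe: float, wavelength_nm: float) -> float:
    """Average laser power needed for target_a (assuming emission above threshold)."""
    return target_a * photon_energy_ev(wavelength_nm) / qe


if __name__ == "__main__":
    # Hypothetical comparison at 266 nm for a 1 mA average beam current.
    for qe in (1e-4, 1e-2, 5e-2):
        p = laser_power_for_current_w(1e-3, qe, 266.0)
        print(f"QE = {qe:.0e}: about {p:.3g} W of laser power")
```

In this model the required laser power scales inversely with QE, while a lower work function moves the photoemission threshold to longer wavelengths, i.e. lower photon energies can be used.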

For field emitters to become competitive in vacuum electron tubes, higher (DC) currents, larger emitting areas, higher stability and greater robustness are needed. Otherwise they will only be used in some niche applications. These questions will be addressed in more detail in the following chapters of this book.

References

G. Gaertner, H.W.P. Koops, Vacuum electron sources and their materials and technologies, in Chapter 10 of Vacuum Electronics, Components and Devices, ed. by J. Eichmeier, M. Thumm (Springer, 2008)

G. Gaertner, Historical development and future trends of vacuum electronics. J. Vac. Sci. Technol. B 30(6), 060801 (2012)

P. Dunsheath, A History of Electrical Power Engineering (MIT Press, Cambridge, 1962)

W. Fischer (ed.), Die Geschichte der Stromversorgung (Verlags- u. Wirtschaftsgesellschaft der Elektrizitätswerke m.b.H., Frankfurt a. Main, 1992)

F.R. Paturi, Chronik der Technik (Chronik Verlag, Dortmund, 1988)

I. Buchmann, Batteries in a Portable World, Cadex Electronics 2011. http://www.mlelectronics.be/doc_downloads/producten/batterijen/basisinfo/BatteryKnowledgePart1.pdf

The Electrical Experimenter, Sept 1915, p. 198. https://www.americanradiohistory.com/Archive-Electrical-Experimenter/EE-1915–09.pdf

C. Schott, O. von Guericke, Ottonis de Guericke Experimenta Nova Magdeburgica de Vacuo Spatio (Amsterdam, 1672)

W. Conrad (ed.), Geschichte der Technik in Schlaglichtern (Meyers Lexikonverlag, Mannheim, 1997)

M. Schneider, Elektrisiermaschinen im 18. und 19. Jahrhundert, Ein kleines Lexikon (Universität Regensburg, 2003/2004)

W.E. Ayrton, Practical Electricity; Laboratory and Lecture Course (Cassell & Company Ltd., London, Paris & Melbourne, 1891)

H.W. Meyer, A History Of Electricity And Magnetism (MIT Press Design Department, 1971 and Burndy Library, Norwalk, Connecticut, 1972)

Roobert33, 2015. https://www.youtube.com/watch?v=c_0N-0lfxpE

B. Garg, Introduction to Flow Batteries: Theory and Applications, coursework for PH240 (Stanford University, Fall, 2011)

T. Nguyen, R. F. Savinell, Flow batteries. Interface 19(3), 54 (2010)

L. Figuier, Les Merveilles de la Science (Furne, Jouvet et Cie., Paris, 1867), chapter “La pile de Volta”, pp. 598–706

P. Unwin, R. Unwin, ‘A devotion to the experimental sciences and arts’: the subscription to the great battery at the Royal Institution 1808–91. Br. Soc. Hist. Sci. BJHS 40(2), 181–203 (2007)

A. Anders, Tracking down the origin of Arc plasma science-II. Early continuous discharges. IEEE Trans. Plasma Sci. 31, 1060–1069 (2003)

W. James King, The Development of Electrical Technology in the 19th Century: I. The Electro-chemical Cell and the Electromagnet. Bulletin 228: Contributions from the Museum of History and Technology (1962), pp. 231–418

V. Leiste, Siemens History Site-Im Fokus-Dynamoelektrisches Prinzip. https://www.siemens.com/history/de/aktuelles/1056_dynamoelektrisch

Siemens Historical Institute (ed.), Age of Electricity (Deutscher Kunstverlag, 2014)

http://www.power-technology.com/features/feature-largest-nuclear-power-plants-world/

S. David, E. Huffer, H. Nifenecker, High efficiency nuclear power plants using liquid fluoride thorium reactor technology. Europhys. News 38, 24–27 (2007). https://doi.org/10.1051/EPN:2007007

Russian fast reactor reaches full power, 17 August 2016. http://www.world-nuclear-news.org/Articles/Russian-fast-reactor-reaches-full-power

M. Claessens, ITER: The Giant Fusion Reactor (Springer, 2020)

https://en.wikipedia.org/wiki/List_of_largest_power_stations

https://www.energie-lexikon.info/windenergieanlage.html. RP Energie-Lexikon, Rüdiger Paschotta (2017)

http://www.ingenieur.de/Fachbereiche/Windenergie/Groesstes-Windrad-Welt-Herz-Nieren-getestet

Wind turbine, Wikipedia 2019. https://en.wikipedia.org/wiki/Wind_turbine

J. Peinke, D. Heinemann, M. Kühn, Windenergie, eine turbulente Sache? Physik J. 13(7), 35f (2014)

https://de.wikipedia.org/wiki/Photovoltaik-Freiflächenanlage

http://www.ageu-die-realisten.com/archives/1473. Der Flächenbedarf von Stromerzeugungsanlagen, Günther Keil (2015)

A. Bachem, C. Buchal, Energiewende-quo vadis? Physik J. 12(12), 33–39 (2013)

M. Bettzüge, Nationaler Hochmut oder cui bono? Physik J. 13(5), 33–38 (2014)

P. Denholm, M. Hand, M. Jackson, S. Ong, Land-Use Requirements of Modern Wind Power Plants in the United States. Technical Report NREL/TP-6A2-45834, August 2009, US Department of Energy, National Renewable Energy Laboratory

K. Wey, R.J. Peters, Geschichte der Vakuumtechnik. Vak. Forsch. Prax. 14, 183 (2002)

N. Marquardt, Introduction to the Principles of Vacuum Physics (CERN Accelerator School: Vacuum Technology, 1999). http://www.iaea.org/inis/collection/NCLCollectionStore/_Public/32/011/32011645.pdf

P.A. Redhead, History of Vacuum Devices (CERN Accelerator School: Vacuum Technology, 1999), pp. 281–290. http://cdsweb.cern.ch/record/455984/files/p281.pdf

W. Knapp, Vacuum technology, in Chapter 11 of Vacuum Electronics, Components and Devices, ed. J. Eichmeier, M. Thumm (Springer, 2008)

P.A. Redhead, The ultimate vacuum. Vacuum 53, 137–149 (1999)

A. Calcatelli, The development of vacuum measurements down to extremely high vacuum –XHV, in Proceedings of 3rd IMEKO TC16 International Conference on Pressure Measurement, Merida 2007 (Curran Associates 2009), p. 219. http://www.imeko.org/publications/tc16-2007/IMEKO-TC16-2007-KL-034u.pdf

P.A. Redhead, Vacuum science and technology: 1950–2003. J. Vac. Sci. Technol. A 21, S12–S14 (2003)

W. Becker (Pfeiffer Vakuumtechnik), Turbo-molecular pump, US Patent 3477381A, published 11 Nov 1969, priority 30 Dec 1966

L.D. Hall, Ionic vacuum pumps. Science 128, 3319 (1958)

J.P. Hobson, Measurements with a modulated Bayard-Alpert gauge in aluminosilicate glass at pressures below 10⁻¹² Torr. J. Vac. Sci. Technol. 1, 1 (1964)

P.A. Redhead, Extreme High Vacuum (CERN Accelerator school: Vacuum technology, 1999), pp. 213–226

H. Ishimaru et al., Ultimate pressure of the order of 10⁻¹³ Torr in an aluminum alloy vacuum chamber. J. Vac. Sci. Technol. B 7, 2439–2442 (1989)

G. Gabrielse, H. Kalinowsky et al., Thousandfold improvement in the measured Antiproton Mass. Phys. Rev. Lett. 65, 1317–1320 (1990)

P. Micke, M. Schwarz et al., Closed-cycle, low-vibration 4 K cryostat for ion traps and other applications. Rev. Sci. Instr. 90, 065104 (2019)

M. Schwarz et al., Cryogenic linear Paul trap for cold highly charged ion experiments. Rev. Sci. Instr. 83, 083115 (2012)

C. Benvenuti, Extreme high vacuum technology for particle accelerators, in Proceedings of the 2001 Particle Accelerator Conference (IEEE), Chicago (2001), pp. 602–606

P.A. Redhead, Vacuum and the electron tube industry. J. Vac. Sci. Technol. A 23, 1252 (2005)

O. Darrigol (CNRS), Electrodynamics from Ampere to Einstein (Oxford University Press, Oxford, 2000)

P.A. Redhead, Birth of electronics: thermionic emission and vacuum. J. Vac. Sci. Technol. A 16, 1394 (1998)

S. Okamura, History of Electron Tubes (Ohmsha, Tokyo, 1994 and IOS Press, 1994)

G. Bekooy, Philips Honderd–en industriele onderneming (Europese Bibliothek, Zaltbommel, 1991)

D. van Delft, A. Maas, Philips Research–100 years of Inventions that Matter (WBooks, Zwolle, 2013)

F. Rosebury, Handbook of Electron Tube and Vacuum Techniques (American Institute of Physics, 1993, originally MIT, 1964)

H.G. Boumeester, Development and manufacture of modern transmitting valves. Philips Tech. Rev. 2(4), 115–121 (1937)

G.E.J. Tyne, Saga of the Vacuum Tube (Indianapolis, 1987)

J.W. Stokes, 70 Years of Radio Valves and Tubes (New York, 1982)

W.H. Kohl, Materials and techniques for vacuum devices (Reinhold Publishing Corp., New York, 1967)

G. Gaertner, D. den Engelsen, Appl. Surf. Sci. 251, 24–30 (2005)

J.A. Castellano, in Digest of Society for Information Display (SID) Symposium 1999 (SID, San Jose, 1999), p. 356

P. Combes, Display Components, Philips ppt-presentation (2000). See https://www.yumpu.com/en/document/view/217010/philippe-combes

J. Kitzmiller, Industry and Trade Summary-Television Picture Tubes and other Cathode Ray Tubes. USITC Publication 2877, May 1995

N. Stam, CRT Innovations, PCMAG.COM, 31.3.2003, based on isuppli/Stanford resources + internal information from LPD 2002

M. Kenney, R. Florida (eds.) Locating Global Advantage. Industry Dynamics in the International Economy. Stanford 2004. Chapter 4: M. Kenney, The shifting value chain. The Television Industry in North America, p. 182

European Commission, Case AT.39437, TV and computer monitor tubes. Cartel procedure, 5 Dec 2012

R.J. Barker, J.H. Booske, N.C. Luhmann, G.S. Nusinovich (eds.), Modern Microwave and Millimeter-Wave Power Electronics (Wiley-IEEE, New York, 2005)

J.H. Booske, Plasma physics and related challenges of millimeter-wave-to-terahertz and high power microwave generation. Phys. Plasmas 15, 055502 (2008)

H. Verhaar (Philips Lighting) Global Incandescent Phase-out; Meeting the Demand. Presentation 12 May 2008, Shanghai. http://www.energyrating.gov.au/document/presentation-global-incandescent-phase-out-meeting-demand

J. Stettler, A. Leslie, A. Bell (New Street Research), The Future of Lighting: Who Will Win? Industry analysis, 5 May 2010. www.newstreetresearch.com, http://www.sdr.si/pdf/the%20future%20of%20lighting%20100305.pdf

P. Waide, Phase Out of Incandescent Lamps. International Energy Agency, Information paper, April 2010, https://www.oecd-ilibrary.org/docserver

G. Zissis, P. Bertoldi, 2014 Update on the Status of LED Market (European Commission, Joint Research Centre, JRC Technical Report, Ispra, Italy, 2014)

G. Zissis, P. Bertoldi, Status of LED-Lighting World Market in 2017. European Commission, JRC Technical Reports, Ispra (2018)

G.A. Haas, Thermionic electron sources, in Methods of Experimental Physics, vol. 4, part A (Academic Press, New York, 1967)

R.O. Jenkins, A review on thermionic cathodes. Vacuum 19(8), 353 (1969)

R. Thomas, J. Gibson, G.A. Haas, R. Abrams, Thermionic sources for high brightness electron beams. IEEE Trans. ED 37, 850–861 (1990)

P.W. Hawkes, Thermionic emission. Encycl. Appl. Phys. 21, 229–243 (1997)

R.R. Umstattd, Advanced electron beam sources. Chapter 8 in Modern Microwave and Millimeter-Wave Power Electronics, ed. by R. Barker et al. (Wiley, 2005), pp. 393–444

S. Yamamoto, Fundamental physics of vacuum electron sources. Rep. Prog. Phys. 69, 181–232 (2006)

H. Lemmens, P. Zalm, New developments in oxide-coated cathodes: oxide-coated cathodes for loads of 1 to 2 A/cm². Philips Tech. Rev. 23, 21–24 (1961/62)

T. Aida, H. Tanuma, S. Sasaki et al., Emission life and surface analysis of barium-impregnated thermionic cathodes. J. Appl. Phys. 74, 6482–6487 (1993)

W. Liang, Y. Wang, J. Wang, W. Liu, F. Yang, DC emission characteristic of nanosized-scandia-doped impregnated dispenser cathodes. IEEE Trans. ED 61(6), 1749 (2014) and Y. Wang, Scandate cathode: what we have learned and what we expect to know, in Proceedings of the IVESC 2016, Seoul, p. 3

B. Djubua, O. Kultashev, A. Makarov, O. Polivnikova, E. Zemchikhin, Metal alloy cathodes for application in vacuum microwave devices, in Proceedings of the IVEC/IVESC 2012, Monterey, CA, paper 125

G. Gaertner, P. Geittner, H. Lydtin, A. Ritz, Emission properties of top-layer scandate cathodes prepared by LAD. Appl. Surf. Sci. 111, 11–17 (1997)

J. Wang, W. Liu, Y. Wang, M. Zhou, in Abstract Book of 2008 International Vacuum Electron Sources Conference (IVESC), London (2008), p. 10

Y. Wang, J. Wang, W. Liu, J. Li, in Abstract Book of 2008 International Vacuum Electron Sources Conference (IVESC), London (2008), p. 16

J.R. Meijer et al. (MAPPER Lithography B.V.), Electron sources for MAPPER maskless lithography, in Proceedings of IVESC-ICEE-2014, Saint-Petersburg, Russia (2014)

D.H. Dowell, J. Smedley, et al., Cathode R&D for future light sources. SLAC-Pub-14002 and Nucl. Instrum. Methods Phys. Res. A 622, 685 (2010)

I. Brodie, C. Spindt, Vacuum microelectronics, in Advances in Electronics and Electron Physics, vol. 83, ed. by P. Hawkes (Academic Press, New York, 1992), p. 1

W. Zhu (ed.), Vacuum Microelectronics (Wiley, 2001)

N. Egorov, E.P. Sheshin, Electron Field Emission, Principles and Applications (In Russian) (Intellekt, 2011); Updated English version Field Emission Electronics (Springer, 2017)

V.V. Zhirnov, C. Lizzul-Rinne, G.J. Wojak, R.C. Sanwald, J.J. Hren, “Standardization” of field emission measurements. J. Vac. Sci. Technol. B 19, 87 (2001)

F. Charbonnier, Developing and using the field emitter as a high intensity electron source. Appl. Surf. Sci. 94/95, 26–43 (1996)

D. Wenger, W. Knapp, B. Hensel, S.F. Tedde, Transition of electron field emission to normal glow discharge. IEEE Trans. Electron Devices 61(11), 3864–3870 (2014)