Abstract

The uncertainty of models is now becoming one of the most important issues in the process of dealing with practical applications. In order to improve reliability and accuracy of inference, one usually adopts the model averaging method instead of selecting a single final model through a model selection procedure. Under the Bayesian framework, two upper bounds of the risk are derived and the posteriors are obtained by minimizing the bounds with a fixed prior. Then we propose two data-based algorithms to get proper priors for Bayesian model averaging in this paper. Simulations show that by using these priors, smaller mean squared prediction errors can be gotten both in synthetic data and real data studies, especially for the data of poor quality.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

1 Introduction

It is common in practice that the observed data can be described by different models. A standard procedure to make inference is to choose a best model according to some criteria, such as model predictive ability, model fitting ability or many different information criteria like AIC and BIC. After selection, all the inferences and conclusions are made based on the assumption that the selected model is correct.

However, the drawbacks of this approach exist obviously. The selection of one particular model may lead to riskier decisions since it ignores the model uncertainty. In other words, if we choose a wrong model, the consequence will be disastrous. Moral-Benito already pointed out the concern in [8], “From a pure empirical viewpoint, model uncertainty represents a concern because estimates may well depend on the particular model considered.” Therefore, combining multiple models to reduce the model uncertainty is very desirable.

As an alternative strategy, model averaging enables researchers to draw conclusions based on the whole universe of candidate models. In particular, researchers estimate all the candidate models and then compute a weighted average of all the estimates for the coefficient on X. There are two different approaches to model averaging in the literature including Frequentist Model Averaging (FMA) and Bayesian Model Averaging (BMA).

Frequentist approaches focus on improving prediction and use weighted mean of estimates from different models while Bayesian approaches focus on the probability that a model is true and consider priors and posteriors for different models. Ref. [4] suggested to use Bayesian inference to reduce the model uncertainty and pointed out the importance of the fragility of regression analysis to arbitrary decisions about the choice of control variables. Bayesian Model Averaging considers model uncertainty through the posterior distribution. The model posteriors are obtained by Bayes’ theorem, and therefore allowing for combined estimation and prediction. Compared with the FMA approaches, there are a huge literature on the use of BMA in statistics.

Influenced by [4], most works were concentrated only on the linear models. Ref. [10] extended to generalized linear models by providing a straightforward approximation. For more details, refer to some landmark reviews such as [2, 8, 15] on BMA. Moreover, Refs. [6, 19] gave good estimators of the risk in linear mixed-effects models. For getting the posterior distribution of the weights, Ref. [17] gave a method called SOIL which can well separate the variables in the true model from the rest under some assumptions. However, they used a default prior for the procedure.

The Bayesian approaches have the advantage of using arbitrary domain knowledge through a proper prior. However, they can’t guarantee the upper bound of the decision risk without assuming the truth of the prior. The Probably Approximately Correct (PAC) framework, first formulated by [7], was proposed to deal with this problem. It has been widely developed in recent years. Refs. [5, 11] gave tighter bounds in some specific cases. Ref. [1] provided an extended PAC-Bayes bound for learning the proper priors. But, they used the same data for learning the prior and the posterior simultaneously. This issue will make the ability of generalization worse.

There have been many recent developments in model averaging. Refs. [14, 18] presented two criteria, Mallows criterion and jackknife criterion, to determine the weights of model averaging. Their meanings are not as directly as minimizing the upper bound of the risk. They didn’t build the relation between the risk and the criteria theoretically. Refs. [6, 19] gave good estimators of the risk in a certain type of models while our work doesn’t specify the model type. For getting the posterior distribution of the weights, Ref. [17] gave a method without choosing a proper prior. Ref. [1] provided an extended PAC-Bayes bound for learning the proper priors. Nevertheless, it involved reusing of the data which increased the probability of overfitting.

In this paper, we propose a specific risk bound under our settings and two data-based methods for adjusting the priors in PAC-Bayes framework. And, two practical algorithms are given accordingly. The main contributions of this work are the following. First, sequential batch sampling method is proposed to deal with the situation that there isn’t historical data while the data can be sampled with the rules made by researchers. Second, when the historical data existed, we use similar old tasks to extract the mutual knowledge with the current task for adjusting the priors. Third, two theoretical risk bounds are provided for these two situations respectively. Fourth, empirical demonstration shows that the proposed meta-methods have excellent performances in the numerical studies.

The reminder of this paper is organized as follows. In Sect. 23.2, a standard risk bound and a practical sequential batch sampling method are established for obtaining a better prior in no previous data situation. Section 23.3 proposes the method to deal with historical similar data for the same purpose. Illustrative simulations given in Sect. 23.4 show that our algorithms will lead to more effective prediction and support our theoretical results. For real-world dataset, we apply the proposed methods to two real datasets and confirm the higher prediction accuracy of minimizing risk bound method. Section 23.5 concludes this paper with some discussions. Some proofs of theorems are delegated to the supplementary materials.

2 Sequential Adjustment of Priors

In a traditional supervised learning task, the learner needs to find an optimal model (or hypothesis) to fit the data, and then uses the learned model to make predictions. In the Bayesian approach, various models are allowed to fit the data. In particular, the learner needs to learn an optimal model distribution over the candidate models, and then uses the learned model distribution to make predictions.

More specifically, in a supervised learning task, we are given a set \(S=\{(x_i, y_i)\}_{i=1}^n\) of i.i.d. samples drawn from an unknown distribution D over \({\mathscr {X}}\times {\mathscr {Y}}\), i.e., \((x_i,y_i)\sim D\). The goal is to find a model h in the candidate model set \(\mathscr { H}\), a set of functions mapping features (feature vector) to responses, that minimizes the expected loss function \(\mathbb E_{(x, y)\sim D}L(h, x, y)\), where L is a bounded loss function. Without loss of generality, we assume L is bounded by [0, 1]. In the Bayesian framework, a distribution Q over \(\mathscr { H}\) is the purpose instead of searching a specific optimal model \(h\in \mathscr { H}\). Therefore, the goal turns to finding the optimal model distribution Q, which minimizes \(\mathbb E_{h\sim Q}\mathbb E_{(x, y)\sim D}L(h, x, y)\). Then one could use the weighted average of the models over \(\mathscr { H}\) to make predictions, namely, \(\widehat{y}=\mathbb E_{h\sim Q}h(x)\). More generally, we further assume that the candidate model set \(\mathscr { H}\) consists of K classes of models \(\mathscr {M}_1, \mathscr {M}_2, \dots , \mathscr {M}_K\) with \(\mathscr { H}=\bigcup _{k=1}^K\mathscr {M}_k\). Each model class \(\mathscr {M}_k\) is associated with a probability \(w_k\), and for each model class \(\mathscr {M}_k\), there is a distribution \(Q_k\) over \(\mathscr {M}_k\). For example, a model class \(\mathscr {M}_k\) could be a group of models obtained from the Lasso method, and the hyper-parameter \(\lambda \) in Lasso follows a distribution \(Q_k\). Another common example is that \(\mathscr {M}_k\) is a group of neural networks with a certain architecture, and the weights of neural networks follow a joint distribution \(Q_k\). In this way, the total distribution over \(\mathscr { H}\) can be written as \(\xi =(w, Q_1, \dots , Q_K)\), where w consists of \(w_1, \dots , w_K\) with \(||w||_1=1\). The goal of the learning task is to find an optimal distribution \(\xi \) which minimizes the expected risk \(R(\xi ,D):=\mathbb E_{h\sim \xi }\mathbb E_{(x, y)\sim D}L(h, x, y)\), and then the prediction is made by \(\widehat{y}=\mathbb E_{h\sim \xi }h(x)=\sum _{k=1}^K [w_k \cdot \mathbb E_{h\sim Q_k}h(x)]\).

Since sample distribution D is unknown, the expected risk \(R(\xi ,D)\) cannot be computed directly. Therefore, it is usually be approximated by the empirical risk \(\widehat{R}(\xi ,S):=\mathbb E_{h\sim \xi }\sum _{(x_i,y_i)\in S} L(h,x_i,y_i)/|S|\) in practice, and \(\xi \) is learned by minimizing the empirical risk \(\widehat{R}(\xi ,S)\). When the sample size is large enough, it would be a good approximation. However, in many situations, we don’t have so much data, which may lead to large difference between them. Thus, using the empirical risk \(\widehat{R}(\xi ,S)\) to approximate the expected risk \(R(\xi ,D)\) is not appropriate any longer.

We first study the difference between the empirical risk \(\widehat{R}(\xi ,S)\) and the expected risk \(R(\xi ,D)\). Based on the literature [7], we can obtain an upper bound of their difference which is stated as the following theorem.

Theorem 23.1

Let \(\xi ^0\) be a prior distribution over \(\mathscr { H}\) that must be chosen before observing the samples, and let \(\delta \in (0,1)\). Then with probability at least \(1-\delta \), the following inequality holds for all posterior distributions \(\xi \) over \(\mathscr { H}\),

where n is the cardinality of sample set S, and \(\mathrm {KL}(\cdot || \cdot )\) denotes the Kullback-Leibler (KL) divergence between two distributions.Footnote 1

According to the above theorem, it is clear that only when the sample size n is large, the difference \(R(\xi ,D)-\widehat{R}(\xi ,S)\) can be guaranteed to be small. Thus, minimizing \(\widehat{R}(\xi ,S)\) may not lead to the minimizer of \(R(\xi ,D)\), which matches our intuition. To avoid the risk of the approximation, one can minimizes the upper bound of the expected risk \(R(\xi ,D)\) in stead of using the empirical risk \(\widehat{R}(\xi ,S)\) as an approximation. In particular, we denote the right hand side of Eq.(23.1) by \(\overline{R}(\xi ,\xi ^0,S)\). Then one can learn the model distribution \(\xi \) by minimizing \(\overline{R}(\xi ,\xi ^0,S)\). Intuitively, such choice of \(\xi \) for the learning task makes the worst case best.

Theorem 23.1 also indicates that the prior \(\xi ^0\) plays an important role. Since the choice of \(\xi \) balances the tradeoff between the empirical risk \(\widehat{R}(\xi ,S)\) and the regularization term, if the prior \(\xi ^0\) is far away from the true optimal model distribution \(\xi ^*\), the posterior \(\xi \) will also be bad. The best situation for optimizing the posterior \(\xi \) is that the prior \(\xi ^0\) exactly equals to the true optimal model distribution \(\xi ^*\). Then, the regularization term disappears. In other words, if there is a good prior \(\xi ^0\) which is close to \(\xi ^*\), the upper bound \(\overline{R}(\xi ,\xi ^0,S)\) will be small. However, without any prior knowledge, one can only use data to help obtain a better prior. The naive method is directly using the non-informative prior as \(\xi ^0\) for minimizing \(\overline{R}(\xi ,\xi ^0,S)\) to get the posterior \(\xi \). In this paper, we propose a more carefully designed method to get a better posterior than the naive method. In the following, we consider two different scenarioes for learning the prior. First, the data can be collected adaptively. The learner is allowed to do sampling in rounds and updates the prior distribution after each sampling. In each round, the learner can sample the data according to the prior distribution in the current round. Such iterative procedure updates the prior step by step. Ultimately, compared with dealing the whole data at once, this procedure of adjusting prior leads to a smaller upper bound. Moreover, it also gives an opportunity to choose some good sample sets for reducing the volatility of the estimators which is measured by \(v(\xi ,D)=\mathbb E_x\mathbb E_{h}(h(x)-\mathbb E_{h}h(x))^2\). The function \(\widehat{v}(\xi ,B)=\frac{1}{|B|}\sum _{x\in B}\mathbb E_{h}(h(x)-\mathbb E_{h}h(x))^2\) is defined to measure the volatility of the posterior \(\xi \) at the sample set B. The complete algorithm for sequential batch sampling is shown in Algorithm 6. Second, the data including the new task and other similar old tasks which have been already collected. The sequential sampling method can not be adopted in this scenario. Since the previous tasks are similar with the new task, we could use these old data to learn the prior for the new task. The details will be discussed in Sect. 23.3.

For Algorithm 6, the data is processed in b steps. First, a space-filling design is used as initial experiment points to reduce the probability of overfitting caused by the unbalanced sampling. Traditional space-filling design aims to fill the input space with design points that are as “uniform” as possible in the input space. The uniformity of space-filling design is illustrated in Fig. 23.1. For next steps, uncertain points are needed to be explored. And, the uncertainty is measured by the volatility v. Hence, the batch with large volatility will be chosen. Note that if we set a huge \(\gamma \), we will just explore a small region of the input space.

The setting of \(\gamma \) refers to [20]. However, in practice, it is found that this parameter \(\gamma \) does not matter much, since the results are similar with a wide range of \(\gamma \). This procedure helps to reduce the variance of the estimator which is proved in [20] by sequential sampling. Furthermore, it also helps to adjust the prior in each step which is called learning the prior. The proposition is stated as below.

Proposition 23.1

For \(i=1,2,\ldots ,b\), let \(B_i=S\), \(\xi ^*\) is the minimizer of the RHS of Eq. (23.1) with non-informative prior \(\xi ^0\) and \(\xi _i\) are obtained by Algorithm 6, then we have \(\overline{R}(\xi _b,\xi _{b-1},S)\le \overline{R}(\xi ^*,\xi ^0,S)\).

The above proposition can be understood straightforwardly. First, since we adjust the prior through the data step by step, the final prior \(\xi _{b-1}\) is better than the non-informative prior. Consequently, it receives the smaller expected risk. Second, we choose the sample sets sequentially with large volatility to do experiments in order to reduce uncertainty. The property is also confirmed in Sect. 23.4.

3 Priors Based on Historical Data

As mentioned in Sect. 23.2, when the data of historical tasks and the new tasks have already collected, sampling method can not be used any longer. Still, the learner needs a good prior for the reliable inferences. In order to get a good prior, it is helpful to extract the mutual knowledge from similar tasks. In particular, there are m sample tasks \(T_1,\ldots , T_m\) i.i.d. generated from an unknown task distribution \(\tau \). For each sample task \(T_i\), a sample set \(S_i\) with \(n_i\) samples is generated from an unknown distribution \(D_i\). Without ambiguity, we use notation \(\xi (\xi ^0,S)\) to denote the posterior under the prior \(\xi ^0\) after observing the sample set S. The quality of a prior \(\xi ^0\) is measured by \(\mathbb E_{D_i\sim \tau }\mathbb E_{S_i\sim D_i^{n_i}}R(\xi (\xi ^0,S_i),D_i)\). Thus, the expected loss we want to minimize is

Similar to the single-task case, the above expected risk cannot be computed directly, thus the following empirical risk is used to estimate it:

where each sample set \(S_i\) is divided into a training set \(S_i^{train}\) and a validation set \(S_i^{validation}\).

Consider the regression setting for task T. Suppose the true model is

where \(f_T:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) is the function to be learned, the error term \(\varepsilon _T\) is assumed to be independent of X and has a known probability density \(q(t), t\in {\mathbb {R}}\) with mean 0 and a finite variance. The unknown function \(\sigma _T(x_T)\) controls the variance of the error at \(X=x_T\). There are \(n_T\) i.i.d. samples \(\{(x_{T,i},y_{T,i})\}_{i=1}^{n_T}\) drawn from an unknown joint distribution of \((x_T,y_T)\). Assume that there is a candidate model set \(\mathscr { H}\). Each of them is a function mapping features (feature vector) to response, i.e., \(h\in \mathscr { H}:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\). To take the information of the old tasks, which can reflect the importance of each \(h\in \mathscr { H}\), the following Algorithm 7 is proposed.

This algorithm is based on the cross-validation framework. First, using \(T_i\) to obtain the candidate priors \(\xi _i\) by minimizing the risk bound with non-informative prior. Cross-validation determines the importance of the priors. The j-th task is divided into two parts randomly. The first part is used to learn the posterior with the prior \(\xi _i\). The second part is to evaluate the performance of the posterior by its likelihood function. This evaluation is inspired by [9]. To simplify the determination of the weights, Ref. [9] proposed a frequentist approach to BMA. The Bayes’ theorem was replaced by the Schwarz asymptotic approximation which could be viewed as using maximized likelihood function as the weights of the candidate models. The \(\widehat{\sigma }\) on the denominator of \(E_j^i\) makes the weight larger if the model is accurate. This procedure repeats many times for each pair (i, j). Their averages reveal the importance of the priors. In the end, the \(\xi ^*\) is obtained by weighted averaging them all. the property of this algorithm can be guaranteed by the following theorem.

The following regularization conditions are assumed for the results. First, q is assumed to be a known distribution with 0 and variance 1.

-

(C1)

The functions f and \(\sigma \) are uniformly bounded, i.e., \(\sup _x|f(x)|\le A<\infty \) and \(0<c_m\le \sigma (x)\le c_M<\infty \) for constants \(A,c_m\) and \(c_M\).

-

(C2)

The error distribution q satisfies that for each \(0<s_0<1\) and \(c_T>0\), there exists a constant B such that

$$\int q(x)\ln \frac{q(x)}{\frac{1}{s}q(\frac{x-t}{s})}\mu (dx)\le B((1-s)^2+t^2)$$for all \(s_0\le s\le s_0^{-1}\) and \(-c_T\le t\le c_T\).

-

(C3)

The risks of the estimators for approximating f and \(\sigma ^2\) decrease as the sample size increases.

For the condition (C1), note that, when we deal with k-way classification tasks, the responses belong to \(\{1,2,\ldots ,k\}\) which is bounded obviously. Moreover, if the input space is a finite region which often happens in real datasets, most common functions are bounded uniformly. The constants \(A,c_m,c_M\) are involved in the derivation of the risk bounds, but they can be unknown in practice when we implement the Algorithm 7. The condition (C2) is satisfied by Gaussian, t (with degrees of freedom larger than two), double-exponential, and so on. The condition (C3) usually holds for a good estimating procedure, like consistent estimators. A model has consistency if the expected risk tends to zero when experimental size tends to infinity. Note that the conditions are satisfied in most situations.

Theorem 23.2

Assume (C1)–(C3) are satisfied and \(\sigma _{T_i}\) is known. Then, the combined posterior \(\xi ^*\) as given above satisfies

with probability at least \(1-\delta \), where the constant \(C_1,C_2\) depend on the regularization conditions, \(\pi \) is the initial prior which should be non-informative prior and \(\xi _j^*\) is the minimizer of Eq. (23.1) with \(\xi ^0=\xi _j\) and \(S=S_{i,n_i^{'}}^{(1)}\).

For simplify, we assume that the condition that \(\sigma _{T_i}\) is known in Theorem 23.2. In fact it is not a necessary condition, a more general case and corresponding proof can be found in Appendix.

In this general proof, it can be seen that variance estimation is also important for the Algorithm 7. Even if a procedure estimates \(f_T\) very well, a bad estimator of \(\sigma _T\) can substantially reduce its weight in the final estimator. Under the condition (C3), the risks of a good procedure for estimating \(f_T\) and \(\sigma _T\) usually decrease as the sample size increases. The influence of the number of testing points \(n'_i\) is quite clear. Smaller \(n'_i\) decreases the first penalty term but increases the main terms that involve the risks of each j. Moreover, Theorem 23.2 reveals the vital property that if one alternative model is consistent, the combined model will also have the consistency.

4 Simulations

In this section, some examples are shown to illustrate the procedure of Algorithms 6 and 7 and confirm Proposition 23.1. The method of minimizing the upper bound in Theorem 23.1 with non-informative prior is denoted by RBM (Risk Bound Method). Also, the SOIL method in [17] is under the comparison. The optimization for RHS of Eq. (23.1) in our algorithms is dealt by gradient descend method. R package “SOIL” is used to obtain the results of the SOIL method. First, we begin with linear models.

4.1 Synthetic Data Analysis

Example 23.1

The simulation data \(\{({x_i},y_i)\}_{i=1}^n\) is generated for the RBM from the linear model \(y_i=1+{x_i}^T\beta +\sigma \varepsilon _i\), where \(\varepsilon _i\sim N(0,1)\), \(\sigma \in \{1,5\}\) and \({x_i}\sim N_d(0,\Sigma )\). For each element \(\Sigma _{ij}\) of \(\Sigma \), \(\Sigma _{ij}=\rho ^{|i-j|}~(i\ne j)\) or \(1~(i=j)\) with \(\rho \in \{0,0.9\}\). The sequential batch sampling has b steps, and each step uses n/b samples followed Algorithm 6.

All the specific settings for parameters are summarized in Table 23.1, and the confidence level \(\delta \) in Theorem 23.1 is set to 0.01. The Mean Squared Prediction Error(MSPE) \(\mathbb E_x|f(x)-\widehat{f}(x)|^2\) and volatility defined in Sect. 23.2 are compared. They are obtained by sampling 1000 samples from the same distribution and computing their empirical MSPE \(\sum _x|f(x)-\widehat{f}(x)|^2/10^3\) and volatility. For each model setting with a specific choice of the parameters \((\rho ,\sigma )\), we repeat 100 times and compute the average empirical value. The comparison among RBM, SOIL and SBS(Sequential Batch Sampling) are shown in Table 23.2.

The volatility of SOIL method is the smallest and very close to zero. This phenomenon shows that SOIL is focused on a few models, even just one model when the volatility equals to zero. Consequently, its MSPE is larger than other two methods. SBS as a modification of RBM has similar results with RBM when \(\sigma \) is small. However, when \(\sigma \) is large, SBS performs much better than RBM. In this situation, the information of data is easily covered by big noises. Hence, a good prior which can provide more information is vital for this procedure.

Next example considers the same comparison but in non-linear models. In last example, the alternative models include the true model, but now the true non-linear model is approximated by many linear models.

Example 23.2

The simulation data \(\{({x_i},y_i)\}_{i=1}^{50}\) is generated for the RBM from the non-linear models

-

1.

\(y_i=1+\sin (x_{i,1})+\cos (x_{i,2})+\varepsilon _i\),

-

2.

\(y_i=1+\sin (x_{i,1}+x_{i,2})+\varepsilon _i\),

where \(\varepsilon _i\sim N(0,1)\), and \({x_i}\sim N_8(0,I)\). The sequential batch sampling has 5 steps, and each step uses 10 samples followed Algorithm 6.

The results of Example 23.2 is listed in Table 23.3. Mostly, it is similar with the results of Example 23.1. The difference is that the volatility of SOIL becomes large when the model is completely non-linear. Using linear models to fit non-linear model obviously increases the model uncertainty, since none of the fitting models is correct.

The final example is under the situation that the data has been already collected. Hence, we can’t use the SBS method to get the data. However, we have the extra data of many old similar tasks. In particular, we have the data of Example 23.1. Now, the new task is to fit a new model.

Example 23.3

The data of Example 23.1 with \((\rho ,\sigma )=(0,1)\) is given. The new task data \(\{({x_i},y_i)\}_{i=1}^{20}\) is generated from the linear model \(y_i=1+{x_i}^T\beta +\sigma \varepsilon _i\), where \(\varepsilon _i\sim N(0,1)\), \(\sigma \in \{1,2,3,4,5\}\), \(\beta =\{1,-1,0,0,0.5,0,\ldots ,0\}\) and \({x_i}\sim N_{10}(0,I)\).

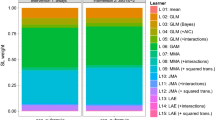

The method described in Algorithm 7 is denoted by HDR (Historical Data Related). The results in Fig. 23.2 show the high consistency with the last two examples. When \(\sigma \) is small, the different priors lead to similar result since the current data has key influence. However, when \(\sigma \) is large, the difference between RMB and HDR is huge. The reason is that the current data has been polluted by the strong noise. Hence, a good prior can provide the vital information about the model distribution.

Comparisons among the three methods in Example 23.3

4.2 Real Data Study

Here, we apply the proposed methods to two real datasets, BGS data and Bardet data, which are also used in [17].

First, the BGS data is with small d and from the Berkeley Guidance Study (BGS) by [13]. The dataset records 66 boys’ physical growth measures from birth to eighteen years. Following [17], we consider the same regression model. The response is age 18 height and the factors include weights at ages two (WT2) and nine (WT9), heights at ages two (HT2) and nine (HT9), age nine leg circumference (LG9) and age 18 strength (ST18).

Second, for large d, the Bardet data collects tissue samples from the eyes of 120 twelve-week-old male rats. For each tissue, the RNAs of 31, 042 selected probes are measured by the normalized intensity valued. The gene intensity values are in log scale. Gene TRIM32, which causes the Bardet-Biedl syndrome, is the response in this study. The genes that are related to it are investigated. A screening method [3] is applied to the original probes. This screened dataset with 200 probes for each of 120 tissues is also used in [17].

Both cases are data-given cases that we can’t use sequential batch sampling method. For the different setting of d, we assign corresponding similar historical data for two real datasets. The data of model 1 in Example 23.1 for the BGS data with small d. The data of model 3 in Example 23.1 for the Bardet data with large d.

We randomly sample 10 rows from the data as the test set to calculate empirical MSPE and volatility. The results are summarized in Table 23.4. From Table 23.4, we can see that both RBM and HDR have smaller MSPE than SOIL. However, HDR doesn’t perform much better than RBM. This can be explained intuitively as follows. In theory, the historical tasks and the current task are assumed that they come from the same task distribution. But in practice, how to measure the similarity between tasks is still a problem. Hence, an unrelated historical dataset may provide less information for the current prediction.

5 Concluding Remarks

This paper is based on the PAC-Bayes framework to study the model averaging problem. More concretely, the work is about how to assign the proper distribution on the candidate models. The work proposes specific upper bounds of the risks in different situations and aims to minimize them. In other words, it makes the worst situation best. For this purpose, two practical algorithms are provided to solve this optimization under two realistic situations respectively. One is that no previous data can be used, but the experimenters have the opportunity to design the sampling method before the collection of the data. The other one is that much historical data is given, the analysts should figure out a proper method to deal with these data. In the first case, the prior is adjusted step by step. Compared with dealing the whole data at once, this sequential method has the smaller upper bound of the risk. In the second case, using historical similar tasks to extract the information about the prior which is called meta-learning. The meta-learner is for the prior and the base-learner is for the posterior. Both methods are confirmed to be effective in our simulation and real data study.

However, some problems need to be investigated. First, in sequential batch sampling procedure, the volatility is used as a criterion to sample the data. This choice is based on our experience. There may exist other choices that have better results. Second, when a lot of historical data is available, many similar old tasks may be considered to extract more information for learning the new task better. How to define ‘similar’ is still an open problem. In practice, the similarity isn’t measured by the data. Instead, it is judged by experts, which is not expected.

Notes

- 1.

\(\mathrm {KL}(P||P^0)\) is defined as \(\mathbb E_{x\sim P}\ln \frac{P(x)}{P^0(x)}\).

References

Amit, R., Meir, R.: Meta-learning by adjusting priors based on extended pac-bayes theory. In: International Conference on Machine Learning, pp. 205–214 (2018)

Hoeting, J.A., Madigan, D., Raftery, A.E., Volinsky, C.T.: Bayesian model averaging: A tutorial. Stat. Sci. 14(4), 382–401 (1999)

Huang, J., Ma, S., Zhang, C.H.: Adaptive lasso for sparse high-dimensional regression. Stat. Sin. 18(4), 1603–1618 (2008)

Leamer, E.E.: Specification searches. Wiley, New York (1978)

Lever, G., Laviolette, F., Shawe-Taylor, J.: Tighter pac-bayes bounds through distribution-dependent priors. Theor. Comput. Sci. 473(2), 4–28 (2013)

Liang, H., Zou, G., Wan, A.T.K., Zhang, X.: Optimal weight choice for frequentist model average estimators. J. Am. Stati. Assoc. 106(495), 1053–1066 (2011)

Mcallester, D.A.: Pac-bayesian model averaging. In Proceedings of the Twelfth Annual Conference on Computational Learning Theory, pp. 164–170 (1999)

Moral-Benito, E.: Model averaging in economics: An overview. J. Econ. Surv. 29(1), 46–75 (2015)

Raftery, A.E.: Bayesian model selection in social research. Soc. Methodol. 25(25), 111–163 (1995)

Raftery, A.E.: Approximate bayes factors and accounting for model uncertainty in generalised linear models. Biometrika 83(2), 251–266 (1996)

Seeger, M.: Pac-bayesian generalisation error bounds for gaussian process classification. J. Mach. Learn. Res. 3(2), 233–269 (2002)

Shalev-Shwartz, S., Ben-David, S.: Understanding Machine Learning: From Theory to Algorithms. Cambridge University Press (2014)

Tuddenham, R.D., Snyder, M.M.: Physical growth of california boys and girls from birth to eighteen years. Publ. Child Dev. 1, 183–364 (1954)

Wan, A.T.K., Zhang, X., Zou, G.: Least squares model averaging by mallows criterion. J Econ. 156(2), 277–283 (2010)

Wasserman, L.: Bayesian model selection and model averaging. J. Math. Psychol. 44(1), 92–107 (2000)

Yang, Y.: Adaptive regression by mixing. J. Am. Stat. Assoc. 96(454), 574–588 (2001)

Ye, C., Yang, Y., Yang, Y.: Sparsity oriented importance learning for high-dimensional linear regression. J. Am. Stat. Assoc. 2, 1–16 (2016)

Zhang, X., Wan, A.T.K., Zou, G.: Model averaging by jackknife criterion in models with dependent data. J. Econ. 174(2), 82–94 (2013)

Zhang, X., Zou, G., Liang, H.: Model averaging and weight choice in linear mixed-effects models. Biometrika 1(1), 205–218 (2014)

Zhou, Q., Ernst, P.A., Morgan, K.L., Rubin, D.B., Zhang, A.: Sequential rerandomization. Biometrika 105(3), 745–752 (2018)

Acknowledgments

The authors sincerely thank the editors and a referee for their valuable comments, which further improve this paper. The work is supported by NSFC grant 11671019, LMEQF and Beijing Institute of Technology Research Fund Program for Young Scholars.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix

Appendix

First, we review the classical PAC-Bayes bound [7, 12] with general notations.

Lemma 23.1

Let \({\mathscr {X}}\) be a sample space and \(\mathscr { F}\) be a function space over \({\mathscr {X}}\). Define a loss function \(g(f,X):\mathscr { F}\times {\mathscr {X}}\rightarrow [0,1]\), and \(S=\{X_1,\ldots ,X_n\}\) be a sequence of n independent identical distributed random samples. Let \(\pi \) be some prior distribution over \(\mathscr { F}\). For any \(\delta \in (0,1]\), the following bound holds for all posterior distributions \(\rho \) over \(\mathscr { F}\),

Proof of Theorem 23.1 We use Lemma 23.1 to bound the expected risk with the following substitutions. The n samples are \(X_i\triangleq z_i\). The function \(f\triangleq h\) where \(h\in \mathscr { H}\). The loss function \(g(f,X)\triangleq L(h,z)\in [0,1]\). The prior \(\pi \) is defined by \(\pi \triangleq \xi ^0\), in which we first sample k from \(\{1,\ldots ,K\}\) according to corresponding weights \(\{w_1,\ldots ,w_K\}\) and then sample h from \(Q_k\). The posterior is defined similarly, \(\rho \triangleq \xi \).

The KL-divergence term is

Substituting the above into Eq. (23.2), it follows that

Using the notations in Sect. 23.2, we can rewrite the above as below,

Proof of Proposition 23.1 First, we proof that for \(i=2,\ldots ,b\),

By definition of \(\xi _i\),

Following these inequalities,

This finishes the proof.

Proof of Theorem 23.2 According to Theorem 1 in [16], we have

where \(\xi _j^*\) is the minimizer of Eq. (23.1) with \(\xi _0=\xi _j\) and \(S=S_{i,\alpha }^{(1)}\) denoted by \(\xi _j^*(\xi _j,S_{i,\alpha }^{(1)})\).

For any \(\alpha \ge n_i^{'}\) and an estimator satisfied the condition (C3), the inequalities \({\mathbb E}||\sigma _{T_i}^2-\widehat{\sigma }_{j,n_i^{'}}^2||^2\ge {\mathbb E}||\sigma _{T_i}^2-\widehat{\sigma }_{j,\alpha }^2||^2\) and \({R}(\xi _{j}^*(\xi _j,S_{i,n_i^{'}}^{(1)}),D_i)\ge {R}(\xi _{}^*(\xi _j,S_{i,\alpha }^{(1)}),D_i)\) hold. Plugging into Eq. (23.6) for \(\alpha =n_i^{'}+1,\ldots ,n_i\), it follows that

where \(\xi _j^*\) is the minimizer of Eq. (23.1) with \(\xi ^0=\xi _j\) and \(S=S_{i,n_i^{'}}^{(1)}\).

Then, the result follows by the above inequality combined with Eq. (23.5). In order to obtain the form in Theorem 23.2, one only needs to note that if \(\sigma _{T_i}\) is known, the term \({\mathbb E}||\sigma _{T_i}^2-\widehat{\sigma }_{j,n_i^{'}}^2||^2\) vanishes.

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ai, M., Huang, Y., Yu, J. (2020). Data-Based Priors for Bayesian Model Averaging. In: Fan, J., Pan, J. (eds) Contemporary Experimental Design, Multivariate Analysis and Data Mining. Springer, Cham. https://doi.org/10.1007/978-3-030-46161-4_23

Download citation

DOI: https://doi.org/10.1007/978-3-030-46161-4_23

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-46160-7

Online ISBN: 978-3-030-46161-4

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)