Abstract

This chapter focuses on investment decision-making under uncertainty. The traditional approach to human decision-making is characterized by its attempts at optimization and maximization: optimizing probability estimates and maximizing expected utility. However, these models often fail in the face of uncertainty, where probability estimates are imprecise or simply unknown. The author proposes alternative approaches to the uncertainty challenge within and beyond the context of financial investment and provides preliminary evidence that uncertainty can be reduced by using frequency counts, single reasons, simple heuristics, and decision reference points.


Keywords

- Financial decision-making

- Uncertainty

- Radical uncertainty

- Bounded rationality

- One-reason decision-making

- Simple heuristics

- Decision reference points

The Uncertainty Challenge: Rational Models in an Irrational Market

Frank Knight proposed a widely accepted distinction between risk (when the probabilities of expected outcomes are known) and uncertainty (when the probabilities of expected outcomes are unknown). Knight may not have anticipated that behavioral decision making research would largely restrict its focus to risky decisions by adhering to a reductionist optimization approach to human decision making. This approach reduces the concept of decision rationality to a small set of axioms and optimizes by deriving a single utility score for each choice option from the probability-weighted sum of the values of its possible outcomes. Mainstream research in economics, finance, and behavioral decision making has shown a persistent preference for probability-based models that replace uncertainty with risk, so that decisions can be parsimoniously gauged by rational axioms and the principle of utility maximization (von Neumann & Morgenstern, 1944; Savage, 1954).
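The “weighted sum” behind expected utility can be made concrete with a minimal Python sketch. It assumes each option is a list of (probability, payoff) pairs with known probabilities, which is precisely the risk case in Knight’s sense; the option names, payoffs, and the log-shaped utility function (a Bernoulli-style concave utility, shifted by one so that a payoff of zero is allowed) are hypothetical illustrations, not models taken from the chapter.

```python
import math
from typing import Callable, Dict, List, Tuple

Outcome = Tuple[float, float]  # (probability, payoff)

def expected_utility(option: List[Outcome],
                     utility: Callable[[float], float] = lambda x: x) -> float:
    """Collapse an option into a single score: the probability-weighted sum of utilities."""
    if not math.isclose(sum(p for p, _ in option), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * utility(x) for p, x in option)

def choose(options: Dict[str, List[Outcome]],
           utility: Callable[[float], float] = lambda x: x) -> str:
    """Return the name of the option with the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name], utility))

# Hypothetical options: a sure 100 vs. a 50/50 gamble between 0 and 250.
options = {
    "sure": [(1.0, 100.0)],
    "gamble": [(0.5, 0.0), (0.5, 250.0)],
}
print(choose(options))                       # risk-neutral: utility equals payoff
print(choose(options, utility=math.log1p))   # hypothetical concave utility, log(1 + x)
```

With these numbers the risk-neutral chooser takes the gamble (expected value 125 vs. 100), while the concave utility favors the sure 100. Either way, each option is collapsed into a single number, and the procedure has nothing to work with once the probabilities are unknown.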

This persistent effort to reduce decision problems to mathematical formulations seems to have its roots in the history of science. In 1654, prompted by a question of how to divide the stakes of an unfinished game of chance, Blaise Pascal and Pierre de Fermat formulated probabilities for chance events. Their correspondence established the concept of expected value and marked the beginning of the mathematical study of decision making. Following their lead, in 1738, Daniel Bernoulli laid the foundation for risk science by examining subjective value functions (see Buchanan & O’Connell, 2006; Shafer, 1990).

Another significant event in the literature of behavioral and financial decision making was the Keynes vs. Ramsey-Savage debate that followed the publication of A Treatise on Probability by John Maynard Keynes in 1921. The central point of the debate was whether probability estimates are appropriate for modeling financial situations in which, as Keynes put it, “we simply do not know.” Keynes believed that the financial and business environment is characterized by “radical uncertainty”: the only reasonable answer to the question “What will interest rates be in 20 years’ time?” is “we simply do not know.” In contrast, Ramsey (1922, 1926/2016) argued that probabilities, understood as a decision-maker’s degrees of belief, can be inferred from that decision-maker’s choices and preferences, based on a small set of rational axioms.

Surprisingly, there was little development in theories of decision making in the nearly two centuries between Bernoulli (1738) and Keynes (1921). By contrast, this period saw rapid developments and groundbreaking discoveries in many other areas of science. These achievements include, to name a few, the Bayesian model of probability by Thomas Bayes (1763), John Dalton’s atomic theory in chemistry (1805), Darwin’s theory of evolution (1859), Mendel’s laws of inheritance (1865), Mendeleev’s periodic table (1869), Karl Pearson’s statistical testing (1892), Albert Einstein’s theory of special relativity (1905), Walther Nernst’s third law of thermodynamics (1906), and Thomas Hunt Morgan’s laws of genetic linkage and genetic recombination (1911 and 1915). All these developments highlight the importance of logic and rationalism and reveal the beauty of a reductionist approach: discovering simple rules in a complicated universe and deriving fundamental principles that govern the behavior of diverse organisms. With such a reductionist vision, psychology embarked on a journey to discover a few physics-like laws of behavior, as seen in the effort of structuralism to find the elements of thought, of behaviorism to find general principles of reinforcement and learning, and of cognitive research to reveal a small set of logical rules of reasoning and decision making.

The power of Newton’s laws in physics and the elegance of the periodic table in chemistry have inspired social scientists to seek a small set of laws that concisely describe and predict complicated human behavior. In economics and finance, work has focused on identifying the axioms of rationality and the principles of probability. As a result, decision rationality is defined largely by logical consistency with the neoclassical standard of expected utility maximization. To be rational, homo economicus, the economic man, would have to know all of the expected consequences and their probabilities. In reality, however, this assumption of omniscience is often constrained by the cognitive limitations of the decision-maker and shattered by the harsh uncertainty of the decision environment.

In the world of financial investment, not only are the probabilities of future returns unknown, but market reactions to a company’s observable performance are also capricious. Figure 9.1 compares the changes in revenues over 8–10 years with the corresponding changes in market capitalization for three companies (Wal-Mart, Exxon Mobil, and Yahoo). As Fig. 9.1 shows, the market reacted very differently to similar linear increases in revenue at these three companies. Adding to this uncertainty, the market’s expectations for each company, as reflected in its market cap, did not respond consistently to a simple linear increase in revenues over time. It is therefore difficult to rely on the historical record to predict what the market’s expectations will be at the next moment. Although a post hoc statistical fitting function may show a consistent overall correspondence between changes in revenue and changes in market cap, such a function is insufficient for making investment decisions in real time under market uncertainty.

Asset pricing theories typically relate expected risk premiums to covariances between the return on an asset and some ex ante risk factors. However, it is difficult to identify and retain a stable set of common risk factors, since risks are specific to particular task environments, types of assets, and time periods (Keim & Stambaugh, 1986). Using an asset-pricing model based on ex ante variables is particularly problematic under uncertainty, where the past need not define the present or the future. Regression analysis of market data revealed that risk premiums varied as a function of the level of uncertainty. In the bond market, as one would reasonably predict, risk premiums are positively correlated with actual asset prices. In contrast, in the stock market for small firms with high levels of uncertainty, risk premiums are largely negatively correlated with actual stock prices (Keim & Stambaugh, 1986).
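For readers less familiar with the covariance idea, the Python sketch below estimates a single-factor beta, cov(r, f) / var(f), and multiplies it by a factor premium. It is a generic textbook relation, not the model tested by Keim and Stambaugh (1986); the factor series, asset returns, and the 5% factor premium are hypothetical. Note that the beta is fitted entirely from past data, so it is informative only if the relation between asset and factor stays stable, which is exactly what uncertainty calls into question.

```python
from statistics import fmean

def covariance(xs, ys):
    """Sample covariance with an n - 1 denominator."""
    mx, my = fmean(xs), fmean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)

def factor_beta(asset_returns, factor_returns):
    """Sensitivity of an asset's return to one risk factor: cov(r, f) / var(f)."""
    return covariance(asset_returns, factor_returns) / covariance(factor_returns, factor_returns)

def expected_risk_premium(beta, factor_premium):
    """Single-factor pricing relation: asset premium = beta * factor premium."""
    return beta * factor_premium

# Hypothetical data: an asset that moves exactly twice as much as the factor.
factor = [0.01, -0.02, 0.03, 0.00]
asset = [2 * f for f in factor]
beta = factor_beta(asset, factor)
print(beta, expected_risk_premium(beta, 0.05))  # 0.05 is a hypothetical factor premium
```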

Lessons from Russell’s Turkey

The commonly accepted method of making backward inferences from choice preferences, promoted by Ramsey (1922), von Neumann and Morgenstern (1944), and Savage (1954), is only possible under the assumption that the decision-maker’s preferences obey the axioms of rationality, such as dominance, transitivity, and independence. These axioms serve as foundations for neoclassical theories of economics. However, people systematically violate these axioms when making judgments and decisions (Kahneman & Tversky, 1979; Kahneman, 2000, 2011; Tversky & Kahneman, 1992). Daniel McFadden (1999) noted that people are often rule-driven rather than engaged in the cost-benefit analysis that neoclassical economic models assume. When probability-based models encounter financial reality, expected utility calibrations simply do not match the “irrational” behaviors of investors. The ultimate challenge that financial reality poses to finance theory is market uncertainty, characterized by events that are unprecedented, unpredicted, and unpredictable in their potential effects, and by a lack of knowledge, experience, and time for deliberation.

When risk models are “forced” to guide financial decisions under uncertainty, each parameter in the model brings in a certain amount of noise and complexity; these compound one another and eventually undermine the accuracy of the model’s predictions. More importantly, without a psychological analysis of the motives and values of the decision-maker and the decision-recipient, the model’s probability estimates can be inaccurate and dangerously misleading. In the following, I illustrate how probability-based calculations fail in a real world of uncertainty, and suggest possible remedies, using the example of “Bertrand Russell’s Turkey.” In his book The Problems of Philosophy, Russell (1957) demonstrated how logical and probability-based inductive reasoning can go astray with an ingenious example of an inductivist turkey, reformulated below.

A smart turkey capable of inductive reasoning was captured by a farmer and brought to his turkey farm. Although scared, the inductivist turkey did not jump to conclusions. It found that, on its first morning at the farm, it was fed at 9 a.m. The turkey continued its sampling, making observations on different days of the week and under different weather conditions. Each day, it updated its Bayesian estimate of the probability that it would be fed again, and this probability increased each morning that it was fed. Finally, after 100 days of observation, the turkey was satisfied with its Bayesian estimate and concluded: “I will be fed tomorrow morning” and “I am always fed in the morning.” That was the day before Christmas Eve. On the morning of Christmas Eve, the turkey was not fed but instead had its throat cut.
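The chapter does not specify which Bayesian model the turkey uses. As one standard choice, assumed here, a uniform Beta(1, 1) prior on a fixed daily feeding rate gives Laplace’s rule of succession; the Python sketch below shows how the turkey’s confidence climbs with every morning it is fed.

```python
from fractions import Fraction

def prob_fed_tomorrow(days_fed: int, days_observed: int) -> Fraction:
    """Posterior predictive probability of being fed tomorrow.

    Assumes a uniform Beta(1, 1) prior over a fixed daily feeding rate and
    exchangeable mornings (Laplace's rule of succession): (fed + 1) / (observed + 2).
    """
    return Fraction(days_fed + 1, days_observed + 2)

# The turkey is fed every morning it observes, so its estimate only ever rises.
for day in (1, 10, 50, 100):
    p = prob_fed_tomorrow(day, day)
    print(f"after {day:3d} fed mornings: P(fed tomorrow) = {p} ~ {float(p):.3f}")
```

After 100 fed mornings the estimate reaches 101/102, about 0.990. Nothing in the data signals what happens on day 101, because the model never represents why the farmer feeds the turkey.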

The inductivist turkey failed to distinguish between uncertainty and risk. Gerd Gigerenzer (2015) calls this failure the “turkey’s illusion.” Gigerenzer describes the dangers of confusing uncertain and risky types of decisions, arguing that calculable risks are limited to situations in which uncertainty is low and outcomes are predictable (see also Volz & Gigerenzer, 2012). Probabilities derived from large amounts of past data can quantify expected future events only in stable environments. In a world of uncertainty, outcomes take an all-or-nothing form: uncertainty becomes certainty only after a decision results in outcomes, and the decision-maker then faces new uncertainty. Such uncertainty-certainty conversions take place repeatedly, without predictable probabilities in between. An important function of human intelligence is either to reduce uncertainty to qualitative likelihood estimates or to deal with it without resorting to probability estimation in situations of “radical uncertainty.”

In less radically uncertain situations, the likelihood of an event cannot be pinpointed but can be reduced to categorical likelihoods. To reduce outcome uncertainty, decision agents try to estimate the ranges and categorical likelihoods of expected outcomes (e.g., likely, unlikely). Xiong (2017) provided some preliminary evidence of such categorical likelihood estimation among Chinese participants. The participants evaluated uncertain events in terms of categorical and ordinal likelihoods. Moreover, they were able to convert verbal likelihood descriptions into corresponding probabilities on a numerical scale. The commonly used verbal descriptions of the categorical likelihoods of expected outcomes seem to have two focal points: reliable vs. unreliable. The average cardinal conversions of the “reliable” and “unreliable” outcomes on the probability scale were 37.1% and 63.2%, respectively.

Following Bertrand Russell, many researchers have questioned the ecological validity of inductive logic and probability-based models in finance and economics. However, few have paid attention to alternative methods that might help the turkey make a better judgment. What other mental tools, besides salvation from God, could the turkey use to save its life? I propose that a psychological analysis of motives based on survival instincts, aided by simple frequency sampling, would help this intelligent turkey out of its predicament.

The turkey’s failure was not due to its choice of an inductive approach. The turkey paradox shows that it is not statistics per se that is misleading; it is the way statistics are used that is responsible for the failure of Russell’s turkey. What went wrong with the inductivist turkey was its ignorance of the needs and motives of the farmer. The turkey only asked, “When do I get fed?” It never asked, “Why do I get fed?” Statistical thinking with simple frequency counts may help if it is used with social intelligence. Living on a turkey farm, the inductivist turkey could gather some functional information. For instance, how many fellow turkeys have been taken away? Among these turkeys, how many have ever returned to the farm? How old were these turkeys when they disappeared? With these questions in mind, the turkey might find that most of the fellow turkeys that disappeared were taken away after being fed for about 100 days, and that none of them ever returned. These observations would allow the turkey to reach a different conclusion. Such information would not be difficult to obtain; one only needs a few frequency counts from natural sampling (e.g., y out of z were taken away after 100 days, x out of z never returned). This intelligent turkey would thus be likely to abandon Bayesian probability updating in favor of natural frequency counts and an analysis of motives, and to decide to escape from the farm before it is too late.
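The frequency-count strategy can be sketched in Python. The observation records, field names, the 10-day tolerance around 100 days, and the escape rule are all hypothetical illustrations of the kind of tallies described above, not a model proposed in the chapter. The point of the design is that the turkey keeps raw counts (y out of z) of what happens to others, rather than a probability of its own next meal.

```python
from dataclasses import dataclass

@dataclass
class FellowTurkey:
    """One hypothetical observation record of a fellow turkey."""
    days_fed: int           # days fed on the farm (until removal, if removed)
    taken_away: bool        # has the farmer taken it away?
    returned: bool = False  # did it ever come back?

def frequency_counts(flock, threshold_days=100, tolerance=10):
    """Natural-frequency tallies (counts, not probabilities) from observing the flock."""
    taken = [t for t in flock if t.taken_away]
    return {
        "observed": len(flock),
        "taken": len(taken),
        "taken_near_threshold": sum(abs(t.days_fed - threshold_days) <= tolerance
                                    for t in taken),
        "never_returned": sum(not t.returned for t in taken),
    }

def should_escape(my_days_fed, counts, threshold_days=100, margin=5):
    """Hypothetical rule: leave if most removals cluster near the threshold,
    nobody has come back, and my own feeding count is approaching it."""
    pattern = (counts["taken"] > 0
               and 2 * counts["taken_near_threshold"] > counts["taken"]
               and counts["never_returned"] == counts["taken"])
    return pattern and my_days_fed >= threshold_days - margin

# Hypothetical observations of five fellow turkeys.
flock = [FellowTurkey(98, True), FellowTurkey(101, True), FellowTurkey(103, True),
         FellowTurkey(60, False), FellowTurkey(30, False)]
counts = frequency_counts(flock)
print(f"{counts['taken_near_threshold']} of {counts['observed']} were taken away "
      f"at about 100 days; {counts['never_returned']} of {counts['taken']} never returned")
print("escape now" if should_escape(97, counts) else "stay for now")
```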

A problem with relying on probability calculations is that they capture only correlations, not causation. Probability models deal with questions of “what” and “when,” but not “why.” Yet answers to “why” questions provide more reliable predictions in an unstable and uncertain environment, since psychological factors (e.g., values, motives, personality traits, and dispositions) are often more stable than social and situational factors.

I derive from the above analysis two key points. First, probability calculations are only correlational and incapable of revealing the motives of decision-makers. Probability-based models of risky choice fail in the face of real-world uncertainty. Second, but more importantly, a decision agent is capable of reducing uncertainty by doing a psychological analysis of motives and values of interacting agents, aided by natural frequency sampling. Decision models should consider ecological and social rationalities. A good model of decision making is a psychologically valid model.

Under Uncertainty, Less Is More

Different views have challenged the reductionist optimization approach. In line with Keynes’ idea of “radical uncertainty,” Knight suggested that it is uncertainty that characterizes the business environment and enables profit opportunities to emerge. In a similar vein, Simon and Newell (1958) pointed out, “there are no known formal techniques for finding answers to most of the important top-level management problems” (p. 4) because these are “ill-structured.” Simon (1956) proposed that the human mind is capable of coping with such an ill-structured environment with limited cognitive capacity. Coping with a complicated environment with limited computational capacity requires simplicity.

One way of achieving simplicity in decision making is to rely on a single reason. To illustrate how a vital decision can be made firmly under time pressure and with a very small sample, consider the decision made by Vice President Dick Cheney on September 11, 2001, when America was under attack. Two airplanes had already crashed into the Twin Towers, and another into the Pentagon. Combat air patrols were aloft. Cheney received a report that a fourth plane was “80 miles out” from Washington, D.C. At that moment, with President Bush aboard Air Force One, Cheney had received no instruction on how to respond to the attacks. A military aide was asking Cheney for shoot-down authority. Cheney now faced a huge decision on a morning in which every minute mattered. According to the 9/11 Commission Report, Cheney did not flinch; he immediately gave the order to shoot the fourth airplane down, telling others that the president had “signed off on the concept.”

Clearly, Cheney’s decision was based on national security, which was prioritized in his mind above all other possible concerns. This is a case in which less is more, and in which less is also more precious. When Cheney made the decision, the available information was limited and came from a small frequency sample of four airplanes, combined with a clear understanding of the terrorists’ motives. When making such a vital decision in an unpredictably variable and urgent situation, one-reason decision making with a clear stopping rule becomes necessary. When big decisions have to be made instantly, heuristics that rely on a single reason seem to be central to the art of effective leadership. Under uncertainty, probability estimates are inevitably volatile and unreliable, and such parameters are likely to increase existing uncertainty rather than reduce it. Frustrated by the normative approach to uncertainty, President Harry Truman reportedly said, “All my economists say, ‘on the one hand... but on the other.’ Give me a one-handed economist!” (Boller, 1981, p. 278). President Truman’s complaint calls for research on probability-free simple heuristics, such as intuition-based decision making (March, 2010; Zsambok & Klein, 2014), one-reason decision making, and even ignorance-based decision making (Gigerenzer, 2007, 2010).

A grand example of ignorance-based decision making is the free-market economy, which assumes that people, including experts and policymakers, do not know how to accurately and consistently predict future needs, stimulate innovation, put forth economic policies, or estimate asset prices. This ignorance-based approach relies on the invisible hand to move the economy forward through individual self-interest and freedom of production and consumption. It stands in sharp contrast to the command economy of a central planning bureaucracy, which assumes that the government can be omniscient and omnipotent in predicting, directing, and controlling the economy and market behavior. The business management literature has identified simplicity as a powerful strategy for success. What separates successful companies from average ones is the “hedgehog” wisdom of using simplicity to succeed (Berlin, 1953). Organizations are more likely to succeed if they can identify the one thing that they do best. This “hedgehog strategy” is in the “DNA” of successful companies (Collins, 2001).

James March (2010) emphasizes the important role of experience and storytelling in dealing with novelty and uncertainty in organizations. “Organizations were pictured as pursuing intelligence, and intelligence was presented as having two components. The first involves the instrumental utility of adaptation to the environment. The second involves the gratuitous interpretation of the nature of things through the use of human intellect.” (p. 117). From the second point of view, organizational learning does not fit with utility formulations with a distinct value structure and ranking for expected outcomes, since unique things are often equally valued in the mind of the owner. Once the value of an important thing or person reaches a psychological threshold, the decision-maker would abandon utilitarian calculations. People may refuse to rank valuable things since each of the valuable things is important in a unique way. Ranking valuable things in terms of their expected utilities is like turning friends into competing enemies of each other.

Reducing Uncertainty with Simple Heuristics and One-Reason Decision Making

Although many believe that the Keynes vs. Ramsey-Savage debate ultimately strengthened the foundation of probability-weighted utility models in neoclassical economics and finance, Keynes’ idea of “radical uncertainty” has drawn increasing attention. Following the vision of Herbert Simon, researchers have been developing alternative, probability-independent decision tools. A major effort in this area takes a heuristic approach to decision making under uncertainty. The satisficing heuristic proposed by Simon (1956) abandons the central ideas of probability-weighted optimization and utility maximization and demonstrates the advantages of using a satisfactory and sufficing stopping rule when making decisions in an uncertain and fast-changing environment. Fast and frugal heuristics, which proceed step by step, have been shown to match or even outperform well-known statistical benchmark models, such as multiple regression and Bayesian algorithms, particularly when uncertainty is high and knowledge about the world is incomplete (Gigerenzer, 2015; Gigerenzer & Selten, 2001). Gigerenzer and Gaissmaier (2011) identified three building blocks of effective heuristics: (1) search rules that specify where to look for information; (2) stopping rules that specify when to stop searching; and (3) decision rules that specify how to choose given the available information.
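Simon’s satisficing rule maps neatly onto the three building blocks just listed. The Python sketch below is a minimal illustration, assuming options arrive in a fixed order and are scored by a single value; the fund names, returns, and the 5% aspiration level are hypothetical.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")

def satisfice(options: Iterable[T], value: Callable[[T], float],
              aspiration: float) -> Optional[T]:
    """Simon-style satisficing, written with the three building blocks.

    Search rule: examine options one at a time, in the order they arrive.
    Stopping rule: stop at the first option whose value meets the aspiration level.
    Decision rule: choose that option; return None if nothing is good enough.
    """
    for option in options:               # search rule
        if value(option) >= aspiration:  # stopping rule
            return option                # decision rule
    return None

# Hypothetical investment offers (name, expected annual return) and a 5% aspiration level.
offers = [("fund A", 0.03), ("fund B", 0.06), ("fund C", 0.09)]
inspected = []

def annual_return(offer):
    inspected.append(offer[0])  # record which offers the search actually looked at
    return offer[1]

print(satisfice(offers, annual_return, aspiration=0.05), inspected)
```

The search stops at fund B and never looks at fund C, even though fund C offers more; satisficing trades the best possible answer for a good-enough one found quickly.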

Andrew Haldane, Executive Director of Financial Stability at the Bank of England, observed a persistent effort in mainstream studies of financial investment and regulation to develop ever more complex models in reaction to financial crises. He drew an analogy between catching a financial crisis and catching a Frisbee. Both are difficult to understand through mathematical models. Yet despite this formal and mathematical complexity, catching a Frisbee is remarkably common; even an average dog can master the skill. What is the secret of the dog’s success? The answer is to run at a speed at which the angle of gaze to the Frisbee remains constant. Humans follow the same simple rule of thumb to catch a Frisbee. The key is to keep it simple: we should not fight complexity with complexity. Complexity expands rather than confines uncertainty; it generates rather than reduces uncertainty (see Haldane & Madouros, 2012). In contrast, simple heuristics can be successful in complex, uncertain environments and can be selectively applied to different business situations (Artinger, Petersen, Gigerenzer, & Weibler, 2015). The simplest reason for not using complex models in the real world of financial management is that collecting and processing the information necessary for complex decision making is prohibitively costly.

The second reason in favor of simple heuristics is that normative decision models require probability weighting and optimization, as established in expected utility theory (e.g., von Neumann & Morgenstern, 1944) and its statistical analog, multiple regression. However, probability calculation and weighting are often unnecessary in complex environments, where equal-weighting or “tallying” strategies are superior to risk-weighted alternatives (DeMiguel, Garlappi, & Uppal, 2007; Gigerenzer & Brighton, 2009). To illustrate how simple models can outperform complex models in the real world of asset management, Haldane and Madouros (2012) drew on actual financial market data for 200 entities since 1973 and constructed the maximum number of portfolio combinations of different sizes, ranging from simple two-asset portfolios to complex portfolios of 100 assets. For each set of portfolios, they forecast risk in a Value-at-Risk (VaR) framework using estimates of asset volatilities and correlations. They used a relatively simple model (an exponentially weighted covariance matrix) and a complex multivariate model to generate VaR forecasts over the period 2005–2012, and evaluated the models by comparing the model-based expected daily returns with actual returns. The results showed that for very simple portfolios of two or three assets, the simple and complex models performed similarly. However, as the number of assets increased, the simpler model progressively outperformed the complex one. This result suggests that overfitting is a common problem when complex models are used to make out-of-sample predictions, particularly when the portfolio is large and thus complex. Yet the routine response of banks and regulators to financial crises is to add more regulations and make existing forecasting models more complex. Contrary to this practice, simplicity, rather than complexity, may be better suited to reducing financial uncertainty and its problems.
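The simpler kind of model described above, an exponentially weighted covariance matrix feeding a VaR estimate, can be sketched in a few lines of Python, here combined with a naive 1/N (equal-weight) portfolio. This is not a reproduction of Haldane and Madouros’s (2012) models: the decay factor 0.94 (the conventional RiskMetrics daily value), the normality assumption behind the parametric VaR, the seeding of the recursion, and the return data are all assumptions made for illustration.

```python
from statistics import NormalDist

RISKMETRICS_DAILY_LAMBDA = 0.94  # conventional daily decay factor, assumed here

def ewma_covariance(returns, lam=RISKMETRICS_DAILY_LAMBDA):
    """Exponentially weighted covariance matrix of daily returns (assumed zero-mean).

    returns: list of days, each day a list with one return per asset (oldest first).
    The recursion is seeded with the first day's outer product, a simplifying choice.
    """
    first = returns[0]
    n = len(first)
    cov = [[first[i] * first[j] for j in range(n)] for i in range(n)]
    for day in returns[1:]:
        for i in range(n):
            for j in range(n):
                # new estimate = lam * old estimate + (1 - lam) * today's cross-product
                cov[i][j] = lam * cov[i][j] + (1 - lam) * day[i] * day[j]
    return cov

def equal_weights(n):
    """Naive 1/N ("tallying") allocation: no parameters to estimate."""
    return [1.0 / n] * n

def portfolio_var(cov, weights, confidence=0.99, value=1.0):
    """One-day parametric Value-at-Risk, assuming normally distributed returns."""
    n = len(weights)
    variance = sum(weights[i] * cov[i][j] * weights[j]
                   for i in range(n) for j in range(n))
    z = NormalDist().inv_cdf(confidence)  # about 2.33 at 99%
    return z * variance ** 0.5 * value

# Hypothetical daily returns for three assets over five days.
daily_returns = [
    [0.010, -0.004, 0.002],
    [-0.006, 0.003, -0.001],
    [0.004, -0.008, 0.006],
    [-0.012, 0.005, -0.003],
    [0.007, 0.001, 0.004],
]
cov = ewma_covariance(daily_returns)
print(portfolio_var(cov, equal_weights(3), value=1_000_000))
```

Apart from the single decay factor, nothing here is estimated beyond the covariance matrix itself, and the 1/N weights require no estimation at all; a complex multivariate model adds many more parameters, each a potential source of the overfitting described above.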

In developing alternative, probability-free models, Dosi, Napoletano, Roventini, and Treibich (2019) argued that agents have to cope with a complex, evolving economy characterized by deep uncertainty resulting from imperfect information, technological change, and structural breaks. In these circumstances, the authors found that neither individual nor macroeconomic dynamics improve when agents apply normative utility calculations. In contrast, fast and frugal heuristics may be “rational” responses in complex and changing macroeconomic environments.

Dosi et al. (2019) suggested four possible reasons why heuristics work well in complex business situations. First, heuristics can yield more accurate forecasts than complex procedures because they are more robust to changes in the fundamentals of the economy. Second, the larger forecast errors of sophisticated agents stem from the insufficient number of observations used in their estimations. Third, heuristic-guided firms may do better because selection pressure favors heuristic learners over sophisticated learners. Fourth, in complex and rapidly changing economies, more sophisticated rules contribute to greater volatility. In such environments, more information does not yield higher accuracy.

Overall, the aforementioned simple heuristics are fast because they use only part of the potentially available information in the environment, and they are frugal because they are guided by stopping rules for information search and use only a few cues or even a single piece of information for making a decision (one-reason decision making). These heuristics are also fast and frugal because they are specially designed mental tools for solving specific problems in specific task environments.
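One-reason decision making with an explicit stopping rule can be illustrated with a sketch in the style of the take-the-best heuristic from the fast-and-frugal heuristics literature. It assumes binary cues (1 = present, 0 = absent or unknown) that are already ranked by validity; the firm profiles and cue names are hypothetical.

```python
from typing import Dict, List, Optional

def take_the_best(a: Dict[str, int], b: Dict[str, int],
                  cues_by_validity: List[str]) -> Optional[str]:
    """Choose between options "a" and "b" on the first discriminating cue.

    Search rule: inspect cues in order of (assumed known) validity.
    Stopping rule: stop at the first cue on which the two options differ.
    Decision rule: choose the option with the positive value on that one cue.
    Missing cue values are treated as 0, a simplification made here.
    """
    for cue in cues_by_validity:
        va, vb = a.get(cue, 0), b.get(cue, 0)
        if va != vb:
            return "a" if va > vb else "b"
    return None  # no cue discriminates: guess, or fall back on another rule

# Hypothetical firm profiles (1 = cue present, 0 = absent), cues ranked by validity.
cues = ["profitable", "low_debt", "growing_revenue"]
firm_a = {"profitable": 1, "low_debt": 0, "growing_revenue": 1}
firm_b = {"profitable": 1, "low_debt": 1, "growing_revenue": 0}
print(take_the_best(firm_a, firm_b, cues))
```

Here the first cue does not discriminate (both firms are profitable), so the search moves on; the second cue favors firm B and the search stops, leaving the third cue unexamined even though it favors firm A.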

Demarcating Uncertainty with Decision Reference Points

It is ironic that economics is, on the one hand, defined as the study of goal-directed behavior, yet, on the other hand, normative economic models of decision making omit any reference point (e.g., the status quo, a goal, or a bottom line) (Wang, 2001). Representing each choice option by a single value (its expected value) comes at the cost of valuable information about the distribution of risk. As a result, each option is summarized without any information about how its expected outcomes vary in relation to the decision reference points.

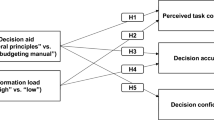

Recent developments in behavioral decision making suggest that individuals in various risky choice situations use multiple reference points to guide their decisions. According to tri-reference point (TRP) theory (Wang & Johnson, 2012), decision-makers strive to reach a goal while avoiding falling below a bottom line. Prospect theory (Kahneman & Tversky, 1979) demonstrates that the carrier of subjective value is not total wealth but changes from the status quo (SQ), which separates expected outcomes into gains and losses. TRP theory further divides the outcome space into four functional regions: negative outcomes are divided into failure and loss regions by the minimum requirement (MR) reference point, and positive outcomes are divided into gain and success regions by the goal (G) reference point (see the upper panel of Fig. 9.2). As illustrated in Fig. 9.2, without reference points, the changes from A to B, from B to C, and from C to D have the same value. Once the reference points are in place, the psychological value of the change from A to B is the highest, since it is a “life-death” change from failure to survival. The change from C to D is a move “from good to great” and is thus valued more highly than the change from B to C, which represents fluctuation around the status quo.
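The four functional regions of TRP theory can be expressed as a simple classification. The Python sketch below assumes MR < SQ < G and assigns outcomes lying exactly on a reference point to the higher region, a boundary convention chosen here for illustration rather than taken from TRP theory; the numeric reference points and outcomes are hypothetical.

```python
def trp_region(outcome: float, mr: float, sq: float, g: float) -> str:
    """Assign an outcome to one of TRP theory's four functional regions.

    Assumes MR < SQ < G. An outcome exactly on a reference point is placed in the
    higher region (a convention chosen for this sketch).
    """
    if not (mr < sq < g):
        raise ValueError("expected MR < SQ < G")
    if outcome < mr:
        return "failure"
    if outcome < sq:
        return "loss"
    if outcome < g:
        return "gain"
    return "success"

# Hypothetical reference points and four equally spaced outcomes (50 units apart).
mr, sq, g = -50, 0, 60
for label, x in zip("ABCD", (-75, -25, 25, 75)):
    print(label, x, trp_region(x, mr, sq, g))
```

Each step (A to B, B to C, C to D) covers the same 50 units, yet A to B crosses MR (failure to survival), C to D crosses G (gain to success), and B to C only crosses the status quo, which is why the three equal steps can carry unequal psychological value.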

According to the TRP theory, reference point-dependent decisions should follow two rules of thumb: the MR priority principle and the mean-variance principle. The MR priority principle states that the relative psychological impact of the reference points obeys the order of MR > G > SQ. Empirical evidence (Wang & Johnson, 2012) supports this assumption: First, the disutility of a loss is greater than the utility of the same amount of gain (loss aversion). Second, the disutility of failure is greater than the utility of success in the same task (failure aversion). The mean-variance principle dictates risk/variance avoidance when the mean expected value of choice options is above the relevant reference point (MR or G) and risk/variance-seeking when the mean expected value of choice options is below the reference point.
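The mean-variance principle, read literally from the description above, compares an option’s mean expected value with a reference point. The Python sketch below assumes that a mean exactly equal to the reference point counts as “above” (the text does not say) and uses hypothetical numbers; it reports the attitude implied by MR before the one implied by G, following the MR priority principle.

```python
def trp_risk_attitude(mean_outcome: float, reference_point: float) -> str:
    """Mean-variance principle as summarized in the text: variance-averse when the
    mean expected value is above the relevant reference point (MR or G),
    variance-seeking when it is below. Treating equality as 'above' is an assumption."""
    return "variance-averse" if mean_outcome >= reference_point else "variance-seeking"

def attitudes_in_priority_order(mean_outcome: float, mr: float, g: float):
    """Attitude implied by each reference point, listed MR first (MR priority: MR > G)."""
    return [("MR", trp_risk_attitude(mean_outcome, mr)),
            ("G", trp_risk_attitude(mean_outcome, g))]

# Hypothetical option with a mean expected value of 200, MR = 150, G = 500.
print(attitudes_in_priority_order(200, mr=150, g=500))
```

With these numbers the two reference points pull in opposite directions: the mean sits above MR (avoid variance) but below G (seek variance). One reading of the MR priority principle is that the MR-based attitude should carry more weight in such conflicts; the sketch only orders the two and leaves the weighting open.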

TRP-based decision making can be independent of probability weighting and thus can be applied to choice situations in which the probabilities of expected outcomes are unknown but their distribution ranges can be estimated. Wang (2019) proposed a quintuple classification of the uncertainty that exists at different stages of information processing in decision making, including uncertainty in the information source, information acquisition, cognitive evaluation, choice selection, and immediate and future outcomes. People use different approaches to cope with different kinds of uncertainty. With regard to outcome uncertainty, MR and G reference points can demarcate uncertain outcomes into functional regions and thus make it possible to compare uncertain choice options based solely on the distributions of these options, without resorting to precise probability estimates.

As illustrated in panel B of Fig. 9.2, the MR priority principle and the mean-variance principle guide the choice between Option A and Option B, where Option A has a wider range of expected outcomes (−100 to 600) than Option B (100 to 400).

Consider first the choice situation displayed on the left. Which investment option should you choose? Given that your MR = 150 and your G = 500, the low end of Option A and the low end of Option B are both below the MR and thus are functionally equivalent. You should avoid both options. If the choice is mandatory, you then only need to compare the high ends of the two options. The high end of Option A but not Option B can reach the goal G. Thus, you should choose Option A.

Consider now the choice situation displayed on the right side of panel B of Fig. 9.2. Which investment option should you choose? This time, you face the same options with different reference points (i.e., MR = 0, G = 300). Both options can reach the G at their high end and thus are functionally equal. What determines your choice would be the relationship between the low end of each option and the MR. Option B has a clear advantage since the low end of Option B is above the MR, whereas the low end of Option A falls below the MR. So, your choice should be Option B.

When applied to choices under uncertainty, the mean-variance principle of TRP theory states that one should be variance averse when the low end of an option threatens the MR and variance seeking when the high end of an option reaches the G. When one option spreads over both the MR and the G while the other lies between the two reference points, one should be variance averse and choose the second option to avoid any chance of functional death. This kind of baseline thinking should be an effective way to avoid potential financial failures individually and financial crises collectively.
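One way to read the mean-variance principle is as a rule about whether the extra spread of a wider option helps or hurts relative to a narrower one. The sketch below encodes that reading; the name `variance_attitude` and its three labels are hypothetical, and the boundary convention (meeting MR or reaching G includes equality) is an assumption. The MR check runs before the G check so that MR priority overrides the pull of G, which reproduces the case of one option spreading over both reference points while the other lies between them.

```python
from typing import Tuple

Range = Tuple[float, float]  # (low end, high end) of an option's outcomes


def variance_attitude(wide: Range, narrow: Range, mr: float, g: float) -> str:
    """Attitude toward the wider option's extra spread (illustrative sketch).

    "averse": the wide option's low end falls below MR while the narrow
    option's low end stays at or above MR (checked first, per MR priority).
    "seeking": the wide option's high end reaches G while the narrow
    option's high end falls short of it.
    "indifferent": neither reference point separates the two options.
    """
    wide_low, wide_high = wide
    narrow_low, narrow_high = narrow
    if wide_low < mr <= narrow_low:
        return "averse"
    if narrow_high < g <= wide_high:
        return "seeking"
    return "indifferent"


wide, narrow = (-100, 600), (100, 400)
print(variance_attitude(wide, narrow, mr=150, g=500))  # seeking
print(variance_attitude(wide, narrow, mr=0, g=300))    # averse
# The wide option spans both MR and G; the narrow one lies between them.
print(variance_attitude(wide, narrow, mr=50, g=500))   # averse
```

In the third call the wider option also reaches G, yet the result is "averse" because the MR condition is evaluated first.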

Implications for Thinking, Fast and Slow and the Dichotomy of Systems 1 and 2

Market failures have been attributed to systematic decision biases (Thaler & Sunstein, 2008) and the thought processes underlying them. In his book Thinking, Fast and Slow, Daniel Kahneman (2011) distinguishes between two modes of thought carried out by two systems of information processing. Given the features that define the two systems, the fast, automatic, and intuitive System 1 is considered more error-prone than the slow, effortful, and deliberate System 2. However, the analysis of risk and uncertainty earlier in this chapter suggests that System 2 may work well in risky situations but is likely to fail in the face of uncertainty. Given its limited cognitive resources and small capacity, System 2 may itself be fragile and error-prone. When System 2 performs multiplications, for example, errors can occur, particularly under time pressure or when multitasking. Under uncertainty, System 2 serves as a helper to System 1 when new information is needed or raw information needs further processing.

Human memory is both limited in capacity and non-verbatim (Miller, 1956; Reyna & Brainerd, 1995). These design features of human cognition determine the types of errors that humans are prone to make. In tasks with clear rules (e.g., chess), human computational errors can be substantially reduced by artificial intelligence and big data techniques. Unfortunately, most human decisions cannot be defined and guided by a set of clear-cut rules. Detecting a face against its background, for instance, is easy for human intelligence but a daunting task for artificial intelligence.

A key question from an evolutionary perspective is why Homo sapiens evolved to be primarily intuitive and only occasionally analytical, as described by Kahneman (2011). From a design point of view, the neural programming and mechanisms of System 1 (e.g., visual pattern recognition) are more complex and efficient than those of the rational, rule-based System 2 (e.g., numerical calculation). Another key question concerns the environment in which human intelligence evolved: in what ecological environments did System 1 and System 2 likely evolve? The faster System 1 should work well in the harsh and uncertain environments typical of human evolutionary history. In contrast, a slow and deliberate System 2 should be useful in a more resource-rich and predictable modern environment, where future-oriented decisions often pay off over a prolonged lifespan.

In a fast-changing and unpredictable environment, System 2 can be used to gather new information for System 1, which eventually makes feeling-based decisions. In other words, System 2 feeds System 1 with processed novel information, and decisions are made only after analytical calculations have been converted into feelings (Damasio, 1996, 1999). For instance, people decide to marry someone when they fall in love with that person. Yet falling in love itself reflects many considerations: women tend to love partners who are caring and have bright financial prospects, whereas men tend to love partners who are physically attractive and have great reproductive potential (Buss & Schmitt, 1993). System 1, although seemingly quick and simple, takes many factors into consideration and digests them into feelings and intuitions for effective decision making.

Although System 2 engages in effortful processing, it is indecisive without System 1, particularly when making decisions under uncertainty. This argument is consistent with the idea that System 2 is a slave or subsystem of System 1. It is also empirically consistent with the finding that the effortful processing of System 2 often activates System 1, as indicated by bodily signs such as increased heart rate and dilated pupils (Kahneman, 2011). For vital decisions under uncertainty, a slow and deliberative System 2 is not an assurance but a liability unless it works together with System 1. Once thinking activates anticipatory emotions, decisions may become intuitive, adaptive, and reliable.

References

Artinger, F., Petersen, M., Gigerenzer, G., & Weibler, J. (2015). Heuristics as adaptive decision strategies in management. Journal of Organizational Behavior, 36(S1), S33–S52.

Berlin, I. (1953). The hedgehog and the fox: An essay on Tolstoy’s view of history. London, UK: Weidenfeld & Nicolson.

Bernoulli, D. (1738/1954). Exposition of a new theory on the measurement of risk. Econometrica, 22, 23–36. Translation of Bernoulli, D. (1738). Specimen theoriae novae de mensura sortis. Papers Imp. Acad. Sci. St. Petersburg, 5, 175–192.

Boller, P. F., Jr. (Ed.). (1981). Presidential anecdotes. New York, NY: Penguin.

Buchanan, L., & O’Connell, A. (2006). A brief history of decision making. Harvard Business Review, 84(1), 32–41.

Buss, D. M., & Schmitt, D. P. (1993). Sexual strategies theory: An evolutionary perspective on human mating. Psychological Review, 100(2), 204–232.

Collins, J. (2001). Good to great. New York, NY: Harper Business.

Damasio, A. (1996). Descartes’ error: Emotion, reason and the human brain. London, UK: Papermac.

Damasio, A. (1999). The feeling of what happens: Body and emotion in the making of consciousness. Orlando, FL: Harcourt Brace.

DeMiguel, V., Garlappi, L., & Uppal, R. (2007). Optimal versus naive diversification: How inefficient is the 1/N portfolio strategy? The Review of Financial Studies, 22(5), 1915–1953.

Dosi, G., Napoletano, M., Roventini, A., & Treibich, T. (2019). Debunking the granular origins of aggregate fluctuations: From real business cycles back to Keynes. Journal of Evolutionary Economics, 29(1), 67–90.

Gigerenzer, G. (2007). Gut feelings: The intelligence of the unconscious. New York, NY: Penguin.

Gigerenzer, G. (2010). Moral satisficing: Rethinking moral behavior as bounded rationality. Topics in Cognitive Science, 2(3), 528–554.

Gigerenzer, G. (2015). Risk savvy: How to make good decisions. New York, NY: Penguin.

Gigerenzer, G., & Brighton, H. (2009). Homo heuristicus: Why biased minds make better inferences. Topics in Cognitive Science, 1(1), 107–143.

Gigerenzer, G., & Gaissmaier, W. (2011). Heuristic decision making. Annual Review of Psychology, 62, 451–482.

Gigerenzer, G., & Selten, R. (Eds.). (2001). Bounded rationality: The adaptive toolbox. Cambridge, MA: MIT Press.

Haldane, A. G., & Madouros, V. (2012). The dog and the frisbee. Revista de Economía Institucional, 14(27), 13–56.

Kahneman, D. (2000). A psychological point of view: Violations of rational rules as a diagnostic of mental processes. Behavioral and Brain Sciences, 23, 681–683.

Kahneman, D. (2011). Thinking, fast and slow. New York, NY: Farrar, Straus and Giroux.

Kahneman, D., & Tversky, A. (1979). Prospect theory: An analysis of decision under risk. Econometrica, 47(2), 263–292.

Keim, D. B., & Stambaugh, R. F. (1986). Predicting returns in the stock and bond markets. Journal of Financial Economics, 17(2), 357–390.

Keynes, J. M. (1921). A treatise on probability. London, UK: Macmillan and Co.

Knight, F. H. (1921). Risk, uncertainty and profit. New York, NY: Hart, Schaffner and Marx.

March, J. G. (2010). The ambiguities of experience. Ithaca, NY: Cornell University Press.

McFadden, D. (1999). Rationality for economists. Journal of Risk and Uncertainty, 19, 73–105.

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63(2), 81–97.

Ramsey, F. P. (1922/1989). Mr. Keynes on probability. Cambridge Magazine, 11, 3–5. Reprinted in the British Journal for the Philosophy of Science, 40, 219–222.

Ramsey, F. P. (1926/2016). Truth and probability. In Readings in formal epistemology (pp. 21–45). New York, NY: Springer.

Reyna, V. F., & Brainerd, C. J. (1995). Fuzzy-trace theory: An interim synthesis. Learning and Individual Differences, 7(1), 1–75.

Russell, B. (1957). The problems of philosophy. London, UK: Williams & Norgate.

Savage, L. J. (1954). The foundations of statistics. New York, NY: Wiley.

Shafer, G. (1990). The unity and diversity of probability. Statistical Science, 5(4), 435–444.

Simon, H. A. (1956). Rational choice and the structure of the environment. Psychological Review, 63(2), 129–138.

Simon, H. A., & Newell, A. (1958). Heuristic problem solving: The next advance in operations research. Operations Research, 6(1), 1–10.

Thaler, R. H., & Sunstein, C. R. (2008). Nudge: Improving decisions about health, wealth, and happiness. New Haven, CT: Yale University Press.

Tversky, A., & Kahneman, D. (1992). Advances in prospect theory: Cumulative representation of uncertainty. Journal of Risk and Uncertainty, 5(4), 297–323.

Volz, K. G., & Gigerenzer, G. (2012). Cognitive processes in decisions under risk are not the same as in decisions under uncertainty. Frontiers in Neuroscience, 6, 105.

von Neumann, J., & Morgenstern, O. (1944). Theory of games and economic behavior. Princeton, NJ: Princeton University Press.

Wang, X. T. (2001). Bounded rationality of economic man: New frontiers in evolutionary psychology and bioeconomics. Journal of Bioeconomics, 3, 83–89.

Wang, X. T. (2019). Using behavioral economics to cope with uncertainty: Expand the scope of effective nudging. Acta Psychologica Sinica, 4, 407–414.

Wang, X. T., & Johnson, G. J. (2012). A tri-reference point theory of decision making under risk. Journal of Experimental Psychology: General, 141(4), 743–756.

Xiong, G. (2017). Research on categorical probability estimation and its distribution of inflection points. (Unpublished doctoral dissertation) Jinan University, Guangzhou, China.

Zsambok, C. E., & Klein, G. (Eds.). (2014). Naturalistic decision making. New York, NY: Psychology Press.

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Wang, X.T. (2020). Financial Decision Making Under Uncertainty: Psychological Coping Methods. In: Zaleskiewicz, T., Traczyk, J. (eds) Psychological Perspectives on Financial Decision Making. Springer, Cham. https://doi.org/10.1007/978-3-030-45500-2_9

DOI: https://doi.org/10.1007/978-3-030-45500-2_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-45499-9

Online ISBN: 978-3-030-45500-2

eBook Packages: Behavioral Science and Psychology, Behavioral Science and Psychology (R0)