Abstract

Computational fluid dynamics (CFD) analysis has recently been applied to study the performance of waste-to-energy systems, and a broad body of literature is dedicated to this topic. However, there is still a lack of guidance, particularly for new researchers, in the form of a broad and detailed discussion of the routes currently available to make appropriate use of these numerical models to describe waste combustion and gasification processes.

In this sense, this chapter contributes theoretical considerations that are crucial to understanding the practical implementation within the ANSYS Fluent framework. The main features required to reach a solution, the main settings and insights into the process flow are fully addressed. Overall, the guidelines provided here are intended to guide the reader through the complete implementation process while clarifying many aspects of performing a suitable waste-to-energy analysis in CFD.

Keywords

- Computational fluid dynamics

- Gasification modelling

- Biomass gasification

- Guidelines

- Tutorial

- Fluidized bed gasifier

- Hydrodynamics

- ANSYS Fluent

- 2-D simulation

- Best practices

13.1 Introduction

Global warming and climate change stand as some of the greatest environmental, social and economic threats of our time. One way to address them is to gradually replace fossil fuels with renewable energy sources, an alternative that moves towards sustainable development while mitigating environmental problems (Cai et al. 2011; Cardoso et al. 2019b; Couto et al. 2016a; Tarelho et al. 2011; Vicente et al. 2016).

Biomass and waste products have great development potential since they can be easily stored and transported and, unlike other renewable energy sources, they can also be converted into biofuels. This increases their applicability and contributes significantly to the energy independence of a region, with associated economic and environmental benefits (Ahmad et al. 2016; Cardoso et al. 2018b; Galadima and Muraza 2015; Pinto et al. 2014; Pio et al. 2017).

The economic and energetic performance of a solution that turns biomass and wastes into energy depends on many variables, among which the sustainability of the feedstock supply, robust technical performance and the ability to predict the final products without expensive experimental tests take precedence (Cardoso et al. 2019a; Lamers et al. 2015; Leme et al. 2014; Lourinho and Brito 2015; Pereira et al. 2016). The correct assessment of these and other concerns will determine the technology suitable for each specific project. Selecting a preferred technology is complex and requires careful consideration of fuel flexibility, type of biomass, and global efficiency and performance, to mention a few.

It is therefore necessary to perform experimental and numerical work at different power scales, comparing the most suitable technologies, typically combustion and gasification (Couto et al. 2017a; Maurer et al. 2014; Molino et al. 2016; Neves et al. 2011; Sanderson and Rhodes 2005). Both technologies are complex systems that depend nonlinearly on a large number of parameters, which makes experimental work difficult. Together with experimental characterization, the development of high-fidelity models is crucial for evaluating these technologies (Cardoso et al. 2019c; Ramos et al. 2018; Sharma et al. 2015; Silva and Rouboa 2015; Vepsäläinen et al. 2013). Table 13.1 lists a set of different numerical approaches to handle thermochemical conversion processes.

Computational fluid dynamics (CFD) models are built on a set of governing equations, namely the conservation equations of mass, momentum, energy and species over a designated region of the reactor. They can evaluate temperature and concentration, among numerous other parameters, with considerable precision (Couto et al. 2016b, 2017b; Olaofe et al. 2014; Silva and Rouboa 2015; Xue et al. 2012). CFD is therefore broadly recognized as an appropriate and useful tool to deal with thermochemical conversion processes, and for that reason it is the focus of this tutorial guide.
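All these conservation equations share one generic transport form. As a reference sketch (the textbook single-phase form, not the exact multiphase formulation solved by ANSYS Fluent), for a transported quantity φ per unit mass, with density ρ, velocity **u**, diffusion coefficient Γ_φ and source term S_φ:

$$
\frac{\partial (\rho \phi)}{\partial t} + \nabla \cdot (\rho \mathbf{u} \phi) = \nabla \cdot (\Gamma_\phi \nabla \phi) + S_\phi
$$

Setting φ = 1 with no diffusion or source term recovers mass conservation; φ = u_i gives the momentum equations (pressure and stresses entering through the diffusion and source terms), φ = h the energy equation and φ = Y_k the species mass fraction equations.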

Since the late 1990s, CFD studies have been developed for a broad range of chemical and physical problems. Their extension to thermochemical conversion processes was inevitable: such studies typically predict product compositions and discuss the design of different configurations, and CFD has become a major tool for energy-related researchers and scientists.

A multitude of options must be chosen throughout the solution process, and together they require awareness of the many pieces that make up a CFD simulation. Unsurprisingly, fully understanding the mechanisms behind the simulation setup requires in-depth knowledge of the software's fundamental steps and guidelines. The value of this knowledge is often overlooked, and researchers frequently focus their efforts only on the physical problem, neglecting the best way to implement it in a computational environment.

In this chapter, we aim to provide a working background for practical scientists, researchers and engineers who wish to apply CFD simulation to thermochemical conversion processes without needing to become computational experts. Throughout the chapter, we focus on practical interpretations of common problems, based on somewhat simplified but effective approaches, and introduce the theory needed to understand the step-by-step process of assembling a full solution. Although we focus on thermochemical conversion processes, most of the methods discussed herein apply to a wide range of other physical and chemical problems.

13.2 Problem and Domain Identification

CFD is a powerful tool, but it should be restricted to cases where its use is justified, since it can easily become much more computationally expensive and time-consuming than a simpler approach capable of reaching similar results.

In some cases, the decision to use analytical methods or 1-D models is straightforward, and these provide the required insights when lower accuracy is acceptable. Typical examples are problems with an analytical solution, problems in which the engineer only needs to understand the trends of the problem at hand, and problems in which some margin of error is allowed. Furthermore, analytical methods and simpler models always provide a good first estimate and an effective basis of comparison for more sophisticated approaches such as CFD.
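As an example of such a first estimate, the minimum fluidization velocity of a bed can be computed analytically before any CFD run. The sketch below uses the Wen and Yu correlation, which is our choice and not one prescribed by this chapter, with assumed gas and particle properties loosely matching the case study described later (0.5 mm quartz sand fluidized with air at 873 K). The sand density and the air density and viscosity are assumptions.

```python
import math

def minimum_fluidization_velocity(d_p, rho_p, rho_g, mu_g, g=9.81):
    """Estimate U_mf (m/s) with the Wen and Yu correlation.

    d_p: particle diameter (m); rho_p, rho_g: particle and gas densities (kg/m^3);
    mu_g: gas dynamic viscosity (Pa s).
    """
    # Archimedes number: buoyancy-corrected gravity forces over viscous forces
    archimedes = d_p**3 * rho_g * (rho_p - rho_g) * g / mu_g**2
    # Wen-Yu: Re_mf = sqrt(33.7^2 + 0.0408 Ar) - 33.7
    re_mf = math.sqrt(33.7**2 + 0.0408 * archimedes) - 33.7
    return re_mf * mu_g / (rho_g * d_p)

# Assumed properties (not taken from the chapter): sand density 2650 kg/m^3,
# air at 873 K and 1 atm with density 0.40 kg/m^3 and viscosity 3.9e-5 Pa s.
u_mf = minimum_fluidization_velocity(d_p=0.5e-3, rho_p=2650.0, rho_g=0.40, mu_g=3.9e-5)
u0 = 0.25  # superficial gas velocity of the case study (m/s)
print(f"U_mf = {u_mf:.3f} m/s, U0/U_mf = {u0 / u_mf:.1f}")
```

A superficial velocity comfortably above U_mf confirms the bed should fluidize, which gives an independent check on the CFD hydrodynamics.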

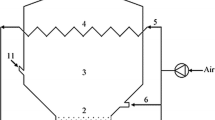

After carefully deciding what type of results one wants to explore and which tool is most appropriate, the user must focus on identifying the domain and isolating the intended section from the complete physical system. In the case of a gasification plant, the user can model a single component or a set of components, such as the gasifier, the cleaning and feeding systems or even the heat exchangers. For the sake of simplicity, our attention here is devoted to the most important component in such a setup, the gasifier. Figure 13.1 depicts a 75 kWth pilot-scale bubbling fluidized bed gasifier.

Most of the studies found in the literature focus the simulation effort on the gasifier (Couto et al. 2015a, b; Dinh et al. 2017; Sharma et al. 2014; Xue et al. 2011). This is easily understandable, because relevant outputs of a gasification process, such as the syngas composition, the particle behaviour, the bed hydrodynamics, the flow patterns and the temperature distribution, are revealed by these simulations.

Simulation of an entire gasification facility is mostly carried out with flowsheet simulators such as ASPEN Plus, although this approach does not provide detailed results on particle behaviour or help to understand the complex hydrodynamics within the reactor.

13.3 Pre-processing

13.3.1 Geometry

The next step requires defining the geometry of the selected gasifier and dividing it into small elements, the so-called mesh generation. The geometry can be imported from design software such as SolidWorks or generated within the ANSYS framework. Figure 13.2 shows the schematics of the 75 kWth pilot-scale gasifier with all its inlets and the outlet. These are especially relevant at this stage because they define where the boundary conditions of the domain are applied.

Users can either create a geometry from scratch or start from a pre-existing computer-aided design (CAD) model. Starting with a pre-existing geometry can save time and effort; however, other challenges may arise, such as how to extract the fluid region from a solid part. Also, simplifying or removing unnecessary features from an already defined solid can be tricky or time-consuming. When creating a geometry from scratch, users can analyse the problem beforehand and leave out features that would complicate meshing, such as fillets or bolt heads, which frequently add no crucial information and can be assumed to have little to no impact on the final solution.

Some additional questions may prove challenging. Should the user select a 2-D or a 3-D geometry? Can the user take advantage of the design symmetry? In fact, researchers usually choose a 2-D geometry for simplicity and moderate numerical effort. A more sophisticated way of still using 2-D geometries takes advantage of design symmetry through 2-D axisymmetric problem setups (Xie et al. 2008). When accuracy is of primary importance, 3-D domains are mandatory, but some authors still use only a small, representative part of such a domain to strike a balance between accuracy and computational time. When describing fluidized bed systems, some authors include pre-inlet pipes for the oxidizer and/or substrate inlets in order to obtain fully developed turbulent velocity profiles at the air and/or biomass inlets. For simplification purposes, only the 2-D geometry scheme is addressed here.

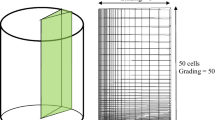

13.3.2 Mesh

As mentioned before, the geometry is split into small cells for numerical purposes. Here lies a major concern: the mesh must be of good quality in several respects (element distribution, element quantity and shape, and smooth transition between elements) to ensure that the results meet the pre-defined convergence criteria. Developing a good mesh can be quite demanding, and a rule of thumb is to generate a coarse initial mesh and then proceed by doubling the number of elements in each subsequent mesh (Cardoso et al. 2018a). When dealing with solid combustion or gasification, the user must ensure a ratio between the maximum grid size and the particle diameter within 5–12 (Cardoso et al. 2018a). This rule may guide the user on when to stop the mesh refinement. Lastly, the user must balance accuracy against the time needed for the simulation.
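The doubling rule and the grid-size rule can be combined when planning a mesh series. The sketch below assumes a uniform quadrilateral mesh over a rectangle (a simplification; real meshes with local refinement will not follow these counts) and uses hypothetical inputs: the 0.25 m × 2.3 m domain of the case study, 0.5 mm bed particles and a 5.5 mm coarse cell size. In 2-D, doubling the element count shrinks the cell edge by a factor of √2.

```python
import math

def uniform_quad_count(width, height, cell_size):
    """Number of cells of a uniform quadrilateral mesh covering a width x height rectangle."""
    nx = math.ceil(width / cell_size)
    ny = math.ceil(height / cell_size)
    return nx * ny

def refinement_sequence(width, height, coarse_size, particle_d, levels=3,
                        ratio_min=5.0, ratio_max=12.0):
    """Plan meshes that roughly double the 2-D element count at each level.

    Each entry reports the cell size, the cell count and whether the
    grid-size to particle-diameter ratio lies within [ratio_min, ratio_max].
    """
    meshes = []
    size = coarse_size
    for _ in range(levels):
        ratio = size / particle_d
        meshes.append({
            "cell_size": size,
            "cells": uniform_quad_count(width, height, size),
            "ratio": ratio,
            "ratio_ok": ratio_min <= ratio <= ratio_max,
        })
        size /= math.sqrt(2.0)  # halves the cell area, doubling the 2-D cell count
    return meshes

# Hypothetical inputs: 0.25 m x 2.3 m domain, 0.5 mm particles, 5.5 mm coarse cells
meshes = refinement_sequence(0.25, 2.3, 5.5e-3, 0.5e-3)
for m in meshes:
    print(f"{m['cell_size'] * 1e3:.2f} mm  {m['cells']:>6d} cells  "
          f"ratio {m['ratio']:.1f}  ok={m['ratio_ok']}")
```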

Several parameters, such as element quality, aspect ratio, skewness and orthogonal quality, are important indicators of mesh quality. For 2-D elements, element quality is based on the ratio of the element area to the sum of the squares of its edge lengths; it ranges from 0 to 1, where higher values indicate higher quality, 0 stands for an element with null or negative area and 1 for a perfect square. Aspect ratio measures the stretching of a cell and should be kept below 100. Skewness measures how far the existing elements are distorted from standard or normalized elements, from 0 (excellent) to 1 (unacceptable), so it should be kept as low as possible. Orthogonal quality is computed from the face normal vectors and the vectors from the centre of an element to the centres of each of its adjacent elements; it ranges from 0 to 1, where 0 indicates the worst elements and 1 the highest quality. In 2-D simulations, triangular and quadrilateral elements are usually selected.
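To make these metrics concrete, the sketch below evaluates three of them for a single quadrilateral cell. It uses simplified textbook definitions (element quality scaled so that a square scores 1, aspect ratio as longest over shortest edge, and equiangle skewness against the ideal 90° angle); the exact formulas in ANSYS Meshing may differ, so treat the numbers as illustrative only.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

def _edge_lengths(quad: Sequence[Point]):
    return [math.dist(quad[i], quad[(i + 1) % 4]) for i in range(4)]

def signed_area(quad: Sequence[Point]) -> float:
    # shoelace formula; positive for counter-clockwise vertex ordering
    s = 0.0
    for i in range(4):
        (x1, y1), (x2, y2) = quad[i], quad[(i + 1) % 4]
        s += x1 * y2 - x2 * y1
    return 0.5 * s

def element_quality(quad: Sequence[Point]) -> float:
    # area over sum of squared edge lengths, scaled by 4 so a square scores 1;
    # a degenerate or inverted (clockwise) cell scores 0 or less
    return 4.0 * signed_area(quad) / sum(l * l for l in _edge_lengths(quad))

def aspect_ratio(quad: Sequence[Point]) -> float:
    # simplified definition: longest edge over shortest edge
    lengths = _edge_lengths(quad)
    return max(lengths) / min(lengths)

def equiangle_skewness(quad: Sequence[Point]) -> float:
    # deviation of the interior angles from the 90 degrees of an ideal quad
    angles = []
    for i in range(4):
        p, c, n = quad[i - 1], quad[i], quad[(i + 1) % 4]
        v1 = (p[0] - c[0], p[1] - c[1])
        v2 = (n[0] - c[0], n[1] - c[1])
        cos_a = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
        angles.append(math.degrees(math.acos(max(-1.0, min(1.0, cos_a)))))
    return max((max(angles) - 90.0) / 90.0, (90.0 - min(angles)) / 90.0)

square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
stretched = [(0.0, 0.0), (10.0, 0.0), (10.0, 1.0), (0.0, 1.0)]
sheared = [(0.0, 0.0), (1.0, 0.0), (1.5, 1.0), (0.5, 1.0)]
for name, q in (("square", square), ("stretched", stretched), ("sheared", sheared)):
    print(f"{name:9s} quality={element_quality(q):.3f} "
          f"AR={aspect_ratio(q):.2f} skew={equiangle_skewness(q):.3f}")
```

The stretched cell keeps zero skewness but has poor quality and a high aspect ratio, while the sheared cell shows the opposite pattern, which is why several metrics are checked together.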

The user is advised to test at least three or four meshes before making a final decision. The chosen mesh must pass these quality checks, satisfy the implemented convergence criteria and reach the final solution in a reasonable amount of time.

An efficient way to refine a particular mesh is to identify and locate high gradients. This can be done in two ways: manually refining regions where high gradients are expected, e.g. near walls, inlets/outlets, small features and curved regions, or applying a mesh adaption feature, which refines the mesh automatically where the software sees fit, without user interaction. However, to refine the mesh locally by hand, the user must be able to recognize the areas where high gradients are most likely to occur. ANSYS Meshing can speed up this process through “Size Functions”, controls that automatically refine the mesh in areas that typically have higher gradients.
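The idea behind gradient-based adaption can be sketched on a structured grid: compute the gradient magnitude of a field and flag cells where it exceeds a fraction of its maximum. This is a generic illustration, not the adaption criterion implemented in ANSYS Fluent; the field (a step in solids volume fraction at a hypothetical 0.3 m bed surface in a 1 m column) and the threshold are assumptions.

```python
import numpy as np

def mark_for_refinement(field, dx, dy, threshold=0.5):
    """Flag cells whose gradient magnitude exceeds a fraction of the maximum.

    field: 2-D array of cell values, rows along y and columns along x;
    threshold: fraction of the largest gradient above which a cell is marked.
    """
    d_dy, d_dx = np.gradient(field, dy, dx)  # derivatives along rows (y) and columns (x)
    magnitude = np.hypot(d_dx, d_dy)
    peak = magnitude.max()
    if peak == 0.0:
        return np.zeros(field.shape, dtype=bool)  # uniform field: nothing to refine
    return magnitude / peak >= threshold

# Hypothetical solids volume fraction: 0.6 below a 0.3 m bed surface, 0 above it
y = np.linspace(0.0, 1.0, 50)
x = np.linspace(0.0, 0.25, 10)
Y, X = np.meshgrid(y, x, indexing="ij")
alpha_s = np.where(Y < 0.3, 0.6, 0.0)
marked = mark_for_refinement(alpha_s, dx=x[1] - x[0], dy=y[1] - y[0])
print(marked.sum(), "cells marked, in rows", np.unique(np.nonzero(marked)[0]))
```

Only the two rows straddling the bed surface are flagged, which is where a user refining by hand would also concentrate cells.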

13.3.3 Mesh Analysis

To help readers gain a deeper understanding of the relationship between mesh density, results and computational cost, a mesh sensitivity analysis was conducted based on previous work by the authors (Cardoso et al. 2018a).

The simulations were carried out on a 2-D geometry and are shown in Fig. 13.3 for the three mesh resolutions presented in Table 13.2. A mesh refinement ratio of 2 and a maximum grid spacing rule of 10–12 times the particle diameter were used to build all meshes in this study. All numerical simulations concern gasification experiments using eucalyptus wood as biomass (mean diameter of 5 mm) and quartz sand as bed material (mean diameter of 0.5 mm). The operating conditions were a superficial gas velocity of 0.25 m/s and an operating temperature of 873 K, and results were time-averaged over a total of 3 s of simulation time. The geometry domain was created according to the real dimensions of the 75 kWth fluidized bed reactor (0.25 m wide, 2.3 m high, with a static bed height of 0.23 m) so as to reproduce the experimental operating conditions as closely as possible. At the bottom, atmospheric air is injected into the reactor through the inlet, while at the top of the geometry an opening, the outlet, withdraws the produced syngas. Additional information on the reactor configuration and simulation parameters can be found in Cardoso et al. (2017, 2018a).
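Time-averaged results of this kind are built from instantaneous values sampled during the run. A minimal sketch for a uniformly sampled monitor signal follows; the signal itself and the option of discarding an initial start-up period are assumptions for illustration, not details given for the case study.

```python
import math

def time_statistics(samples, dt, t_discard=0.0):
    """Mean and RMS of fluctuations of a uniformly sampled monitor signal.

    samples: values recorded every dt seconds; samples taken before
    t_discard (s) are dropped so start-up transients do not bias the average.
    Returns (mean, rms_of_fluctuations, averaging_duration).
    """
    start = int(round(t_discard / dt))
    kept = samples[start:]
    if not kept:
        raise ValueError("no samples left after discarding the start-up period")
    mean = sum(kept) / len(kept)
    rms_fluct = math.sqrt(sum((x - mean) ** 2 for x in kept) / len(kept))
    return mean, rms_fluct, len(kept) * dt

# Hypothetical signal sampled every 0.5 s: two start-up samples, then a steady oscillation
mean, rms, duration = time_statistics([5.0, 5.0, 1.0, 3.0, 1.0, 3.0], dt=0.5, t_discard=1.0)
print(mean, rms, duration)  # 2.0 1.0 2.0
```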

The finer meshes (50,544 and 103,350 elements) provide clearer solid and void distribution areas, while the coarser mesh (25,272 elements) gives a rougher representation, misrepresenting solid presence in various areas along the bed. Indeed, different mesh densities result in dissimilar representations. Coarser meshes tend to fail to provide a realistic interpretation of the bed behaviour, leading to misleading conclusions and results, which underlines the need to perform a mesh sensitivity study. Finer meshes are capable of providing a more accurate solution; nevertheless, computational time increases as a mesh is made finer, so one must strike a reasonable balance between accuracy and available computational resources.
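The chapter compares the meshes qualitatively. One common way to put a number on mesh sensitivity, offered here as an optional addition rather than the authors' procedure, is Roache's grid convergence index (GCI) computed from the same scalar result on three systematically refined grids. Note that doubling the element count in 2-D corresponds to a cell-size refinement ratio r of √2, not 2. The test values below are synthetic.

```python
import math

def grid_convergence_index(f_fine, f_medium, f_coarse, r, safety=1.25):
    """Roache's grid convergence index for three systematically refined grids.

    f_*: the same scalar result (e.g. a time-averaged bed expansion) on each grid;
    r: constant refinement ratio in cell size (h_coarse/h_medium = h_medium/h_fine).
    Returns the observed order p, the Richardson-extrapolated value and the fine-grid GCI.
    """
    if f_medium == f_fine:
        raise ValueError("identical fine and medium results: order is undefined")
    ratio = (f_coarse - f_medium) / (f_medium - f_fine)
    if ratio <= 0:
        raise ValueError("oscillatory convergence: observed order is undefined")
    p = math.log(ratio) / math.log(r)
    f_extrapolated = f_fine + (f_fine - f_medium) / (r**p - 1.0)
    gci_fine = safety * abs((f_medium - f_fine) / f_fine) / (r**p - 1.0)
    return p, f_extrapolated, gci_fine

# Synthetic values following f = 1 + 0.1 h^2 on grids h = 1, 2, 4 (r = 2)
p, f_ex, gci = grid_convergence_index(1.1, 1.4, 2.6, r=2.0)
print(f"p = {p:.2f}, extrapolated = {f_ex:.3f}, GCI_fine = {gci:.1%}")
```

A small GCI on the fine grid indicates that further refinement would change the monitored result only marginally.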

13.4 Settings

When the user launches ANSYS Fluent, several decisions must be made. The user should define the type of geometry (2-D or 3-D), the level of accuracy (single or double precision) and the processing options (serial or parallel). If a multicore machine is available, parallel processing can substantially speed up the simulation. This section briefly discusses the most important setting steps for performing a simulation. For the sake of simplicity, the steps regarding the implementation of the chemical model are not covered in this chapter; given their importance, they are addressed in the upcoming chapters. All attention is therefore devoted to the remaining settings.
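The same start-up choices can also be made from the command line, which is convenient for batch runs. The sketch below only assembles the argument list and assumes the standard `fluent` launcher is on the PATH: a version string such as `2d`, `2ddp`, `3d` or `3ddp` selects dimension and precision, `-t<n>` the number of parallel processes, `-g` runs without the graphical interface and `-i` reads a journal file. Check these against the launcher documentation of your version; the journal name is hypothetical.

```python
from typing import List, Optional

def fluent_launch_args(dimension: int = 2, double_precision: bool = True,
                       processes: int = 1, journal: Optional[str] = None,
                       gui: bool = False) -> List[str]:
    """Build a Fluent command line from the start-up choices discussed above."""
    if dimension not in (2, 3):
        raise ValueError("dimension must be 2 or 3")
    version = f"{dimension}d" + ("dp" if double_precision else "")  # e.g. "2ddp"
    args = ["fluent", version]
    if processes > 1:
        args.append(f"-t{processes}")  # parallel run on `processes` cores
    if not gui:
        args.append("-g")  # no graphical user interface (batch mode)
    if journal is not None:
        args += ["-i", journal]  # read commands from a journal file
    return args

# 2-D, double precision, 4 cores, hypothetical journal file "setup.jou"
cmd = fluent_launch_args(2, True, 4, "setup.jou")
print(" ".join(cmd))
# To actually start Fluent: subprocess.run(cmd, check=True)
```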

In the general setup options, two types of solvers are available: “pressure-based” (the default option) and “density-based”. The pressure-based solver is used for most cases, gasification processes included, since it is suited to low-Mach-number, incompressible or mildly compressible subsonic flows. The density-based solver, on the other hand, is better suited to high-speed compressible flows at higher Mach numbers, e.g. to capture interacting shock waves. Regarding time dependence, the flow can be specified as either steady-state or transient.
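A quick Mach number estimate shows why the pressure-based solver fits a fluidized bed gasifier. The sketch treats the gas as air behaving as an ideal gas (γ = 1.4, R = 287 J/(kg K), both assumptions) at the case-study temperature and superficial velocity.

```python
import math

def mach_number(velocity, temperature, gamma=1.4, r_specific=287.0):
    """Mach number and speed of sound of an ideal gas flow (air values by default)."""
    speed_of_sound = math.sqrt(gamma * r_specific * temperature)
    return velocity / speed_of_sound, speed_of_sound

ma, a = mach_number(0.25, 873.0)
print(f"a = {a:.0f} m/s, Ma = {ma:.1e}")
# Ma is far below the usual ~0.3 compressibility threshold, so the flow is
# effectively incompressible and the pressure-based solver is appropriate.
```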

An important step in setting up the model is defining the materials and their physical properties. In the materials section, the user can edit or create the properties of any material from the ANSYS database and input the properties relevant to the problem. ANSYS Fluent automatically shows the properties that need to be defined according to the models previously selected. For solid materials in gasification processes, density, specific heat, thermal conductivity and viscosity must be defined. Each property may be specified as a constant, as a linear or polynomial function, derived from kinetic theory or even given by a user-defined function (UDF). UDFs can be advantageous, allowing the user to customize the setup to the particular needs of the problem; in fact, ANSYS Fluent allows many of its standard features to be customized through UDFs.
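A polynomial property is a power series in temperature, φ(T) = A1 + A2·T + A3·T² + …, so checking the coefficients outside Fluent before entering them can catch unit or sign mistakes. The sketch evaluates such a polynomial with Horner's rule; the linear specific heat coefficients are hypothetical and are not measured data.

```python
def polynomial_property(coefficients, temperature):
    """Evaluate phi(T) = A1 + A2*T + A3*T**2 + ... using Horner's rule.

    coefficients: [A1, A2, A3, ...] in ascending powers of temperature.
    """
    value = 0.0
    for a in reversed(coefficients):
        value = value * temperature + a
    return value

# Hypothetical linear specific heat for a solid, cp(T) = 700 + 0.5*T in J/(kg K);
# these coefficients are illustrative only.
cp_coeffs = [700.0, 0.5]
print(polynomial_property(cp_coeffs, 873.0))  # 1136.5
```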

Once the materials are defined, one must set the phases and the interactions between them in the “Phases” dialogue box, accessed from the toolbar. The options available there depend on the multiphase model employed. Then, one must set the parameters related to the operating conditions of the model, such as operating pressure (atmospheric pressure by default), temperature, density and gravity. To complete the main actions of the setup stage, the user must assign boundary conditions to each previously designated zone and provide the required inputs for each boundary. Boundary conditions are a required and very important component of the mathematical model: they specify the conditions at the boundaries of the geometry, directing how the flow enters and exits the solution domain. ANSYS Fluent provides various types of boundary conditions depending on the type of problem at hand and the physical models considered.
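When an inlet is specified by velocity but the experiment reports a flow rate (or vice versa), a quick conversion helps keep the boundary conditions consistent. The sketch assumes air as an ideal gas at the operating pressure and 873 K, and an inlet spanning the full 0.25 m reactor width (real distributor plates differ). For 2-D planar cases, flow rates are per unit depth, so the depth defaults to 1 m here.

```python
R_UNIVERSAL = 8.314462618  # J/(mol K)

def ideal_gas_density(pressure, temperature, molar_mass):
    """Density (kg/m^3) from the ideal gas law; molar_mass in kg/mol."""
    return pressure * molar_mass / (R_UNIVERSAL * temperature)

def inlet_mass_flow_2d(superficial_velocity, inlet_width, density, depth=1.0):
    """Mass flow rate (kg/s) through a 2-D planar inlet of a given width.

    2-D planar quantities are per unit depth, hence depth = 1 m by default.
    """
    return density * superficial_velocity * inlet_width * depth

rho_air = ideal_gas_density(101325.0, 873.0, 0.028964)
m_dot = inlet_mass_flow_2d(0.25, 0.25, rho_air)
print(f"rho = {rho_air:.3f} kg/m^3, m_dot = {m_dot:.4f} kg/s per metre of depth")
```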

13.5 Mathematical Model Formulation

Combustion and gasification of waste materials involve more than one phase, which makes the process more complicated to handle; they are typical cases of multiphase flow. In general, multiphase flow models treat the gas phase as a continuum, and the approaches differ in how they treat the solid phase.

The Eulerian-Eulerian method treats the solid phase as a continuum, while the Eulerian-Lagrangian approach tracks solid particles individually. Within the Eulerian-Eulerian method, the option most often used for the thermochemical conversion of waste to energy is the Eulerian granular model, while among the Eulerian-Lagrangian methods, the discrete phase model (DPM) stands out. Table 13.3 lists the most relevant features of both methods.

Despite being excellent options, both still have several limitations and drawbacks. As mentioned above, Eulerian granular models treat both phases as continua, so the information they provide on particle trajectories and size distribution is scarce. This method is also ineffective for polydisperse particles (Garzó et al. 2007). The DPM method addresses these drawbacks by tracking every particle and particle collisions (Fan et al. 2018), but this comes at a very high computational cost that limits the number of particles to 2 × 10⁵, which is not ideal for combustors or gasifiers.
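A back-of-the-envelope count shows how quickly a real bed exceeds such a limit. The sketch uses the case-study bed (0.25 m wide, 0.23 m static height, 0.5 mm sand) and assumes spherical particles, a solids packing fraction of 0.6 and a slice only 1 cm deep; the packing fraction and depth are assumptions.

```python
import math

def particles_in_bed(width, bed_height, depth, solids_fraction, d_p):
    """Number of spherical particles of diameter d_p in a packed bed slab."""
    solids_volume = width * bed_height * depth * solids_fraction
    particle_volume = math.pi * d_p**3 / 6.0
    return solids_volume / particle_volume

# Case-study bed dimensions; the 1 cm depth and 0.6 packing fraction are assumptions
n = particles_in_bed(0.25, 0.23, 0.01, 0.6, 0.5e-3)
print(f"{n:.2e} particles vs. a 2e5 limit")
```

Even this thin slice holds millions of sand particles, more than an order of magnitude above the limit quoted above.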

To overcome the inability to handle dense particulate flows, a new Eulerian-Lagrangian method known as the dense discrete phase model (DDPM) has been introduced in recent years. It shows better grid independence and makes the mathematical treatment of particle size distributions easier. The DDPM method can be divided into two approaches: DDPM with the kinetic theory of granular flow (DDPM-KTGF) and DDPM with the discrete element model (DDPM-DEM). The DDPM-KTGF approach is better suited to dilute to moderately dense particulate flows; its main advantage is faster computation while still predicting particle-particle collisions without full DEM. The DDPM-DEM approach, on the other hand, is best suited to dense particulate flows up to the packing limit.

13.5.1 Turbulent Flow

Most flows in practical engineering applications are turbulent. Such flows are three-dimensional, irregular and aperiodic, with a broad range of length and time scales, which makes their mathematical treatment more demanding, sometimes exceptionally so. In a turbulent regime, the velocity field fluctuates, which in turn affects the main hydrodynamic features.
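The fluctuating velocity field can be made concrete with the Reynolds decomposition, the standard starting point of the RANS models discussed below (shown for a statistically steady flow, with summation over the repeated index i):

$$
u_i(\mathbf{x},t) = \overline{u_i}(\mathbf{x}) + u_i'(\mathbf{x},t), \qquad \overline{u_i'} = 0, \qquad k = \tfrac{1}{2}\,\overline{u_i' u_i'}
$$

Averaging the momentum equations with this decomposition produces the Reynolds stresses $-\rho\,\overline{u_i' u_j'}$, which are the unknowns that turbulence models must close.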

Turbulence transfer between phases plays a predominant role in gasification and combustion, so it is crucial to be aware of the leading modelling approaches.

There are three basic approaches that are normally employed to compute a turbulent flow:

- Direct numerical simulation (DNS).

- Scale-resolving simulations (SRS).

- Reynolds-averaged Navier-Stokes simulations (RANS).

The DNS approach solves the full unsteady Navier-Stokes equations directly, without any turbulence modelling. It is computationally very demanding and is used mainly as a research tool, with no direct practical application to real industrial cases. The SRS approach balances modelling and resolution: eddies smaller than the grid are modelled, while larger ones are resolved directly in the calculation. The traditional option in industry is RANS modelling, which differs from the previous methods in that all turbulence scales are modelled, allowing steady-state solutions. The RANS approach includes a large set of turbulence sub-models with specific features. Table 13.4 lists the most relevant options within the RANS approach.

Which turbulence model is right for a real problem remains debatable, and there is no clear answer. Nevertheless, for combustion and gasification, the standard k-ε model (ANSYS 2006; Zhang et al. 2015; Liu et al. 2013) has remained the primary option since it was first proposed by Launder and Spalding (1974). Figure 13.4 summarizes the three main approaches to computing a turbulent flow and their corresponding features.
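For reference, the standard k-ε model solves two transport equations, one for the turbulent kinetic energy k and one for its dissipation rate ε. They are shown here in their single-phase form, with G_k the production of k by mean velocity gradients; buoyancy, compressibility and user source terms are omitted, and the multiphase variants in ANSYS Fluent add phase-specific terms not shown:

$$
\frac{\partial (\rho k)}{\partial t} + \frac{\partial (\rho k u_i)}{\partial x_i} = \frac{\partial}{\partial x_j}\left[\left(\mu + \frac{\mu_t}{\sigma_k}\right)\frac{\partial k}{\partial x_j}\right] + G_k - \rho \varepsilon
$$

$$
\frac{\partial (\rho \varepsilon)}{\partial t} + \frac{\partial (\rho \varepsilon u_i)}{\partial x_i} = \frac{\partial}{\partial x_j}\left[\left(\mu + \frac{\mu_t}{\sigma_\varepsilon}\right)\frac{\partial \varepsilon}{\partial x_j}\right] + C_{1\varepsilon}\frac{\varepsilon}{k} G_k - C_{2\varepsilon}\,\rho\,\frac{\varepsilon^2}{k}
$$

$$
\mu_t = \rho\, C_\mu \frac{k^2}{\varepsilon}, \qquad C_\mu = 0.09,\; C_{1\varepsilon} = 1.44,\; C_{2\varepsilon} = 1.92,\; \sigma_k = 1.0,\; \sigma_\varepsilon = 1.3
$$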

13.5.2 Radiation Model

Radiation is the transfer of heat by electromagnetic waves. Thermal radiation effects should be accounted for whenever the radiative heat transfer rate is of the same order as or greater than the convective and conductive heat transfer rates, which is of practical importance only at high temperatures, above 800 K (Wong and Seville 2006). Radiation involves complex interactions between the phases, so computationally efficient thermal radiation models are required to solve the radiative transfer equation and accurately predict these interactions. Table 13.5 describes the main radiation models available in ANSYS Fluent.
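The strong temperature dependence comes from the fourth power in the Stefan-Boltzmann law. The sketch compares the net grey-body radiative flux with a convective flux for the same 100 K temperature difference at two temperature levels; the emissivity of 0.8 and the heat transfer coefficient of 20 W/(m² K) are hypothetical values.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)

def radiative_flux(t_hot, t_cold, emissivity):
    """Net grey-body radiative flux from a surface to large surroundings (W/m^2)."""
    return emissivity * SIGMA * (t_hot**4 - t_cold**4)

def convective_flux(t_hot, t_cold, h):
    """Convective flux for a heat transfer coefficient h (W/m^2)."""
    return h * (t_hot - t_cold)

# Hypothetical values: emissivity 0.8, h = 20 W/(m^2 K), fixed 100 K temperature difference
for t_hot in (373.0, 873.0):
    t_cold = t_hot - 100.0
    q_rad = radiative_flux(t_hot, t_cold, 0.8)
    q_conv = convective_flux(t_hot, t_cold, 20.0)
    print(f"T = {t_hot:.0f} K: q_rad/q_conv = {q_rad / q_conv:.2f}")
```

Under these assumptions, radiation is a minor contribution near 373 K but dominates at the 873 K operating temperature of the case study.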

13.6 Solver Settings

Before proceeding with the calculation, the user must first set the solution methods. ANSYS Fluent provides multiple schemes for different types of problems; however, only the solution method settings available for Eulerian multiphase flows are addressed here, and additional information on Lagrangian flows is provided in the combustion tutorial chapter. For Eulerian multiphase flows, ANSYS Fluent solves the phase momentum equations, the shared pressure and the phasic volume fraction equations in either a coupled or a segregated fashion. Figure 13.5 gives an overview of the various features the user must engage to set the solver properly.

After initialization, the “Patch” button becomes enabled. Patching values of individual variables in certain regions of the domain is an essential task when modelling multiphase flows and combustion problems. To do so, one must first create a region adaption in the Adapt panel, which marks individual cells for refinement. In fluidized bed simulations, this marked region is the area occupied by the static bed, delimited by the reactor walls and the bed height, and this selection is very important for setting the phase volume fractions in that region. Having defined the region, it is good practice to display it to verify visually that it encompasses the desired area. The user may then return to the Patch panel and set the initial solids volume fraction in the marked bed region, for both the bed material and the biomass in the case of a binary mixture.
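What the Patch operation does to the initial field can be mimicked on a simple uniform grid: cells whose centres lie below the static bed height receive the solid volume fractions, and the gas fills the remainder. This is a conceptual sketch, not Fluent's API; the 50 × 460 grid and the 0.55 sand / 0.05 biomass fractions are hypothetical, while the 2.3 m height and 0.23 m bed come from the case study.

```python
import numpy as np

def patch_static_bed(nx, ny, height, bed_height, alpha_sand, alpha_biomass):
    """Initial volume fractions for a static bed on a uniform nx x ny grid.

    Cells whose centres lie below bed_height receive the given sand and biomass
    fractions; the gas phase fills the remainder so the fractions sum to 1.
    """
    if alpha_sand + alpha_biomass > 1.0:
        raise ValueError("solid volume fractions cannot exceed 1")
    y_centres = (np.arange(ny) + 0.5) * height / ny
    in_bed = np.repeat((y_centres < bed_height)[:, np.newaxis], nx, axis=1)
    sand = np.where(in_bed, alpha_sand, 0.0)
    biomass = np.where(in_bed, alpha_biomass, 0.0)
    gas = 1.0 - sand - biomass
    return in_bed, gas, sand, biomass

# Case-study height and bed height; grid size and solid fractions are hypothetical
in_bed, gas, sand, biomass = patch_static_bed(50, 460, 2.3, 0.23, 0.55, 0.05)
print(in_bed.sum(), "bed cells; gas fraction in bed:", round(float(gas[in_bed].min()), 3))
```

Checking that the phase fractions sum to one and that the marked region matches the intended bed mirrors the visual check recommended above.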

The “Run Calculation” task page is where the solver iterations are finally started. The options available on this page depend on the previous settings. For a transient calculation, the user has various options to determine the time step. The “Fixed” time-stepping method lets the user input the intended time step size, in seconds, and the number of time steps. If desired, the user may enable the “Extrapolate Variables” option, which estimates an initial guess for the next time step and speeds up the transient solution by reducing the required sub-iterations, and the “Data Sampling for Time Statistics” option, which computes the mean and root mean square of the instantaneous values sampled during the calculation. All remaining options may be left at their defaults.
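With fixed time stepping, the number of steps follows from the simulated time, and the step size can be checked against a convective Courant number u·Δt/Δx. This physical Courant number is distinct from the Courant number of the coupled scheme discussed below. The 5 mm cell size, 2 m/s peak local velocity, 1 ms step and 0.5 target are hypothetical choices.

```python
def fixed_time_stepping(total_time, dt):
    """Number of fixed time steps needed to cover total_time seconds."""
    return round(total_time / dt)

def courant_number(u_max, dt, dx):
    """Convective Courant number u*dt/dx for the fastest cell."""
    return u_max * dt / dx

def max_time_step(u_max, dx, target_courant=0.5):
    """Largest time step that keeps the Courant number at target_courant (a user choice)."""
    return target_courant * dx / u_max

# Hypothetical: 5 mm cells, peak local gas velocity 2 m/s, 3 s of simulated time, dt = 1 ms
steps = fixed_time_stepping(3.0, 1e-3)
c = courant_number(2.0, 1e-3, 5e-3)
print(steps, round(c, 3), max_time_step(2.0, 5e-3))
```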

Additionally, during the calculation, the user may display contours, vectors, monitor plots or even the mesh for any desired quantity from the “Graphics and Animations” dropdown list. Displaying the solid volume fraction contours in the reactor domain is particularly useful for hydrodynamic analysis in fluidized bed gasification, allowing the user to follow the evolution of the solids, refreshed at every time step throughout the simulation. Having completed all these solver settings, the user may now start the calculation.

- If the user wishes to shorten the computational time of transient solutions, the phase-coupled SIMPLE scheme is more appropriate than the coupled scheme.

- If the user opts for the coupled scheme, a Courant number (200 by default) and explicit relaxation factors for momentum and pressure (0.75 by default) must be specified. The user may decrease the Courant number and the explicit relaxation factors if convergence is difficult to reach, whether due to higher-order schemes or to the high complexity of the problem, as in multiphase and combustion problems. These values can later be increased if the iterations proceed smoothly.

- Different numerical schemes may respond differently to the applied under-relaxation factors. For instance, with the coupled scheme, setting low under-relaxation factors for the volume fraction equation may slow the solution considerably, and values of around 0.5 or above are considered acceptable. By contrast, the phase-coupled SIMPLE scheme generally requires a low under-relaxation factor for the volume fraction equation.

- When the solution requires higher-order numerical schemes or finer spatial discretization, it is recommended to start with smaller time steps. These may be increased after a few time steps, once a better approximation of the pressure field has been achieved.

- For better convergence in gasification analyses, it is recommended to start the solution with a non-reacting flow and without the radiation model. To do so, the chemical reactions, radiation equations and fluid-particle interactions must be disabled. For instance, if the user intends to evaluate the behaviour of solid particles within a fluidized bed, the solution should be initiated without the chemical reaction sub-model. This allows the hydrodynamic features to be analysed first with a simpler approach, checking whether the results are within tolerance and whether the expected behaviour is achieved. After such validation, the chemical reaction model and the devolatilization sub-model can safely be added to the mathematical model.

- Lastly, the residuals are useful indicators of the iterative convergence of the solution, quantifying the error in the solution of the system of equations; it is therefore important to monitor them during the calculation. Throughout the iterative process, the residuals are expected to decay progressively, never reaching exactly zero, until they level off and no substantial changes occur. Lower residuals generally indicate a more numerically accurate solution. If the residuals increase within the first few iterations, one should consider lowering the under-relaxation factors and resuming the calculation. Occasionally, the residuals may behave rather unstably, showing large fluctuations; in such cases, the user should also reduce the under-relaxation factors. If the instabilities persist, this may be a sign of earlier misconfiguration, and the user must re-check previous settings such as initial values, boundary conditions, mesh and fluid properties in order to obtain more stable residual curves.
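The residual rules of thumb above can be written as a simple classifier for a monitored residual history. This is a hypothetical helper, not a Fluent feature; the tolerance of 1e-3, the window sizes and the oscillation threshold are assumed values that should be adapted to the case.

```python
def assess_residuals(history, tolerance=1e-3, window=20, early=10, spread=10.0):
    """Classify a scaled-residual history using simple rules of thumb.

    history: residual values, one per iteration; tolerance: assumed convergence
    criterion; window: number of recent iterations inspected; early: number of
    initial iterations checked for growth; spread: max/min ratio in the window
    treated as strong oscillation.
    """
    if len(history) < max(window, early):
        return "too few iterations to judge"
    if history[early - 1] > history[0]:
        return "rising early: lower the under-relaxation factors and resume"
    recent = history[-window:]
    if max(recent) <= tolerance:
        return "converged: residuals below the criterion"
    lowest = min(recent)
    if lowest > 0 and max(recent) / lowest > spread:
        return "oscillating: reduce under-relaxation; if it persists, re-check the setup"
    return "stalled above the criterion"

decaying = [0.7**i for i in range(60)]
oscillating = [1.0] * 10 + [1e-2, 1e-4] * 10
print(assess_residuals(decaying))
print(assess_residuals(oscillating))
```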

13.7 Best Practices, Model Validation and Verification

After completing the whole simulation process and getting a solution, it is time to proceed with an in-depth analysis of the results. The overall accuracy of the numerical simulation depends on the magnitude of several factors:

- Round-off errors.

- Iteration errors.

- Discretization errors.

- Simplifications and assumptions.

- Differences between the numerical model and the real problem.

The first item refers to errors arising from the finite-precision representation of real numbers, i.e. truncation. With today's computational power these errors are minimized, but several situations can still make them significant:

- Significant differences in length and time scales.

- Large variations in parameter values.

- Major grid variations.

Some rules of thumb are advised, such as using double precision, defining target variables and comparing the double-precision results with single-precision ones.
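Round-off becomes visible when quantities of very different magnitudes are combined, for example a small pressure variation added to atmospheric pressure; this is one reason CFD codes such as ANSYS Fluent work with a gauge pressure relative to an operating pressure. The sketch uses NumPy's fixed-width floats; the 1 mPa increment is an arbitrary illustration.

```python
import numpy as np

p_operating = 101325.0  # Pa
dp_gauge = 1.0e-3       # a small pressure variation, Pa

single = np.float32(p_operating) + np.float32(dp_gauge)
double = np.float64(p_operating) + np.float64(dp_gauge)

print(single == np.float32(p_operating))  # True: the increment is lost in single precision
print(double == np.float64(p_operating))  # False: double precision retains it
print(np.finfo(np.float32).eps, np.finfo(np.float64).eps)  # machine epsilon of each type
```

Near 10⁵ Pa, adjacent single-precision numbers are about 0.008 Pa apart, so smaller variations simply vanish.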

Iteration errors are closely related to the user's ability to reduce the residuals in the numerical simulation. The best way to ensure good convergence is to select the right residual criteria for the most critical parameters, and it is wise to plot and follow the residuals of these parameters throughout the convergence process. The user can impose tighter criteria in specific settings and must check that the mass and energy balances are respected.
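A balance check compares what enters the domain with what leaves it. The sketch computes the relative imbalance from boundary flow rates, for example those listed in a flux report. The flows and the 1 % acceptance threshold below are hypothetical. For a transient run, time-averaged values should be used, and any accumulation in the domain (e.g. char building up in the bed) must also be accounted for. For energy, reaction heat and wall losses enter as additional terms.

```python
def relative_imbalance(inflows, outflows):
    """Net imbalance of a conserved quantity relative to the total inflow.

    inflows / outflows: magnitudes (e.g. kg/s or W) through each boundary,
    all given as positive numbers.
    """
    total_in = sum(inflows)
    if total_in <= 0:
        raise ValueError("total inflow must be positive")
    return (total_in - sum(outflows)) / total_in

# Hypothetical time-averaged mass flows per metre of depth (kg/s): air and biomass in, syngas out
imbalance = relative_imbalance([0.0253, 0.0030], [0.0282])
tolerance = 0.01  # hypothetical acceptance threshold (1 %)
print(f"mass imbalance = {imbalance:.2%}, acceptable = {abs(imbalance) <= tolerance}")
```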

As previously described, using the right mesh is crucial to obtaining a good solution. There will always be differences between the solution obtained on the selected grid and the one on an infinitely fine grid. The major goal here is to minimize such differences while keeping the time required to run the simulation reasonable. The reader should bear in mind that an exact solution cannot be obtained because of discretization errors, but these can be kept low by performing a mesh sensitivity analysis. Different discretization schemes provide very similar results on fine grids, while on coarser meshes they can lead to substantial differences. In any case, the user should be aware of the most suitable discretization scheme for the problem at hand.

The last two items may seem obvious, but a significant share of the errors arises from inadequate simplifications and assumptions or from incorrect use of mathematical models. The user can eliminate all the previous sources of error and still find substantial deviations between experimental and numerical results. The user must therefore be confident about the physics of the problem and test the impact of the riskiest assumptions. Moreover, every computational package includes a large number of models, each with its own simplifications and assumptions, and the user must check that the selected models are adequate for the intended purpose.

In summary, the best practices to minimize such errors involve the following actions:

- Ensure good grid quality by performing a sensitivity analysis.

- Use the double-precision solver and compare the results with single precision.

- Monitor the residuals and check the mass and energy balances.

- Adjust the residual criteria when needed.

- Use high-order discretization schemes.

- Check which of the available models reasonably depicts the physics of the problem.

The errors listed above and the ways to prevent them provide a good basis for the next step: the user must verify and validate the results. It is essential to understand the meaning of verification and validation and the importance of performing both. Verification is the procedure that guarantees the software package correctly solves the mathematical problem as posed, that is, the governing equations, the boundary conditions and all the other computational settings. Validation is the procedure that ensures the employed model agrees with experimental data over a broad range of conditions.
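A common way to make verification concrete is to solve a problem with a known analytical solution and check that the error decreases at the formal order of the numerical scheme. The sketch below applies this to a hypothetical 1-D model problem, -u'' = π² sin(πx) on [0, 1] with u(0) = u(1) = 0, whose exact solution is u = sin(πx). The problem is solved with second-order central differences and the Thomas algorithm, and the observed order of accuracy should approach 2. It illustrates the idea only and is not an ANSYS Fluent procedure.

```python
import math


def solve_poisson_1d(n: int) -> list[float]:
    """Solve -u'' = pi^2 sin(pi x) on [0, 1], u(0) = u(1) = 0, with second-order
    central differences on n uniform intervals. Returns interior values u_1..u_{n-1}."""
    h = 1.0 / n
    m = n - 1                      # number of interior unknowns
    a = [-1.0] * m                 # sub-diagonal of (-u_{i-1} + 2u_i - u_{i+1}) = h^2 f_i
    b = [2.0] * m                  # main diagonal
    c = [-1.0] * m                 # super-diagonal
    d = [h * h * math.pi ** 2 * math.sin(math.pi * (i + 1) * h) for i in range(m)]
    # Thomas algorithm: forward elimination
    for i in range(1, m):
        w = a[i] / b[i - 1]
        b[i] -= w * c[i - 1]
        d[i] -= w * d[i - 1]
    # Back substitution
    u = [0.0] * m
    u[-1] = d[-1] / b[-1]
    for i in range(m - 2, -1, -1):
        u[i] = (d[i] - c[i] * u[i + 1]) / b[i]
    return u


def max_error(n: int) -> float:
    """Maximum nodal error against the exact solution u(x) = sin(pi x)."""
    h = 1.0 / n
    u = solve_poisson_1d(n)
    return max(abs(ui - math.sin(math.pi * (i + 1) * h)) for i, ui in enumerate(u))


errors = {n: max_error(n) for n in (10, 20, 40, 80)}
for coarse, fine in ((10, 20), (20, 40), (40, 80)):
    order = math.log(errors[coarse] / errors[fine]) / math.log(2.0)
    print(f"n = {coarse:>2} -> {fine:>2}: observed order = {order:.3f}")
```

If the observed order fell well below 2, that would point to a coding or setup error rather than a modelling error. This is exactly the distinction between verification and validation.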

Validation is sometimes difficult because experimental data are not always available and, when they are, rarely cover a large set of conditions for comparison. In any case, the user must ensure that the model reasonably reproduces the experimental data at least for a set of conditions in which the key parameters vary over a relevant range. If the model captures the key trends, the user can, with a certain degree of confidence, extrapolate conclusions to alternative geometries or to hazardous conditions that are prohibitive to test experimentally.
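In practice, a validation comparison usually reduces to a few error metrics computed on matched experimental and numerical values. The sketch below assumes the comparison is made on outlet gas composition and computes the root-mean-square error together with the relative error of each species. The species names and numbers are placeholders, not measured or simulated data. The choice of metrics is illustrative and is not prescribed by the text.

```python
import math


def validation_metrics(experimental: dict[str, float],
                       numerical: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Compare predicted and measured values for the same set of quantities.

    Returns the root-mean-square error (in the units of the inputs) and the
    relative error of each quantity with respect to the measurement.
    """
    keys = sorted(experimental)
    missing = [k for k in keys if k not in numerical]
    if missing:
        raise KeyError(f"no numerical prediction for: {missing}")
    squared = [(numerical[k] - experimental[k]) ** 2 for k in keys]
    rmse = math.sqrt(sum(squared) / len(squared))
    relative = {k: abs(numerical[k] - experimental[k]) / abs(experimental[k]) for k in keys}
    return rmse, relative


# Placeholder dry gas composition (vol%), NOT measured or simulated data.
experimental = {"H2": 10.0, "CO": 15.0, "CO2": 16.0, "CH4": 3.0}
numerical = {"H2": 11.0, "CO": 14.0, "CO2": 16.5, "CH4": 2.5}

rmse, relative = validation_metrics(experimental, numerical)
print(f"RMSE = {rmse:.2f} vol%")
for species, err in relative.items():
    print(f"{species:>4}: {100 * err:.1f} % relative error")
```

Reporting both metrics matters. A small absolute error on a minor species such as CH4 can still be a large relative error, and this is often where a model's simplifications show up first.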

13.8 Post-processing

Having converged and validated the solution, it is now time to obtain the results planned in the pre-analysis step. Researchers have a wide range of post-processing options, such as contour plots, vectors, streamlines, iso-surfaces, animations, planes and lines created to study particular regions of the solution, graphical representations and reports. Within the ANSYS framework, there are two possible routes to post-process the simulation results from Fluent: the post-processing tools integrated in Fluent or the ANSYS CFD-Post application.

In the “Results” task page, one can find a set of the most common post-processing features, namely contours, vectors, pathlines, particle tracks, animations, several types of plots and reports. Additional post-processing tasks are available in the “Postprocessing” ribbon tab, where the user can create surfaces in the solution domain, such as points, lines and planes.
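The reports mentioned above include surface integrals such as area-weighted and mass-weighted averages, which are often used to summarize conditions at an outlet or across a plane. If facet values are exported for processing outside Fluent, both averages can be reproduced with the short sketch below. The facet arrays and their values are hypothetical. The formulas are the standard definitions, Σφ|A|/Σ|A| and Σφ|ṁ|/Σ|ṁ|, and are not a transcription of Fluent's internal implementation.

```python
def area_weighted_average(values: list[float], areas: list[float]) -> float:
    """Area-weighted average over surface facets: sum(phi_i * |A_i|) / sum(|A_i|)."""
    if len(values) != len(areas) or not values:
        raise ValueError("values and areas must be non-empty and of equal length")
    total_area = sum(abs(a) for a in areas)
    return sum(v * abs(a) for v, a in zip(values, areas)) / total_area


def mass_weighted_average(values: list[float], mass_fluxes: list[float]) -> float:
    """Mass-weighted average over surface facets: sum(phi_i * |m_i|) / sum(|m_i|),
    where m_i = rho_i * (v_i . A_i) is the mass flow rate through facet i."""
    if len(values) != len(mass_fluxes) or not values:
        raise ValueError("values and mass_fluxes must be non-empty and of equal length")
    total_flux = sum(abs(m) for m in mass_fluxes)
    return sum(v * abs(m) for v, m in zip(values, mass_fluxes)) / total_flux


# Hypothetical facet data on an outlet line (three facets).
temperature = [1000.0, 1050.0, 1100.0]   # K
facet_area = [0.01, 0.02, 0.01]          # m^2
facet_mass_flux = [0.002, 0.001, 0.001]  # kg/s

print(f"area-weighted T = {area_weighted_average(temperature, facet_area):.1f} K")
print(f"mass-weighted T = {mass_weighted_average(temperature, facet_mass_flux):.1f} K")
```

The two averages generally differ. For quantities carried by the flow, such as outlet temperature or species concentrations, the mass-weighted value is usually the more representative one.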

The other possible route for post-processing is ANSYS CFD-Post. Both platforms are fully capable of handling basic post-processing. However, unlike the built-in ANSYS Fluent options, CFD-Post provides more powerful and sophisticated capabilities, such as 3D viewer files, user variables, automatic HTML report generation and case comparison tools (Rüdisüli et al. 2012). ANSYS Fluent allows the user to send the case and data files to CFD-Post to perform these post-processing actions. To do so, the user must select the quantities to export by creating a “CFD-Post Compatible Automatic Export” within the “Calculation Activities” task page.

Regarding fluidized bed gasification, CFD-Post is advantageous because it produces higher-quality visual output, helping to better visualize and understand the complex flow phenomena within the reactor. The options in ANSYS CFD-Post are extensive and are best learned hands-on. With experience, researchers can take their visualization and analysis of results to the next level and exploit the full potential of such a broad application.

13.9 Conclusions

This chapter has summarized and discussed the main steps required to implement a model within the ANSYS Fluent framework. Owing to its importance, the implementation of chemistry sets will be addressed in the upcoming chapters. At this stage, the user should have a clear idea of the theory behind managing the ANSYS Fluent software and of how it relates to the implementation of gasification and combustion processes.

Abbreviations

- ANN: Artificial neural network

- CAD: Computer-aided design

- CFD: Computational fluid dynamics

- DDPM: Dense discrete phase model

- DDPM-DEM: Dense discrete phase model-discrete element model

- DDPM-KTGF: Dense discrete phase model-kinetic theory of granular flow

- DNS: Direct numerical simulation

- DO: Discrete ordinates

- DPM: Discrete phase model

- DTRM: Discrete transfer radiation model

- HTML: Hypertext markup language

- RANS: Reynolds-averaged Navier-Stokes simulations

- RNG: Renormalization group

- S2S: Surface-to-surface

- SRS: Scale-resolving simulations

- SST: Shear-stress transport

- UDF: User-defined function

References

Ahmad A, Zawawi N, Kasim F, Inayat A, Khasri A (2016) Assessing the gasification performance of biomass: a review on biomass gasification process conditions, optimization and economic evaluation. Renew Sust Energ Rev 53:1333–1347. https://doi.org/10.1016/j.rser.2015.09.030

ANSYS (2006) Modeling turbulent flows

ANSYS (2013) ANSYS Fluent theory guide

Baruah D, Baruah DC (2014) Modeling of biomass gasification: a review. Renew Sust Energ Rev 39:806–815. https://doi.org/10.1016/j.rser.2014.07.129

Cai Y, Huang G, Yeh S, Liu L, Li L (2011) A modeling approach for investigating climate change impacts on renewable energy utilization. Int J Energy Res 36:764–777. https://doi.org/10.1002/er.1831

Cardoso J, Silva V, Eusébio D, Brito P (2017) Hydrodynamic modelling of municipal solid waste residues in a pilot scale fluidized bed reactor. Energies 10:1773. https://doi.org/10.3390/en10111773

Cardoso J, Silva V, Eusébio D, Brito P, Tarelho L (2018a) Improved numerical approaches to predict hydrodynamics in a pilot-scale bubbling fluidized bed biomass reactor: a numerical study with experimental validation. Energy Convers Manag 156:53–67

Cardoso J, Silva V, Eusébio D, Brito P, Tarelho L (2018b) Comparative scaling analysis of two different sized pilot-scale fluidized bed reactors operating with biomass substrates. Energy 151:520–535. https://doi.org/10.1016/j.energy.2018.03.090

Cardoso J, Silva VB, Eusébio D (2019a) Techno-economic analysis of a biomass gasification power plant dealing with forestry residues blends for electricity production in Portugal. J Clean Prod 212:741–753. https://doi.org/10.1016/j.jclepro.2018.12.054

Cardoso J, Silva VB, Eusébio D (2019b) Process optimization and robustness analysis of municipal solid waste gasification using air-carbon dioxide mixtures as gasifying agent. Int J Energy Res 43:4715–4728. https://doi.org/10.1002/er.4611

Cardoso J, Silva VB, Eusébio D, Brito P, Boloy R, Tarelho SJ (2019c) Comparative 2D and 3D analysis on the hydrodynamics behaviour during biomass gasification in a pilot-scale fluidized bed reactor. Renew Energy 131:713–729. https://doi.org/10.1016/j.renene.2018.07.080

Couto N, Silva V, Monteiro E, Teixeira S, Chacartegui R, Bouziane K, Brito P, Rouboa A (2015a) Numerical and experimental analysis of municipal solid wastes gasification process. Appl Therm Eng 78:185–195. https://doi.org/10.1016/j.applthermaleng.2014.12.036

Couto N, Silva V, Monteiro E, Brito P, Rouboa A (2015b) Using an Eulerian-granular 2-D multiphase CFD model to simulate oxygen air enriched gasification of agroindustrial residues. Renew Energy 77:174–181. https://doi.org/10.1016/j.renene.2014.11.089

Couto N, Silva V, Monteiro E, Rouboa A, Brito P (2016a) Hydrogen-rich gas from gasification of Portuguese municipal solid wastes. Int J Hydrog Energy 41:10619–10630. https://doi.org/10.1016/j.ijhydene.2016.04.091

Couto N, Silva V, Bispo C, Rouboa A (2016b) From laboratorial to pilot fluidized bed reactors: analysis of the scale-up phenomenon. Energy Convers Manag 119:177–186. https://doi.org/10.1016/j.enconman.2016.03.085

Couto N, Silva V, Monteiro E, Rouboa A, Brito P (2017a) An experimental and numerical study on the miscanthus gasification by using a pilot scale gasifier. Renew Energy 109:248–261. https://doi.org/10.1016/j.renene.2017.03.028

Couto N, Silva V, Cardoso J, Rouboa A (2017b) 2nd law analysis of Portuguese municipal solid waste gasification using CO2/air mixtures. J CO2 Util 20:347–356. https://doi.org/10.1016/j.jcou.2017.06.001

Dinh CB, Liao CC, Hsiau SS (2017) Numerical study of hydrodynamics with surface heat transfer in a bubbling fluidized-bed reactor applied to fast pyrolysis of rice husk. Adv Powder Technol 28:419–429. https://doi.org/10.1016/j.apt.2016.10.013

Fan H, Guo D, Dong J, Cui X, Zhang M, Zhang Z (2018) Discrete element method simulation of the mixing process of particles with and without cohesive interparticle forces in a fluidized bed. Powder Technol 327:223–231. https://doi.org/10.1016/j.powtec.2017.12.016

Fogler HS, Gurmen NM (2002) Aspen Plus workshop for reaction engineering and design. The University of Michigan

Galadima A, Muraza O (2015) Waste to liquid fuels: potency, progress and challenges. Int J Energy Res 39:1451–1478. https://doi.org/10.1002/er.3360

Garzó V, Dufty JW, Hrenya CM (2007) Enskog theory for polydisperse granular mixtures. I. Navier-Stokes order transport. Phys Rev E Stat Nonlin Soft Matter Phys 76:031303. https://doi.org/10.1103/PhysRevE.76.031303

Godlieb W, Deen NG, Kuipers JAM (2007) A discrete particle simulation study of solids mixing in a pressurized fluidized bed. In: Paper presented at the 2007 ECI conference on the 12th international conference on fluidization—new horizons in fluidization engineering, Vancouver, Canada

Lamers P, Roni M, Tumuluru J, Jacobson J, Cafferty K, Hansen J, Kenney K, Teymouri F, Bals B (2015) Techno-economic analysis of decentralized biomass processing depots. Bioresour Technol 194:205–213. https://doi.org/10.1016/j.biortech.2015.07.009

Launder BE, Spalding DB (1974) The numerical computation of turbulent flows. Comput Methods Appl Mech Eng 3:269–289. https://doi.org/10.1016/0045-7825(74)90029-2

Leme M, Rocha M, Lora E, Venturini O, Lopes B, Ferreira C (2014) Techno-economic analysis and environmental impact assessment of energy recovery from municipal solid waste (MSW) in Brazil. Resour Conserv Recycl 87:8–20. https://doi.org/10.1016/j.resconrec.2014.03.003

Liu H, Elkamel A, Lohi A, Biglari M (2013) Computational fluid dynamics modeling of biomass gasification in circulating fluidized-bed reactor using the Eulerian−Eulerian approach. Ind Eng Chem Res 52:18162–18174. https://doi.org/10.1021/ie4024148

Lourinho G, Brito P (2015) Assessment of biomass energy potential in a region of Portugal (Alto Alentejo). Energy 81:189–201. https://doi.org/10.1016/j.energy.2014.12.021

Maurer S, Schildhauer TJ, Ruud van Ommen J, Biollaz SMA, Wokaun A (2014) Scale-up of fluidized beds with vertical internals: studying the sectoral approach by means of optical probes. Chem Eng J 252:131–140. https://doi.org/10.1016/j.cej.2014.04.083

Molino A, Chianese S, Musmarra D (2016) Biomass gasification technology: the state of the art overview. J Energy Chem 25:10–25. https://doi.org/10.1016/j.jechem.2015.11.005

Neves D, Thunman H, Matos A, Tarelho L, Barea AG (2011) Characterization and prediction of biomass pyrolysis products. Prog Energy Combust Sci 37:611–630. https://doi.org/10.1016/j.pecs.2011.01.001

Olaofe OO, Patil AV, Deen NG, van der Hoef MA, Kuipers JAM (2014) Simulation of particle mixing and segregation in bidisperse gas fluidized beds. Chem Eng Sci 108:258–269. https://doi.org/10.1016/j.ces.2014.01.009

Pereira S, Costa M, Carvalho M, Rodrigues A (2016) Potential of poplar short rotation coppice cultivation for bioenergy in southern Portugal. Energy Convers Manag 125:242–253. https://doi.org/10.1016/j.enconman.2016.03.068

Pinto F, André RN, Carolino C, Miranda M, Abelha P, Direito D, Perdikaris N, Boukis I (2014) Gasification improvement of a poor quality solid recovered fuel (SRF). Effect of using natural minerals and biomass wastes blends. Fuel 117:1034–1044. https://doi.org/10.1016/j.fuel.2013.10.015

Pio D, Tarelho L, Matos M (2017) Characteristics of the gas produced during biomass direct gasification in an autothermal pilot-scale bubbling fluidized bed reactor. Energy 120:915–928. https://doi.org/10.1016/j.energy.2016.11.145

Ramos A, Monteiro E, Silva V, Rouboa A (2018) Co-gasification and recent developments on waste-to-energy conversion: a review. Renew Sust Energ Rev 81:380–398. https://doi.org/10.1016/j.rser.2017.07.025

Rüdisüli M, Schildhauer TJ, Biollaz SMA, van Ommen JR (2012) Scale-up of bubbling fluidized bed reactors—a review. Powder Technol 217:21–38. https://doi.org/10.1016/j.powtec.2011.10.004

Sanderson J, Rhodes M (2005) Bubbling fluidized bed scaling laws: evaluation at large scales. AIChE J 51:2686–2694. https://doi.org/10.1002/aic.10511

Sehrawat J, Patel M, Kumar B (2015) Gaussian process regression to predict incipient motion of Alluvial channel. In: Das K, Deep K, Pant M, Bansal JC, Nagar A (eds) Proceedings of fourth international conference on soft computing for problem solving, advances in intelligent systems and computing, vol 336. Springer, New Delhi. https://doi.org/10.1007/978-81-322-2220-0_35

Sharma A, Wang S, Pareek V, Yang H, Zhang D (2014) CFD modeling of mixing/segregation behavior of biomass and biochar particles in a bubbling fluidized bed. Chem Eng Sci 106:264–274. https://doi.org/10.1016/j.ces.2013.11.019

Sharma A, Wang S, Pareek V, Yang H, Zhang D (2015) Multi-fluid reactive modeling of fluidized bed pyrolysis process. Chem Eng Sci 123:311–321. https://doi.org/10.1016/j.ces.2014.11.019

Silva VB, Rouboa A (2013) Using a two-stage equilibrium model to simulate oxygen air enriched gasification of pine biomass residues. Fuel Process Technol 109:111–117. https://doi.org/10.1016/j.fuproc.2012.09.045

Silva VB, Rouboa A (2015) Combining a 2-D multiphase CFD model with a response surface methodology to optimize the gasification of Portuguese biomasses. Energy Convers Manag 99:28–40. https://doi.org/10.1016/j.enconman.2015.03.020

Tarelho L, Neves D, Matos M (2011) Forest biomass waste combustion in a pilot-scale bubbling fluidised bed combustor. Biomass Bioenergy 35:1511–1523. https://doi.org/10.1016/j.biombioe.2010.12.052

Vepsäläinen A, Shah S, Ritvanen J, Hyppänen T (2013) Bed Sherwood number in fluidised bed combustion by Eulerian CFD modelling. Chem Eng Sci 93:206–213. https://doi.org/10.1016/j.ces.2013.01.065

Vicente E, Tarelho L, Teixeira E, Duarte M, Nunes T, Colombi C, Gianelle V, Rocha G, Sanchez de la Campa A, Alves C (2016) Emissions from the combustion of eucalypt and pine chips in a fluidized bed reactor. J Environ Sci 42:246–258. https://doi.org/10.1016/j.jes.2015.07.012

Wong YS, Seville JPK (2006) Single-particle motion and heat transfer in fluidized beds. AIChE J 52:4099–4109. https://doi.org/10.1002/aic.11012

Xie N, Battaglia F, Pannala S (2008) Effects of using two- versus three-dimensional computational modeling of fluidized beds part I, hydrodynamics. Powder Technol 182:1–13. https://doi.org/10.1016/j.powtec.2007.07.005

Xue Q, Heindel TJ, Fox RO (2011) A CFD model for biomass fast pyrolysis in fluidized-bed reactors. Chem Eng Sci 66:2440–2452. https://doi.org/10.1016/j.ces.2011.03.010

Xue Q, Dalluge D, Heindel TJ, Fox RO, Brown RC (2012) Experimental validation and CFD modeling study of biomass fast pyrolysis in fluidized-bed reactors. Fuel 97:757–769. https://doi.org/10.1016/j.fuel.2012.02.065

Zhang Y, Lei F, Xiao Y (2015) Computational fluid dynamics simulation and parametric study of coal gasification in a circulating fluidized bed reactor. Asia Pac J Chem Eng 10:307–317. https://doi.org/10.1002/apj.1878

Acknowledgements

The authors are deeply thankful to the Portuguese Foundation for Science and Technology (FCT) for the grant SFRH/BD/146155/2019 and for the projects IF/01772/2014 and CMU/TMP/0032/2017.

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Cardoso, J., Silva, V.B., Eusébio, D. (2020). Implementation Guidelines for Modelling Gasification Processes in Computational Fluid Dynamics: A Tutorial Overview Approach. In: Inamuddin, Asiri, A. (eds) Sustainable Green Chemical Processes and their Allied Applications. Nanotechnology in the Life Sciences. Springer, Cham. https://doi.org/10.1007/978-3-030-42284-4_13

DOI: https://doi.org/10.1007/978-3-030-42284-4_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-42283-7

Online ISBN: 978-3-030-42284-4

eBook Packages: Biomedical and Life Sciences (R0)