Abstract

This contribution contains the description and investigation of three numerical methods for solving generalized minimax problems. These problems consists in the minimization of nonsmooth functions which are compositions of special smooth convex functions with maxima of smooth functions. The most important functions of this type are the sums of maxima of smooth functions. Section 11.2 is devoted to primal interior point methods which use solutions of nonlinear equations for obtaining minimax vectors. Section 11.3 contains investigation of smoothing methods, based on using exponential smoothing terms. Section 11.4 contains short description of primal-dual interior point methods based on transformation of generalized minimax problems to general nonlinear programming problems. Finally the last section contains results of numerical experiments.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

1 Generalized Minimax Problems

In many practical problems we need to minimize functions that contain absolute values or pointwise maxima of smooth functions. Such functions are nonsmooth but they often have a special structure enabling the use of special methods that are more efficient than methods for minimization of general nonsmooth functions. The classical minimax problem, where F(x) =max1≤k≤mf k(x), or problems where the function to be minimized is a nonsmooth norm, e.g. F(x) = ∥f(x)∥∞, F(x) = ∥f +(x)∥∞, F(x) = ∥f(x)∥1, F(x) = ∥f +(x)∥1 with f(x) = [f 1(x), …, f m(x)]T and \(f_+({\boldsymbol x}) = [\max \{f_1({\boldsymbol x}),0\}, \dots , \max \{f_m({\boldsymbol x}),0\}]^T\), are typical examples. Such functions can be considered as special cases of more general functions, so it is possible to formulate more general theories and construct more general numerical methods. One possibility for generalization of the classical minimax problem consists in the use of the function

where \({\boldsymbol p}_k \in \mathbb {R}^m\), \(1 \leq k \leq \overline {k}\), and \({{\boldsymbol f}}: \mathbb {R}^n \to \mathbb {R}^m\) is a smooth mapping. This function is a special case of composite nonsmooth functions of the form \(F({\boldsymbol x}) = f_0({\boldsymbol x}) + \max _{1 \leq k \leq \overline {k}} ({\boldsymbol p}_k^T {{\boldsymbol f}}({\boldsymbol x}) + b_k)\), where \(f_0:\mathbb {R}^n \to \mathbb {R}\) is a continuously differentiable function [8, Section 14.1].

Remark 11.1

We can express all above mentioned minimax problems and nonsmooth norms in form (11.1).

-

(a)

Setting p k = e k, where e k is the k-th column of an identity matrix and \(\overline {k} = m\), we obtain F(x) =max1≤k≤mf k(x) (the classical minimax).

-

(b)

Setting p k = e k, p m+k = −e k and \(\overline {k} = 2m\), we obtain \(F({\boldsymbol x}) = \max _{1 \leq k \leq m} \max \{f_k({\boldsymbol x}), -f_k({\boldsymbol x})\} = \|f({\boldsymbol x})\|{ }_{\infty }\).

-

(c)

Setting p k = e k, p m+1 = 0 and \(\overline {k} = m+1\), we obtain \(F({\boldsymbol x}) = \max \{\max _{1 \leq k \leq m} f_k({\boldsymbol x}), 0\} = \|f({\boldsymbol x})_+\|{ }_{\infty }\).

-

(d)

If \(\overline {k} = 2^m\) and p k, 1 ≤ k ≤ 2m, are mutually different vectors whose elements are either 1 or − 1, we obtain \(F({\boldsymbol x}) = \sum _{k=1}^m \max \{f_k({\boldsymbol x}), -f_k({\boldsymbol x})\} = \|f({\boldsymbol x})\|{ }_1\).

-

(e)

If \(\overline {k} = 2^m\) and p k, 1 ≤ k ≤ 2m, are mutually different vectors whose elements are either 1 or 0, we obtain \(F({\boldsymbol x}) = \sum _{k=1}^m \max \{f_k({\boldsymbol x}), 0\} = \|f_+({\boldsymbol x})\|{ }_1\).

Remark 11.2

Since the mapping f(x) is continuously differentiable, the function (11.1) is Lipschitz. Thus, if the point \({\boldsymbol x} \in \mathbb {R}^n\) is a local minimum of F(x), then 0 ∈ ∂F(x) [25, Theorem 3.2.5] holds. According to [25, Theorem 3.2.13], one has

where \(\bar {\mathcal {I}}({\boldsymbol x}) = \{k \in \{1, \dots , \overline {k}\} : {\boldsymbol p}_k^T {{\boldsymbol f}}({\boldsymbol x}) = F({\boldsymbol x})\}\). Thus, if the point \({\boldsymbol x} \in \mathbb {R}^n\) is a local minimum of F(x), then multipliers λ k ≥ 0, \(1 \leq k \leq \overline {k}\), exist, such that \(\lambda _k ({\boldsymbol p}_k^T {{\boldsymbol f}}({\boldsymbol x}) - F({\boldsymbol x})) = 0\), \(1 \leq k \leq \overline {k}\),

where J(x) is a Jacobian matrix of the mapping f(x).

Remark 11.3

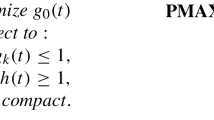

It is clear that a minimum of function (11.1) is a solution of a nonlinear programming problem consisting in minimization of a function \(\tilde {F}: \mathbb {R}^{n+1} \to \mathbb {R}\), where \(\tilde {F}({\boldsymbol x},z) = z\), on the set

Denoting \(c_k({\boldsymbol x},z) = {\boldsymbol p}_k^T {{\boldsymbol f}}({\boldsymbol x}) - z\) and a k = ∇c k(x, z), \(1 \leq k \leq \overline {k}\), we obtain \({\boldsymbol a}_k = [{\boldsymbol p}_k^T J({\boldsymbol x}), -1]^T\) and \({\boldsymbol g} = \nabla \tilde {F}({\boldsymbol x},z) = [{\mathbf {0}}^T,1]^T\), so the necessary KKT conditions can be written in the form

\(\lambda _k ({\boldsymbol p}_k^T {{\boldsymbol f}}({\boldsymbol x}) - z) = 0\), where λ k ≥ 0, \(1 \leq k \leq \overline {k}\), are the Lagrange multipliers and z = F(x). Thus, we obtain the same necessary conditions for an extremum as in Remark 11.2.

From the examples given in Remark 11.1 it follows that composite nondifferentiable functions are not suitable for representation of the functions F(x) = ∥f(x)∥1 and F(x) = ∥f +(x)∥1 because in this case the expression on the right-hand side of (11.1) contains 2m elements with vectors p k, 1 ≤ k ≤ 2m. In the subsequent considerations, we will choose a somewhat different approach. We will consider generalized minimax functions established in [5] and [23].

Definition 11.1

We say that \(F:\mathbb {R}^n \to \mathbb {R}\) is a generalized minimax function if

where \(h: \mathbb {R}^m \to \mathbb {R}\) and \(f_{kl}: \mathbb {R}^n \to \mathbb {R}\), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, are smooth functions satisfying the following assumptions.

Assumption 11.1

Functions f kl, 1 ≤ k ≤ m, 1 ≤ l ≤ m k, are bounded from below on \(\mathbb {R}^n\), so that there exists a constant \( \underline {F} \in \mathbb {R}\)such that \(f_{kl}({\boldsymbol x}) \geq \underline {F}\), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, for all \({\boldsymbol x} \in \mathbb {R}^n\).

Assumption 11.2

Functions F k, 1 ≤ k ≤ m, are bounded from below on \(\mathbb {R}^n\), so that there exist constants \( \underline {F}_k \in \mathbb {R}\)such that \(F_k({\boldsymbol x}) \geq \underline {F}_k\), 1 ≤ k ≤ m, for all \({\boldsymbol x} \in \mathbb {R}^n\).

Assumption 11.3

The function h is twice continuously differentiable and convex satisfying

for every \({\boldsymbol z} \in {\mathcal {Z}} = \{{\boldsymbol z} \in \mathbb {R}^m: z_k \geq \underline {F}_k, \; 1 \leq k \leq m\}\) (vector \({\boldsymbol z} \in \mathbb {R}^m\) is called the minimax vector).

Assumption 11.4

Functions f kl(x), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, are twice continuously differentiable on the convex hull of the level set

for a sufficiently large upper bound \(\overline {F}\)and subsequently, constants \(\overline {g}\)and \(\overline {G}\)exist such that \(\|{\boldsymbol g}_{kl}({\boldsymbol x})\| \leq \overline {g}\)and \(\|G_{kl}({\boldsymbol x})\| \leq \overline {G}\)for all 1 ≤ k ≤ m, 1 ≤ l ≤ m k, and \({\boldsymbol x} \in \operatorname {\mathrm {conv}} {\mathcal {D}}_F(\overline {F})\), whereg kl(x) = ∇f kl(x) and G kl(x) = ∇2f kl(x).

Remark 11.4

The conditions imposed on the function h(z) are relatively strong but many important nonsmooth functions satisfy them.

-

(1)

Let \(h: \mathbb {R} \to \mathbb {R}\) be an identity mapping, so h(z) = z and h′(z) = 1 > 0. Then setting \(\overline {k} = 1\), \(m_1 = \overline {l}\) and

$$\displaystyle \begin{aligned}F({\boldsymbol x}) = h(F_1({\boldsymbol x})) = F_1({\boldsymbol x}) = \max_{1 \leq l \leq \overline{l}} {\boldsymbol p}_l^T {{\boldsymbol f}}({\boldsymbol x})\end{aligned}$$(since \(f_{1l} = {\boldsymbol p}_l^T {{\boldsymbol f}}({\boldsymbol x})\)), we obtain the composite nonsmooth function (11.1) and therefore the functions F(x) =max1≤k≤mf k(x), F(x) = ∥f(x)∥∞, F(x) = ∥f +(x)∥∞.

-

(2)

Let \(h: \mathbb {R}^m \to \mathbb {R}\), where h(z) = z 1 + ⋯ + z m, so \(\frac {\partial }{\partial z_k} h({\boldsymbol z}) = 1 > 0\), 1 ≤ k ≤ m. Then function (11.2) has the form

$$\displaystyle \begin{aligned} F({\boldsymbol x}) = \sum_{k=1}^m F_k({\boldsymbol x}) = \sum_{k=1}^m \max_{1 \leq l \leq m_k}f_{kl}({\boldsymbol x}) \end{aligned} $$(11.4)(the sum of maxima). If m k = 2 and \(F_k({\boldsymbol x}) = \max \{f_k({\boldsymbol x}), -f_k({\boldsymbol x})\}\), we obtain the function F(x) = ∥f(x)∥1. If m k = 2 and \(F_k({\boldsymbol x}) = \max \{f_k({\boldsymbol x}), 0\}\), we obtain the function F(x) = ∥f +(x)∥1. It follows that the expression of functions F(x) = ∥f(x)∥1 and F(x) = ∥f +(x)∥1 by (11.2) contains only m summands and each summand is a maximum of two function values. Thus, this approach is much more economic than the use of formulas stated in Remark 11.1(d)–(e).

Remark 11.5

Since the functions F k(x), 1 ≤ k ≤ m, are regular [25, Theorem 3.2.13], the function h(z) is continuously differentiable, and \(h_k = \frac {\partial }{\partial z_k} h({\boldsymbol z}) > 0\), one can write [25, Theorem 3.2.9]

where \(\bar {\mathcal {I}}_k({\boldsymbol x}) = \{l: 1 \leq l \leq m_k, f_{kl}({\boldsymbol x}) = F_k({\boldsymbol x})\}\). Thus, one has

where for 1 ≤ k ≤ m it holds λ kl ≥ 0, λ kl(F k(x) − f kl(x)) = 0, 1 ≤ l ≤ m k, and \(\sum _{l=1}^{m_k} \lambda _{kl}=1\). Setting u kl = h kλ kl, 1 ≤ k ≤ m, 1 ≤ l ≤ m k, we can write

where for 1 ≤ k ≤ m it holds u kl ≥ 0, u kl(F k(x) − f kl(x)) = 0, 1 ≤ l ≤ m k, and \(\sum _{l=1}^{m_k} u_{kl} = h_k\). If a point \({\boldsymbol x} \in \mathbb {R}^n\) is a minimum of a function F(x), then 0 ∈ ∂F(x), so there exist multipliers u kl, 1 ≤ k ≤ m, 1 ≤ l ≤ m k, such that

Remark 11.6

Unconstrained minimization of function (11.2) is equivalent to the nonlinear programming problem

The condition (11.3) is sufficient for satisfying equalities z k = F k(x), 1 ≤ k ≤ m, at the minimum point. Denoting c kl(x, z) = f kl(x) − z k and a kl(x, z) = ∇c kl(x, z), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, we obtain \({\boldsymbol a}_{kl}({\boldsymbol x},{\boldsymbol z}) = [{\boldsymbol g}_{kl}^T({\boldsymbol x}), -{\boldsymbol e}_k^T]^T\), where g kl(x) is a gradient of f kl(x) in x and e k is the k-th column of the unit matrix of order m. Thus, the necessary first-order (KKT) conditions have the form

where u kl, 1 ≤ k ≤ m, 1 ≤ l ≤ m k, are Lagrange multipliers and z k = F k(x). So we obtain the same necessary conditions for an extremum as in Remark 11.5.

Remark 11.7

A classical minimax problem

can be replaced with an equivalent nonlinear programming problem

and the necessary KKT conditions have the form

Remark 11.8

Minimization of the sum of absolute values

where

can be replaced with an equivalent nonlinear programming problem

(there are two constraints \(c_k^-({\boldsymbol x}) = z_k - f_k({\boldsymbol x}) \geq 0\) and \(c_k^+({\boldsymbol x}) = z_k + f_k({\boldsymbol x}) \geq 0\) for each index 1 ≤ k ≤ m) and the necessary KKT conditions have the form

where 1 ≤ k ≤ m. If we set \(u_k = u_k^+ - u_k^-\) and use the equality \(u_k^+ + u_k^- = 1\), we obtain \(u_k^+ = (1 + u_k)/2\), \(u_k^- = (1 - u_k)/2\). From conditions \(u_k^+ \geq 0\), \(u_k^- \geq 0\) the inequalities − 1 ≤ u k ≤ 1, or |u k|≤ 1, follow. The condition \(u_k^+ + u_k^- = 1\) implies that the numbers \(u_k^+\), \(u_k^-\) cannot be simultaneously zero, so either z k = f k(x) or z k = −f k(x), that is z k = |f k(x)|. If f k(x) ≠ 0, it cannot simultaneously hold z k = f k(x) and z k = −f k(x), so the numbers \(u_k^+\), \(u_k^-\) cannot be simultaneously nonzero. Then either \(u_k = u_k^+ = 1\) and z k = f k(x) or \(u_k = -u_k^- = -1\) and z k = −f k(x), that is u k = f k(x)∕|f k(x)|. Thus, the necessary KKT conditions have the form

Remark 11.9

Minimization of the sum of absolute values can also be reformulated so that more slack variables are used. We obtain the problem

where 1 ≤ k ≤ m. This problem contains m general equality constraints and 2m simple bounds for 2m slack variables.

In the subsequent considerations, we will restrict ourselves to functions of the form (11.4), the sums of maxima that include most cases important for applications. In this case, it holds

where \(\tilde {{\boldsymbol e}} \in \mathbb {R}^m\) is a vector with unit elements. The case when h(z) is a general function satisfying Assumption 11.3 is studied in [23].

2 Primal Interior Point Methods

2.1 Barriers and Barrier Functions

Primal interior point methods for equality constraint minimization problems are based on adding a barrier term containing constraint functions to the minimized function. A resulting barrier function, depending on a barrier parameter \(0 < \mu \leq \overline {\mu } < \infty \), is successively minimized on \(\mathbb {R}^n\) (without any constraints), where μ ↓ 0. Applying this approach on the problem (11.7), we obtain a barrier function

where \(\varphi : (0,\infty ) \to \mathbb {R}\) is a barrier which satisfies the following assumption.

Assumption 11.5

Function φ(t), t ∈ (0, ∞), is twice continuously differentiable, decreasing, and strictly convex, with limt↓0φ(t) = ∞. Function φ′(t) is increasing and strictly concave such that limt↑∞φ′(t) = 0. For t ∈ (0, ∞) it holds − tφ′(t) ≤ 1, t 2φ″(t) ≤ 1. There exist numbers τ > 0 and \( \underline {c} > 0\)such that for t < τ it holds

and

Remark 11.10

A logarithmic barrier function

is most frequently used. It satisfies Assumption 11.5 with \( \underline {c} = 1\) and τ = ∞ but it is not bounded from below since \(\log t \uparrow \infty \) for t ↑∞. For that reason, barriers bounded from below are sometimes used, e.g. a function

which is bounded from below by number \( \underline {\varphi } = -\log \tau \), or a function

which is bounded from below by number \( \underline {\varphi } = c = -\log \tau -3/2\), or a function

which is bounded from below by number \( \underline {\varphi } = c = -\log {\tau }-3\). Coefficients a, b, c are chosen so that function φ(t) as well as its first and second derivatives are continuous in t = τ. All these barriers satisfy Assumption 11.5 [23] (the proof of this statement is trivial for logarithmic barrier (11.25)).

Even if bounded from below barriers (11.26)–(11.28) have more advantageous theoretical properties (Assumption 11.1 can be replaced with a weaker Assumption 11.2 and the proof of Lemma 11.2 below is much simpler, see [23]), algorithms using logarithmic barrier (11.26) are usually more efficient. Therefore, we will only deal with methods using the logarithmic barrier \(\varphi (t) = -\log t\) in the subsequent considerations.

2.2 Iterative Determination of a Minimax Vector

Suppose the function h(z) is of form (11.21). Using the logarithmic barrier \(\varphi (t) = -\log t\), function (11.22) can be written as

Further, we will denote g kl(x) and G kl(x) gradients and Hessian matrices of functions f kl(x), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, and set

and

Denoting by g(x, z) the gradient of the function B μ(x, z) and \(\gamma _k({\boldsymbol x},{\boldsymbol z}) = \frac {\partial }{\partial z_k} B_{\mu }({\boldsymbol x},{\boldsymbol z}) \), the necessary conditions for an extremum of the barrier function (11.22) can be written in the form

where \(A_k({\boldsymbol x}) = [{\boldsymbol g}_{k1}({\boldsymbol x}), \ldots , {\boldsymbol g}_{km_k}({\boldsymbol x})]\), which is a system of n + m nonlinear equations for unknown vectors x and z. These equations can be solved by the Newton method. In this case, the second derivatives of the Lagrange function (which are the first derivatives of expressions (11.31) and (11.32)) are computed. Denoting

the Hessian matrix of the Lagrange function and setting

we can write

Using these formulas we obtain a system of linear equations describing a step of the Newton method

where

Setting

and γ(x, z) = [γ 1(x, z), …, γ m(x, z)]T, a step of the Newton method can be written in the form

The diagonal matrix D(x, z) is positive definite since it has positive diagonal elements.

During iterative determination of a minimax vector we know a value of the parameter μ and vectors \({\boldsymbol x} \in \mathbb {R}^n\), \({\boldsymbol z} \in \mathbb {R}^m\) such that z k > F k(x), 1 ≤ k ≤ m. Using formula (11.40) we determine direction vectors Δx, Δz. Then, we choose a step length α so that

and z k + αΔz k > F k(x + αΔx), 1 ≤ k ≤ m. Finally, we set x + = x + αΔx, z + = z + αΔz and determine a new value μ + < μ. If the matrix of system of equations (11.40) is positive definite, inequality (11.41) is satisfied for a sufficiently small value of the step length α.

Theorem 11.1

Let the matrix G(x, z) given by (11.33) be positive definite. Then the matrix of system of equations (11.40) is positive definite.

Proof

The matrix of system of equations (11.40) is positive definite if and only if the matrix D and its Schur complement W − CD −1C T are positive definite [7, Theorem 2.5.6]. The matrix D is positive definite since it has positive diagonal elements. Further, it holds

matrices \(A_k V_k A_k^T - A_k V_k \tilde {{\boldsymbol e}}_k (\tilde {{\boldsymbol e}}_k^T V_k \tilde {{\boldsymbol e}}_k)^{-1} (A_k V_k \tilde {{\boldsymbol e}}_k)^T\), 1 ≤ k ≤ m, are positive semidefinite due to the Schwarz inequality and the matrix G is positive definite by the assumption. □

2.3 Direct Determination of a Minimax Vector

Now we will show how to solve system of equations (11.31)–(11.32) by direct determination of a minimax vector using two-level optimization

and

The problem (11.42) serves for determination of an optimal vector \({\boldsymbol z}({\boldsymbol x};\mu ) \in \mathbb {R}^m\). Let \(\tilde {B}_{\mu }({\boldsymbol z}) = B_{\mu }({\boldsymbol x},{\boldsymbol z})\) for a fixed chosen vector \({\boldsymbol x} \in \mathbb {R}^n\). The function \(\tilde {B}_{\mu }({\boldsymbol z})\) is strictly convex (as a function of a vector z), since it is a sum of convex function (11.21) and strictly convex functions \(-\mu \log (z_k - f_{kl}({\boldsymbol x}))\), 1 ≤ k ≤ m, 1 ≤ l ≤ m k. A minimum of the function \(\tilde {B}_{\mu }({\boldsymbol z})\) is its stationary point, so it is a solution of system of equations (11.32) with Lagrange multipliers (11.30). The following theorem shows that this solution exists and is unique.

Theorem 11.2

The function \(\tilde {B}_{\mu }({\boldsymbol z}): (F({\boldsymbol x}),\infty ) \to \mathbb {R}\)has a unique stationary point which is its global minimum. This stationary point is characterized by a system of equationsγ(x, z) = 0, or

which has a unique solution \({\boldsymbol z}({\boldsymbol x};\mu ) \in \mathbb {Z} \subset \mathbb {R}^m\) such that

for 1 ≤ k ≤ m.

Proof

Definition 11.1 implies f kl(x) ≤ F k(x), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, where the equality occurs for at least one index l.

-

(a)

If (11.44) holds, then we can write

$$\displaystyle \begin{aligned} \begin{array}{rcl} 1 = \sum_{l=1}^{m_k} \frac{\mu}{z_k - f_{kl}({\boldsymbol x})} > \frac{\mu}{z_k - F_k({\boldsymbol x})} \; &\displaystyle \Leftrightarrow &\displaystyle \; z_k - F_k({\boldsymbol x}) > \mu, \\ 1 = \sum_{l=1}^{m_k} \frac{\mu}{z_k - f_{kl}({\boldsymbol x})} < \frac{m_k \mu}{z_k - F_k({\boldsymbol x})} \; &\displaystyle \Leftrightarrow &\displaystyle \; z_k - F_k({\boldsymbol x}) < m_k \mu, \end{array} \end{aligned} $$which proves inequalities (11.45).

-

(b)

Since

and the function γ k(x, z k) is continuous and decreasing in F k(x) + μ < z k(x;μ) < F k(x) + m k by (11.37), the equation γ k(x, z k) = 0 has a unique solution in this interval. Since the function \(\tilde {B}_{\mu }({\boldsymbol z})\) is convex this solution corresponds to its global minimum.

□

System (11.44) is a system of m scalar equations with localization inequalities (11.45). These scalar equations can be efficiently solved by robust methods described e.g. in [14] and [15] (details are stated in [22]). Suppose that z = z(x;μ) and denote

To find a minimum of B μ(x, z) in \(\mathbb {R}^{n+m}\), it suffices to minimize \(\hat {B}({\boldsymbol x};\mu )\) in \(\mathbb {R}^n\).

Theorem 11.3

Consider the barrier function (11.46). Then

where G(x;μ) = G(x, z(x;μ)) and W(x;μ), C(x;μ), D(x;μ), U k(x;μ), \(V_k({\boldsymbol x};\mu ) = U_k^2({\boldsymbol x};\mu ) / \mu \), 1 ≤ k ≤ m, are obtained by the same substitution. A solution of equation

is identical with Δxgiven by (11.40), wherez = z(x;μ) (soγ(x, z(x;μ)) = 0).

Proof

Differentiating the barrier function (11.46) and using (11.32) we obtain

where

Formula (11.48) can be obtained by additional differentiation of relations (11.32) and (11.47) using (11.50). A simpler way is based on using (11.40). Since (11.32) implies γ(x, z(x;μ)) = 0, we can substitute γ = 0 into (11.40), which yields the equation

where z = z(x;μ), that confirms validity of formulas (11.48) and (11.49) (details can be found in [22]). □

Remark 11.11

To determine an inverse of the Hessian matrix, one can use a Woodbury formula [7, Theorem 12.1.4] which gives

If the matrix \(\nabla ^2 \hat {B}({\boldsymbol x};\mu )\) is not positive definite, it can be replaced by a matrix \(L L^T = \nabla ^2 \hat {B}({\boldsymbol x};\mu ) + E\), obtained by the Gill–Murray decomposition [10]. Note that it is more advantageous to use system of linear equations (11.40) instead of (11.49) for determination of a direction vector Δx because the system of nonlinear equations (11.44) is solved with prescribed finite precision, and thus a vector γ(x, z), defined by (11.32), need not be zero.

From

it follows that ∥V k(x;μ)∥↑∞ if μ ↓ 0, so Hessian matrix (11.48) may be ill-conditioned if the value μ is very small. From this reason, we use a lower bound \( \underline {\mu } > 0\) for μ.

Theorem 11.4

Let Assumption 11.4be satisfied and \(\mu \geq \underline {\mu } > 0\). If G(x;μ) is uniformly positive definite (if a constant \( \underline {G}\)exists such that \({\boldsymbol v}^T G({\boldsymbol x}; \mu ) {\boldsymbol v} \geq \underline {G} \|{\boldsymbol v}\|{ }^2\)), then there is a number \(\overline {\kappa } \geq 1\)such that \(\kappa (\nabla ^2 \hat {B}({\boldsymbol x};\mu )) \leq \overline {\kappa }\).

Proof

-

(a)

Using (11.30), (11.48), and Assumption 11.4, we obtain

$$\displaystyle \begin{aligned} \|\nabla^2 \hat{B}({\boldsymbol x};\mu)\| & \leq \left\|G({\boldsymbol x};\mu) + \sum_{k=1}^m A_k({\boldsymbol x}) V_k({\boldsymbol x};\mu) A_k^T({\boldsymbol x})\right\| \\ & \leq \sum_{k=1}^m \sum_{l=1}^{m_k} \left(\left|G_{kl}({\boldsymbol x}) u_{kl}({\boldsymbol x},\mu)\right| +\frac{1}{\mu} \left|u^2_{kl}({\boldsymbol x};\mu) {\boldsymbol g}_{kl}({\boldsymbol x}) {\boldsymbol g}_{kl}^T({\boldsymbol x}) \right|\right) \\ & \leq \frac{\overline{m}}{\mu} \left(\overline{\mu} \, \overline{G} + \overline{g}^2\right)\stackrel{\varDelta}{=} \frac{\overline{c}}{\mu} \leq \frac{\overline{c}}{\underline{\mu}}, {} \end{aligned} $$(11.52)because \(0 \leq u_{kl}({\boldsymbol x}; \mu ) \leq \tilde {{\boldsymbol e}}_k^T {\boldsymbol u}_k({\boldsymbol x}; \mu ) =1\), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, by (11.44).

-

(b)

From the proof of Theorem 11.1 it follows that the matrix \(\nabla ^2 \hat {B}({\boldsymbol x};\mu ) - G({\boldsymbol x};\mu )\) is positive semidefinite. Therefore,

$$\displaystyle \begin{aligned}\underline{\lambda}(\nabla^2 \hat{B}({\boldsymbol x};\mu)) \geq \underline{\lambda}(G({\boldsymbol x};\mu)) \geq \underline{G}.\end{aligned}$$ -

(c)

Since (a) implies \(\overline {\lambda }(\nabla ^2 \hat {B}({\boldsymbol x};\mu )) = \|\nabla ^2 \hat {B}({\boldsymbol x};\mu )\| \leq \overline {c} / \underline {\mu }\), using (b) we can write

$$\displaystyle \begin{aligned} \kappa(\nabla^2 \hat{B}({\boldsymbol x};\mu)) = \frac{\overline{\lambda}(\nabla^2 \hat{B}({\boldsymbol x};\mu))}{\underline{\lambda}(\nabla^2 \hat{B}({\boldsymbol x};\mu))} \leq \frac{\overline{c}}{\underline{\mu} \, \underline{G}} \stackrel{\varDelta}{=}\overline{\kappa}. {} \end{aligned} $$(11.53)

□

Remark 11.12

If there exists a number \(\overline {\kappa } > 0\) such that \(\kappa (\nabla ^2 \hat {B}({\boldsymbol x}_i;\mu _i)) \leq \overline {\kappa }\), \(i \in \mathbb {N}\), the direction vector Δx i, given by solving a system of equations \(\nabla ^2\hat {B}({\boldsymbol x}_i;\mu _i)\varDelta {\boldsymbol x}_i = -\nabla \hat {B}({\boldsymbol x}_i;\mu _i)\), satisfies the condition

where \(\varepsilon _0 = 1 / \sqrt {\overline {\kappa }}\) and \({\boldsymbol g}({\boldsymbol x};\mu ) = \nabla \hat {B}({\boldsymbol x};\mu )\). Then, for arbitrary numbers 0 < ε 1 ≤ ε 2 < 1 one can find a step length parameter α i > 0 such that for x i+1 = x i + α iΔx i it holds

so there exists a number c > 0 such that (see [26, Section 3.2])

If Assumption 11.4 is not satisfied, then only (Δx i)Tg(x i;μ i) < 0 holds (because the matrix \(\nabla ^2 \hat {B}({\boldsymbol x};\mu )\) is positive definite by Theorem 11.1) and

2.4 Implementation

Remark 11.13

In (11.39), it is assumed that G(x, z) is the Hessian matrix of the Lagrange function. Direct computation of the matrix G(x;μ) = G(x, z(x;μ)) is usually difficult (one can use automatic differentiation as described in [13]). Thus, various approximations G ≈ G(x;μ) are mostly used.

-

The matrix G ≈ G(x;μ) can be determined using differences

$$\displaystyle \begin{aligned}G {\boldsymbol w}_j = \frac 1{\delta} \left( \sum_{k=1}^m A_k({\boldsymbol x} + \delta {\boldsymbol w}_j) {\boldsymbol u}_k({\boldsymbol x}; \mu) - \sum_{k=1}^m A({\boldsymbol x}) {\boldsymbol u}_k({\boldsymbol x}; \mu) \right).\end{aligned}$$The vectors w j, \(1 \leq j \leq \overline {k}\), are chosen so that the number of them is as small as possible [4, 27].

-

The matrix G ≈ G(x;μ) can be determined using the variable metric methods [17]. The vectors

$$\displaystyle \begin{aligned}{\boldsymbol d} = {\boldsymbol x}_+ - {\boldsymbol x}, \quad {\boldsymbol y} = \sum_{k=1}^m A_k({\boldsymbol x}_+) {\boldsymbol u}_k({\boldsymbol x}_+; \mu) - \sum_{k=1}^m A_k({\boldsymbol x}) {\boldsymbol u}_k({\boldsymbol x}_+; \mu)\end{aligned}$$are used for an update of G.

-

If the problem is separable (i.e. f kl(x), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, are functions of a small number n kl = O(1) of variables), one can set as in [12]

$$\displaystyle \begin{aligned}G = \sum_{k=1}^m \sum_{l=1}^{m_k} Z_{kl} \hat{G}_{kl} Z_{kl}^T u_{kl}({\boldsymbol x}, {\boldsymbol z}),\end{aligned}$$where the reduced Hessian matrices \(\hat {G}_{kl}\) are updated using the reduced vectors \(\hat {{\boldsymbol d}}_{kl} = Z_{kl}^T ({\boldsymbol x}_+ - {\boldsymbol x})\) and \(\hat {{\boldsymbol y}}_{kl} = Z_{kl} ({\boldsymbol g}_{kl}({\boldsymbol x}_+) - {\boldsymbol g}_{kl}({\boldsymbol x}))\).

Remark 11.14

The matrix G ≈ G(x;μ) obtained by the approach stated in Remark 11.13 can be ill-conditioned so condition (11.54) (with a chosen value ε 0 > 0) may not be satisfied. In this case it is possible to restart the iteration process and set G = I. Then \(\overline {G} = 1\) and \( \underline {G} = 1\) in (11.52) and (11.53), so it is a higher probability of fulfilment of condition (11.54). If the choice G = I does not satisfy (11.54), we set Δx = −g(x;μ) (a steepest descent direction).

An update of μ is an important part of interior point methods. Above all, μ ↓ 0 must hold, which is a main property of interior point methods. Moreover, rounding errors may cause that z k(x;μ) = F k(x) when the value μ is small (because F k(x) < z k(x;μ) ≤ F k(x) + m kμ and F k(x) + m kμ → F k(x) if μ ↓ 0), which leads to a breakdown (division by z k(x;μ) − F k(x) = 0) when computing 1∕(z k(x;μ) − F k(x)). Therefore, we need to use a lower bound \( \underline {\mu }\) for a barrier parameter (e.g. \( \underline {\mu } = 10^{-8}\) when computing in double precision).

The efficiency of interior point methods also depends on the way of decreasing the value of a barrier parameter. The following heuristic procedures proved successful in practice, where \( \underline {g}\) is a suitable constant.

Procedure A

- Phase 1.:

-

If \(\|{\boldsymbol g}({\boldsymbol x}_i;\mu _i)\| \geq \underline {g}\), then μ i+1 = μ i (the value of a barrier parameter is unchanged).

- Phase 2.:

-

If \(\|{\boldsymbol g}({\boldsymbol x}_i;\mu _i)\| < \underline {g}\), then

$$\displaystyle \begin{aligned} \mu_{i+1} = \max\left\{\tilde{\mu}_{i+1}, \, \underline{\mu}, \, 10 \, \varepsilon_M |F({\boldsymbol x}_{i+1})|\right\}, {} \end{aligned} $$(11.58)where F(x i+1) = F 1(x i+1) + ⋯ + F m(x i+1), ε M is a machine precision, and

(11.59)

(11.59)The values \( \underline {\mu } = 10^{-8}\), λ = 0.85, and σ = 100 are usually used.

Procedure B

- Phase 1.:

-

If ∥g(x i;μ i)∥2 ≥ 𝜗μ i, then μ i+1 = μ i (the value of a barrier parameter is unchanged).

- Phase 2.:

-

If ∥g(x i;μ i)∥2 < 𝜗μ i, then

$$\displaystyle \begin{aligned} \mu_{i+1} = \max\{\underline{\mu},\|{\boldsymbol g}_i({\boldsymbol x}_i;\mu_i)\|{}^2\}. {} \end{aligned} $$(11.60)The values \( \underline {\mu } = 10^{-8}\) and 𝜗 = 0.1 are usually used.

The choice of \( \underline {g}\) in Procedure A is not critical. We can set \( \underline {g} = \infty \) but a lower value is sometimes more advantageous. Formula (11.59) requires several comments. The first argument of the minimum controls the decreasing speed of the value of a barrier parameter which is linear (a geometric sequence) for small i (the term λμ i) and sublinear (a harmonic sequence) for large i (the term μ i∕(σμ i + 1)). Thus, the second argument ensuring that the value μ is small in a neighborhood of a desired solution is mainly important for large i. This situation may appear if the gradient norm ∥g(x i;μ i)∥ is small even if x i is far from a solution. The idea of Procedure B proceeds from the fact that a barrier function \(\hat {B}({\boldsymbol x};\mu )\) should be minimized with a sufficient precision for a given value of a parameter μ.

The considerations up to now are summarized in Algorithm 11.1 introduced in the Appendix. This algorithm supposes that the matrix A(x) is sparse. If it is dense, the algorithm is simplified because there is no symbolic decomposition.

2.5 Global Convergence

Now we prove the global convergence of the method realized by Algorithm 11.1.

Lemma 11.1

Let numbers z k(x;μ), 1 ≤ k ≤ m, be solutions of Eq.(11.44). Then

Proof

Differentiating (11.44) with respect to μ, one can write for 1 ≤ k ≤ m

which after multiplication of μ together with (11.30) and (11.44) gives

Differentiating the function

and using (11.44) we obtain

□

Lemma 11.2

Let Assumption 11.1be satisfied. Let {x i} and {μ i}, \(i \in \mathbb {N}\), be the sequences generated by Algorithm 11.1. Then the sequences \(\{\hat {B}({\boldsymbol x}_i;\mu _i)\}\), {z(x i;μ i)}, and {F(x i)}, \(i \in \mathbb {N}\), are bounded. Moreover, there exists a constant L ≥ 0 such that for \(i \in \mathbb {N}\)it holds

Proof

-

(a)

We first prove boundedness from below. Using (11.61) and Assumption 11.1, one can write

$$\displaystyle \begin{aligned} \begin{array}{rcl} \hat{B}({\boldsymbol x};\mu) - \underline{F} &\displaystyle = &\displaystyle \sum_{k=1}^m z_k({\boldsymbol x};\mu) - \underline{F} - \mu \sum_{k=1}^m \sum_{l=1}^{m_k} \log(z_k({\boldsymbol x};\mu) - f_{kl}({\boldsymbol x})) \\ &\displaystyle \geq &\displaystyle \sum_{k=1}^m \left(z_k ({\boldsymbol x};\mu) - \underline{F} - m_k \mu \log(z_k({\boldsymbol x};\mu) - \underline{F})\right). \end{array} \end{aligned} $$A convex function \(\psi (t) = t - m \mu \log (t)\) has a unique minimum at the point t = mμ because ψ′(mμ) = 1 − mμ∕mμ = 0. Thus, it holds

$$\displaystyle \begin{aligned} \hat{B}({\boldsymbol x};\mu) & \geq \underline{F} + \sum_{k=1}^m (m_k \mu - m_k \mu \log(m_k \mu))\\ & \geq \underline{F} +\sum_{k=1}^m \min \{0, \, m_k \overline{\mu} (1 - \log(m_k \overline{\mu})\} \\ & \geq \underline{F} + \sum_{k=1}^m \min \{0, \, m_k \overline{\mu}(1 - \log(2 m_k \overline{\mu})\} \stackrel{\varDelta}{=} \underline{B}. \end{aligned} $$Boundedness from below of sequences {z(x i;μ i)} and {F(x i)}, \(i \in \mathbb {N}\), follows from inequalities (11.45) and Assumption 11.1.

-

(b)

Now we prove boundedness from above. Similarly as in (a) we can write

$$\displaystyle \begin{aligned} \begin{array}{rcl} \hat{B}({\boldsymbol x};\mu) - \underline{F} &\displaystyle \geq &\displaystyle \sum_{k=1}^m \frac{z_k({\boldsymbol x};\mu) - \underline{F}}{2} \\ &\displaystyle &\displaystyle + \sum_{k=1}^m \left(\frac{z_k({\boldsymbol x};\mu) - \underline{F}}{2} - m_k \mu \log(z_k({\boldsymbol x};\mu) - \underline{F})\right). \end{array} \end{aligned} $$A convex function \(t/2 - m \mu \log (t)\) has a unique minimum at the point t = 2mμ. Thus, it holds

$$\displaystyle \begin{aligned} \begin{array}{rcl} \hat{B}({\boldsymbol x};\mu) &\displaystyle \geq &\displaystyle \sum_{k=1}^m \frac{z_k({\boldsymbol x};\mu) - \underline{F}}{2} + \underline{F} + \sum_{k=1}^m \min \{0, \, m \overline{\mu} (1 - \log(2 m_k \overline{\mu}))\} \\ &\displaystyle = &\displaystyle \sum_{k=1}^m \frac{z_k({\boldsymbol x};\mu) - \underline{F}}{2} + \underline{B} \end{array} \end{aligned} $$or

$$\displaystyle \begin{aligned} \sum_{k=1}^m (z_k({\boldsymbol x};\mu) - \underline{F}) \leq 2(\hat{B}({\boldsymbol x};\mu) - \underline{B}). {} \end{aligned} $$(11.63)Using the mean value theorem and Lemma 11.1, we obtain

$$\displaystyle \begin{aligned} \hat{B}({\boldsymbol x}_{i+1}; \mu_{i+1}&) - \hat{B}({\boldsymbol x}_{i+1}; \mu_i) \\ &= \sum_{k=1}^m \sum_{l=1}^{m_k} \log(z_k({\boldsymbol x}_{i+1}; \tilde{\mu}_i) - f_{kl}({\boldsymbol x}_{i+1}))(\mu_i - \mu_{i+1}) \\ & \leq \sum_{k=1}^m \sum_{l=1}^{m_k} \log(z_k({\boldsymbol x}_{i+1};\mu_i) - f_{kl}({\boldsymbol x}_{i+1}))(\mu_i - \mu_{i+1}) \\ & \leq \sum_{k=1}^m m_k \log(z_k({\boldsymbol x}_{i+1};\mu_i) - \underline{F}) (\mu_i - \mu_{i+1}), {} \end{aligned} $$(11.64)where \(0 < \mu _{i+1} \leq \tilde {\mu }_i \leq \mu _i\). Since \(\log (t) \leq t/e\) (where \(e = \exp (1)\)) for t > 0, we can write using inequalities (11.63), (11.64), and (11.45)

$$\displaystyle \begin{aligned} \hat{B}({\boldsymbol x}_{i+1}; \mu_{i+1}) - \underline{B} &\leq \hat{B}({\boldsymbol x}_{i+1}; \mu_i) - \underline{B} \\ &~~+ \sum_{k=1}^{m} m_k \log(z_k({\boldsymbol x}_{i+1};\mu_i) - \underline{F}) (\mu_i - \mu_{i+1}) \\ & \leq \hat{B}({\boldsymbol x}_{i+1}; \mu_i) - \underline{B} \\ &~~ + e^{-1} \sum_{k=1}^{m} m_k (z_k({\boldsymbol x}_{i+1};\mu_i) - \underline{F})(\mu_i - \mu_{i+1}) \\ & \leq \hat{B}({\boldsymbol x}_{i+1}; \mu_i) - \underline{B} \\ &~~ + 2 e^{-1} \overline{m} (\hat{B}({\boldsymbol x}_{i+1};\mu_i) - \underline{B})(\mu_i - \mu_{i+1}) \\ & = (1 + \lambda \delta_i)(\hat{B}({\boldsymbol x}_{i+1};\mu_i) - \underline{B})\\ &\leq (1 + \lambda \delta_i)(\hat{B}({\boldsymbol x}_i;\mu_i) - \underline{B}), \end{aligned} $$where \(\lambda =2 \overline {m} / e\) and δ i = μ i − μ i+1. Therefore,

$$\displaystyle \begin{aligned} \begin{array}{rcl} \hat{B}({\boldsymbol x}_{i+1}; \mu_{i+1}) - \underline{B} &\displaystyle \leq &\displaystyle \prod_{j=1}^i (1 + \lambda \delta_j) (\hat{B}({\boldsymbol x}_1; \mu_1) - \underline{B}) \\ &\displaystyle \leq &\displaystyle \prod_{i=1}^\infty (1 + \lambda \delta_i) (\hat{B}({\boldsymbol x}_1; \mu_1) - \underline{B}) {} \end{array} \end{aligned} $$(11.65)and since

$$\displaystyle \begin{aligned}\sum_{i=1}^{\infty} \lambda \delta_i = \lambda \sum_{i=1}^{\infty} (\mu_i - \mu_{i+1}) = \lambda (\overline{\mu} - \lim_{i \uparrow \infty} \mu_i) \leq \lambda \overline{\mu}\end{aligned}$$the expression on the right-hand side of (11.65) is finite. Thus, the sequence \(\{\hat {B}({\boldsymbol x}_i;\mu _i)\}\), \(i \in \mathbb {N}\), is bounded from above and the sequences {z(x i;μ i)} and {F(x i)}, \(i \in \mathbb {N}\), are bounded from above as well by (11.63) and (11.45).

-

(c)

Finally, we prove formula (11.62). Using (11.64) and (11.45) we obtain

$$\displaystyle \begin{aligned} \hat{B}({\boldsymbol x}_{i+1}; \mu_{i+1}) - \hat{B}(&{\boldsymbol x}_{i+1}; \mu_i) \\ &\leq \sum_{k=1}^m m_k \log(z_k({\boldsymbol x}_{i+1};\mu_i) - \underline{F})(\mu_i - \mu_{i+1}) \\ & \leq \sum_{k=1}^m m_k \log(F_k({\boldsymbol x}_{i+1}) + m_k \mu_i - \underline{F})(\mu_i - \mu_{i+1}) \\ & \leq \sum_{k=1}^m m_k \log(\overline{F} + m_k \overline{\mu} - \underline{F})(\mu_i - \mu_{i+1})\\ & \stackrel{\varDelta}{=} L (\mu_i - \mu_{i+1}) \end{aligned} $$(the existence of a constant \(\overline {F}\) follows from boundedness of a sequence {F(x i)}, \(i \in \mathbb {N}\)), which together with (11.57) gives \(\hat {B}({\boldsymbol x}_{i+1};\mu _{i+1}) \leq \hat {B}({\boldsymbol x}_i;\mu _i) + L (\mu _i - \mu _{i+1})\), \(i \in \mathbb {N}\). Thus, it holds

$$\displaystyle \begin{aligned} \hat{B}({\boldsymbol x}_i;\mu_i) \leq \hat{B}({\boldsymbol x}_1;\mu_1) + L (\mu_1 - \mu_i) \leq \hat{B}({\boldsymbol x}_1;\mu_1) + L \overline{\mu} \stackrel{\varDelta}{=} \overline{B}, \quad i \in N. {} \end{aligned} $$(11.66)

□

The upper bounds \(\overline {g}\) and \(\overline {G}\) are not used in Lemma 11.2, so Assumption 11.4 may not be satisfied. Thus, there exists an upper bound \(\overline {F}\) (independent of \(\overline {g}\) and \(\overline {G}\)) such that \(F({\boldsymbol x}_i) \leq \overline {F}\) for all \(i \in \mathbb {N}\). This upper bound can be used in definition of a set \({\mathcal {D}}_F(\overline {F})\) in Assumption 11.4.

Lemma 11.3

Let Assumption 11.4and the assumptions of Lemma 11.2be satisfied. Then, if we use Procedure Aor Procedure Bfor an update of parameter μ, the values {μ i}, \(i \in \mathbb {N}\), form a non-decreasing sequence such that μ i ↓ 0.

Proof

The value of parameter μ is unchanged in the first phase of Procedure A or Procedure B. Since a function \(\hat {B}({\boldsymbol x};\mu )\) is continuous, bounded from below by Lemma 11.2, and since inequality (11.56) is satisfied (with μ i = μ), it holds ∥g(x i;μ)∥↓ 0 if phase 1 contains an infinite number of subsequent iterative steps [26, Section 3.2]. Thus, there exists a step (with index i) belonging to the first phase such that either \(\|{\boldsymbol g}({\boldsymbol x}_i;\mu )\| < \underline {g}\) in Procedure A or ∥g(x i;μ)∥2 < 𝜗μ in Procedure B. However, this is in contradiction with the definition of the first phase. Thus, there exists an infinite number of steps belonging to the second phase, where the value of parameter μ is decreased so that μ i ↓ 0. □

Theorem 11.5

Let assumptions of Lemma 11.3be satisfied. Consider a sequence {x i}, \(i \in \mathbb {N}\), generated by Algorithm 11.1, where \( \underline {\delta } = \underline {\varepsilon } = \underline {\mu } = 0\). Then

for 1 ≤ k ≤ m and 1 ≤ l ≤ m k.

Proof

-

(a)

Equalities \(\tilde {{\boldsymbol e}}_k^T {\boldsymbol u}_k({\boldsymbol x}_i;\mu _i) = 1\), 1 ≤ k ≤ m, are satisfied by (11.44) because \( \underline {\delta }=0\). Inequalities z k(x i;μ i) − f kl(x i) ≥ 0 and u kl(x i;μ i) ≥ 0 follow from formulas (11.45) and statement (11.50).

-

(b)

Relations (11.56) and (11.62) yield

$$\displaystyle \begin{aligned} \begin{array}{rcl} \hat{B}({\boldsymbol x}_{i+1};\mu_{i+1}) - \hat{B}({\boldsymbol x}_i;\mu_i) &\displaystyle = &\displaystyle (\hat{B}({\boldsymbol x}_{i+1};\mu_{i+1}) - \hat{B}({\boldsymbol x}_{i+1};\mu_i)) \\ &\displaystyle &\displaystyle + (\hat{B}({\boldsymbol x}_{i+1};\mu_i) - \hat{B}({\boldsymbol x}_i;\mu_i)) \\ &\displaystyle \leq &\displaystyle L \, (\mu_i - \mu_{i+1}) - c \, \|{\boldsymbol g}({\boldsymbol x}_i;\mu_i)\|{}^2 \end{array} \end{aligned} $$and since limi↑∞μ i = 0 (Lemma 11.3), we can write by (11.66) that

$$\displaystyle \begin{aligned} \begin{array}{rcl} \underline{B} &\displaystyle \leq &\displaystyle \lim_{i \uparrow \infty} \hat{B}({\boldsymbol x}_{i+1};\mu_{i+1}) \\ &\displaystyle \leq &\displaystyle \hat{B}({\boldsymbol x}_1;\mu_1) + L \sum_{i=1}^{\infty} (\mu_i - \mu_{i+1}) - c \sum_{i=1}^{\infty} \|{\boldsymbol g}({\boldsymbol x}_i;\mu_i)\|{}^2 \\ &\displaystyle \leq &\displaystyle \hat{B}({\boldsymbol x}_1;\mu_1) + L \overline{\mu} - c \sum_{i=1}^{\infty} \|{\boldsymbol g}({\boldsymbol x}_i;\mu_i)\|{}^2 = \overline{B} - c \sum_{i=1}^{\infty} \|{\boldsymbol g}({\boldsymbol x}_i;\mu_i)\|{}^2. \end{array} \end{aligned} $$Thus, it holds

$$\displaystyle \begin{aligned}\sum_{i=1}^{\infty} \|{\boldsymbol g}({\boldsymbol x}_i;\mu_i)\|{}^2 \leq \frac{1}{c}(\overline{B} - \underline{B}) < \infty,\end{aligned}$$which gives \({\boldsymbol g}({\boldsymbol x}_i;\mu _i) = \sum _{k=1}^m \sum _{l=1}^{m_k} {\boldsymbol g}_{kl}({\boldsymbol x}_i) u_{kl}({\boldsymbol x}_i;\mu _i) \downarrow {\mathbf {0}}\).

-

(c)

Let indices 1 ≤ k ≤ m and 1 ≤ l ≤ m k are chosen arbitrarily. Using (11.50) and Lemma 11.3 we obtain

$$\displaystyle \begin{aligned}u_{kl}({\boldsymbol x}_i;\mu_i) (z_k({\boldsymbol x}_i;\mu_i) - f_{kl}({\boldsymbol x}_i)) = \frac{\mu_i(z_k({\boldsymbol x}_i;\mu_i) - f_{kl}({\boldsymbol x}_i))}{z_k({\boldsymbol x}_i;\mu_i) - f_{kl}({\boldsymbol x}_i)} = \mu_i \downarrow 0.\end{aligned}$$

□

Corollary 11.1

Let the assumptions of Theorem 11.5be satisfied. Then, every cluster point \({\boldsymbol x} \in \mathbb {R}^n\)of a sequence {x i}, \(i \in \mathbb {N}\), satisfies necessary KKT conditions (11.8)–(11.9) wherezandu(with elements z kand u kl, 1 ≤ k ≤ m, 1 ≤ l ≤ m k) are cluster points of sequences {z(x i;μ i)} and {u(x i;μ i)}, \(i \in \mathbb {N}\).

Now we will suppose that the values \( \underline {\delta }\), \( \underline {\varepsilon }\), and \( \underline {\mu }\) are nonzero and show how a precise solution of the system of KKT equations will be after termination of computation.

Theorem 11.6

Let the assumptions of Lemma 11.3be satisfied. Consider a sequence {x i}, \(i \in \mathbb {N}\), generated by Algorithm 11.1. Then, if the values \( \underline {\delta } > 0\), \( \underline {\varepsilon } > 0\), and \( \underline {\mu } > 0\)are chosen arbitrarily, there exists an index i ≥ 1 such that

for 1 ≤ k ≤ m and 1 ≤ l ≤ m k.

Proof

Inequality \(|1 - \tilde {{\boldsymbol e}}_k^T {\boldsymbol u}_k({\boldsymbol x}_i;\mu _i)| \leq \underline {\delta }\) follows immediately from the fact that the equation \(\tilde {{\boldsymbol e}}_k^T {\boldsymbol u}_k({\boldsymbol x}_i;\mu _i) = 1\), 1 ≤ k ≤ m, is solved with precision \( \underline {\delta }\). Inequalities z k(x i;μ i) − f kl(x i) ≥ 0, u kl(x i;μ i) ≥ 0 follow from formulas (11.45) and statement (11.50) as in the proof of Theorem 11.5. Since μ i ↓ 0 and g(x i;μ i) ↓0 by Lemma 11.3 and Theorem 11.5, there exists an index i ≥ 1 such that \(\mu _i \leq \underline {\mu }\) and \(\|{\boldsymbol g}({\boldsymbol x}_i;\mu _i)\| \leq \underline {\varepsilon }\). Using (11.50) we obtain

□

Theorem 11.5 is a standard global convergence result. If the stopping parameters \( \underline {\delta }, \underline {\varepsilon }, \underline {\mu }\) are zero, the sequence of generated points converges to the point satisfying the KKT conditions for the equivalent nonlinear programming problem. Theorem 11.6 determines a precision of the obtained solution if the stopping parameters are nonzero.

2.6 Special Cases

Both the simplest and most widely considered generalized minimax problem is the classical minimax problem (11.10), when m = 1 in (11.4) (in this case we write m, z, u, v, U, V , A instead of m 1, z 1, u 1, v 1, U 1, V 1, A 1). For solving a classical minimax problem one can use Algorithm 11.1, where a major part of computation is very simplified. System of equations (11.38) is of order n + 1 and has the form

where g(x, z) = A(x)u(x, z), \(\gamma ({\boldsymbol x},z) = 1 - \tilde {{\boldsymbol e}}^T {\boldsymbol u}({\boldsymbol x},z)\), \(V({\boldsymbol x},z) = U^2({\boldsymbol x},z) / \mu = \operatorname {\mathrm {diag}} [u_1^2({\boldsymbol x},z), \dots , u_m^2({\boldsymbol x},z)] / \mu \), and u k(x, z) = μ∕(z − f k(x)), 1 ≤ k ≤ m. System of equations (11.44) is reduced to one nonlinear equation

whose solution z(x;μ) lies in the interval F(x) + μ ≤ z(x;μ) ≤ F(x) + mμ. To find this solution by robust methods from [14, 15] is not difficult. A barrier function has the form

with \(\nabla \hat {B}({\boldsymbol x};\mu ) = A({\boldsymbol x}) {\boldsymbol u}({\boldsymbol x};\mu )\) and

If we write system (11.67) in the form

where W(x, z) = G(x, z) + A(x)V (x, z)A T(x), \({\boldsymbol c}({\boldsymbol x},z) = A({\boldsymbol x}) V({\boldsymbol x},z) \tilde {{\boldsymbol e}}\) and \(\delta ({\boldsymbol x},z) = \tilde {{\boldsymbol e}}^T V({\boldsymbol x},z) \tilde {{\boldsymbol e}}\), then

Since

where ω = c TW −1c − δ, we can write

where

The matrix W is sparse if the matrix A(x) has sparse columns. If the matrix W is not positive definite, we can use the Gill–Murray decomposition

where E is a positive semidefinite diagonal matrix. Then we solve equations

and set

If we solve the classical minimax problem, Algorithm 11.1 must be somewhat modified. In Step 2, we solve only Eq. (11.68) instead of the system of equations (11.44). In Step 4, we determine a vector Δx by solving Eq. (11.71) and using relations (11.72). In Step 4, we use the barrier function (11.69) (the nonlinear equation (11.68) must be solved at the point x + αΔx).

Minimization of a sum of absolute values, i.e., minimization of the function (11.14) is another important generalized minimax problem. In this case, a barrier function has the form

where z k > |f k(x)|, 1 ≤ k ≤ m. Differentiating B μ(x, z) with respect to x and z we obtain the necessary conditions for an extremum

and

where g k(x) = ∇f k(x), 1 ≤ k ≤ m, which corresponds to (11.31)–(11.32). Equations in (11.44) are quadratic of the form

where 1 ≤ k ≤ m, and their solutions is given by

(the second solutions of quadratic equations (11.76) do not satisfy the condition z k > |f k(x)|, so the obtained vector z does not belong to a domain of \(\tilde {B}_{\mu }({\boldsymbol z})\)). Using (11.75) and (11.77) we obtain

for 1 ≤ k ≤ m and

Using these expressions, we can write formulas (11.47) and (11.48) in the form

and

where

A vector \(\varDelta {\boldsymbol x} \in \mathbb {R}^n\) is determined by solving the equation

where \({\boldsymbol g}({\boldsymbol x};\mu ) = \nabla \hat {B}({\boldsymbol x};\mu ) \neq 0\). From (11.83) and (11.81) it follows

so if a matrix G(x;μ) is positive definite, a matrix \(\nabla \hat {B}({\boldsymbol x};\mu )\) is positive definite as well (since a diagonal matrix V (x;μ) is positive definite by (11.82)) and (Δx)Tg(x;μ) < 0 holds (a direction vector Δx is descent for a function \(\hat {B}({\boldsymbol x};\mu )\)).

If we minimize a sum of absolute values, Algorithm 11.1 needs to be somewhat modified. In Step 2, we solve quadratic equations (11.76) whose solutions are given by (11.77). In Step 4, we determine a vector Δx by solving Eq. (11.83), where matrix \(\nabla ^2 \hat {B}({\boldsymbol x};\mu )\) is given by (11.83). In Step 4, we use the barrier function (11.79).

3 Smoothing Methods

3.1 Basic Properties

Similarly as in Sect. 11.2.1 we will restrict ourselves to sums of maxima, where a mapping \({\boldsymbol h}: \mathbb {R}^n \to \mathbb {R}^m\) is a sum of its arguments, so (11.4) holds. Smoothing methods for minimization of sums of maxima replace function (11.4) by a smoothing function

where

depending on a smoothing parameter \(0 < \mu \leq \overline {\mu }\), which is successively minimized on \(\mathbb {R}^n\) with μ ↓ 0. Since f kl(x) ≤ F k(x), 1 ≤ l ≤ m k, and the equality arises for at least one index, at least one exponential function on the right-hand side of (11.85) has the value 1, so the logarithm is positive. Thus \(F_k({\boldsymbol x}) \leq S_k({\boldsymbol x};\mu ) \leq F_k({\boldsymbol x}) + \mu \, \log \, m_k\), 1 ≤ k ≤ m, hold. Therefore

so S(x;μ) → F(x) if μ ↓ 0.

Remark 11.15

Similarly as in Sect. 11.2.2 we will denote g kl(x) and G kl(x) the gradients and Hessian matrices of functions f kl(x), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, and

where

Thus, it holds u kl(x;μ) ≥ 0, 1 ≤ k ≤ m, 1 ≤ l ≤ m k, and

Further, we denote \(A_k({\boldsymbol x}) = J_k^T({\boldsymbol x}) = [{\boldsymbol g}_{k1}({\boldsymbol x}), \dots , {\boldsymbol g}_{km_k}({\boldsymbol x})]\) and \(U_k({\boldsymbol x};\mu ) = \operatorname {\mathrm {diag}} [u_{k1}({\boldsymbol x};\mu ), \dots , u_{km_k}({\boldsymbol x};\mu )]\) for 1 ≤ k ≤ m.

Theorem 11.7

Consider the smoothing function (11.84). Then

and

where \({\boldsymbol g}({\boldsymbol x};\mu ) = \sum _{k=1}^m A_k({\boldsymbol x}) {\boldsymbol u}_k({\boldsymbol x};\mu ) = A({\boldsymbol x}) {\boldsymbol u}({\boldsymbol x})\) and

Proof

Obviously,

Differentiating functions (11.85) and using (11.87) we obtain

Adding up these expressions yields (11.89). Further, it holds

Differentiating (11.91) and using (11.92) we obtain

where \( G_k({\boldsymbol x};\mu ) = \sum _{l=1}^{m_k} G_{kl}({\boldsymbol x}) u_{kl}({\boldsymbol x}; \mu )\). Adding up these expressions yields (11.90). □

Remark 11.16

Note that using (11.90) and the Schwarz inequality we obtain

because \(\tilde {{\boldsymbol e}}_k^T U_k({\boldsymbol x};\mu ) \tilde {{\boldsymbol e}}_k = \tilde {{\boldsymbol e}}_k^T {\boldsymbol u}_k({\boldsymbol x};\mu ) = 1\), so the Hessian matrix ∇2S(x;μ) is positive definite if the matrix G(x;μ) is positive definite.

Using Theorem 11.7, a step of the Newton method can be written in the form x + = x + αΔx where

or

where

A matrix W in (11.94) has the same structure as a matrix W in (11.48) and, by Theorem 11.7, smoothing function (11.84) has similar properties as the barrier function (11.46). Thus, one can use an algorithm that is analogous to Algorithm 11.1 and considerations stated in Remark 11.12, where S(x;μ) and ∇2S(x;μ) are used instead of \(\hat {B}({\boldsymbol x}; \mu )\) and \(\nabla ^2 \hat {B}({\boldsymbol x}; \mu )\). It means that

if Assumption 11.4 is satisfied and

in remaining cases.

The considerations up to now are summarized in Algorithm 11.2 introduced in the Appendix. This algorithm differs from Algorithm 11.1 in that a nonlinear equation \(\tilde {{\boldsymbol e}}^T {\boldsymbol u}({\boldsymbol x}; \mu ) = 1\) need not be solved in Step 2 (because (11.88) follows from (11.87)), Eq. (11.93)–(11.94) instead of (11.71)–(11.72) are used in Step 4, and a barrier function \(\hat {B}({\boldsymbol x}; \mu )\) is replaced with a smoothing function S(x;μ) in Step 6. Note that the parameter μ in (11.84) has different meaning than the same parameter in (11.46), so we could use another procedure for its update in Step 7. However, it is becoming apparent that using Procedure A or Procedure B is very efficient. On the other hand, it must be noted that using exponential functions in Algorithm 11.2 has certain disadvantages. Computation of the values of exponential functions is more time consuming than performing standard arithmetic operations and underflow may also happen (i.e. replacing nonzero values by zero values) if the value of a parameter μ is very small.

3.2 Global Convergence

Now we prove the global convergence of the smoothing method realized by Algorithm 11.2.

Lemma 11.4

Choose a fixed vector \({\boldsymbol x} \in \mathbb {R}^n\). Then \(S_k({\boldsymbol x}; \mu ): (0, \infty ) \to \mathbb {R}\), 1 ≤ k ≤ m, are nondecreasing convex functions of μ > 0 and

where \( \underline {m}_k\)is a number of active functions (for which f kl(x) = F k(x)) and

Proof

Denoting φ kl(x;μ) = (f kl(x) − F k(x))∕μ ≤ 0, 1 ≤ k ≤ m, so

we can write by (11.85) that

and

because φ kl(x;μ) ≤ 0, u kl(x;μ) ≥ 0, 1 ≤ k ≤ m, and φ kl(x;μ) = 0 holds for at least one index. Thus, functions S k(x;μ), 1 ≤ k ≤ m, are nondecreasing. Differentiating (11.87) with respect to μ we obtain

Differentiating (11.99) with respect to μ and using Eqs. (11.88) and (11.100) we can write

because

holds by the Schwarz inequality. Thus, functions S k(x;μ), 1 ≤ k ≤ m, are convex, so their derivatives \(\frac {\partial }{\partial \mu } S_k({\boldsymbol x}; \mu )\) are nondecreasing. Obviously, it holds

because φ kl(x;μ) = 0 if f kl(x) = F k(x) and limμ↓0φ kl(x;μ) = −∞, \(\lim _{\mu \downarrow 0} \varphi _{kl}({\boldsymbol x}; \mu ) \exp \varphi _{kl}({\boldsymbol x}; \mu ) = 0\) if f kl(x) < F k(x). Similarly, it holds

because limμ↑∞φ kl(x;μ) = 0 and limμ↑∞|u kl(x;μ)|≤ 1 for 1 ≤ k ≤ m. □

Lemma 11.5

Let Assumptions 11.2and 11.4be satisfied. Then the values μ i, \(i \in \mathbb {N}\), generated by Algorithm 11.2, create a nonincreasing sequence such that μ i ↓ 0.

Proof

Lemma 11.5 is a direct consequence of Lemma 11.3 because the same procedures for an update of a parameter μ are used and (11.95) holds. □

Theorem 11.8

Let the assumptions of Lemma 11.5be satisfied. Consider a sequence {x i} \(i \in \mathbb {N}\), generated by Algorithm 11.2, where \( \underline {\varepsilon } = \underline {\mu } = 0\). Then

and

for 1 ≤ k ≤ m and 1 ≤ l ≤ m k.

Proof

-

(a)

Equations \(\tilde {{\boldsymbol e}}_k^T {\boldsymbol u}_k({\boldsymbol x}_i; \mu _i) = 1\) for 1 ≤ k ≤ m follow from (11.88). Inequalities F k(x i) − f kl(x i) ≥ 0 and u kl(x i;μ i) ≥ 0 for 1 ≤ k ≤ m and 1 ≤ l ≤ m k follow from (11.4) and (11.87).

-

(b)

Since S k(x;μ) are nondecreasing functions of the parameter μ by Lemma 11.4 and (11.95) holds, we can write

$$\displaystyle \begin{aligned} \begin{array}{rcl} \underline{F} &\displaystyle \leq &\displaystyle \sum_{k=1}^m F_k({\boldsymbol x}_{i+1}) \leq S({\boldsymbol x}_{i+1};\mu_{i+1}) \leq S({\boldsymbol x}_{i+1};\mu_i) \\ &\displaystyle \leq &\displaystyle S({\boldsymbol x}_i;\mu_i) - c \|{\boldsymbol g}({\boldsymbol x}_i;\mu_i)\|{}^2 \leq S({\boldsymbol x}_1;\mu_1) - c \sum_{j=1}^i \|{\boldsymbol g}({\boldsymbol x}_j;\mu_j)\|{}^2, \end{array} \end{aligned} $$where \( \underline {F} = \sum _{k=1}^m \underline {F}_k\) and \( \underline {F}_k\), 1 ≤ k ≤ m, are lower bounds from Assumption 11.2. Thus, it holds

$$\displaystyle \begin{aligned}\underline{F} \leq \lim_{i \uparrow \infty} S({\boldsymbol x}_{i+1};\mu_{i+1}) \leq S({\boldsymbol x}_1;\mu_1) - c \sum_{i=1}^{\infty} \|{\boldsymbol g}({\boldsymbol x}_i;\mu_i)\|{}^2,\end{aligned}$$or

$$\displaystyle \begin{aligned}\sum_{i=1}^{\infty} \|{\boldsymbol g}({\boldsymbol x}_i;\mu_i)\|{}^2 \leq \frac{1}{c}(S({\boldsymbol x}_1;\mu_1) - \underline{F}),\end{aligned}$$so ∥g(x i;μ i)∥↓ 0, which together with inequalities 0 ≤ u kl(x i;μ i) ≤ 1, 1 ≤ k ≤ m, 1 ≤ l ≤ m k, gives limi↑∞u kl(x i;μ i)g kl(x i) = 0.

-

(c)

Let indices 1 ≤ k ≤ m and 1 ≤ l ≤ m k be chosen arbitrarily. Using (11.87) we get

$$\displaystyle \begin{aligned} \begin{array}{rcl} 0 &\displaystyle \leq &\displaystyle u_{kl}({\boldsymbol x}_i;\mu_i) (F_k({\boldsymbol x}_i) - f_{kl}({\boldsymbol x}_i)) = -\mu_i \frac{\varphi_{kl}({\boldsymbol x}_i; \mu_i) \exp \varphi_{kl}({\boldsymbol x}_i; \mu_i)}{\sum_{l=1}^{m_k} \exp \varphi_{kl}({\boldsymbol x}_i; \mu_i)} \\ &\displaystyle \leq &\displaystyle -\mu_i \varphi_{kl}({\boldsymbol x}_i; \mu_i) \exp \varphi_{kl}({\boldsymbol x}_i; \mu_i) \leq \frac{\mu_i}{e}, \end{array} \end{aligned} $$where φ kl(x i;μ i), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, are functions used in the proof of Lemma 11.4, because

$$\displaystyle \begin{aligned}\sum_{l=1}^{m_k} \exp \varphi_{kl}({\boldsymbol x}_i; \mu_i) \geq 1\end{aligned}$$and the function \(t \exp t\) attains its minimal value − 1∕e at the point t = −1. Since μ i ↓ 0, we obtain u kl(x i;μ i)(F k(x i) − f kl(x i)) ↓ 0.

□

Corollary 11.2

Let the assumptions of Theorem 11.8be satisfied. Then every cluster point \({\boldsymbol x} \in \mathbb {R}^n\)of a sequence {x i}, \(i \in \mathbb {N}\), satisfies the necessary KKT conditions (11.5) and (11.6), whereu(with elementsu k, 1 ≤ k ≤ m) is a cluster point of a sequence {u(x i;μ i)}, \(i \in \mathbb {N}\).

Now we will suppose that the values \( \underline {\varepsilon }\) and \( \underline {\mu }\) are nonzero and show how a precise solution of the system of KKT equations will be after termination of computation of Algorithm 11.2.

Theorem 11.9

Let the assumptions of Theorem 11.5be satisfied and let {x i}, \(i \in \mathbb {N}\), be a sequence generated by Algorithm 11.2. Then, if the values \( \underline {\varepsilon } > 0\)and \( \underline {\mu } > 0\)are chosen arbitrarily, there exists an index i ≥ 1 such that

and

for all 1 ≤ k ≤ m and 1 ≤ l ≤ m k.

Proof

Equalities \(\tilde {{\boldsymbol e}}_k^T {\boldsymbol u}_k({\boldsymbol x}_i; \mu _i) = 1\), 1 ≤ k ≤ m, follow from (11.88). Inequalities F k(x i) − f kl(x i) ≥ 0 and u kl(x i;μ i) ≥ 0, 1 ≤ k ≤ m, 1 ≤ l ≤ m k, follow from (11.10) and (11.87). Since μ i ↓ 0 holds by Lemma 11.5 and ∥g(x i;μ i)∥↓ 0 holds by Theorem 11.8, there exists an index i ≥ 1 such that \(\mu _i \leq \underline {\mu }\) and \(\|{\boldsymbol g}({\boldsymbol x}_i;\mu _i)\| \leq \underline {\varepsilon }\). By (11.87), as in the proof of Theorem 11.8, one can write

for 1 ≤ k ≤ m and 1 ≤ l ≤ m k. □

Theorems 11.8 and 11.9 have the same meaning as Theorems 11.5 and 11.6 introduced in Sect. 11.2.5.

3.3 Special Cases

Both the simplest and most widely considered generalized minimax problem is the classical minimax problem (11.10), when m = 1 in (11.4) (in this case we write m and z instead of m 1 and z 1). For solving a classical minimax problem one can use Algorithm 11.2, where a major part of computation is very simplified. A step of the Newton method can be written in the form x + = x + αΔx where

or

where

Since

holds by the Sherman–Morrison formula, the solution of system of equations (11.101) can be written in the form

If a matrix W is not positive definite, it may be replaced with a matrix LL T = W + E obtained by the Gill–Murray decomposition described in [10]. Then, we solve an equation

and set

Minimization of a sum of absolute values, i.e., minimization of the function (11.14) is another important generalized minimax problem. In this case, a smoothing function has the form

because \(f_k^+({\boldsymbol x}) = |f_k({\boldsymbol x})|\) if f k(x) ≥ 0 and \(f_k^-({\boldsymbol x}) = |f_k({\boldsymbol x})|\) if f k(x) ≤ 0, and by Theorem 11.7 we have

(because \(u_k^+ + u_k^- = 1\)), where g k = g k(x),

and

and where sign(f k(x)) is a sign of a function f k(x).

4 Primal-Dual Interior Point Methods

4.1 Basic Properties

Primal interior point methods for solving nonlinear programming problems profit from the simplicity of obtaining and keeping a point in the interior of the feasible set (for generalized minimax problems, it suffices to set z k > F k(x), 1 ≤ k ≤ m). Minimization of a barrier function without constraints and a direct computation of multipliers u kl, 1 ≤ k ≤ m, 1 ≤ l ≤ m k, are basic features of these methods. Primal-dual interior point methods are intended for solving general nonlinear programming problems, where it is usually impossible to assure validity of constraints. These methods guarantee feasibility of points by adding slack variables, which appear in a barrier term added to the objective function. Positivity of the slack variables is assured algorithmically (by a step length selection). Minimization of a barrier function with equality constraints and an iterative computation of the Lagrange multipliers (dual variables) are the main features of primal-dual interior point methods.

Consider function (11.4). As is mentioned in the introduction, minimization of this function is equivalent to the nonlinear programming problem

Using slack variables s kl > 0, 1 ≤ k ≤ m, 1 ≤ l ≤ m k, and a barrier function

a solving of the problem (11.106) can be transformed to a successive solving of problems

where μ ↓ 0. Necessary conditions for an extremum of the problem (11.108) have the form

which is \(n + m + 2 \bar {m}\) equations for \(n + m + 2 \bar {m}\) unknowns (vectors x, z = [z k], s = [s kl], u = [u kl], 1 ≤ k ≤ m, 1 ≤ l ≤ m k), where \(\bar {m} = m_1 + \dots + m_m\). Denote A(x) = [A 1(x), …, A m(x)], f = [f kl], \(S = \operatorname {\mathrm {diag}}[s_{kl}]\), \(U = \operatorname {\mathrm {diag}}[u_{kl}]\), 1 ≤ k ≤ m, 1 ≤ l ≤ m k, and

(matrices A k(x), vectors \(\tilde {{\boldsymbol e}}_k\), and numbers z k, 1 ≤ k ≤ m, are defined in Sect. 11.2.2). Applying the Newton method to this system of nonlinear equations, we obtain a system of linear equations for increments (direction vectors) Δx, Δz, Δs, Δu. After arrangement and elimination

this system has the form

where \(G({\boldsymbol x},{\boldsymbol u}) = \sum _{k=1}^m \sum _{l=1}^{m_k} G_{kl}({\boldsymbol x}) u_{kl}\). Vector \(\tilde {{\boldsymbol e}}\) in the equation \(\tilde {{\boldsymbol e}} - E^T {\boldsymbol u} = {\mathbf {0}}\) has unit elements, but its dimension is different from the dimension of a vector \(\tilde {{\boldsymbol e}}\) in (11.109).

For solving this linear system, we cannot advantageously use the structure of a generalized minimax problem (because substituting \(z_k = F_k({\boldsymbol x}) = \max _{1 \leq l \leq m_k} f_{kl}({\boldsymbol x})\) we would obtain a nonsmooth problem whose solution is much more difficult). Therefore, we need to deal with a general nonlinear programming problem. To simplify subsequent considerations, we use the notation \(\tilde {{\boldsymbol x}} = [{\boldsymbol x}^T,{\boldsymbol z}^T]^T\),

and write (11.110) in the form

where \({\boldsymbol c}(\tilde {{\boldsymbol x}}) = {{\boldsymbol f}}({\boldsymbol x}) - E {\boldsymbol z}\). This system of equations is more advantageous against systems (11.49) and (11.93) in that its matrix does not depend on the barrier parameter μ, so it is not necessary to use a lower bound \( \underline {\mu }\). On the other hand, system (11.112) has a dimension \(n + m + \bar {m}\), while systems (11.49) and (11.93) have dimensions n. It would be possible to eliminate the vector Δu, so the resulting system

where M = U −1S, would have dimension n + m (i.e., n + 1 for classical minimax problems). Nevertheless, as follows from the equation u kls kl = μ, either u kl ↓ 0 or s kl ↓ 0 if μ ↓ 0, so some elements of a matrix M −1 may tend to infinity, which increases the condition number of system (11.113). Conversely, the solution of Eq. (11.112) is easier if the elements of a matrix M are small (if M = 0, we obtain the saddle point system, which can be solved by efficient iterative methods [1, 18]). Therefore, it is advantageous to split the constraints to active with \(s_{kl} \leq \tilde {\varepsilon } u_{kl}\) (we denote active quantities by \(\hat {{\boldsymbol c}}(\tilde {{\boldsymbol x}})\), \(\hat {A}(\tilde {{\boldsymbol x}})\), \(\hat {{\boldsymbol s}}\), \(\varDelta \hat {{\boldsymbol s}}\), \(\hat {S}\), \(\hat {{\boldsymbol u}}\), \(\varDelta \hat {{\boldsymbol u}}\), \(\hat {U}\), \(\hat {M} = \hat {U}^{-1} \hat {S}\)) and inactive with \(s_{kl} > \tilde {\varepsilon } u_{kl}\) (we denote inactive quantities by \(\check {{\boldsymbol c}}(\tilde {{\boldsymbol x}})\), \(\check {A}(\tilde {{\boldsymbol x}})\), \(\check {{\boldsymbol s}}\), \(\varDelta \check {{\boldsymbol s}}\), \(\check {S}\), \(\check {{\boldsymbol u}}\), \(\varDelta \check {{\boldsymbol u}}\), \(\check {U}\), \(\check {M} = \check {U}^{-1} \check {S}\)). Eliminating inactive equations from (11.112) we obtain

and

where

and \(\hat {M} = \hat {U}^{-1} \hat {S}\) is a diagonal matrix of order \(\hat {m}\), where \(0 \leq \hat {m} \leq \bar {m}\) is the number of active constraints. Substituting (11.114) into (11.109) we can write

The matrix of the linear system (11.115) is symmetric, but indefinite, so its Choleski decomposition cannot be determined. In this case, we use either dense [3] or sparse [6] Bunch–Parlett decomposition for solving this system. System (11.115) (especially if it is large and sparse) can be efficiently solved by iterative conjugate gradient method with indefinite preconditioner [20]. If the vectors \(\varDelta \tilde {{\boldsymbol x}}\) and \(\varDelta \hat {{\boldsymbol u}}\) are solutions of system (11.115), we determine vector \(\varDelta \check {{\boldsymbol u}}\) by (11.114) and vectors \(\varDelta \hat {{\boldsymbol s}}\), \(\varDelta \check {{\boldsymbol s}}\) by (11.116).

Having vectors \(\varDelta \tilde {{\boldsymbol x}}\), Δs, Δu, we need to determine a step length α > 0 and set

where s(α) and u(α) are vector functions such that s(α) > 0, s′(0) = Δs and u(α) > 0, u′(0) = Δu. This step is not trivial, because we need to decrease both the value of the barrier function \(\tilde {B}_{\mu }(\tilde {{\boldsymbol x}},{\boldsymbol s}) = B_{\mu }({\boldsymbol x},{\boldsymbol z},{\boldsymbol s})\) and the norm of constraints \(\|{\boldsymbol c}(\tilde {{\boldsymbol x}})\|\), and also to assure positivity of vectors s and u. We can do this in several different ways: using either the augmented Lagrange function [20, 21] or a bi-criterial filter [9, 29] or a special algorithm [11, 16]. In this section, we confine our attention to the augmented Lagrange function which has (for the problem (11.106)) the form

where σ ≥ 0 is a penalty parameter. The following theorem, whose proof is given in [20], holds.

Theorem 11.10

Lets > 0,u > 0 and let vectors \(\varDelta \tilde {{\boldsymbol x}}\), \(\varDelta \hat {{\boldsymbol u}}\)be solutions of the linear system

whererand \(\hat {{\boldsymbol r}}\)are residual vectors, and let vectors \(\varDelta \check {{\boldsymbol u}}\)and Δsbe determined by (11.114) and (11.116). Then

If

and if system (11.115) is solved in such a way that

then P′(0) < 0.

Inequality (11.122) is significant only if linear system (11.115) is solved iteratively and residual vectors r and \(\hat {{\boldsymbol r}}\) are nonzero. If these vectors are zero, then (11.122) follows immediately from (11.121). Inequality (11.121) serves for determination of a penalty parameter, which should be as small as possible. If the matrix \(\tilde {G}(\tilde {{\boldsymbol x}},{\boldsymbol u})\) is positive semidefinite, then the right-hand side of (11.121) is negative and we can choose σ = 0.

4.2 Implementation

The algorithm of the primal-dual interior point method consists of four basic parts: determination of the matrix G(x, u) or its approximation, solving linear system (11.115), a step length selection, and an update of the barrier parameter μ. The matrix G(x, u) has form (11.33), so its approximation can be determined in the one of the ways introduced in Remark 11.13.

The linear system (11.115), obtained by determination and subsequent elimination of inactive constraints in the way described in the previous subsection, is solved either directly using the Bunch–Parlett decomposition or iteratively by the conjugate gradient method with the indefinite preconditioner

where \(\hat {D}\) is a positive definite diagonal matrix that approximates matrix \(\hat {G}(\tilde {{\boldsymbol x}},{\boldsymbol u})\). An iterative process is terminated if residual vectors satisfy conditions (11.122) and

where 0 < ω < 1 is a prescribed precision. The directional derivative P′(0) given by (11.118) should be negative. There are two possibilities how this requirement can be achieved. We either determine the value σ ≥ 0 satisfying inequality (11.121), which implies P′(0) < 0 if (11.122) holds (Theorem 11.10), or set σ = 0 and ignore inequality (11.122). If P′(0) ≥ 0, we determine a diagonal matrix \(\tilde {D}\) with elements

for 1 ≤ j ≤ n + m, where \(\tilde {{\boldsymbol g}} = \tilde {{\boldsymbol g}}(\tilde {{\boldsymbol x}},{\boldsymbol u})\) and \(0 < \underline {\varGamma } < \overline {\varGamma }\), set \(\tilde {G}(\tilde {{\boldsymbol x}},{\boldsymbol u}) = \tilde {D}\) and restart the iterative process by solving new linear system (11.115).

We use functions s(α) = [s kl(α)], u(α) = [u kl(α)], where \(s_{kl}(\alpha ) = s_{kl} + \alpha _{s_{kl}} \varDelta s_{kl}\), \(u_{kl}(\alpha ) = u_{kl} + \alpha _{u_{kl}} \varDelta u_{kl}\) and

when choosing a step length using the augmented Lagrange function. A parameter 0 < γ < 1 (usually γ = 0.99) assures the positivity of vectors s + and u + in (11.117). A parameter α > 0 is chosen to satisfy the inequality P(α) − P(0) ≤ ε 1αP′(0), which is possible because P′(0) < 0 and a function P(α) is continuous.

After finishing the iterative step, a barrier parameter μ should be updated. There exist several heuristic procedures for this purpose. The following procedure proposed in [28] seems to be very efficient.

Procedure C

-

Compute the centrality measure

$$\displaystyle \begin{aligned}\varrho = \frac{\bar{m} \, \min_{kl}\{s_{kl} u_{kl}\}}{{\boldsymbol s}^T {\boldsymbol u}},\end{aligned}$$where \(\bar {m} = m_1 + \dots + m_m\) and 1 ≤ k ≤ m, 1 ≤ l ≤ m k. Compute the value

$$\displaystyle \begin{aligned}\lambda = 0.1 \min\left\{0.05 \frac{1 - \varrho}{\varrho}, 2\right\}^3\end{aligned}$$and set \(\mu = \lambda {\boldsymbol s}^T {\boldsymbol u} / \bar {m}\).

The considerations up to now are summarized in Algorithm 11.3 introduced in the Appendix.

5 Numerical Experiments

The methods studied in this contribution were tested by using two collections of test problems TEST14 and TEST15 described in [19], which are the parts of the UFO system [24] and can be downloaded from the web-page www.cs.cas.cz/luksan/test.html. Both these collections contain 22 problems with functions f k(x), 1 ≤ k ≤ m, \({\boldsymbol x} \in \mathbb {R}^n\), where n is an input parameter and m ≥ n depends on n (we have used the values n = 100 and n = 1000 for numerical experiments). Functions f k(x), 1 ≤ k ≤ m, have a sparse structure (the Jacobian matrix of a mapping f(x) is sparse), so sparse matrix decompositions can be used for solving linear equation systems.

The tested methods, whose results are reported in Tables 11.1, 11.2, 11.3, 11.4, and 11.5 introduced in the Appendix, are denoted by five letters. The first pair of letters gives the problem type: either a classical minimax MX (when a function F(x) has form (11.10) or F(x) = ∥f(x)∥∞ holds) or a sum of absolute values SA (when F(x) = ∥f(x)∥1 holds). Further two letters specify the method used:

- PI :

-

–the primal interior point method (Sect. 11.2),

Table 11.1 TEST14 (minimization of maxima) — 22 problems Table 11.2 TEST14 (L ∞-approximation) — 22 problems Table 11.3 TEST15 (L ∞-approximation) — 22 problems Table 11.4 TEST14 (L 1-approximation) — 22 problems Table 11.5 TEST15 (L 1-approximation) — 22 problems - SM :

-

–the smoothing method (Sect. 11.3),

- DI :

-

–the primal-dual interior point method (Sect. 11.4).

The last letter denotes the procedure for updating a barrier parameter μ (procedures A and B are described in Sect. 11.2.4 and procedure C is described in Sect. 11.4.2).

The columns of all tables correspond to two classes of methods. The Newton methods use approximations of the Hessian matrices of the Lagrange function obtained by gradient differences [4] and variable metric methods update approximations of the Hessian matrices of the partial functions by the methods belonging to the Broyden family [12] (Remark 11.13).

The tables contain total numbers of iterations NIT, function evaluations NFV, gradient evaluations NFG, and also the total computational time, the number of problems with the value \(\overline {\varDelta }\) decreased and the number of failures (the number of unsolved problems). The decrease of the maximum step length \(\overline {\varDelta }\) is used for three reasons. First, too large steps may lead to overflows if arguments of functions (roots, logarithms, exponentials) lie outside of their definition domain. Second, the change of \(\overline {\varDelta }\) can affect the finding of a suitable (usually global) minimum. Finally, it can prevent from achieving a domain in which the objective function has bad behavior leading to a loss of convergence. The number of times the step length has decreased is in some sense a symptom of robustness (a lower number corresponds to higher robustness).

Several conclusions can be done from the results stated in these tables.

-

The smoothing methods are less efficient than the primal interior point methods. For testing the smoothing methods, we had to use the value \( \underline {\mu } = 10^{-6}\), while the primal interior methods work well with the smaller value \( \underline {\mu } = 10^{-8}\), which gives more precise results.

-

The primal-dual interior point methods are slower than the primal interior point methods, especially for the reason that system of equations (11.115) is indefinite, so we cannot use the Choleski (or the Gill–Murray [10]) decomposition. If the matrix of linear system (11.115) is large and sparse, we can use the Bunch–Parlett decomposition [6]. In this case, a large fill-in of new nonzero elements (and thus to overflow of the operational memory or large extension of the computational time) may appear. In this case, we can also use the iterative conjugate gradient method with an indefinite preconditioner [18], however, the ill-conditioned systems can require a large number of iterations and thus a large computational time.

-

It cannot be uniquely decided whether Procedure A is better than Procedure B. The Newton methods usually work better with Procedure A while the variable metric methods are more efficient with Procedure B.

-

The variable metric methods are usually faster because it is not necessary to determine the elements of the Hessian matrix of the Lagrange function by gradient differences. The Newton methods seem to be more robust (especially in case of L 1-approximation).

References

Benzi, M., Golub, G.H., Liesen, J.: Numerical solution of saddle point problems. Acta Numer. 14, 1–137 (2005)

Björck, A.: Numerical Methods in Matrix Computations. Springer, New York (2015)

Bunch, J.R., Parlett, B.N.: Direct methods for solving symmetric indefinite systems of linear equations. SIAM J. Numer. Anal. 8, 639–655 (1971)

Coleman, T.F., Moré, J.J.: Estimation of sparse Hessian matrices and graph coloring problems. Math. Program. 28, 243–270 (1984)

Di Pillo, G., Grippo, L., Lucidi, S.: Smooth Transformation of the generalized minimax problem. J. Optim. Theory Appl. 95, 1–24 (1997)

Duff, I.S., Gould, N.I.M., Reid, J.K., Turner, K.: The factorization of sparse symmetric indefinite metrices. IMA J. Numer. Anal. 11, 181–204 (1991)

Fiedler, M.: Special Matrices and Their Applications in Numerical Mathematics. Dover, New York (2008)

Fletcher, R.: Practical Methods of Optimization. Wiley, New York (1987)

Fletcher, R., Leyffer, S.: Nonlinear programming without a penalty function. Math. Program. 91, 239–269 (2002)

Gill, P.E., Murray, W.: Newton type methods for unconstrained and linearly constrained optimization. Math. Program. 7, 311–350 (1974)

Gould, N.I.M., Toint, P.L.: Nonlinear programming without a penalty function or a filter. Math. Program. 122, 155–196 (2010)

Griewank, A., Toint, P.L.: Partitioned variable metric updates for large-scale structured optimization problems. Numer. Math. 39, 119–137 (1982)

Griewank, A., Walther, A.: Evaluating Derivatives. SIAM, Philadelphia (2008)

Le, D.: Three new rapidly convergent algorithms for finding a zero of a function. SIAM J. Sci. Stat. Comput. 6, 193–208 (1985)

Le, D.: An efficient derivative-free method for solving nonlinear equations. ACM Trans. Math. Softw. 11, 250–262 (1985)

Liu, X., Yuan, Y.: A sequential quadratic programming method without a penalty function or a filter for nonlinear equality constrained optimization. SIAM J. Optim. 21, 545–571 (2011)

Lukšan, L., Spedicato, E.: Variable metric methods for unconstrained optimization and nonlinear least squares. J. Comput. Appl. Math. 124, 61–93 (2000)

Lukšan, L., Vlček, J.: Indefinitely preconditioned inexact Newton method for large sparse equality constrained nonlinear programming problems. Numer. Linear Algebra Appl. 5, 219–247 (1998)

Lukšan, L., Vlček, J.: Sparse and partially separable test problems for unconstrained and equality constrained optimization. Technical Report V-767, Prague, ICS AS CR (1998)

Lukšan, L., Matonoha, C., Vlček, J.: Interior-point method for non-linear non-convex optimization. Numer. Linear Algebra Appl. 11, 431–453 (2004)

Lukšan, L., Matonoha, C., Vlček, J.: Interior-point method for large-scale nonlinear programming. Optim. Methods Softw. 20, 569–582 (2005)

Lukšan, L., Matonoha, C., Vlček, J.: Primal interior-point method for large sparse minimax optimization. Kybernetika 45, 841–864 (2009)

Lukšan, L., Matonoha, C., Vlček, J.: Primal interior-point method for minimization of generalized minimax functions. Kybernetika 46, 697–721 (2010)

Lukšan, L., Tůma, M., Matonoha, C., Vlček, J., Ramešová, N., Šiška, M., Hartman, J.: UFO 2017. Interactive System for Universal Functional Optimization. Technical Report V-1252, Prague, ICS AS CR (2017)

Mäkelä, M.M., Neittaanmäki, P.: Nonsmooth Optimization. World Scientific, London (1992)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (2006)

Tůma, M.: A note on direct methods for approximations of sparse Hessian matrices. Appl. Math. 33, 171–176 (1988)

Vanderbei, J., Shanno, D.F.: An interior point algorithm for nonconvex nonlinear programming. Comput. Optim. Appl. 13, 231–252 (1999)

Wächter, A., Biegler, L.: Line search filter methods for nonlinear programming. Motivation and global convergence. SIAM J. Comput. 16, 1–31 (2005)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix

Appendix

Algorithm 11.1: Primal interior point method

________________________

- Data::

-