Abstract

A system of cooperative unmanned aerial vehicles (UAVs) is a group of agents that interact with each other and with the surrounding environment to achieve a specific task. Compared with a single UAV, UAV swarms are expected to offer greater efficiency, flexibility, accuracy, robustness, and reliability. However, their reliance on external communication potentially exposes them to an additional layer of faults, failures, uncertainties, and cyberattacks, and can propagate errors from one component of the network to others. Other challenges in decision and control, such as the complex nonlinear dynamics of UAVs, collision avoidance, velocity matching, and cohesion, must also be addressed adequately. The main applications of cooperative UAVs include border patrol, search and rescue, surveillance, mapping, and military operations. In this paper, emerging topics in the field of cooperative UAV control and their associated practical approaches are reviewed.




Abbreviations

- CCUAVs: Cooperative Control Unmanned Aerial Vehicles
- CLSF: Constrained Local Submap Filter
- CML: Concurrent Mapping and Localization
- DDDAS: Dynamic Data-Driven Application System
- DDF: Decentralized Data Fusion
- DI: Dynamic Inversion
- DoS: Denial of Service
- ECM: Electronic Counter-Measure
- EJ: Escort Jamming
- FDI: Fault Detection and Identification
- FTC: Fault Tolerant Controllers
- GNN: Grossberg Neural Network
- LIDAR: Light Detection and Ranging
- LOS: Line of Sight
- LQR: Linear Quadratic Regulator
- PC: Probability Collective
- PDF: Probability Density Function
- POMDP: Partially Observable Markov Decision Process
- PN: Proportional Navigation
- PP: Pure Pursuit
- PRS: Personal Remote Sensing
- ROS: Robot Operating System
- SAM: Surface-to-Air Missile
- SLAM: Simultaneous Localization and Mapping
- SWEEP: Swarm Experimentation and Evaluation Platform
- TDS: Time Delay Switch
- UAV: Unmanned Aerial Vehicle
- UCAVs: Unmanned Combat Air Vehicles
- WSN: Wireless Sensor Network

1 Introduction

Swarm intelligence deals with natural and artificial systems composed of entities that interact with each other and with their environment, coordinating through incentives or a predefined control algorithm. The flocking of birds, swarming of insects, shoaling of fish, and herding of quadrupeds have motivated the cooperative control of UAVs. A group of UAVs can be modeled on natural animal cooperation, in which individuals operate as a system to obtain mutual benefits. Animals benefit from swarm behavior in defending against predators, seeking food, navigating, and saving energy. Cooperative multi-robot systems complete tasks in a shorter time [1], exhibit synergy [2, 3], and cover a larger area. They can also be more cost-effective because they use smaller, simpler, and more durable robots [4]. Furthermore, they can complete a task more accurately and robustly [5].

Cooperative control is one of the most attractive topics in the field of control systems and has received the attention of many researchers. Cooperative algorithms and their uses have mainly been studied in the last decade. Many useful surveys have reviewed recent contributions in this field [6,7,8,9,10,11]; however, most of them focus only on algorithms and on consensus control theory. Ren et al. [6] provided a valuable review of the consensus control problem, but significant contributions have been made since then. Anderson et al. [7] also focused on consensus control of multi-agent systems. Wang et al. [8] and Zhu et al. [10] reviewed most consensus control problems but did not discuss other cooperative techniques or the applications of these algorithms. Senanayake et al. investigated cooperative algorithms for search and tracking applications [11] but did not consider other algorithms and applications of cooperative systems.

To complement the literature mentioned above and to cover most applications and algorithms, recent research on cooperative control design is reviewed here, with attention to applications, algorithms, and challenges. The applications are categorized into surveillance, search and rescue, mapping, and military applications, and recent developments in each category are reviewed. Similarly, the algorithms are categorized into three main classes: consensus control, flocking control, and guidance-based cooperative control. The challenges related to cooperative control and to the application of cooperative algorithms are investigated in a separate section. Moreover, the mathematics of cooperative control algorithms is presented in simplified form to make the concepts easier to understand.

This paper is organized as follows: Sect. 10.2 provides potential applications of cooperative control, and Sect. 10.3 highlights possible challenges when applying cooperative control. Section 10.4 reviews algorithms used in cooperative control design. Finally, Sect. 10.5 provides the summary and conclusion of this work.

2 Applications and Literature Review

Cooperative control of multiple unmanned vehicles is a topic in control that has received increasing interest in the past several years. Single UAVs have been used in various applications, and recently, researchers have attempted to extend and improve these applications by using teams of multiple agents. The multi-agent concept has been used for search and rescue [2, 12,13,14,15,16], geographic mapping [17,18,19,20], military applications [21,22,23], and other tasks. In this section, current and potential applications of the cooperative control of UAVs are surveyed.

2.1 Search and Rescue

UAVs have been used for search and rescue for several years because they are more compact and cost-effective and require less time to deploy than a plane or helicopter, particularly when multiple vehicles are needed to accomplish the task. Figure 10.1 displays a scenario in which cooperative quadrotors search for and rescue a patient or missing person in a hard-to-access environment. In this kind of operation, search time is the most critical factor. To satisfy the time constraint, Scherer et al. implemented a distributed control system for multiple multi-copters in the Robot Operating System (ROS) that captures the situation and displays it as real-time video streams at base stations [24]. Because UAVs offer agility, speed, remote operation, and a bird's-eye view, they can perform such practical work promptly. When these capabilities are coordinated by a cooperative control algorithm to complete a mission, the requirement of minimal time delay and other critical constraints in searching for and rescuing casualties or victims can be met.

A cooperative approach to search for a victim in a hard to access area [25]

Much research and experimentation in search and rescue has required cooperative control unmanned aerial vehicles (CCUAVs). For example, Waharte et al. showed that employing multiple autonomous UAVs offers substantial benefits in search and rescue operations, such as those following Hurricane Katrina (2005). A notable aspect of their work was the division of real-time search approaches into three main categories: greedy heuristics, potential-based heuristics, and partially observable Markov decision process (POMDP)-based heuristics [25]. For fire scenarios, Maza et al. investigated a multi-UAV firefighter-monitoring mission within the framework of the AWARE Project. They used two autonomous helicopters to monitor, in real time, the performance and safety of firefighters assisting injured people in front of a burning building in a simulated situation. This work was based on the SIT algorithm from their previous work [26], which follows a market-based approach, combined with a network of ground cameras and a wireless sensor network (WSN) [27]. Another scenario in which CCUAVs can be highly beneficial is searching for and rescuing missing persons in the wilderness. For centuries, travelers have been lost in wildernesses such as mountains, oceans, deserts, jungles, rain forests, and other abandoned or uninhabited areas. Some missing people have been found and rescued, but many have never been found. Goodrich et al. identified a set of operational practices for using mini unmanned aerial vehicles (mUAVs) to support wilderness search and rescue (WiSAR) operations. In their work, operational paradigms such as sequential, remote-led, and base-led operations were used to gather and analyze evidence or potential signs of a lost person in order to build a stochastic model of the person's behavior and a geographic description of the particular region.
If the model matches a specific victim well, the location of the missing person can be estimated from the probability that the person is in each area [12]. Their results show that mUAVs can address limitations of human-crewed aircraft, which also supports research on CCUAVs.
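The probability-based location estimate described above can be illustrated with a greedy search over a probability map, one of the heuristic families that Waharte et al. list. The sketch below is a generic illustration, not the algorithm of either group. It assumes a hypothetical one-dimensional grid of cells, each with a prior probability that the missing person is there, and a single hypothetical detection probability `p_detect` per sweep. After each unsuccessful search, Bayes' rule lowers the probability of the searched cell.

```python
import numpy as np


def bayes_update_miss(belief, cell, p_detect):
    """Bayesian update of a containment map after an unsuccessful search of `cell`.

    P(miss | target in cell) = 1 - p_detect and P(miss | target elsewhere) = 1,
    so only the searched cell's probability is scaled down before renormalizing.
    """
    likelihood = np.ones_like(belief)
    likelihood[cell] = 1.0 - p_detect
    posterior = likelihood * belief
    return posterior / posterior.sum()


def greedy_search(prior, target_cell, p_detect=0.8, max_steps=50, seed=0):
    """Greedy heuristic: always search the cell with the highest current probability.

    `target_cell` is used only to simulate the sensor. Returns the number of
    sweeps until detection, or None if the target is not found in `max_steps`.
    """
    rng = np.random.default_rng(seed)
    belief = np.asarray(prior, dtype=float)
    belief = belief / belief.sum()
    for step in range(1, max_steps + 1):
        cell = int(np.argmax(belief))
        # Simulated sensor sweep: detects the target with probability p_detect.
        if cell == target_cell and rng.random() < p_detect:
            return step
        belief = bayes_update_miss(belief, cell, p_detect)
    return None


# Hypothetical prior over four map cells. With a perfect sensor, the greedy search
# checks cell 1 (probability 0.4) first and then cell 2.
prior = [0.1, 0.4, 0.3, 0.2]
print(greedy_search(prior, target_cell=2, p_detect=1.0))  # 2
```

With several UAVs, a simple cooperative variant could assign each vehicle a different high-probability cell at every step and share the resulting belief updates. That extension is not shown.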

2.2 Surveillance

Surveillance is one of the most widely used applications of UAVs. Figure 10.2 shows an overall scheme of surveillance using a cooperative quadrotor system. Beard et al. studied aerial surveillance with multiple fixed-wing UAVs. Fixed-wing aircraft may have a significant advantage in speed; however, their inability to hover increases the chance of collision when they operate under cooperative control. To address this constraint, Beard et al. presented an approach consisting of four main steps: defining the cooperation objective and constraints, defining the coordination variable and coordination function, designing a centralized cooperation scheme, and building consensus [28].

Ahmadzadeh et al. [16] studied the cooperative motion-planning problem for a group of heterogeneous UAVs. In their work, surveillance was carried out with body-fixed cameras mounted on fixed-wing UAVs, and they demonstrated multi-UAV cooperative surveillance with spatiotemporal specifications [16]. They also used an integer programming strategy to reduce the computational effort. The main contribution of their study was generating appropriate trajectories that account for the complexity of coupling the cameras' fields of view with the flight paths. Paley et al. designed a glider coordinated control system for long-duration ocean sampling using real-time feedback control [23]. In their design, agents were modeled as Newtonian particles steered along a set of coordinated trajectories. However, this model cannot be applied to close flocking because it assumes sufficient spacing between particles.

For persistent surveillance, Nigam et al. have studied UAVs extensively and have published a series of works in recent years. Their early efforts investigated techniques for high-level, scalable, reliable, efficient, and robust control of multiple UAVs [14] and derived an optimal policy for a single UAV [29]. They also suggested that modifying existing control policies would improve system performance under dynamic constraints. They then proposed a multi-agent reactive policy to coordinate multiple UAVs and optimized its performance with a real-encoded probability collective (PC) optimization framework. In later work, Nigam et al. developed algorithms to control multiple UAVs for persistent surveillance and devised a semi-heuristic approach for surveillance tasks with multiple UAVs [15]. Their research considered the effect of aircraft dynamics on the performance of the cooperative mission. They demonstrated the advantages of their policy by comparing it with benchmark approaches such as a potential-field-like approach, a planning-based approach, and the optimal approach. Paley and Peterson extended their earlier ocean-sampling research [23] to environmental monitoring and surveillance [30]. Each UAV was modeled as a Newtonian particle under a gyroscopic steering control. This design has several drawbacks. First, the Newtonian-particle method does not consider obstacle avoidance. Second, all UAVs move in the same direction, which is inflexible for surveillance and search tasks. Third, each UAV orbits an inertially fixed point at a constant radius, which is not energy efficient for monitoring and surveillance.
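The Newtonian-particle model used in this line of work is commonly written in the plane as \(\dot{r}_k=e^{i\theta_k}\), \(\dot{\theta}_k=u_k\). Each particle moves at unit speed, and the steering input \(u_k\) acts as a gyroscopic force that turns the velocity without changing the speed.

The sketch below uses hypothetical parameter values. It integrates one particle under the constant steering control \(u=\omega_0\) and checks that the particle circles a fixed center at radius \(1/|\omega_0|\), which is the constant-radius orbiting behavior noted above. It illustrates the particle model only and is not Paley and Peterson's full controller.

```python
import numpy as np


def simulate_particle(r0, theta0, omega0, dt=1e-3, steps=20000):
    """Integrate a unit-speed planar particle with constant steering u = omega0.

    Model in complex notation: dr/dt = exp(i*theta), dtheta/dt = u.
    Uses forward-Euler integration and returns the trajectory as a complex array.
    """
    r = complex(r0)
    theta = float(theta0)
    traj = np.empty(steps + 1, dtype=complex)
    traj[0] = r
    for k in range(steps):
        r += dt * np.exp(1j * theta)  # move at unit speed along the current heading
        theta += dt * omega0          # gyroscopic steering changes heading, not speed
        traj[k + 1] = r
    return traj


# Hypothetical parameters: start at the origin heading east, turn rate 0.5 rad/s.
omega0 = 0.5
traj = simulate_particle(0.0, 0.0, omega0)
# Orbit center predicted from the initial state: r0 + i * exp(i * theta0) / omega0.
center = 0.0 + 1j * np.exp(1j * 0.0) / omega0
radii = np.abs(traj - center)
print(radii.min(), radii.max())  # both close to 1 / omega0 = 2.0
```

Replacing the constant \(\omega_0\) with state-dependent steering that couples the particles is what produces coordinated group motion. A constant turn rate alone gives only the fixed circular orbit.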

2.3 Localization and Mapping

High agility, a wide field of view, and accessibility are among the main factors that have made UAVs a popular tool for mapping and modeling land and terrain [18]. UAVs have been used for mapping in several studies [18,19,20]. Figure 10.3 shows the concept of cooperative 3D mapping by multiple quadrotors. Remondino et al. used UAVs for mapping and 3D modeling with several types of vehicles and techniques [18]. Light detection and ranging (LIDAR), one of the key mapping technologies for UAVs, was employed by Lin et al. [19]. They mounted a LIDAR-based system, comprising Ibeo Lux and Sick laser scanners and an AVT Pike F-421C CCD camera, on a mini-UAV to map a local area in Vanttila, Espoo, Finland, at a fine scale.

As with surveillance and search, cooperative mapping by multiple UAVs can improve accuracy and reduce operation time by sharing responsibilities among the vehicles. Cooperative control of autonomous vehicles can be used to map unknown environments and to build 3D models. Fenwick et al. introduced a novel algorithm for concurrent mapping and localization (CML) that combines navigation and sensor information from multiple unmanned vehicles [17]. The algorithm is based on stochastic estimation and extracts landmarks from the mapped area using a feature-based approach. Göktoğan et al. developed a real-time multi-UAV simulator in which multiple sensing nodes on several UAV platforms use a decentralized data fusion (DDF) algorithm to perform simultaneous localization and mapping [31].
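Decentralized data fusion is commonly formulated in information (inverse-covariance) form, in which estimates based on independent data from different platforms are fused by adding their information contributions. The sketch below is a generic illustration under simplifying assumptions, not the DDF implementation of [31]. Two hypothetical UAVs each hold a local Gaussian estimate of the same static 2D landmark. Each estimate is converted to an information matrix \(Y=P^{-1}\) and an information vector \(y=P^{-1}\hat{x}\), and the two are then summed. Summation is exact only if the estimates share no common prior or observations; practical DDF networks must track and remove such shared information to avoid overconfidence.

```python
import numpy as np


def to_information(x_hat, P):
    """Convert a Gaussian estimate (mean x_hat, covariance P) to information form (y, Y)."""
    Y = np.linalg.inv(P)
    return Y @ x_hat, Y


def fuse_information(estimates):
    """Fuse information-form estimates by summing information vectors and matrices.

    Valid only if the estimates are conditionally independent (no shared prior
    and no shared observations); otherwise the fused covariance is overconfident.
    """
    y = sum(e[0] for e in estimates)
    Y = sum(e[1] for e in estimates)
    P = np.linalg.inv(Y)
    return P @ y, P


# Hypothetical local estimates of the same landmark from two UAVs
# (means in metres, covariances in square metres).
uav1 = to_information(np.array([10.0, 5.0]), np.diag([4.0, 1.0]))
uav2 = to_information(np.array([12.0, 5.0]), np.diag([4.0, 1.0]))
x_fused, P_fused = fuse_information([uav1, uav2])
print(x_fused)           # fused mean: approximately (11.0, 5.0)
print(np.diag(P_fused))  # fused variances: approximately (2.0, 0.5), half of each input
```

Because only the sums \(\sum y\) and \(\sum Y\) need to be communicated, each node can fuse whatever its neighbors send, in any order. This property is what makes the information form convenient for decentralized architectures.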

Williams et al. [32] proposed the constrained local submap filter (CLSF) for simultaneous localization and mapping (SLAM), and the same algorithm can be applied to multi-UAV SLAM. The advantage of this approach is that the cross-covariance update between the local submap and the global map can be scheduled at convenient intervals, and it aids in solving the data association problem.

Localization and mapping in unsafe or obscure places is another critical application of UAVs. Multi-UAV cooperative control has been used for mapping wild or unknown areas in several studies [33, 34], such as the extension of the SLAM algorithm and its applications presented by Bryson and Sukkarieh [33]. Han et al. introduced personal remote sensing (PRS) multi-UAVs for contour mapping in two nuclear radiation scenarios [34]. Their work also focused on the cost of the multi-UAV system and the efficiency of radiation detection within the required time, which were its main advantages over single-UAV mapping. Kovacina et al. focused on mapping another hazardous substance, a chemical cloud. They used the swarm experimentation and evaluation platform (SWEEP) with their rule-based, decentralized control algorithm to simulate an air vehicle swarm searching for and mapping a chemical cloud [35].

2.4 Military Applications

Cooperative control of UAVs has various practical and potential military applications, ranging from reconnaissance and radar deception to surface-to-air missile jamming. It has been demonstrated that a group of low-cost, well-organized UAVs can be more effective than a single high-cost UAV [36]. Military applications of cooperative unmanned systems can generally be divided into two main categories: reconnaissance strategies and penetrating strategies. For these applications, UAVs may need to fly close to each other in a specific structure. Formation flight control is one of the most straightforward cooperative strategies; in it, a set of aircraft fly close to each other at defined distances [37]. One advantage of formation flight is a significant reduction in fuel consumption. This is achieved by positioning the follower aircraft so that the vortex of the leader aircraft reduces the follower's induced drag [38].
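In a leader-follower formation, the defined distance is usually specified as an offset expressed in the leader's body frame. The sketch below uses a planar simplification with hypothetical offsets. It computes each follower's desired inertial position from the leader's position and heading, and a follower's position controller would then track that reference. The function name and offset values are illustrative and are not taken from the cited works.

```python
import numpy as np


def desired_follower_positions(leader_pos, leader_heading, offsets):
    """Return desired inertial positions of followers in a leader-follower formation.

    leader_pos: (2,) leader position in the inertial frame.
    leader_heading: leader heading psi in radians (0 = along the inertial x-axis).
    offsets: (M, 2) follower offsets in the leader body frame (x forward, y to the left).
    """
    c, s = np.cos(leader_heading), np.sin(leader_heading)
    R = np.array([[c, -s], [s, c]])  # rotation from the body frame to the inertial frame
    return np.asarray(leader_pos) + np.asarray(offsets) @ R.T


# Hypothetical V-formation: two followers 10 m behind the leader and 5 m to either side.
offsets = np.array([[-10.0, 5.0], [-10.0, -5.0]])
targets = desired_follower_positions([0.0, 0.0], np.pi / 2, offsets)
print(targets)  # leader flying along +y: followers near (-5, -10) and (5, -10)
```

Expressing the offsets in the leader's body frame keeps the formation shape fixed relative to the leader as it turns. An aerodynamically motivated design would choose the lateral offset so that each follower sits in the leader's vortex upwash.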

2.4.1 Reconnaissance Strategy

Formations or cooperative teams of UAVs can serve as reliable radar or reconnaissance platforms to detect enemy troops and ballistic missiles [39, 40]. Integrating the UAVs' radars helps identify intruding objects or observe an adversary's ground activities [41, 42]. Ahmadzadeh et al. introduced a cooperative strategy that enables a heterogeneous team of UAVs to gather information for situational awareness [43]. In their work, they presented an overall framework for reconnaissance and an algorithm for cooperative control of UAVs that considers collision and obstacle avoidance. Figure 10.4 shows a reconnaissance mission using multiple cooperative UAVs.

Target detection using multiple cooperative UAVs in a reconnaissance mission [44]

2.4.2 Penetrating Strategy

Modern, robust defense systems make it difficult to penetrate an adversary's territory. To this end, various strategies have been designed to deceive the target's radar and defense systems [45,46,47].

Concealing vehicles from enemy radars through electronic counter-measures (ECM) is called radar jamming. It is an important technique, used mostly by unmanned combat air vehicles (UCAVs), to protect the vehicles from surface-to-air missiles when they reconnoiter enemy territory. Radar jamming consists of transmitting noise to deceive the enemy's radar. Radar jamming and deception can be more effective when a group of UCAVs works together. Kim et al. focused on escort jamming (EJ) by UAVs, designing a close formation and a cooperative control procedure to deceive the tracking radar of a surface-to-air missile (SAM) [45]. Jamming can generally be classified into two categories: self-jamming and support jamming. Figure 10.5 shows both methods, where UAV "D" performs self-jamming and UAVs "A, B, C" perform support jamming.

Cooperative radar jamming using multiple UAVs [45]

A missile's control system is similar to that of a UAV, except that missiles are not designed to return to base. Because penetrating a target's high-tech defense system is very difficult, a group of cooperative missiles has a better chance of penetrating it than missiles operating independently [46, 47]. Figure 10.6 shows a collective missile attack on a ship target.

3 Challenges

Multi-UAV systems have advantages over single UAVs in terms of the impact of failure, scalability, survivability, mission speed, cost, required bandwidth, and antenna range [48]. However, these systems are complex and hard to coordinate. Gupta and Vaszkun considered the following challenges in providing a stable and reliable UAV network [48]:

- the architectural design of the network;
- routing packets from an origin to a destination while optimizing a metric;
- handing over from an out-of-service UAV to an active UAV;
- energy conservation.

According to a study at MIT, the main challenges in developing and testing cooperative UAVs in dynamic and uncertain situations are real-time planning, robust controller design, and the use of communication networks [23]. Ryan et al. addressed the following issues in cooperative UAV control [49]:

- aerial surveillance, detection, and tracking, which enable vision-based control;
- collision and obstacle avoidance, and formation reconfiguration;
- the high-level control needed for real-time human interfacing;
- the security of communication links.

Oh et al. addressed the problem of modeling the agents' interactions with each other and with the environment, which are difficult to predict [50]. The most significant challenges in the cooperative control of multi-agent systems can be summarized as follows.

1. In cooperative control, instead of developing a control objective for a single system, it is necessary to devise control objectives for several sub-systems. Moreover, the relationship between the team goal and each agent's goal needs to be negotiated and balanced [51].

2. The communication bandwidth and connection quality among agents are limited and variable. Moreover, the security of communication links in the presence of intruders should be considered in the design [52,53,54,55]. Cooperative UAV systems are vulnerable to a range of cyberattacks, such as denial-of-service (DoS) and time delay switch (TDS) attacks [56,57,58,59].

3. The aerodynamic interference of the agents on each other should be considered in the design [50]. Close cooperative flight or formation flight poses specific aerodynamic challenges, known as aerodynamic coupling. This interference is caused by the vortex of the leading aircraft. It should be modeled and quantified in the controller design to avoid a critical impact on system stability; otherwise, unwanted rolling or yawing moments may be generated that can destabilize the overall system [60, 61]. However, incorporating the coupled dynamics into the formation design can help reduce energy consumption during the mission [62, 63].

4. The controller design of cooperative UAVs should include fault-tolerant algorithms based on software redundancy, because hardware redundancy is not an option for mini-UAVs. Fault-tolerant control design for a single UAV is a challenging task in itself and has been discussed in the literature [64, 65].

4 Algorithms

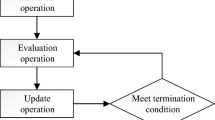

Cooperative algorithms can be categorized into three main groups according to their methodologies: (1) consensus techniques, (2) flocking techniques, and (3) formation-based techniques. Figure 10.7 shows the main algorithms used for UAV systems. Algorithms for consensus control, flocking control, and formation control are discussed below, in that order.

4.1 Consensus Strategies

In the area of cooperative control, consensus control is an important and complex problem. In consensus control, a group of agents communicates through a sensing or communication network to reach a common decision. Consensus control has its roots in computer science and parallel computing [66, 67]. In the last decade, the works of Jadbabaie et al. [68] and Olfati-Saber et al. [69] motivated many researchers to work on consensus control problems. In particular, Jadbabaie et al. [68] provided a theoretical explanation for the alignment behavior of the dynamic model introduced by Vicsek [70], and Olfati-Saber et al. introduced a general framework for solving the consensus control problem in networks of integrators [69]. In the following subsections, the basic concepts of consensus control are explained first, and recent research in this area is then reviewed. In cooperative control, communication among agents is commonly modeled by graphs, so a basic knowledge of graph theory is needed to understand cooperative algorithms. Therefore, the basic concepts of graph theory are briefly explained, followed by the concept of consensus control theory.

4.1.1 Graph Theory Basics in Communication Systems

Communication or sensing among the agents of a team is commonly modeled by an undirected graph \(G=(V,\varepsilon,\beta)\). Here \(V=\{1,2,\ldots,N\}\) is the set of N nodes (agents) in the network, and \(\varepsilon\subseteq V\times V\) is the set of edges. An edge \((i,j)\in\varepsilon\) indicates that agents i and j can exchange information. \(\beta=[a_{ij}]\in\mathbb{R}^{N\times N}\) is the adjacency matrix associated with G, where \(a_{ij}>0\) if \((i,j)\in\varepsilon\) and \(i\neq j\), and \(a_{ij}=0\) otherwise. For an undirected graph, β is symmetric. Figure 10.8 shows the basic structure of a directed and an undirected graph.

For example, the adjacency matrix associated with the undirected graph shown in Fig. 10.8 is

where nodes A, B, C, D, and E correspond to nodes 1, 2, 3, 4, and 5, respectively.
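The sketch below shows how such an adjacency matrix can be assembled from an edge list. The edge set is hypothetical and is not read from Fig. 10.8. Nodes A to E are mapped to the zero-based indices 0 to 4, as is usual in Python, and all edge weights are assumed to be 1.

```python
import numpy as np


def adjacency_from_edges(num_nodes, edges, weight=1.0):
    """Build the adjacency matrix of an undirected graph.

    Each undirected edge (i, j) sets a_ij = a_ji = weight. The diagonal stays zero
    because self-loops are excluded (a_ii = 0).
    """
    A = np.zeros((num_nodes, num_nodes))
    for i, j in edges:
        if i == j:
            raise ValueError("self-loops are not allowed")
        A[i, j] = weight
        A[j, i] = weight  # undirected: information can flow both ways
    return A


# Hypothetical edge set for nodes A..E (indices 0..4): the path A-B-C-D-E plus the edge A-C.
names = "ABCDE"
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (0, 2)]
A = adjacency_from_edges(len(names), edges)
print(A)
```

Setting both \(a_{ij}\) and \(a_{ji}\) for every edge is what makes the matrix symmetric. For a directed graph, only the entry corresponding to the direction of information flow would be set.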

4.1.2 Consensus Control Theory

The basic idea of consensus control theory is to impose similar dynamics on the information states of all agents in the group. Depending on the communication type, each agent (vehicle) in the system can be modeled by differential or difference equations. If the bandwidth of the communication network is large enough to allow continuous communication, differential equations can be used to model the agent dynamics. Otherwise, data are exchanged among agents in discrete packets, and difference equations are needed to model the agent dynamics. Both cases are briefly explained here.

- Continuous-time Consensus: The most common consensus algorithm used for dynamics defined by differential equations can be presented as [6, 71, 72]

$$\displaystyle \begin{aligned} \dot{x}_i(t)=-\sum_{j=1}^n a_{ij}(t)\big(x_i(t)-x_j(t)\big),\quad i=1,\ldots ,n \end{aligned} $$ (10.2)

where \(x_i(t)\) is the information state of the ith agent, and \(a_{ij}(t)\) is the (i, j) element of the adjacency matrix β obtained from the graph G. If \(a_{ij}=0\), there is no connection between agents i and j, so they cannot exchange information directly. The consensus algorithm in Eq. 10.2 can be rewritten in matrix form as

$$\displaystyle \begin{aligned} \dot{x}(t)=-L(t)x(t) \end{aligned} $$ (10.3)

where \(x=[x_1,\ldots,x_n]^T\) stacks the information states, and the Laplacian matrix \(L=[l_{ij}]\in\mathbb{R}^{N\times N}\) associated with the graph G is obtained as follows

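$$\displaystyle \begin{aligned} l_{ij}=\begin{cases} \sum_{k\in N_i} a_{ik}, & i=j\\ -a_{ij}, & i\neq j \end{cases} \end{aligned} $$ (10.4)

where \(N_i\) denotes the set of neighbors of agent i. Because each row of L sums to zero, 0 is an eigenvalue of L with the associated eigenvector \(\mathbf{1}=[1,\ldots,1]^T\). Because L is symmetric and positive semidefinite, all of its eigenvalues are real and nonnegative. If the graph is connected, the zero eigenvalue is simple, and the remaining N − 1 eigenvalues are strictly positive (they lie in the open right half of the complex plane). Thus, the N eigenvalues of L can be ordered as follows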

$$\displaystyle \begin{aligned} 0=\lambda_1<\lambda_2\leq \lambda_3\leq \ldots \leq \lambda_N \end{aligned} $$ (10.5)

Based on this ordering and the symmetry of L, L can be diagonalized by an orthogonal transformation matrix as

$$\displaystyle \begin{aligned} L=PJP^T \end{aligned} $$ (10.6)

where P consists of the eigenvectors of L, and J is the diagonal form of L. They are defined as follows

$$\displaystyle \begin{gathered} P=[r_1~ r_2~\cdots~ r_N]\\ J=\begin{bmatrix} 0& 0_{1\times (N-1)}\\ 0_{(N-1)\times 1} & \gamma \end{bmatrix} \end{gathered} $$

where \(\gamma=\mathrm{diag}(\lambda_2,\ldots,\lambda_N)\) is a diagonal matrix containing the N − 1 positive eigenvalues of L. The vectors \(r_i\), \(i\in\{1,2,\ldots,N\}\), are orthonormal eigenvectors of L (\(r_i^T r_i=1\)), with \(r_1=\mathbf{1}/\sqrt{N}\) [6].

Consensus is said to be achieved for a team of agents if \(\lim_{t\to\infty}|x_i(t)-x_j(t)|=0\) for all initial states \(x_i(0)\) and all \(i,j=1,\ldots,n\) [6].

- Discrete-time Consensus: Discrete-time consensus is used when the communication bandwidth among the agents is limited or communication occurs only at discrete instants. In this case, the information states are updated through difference equations. The discrete-time consensus algorithm commonly takes the following form [73,74,75,76]

$$\displaystyle \begin{aligned} x_i[k+1]=\sum_{j=1}^n d_{ij}[k]\, x_j[k],\quad i=1,\ldots ,n \end{aligned} $$ (10.7)

where k is the step index associated with a communication event, and \(d_{ij}[k]\) is the (i, j) element of the row-stochastic matrix \(D[k]=[d_{ij}[k]]\in\mathbb{R}^{n\times n}\), whose entries are nonnegative and whose rows each sum to one. The discrete-time consensus algorithm in Eq. 10.7 can be rewritten in matrix form as

$$\displaystyle \begin{aligned} x[k+1]=D[k]x[k] \end{aligned} $$ (10.8)

where, for \(i\neq j\), \(d_{ij}[k]>0\) if information flows from agent j to agent i at step k, and \(d_{ij}[k]=0\) otherwise [75]. Similarly, discrete-time consensus is achieved for a team of agents if \(\lim_{k\to\infty}|x_i[k]-x_j[k]|=0\) for all initial states \(x_i[0]\) and all \(i,j=1,\ldots,n\) [75].
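The spectral facts in Eqs. 10.4 to 10.6 also show which value the agents agree on. For a fixed, connected, undirected graph, Eq. 10.6 gives the standard closed-form solution of Eq. 10.3:

$$x(t)=P\,e^{-Jt}P^{T}x(0)=\sum_{i=1}^{N}e^{-\lambda_i t}\,r_i r_i^{T}x(0)\;\longrightarrow\;r_1 r_1^{T}x(0)=\Big(\frac{1}{N}\sum_{j=1}^{N}x_j(0)\Big)\mathbf{1}$$

This limit follows because \(r_1=\mathbf{1}/\sqrt{N}\) and \(\lambda_i>0\) for \(i\geq 2\). The agents therefore agree on the average of their initial states, at a rate governed by \(\lambda_2\).

The Python sketch below checks these properties numerically and then runs Eq. 10.8. It assumes a hypothetical five-node graph with unit edge weights and the common choice \(D=I-\epsilon L\), with \(0<\epsilon<1/\Delta\), where Δ is the maximum node degree. This D is nonnegative with unit row sums, it is symmetric and therefore preserves the average, and it equals the forward-Euler discretization of Eq. 10.3 with step ε. It is one admissible D among many, not necessarily the one used in the cited works.

```python
import numpy as np


def laplacian(A):
    """Graph Laplacian per Eq. 10.4: l_ii = sum_k a_ik and l_ij = -a_ij for i != j."""
    A = np.asarray(A, dtype=float)
    return np.diag(A.sum(axis=1)) - A


def consensus_matrix(A, eps):
    """Update matrix D = I - eps * L for Eq. 10.8 (nonnegative, unit row sums).

    Requires 0 < eps < 1 / (maximum weighted degree) so that every entry of D is >= 0.
    """
    A = np.asarray(A, dtype=float)
    max_degree = A.sum(axis=1).max()
    if not 0.0 < eps < 1.0 / max_degree:
        raise ValueError("eps must satisfy 0 < eps < 1 / max degree")
    return np.eye(len(A)) - eps * laplacian(A)


def run_consensus(D, x0, steps):
    """Iterate x[k+1] = D x[k] with a fixed (time-invariant) D."""
    x = np.asarray(x0, dtype=float)
    for _ in range(steps):
        x = D @ x
    return x


# Hypothetical connected, undirected graph: the path 0-1-2-3-4 plus the edge 0-2.
A = np.zeros((5, 5))
for i, j in [(0, 1), (1, 2), (2, 3), (3, 4), (0, 2)]:
    A[i, j] = A[j, i] = 1.0

L = laplacian(A)
eigvals, P = np.linalg.eigh(L)                     # ascending eigenvalues, orthonormal eigenvectors
print(np.allclose(L.sum(axis=1), 0.0))             # True: zero row sums, so lambda_1 = 0
print(eigvals[1] > 1e-9)                           # True: lambda_2 > 0 for a connected graph
print(np.allclose(L, P @ np.diag(eigvals) @ P.T))  # True: L = P J P^T (Eq. 10.6)

D = consensus_matrix(A, eps=0.25)         # the maximum degree is 3, so eps must be below 1/3
x0 = np.array([1.0, 4.0, 2.0, 8.0, 5.0])  # hypothetical initial information states
print(run_consensus(D, x0, steps=500))    # every entry approaches mean(x0) = 4.0
```

With a directed graph or a time-varying D[k], the agreed value is generally a weighted average rather than the plain average, and convergence requires additional connectivity conditions.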

4.1.3 Recent Research on Consensus

Consensus control algorithms based on graph theory have received growing interest among researchers [77, 78]. Jamshidi et al. developed a testbed and a consensus technique for cooperative control of UAVs [77]. Rezaee and Abdollahi proposed a consensus protocol for a class of high-order multi-agent systems [78]. They showed how agents can reach consensus on the average of any shared quantity using their relative positions. Li presented a geometric decomposition approach for cooperative agents [79]. Under topology changes, decomposing a system into sufficiently simple sub-systems facilitates subsequent analysis and provides flexibility of choice. Liang et al. introduced an observer-based discrete consensus control system in which a nonlinear observer estimated the states of the agents and a feedback control law was designed from the observer's estimates [80]. Xia et al. introduced an optimal consensus control design for agents with double-integrator dynamics that accounts for collision avoidance [81]. Han et al. introduced a nonlinear multi-consensus control strategy for multi-agent systems [82]. They considered both switching and fixed topologies, and their consensus controller could control three subgroups, as shown in Fig. 10.9. They also compared this work with their previous work [83] and reported a reduction in convergence time. Shoja et al. introduced an estimator-based consensus control scheme for agents with nonlinear and nonidentical dynamics [84]. In their design, they used an undirected graph to model communication among the agents and considered multiple leaders. Hou et al. introduced a sliding mode consensus control design for double-integrator multi-agent systems and 3-DoF helicopters [85].
The advantages of their method were that it achieved synchronization in the presence of disturbances and could be implemented on a 3-DoF helicopter model.

Multi-consensus control of three subgroups by Han et al. [82]

Taheri et al. introduced an adaptive fuzzy wavelet network approach for consensus control of a class of nonlinear second-order multi-agent systems [86]. The adaptive laws were derived using Lyapunov theory to maintain the stability of the nonlinear dynamics. An adaptive fuzzy wavelet network was then used to compensate for the effects of unknown dynamics and time delay in the system. However, the authors did not address consensus control design for second-order multi-agent systems over directed graphs. Neural networks and robust control techniques have been used in [87] and [88] to design consensus controllers for higher-order multi-agent systems. In these works, semi-global boundedness of the consensus error was ensured by choosing sufficiently large control gains. Consensus fault-tolerant controllers (FTC) that can tolerate faults in the actuators of the agents in a multi-agent system have also been investigated [89,90,91]. Gallehdari et al. introduced an online redistributed control reconfiguration approach that used nearest-neighbor information and each agent's internal fault detection and identification (FDI) to maintain consensus in the presence of actuator faults. They used a first-order dynamic model for their agents. They designed the controller by minimizing the performance index of the faulty agent, which led to optimizing the performance index of the team. Later, they extended their work to optimize all the agents in the consensus FTC system [90]. Hua et al. introduced a consensus FTC design for time-varying high-order linear systems that can tolerate actuator faults [91].

Wang et al. introduced a smooth-function-based adaptive consensus control approach for multi-agent systems with nonlinear dynamics, unknown parameters, and uncertain disturbances that does not require the assumption of a linearly parameterized reference trajectory [92]. Their approach assumed that data are exchanged among the agents over an undirected graph. Later, they extended the work to directed graphs [93].

4.2 Flocking Based Strategies

Flocking can be defined as the collective behavior of a group of interacting agents with mutual objectives. Flocking algorithms are inspired by flocks of birds and are built on Reynolds' rules. Reynolds modeled the steering behavior of each agent based on the positions and velocities of nearby flockmates, using three terms: separation (collision avoidance), alignment (velocity matching), and cohesion (flock centering) [94].
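Reynolds' three rules translate directly into a steering vector for each agent. The sketch below is a hypothetical 2-D illustration: the neighborhood radius and the weights `w_sep`, `w_ali`, and `w_coh` are our assumptions, and the inverse-square separation term is one common choice rather than Reynolds' exact formulation [94].

```python
import math


def reynolds_steering(i, positions, velocities, radius=10.0,
                      w_sep=1.5, w_ali=1.0, w_coh=1.0):
    """Weighted sum of separation, alignment, and cohesion for agent i (2-D)."""
    px, py = positions[i]
    sep = [0.0, 0.0]
    ali = [0.0, 0.0]
    coh = [0.0, 0.0]
    count = 0
    for j, (qx, qy) in enumerate(positions):
        if j == i:
            continue
        dx, dy = qx - px, qy - py
        dist = math.hypot(dx, dy)
        if dist == 0.0 or dist > radius:
            continue  # only flockmates inside the neighborhood contribute
        count += 1
        # Separation: push away from each neighbor, more strongly when closer.
        sep[0] -= dx / dist ** 2
        sep[1] -= dy / dist ** 2
        # Alignment: accumulate the neighbors' velocities.
        ali[0] += velocities[j][0]
        ali[1] += velocities[j][1]
        # Cohesion: accumulate the neighbors' positions.
        coh[0] += qx
        coh[1] += qy
    if count == 0:
        return (0.0, 0.0)
    vx, vy = velocities[i]
    ali = [ali[0] / count - vx, ali[1] / count - vy]  # velocity matching
    coh = [coh[0] / count - px, coh[1] / count - py]  # steer toward local center
    return (w_sep * sep[0] + w_ali * ali[0] + w_coh * coh[0],
            w_sep * sep[1] + w_ali * ali[1] + w_coh * coh[1])


if __name__ == "__main__":
    # A very close neighbor is avoided; a more distant one is approached.
    print(reynolds_steering(0, [(0.0, 0.0), (1.0, 0.0)], [(0.0, 0.0), (0.0, 0.0)]))
    print(reynolds_steering(0, [(0.0, 0.0), (5.0, 0.0)], [(0.0, 0.0), (0.0, 2.0)]))
```

The relative weights decide where repulsion gives way to attraction; here, with a single neighbor, separation dominates at 1 m and cohesion dominates at 5 m.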

4.2.1 Flocking Control Theory

Like consensus algorithms, flocking algorithms are based on graph theory. Unlike formation strategies, which require the group of agents to hold a particular shape, a flock does not need to keep a rigid shape or form. In other words, in flocking control the shape of the flock may change as long as the flock goals are satisfied; e.g., it can transform from a rectangular shape to a triangular one.

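Several flocking algorithms have been devised for multi-agent systems with a second-order dynamic model [95,96,97]. A group of such agents can be described by the following equations of motion:

\[ \dot{q}_i = p_i, \qquad \dot{p}_i = u_i, \]

where \(q_i\) is the position of agent (node) \(i\), \(p_i\) is its velocity, and \(u_i\) is its control input, with \(p_i, q_i, u_i \in \mathbb{R}^m\) and \(i \in \mathcal{V} = \{1, 2, \ldots, N\}\) (the set of \(N\) nodes or agents in the network). Flocking algorithms consist of three terms: (1) a gradient-based term, (2) a consensus term, and (3) a navigational feedback term, and can be written as follows [96]:

\[ u_i = \sum_{j \in N_i} \phi_\alpha\left(\|q_j - q_i\|_\sigma\right) n_{ij} + \sum_{j \in N_i} a_{ij}(q)\,(p_j - p_i) + f_i^{\gamma}(q_i, p_i, q_r, p_r) \tag{10.10} \]

where \(N_i\) is the set of neighbors of agent \(i\), \(\phi_\alpha(\cdot)\) is an action function derived from a pairwise potential function, \(a_{ij}(q)\) is the position-dependent adjacency weight between agents \(i\) and \(j\), \(\|z\|_\sigma = \frac{1}{\epsilon}\left(\sqrt{1+\epsilon\|z\|^2}-1\right)\) is the σ-norm, and \(n_{ij}=\sigma_{\epsilon}(q_j-q_i)=(q_j-q_i)/\sqrt{1+\epsilon \|q_j-q_i\|^2}\) is a vector along the line connecting \(q_i\) to \(q_j\), in which \(\epsilon \in (0, 1)\) is a fixed parameter of the σ-norm. The pair \((q_r, p_r) \in \mathbb{R}^m \times \mathbb{R}^m\) is the state of the γ-agent, a virtual agent that represents the group objective. The navigational feedback term \(f_i^{\gamma}\) is given as follows:

\[ f_i^{\gamma} = -c_1 (q_i - q_r) - c_2 (p_i - p_r), \qquad c_1, c_2 > 0. \]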
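The three terms of the Olfati-Saber flocking law [96] can be sketched in Python as follows. The bump function \(\rho_h\), the action function \(\phi_\alpha(z) = \rho_h(z/r_\alpha)\,\phi(z - d_\alpha)\) built from \(\phi(z) = \tfrac{1}{2}[(a+b)\sigma_1(z+c) + (a-b)]\) with \(\sigma_1(z) = z/\sqrt{1+z^2}\), and the adjacency weight \(a_{ij} = \rho_h(\|q_j - q_i\|_\sigma / r_\alpha)\) follow, as we understand it, the construction in [96]. The numerical parameters (`EPS`, `H`, `A`, `B`, `D`, `R`) and the navigational gains `C1`, `C2` are illustrative assumptions. Agents closer than the desired distance \(d\) repel each other; agents farther apart but within range \(r\) attract.

```python
import math

# Illustrative parameters (assumed, not prescribed by the text).
EPS = 0.1           # sigma-norm parameter, epsilon in (0, 1)
H = 0.2             # bump-function plateau parameter h in (0, 1)
A, B = 5.0, 5.0     # action-function parameters, 0 < a <= b
D = 7.0             # desired inter-agent distance
R = 1.2 * D         # interaction range
C1, C2 = 1.0, 1.0   # navigational-feedback gains (hypothetical)


def norm(z):
    return math.sqrt(sum(c * c for c in z))


def sigma_norm(z):
    """||z||_sigma = (sqrt(1 + eps ||z||^2) - 1) / eps, differentiable everywhere."""
    return (math.sqrt(1.0 + EPS * norm(z) ** 2) - 1.0) / EPS


def sigma_grad(z):
    """n_ij = z / sqrt(1 + eps ||z||^2), the gradient of the sigma-norm."""
    s = math.sqrt(1.0 + EPS * norm(z) ** 2)
    return [c / s for c in z]


def bump(z):
    """rho_h: 1 on [0, h), cosine taper on [h, 1], 0 elsewhere."""
    if 0.0 <= z < H:
        return 1.0
    if H <= z <= 1.0:
        return 0.5 * (1.0 + math.cos(math.pi * (z - H) / (1.0 - H)))
    return 0.0


D_ALPHA = sigma_norm([D])
R_ALPHA = sigma_norm([R])


def phi(z):
    c = abs(A - B) / math.sqrt(4.0 * A * B)
    s = (z + c) / math.sqrt(1.0 + (z + c) ** 2)
    return 0.5 * ((A + B) * s + (A - B))


def phi_alpha(z):
    """Repulsive below d_alpha, attractive above it, zero beyond r_alpha."""
    return bump(z / R_ALPHA) * phi(z - D_ALPHA)


def flocking_control(i, q, p, q_r, p_r):
    """u_i = gradient term + velocity-consensus term + navigational feedback."""
    u = [0.0] * len(q[i])
    for j in range(len(q)):
        dq = [qj - qi for qj, qi in zip(q[j], q[i])]
        if j == i or norm(dq) >= R:
            continue  # only neighbors within range r contribute
        dp = [pj - pi for pj, pi in zip(p[j], p[i])]
        z = sigma_norm(dq)
        grad = phi_alpha(z)
        a_ij = bump(z / R_ALPHA)
        n_ij = sigma_grad(dq)
        u = [uk + grad * nk + a_ij * dpk for uk, nk, dpk in zip(u, n_ij, dp)]
    # Navigational feedback toward the gamma-agent (q_r, p_r).
    f_gamma = [-C1 * (qi - qr) - C2 * (pi - pr)
               for qi, qr, pi, pr in zip(q[i], q_r, p[i], p_r)]
    return [uk + fk for uk, fk in zip(u, f_gamma)]
```

To simulate a flock, one would integrate \(\dot q_i = p_i\), \(\dot p_i = u_i\) for all agents with a small time step, calling `flocking_control` for each agent at every step.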

The flocking algorithm in Eq. 10.10 can be extended with additional update terms to handle uncertainties in flock control. One major limitation of flocking control is that the flock cannot cover a large area. To address this, a semi-flocking algorithm was introduced [98], in which the navigational feedback term is modified so that each agent can decide whether to track a target or to search for a new one.

4.2.2 Recent Research on Flocking

Moshtagh and Jadbabaie introduced a flocking and velocity-alignment algorithm for kinematic agents based on graph theory [95]. Their design, which handles flocking in two and three dimensions, uses geodesic control to minimize a misalignment potential, which leads to flocking and velocity alignment. They also showed that flocking is preserved when the topology of the proximity graph changes, provided joint connectivity is maintained. However, guaranteeing flocking still requires a way to maintain this connectivity condition in the proximity graph. Olfati-Saber introduced a systematic approach for generating cost functions for flocking [96], in which deviations from the flock objectives are penalized. He demonstrated that a peer-to-peer network of agents can perform flock migration, eliminating the need for a single flock leader. Simulations of flocks of hundreds of agents in 2-D and 3-D, including squeezing and split/rejoin maneuvers, showed that the algorithm succeeds in the presence of obstacles. Saif et al. introduced a linear quadratic regulator (LQR) controller for a flock of UAVs that is independent of the number of agents in the flock [97]. The strategy satisfies Reynolds' rules and allows an LQR controller to be designed for each UAV regardless of the flock size. Chapman and Mesbahi designed an optimal controller for UAV flocking in the presence of wind gusts, using a consensus-based leader-follower system to improve velocity tracking [99].
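Saif et al. [97] design an LQR controller for each UAV. Their exact model and weights are not given here, so the sketch below only illustrates the generic building block under an assumed per-axis double-integrator model of position and velocity errors. For \(A = [0\;1;\,0\;0]\), \(B = [0;\,1]\), \(Q = \mathrm{diag}(q_1, q_2)\), and \(R = r\), the algebraic Riccati equation has a closed-form solution, which gives the gain \(K = [\sqrt{q_1/r},\ \sqrt{(q_2 + 2\sqrt{q_1 r})/r}]\).

```python
import math


def double_integrator_lqr(q1, q2, r):
    """LQR gain for e'' = u with cost integral of q1*e^2 + q2*e'^2 + r*u^2.

    Solves A^T P + P A - P B r^-1 B^T P + Q = 0 in closed form for
    A = [[0, 1], [0, 0]], B = [0, 1]^T, Q = diag(q1, q2).
    Returns K = (k1, k2) such that u = -k1*e - k2*e', plus the entries of P.
    """
    p12 = math.sqrt(q1 * r)
    p22 = math.sqrt(r * (q2 + 2.0 * p12))
    p11 = p12 * p22 / r
    return (p12 / r, p22 / r), (p11, p12, p22)


def lqr_command(gain, pos_error, vel_error):
    """Per-axis acceleration command u = -k1 * e - k2 * e'."""
    k1, k2 = gain
    return -k1 * pos_error - k2 * vel_error


if __name__ == "__main__":
    gain, _ = double_integrator_lqr(q1=4.0, q2=1.0, r=1.0)
    print(gain)                         # (2.0, 2.236...), i.e. (2, sqrt(5))
    print(lqr_command(gain, 1.0, 0.0))  # -2.0
```

Because both gains are positive, the closed-loop characteristic polynomial \(s^2 + k_2 s + k_1\) is stable. In a flocking setting the error signals could, for instance, be measured relative to a Reynolds-style local reference; that choice is hypothetical here.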

Tanner et al. introduced a control law for flocking of multi-agent systems with double-integrator dynamics and arbitrary switching in the topology of the agent interaction network [100]. Nonsmooth analysis was used to accommodate arbitrary switching in the network, and they showed that the control law is robust to arbitrary changes in the communication network as long as the network remains connected during maneuvers. Hung and Givigi developed a model-free reinforcement learning (Q-learning) approach to flocking of small fixed-wing UAVs in a leader-follower topology [4], in which the agents experience disturbances in a stochastic environment. The advantage of their online learning design is that the model does not depend on the environment; hence, it can be deployed in a different environment without any information about the plant or the disturbances in the system. This characteristic increases the adaptability of the system to unforeseen situations. However, the learning rate and the convergence speed of flocking still need to be improved. Quintero et al. introduced a leader-follower design for flocking control of multiple UAVs performing a sensing task [101]. The UAVs were modeled as fixed-wing aircraft flying at constant speed and fixed altitude, which restricts their motion to a 2-D plane. In their strategy, each follower is controlled by solving a stochastic optimal control problem whose cost depends on the follower's heading and distance relative to the leader. The algorithm was successfully implemented on three UAVs equipped with cameras; however, because the optimization problem is solved offline, flocking behavior cannot be guaranteed in the presence of the nonlinear behavior of the flock and its agents.
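The flavor of the model-free approach in [4] can be conveyed with tabular Q-learning on a deliberately tiny, hypothetical leader-follower problem: the state is an integer spacing error, the actions change the follower's speed relative to the leader, and random "gusts" make the environment stochastic. The update \(Q(s,a) \leftarrow Q(s,a) + \alpha[r + \gamma \max_b Q(s',b) - Q(s,a)]\) uses only sampled transitions, with no model of the dynamics or the disturbances. The state space, rewards, and hyperparameters are our assumptions and are far simpler than the setting of [4].

```python
import random

# Hypothetical toy task (not the setup of [4]).
# State: integer spacing error e in [-E_MAX, E_MAX]; positive = follower too far behind.
# Action: follower speed relative to the leader: -1 (slower), 0 (same), +1 (faster).
E_MAX, P_GUST = 5, 0.2
ACTIONS = (-1, 0, 1)


def env_step(e, a, rng):
    """Spacing shrinks when the follower speeds up; a gust adds a random +/-1."""
    e_next = e - a
    if rng.random() < P_GUST:
        e_next += rng.choice((-1, 1))
    e_next = max(-E_MAX, min(E_MAX, e_next))
    return e_next, -abs(e_next)  # reward penalizes spacing error


def train(episodes=3000, horizon=30, alpha=0.1, gamma=0.9, explore=0.2, seed=0):
    rng = random.Random(seed)
    q = {(e, a): 0.0 for e in range(-E_MAX, E_MAX + 1) for a in ACTIONS}
    for _ in range(episodes):
        e = rng.randint(-E_MAX, E_MAX)
        for _ in range(horizon):
            # Epsilon-greedy exploration.
            if rng.random() < explore:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda act: q[(e, act)])
            e_next, reward = env_step(e, a, rng)
            # Q-learning temporal-difference update.
            target = reward + gamma * max(q[(e_next, b)] for b in ACTIONS)
            q[(e, a)] += alpha * (target - q[(e, a)])
            e = e_next
    return q


def greedy(q, e):
    return max(ACTIONS, key=lambda act: q[(e, act)])


if __name__ == "__main__":
    q = train()
    print({e: greedy(q, e) for e in range(-E_MAX, E_MAX + 1)})
```

The learned greedy policy speeds the follower up when it lags and slows it down when it is too close, without the learner ever being told how actions affect the spacing.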

McCune et al. introduced a framework based on a dynamic data-driven application system (DDDAS) to predict, control, and improve decision making in artificial swarms using repeated simulations and synergistic feedback loops [5]. This strategy helps to improve decision making during swarm control; however, the time frame required for real-time application of the strategy was not considered, which can limit the effectiveness of the approach. Martin et al. [102] considered a system of agents with second-order dynamics and determined conditions under which the agents agree on a common velocity, so that the system flocks. A notable feature of their design is that communication links that are unnecessary for flocking are allowed to be disconnected. Practical bounds were investigated for two communication rules: in the first, agents communicate with all agents within a common communication radius; in the second, agents communicate using different, randomly chosen communication radii. They concluded that flocking can be achieved with random communication radii by choosing a proper initial velocity disagreement or a small enough time step. One drawback of their approach was an asymmetric requirement in the interaction among the agents. In general, other types of interaction can occur in a flock, e.g., the topological interaction rule, in which each agent interacts with a fixed number of nearest neighbors. Riehl et al. introduced a receding-horizon search algorithm for cooperative UAVs [2]. To find a target in minimum time, each UAV was equipped with a gimbaled sensor that could be rotated to observe nearby areas; by gathering information on the potential locations of the target, the team could find it. The algorithm minimizes the expected time to find the target by controlling the positions of the UAVs and their sensors. The optimization is a receding-horizon algorithm over a graph whose target probability density function (PDF) is updated as information is gathered. The algorithm was successfully tested using two small UAVs equipped with gimbaled video cameras.
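A static-target, discrete-cell version of the search problem in [2] can be sketched as follows. The code is our simplification, not the algorithm of [2]: the UAV moves one cell per step on a graph, its sensor detects a target in the visited cell with probability `p_detect` (no false alarms), every `horizon`-step path is enumerated to minimize the probability of missing the target, only the first move is applied, and the target PDF is updated by Bayes' rule after each unsuccessful look before replanning.

```python
def miss_probability(pdf, path, p_detect):
    """Probability that a static target is NOT detected along `path`."""
    looks = {}
    for cell in path:
        looks[cell] = looks.get(cell, 0) + 1
    return sum(p * (1.0 - p_detect) ** looks.get(c, 0) for c, p in enumerate(pdf))


def plan(pdf, graph, start, horizon, p_detect):
    """Enumerate all `horizon`-step paths from `start`; return the best path."""
    best_path, best_miss = None, float("inf")

    def extend(path):
        nonlocal best_path, best_miss
        if len(path) == horizon:
            m = miss_probability(pdf, path, p_detect)
            if m < best_miss:
                best_path, best_miss = list(path), m
            return
        last = path[-1] if path else start
        for nxt in graph[last]:
            extend(path + [nxt])

    extend([])
    return best_path, 1.0 - best_miss


def update_after_miss(pdf, cell, p_detect):
    """Bayes update of the target PDF after looking at `cell` and seeing nothing."""
    norm = 1.0 - p_detect * pdf[cell]
    post = [p / norm for p in pdf]
    post[cell] = pdf[cell] * (1.0 - p_detect) / norm
    return post


def search(pdf, graph, start, horizon, p_detect, steps):
    """Receding horizon: plan, apply the first move, update the PDF, replan."""
    pos, trajectory = start, []
    for _ in range(steps):
        path, _ = plan(pdf, graph, pos, horizon, p_detect)
        pos = path[0]
        trajectory.append(pos)
        pdf = update_after_miss(pdf, pos, p_detect)  # assume no detection occurred
    return trajectory, pdf


# Hypothetical six-cell corridor.
LINE = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}

if __name__ == "__main__":
    pdf0 = [0.0, 0.0, 0.0, 0.0, 0.2, 0.8]
    print(plan(pdf0, LINE, 2, 3, 0.9))       # best 3-step path and its detection probability
    print(search(pdf0, LINE, 2, 3, 0.9, 3))  # executed moves and updated PDF
```

Exhaustive enumeration grows exponentially with the horizon, which is one reason a short receding horizon with frequent replanning is attractive.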

4.3 Guidance Law Based Cooperative Control

Guidance-law-based methods are treated separately from the other cooperative control techniques because those techniques do not address the guidance system in their design. To achieve a formation, the acceleration and angular velocity of each agent in the formation group must be calculated separately [103]; to this end, guidance law techniques are used to obtain the desired acceleration and angular velocities. The pure pursuit (PP) guidance algorithm is one of the most practical leader-follower guidance techniques in formation control. It was originally implemented on ground-attack missile systems designed to hit a target [104]. Later, by introducing the concept of a virtual leader (or target), it was adapted to formation flight control, in which each follower keeps its line of sight (LOS) aligned with the leader's motion. In other words, the velocity direction of each agent should be aligned with the velocity of the leader [103].

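In the PP algorithm, the follower velocity vector \(\vec{V}\) and the line-of-sight vector \(\vec{R}\) from the follower to the virtual leader satisfy the following equation:

\[ \vec{V} \times \vec{R} = \vec{0}, \]

i.e., the follower's velocity points along the LOS toward the virtual leader.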
Figure 10.10 shows the geometry between the virtual leader and the follower in the PP algorithm. In this figure, \(d_{x_{ref}}\), \(d_{y_{ref}}\), and \(d_{z_{ref}}\) represent the distances between the leader and the virtual leader along the longitudinal, lateral, and vertical axes, respectively. The acceleration the follower aircraft requires to reach the virtual leader can be calculated as follows [105, 106]

where N is the navigational constant, which is usually chosen between 0.3 and 0.5. Proportional navigation (PN) guidance is another candidate for formation control design; however, when the closing velocity is negative (i.e., the leader is faster than the follower aircraft), PN guidance is likely to steer the follower away from the leader [106]. In contrast, PP guidance does not depend on the leader velocity and always steers the follower toward the leader. For this reason, this section focuses on the application of PP guidance laws to the control design of formation flight systems.

Fig. 10.10 Geometry of the PP guidance algorithm [105]
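The PP idea of driving \(\vec{V} \times \vec{R}\) toward zero can be illustrated with a planar kinematic sketch. We do not reproduce the acceleration law of [105, 106]. Instead, as assumptions of our own, the follower's heading rate is made proportional to the signed angle from \(\vec{V}\) to \(\vec{R}\), the virtual leader is placed at body-axis offsets \((d_x, d_y)\) from the leader with altitude held constant, and a saturated speed law closes the range. All gains, speeds, and offsets are illustrative.

```python
import math


def virtual_leader(leader_xy, leader_heading, d_x, d_y):
    """Offset the leader position by (d_x, d_y) given in the leader's body axes."""
    c, s = math.cos(leader_heading), math.sin(leader_heading)
    return (leader_xy[0] + c * d_x - s * d_y,
            leader_xy[1] + s * d_x + c * d_y)


def pp_heading_rate(follower_xy, heading, target_xy, k_psi=2.0):
    """Turn so that V x R -> 0 with V pointing toward R (not away from it)."""
    rx, ry = target_xy[0] - follower_xy[0], target_xy[1] - follower_xy[1]
    vx, vy = math.cos(heading), math.sin(heading)
    angle = math.atan2(vx * ry - vy * rx, vx * rx + vy * ry)  # signed angle V -> R
    return k_psi * angle


def simulate_pp(t_end=10.0, dt=0.01, v_leader=20.0, v_max=30.0, k_v=0.5):
    """Leader flies straight along x; returns the follower-to-virtual-leader range history."""
    leader = [0.0, 0.0]
    fx, fy, psi = -50.0, -20.0, 0.0
    d_x, d_y = -10.0, 5.0  # 10 m behind and 5 m to the left of the leader (assumed)
    ranges = []
    for _ in range(int(round(t_end / dt))):
        target = virtual_leader(leader, 0.0, d_x, d_y)
        dist = math.hypot(target[0] - fx, target[1] - fy)
        ranges.append(dist)
        speed = min(v_leader + k_v * dist, v_max)  # close the gap, saturated
        psi += dt * pp_heading_rate((fx, fy), psi, target)
        fx += dt * speed * math.cos(psi)
        fy += dt * speed * math.sin(psi)
        leader[0] += dt * v_leader
    return ranges


if __name__ == "__main__":
    r = simulate_pp()
    print(round(r[0], 2), round(r[-1], 2))  # initial vs. final range
```

Because the follower is never slower than the leader in this sketch, the closing velocity stays positive, which sidesteps the negative-closing-velocity issue noted above for PN guidance.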

4.3.1 Recent Research on Guidance-Law-Based Control

Gu et al. [107] introduced a nonlinear leader-follower formation control law. They designed a two-loop controller in which nonlinear dynamic inversion (DI) was used for velocity and position tracking in the outer loop, and a linear controller tracked the leader's attitude in the inner loop. The two-loop design relies on the different rates at which the inner-loop and outer-loop dynamic variables change. The controller was experimentally tested on two WVU YF-22 aircraft acting as leader and follower, and the experimental results demonstrated the effectiveness of the proposed formation control law. Yamasaki et al. introduced a PP guidance-based formation control system for a group of UAVs [106]. Their control system combines a PP guidance algorithm with a velocity controller based on the DI technique to avoid collisions, and the attitude controller of the follower aircraft was designed as a two-loop DI controller. Sadeghi et al. improved on the work of Yamasaki et al. [106] by integrating the guidance and control systems through a PID control design [37]. Their approach improved the accuracy of the PP guidance algorithm and the maneuverability of the formation group.
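The core of dynamic inversion, as used in the outer loop of [107] and in [106], is to cancel known nonlinear dynamics so that a simple linear law can be imposed. The scalar sketch below uses a hypothetical plant \(\dot{x} = f(x) + g(x)u\) with an assumed \(f\) and \(g\) (\(g\) never zero). With exact model knowledge, \(u = (\nu - f(x))/g(x)\) yields \(\dot{x} = \nu\), and choosing \(\nu = k(x_{ref} - x)\) gives first-order convergence to the reference. Real aircraft designs, including the two-loop structures above, are multivariable and must also cope with model error.

```python
import math


def f(x):
    """Hypothetical known drift dynamics."""
    return -x ** 3 + math.sin(x)


def g(x):
    """Hypothetical input gain; always >= 1, so it can be inverted."""
    return 2.0 + math.cos(x)


def dynamic_inversion(x, nu):
    """Input that makes f(x) + g(x) * u equal the desired rate nu."""
    return (nu - f(x)) / g(x)


def simulate_di(x0, x_ref, k=2.0, dt=1e-3, t_end=5.0):
    x = x0
    for _ in range(int(round(t_end / dt))):
        nu = k * (x_ref - x)          # outer-loop linear law for the desired rate
        u = dynamic_inversion(x, nu)  # cancel the known nonlinearity
        x += dt * (f(x) + g(x) * u)   # plant step; equals x += dt * nu
    return x


if __name__ == "__main__":
    print(simulate_di(0.0, 1.0))  # close to the reference 1.0
```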

Zhu et al. used a least-squares method to estimate the leader location and then designed a guidance law based on sliding mode control that steers the heading rate of the follower aircraft toward the estimated leader location [108]. Ali et al. presented a guidance law for lateral formation control of UAVs based on sliding mode theory [109], in which two sliding surfaces were connected in series to improve the control response. Wang et al. developed an approach to UAV formation control with obstacle/collision avoidance using a modified Grossberg neural network (GNN) [110]. A model predictive controller was used to track the desired trajectory, and the collision/obstacle avoidance design was simulated in a 3-D environment. A LOS guidance law for formation control of a group of underactuated vessels was studied in [111]. In that approach, a nonlinear synchronization controller was combined with a LOS-based path-following controller to make the overall system more robust and controllable despite underactuation.
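For the LOS path-following idea mentioned for [111], a common lookahead-based LOS law for a straight path along the x-axis commands \(\psi_d = \operatorname{atan2}(-y_e, \Delta)\), where \(y_e\) is the cross-track error and \(\Delta > 0\) is the lookahead distance. The sketch below assumes an idealized unicycle that tracks \(\psi_d\) exactly, ignoring vessel dynamics and underactuation, so it only shows how the guidance law drives the cross-track error to zero; it is not the synchronization controller of [111].

```python
import math


def los_heading(cross_track_error, lookahead):
    """Lookahead-based LOS heading for a path along the x-axis."""
    return math.atan2(-cross_track_error, lookahead)


def follow_line(y0, speed=1.0, lookahead=5.0, dt=0.01, t_end=60.0):
    """Idealized unicycle that tracks the LOS heading perfectly (an assumption)."""
    x, y = 0.0, y0
    for _ in range(int(round(t_end / dt))):
        psi = los_heading(y, lookahead)
        x += dt * speed * math.cos(psi)
        y += dt * speed * math.sin(psi)
    return x, y


if __name__ == "__main__":
    print(follow_line(20.0))  # cross-track error decays toward zero
```

A smaller lookahead distance turns the vehicle toward the path more aggressively, while a larger one gives a smoother but slower approach.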

5 Summary and Conclusion

In this chapter, the algorithms and applications of cooperative control techniques for UAVs were reviewed. Recent research was categorized by application and by method, and each category was discussed separately. The latest studies in cooperative control of UAVs were examined together with the advantages and disadvantages of each method, and applications of cooperative UAV missions in various fields were explored. Although some studies in this field may have been missed, we hope this survey helps researchers gain an overview of the major achievements in cooperative control of UAVs.

Bibliography

L. Lin, M. Roscheck, M.A. Goodrich, B.S. Morse, Supporting wilderness search and rescue with integrated intelligence: autonomy and information at the right time and the right place. in Association for the Advancement of Artificial Intelligence (2010)

J.R. Riehl, G.E. Collins, J.P. Hespanha, Cooperative search by UAV teams: a model predictive approach using dynamic graphs. IEEE Trans. Aerosp. Electron. Syst. 47(4), 2637–2656 (2011)

J. How, Y. Kuwata, E. King, Flight demonstrations of cooperative control for UAV teams, in AIAA 3rd “Unmanned Unlimited” Technical Conference, Workshop and Exhibit (2004), p. 6490

S.-M. Hung, S.N. Givigi, A Q-learning approach to flocking with UAVs in a stochastic environment. IEEE Trans. Cybern. 47(1), 186–197 (2017)

R.R. McCune, G.R. Madey, Control of artificial swarms with DDDAS. Proc. Comput. Sci. 29, 1171–1181 (2014)

W. Ren, R.W. Beard, E.M. Atkins, Information consensus in multivehicle cooperative control. IEEE Control Syst. 27(2), 71–82 (2007)

B.D. Anderson, C. Yu, J.M. Hendrickx, et al., Rigid graph control architectures for autonomous formations. IEEE Control Syst. 28(6), 48–63 (2008)

Q. Wang, H. Gao, F. Alsaadi, T. Hayat, An overview of consensus problems in constrained multi-agent coordination. Syst. Sci. Control Eng. Open Access J. 2(1), 275–284 (2014)

K.-K. Oh, M.-C. Park, H.-S. Ahn, A survey of multi-agent formation control. Automatica 53, 424–440 (2015)

B. Zhu, L. Xie, D. Han, X. Meng, R. Teo, A survey on recent progress in control of swarm systems. Sci. China Inf. Sci. 60(7), 070201 (2017)

M. Senanayake, I. Senthooran, J.C. Barca, H. Chung, J. Kamruzzaman, M. Murshed, Search and tracking algorithms for swarms of robots: a survey. Robot. Auton. Syst. 75, 422–434 (2016)

M.A. Goodrich, J.L. Cooper, J.A. Adams, C. Humphrey, R. Zeeman, B.G. Buss, Using a mini-UAV to support wilderness search and rescue: practices for human-robot teaming, in IEEE International Workshop on Safety, Security and Rescue Robotics, 2007. SSRR 2007 (IEEE, Piscataway, 2007), pp. 1–6

J.Q. Cui, S.K. Phang, K.Z. Ang, F. Wang, X. Dong, Y. Ke, S. Lai, K. Li, X. Li, F. Lin, et al., Drones for cooperative search and rescue in post-disaster situation, in IEEE 7th International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE Conference on Robotics, Automation and Mechatronics (RAM), 2015 (IEEE, Piscataway, 2015), pp. 167–174

N. Nigam, I. Kroo, Control and design of multiple unmanned air vehicles for a persistent surveillance task, in 12th AIAA/ISSMO Multidisciplinary Analysis and Optimization Conference (2008), p. 5913

N. Nigam, S. Bieniawski, I. Kroo, J. Vian, Control of multiple UAVs for persistent surveillance: algorithm and flight test results. IEEE Trans. Control Syst. Technol. 20(5), 1236–1251 (2012)

A. Ahmadzadeh, A. Jadbabaie, V. Kumar, G.J. Pappas, Multi-UAV cooperative surveillance with spatio-temporal specifications, in 45th IEEE Conference on Decision and Control, 2006 (IEEE, Piscataway, 2006), pp. 5293–5298

J.W. Fenwick, P.M. Newman, J.J. Leonard, Cooperative concurrent mapping and localization, in IEEE International Conference on Robotics and Automation, 2002. Proceedings. ICRA’02, vol. 2 (IEEE, Piscataway, 2002), pp. 1810–1817

F. Remondino, L. Barazzetti, F. Nex, M. Scaioni, D. Sarazzi, UAV photogrammetry for mapping and 3D modeling–current status and future perspectives. Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 38(1), C22 (2011)

Y. Lin, J. Hyyppa, A. Jaakkola, Mini-UAV-borne LIDAR for fine-scale mapping. IEEE Geosci. Remote Sens. Lett. 8(3), 426–430 (2011)

L. Zongjian, UAV for mapping low altitude photogrammetric survey, in International Archives of Photogrammetry and Remote Sensing, Beijing, China, vol. 37 (2008), pp. 1183–1186

M.J. Mears, Cooperative electronic attack using unmanned air vehicles, in Proceedings of the 2005 American Control Conference, 2005 (IEEE, Piscataway, 2005), pp. 3339–3347

Z. Junwei, Z. Jianjun, Target distributing of multi-UAVs cooperate attack and defend based on DPSO algorithm, in Sixth International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), 2014, vol. 2 (IEEE, Piscataway, 2014), pp. 396–400

D.A. Paley, F. Zhang, N.E. Leonard, Cooperative control for ocean sampling: the glider coordinated control system. IEEE Trans. Control Syst. Technol. 16(4), 735–744 (2008)

J. Scherer, S. Yahyanejad, S. Hayat, E. Yanmaz, T. Andre, A. Khan, V. Vukadinovic, C. Bettstetter, H. Hellwagner, B. Rinner, An autonomous multi-UAV system for search and rescue, in Proceedings of the First Workshop on Micro Aerial Vehicle Networks, Systems, and Applications for Civilian Use (ACM, New York, 2015), pp. 33–38

S. Waharte, N. Trigoni, Supporting search and rescue operations with UAVs, in International Conference on Emerging Security Technologies (EST), 2010 (IEEE, Piscataway, 2010), pp. 142–147

A. Viguria, I. Maza, A. Ollero, Distributed service-based cooperation in aerial/ground robot teams applied to fire detection and extinguishing missions. Adv. Robot. 24(1–2), 1–23 (2010)

I. Maza, F. Caballero, J. Capitan, J. Martinez-de Dios, A. Ollero, Firemen monitoring with multiple UAVs for search and rescue missions, in IEEE International Workshop on Safety Security and Rescue Robotics (SSRR), 2010 (IEEE, Piscataway, 2010), pp. 1–6

R.W. Beard, T.W. McLain, D.B. Nelson, D. Kingston, D. Johanson, Decentralized cooperative aerial surveillance using fixed-wing miniature UAVs. Proc. IEEE 94(7), 1306–1324 (2006)

N. Nigam, I. Kroo, Persistent surveillance using multiple unmanned air vehicles, in IEEE Aerospace Conference, 2008 (IEEE, Piscataway, 2008), pp. 1–14

D.A. Paley, C. Peterson, Stabilization of collective motion in a time-invariant flowfield. J. Guid. Control. Dyn. 32(3), 771–779 (2009)

A.H. Goktogan, E. Nettleton, M. Ridley, S. Sukkarieh, Real time multi-UAV simulator, in IEEE International Conference on Robotics and Automation, 2003 Proceedings. ICRA’03, vol. 2 (IEEE, Piscataway, 2003), pp. 2720–2726

S.B. Williams, G. Dissanayake, H. Durrant-Whyte, Towards multi-vehicle simultaneous localisation and mapping, in IEEE International Conference on Robotics and Automation, 2002. Proceedings. ICRA’02, vol. 3 (IEEE, Piscataway, 2002), pp. 2743–2748

M. Bryson, S. Sukkarieh, Co-operative localisation and mapping for multiple UAVs in unknown environments, in IEEE Aerospace Conference, 2007 (IEEE, Piscataway, 2007), pp. 1–12

J. Han, Y. Xu, L. Di, Y. Chen, Low-cost multi-UAV technologies for contour mapping of nuclear radiation field. J. Intell. Robot. Syst. 70, 401–410 (2013)

M.A. Kovacina, D. Palmer, G. Yang, R. Vaidyanathan, Multi-agent control algorithms for chemical cloud detection and mapping using unmanned air vehicles, in IEEE/RSJ International Conference on Intelligent Robots and Systems, 2002, vol. 3, pp. 2782–2788 (IEEE, Piscataway, 2002)

I.-S. Jeon, J.-I. Lee, M.-J. Tahk, Homing guidance law for cooperative attack of multiple missiles. J. Guid. Control. Dyn. 33(1), 275–280 (2010)

M. Sadeghi, A. Abaspour, S.H. Sadati, A novel integrated guidance and control system design in formation flight. J. Aerosp. Technol. Manag. 7(4), 432–442 (2015)

E. Lavretsky, F/A-18 autonomous formation flight control system design, in AIAA Guidance, Navigation, and Control Conference and Exhibit (2002), p. 4757

L. Liu, D. McLernon, M. Ghogho, W. Hu, J. Huang, Ballistic missile detection via micro-Doppler frequency estimation from radar return. Digital Signal Process. 22(1), 87–95 (2012)

M.L. Stone, G.P. Banner, Radars for the detection and tracking of ballistic missiles, satellites, and planets. Lincoln Lab. J. 12(2), 217–244 (2000)

C. Schwartz, T. Bryant, J. Cosgrove, G. Morse, J. Noonan, A radar for unmanned air vehicles. Lincoln Lab. J. 3(1), 119–143 (1990)

Y. Wang, S. Dong, L. Ou, L. Liu, Cooperative control of multi-missile systems. IET Control Theory Appl. 9(3), 441–446 (2014)

A. Ahmadzadeh, G. Buchman, P. Cheng, A. Jadbabaie, J. Keller, V. Kumar, G. Pappas, Cooperative control of UAVs for search and coverage, in Proceedings of the AUVSI Conference on Unmanned Systems, vol. 2 (2006)

R.M. Murray, Recent research in cooperative control of multivehicle systems. J. Dyn. Syst. Meas. Control. 129(5), 571–583 (2007)

J. Kim, J.P. Hespanha, Cooperative radar jamming for groups of unmanned air vehicles, in 43rd IEEE Conference on Decision and Control, 2004. CDC, vol. 1 (IEEE, Piscataway, 2004), pp. 632–637

J.-I. Lee, I.-S. Jeon, M.-J. Tahk, Guidance law using augmented trajectory-reshaping command for salvo attack of multiple missiles, in UKACC International Control Conference (2006), pp. 766–771

I.-S. Jeon, J.-I. Lee, M.-J. Tahk, Impact-time-control guidance law for anti-ship missiles. IEEE Trans. Control Syst. Technol. 14(2), 260–266 (2006)

L. Gupta, R. Jain, G. Vaszkun, Survey of important issues in UAV communication networks. IEEE Commun. Surv. Tutorials 18(2), 1123–1152 (2016)

A. Ryan, M. Zennaro, A. Howell, R. Sengupta, J.K. Hedrick, An overview of emerging results in cooperative UAV control, in 43rd IEEE Conference on Decision and Control, 2004. CDC, vol. 1 (IEEE, Piscataway, 2004), pp. 602–607

H. Oh, A.R. Shirazi, C. Sun, Y. Jin, Bio-inspired self-organising multi-robot pattern formation: a review. Robot. Auton. Syst. 91, 83–100 (2017)

F.L. Lewis, H. Zhang, K. Hengster-Movric, A. Das, Cooperative Control of Multi-Agent Systems: Optimal and Adaptive Design Approaches (Springer, Berlin, 2013)

S. Martini, D. Di Baccio, F.A. Romero, A.V. Jiménez, L. Pallottino, G. Dini, A. Ollero, Distributed motion misbehavior detection in teams of heterogeneous aerial robots. Robot. Auton. Syst. 74, 30–39 (2015)

B. Khaldi, F. Harrou, F. Cherif, Y. Sun, Monitoring a robot swarm using a data-driven fault detection approach. Robot. Auton. Syst. 97, 193–203 (2017)

A. Abbaspour, M. Sanchez, A. Sargolzaei, K. Yen, N. Sornkhampan, Adaptive neural network based fault detection design for unmanned quadrotor under faults and cyber attacks, in 25th International Conference on Systems Engineering (ICSEng) (2017)

A. Abbaspour, K.K. Yen, S. Noei, A. Sargolzaei, Detection of fault data injection attack on UAV using adaptive neural network. Proc. Comput. Sci. 95, 193–200 (2016)

A. Sargolzaei, K.K. Yen, M.N. Abdelghani, Preventing time-delay switch attack on load frequency control in distributed power systems. IEEE Trans. Smart Grid 7(2), 1176–1185 (2015)

A. Sargolzaei, K. Yen, M.N. Abdelghani, Delayed inputs attack on load frequency control in smart grid, in Innovative Smart Grid Technologies 2014 (IEEE, Piscataway, 2014), pp. 1–5

A. Sargolzaei, K.K. Yen, M.N. Abdelghani, S. Sargolzaei, B. Carbunar, Resilient design of networked control systems under time delay switch attacks, application in smart grid. IEEE Access 5, 15901–15912 (2017)

A. Sargolzaei, A. Abbaspour, M.A. Al Faruque, A.S. Eddin, K. Yen, Security challenges of networked control systems, in Sustainable Interdependent Networks (Springer, Berlin, 2018), pp. 77–95

F. Giulietti, M. Innocenti, M. Napolitano, L. Pollini, Dynamic and control issues of formation flight. Aerosp. Sci. Technol. 9(1), 65–71 (2005)

R.L. Pereira, K.H. Kienitz, Tight formation flight control based on H∞ approach, in 24th Mediterranean Conference on Control and Automation (MED), 2016 (IEEE, Piscataway, 2016), pp. 268–274

R.J. Ray, B.R. Cobleigh, M.J. Vachon, C. St. John, Flight test techniques used to evaluate performance benefits during formation flight, in NASA Conference Publication, NASA (1998, 2002)

J. Pahle, D. Berger, M.W. Venti, J.J. Faber, C. Duggan, K. Cardinal, A preliminary flight investigation of formation flight for drag reduction on the C-17 aircraft (2012)

A. Abbaspour, K.K. Yen, P. Forouzannezhad, A. Sargolzaei, A neural adaptive approach for active fault-tolerant control design in UAV. IEEE Trans. Syst. Man Cybern. Syst. Hum. 99, 1–11 (2018)

A. Abbaspour, P. Aboutalebi, K.K. Yen, A. Sargolzaei, Neural adaptive observer-based sensor and actuator fault detection in nonlinear systems: application in UAV. ISA Trans. 67, 317–329 (2017)

V. Borkar, P. Varaiya, Asymptotic agreement in distributed estimation. IEEE Trans. Autom. Control 27(3), 650–655 (1982)

J. Tsitsiklis, D. Bertsekas, M. Athans, Distributed asynchronous deterministic and stochastic gradient optimization algorithms. IEEE Trans. Autom. Control 31(9), 803–812 (1986)

A. Jadbabaie, J. Lin, A.S. Morse, Coordination of groups of mobile autonomous agents using nearest neighbor rules. IEEE Trans. Autom. Control 48(6), 988–1001 (2003)

R. Olfati-Saber, R.M. Murray, Consensus problems in networks of agents with switching topology and time-delays. IEEE Trans. Autom. Control 49(9), 1520–1533 (2004)

T. Vicsek, A. Czirók, E. Ben-Jacob, I. Cohen, O. Shochet, Novel type of phase transition in a system of self-driven particles. Phys. Rev. Lett. 75(6), 1226 (1995)

G. Xie, L. Wang, Consensus control for a class of networks of dynamic agents. Int. J. Robust Nonlinear Control 17(10–11), 941–959 (2007)

F. Xiao, L. Wang, Asynchronous consensus in continuous-time multi-agent systems with switching topology and time-varying delays. IEEE Trans. Autom. Control 53(8), 1804–1816 (2008)

X. Chen, F. Hao, Event-triggered average consensus control for discrete-time multi-agent systems. IET Control Theory Appl. 6(16), 2493–2498 (2012)

H. Zhang, H. Jiang, Y. Luo, G. Xiao, Data-driven optimal consensus control for discrete-time multi-agent systems with unknown dynamics using reinforcement learning method. IEEE Trans. Ind. Electr. 64(5), 4091–4100 (2017)

D. Ding, Z. Wang, B. Shen, G. Wei, Event-triggered consensus control for discrete-time stochastic multi-agent systems: the input-to-state stability in probability. Automatica 62, 284–291 (2015)

J. Jin, N. Gans, Collision-free formation and heading consensus of nonholonomic robots as a pose regulation problem. Robot. Auton. Syst. 95, 25–36 (2017)

M. Jamshidi, A.J. Betancourt, J. Gomez, Cyber-physical control of unmanned aerial vehicles. Sci. Iran. 18(3), 663–668 (2011)

H. Rezaee, F. Abdollahi, Average consensus over high-order multiagent systems. IEEE Trans. Autom. Control 60(11), 3047–3052 (2015)

W. Li, Unified generic geometric-decompositions for consensus or flocking systems of cooperative agents and fast recalculations of decomposed subsystems under topology-adjustments. IEEE Trans. Cybern. 46(6), 1463–1470 (2016)

H. Liang, H. Zhang, Z. Wang, Distributed-observer-based cooperative control for synchronization of linear discrete-time multi-agent systems. ISA Trans. 59, 72–78 (2015)

Y. Xia, X. Na, Z. Sun, J. Chen, Formation control and collision avoidance for multi-agent systems based on position estimation. ISA Trans. 61, 287–296 (2016)

T. Han, Z.-H. Guan, M. Chi, B. Hu, T. Li, X.-H. Zhang, Multi-formation control of nonlinear leader-following multi-agent systems. ISA Trans. 69, 140–147 (2017)

G.-S. Han, Z.-H. Guan, X.-M. Cheng, Y. Wu, F. Liu, Multiconsensus of second order multiagent systems with directed topologies. Int. J. Control, Autom. Syst. 11(6), 1122–1127 (2013)

S. Shoja, M. Baradarannia, F. Hashemzadeh, M. Badamchizadeh, P. Bagheri, Surrounding control of nonlinear multi-agent systems with non-identical agents. ISA Trans. 70, 219 (2017)

H. Hou, Q. Zhang, Finite-time synchronization for second-order nonlinear multi-agent system via pinning exponent sliding mode control. ISA Trans. 65, 96–108 (2016)

M. Taheri, F. Sheikholeslam, M. Najafi, M. Zekri, Adaptive fuzzy wavelet network control of second order multi-agent systems with unknown nonlinear dynamics. ISA Trans. 69, 89–101 (2017)

H. Zhang, F.L. Lewis, Adaptive cooperative tracking control of higher-order nonlinear systems with unknown dynamics. Automatica 48(7), 1432–1439 (2012)

S. El-Ferik, A. Qureshi, F.L. Lewis, Neuro-adaptive cooperative tracking control of unknown higher-order affine nonlinear systems. Automatica 50(3), 798–808 (2014)

Z. Gallehdari, N. Meskin, K. Khorasani, A distributed control reconfiguration and accommodation for consensus achievement of multiagent systems subject to actuator faults. IEEE Trans. Control Syst. Technol. 24(6), 2031–2047 (2016)

Z. Gallehdari, N. Meskin, K. Khorasani, Distributed reconfigurable control strategies for switching topology networked multi-agent systems. ISA Trans. 71, 51–67 (2017)

Y. Hua, X. Dong, Q. Li, Z. Ren, Distributed fault-tolerant time-varying formation control for high-order linear multi-agent systems with actuator failures. ISA Trans. 71, 40–50 (2017)

R. Wang, X. Dong, Q. Li, Z. Ren, Distributed adaptive formation control for linear swarm systems with time-varying formation and switching topologies. IEEE Access 4, 8995–9004 (2016)

W. Wang, C. Wen, J. Huang, Distributed adaptive asymptotically consensus tracking control of nonlinear multi-agent systems with unknown parameters and uncertain disturbances. Automatica 77, 133–142 (2017)

C.W. Reynolds, Flocks, herds and schools: a distributed behavioral model. ACM SIGGRAPH Comput. Graph. 21(4), 25–34 (1987)

N. Moshtagh, A. Jadbabaie, Distributed geodesic control laws for flocking of nonholonomic agents. IEEE Trans. Autom. Control 52(4), 681–686 (2007)

R. Olfati-Saber, Flocking for multi-agent dynamic systems: algorithms and theory. IEEE Trans. Autom. Control 51(3), 401–420 (2006)

O. Saif, I. Fantoni, A. Zavala-Rio, Flocking of multiple unmanned aerial vehicles by LQR control, in International Conference on Unmanned Aircraft Systems (ICUAS), 2014 (IEEE, Piscataway, 2014), pp. 222–228

S.H. Semnani, O.A. Basir, Semi-flocking algorithm for motion control of mobile sensors in large-scale surveillance systems. IEEE Trans. Cybern. 45(1), 129–137 (2015)

A. Chapman, M. Mesbahi, UAV flocking with wind gusts: adaptive topology and model reduction, in American Control Conference (ACC), 2011 (IEEE, Piscataway, 2011), pp. 1045–1050

H.G. Tanner, A. Jadbabaie, G.J. Pappas, Flocking in fixed and switching networks. IEEE Trans. Autom. Control 52(5), 863–868 (2007)

S.A. Quintero, G.E. Collins, J.P. Hespanha, Flocking with fixed-wing UAVs for distributed sensing: a stochastic optimal control approach, in American Control Conference (ACC), 2013 (IEEE, Piscataway, 2013), pp. 2025–2031

S. Martin, A. Girard, A. Fazeli, A. Jadbabaie, Multiagent flocking under general communication rule. IEEE Trans. Control Netw. Syst. 1(2), 155–166 (2014)

W. Naeem, R. Sutton, S. Ahmad, R. Burns, A review of guidance laws applicable to unmanned underwater vehicles. J. Navig. 56(1), 15–29 (2003)

C.-F. Lin, Modern Navigation, Guidance, and Control Processing, vol. 2 (Prentice Hall, Englewood Cliffs, 1991)

N.A. Shneydor, Missile Guidance and Pursuit: Kinematics, Dynamics and Control (Elsevier, Amsterdam, 1998)

T. Yamasaki, K. Enomoto, H. Takano, Y. Baba, S. Balakrishnan, Advanced pure pursuit guidance via sliding mode approach for chase UAV, in Proceedings of the AIAA Guidance, Navigation, and Control Conference (AIAA, 2009), AIAA 2009-6298

Y. Gu, B. Seanor, G. Campa, M.R. Napolitano, L. Rowe, S. Gururajan, S. Wan, Design and flight testing evaluation of formation control laws. IEEE Trans. Control Syst. Technol. 14(6), 1105–1112 (2006)

S. Zhu, D. Wang, C.B. Low, Cooperative control of multiple UAVs for moving source seeking. J. Intell. Robot. Syst. 74(1–2), 333–346 (2014)

S.U. Ali, R. Samar, M.Z. Shah, A.I. Bhatti, K. Munawar, U.M. Al-Saggaf, Lateral guidance and control of UAVs using second-order sliding modes. Aerosp. Sci. Technol. 49, 88–100 (2016)

X. Wang, V. Yadav, S. Balakrishnan, Cooperative UAV formation flying with obstacle/collision avoidance. IEEE Trans. Control Syst. Technol. 15(4), 672–679 (2007)

E. Børhaug, A. Pavlov, E. Panteley, K.Y. Pettersen, Straight line path following for formations of underactuated marine surface vessels. IEEE Trans. Control Syst. Technol. 19(3), 493–506 (2011)

Acknowledgements

The authors would like to thank Dr. Kang Yen for his guidance, contribution, and effort toward this paper.

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Sargolzaei, A., Abbaspour, A., Crane, C.D. (2020). Control of Cooperative Unmanned Aerial Vehicles: Review of Applications, Challenges, and Algorithms. In: Amini, M. (eds) Optimization, Learning, and Control for Interdependent Complex Networks. Advances in Intelligent Systems and Computing, vol 1123. Springer, Cham. https://doi.org/10.1007/978-3-030-34094-0_10

DOI: https://doi.org/10.1007/978-3-030-34094-0_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34093-3

Online ISBN: 978-3-030-34094-0

eBook Packages: Engineering (R0)