Abstract

Robustness of deep learning methods for limited angle tomography is challenged by two major factors: (a) due to insufficient training data, the network may not generalize well to unseen data; (b) deep learning methods are sensitive to noise. Thus, generating reconstructed images directly from a neural network appears inadequate. We propose to constrain the reconstructed images to be consistent with the measured projection data, while the unmeasured information is complemented by learning-based methods. For this purpose, a data consistent artifact reduction (DCAR) method is introduced: First, a prior image is generated from an initial limited angle reconstruction via deep learning as a substitute for missing information. Afterwards, a conventional iterative reconstruction algorithm is applied, integrating the data consistency in the measured angular range and the prior information in the missing angular range. This ensures data integrity in the measured area, while inaccuracies introduced by the deep learning prior lie only in areas where no information is acquired. The proposed DCAR method achieves significant image quality improvement: for \(120^\circ \) cone-beam limited angle tomography, more than \(10\%\) RMSE reduction in the noise-free case and more than \(24\%\) RMSE reduction in the noisy case compared with a state-of-the-art U-Net based method.

1 Introduction

Recently, deep learning has achieved overwhelming success in various computed tomography (CT) applications [1, 2], including low-dose CT [3,4,5], sparse-view reconstruction [6,7,8], and metal artifact reduction [9, 10]. In this work, we are interested in the application of deep learning to limited angle tomography. Image reconstruction from data acquired in an insufficient angular range is called limited angle tomography. It arises when the gantry rotation of a CT system is restricted by other system parts, or a super short scan is preferred for the sake of quick scanning time, low dose, or less contrast agent.

Conventionally, limited angle tomography is addressed by extrapolation methods [11, 12] or iterative reconstruction algorithms with total variation [13,14,15]. In the past three years, various deep learning methods have been investigated in limited angle tomography [16,17,18,19,20,21]. For example, Gu and Ye adapted the U-Net architecture [22] to learn artifacts from streaky images in the multi-scale wavelet domain [18]. Good quality images are obtained by this method for \(120^\circ \) limited angle tomography. The results presented in the literature reveal promising developments for a clinical applicability of deep learning-based reconstructions.

However, the robustness of deep learning in practical applications is still a concern. On the one hand, deep learning methods may fail to generalize to new test instances when they are trained on an insufficient dataset. On the other hand, due to the curse of high dimensional space [23], deep neural networks have been reported to be vulnerable to small perturbations, including adversarial examples and noise [24,25,26]. In the field of limited angle tomography, our previous work [19] has demonstrated that the U-Net method is not robust to Poisson noise either. In this work, we devise an algorithm that overcomes these limitations by enforcing data consistency with the measured raw data.

Since generating reconstructed images directly from a neural network appears inadequate, we propose to combine deep learning with known operators. The first category of such approaches is to build deep neural network architectures directly based on analytic formulas of conventional methods. In these neural networks, each layer represents a certain known operator whose weights are fine tuned by data-driven learning to improve precision. Therefore, they are called “precision learning” [27, 28]. In precision learning, maximal error bounds are limited by prior information of the analytic formulas. Würfl et al. [16, 20] proposed a neural network architecture based on filtered back-projection (FBP) to learn the compensation weights [29] for limited angle reconstruction. However, this particular method is not suitable for small angular ranges, e.g. \(120^\circ \) cone-beam limited angle tomography, since no redundant data are available to compensate missing data. The second category is to use deep learning and conventional methods to reconstruct different parts of an imaged object respectively. Bubba et al. [21] proposed a hybrid deep learning-shearlet framework for limited angle tomography, where an iterative shearlet transform algorithm [30] is utilized to reconstruct visible singularities of an imaged object while a U-Net based neural network with dense blocks [31] is utilized to predict the invisible ones. This method achieves better image quality than pure model or data-driven-based reconstruction methods. The third category is to use deep learning results as prior information for conventional methods. Zhang et al.’s method [10] is such an example for metal artifact reduction. To make the best of measured data, Zhang et al. used deep learning predictions as prior images to interpolate projection data in metal corrupted areas [10].

In this work, we choose the third category for limited angle tomography. In [19], the U-Net learns artifacts from streaky images in the image domain only. Reconstructed images obtained by such image-to-image prediction are very likely not consistent with the measured data, as the prediction does not have any direct connection to the measured data. To make the predicted images data consistent, a data consistent artifact reduction (DCAR) method is proposed: First, the predicted images are used as prior images to provide information in the missing angular ranges. Afterwards, a conventional reconstruction algorithm is applied to integrate the prior information in the missing angular ranges and to constrain the reconstructed images to be consistent with the measured data in the acquired angular range.

2 Method

2.1 The U-Net Architecture

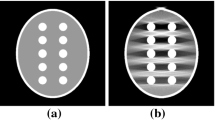

As displayed in Fig. 1, the same U-Net architecture as that in [19] is used for artifact reduction in limited angle tomography, which is modified from [22] and [18]. In this work, the input images are Ram-Lak-kernel-based FBP reconstructions from limited angle data, while the output images are artifact images. The Hounsfield scaled images are normalized to ensure stable training. An \(\ell _2\) loss function is used.

Fig. 1. The U-Net architecture for limited angle tomography (modified from [22]).

2.2 Data Consistent Artifact Reduction

Data Fidelity of Measured Data: We denote measured projections by \(\varvec{p}_{\text {m}}\) and the system matrix for the measured projections by \(\varvec{A}_{\text {m}}\) in cone-beam limited angle tomography. The FBP reconstruction from the measured data \(\varvec{p}_{\text {m}}\) only is denoted by \(\varvec{f}_{\text {FBP}}\). The artifact image, predicted by the U-Net, is denoted by \(\varvec{f}_{\text {artifact}}\). Then an estimation of the artifact-free image, denoted by \(\varvec{f}_{\text {U-Net}}\), is obtained by \(\varvec{f}_{\text {U-Net}} = \varvec{f}_{\text {FBP}} - \varvec{f}_{\text {artifact}}\). Due to insufficient training data or sensitivity to noise in the application of limited angle tomography [19], \(\varvec{f}_{\text {U-Net}}\) is generally not consistent with the measured data. A data consistent reconstruction image \(\varvec{f}\) satisfies the following constraint,

\[ \Vert \varvec{A}_{\text {m}} \varvec{f} - \varvec{p}_{\text {m}} \Vert \le e_1, \tag{1} \]

where \(e_1\) is a parameter for error tolerance. When the measured data \(\varvec{p}_{\text {m}}\) are noise-free, \(e_1\) is ideally zero. When \(\varvec{p}_{\text {m}}\) contains noise caused by various physical effects, \(e_1\) is a certain positive value.

Because of the severe ill-posedness of limited angle tomography, the image satisfying the above constraint is not unique. We aim to reconstruct an image which satisfies the above constraint and is at the same time close to the U-Net reconstruction \(\varvec{f}_{\text {U-Net}}\). For this purpose, we choose to initialize the image \(\varvec{f}\) with \(\varvec{f}_{\text {U-Net}}\) and solve for it in an iterative manner, i.e.,

\[ \varvec{f}^{(0)} = \varvec{f}_{\text {U-Net}}. \tag{2} \]

In this way, the data consistency constraint is fully satisfied. Note that with such initialization, the deep learning prior \(\varvec{f}_{\text {U-Net}}\) contributes to the selection of one image among all images satisfying Eq. (1).

Data Fidelity of Unmeasured Data: We further denote the system matrix for an unmeasured angular range by \(\varvec{A}_\text {u}\) and its corresponding projections by \(\varvec{p}_{\text {u}}\). In cone-beam computed tomography, a short scan is necessary for image reconstruction. Therefore, in this work, we choose \(\varvec{A}_\text {u}\) such that \(\varvec{A}_{\text {m}}\) and \(\varvec{A}_\text {u}\) form a system matrix for a short scan CT system. Although the projections \(\varvec{p}_{\text {u}}\) are not measured, they can be approximated by the deep learning reconstruction \(\varvec{f}_{\text {U-Net}}\) via forward projection. Making the best of such prior information, the following constraint is proposed,

\[ \Vert \varvec{A}_{\text {u}} \varvec{f} - \varvec{p}_{\text {u}} \Vert = \Vert \varvec{A}_{\text {u}} \varvec{f} - \varvec{A}_{\text {u}} \varvec{f}_{\text {U-Net}} \Vert \le e_2, \tag{3} \]

where the error tolerance parameter \(e_2\) accounts for the inaccuracy of the deep learning prior \(\varvec{f}_{\text {U-Net}}\). When \(\varvec{f}_{\text {U-Net}}\) has poor image quality, a relatively large value should be set. This constraint indicates that the final reconstruction \(\varvec{f}\) is close to the deep learning prior \(\varvec{f}_{\text {U-Net}}\) in the unmeasured space and the difference between them is controlled by the parameter \(e_2\).

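Regularization: To further reduce noise and artifacts corresponding to the error tolerance of \(e_1\) and \(e_2\), additional regularization is applied. In this work, the following iterative reweighted total variation (wTV) regularization [15] is utilized,

\[ \Vert \varvec{f}^{(n)} \Vert_{\text {wTV}} = \sum_{x,y,z} \varvec{w}^{(n)}_{x,y,z} \Vert \mathcal {D} \varvec{f}^{(n)}_{x,y,z} \Vert, \qquad \varvec{w}^{(n)}_{x,y,z} = \frac{1}{\Vert \mathcal {D} \varvec{f}^{(n-1)}_{x,y,z} \Vert + \epsilon }, \tag{4} \]

where \(\varvec{f}^{(n)}\) is the image at the \(n^\text {th}\) iteration, \(\mathcal {D}\) denotes the discrete gradient operator evaluated at voxel \((x, y, z)\), \(\varvec{w}^{(n)}\) is the weight vector for the \(n^\text {th}\) iteration which is computed from the previous iteration, and \(\epsilon \) is a small positive value added to avoid division by zero. A smaller value of \(\epsilon \) results in finer image resolution but slower convergence speed.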
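As a rough illustration of the reweighting described above, the following NumPy sketch computes wTV weights from a previous iterate and evaluates the weighted TV of a 2-D image. The forward-difference gradient, the isotropic gradient magnitude, and all function names are assumptions made for this example; the discretization used in [15] may differ.

```python
import numpy as np

def gradient_magnitude(img):
    """Isotropic magnitude of forward differences (assumed discretization).

    The last row/column is replicated, so the difference there is zero.
    """
    dx = np.diff(img, axis=1, append=img[:, -1:])
    dy = np.diff(img, axis=0, append=img[-1:, :])
    return np.sqrt(dx ** 2 + dy ** 2)

def wtv_weights(prev_img, eps):
    """Weights for the current iteration, computed from the previous iterate."""
    return 1.0 / (gradient_magnitude(prev_img) + eps)

def wtv_value(img, weights):
    """Weighted total variation of img for fixed weights."""
    return float(np.sum(weights * gradient_magnitude(img)))

# Toy image in HU: a 200 HU square on a -1000 HU background.
img = np.full((8, 8), -1000.0)
img[2:6, 2:6] = 200.0

w = wtv_weights(img, eps=5.0)  # eps = 5 HU, the value used in the experiments
print("weight in flat regions:", w.max())
print("weight at the strongest edge:", w.min())
print("wTV value:", wtv_value(img, w))
```

Flat regions receive the largest weight \(1/\epsilon \), so small fluctuations there are penalized strongly, whereas strong edges receive small weights and are largely preserved.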

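Overall Algorithm: Therefore, the overall objective function for our DCAR method is as follows,

\[ \min _{\varvec{f}} \Vert \varvec{f} \Vert_{\text {wTV}} \quad \text {subject to} \quad \Vert \varvec{A}_{\text {m}} \varvec{f} - \varvec{p}_{\text {m}} \Vert \le e_1, \quad \Vert \varvec{A}_{\text {u}} \varvec{f} - \varvec{p}_{\text {u}} \Vert \le e_2, \tag{5} \]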

which is a constrained optimization problem.

To solve the above optimization problem, simultaneous algebraic reconstruction technique (SART) + wTV is applied [15], i.e., SART is utilized to minimize the data fidelity terms of Eqs. (1) and (3), while a gradient descent method is utilized to minimize the wTV term. To minimize the data fidelity terms, SART is adapted as follows,

\[ \varvec{f}_j^{l+1} = \varvec{f}_j^{l} + \lambda \cdot \frac{\sum _{\varvec{p}_i \in \varvec{P}_{\beta }} \frac{\varvec{A}_{i,j}}{\sum _{k=1}^{N} \varvec{A}_{i,k}} \cdot \mathcal {S}_{\tau }\left( \varvec{p}_i - \sum _{k=1}^{N} \varvec{A}_{i,k} \varvec{f}_k^{l} \right) }{\sum _{\varvec{p}_i \in \varvec{P}_{\beta }} \varvec{A}_{i,j}}, \qquad \mathcal {S}_{\tau }(x) = \begin{cases} x - \tau , &amp; x > \tau , \\ x + \tau , &amp; x < -\tau , \\ 0, &amp; \text {otherwise}, \end{cases} \tag{6} \]

where the system matrix \(\varvec{A}\) is the combination of \(\varvec{A}_{\text {m}}\) and \(\varvec{A}_{\text {u}}\), the projection vector \(\varvec{p}\) is the combination of \(\varvec{p}_{\text {m}}\) and \(\varvec{p}_{\text {u}}\), and \(\mathcal {S}_{\tau }\) is a soft-thresholding operator with threshold \(\tau \) to deal with error tolerance. \(\varvec{p}_{\text {u}}\) is estimated and substituted by \(\varvec{A}_{\text {u}} \varvec{f}_{\text {U-Net}}\) in the above formula. For the other parameters, \(\varvec{f}_j\) stands for the \(j^\text {th}\) pixel of \(\varvec{f}\), \(\varvec{p}_i\) stands for the \(i^\text {th}\) projection ray of \(\varvec{p}\), \(\varvec{A}_{i,j}\) is the element of \(\varvec{A}\) at the \(i^\text {th}\) row and the \(j^\text {th}\) column, \(l\) is the iteration number, \(N\) is the total pixel number of \(\varvec{f}\), \(\lambda \) is a relaxation parameter, \(\beta \) is the X-ray source rotation angle, and \(\varvec{P}_{\beta }\) stands for the set of projection rays when the source is at rotation angle \(\beta \). To minimize the wTV term, the gradient of \(||\varvec{f}||_{\text {wTV}}\) w.r.t. each pixel is computed and a gradient descent method using backtracking line search is applied [15].
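The data fidelity step described above can be sketched on a toy problem. The code below is a minimal illustration, not the authors' implementation: it uses a dense \(6 \times 4\) system matrix for a \(2 \times 2\) image, treats row sums and column sums as the two measured views and diagonal sums as the unmeasured view, and assumes that the per-ray threshold \(\tau \) equals \(e_1\) on measured rays and \(e_2\) on unmeasured rays. That mapping, the toy geometry, and the names `soft_threshold` and `sart_sweep` are assumptions of this example. The wTV step is omitted.

```python
import numpy as np

def soft_threshold(x, tau):
    """Shrink x toward zero by tau; entries within [-tau, tau] become 0."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def sart_sweep(f, A, p, tau, view_ids, lam=0.8):
    """One pass over all views; rays with the same view id are used together."""
    f = f.copy()
    row_sums = A.sum(axis=1)  # assumed positive for every ray
    for view in np.unique(view_ids):
        rows = np.flatnonzero(view_ids == view)
        A_v = A[rows]
        # Thresholded residual: deviations below tau are tolerated.
        residual = soft_threshold(p[rows] - A_v @ f, tau[rows])
        back = A_v.T @ (residual / row_sums[rows])
        col_sums = A_v.sum(axis=0)
        f += lam * back / np.maximum(col_sums, 1e-12)
    return f

# Toy geometry for a 2x2 image flattened to 4 pixels.
A_m = np.array([[1, 1, 0, 0], [0, 0, 1, 1],      # view 0: row sums
                [1, 0, 1, 0], [0, 1, 0, 1]], dtype=float)  # view 1: column sums
A_u = np.array([[1, 0, 0, 1], [0, 1, 1, 0]], dtype=float)  # view 2: diagonals

f_true = np.array([0.0, 100.0, 200.0, 300.0])
f_unet = f_true + np.array([30.0, -10.0, -40.0, 20.0])  # imperfect prior

p_m = A_m @ f_true   # measured projections
p_u = A_u @ f_unet   # unmeasured projections, estimated from the prior

A = np.vstack([A_m, A_u])
p = np.concatenate([p_m, p_u])
view_ids = np.array([0, 0, 1, 1, 2, 2])
e1, e2 = 1e-3, 0.5
tau = np.array([e1] * 4 + [e2] * 2)

f = f_unet.copy()    # initialization with the prior image
res_before = np.linalg.norm(A_m @ f - p_m)
for _ in range(50):
    f = sart_sweep(f, A, p, tau, view_ids)
res_after = np.linalg.norm(A_m @ f - p_m)
print(res_before, res_after)
```

In this toy setting the measured residual shrinks to roughly the tolerance level, while the result generally differs from `f_true`: the component that the measured views cannot see is inherited from the prior, which mirrors the role of the initialization discussed for Eq. (1).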

2.3 Experimental Setup

We validate the proposed DCAR algorithm using 17 patients’ data from the AAPM Low-Dose CT Grand Challenge [32] simulated in \(120^\circ \) cone-beam limited angle tomography without and with Poisson noise.

System Configuration: For each patient’s data, limited angle projections are simulated in a cone-beam limited angle tomography system with parameters listed in Table 1. In the noisy case, Poisson noise is simulated considering an initial exposure of \(10^5\) photons at each detector pixel before attenuation.
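A common way to simulate such noise, shown here only as a sketch under stated assumptions, is to convert line integrals to expected photon counts with the Beer-Lambert law, draw Poisson samples, and convert back. The monoenergetic model, the clipping of zero counts to one photon, and the function name `add_poisson_noise` are assumptions of this example and are not taken from the paper.

```python
import numpy as np

def add_poisson_noise(line_integrals, photons=1e5, rng=None):
    """Add Poisson noise to noise-free line integrals (monoenergetic model)."""
    rng = np.random.default_rng() if rng is None else rng
    expected_counts = photons * np.exp(-line_integrals)  # Beer-Lambert law
    counts = rng.poisson(expected_counts).astype(float)
    counts = np.maximum(counts, 1.0)  # assumption: avoid log(0) for starved rays
    return -np.log(counts / photons)

rng = np.random.default_rng(0)
clean = np.full(10000, 3.0)  # toy sinogram: constant line integral of 3
noisy = add_poisson_noise(clean, photons=1e5, rng=rng)
print(noisy.mean(), noisy.std())
```

For a line integral of 3, about \(10^5 e^{-3} \approx 5000\) photons reach the detector, so the standard deviation of the noisy line integral is roughly \(1/\sqrt{5000} \approx 0.014\); rays with stronger attenuation are noisier.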

Training and Test Data: To investigate the dependence of the U-Net’s performance on training data, leave-one-out cross-validation is performed. For each validation, data from 16 patients are used for training while the data from the remaining patient are used for testing. For each of the 16 training patients, 25 slices are chosen for training. For the validation patient, all 256 slices from the FBP reconstruction \(\varvec{f}_{\text {FBP}}\) are fed to the U-Net for evaluation. As the artifacts are mainly caused by the limited angle scan, the effect of the cone-beam angle is neglected. Therefore, 2-D slices instead of volumes are used for training and testing to avoid a high computational cost. Both the training and test data are noise-free in the noise-free case, while both the training data and test data contain Poisson noise in the noisy case.
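The cross-validation bookkeeping described above can be sketched as follows. The patient identifiers 1 to 17 and the function name are placeholders chosen for this example; how the 25 slices per patient are selected is not specified here and is left out.

```python
def leave_one_out_splits(patient_ids):
    """Yield (training patients, test patient) for every patient in turn."""
    for test_id in patient_ids:
        train_ids = [pid for pid in patient_ids if pid != test_id]
        yield train_ids, test_id

patients = list(range(1, 18))  # 17 patients, numbered 1..17 (placeholder ids)
splits = list(leave_one_out_splits(patients))

slices_per_patient = 25
train_ids, test_id = splits[0]
training_slices = len(train_ids) * slices_per_patient
print(len(splits), len(train_ids), training_slices)
```

Each of the 17 folds trains on 16 patients, i.e. on \(16 \times 25 = 400\) slices, and evaluates on all slices of the held-out patient.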

Algorithm Parameters: The U-Net is trained on the above data using the Adam optimizer. The learning rate is \(10^{-3}\) for the first 100 epochs, \(10^{-4}\) for the \(101{-}130^{\text {th}}\) epochs, and \(10^{-5}\) for the \(131{-}150^\text {th}\) epochs. The \(\ell _2\)-norm is applied to regularize the network weights. The regularization parameter is \(10^{-4}\).
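The step-wise learning rate schedule stated above can be written as a small framework-independent function. This is only a sketch: the 1-based epoch counting and the function name are assumptions of this example, and in practice the returned value would be handed to whatever optimizer interface is in use.

```python
def learning_rate(epoch):
    """Learning rate for a 1-based epoch index in the 150-epoch schedule."""
    if not 1 <= epoch <= 150:
        raise ValueError("epoch must be in 1..150")
    if epoch <= 100:
        return 1e-3
    if epoch <= 130:
        return 1e-4
    return 1e-5

schedule = [learning_rate(epoch) for epoch in range(1, 151)]
print(schedule[0], schedule[100], schedule[130])
```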

For reconstruction, in the noise-free case, the error tolerance value \(e_1\) is set to 0.001 in Eq. (1) for discretization error, while \(e_1\) is set to 0.01 for the noisy case. The U-Net reconstructions \(\varvec{f}_\text {U-Net}\) of each patient are reprojected in the angular range of \([0^\circ , 210^\circ ]\). Other system parameters are the same as those in Table 1. A relatively large tolerance value of 0.5 is chosen empirically for \(e_2\) in Eq. (3). For SART, the parameter \(\lambda \) in Eq. (6) is set to 0.8. For the wTV regularization, the parameter \(\epsilon \) is set to 5 HU for weight update. 50 iterations of SART + wTV are applied using the U-Net reconstruction \(\varvec{f}_{\text {U-Net}}\) as initialization to get the final reconstruction. For comparison, the results of 100 iterations of SART + wTV using zero images as initialization are presented.

Fig. 2. Reconstruction results of three example slices by U-Net and DCAR in noise-free \(120^\circ \) cone-beam limited angle tomography. The images from top to bottom are from Patient NO. 17, 4, and 7, respectively. The areas marked by the arrows are reconstructed incorrectly by the U-Net and are rectified by DCAR. The RMSE value for each slice is displayed in its subtitle. Window: \([-1000, 1000]\) HU.

3 Results

The results of three example slices in \(120^\circ \) noise-free cone-beam limited angle tomography are displayed in Fig. 2. These three slices are from Patient NO. 17, 4, and 7, respectively. In each row, the reference image \(\varvec{f}_{\text {reference}}\), the FBP reconstruction \(\varvec{f}_{\text {FBP}}\), the SART + wTV (wTV for short in the following) reconstruction \(\varvec{f}_{\text {wTV}}\), the U-Net reconstruction \(\varvec{f}_{\text {U-Net}}\), and the DCAR reconstruction \(\varvec{f}_{\text {DCAR}}\) are displayed in order. Comparing Fig. 2(b) with Fig. 2(a), the body outline of Patient 17 is severely distorted due to missing data. Moreover, many streaks occur, obscuring anatomical structures such as the ribs and the vertebra. Figures 2(c)–(e) demonstrate that wTV, U-Net, and DCAR are all able to improve these corrupted anatomical structures. The root-mean-square error (RMSE) w.r.t. the reference image is reduced significantly from 328 HU for \(\varvec{f}_{\text {FBP}}\) to 138 HU for \(\varvec{f}_{\text {wTV}}\). However, the intensity values at the top body part are still too low in \(\varvec{f}_{\text {wTV}}\). The RMSE is further reduced to 105 HU for \(\varvec{f}_{\text {U-Net}}\) in Fig. 2(d), while DCAR reaches the smallest RMSE value of 88 HU for this slice. In the middle row and the bottom row, the U-Net is able to reconstruct most anatomical structures well. However, the structures indicated by the red arrows are apparently incorrect compared with the reference images. In Fig. 2(i), the dark holes indicated by the red arrows appear, very likely because the corresponding areas in Fig. 2(g) have low intensities due to dark streak artifacts. In contrast, the two large holes in Fig. 2(n) occur without any clear cause, since no dark areas are present in Fig. 2(l). Apparently, Fig. 2(n) is not consistent with the measured data. With DCAR, the structures in these two holes are restored, although some darkness remains.

The comparison of the mean RMSE values for wTV, U-Net, and DCAR in the leave-one-out cross-validation is plotted in Fig. 3. It indicates that wTV has the largest mean RMSE values among these three methods and DCAR achieves more than \(10\%\) improvement in mean RMSE values compared with the U-Net. This convincingly demonstrates the benefit of DCAR in reducing artifacts for limited angle tomography.
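For reference, the RMSE metric and the relative improvement quoted in this section can be computed as in the short sketch below. The function names are placeholders; the numbers 105 HU and 88 HU are the per-slice values reported above for the top row of Fig. 2, used here only as a worked example of the percentage calculation.

```python
import numpy as np

def rmse(image, reference):
    """Root-mean-square error between an image and its reference (same units)."""
    diff = np.asarray(image, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

def relative_reduction(rmse_before, rmse_after):
    """Fraction by which the RMSE decreases, relative to the starting value."""
    return (rmse_before - rmse_after) / rmse_before

# Per-slice values from the text: U-Net 105 HU, DCAR 88 HU.
reduction = relative_reduction(105.0, 88.0)
print(f"{100 * reduction:.1f}% RMSE reduction for this slice")
```

For this slice the reduction is about 16%, which is in line with the mean improvement of more than \(10\%\) over all patients.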

Fig. 4. Reconstruction results of three example slices by U-Net and DCAR in \(120^\circ \) cone-beam limited angle tomography with Poisson noise. The images from top to bottom are from Patient NO. 17, 2, and 8, respectively. The areas marked by the arrows are reconstructed incorrectly by the U-Net and are rectified by DCAR. The RMSE value for each slice is displayed in its subtitle. Window: \([-1000, 1000]\) HU. (Color figure online)

In \(120^\circ \) cone-beam limited angle tomography with Poisson noise, the results of three example slices are displayed in Fig. 4. These three slices are from Patient NO. 17, 2, and 8, respectively. In the top row, Fig. 4(b) exhibits a high level of Poisson noise, especially in the areas where many X-rays are missing. The Poisson noise is removed by wTV in Fig. 4(c). However, as in the noise-free case, the top body area is still distorted. Figure 4(d) indicates that the U-Net trained on noisy data is still able to reduce limited angle artifacts. In addition, most Poisson noise is reduced and only a small portion of it remains. However, many low/medium contrast structures, e.g. fat and muscles in the area marked by the red arrow, are blurred and cannot be distinguished from each other. Figure 4(e) indicates that DCAR can further reduce Poisson noise and improve low/medium contrast structures, as no Poisson noise remains visible and the fat and muscle tissues can be distinguished from each other. The benefit of DCAR is also demonstrated by the RMSE value, which decreases from 138 HU in Fig. 4(d) to 102 HU in Fig. 4(e). For the slice in the middle row, the U-Net also reduces most of the artifacts and Poisson noise, comparing Fig. 4(i) with Fig. 4(g). However, the cavities in the marked green box in Fig. 4(f) are missing in Fig. 4(i), where they are smoothed out by the U-Net. In contrast, DCAR is still able to reconstruct most of these cavities, as displayed in Fig. 4(j). For the slice in the bottom row, many dark dots occur in the U-Net reconstruction in Fig. 4(n), due to severe Poisson noise in the limited angle reconstruction in Fig. 4(l). These dark dots are eliminated by DCAR in Fig. 4(o). Beyond these example slices, the comparison of the mean RMSE values for wTV, U-Net, and DCAR is displayed in Fig. 5. The mean RMSE values for wTV stay similar for both the noise-free and noisy cases. DCAR, however, achieves more than \(24\%\) improvement compared with the U-Net in the noisy case. These results demonstrate the robustness of DCAR to Poisson noise in \(120^\circ \) cone-beam limited angle tomography.

4 Discussion and Conclusion

In the cross-validation experiments, for each test, 16 patients’ CT data are used to train the U-Net. Since only 25 slices are chosen from each patient, 400 slices in total are used for training, which is very likely insufficient. Therefore, the U-Net trained on such data has a limited generalization ability to test data. That is one potential cause of the dark holes in the U-Net reconstructions in Fig. 2 in the noise-free case. The occurrence of such dark holes makes deep learning reconstructions inconsistent with the measured projection data. DCAR is able to improve such reconstructions by constraining them to be consistent with the measured data.

In the noisy case, due to the curse of high dimensional space, noise will accumulate at each layer of the U-Net. Therefore, even if noise has a small magnitude, it still has a severe impact on the output images. That is why the U-Net is not robust to Poisson noise [19]. In this work, the U-Net is trained on data with Poisson noise. This enables the U-Net to deal with Poisson noise to a certain degree. Figure 4 indicates that the U-Net is able to reduce a certain level of Poisson noise by smoothing structures. In doing so, some fine structures are also smoothed out, e.g., the small cavities in Fig. 4(f). In addition, in our experimental setup for the noisy case, the initial photon number without attenuation is relatively low. Hence, the Poisson noise in the FBP reconstruction images is clearly visible. In some cases, e.g. in Fig. 4(l), the Poisson noise is so strong that the U-Net is not able to reduce it. DCAR, however, adapts the SART algorithm using soft-thresholding operators, which makes it noise tolerant. In addition, the wTV regularization further reduces the influence of Poisson noise, as such a high frequency noise pattern contradicts the gradient-sparse image that wTV seeks.

In conclusion, the proposed DCAR method has better generalization ability to unseen data and is more robust to Poisson noise than the U-Net. This is demonstrated by our experiments, achieving significant image quality improvement. Compared to the U-Net, our method reduces the RMSE by more than \(10\%\) in the noise-free case and \(24\%\) in the noisy case for \(120^\circ \) cone-beam limited angle tomography.

References

1. Maier, A., Syben, C., Lasser, T., Riess, C.: A gentle introduction to deep learning in medical image processing. Zeitschrift für Medizinische Physik 29(2), 86–101 (2019)

2. Wang, G., Ye, J.C., Mueller, K., Fessler, J.A.: Image reconstruction is a new frontier of machine learning. IEEE Trans. Med. Imaging 37(6), 1289–1296 (2018)

3. Kang, E., Min, J., Ye, J.C.: A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction. Med. Phys. 44(10), e360–e375 (2017)

4. Chen, H., et al.: Low-dose CT via convolutional neural network. Biomed. Opt. Express 8(2), 679–694 (2017)

5. Yang, Q., et al.: Low dose CT image denoising using a generative adversarial network with Wasserstein distance and perceptual loss. IEEE Trans. Med. Imaging 37, 1348–1357 (2018)

6. Han, Y.S., Yoo, J., Ye, J.C.: Deep residual learning for compressed sensing CT reconstruction via persistent homology analysis. arXiv preprint (2016)

7. Han, Y., Ye, J.C.: Framing U-Net via deep convolutional framelets: application to sparse-view CT. IEEE Trans. Med. Imaging 37(6), 1418–1429 (2018)

8. Chen, H., et al.: LEARN: learned experts’ assessment-based reconstruction network for sparse-data CT. IEEE Trans. Med. Imaging 37(6), 1333–1347 (2018)

9. Gjesteby, L., Yang, Q., Xi, Y., Zhou, Y., Zhang, J., Wang, G.: Deep learning methods to guide CT image reconstruction and reduce metal artifacts. In: Medical Imaging 2017: Physics of Medical Imaging, vol. 10132, p. 101322W. International Society for Optics and Photonics (2017)

10. Zhang, Y., Yu, H.: Convolutional neural network based metal artifact reduction in X-ray computed tomography. IEEE Trans. Med. Imaging 37(6), 1370–1381 (2018)

11. Louis, A.K., Törnig, W.: Picture reconstruction from projections in restricted range. Math. Methods Appl. Sci. 2(2), 209–220 (1980)

12. Huang, Y., et al.: Restoration of missing data in limited angle tomography based on Helgason-Ludwig consistency conditions. Biomed. Phys. Eng. Express 3(3), 035015 (2017)

13. Sidky, E.Y., Pan, X.: Image reconstruction in circular cone-beam computed tomography by constrained, total-variation minimization. Phys. Med. Biol. 53(17), 4777 (2008)

14. Chen, Z., Jin, X., Li, L., Wang, G.: A limited-angle CT reconstruction method based on anisotropic TV minimization. Phys. Med. Biol. 58(7), 2119 (2013)

15. Huang, Y., Taubmann, O., Huang, X., Haase, V., Lauritsch, G., Maier, A.: Scale-space anisotropic total variation for limited angle tomography. IEEE Trans. Radiat. Plasma Med. Sci. 2(4), 307–314 (2018)

16. Würfl, T., Ghesu, F.C., Christlein, V., Maier, A.: Deep learning computed tomography. In: Ourselin, S., Joskowicz, L., Sabuncu, M.R., Unal, G., Wells, W. (eds.) MICCAI 2016. LNCS, vol. 9902, pp. 432–440. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46726-9_50

17. Zhang, H., et al.: Image prediction for limited-angle tomography via deep learning with convolutional neural network. arXiv preprint (2016)

18. Gu, J., Ye, J.C.: Multi-scale wavelet domain residual learning for limited-angle CT reconstruction. In: Proceedings of Fully 3D, pp. 443–447 (2017)

19. Huang, Y., Würfl, T., Breininger, K., Liu, L., Lauritsch, G., Maier, A.: Some investigations on robustness of deep learning in limited angle tomography. In: Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G. (eds.) MICCAI 2018. LNCS, vol. 11070, pp. 145–153. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-00928-1_17

20. Würfl, T., et al.: Deep learning computed tomography: learning projection-domain weights from image domain in limited angle problems. IEEE Trans. Med. Imaging 37(6), 1454–1463 (2018)

21. Bubba, T.A., et al.: Learning the invisible: a hybrid deep learning-shearlet framework for limited angle computed tomography. Inverse Probl. 35(6), 064002 (2019)

22. Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

23. Goodfellow, I.J., Shlens, J., Szegedy, C.: Explaining and harnessing adversarial examples. arXiv preprint (2014)

24. Szegedy, C., et al.: Intriguing properties of neural networks. arXiv preprint (2013)

25. Yuan, C., He, P., Zhu, Q., Bhat, R., Li, X.: Adversarial examples: attacks and defenses for deep learning. arXiv preprint (2017)

26. Antun, V., Renna, F., Poon, C., Adcock, B., Hansen, A.C.: On instabilities of deep learning in image reconstruction-does AI come at a cost? arXiv preprint arXiv:1902.05300 (2019)

27. Syben, C., et al.: Precision learning: reconstruction filter kernel discretization. In: Noo, F. (ed.) Procs CT Meeting, pp. 386–390 (2018)

28. Maier, A.K., et al.: Learning with known operators reduces maximum training error bounds. arXiv preprint (2019). Paper conditionally accepted in Nature Machine Intelligence

29. Riess, C., Berger, M., Wu, H., Manhart, M., Fahrig, R., Maier, A.: TV or not TV? That is the question. In: Proceedings Fully 3D, pp. 341–344 (2013)

30. Frikel, J.: Sparse regularization in limited angle tomography. Appl. Comput. Harmon. Anal. 34(1), 117–141 (2013)

31. Huang, G., Liu, Z., Van Der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. Proc. CVPR 1(2), 3 (2017)

32. McCollough, C.H., et al.: Low-dose CT for the detection and classification of metastatic liver lesions: results of the 2016 low dose CT grand challenge. Med. Phys. 44(10), e339–e352 (2017)

Ethics declarations

The concepts and information presented in this paper are based on research and are not commercially available.

Copyright information

© 2019 Springer Nature Switzerland AG

Cite this paper

Huang, Y., Preuhs, A., Lauritsch, G., Manhart, M., Huang, X., Maier, A. (2019). Data Consistent Artifact Reduction for Limited Angle Tomography with Deep Learning Prior. In: Knoll, F., Maier, A., Rueckert, D., Ye, J. (eds) Machine Learning for Medical Image Reconstruction. MLMIR 2019. Lecture Notes in Computer Science(), vol 11905. Springer, Cham. https://doi.org/10.1007/978-3-030-33843-5_10

DOI: https://doi.org/10.1007/978-3-030-33843-5_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33842-8

Online ISBN: 978-3-030-33843-5

eBook Packages: Computer Science, Computer Science (R0)