Abstract

In this paper, we study the problem of coefficient identification in population growth models. We assume that the population dynamics is described by a system of ordinary differential equations of susceptible-infective-recovered (SIR) type and that a discrete observation of the infective population is available. We construct a continuous observation by applying time series analysis and an appropriate fitting to the discrete observation data. The identification problem consists in determining the parameters of the governing equations such that the infective population obtained as the solution of the SIR system is as close as possible to the observation. We reformulate the calibration problem as an optimization problem in which the objective function is the \(L_2\)-norm distance between the solution of the mathematical model and the observation, and the constraint is the SIR system governing the phenomenon. We solve the optimization problem numerically by the gradient method, where the gradient of the cost function is obtained by introducing an adjoint state. In addition, we present a numerical example to illustrate the application of the proposed calibration method.


1 Introduction

Throughout human history, there have been diseases that, due to their characteristics, suddenly affect a large part of the population of a certain region, generating considerable morbidity and mortality [19]. This type of disease is called an epidemic and develops in populations that reach a certain population density [6]. The term epidemic is also sometimes used for noninfectious diseases of population scale, for instance, diabetes. In this work, we analyze epidemics due to infectious diseases and, among them, those that are not transmitted through vectors.

From the historical point of view, epidemics have caused health problems on a population scale, jeopardizing the survival of different civilizations. Examples include the bubonic plague [25] and its outbreaks in ancient Egypt [24], the epidemics of plague and typhoid in Athens and Syracuse in ancient Greece [4], the Black Death that affected the whole of Europe [34], the epidemics that followed the encounter between Europeans and Native Americans in America [26], and the outbreaks of cholera due to the contamination of water with fecal matter [26]. We remark that the consequences of the plagues in America were even more severe than those in Europe [15].

In the last decades, there have been several efforts to understand the dynamics of epidemic diseases. Nowadays, mathematical epidemiology is one of the most important branches of biomathematics [11, 13]. Several kinds of mathematical models are in use, for instance, models based on discrete mathematics, deterministic or stochastic ordinary differential systems, partial differential equations, and statistical theory.

The mathematical modeling of the population dynamics of infectious diseases is a classical problem in the theory of differential equations [21, 27]. The earliest published work on the mathematical modeling of the spread of disease was carried out in 1766 by Daniel Bernoulli. Trained as a physician, Bernoulli created a mathematical model to defend the practice of inoculating against smallpox [18]. According to Pesco [26], in 1927 Kermack and McKendrick published an article in which they proposed a mathematical model, formulated in terms of differential equations, that simulates the transmission of an infectious disease. This model divides the population into compartments according to the epidemiological status of the individuals, classifying them as susceptible (S), infected (I), and recovered (R), and is currently known as the SIR model.

On the other hand, regarding statistical methods, inferential methods have also been developed to evaluate the correlation between epidemiological data and possible indicators of risk or health policies [1, 23]. Nowadays, epidemiology is used to describe the clinical spectrum of a disease, to understand its transmission mechanisms, to know the natural history of biological systems, to make population diagnoses, to identify factors that increase the risk of acquiring the disease, and to test the effectiveness of different mitigation strategies. It is mainly used to generate the information required by public health professionals to develop, implement, and evaluate health policies [12]. However, to the best of our knowledge, there are no works that apply time series theory to model calibration in epidemiology, apart from the recent work [9], which is an advance in this research line.

The process of mathematical modeling by ordinary differential equations involves at least four phases: abstraction, simplification or mathematical model formulation, solution or analysis, and validation [14]. In particular, the validation phase requires the solution of a problem known as the calibration of the mathematical model.

In a broad sense, parameter calibration means finding (or calculating) some unknown constants or functions, called model parameters, from given observations of the model solution. The mathematical concept of calibration or identification is equivalent to that of estimation in statistics. In practice, these problems can be solved by applying inverse problem methodologies [14]. We remark that, although the estimation (calibration or identification) of unknown parameters has significant practical importance, several problems have not been sufficiently investigated due, for instance, to the lack of results on the uniqueness of the solution of the inverse problem: while the direct problem may have a unique solution, the inverse problem does not usually have the same property [21, 30]. Moreover, the inverse problem is crucial for calibrating the model and for controlling the model parameters. Approaches involving inverse problems can be successfully applied to a variety of important biological processes, including the spread of infectious diseases, allowing epidemiologists and public health specialists to predict the time interval between epidemics [5, 21].

The aim of this paper is the identification of certain coefficients (or parameters) in the SIR-type system of ordinary differential equations investigated by Bai and Zhou [3], by using inverse problem methodologies and time series theory. We start by defining a continuous observation using a time series model and an interpolation of the discrete data. Then, we define an optimization problem for an appropriate cost function, which is equivalent to the inverse problem. To solve the minimization problem, we apply the gradient method, where the gradient of the cost function is calculated by introducing an adjoint state.

Previous works related to parameter identification in epidemiological models include [16, 17, 18, 20, 22, 29, 33]. The models considered by those authors are systems of stochastic differential equations, and their notion of parameter is the one given by statistical theory. Thus, their methodologies are not comparable with the one presented in this paper, where the model is deterministic and the term parameter refers to the coefficients of the system.

The rest of the paper is organized as follows. In Sect. 2, we present the notation and precise definition of the direct problem. In Sect. 3, we define the inverse problem. In Sect. 4, we present the inverse problem solution methodology. In Sect. 5, we present a numerical experiment. Finally, in Sect. 6, we summarize some conclusions.

2 The Direct Problem

Let S(t), I(t), and R(t) be the numbers of susceptible, infective, and recovered individuals at time \(t\in [0,T]\), respectively. As Bai and Zhou [3] write, “After studying the cholera epidemic spread in Bari in 1973, Capasso and Serio introduced the saturated incidence rate \(\beta SI(1+kI)^{-1}\) into epidemic model,” where \(\beta I\) measures the infection force of the disease and \((1+kI)^{-1}\) with \(k > 0\) describes the psychological or inhibition effect of the behavioral change of the susceptible individuals as the number of infective individuals increases. This incidence rate seems more reasonable than the bilinear incidence rate \(\beta SI\), because it includes the behavioral change and crowding effect of the infective individuals and prevents the unboundedness of the contact rate.

Treatment is an important way to reduce the spread of diseases such as measles, tuberculosis, and flu [32]. In classical epidemic models, the treatment rate of infectives is assumed to be proportional to the number of infectives. This proportionality assumption leads to a very fast increase of the treatment resources. In fact, every community has a limited capacity for treatment. If the capacity is too large, the community pays an unnecessary cost. If it is too small, the community has a higher risk of disease outbreak. It is therefore realistic to maintain a suitable capacity for disease treatment. Wang and Ruan [31] introduced a treatment function h(I), which is a positive constant m for \(I > 0\) and zero for \(I = 0\). This seems more reasonable when we consider the limitation of the treatment resources of a community.

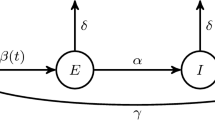

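Bai and Zhou [3] formulated a nonautonomous SIR epidemic model with saturated incidence rate and constant removal rate by introducing the periodic transmission rate \(\beta (t)\). The general model is formulated as follows:

\[
\begin{aligned}
\frac{dS}{dt} &= \varLambda - \mu S - \frac{\beta (t)SI}{1+kI},\\
\frac{dI}{dt} &= \frac{\beta (t)SI}{1+kI} - (\mu +\gamma )I - h(I),\\
\frac{dR}{dt} &= \gamma I + h(I) - \mu R.
\end{aligned}
\tag{1}
\]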

Here, \(\varLambda \) is the recruitment rate, \(\mu \) is the natural death rate, \(\gamma \) is the recovery rate of the infective population, and \(\beta (t)\) is the transmission rate at time t. Since the first two equations in (1) are independent of the third one, and the dynamic behavior of (1) is trivial when \(I(t_0) = 0\) for some \(t_0 > 0\), Bai and Zhou [3] considered only the first two equations with \(I > 0\), for which \(h(I)=m\). Thus, they restricted their study to the model given by

\[
\begin{aligned}
\frac{dS}{dt} &= \varLambda - \mu S - \frac{\beta (t)SI}{1+kI},\\
\frac{dI}{dt} &= \frac{\beta (t)SI}{1+kI} - (\mu +\gamma )I - m.
\end{aligned}
\tag{2}
\]

In the terminology of inverse problems, we have that the direct problem is given by system (2) with some appropriate initial conditions for S and I. More precisely

Definition 1

The direct problem is formulated as follows: given the constants \(T,\varLambda ,\mu ,\gamma ,k,m,S_0,I_0\) and the function \(\beta \), find the functions S and I satisfying the system (2) on the interval ]0, T] and the initial condition \((S,I)(0)=(S_0,I_0).\)

The direct problem is well-posed since it is the standard Cauchy problem for an ordinary differential system where the right-hand side is a locally Lipschitz function.
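As an illustration of the direct problem, the following minimal sketch integrates the reduced (S, I) system with a classical fourth-order Runge–Kutta scheme. It assumes that the right-hand side has the saturated-incidence, constant-removal form described above, and it uses the parameter values quoted in Sect. 3. The initial state, the final time T, the number of steps N, the amplitude `EPS`, and all function names are hypothetical choices made only for this example.

```python
import math

# Parameter values quoted in Sect. 3 of the paper.
LAMBDA, K, MU, GAMMA, M = 400.0, 0.01, 0.02, 0.04, 10.0
EPS = 1.0e-5  # assumed; the text only requires 0 <= eps < 0.00006


def beta(t):
    """Periodic transmission rate beta(t) = 0.00006 + eps*sin(pi*t/3)."""
    return 0.00006 + EPS * math.sin(math.pi * t / 3.0)


def rhs(t, S, I, mu=MU, gamma=GAMMA):
    """Assumed right-hand side of the reduced (S, I) system."""
    incidence = beta(t) * S * I / (1.0 + K * I)
    return (LAMBDA - mu * S - incidence,
            incidence - (mu + gamma) * I - M)


def solve_direct(S0, I0, T, N, mu=MU, gamma=GAMMA):
    """Classical RK4 on a uniform mesh; returns lists t, S, I of length N + 1."""
    dt = T / N
    t, S, I = [0.0], [S0], [I0]
    for n in range(N):
        tn, s, i = t[-1], S[-1], I[-1]
        k1 = rhs(tn, s, i, mu, gamma)
        k2 = rhs(tn + dt / 2, s + dt / 2 * k1[0], i + dt / 2 * k1[1], mu, gamma)
        k3 = rhs(tn + dt / 2, s + dt / 2 * k2[0], i + dt / 2 * k2[1], mu, gamma)
        k4 = rhs(tn + dt, s + dt * k3[0], i + dt * k3[1], mu, gamma)
        S.append(s + dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]))
        I.append(i + dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))
        t.append((n + 1) * dt)
    return t, S, I


# Assumed initial state and horizon (not given in the text).
t, S, I = solve_direct(S0=15000.0, I0=1000.0, T=60.0, N=600)
print(f"I(T) = {I[-1]:.1f}, S(T) = {S[-1]:.1f}")
```

The initial state is chosen with \(I_0 > 0\) and well away from zero, because the constant removal term m makes the reduced model meaningful only while I stays positive.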

3 The Inverse Problem

The inverse problem consists in the determination of \(\mu \) and \(\gamma \) in the system (2) from an observation \(I^{obs}\) of the number of infected individuals, such that the infected component of the solution of the direct problem for \(\mu \) and \(\gamma \), denoted by \(I_{\mu ,\gamma }\), is “as close as possible” to \(I^{obs}\). The term “as close as possible” is made precise by considering the \(L_2\)-norm of the difference between \(I_{\mu ,\gamma }\) and \(I^{obs}\). However, \(I^{obs}\) is not defined on the whole time interval. Thus, we first extend \(I^{obs}\) continuously by applying time series, and then we give the precise definition of the optimization problem.

To make the application of time series precise, we consider the numerical values of the parameters used by Bai and Zhou [3] to investigate the stability of the periodic solution of (2) with a small seasonal fluctuation in the transmission rate. We set \(\varLambda = 400, k = 0.01, \mu = 0.02, \gamma = 0.04, m = 10\), and \(\beta (t) = 0.00006 + \varepsilon \,\sin (\pi t/3)\), where \(0\le \varepsilon < 0.00006\). Then, the system (2) becomes

\[
\begin{aligned}
\frac{dS}{dt} &= 400 - 0.02\,S - \frac{\beta (t)SI}{1+0.01\,I},\\
\frac{dI}{dt} &= \frac{\beta (t)SI}{1+0.01\,I} - 0.06\,I - 10.
\end{aligned}
\tag{3}
\]

Now, we solve system (3) numerically and obtain the discrete synthetic observed data shown in Fig. 1. The next step is to fit these data by a continuous function. Due to the structure of the data, we found it useful to apply time series analysis to find the model that best fits the data. Figure 2 shows the graph of the time series associated with the data. More precisely, since the series in Fig. 2 shows a lot of variability, we first applied a transformation to the data. Then, using the programming language R [28], the tseries library, and the Arima command, we obtained a twice-differenced AR(1) model [7], whose results are summarized in the following expression:

where B is the lag operator. Remembering that \(BY_t=Y_{t-1}\), it turns out

where \(\varepsilon _t\) is a white noise \(N(0,\sigma ^2)\).
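For orientation, the general shape of an ARIMA(1, 2, 0) \(\times \) (0, 1, 0)\(_6\) model, which is the model named in the caption of Fig. 2, can be written out as follows. The symbol \(\phi \) is a placeholder for the fitted autoregressive coefficient, whose numerical value is not reproduced here, and \(Y_t\) stands for the (transformed) series; both are notational assumptions of this sketch.

\[
(1-\phi B)(1-B)^2(1-B^6)\,Y_t=\varepsilon _t .
\]

Expanding the product of the lag polynomials and using \(B^jY_t=Y_{t-j}\) gives the recursion

\[
Y_t=(2+\phi )Y_{t-1}-(1+2\phi )Y_{t-2}+\phi Y_{t-3}+Y_{t-6}-(2+\phi )Y_{t-7}+(1+2\phi )Y_{t-8}-\phi Y_{t-9}+\varepsilon _t .
\]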

Then, using this time series and an appropriate interpolation we construct the function \(I^{obs}(t)\) on [0, T].
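The interpolation step can be sketched as follows. The text does not specify which interpolation is used, so this example assumes a piecewise-linear interpolant through the fitted values at the observation times; the function name `make_observation` and the sample numbers are hypothetical.

```python
from bisect import bisect_right


def make_observation(t_nodes, values):
    """Return a continuous piecewise-linear function through (t_nodes, values)."""
    if len(t_nodes) != len(values) or len(t_nodes) < 2:
        raise ValueError("need at least two nodes with matching values")

    def I_obs(t):
        # Clamp to the observation window, then locate the enclosing interval.
        t = min(max(t, t_nodes[0]), t_nodes[-1])
        j = min(bisect_right(t_nodes, t), len(t_nodes) - 1)
        t0, t1 = t_nodes[j - 1], t_nodes[j]
        w = (t - t0) / (t1 - t0)
        return (1.0 - w) * values[j - 1] + w * values[j]

    return I_obs


# Hypothetical data, for illustration only (not the values of the paper).
I_obs = make_observation([0.0, 1.0, 2.0], [10.0, 20.0, 40.0])
print(I_obs(0.5), I_obs(1.5))
```

A smoother interpolant (for instance, a cubic spline) could be substituted without changing the rest of the procedure, since only the values \(I^{obs}(t_n)\) on the mesh enter the discrete cost.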

We observe that model (4) corresponds to the synthetic data obtained by simulation of (3). Then for other particular cases of system (2), we proceed analogously to construct the corresponding time series model and the appropriate \(I^{obs}\) continuous function.

Fig. 1 Plot of the infected population obtained as the solution of (3)

Fig. 2 Fit of the infected population obtained as the solution of (3) by a time series ARIMA(1, 2, 0) \(\times \) (0, 1, 0)\(_6\) model

We reformulate the inverse problem as an optimal control problem. The optimization problem is formulated as follows: the objective function J, depending on the variables \(\mu \) and \(\gamma \), is the least squares cost function, and the constraint is the initial value problem for the system (2) with parameters \(\mu \) and \(\gamma \). More precisely, we have the following definition.

Definition 2

The inverse problem is defined by the optimization problem:

subject to \(I_{\mu ,\gamma }\) being the solution of system (2) with a given initial condition \((S,I)(0)=(S_0,I_0)\), where \(I^{obs}\) is the continuous observation constructed with a time series and an appropriate interpolation, and \( \delta \) is a positive constant.

We remark two facts. First, the objective function J measures the distance between two functions; therefore, it must be minimized and is called the cost. One of the functions involved in this cost solves the initial value problem, and the other fits the data through the time series model already found. Second, the existence of solutions for the inverse problem can be derived from the continuous dependence of (S, I) on \((\mu ,\gamma )\). However, the uniqueness of the solution of the inverse problem, i.e., the existence of a unique global minimizer of J, is difficult to prove.

4 Methodology of the Solution for the Inverse Problem

In order to solve the optimization problem, we apply the gradient method where the gradient of the cost function is obtained by the introduction of an adjoint state. First, we deduce the gradient of the cost function when all data are continuous and then we mimic the process to introduce a discrete gradient.

4.1 Continuous Gradient

We apply the Lagrange multipliers method. We recall that the Lagrangian is a linear combination of the objective function and the constraints. Since it must be scalar, the constraints, which are functions, are multiplied by the functions p and q, respectively, and then integrated over the whole domain, obtaining the following function to be minimized:

where J is defined in (5) and \(E:=E(S_{\mu ,\gamma },I_{\mu ,\gamma };p,q)\) is given by

Thus, we have a classical minimization problem for \(\mathscr {L}\), and we can apply the first-order optimality conditions to deduce the adjoint state, i.e., we calculate the gradient of the Lagrangian with respect to the variables \(\mu \) and \(\gamma \), and select the states (p, q) such that the gradient of \(\mathscr {L}\) vanishes. We note that

The calculation of \(\partial _{\mu } S,\partial _{\mu } I,\partial _{\gamma } S,\) and \(\partial _{\gamma } I\) is difficult to carry out directly, since the functions S and I do not depend explicitly on \(\mu \) and \(\gamma \). The strategy is therefore to select p and q such that \(\partial _{S} \mathscr {L}=\partial _{I} \mathscr {L}=0\). We observe that

Thus, a necessary condition for p and q such that \(\partial _{S} \mathscr {L}=\partial _{I} \mathscr {L}=0\) is given by

The backward problem (6) is called the continuous adjoint state. It gives rise to the so-called continuous gradient, which arises from setting the derivatives of the Lagrangian with respect to the state equal to zero, obtaining

The gradient given in (7) can be used to solve the optimization problem numerically. However, several authors recommend using a discrete gradient, obtained by a similar methodology, instead of a direct discretization of \(\nabla J\) in (7); see, for instance, [8, 10].
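As a worked illustration of the steps above, the following sketch writes out one standard form of the adjoint system and of the gradient for system (2). It assumes the unregularized cost \(J(\mu ,\gamma )=\frac{1}{2}\int _0^T (I_{\mu ,\gamma }-I^{obs})^2\,dt\) and the Lagrangian \(\mathscr {L}=J+\int _0^T p\,(S'-f_1)\,dt+\int _0^T q\,(I'-f_2)\,dt\), where \(f_1=\varLambda -\mu S-\beta SI/(1+kI)\) and \(f_2=\beta SI/(1+kI)-(\mu +\gamma )I-m\) denote the right-hand sides of (2). The role of the constant \(\delta \) and the sign conventions are assumptions of this sketch and may differ from those in (5)–(7).

Integrating by parts in t and requiring that the variations of \(\mathscr {L}\) with respect to S and I vanish gives the backward problem

\[
\begin{aligned}
p'(t) &= \mu \,p + \frac{\beta (t)\,I}{1+kI}\,(p-q), & p(T)&=0,\\
q'(t) &= \frac{\beta (t)\,S}{(1+kI)^2}\,(p-q) + (\mu +\gamma )\,q + \big(I-I^{obs}\big), & q(T)&=0,
\end{aligned}
\]

and, since \(\partial _\mu f_1=-S\), \(\partial _\mu f_2=-I\), \(\partial _\gamma f_1=0\), and \(\partial _\gamma f_2=-I\), the gradient under these assumptions is

\[
\partial _\mu J = \int _0^T \big(p\,S + q\,I\big)\,dt,\qquad \partial _\gamma J = \int _0^T q\,I\,dt .
\]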

Fig. 3 Scheme of the gradient calculation methodology. The definitions of (S, I), J, (p, q), and \(\nabla J\) are given in (2), (5), (6), and (7), respectively. The definitions of \((S_\varDelta ,I_\varDelta ),J_\varDelta ,(p_\varDelta ,q_\varDelta )\), and \(\nabla J_\varDelta \) are presented in (8), (9), (11), and (12), respectively

4.2 Discrete Gradient

The direct discretization of (7) typically develops numerical instabilities [8, 10]. Thus, the strategy is to obtain a discrete gradient by a methodology similar to that applied to get (7). We recall that the three steps are the following: (i) construct the continuous cost function given in (5), (ii) apply a Lagrangian formulation to define the continuous adjoint state given in (6), and (iii) use the continuous state and the adjoint state to define the continuous gradient given in (7); see the upper part of the scheme in Fig. 3. Then, to obtain the discrete gradient, we discretize the continuous state variables and proceed analogously to steps (i)–(iii); see the lower part of the scheme in Fig. 3. The specific definitions of \((S_{\varDelta },I_{\varDelta }),J_{\varDelta },(p_{\varDelta },q_{\varDelta })\), and \(\nabla J_{\varDelta }\) are given below.

The numerical solution \((S_{\varDelta },I_{\varDelta })\) is calculated by a fourth-order Runge–Kutta method. Let us select \(N\in \mathbb {N}\) and define \(\varDelta t=T/N,\) \(t_n=n\varDelta t\) and

with \(\mathbf {x}^n:=(S^n,I^n)^t\) calculated as follows:

where \(\mathbf {f}\) is defined by

i.e., \(\mathbf {f}\) is the right side of the system (2). Thus, (8) with \(\mathbf {x}^n:=(S^n,\) \(I^n)^t\) is the discretization of (2), which is called the discrete state.
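For reference, a fourth-order Runge–Kutta step for (2) can be written as follows. This sketch assumes the classical tableau, which is one common choice and is not confirmed by the text; the stage names \(\mathbf {m}_1,\ldots ,\mathbf {m}_4\) follow the notation used for the discrete gradient, and the explicit form of \(\mathbf {f}\) assumes the saturated-incidence, constant-removal right-hand side described in Sect. 2.

\[
\begin{aligned}
\mathbf {m}_1 &= \mathbf {f}(t_n,\mathbf {x}^n), &
\mathbf {m}_2 &= \mathbf {f}\big(t_n+\tfrac{\varDelta t}{2},\,\mathbf {x}^n+\tfrac{\varDelta t}{2}\mathbf {m}_1\big),\\
\mathbf {m}_3 &= \mathbf {f}\big(t_n+\tfrac{\varDelta t}{2},\,\mathbf {x}^n+\tfrac{\varDelta t}{2}\mathbf {m}_2\big), &
\mathbf {m}_4 &= \mathbf {f}\big(t_n+\varDelta t,\,\mathbf {x}^n+\varDelta t\,\mathbf {m}_3\big),\\
\mathbf {x}^{n+1} &= \mathbf {x}^n+\tfrac{\varDelta t}{6}\big(\mathbf {m}_1+2\mathbf {m}_2+2\mathbf {m}_3+\mathbf {m}_4\big), &
\mathbf {x}^0 &= (S_0,I_0)^t,
\end{aligned}
\]

with

\[
\mathbf {f}\big(t,(S,I)^t\big)=\Big(\varLambda -\mu S-\frac{\beta (t)SI}{1+kI},\ \frac{\beta (t)SI}{1+kI}-(\mu +\gamma )I-m\Big)^t .
\]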

The discrete cost function \(J_\varDelta \) is given by

where \(I^{n}\) is obtained by the numerical scheme (8) and \(I^{obs,n}\) is the evaluation of the continuous observation on the nodes of the mesh, i.e., \(I^{obs,n}=I^{obs}(t_n)\). Note that \(J_{\varDelta }\) given in (9) is the natural discretization of J given in (5). Thus, we have that

is the discrete version or the discretization of the optimization problem (5).
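A minimal sketch of a discrete least squares cost is given below. It assumes the plain form \(J_\varDelta =\frac{\varDelta t}{2}\sum _{n=1}^{N}(I^n-I^{obs,n})^2\); the exact expression (9), including the role of \(\delta \), is not reproduced here, so the function name `discrete_cost` and this formula are illustrative assumptions.

```python
def discrete_cost(I_num, I_obs, dt):
    """Assumed discrete cost: 0.5 * dt * sum over n = 1..N of (I^n - I^{obs,n})^2."""
    if len(I_num) != len(I_obs):
        raise ValueError("I_num and I_obs must be sampled on the same mesh")
    # The node n = 0 is skipped, following the right-endpoint rectangle rule.
    return 0.5 * dt * sum((a - b) ** 2 for a, b in zip(I_num[1:], I_obs[1:]))


# Hypothetical values on a three-node mesh with dt = 0.5.
print(discrete_cost([0.0, 1.0, 1.0], [0.0, 0.0, 0.0], 0.5))
```

Any consistent quadrature rule could be used here; the important point for the discrete adjoint approach is that the same \(J_\varDelta \) is differentiated to obtain the discrete gradient.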

In order to define the discrete adjoint state \((p_\varDelta ,q_\varDelta )\), we apply the Lagrange multipliers method. We define the discrete Lagrangian

where \(J_\varDelta \) is defined in (9) and \(E_\varDelta :=E(S_\varDelta ,I_\varDelta ;p_\varDelta ,q_\varDelta )\) is given by

Thus, by arguments analogous to the continuous case, we need to determine \((p_\varDelta ,q_\varDelta )\) such that \(\partial _{S^n} \mathscr {L}_\varDelta =\partial _{I^n} \mathscr {L}_\varDelta =0\). From the differentiation of \(\mathscr {L}_\varDelta \), we obtain that \(\mathbf {p}^n:=(p^n,q^n)^t\) can be calculated by the scheme

where

and \(\mathbf {x}^n\) is calculated by (8). The scheme (11) is called the discrete adjoint state.

The discrete gradient \(\nabla J_\varDelta \) is calculated by

where \(\mathbf {m}_i\) for \(i=1,\ldots ,4\) are defined in (8). The gradient given in (12) is used to solve numerically the inverse problem.

5 Numerical Results

In this section, we present a numerical result for estimating the values of the parameters \(\mu \) and \(\gamma \) from synthetic observation data. We consider the system (3) and obtain a discrete observation by numerical simulation. Then, by the process indicated in Sect. 3, we construct \(I^{obs}\) on [0, T]; see also Figs. 1 and 2. More precisely, after processing the observation by a time series technique, we fit the discrete observation data by \(I^{obs}:[0,T]\to \mathbb {R}^+\) defined by

where the values of \(a_i,b_i\), and \(c_i\) are given by

The graph of \(I^{obs}\) given in (13) is the curve labeled as real parameters in Figs. 4 and 5.

For identification, we use the gradient method where the gradient of the cost function is defined by (12). To be more precise, we proceed to the identification with the gradient method using the curvature information [2]:

Here \(J_\varDelta \) is calculated by (9). Moreover, in our numerical simulations we consider \(\hat{\varepsilon }=10E-6\) in the definition of \(\lambda _k\) and \(\delta =10E-12\) in the definition of \(J_\varDelta \) and the discrete adjoint state. The numerical values of the parameters are given in Table 1. The infected curve for the initial guess of the parameters is labeled as initial estimate of the parameters in Fig. 4. The infected curve for the identified parameters is labeled as final estimate of the parameters in Fig. 5.

Finally, Fig. 6 illustrates the path followed by the gradient method as it decreases the value of the cost function until reaching the point where the optimum is achieved.
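The overall identification loop can be sketched as follows. This is a simplified stand-in rather than the procedure of the paper: the gradient is approximated by central finite differences instead of the discrete adjoint formula (12), and the step length is chosen by backtracking instead of the curvature-based rule of [2]. The right-hand side assumes the saturated-incidence, constant-removal form of Sect. 2, the cost is the plain least squares sum without \(\delta \), and the initial state, mesh, amplitude `EPS`, and initial guess are hypothetical values (the entries of Table 1 are not used).

```python
import math

LAMBDA, K, M = 400.0, 0.01, 10.0            # values quoted in Sect. 3
EPS = 1.0e-5                                # assumed seasonal amplitude
S0, I0, T, N = 15000.0, 1000.0, 30.0, 300   # assumed initial state and mesh
DT = T / N


def rhs(t, S, I, mu, gamma):
    b = 0.00006 + EPS * math.sin(math.pi * t / 3.0)
    g = b * S * I / (1.0 + K * I)
    return LAMBDA - mu * S - g, g - (mu + gamma) * I - M


def infected(mu, gamma):
    """RK4 approximation of I on the mesh t_n = n*DT."""
    s, i, out = S0, I0, [I0]
    for n in range(N):
        t = n * DT
        a = rhs(t, s, i, mu, gamma)
        b = rhs(t + DT / 2, s + DT / 2 * a[0], i + DT / 2 * a[1], mu, gamma)
        c = rhs(t + DT / 2, s + DT / 2 * b[0], i + DT / 2 * b[1], mu, gamma)
        d = rhs(t + DT, s + DT * c[0], i + DT * c[1], mu, gamma)
        s += DT / 6 * (a[0] + 2 * b[0] + 2 * c[0] + d[0])
        i += DT / 6 * (a[1] + 2 * b[1] + 2 * c[1] + d[1])
        out.append(i)
    return out


I_OBS = infected(0.02, 0.04)  # synthetic observation from the "true" parameters


def cost(mu, gamma):
    return 0.5 * DT * sum((x - y) ** 2 for x, y in zip(infected(mu, gamma), I_OBS))


def fd_gradient(mu, gamma, h=1.0e-6):
    """Central finite differences (stand-in for the adjoint-based gradient)."""
    dmu = (cost(mu + h, gamma) - cost(mu - h, gamma)) / (2 * h)
    dga = (cost(mu, gamma + h) - cost(mu, gamma - h)) / (2 * h)
    return dmu, dga


def descend(mu, gamma, iters=25, step0=5.0e-3):
    """Normalized steepest descent with backtracking on the step length."""
    history = [cost(mu, gamma)]
    for _ in range(iters):
        gmu, gga = fd_gradient(mu, gamma)
        norm = math.hypot(gmu, gga)
        if norm == 0.0:
            break
        step = step0
        while step > 1.0e-15:
            mu_new = mu - step * gmu / norm
            ga_new = gamma - step * gga / norm
            if mu_new > 0 and ga_new > 0:
                j_new = cost(mu_new, ga_new)
                if j_new < history[-1]:
                    break  # accept the first step that decreases the cost
            step /= 2
        else:
            break  # no decreasing step found; stop iterating
        mu, gamma = mu_new, ga_new
        history.append(j_new)
    return mu, gamma, history


mu_hat, gamma_hat, history = descend(0.03, 0.05)
print(f"mu ~ {mu_hat:.4f}, gamma ~ {gamma_hat:.4f}, "
      f"J: {history[0]:.3e} -> {history[-1]:.3e}")
```

Finite differences need four extra solves of the direct problem per iteration, and the number grows with the number of parameters. This is one reason to prefer an adjoint-based gradient, whose cost is one forward and one backward solve regardless of the number of parameters.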

6 Conclusions

In this paper, we have introduced a methodology, based on the discrete gradient method and time series, for parameter identification or model calibration in systems of ordinary differential equations. Although this research focuses on a specific system of ordinary differential equations, we expect that the proposed method can be generalized to the identification of coefficients in other types of systems. Moreover, in this study we have applied the numerical identification to synthetic observation data, and we expect to apply the methodology to model calibration when experimental data are obtained in laboratory experiments.

References

1. Akmatov, M.K., Kretzschmar, M., Krämer, A., Mikolajczyk, R.T.: Timeliness of vaccination and its effects on fraction of vaccinated population. Vaccine 26(31), 3805–3811 (2008)

2. Alvarez, F., Cabot, M.: Steepest descent with curvature dynamical system. J. Optim. Theory Appl. 120, 247–273 (2004). https://doi.org/10.1023/B:JOTA.0000015684.50827.49

3. Bai, Z., Zhou, Y.: Existence of two periodic solutions for a non-autonomous \(SIR\) epidemic model. Appl. Math. Model. 35, 382–391 (2011). https://doi.org/10.1016/j.apm.2010.07.002

4. Barriga, G., Hernández, E.: Aspectos actuales de las infecciones emergentes y reemergentes. Revista Latinoamericana de Patología Clínica y Medicina de Laboratorio 62(3), 174–182 (2015)

5. Bauch, C., Earn, D.: Interepidemic intervals in forced and unforced SEIR models. In: Ruan, S., Wolkowicz, G.S., Wu, J. (eds.) Dynamical Systems and Their Applications in Biology. American Mathematical Society, New York (2003)

6. Bhopal, R.S.: Concepts of Epidemiology: Integrating the Ideas, Theories, Principles, and Methods of Epidemiology. Oxford University Press, Oxford (2016)

7. Box, G.E.P., Jenkins, G.M., Reinsel, G.C., Ljung, G.M.: Time Series Analysis. Forecasting and Control, 5th edn. Wiley, Hoboken (2016)

8. Bürger, R., Coronel, A., Sepúlveda, M.: Numerical solution of an inverse problem for a scalar conservation law modelling sedimentation, hyperbolic problems: theory, numerics and applications. Proc. Symp. Appl. Math. 67, 445–454 (2009)

9. Cauchemez, S., Ferguson, N.M.: Likelihood based estimation of continuous time epidemic models from timeseries data: application to measles transmission in London. J. R. Soc. Interface 5(25), 885–897 (2008)

10. Coronel, A., James, F., Sepúlveda, M.: Numerical identification of parameters for a model of sedimentation processes. Inverse Probl. 19(4), 951–972 (2003)

11. Dayan, G.H., Shaw, K.M., Baughman, A.L., Orellana, L.C., Forlenza, R., Ellis, A., Chaui, J., Kaplan, S., Strebel, P.: Assessment of delay in age-appropriate vaccination using survival analysis. Am. J. Epidemiol. 163(6), 561–570 (2006)

12. Detels, R.: Epidemiology: the foundation of public health. Oxford Textbook of Global Public Health, 5th edn. (2015). https://doi.org/10.1093/med/9780199661756.001.0001

13. Diekmann, O., Heesterbeek, J.A.: Mathematical Epidemiology of Infectious Diseases: Model Building, Analysis and Interpretation. Wiley, New York (2000)

14. Engl, H.W., Flamm, C., Kügler, P., Lu, J., Müller, S., Schuster, P.: Inverse problems in systems biology. Inverse Probl. 25, 1–51 (2009). https://doi.org/10.1088/0266-5611/25/12/123014

15. Estrella, E.: Consecuencias epidemiológicas de la conquista de América. MS. Dpto. de Humanidades Médicas, Facultad de Medicina, Universidad Central de Quito, Ecuador (2002)

16. Frasso, G., Lambert, P.: Bayesian inference in an extended SEIR model with nonparametric disease transmission rate: an application to the Ebola epidemic in Sierra Leone. Biostatistics 17(4), 779–792 (2016)

17. Gibson, G.J., Renshaw, E.: Estimating parameters in stochastic compartmental models using Markov chain methods. Math. Med. Biol.: J. IMA 15(1), 19–40 (1998)

18. Hethcote, H.W.: The mathematics of infectious diseases. SIAM Rev. 42, 599–653 (2000)

19. Last, J.M., Abramson, J.H., Freidman, G.D.: A Dictionary of Epidemiology, 6th edn. Oxford University Press, New York (2014)

20. Lekone, P.E., Finkenstädt, B.F.: Statistical inference in a stochastic epidemic SEIR model with control intervention: Ebola as a case study. Biometrics 62(4), 1170–1177 (2006)

21. Marinova, T.T., Marinova, R.S., Omojola, J., Jackson, M.: Inverse problem for coefficient identification in SIR epidemic models. Comput. Math. Appl. 67, 2218–2227 (2014). https://doi.org/10.1016/j.camwa.2014.02.002

22. McKinley, T.J., Ross, J.V., Deardon, R., Cook, A.R.: Simulation based Bayesian inference for epidemic models. Comput. Stat. Data Anal. 71, 434–447 (2014)

23. Mohammadbeigi, A., Mokhtari, M., Zahraei, S.M., Eshrati, B., Rejali, M.: Survival analysis for predictive factors of delay vaccination in Iranian children. Int. J. Prev. Med. 6(1), 119–123 (2015). https://doi.org/10.4103/2008-7802.170868

24. Norrie, P.: A History of Disease in Ancient Times: More Lethal than War. Springer, Berlin (2016)

25. Panagiotakopulu, E.: Pharaonic Egypt and the origins of plague. J. Biogeogr. 31(2), 269–275 (2004). https://doi.org/10.1046/j.0305-0270.2003.01009.x

26. Pesco, P.: Modelos estocásticos para epidemias recurrentes. Tesis doctoral, La Plata, Argentina (2017)

27. Rhodes, A., Allman, E.S.: Mathematical Models in Biology: An Introduction. Cambridge University Press, New York (2003)

28. R Core Team: R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria (2018). http://www.R-project.org

29. Streftaris, G., Gibson, G.J.: Statistical inference for stochastic epidemic models. In: Proceedings of the 17th International Workshop on Statistical Modeling, pp. 609–616 (2002)

30. Tarantola, A.: Inverse Problem Theory and Methods for Model Parameter Estimation. SIAM, Philadelphia (2005)

31. Wang, W., Ruan, S.: Bifurcations in an epidemic model with constant removal rate of the infectives. J. Math. Anal. Appl. 291, 775–793 (2004). https://doi.org/10.1016/j.jmaa.2003.11.043

32. Wu, L., Feng, Z.: Homoclinic bifurcation in an SIQR model for childhood diseases. J. Differ. Equ. 168, 150–167 (2000). https://doi.org/10.1006/jdeq.2000.3882

33. Xu, X., Kypraios, T., O’Neill, P.D.: Bayesian nonparametric inference for stochastic epidemic models using Gaussian processes. Biostatistics 17(4), 619–633 (2016)

34. Zietz, B.P., Dunkelberg, H.: The history of the plague and the research on the causative agent Yersinia pestis. Int. J. Hyg. Environ. Health 207(2), 165–178 (2004). https://doi.org/10.1078/1438-4639-00259

Acknowledgements

We thank the research projects DIUBB 172409 GI/C and FAPEI at U. del Bío-Bío, Chile. AC thanks the research project DIUBB 183309 4/R at U. del Bío-Bío, Chile. IH thanks the program “Becas de doctorado” of Conicyt-Chile. Ian Hess and Francisco Novoa-Muñoz thank the program “Fortalecimiento del postgrado” of the project “Instalación del Plan Plurianual UBB 2016–2020” for its support.


© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Novoa-Muñoz, F., Espinoza, S.C., Pérez, A.C., Duque, I.H. (2019). Calibration of Population Growth Mathematical Models by Using Time Series. In: Antoniano-Villalobos, I., Mena, R., Mendoza, M., Naranjo, L., Nieto-Barajas, L. (eds) Selected Contributions on Statistics and Data Science in Latin America. FNE 2018. Springer Proceedings in Mathematics & Statistics, vol 301. Springer, Cham. https://doi.org/10.1007/978-3-030-31551-1_8


DOI: https://doi.org/10.1007/978-3-030-31551-1_8


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-31550-4

Online ISBN: 978-3-030-31551-1

eBook Packages: Mathematics and Statistics (R0)