Abstract

The worldwide dense deployment of optical fiber networks has been driven by the demand for the higher transmission capacity required by high-level data communications. Supporting all the higher Open Systems Interconnection (OSI) layers is the physical layer represented by the optical fiber, which, unfortunately, suffers from mechanical fragility. The robust operation of the whole network can therefore be jeopardized by mundane events such as the crushing of the fiber cable by a passing truck on a highway or a break caused by rodents. To deal with this issue, physical layer supervision is of the utmost importance and, over the years, reflectometry techniques have been developed and upgraded so that the protection of the optical fibers is ensured. This chapter briefly reviews such reflectometry techniques, with special focus on Optical Time Domain Reflectometry and on one of its most interesting recent developments, Photon-Counting Optical Time Domain Reflectometry. It further presents a technique for centimeter-resolution long-distance measurements of optical fibers in practical times and discusses its performance in detail.

2.1 Introduction

Although fiber optics was suggested as the best choice for data transmission in 1966 [1], optical communication systems only became commercially viable after the 1980s [2]. This was possible only because of the reduction of optical fiber losses and the development of semiconductor lasers and optical amplifiers. Undoubtedly, the major factor in the advent of the “information age” was the development of fiber optics along with microelectronics technology.

The advantages of optical fibers over other transmission methods (copper cables, satellites, etc.) are quite relevant: immunity to electrical or magnetic interference, low weight in relation to metallic cables, low manufacturing cost, and extremely broad transmission bandwidth, just to name a few. Despite their great reliability, optical fibers can sometimes be damaged by a variety of causes [3, 4]: in the ocean, for example, ships can break optical fibers and marine animals can chew the protective coating of the fiber; on land, optical fiber links follow physical infrastructures such as highways, railroads, and electric power transmission lines, which are exposed to unexpected hazards due to construction works, storms, or accidents that can end up damaging the fiber cable.

In long-distance optical telecommunications links, fiber monitoring is crucial due to the negative impact that faults in the optical fiber have on the power budget of the links, which substantially limits the achievable transmission rates. The impact is not limited, however, to high data rate applications, but extends to essential services such as banking, telephone, and internet service links, which can be interrupted.

Amongst the plethora of techniques that can be used for determining the occurrence of a fault as well as locating it along the fiber link, reflectometry systems are extremely attractive. The physical phenomenon underlying this class of techniques is Rayleigh scattering, which causes part of the light from a probe pulse propagating along the fiber to be scattered back to the sender, where it can be detected and processed to yield useful information about the fiber’s condition.

In this chapter, apart from a comprehensive review of reflectometry techniques applied to fiber optics, recent advancements in optical fiber monitoring using the so-called photon-counting Optical Time Domain Reflectometer will be presented. The technique offers a ground-breaking compromise between dynamic range, spatial resolution, and monitoring times by combining independent photon-counting OTDR strategies and a powerful fault location algorithm. In order to provide the reader with all the background necessary to grasp the concepts involved, a brief review of the main optical fiber monitoring strategies based on reflectometry will be presented in Sect. 2.2, followed by a detailed review of photon-counting OTDR protocols in Sect. 2.3. In Sect. 2.4, the foundations of the fault location algorithm, as well as its practical implementation, are provided. Section 2.5 closes the chapter by presenting a technique for centimeter-resolution optical fiber monitoring and discussing its results.

2.2 Reflectometry Strategies for Optical Fiber Monitoring

The Rayleigh scattering phenomenon was discovered in 1871 by John William Strutt (Lord Rayleigh) during observations of the scattering of sunlight in the Earth’s atmosphere [5, 6]. It is associated with the scattering of electromagnetic radiation from particles with radii much smaller than its wavelength [2, 7], and relates the scattered power \(P_s\) to the wavelength \(\lambda \) as

\[P_s \propto \frac{1}{\lambda ^4}\]

In optical fibers, the disordered nature of the silica glass medium causes microscopic fluctuations in the local density and, thus, in the index of refraction experienced by the guided light wave. Since such microscopic fluctuations are still orders of magnitude smaller than the wavelength of the transmitted light (usually in the near infrared range), energy losses due to the Rayleigh scattering of light are observed [2]. Even though the scattering is omnidirectional, a small portion of the scattered light is collected into the acceptance cone of the fiber and propagates in the opposite direction of the incoming light [8].

Simplified block diagram of a general reflectometry technique for optical fiber probing, adapted from [10]

Since the process of Rayleigh scattering is predominantly elastic, the wavelength of this back-propagating light is the same as that of the incoming light, so no spectral filtering can separate them [9]. An optical circulator, on the other hand, is a device that allows separation of light signals that travel in opposite directions inside the fiber, and can be used to evaluate the Rayleigh backscattered portion of light. By putting together a light source, an optical circulator, and a photodetector as in Fig. 2.1, a system for measuring the intensity of Rayleigh backscattered light is created. Furthermore, since losses and reflections along the fiber will be experienced by the backscattered light, it can be used to evaluate the condition of the fiber while only accessing one of its ends. Several such single-ended fiber probing techniques (so called because they access only one end of the fiber) making use of Rayleigh backscattering have been developed over the years.

The Optical Time Domain Reflectometry. The first single-ended method designed for gaining information about undesirable losses in an optical fiber was the Optical Time Domain Reflectometer (OTDR) [11]. The OTDR can be interpreted as a device that measures the impulse response of the fiber, i.e., its reflectivity as a function of time. To do so, a probe pulse is transmitted and the Rayleigh backscattered power is measured as a function of time by the photodetector connected to port 3 of the circulator, as seen in Fig. 2.1. Also, in order to measure the impulse response of the fiber, the condition of only one pulse inside the fiber at a time must be satisfied [24]. Given the index of refraction of the fiber and the speed of light in vacuum, one can associate the detection time with the position in the fiber where the scattering took place and, thus, losses can be located. Figure 2.2 depicts an example of an OTDR profile showcasing typical events that can be identified.
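As a minimal sketch of the time-to-position mapping described above, the round-trip delay of a backscattered signal can be converted into a distance along the fiber. The function name and the default group index of 1.468 (a typical value for standard single-mode fiber near 1550 nm) are assumptions made for illustration, not values taken from the chapter.

```python
C_VACUUM = 299_792_458.0  # speed of light in vacuum, in m/s


def time_to_position(round_trip_time_s, group_index=1.468):
    """Return the one-way distance (m) of the scattering point.

    The light travels to the scattering point and back, hence the
    factor of 2. The default group index is an assumed typical value.
    """
    return C_VACUUM * round_trip_time_s / (2.0 * group_index)


# A detection 100 microseconds after the probe pulse was launched
position_m = time_to_position(100e-6)
print(f"scattering point at about {position_m / 1000:.2f} km")
```

Under these assumptions, a delay of 100 µs maps to a point roughly 10.2 km from the fiber input.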

Example of OTDR profile showcasing common events that can be identified. The profile has been acquired with a standard OTDR device Anritsu MT9083C [12]

The accuracy of an event location is determined by the temporal width of the propagating pulses: as it approaches zero, the trace produced by the OTDR approaches the impulse response and the accuracy is enhanced. On the other hand, light sources have limited output peak powers and, as the width approaches zero, the total optical power carried by the probe pulse also approaches zero. Due to the intrinsic attenuation of the fiber, only short fiber lengths can be reached if the width of the probe pulse is too short.

Although enlarging the probe pulse allows for longer fibers to be evaluated, the accuracy is compromised. The spatial resolution, or accuracy in locating a loss event, and the dynamic range, or the maximum amount of optical losses that can be withstood by the probe pulse to reach a position of the fiber, constitute a compromise relationship for OTDR devices [2, 8]. It is interesting to note that, in order to achieve better spatial resolution, the quality of the optoelectronic devices must be enhanced. In other words, in order to be able to generate and detect short optical pulses, both a high-speed modulator and a high-speed detector must be employed.

Experimental and visual evidence of the trade-off between spatial resolution and dynamic range. In both panes, the same fiber is probed but with a 200 ns-wide pulse in the upper pane and 20 ns-wide pulse in the lower pane. As the dynamic range decreases from 24 to 13 dB, the accuracy in determining the position of the reflection peak (inset of both panes) increases. The profiles have been acquired with a standard OTDR device Anritsu MT9083C [12]

Experimental and visual evidence of the practical compromise between the spatial resolution and the dynamic range is presented in the two panes of Fig. 2.3. The results correspond to the OTDR profiles of the same fiber, but acquired with different widths of the probing pulse. While the results of the upper pane correspond to a 200 ns-wide pulse yielding a 24 dB dynamic range, the results of the lower pane were acquired with a 20 ns-wide pulse yielding a reduced 13 dB dynamic range. As the dynamic range decreases from one result to the other, however, it becomes clear from the insets of the figure that the spatial resolution has been greatly enhanced and the position of the reflection has been located with higher precision.
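The link between pulse width and spatial resolution can be made concrete with the common rule of thumb that two events are resolved when they are separated by at least half the length the pulse occupies in the fiber. The sketch below applies it to the two pulse widths quoted above; the group index of 1.468 is an assumed typical value, and the estimate ignores any limitation from the detector bandwidth.

```python
C_VACUUM = 299_792_458.0  # speed of light in vacuum, in m/s


def spatial_resolution(pulse_width_s, group_index=1.468):
    """Pulse-width-limited two-point resolution (m): c * tau / (2 * n)."""
    return C_VACUUM * pulse_width_s / (2.0 * group_index)


for width_ns in (200, 20):
    resolution_m = spatial_resolution(width_ns * 1e-9)
    print(f"{width_ns:>3} ns pulse -> about {resolution_m:.1f} m")
```

Under these assumptions, the 200 ns pulse corresponds to roughly 20 m and the 20 ns pulse to roughly 2 m, a tenfold improvement obtained at the cost of the lower pulse energy and hence lower dynamic range.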

The Optical Frequency Domain Reflectometry. While OTDRs use a pulsed optical source to measure an approximation of the impulse response of the fiber, the Optical Frequency Domain Reflectometer (OFDR) uses a continuous-wave (CW) light source to measure the transfer function of the fiber [13,14,15]. It is a well-known fact from theory of systems that the impulse response and the transfer function are uniquely related by the Fourier Transform, so the positions of losses can be determined by analyzing its response in the frequency domain.

Even though many different implementations of OFDR can be found in the literature, two distinct sets can be identified: coherent (C-) and incoherent OFDR (I-OFDR). In the former, the wavelength of a CW coherent light source is swept and the system measures the optical heterodyne beat between a portion of the probing light and the backscattered light [14]. In the latter, the frequency of a radio-frequency optical sub-carrier is swept and the beat between reference and backscattered signals is performed in the electrical domain instead of in the optical domain as for C-OFDRs [16]. In either case, utilizing the Fourier Transform, the frequency profile can be transformed back to the time domain so the events can be located in the fiber. Also, the speed of the wavelength or sub-carrier frequency sweep defines the spatial resolution of OFDR techniques.

A third flavor of OFDR is the Step-Wise Incoherent OFDR, where instead of using the sweeping speed of the optical sub-carrier to generate the heterodyne beat between reference and measured signals, the steady-state response of the fiber for each frequency value is measured [17]. It has been recently shown that this method allows for characterization of optical fibers even when the frequency range of the sweep is not sufficient for a Fourier Transform-based translation from frequency to time. In such a case, the complex spatial-dependent phasor output from the measuring apparatus is analyzed in the frequency domain with a high-dimensional signal processing operator to yield relevant spatial resolution.

Modifications to the Original OTDR. Several modifications have been proposed and demonstrated using the OTDR as a core idea, with the objective of increasing the dynamic range and signal-to-noise ratio (SNR), while enhancing the spatial resolution. The coded OTDR (COTDR) is a proposition that overcomes the tradeoff between SNR and resolution [18, 19]: instead of a single pulse, random code sequences are used to modulate the probing light, and the reflection point is located by correlating the backscattered light with the delayed version of the code.
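The correlation step at the heart of a coded OTDR can be illustrated with a toy example. This is only a sketch under simplifying assumptions: the code is a bipolar (±1) pseudo-random sequence, whereas real systems modulate a non-negative optical intensity; there is a single reflection; and the noise is Gaussian. All names and numerical values are hypothetical.

```python
import random


def cross_correlate(received, code):
    """Correlate the received samples with the code for every valid lag."""
    n_lags = len(received) - len(code) + 1
    return [
        sum(received[lag + k] * chip for k, chip in enumerate(code))
        for lag in range(n_lags)
    ]


def locate_reflection(received, code):
    """Return the lag (in samples) at which the correlation peaks."""
    correlation = cross_correlate(received, code)
    return max(range(len(correlation)), key=correlation.__getitem__)


rng = random.Random(0)  # fixed seed so the example is repeatable
code = [rng.choice((-1.0, 1.0)) for _ in range(127)]

true_delay = 40  # hypothetical round-trip delay of the reflection, in samples
received = [rng.gauss(0.0, 0.5) for _ in range(300)]  # noise floor
for k, chip in enumerate(code):
    received[true_delay + k] += chip  # delayed copy of the code

estimated_delay = locate_reflection(received, code)
print(estimated_delay)
```

Because the whole sequence contributes to the correlation peak, the energy of many chips is accumulated while the timing precision remains that of a single chip, which is how the approach relaxes the trade-off between SNR and resolution.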

Due to the difficulty in generating the codes necessary for COTDR, the Chaos OTDR was developed, where the random codes are generated by perturbing a semiconductor laser with optical injection [20, 21]. These methods, however, consider light sources with large spectral bandwidths and might not be fit for monitoring wavelength-division-multiplexing (WDM) networks. The Tunable-OTDR, on the other hand, makes use of highly coherent optical sources and optical amplifiers to generate spectrally narrow pulses adapted to WDM networks [22].

In the classical OTDR technique, detection involves the use of p-i-n and Avalanche Photodiodes since the backscattered optical power is continuous at the detector. The maximal Spatial Resolution, i.e., the minimum distance detectable between two events, therefore, is dependent on the detector’s bandwidth and on the pulse’s temporal width. Unfortunately, the reduction of the bandwidth has a direct negative impact on the noise-equivalent power (NEP) of the photodetector and imposes a limitation on the measurement range.

An alternative for increasing the Spatial Resolution is the Photon-Counting OTDR, which employs single-photon detectors. Such devices offer better sensitivity since the NEP is minimal given that a single photon is capable of generating a detection current [23]. With the advent of the Geiger-mode Single Photon Detector (SPD) in the telecommunication wavelength range, the Photon-Counting OTDR was proposed. Operating in the gated mode, such SPDs can attain high photon detection efficiencies and extremely low dark count rates [23]. The gated operation requires, however, an intelligent management system to reduce monitoring periods and enhance the acquisition of statistically relevant data [24].

The principal advantage of the Photon-Counting OTDR over standard OTDR strategies is a relaxed compromise between spatial resolution and dynamic range. The core of this enhancement is the high sensitivity of the single-photon detector, which allows for the detection of backscattered light from short pulses even after long distances [23]. Slower data acquisition is, however, a drawback of the Photon-Counting OTDR; it also stems from the characteristics of the single-photon detector, which undergoes a so-called dead time after a detection, during which no data is acquired [25, 26].

To mitigate the low data acquisition rate of Photon-Counting OTDRs, [24] used a train of gates that triggers the single-photon detector multiple times; the results show a decent achievable dynamic range with measurement times on the order of minutes. In a parallel work, [27] focused on increasing the resolution of Photon-Counting OTDR measurements, reaching the mark of centimeter resolution. These two works not only highlight the results of Photon-Counting OTDR techniques but are also of paramount importance to the results presented in Sects. 2.4 and 2.5. Therefore, they will be reviewed in the following.

High Dynamic Photon-Counting OTDR. The High-Dynamic Photon-Counting OTDR refers to the technique presented in [24], which makes use of semiconductor optical amplifiers (SOA) to carve high peak power pulses in a continuous wave signal output from a highly coherent tunable laser source (TLS). Given the input of the TLS, the stimulated emission process will cause the amplified light to be concentrated within the original TLS bandwidth even if the SOA is saturated [28]. Therefore, to achieve maximum output peak power, an electronic driver (DR) capable of supplying 50 ns-wide current pulses of 2 A drives the SOA.

By synchronizing the pulse emission of the SOA with a train of enabling pulses directed to the detector, an FPGA board time-tags the detections, which are then processed by a personal computer to create the fiber profile. The experimental setup of the High-Dynamic Photon-Counting OTDR is presented in Fig. 2.4, where a variable optical attenuator can be seen before the single-photon detector input; its role is to prevent the non-linear operation of the device by controlling the mean number of photons that impinge on the detector.

Experimental setup for the high-dynamic photon-counting OTDR, adapted from [29]

The time-tagging of the detection pulses performed by the FPGA board is further detailed in Fig. 2.5. Since the detector must undergo a dead time period after detections, the train of enabling pulses, or gates, must abide by this time. In order to acquire detections from all the positions of the fiber, the optical pulse and the train of gates are shifted in time; this is performed with the help of a digital delay generator (DDG) that creates pulses with an arbitrary time delay with respect to an input trigger. Furthermore, each detection is associated with a 16-bit word composed of the Gate and Pulse numbers, respectively, which is processed by a personal computer.

The results achieved by the High-Dynamic Photon-Counting OTDR are considerable in terms of dynamic range, resolution, and data acquisition rate. The system allows for minute-long measurements that output results with approximately 5 m resolution and a 32 dB dynamic range. Even if the spatial resolution is not in the centimeter range, all things considered, this technique is a good candidate for quickly evaluating an optical fiber.

High-Resolution Photon-Counting OTDR. A Time-to-Digital Converter (TDC) is a device that allows measurements of extremely short time spans between two events and can be used to increase the time resolution of a detection event. While in the High-Dynamic Photon-Counting OTDR detections are associated with the time stamp of the gate pulse that they correspond to, in the High-Resolution Photon-Counting OTDR a TDC is responsible for time-tagging the detection with respect to the optical pulse. Furthermore, the optical probes generated by the light source in this setup are extremely short, on the order of hundreds of femtoseconds. The combination of short pulse generation, high single-photon detector sensitivity, and fine TDC time-tagging allows the High-Resolution Photon-Counting OTDR to achieve centimeter resolution even in long-distance measurements.

The simplified block diagram of the experimental setup depicted in Fig. 2.6 shares many similarities with the one in Fig. 2.4, with the main differences being the replacement of the TLS by an Ultra Wideband Optical Source (UWS) and the time-tagging system, which, instead of being conducted by the FPGA board, is the responsibility of the TDC. The transform-limited pulses generated by the UWS are 115 fs wide and cover a broad spectral range from approximately 800 to 1600 nm [30]. In order to operate under these conditions, the pulse repetition rate is internally set to 5.8 MHz, which may conflict with the restriction of one pulse inside the fiber at a time. In order to reduce the pulse repetition rate and satisfy this condition, an SOA is connected after the UWS.
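The conflict between the 5.8 MHz repetition rate and the one-pulse-at-a-time condition can be checked with a short calculation: a new pulse may only be launched after the backscatter from the far end of the fiber has returned. The sketch below assumes a group index of 1.468 (a typical value, not taken from the chapter), and the function names are hypothetical.

```python
C_VACUUM = 299_792_458.0  # speed of light in vacuum, in m/s


def max_fiber_length(repetition_rate_hz, group_index=1.468):
    """Longest fiber (m) that holds a single pulse at this repetition rate."""
    return C_VACUUM / (2.0 * group_index * repetition_rate_hz)


def max_repetition_rate(fiber_length_m, group_index=1.468):
    """Highest repetition rate (Hz) keeping one pulse in the fiber at a time."""
    return C_VACUUM / (2.0 * group_index * fiber_length_m)


print(f"5.8 MHz source: fibers up to {max_fiber_length(5.8e6):.1f} m")
print(f"20 km fiber: rate up to {max_repetition_rate(20e3) / 1e3:.1f} kHz")
```

Under these assumptions, a 5.8 MHz pulse train satisfies the condition only for fibers shorter than about 18 m, whereas a 20 km link calls for a rate of roughly 5 kHz, which illustrates why the pulses must be gated down before they enter the fiber.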

High-resolution photon-counting OTDR experimental setup depicted as a simplified block diagram, adapted from [29]

The SOA is triggered by an electronic driver (DR) that generates 4 ns-wide 600 mA pulses with the goal of allowing the desired optical pulse to be transmitted instead of actually amplifying it. Again, the synchronization between the pulses generated by the UWS and the pulses that trigger the SOA is performed by a Digital Delay Generator (DDG). A personal computer is responsible for interpreting the detections of the TDC and composing the fiber profile, which can achieve a spatial resolution of 2.8 cm.

Unfortunately, the dynamic range achieved with this setup is limited to 14 dB due to the spectral filtering performed by an optical band-pass filter included in the experimental setup between the UWS and the SOA. The role of this filter is to narrow the bandwidth of the optical pulses so that tunability can be enforced [10]. Additionally, the detection mechanism, even though responsible for the high resolution achieved, only allows a single detection to be acquired per optical pulse transmitted through the fiber. This, in turn, results in a low data acquisition rate.

2.3 Fault Location Algorithms

The main objective of a physical layer supervision technique such as the OTDR is to identify the potential faults that cause undesirable power losses over the transmission channel. From the set of all possible events that can be identified in an OTDR profile, three subsets can be distinguished: events that are accounted for and are necessary for the link operation, such as power splitters, connectors, and filters; undesirable events that cause minor power losses, such as bends of the fiber whose radius of curvature is close to, but not smaller than, the critical radius; and, finally, events that are unaccounted for and substantially affect the power budget of the optical link, such as fiber breaks, extreme bends, and defective connectors.

Given a fiber profile, measured with the supervision technique that best suits the optical network, it is the role of an operator to distinguish between these three subsets. This is extremely relevant since, if events that fall into the third subset are identified, an in-field repair unit must be scheduled so that the link operation is compromised for as short a time as possible, or not at all: recent developments in Software Defined Optical Networks (SDON) allow re-routing of data traffic to avoid defective fibers while maintaining quality of service (QoS) [31].

It is also relevant, in this context, that the information regarding the position of the fiber to be repaired is accurate. As detailed in previous sections, this is intimately tied to the available spatial resolution of the supervision technique. However, it also demands considerable effort from the operator, especially when one considers the dense deployment of optical fibers that the world has experienced. Therefore, a digital signal processing tool capable of identifying the positions of faults in the digitized fiber profile could alleviate the workload and, eventually, hasten and simplify the scheduling of the in-field repair units.

Specifically in OTDR profiles, the power losses are directly associated with abrupt breaks in the trend of the data stream. Thus, in order to automatically identify the faults, an algorithm must be able to identify the positions of such trend breaks and their magnitudes. Furthermore, it is imperative that the algorithm operates even in the presence of noise, since the imperfection of the measurement apparatus will inherently produce noisy fiber profiles. The identification of trend breaks, also known as step filtering, is addressed in the literature, with a handful of algorithms fit to deal with the problem [32,33,34,35,36]. Recently, however, a comparative study was conducted where several such algorithms were tested, and the Adaptive \(\ell _1\) Filter was selected as the most promising candidate for the identification of multiple faults in fiber profiles in the presence of noise [37].

In order to analyze the OTDR profile and identify the faults, a simplified model of the expected trend breaks is created, in which the remaining features, such as contributions from coherent Rayleigh noise (CRN) [38], contributions from measurement uncertainty [24], or even reflection peaks, are considered as noise. The slope of the fiber, on the other hand, is a feature that cannot be neglected, since the algorithm would otherwise compensate for the continuous power loss with spurious trend breaks and overload the result with false positives. Fortunately, the inclusion of the slope in the model is a simple task and does not compromise the processing [37, 39]. The simplified model of an arbitrary OTDR profile \(y\left( d\right) \) (written in the logarithmic scale) is then written as:

\[y\left( d\right) = \alpha d + \sum _{d_i\in F} a_i\, u_1\left( d-d_i\right) \]

where \(\alpha \) corresponds to the negative slope of the profile (the intrinsic optical attenuation of the fiber), and \(d_i\in F\) are the eventual fault positions with amplitudes \(a_i\) that belong to the set F of all faults. \(u_1\) denotes the Heaviside function that represents discontinuous steps in the data series.
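To make the model tangible, the sketch below evaluates a noiseless profile built from a linear slope plus one Heaviside step per fault, following the definitions of \(\alpha \), \(d_i\), and \(a_i\) given above. The attenuation value, the fault positions, and the fault magnitudes are hypothetical and chosen only for illustration.

```python
def heaviside(x):
    """Unit step: 0 before the fault position, 1 at and after it."""
    return 1.0 if x >= 0 else 0.0


def otdr_model(d, alpha, faults):
    """Noiseless profile value (dB) at distance d (m).

    alpha  -- slope in dB/m (negative for an attenuating fiber)
    faults -- iterable of (position_m, amplitude_dB) pairs, i.e. the set F
    """
    return alpha * d + sum(a_i * heaviside(d - d_i) for d_i, a_i in faults)


# Hypothetical link: 0.2 dB/km attenuation and two lossy events
example_alpha = -0.2e-3
example_faults = [(2000.0, -1.5), (8400.0, -3.0)]

for distance_m in (1000.0, 5000.0, 10000.0):
    level_db = otdr_model(distance_m, example_alpha, example_faults)
    print(f"{distance_m / 1000:5.1f} km: {level_db:6.2f} dB")
```

Each fault lowers the profile by its amplitude for every position beyond it, which is exactly the trend break the fault location algorithm is expected to recover.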

Identifying the set F is the ultimate goal of the fault location algorithm, as it will characterize the fiber in terms of the potential fault events that are present. Since the model is defined and the functions that are expected to compose the profile \(y\left( d\right) \) are known, the Adaptive \(\ell _1\) Filter implements a basis pursuit problem that recovers the set F given a larger set \(\mathrm{I\!F}\) of all the possible faults. The procedure involves a mixed \(\ell _1/\ell _2\) norm minimization, where a balance between the best approximation of the original signal and the smallest number of components in the set F is demanded [37]. The result is a highly accurate identification of not only the fault positions but also their magnitudes; Fig. 2.7 presents the results of the Adaptive \(\ell _1\) Filter algorithm on a standard OTDR trace, where the selections accurately match the positions and magnitudes of the faults.
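One common way of writing such a mixed \(\ell _1/\ell _2\) problem is the weighted form below. It is offered only as an illustrative sketch: the exact cost function and weight update rule of the Adaptive \(\ell _1\) Filter are those of [37] and are not reproduced here, and the symbols \(A\), \(x\), \(\lambda \), and \(w_j\) are assumptions introduced for this example.

\[\hat{x} = \arg \min _{x}\; \frac{1}{2}\left\| y - A x\right\| _2^2 + \lambda \sum _{j} w_j \left| x_j\right| \]

In this reading, \(y\) is the digitized profile, the columns of \(A\) are one linear ramp (for the slope) plus one Heaviside step for every candidate position in \(\mathrm{I\!F}\), and \(x\) holds the corresponding amplitudes. The \(\ell _2\) term rewards fidelity to the measured profile, the \(\ell _1\) term penalizes the number of active steps, and the nonzero step entries of \(\hat{x}\) give the positions and magnitudes of the faults in F.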

Adaptive \(\ell _1\) Filter selections for a standard OTDR profile. Faults at 2 and 8.4 km have been successfully identified as well as the corresponding magnitudes in dB, adapted from [40]

The versatility provided by such a signal processing tool is extremely broad from the perspective of optical network supervision. First and foremost, it alleviates the workload of an operator, who may access only the output of the filter as an event list instead of inspecting the profile in search of the positions and magnitudes of faults. Furthermore, the massive deployment of optical fibers driven by the ever-increasing need for higher data transmission capacity poses a problem when one considers supervising and managing all the links; an automated procedure can process multiple profiles and identify the ones that require dedicated attention. In the next section, a procedure that allows minute-time long-distance supervision with increased resolution is presented, having the fault detection algorithm at its core.

2.4 Long-Distance Centimeter-Resolution Monitoring Mediated by Fault Location Algorithm

Characterizing an optical fiber with high precision is, as discussed in the previous sections, an important ingredient of modern optical networks, which allows for robust management of the optical network from the operator’s point of view and for higher quality of service to the user. More often than not, it also translates into cost reduction due to the simplification of tasks. In this context, the more precise the identification and characterization of an event, the better, a desire that often runs into the compromise between dynamic range and spatial resolution.

The Photon-Counting OTDR strategies presented in Sect. 2.2 offer distinct but complementary characteristics with respect to this compromise, i.e., the High-Dynamic Photon-Counting OTDR offers high dynamic range (32 dB) while delivering decent spatial resolution (6 m), and the High-Resolution Photon-Counting OTDR offers extremely high resolution (3 cm) while exhibiting considerable dynamic range (14 dB). One other feature that distinguishes these strategies is the speed of data acquisition, which is optimized in the case of the former but extremely slow for the latter. In addition, an algorithm capable of accurately identifying the fault positions and magnitudes was discussed in Sect. 2.3.

By making use of these three elements and concatenating their outputs, it is possible to achieve fast high-resolution measurements of long-distance links. In this hybrid structure, the high dynamic range of the system depicted in Fig. 2.4 and the high resolution provided by the system depicted in Fig. 2.6 are harnessed by mediating their operation with the Adaptive \(\ell _1\) Filter. A fully automatic monitoring structure was developed [10] and the chart shown in Fig. 2.8 depicts the steps taken by the system to inspect a fiber optical link.

Flowchart of the Hybrid and Automatic \(\nu \)-OTDR, adapted from [40]

By following the chart depicted in Fig. 2.8, four steps are identified and are as follows. First, the system uses the High-Dynamic Photon-Counting OTDR to obtain a fiber profile with a high acquisition rate and coarse spatial resolution; in a 20 km link, for example, the acquisition rate is still quite high and the system allows the determination of the last point of the profile with a signal-to-noise ratio of 10 dB in just under two minutes [28]. In the second step, the digital signal processing step, the Adaptive \(\ell _1\) Filter attempts to identify those positions which might contain events; this step is also quite fast with accurate results in just under a minute [24].

In the third step, the High-Resolution Photon-Counting OTDR analyzes the regions around the positions estimated in step two, i.e., those selected by the algorithm; even though this third step would take substantially longer than the others if it were to inspect the whole fiber, the fact that it only needs to acquire data from a small set of positions allows it to be performed in practical times, on the order of a few minutes. Finally, the fourth step involves analyzing the results of the third step once again with the fault location algorithm and providing a highly accurate event list to the operator.
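The time saving of the third step comes from inspecting only small windows around the candidate positions. A minimal sketch of that selection is given below; the window half-width, the candidate list, and the function names are hypothetical and are not taken from the system described in the chapter.

```python
def inspection_windows(candidates_m, half_width_m, fiber_length_m):
    """Build merged (start, stop) windows around candidate fault positions."""
    windows = []
    for position in sorted(candidates_m):
        start = max(0.0, position - half_width_m)
        stop = min(fiber_length_m, position + half_width_m)
        if windows and start <= windows[-1][1]:
            # Overlaps the previous window: extend it instead of adding one
            windows[-1] = (windows[-1][0], max(windows[-1][1], stop))
        else:
            windows.append((start, stop))
    return windows


def inspected_fraction(windows, fiber_length_m):
    """Fraction of the fiber covered by the fine-resolution measurement."""
    return sum(stop - start for start, stop in windows) / fiber_length_m


# Hypothetical candidates from the coarse step on a 20 km link
windows = inspection_windows([2000.0, 2008.0, 8400.0], 6.0, 20000.0)
print(windows)
print(f"{inspected_fraction(windows, 20000.0):.2%} of the fiber inspected")
```

In this illustrative case, the fine measurement covers only a fraction of a percent of the link, which is why the slow high-resolution acquisition can still finish in practical times.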

Since each of the building blocks of Fig. 2.8 has already been described individually in Sects. 2.2 and 2.3, the setup of Fig. 2.9 presents the full measurement apparatus. It is interesting to note, however, that the individual setups can be easily identified in the full setup.

Architecture of the photon-counting OTDR for long-distance centimeter resolution monitoring, adapted from [40]

A personal computer is responsible for managing the coexistence of the apparatuses at each of the measurement steps while also being in charge of processing the acquired data with the fault location algorithm. In the first step, the optical switch’s output must be connected to its upper input so that coarse monitoring can be performed. At the same time, the electrical switch routes the signal coming from the FPGA to the single-photon detector. In the third step, fine monitoring, both switches change their outputs when triggered by the personal computer [10, 24, 29].

An important remark concerns the abundance of devices in the setup of Fig. 2.9, where the only device that is shared between both strategies is the single-photon detector. Unfortunately, the fine-resolution Photon Counting OTDR is extremely dependent on a light source capable of generating short optical pulses. In order to generate such short pulses in time, the spectrum of the optical source is broadened, making it unfeasible to use the same optical source for both Photon Counting OTDR strategies [41]. That is because the coarse Photon Counting OTDR relies on a narrow-linewidth continuous optical source so that the high peak power pulses can be generated. Likewise, the extremely different characteristics of the electrical pulses sent to the semiconductor optical amplifiers in each part of the setup do not allow for the devices to be reused.

2.5 Monitoring Different Optical Fiber Links

To demonstrate the capabilities of the long-distance centimeter-resolution system presented in the previous section, different optical fiber links have been selected. These links make it possible to: validate the characterization of an event at long distances with centimeter resolution within minutes; and verify that the wavelength of the probing signal can be tuned so that the system can be adapted to monitor WDM optical networks.

First, the monitoring system was tested on a long-haul fiber with a total length of 36 km. This fiber is composed of three 12 km fibers connected in series, so at least three events are expected to be identified by the fault location algorithm: two events at the connections between the stretches and one event corresponding to the end of the fiber. The acquisition of the High-Dynamic Photon Counting OTDR step takes 150 s. Considering the 6 m spatial resolution achievable by the High-Dynamic Photon Counting OTDR, the 36000 m profile yields a digitized data series containing 6000 points. This profile is processed by the Adaptive \(\ell _1\) Filter in under two minutes, and both the measured profile and the filter selections are displayed in Fig. 2.10.

It is interesting to note that the filter identified all the expected fault positions but also output a few false positives in the first positions. The first false positive corresponds to the positive step at the beginning of the fiber, i.e., the transition between 0 and the first measured point. Since the filter consistently detects such transitions in all the profiles, it can be neglected for the purpose of fault analysis. The second false positive, however, is not associated with any known feature and must not be neglected.

HD-\(\nu \)-OTDR trace and the filtered signature of the \(\sim \)36 km fiber link, adapted from [40]

The list of events output by the Adaptive \(\ell _1\) Filter is the following: 0 m; \(1011\pm 6\) m; \(12303\pm 6\) m; \(24603\pm 6\) m; and \(36830\pm 6\) m. As already mentioned, the first position (0 m) is neglected and not used in the analysis. The next step is to transmit the findings of the filter to the High-Resolution Photon Counting OTDR, which inspects the fiber in the regions around the fault candidates. This step has two main effects: the first, already discussed, is the centimeter-resolution inspection of the events present in the profile; the second is the eventual elimination of false positives generated by the first run of the algorithm. This is accomplished by analyzing the results once again with the Adaptive \(\ell _1\) Filter. If there is no fault at a candidate position, the detailed centimeter-resolution measurement will show this and no selections will be made by the algorithm.

In Fig. 2.11, the results of the third and fourth steps of the chart are presented. Along with the set of positions to be further inspected, the High-Resolution Photon Counting OTDR also requires a range around each position within which the fiber should be probed. This range is arbitrarily set at \(\pm 50\) m, which translates into 100 m of fiber per candidate to be analyzed with a 3 cm resolution (around 3000 points in the digitized data series). The third step takes 150 s on average for each candidate, while the fourth step lasts around 75 s, also on average, for each candidate. The total accumulated time amounts to 900 s, since four regions were inspected during these steps; the candidate at \(1011\pm 6\) m was dismissed as an event and is not depicted in Fig. 2.11.
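The bookkeeping behind these numbers can be reproduced with a few lines. The sketch below is illustrative only: the helper names `inspection_windows` and `points_in_span` are hypothetical, and the candidate list is the one reported by the filter for the 36 km link.

```python
def inspection_windows(candidates_m, half_range_m=50.0, skip_origin=True):
    """Return (start, stop) windows in metres around each fault candidate.

    The candidate at 0 m (start of the fiber) is skipped by default,
    mirroring the choice described in the text.
    """
    windows = []
    for z in candidates_m:
        if skip_origin and z == 0:
            continue
        windows.append((max(0.0, z - half_range_m), z + half_range_m))
    return windows


def points_in_span(span_m, resolution_m):
    """Number of samples needed to cover span_m at the given resolution."""
    return round(span_m / resolution_m)


candidates = [0, 1011, 12303, 24603, 36830]   # metres, from the coarse analysis
windows = inspection_windows(candidates)      # four windows of 100 m each
coarse_points = points_in_span(36000, 6)      # 6000 points in the coarse profile
fine_points = points_in_span(100, 0.03)       # 3333 points, i.e. "around 3000"
```

With four windows, the per-candidate averages of 150 s and 75 s give the 900 s quoted for steps three and four.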

Results of events validated during the final steps of the fault detection routine, adapted from [40]

In this first test, the fault detection method described in Fig. 2.8 was able to deliver high-resolution event detection in a 36 km fiber in under 20 min. Additionally, the event list produced is double-checked by the processing routine, which eliminates false positives from the first algorithm analysis. An important note on this methodology is that, as the number of candidates detected in the second step increases, the total procedure time also increases, since all the candidates must be further investigated in the High-Resolution step.
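The dependence of the total procedure time on the number of candidates can be made explicit with a simple linear budget. This is a rough sketch using the average durations reported for the 36 km test; the function name `total_time_s` is hypothetical, and the 120 s filter time is an upper bound taken from the "under two minutes" figure rather than a measured value.

```python
def total_time_s(n_candidates, coarse_s=150.0, filter_s=120.0,
                 fine_s=150.0, refilter_s=75.0):
    """Estimated duration of the four-step routine in seconds.

    Steps one and two run once; steps three and four run once per candidate.
    Default durations are the averages quoted for the 36 km link.
    """
    return coarse_s + filter_s + n_candidates * (fine_s + refilter_s)


budget = total_time_s(4)      # 1170.0 s for the four inspected regions
budget_min = budget / 60.0    # 19.5 min, consistent with "under 20 min"
```

Each additional candidate adds about 225 s under these assumptions, which is why false positives in step two have a noticeable cost.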

An important feature of optical network monitoring is adaptability, i.e., being able to evolve with modern architectures and technologies. Wavelength-division multiplexing is one major recent evolution in optical networking and is likely to be present in most optical fiber links deployed in the future. Fortunately, both the High-Dynamic and the High-Resolution Photon Counting OTDR offer what is called tunability, the capacity to tune the wavelength of operation to match a specific channel, thus adapting to WDM networks.

Results of events validated during the final steps of the fault detection routine, adapted from [40]

The evaluation of the monitoring solution in such a network was performed by setting up a simple WDM link with a feeder fiber of \(\sim \)2 km and two wavelength-dedicated fibers of \(\sim \)3.6 and \(\sim \)12 km. These two fibers, in turn, are connected to the feeder fiber through a passive wavelength multiplexer that divides the incoming light spectrum into independent channels. The specific device employed in the experiments is tuned to channel 37 (Fig. 2.12a) and channel 40 (Fig. 2.12b) of the ITU-T G.694.1 WDM grid. In Fig. 2.12, the monitoring and fault finding results for the first two steps of the monitoring procedure are depicted. Notable is the clear distinction between the measurements of channel 37 (Fig. 2.12a) and channel 40 (Fig. 2.12b), indicating that the probing signal is contained within the bandwidth ascribed to the respective channel and does not leak into adjacent channels.
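To give a sense of where these two channels sit in the spectrum, the sketch below converts channel numbers to center wavelengths. The grid formula, 193.1 THz plus an integer multiple of the channel spacing, is the one used by ITU-T G.694.1. The mapping from the channel label to that integer is an assumption: the text does not state which numbering the multiplexer uses, so the widespread 100 GHz convention in which channel N sits at 190.0 THz + N × 0.1 THz is assumed here, and the function names are hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def grid_frequency_thz(n, spacing_ghz=100.0):
    """Nominal central frequency on the DWDM grid: 193.1 THz + n * spacing."""
    return 193.1 + n * spacing_ghz / 1000.0


def channel_index(channel_number):
    """ASSUMED vendor-style numbering: channel N at 190.0 THz + 0.1 THz * N,
    which corresponds to grid index n = N - 31 on the 100 GHz grid."""
    return channel_number - 31


def wavelength_nm(frequency_thz):
    """Vacuum wavelength in nm for a frequency given in THz."""
    return C_M_PER_S / (frequency_thz * 1e12) * 1e9


ch37_nm = wavelength_nm(grid_frequency_thz(channel_index(37)))  # about 1547.72 nm
ch40_nm = wavelength_nm(grid_frequency_thz(channel_index(40)))  # about 1545.32 nm
```

Under this assumed numbering the two channels are 300 GHz apart, roughly 2.4 nm, so a probing signal confined to one channel is well separated from the other.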

Two distinct features of the fiber profiles displayed in Fig. 2.12 deserve comment: the false positives detected by the fault location algorithm after the fiber end, apparent in Fig. 2.12a; and the increased counts right after the reflection peak at the end of the fiber, apparent in Fig. 2.12b. These two effects are intimately related to the intrinsic physical properties of the single-photon detector and can be either bypassed or understood by characterizing and monitoring the operation of this device.

The false positives are associated with the noisy trend after the fiber end, which, unfortunately, is interpreted by the algorithm as a series of trend breaks. This feature, however, can be easily neglected in the final analysis, since the number of counts registered by the single-photon detector after the end of the fiber is equal to that expected from the detector’s dark count rate, a characteristic described in the device’s datasheet [42]. Therefore, by monitoring the number of counts, the measurement apparatus is capable of identifying the point after which the counts are not associated with Rayleigh backscattered photons but merely with the detector’s noise. Detections at positions dominated by dark counts are discarded after the signal processing step and are not fed to the subsequent monitoring steps.
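One possible way to implement this screening is sketched below. It is not the authors' implementation: the function names, the Poisson-based threshold of a few standard deviations above the expected dark counts, and the representation of the profile as counts per spatial bin are all assumptions made for illustration. The expected number of dark counts per bin would in practice be derived from the datasheet dark count rate and the acquisition settings.

```python
import math


def noise_floor_start(counts, expected_dark, k_sigma=3.0):
    """Index of the first bin of the trailing dark-count-only region.

    Scans from the end of the profile and stops at the last bin whose counts
    exceed expected_dark + k_sigma * sqrt(expected_dark) (assumed Poisson noise).
    Returns len(counts) if even the last bin is above the threshold.
    """
    threshold = expected_dark + k_sigma * math.sqrt(expected_dark)
    start = len(counts)
    for i in range(len(counts) - 1, -1, -1):
        if counts[i] > threshold:
            break
        start = i
    return start


def drop_dark_count_candidates(candidates_m, counts, bin_m, expected_dark,
                               k_sigma=3.0):
    """Keep only candidates located before the dark-count-only region."""
    limit_m = noise_floor_start(counts, expected_dark, k_sigma) * bin_m
    return [z for z in candidates_m if z < limit_m]
```

For example, with `counts = [900, 700, 500, 2000, 12, 9, 11, 8]`, 6 m bins, and 10 expected dark counts per bin, the noise-only region starts at bin 4 (24 m), so a candidate at 30 m is dropped while candidates at 12 m and 18 m are kept.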

The increase in the number of counts after the high-reflectivity event, which creates an exponential skirt at the end of the fiber profile in Fig. 2.12b, is associated with the afterpulse effect of single-photon detectors [43]. This effect is related to the trapping of charges inside the semiconductor junction and scales with the input power directed to the detector. Since the appearance of such high-reflectivity events is not controllable by the monitoring apparatus but depends on the quality of the connections along the link, there is no means of avoiding this feature in the profile. Furthermore, the fault location algorithm is likely to create false positives due to this feature, since it is not described in its simplified model of the profile. Indeed, it can be noted that a set of false positives has been selected by the algorithm. Fortunately, the false positives associated with the afterpulse effect are located in the vicinity of a real event and can be later processed by the High-Resolution monitoring without spending otherwise unnecessary monitoring time. Additionally, the afterpulse effect has less impact on the High-Resolution measurement due to the data acquisition method used in this step [44].

In Fig. 2.13, the results of the third and fourth steps of the monitoring routine are depicted. The false positives identified in the High-Dynamic OTDR profile of channel 40 are not depicted, since they were excluded from the event list after the analysis in the fourth step. Also, since the connection between the feeder fiber and the wavelength multiplexer around 2 km has been identified in both traces, it is depicted only once, in Fig. 2.13a.

Results of the third and fourth monitoring steps in the WDM optical link. Tunability of the monitoring apparatus allows for measuring each channel individually, adapted from [40]

Monitoring both channels took approximately 25 min. The fact that two false positives were identified by the fault location algorithm in the first analysis caused the overall time to increase significantly. Nevertheless, the possibility of tuning the wavelength of operation in both the High-Dynamic and High-Resolution measurement steps allowed the system to accurately probe the WDM link and identify the correct event positions and magnitudes with high precision.

2.6 Tunability, Coherence, Chromatic Dispersion and Spatial Resolution

In order to be compatible with WDM networks (as specified in ITU-T G.694.1), a tunable OTDR must be capable of selecting not only the center wavelength of emission but also the spectral width of the optical signal. Current Dense WDM (DWDM) networks operate with either a spectral width of 0.8 nm and 40 channels or 0.4 nm and 80 channels, although a 0.2 nm standard (the so-called Ultra-Dense WDM) has also been proposed [45]. The necessity of a tunable OTDR for monitoring WDM networks becomes clear from the result of Fig. 2.14a, which shows an OTDR profile of a WDM network composed of a feeder fiber, a WDM splitter, and four user fibers. Since the source used for this measurement has a broad spectral width, the backscattered power of all channels overlapped, and the channels could not be distinguished in the resulting profile except by their end-fiber reflection peaks.
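The spectral widths quoted above can be related to the frequency spacings of the DWDM grid through \(\varDelta \lambda \approx \lambda ^2 \varDelta f / c\). The sketch below evaluates this relation; associating 0.8, 0.4, and 0.2 nm with 100, 50, and 25 GHz spacings and using a 1550 nm center wavelength are assumptions made for the example, and the function name is hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def spacing_nm(spacing_ghz, center_nm=1550.0):
    """Wavelength spacing (nm) for a frequency spacing (GHz) near center_nm,
    using the small-interval approximation d_lambda = lambda^2 * d_f / c."""
    lam_m = center_nm * 1e-9
    return lam_m ** 2 * spacing_ghz * 1e9 / C_M_PER_S * 1e9


dwdm_100 = spacing_nm(100.0)   # about 0.80 nm
dwdm_50 = spacing_nm(50.0)     # about 0.40 nm
udwdm_25 = spacing_nm(25.0)    # about 0.20 nm
```

These values indicate the spectral window within which the probing pulse has to fit if it is to monitor a single channel without leaking into its neighbors.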

Results of events validated during the final steps of the fault detection routine, adapted from [40]

In contrast with Fig. 2.14a, Fig. 2.14b presents the profiles of the same WDM network when a spectrally tailored optical source was employed for the measurements. The result is twofold: the links can be individually monitored without overlap between the Rayleigh backscattered power of adjacent channels; on the other hand, monitoring each link individually causes the monitoring time to increase substantially. As commented in Sect. 2.2, tunability is easily enforced for the High-Dynamic Photon Counting OTDR since it depends solely on the TLS connected to the setup. For the High-Resolution measurements, tunability involves the inclusion of a tunable filter; the achievable resolution can thus be compromised by the spectral width of the pulse.

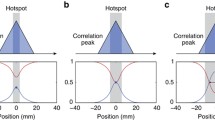

The narrower the spectrum of the optical probing pulse tailored by the tunable filter, the worse the spatial resolution will be, because transform-limited pulses broaden in time as the spectrum is filtered. This creates a fine balance between three distinct effects: using a spectrally broader probing pulse reduces the contribution of Coherent Rayleigh Noise (CRN) to the OTDR profile [37], where CRN denotes the intensity fluctuations caused by the interference of several light waves arriving at the detector with random phases [46]; using a spectrally narrow pulse broadens the pulse in time, degrading the spatial resolution [47]; and spectrally broader pulses are more affected by chromatic dispersion while propagating through the fiber, which, in turn, also degrades the spatial resolution. To illustrate these effects, Fig. 2.15a depicts experimentally measured spatial resolutions for different spectral widths tailored by the tunable filter, and Fig. 2.15b depicts the CRN effect on an OTDR profile measured with the High-Dynamic Photon Counting OTDR with narrow and widened spectra.

Impacts of spectral tailoring the probing pulse on the achievable spatial resolution and measurement noise, adapted from [40]

In Fig. 2.15a, the detector’s jitter is indicated as a reference, since it may also limit the spatial resolution. Additionally, the model used to fit the experimental data was the following [40]:

where a corresponds to \(2 \cdot L \cdot D\) and b corresponds to the pulse width of the Fine Resolution Photon Counting OTDR UWS at full bandwidth. Also, we have used an approximation for the dispersion factor D and assumed that it remains constant within \(\varDelta \lambda \). From the results, it is clear that the slope for \(\varDelta \lambda \ge 1\) nm is well fitted, but the experimental results below this value show some inconsistencies with the fit. We conjecture that this behaviour arises because the pulse peak power for reduced \(\varDelta \lambda \) is very low, so that the measurement results may be distorted. The back-reflected power also diminishes as the fiber length grows, which is observed as a larger discrepancy between experimental and fitted data for the longer fibers of 24.6 and 32.8 km. The pulse width enlargement for low values of \(\varDelta \lambda \) is due to the pulse being transform-limited, i.e., it is as short as its spectral bandwidth permits.
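The two pulse-broadening contributions discussed here can be estimated separately with textbook relations. The sketch below is not the fit model of [40]; it only evaluates the round-trip dispersion term \(2 \cdot L \cdot D \cdot \varDelta \lambda \) and the transform limit of a Gaussian pulse. The dispersion coefficient of 17 ps/(nm km), the 1550 nm center wavelength, the Gaussian time-bandwidth product of 0.441, the group index of 1.468, and all function names are assumptions chosen for illustration.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def dispersion_broadening_ps(length_km, dlambda_nm, d_ps_nm_km=17.0):
    """Round-trip chromatic-dispersion broadening, a * dlambda with a = 2*L*D.

    d_ps_nm_km is an assumed typical value for standard fiber near 1550 nm.
    """
    return 2.0 * length_km * d_ps_nm_km * dlambda_nm


def transform_limit_ps(dlambda_nm, center_nm=1550.0, tbp=0.441):
    """Shortest pulse duration allowed by a spectral width dlambda_nm,
    assuming a Gaussian pulse (time-bandwidth product tbp)."""
    dnu_hz = C_M_PER_S * dlambda_nm * 1e-9 / (center_nm * 1e-9) ** 2
    return tbp / dnu_hz * 1e12


def spatial_resolution_cm(pulse_ps, group_index=1.468):
    """Two-way spatial resolution c * dt / (2 n) for a pulse of duration dt."""
    return C_M_PER_S * pulse_ps * 1e-12 / (2.0 * group_index) * 100.0


disp = dispersion_broadening_ps(12.3, 1.0)    # about 418 ps at 12.3 km, 1 nm
limit_wide = transform_limit_ps(1.0)          # about 3.5 ps for 1 nm
limit_narrow = transform_limit_ps(0.1)        # ten times longer for 0.1 nm
res = spatial_resolution_cm(100.0)            # about 1 cm for a 100 ps pulse
```

Under these assumptions, dispersion dominates for wide spectra and long fibers, while the transform limit grows as the spectrum is narrowed, which matches the qualitative trade-off described in the text.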

2.7 Conclusions

An automatic, highly accurate, and fast optical fiber link monitoring system can alleviate several tasks in optical network management while also reducing operational expenses. Reflectometry techniques based on Rayleigh scattering have met the need for single-ended probing of optical fibers, but further improvements are still possible to enforce compatibility with different network architectures, allow for coexistence between data and monitoring signals, and increase the speed at which events are evaluated. This chapter provided a brief overview of such techniques while focusing on the Photon-Counting OTDR, a modification of the original OTDR format in which a single-photon detector, instead of a linear photodetector, performs the data acquisition.

To illustrate the potential of Photon-Counting OTDR strategies and the benefits of a fault finding algorithm, a centimeter-resolution long-distance optical fiber monitoring setup has been presented in detail. Tunability to adapt monitoring to WDM networks is a feature of this monitoring apparatus and the results are achieved in practical times. The device incorporates two Photon-Counting OTDR strategies [24, 27] and a fault finding algorithm capable of accurately identifying fault candidates in a fiber profile. The employed algorithm enables the automation of the whole process so that event candidates can be evaluated with high resolution; furthermore, it allows for the results to be output automatically without the need of an operator. A video containing an experimental run of the method can be found in [48].

The presented monitoring apparatus has been experimentally verified in long-range, mid-range, and wavelength multiplexed optical fiber links. The process involves four distinct steps:

- the High Dynamic Photon-Counting OTDR quickly acquires the fiber profile;

- the Adaptive \(\ell _1\) Filter identifies potential candidates in the profile;

- the High Resolution Photon-Counting OTDR evaluates the positions around event candidates;

- the Adaptive \(\ell _1\) Filter is once again used to determine real candidates and their precise positions.

This work paves the way for low-cost, highly reliable, automatic, and fast monitoring of optical fiber links. Even though the results validate the technique, one important improvement to the current setup would allow for a simplified implementation and reduced total cost. The UWS of the High Resolution Photon-Counting OTDR can be replaced by a gain-switched laser for short pulse generation.

References

1. K. Kao, G.A. Hockham, Dielectric-fibre surface waveguides for optical frequencies, in Proceedings of the Institution of Electrical Engineers, vol. 113 (IET, 1966), pp. 1151–1158

2. G.P. Agrawal, Fiber-Optic Communication Systems (Wiley, 1997)

3. J.P. Kilmer, A. DeVito, H.H. Yuce, C.J. Wieczorek, J.P. Varachi, W.T. Anderson, Optical cable reliability: lessons learned from post-mortem analyses, in Fiber Optics Reliability: Benign and Adverse Environments IV, International Society for Optics and Photonics, vol. 1366 (1991), pp. 85–92

4. S. Zemon, A. Budman, T. Wei, E. Eichen, K. Ma, Decay of transmitted light during fiber breaks-implications for break location. J. Light. Technol. 12, 1532–1535 (1994)

5. J.W. Strutt, XV. On the light from the sky, its polarization and colour. Lond. Edinb. Dublin Philos. Mag. J. Sci. 41, 107–120 (1871)

6. J.W. Strutt, XXXIV. On the transmission of light through an atmosphere containing small particles in suspension, and on the origin of the blue of the sky. Lond. Edinb. Dublin Philos. Mag. J. Sci. 47, 375–384 (1899)

7. J.W. Strutt, LVIII. On the scattering of light by small particles. Lond. Edinb. Dublin Philos. Mag. J. Sci. 41, 447–454 (1871)

8. D. Derickson, Fiber Optic - Test and Measurement (Prentice Hall, 1998)

9. L. Kissel, R. Pratt, Rayleigh scattering elastic photon scattering by bound electrons, in Atomic Inner-Shell Physics. Physics of Atoms and Molecules, 1st edn. (Springer, Berlin, 1985), pp. 465–532

10. F. Calliari, Automatic high-dynamic and high-resolution photon counting OTDR for optical fiber network monitoring. Master’s thesis, PUC-Rio (2017)

11. M.K. Barnoski, M.D. Rourke, S.M. Jensen, R.T. Melville, Optical time domain reflectometer. Appl. Opt. 16, 2375–2379 (1977)

12. Anritsu, MT9083 Series - MT9083A/B/C ACCESS master. Technical report, Specifications Sheet (2011)

13. R.L. Jungerman, D.W. Dolfi, Frequency domain optical network analysis using integrated optics. IEEE J. Quantum Electron. 27, 580–587 (1991)

14. J.P. von der Weid, R. Passy, G. Mussi, N. Gisin, On the characterization of optical fiber network components with optical frequency domain reflectometry. J. Light. Technol. 15, 1131–1141 (1997)

15. K. Yuksel, M. Wuilpart, V. Moeyaert, P. Mégret, Optical frequency domain reflectometry: a review, in ICTON’09. 11th International Conference on Transparent Optical Networks, 2009 (IEEE, 2009), pp. 1–5

16. G.C. Amaral, A. Baldivieso, J.D. Garcia, D.C. Villafani, R.G. Leibel, L.E.Y. Herrera, P.J. Urban, J.P. von der Weid, A low-frequency tone sweep method for in-service fault location in subcarrier multiplexed optical fiber networks. J. Light. Technol. 35, 2017–2025 (2017)

17. J. Nakayama, K. Iizuka, J. Nielsen, Optical fiber fault locator by the step frequency method. Appl. Opt. 26, 440–443 (1987)

18. N. Park, J. Lee, J. Park, J.G. Shim, H. Yoon, J.H. Kim, K. Kim, J.O. Byun, G. Bolognini, D. Lee et al., Coded optical time domain reflectometry: principle and applications, in Asia-Pacific Optical Communications, International Society for Optics and Photonics (2007), p. 678129

19. R. Liao, M. Tang, C. Zhao, H. Wu, S. Fu, D. Liu, P.P. Shum, Harnessing oversampling in correlation-coded OTDR (2017). arXiv:1705.05241

20. Z. Xie, L. Xia, Y. Wang, C. Yang, C. Cheng, D. Liu, Fiber fault detection with high accuracy using chaotic signal from an SOA ring reflectometry. IEEE Photonics Technol. Lett. 25, 709–712 (2013)

21. X. Dong, A. Wang, J. Zhang, H. Han, T. Zhao, X. Liu, Y. Wang, Combined attenuation and high-resolution fault measurements using chaos-OTDR. IEEE Photonics J. 7, 1–6 (2015)

22. D.V. Caballero, J.P. von der Weid, P.J. Urban, Tuneable OTDR measurements for WDM-PON monitoring, in 2013 SBMO/IEEE MTT-S International Microwave Optoelectronics Conference (IMOC) (2013), pp. 1–5

23. P. Eraerds, M. Legré, J. Zhang, H. Zbinden, N. Gisin, Photon counting OTDR: advantages and limitations. J. Light. Technol. 28, 952–964 (2010)

24. G.C. Amaral, J.D. Garcia, L.E. Herrera, G.P. Temporao, P.J. Urban, J.P. von der Weid, Automatic fault detection in WDM-PON with tunable photon counting OTDR. J. Light. Technol. 33, 5025–5031 (2015)

25. S. Cova, N. Ghioni, A. Lotito, I. Rech, F. Zappa, Evolution and prospects for single-photon avalanche diodes and quenching circuits. J. Mod. Opt. 15 (2004)

26. L.E.Y. Herrera, Reflectometria óptica de alta resolução por contagem de fótons. Ph.D. thesis, PUC-Rio (2015)

27. L. Herrera, G. Amaral, J.P. von der Weid, Ultra-high-resolution tunable PC-OTDR for PON monitoring in avionics, in Optical Fiber Communications Conference and Exhibition (OFC), 2015 (IEEE, 2015), pp. 1–3

28. G.C. do Amaral, FPGA applications on single photon detection systems. Master’s thesis, PUC-Rio (2014)

29. L.E. Herrera, F. Calliari, J.D. Garcia, G.C. do Amaral, J.P. von der Weid, High resolution automatic fault detection in a fiber optic link via photon counting OTDR, in Optical Fiber Communication Conference, Optical Society of America (2016), M3F.4

30. Santec Corporation, Ultra-Wideband Source UWS-1000H. Technical report, Specifications Sheet (2016)

31. F.A. Ghonaim, T.E. Darcie, S. Ganti, Impact of SDN on optical router bypass. IEEE/OSA J. Opt. Commun. Netw. 10, 332–343 (2018)

32. R.L. Brown, J. Durbin, J.M. Evans, Techniques for testing the constancy of regression relationships over time. J. R. Stat. Soc. Ser. B (Methodological) 149–192 (1975)

33. W.S. Rea, M. Reale, C. Cappelli, J.A. Brown, Identification of changes in mean with regression trees: an application to market research. Econ. Rev. 29, 754–777 (2010)

34. M. Storath, A. Weinmann, L. Demaret, Jump-sparse and sparse recovery using Potts functionals. IEEE Trans. Signal Process. 62, 3654–3666 (2014)

35. E.J. Candes, M.B. Wakin, S.P. Boyd, Enhancing sparsity by reweighted \(\ell _1\) minimization. J. Fourier Anal. Appl. 14, 877–905 (2008)

36. S.J. Kim, K. Koh, S. Boyd, D. Gorinevsky, \(\ell _1\) trend filtering. SIAM Rev. 51, 339–360 (2009)

37. J.P. von der Weid, M.H. Souto, J.D. Garcia, G.C. Amaral, Adaptive filter for automatic identification of multiple faults in a noisy OTDR profile. J. Light. Technol. 34, 3418–3424 (2016)

38. K. De Souza, Significance of coherent Rayleigh noise in fibre-optic distributed temperature sensing based on spontaneous Brillouin scattering. Meas. Sci. Technol. 17, 1065 (2006)

39. M. Souto, J.D. Garcia, G.C. Amaral, \(\ell _1\) adaptive trend filter via fast coordinate descent, in Sensor Array and Multichannel Signal Processing Workshop (SAM), 2016 (IEEE, 2016), pp. 1–5

40. F. Calliari, L.E. Herrera, J.P. von der Weid, G.C. Amaral, High-dynamic and high-resolution automatic photon counting OTDR for optical fiber network monitoring, in 6th International Conference on Photonics, Optics and Laser Technology, vol. 1 (PHOTOPTICS, 2018), pp. 82–90

41. P. Anandarajah, R. Maher, Y. Xu, S. Latkowski, J. O’Carroll, S. Murdoch, R. Phelan, J. O’Gorman, L. Barry, Generation of coherent multicarrier signals by gain switching of discrete mode lasers. IEEE Photonics J. 3, 112–122 (2011)

42. ID Quantique, id210 - Advanced System for Single Photon Detection. Technical report, Specifications Sheet (2011)

43. I. Rech, S. Marangoni, D. Resnati, M. Ghioni, S. Cova, Multipixel single-photon avalanche diode array for parallel photon counting applications. J. Mod. Opt. 56, 326–333 (2009)

44. L. Herrera, G. Amaral, J. von der Weid, Investigation of bend loss in single mode fibers with ultra-high-resolution photon-counting optical time domain reflectometer. Appl. Opt. 55, 1177–1182 (2016)

45. A. Shahpari, R. Ferreira, V. Ribeiro, A. Sousa, S. Ziaie, A. Tavares, Z. Vujicic, F.P. Guiomar, J.D. Reis, A.N. Pinto et al., Coherent ultra dense wavelength division multiplexing passive optical networks. Opt. Fiber Technol. 26, 100–107 (2015)

46. K. Shimizu, T. Horiguchi, Y. Koyamada, Characteristics and reduction of coherent fading noise in Rayleigh backscattering measurement for optical fibers and components. J. Light. Technol. 10, 982–987 (1992)

47. A.F. Elrefaie, R.E. Wagner, D. Atlas, D. Daut, Chromatic dispersion limitations in coherent lightwave transmission systems. J. Light. Technol. 6, 704–709 (1988)

48. F. Calliari, G.C. do Amaral, L.E.Y. Herrera, High Dynamic and High Resolution Automatic Photon Counting OTDR (2017), https://www.youtube.com/watch?v=KQn9Du2l4NQ. Accessed on 27 April 2018

Acknowledgements

We would like to thank the Brazilian agency CNPq for the financial support, without which this work would not have been possible.

Copyright information

© 2019 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Calliari, F., Herrera, L., von der Weid, J.P., Amaral, G. (2019). Centimeter-Resolution Long-Distance Optical Fiber Monitoring. In: Ribeiro, P., Raposo, M. (eds) Optics, Photonics and Laser Technology 2018. Springer Series in Optical Sciences, vol 223. Springer, Cham. https://doi.org/10.1007/978-3-030-30113-2_2

DOI: https://doi.org/10.1007/978-3-030-30113-2_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30112-5

Online ISBN: 978-3-030-30113-2

eBook Packages: Physics and Astronomy (R0)