Abstract

Counting the number of perfect matchings in bipartite graphs, or equivalently computing the permanent of 0-1 matrices, is an important combinatorial problem that has been extensively studied by theoreticians and practitioners alike. The permanent is #P-Complete; hence it is unlikely that a polynomial-time algorithm exists for the problem. Researchers have therefore focused on finding tractable subclasses of matrices for permanent computation. One such subclass that has received much attention is that of sparse matrices i.e. matrices with few entries set to 1, the rest being 0. For this subclass, improved theoretical upper bounds and practically efficient algorithms have been developed. In this paper, we ask whether it is possible to go beyond sparse matrices in our quest for developing scalable techniques for the permanent, and answer this question affirmatively. Our key insight is to represent permanent computation symbolically using Algebraic Decision Diagrams (ADDs). ADD-based techniques naturally use dynamic programming, and hence avoid redundant computation through memoization. This permits exploiting the hidden structure in a large class of matrices that have so far remained beyond the reach of permanent computation techniques. The availability of sophisticated libraries implementing ADDs also makes the task of engineering practical solutions relatively straightforward. While a complete characterization of matrices admitting a compact ADD representation remains open, we provide strong experimental evidence of the effectiveness of our approach for computing the permanent, not just for sparse matrices, but also for dense matrices and for matrices with “similar” rows.

Author names are ordered alphabetically by last name and does not indicate contribution.

Work supported in part by NSF grant IIS-1527668, the Data Analysis and Visualization Cyberinfrastructure funded by NSF under grant OCI-0959097 and Rice University, and MHRD IMPRINT-1 Project No. 6537 sponsored by Govt of India.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

1 Introduction

Constrained counting lies at the heart of several important problems in diverse areas such as performing Bayesian inference [45], measuring resilience of electrical networks [20], counting Kekule structures in chemistry [23], computing the partition function of monomer-dimer systems [26], and the like. Many of these problems reduce to counting problems on graphs. For instance, learning probabilistic models from data reduces to counting the number of topological sorts of directed acyclic graphs [56], while computing the partition function of a monomer-dimer system reduces to computing the number of perfect matchings of an appropriately defined bipartite graph [26]. In this paper, we focus on the last class of problems – that of counting perfect matchings in bipartite graphs. It is well known that this problem is equivalent to computing the permanent of the 0-1 bi-adjacency matrix of the bipartite graph. We refer to these two problems interchangeably in the remainder of the paper.

Given an \(n\times n\) matrix

with real-valued entries, the permanent of

with real-valued entries, the permanent of

is given by

is given by

, where \(S_n\) denotes the symmetric group of all permutations of \(1, \ldots n\). This expression is almost identical to that for the determinant of

, where \(S_n\) denotes the symmetric group of all permutations of \(1, \ldots n\). This expression is almost identical to that for the determinant of

; the only difference is that the determinant includes the sign of the permutation in the inner product. Despite the striking resemblance of the two expressions, the complexities of computing the permanent and determinant are vastly different. While the determinant can be computed in time \(\mathcal {O}(n^{2.4})\), Valiant [54] showed that computing the permanent of a 0-1 matrix is #P-Complete, making a polynomial-time algorithm unlikely [53]. Further evidence of the hardness of computing the permanent was provided by Cai, Pavan and Sivakumar [11], who showed that the permanent is also hard to compute on average. Dell et al. [19] showed that there can be no algorithm with sub-exponential time complexity, assuming a weak version of the Exponential Time Hypothesis [3] holds.

; the only difference is that the determinant includes the sign of the permutation in the inner product. Despite the striking resemblance of the two expressions, the complexities of computing the permanent and determinant are vastly different. While the determinant can be computed in time \(\mathcal {O}(n^{2.4})\), Valiant [54] showed that computing the permanent of a 0-1 matrix is #P-Complete, making a polynomial-time algorithm unlikely [53]. Further evidence of the hardness of computing the permanent was provided by Cai, Pavan and Sivakumar [11], who showed that the permanent is also hard to compute on average. Dell et al. [19] showed that there can be no algorithm with sub-exponential time complexity, assuming a weak version of the Exponential Time Hypothesis [3] holds.

The determinant has a nice geometric interpretation: it is the oriented volume of the parallelepiped spanned by the rows of the matrix. The permanent, however, has no simple geometric interpretation. Yet, it finds applications in a wide range of areas. In chemistry, the permanent and the permanental polynomial of the adjacency matrices of fullerenes [32] have attracted much attention over the years [12, 13, 34]. In constraint programming, solutions to All-Different constraints can be expressed as perfect matchings in a bipartite graph [43]. An estimate of the number of such solutions can be used as a branching heuristic to guide search [42, 60]. In physics, permanents can be used to measure quantum entanglement [58] and to compute the partition functions of monomer-dimer systems [26].

Since computing the permanent is hard in general, researchers have attempted to find efficient solutions for either approximate versions of the problem, or for restricted classes of inputs. In this paper, we restrict our attention to exact algorithms for computing the permanent. The asymptotically fastest known exact algorithm for general \(n\times n\) matrices is Nijenhuis and Wilf’s version of Ryser’s algorithm [38, 46], which runs in time \({\varTheta }(n\cdot 2^n)\) for all matrices of size n. For matrices with bounded treewidth or clique-width [15, 44], Courcelle, Makowsky and Rotics [16] showed that the permanent can be computed in time linear in the size of the matrix, i.e., computing the permanent is Fixed Parameter Tractable (FPT). A large body of work is devoted to developing fast algorithms for sparse matrices, i.e. matrices with only a few entries set to non-zero values [28, 34, 48, 59] in each row. Note that the problem remains #P-Complete even when the input is restricted to matrices with exactly three 1’s per row and column [9].

An interesting question to ask is whether we can go beyond sparse matrices in our quest for practically efficient algorithms for the permanent. For example, can we hope for practically efficient algorithms for computing the permanent of dense matrices, i.e., matrices with almost all entries non-zero? Can we expect efficiency when the rows of the matrix are “similar”, i.e. each row has only a few elements different from any other row (sparse and dense matrices being special cases)? Existing results do not seem to throw much light on these questions. For instance, while certain non-sparse matrices indeed have bounded clique-width, the aforementioned result of Courcelle et al. [14, 16] does not yield practically efficient algorithms as the constants involved are enormous [24]. The hardness of non-sparse instances is underscored by the fact that SAT-based model counters do not scale well on these, despite the fact that years of research and careful engineering have enabled these tools to scale extremely well on a diverse array of problems. We experimented with a variety of CNF-encodings of the permanent on state-of-the-art counters like \(\mathsf {D4}\) [33]. Strikingly, no combination of tool and encoding was able to scale to matrices even half the size of those solved by Ryser’s approach in the same time, despite the fact that Ryser’s approach has exponential complexity even in the best case.

In this paper, we show that practically efficient algorithms for the permanent can indeed be designed for large non-sparse matrices if the matrix is represented compactly and manipulated efficiently using a special class of data structures. Specifically, we propose using Algebraic Decision Diagrams [4] (ADDs) to represent matrices, and design a version of Ryser’s algorithm to work on this symbolic representation of matrices. This effectively gives us a symbolic version of Ryser’s algorithm, as opposed to existing implementations that use an explicit representation of the matrix. ADDs have been studied extensively in the context of formal verification, and sophisticated libraries are available for compact representation of ADDs and efficient implementation of ADD operations [50, 55]. The literature also contains compelling evidence that reasoning based on ADDs and variants scales to large instances of a diverse range of problems in practice, cf. [4, 21]. Our use of ADDs in Ryser’s algorithm leverages this progress for computing the permanent. Significantly, there are several sub-classes of matrices that admit compact representations using ADDs, and our algorithm works well for all these classes. Our empirical study provides evidence for the first time that the frontier of practically efficient permanent computation can be pushed well beyond the class of sparse matrices, to the classes of dense matrices and, more generally, to matrices with “similar” rows. Coupled with a technique known as early abstraction, ADDs are able to handle sparse instances as well. In summary, the symbolic approach to permanent computation shows promise for both sparse and dense classes of matrices, which are special cases of a notion of row-similarity.

The rest of the paper is organized as follows: in Sect. 2 we introduce ADDs and other concepts that we will use in this paper. We discuss related work in Sect. 3 and present our algorithm and analyze it in Sect. 4. Our empirical study is presented in Sects. 5 and 6 and we conclude in Sect. 7.

2 Preliminaries

We denote by

an \( n\times n\) 0-1 matrix, which can also be interpreted as the bi-adjacency matrix of a bipartite graph

an \( n\times n\) 0-1 matrix, which can also be interpreted as the bi-adjacency matrix of a bipartite graph

with an edge between vertex \( i\in U \) and \( j\in V \) iff \( a_{ij} = 1\). We will denote the ith row of

with an edge between vertex \( i\in U \) and \( j\in V \) iff \( a_{ij} = 1\). We will denote the ith row of

by \( r_i \). A perfect matching in

by \( r_i \). A perfect matching in

is a subset \( \mathcal {M} \subseteq E \), such that for all \( v\in (U\cup V) \), exactly one edge \( e \in \mathcal {M} \) is incident on v. We denote by

is a subset \( \mathcal {M} \subseteq E \), such that for all \( v\in (U\cup V) \), exactly one edge \( e \in \mathcal {M} \) is incident on v. We denote by

the permanent of

the permanent of

, and by

, and by

, the number of perfect matchings in G. A well known fact is that

, the number of perfect matchings in G. A well known fact is that

, and we will use these concepts interchangeably when clear from context.

, and we will use these concepts interchangeably when clear from context.

2.1 Algebraic Decision Diagrams

Let X be a set of Boolean-valued variables. An Algebraic Decision Diagram (ADD) is a data structure used to compactly represent a function of the form \(f: 2^X\rightarrow \mathbb {R}\) as a Directed Acyclic Graph (DAG). ADDs were originally proposed as a generalization of Binary Decision Diagrams (BDDs), which can only represent functions of the form \(g: 2^X \rightarrow \{0,1\}\). Formally, an ADD is a 4-tuple \((X,T,\pi ,G)\) where X is a set of Boolean variables, the finite set \( T \subset \mathbb {R}\) is called the carrier set, \( \pi : X \rightarrow \mathbb {N} \) is the diagram variable order, and G is a rooted directed acyclic graph satisfying the following three properties:

-

1.

Every terminal node of G is labeled with an element of T.

-

2.

Every non-terminal node of G is labeled with an element of X and has two outgoing edges labeled 0 and 1.

-

3.

For every path in G, the labels of visited non-terminal nodes must occur in increasing order under \( \pi \).

We use lower case letters \( f,g,\ldots \) to denote both functions from Booleans to reals as well as the ADDs representing them. Many operations on such functions can be performed in time polynomial in the size of their ADDs. We list some such operations that will be used in our discussion.

-

Product: The product of two ADDs representing functions \(f: 2^X\rightarrow \mathbb {R}\) and \(g: 2^Y\rightarrow \mathbb {R}\) is an ADD representing the function \(f\cdot g: 2^{X\cup Y}\rightarrow \mathbb {R}\), where \( f\cdot g (\tau )\) is defined as \(f(\tau \cap X) \cdot g(\tau \cap Y) \) for every \( \tau \in 2^{X\cup Y} \),

-

Sum: Defined in a way similar to the product.

-

If-Then-Else (ITE): This is a ternary operation that takes as inputs a BDD f and two ADDs g and h. ITE(f, g, h) represents the function \(f\cdot g + \lnot f\cdot h\), and the corresponding ADD is obtained by substituting g for the leaf’1’ of f and h for the leaf ’0’, and simplifying the resulting structure.

-

Additive Quantification: The existential quantification operation for Boolean-valued functions can be extended to real-valued functions by replacing disjunction with addition as follows. The additive quantification of \(f: 2^{X}\rightarrow \mathbb {R}\) is denoted as \( \exists x. f : 2^{X\backslash \{x\}}\rightarrow \mathbb {R} \) and for \( \tau \in 2^{X\backslash \{x\}} \), we have \( \exists x. f(\tau ) = f(\tau ) + f(\tau \cup \{x\})\).

ADDs share many properties with BDDs. For example, there is a unique minimal ADD for a given variable order \( \pi \), called the canonical ADD, and minimization can be performed in polynomial time. Similar to BDDs, the variable order can significantly affect the size of the ADD. Hence heuristics for finding good variable orders for BDDs carry over to ADDs as well. ADDs typically have lower recombination efficiency, i.e. number of shared nodes, vis-a-vis BDDs. Nevertheless, sharing or recombination of isomorphic sub-graphs in an ADD is known to provide significant practical advantages in representing matrices, vis-a-vis other competing data structures. The reader is referred to [4] for a nice introduction to ADDs and their applications.

2.2 Ryser’s Formula

The permanent of

can be calculated by the principle of inclusion-exclusion using Ryser’s formula:

can be calculated by the principle of inclusion-exclusion using Ryser’s formula:

Algorithms implementing Ryser’s formula on an explicit representation of an arbitrary matrix

Algorithms implementing Ryser’s formula on an explicit representation of an arbitrary matrix

(not necessarily sparse) must consider all \(2^n\) subsets of [n]. As a consequence, such algorithms have at least exponential complexity. Our experiments show that even the best known existing algorithm implementing Ryser’s formula for arbitrary matrices [38], which iterates over the subsets of [n] in Gray-code sequence, consistently times out after 1800 s on a state-of-the-art computing platform when computing the permanent of \(n \times n\) matrices, with \(n \ge 35\).

(not necessarily sparse) must consider all \(2^n\) subsets of [n]. As a consequence, such algorithms have at least exponential complexity. Our experiments show that even the best known existing algorithm implementing Ryser’s formula for arbitrary matrices [38], which iterates over the subsets of [n] in Gray-code sequence, consistently times out after 1800 s on a state-of-the-art computing platform when computing the permanent of \(n \times n\) matrices, with \(n \ge 35\).

3 Related Work

Valiant showed that computing the permanent is \(\#P\)-complete [54]. Subsequently, researchers have considered restricted sub-classes of inputs in the quest for efficient algorithms for computing the permanent, both from theoretical and practical points of view. We highlight some of the important milestones achieved in this direction.

A seminal result is the Fisher-Temperly-Kastelyn algorithm [29, 52], which computes the number of perfect matchings in planar graphs in PTIME. This result was subsequently extended to many other graph classes (c.f. [40]). Following the work of Courcelle et al. a number of different width parameters have been proposed, culminating in the definition of ps-width [47], which is considered to be the most general notion of width [8]. Nevertheless, as with clique-width, it is not clear whether it lends itself to practically efficient algorithms. Bax and Franklin [5] gave a Las Vegas algorithm with better expected time complexity than Ryser’s approach, but requiring \( \mathcal {O}(2^{n/2}) \) space.

For matrices with at most \( C\cdot n \) zeros, Servedio and Wan [48] presented a \( (2-\varepsilon )^n \)-time and \( \mathcal {O}(n) \) space algorithm where \( \varepsilon \) depends on C. Izumi and Wadayama [28] gave an algorithm that runs in time \( \mathcal {O^*}(2^{(1-1/(\varDelta \log \varDelta ))n}) \), where \( \varDelta \) is the average degree of a vertex. On the practical side, in a series of papers, Liang, Bai and their co-authors [34, 35, 59] developed algorithms optimized for computing the permanent of the adjacency matrices of fullerenes, which are 3-regular graphs.

In recent years, practical techniques for propositional model counting (#SAT) have come of age. State-of-the-art exact model counters like \(\mathsf {DSharp}\) [37] and \(\mathsf {D4}\) [33] also incorporate techniques from knowledge compilation. A straightforward reduction of the permanent to #SAT uses a Boolean variable \(x_{ij}\) for each 1 in row i and column j of the input matrix

, and imposes Exact-One constraints on the variables in each row and column. This gives the formula

, and imposes Exact-One constraints on the variables in each row and column. This gives the formula

. Each solution to

. Each solution to

is a perfect matching in the underlying graph, and so the number of solutions is exactly the permanent of the matrix. A number of different encodings can be used for translating Exact-One constraints to Conjunctive Normal Form (see Sect. 5.1). We perform extensive comparisons of our tool with \(\mathsf {D4}\) and \(\mathsf {DSharp}\) with six such encodings.

is a perfect matching in the underlying graph, and so the number of solutions is exactly the permanent of the matrix. A number of different encodings can be used for translating Exact-One constraints to Conjunctive Normal Form (see Sect. 5.1). We perform extensive comparisons of our tool with \(\mathsf {D4}\) and \(\mathsf {DSharp}\) with six such encodings.

4 Representing Ryser’s Formula Symbolically

As noted in Sect. 2, an explicit implementation of Ryser’s formula iterates over all \(2^n\) subsets of columns and its complexity is in \(\varTheta (n\cdot 2^n)\). Therefore, any such implementation takes exponential time even in the best case. A natural question to ask is whether we can do better through a careful selection of subsets over which to iterate. This principle was used for the case of sparse matrices by Servedio and Wan [48]. Their idea was to avoid those subsets for which the row-sum represented by the innermost summation in Ryser’s formula, is zero for at least one row, since those terms do not contribute to the outer sum in Ryser’s formula. Unfortunately, this approach does not help for non-sparse matrices, as very few subsets of columns (if any) will yield a zero row-sum.

It is interesting to ask if we can exploit similarity of rows (instead of sparsity) to our advantage. Consider the ideal case of an \(n\times n \) matrix with identical rows, where each row has \(k~(\le n)\) 1s. For any given subset of columns, the row-sum is clearly the same for all rows, and hence the product of all row-sums is simply the \(n^{th}\) power of the row-sum of one row. Furthermore, there are only \(k+1\) distinct values (0 through k) of the row-sum, depending on which subset of columns is selected. The number of r-sized column subsets that yield row-sum j is clearly \({k \atopwithdelims ()j}\cdot {n-k \atopwithdelims ()r-j}\), for \(0 \le j \le k\) and \(j \le r \le n-k+j\). Thus, we can directly compute the permanent of the matrix via Ryser’s formula as

. This equation has a more compact representation than the explicit implementation of Ryser’s formula, since the outer summation is over \((k+1).(n-k+1)\) terms instead of \(2^n\) terms.

. This equation has a more compact representation than the explicit implementation of Ryser’s formula, since the outer summation is over \((k+1).(n-k+1)\) terms instead of \(2^n\) terms.

Drawing motivation from the above example, we propose using memoization to simplify the permanent computation of matrices with similar rows. Specifically, if we compute and store the row-sums for a subset \(S_1\subset [n]\) of columns, then we can potentially reuse this information when computing the row-sums for subsets \(S_2 \supset S_1\). We expect storage requirements to be low when the rows are similar, as the partial sums over identical parts of the rows will have a compact representation, as shown above.

While we can attempt to hand-craft a concrete algorithm using this idea, it turns out that ADDs fit the bill perfectly. We introduce Boolean variables \(x_j\) for each column \(1\le j \le n \) in the matrix. We can represent the summand \((-1)^{|S|} \prod _{i=1}^{n} \sum _{j\in S} a_{ij} \) in Ryser’s formula as a function \( f_{Ryser} : 2^X \rightarrow \mathbb {R} \) where for a subset of columns \(\tau \in 2^X \), we have \( f_{Ryser}(\tau ) = (-1)^{|\tau |} \prod _{i=1}^{n} \sum _{j\in \tau } a_{ij} \). The outer sum in Ryser’s formula is then simply the Additive Quantification of \(f_{Ryser}\) over all variables in X. The permanent can thus be denoted by the following equation:

We can construct an ADD for \( f_{Ryser} \) incrementally as follows:

-

Step 1: For each row \( r_i \) in the matrix, construct the Row-Sum ADD \( f_{RS}^{r_i} \) such that \( f_{RS}^{r_i}(\tau ) = \sum _{j: a_{ij} = 1} \mathbbm {1}_{\tau } (x_j) \), where \( \mathbbm {1}_{\tau } (x_j) \) is the indicator function taking the value 1 if \( x_j \in \tau \), and zero otherwise. This ADD can be constructed by using the sum operation on the variables \( x_j \) corresponding to the 1 entries in row \( r_i \).

-

Step 2: Construct the Row-Sum-Product ADD \( f_{RSP} = \prod _{i = 1 }^{n} f_{RS}^{r_i} \) by applying the product operation on all the Row-Sum ADDs.

-

Step 3: Construct the Parity ADD \( f_{PAR} = ITE(\bigoplus _{j=1}^{n} x_j, -1, +1) \), where \(\bigoplus \) represents exclusive-or. This ADD represents the \( (-1)^{|S|} \) term in Ryser’s formula.

-

Step 4: Construct \( f_{Ryser} = f_{RSP}. f_{PAR} \) using the product operation.

Finally, we can additively quantify out all variables in \( f_{Ryser} \) and multiply the result by \( (-1)^n \) to get the permanent, as given by Eq. 1.

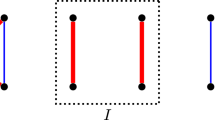

The size of the ADD \( f_{RSP} \) will be the smallest when the ADDs \( f_{RS}^{r_i} \) are exactly the same for all rows \(r_i\), i.e. when all rows of the matrix are identical. In this case, the ADDs \(f_{RS}^{r_i} \) and \( f_{RSP} \) will be isomorphic; the values at the leaves of \(f_{RSP}\) will simply be the \(n^{th}\) power of the values at the corresponding leaves of \(f_{RS}^{r_i}\). An example illustrating this for a \(4\times 4\) matrix of all 1s is shown in Fig. 1. Each level of the ADDs in this figure corresponds to a variable (shown on the left) for a column of the matrix. A solid edge represents the ‘true’ branch while a dotted edge represents the ‘false’ branch. Observe that sharing of isomorphic subgraphs allows each of these ADDs to have 10 internal nodes and 5 leaves, as opposed to 15 internal nodes and 16 leaves that would be needed for a complete binary tree based representation.

The ADD representation is thus expected to be compact when the rows are “similar”. Dense matrices can be thought of as a special case: starting with a matrix of all 1s (which clearly has all rows identical), we change a few 1s to 0s. The same idea can be applied to sparse matrices as well: starting with a matrix of all 0s (once again, identical rows), we change a few 0s to 1s. The case of very sparse matrices is not interesting, however, as the permanent (or equivalently, count of perfect matchings in the corresponding bipartite graph) is small and can be computed by naive enumeration. Interestingly, our experiments show that as we reduce the sparsity of the input matrix, constructing \(f_{RSP}\) and \(f_{Ryser}\) in a monolithic fashion as discussed above fails to scale, since the sizes of ADDs increase very sharply. Therefore we need additional machinery.

First, we rewrite Eq. 1 in terms of the intermediate ADDs as:

We then employ the principle of early abstraction to compute \(f_{Ryser}\) incrementally. Note that early abstraction has been used successfully in the past in the context of SAT solving [41], and recently for weighted model counting using ADDs in a technique called \(\mathsf {ADDMC}\) [1]. The formal statement of the principle of early abstraction is given in the following theorem.

Theorem 1

[1] Let X and Y be sets of variables and \( f:2^X\rightarrow \mathbb {R} \), \( g:2^Y\rightarrow \mathbb {R} \). For all \( x\in X\setminus Y \), we have \( \exists _x (f\cdot g) = (\exists _x (f))\cdot g \)

Since the product operator is associative and additive quantification is commutative, we can rearrange the terms of Eq. 2 in order to apply early abstraction. This idea is implemented in Algorithm \(\mathsf {RysersADD}\), which is motivated by the weighted model counting algorithm in [1].

Algorithm \(\mathsf {RysersADD}\) takes as input a 0–1 matrix

, a diagram variable order \( \pi \) and a cluster rank-order \( \eta \). \( \eta \) is an ordering of variables which is used to heuristically partition rows of

, a diagram variable order \( \pi \) and a cluster rank-order \( \eta \). \( \eta \) is an ordering of variables which is used to heuristically partition rows of

into clusters using a function \(\mathsf {clusterRank}\), where all rows in a cluster get the same rank. Intuitively, rows that are almost identical are placed in the same cluster, while those that differ significantly are placed in different clusters. Furthermore, the clusters are ordered such that there are non-zero columns in cluster i that are absent in the set of non-zero columns in clusters with rank \(> i\). As we will soon see, this facilitates keeping the sizes of ADDs under control by applying early abstraction.

into clusters using a function \(\mathsf {clusterRank}\), where all rows in a cluster get the same rank. Intuitively, rows that are almost identical are placed in the same cluster, while those that differ significantly are placed in different clusters. Furthermore, the clusters are ordered such that there are non-zero columns in cluster i that are absent in the set of non-zero columns in clusters with rank \(> i\). As we will soon see, this facilitates keeping the sizes of ADDs under control by applying early abstraction.

Algorithm \(\mathsf {RysersADD}\) proceeds by first partitioning the Row-Sum ADDs of the rows

into clusters according to their cluster rank in line 3. Each Row-Sum ADD is constructed according to the diagram variable order \( \pi \). The ADD \( f_{Ryser} \) is constructed incrementally, starting with the Parity ADD in line 4, and multiplying the Row-Sum ADDs in each cluster \( \kappa _i \) in the loop at line 7. However, unlike the monolithic approach, early abstraction is carried out within the loop at line 9. Finally, when the execution reaches line 12, all variables representing columns of the input matrix have been abstracted out. Therefore, \( f_{Ryser} \) is an ADD with a single leaf node that contains the (possibly negative) value of the permanent. Following Eq. 2, the algorithm returns the product of \( (-1)^n \) and \(f_{Rsyer}(\emptyset )\).

into clusters according to their cluster rank in line 3. Each Row-Sum ADD is constructed according to the diagram variable order \( \pi \). The ADD \( f_{Ryser} \) is constructed incrementally, starting with the Parity ADD in line 4, and multiplying the Row-Sum ADDs in each cluster \( \kappa _i \) in the loop at line 7. However, unlike the monolithic approach, early abstraction is carried out within the loop at line 9. Finally, when the execution reaches line 12, all variables representing columns of the input matrix have been abstracted out. Therefore, \( f_{Ryser} \) is an ADD with a single leaf node that contains the (possibly negative) value of the permanent. Following Eq. 2, the algorithm returns the product of \( (-1)^n \) and \(f_{Rsyer}(\emptyset )\).

The choice of the function \( \mathsf {clusterRank}\) and the cluster rank-order \( \eta \) significantly affect the performance of the algorithm. A number of heuristics for determining \(\mathsf {clusterRank}\) and \(\eta \) have been proposed in literature, such as Bucket Elimination [18], and Bouquet’s Method [7] for cluster ranking, and MCS [51], LexP [31] and LexM [31] for variable ordering. Further details and a rigorous comparison of these heuristics are presented in [1]. Note that if we assign the same cluster rank to all rows of the input matrix, Algorithm \(\mathsf {RysersADD}\) reduces to one that constructs all ADDs monolithically, and does not benefit from early abstraction.

4.1 Implementation Details

We implemented Algorithm 1 using the library Sylvan [55] since unlike CUDD [50], Sylvan supports arbitrary precision arithmetic – an essential feature to avoid overflows when the permanent has a large value. Sylvan supports parallelization of ADD operations in a multi-core environment. In order to leverage this capability, we created a parallel version of \(\mathsf {RysersADD}\) that differs from the sequential version only in that it uses the parallel implementation of ADD operations natively provided by Sylvan. Note that this doesn’t require any change to Algorithm \(\mathsf {RysersADD}\), except in the call to Sylvan functions. While other non-ADD-based approaches to computing the permanent can be parallelized as well, we emphasize that it is a non-trivial task in general, unlike using Sylvan. We refer to our sequential and parallel implementations for permanent computation as \(\mathsf {RysersADD}\) and \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\) respectively, in the remainder of the discussion. We implemented our algorithm in C++, compiled under GCC v6.4 with the O3 flag. We measured the wall-times for both algorithms. Sylvan also supports arbitrary precision floating point computation, which makes it easy to extend \(\mathsf {RysersADD}\) for computing permanent of real-valued matrices. However, we leave a detailed investigation of this for future work.

5 Experimental Methodology

The objective of our empirical study was to evaluate \(\mathsf {RysersADD}\) and \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\) on randomly generated instances (as done in [35]) and publicly available structured instances (as done in [34, 59]) of 0-1 matrices.

5.1 Algorithm Suite

As noted in Sect. 3, a number of different algorithms have been reported in the literature for computing the permanent of sparse matrices. Given resource constraints, it is infeasible to include all of these in our experimental comparisons. This is further complicated by the fact that many of these algorithms appear not to have been implemented (eg: [28, 48]), or the code has not been made publicly accessible (eg: [34, 59]). A fair comparison would require careful consideration of several parameters like usage of libraries, language of implementation, suitability of hardware etc. We had to arrive at an informed choice of algorithms, which we list below along with our rationale:

-

\(\mathsf {RysersADD}\) and \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\): For the dense and similar rows cases, we use the monolithic approach as it is sufficient to demonstrate the scalability of our ADD-based approach. For sparse instances, we employ Bouquet’s Method (List) [7] clustering heuristic along with MCS cluster rank-order [51] and we keep the diagram variable order the same as the indices of columns in the input matrix (see [1] for details about the heuristics). We arrived at these choices through preliminary experiments. We leave a detailed comparison of all combinations for future work.

-

Explicit Ryser’s Algorithm: We implemented Nijenhuis and Wilf’s version [38] of Ryser’s formula using Algorithm H from [30] for generating the Gray code sequence. Our implementation, running on a state-of-the-art computing platform (see Sect. 5.2), is able to compute the permanent of all matrices with \( n \le 25 \) in under 5 s. For \( n = 30 \), the time shoots up to approximately 460 s and for \(n \ge 34\), the time taken exceeds 1800 s (time out for our experiments). Since the performance of explicit Ryser’s algorithm depends only on the size of the matrix, and is unaffected by its structure, sparsity or row-similarity, this represents a complete characterization of the performance of the explicit Ryser’s algorithm. Hence, we do not include it in our plots.

-

Propositional Model Counters: Model counters that employ techniques from SAT-solving as well as knowledge compilation, have been shown to scale extremely well on large CNF formulas from diverse domains. Years of careful engineering have resulted in counters that can often outperform domain-specific approaches. We used two state-of-the-art exact model counters, viz. \(\mathsf {D4}\) [33] and \(\mathsf {DSharp}\) [37], for our experiments. We experimented with 6 different encodings for At-Most-One constraints: (1) Pairwise [6], (2) Bitwise [6], (3) Sequential Counter [49], (4) Ladder [2, 22], (5) Modulo Totalizer [39] and (6) Iterative Totalizer [36]. We also experimented with \(\mathsf {ADDMC}\), an ADD-based model counter [1]. However, it failed to scale beyond matrices of size 25; ergo we do not include it in our study.

We were unable to include the parallel #SAT counter countAtom [10] in our experiments, owing to difficulties in setting it up on our compute set-up. However, we could run countAtom on a slightly different set-up with 8 cores instead of 12, and 16 GB memory instead of 48 on a few sampled dense and similar-row matrix instances. Our experiments showed that countAtom timed out on all these cases. We leave a more thorough and scientific comparison with countAtom for future work.

5.2 Experimental Setup

Each experiment (sequential or parallel) had exclusive access to a Westemere node with 12 processor cores running at 2.83 GHz with 48 GB of RAM. We capped memory usage at 42 GB for all tools. We implemented explicit Ryser’s algorithm in C++, compiled with GCC v6.4 with O3 flag. The \(\mathsf {RysersADD}\) and \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\) algorithms were implemented as in Sect. 4.1. \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\) had access to all 12 cores for parallel computation. We used the python library PySAT [27] for encoding matrices into CNF. We set the timeout to 1800 s for all our experiments. For purposes of reporting, we treat a memory out as equivalent to a time out.

5.3 Benchmarks

The parameters used for generating random instances are summarized in Table 1. We do not include matrices with \( n < 30 \) since the explicit Ryser’s algorithm suffices (and often performs the best) for such matrices. The upper bound for n was chosen such that the algorithms in our suite either timed out or came close to timing out. For each combination of parameters, random matrix instances were sampled as follows:

-

1.

We started with an \( n \times n \) matrix, where the first row had \( \rho \cdot n \) 1s at randomly chosen column positions, and all other rows were copies of the first row.

-

2.

\( C_f\cdot n \) randomly chosen entries in the starting matrix are flipped i.e. 0 flipped to 1 and vice versa.

For the dense case, we start with a matrix of all 1s while for the sparse case, we start with a matrix of all 0s, and used intermediate row density values for the similar-rows case. We chose higher values for \( C_f \) in the sparse case because for low values, the bipartite graph corresponding to the generated matrix had very few perfect matchings (if any), and these could be simply counted by enumeration. We generated a total of 2125 benchmarks covering a broad range of parameters. For all generated instances, we ensured that there was at least one perfect matching, since the case with zero perfect matchings can be easily solved in polynomial time by algorithms like Hopcroft-Karp [25]. In order to avoid spending inordinately large time on failed experiments, if an algorithm timed out on all generated random instances of a particular size, we also report a time out for that algorithm on all larger instances of that class of matrices. We also double-check this by conducting experiments with the same algorithm on a few randomly chosen larger instances.

The SuiteSparse Matrix Collection [17] is a well known repository of structured sparse matrices that arise from practical applications. We found 26 graphs in this suite with vertex count between 30 and 100, of which 18 had at least one perfect matching. Note that these graphs are not necessarily bipartite; however, their adjacency matrices can be used as benchmarks for computing the permanent. A similar approach was employed in [57] as well.

Fullerenes are carbon molecules whose adjacency matrices have been used extensively by Liang et al. [34, 57, 59] for comparing tools for the permanent. We were able to find the adjacency matrices of \( C_{60} \) and \( C_{100} \), and have used these in our experiments.

6 Results

We first study the variation of running time of \(\mathsf {RysersADD}\) with the size of ADDs involved. Then we compare the running times of various algorithms on sparse, dense and similar-row matrices, as well as on instances from SuiteSparse Matrix Collection and on adjacency matrices of fullerenes \( C_{60} \) and \( C_{100} \). The total computational effort of our experiments exceeds 2500 h of wall clock time on dedicated compute nodes.

6.1 ADD Size Vs Time Taken by \(\mathsf {RysersADD}\)

In order to validate the hypothesis that the size of the ADD representation is a crucial determining factor of the performance of \(\mathsf {RysersADD}\), we present 3 scatter-plots (Fig. 2) for a subset of 100 instances, of each of the dense, sparse and similar-rows cases. In each case, the 100 instances cover the entire range of \( C_f \) and n used in Table 1, and we plot times only for instances that didn’t time out. The plots show that there is very strong correlation between the number of nodes in the ADDs and the time taken for computing the permanent, supporting our hypothesis.

6.2 Performance on Dense Matrices

We plot the median running time of \(\mathsf {RysersADD}\) and \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\) against the matrix size n for dense matrices with \( C_f \in \{1,1.1,1.2,1.3\} \) in Fig. 3. We only show the running times of \(\mathsf {RysersADD}\) and \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\), since \(\mathsf {D4}\) and \(\mathsf {DSharp}\) were unable to solve any instance of size 30 for all 6 encodings. We observe that the running time of both the ADD-based algorithms increases with \( C_f \). This trend continues for \( C_f = 1.4 \), which we omit for lack of space. \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\) is noticeably faster than \(\mathsf {RysersADD}\), indicating that the native parallelism provided by Sylvan is indeed effective.

6.3 Performance on Sparse Matrices

Fig. 4 depicts the median running times of the algorithms for sparse matrices with \( C_f \in \{3.9,4.3,4.7,5.1\} \). We plot the running time of the ADD-based approaches with early abstraction (see Sect. 5.1). Monolithic variants (not shown) time out on all instances with \( n \ge 40 \). For \(\mathsf {D4}\) and \(\mathsf {DSharp}\), we plot the running times only for Pairwise encoding of At-Most-One constraints, since our preliminary experiments showed that it substantially outperformed other encodings. We see that \(\mathsf {D4}\) is the fastest when sparsity is high i.e. for \( C_f \le 4.3 \), but for \( C_f \ge 4.7 \) the ADD-based methods are the best performers. \(\mathsf {DSharp}\) is outperformed by the remaining 3 algorithms in general.

6.4 Performance on Similar-Row Matrices

Figure 5 shows plots of the median running time on similar-row matrices with \( C_f = \{1,1.1,1.2,1.3\} \). We only present the case when \( \rho = 0.8 \), since the plots are similar when \( \rho \in \{0.7, 0.9\} \). As in the case of dense matrices, \(\mathsf {D4}\) and \(\mathsf {DSharp}\) were unable to solve any instance of size 40, and hence we only show plots for \(\mathsf {RysersADD}\) and \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\). The performance of both tools is markedly better than in the case of dense matrices, and they scale to matrices of size 80 within the 1800 s timeout.

6.5 Performance on SuiteSparse Matrix Collection

We report the performance of algorithms \(\mathsf {RysersADD}\), \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\), \(\mathsf {D4}\) and \(\mathsf {DSharp}\) on 13 representative graphs from the SuiteSparse Matrix Collection in Fig. 6. Except for the first 4 instances, which can be solved in under 5 s by all algorithms, we find that \(\mathsf {D4}\) is the fastest in general, while the ADD-based algorithms outperform \(\mathsf {DSharp}\). Notably, on the instance “can_61", both \(\mathsf {D4}\) and \(\mathsf {DSharp}\) time out while \(\mathsf {RysersADD}\) and \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\) solve it comfortably within the alloted time. We note that the instance “can_61" has roughly 9n 1s, while \(\mathsf {D4}\) is the best performer on instances where the count of 1s in the matrix lies between 4n and 6n.

6.6 Performance on Fullerene Adjacency Matrices

We compared the performance of the algorithms on the adjacency matrices of the fullerenes \( C_{60} \) and \( C_{100} \). All the algorithms timed out on \( C_{100} \). The results for \( C_{60} \) are shown in Table 2. The columns under \(\mathsf {D4}\) and \(\mathsf {DSharp}\) correspond to 6 different encodings of At-Most-One constraints (see Sect. 5.1). It can be seen that \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\) performs the best on this class of matrices, followed by \(\mathsf {D4}\). The utility of early abstraction is clearly evident, as the monolithic approach times out in both cases.

Discussion: Our experiments show the effectiveness of the symbolic approach on dense and similar-rows matrices, where neither \(\mathsf {D4}\) nor \(\mathsf {DSharp}\) are able to solve even a single instance. Even for sparse matrices, we see that decreasing sparsity has lesser effect on the performance of ADD-based approaches as compared to \(\mathsf {D4}\). This trend is confirmed by “can_61" in the SuiteSparse Matrix Collection as well, where despite the density of 1s being 9n, \(\mathsf {RysersADD}\) and \(\mathsf {RysersADD}{\text {-}\mathsf {P}}\) finish well within timeout, unlike \(\mathsf {D4}\). In the case of fullerenes, we note that the algorithm in [34] solved \( C_{60} \) in 355 s while the one in [59] took 5 s, which are in the vicinity of the times reported in Table 2. While this is not an apples-to-apples comparison owing to differences in the computing platform, it indicates that the performance of general-purpose algorithms like \(\mathsf {RysersADD}\) and \(\mathsf {D4}\) can be comparable to that of application-specific algorithms.

7 Conclusion

In this work we introduced a symbolic algorithm called \(\mathsf {RysersADD}\) for permanent computation based on augmenting Ryser’s formula with Algebraic Decision Diagrams. We demonstrated, through rigorous experimental evaluation, the scalability of \(\mathsf {RysersADD}\) on both dense and similar-rows matrices, where existing approaches fail. Coupled with the technique of early abstraction [1], \(\mathsf {RysersADD}\) performs reasonably well even on sparse matrices as compared to dedicated approaches. In fact, it may be possible to optimize the algorithm even further, by evaluating other heuristics used in [1]. We leave this for future work. Our work also re-emphasizes the versatility of ADDs and opens the door for their application to other combinatorial problems.

It is an interesting open problem to obtain a complete characterization of the class of matrices for which ADD representation of Ryser’s formula is succinct. Our experimental results for dense matrices hint at the possibility of improved theoretical bounds similar to those obtained in earlier work on sparse matrices. Developing an algorithm for general matrices that is exponentially faster than Ryser’s approach remains a long-standing open problem [28], and obtaining better bounds for non-sparse matrices would be an important first step in this direction.

References

Dudek, J.M., Phan, V.H.N., Vardi, M.Y.: ADDMC: Exact weighted model counting with algebraic decision diagrams. https://arxiv.org/abs/1907.05000

Ansótegui, C., Manyà, F.: Mapping problems with finite-domain variables to problems with boolean variables. In: Hoos, H.H., Mitchell, D.G. (eds.) SAT 2004. LNCS, vol. 3542, pp. 1–15. Springer, Heidelberg (2005). https://doi.org/10.1007/11527695_1

Arora, S., Barak, B.: Computational Complexity: A Modern Approach. Cambridge University Press, Cambridge (2009)

Bahar, R., et al.: Algebraic decision diagrams and their applications. J. Formal Methods Syst. Des. 10(2/3), 171–206 (1997)

Bax, E., Franklin, J.: A permanent algorithm with exp [(n1/3/2ln (n))] expected speedup for 0–1 matrices. Algorithmica 32(1), 157–162 (2002)

Biere, A., Heule, M., van Maaren, H., Walsh, T.: Handbook of Satisfiability: Volume 185 Frontiers in Artificial Intelligence and Applications. IOS Press, Amsterdam (2009)

Bouquet, F.: Gestion de la dynamicité et énumération d’impliquants premiers: une approche fondée sur les Diagrammes de Décision Binaire. PhD thesis, Aix-Marseille 1 (1999)

Brault-Baron, J., Capelli, F., Mengel, S.: Understanding model counting for \(\beta \)-acyclic CNF-formulas. arXiv preprint arXiv:1405.6043 (2014)

Broder, A.Z.: How hard is it to marry at random? (on the approximation of the permanent). In: Proceedings of the Eighteenth Annual ACM Symposium on Theory of Computing, pp. 50–58. ACM (1986)

Burchard, J., Schubert, T., Becker, B.: Laissez-faire caching for parallel #SAT solving. In: Heule, M., Weaver, S. (eds.) SAT 2015. LNCS, vol. 9340, pp. 46–61. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24318-4_5

Cai, J.-Y., Pavan, A., Sivakumar, D.: On the hardness of permanent. In: Meinel, C., Tison, S. (eds.) STACS 1999. LNCS, vol. 1563, pp. 90–99. Springer, Heidelberg (1999). https://doi.org/10.1007/3-540-49116-3_8

Cash, G.G.: A fast computer algorithm for finding the permanent of adjacency matrices. J. Math. Chem. 18(2), 115–119 (1995)

Chou, Q., Liang, H., Bai, F.: Computing the permanental polynomial of the high level fullerene C70 with high precision. MATCH Commun. Math. Comput. Chem. 73, 327–336 (2015)

Courcelle, B.: Graph rewriting: an algebraic and logic approach. In: Formal Models and Semantics, pp. 193–242. Elsevier (1990)

Courcelle, B., Engelfriet, J., Rozenberg, G.: Handle-rewriting hypergraph grammars. J. Comput. Syst. Sci. 46(2), 218–270 (1993)

Courcelle, B., Makowsky, J.A., Rotics, U.: On the fixed parameter complexity of graph enumeration problems definable in monadic second-order logic. Discrete Appl. Math. 108(1–2), 23–52 (2001)

Davis, T.A., Hu, Y.: The university of florida sparse matrix collection. ACM Trans. Math. Softw. (TOMS) 38(1), 1 (2011)

Dechter, R.: Bucket elimination: a unifying framework for reasoning. Artif. Intell. 113(1–2), 41–85 (1999)

Dell, H., Husfeldt, T., Marx, D., Taslaman, N., Wahlén, M.: Exponential time complexity of the permanent and the tutte polynomial. ACM Trans. Algorithms (TALG) 10(4), 21 (2014)

Duenas-Osorio, L., Meel, K.S., Paredes, R., Vardi, M.Y.: Counting-based reliability estimation for power-transmission grids. In: AAAI, pp. 4488–4494 (2017)

Fujita, M., McGeer, P., Yang, J.-Y.: Multi-terminal binary decision diagrams: an efficient datastructure for matrix representation. Form. Methods Syst. Des. 10(2–3), 149–169 (1997)

Gent, I.P., Nightingale, P.: A new encoding of all different into SAT. In: International Workshop on Modelling and Reformulating Constraint Satisfaction, pp. 95–110 (2004)

Gordon, M., Davison, W.: Theory of resonance topology of fully aromatic hydrocarbons. I. J. Chem. Phys. 20(3), 428–435 (1952)

Grohe, M.: Descriptive and parameterized complexity. In: Flum, J., Rodriguez-Artalejo, M. (eds.) CSL 1999. LNCS, vol. 1683, pp. 14–31. Springer, Heidelberg (1999). https://doi.org/10.1007/3-540-48168-0_3

Hopcroft, J.E., Karp, R.M.: An n\(\hat{}\,5/2\) algorithm for maximum matchings in bipartite graphs. SIAM J. Comput. 2(4), 225–231 (1973)

Huo, Y., Liang, H., Liu, S.-Q., Bai, F.: Computing monomer-dimer systems through matrix permanent. Phys. Rev. E 77(1), 016706 (2008)

Ignatiev, A., Morgado, A., Marques-Silva, J.: PySAT: a Python toolkit for prototyping with SAT oracles. In: SAT, pp. 428–437 (2018)

Izumi, T., Wadayama, T.: A new direction for counting perfect matchings. In: 2012 IEEE 53rd Annual Symposium on Foundations of Computer Science, pp. 591–598. IEEE (2012)

Kasteleyn, P.W.: The statistics of dimers on a lattice: I. The number of dimer arrangements on a quadratic lattice. Physica 27(12), 1209–1225 (1961)

Knuth, D.E.: Generating all n-tuples. The Art of Computer Programming, 4 (2004)

Koster, A.M., Bodlaender, H.L., Van Hoesel, S.P.: Treewidth: computational experiments. Electron. Notes Discrete Math. 8, 54–57 (2001)

Kroto, H.W., Heath, J.R., O’Brien, S.C., Curl, R.F., Smalley, R.E.: C60: Buckminsterfullerene. Nature 318(6042), 162 (1985)

Lagniez, J.-M., Marquis, P.: An improved decision-DNNF compiler. In: IJCAI, pp. 667–673 (2017)

Liang, H., Bai, F.: A partially structure-preserving algorithm for the permanents of adjacency matrices of fullerenes. Comput. Phys. Commun. 163(2), 79–84 (2004)

Liang, H., Huang, S., Bai, F.: A hybrid algorithm for computing permanents of sparse matrices. Appl. Math. Comput. 172(2), 708–716 (2006)

Martins, R., Joshi, S., Manquinho, V., Lynce, I.: Incremental cardinality constraints for MaxSAT. In: O’Sullivan, B. (ed.) International Conference on Principles and Practice of Constraint Programming, pp. 531–548. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10428-7_39

Muise, C., McIlraith, S.A., Beck, J.C., Hsu, E.: DSHARP: fast d-DNNF compilation with sharpSAT. In: Canadian Conference on Artificial Intelligence (2012)

Nijenhuis, A., Wilf, H.S.: Combinatorial Algorithms: for Computers and Calculators. Elsevier (2014)

Ogawa, T., Liu, Y., Hasegawa, R., Koshimura, M., Fujita, H.: Modulo based CNF encoding of cardinality constraints and its application to MaxSAT solvers. In: 2013 IEEE 25th International Conference on Tools with Artificial Intelligence, pp. 9–17. IEEE (2013)

Okamoto, Y., Uehara, R., Uno, T.: Counting the number of matchings in chordal and chordal bipartite graph classes. In: Paul, C., Habib, M. (eds.) WG 2009. LNCS, vol. 5911, pp. 296–307. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-11409-0_26

Pan, G., Vardi, M.Y.: Search vs. symbolic techniques in satisfiability solving. In: Hoos, H.H., Mitchell, D.G. (eds.) SAT 2004. LNCS, vol. 3542, pp. 235–250. Springer, Heidelberg (2005). https://doi.org/10.1007/11527695_19

Pesant, G., Quimper, C.-G., Zanarini, A.: Counting-based search: Branching heuristics for constraint satisfaction problems. J. Artif. Intell. Res. 43, 173–210 (2012)

Régin, J.-C.: A filtering algorithm for constraints of difference in CSPs. AAAI 94, 362–367 (1994)

Robertson, N., Seymour, P.D.: Graph minors. III. Planar tree-width. J. Comb. Theory Ser. B 36(1), 49–64 (1984)

Roth, D.: On the hardness of approximate reasoning. Artif. Intell. 82(1), 273–302 (1996)

Ryser, H.: Combinatorial mathematics, the carus mathematical monographs. Mathematical Association of America, no. 4 (1963)

Sæther, S.H., Telle, J.A., Vatshelle, M.: Solving #SAT and MaxSAT by dynamic programming. J. Artif. Intell. Res. 54, 59–82 (2015)

Servedio, R.A., Wan, A.: Computing sparse permanents faster. Inf. Process. Lett. 96(3), 89–92 (2005)

Sinz, C.: Towards an optimal CNF encoding of boolean cardinality constraints. In: van Beek, P. (eds) International Conference on Principles and Practice of Constraint Programming, pp. 827–831. Springer, Heidelberg (2005). https://doi.org/10.1007/11564751_73

Somenzi, F.: CUDD package, release 2.4.1. http://vlsi.colorado.edu/~fabio/CUDD/

Tarjan, R.E., Yannakakis, M.: Simple linear-time algorithms to test chordality of graphs, test acyclicity of hypergraphs, and selectively reduce acyclic hypergraphs. SIAM J. Comput. 13(3), 566–579 (1984)

Temperley, H.N., Fisher, M.E.: Dimer problem in statistical mechanics-an exact result. Philos. Mag. 6(68), 1061–1063 (1961)

Toda, S.: On the computational power of PP and (+)P. In: Proceedings of FOCS, pp. 514–519. IEEE (1989)

Valiant, L.: The complexity of enumeration and reliability problems. SIAM J. Comput. 8(3), 410–421 (1979)

van Dijk, T., van de Pol, J.: Sylvan: multi-core framework for decision diagrams. Int. J. Softw. Tools Technol. Transf. 19(6), 675–696 (2017)

Wallace, C., Korb, K.B., Dai, H.: Causal discovery via MML. In: ICML 1996, pp. 516–524 (1996)

Wang, L., Liang, H., Bai, F., Huo, Y.: A load balancing strategy for parallel computation of sparse permanents. Numer. Linear Algebra Appl. 19(6), 1017–1030 (2012)

Wei, T.-C., Severini, S.: Matrix permanent and quantum entanglement of permutation invariant states. J. Math. Phys. 51(9), 092203 (2010)

Yue, B., Liang, H., Bai, F.: Improved algorithms for permanent and permanental polynomial of sparse graph. MATCH Commun. Math. Comput. Chem. 69, 831–842 (2013)

Zanarini, A., Pesant, G.: Solution counting algorithms for constraint-centered search heuristics. Constraints 14(3), 392–413 (2009)

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Chakraborty, S., Shrotri, A.A., Vardi, M.Y. (2019). On Symbolic Approaches for Computing the Matrix Permanent. In: Schiex, T., de Givry, S. (eds) Principles and Practice of Constraint Programming. CP 2019. Lecture Notes in Computer Science(), vol 11802. Springer, Cham. https://doi.org/10.1007/978-3-030-30048-7_5

Download citation

DOI: https://doi.org/10.1007/978-3-030-30048-7_5

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30047-0

Online ISBN: 978-3-030-30048-7

eBook Packages: Computer ScienceComputer Science (R0)