Abstract

Nowadays there are many methods that exploit the regularising properties of the linear part of a nonlinear dispersive equation (such as the KdV equation, the nonlinear wave equation or the nonlinear Schrödinger equation) in order to prove well-posedness in low regularity Sobolev spaces. By well-posedness in low regularity Sobolev spaces we mean that less regularity is required than the one imposed by the energy methods, which do not exploit the dispersive properties of the linear part of the equation. In many cases these methods lead to optimal results in terms of the regularity of the initial data: if one requires slightly less regularity, then the corresponding Cauchy problem becomes ill-posed in the Hadamard sense. We call the Sobolev spaces in which these ill-posedness results hold spaces of supercritical regularity. More recently, methods to prove probabilistic well-posedness in Sobolev spaces of supercritical regularity were developed. More precisely, by probabilistic well-posedness we mean that one endows the corresponding Sobolev space of supercritical regularity with a non-degenerate probability measure and then shows that, almost surely with respect to this measure, one can define a (unique) global flow. However, in most of the cases where the methods to prove probabilistic well-posedness apply, there is no information about the measure transported by the flow. Very recently, a method was developed to prove that the transported measure is absolutely continuous with respect to the initial measure. In such a situation, we have a measure which is quasi-invariant under the corresponding flow.

The aim of these lectures is to present all of the developments described above in the context of the nonlinear wave equation.

4.1 Deterministic Cauchy Theory for the 3d Cubic Wave Equation

4.1.1 Introduction

In this section, we consider the cubic defocusing wave equation

\[ (\partial_t^2-\Delta)u+u^3=0, \tag{4.1.1} \]

where u = u(t, x) is real valued, \(t\in \mathbb R\), \(x\in\mathbb T^3\) (the 3d torus). In (4.1.1), Δ denotes the Laplace operator, namely

\[ \Delta=\partial_{x_1}^2+\partial_{x_2}^2+\partial_{x_3}^2 . \]

Since (4.1.1) is of second order in time, it is natural to complement it with two initial conditions

\[ u(0,x)=u_0(x),\qquad \partial_t u(0,x)=u_1(x). \tag{4.1.2} \]

In this section, we study the local and global well-posedness of the initial value problem (4.1.1)–(4.1.2) in Sobolev spaces via deterministic methods.

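The Sobolev spaces \(H^s(\mathbb T^3)\) are defined as follows. For a function f on \(\mathbb T^3\) given by its Fourier series

\[ f(x)=\sum_{n\in\mathbb Z^3}\widehat f(n)\,e^{in\cdot x}, \]

we define the \(H^s(\mathbb T^3)\) Sobolev norm of f as

\[ \|f\|_{H^s(\mathbb T^3)}^2=\sum_{n\in\mathbb Z^3}\langle n\rangle^{2s}\,|\widehat f(n)|^2, \]

where \(\langle n\rangle=(1+|n|^2)^{1/2}\). One has that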

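For nonnegative integer values of s, one can also give an equivalent norm in the physical space as follows

\[ \|f\|_{H^s(\mathbb T^3)}^2\simeq\sum_{|\alpha|\le s}\|\partial^\alpha f\|_{L^2(\mathbb T^3)}^2, \]

where the summation is taken over all multi-indices \(\alpha =(\alpha _1,\alpha _2,\alpha _3)\in \mathbb N^3\) with \(|\alpha|=\alpha_1+\alpha_2+\alpha_3\le s\).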
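A minimal numerical sketch of the Fourier-side definition of the \(H^s(\mathbb T^3)\) norm. It assumes the normalisation \(\mathbb T^3=(\mathbb R/2\pi\mathbb Z)^3\), \(f(x)=\sum_n\widehat f(n)e^{in\cdot x}\) and \(\|f\|_{H^s}^2=\sum_n\langle n\rangle^{2s}|\widehat f(n)|^2\) (other conventions differ by a constant factor). The Fourier coefficients are approximated by a discrete FFT on a uniform grid, which is exact for trigonometric polynomials resolved by the grid. The function name `sobolev_norm` is our own.

```python
import numpy as np

def sobolev_norm(f_grid: np.ndarray, s: float) -> float:
    """Approximate ||f||_{H^s(T^3)} from samples of f on a uniform N^3 grid.

    Assumes T^3 = (R / 2 pi Z)^3 and f(x) = sum_n fhat(n) exp(i n.x), so that
    fhat(n) is approximated by FFT(f)(n) / N^3.
    """
    n_pts = f_grid.shape[0]
    fhat = np.fft.fftn(f_grid) / n_pts**3
    k = np.fft.fftfreq(n_pts, d=1.0 / n_pts)          # integer frequencies
    n1, n2, n3 = np.meshgrid(k, k, k, indexing="ij")
    bracket = np.sqrt(1.0 + n1**2 + n2**2 + n3**2)    # <n> = (1 + |n|^2)^{1/2}
    return float(np.sqrt(np.sum(bracket ** (2 * s) * np.abs(fhat) ** 2)))

# f(x) = cos(x_1) has fhat(+e_1) = fhat(-e_1) = 1/2, hence ||f||_{H^s}^2 = 2^{s-1}.
N = 16
x = 2 * np.pi * np.arange(N) / N
X1, X2, X3 = np.meshgrid(x, x, x, indexing="ij")
f = np.cos(X1)
print(sobolev_norm(f, 0.0) ** 2, sobolev_norm(f, 1.0) ** 2)   # approximately 0.5 and 1.0
```

For s = 0 the same computation is Parseval's identity, so it agrees with the normalised \(L^2\) norm \((2\pi)^{-3}\int_{\mathbb T^3}|f|^2\).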

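As we shall see, it will be important to understand the interplay between the linear and the nonlinear parts of (4.1.1). Indeed, let us first consider the Cauchy problem

\[ \partial_t^2u+u^3=0,\qquad u(0,x)=u_0(x),\quad \partial_tu(0,x)=u_1(x), \]

which is obtained from (4.1.1) by neglecting the Laplacian. If we set

\[ U=\begin{pmatrix}U_1\\U_2\end{pmatrix}=\begin{pmatrix}u\\ \partial_tu\end{pmatrix},\qquad F(U)=\begin{pmatrix}U_2\\-U_1^3\end{pmatrix}, \]

then the last problem can be written as

\[ \partial_tU=F(U),\qquad U(0)=\begin{pmatrix}u_0\\u_1\end{pmatrix}. \]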

One may wish to solve, at least locally, the last problem via the Cauchy-Lipschitz argument in the spaces \(H^{s_1}(\mathbb T^3)\times H^{s_2}(\mathbb T^3)\). For such a purpose, one should check that the vector field F(U) is locally Lipschitz on these spaces. Thanks to the Sobolev embedding \(H^s(\mathbb T^3)\subset L^\infty (\mathbb T^3)\), s > 3∕2, we can see that the map \(U_1\mapsto U_1^3\) is locally Lipschitz on \(H^s(\mathbb T^3)\), s > 3∕2. It is also easy to check that the map \(U_1\mapsto U_1^3\) is not continuous from \(H^s(\mathbb T^3)\) to itself for s < 3∕2. A more delicate argument shows that it is not continuous on \(H^{3/2}(\mathbb T^3)\) either. Therefore, if we require F(U) to be locally Lipschitz on \(H^{s_1}(\mathbb T^3)\times H^{s_2}(\mathbb T^3)\), then we necessarily need to impose the regularity assumption \(s_1>3/2\). As we shall see below, the term containing the Laplacian in (4.1.1) will allow us to significantly relax this regularity assumption.

On the other hand, if we neglect the nonlinear term \(u^3\) in (4.1.1), we get the linear wave equation, which is well-posed in \(H^s(\mathbb T^3)\times H^{s-1}(\mathbb T^3)\) for any \(s\in \mathbb R\), as can easily be seen from the Fourier series description of the solutions of the linear wave equation (see the next section). In other words, the absence of a nonlinearity allows us to solve the problem in arbitrarily singular Sobolev spaces.

In summary, we expect that the Laplacian term in (4.1.1) will help us to prove the well-posedness of the problem (4.1.1) in singular Sobolev spaces, while the nonlinear term \(u^3\) will be responsible for the lack of well-posedness in singular spaces.

4.1.2 Local and Global Well-Posedness in \(H^1\times L^2\)

4.1.2.1 The Free Evolution

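We first define the free evolution, i.e. the map defining the solutions of the linear wave equation

\[ (\partial_t^2-\Delta)u=0,\qquad u(0,x)=u_0(x),\quad \partial_tu(0,x)=u_1(x). \tag{4.1.3} \]

Using the Fourier transform and solving the corresponding second order linear ODEs, we obtain that the solutions of (4.1.3) are generated by the map S(t), defined as follows

\[ S(t)(u_0,u_1)=\cos\big(t\sqrt{-\Delta}\big)(u_0)+\frac{\sin\big(t\sqrt{-\Delta}\big)}{\sqrt{-\Delta}}(u_1), \]

where

\[ \cos\big(t\sqrt{-\Delta}\big)\Big(\sum_{n\in\mathbb Z^3}a_n e^{in\cdot x}\Big)=\sum_{n\in\mathbb Z^3}\cos(t|n|)\,a_n e^{in\cdot x} \]

and

\[ \frac{\sin\big(t\sqrt{-\Delta}\big)}{\sqrt{-\Delta}}\Big(\sum_{n\in\mathbb Z^3}b_n e^{in\cdot x}\Big)=t\,b_0+\sum_{n\in\mathbb Z^3\setminus\{0\}}\frac{\sin(t|n|)}{|n|}\,b_n e^{in\cdot x}. \]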

We have that \(S(t)(u_0,u_1)\) solves (4.1.3) and, if \((u_0,u_1)\in H^s\times H^{s-1}\), \(s\in \mathbb R\), then \(S(t)(u_0,u_1)\) is the unique solution of (4.1.3) in \(C(\mathbb R;H^s(\mathbb T^3))\) whose time derivative is in \(C(\mathbb R;H^{s-1}(\mathbb T^3))\). It follows directly from the definition that the operator \(\bar {S}(t)\equiv (S(t),\partial _t S(t))\) is bounded on \(H^s\times H^{s-1}\), \(\bar {S}(0)=\mathrm {Id}\) and \(\bar {S}(t+\tau )=\bar {S}(t) \circ \bar {S}(\tau )\) for all real numbers t and τ. In the proof of the boundedness on \(H^s\times H^{s-1}\), we only use the boundedness of \(\cos (t|n|)\) and \(\sin (t|n|)\). As we shall see below, one may use the oscillations of \(\cos (t|n|)\) and \(\sin (t|n|)\) for \(|n|\gg 1\) in order to obtain finer \(L^p\), p > 2, properties of the map S(t).
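A minimal numerical sketch of S(t) and \(\bar S(t)\) on a uniform grid of \(\mathbb T^3\). It assumes \(\mathbb T^3=(\mathbb R/2\pi\mathbb Z)^3\), so that the Fourier modes are \(e^{in\cdot x}\), \(n\in\mathbb Z^3\), and applies the multipliers \(\cos(t|n|)\) and \(\sin(t|n|)/|n|\) (with the value t at n = 0) to discrete FFT coefficients. This is exact only for trigonometric polynomials resolved by the grid. The names `abs_frequency` and `free_evolution` are hypothetical. The usage lines check the group property \(\bar S(t+\tau)=\bar S(t)\circ\bar S(\tau)\).

```python
import numpy as np

def abs_frequency(n_pts):
    """|n| for the integer frequencies n of an n_pts^3 FFT grid on (R/2piZ)^3."""
    k = np.fft.fftfreq(n_pts, d=1.0 / n_pts)
    n1, n2, n3 = np.meshgrid(k, k, k, indexing="ij")
    return np.sqrt(n1**2 + n2**2 + n3**2)

def free_evolution(u0, u1, t):
    """Return (S(t)(u0, u1), d/dt S(t)(u0, u1)) for real data sampled on the grid."""
    absn = abs_frequency(u0.shape[0])
    u0h, u1h = np.fft.fftn(u0), np.fft.fftn(u1)
    # sin(t|n|)/|n|, replaced by its limit t at n = 0 (the inner where avoids 0/0)
    sinc = np.where(absn > 0, np.sin(t * absn) / np.where(absn > 0, absn, 1.0), t)
    uh = np.cos(t * absn) * u0h + sinc * u1h
    uth = -absn * np.sin(t * absn) * u0h + np.cos(t * absn) * u1h
    return np.real(np.fft.ifftn(uh)), np.real(np.fft.ifftn(uth))

N = 16
x = 2 * np.pi * np.arange(N) / N
X1, X2, X3 = np.meshgrid(x, x, x, indexing="ij")
u0 = np.cos(X1) + 0.3 * np.sin(X2 + 2 * X3)
u1 = np.sin(X3) + 0.2                          # includes a zero-mode component
direct = free_evolution(u0, u1, 1.1)
composed = free_evolution(*free_evolution(u0, u1, 0.4), 0.7)
print(max(np.max(np.abs(d - c)) for d, c in zip(direct, composed)))  # round-off size
```

Only the boundedness of the multipliers is used here. The zero mode, where \(\sin(t|n|)/|n|\) becomes t, is the only source of growth in time.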

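Let us next consider the inhomogeneous problem

\[ (\partial_t^2-\Delta)u=F(t,x),\qquad u(0,x)=u_0(x),\quad \partial_tu(0,x)=u_1(x). \tag{4.1.4} \]

Using the variation of constants method, we obtain that the solutions of (4.1.4) are given by

\[ u(t)=S(t)(u_0,u_1)+\int_0^t\frac{\sin\big((t-\tau)\sqrt{-\Delta}\big)}{\sqrt{-\Delta}}\big(F(\tau)\big)\,d\tau . \]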

As a consequence, we obtain that the solution of the inhomogeneous problem (4.1.4) is one derivative smoother than the source term F. More precisely, for every \(s\in \mathbb R\), the solution of (4.1.4) satisfies the bound

4.1.2.2 The Local Well-Posedness

We state the local well-posedness result.

Proposition 4.1.1 (Local Well-Posedness)

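Consider the cubic defocusing wave equation

\[ (\partial_t^2-\Delta)u+u^3=0, \tag{4.1.6} \]

posed on \(\mathbb T^3\). There exist constants c and C such that for every \(a\in \mathbb R\), every Λ ≥ 1 and every

\[ (u_0,u_1)\in H^1(\mathbb T^3)\times L^2(\mathbb T^3) \]

satisfying

\[ \|u_0\|_{H^1}+\|u_1\|_{L^2}\le\Lambda, \tag{4.1.7} \]

there exists a unique solution of (4.1.6) on the time interval \([a,a+c\Lambda^{-2}]\) with initial data

\[ u(a,x)=u_0(x),\qquad \partial_tu(a,x)=u_1(x). \]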

Moreover the solution satisfies

The solution \((u,\partial_t u)\) is unique in the class \(L^\infty ([a,a+c \Lambda ^{-2}];H^1(\mathbb T^3)\times L^2(\mathbb T^3))\), and the dependence with respect to the initial data and with respect to time is continuous. Finally, if

for some s ≥ 1, then there exists \(c_s>0\) such that

Proof

If u(t, x) is a solution of (4.1.6) then so is u(t + a, x). Therefore, it suffices to consider the case a = 0.

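Thanks to the analysis of the previous section, we need to solve the integral equation

\[ u(t)=S(t)(u_0,u_1)-\int_0^t\frac{\sin\big((t-\tau)\sqrt{-\Delta}\big)}{\sqrt{-\Delta}}\big(u^3(\tau)\big)\,d\tau . \tag{4.1.8} \]

Set

\[ \Phi_{u_0,u_1}(u)(t)=S(t)(u_0,u_1)-\int_0^t\frac{\sin\big((t-\tau)\sqrt{-\Delta}\big)}{\sqrt{-\Delta}}\big(u^3(\tau)\big)\,d\tau . \]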

Then, for T ∈ (0, 1], we define \(X_T\) as

endowed with the natural norm

Using the boundedness properties of \(\bar {S}\) on \(H^s\times H^{s-1}\) explained in the previous section and the Sobolev embedding \(H^1(\mathbb T^3)\subset L^6(\mathbb T^3)\), we get

It is now clear that for \(T=c\Lambda^{-2}\), c ≪ 1, the map \(\Phi _{u_0,u_1}\) sends the ball

into itself. Moreover, by similar arguments involving the Sobolev embedding \(H^1(\mathbb T^3)\subset L^6(\mathbb T^3)\) and the Hölder inequality, we obtain the estimate

Therefore, with our choice of T, we get that

Consequently, the map \(\Phi _{u_0,u_1}\) is a contraction on B. The fixed point of this contraction defines the solution u on [0, T] we are looking for. The estimate of \(\|\partial _t u\|_{L^2}\) follows by differentiating the Duhamel formula (4.1.8) in t. Let us now turn to the uniqueness. Let \(u,\tilde {u}\) be two solutions of (4.1.6) with the same initial data in the space \(X_T\) for some T > 0. Then, for τ ≤ T, we can write, similarly to (4.1.9),

Let us take τ such that

This fixes the value of τ. Thanks to (4.1.10), we obtain that u and \(\tilde {u}\) are the same on [0, τ]. Next, we cover the interval [0, T] by intervals of size τ and we inductively obtain that u and \(\tilde {u}\) are the same on each interval of size τ. This yields the uniqueness statement.

The continuous dependence with respect to time follows from the Duhamel formula (4.1.8). The continuity with respect to the initial data follows from the estimates on the difference of two solutions we have just performed. Notice that we also obtain uniform continuity of the data-to-solution map on bounded subsets of \(H^1\times L^2\).

Let us finally turn to the propagation of higher regularity. Let \((u_0,u_1)\in H^1\times L^2\) satisfy (4.1.7) and the additional regularity property \((u_0,u_1)\in H^s\times H^{s-1}\) for some s > 1. We will show that the corresponding solution remains in \(H^s\times H^{s-1}\) during (essentially) the whole time of existence. For s ≥ 1, we define \(X^s_T\) as

endowed with the norm

The solution with data \((u_0,u_1)\in H^s\times H^{s-1}\) remains in this space for time intervals of order \( (1+\|u_0\|_{H^s}+\|u_1\|_{H^{s-1}})^{-2} \), by a fixed point argument similar to the one we performed for data in \(H^1\times L^2\). We now show that the regularity is preserved on the (longer) time intervals of order \( (1+\|u_0\|_{H^1}+\|u_1\|_{L^{2}})^{-2} \). Coming back to (4.1.8), we can write

Now, using the Kato-Ponce product inequality, we obtain that for σ ≥ 0 one has the bound

Using (4.1.11) and applying the Sobolev embedding \(H^1(\mathbb T^3)\subset L^6(\mathbb T^3)\), we infer that

Therefore, we arrive at the bound

By construction of the solution, we infer that if \(T\leq c_s\Lambda^{-2}\) with \(c_s\) small enough, we have that

which implies the propagation of regularity for u. Strictly speaking, one should apply a bootstrap argument starting from the propagation of regularity on times of order \( (1+\|u_0\|_{H^s}+\|u_1\|_{H^{s-1}})^{-2} \) and then extend the regularity propagation to the longer interval \([0,c_s\Lambda^{-2}]\). One estimates \(\partial_t u\) in \(H^{s-1}\) similarly, by differentiating the Duhamel formula with respect to t. The continuous dependence with respect to time in \(H^s\times H^{s-1}\) follows once again from the Duhamel formula (4.1.8). This completes the proof of Proposition 4.1.1. □
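To see the contraction mechanism at work in the simplest setting, one can restrict to spatially constant data \((u_0,u_1)=(a,b)\). Only the zero Fourier mode is then present, where \(\sin((t-\tau)|n|)/|n|\) is replaced by \(t-\tau\), and the Duhamel formula (4.1.8) reduces to \(u(t)=a+tb-\int_0^t(t-\tau)u(\tau)^3\,d\tau\). The sketch below iterates this map on \([0,T]\), \(T=c\Lambda^{-2}\). The choices c = 0.1 and Λ = |a| + |b|, the trapezoidal discretisation and the function names are our own and are given for illustration only. The gaps between successive iterates shrink geometrically, and the energy \(\frac12(u')^2+\frac14u^4\) stays constant up to discretisation error.

```python
import numpy as np

def cumulative_trapezoid(g, t):
    """int_{t[0]}^{t[j]} g(tau) dtau for every j (trapezoidal rule)."""
    out = np.zeros_like(g)
    out[1:] = np.cumsum(0.5 * (g[1:] + g[:-1]) * np.diff(t))
    return out

def picard_zero_mode(a, b, T, n_steps=2000, tol=1e-13, max_iter=50):
    """Fixed-point iteration for u(t) = a + t*b - int_0^t (t - tau) u(tau)^3 dtau on [0, T],
    i.e. the Duhamel formula for spatially constant solutions of u_tt - Lap u + u^3 = 0."""
    t = np.linspace(0.0, T, n_steps + 1)
    u = a + b * t                                    # start from the free evolution
    gaps = []
    for _ in range(max_iter):
        g = u**3
        # int_0^t (t - tau) g(tau) dtau = t * int_0^t g - int_0^t tau * g
        duhamel = t * cumulative_trapezoid(g, t) - cumulative_trapezoid(t * g, t)
        u_new = a + b * t - duhamel
        gaps.append(float(np.max(np.abs(u_new - u))))
        u = u_new
        if gaps[-1] < tol:
            break
    u_t = b - cumulative_trapezoid(u**3, t)          # d/dt of the Duhamel formula
    return t, u, u_t, gaps

a, b = 1.0, 0.5
lam = abs(a) + abs(b)        # stands in for Lambda in (4.1.7), up to constants
T = 0.1 * lam**-2            # T = c * Lambda^(-2) with the illustrative choice c = 0.1
t, u, u_t, gaps = picard_zero_mode(a, b, T)
energy = 0.5 * u_t**2 + 0.25 * u**4
print(gaps)                  # successive gaps shrink geometrically (contraction)
print(np.max(np.abs(energy - energy[0])))
```

The contraction factor here is of order \(\Lambda^2T^2\). This is the zero-mode counterpart of choosing \(T=c\Lambda^{-2}\) with c small in the proof above.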

Theorem 4.1.2 (Global Well-Posedness)

For every \( (u_0,u_1)\in H^1(\mathbb T^3)\times L^2(\mathbb T^3) \) the local solution of the cubic defocusing wave equation

can be extended globally in time. It is unique in the class \(C(\mathbb R ;H^1(\mathbb T^3)\times L^{2}(\mathbb T^3))\), and there exists a constant C depending only on \(\|u_0\|_{H^1}\) and \(\|u_1\|_{L^2}\) such that for every \(t\in \mathbb R\),

If, in addition, \( (u_0,u_1)\in H^s(\mathbb T^3)\times H^{s-1}(\mathbb T^3) \) for some s ≥ 1, then

Remark 4.1.3

One may obtain global weak solutions of the cubic defocusing wave equation for data in \(H^1\times L^2\) via compactness arguments. The uniqueness and the propagation of regularity statements of Theorem 4.1.2 are the main differences with respect to such weak solutions.

Proof of Theorem 4.1.2

The key point is the conservation of the energy displayed in the following lemma.

Lemma 4.1.4

There exist c > 0 and C > 0 such that for every \((u_0,u_1)\in H^1(\mathbb T^3)\times L^2(\mathbb T^3)\), the local solution of the cubic defocusing wave equation with data \((u_0,u_1)\), constructed in Proposition 4.1.1, is defined on [0, T] with

and

As a consequence, for t ∈ [0, T],

Remark 4.1.5

Using the invariance with respect to translations in time, we can state Lemma 4.1.4 with initial data at an arbitrary initial time.

Proof of Lemma 4.1.4

We apply Proposition 4.1.1 with \(\Lambda = \|u_0\|_{H^1}+\|u_1\|_{L^2}\) and we take \(T=c_{10}\Lambda^{-2}\), where \(c_{10}\) is the small constant involved in the propagation of the \(H^{10}\times H^9\) regularity. Let \((u_{0,n},u_{1,n})\) be a sequence in \(H^{10}\times H^9\) which converges to \((u_0,u_1)\) in \(H^1\times L^2\) and such that

Let \(u_n(t)\) be the solution of the cubic defocusing wave equation with data \((u_{0,n},u_{1,n})\). By Proposition 4.1.1, these solutions are defined on [0, T] and they keep their \(H^{10}\times H^9\) regularity on the same time interval. We multiply the equation

by \(\partial_t u_n\). Using the regularity properties of \(u_n(t)\), after integrating by parts, we arrive at

which implies the identity

We now pass to the limit \(n\to\infty\) in (4.1.13). The right-hand side converges to

by the definition of \((u_{0,n},u_{1,n})\) (we invoke the Sobolev embedding for the convergence of the \(L^4\) norms). The left-hand side of (4.1.13) converges to

by the continuity of the flow map established in Proposition 4.1.1. Using the compactness of \(\mathbb T^3\) and the Hölder inequality, we have that

and therefore

is bounded by

Now, using (4.1.12) and the Sobolev inequality

we obtain that for t ∈ [0, T],

This completes the proof of Lemma 4.1.4. □

Let us now complete the proof of Theorem 4.1.2. Let \((u_0,u_1)\in H^1(\mathbb T^3)\times L^2(\mathbb T^3)\). Set

where the constants c and C are defined in Lemma 4.1.4. We now observe that we can use Proposition 4.1.1 and Lemma 4.1.4 on the intervals [0, T], [T, 2T], [2T, 3T], and so on, and therefore we extend the solution with data \((u_0,u_1)\) to \([0,\infty)\). By the time reversibility of the wave equation, we can similarly construct the solution for negative times. More precisely, the free evolution \(S(t)(u_0,u_1)\) is well defined for all \(t\in \mathbb R\), and one can prove in the same way the natural counterparts of Proposition 4.1.1 and Lemma 4.1.4 for negative times. The propagation of higher Sobolev regularity globally in time follows from Proposition 4.1.1, while the \(H^1\) a priori bound on the solutions follows from Lemma 4.1.4. This completes the proof of Theorem 4.1.2. □
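The conservation law behind Lemma 4.1.4 can also be observed numerically. The sketch below is an illustration built on our own assumptions, none of which come from the text: \(\mathbb T^3=(\mathbb R/2\pi\mathbb Z)^3\) sampled on a \(16^3\) grid, a pseudo-spectral Laplacian, and the Störmer-Verlet time stepper. The helper names `laplacian`, `energy` and `verlet` are hypothetical. The monitored quantity is \((2\pi)^{-3}\int_{\mathbb T^3}\big(\tfrac12(\partial_tu)^2+\tfrac12|\nabla u|^2+\tfrac14u^4\big)dx\). Unlike the exact identity, it is conserved only up to an \(O(\Delta t^2)\) error.

```python
import numpy as np

N = 16
k = np.fft.fftfreq(N, d=1.0 / N)
K1, K2, K3 = np.meshgrid(k, k, k, indexing="ij")
ABS_N2 = K1**2 + K2**2 + K3**2                # |n|^2 on the FFT grid

def laplacian(u):
    """Pseudo-spectral Laplacian on (R/2piZ)^3."""
    return np.real(np.fft.ifftn(-ABS_N2 * np.fft.fftn(u)))

def energy(u, ut):
    """(2 pi)^{-3} int 1/2 u_t^2 + 1/2 |grad u|^2 + 1/4 u^4, computed on the grid."""
    uh = np.fft.fftn(u) / u.size
    grad2 = np.sum(ABS_N2 * np.abs(uh) ** 2)   # mean of |grad u|^2 (Parseval)
    return float(np.mean(0.5 * ut**2 + 0.25 * u**4) + 0.5 * grad2)

def verlet(u, ut, dt, n_steps):
    """Stormer-Verlet steps for u_tt = Lap u - u^3 (the defocusing cubic wave equation)."""
    acc = laplacian(u) - u**3
    for _ in range(n_steps):
        ut = ut + 0.5 * dt * acc
        u = u + dt * ut
        acc = laplacian(u) - u**3
        ut = ut + 0.5 * dt * acc
    return u, ut

x = 2 * np.pi * np.arange(N) / N
X1, X2, X3 = np.meshgrid(x, x, x, indexing="ij")
u0 = np.cos(X1) + 0.5 * np.sin(X2)
u1 = 0.3 * np.cos(X3)
e0 = energy(u0, u1)
u, ut = verlet(u0, u1, dt=0.01, n_steps=300)
print(e0, abs(energy(u, ut) - e0) / e0)        # small relative drift, of order dt^2
```

With the sign of the nonlinearity reversed, the quartic term enters the energy with a minus sign and no longer controls the \(H^1\) norm. This is the mechanism behind Remark 4.1.8.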

Remark 4.1.6

One may proceed slightly differently in the proof of Theorem 4.1.2 by observing that, as a consequence of Proposition 4.1.1, if a local solution with \(H^1\times L^2\) data blows up at time \(T^\star<\infty\), then

The statement (4.1.14) is in contradiction with the energy conservation law.

Remark 4.1.7

Observe that the nonlinear problem

behaves better than the linear problem

with respect to the \(H^1\) global in time bounds. Indeed, Theorem 4.1.2 establishes that the solutions of (4.1.15) are bounded in \(H^1\) as long as the initial data are in \(H^1\times L^2\). On the other hand, \(u(t,x)=t\) is a solution of the linear wave equation (4.1.16) on \(\mathbb T^3\) with data \((0,1)\in H^1\times L^2\), and its \(H^1\) norm grows linearly in time.

Remark 4.1.8

The sign in front of the nonlinearity plays no role in Proposition 4.1.1. One can therefore obtain the local well-posedness of the cubic focusing wave equation

\[ (\partial_t^2-\Delta)u-u^3=0, \tag{4.1.17} \]

posed on \(\mathbb T^3\), with data in \(H^1(\mathbb T^3)\times L^2(\mathbb T^3)\). However, the sign in front of the nonlinearity is of crucial importance in the proof of Theorem 4.1.2. Indeed, one has that

\[ u(t,x)=\frac{\sqrt2}{1-t} \]

is a solution of (4.1.17), posed on \(\mathbb T^3\), with data \((\sqrt {2},\sqrt {2})\), which is not defined globally in time (it blows up in \(H^1\times L^2\) at t = 1).
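The dichotomy in Remarks 4.1.7 and 4.1.8 is already visible for spatially constant solutions, which solve the ODE \(u''=\pm u^3\). The sketch below integrates both signs from the data \((\sqrt2,\sqrt2)\). The RK4 integrator and the function names are our own illustration and do not come from the text. The focusing solution follows \(\sqrt2/(1-t)\) and blows up at t = 1. For the defocusing sign, the energy \(\frac12(u')^2+\frac14u^4=2\) is conserved, which keeps \(|u|\le 8^{1/4}\) for all times.

```python
import math

def rk4(f, y, t0, t1, n_steps):
    """Classical fourth-order Runge-Kutta for y' = f(t, y), with y a list of floats."""
    h = (t1 - t0) / n_steps
    t = t0
    for _ in range(n_steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k1)])
        k3 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k2)])
        k4 = f(t + h, [yi + h * ki for yi, ki in zip(y, k3)])
        y = [yi + h / 6 * (a + 2 * b + 2 * c + d)
             for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]
        t += h
    return y

def focusing(t, y):      # u'' = +u^3: spatially constant solutions of the focusing equation
    return [y[1], y[0] ** 3]

def defocusing(t, y):    # u'' = -u^3: spatially constant solutions of the defocusing equation
    return [y[1], -y[0] ** 3]

data = [math.sqrt(2), math.sqrt(2)]
u_foc = rk4(focusing, data, 0.0, 0.95, 20000)[0]
print(u_foc, math.sqrt(2) / (1 - 0.95))          # both close to 28.28: blow-up at t = 1
u_def, v_def = rk4(defocusing, data, 0.0, 50.0, 50000)
print(0.5 * v_def**2 + 0.25 * u_def**4)          # stays close to the initial energy 2
```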

4.1.3 The Strichartz Estimates

In the previous section, we solved the cubic defocusing wave equation globally in time in \(H^1\times L^2\). One may naturally ask whether it is possible to extend these results to the more singular Sobolev spaces \(H^s\times H^{s-1}\) for some s < 1. It turns out that this is possible by invoking finer properties of the map S(t) defining the free evolution. These properties rely in an essential way on the time oscillations in S(t) and are quantified by the \(L^p\), p > 2, mapping properties of S(t) (cf. [19, 30]).

Theorem 4.1.9 (Strichartz Inequality for the Wave Equation)

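Let \((p,q)\in \mathbb R^2\) be such that 2 < p ≤ ∞ and \( \frac {1}{p}+\frac {1}{q}=\frac {1}{2}. \) Then we have the estimate

\[ \|S(t)(u_0,u_1)\|_{L^p([0,1];L^q(\mathbb T^3))}\le C\big(\|u_0\|_{H^{2/p}(\mathbb T^3)}+\|u_1\|_{H^{\frac2p-1}(\mathbb T^3)}\big). \]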

We shall use that the solutions of the wave equation satisfy a finite propagation speed property which will allow us to deduce the result of Theorem 4.1.9 from the corresponding Strichartz estimate for the wave equation on the Euclidean space. Consider therefore the wave equation

where now the spatial variable x belongs to \(\mathbb R^3\) and the initial data \((u_0,u_1)\) belong to \(H^s(\mathbb R^3)\times H^{s-1}(\mathbb R^3)\). Using the Fourier transform on \(\mathbb R^3\), we can solve (4.1.18) and obtain that the solutions are generated by the map \(S_e(t)\), defined as

where, for \(u_0\) and \(u_1\) in the Schwartz class,

and

where \( \widehat {u_0}\) and \(\widehat {u_1}\) are the Fourier transforms of \(u_0\) and \(u_1\) respectively. By density, one then extends \(S_e(t)(u_0,u_1)\) to a bounded map from \(H^s(\mathbb R^3)\times H^{s-1}(\mathbb R^3)\) to \(H^s(\mathbb R^3)\) for any \(s\in \mathbb R\). The next proposition displays the finite propagation speed property of \(S_e(t)\).

Proposition 4.1.10 (Finite Propagation Speed)

Let \((u_0,u_1)\in H^s(\mathbb R^3)\times H^{s-1}(\mathbb R^3)\) for some s ≥ 0 be such that

for some R > 0 and \(x_0\in \mathbb R^3\). Then for t ≥ 0,

Proof

The statement of Proposition 4.1.10 (and even a more precise localisation property) follows from the Kirchhoff formula representation of the solutions of the three dimensional wave equation. Here we present another proof, which has the advantage of extending to arbitrary dimensions and to variable coefficient settings. By the invariance of the wave equation with respect to spatial translations, we can assume that \(x_0=0\). It suffices to prove Proposition 4.1.10 for (say) s ≥ 100, which ensures by the Sobolev embedding that the solutions we study are of class \(C^2(\mathbb R^4)\). We can then treat the case of an arbitrary \((u_0,u_1)\in H^s(\mathbb R^3)\times H^{s-1}(\mathbb R^3)\), s ≥ 0, by observing that

where \(\rho_\varepsilon(x)=\varepsilon^{-3}\rho(x/\varepsilon)\), \(\rho \in C^\infty _0(\mathbb R^3)\), 0 ≤ ρ ≤ 1, \(\int \rho =1\). It then suffices to pass to the limit ε → 0 in (4.1.19). Indeed, for \(\varphi \in C^\infty _0(|x|>t+R)\), \(S_e(t)(\rho_\varepsilon\star u_0,\rho_\varepsilon\star u_1)(\varphi)\) is zero for ε small enough, while \(\rho_\varepsilon\star S_e(t)(u_0,u_1)(\varphi)\) converges to \(S_e(t)(u_0,u_1)(\varphi)\).

Therefore, in the remainder of the proof of Proposition 4.1.10, we shall assume that \(S_e(t)(u_0,u_1)\) is a \(C^2\) solution of the 3d wave equation. The main point in the proof is the following lemma.

Lemma 4.1.11

Let \(x_0\in \mathbb R^3\), r > 0 and let \(S_e(t)(u_0,u_1)\) be a \(C^2\) solution of the 3d linear wave equation. Suppose that \(u_0(x)=u_1(x)=0\) for \(|x-x_0|\leq r\). Then \(S_e(t)(u_0,u_1)=0\) in the cone C defined by

Proof

Let \(u(t,x)=S_e(t)(u_0,u_1)\). For t ∈ [0, r], we set

where \(B(x_0,r-t)=\{x\in \mathbb R^3\,:\, |x-x_0|\leq r-t \}\). Then, using the Gauss-Green theorem and the equation solved by u, we obtain that

where \(\partial B\equiv \{x\in \mathbb R^3\,:\, |x-x_0|= r-t \}\), dS(y) is the surface measure on ∂B and ν(y) is the outer unit normal to ∂B. We clearly have

which implies that \(\dot {E}(t)\leq 0\). Since E(t) ≥ 0 and E(0) = 0, we obtain that E(t) = 0 for every t ∈ [0, r]. This in turn implies that u(t, x) is constant in C. We also know that u(0, x) = 0 for \(|x-x_0|\leq r\). Therefore u(t, x) = 0 in C. This completes the proof of Lemma 4.1.11. □

Let us now complete the proof of Proposition 4.1.10. Let \(t_0>0\) and \(y\in \mathbb R^3\) be such that \(|y|>R+t_0\). We need to show that \(u(t_0,y)=0\). Consider the cone C defined by

Set \(B\equiv C\cap \{(t,x)\in \mathbb R^4: t=0 \}\). We have that

and therefore, by the definition of \(t_0\) and y, we have that

Therefore \(u(0,x)=\partial_t u(0,x)=0\) for \(|x-y|\leq t_0\). Using Lemma 4.1.11, we obtain that u(t, x) = 0 in C. In particular, \(u(t_0,y)=0\). This completes the proof of Proposition 4.1.10. □

Using Proposition 4.1.10 and a decomposition of the initial data associated with a partition of unity corresponding to a covering of \(\mathbb T^3\) by sufficiently small balls, we obtain that the result of Theorem 4.1.9 is a consequence of the following statement.

Proposition 4.1.12 (Local in Time Strichartz Inequality for the Wave Equation on \(\mathbb R^3\))

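Let \((p,q)\in \mathbb R^2\) be such that 2 < p ≤ ∞ and \(\frac {1}{p}+\frac {1}{q}=\frac {1}{2}\). Then we have the estimate

\[ \|S_e(t)(u_0,u_1)\|_{L^p([0,1];L^q(\mathbb R^3))}\le C\big(\|u_0\|_{H^{2/p}(\mathbb R^3)}+\|u_1\|_{H^{\frac2p-1}(\mathbb R^3)}\big). \]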

Proof

Let \(\chi \in C^\infty _0(\mathbb R^3)\) be such that χ(x) = 1 for |x| < 1. We then define the Fourier multiplier \(\chi(D_x)\) by

\[ \widehat{\chi(D_x)f}(\xi)=\chi(\xi)\,\widehat f(\xi). \tag{4.1.21} \]

Using a suitable Sobolev embedding in \(\mathbb R^3\), we obtain that for every \(\sigma \in \mathbb R\),

Therefore, by splitting \(u_1\) as

\[ u_1=\chi(D_x)u_1+\big(1-\chi(D_x)\big)u_1 \]

and by expressing the \(\sin \) and \(\cos \) functions as combinations of exponentials, we observe that Proposition 4.1.12 follows from the following statement.

Proposition 4.1.13

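Let \((p,q)\in \mathbb R^2\) be such that 2 < p ≤ ∞ and \( \frac {1}{p}+\frac {1}{q}=\frac {1}{2}. \) Then we have the estimate

\[ \big\|e^{\pm it\sqrt{-\Delta_{\mathbb R^3}}}(f)\big\|_{L^p([0,1];L^q(\mathbb R^3))}\le C\,\|f\|_{H^{2/p}(\mathbb R^3)}. \]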

Remark 4.1.14

Let us make an important remark. As a consequence of Proposition 4.1.13 and a suitable Sobolev embedding, we obtain the estimate

Therefore, we obtain that for \(f\in H^s(\mathbb R^3)\), s > 1, the function \(e^{ it\sqrt {-\Delta _{\mathbb R^3}}}(f)\) which is a priori defined as an element of \(C([0,1];H^s(\mathbb R^3))\) has the remarkable property that

for almost every t ∈ [0, 1]. Recall that the Sobolev embedding requires the condition s > 3∕2 in order to ensure that an \(H^s(\mathbb R^3)\) function is in \(L^\infty (\mathbb R^3)\). Therefore, one may see (4.1.22) as an improvement by 1∕2 derivative, valid for almost every t, of the Sobolev embedding \(H^{\frac {3}{2}+}(\mathbb R^3)\subset L^\infty (\mathbb R^3)\) under the evolution of the linear wave equation.
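The proof of Proposition 4.1.13 below starts from a Littlewood-Paley decomposition of unity. The text only requires suitable symbols, so the radial construction here is our own choice. Take a smooth nonincreasing profile φ equal to 1 on [0, 1] and 0 on [2, ∞), and set \(\psi_0(\xi)=\varphi(|\xi|)\) and \(\psi(\xi)=\varphi(|\xi|)-\varphi(2|\xi|)\), which is supported in \(1/2\le|\xi|\le 2\). The sum then telescopes: \(\psi_0(\xi)+\sum_{j=1}^J\psi(\xi/2^j)=\varphi(|\xi|/2^J)\), which equals 1 once \(|\xi|\le 2^J\). The sketch below checks this on radial values \(r=|\xi|\). The function names are hypothetical.

```python
import numpy as np

def smooth_step(x):
    """C^infinity function equal to 0 for x <= 0 and to 1 for x >= 1."""
    x = np.asarray(x, dtype=float)
    def g(y):
        # exp(-1/y) for y > 0 and 0 otherwise (the inner where avoids dividing by 0)
        return np.where(y > 0, np.exp(-1.0 / np.where(y > 0, y, 1.0)), 0.0)
    return g(x) / (g(x) + g(1.0 - x))

def phi(r):
    """Radial profile: 1 for r <= 1, 0 for r >= 2, smooth and nonincreasing."""
    return 1.0 - smooth_step(np.asarray(r, dtype=float) - 1.0)

def psi0(r):
    return phi(r)

def psi(r):
    """Annular symbol phi(r) - phi(2r), supported in 1/2 <= r <= 2."""
    return phi(r) - phi(2.0 * r)

def partial_sum(r, J):
    """psi_0(r) + sum_{j=1}^{J} psi(r / 2^j), which telescopes to phi(r / 2^J)."""
    return psi0(r) + sum(psi(r / 2.0**j) for j in range(1, J + 1))

r = np.linspace(0.0, 1000.0, 20001)
print(np.max(np.abs(partial_sum(r, 11) - 1.0)))    # 2^11 = 2048 >= 1000: round-off size
```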

Proof of Proposition 4.1.13

Consider a Littlewood-Paley decomposition of unity

\[ \mathrm{Id}=P_0+\sum_{N}P_N, \tag{4.1.23} \]

where the summation is taken over the dyadic values of N, i.e. \(N=2^j\), j = 0, 1, 2, …, and \(P_0\), \(P_N\) are Littlewood-Paley projectors. More precisely, they are defined as Fourier multipliers by \(P_0=\psi_0(D_x)\) and, for N ≥ 1, \(P_N=\psi(D_x/N)\), where \(\psi _0\in C_0^\infty (\mathbb R^3)\) and \(\psi \in C_0^\infty (\mathbb R^3\backslash \{0\})\) are suitable functions such that (4.1.23) holds. The maps \(\psi(D_x/N)\) are defined similarly to (4.1.21) by

\[ \widehat{\psi(D_x/N)f}(\xi)=\psi(\xi/N)\,\widehat f(\xi). \]

Set

Our goal is to evaluate \(\|u\|_{L^p([0,1];L^q(\mathbb R^3))}\). Thanks to the Littlewood-Paley square function theorem, we have that

The proof of (4.1.24) can be obtained as a combination of the Mikhlin-Hörmander multiplier theorem and the Khinchin inequality for Bernoulli variables (see Footnote 1). Using the Minkowski inequality, since p ≥ 2 and q ≥ 2, we can write

Therefore, it suffices to prove that for every \(\psi \in C_0^\infty (\mathbb R^3\backslash \{0\})\) there exists C > 0 such that for every N and every \(f\in L^2(\mathbb R^3)\),

Indeed, suppose that (4.1.26) holds true. Then, we define \(\tilde {P}_{N}\) as \(\tilde {P}_N=\tilde {\psi }(D_x/N)\), where \(\tilde {\psi }\in C_0^\infty (\mathbb R^3\backslash \{0\})\) is such that \(\tilde {\psi }\equiv 1\) on the support of ψ. Then \(P_N=\tilde {P}_{N} P_{N}\). Now, coming back to (4.1.25), using the Sobolev inequality to evaluate \( \|P_0 u\|{ }_{L^p_t L^q_{x}}\) and (4.1.26) to evaluate \(\|P_N u\|{ }_{l^2_N L^p_t L^q_x }\), we arrive at the bound

Therefore, it remains to prove (4.1.26). Set

Our goal is to study the mapping properties of T from \(L^2_{x}\) to \(L^p_tL^q_{x}\). We can write

where \(\frac {1}{p}+\frac {1}{p'}=\frac {1}{q}+\frac {1}{q'}=1\). Note that in order to write (4.1.27) the values 1 and ∞ of p and q are allowed. Next, we can write

where \(T^\star\) is defined by

Indeed, we have

Therefore (4.1.28) follows. But thanks to the Cauchy-Schwarz inequality we can write

Therefore, in order to prove (4.1.26), it suffices to prove the bound

Next, we can write

Therefore, estimate (4.1.26) would follow from the estimate

An advantage of (4.1.29) over (4.1.26) is that we have the same number of variables on both sides of the estimate. Coming back to the definitions of T and \(T^\star\), we can write

Now by using the triangle inequality, for a fixed t ∈ [0, 1], we can write

On the other hand, using the Fourier transform, we can write

Therefore,

where

A simple change of variable leads to

In order to estimate K(t, x − x′), we invoke the following proposition.

Proposition 4.1.15 (Soft Stationary Phase Estimate)

Let d ≥ 1. For every Λ > 0, N ≥ 1 there exists C > 0 such that for every λ ≥ 1, every \(a\in C^{\infty }_0(\mathbb R^d)\), satisfying

every \(\varphi\in C^\infty(\mathrm{supp}(a))\) satisfying

one has the bound

Remark 4.1.16

Observe that in (4.1.31), we do not require upper bounds for the first derivatives of φ.
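A model case helps to see what one nondegenerate direction of the phase buys. Take d = 1, \(\varphi(x)=x^2/2\) and the rapidly decaying amplitude \(a(x)=e^{-x^2}\). This amplitude is not compactly supported, so the example falls outside the hypotheses of Proposition 4.1.15 and serves only as an illustration. The integral \(I(\lambda)=\int e^{i\lambda\varphi}a\) is then given exactly by the Gaussian formula \(I(\lambda)=\sqrt{\pi/(1-i\lambda/2)}\), so \(|I(\lambda)|\sim\sqrt{2\pi/\lambda}\) as λ → ∞: a gain of \(\lambda^{-1/2}\). The sketch below compares a Riemann sum with this exact value. The function names are our own.

```python
import numpy as np

def oscillatory_integral(lam, half_width=5.0, n_pts=500_001):
    """Riemann-sum approximation of I(lam) = int_R exp(i lam x^2 / 2) exp(-x^2) dx."""
    x = np.linspace(-half_width, half_width, n_pts)
    dx = x[1] - x[0]
    return np.sum(np.exp(1j * lam * x**2 / 2 - x**2)) * dx

def exact_integral(lam):
    """Gaussian integral int exp(-alpha x^2) dx = sqrt(pi / alpha), alpha = 1 - i lam / 2."""
    return np.sqrt(np.pi / (1 - 0.5j * lam))

for lam in (10.0, 100.0, 400.0):
    approx = oscillatory_integral(lam)
    print(lam, abs(approx - exact_integral(lam)), np.sqrt(lam) * abs(approx))
# sqrt(lam) * |I(lam)| approaches sqrt(2 pi), about 2.5066, as lam grows
```

In the application below, two good directions are extracted in \(\mathbb R^3\). Each contributes a factor \(\lambda^{-1/2}\), which gives the \(\lambda^{-1}\) decay.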

We will give the proof of Proposition 4.1.15 later. Let us first show how to use it in order to complete the proof of (4.1.26). We claim that

Estimate (4.1.33) trivially follows from the expression defining K(t, x − x′) for |tN| ≤ 1 (one simply ignores the oscillation term). For |Nt| ≥ 1, using Proposition 4.1.15 (with \(a=\psi^2\), N = 2 and d = 3), we get the bound

where

Observe that φ is \(C^\infty\) on the support of ψ and, moreover, it satisfies the assumptions of Proposition 4.1.15. We next observe that

Indeed, since \(\nabla \varphi (\xi )=\pm \frac {\xi }{|\xi |}+t^{-1}N(x-x')\), we obtain that one can split the support of integration into regions in each of which there are two different indices \(j_1,j_2\in\{1,2,3\}\) such that one can perform the change of variable

with a non-degenerate Hessian. More precisely, we have

which is not degenerate for \(\xi_3\neq 0\). Therefore, for \(\xi_3\neq 0\), we can choose \(j_1=1\) and \(j_2=2\). Similarly, for \(\xi_1\neq 0\), we can choose \(j_1=2\) and \(j_2=3\), and for \(\xi_2\neq 0\), we can choose \(j_1=1\) and \(j_2=3\). Therefore, using that the support of ψ does not meet zero, after splitting the support of integration into three regions, choosing the two “good” variables and neglecting the integration with respect to the remaining variable, we obtain that

Thus, we have (4.1.34) which in turn implies (4.1.33).
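Estimate (4.1.33) can also be observed numerically. We make three assumptions: ψ is radial, N = 1, and Fourier normalisation constants are ignored. Our reading of the kernel is \(K(t,x)=\int_{\mathbb R^3}e^{i(t|\xi|+x\cdot\xi)}\psi^2(\xi)\,d\xi\), which may differ from the missing display by the sign of the phase or a constant. This kernel depends only on r = |x| and reduces to \(\frac{4\pi}{r}\int_0^\infty e^{it\rho}\sin(\rho r)\psi^2(\rho)\rho\,d\rho\). Near the light cone r = t it is about \(\frac{2\pi}{t}\int\psi^2(\rho)\rho\,d\rho\). Hence \(t\sup_x|K(t,x)|\) should approach that constant, which is the 1∕t decay of (4.1.33) for |t| ≥ 1. The cutoff and all names below are our own choices.

```python
import numpy as np

def smooth_step(x):
    """C^infinity function equal to 0 for x <= 0 and to 1 for x >= 1."""
    x = np.asarray(x, dtype=float)
    def g(y):
        return np.where(y > 0, np.exp(-1.0 / np.where(y > 0, y, 1.0)), 0.0)
    return g(x) / (g(x) + g(1.0 - x))

def psi(r):
    """Radial annular cutoff phi(r) - phi(2r), supported in 1/2 <= r <= 2."""
    phi = lambda s: 1.0 - smooth_step(s - 1.0)
    return phi(r) - phi(2.0 * r)

RHO = np.linspace(0.5, 2.0, 2001)          # radial frequency grid covering supp(psi)
D_RHO = RHO[1] - RHO[0]
WEIGHT = psi(RHO) ** 2 * RHO               # psi(rho)^2 * rho

def kernel(t, r):
    """K(t, x) at |x| = r > 0 via the radial reduction
    (4 pi / r) * int exp(i t rho) sin(rho r) psi(rho)^2 rho drho (Riemann sum)."""
    integrand = np.exp(1j * t * RHO) * np.sin(RHO * r) * WEIGHT
    return 4 * np.pi / r * np.sum(integrand) * D_RHO

def sup_kernel(t, n_r=1500):
    """Maximum of |K(t, x)| over a grid of radii 0 < r <= 2t + 5."""
    radii = np.linspace(0.05, 2 * t + 5, n_r)
    return max(abs(kernel(t, r)) for r in radii)

limit = 2 * np.pi * np.sum(WEIGHT) * D_RHO   # predicted limit of t * sup|K(t, .)|
for t in (10.0, 40.0, 80.0):
    print(t, t * sup_kernel(t) / limit)       # ratio close to 1: |K| decays like 1/t
```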

Thanks to (4.1.33), we arrive at the estimate

On the other hand, we also have the trivial bound

Therefore, using the basic Riesz-Thorin interpolation theorem, we arrive at the bound

Therefore coming back to (4.1.30), we get

Therefore, the estimate (4.1.29) would follow from the one dimensional estimate

Thanks to our assumption, one has \(\frac {2}{p}<1\) and also

Therefore estimate (4.1.35) is precisely the Hardy-Littlewood-Sobolev inequality (cf. [29]). This completes the proof of (4.1.26), once we provide the proof of Proposition 4.1.15.
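The exponent bookkeeping in the last steps can be recorded as follows. This rests on our reading of the missing displays and is a sketch, not a transcription of the original formulas: (4.1.33) is taken to be the pointwise bound \(|K(t,x)|\le CN^3(1+N|t|)^{-1}\), and the trivial bound is the \(L^2\to L^2\) boundedness of convolution with \(K(t,\cdot)\).

\[
\begin{aligned}
&\|K(t,\cdot)\star f\|_{L^\infty(\mathbb R^3)}\le CN^3(1+N|t|)^{-1}\|f\|_{L^1(\mathbb R^3)},\qquad
\|K(t,\cdot)\star f\|_{L^2(\mathbb R^3)}\le C\|f\|_{L^2(\mathbb R^3)},\\[2pt]
&\text{Riesz-Thorin with weight } \theta=\tfrac2q \text{ on the } L^2 \text{ endpoint, so that } 1-\theta=1-\tfrac2q=\tfrac2p:\\[2pt]
&\|K(t,\cdot)\star f\|_{L^q(\mathbb R^3)}\le C\big(N^3(1+N|t|)^{-1}\big)^{2/p}\|f\|_{L^{q'}(\mathbb R^3)}\le C\,N^{4/p}\,|t|^{-2/p}\,\|f\|_{L^{q'}(\mathbb R^3)},\\[2pt]
&\text{Hardy-Littlewood-Sobolev on }\mathbb R\text{ for } |t|^{-\gamma},\ \gamma=\tfrac2p\in(0,1):\quad 1+\tfrac1p=\tfrac1{p'}+\gamma .
\end{aligned}
\]

Integrating in time then gives an \(L^{p'}_tL^{q'}_x\to L^p_tL^q_x\) bound of size \(CN^{4/p}\) for \(TT^\star\), that is \(CN^{2/p}\) for T. This is the frequency-localised form of the \(H^{2/p}\) regularity that scaling predicts.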

Proof of Proposition 4.1.15

We follow [17]. Consider the first order differential operator defined by

which satisfies \(L(e^{i\lambda\varphi})=e^{i\lambda\varphi}\). We have that

where \(\tilde {L}\) is defined by

As a consequence, we get the bound

where \(N\in \mathbb {N}\). To conclude, we need to estimate the coefficients of \(\tilde {L}\). We shall use the notation \(\langle u\rangle = ( 1 + |u|^2)^{\frac 12}\) and we set \(\lambda=\mu^2\). At first, we consider

We clearly have

and we shall estimate the derivatives of F. Set

We have the following statement.

Lemma 4.1.17

For |α| = k ≥ 1, we have the bound

where \(C:\mathbb R^{+}\rightarrow \mathbb R^{+}\) is a suitable continuous increasing function (which can change from line to line and can always be taken of the form \(C(t)=(1+t)^M\) for a sufficiently large M).

Proof

Using an induction on k, we get that \(\partial^\alpha F\) for |α| = k ≥ 1 is a linear combination of terms of the form

where

Since \(|Q^{(m)}(u)| \lesssim \langle u\rangle ^{ -m- 1} ,\) we get

Moreover, by the Leibniz formula

We therefore have the following bound for \(T_q\)

Next, by using (4.1.39), we note that

Therefore, we get

This completes the proof of Lemma 4.1.17. □

We are now in position to prove the following statement.

Lemma 4.1.18

For \(N\in \mathbb {N}\), we can write \(\tilde {L}^N\) in the form

with the estimates

and more generally for |β| = k,

Proof

We reason by induction on N. First, we notice that \(\tilde {L}\) is of the form

where

Consequently, by using (4.1.37), we get that

and by the Leibniz formula, since \(\partial^\alpha a_j\) for |α| ≥ 1 is a linear combination of terms of the form

we get by using (4.1.38) that for |α| = k ≥ 1,

Consequently, we also find thanks to (4.1.44), (4.1.38) that for |α| = k ≥ 0,

Using (4.1.44) and (4.1.45), we obtain that the assertion of the lemma holds true for N = 1. Next, let us assume that it is true at order N. We have

Consequently, we get that the coefficients are of the form

Therefore, by using (4.1.43) and (4.1.42), we get that (4.1.41) is true for N + 1. In order to prove (4.1.42) for N + 1, we need to evaluate \(\partial ^\gamma a_{\alpha }^{(N+1)}\). The estimate of the contribution of all terms except \(\partial ^\gamma (a_{j} \partial _{j} a_{\beta }^{(N)})\) follows directly from the induction hypothesis. In order to estimate \(\partial ^\gamma ( a_{j} \partial _{j} a_{\beta }^{(N)})\), we need to invoke (4.1.43) and (4.1.44) and the induction hypothesis. This completes the proof of Lemma 4.1.18. □

Finally, thanks to (4.1.36) and Lemma 4.1.18, we get

where

This completes the proof of Proposition 4.1.15. □

This completes the proof of Proposition 4.1.13. □

This completes the proof of Proposition 4.1.12. □
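The \(TT^\star\) argument used above rests on two Hilbert-space facts: \(\|T\|^2=\|TT^\star\|\) and \(\|T^\star F\|^2=\langle TT^\star F,F\rangle\). In the proof the target space is \(L^p_tL^q_x\) with p, q > 2, so only the inequality obtained from Hölder duality is available there. The finite-dimensional check below is our own toy model, with a random complex matrix in place of T, and simply illustrates the two identities.

```python
import numpy as np

rng = np.random.default_rng(0)
# Random complex matrix standing in for T (finite-dimensional toy model)
T = rng.standard_normal((7, 5)) + 1j * rng.standard_normal((7, 5))
T_star = T.conj().T                            # adjoint
norm_T = np.linalg.norm(T, 2)                  # largest singular value = ||T||
norm_TTstar = np.linalg.norm(T @ T_star, 2)    # ||T T^*||
print(norm_T**2, norm_TTstar)                  # agree up to round-off

F = rng.standard_normal(7) + 1j * rng.standard_normal(7)
lhs = np.linalg.norm(T_star @ F) ** 2          # ||T^* F||^2
rhs = np.vdot(F, T @ T_star @ F).real          # <T T^* F, F>
print(lhs, rhs)
```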

Remark 4.1.19

If, in the proof of the Strichartz estimates, we use the triangle inequality instead of the square function theorem and the Young inequality instead of the Hardy-Littlewood-Sobolev inequality, we obtain slightly less precise estimates. These estimates are sufficient to get all sub-critical well-posedness results. However, for initial data with critical Sobolev regularity, the finer arguments using the square function theorem and the Hardy-Littlewood-Sobolev inequality are needed.
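A scalar caricature shows why the square function is sharper than the triangle inequality. Split a function into K pieces of comparable size and attach random signs to them; this is how the Khinchin inequality enters the proof of (4.1.24). The typical size of the sum is then governed by the square sum, of order \(\sqrt K\), whereas the triangle inequality only gives K. The sketch below is our own illustration and not part of the argument. It computes the exact moments \(\mathbb E|\sum_k\varepsilon_ka_k|^p\) for independent fair signs \(\varepsilon_k\) by enumerating all sign patterns. For p = 2 the moment equals \(\sum_k|a_k|^2\) exactly, and Khinchin's inequality says that the other moments are comparable.

```python
import itertools
import math

def sign_moment(a, p):
    """Exact E|sum_k eps_k a_k|^p for independent fair signs eps_k = +-1."""
    total = 0.0
    for signs in itertools.product((-1, 1), repeat=len(a)):
        total += abs(sum(e * x for e, x in zip(signs, a))) ** p
    return total / 2 ** len(a)

a = [1.0] * 12                                   # K = 12 pieces of unit size
square_sum = math.sqrt(sum(x * x for x in a))    # (sum |a_k|^2)^{1/2} = sqrt(12)
triangle = sum(abs(x) for x in a)                # sum |a_k| = 12
print(sign_moment(a, 2) ** 0.5, square_sum, triangle)
print(sign_moment(a, 4) ** 0.25 / square_sum)    # lies between 1 and 3 ** 0.25
```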

4.1.4 Local Well-Posedness in \(H^s\times H^{s-1}\), s ≥ 1∕2

In this section, we use the Strichartz estimates to improve the well-posedness result of Proposition 4.1.1. We shall be able to consider initial data in the more singular Sobolev spaces \(H^s\times H^{s-1}\), s ≥ 1∕2. We start with a definition.

Definition 4.1.20

For 0 ≤ s < 1, a couple of real numbers \((p,q)\), \(\frac 2 s\leq p\leq + \infty\), is s-admissible if

\[ \frac1p+\frac3q=\frac32-s . \]

For T > 0, 0 ≤ s < 1, we define the spaces

and its “dual space”

(p′, q′) being the conjugate couple of (p, q), equipped with their natural norms (notice that to define these spaces, we can keep only the extremal couples corresponding to p = 2∕s and p = +∞ respectively).
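Here is a small exact-arithmetic sketch of s-admissibility. It assumes the relation \(\frac1p+\frac3q=\frac32-s\) with \(\frac2s\le p\le\infty\). This relation is our reconstruction, chosen to be consistent with the two endpoint cases used in the proof of Theorem 4.1.21: p = 2∕s lies on the line 1∕p + 1∕q = 1∕2 of Theorem 4.1.9, and p = ∞ corresponds to the Sobolev embedding \(H^s(\mathbb T^3)\subset L^q(\mathbb T^3)\), \(\frac1q=\frac12-\frac s3\). The helper names are hypothetical. In the reciprocal coordinates (1∕p, 1∕q), the admissible couples form a segment, which is why only the two extremal couples are needed.

```python
from fractions import Fraction

def admissible_endpoints(s):
    """Extremal s-admissible couples, returned as reciprocals (1/p, 1/q)."""
    s = Fraction(s)
    wave_end = (s / 2, (1 - s) / 2)                       # p = 2/s
    sobolev_end = (Fraction(0), Fraction(1, 2) - s / 3)   # p = infinity
    return wave_end, sobolev_end

def is_admissible(s, inv_p, inv_q):
    """Check 0 <= 1/p <= s/2 and 1/p + 3/q = 3/2 - s exactly."""
    s = Fraction(s)
    return 0 <= inv_p <= s / 2 and inv_p + 3 * inv_q == Fraction(3, 2) - s

s = Fraction(1, 2)
wave_end, sobolev_end = admissible_endpoints(s)
print(wave_end, sobolev_end)      # reciprocals 1/4, 1/4 and 0, 1/3: p = q = 4, and p = inf, q = 3
midpoint = tuple((x + y) / 2 for x, y in zip(wave_end, sobolev_end))
print(is_admissible(s, *midpoint))  # True: the admissible set is a segment
```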

We can now state the non homogeneous Strichartz estimates for the three dimensional wave equation on the torus \(\mathbb T^3\).

Theorem 4.1.21

For every 0 < s < 1 and every s-admissible couple (p, q), there exists C > 0 such that for every T ∈ ]0, 1], every \(F\in Y^{1-s}_T\) and every \((u_0,u_1)\in H^s(\mathbb T^3)\times H^{s-1}(\mathbb T^3)\), one has

and

Proof

Thanks to the Hölder inequality, in order to prove (4.1.48), it suffices to consider the two end point cases for p, i.e. p = 2∕s and p = ∞ (the estimate in \(C ([0, T]; H^s( \mathbb T^3))\) is straightforward). The case p = 2∕s follows from Theorem 4.1.9. The case p = ∞ results from the Sobolev embedding. This ends the proof of (4.1.48).

Let us next turn to (4.1.49). We first observe that

follows by duality from (4.1.48). Thanks to (4.1.50), we obtain that it suffices to show

where \((p_1,q_1)\) is s-admissible, \((p_2',q_2')\) is such that \((p_2,q_2)\) is (1 − s)-admissible, and where for shortness we set

Denote by \(\Pi_0\) the projector on the zero Fourier mode on \(\mathbb T^3\), i.e.

We have the bound

By the Hölder inequality

and therefore, it suffices to show the bound

where

By writing the \(\sin \) function as a sum of exponentials, we obtain that (4.1.52) follows from

Observe that \((- \Delta )^{-\frac {1}{2}}\Pi _0^{\perp }\) is well defined as a bounded operator from \(H^s(\mathbb T^3)\) to \(H^{s+1}(\mathbb T^3)\). Set

Thanks to (4.1.48), by writing

we see that the map K is bounded from \(H^s(\mathbb T^3)\) to \(X^s_T\). Consequently, the dual map \(K^*\), defined by

is bounded from \(Y^s_T\) to \(H^{-s}(\mathbb T^3)\). Using the last property with s replaced by 1 − s (which remains in ]0, 1[ if s ∈ ]0, 1[), we obtain the following sequence of continuous mappings

On the other hand, we have

Therefore, we obtain the bound

The passage from (4.1.55) to (4.1.53) can be done by using the Christ-Kiselev [11] argument, as we explain below. By a density argument it suffices to prove (4.1.53) for \(F\in C^{\infty }( [0,T]\times \mathbb T^3 )\). We can of course also assume that

For an integer n ≥ 1 and \(m=0,1,\dots,2^n\), we define \(t_{n,m}\) as

Of course \(0=t_{n,0}\leq t_{n,1}\leq \cdots \leq t_{n,2^n}=T\). Next, we observe that for 0 ≤ α < β ≤ 1 there is a unique n such that \(\alpha\in[2m2^{-n},(2m+1)2^{-n})\) and \(\beta\in[(2m+1)2^{-n},(2m+2)2^{-n})\) for some \(m\in\{0,1,\dots,2^{n-1}-1\}\). Indeed, this can be checked by writing the representations of α and β in base 2 (the number n corresponds to the first binary digit at which α and β differ). Therefore, if we denote by \(\chi_{\tau<t}(\tau,t)\) the characteristic function of the set \(\{(\tau,t):0\leq\tau<t\leq T\}\), then we can write

where \(\chi_{n,m}\) (\(m=0,1,\dots,2^n-1\)) denotes the characteristic function of the interval \([t_{n,m},t_{n,m+1})\). Indeed, in order to obtain (4.1.56), it suffices to apply the previous observation to every (τ, t) with 0 ≤ τ < t ≤ T, with α and β defined as

Therefore, thanks to (4.1.56), we can write

as

The goal is to evaluate the \(L^{p_1}_T L^{q_1}\) norm of the last expression. Using that, for fixed n, the functions \(\chi_{n,2m+1}(t)\) have disjoint supports, we obtain that the \(L^{p_1}_T L^{q_1}\) norm of the last expression can be estimated by

Now, using (4.1.55), we obtain that the last expression is bounded by

By definition

and therefore (4.1.57) equals

The last series is convergent since by the definition of admissible pairs it follows that \(p_2^{\prime }<2<p_1\). Therefore we proved that (4.1.55) indeed implies (4.1.53). This completes the proof of Theorem 4.1.21. □
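The binary-digit observation behind (4.1.56) can be checked mechanically. The following sketch normalises time so that T = 1 and assumes \(t_{n,m}=m2^{-n}\), consistent with \(0=t_{n,0}\le\cdots\le t_{n,2^n}=T\); it takes β < 1, since the half-open intervals \([(2m+1)2^{-n},(2m+2)2^{-n})\) never contain 1. The function names are hypothetical. `dyadic_split` reads off n as the first binary digit at which α and β differ, and `count_terms` verifies, in our reading of the (not displayed) decomposition (4.1.56), that exactly one product \(\chi_{n,2m}(\alpha)\chi_{n,2m+1}(\beta)\) equals one.

```python
from fractions import Fraction as Fr

def dyadic_split(alpha, beta, max_level=64):
    """For 0 <= alpha < beta < 1, return (n, m) such that
    alpha in [2m 2^-n, (2m+1) 2^-n) and beta in [(2m+1) 2^-n, (2m+2) 2^-n)."""
    if not (0 <= alpha < beta < 1):
        raise ValueError("need 0 <= alpha < beta < 1")
    for n in range(1, max_level + 1):
        a = int(alpha * 2 ** n)    # floor(alpha 2^n): first n binary digits of alpha
        b = int(beta * 2 ** n)
        if a != b:
            # the first n-1 digits agree, so a = 2m (digit 0) and b = 2m + 1 (digit 1)
            return n, a // 2
    raise ValueError("alpha and beta agree on the first max_level digits")

def count_terms(alpha, beta, max_level=64):
    """Number of pairs (n, m) with chi_{n,2m}(alpha) chi_{n,2m+1}(beta) = 1,
    where chi_{n,k} is the indicator of [k 2^-n, (k+1) 2^-n)."""
    count = 0
    for n in range(1, max_level + 1):
        h = Fr(1, 2 ** n)
        m = int(alpha * 2 ** (n - 1))    # the only m with 2m h <= alpha < (2m+2) h
        if 2 * m * h <= alpha < (2 * m + 1) * h and (2 * m + 1) * h <= beta < (2 * m + 2) * h:
            count += 1
    return count

print(dyadic_split(Fr(3, 8), Fr(5, 8)))    # (1, 0): binary 0.011 and 0.101 differ at digit 1
```

Counting the hits level by level, rather than trusting the digit argument, is what makes the identity \(\chi_{\tau<t}=\sum_{n}\sum_m(\cdots)\) plausible at a glance: below the first differing digit α and β share an interval, and above it they are separated by more than one interval.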

We can now use Theorem 4.1.21 in order to get the following improvement of Proposition 4.1.1.

Theorem 4.1.22 (Low Regularity Local Well-Posedness)

Let s > 1∕2. Consider the cubic defocusing wave equation

posed on \(\mathbb T^3\). There exist positive constants γ, c and C such that for every Λ ≥ 1, every

satisfying

there exists a unique solution of (4.1.58) on the time interval [0, T], \(T\equiv c\Lambda^{-\gamma}\), with initial data

Moreover the solution satisfies

The solution u is unique in the class \(X^s_T\) described in Definition 4.1.20, and the dependence with respect to the initial data and with respect to the time is continuous. More precisely, if u and \(\tilde {u}\) are two solutions of (4.1.58) with initial data satisfying (4.1.59), then

Finally, if

for some σ ≥ s, then there exists \(c_\sigma>0\) such that

Proof

We shall suppose that s ∈ (1∕2, 1), the case s ≥ 1 being already treated in Proposition 4.1.1. As in the proof of Proposition 4.1.1, we solve the integral equation

by a fixed point argument. Recall that

We shall estimate \(\Phi _{u_0,u_1}(u)\) in the spaces \(X_T^s\) introduced in Definition 4.1.20. Thanks to Theorem 4.1.21

Another use of Theorem 4.1.21 gives

Observe that the couple \((\frac {2}{1+s}, \frac {2}{2-s})\) is the dual of \((\frac {2}{1-s}, \frac {2}{s})\) which is the end point (1 − s)-admissible couple. We also observe that if (p, q) is an s-admissible couple then \(\frac {1}{q}\) ranges in the interval \( [\frac {1}{2}-\frac {s}{2},\frac {1}{2}-\frac {s}{3}]. \) The assumption s ∈ (1∕2, 1) implies

Therefore, for \(q^{\star }\equiv \frac {6}{2-s}\), there exists \(p^\star\) such that \((p^\star,q^\star)\) is an s-admissible couple. By definition, \(p^\star\) is such that

The last relation implies that

Now, using the Hölder inequality in time, we obtain

which in turn implies

Consequently

A similar argument yields

Now, one obtains the existence and the uniqueness statements as in the proof of Proposition 4.1.1. Estimate (4.1.60) follows from (4.1.61) and a similar estimate obtained after differentiation of the Duhamel formula with respect to t. The propagation of regularity statement can be obtained as in the proof of Proposition 4.1.1. This completes the proof of Theorem 4.1.22. □

Concerning the uniqueness statement, we also have the following corollary which results from the proof of Theorem 4.1.22.

Corollary 4.1.23

Let s > 1∕2. Let \((p^\star,q^\star)\) be the s-admissible couple defined by

Then the solution constructed in Theorem 4.1.22 is unique in the class

Remark 4.1.24

As a consequence of Theorem 4.1.22, for each \( (u_0,u_1)\in H^s(\mathbb T^3)\times H^{s-1}(\mathbb T^3) \) there is a solution with maximal existence time \(T^\star\), and if \(T^\star<\infty\) then necessarily

One can also prove a local well-posedness result in the case s = 1∕2, but in this case the dependence of the existence time on the initial data is more involved. Here is a precise statement.

Theorem 4.1.25

Consider the cubic defocusing wave equation

posed on \(\mathbb T^3\). For every

there exists a time T > 0 and a unique solution of (4.1.63) in

with initial data

Proof

For T > 0, using the Strichartz estimates of Theorem 4.1.21, we get

Similarly, we get

Therefore if T is small enough then we can construct the solution by a fixed point argument in \(L^{4}([0,T]\times \mathbb T^3)\). In addition, the Strichartz estimates of Theorem 4.1.21 yield that the obtained solution belongs to \(C([0,T];H^{\frac {1}{2}}(\mathbb T^3))\). This completes the proof of Theorem 4.1.25. □

Remark 4.1.26

Observe that for data in \(H^{\frac {1}{2}}(\mathbb T^3)\times H^{-\frac {1}{2}}(\mathbb T^3)\) we no longer have the small factor \(T^\kappa\), κ > 0, in the estimates for \(\Phi _{u_0,u_1}\). As a consequence, the dependence of the existence time T on the data \((u_0,u_1)\) is much less explicit. In particular, we can no longer conclude that the existence time is the same for all data in a fixed ball of \(H^{\frac {1}{2}}(\mathbb T^3)\times H^{-\frac {1}{2}}(\mathbb T^3)\), and therefore we do not have the blow-up criterion (4.1.62) (with s = 1∕2).

4.1.5 A Constructive Way of Seeing the Solutions

In the proof of Theorem 4.1.22, we used the contraction mapping principle in order to construct the solutions. Therefore, one can define the solutions in a constructive way via the Picard iteration scheme. More precisely, for \((u_0,u_1)\in H^s(\mathbb T^3)\times H^{s-1}(\mathbb T^3)\), we define the sequence \((u^{(n)})_{n\geq 0}\) by \(u^{(0)}=0\) and, for a given \(u^{(n)}\), n ≥ 0, we define \(u^{(n+1)}\) as the solution of the linear wave equation

Thanks to (the proof of) Theorem 4.1.22, the sequence \((u^{(n)})_{n\geq 0}\) converges in \(X^s_T\), and in particular in \(C([0,T];H^s(\mathbb T^3))\), for

One has that

and for n ≥ 1,

where the trilinear map \({\mathcal T}\) is defined as

One then may compute

The expression for u (3) is then

We now observe that for n ≥ 2, the nth Picard iteration \(u^{(n)}\) is a sum from j = 1 to \(j=3^{n-1}\) of j-linear expressions of \(u^{(1)}\). Moreover, the first \(3^{n-2}\) terms of this sum contain the (n − 1)th iteration. Therefore, the solution can be seen as an infinite sum of multilinear expressions of \(u^{(1)}\). The Strichartz inequalities we proved can be used to show that for s ≥ 1∕2,

The last estimate can be used to analyse the multilinear expressions in the expansion and to show its convergence. Observe that we do not exploit any regularising effect in the terms of the expansion. The ill-posedness result of Section 4.1.7 will essentially show that such an effect is in fact not possible. In our probabilistic approach of Section 4.2, we will exploit the fact that the trilinear term in the expression defining the solution is more regular, in the scale of the Sobolev spaces, than the linear one, almost surely with respect to a probability measure on \(H^s\), s < 1∕2.
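The growth of the multilinear degrees in the Picard expansion can be tabulated with a few lines of Python. The recursion displayed before the definition of \({\mathcal T}\) is not reproduced here; the sketch assumes it has the form \(u^{(n+1)}=u^{(1)}-{\mathcal T}(u^{(n)},u^{(n)},u^{(n)})\), with \(u^{(1)}\) presumably the free evolution of \((u_0,u_1)\) (signs and constants play no role in counting degrees). The function name is hypothetical. It confirms that the highest degree in \(u^{(n)}\) is \(3^{n-1}\) and shows that only odd degrees occur, so the j-linear terms with even j in the sum vanish.

```python
def picard_degrees(n):
    """Set of degrees j such that a j-linear term in u^(1) can appear in u^(n),
    for an iteration of the form u^(k+1) = u^(1) - T(u^(k), u^(k), u^(k))."""
    if n < 1:
        raise ValueError("n must be >= 1")
    degrees = {1}                       # u^(1) is linear in the data
    for _ in range(n - 1):
        # expanding the trilinear T(u^(k), u^(k), u^(k)) produces the degrees a + b + c
        degrees = {1} | {a + b + c for a in degrees for b in degrees for c in degrees}
    return degrees

for n in range(1, 5):
    d = picard_degrees(n)
    print(n, max(d), sorted(d)[:6])
```

Only the set of degrees is tracked, not the multilinear operators themselves, which is enough to see the \(3^{n-1}\) growth of the expansion.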

4.1.6 Global Well-Posedness in \(H^s\times H^{s-1}\), for Some \(s<1\)

One may naturally ask whether the solutions obtained in Theorem 4.1.22 can be extended globally in time. Observe that one cannot use the argument of Theorem 4.1.2 because there is no a priori bound available at the \(H^s\), s ≠ 1, level of regularity. However, one has the following partial answer.

Theorem 4.1.27 (Low Regularity Global Well-Posedness)

Let s > 13∕18. Then the local solution obtained in Theorem 4.1.22 can be extended globally in time.

For the proof of Theorem 4.1.27, we refer to [18, 27, 40]. Here, we only present the main idea (introduced in [14]). Let \((u_0,u_1)\in H^s(\mathbb T^3)\times H^{s-1}(\mathbb T^3)\) for some s ∈ (1∕2, 1). Let T ≫ 1. For N ≥ 1, we define a smooth Fourier multiplier \(I_N\) acting as 1 on frequencies \(n\in \mathbb Z^3\) such that |n| ≤ N and as \(N^{1-s}|n|^{s-1}\) on frequencies |n| ≥ 2N. A concrete choice is \(I_N(D)=I((-\Delta)^{1/2}/N)\), where I(x) is a smooth function which equals 1 for x ≤ 1 and equals \(x^{s-1}\) for x ≥ 2. In other words, I(x) is one for x close to zero and decays like \(x^{s-1}\) for x ≫ 1. We choose N = N(T) such that, on the local existence time intervals, the modified energy (which is well-defined in \(H^s\times H^{s-1}\))

does not vary much. This allows us to extend the local solutions up to time T ≫ 1. The analysis contains two steps: a local existence argument for \(I_N u\) under the assumption that the modified energy remains below a fixed size, and an energy increase estimate which replaces the energy conservation used in the proof of Theorem 4.1.2. More precisely, we choose \(N=T^{\gamma}\) for some γ = γ(s) ≥ 1. With this choice of N, the initial size of the modified energy is \(T^{\gamma(1-s)}\). The local well-posedness argument ensures that \(I_N u\) (and thus u as well) exists on a time interval of size \(T^{-\beta}\) for some β > 0, as long as the modified energy remains \(\lesssim T^{\gamma (1-s)}\). The main part of the analysis is an energy increase estimate showing that, on the local existence time interval, the modified energy does not increase by more than \(T^{-\alpha}\) for some α > 0. In order to reach time T, we need to iterate the local existence argument ≈ \(T^{1+\beta}\) times. In order to ensure that at each step of the iteration the modified energy remains \(\lesssim T^{\gamma (1-s)}\), we need to impose the condition

As long as (4.1.64) is satisfied, we can extend the local solutions globally in time. The condition (4.1.64) imposes the lower bound on s in the statement of Theorem 4.1.27. One may conjecture that the global well-posedness in Theorem 4.1.27 holds for every s > 1∕2.
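A concrete smooth symbol I(x) with the properties required above can be built from the standard \(e^{-1/t}\) cut-off. The sketch below is one such choice (an assumption: the text fixes I only for x ≤ 1 and x ≥ 2), and all function names are hypothetical. The last helper samples, on integer radii only, the ratio \(\langle n\rangle^{1-s}I_N(n)/N^{1-s}\). Its boundedness is the basic bound \(\|I_N u\|_{H^1}\lesssim N^{1-s}\|u\|_{H^s}\) behind the fact that the modified energy makes sense for data in \(H^s\times H^{s-1}\).

```python
import math

def _bump_tail(t):
    """exp(-1/t) for t > 0 and 0 otherwise: a C-infinity function."""
    return math.exp(-1.0 / t) if t > 0 else 0.0

def smooth_step(x):
    """C-infinity function equal to 0 for x <= 1 and to 1 for x >= 2."""
    t = x - 1.0
    return _bump_tail(t) / (_bump_tail(t) + _bump_tail(1.0 - t))

def I(x, s):
    """Smooth symbol equal to 1 for 0 <= x <= 1 and to x**(s - 1) for x >= 2."""
    chi = smooth_step(x)
    if chi == 0.0:
        return 1.0
    return (1.0 - chi) + chi * x ** (s - 1.0)

def I_N(n_abs, N, s):
    """Symbol of I_N(D) = I((-Delta)^{1/2} / N) at a frequency with |n| = n_abs."""
    return I(n_abs / N, s)

def h1_over_hs_gain(N, s, n_max):
    """max over integer radii 0 <= |n| <= n_max of <n>^(1-s) I_N(n) / N^(1-s)."""
    return max((1 + n * n) ** ((1 - s) / 2) * I_N(n, N, s)
               for n in range(n_max + 1)) / N ** (1 - s)

s, N = 0.75, 100
print(I_N(N, N, s), I_N(5 * N, N, s), N ** (1 - s) * (5 * N) ** (s - 1))
print(h1_over_hs_gain(N, s, 50 * N))
```

The convex combination in `I` keeps the symbol between \(2^{s-1}\) and 1 on the transition region 1 < x < 2, so no extra growth is created there.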

4.1.7 Local Ill-Posedness in \(H^s\times H^{s-1}\), \(s\in(0,1/2)\)

It turns out that the restriction s > 1∕2 in Theorem 4.1.22 is optimal. Recall that the classical notion of well-posedness in the Hadamard sense requires existence, uniqueness and continuous dependence with respect to the initial data. A very classical example of a PDE for which continuous dependence on the initial data fails is the initial value problem for the Laplace equation with data in Sobolev spaces. Indeed, consider

Equation (4.1.65) has the explicit solution

Then for every \((s_1,s_2)\in \mathbb R^2\), v n satisfies

as n tends to + ∞ but for t ≠ 0,

as n tends to + ∞. Consequently, (4.1.65) is not well-posed in \( H^{s_1}(\mathbb T)\times H^{s_2}(\mathbb T) \) for any \((s_1,s_2)\in \mathbb R^2\) because of the lack of continuous dependence with respect to the initial data at (0, 0).
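The displayed formulas for (4.1.65) and its explicit solution are not reproduced in this text. Purely as an illustration, the sketch below assumes the classical Hadamard data for \(\partial_t^2 v+\partial_x^2 v=0\) on \(\mathbb R\times\mathbb T\), namely \(v(0,x)=0\), \(\partial_t v(0,x)=e^{-\sqrt n}\cos(nx)\), whose solution is \(v_n(t,x)=e^{-\sqrt n}\sinh(nt)\cos(nx)/n\); this need not be the exact choice made in (4.1.65). Working with logarithms avoids overflow and makes the two competing effects visible: the factor \(e^{-\sqrt n}\) beats any power \(n^{s_2}\) at t = 0, while \(\sinh(nt)\) beats \(e^{-\sqrt n}\) and any power \(n^{s_1}\) for t ≠ 0. The function names are hypothetical.

```python
import math

# Illustrative (assumed) example: d_t^2 v + d_x^2 v = 0 on R x T,
# v(0, x) = 0, d_t v(0, x) = exp(-sqrt(n)) cos(n x),
# with explicit solution v_n(t, x) = exp(-sqrt(n)) sinh(n t) cos(n x) / n.
# Norms are computed in log form, dropping the n-independent factor ||cos||_{L^2(T)}.

def log_data_norm(n, s2):
    """log || (v_n(0), d_t v_n(0)) ||_{H^{s1} x H^{s2}} (the first component vanishes)."""
    return -math.sqrt(n) + 0.5 * s2 * math.log1p(n * n)

def log_solution_norm(n, t, s1):
    """log || v_n(t) ||_{H^{s1}} for t != 0."""
    if t == 0:
        raise ValueError("t must be nonzero")
    x = n * abs(t)
    log_sinh = x + math.log1p(-math.exp(-2.0 * x)) - math.log(2.0)   # log sinh(x) without overflow
    return -math.sqrt(n) + log_sinh - math.log(n) + 0.5 * s1 * math.log1p(n * n)

for n in (10 ** 2, 10 ** 4, 10 ** 6):
    print(n, log_data_norm(n, s2=10.0), log_solution_norm(n, t=0.01, s1=-10.0))
```

Even with the most favourable choice of exponents in the sketch (a strong norm on the data, a weak norm on the solution), the data norm tends to zero while the solution norm at t = 0.01 explodes.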

It turns out that a similar phenomenon occurs for the cubic defocusing wave equation with low regularity initial data. As we shall see below, the mechanism responsible for the lack of continuous dependence is, however, quite different from that of (4.1.65). Here is the precise statement.

Theorem 4.1.28

Let us fix s ∈ (0, 1∕2) and \((u_0,u_1)\in C^{\infty }(\mathbb T^3)\times C^\infty (\mathbb T^3)\). Then there exist δ > 0, a sequence \((t_n)_{n=1}^{\infty }\) of positive numbers tending to zero and a sequence \((u_n(t,x))_{n=1}^{\infty }\) of \(C(\mathbb R;C^{\infty }(\mathbb T^3))\) functions such that

with

but

In particular, for every T > 0,

Proof of Theorem 4.1.28

We follow [6, 12, 48]. Consider

subject to initial conditions

where φ is a nontrivial bump function on \(\mathbb R^3\) and

with \(\delta_1>0\) to be fixed later. Observe that for n ≫ 1, we can see φ(nx) as a \(C^\infty\) function on \(\mathbb T^3\).

Thanks to Theorem 4.1.2, we obtain that (4.1.66) with data given by (4.1.67) has a unique global smooth solution, which we denote by \(u_n\). Moreover, \(u_n\in C(\mathbb R;C^{\infty }(\mathbb T^3))\) thanks to the propagation of higher Sobolev regularity and the Sobolev embeddings.

Next, we consider the ODE

Lemma 4.1.29

The Cauchy problem (4.1.68) has a global smooth (non-constant) solution V(t) which is periodic.

Proof

One defines locally in time the solution of (4.1.68) by an application of the Cauchy-Lipschitz theorem. In order to extend the solutions globally in time, we multiply (4.1.68) by V ′. This gives that the solutions of (4.1.68) satisfy

and therefore taking into account the initial conditions, we get

The relation (4.1.69) implies that (V(t), V′(t)) cannot go to infinity in finite time. Therefore the local solution of (4.1.68) is defined globally in time. Let us finally show that V(t) is periodic in time. We first observe that, thanks to (4.1.69), |V(t)| ≤ 1 for all times t. Since V(0) = 1, t = 0 is therefore a local maximum of V(t). We claim that there is \(t_0>0\) such that \(V'(t_0)=0\). Indeed, otherwise V(t) is decreasing on [0, +∞), which implies that V′(t) ≤ 0, and from (4.1.69) we deduce

Integrating the last relation between zero and a positive \(t_0\) gives

Therefore

and we get a contradiction for \(t_0\gg 1\). Hence, there is indeed \(t_0>0\) such that \(V'(t_0)=0\). Coming back to (4.1.69) and using that \(V(t_0)<1\), we deduce that \(V(t_0)=-1\). Therefore \(t=t_0\) is a local minimum of V(t). We can now show, exactly as before, that there exists \(t_1>t_0\) such that \(V'(t_1)=0\) and \(V(t_1)>-1\). Once again using (4.1.69), we infer that \(V(t_1)=1\), i.e. \(V(0)=V(t_1)\) and \(V'(0)=V'(t_1)\). By the uniqueness part of the Cauchy-Lipschitz theorem, we obtain that V is periodic with period \(t_1\). This completes the proof of Lemma 4.1.29. □
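Lemma 4.1.29 can be illustrated numerically. The equation (4.1.68) and the identity (4.1.69) are not displayed in this text; the sketch below assumes they read \(V''+V^3=0\), \(V(0)=1\), \(V'(0)=0\) and \((V')^2+\frac12V^4=\frac12\), which is consistent with |V| ≤ 1, \(V(t_0)=-1\) and \(V(t_1)=1\) in the proof. A classical fourth-order Runge-Kutta scheme (our choice, not part of the text) integrates the ODE until V′ changes sign from positive to nonpositive, i.e. until V returns to its maximum. The result is compared with the period \(4\sqrt2\int_0^1(1-v^4)^{-1/2}\,dv=\sqrt{2\pi}\,\Gamma(\frac14)/\Gamma(\frac34)\) obtained by separating variables in the energy identity. The function names are hypothetical.

```python
import math

def rk4_step(V, W, h):
    """One classical RK4 step for the first-order system V' = W, W' = -V**3."""
    def f(v, w):
        return w, -v ** 3
    k1 = f(V, W)
    k2 = f(V + 0.5 * h * k1[0], W + 0.5 * h * k1[1])
    k3 = f(V + 0.5 * h * k2[0], W + 0.5 * h * k2[1])
    k4 = f(V + h * k3[0], W + h * k3[1])
    return (V + h / 6.0 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
            W + h / 6.0 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))

def first_return_time(h=1e-3, t_max=20.0):
    """Integrate V'' + V**3 = 0, V(0) = 1, V'(0) = 0 until V' crosses zero from above.
    Returns (t1, max |V|, max drift of the energy (V')**2 + V**4 / 2 from 1/2)."""
    V, W, t = 1.0, 0.0, 0.0
    max_abs_V, max_drift = 1.0, 0.0
    while t < t_max:
        V_new, W_new = rk4_step(V, W, h)
        max_abs_V = max(max_abs_V, abs(V_new))
        max_drift = max(max_drift, abs(W_new ** 2 + 0.5 * V_new ** 4 - 0.5))
        if W > 0.0 and W_new <= 0.0:
            # linear interpolation of the zero of V' between t and t + h
            return t + h * W / (W - W_new), max_abs_V, max_drift
        V, W, t = V_new, W_new, t + h
    raise RuntimeError("V did not return to its maximum before t_max")

exact = math.sqrt(2.0) * math.gamma(0.25) * math.sqrt(math.pi) / math.gamma(0.75)
t1, vmax, drift = first_return_time()
print(t1, exact, vmax, drift)
```

The monitored energy drift and the bound |V| ≤ 1 are the numerical counterparts of the two uses of (4.1.69) in the proof: global existence and the identification of the turning points.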

We next denote by \(v_n\) the solution of

It is now clear that

In the next lemma, we collect the needed bounds on \(v_n\).

Lemma 4.1.30

Let

for some \(\delta_2>\delta_1\). Then we have the following bounds for \(t\in[0,t_n]\),

Finally, there exists \(n_0\gg 1\) such that for \(n\geq n_0\),

Proof

Estimates (4.1.71) and (4.1.72) follow from the general bound

where \(t\in[0,t_n]\) and σ ≥ 0. For integer values of σ, the bound (4.1.75) is a direct consequence of the definition of \(v_n\). For fractional values of σ, one needs to invoke an elementary interpolation inequality in Sobolev spaces. Estimate (4.1.73) follows directly from the definition of \(v_n\). The proof of (4.1.74) is slightly more delicate. We first observe that, for n ≫ 1, we have the lower bound

Now, we can obtain (4.1.74) by invoking (4.1.75) (with σ = 2), the lower bound (4.1.76) and the interpolation inequality

for some θ > 0. It therefore remains to show (4.1.76). After differentiating the expression defining \(v_n\) once, we see that (4.1.76) follows from the following statement.

Lemma 4.1.31

Consider a smooth, periodic, not identically zero function V and a nontrivial bump function \(\phi \in C^\infty _0( \mathbb {R}^d)\). Then there exist c > 0 and \(\lambda_0\geq 1\) such that for every \(\lambda>\lambda_0\)

Proof

We can suppose that the period of V is 2πL for some L > 0. Consider the Fourier expansion of V ,

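Since φ is a nontrivial compactly supported function, its gradient does not vanish identically; hence, after a rotation of the coordinates, we can assume that there is an open ball B of \(\mathbb R^d\) such that, for some \(c_0>0\), \(|\partial _{x_1}\phi (x)|\geq c_0\) on B. Let 0 ≤ ψ ≤ 1 be a nontrivial \(C^\infty _0(B)\) function. We can write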

where

and

Clearly, \(I_1>0\) is independent of λ. On the other hand

Therefore, after an integration by parts, we obtain that \(|I_{2}|\lesssim \lambda ^{-1}\). This completes the proof of Lemma 4.1.31. □

This completes the proof of Lemma 4.1.30. □
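The mechanism in the proof of Lemma 4.1.31, a λ-independent diagonal term plus oscillatory cross terms that decay as λ grows, can be seen in a one-dimensional example. The statement of the lemma and the expressions for \(I_1\), \(I_2\) are not displayed here, so the quantity computed below, \(\int\psi|V(\lambda\phi)|^2\,dx\big/\int\psi\,dx\), is our reading of the mechanism rather than a transcription of the lemma. The choices V(t) = cos t + (1/2) sin 2t, φ(x) = x + x²/2 (so that φ′ ≥ 1 on the support of ψ) and the cut-off ψ are hypothetical. As λ grows, the ratio should approach the mean of V² over a period, here 1/2 + 1/8 = 0.625, which corresponds to the diagonal contribution \(\sum_k|c_k|^2\).

```python
import math

def V(t):
    """A 2*pi-periodic, non-constant test function; the mean of V**2 is 1/2 + 1/8."""
    return math.cos(t) + 0.5 * math.sin(2.0 * t)

def phi(x):
    """Phase with phi'(x) = 1 + x >= 1 on [0, 1], playing the role of phi on the ball B."""
    return x + 0.5 * x * x

def psi(x):
    """Smooth cut-off supported in (0, 1)."""
    return math.exp(-1.0 / (x * (1.0 - x))) if 0.0 < x < 1.0 else 0.0

def normalised_integral(lam, M=100_000):
    """Midpoint-rule value of int psi |V(lam phi)|^2 dx / int psi dx over [0, 1]."""
    num = den = 0.0
    for i in range(M):
        x = (i + 0.5) / M
        w = psi(x)
        num += w * V(lam * phi(x)) ** 2
        den += w
    return num / den

for lam in (1.0, 10.0, 100.0, 1000.0):
    print(lam, normalised_integral(lam))    # tends to 0.625 as lam grows
```

The non-vanishing of φ′ is what lets the cross terms \(e^{i(k-k')\lambda\phi/L}\) be integrated by parts; replacing φ by a function with a critical point on the support of ψ would slow down their decay.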

We next consider the semi-classical energy

We are going to show that, for very small times, \(u_n\) and \(v_n+S(t)(u_0,u_1)\) are close with respect to \(E_n\), while these small times are still long enough to produce the needed amplification of the \(H^s\) norm. We emphasise that this amplification is a phenomenon related only to the solution of (4.1.70). Here is the precise statement.

Lemma 4.1.32

There exist ε > 0, \(\delta_2>0\) and C > 0 such that for \(\delta_1<\delta_2\), if we set

then for every n ≫ 1 and every \(t\in[0,t_n]\),

Moreover,

Proof

Set \(u_L=S(t)(u_0,u_1)\) and \(w_n=u_n-u_L-v_n\). Then \(w_n\) solves the equation

with initial data

Set

Multiplying Eq. (4.1.78) by \(\partial_t w_n\) and integrating over \(\mathbb T^3\) gives

which in turn implies

Similarly, by first differentiating (4.1.78) with respect to the spatial variables, we get the bound

Now, using (4.1.79) and (4.1.80), we obtain the estimate

Therefore using (4.1.71), (4.1.72), we get

where \(G\equiv G_1+G_2\) with

and

Since \(u_L\in C^{\infty }(\mathbb {R}\times \mathbb T^3)\) is independent of n, using (4.1.73) and (4.1.75) we can estimate \(G_1\) as follows

Writing, for \(t\in[0,t_n]\),

we obtain

Set

Observe that \(e_n(w_n(t))\) is increasing. Using (4.1.82) (with k = 0, 1), (4.1.73) and the Leibniz rule, we get that for \(t\in[0,t_n]\) and for l = 1, 2,

Thanks to the Gagliardo-Nirenberg inequality and (4.1.82) with k = 0, we get, for \(t\in[0,t_n]\),

Hence, we can use (4.1.83) to treat the quadratic and cubic terms in \(w_n\) and to get the bound

Therefore, coming back to (4.1.81), we get for \(t\in[0,t_n]\),

We now observe that

follows directly from the definition. Therefore, we have the bound

We first suppose that \(e_n(w_n(t))\leq 1\). This property holds for small values of t since \(E_n(w_n(0))=e_n(w_n(0))=0\). In addition, the estimate for \(e_n(w_n(t))\) we are looking for is much stronger than \(e_n(w_n(t))\leq 1\). Therefore, once we prove the desired estimate for \(e_n(w_n(t))\) under the assumption \(e_n(w_n(t))\leq 1\), we can use a bootstrap argument to get the estimate without this assumption.

Estimate (4.1.84) yields that for \(t\in[0,t_n]\),

and consequently

An integration of the last estimate gives that for \(t\in[0,t_n]\),

(one should see \(\delta_2\) as \(3\delta_2-2\delta_2\) and s − 1∕2 as 1 − (3∕2 − s)). Since s < 1∕2, by taking \(\delta_2>0\) small enough, we obtain that there exists ε > 0 such that for \(t\in[0,t_n]\),

and in particular one has for \(t\in[0,t_n]\),

We next estimate \(\|w_{n}(t,\cdot )\|_{L^2}\). We may write for \(t\in[0,t_n]\),

Thanks to (4.1.85) and the definition of \(t_n\), we get

Therefore, since s < 1∕2,

An interpolation between (4.1.85) and (4.1.86) yields (4.1.77). This completes the proof of Lemma 4.1.32. □

Using Lemma 4.1.32, we may write

Recall that (4.1.74) yields

provided n ≫ 1. Therefore, by choosing \(\delta_1\) small enough (depending on \(\delta_2\) fixed in Lemma 4.1.32), we obtain that there exists δ > 0 such that

which in turn implies that

This completes the proof of Theorem 4.1.28. □

Theorem 4.1.28 implies that the Cauchy problem associated with the cubic defocusing wave equation,

is ill-posed in \(H^s(\mathbb T^3)\times H^{s-1}(\mathbb T^3)\) for s ∈ (0, 1∕2) because of the lack of continuous dependence at any \(C^{\infty }(\mathbb T^3)\times C^{\infty }(\mathbb T^3)\) initial data.

For future reference, we also state the following consequence of Theorem 4.1.28.

Theorem 4.1.33

Let us fix s ∈ (0, 1∕2), T > 0 and

Then there exists a sequence \((u_n(t,x))_{n=1}^{\infty }\) of \(C(\mathbb R;C^{\infty }(\mathbb T^3))\) functions such that

with

but

Proof

Let \((u_{0,m}, u_{1,m})_{m=1}^{\infty }\) be a sequence of \(C^{\infty }(\mathbb T^3)\times C^{\infty }(\mathbb T^3)\) functions such that

For a fixed m, we apply Theorem 4.1.28 in order to find a sequence \((u_{m,n}(t,x))_{n=1}^{\infty }\) of \(C(\mathbb R;C^{\infty }(\mathbb T^3))\) functions such that

with

and for every m ≥ 1,

Now, using the triangle inequality, we obtain that for every l ≥ 1 there is \(M_0(l)\) such that for every \(m\geq M_0(l)\) there is \(N_0(m)\) such that for every \(n\geq N_0(m)\),

Thanks to (4.1.87), we obtain that for every m ≥ 1 there exists \(N_1(m)\geq N_0(m)\) such that for every \(n\geq N_1(m)\),

We now observe that

is a sequence of solutions of the cubic defocusing wave equation satisfying the conclusions of Theorem 4.1.33. □

Remark 4.1.34

It is worth mentioning that we arrive at a sharp local well-posedness result for the cubic wave equation without overly complicated technicalities, because we need no smoothing effect to recover derivative losses, either in the nonlinearity or in the nonhomogeneous Strichartz estimates. The \(X^{s,b}\) spaces of Bourgain are an efficient tool to deal with these two difficulties. These developments go beyond the scope of these lectures.

4.1.8 Extensions to More General Nonlinearities

One may consider the wave equation with a more general nonlinearity than the cubic one. Namely, let us consider the nonlinear wave equation

posed on \(\mathbb T^3\), where α > 0 measures the “degree” of the nonlinearity. If u(t, x) is a solution of (4.1.88) posed on \(\mathbb R^3\), then so is \(u_{\lambda }(t,x)=\lambda ^{\frac {2}{\alpha }}u(\lambda t,\lambda x)\). Moreover

which implies that \(H^s\) with \(s=\frac {3}{2}-\frac {2}{\alpha }\) is the critical Sobolev regularity for (4.1.88). Based on this scaling argument, one may expect that for \(s>\frac {3}{2}-\frac {2}{\alpha }\) the Cauchy problem associated with (4.1.88) is well-posed in \(H^s\times H^{s-1}\) and that for \(s<\frac {3}{2}-\frac {2}{\alpha }\) it is ill-posed in \(H^s\times H^{s-1}\). In this section, we have verified that this is indeed the case for α = 2.

For 2 < α < 4, a small modification of the proof of Theorem 4.1.22 shows that (4.1.88) is locally well-posed in \(H^s\times H^{s-1}\) for \(s\in (\frac {3}{2}-\frac {2}{\alpha },\alpha )\). Then, as in the proof of Theorem 4.1.2, we can show that (4.1.88) is globally well-posed in \(H^1\times L^2\). Moreover, a small modification of the proof of Theorem 4.1.28 shows that for \(s\in (0,\frac {3}{2}-\frac {2}{\alpha })\) the Cauchy problem for (4.1.88) is locally ill-posed in \(H^s\times H^{s-1}\).

For α = 4, we can prove a local well-posedness statement for (4.1.88) as in Theorem 4.1.25. The global well-posedness in \(H^1\times L^2\) for α = 4 is much more delicate than the globalisation argument of Theorem 4.1.2. It is, however, possible to show that (4.1.88) is globally well-posed in \(H^1\times L^2\) (see [20, 21, 41, 42]). The new global information for α = 4, in addition to the conservation of the energy, is the Morawetz estimate, which is a quantitative way to contradict the blow-up criterion in the case α = 4.

For α > 4, the Cauchy problem associated with (4.1.88) is still locally well-posed in \(H^s\times H^{s-1}\) for some \(s>\frac {3}{2}-\frac {2}{\alpha }\). The global well-posedness (i.e. global existence, uniqueness and propagation of regularity) of (4.1.88) for α > 4 is an outstanding open problem. For α > 4, the argument used in Theorem 4.1.28 may allow one to construct weak solutions in \(H^1\times L^2\) with initial data in \(H^\sigma\), \(1<\sigma <\frac {3}{2}-\frac {2}{\alpha }\), which lose their \(H^\sigma\) regularity. See [28] for such a result for (4.1.88) posed on \(\mathbb R^3\).
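The scaling computation leading to \(s=\frac32-\frac2\alpha\) reduces to bookkeeping of powers of λ. The sketch below assumes that the nonlinearity in (4.1.88), which is not displayed here, has degree α + 1 (for instance \(|u|^\alpha u\)), and uses the elementary fact that \(\|f(\lambda\,\cdot)\|_{\dot H^s(\mathbb R^3)}=\lambda^{s-\frac32}\|f\|_{\dot H^s(\mathbb R^3)}\). Function names are hypothetical; exact fractions make the critical exponents for α = 2 (\(s_c=\frac12\)) and α = 4 (\(s_c=1\)) come out exactly.

```python
from fractions import Fraction as Fr

def equation_exponents(alpha):
    """Powers of lambda produced by the linear part (d_t^2 - Delta) and by a
    nonlinearity of degree alpha + 1 when applied to u_lambda."""
    linear = Fr(2) / Fr(alpha) + 2                       # two derivatives on lambda^(2/alpha) u
    nonlinear = (Fr(2) / Fr(alpha)) * (Fr(alpha) + 1)    # (lambda^(2/alpha))^(alpha + 1)
    return linear, nonlinear

def scaling_exponent(s, alpha):
    """Exponent e with ||u_lambda(0)||_{dot H^s(R^3)} = lambda**e ||u(0)||_{dot H^s(R^3)}."""
    return Fr(2) / Fr(alpha) + Fr(s) - Fr(3, 2)

def critical_regularity(alpha):
    """The unique s with scaling_exponent(s, alpha) == 0, i.e. s_c = 3/2 - 2/alpha."""
    return Fr(3, 2) - Fr(2) / Fr(alpha)

for alpha in (2, 3, 4, 6):
    print(alpha, critical_regularity(alpha))
```

A negative scaling exponent (s below \(s_c\)) means that concentrating the data at small scales makes its norm small, which is the heuristic behind the ill-posedness results above.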

4.2 Probabilistic Global Well-Posedness for the 3d Cubic Wave Equation in \(H^s\), \(s\in[0,1]\)

4.2.1 Introduction

Consider again the Cauchy problem for the cubic defocusing wave equation

where

In the previous section, we showed that (4.2.1) is (at least locally in time) well-posed in \({\mathcal H}^s(\mathbb T^3)\), s ≥ 1∕2. The main ingredients in the proof for s ∈ [1∕2, 1) were the Strichartz estimates for the linear wave equation. We also showed that for s ∈ (0, 1∕2) the Cauchy problem (4.2.1) is ill-posed in \({\mathcal H}^s(\mathbb T^3)\).

One may, however, ask whether some sort of well-posedness for (4.2.1) survives for s < 1∕2. We will show below that this is indeed possible if we are willing to “randomise” the initial data. This means that we will endow \({\mathcal H}^s(\mathbb T^3)\), s ∈ (0, 1∕2), with suitable probability measures and we will show that the Cauchy problem (4.2.1) is well-posed in a suitable sense for initial data \((u_0,u_1)\) in a set of full measure.

Let us now describe these measures. Starting from \((u_0,u_1)\in {\mathcal H}^s\) given by their Fourier series

we define \(u_{j}^\omega \) by

where \((\alpha_j(\omega),\beta_{n,j}(\omega),\gamma_{n,j}(\omega))\), \(n\in \mathbb Z^3_{\star }\), j = 0, 1, is a sequence of real random variables on a probability space \((\Omega ,p,{\mathcal F})\). We assume that the random variables \((\alpha _j,\beta _{n,j},\gamma _{n,j})_{n\in \mathbb Z^3_{\star },j=0,1}\) are independent, identically distributed real random variables with a distribution θ satisfying

(notice that under the assumption (4.2.3) the random variables are necessarily of mean zero). Typical examples (see Remark 4.2.13 below) of random variables satisfying (4.2.3) are the standard Gaussians, i.e.

(with an identity in (4.2.3)) or the Bernoulli variables

An advantage of the Bernoulli randomisation is that it preserves the \({\mathcal H}^s\) norm of the original function. The Gaussian randomisation has the advantage of “generating” a dense set in \({\mathcal H}^s\) via the map

for most of \((u_0,u_1)\in {\mathcal H}^s\) (see Proposition 4.2.2 below).
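The effect of the randomisation on the \({\mathcal H}^s\) norm can be checked on a truncated example. The randomisation formula is not displayed in this text, so the sketch below simply multiplies each real Fourier coefficient of a hypothetical truncated datum (modes \(n\in\mathbb Z^3\) with \(|n_i|\le 2\), coefficients \(\langle n\rangle^{-2}\)) by an independent random variable. In the actual construction the zero mode and the cosine and sine parts are randomised separately, which changes nothing in this computation. With Bernoulli signs the \(H^s\) norm is preserved exactly, while with standard Gaussians it is preserved only in mean, since \(\mathbb E(g^2)=1\). The function names are hypothetical.

```python
import itertools
import random

def hs_norm_sq(coeffs, s):
    """Sum over modes n of <n>^(2s) c_n^2 for a dict {mode n in Z^3: real coefficient}."""
    return sum((1 + sum(k * k for k in n)) ** s * c * c for n, c in coeffs.items())

def randomise(coeffs, law, rng):
    """Multiply every coefficient by an independent draw from the given law."""
    if law == "bernoulli":
        return {n: c * rng.choice((-1.0, 1.0)) for n, c in coeffs.items()}
    if law == "gaussian":
        return {n: c * rng.gauss(0.0, 1.0) for n, c in coeffs.items()}
    raise ValueError(f"unknown law {law!r}")

# Hypothetical truncated datum: modes with |n_i| <= 2, coefficients <n>^(-2).
modes = itertools.product(range(-2, 3), repeat=3)
u0 = {n: (1 + sum(k * k for k in n)) ** -1.0 for n in modes}
s, rng = 0.3, random.Random(0)

exact = hs_norm_sq(u0, s)
bern = hs_norm_sq(randomise(u0, "bernoulli", rng), s)
gauss_mean = sum(hs_norm_sq(randomise(u0, "gaussian", rng), s) for _ in range(2000)) / 2000
print(exact, bern, gauss_mean)
```

A single Gaussian draw can change the norm noticeably; only its average over many draws reproduces the original value, which is consistent with the Gaussian randomisation spreading the measure over a dense set.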

Definition 4.2.1

For fixed \((u_0, u_1) \in \mathcal {H}^s\), the map (4.2.4) is a measurable map from \((\Omega ,{\mathcal F})\) to \({\mathcal H}^s\) endowed with the Borel sigma algebra since the partial sums form a Cauchy sequence in \(L^2(\Omega ;{\mathcal H}^s)\). Thus (4.2.4) endows the space \({\mathcal H}^s(\mathbb T^3)\) with a probability measure which is the direct image of p. Let us denote this measure by \(\mu _{(u_0, u_1)}\). Then

Denote by \({\mathcal M}^s\) the set of measures obtained following this construction:

Here are two basic properties of these measures.

Proposition 4.2.2

For any s′ > s, if \((u_0, u_1) \notin \mathcal {H}^{s'}\), then

In other words, the randomisation (4.2.4) does not regularise in the scale of the \(L^2\)-based Sobolev spaces (this fact is obvious for the Bernoulli randomisation). Next, if \((u_0,u_1)\) have all their Fourier coefficients different from zero and if \(\mathrm {supp}(\theta )=\mathbb R\), then \(\mathrm{supp}(\mu _{(u_0, u_1)})={\mathcal H^s}\). In other words, under these assumptions, for any \((w_0, w_1)\in \mathcal {H}^s\) and any ε > 0,

or, in yet other words, any set of full \(\mu _{(u_0, u_1)}\)-measure is dense in \(\mathcal {H}^s\).

We have the following global existence and uniqueness result for typical data with respect to an element of \({\mathcal M}^s\).

Theorem 4.2.3 (Existence and Uniqueness)

Let us fix s ∈ (0, 1) and \(\mu \in {\mathcal M}^s\). Then there exists a set \(\varSigma {\subset } {\mathcal H}^s(\mathbb T^3)\) of full μ-measure such that for every \((v_0,v_1)\in\varSigma\) there exists a unique global solution v of the nonlinear wave equation

satisfying

Furthermore, if we denote by

the flow thus defined, the set Σ is invariant under the map Φ(t), namely

The next statement gives quantitative bounds on the solutions.

Theorem 4.2.4 (Quantitative Bounds)

Let us fix s ∈ (0, 1) and \(\mu \in {\mathcal M}^s\). Let Σ be the set constructed in Theorem 4.2.3. Then for every ε > 0 there exist C, δ > 0 such that for every \((v_0,v_1)\in\varSigma\), there exists M > 0 such that the global solution to (4.2.6) constructed in Theorem 4.2.3 satisfies

with

and

Remark 4.2.5

Recall that Π0 is the orthogonal projector on the zero Fourier mode and \(\Pi _0^{\perp } = {\text{Id}} - \Pi _0\).

We now further discuss the uniqueness of the obtained solutions. For s > 1∕2, we have the following statement.

Theorem 4.2.6 (Unique Limit of Smooth Solutions for s > 1∕2)

Let s ∈ (1∕2, 1). With the notations of the statement of Theorem 4.2.3, let us fix an initial datum \((v_0,v_1)\in\varSigma\) with a corresponding global solution v(t). Let \((v_{0,n},v_{1,n})_{n=1}^{\infty }\) be a sequence of elements of \({\mathcal H}^1(\mathbb T^3)\) such that

Denote by v n(t) the solution of the cubic defocusing wave equation with data (v 0,n, v 1,n) defined in Theorem 4.1.2. Then for every T > 0,

Thanks to Theorem 4.1.33, we know that for s ∈ (0, 1∕2) the result of Theorem 4.2.6 cannot hold true! We only have the following partial statement.

Theorem 4.2.7 (Unique Limit of Particular Smooth Solutions for s < 1∕2)

Let s ∈ (0, 1∕2). With the notations of the statement of Theorem 4.2.3, let us fix an initial datum \((v_0,v_1)\in\varSigma\) with a corresponding global solution v(t). Let \((v_{0,n},v_{1,n})_{n=1}^{\infty }\) be the sequence of \(C^\infty (\mathbb T^3)\times C^\infty (\mathbb T^3)\) functions defined by the usual regularisation by convolution, i.e.

where \((\rho _n)_{n=1}^{\infty }\) is an approximate identity. Denote by \(v_n(t)\) the solution of the cubic defocusing wave equation with data \((v_{0,n},v_{1,n})\) defined in Theorem 4.1.2. Then for every T > 0,

Remark 4.2.8

We emphasise that the result of Theorem 4.1.33 applies to the elements of Σ. More precisely, thanks to Theorem 4.1.33, for every \((v_0,v_1)\in\varSigma\) there is a sequence \((v_{0,n},v_{1,n})_{n=1}^{\infty }\) of elements of \(C^\infty (\mathbb T^3)\times C^\infty (\mathbb T^3)\) such that

but such that if we denote by v n(t) the solution of the cubic defocusing wave equation with data (v 0,n, v 1,n) defined in Theorem 4.1.2 then for every T > 0,

Therefore, the choice of the particular regularisation of the initial data in Theorem 4.2.7 is of key importance. It would be interesting to classify the “admissible types of regularisation” yielding a statement such as Theorem 4.2.7.

Remark 4.2.9

We can also see the solutions constructed in Theorem 4.2.3 as the (unique) limit as N tends to infinity of the solutions of the following truncated versions of the cubic defocusing wave equation.

where \(S_N\) is a Fourier multiplier localising on modes of size ≤ N. The convergence of a subsequence can be obtained by a compactness argument (cf. [9]). However, the convergence of the whole sequence requires strong solution techniques.

The next question is whether some sort of continuous dependence with respect to the initial data survives in the context of Theorem 4.2.3. In order to state our result on continuous dependence with respect to the initial data, we recall that for any event B of nonzero probability, the conditional probability p(⋅|B) is the natural probability measure supported by B, defined by

We have the following statement.

Theorem 4.2.10 (Conditioned Continuous Dependence)

Let us fix s ∈ (0, 1), let A > 0, let \(B_A\equiv \{V\in {\mathcal H}^s : \|V\|_{{\mathcal H}^s}\leq A\}\) be the closed ball of radius A centered at the origin of \({\mathcal H}^s\) and let T > 0. Let \(\mu \in {\mathcal M}^s\) and suppose that θ (the law of our random variables) is symmetric. Let Φ(t) be the flow of the cubic wave equation defined μ-almost everywhere in Theorem 4.2.3. Then for ε, η > 0, we have the bound

where \( X_{T}\equiv (C ([0,T]; \mathcal {H}^s)\cap L^4([0,T]\times \mathbb T^3))\times C([0,T];H^{s-1}) \) and g(ε, η) is such that

Moreover, if for s ∈ (0, 1∕2) we assume in addition that the support of μ is the whole of \({\mathcal H}^s\) (which is true if, in the definition of the measure μ, we have \(a_{i}, b_{n,j}, c_{n,j} \neq 0, \forall n \in \mathbb {Z}^d\), and the support of the distribution function of the random variables is \(\mathbb {R}\)), then there exists ε > 0 such that for every η > 0 the left-hand side in (4.2.7) is positive.

A probability measure θ on \(\mathbb R\) is called symmetric if

A real random variable is called symmetric if its distribution is a symmetric measure on \(\mathbb R\).

Theorem 4.2.10 says that, as soon as η ≪ ε, among the initial data which are η-close to each other, the probability of finding two for which the corresponding solutions to (4.2.1) do not remain ε-close to each other is very small. The last part of the statement says that the deterministic version of the uniform continuity property (4.2.7) does not hold and, in some sense, that one cannot dispense with a probabilistic approach to the question of continuous dependence (in \({\mathcal H}^s\), s < 1∕2) with respect to the data. The ill-posedness result of Theorem 4.1.28 will be important in the proof of the last part of Theorem 4.2.10.

4.2.2 Probabilistic Strichartz Estimates

Lemma 4.2.11

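Let \((l_n(\omega ))_{n=1}^{\infty }\) be a sequence of real, independent random variables with associated sequence of distributions \((\theta _{n})_{n=1}^{\infty }\). Assume that the \(\theta_n\) satisfy the property

\(\exists\, c>0:\ \forall \gamma\in\mathbb R,\ \forall n\geq 1,\quad \Big|\int_{\mathbb R} e^{\gamma x}\,d\theta_n(x)\Big|\leq e^{c\gamma^2}.\)   (4.2.8)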

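Then there exists α > 0 such that for every λ > 0 and every sequence \((c_n)_{n=1}^{\infty }\in l^2\) of real numbers,

\(p\Big(\omega:\ \Big|\sum_{n=1}^{\infty} c_n l_n(\omega)\Big|>\lambda\Big)\leq 2\exp\Big(-\dfrac{\alpha\lambda^2}{\sum_{n=1}^{\infty} c_n^2}\Big).\)   (4.2.9)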

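As a consequence, there exists C > 0 such that for every p ≥ 2 and every \((c_n)_{n=1}^{\infty }\in l^2\),

\(\Big\|\sum_{n=1}^{\infty} c_n l_n(\omega)\Big\|_{L^p(\Omega)}\leq C\sqrt{p}\,\Big(\sum_{n=1}^{\infty} c_n^2\Big)^{1/2}.\)   (4.2.10)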

Remark 4.2.12

The property (4.2.8) is equivalent to assuming that the \(\theta_n\) are of zero mean and that

Remark 4.2.13

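Let us notice that (4.2.8) is readily satisfied if \((l_n(\omega ))_{n=1}^{\infty }\) are standard real Gaussian or standard Bernoulli variables. Indeed, in the case of Gaussian variables,

\(\int_{\mathbb R} e^{\gamma x}\,d\theta_n(x)=\dfrac{1}{\sqrt{2\pi}}\int_{\mathbb R} e^{\gamma x-x^2/2}\,dx=e^{\gamma^2/2}.\)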

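In the case of Bernoulli variables, one obtains (4.2.8) by invoking the inequality

\(\dfrac{e^{\gamma}+e^{-\gamma}}{2}=\cosh\gamma\leq e^{\gamma^2/2},\)

which follows by comparing the Taylor coefficients of both sides, since \((2k)!\geq 2^k k!\).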

More generally, we can observe that (4.2.11) holds if \(\theta_n\) is compactly supported.
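The three examples can be checked numerically. The following Python sketch is only an illustration and is not part of the argument. It evaluates the Gaussian moment generating function by trapezoidal quadrature and compares it with \(e^{\gamma^2/2}\). It checks the Bernoulli inequality \(\cosh\gamma\leq e^{\gamma^2/2}\). It also checks that the uniform law on [−1, 1] satisfies \(\sinh\gamma/\gamma\leq e^{\gamma^2/6}\), i.e. a bound of the form \(e^{c\gamma^2}\) with c = 1∕6; this law is a compactly supported, zero-mean example chosen here for illustration. The function names and quadrature parameters are hypothetical.

```python
import math

def gaussian_mgf(gamma, half_width=20.0, n=40001):
    """Trapezoidal approximation of (2*pi)^(-1/2) * int exp(gamma*x - x^2/2) dx."""
    h = 2.0 * half_width / (n - 1)
    total = 0.0
    for i in range(n):
        x = -half_width + i * h
        weight = 0.5 if i in (0, n - 1) else 1.0
        total += weight * math.exp(gamma * x - 0.5 * x * x)
    return total * h / math.sqrt(2.0 * math.pi)

def bernoulli_mgf(gamma):
    """E[exp(gamma * X)] for X = +1 or -1 with probability 1/2 each."""
    return math.cosh(gamma)

def uniform_mgf(gamma):
    """E[exp(gamma * X)] for X uniform on [-1, 1] (zero mean, compact support)."""
    return 1.0 if gamma == 0 else math.sinh(gamma) / gamma

for g in (-3.0, -1.0, 0.5, 2.0, 3.0):
    print(g, gaussian_mgf(g), math.exp(g * g / 2),
          bernoulli_mgf(g) <= math.exp(g * g / 2),
          uniform_mgf(g) <= math.exp(g * g / 6))
```

The comparison of Taylor coefficients explains the last check as well: \((2k+1)!\geq 6^k k!\) for every k ≥ 0.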

Remark 4.2.14

In the Gaussian case, Lemma 4.2.11 can be seen as a very particular case of the \(L^p\) smoothing properties of the Hartree-Fock heat flow (see e.g. [44, Section 3] for more details on this issue).
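For standard Bernoulli (Rademacher) variables, the moment bound (4.2.10) can be tested exactly on a finite sequence by enumerating all sign patterns. The sketch below assumes finitely many nonzero \(c_n\) and independent uniform signs; the helper name is hypothetical. For p = 2 it recovers exactly \((\sum c_n^2)^{1/2}\). For the sample sequence used here, the \(L^p(\Omega)\) norm stays below \(\sqrt p\,(\sum c_n^2)^{1/2}\), which is consistent with (4.2.10) with C = 1 in this case.

```python
import itertools
import math

def rademacher_lp_norm(c, p):
    """Exact L^p(Omega) norm of sum_n c_n * eps_n with i.i.d. uniform signs eps_n."""
    total = 0.0
    for signs in itertools.product((-1.0, 1.0), repeat=len(c)):
        s = sum(cn * e for cn, e in zip(c, signs))
        total += abs(s) ** p
    return (total / 2 ** len(c)) ** (1.0 / p)

c = [1.0 / (k + 1) for k in range(12)]        # a sample square-summable sequence
l2 = math.sqrt(sum(x * x for x in c))
for p in (2, 4, 6, 8, 10):
    norm_p = rademacher_lp_norm(c, p)
    print(p, norm_p / l2, math.sqrt(p))       # observed ratio vs. sqrt(p)
```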

Proof of Lemma 4.2.11

For t > 0 to be determined later, using the independence and (4.2.8), we obtain

Therefore

or equivalently,

We choose t as

Hence

In the same way (replacing \(c_n\) by \(-c_n\)), we can show that

which completes the proof of (4.2.9). To deduce (4.2.10), we write

which completes the proof of Lemma 4.2.11. □
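For the reader's convenience, here is one standard way to write out the Chernoff-type argument used in the proof. It assumes (4.2.8) in the form \(\int e^{\gamma x}\,d\theta_n(x)\leq e^{c\gamma^2}\) with a constant c > 0 and writes \(X=\sum_n c_n l_n\) and \(S=\sum_n c_n^2\). As in the notes, p(⋅) denotes probability. The computation is written for finite sums; the general case follows by passing to the limit. The constant α = 1∕(4c) obtained this way is one admissible choice, not necessarily the one intended in the original.

```latex
\begin{aligned}
\mathbb E\big[e^{tX}\big] &= \prod_{n}\int_{\mathbb R} e^{t c_n x}\,d\theta_n(x)\ \le\ e^{c t^2 S}
  \qquad\text{(independence and (4.2.8))},\\
p(X>\lambda) &\le e^{-t\lambda}\,\mathbb E\big[e^{tX}\big]\ \le\ e^{c t^2 S - t\lambda},
  \qquad\text{choose } t=\frac{\lambda}{2cS}:\quad p(X>\lambda)\le e^{-\lambda^2/(4cS)},\\
p(|X|>\lambda) &\le 2\,e^{-\alpha\lambda^2/S},\qquad \alpha=\frac{1}{4c}
  \qquad\text{(same bound for } -X),\\
\mathbb E|X|^p &= p\int_0^\infty \lambda^{p-1}\,p(|X|>\lambda)\,d\lambda
  \le 2p\int_0^\infty \lambda^{p-1}e^{-\alpha\lambda^2/S}\,d\lambda
  = p\,\Gamma\Big(\frac p2\Big)\Big(\frac{S}{\alpha}\Big)^{p/2},\\
\|X\|_{L^p(\Omega)} &\le \Big(p\,\Gamma\Big(\frac p2\Big)\Big)^{1/p}\alpha^{-1/2}S^{1/2}
  \le e^{1/e}\,(2\alpha)^{-1/2}\sqrt{p}\;S^{1/2}\qquad (p\ge 2).
\end{aligned}
```

The last step uses \(\Gamma(x)\leq x^{x}\) for x ≥ 1 and \(p^{1/p}\leq e^{1/e}\).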

As a consequence of Lemma 4.2.11, we get the following “probabilistic” Strichartz estimates.

Theorem 4.2.15

Let us fix s ∈ (0, 1) and let \(\mu \in {\mathcal M}^s\) be induced via the map (4.2.4) from the couple \((u_0,u_1)\in {\mathcal H}^s\). Let us also fix σ ∈ (0, s], \(2\leq p_1<+\infty\), \(2\leq p_2\leq +\infty\) and \(\delta >1+ \frac 1 {p_1}\). Then there exists a positive constant C such that for every p ≥ 2,

As a consequence, for every T > 0, \(p_1\in [1,\infty)\) and \(p_2\in [2,\infty]\),

Moreover, there exist two positive constants C and c such that for every λ > 0,

Remark 4.2.16

Observe that (4.2.13) applied with \(p_2=\infty\) displays an improvement of 3∕2 derivatives with respect to the Sobolev embedding, which is stronger than the improvement obtained by the (deterministic) Strichartz estimates (see Remark 4.1.14). The proof of Theorem 4.2.15 exploits the random oscillations of the initial data, while the proof of the deterministic Strichartz estimates exploits in a crucial (and subtle) manner the time oscillations of S(t). In the proof of Theorem 4.2.15, we simply neglect these time oscillations.

Remark 4.2.17

In the proof of Theorem 4.2.15, we shall make use of the Sobolev spaces \(W^{\sigma ,q}(\mathbb T^3)\), σ ≥ 0, q ∈ (1, ∞), defined via the norm \(\|u\|_{W^{\sigma,q}(\mathbb T^3)}\equiv \|(1-\Delta)^{\sigma/2}u\|_{L^q(\mathbb T^3)}\).
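The norm of \(W^{\sigma,q}\) is \(\|(1-\Delta)^{\sigma/2}u\|_{L^q}\), and \((1-\Delta)^{\sigma/2}\) is the Fourier multiplier \((1+|k|^2)^{\sigma/2}\). This makes the norm easy to evaluate numerically for trigonometric polynomials. The NumPy sketch below is a one-dimensional toy version on \(\mathbb T=[0,2\pi)\); on \(\mathbb T^3\) one would use \(k\in\mathbb Z^3\) and a three-dimensional FFT. The grid size and function names are illustrative assumptions.

```python
import numpy as np

def bessel_potential(u, sigma):
    """Apply (1 - d^2/dx^2)^(sigma/2) to samples of a 2*pi-periodic function."""
    k = np.fft.fftfreq(u.size, d=1.0 / u.size)   # integer frequencies
    return np.real(np.fft.ifft((1.0 + k ** 2) ** (sigma / 2) * np.fft.fft(u)))

def w_sigma_q_norm(u, sigma, q):
    """Riemann-sum approximation of ||(1 - Delta)^(sigma/2) u||_{L^q(0, 2*pi)}."""
    h = 2.0 * np.pi / u.size
    return (np.sum(np.abs(bessel_potential(u, sigma)) ** q) * h) ** (1.0 / q)

n = 256
x = 2.0 * np.pi * np.arange(n) / n
u = np.cos(3 * x)
# For cos(3x): (1 + 3^2)^(1/2) * ||cos 3x||_{L^2} = sqrt(10) * sqrt(pi)
print(w_sigma_q_norm(u, 1.0, 2.0), np.sqrt(10 * np.pi))
```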

Proof of Theorem 4.2.15

We have that

equals

A trivial application of Lemma 4.2.11 implies that

Therefore, using that \(\delta >1+1/p_1\), the expression (4.2.15) can be bounded by

Therefore, it remains to estimate

By a use of the Hölder inequality on \(\mathbb T^3\), we observe that it suffices to estimate

Let q < ∞ be such that σ > 3∕q. Then by the Sobolev embedding \(W^{\sigma ,q}(\mathbb T^3)\subset C^0(\mathbb T^3)\), we have

Therefore, we need to estimate

which equals

By using the Hölder inequality in ω, we observe that it suffices to evaluate the last quantity only for \(p>\max (p_1,q)\). For such values of p, using the Minkowski inequality, we can estimate (4.2.16) by

Now, we can write \((1-\Delta )^{\sigma /2} \Pi _0^{\perp } S(t) (u_0^\omega ,u_1^\omega )\) as

with

Now, using (4.2.10) of Lemma 4.2.11 and the boundedness of the \(\sin \) and \(\cos \) functions, we obtain that (4.2.17) can be bounded by

Since \(\delta >1+1/p_1\), we can estimate (4.2.18) by

This completes the proof of (4.2.12). Let us finally show how (4.2.12) implies (4.2.14). Using the Chebyshev inequality and (4.2.12), we have that

is bounded by

We now choose p as

which yields (4.2.14). This completes the proof of Theorem 4.2.15. □
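The passage from the moment bound (4.2.12) to the Gaussian tail (4.2.14) is the usual optimisation in p. Write M for the deterministic norm on the right-hand side of (4.2.12), normalised here (an assumption for illustration) so that the moment bound reads \(\|F\|_{L^p(\Omega)}\leq C\sqrt p\,M\) for all p ≥ 2. The Chebyshev inequality gives \(p(|F|>\lambda)\leq (C\sqrt p\,M/\lambda)^p\). The exponent is minimised at \(p=\lambda^2/(eC^2M^2)\), which yields \(e^{-\lambda^2/(2eC^2M^2)}\). The Python sketch below, with hypothetical names, checks this choice against a grid of other values of p. When the optimal p is below 2, one uses the trivial bound 1, which can be absorbed into the constant in (4.2.14).

```python
import math

def tail_from_moments(lam, C, M):
    """Best Chebyshev bound from ||F||_p <= C*sqrt(p)*M (p >= 2), at the optimal p."""
    p = lam ** 2 / (math.e * C ** 2 * M ** 2)   # minimiser of p*log(C*sqrt(p)*M/lam)
    if p < 2:
        return 1.0                              # trivial bound in the small-lambda regime
    return (C * math.sqrt(p) * M / lam) ** p

lam, C, M = 10.0, 1.0, 1.0
print(tail_from_moments(lam, C, M), math.exp(-lam ** 2 / (2 * math.e * C ** 2 * M ** 2)))
```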

The proof of Theorem 4.2.15 also implies the following statement.

Theorem 4.2.18

Let us fix s ∈ (0, 1) and let \(\mu \in {\mathcal M}^s\) be induced via the map (4.2.4) from the couple \((u_0,u_1)\in {\mathcal H}^s\). Let us also fix p ≥ 2, σ ∈ (0, s] and q < ∞ such that σ > 3∕q. Then for every T > 0,

4.2.3 Regularisation Effect in the Picard Iteration Expansion

Consider the Cauchy problem

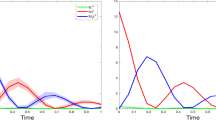

where \((u_0,u_1)\) is a typical element of the support of \(\mu \in {\mathcal M}^s\), s ∈ (0, 1). According to the discussion in Sect. 4.1.5, for small times depending on \((u_0,u_1)\), we can hope to represent the solution of (4.2.20) as

where \(Q_j\) is homogeneous of order j in \((u_0,u_1)\). We have that

etc. We have that μ-almost surely \(Q_1\notin H^\sigma\) for σ > s. However, using the probabilistic Strichartz estimates of the previous section, we have that for T > 0,

Therefore the second nontrivial term in the formal expansion defining the solution is more regular than the initial data! The strategy will therefore be to write the solution of (4.2.20) as

where \(v\in H^1\), and to solve the equation for v by the methods described in the previous section. In the case of the cubic nonlinearity, the deterministic analysis used to solve the equation for v is particularly simple; it is in fact very close to the analysis in the proof of Proposition 4.1.1. For more complicated problems, the analysis of the equation for v could involve more advanced deterministic arguments. We refer to [4], where a similar strategy is used in the context of the nonlinear Schrödinger equation, and to [16], where it is used in the context of stochastic PDEs.
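To make the strategy concrete, assume that (4.2.20) is the defocusing cubic wave equation \((\partial_t^2-\Delta)u+u^3=0\) with data \((u_0,u_1)\). Take the simplest splitting \(u=Q_1+v\), where \(Q_1=S(t)(u_0,u_1)\) is the free evolution, i.e. the first term of the expansion. Under these assumptions, the computation below is a sketch of the resulting problem for v, written with the Duhamel formula. On the zero Fourier mode, \(\sin((t-\tau)\sqrt{-\Delta})/\sqrt{-\Delta}\) is understood as multiplication by t − τ.

```latex
\begin{aligned}
(\partial_t^2-\Delta)Q_1 &= 0, && (Q_1,\partial_t Q_1)|_{t=0}=(u_0,u_1),\\
(\partial_t^2-\Delta)v+(Q_1+v)^3 &= 0, && (v,\partial_t v)|_{t=0}=(0,0),\\
v(t) &= -\int_0^t \frac{\sin\big((t-\tau)\sqrt{-\Delta}\big)}{\sqrt{-\Delta}}\,(Q_1+v)^3(\tau)\,d\tau .
\end{aligned}
```

The leading part of v is the cubic term \(Q_3(t)=-\int_0^t \frac{\sin((t-\tau)\sqrt{-\Delta})}{\sqrt{-\Delta}}\,Q_1^3(\tau)\,d\tau\). Here the operator \(\sin((t-\tau)\sqrt{-\Delta})/\sqrt{-\Delta}\) gains one derivative on \(Q_1^3\), which the probabilistic Strichartz estimates control.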

This argument is not restricted to \(Q_3\). One can imagine situations in which, for some m > 3, \(Q_m\) is the first element in the expansion whose regularity fits well into a deterministic analysis. Then we can equally well look for solutions in the form

and treat v by a deterministic analysis. It is worth noticing that such a situation occurs in the work on parabolic PDEs with a singular random source term [22,23,24]. In these works, in expansions of type (4.2.21), the random initial data \((u_0,u_1)\) should be replaced by the random source term (the white noise). Let us also mention that in the case of parabolic equations the deterministic smoothing comes from elliptic regularity estimates, while in the context of the wave equation we basically rely on the smoothing estimate (4.1.5).

4.2.4 The Local Existence Result

Proposition 4.2.19

Consider the problem

There exists a constant C such that for every time interval I = [a, b] of size 1, every Λ ≥ 1 and every \((v_0,v_1,f)\in H^1\times L^2\times L^3(I,L^6)\) satisfying

there exists a unique solution on the time interval \([a,a+C^{-1}\Lambda^{-2}]\) of (4.2.22) with initial data

Moreover, the solution satisfies \( \|(v,\partial _t v)\|_{L^\infty ([a,a+C^{-1} \Lambda ^{-2}],H^1\times L^2)}\leq C\Lambda \), \((v,\partial_t v)\) is unique in the class \(L^\infty([a,a+C^{-1}\Lambda^{-2}],H^1\times L^2)\) and the dependence in time is continuous.

Proof

The proof is very similar to the proof of Proposition 4.1.1. By translation invariance in time, we can suppose that I = [0, 1]. We can rewrite the problem as

Set

Then for T ∈ (0, 1], using the Sobolev embedding \(H^1(\mathbb T^3)\subset L^6(\mathbb T^3)\), we get

It is now clear that for \(T\approx\Lambda^{-2}\) the map \(\Phi _{u_0,u_1,f}\) sends the ball

into itself. Moreover, by a similar argument, we obtain that this map is a contraction on the same ball. Thus we obtain the existence part and the bound on v in \(H^1\). The estimate of \(\|\partial _t v\|_{L^2}\) follows by differentiating in t the Duhamel formula (4.2.23). This completes the proof of Proposition 4.2.19. □

4.2.5 Global Existence

In this section, we complete the proof of Theorem 4.2.3. We look for v in the form \(v(t)=S(t)(v_0,v_1)+w(t)\). Then w solves

Thanks to Theorems 4.2.15 and 4.2.18, we have that μ-almost surely,

with q < ∞ such that σ > 3∕q. The local existence for (4.2.24) follows from Proposition 4.2.19 and the first estimate in (4.2.25). We also deduce from Proposition 4.2.19 that the solution w of (4.2.24) exists as long as the \(H^1\times L^2\) norm of \((w,\partial_t w)\) remains bounded. Set

Using the equation solved by w, we now compute

Now, using the Cauchy-Schwarz inequality, the Hölder inequality and the Sobolev embedding \(W^{\sigma ,q}(\mathbb T^3)\subset C^0(\mathbb T^3)\), we can write

and consequently, by the Gronwall inequality and (4.2.25), w exists globally in time.
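The Gronwall step used here, and again in the proof of Theorem 4.2.7, rests on an elementary fact. In one standard form: if x ≥ 0 satisfies \(x'\leq a(t)x+b(t)\) with a, b ≥ 0, then \(x(t)\leq\big(x(0)+\int_0^t b\big)\exp\big(\int_0^t a\big)\). The Python sketch below checks a discrete analogue on the extremal case \(x'=ax+b\), discretised by the explicit Euler scheme. The coefficient functions are hypothetical stand-ins for the time-dependent quantities controlled by (4.2.25).

```python
import math

def euler_with_gronwall_bound(x0, a, b, T, n):
    """Euler scheme for x' = a(t) x + b(t) and the matching discrete Gronwall bound.

    Since x_{k+1} = (1 + h a_k) x_k + h b_k <= exp(h a_k) (x_k + h b_k), induction
    gives x_n <= (x0 + h * sum_k b_k) * exp(h * sum_k a_k).
    """
    h = T / n
    x, int_a, int_b = x0, 0.0, 0.0
    for k in range(n):
        t = k * h
        x = x + h * (a(t) * x + b(t))
        int_a += h * a(t)      # left Riemann sum of a
        int_b += h * b(t)      # left Riemann sum of b
    return x, (x0 + int_b) * math.exp(int_a)

def a(t):
    return 1.0 + abs(math.sin(5.0 * t))   # hypothetical nonnegative coefficient

def b(t):
    return t ** 2                          # hypothetical nonnegative source term

x_T, bound = euler_with_gronwall_bound(1.0, a, b, 2.0, 20000)
print(x_T, bound)
```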

This completes the proof of the existence and uniqueness part of Theorem 4.2.3. Let us now turn to the construction of an invariant set. Define the sets

and \( \varSigma \equiv \Theta +{\mathcal H}^1. \) Then Σ is of full μ measure for every \(\mu \in {\mathcal M}^s\), since so is Θ. We have the following proposition.

Proposition 4.2.20

Assume that s > 0 and let us fix \(\mu \in {\mathcal M}^s\). Then, for every (v 0, v 1) ∈ Σ, there exists a unique global solution

of the nonlinear wave equation

Moreover, for every \(t\in \mathbb R\), \((v(t),\partial_t v(t))\in \varSigma\), and thus, by time reversibility, Σ is invariant under the flow of (4.2.26).

Proof

By assumption, we can write \((v_0,v_1)=(\tilde {v}_0,\tilde {v}_1)+(w_0,w_1)\) with \((\tilde {v}_0,\tilde {v}_1)\in \Theta \) and \((w_0,w_1)\in {\mathcal H}^1\). We look for v in the form

Then w solves

Now, exactly as before, we obtain that

where

Therefore, thanks to the Gronwall lemma and using that \({\mathcal E}(w(0))\) is well defined, we obtain global existence for w. Thus the solution of (4.2.26) can be written as

Coming back to the definition of Θ, we observe that

Thus \((v(t),\partial_t v(t))\in \varSigma\).

This completes the proof of Theorem 4.2.3. □

4.2.6 Unique Limits of Smooth Solutions

In this section, we present the proofs of Theorems 4.2.6 and 4.2.7.

Proof of Theorem 4.2.6

Thanks to Theorem 4.2.3, the Sobolev embeddings and Theorem 4.2.15, we obtain that

and

where \((p^\star,q^\star)\) are as in Corollary 4.1.23 (observe that \(q^\star\leq 6\)). Once we have this information, the proof of Theorem 4.2.6 follows from Theorem 4.1.22 (here we use the assumption s > 1∕2) and Corollary 4.1.23. Indeed, let us fix T > 0 and let Λ be such that

Let τ > 0 be the time of existence associated with Λ in Theorem 4.1.22. We now cover the interval [0, T] with intervals of size τ and, using iteratively the continuous dependence statement of Theorem 4.1.22 and the uniqueness statement given by Corollary 4.1.23, we obtain that

This completes the proof of Theorem 4.2.6. □

We now turn to the proof of Theorem 4.2.7 which is slightly more delicate.

Proof of Theorem 4.2.7

For \((v_0,v_1)\in \varSigma\), we decompose the solution as

Similarly, we decompose the solutions issued from \((v_{0,n},v_{1,n})\) as

Using the energy estimates of the previous section, we obtain that

where

Therefore

Using that

and the fact that (v 0, v 1) ∈ Σ, we obtain that

where g(t) and f(t) are defined in (4.2.25). Therefore, we obtain that for every T > 0 there is C > 0 such that for every n,

Next, we observe that w and \(w_n\) solve the equations

and

Therefore

We multiply the last equation by \(\partial_t(w-w_n)\) and, by using the Sobolev embedding \(H^1(\mathbb T^3)\subset L^6(\mathbb T^3)\) and the Hölder inequality, we arrive at the bound

Using (4.2.28) and the properties of the solutions obtained in Theorem 4.2.3, we obtain

The last inequality implies the following bound for t ∈ [0, T],

More precisely, we used that if x(t) ≥ 0 satisfies the differential inequality

for some z(t) ≥ 0 and y(t) ≥ 0 then

Coming back to (4.2.29) and using the Hölder inequality, we get for t ∈ [0, T],

Recalling (4.2.27), we obtain that for 1 < p < ∞,

Therefore (4.2.30) implies that

Recall that

Using once again (4.2.27) and

we get

and consequently

This completes the proof of Theorem 4.2.7. □

Remark 4.2.21

In the proof of Theorem 4.2.7, we essentially used two facts. First, regularisation by convolution works equally well in \(H^s\) and \(L^p\) (p < ∞). Second, it commutes with Fourier multipliers such as the free evolution S(t). Any other regularisation respecting these two properties would produce smooth solutions converging to the singular dynamics constructed in Theorem 4.2.3.
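Both properties are transparent in Fourier variables. Convolution with a kernel \(\rho_\varepsilon\) acts as the multiplier \(\hat\rho(\varepsilon k)\), and S(t) acts through \(\cos(t|k|)\) and \(\sin(t|k|)/|k|\), so the two operators commute. The NumPy sketch below checks this on a one-dimensional torus. Several choices are assumptions made for illustration: the Gaussian multiplier \(\hat\rho(\varepsilon k)=e^{-(\varepsilon k)^2}\), the restriction to the first component of S(t), and the function names.

```python
import numpy as np

def freqs(n):
    return np.fft.fftfreq(n, d=1.0 / n)          # integer frequencies on [0, 2*pi)

def free_wave(t, u0, u1):
    """1D toy of the first component of S(t)(u0, u1) for u_tt - u_xx = 0."""
    k = np.abs(freqs(u0.size))
    safe_k = np.where(k == 0, 1.0, k)
    sin_term = np.where(k == 0, t, np.sin(t * k) / safe_k)   # zero mode: u0 + t*u1
    return np.real(np.fft.ifft(np.cos(t * k) * np.fft.fft(u0) + sin_term * np.fft.fft(u1)))

def mollify(u, eps):
    """Convolution with a Gaussian kernel, written as the multiplier exp(-(eps*k)^2)."""
    k = freqs(u.size)
    return np.real(np.fft.ifft(np.exp(-(eps * k) ** 2) * np.fft.fft(u)))

rng = np.random.default_rng(0)
n = 128
u0, u1 = rng.standard_normal(n), rng.standard_normal(n)
t, eps = 0.7, 0.05
lhs = mollify(free_wave(t, u0, u1), eps)
rhs = free_wave(t, mollify(u0, eps), mollify(u1, eps))
print(np.max(np.abs(lhs - rhs)))                # round-off size: the operators commute
```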

4.2.7 Conditioned Large Deviation Bounds

In this section, we prove conditioned large deviation bounds which are the main tool in the proof of Theorem 4.2.10.

Proposition 4.2.22