Abstract

Why do people do what they do? The field of motivation offers many different answers to this question, and in this chapter, we integrate a number of these answers by offering a novel perspective that views motivational processes as emerging from distributed yet highly interactive valuation systems that guide behavior. Each valuation system consists of hierarchical perception loops that use ascending feedback control to match mental models to the world and hierarchical action loops that use descending feedback control to match means to ends. Motivational force and direction emerge from distributed valuation system dynamics on three broad levels of complexity. On the first inherent motivation level, predictability and competence motives emerge from aggregated gap reduction imperatives of the perception and action loops. On the second intentional motivation level, committed goals and feedback control of goal pursuit emerge from synchronized valuation systems. On the third identity motivation level, goals about goals, or identity, and pursuits of pursuits, or self-regulation, emerge from synchronized intentional motivation. Each level of emergent motivation can produce affective feelings that modulate valuation systems and facilitate learning. The valuation systems perspective integrates key insights about motivation and demonstrates how complex motivational phenomena can emerge from basic perception and action processes.


Keywords

- Motivational processes

- Valuation systems

- Mental models

- Perception loops

- Action loops

- Intrinsic motivation

- Intentional motivation

- Identity motivation

- Self-regulation

- Learning

Motivation: A Valuation Systems Perspective

The questions that keep behavioral scientists up at night often concern motivation or why people and other animals do what they do. Why do people behave in ways that harm them in the long run? Why don’t all students try to learn and all adults engage in exercise? Why do people conform to some norms but break others? Motivation has been central to behavioral science since early theorists such as Sigmund Freud and Clark Hull used it as a foundation for constructing grand accounts of behavior. In the decades since their time, motivation has continued to fascinate researchers both as a focal interest (Dweck, 2017; Ryan, 2012; Shah & Gardner, 2008) and a pathway to understanding other phenomena such as the nervous system (Simpson & Balsam, 2016), emotion (Fox, Lapate, Shackman, & Davidson, 2018), cognition (Braver, 2016; Kreitler, 2013), development (Heckhausen, 2000), individual differences (Corr, DeYoung, & McNaughton, 2013), and social relations (Dunning, 2011). These diverse efforts to understand motivation have yielded a diversity of accounts that await attempts at integration. In this chapter, we offer one such attempt.

Our starting point is the idea that understanding motivation involves understanding how behavior obtains its force and direction (Pezzulo, Rigoli, & Friston, 2018). For instance, take the behavior of queuing to buy a ticket for a concert. Motivational force relates to the quantitative aspects of this behavior, such as the time spent in the queue or the price paid for the ticket. Motivational direction relates to the qualitative aspects of this behavior, such as choosing a particular concert or ticket booth over alternatives. Where do these aspects of behavior come from? Our view is that they emerge from the complex dynamics that produce behavior—different mental processes acting and interacting in parallel (Cisek, 2012; Gross, 2015; Hunt & Hayden, 2017; Ochsner & Gross, 2014; Pessoa, 2018). Products of complex dynamics tend to have emergent properties—features that characterize the product but not necessarily any of the individual processes that give rise to it. For example, political will is an emergent property of a society that cannot be found in its entirety within any individual or institution. We view the defining features of motivation—force and direction of behavior—as similarly emergent properties of behavior that need not exist in their entirety anywhere else in the mind. The force and direction of queuing for a ticket simply emerge from a combination of perceptions, beliefs, expectations, plans, feelings, habits, and other mental processes.

In this chapter, we trace the emergence of different motivational phenomena from the mental systems that shape behavior. In the first section, we offer a simplified sketch of the systems that give rise to behavior and thereby motivation. Specifically, we introduce the notion of a valuation system that shapes behavior by solving two adaptive problems. Perception loops within valuation systems solve the problem of understanding the world by matching models of the world to sensory evidence. Action loops within valuation systems solve the problem of acting effectively on the world by matching models of means to models of ends. Both loops rely on different versions of hierarchical feedback control, the principle of reducing gaps between pairs of representations by iteratively altering one of them.

In the second section of the chapter, we suggest that distributed valuation systems give rise to different forms of motivational force and direction that can be placed along a gradient of complexity, revealing three broad levels. The first inherent motivation level consists of the predictability and competence motives arising from the gaps that perception and action loops seek to minimize. The second intentional motivation level consists of goal commitment arising from sufficiently realistic and valuable goals and goal pursuit arising from synchronization of valuation systems into a behavioral feedback control cycle. The third identity motivation level consists of goals about goals, or identity, as well as pursuits of pursuits, or self-regulation, both of which emerge from further synchronization of intentional motivation. These emergent motivational phenomena are often reflected in awareness as feelings that modulate the operation of distributed valuation systems and provide a teaching signal. The valuation systems perspective integrates insights from motivation theories in a novel way and demonstrates how complex motivational phenomena can be characterized as emerging from basic perception and action processes.

Valuation Systems

To trace how motivation emerges during behavior, we begin with a functional analysis of the valuation systems that produce behavior. By valuation system, we mean any mental system that represents the world and prompts action to help an individual to transition toward more valued states of the world. The mind can be viewed as a collection of different valuation systems, many of which are active and interactive most of the time (Gross, 2015). For instance, evolutionarily older systems involved in producing automatic behavior are complemented by evolutionarily younger systems producing flexible behavior (Evans & Stanovich, 2013; Rangel, Camerer, & Montague, 2008). Likewise, more specialized systems involved in dealing with particular challenges are complemented by more domain-general systems (Cosmides & Tooby, 2013).

To characterize the broad set of different valuation systems in common terms, we turn to a functional analysis. Grounded in an understanding of the problems that a set of systems can solve, a functional analysis seeks to identify general operating principles of these systems on a computational level, overlooking, initially at least, algorithmic and implementational details (Marr, 1982). For instance, a functional analysis of braking systems would reveal that all braking systems address the problem of how to slow a vehicle by converting kinetic energy into another type of energy. These insights characterize braking systems irrespective of their underlying algorithms (e.g., friction or regeneration) and implementations (e.g., steel or carbon fiber), thereby providing a common set of concepts for thinking about different braking systems. Our aim is to find a comparable common set of concepts for thinking about different valuation systems.

As with any functional analysis, we start by asking what problems valuation systems address. Broadly, these systems produce behavior that helps an individual to approach rewarding and to avoid punishing configurations of the internal and external environment. To do this, the valuation systems need to solve two basic problems—the perception problem of building a serviceable map of the world while relying only on fragmented sensory input and the action problem of finding situation-specific means to desired ends.

The perception problem arises because the mind lacks direct knowledge of the world. It receives information through an array of sensors that transform isolated features of the internal and external environment into streams of noisy data. For instance, single features of fruits, such as their size, color, or location, may all fail to reliably distinguish edible from inedible fruits. In order to act adaptively, valuation systems need some understanding of the structure of the world, such as the objects of edible and inedible fruits. Solving the perception problem therefore requires extracting the adaptively relevant structure of the world.

The action problem arises because an action that is appropriate in one place or time may not be appropriate in another place or time. For example, just because looking near a tree for food worked well last time does not mean it will work well this time. Trees do not carry fruit all of the time, and not all trees carry edible fruit. Solving the action problem thus requires flexibly producing different actions in different situations. This is because it would be difficult to solve this problem by relying solely on rigid links between stimuli and actions (e.g., reflexes) or between needs and actions (e.g., instincts). Solving the action problem therefore requires acting in accordance with the structure of the world.

Formulating the perception and action problems helps to identify the operating principles that valuation systems use to solve these problems. In the sections that follow, we argue that valuation systems solve both problems by combining hierarchical mental models that represent the structure of the world by conjoining simpler models into increasingly elaborate ones with hierarchical feedback control processes that minimize gaps between pairs of models by altering one of them.

Hierarchical Mental Models

Mental representations are neural patterns that stand in for different pieces of information in some computation (Pouget, Dayan, & Zemel, 2000). Some mental representations are mental models that stand in for multimodal states that the world can take. The term world is used broadly here, to denote the environment both outside and inside the individual, and the term state is used to denote a multimodal configuration of the internal and external environment. States of the world can therefore include places like a grocery store, beings like a cashier, or objects like an apple. They can also include bodily states such as hunger and mental states such as a plan to get some apples.

Most mental models rely on hierarchical abstraction, whereby more elaborate models are formed by conjoining a number of less elaborate models (Fig. 6.1; Ballard, 2017; Simon, 1962). The least elaborate mental models represent embodied experiences produced by the sensory-motor repertoire of the individual (Binder et al., 2016). Embodied models on a lower layer help define less embodied semantic models on a higher layer such as “food” and “paying.” As hierarchical abstraction progresses, it yields increasingly elaborate mental models including schemata, scenarios, and narratives (Baldassano, Hasson, & Norman, 2018; Binder, 2016). Elaborate models can denote whole situations or events that relate places, beings, objects, as well as mental and bodily states into a single comprehensive representation such as “grocery shopping” (Radvansky & Zacks, 2011). Abstraction hierarchies are implemented throughout the brain (Ballard, 2017; Fuster, 2017) and can be algorithmically expressed as multilayered neural networks (Lake, Ullman, Tenenbaum, & Gershman, 2017; McClelland & Rumelhart, 1981).

Fig. 6.1 Mental models formed through hierarchical abstraction. Mental models (squares in each row) are neural patterns denoting states that the world can take. They are formed through hierarchical abstraction whereby patterns on a lower layer denoting experienced states of the world (e.g., an apple) are linked to patterns on a higher layer denoting more abstract states of the world (e.g., grocery shopping).

A key feature of mental models is their reusability. For example, there is considerable overlap between the neural patterns involved in perceiving and imagining equivalent stimuli (Lacey & Lawson, 2013) as well as between performing and imagining equivalent actions (Jeannerod, 2001; O’Shea & Moran, 2017). The same mental model can thus be used to denote a state of the world as it is experienced here and now for one computation and to denote an equivalent state of the world as it is mentally simulated within a different computation (Hesslow, 2012). For instance, seeing an apple within reach and wanting an apple that has yet to be found can involve the same mental model of an apple. Mental models can be reused for different purposes, including recalling how the world was, mentalizing how it might seem from another perspective, and, crucially for motivation, predicting how it might be in the near or distant future (Hesslow, 2012; Moulton & Kosslyn, 2009; Mullally & Maguire, 2014). Mental models are activated as predictions that denote states of the world that are probable given information arriving from—and stored knowledge about—the world. Some predictions concern imminent sensory input given how the world is believed, but not yet sensed, to be here and now (Huang & Rao, 2011; Kersten, Mamassian, & Yuille, 2004; Kok & de Lange, 2015). Other predictions concern sensory input from the states that the world is expected to take in the future (Gershman, 2018; Moulton & Kosslyn, 2009; Toomela, 2016). We assume that most mental models can be reused for either of these two versions of prediction (de Lange, Heilbron, & Kok, 2018).

Mental models play a key role in solving both the perception and action problems. They help to solve the perception problem by replacing the fragmented and variable sensory information arriving from the world with a coherent and stable perceived reality furnished by mental models. A crocodile is perceived to have sharp teeth even if its mouth is closed, because the actual sensory information about teeth, which is vulnerable to occlusion, is replaced by a mental model (Kersten et al., 2004). However, a model is only as useful as its match to the world. It would be decidedly unhelpful to mentally model a swimming crocodile as a floating log. Solving the perception problem thus requires not only possessing mental models but also choosing the right ones to represent a given state of the world. This suggests that the mind has a way to keep track of the probability that a prediction it has made really corresponds to reality. In functional terms, the mind can be said to have a tagging system that captures perceptual certainty (Petty, Briñol, & DeMarree, 2007). For instance, the mental model of an apple will have a stronger certainty tag when it is used to perceive a graspable apple than when it is used to desire an as yet unseen apple. Certainty tagging is a functional construct that can be implemented in the brain using different neural codes (Ma & Jazayeri, 2014). The idea that activated mental models have variable certainty aligns with evidence that neural representations are often probabilistic and that awareness is often accompanied by variable degrees of certainty or confidence (Grimaldi, Lau, & Basso, 2015; Pouget, Drugowitsch, & Kepecs, 2016). There is further evidence that perceptual decisions involve accumulation of evidence in favor of competing representations until one crosses a threshold (Gold & Shadlen, 2007). This suggests that strengthening certainty tags above some threshold is what turns the tagged mental model from a prediction into part of perceived reality.

In addition to helping solve the perception problem, mental models also help to solve the action problem of choosing one of several possible means, such as pushing or pulling the door, to pursue an end, such as to enter a room. Mental models help here by representing means and ends in a common modality of future states of the world. Ends such as entering a room are mental models of future states that the individual is inclined to approach or avoid (cf. Elliot & Fryer, 2008; Kruglanski et al., 2002; Tolman, 1925). Means are mental models of future states that are likely to result from performing some action, such as a door being pushed open (Gershman, 2018; Hamilton, Grafton, & Hamilton, 2007; Hommel, Müsseler, Aschersleben, & Prinz, 2001; Ridderinkhof, 2014).

In addition to representing means and ends in a common domain, however, solving the action problem also requires choosing means that are appropriate for an end in a given context. This suggests that the mind has a way to keep track of the probability that a means would lead to a desired end. We propose, again in functional terms, that this probability is captured by bipolar valence tags. Specifically, a valence tag of a mental model represents the extent to which the state of the world denoted by that model (i.e., a means) would make a desired state (i.e., an end) more or less likely. The idea that activated mental models have variable valence tags aligns with evidence that most mental representations have an evaluative property of goodness vs. badness for the individual (Bargh, Chaiken, Govender, & Pratto, 1992; Carruthers, 2018; Cunningham, Zelazo, Packer, & Van Bavel, 2007; Man, Nohlen, Melo, & Cunningham, 2017).

Akin to how certainty tags above some threshold determine which mental models are perceived to be real, we argue that valence tags above some threshold determine which mental models function as action tendencies, that is, as future states that valuation systems seek to make more likely (for positive valence tags) or less likely (for negative valence tags) through action. This view aligns with evidence that actions are initiated in the brain not primarily as representations of motion paths or muscle movements but instead as representations of states of the world that muscle movements should produce (Adams, Shipp, & Friston, 2013; Colton, Bach, Whalley, & Mitchell, 2018; Todorov, 2004). For instance, the action tendency to grasp an apple is encoded as a valence-tagged model of the world where the apple is already in hand. Under favorable conditions, the tendency can be enacted through muscle movements believed to bring about this end state.

Action tendencies can range from very broad, such as to approach or to avoid a tagged state of the world (Krieglmeyer, De Houwer, & Deutsch, 2013; Phaf, Mohr, Rotteveel, & Wicherts, 2014), to very specific, such as to produce or refrain from a small movement. Broad action tendencies that merely indicate whether a state should be approached or avoided are usually thought of as ends. More specific action tendencies that indicate more fine-grained courses of action are usually thought of as means. However, our perspective suggests that both ends such as being in a room and means such as the door becoming open through pushing or pulling ultimately belong to the same class of action tendencies—valence-tagged predictions that valuation systems seek to make more or less likely to exist.

Our functional analysis thus far suggests that valuation systems involve mental models with variable certainty and valence tags. The strength of these tags, which can vary independently across different models as well as for the same model across different times, determines whether a model functions as a prediction, as part of perceptual reality, or as an action tendency (Fig. 6.2). To illustrate, consider a person entering a café. As she steps into the café, her abstract schema of a café as well as information arriving at her senses combine to activate a number of mental models. At this early stage, most of these models are predictions about what the room is believed but not yet confirmed to contain (e.g., there should be tea for sale here). The predictions also concern what is believed to happen at a café in the future, either via action (e.g., getting tea by ordering it) or otherwise (e.g., people will be talking). The initially weak certainty tags of such predictions are updated as more sensory evidence is accumulated, leading some predictions to be tagged certain enough to become part of perceived reality (e.g., I now smell and see tea on sale here). Meanwhile, valence tags will be transferred from broad end-like action tendencies (e.g., drink something) to increasingly specific means-like action tendencies (e.g., order a cup of green tea).
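The role that tags play in determining a model's function can be made concrete with a minimal sketch. Everything named here is an illustrative assumption rather than part of the chapter: the `MentalModel` class, the numeric scales for the tags, and the two threshold values are hypothetical, and the sketch assumes that a model whose tags both exceed their thresholds can play both roles at once.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical threshold values; the chapter posits thresholds but gives no numbers.
CERTAINTY_THRESHOLD = 0.8
VALENCE_THRESHOLD = 0.5


@dataclass
class MentalModel:
    """A mental model carrying independent certainty and valence tags."""
    name: str
    certainty: float = 0.0  # assumed scale 0..1
    valence: float = 0.0    # assumed bipolar scale -1..1

    def functions(self) -> List[str]:
        """Return the functional roles implied by the current tag strengths."""
        roles = []
        if self.certainty >= CERTAINTY_THRESHOLD:
            roles.append("perceived reality")
        if abs(self.valence) >= VALENCE_THRESHOLD:
            roles.append("action tendency")
        # A model with weak tags remains a mere prediction.
        return roles or ["prediction"]


tea_for_sale = MentalModel("tea for sale here", certainty=0.3)
print(tea_for_sale.functions())   # weakly tagged: a prediction
tea_for_sale.certainty = 0.9      # sensory evidence has accumulated
print(tea_for_sale.functions())   # now part of perceived reality
```

In this sketch the same object changes function over time only because its tags change, which mirrors the café example above.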

Fig. 6.2 Different functions of mental models based on variable certainty and valence tags. Many mental models are activated as predictions about what the situation might contain now or in the future. Valuation systems compare these predictions against sensory evidence and strengthen the certainty tags of the most accurate models, turning them into perceived reality. Valuation systems also compare means-like predictions to end-like predictions and strengthen the valence tags of the most effective means, turning them into action tendencies.

Hierarchical Feedback Control

Armed with the idea of mental models with variable certainty and valence tags, we can now ask how valuation systems activate mental models and update their certainty and valence tags. Mental models can be activated by bottom-up and top-down information flows within abstraction hierarchies (Fig. 6.1; Bar, 2007; de Lange et al., 2018; Lamme & Roelfsema, 2000). On the one hand, coarse sensory input rapidly spreads across abstraction layers where it activates various mental models of what could be causing the sensed input. For instance, from a distance, a shop front on a street could activate the models of a café, a restaurant, and a bakery. On the other hand, as each activated abstract model activates its less abstract constituent models, a parallel top-down stream of model activation ensues. For instance, the café model activates the models of people sitting at tables, while the bakery model activates the models of people queuing at the counter. As a result of the parallel bottom-up and top-down activation flows, there are usually a large number of activated mental models at any given time. Most of these models function as predictions about what might be going on in that moment as well as in the future.
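The two activation flows can be illustrated with a toy sketch. It assumes, purely for illustration, that each abstract model is stored as a set of constituent models; the `CONSTITUENTS` table and the `activate` function are hypothetical names, and the restaurant entry is filled in only to complete the example.

```python
# Hypothetical abstraction hierarchy: abstract model -> less abstract constituents.
CONSTITUENTS = {
    "café": {"shop front", "people sitting at tables"},
    "restaurant": {"shop front", "people sitting at tables"},
    "bakery": {"shop front", "people queuing at the counter"},
}


def activate(sensed):
    """Return (abstract models activated bottom-up, predictions activated top-down)."""
    sensed = set(sensed)
    # Bottom-up: any abstract model that could be causing the sensed input.
    bottom_up = {model for model, parts in CONSTITUENTS.items() if parts & sensed}
    # Top-down: constituents of the activated models that have not yet been sensed.
    top_down = set()
    for model in bottom_up:
        top_down |= CONSTITUENTS[model] - sensed
    return bottom_up, top_down


abstract, predicted = activate({"shop front"})
print(sorted(abstract))
print(sorted(predicted))
```

A single coarse input thus leaves several abstract models and several more specific predictions active at once, which is the starting condition for the tag updating described next.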

The next step toward solving the perception and action problems involves updating the certainty and valence tags of the activated predictions so that only the most accurate models become parts of perceived reality and only the most desirable models become action tendencies. We suggest that both tasks can be accomplished by variations of the computational principle of hierarchical feedback control (Clark, 2013; Friston, 2010; Seth, 2015). Feedback control involves iteratively producing outputs that reduce a gap between an input and a target. For example, a guitar can be tuned by playing a note on one string (input), comparing it to the same note played on another string (target), and changing the tension of one of the two strings (output) until the gap between the strings is sufficiently reduced. Given that either of the strings could be tuned to reduce the gap, there are two kinds of feedback control—ascending and descending (see Fig. 6.3).

Ascending feedback control loops consider their lower-level input as the reference value and change their target until it matches the input. This form of feedback control helps solve the perception problem by matching mental models to sensory input. Descending feedback control loops, by contrast, consider their higher-level target as the reference value and change their input until it matches the target. This form of feedback control helps solve the action problem by matching means to ends. The computational principle of feedback control has a long history in behavioral science (Ashby, 1954; Maxwell, 1868; Miller, Galanter, & Pribram, 1960; Powers, 1973; von Uexküll, 1926; Wiener, 1948) as well as compelling algorithmic and implementation expressions including Bayesian inference (Friston, 2010; Gershman, 2019), optimal feedback control (Scott, 2004; Todorov, 2004), and reinforcement learning (Glimcher, 2011; Lee, Seo, & Jung, 2012). It is therefore a promising candidate for a functional description of the common operating principles of different valuation systems (Carver & Scheier, 2011; Gross, 2015; Pezzulo & Cisek, 2016; Seth, 2015; Stagner, 1977; Sterling, 2012).
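The difference between the two kinds of feedback control can be shown with a minimal numeric sketch based on the guitar-tuning example. The function name, the proportional update rule, and the `rate` and `tolerance` parameters are assumptions made for illustration; the chapter describes the principle only in functional terms.

```python
def feedback_control(input_value, target, mode, rate=0.5, tolerance=0.01, max_steps=100):
    """Iteratively reduce the gap between an input and a target.

    mode="ascending":  the input is the reference, so the target is changed.
    mode="descending": the target is the reference, so the input is changed.
    """
    if mode not in ("ascending", "descending"):
        raise ValueError("mode must be 'ascending' or 'descending'")
    for _ in range(max_steps):
        gap = target - input_value
        if abs(gap) <= tolerance:
            break
        if mode == "ascending":
            target -= rate * gap       # move the target toward the input
        else:
            input_value += rate * gap  # move the input toward the target
    return input_value, target


# Two strings sounding at 105 Hz (input) and 110 Hz (target); values are hypothetical.
print(feedback_control(105.0, 110.0, "ascending"))   # the target string is retuned
print(feedback_control(105.0, 110.0, "descending"))  # the input string is retuned
```

Both calls close the same gap; they differ only in which of the two values is treated as fixed.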

Ascending and descending feedback control help to solve the perception and action problems, respectively, when they operate between layers of abstraction hierarchies populated by mental models (see Figs. 6.4 and 6.5). Ascending feedback control operating between abstraction layers forms perception loops that use bottom-up evidence to assess the accuracy of top-down predictions (Chanes & Barrett, 2016; Clark, 2013; Friston, 2010; Henson & Gagnepain, 2010; Huang & Rao, 2011). Imagine a person taking a first sip from a cup of tea she has just ordered in a café. The action of ordering the tea has activated the mental model of “hot tea” as the best guess of what her cup contains. This prediction in turn generates a top-down cascade of increasingly specific further predictions about the sensations that a cup of tea should cause, such as “hotness.” Meanwhile, imagine that the drink in her cup is actually iced tea, producing the sensory observation of “coldness.” As predictions such as hotness cascade downward and evidence such as coldness cascades upward along abstraction hierarchies, perception loops can harness the gaps between these information flows to solve the perception problem using ascending feedback control. Specifically, a perception loop takes top-down predictions (e.g., hotness) as its targets, compares them to the bottom-up sensory evidence (e.g., coldness) as its input, and updates the certainty tags of the predictions as its output (e.g., weaken the certainty tag of “hot tea,” strengthen that of “iced tea”). This process can be repeated until all perceptual gaps are sufficiently minimized (see Fig. 6.4).
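A single perception loop of this kind can be sketched as follows. The sketch assumes a simple Bayes-like reweighting in which each certainty tag is multiplied by how well the model's prediction fits the evidence and the tags are then renormalized; this update rule, the temperature values, and the function name `perception_step` are illustrative assumptions, not the chapter's specification.

```python
def perception_step(certainty, predictions, evidence):
    """One ascending-control step: reweight certainty tags by prediction-evidence fit."""
    # Fit is high when the predicted sensation is close to the sensed one (small gap).
    fit = {m: 1.0 / (1.0 + abs(predictions[m] - evidence)) for m in certainty}
    unnormalized = {m: certainty[m] * fit[m] for m in certainty}
    total = sum(unnormalized.values())
    return {m: value / total for m, value in unnormalized.items()}


# Predicted drink temperature in degrees Celsius for each model (hypothetical values).
predictions = {"hot tea": 70.0, "iced tea": 5.0}
certainty = {"hot tea": 0.9, "iced tea": 0.1}  # she ordered tea, so "hot tea" starts strong

for _ in range(2):  # two sips, each sensed as cold
    certainty = perception_step(certainty, predictions, evidence=6.0)

print(certainty)  # the tag of "iced tea" now exceeds that of "hot tea"
```

The predictions themselves stay fixed in this sketch; only the certainty tags on the upper layer change, which is what makes the control ascending.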

Fig. 6.4 Perception loops using ascending feedback control between successive abstraction layers to match predictions to sensory evidence. Top-down flow of information corresponds to increasingly specific sensory predictions. Bottom-up flow of information corresponds to increasingly abstract sensory evidence. Ascending feedback control loops operating between pairs of layers use gaps between predictions and evidence to update certainty tags of predictions on the upper layer.

Fig. 6.5 Action loops using descending feedback control between successive abstraction layers to match means to ends. Top-down flow of information corresponds to increasingly specific action tendencies. Bottom-up flow of information corresponds to increasingly abstract action outcomes. Descending feedback control loops operating between pairs of layers use gaps between action tendencies and action outcomes to update valence tags of action outcomes on the lower layer.

Perception loops minimize gaps between many pairs of abstraction layers in parallel. For instance, the target layer of the previous example, where the models “hot tea” and “iced tea” reside, is simultaneously the input layer to a more abstract feedback loop whose target layer contains a schema representing how cafés work. The higher loop takes the evidence produced by the lower loop that the cup might contain iced tea as its input and compares it to predictions such as “receiving the hot tea that was ordered” produced by the schema. It detects a gap and converts it into a change to the broader schema, for instance by inferring that the barista must have misunderstood the original order to mean iced tea. Iterative and hierarchically parallel ascending feedback control can therefore underlie increasingly complex perceptual and cognitive phenomena from perception to categorization, attribution, judgment, and so forth (Clark, 2013; Friston, 2010; Seth, 2015).

Mirroring how ascending feedback control loops address the perception problem, descending feedback control loops address the action problem of selecting situation-specific means to valence-tagged ends. Action loops work with predictions that represent how the world ought to be in the future (i.e., ends) and how it would be if different action tendencies were enacted (i.e., means). The computational task for the action loop is to strengthen the action tendencies that promise to be most effective means to an end in a given situation. This can be done by running descending feedback control loops between hierarchical layers of mental models (Adams et al., 2013; Shadmehr, Smith, & Krakauer, 2010; Todorov, 2004). The target positions of such loops are occupied by ends, such as the broad action tendency to “drink tea” that might be activated when a person enters a café (Fig. 6.5). The input to such loops consists of the expected outcomes of specific actions afforded by the situation, such as ordering different beverages from the barista. The action loop can now detect gaps between the end state (drink tea) and the predicted action outcomes (getting the ordered tea vs. getting the ordered coffee) and update the valence tags of the actions that yield the smallest gap (strengthen the positive tag for ordering tea, weaken the positive tag for ordering coffee).
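The valence update performed by a single action loop can be sketched in the same spirit. The sketch assumes an all-or-nothing gap (a predicted outcome either matches the end or it does not) and a proportional update of valence tags; the function name `action_step`, the `rate` parameter, and the starting valences are hypothetical.

```python
def action_step(end_state, predicted_outcomes, valence, rate=0.5):
    """One descending-control step: shift valence toward means that close the gap to the end."""
    updated = {}
    for action, outcome in predicted_outcomes.items():
        gap = 0.0 if outcome == end_state else 1.0   # assumed binary gap measure
        desired_valence = 1.0 - gap                  # small gap means a more positive tag
        updated[action] = valence[action] + rate * (desired_valence - valence[action])
    return updated


predicted_outcomes = {
    "order tea": "having tea",
    "order coffee": "having coffee",
}
valence = {"order tea": 0.5, "order coffee": 0.5}  # both start equally attractive

valence = action_step("having tea", predicted_outcomes, valence)
print(valence)  # the tag for ordering tea is strengthened, that for ordering coffee weakened
```

Here the end state is held fixed and only the tags of the lower-layer means change, which is what makes the control descending.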

Action loops minimize gaps between many abstraction layers in parallel. This is helpful for implementing relatively abstract action tendencies such as “drink tea” that can require different combinations of specific means depending on the characteristics of a situation, such as whether orders are taken at the table or at the counter in a particular café. Once a relatively abstract action loop has valence tagged an action outcome such as “order tea” as an effective means to the end of “drink tea,” a less abstract action loop can treat “order tea” as its end state and find that it would be served well by a means such as being over at the counter. An even less abstract loop may then valence-tag walking as a suitable means toward the end of being at the counter and so forth. Conversely, an end such as “drink tea” may itself have become an action tendency within an action loop serving a more abstract end such as adhering to the social convention of ordering something in a café. In effect, descending feedback control extends the valence tags from more to less abstract predictions until a way to change the world is found (Fishbach, Shah, & Kruglanski, 2004). This operating principle allows action loops to flexibly identify effective courses of action to strive for end states across different and changing situations.
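The way valence tags are extended across layers until an enactable action is found can be sketched as a walk down means-ends links. The sketch assumes that each end has a list of candidate means already ranked by valence for the current situation; the function name, the two situation tables, and the table-service means are hypothetical additions made only to show situation dependence.

```python
def means_end_chain(end, means_for, enactable):
    """Follow means-ends links from an abstract end down to an enactable action."""
    chain, seen = [end], {end}
    current = end
    while current not in enactable:
        options = [m for m in means_for.get(current, []) if m not in seen]
        if not options:
            return None  # no way to change the world was found
        current = options[0]  # assumes means are pre-ranked by valence
        chain.append(current)
        seen.add(current)
    return chain


# Hypothetical means-ends links for two kinds of café.
COUNTER_SERVICE = {
    "drink tea": ["order tea"],
    "order tea": ["be at the counter"],
    "be at the counter": ["walk to the counter"],
}
TABLE_SERVICE = {
    "drink tea": ["order tea"],
    "order tea": ["catch the waiter's attention"],
    "catch the waiter's attention": ["raise a hand"],
}
ENACTABLE = {"walk to the counter", "raise a hand"}

print(means_end_chain("drink tea", COUNTER_SERVICE, ENACTABLE))
print(means_end_chain("drink tea", TABLE_SERVICE, ENACTABLE))
```

The same abstract end yields different concrete actions in the two cafés, which is the flexibility that hierarchical action loops are meant to provide.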

Perception and action processes are deeply interwoven (Hamilton et al., 2007; Hommel et al., 2001; Ridderinkhof, 2014). For instance, perception makes use of simulated action outcomes to infer how different states of the world might have come about (Hesslow, 2012). Similarly, action makes use of perceived action outcomes to fine-tune motor control (Todorov, 2004). We therefore view each valuation system as a collection of functionally coupled perception and action loops (Fig. 6.6). A primary manifestation of perception-action coupling within a valuation system is the emergence of action affordances or perceived opportunities for action a situation offers (Cisek, 2007; Gibson, 1954). In functional terms, action affordances are a series of predictions that are deemed reasonably probable by perception loops and are also linked into a means-ends chain by action loops. For instance, the model of drinking tea functions as an action affordance if it is deemed a probable occurrence in a café by a perception loop and is also related to action tendencies such as ordering tea and walking to the counter by an action loop. Detection of action affordances thus requires perception loops to predict different action outcomes and action loops to organize them into effective means-end chains.

A valuation system. A set of functionally coupled perception and action loops can be thought of as a valuation system. The system operates with a commonly accessible pool of mental models (squares in each row) activated across different layers of abstraction hierarchies. Action affordances emerge from valuation systems as perception loops activate models of states that may follow the current one and action loops organize these predictions into means-ends chains

We have now defined a single valuation system, consisting of coupled perception and action loops that minimize gaps between mental models to solve key adaptive problems. This sketch of the complex dynamics underlying behavior remains incomplete, however, as we also need to consider that behavior usually emerges from several valuation systems acting and interacting in parallel. The existence of many different valuation systems may reflect the evolution of the brain as an expanding set of fairly compartmentalized solutions to fairly circumscribed problems, in addition to a suite of shared and domain-general cognitive resources (Cosmides & Tooby, 2013; Pinker, 1999). Rather than being inefficient, this setup may in fact provide flexibility and robustness to behavior control (Sterling, 2012). Overt behavior may therefore be best thought of as a distributed consensus between valuation systems focusing on different features of the world as well as on different kinds of end states (Cisek, 2012; Hunt & Hayden, 2017; O’Doherty, 2014; Vickery, Chun, & Lee, 2011). This principle is illustrated by functional specialization in the prefrontal cortex between regions evaluating information from different sources such as exteroceptive and interoceptive senses, visceral and skeletal motor systems, episodic simulation, and metacognitive representations of actions, emotions, and the self (Dixon, Thiruchselvam, Todd, & Christoff, 2017).

Given the existence of different valuation systems, how can their contributions be integrated without producing contradictory behavior, such as someone reaching simultaneously for an apple and a chocolate bar, and failing to grasp either? One possibility is that behavioral consistency emerges from competitions between mental models. Both perceptual and action decisions appear to involve sequential accumulation of “evidence” in favor of alternatives until one crosses a threshold and emerges as a discrete winner (Bogacz, 2007; Gold & Shadlen, 2007; Ratcliff, Smith, Brown, & McKoon, 2016; Yoo & Hayden, 2018). Within our perspective, this implies that discrete perception of some models as real emerges from sequential accumulation of certainty tags and discrete commitment to act emerges from sequential accumulation of valence tags. For instance, a decision to grasp an apple over a chocolate bar can ensue when the valence tag on the action tendency to grasp an apple reaches a decision threshold sooner than the valence tag on the tendency to grasp the chocolate bar. Notably, competitions can occur at different abstraction layers in parallel. For instance, in parallel with the competition between grasping action tendencies, another competition may have occurred on a higher layer of abstraction between end-like tendencies such as eating something tasty or eating something healthy. As valuation systems facilitate both ascending and descending information flows, the competitions on different layers influence each other. For instance, as the tendency to eat in a healthy manner is strengthened, it will function as one source of the evidence that can tip the competition between grasping actions in favor of grasping for the apple rather than the chocolate bar.
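A threshold race of this kind can be sketched in a few lines. The accumulation rates, the threshold, and the size of the top-down bias below are arbitrary values chosen for illustration, and the sketch is deterministic, whereas the accumulation models cited above typically include noise.

```python
def race(rates, threshold=1.0, max_steps=1000):
    """Accumulate valence for each action tendency step by step.

    Returns the first tendency to cross the threshold and the step at
    which it did so, or (None, max_steps) if nothing crosses in time.
    """
    totals = {name: 0.0 for name in rates}
    for step in range(1, max_steps + 1):
        for name, rate in rates.items():
            totals[name] += rate
        crossed = [name for name, total in totals.items() if total >= threshold]
        if crossed:
            # If several cross on the same step, the largest total wins.
            return max(crossed, key=lambda name: totals[name]), step
    return None, max_steps


# Hypothetical per-step accumulation rates for the two grasping tendencies.
base = {"grasp apple": 0.10, "grasp chocolate": 0.12}

# A strengthened "eat something healthy" tendency on a more abstract layer
# is modeled here as extra evidence flowing down to the apple tendency.
biased = dict(base)
biased["grasp apple"] += 0.05

print(race(base)[0])
print(race(biased)[0])
```

Without the descending bias the chocolate tendency reaches the threshold first; with it, the apple tendency does, which corresponds to the cross-layer influence described above.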

The Emergence of Motivation

We have now considered how valuation systems are formed when hierarchical mental models are combined with feedback control operating in perception and action loops. From our valuation systems perspective, it is these dynamic interactions within and between valuation systems that give rise to the emergent motivational properties of force and direction.

Complex dynamics can give rise to emergent properties and often do so across many levels of increasing complexity. To take an example from the physical domain, some properties of water—such as adhesion to other molecules—emerge as soon as hydrogen and oxygen atoms form a water molecule. By contrast, other properties of water—such as the orderly structure of ice crystals—emerge from interactions involving larger numbers of water molecules. In a similar fashion, behavior can be characterized by different kinds of force and direction that emerge along a gradient of complexity.

In the sections that follow, we identify motivational phenomena that emerge at three levels of complexity along this gradient. Each level corresponds to a broad section of the gradient, and transitions between the levels are gradual. On the first inherent motivation level, predictability and competence motives emerge from aggregated gap reduction imperatives of the perception and action loops, respectively. On the second intentional motivation level, goal commitment and goal pursuit cycles emerge from synchronized valuation systems. On the third identity motivation level, identity and self-regulation emerge from synchronized goal pursuit cycles.

As we consider each of these emergent phenomena, we will argue that they can become reflected in conscious awareness as affective feelings that orchestrate system-wide responses and facilitate learning from experience (Carver & Scheier, 1990; Chang & Jolly, 2018; Lang & Bradley, 2010; Pessoa, 2018; Weiner, 1985). We consider feelings to be affective when they contain the evaluative property of goodness vs badness. This suggests that all affective feelings reflect the valence tags of relevant mental models, as they are retrieved and updated. However, not all valence tags are reflected in feelings as valence tags can also be retrieved and updated outside conscious awareness.

From the perspective of understanding motivation, affective feelings have two important functions. First, they can modulate several distributed valuation systems at once. For instance, affective feelings prioritize relevant world states within mental competitions (Frijda, 2009), constrict and broaden the scope of information processing (Gable & Harmon-Jones, 2010), and make certain action families more or less prepotent (Frijda, 1987). Affective feelings are therefore one way in which emergent motivational phenomena can influence the processes they emerge from. The second function of affective feelings is to produce a learnable piece of information. As a conscious reflection of an otherwise hidden process, an affective feeling makes motivational phenomena part of the world that can be explained by mental models (Barrett, 2017; Seth, 2013). As such models are stored in memory, they can influence the operation of valuation systems upon future encounters of similar situations. For instance, a memory trace of relaxation brought about by a cup of tea can strengthen an action tendency to have another cup of tea in the future.

Inherent Motivation: Predictability and Competence

The first novel feature to emerge from a constellation of valuation systems involves an aggregation of the gap reduction imperatives within individual feedback control loops into the motives of predictability and competence (cf. Mineka & Hendersen, 1985). Feedback control loops generate elemental motivational force by transforming otherwise inert differences between mental models into changes to certainty and valence tags. Individually, each change generated in this way may fall short of being a consistent form of motivation, as it may not become manifest in behavior. Collectively, however, the outputs of all active feedback control loops give rise to the emergent motives of predictability and competence.

The predictability motive emerges from the aggregate imperatives to minimize gaps in perception loops. This motive manifests as a desire to understand the world, over and above any desire to influence it. Constructs that overlap with the predictability motive include epistemic motivation (De Dreu, Nijstad, & van Knippenberg, 2008) and the needs for optimal predictability (Dweck, 2017), for confidence (Cialdini & Goldstein, 2004), for cognition (Cacioppo & Petty, 1982), for closure (Kruglanski & Webster, 1996), and for understanding (Stevens & Fiske, 1995). Our perspective suggests that these constructs relate to an imperative to minimize perceptual gaps by finding mental models that explain information arriving from the world. Sometimes, sufficiently accurate models can simply be retrieved from memory. This in itself can be motivating as indicated by the allure of quizzes and crossword puzzles. At other times, new models need to be constructed by combining new information with information that is already known. The predictability motive therefore also contributes to behaviors that facilitate the development of mental models such as strategic observation and intuitive experimentation (Gopnik & Schulz, 2007).

The competence motive emerges from the aggregate imperative to minimize gaps in action loops. This motive manifests as a desire to be able to impact the world over and above any ensuing rewards and punishments (Abramson, Seligman, & Teasdale, 1978; Bandura, 1977; Leotti, Iyengar, & Ochsner, 2010; Skinner, 1996). Constructs that overlap with the competence motive include the needs for competence (Deci & Ryan, 2000; Dweck, 2017), for achievement (McClelland, Atkinson, Clark, & Lowell, 1953), for control (Burger & Cooper, 1979), and for effectance (Stevens & Fiske, 1995; White, 1959). Our perspective suggests that these constructs relate to an imperative to minimize action gaps by finding effective means to various ends. Over short time scales, this can be accomplished without overt action, by computations within valuation systems that organize scattered predictions into coherent means-ends chains, or action affordances. This in itself can be motivating as indicated by the aversion people feel to situations where their freedom to act is restricted. Over longer time scales, minimizing action gaps requires overt action and feedback to acquire and hone new skills. The competence motive therefore contributes to behaviors that facilitate skill acquisition such as play and exploration (Pellegrini, 2009).

Interestingly, people’s preferences for predictability and competence appear to taper off above some optimal level (Dweck, 2017). For instance, people tend to like music in which they can predict many but not all changes in melody and rhythm (Eerola, 2016). Similarly, people tend to enjoy games in which they have good but not perfect control over winning (Abuhamdeh & Csikszentmihalyi, 2012). It appears that neither complete predictability nor complete competence is necessarily desirable. One explanation for this is that the predictability and competence motives are satisfied not only by the state of minimized perception and action gaps but also by the progress in minimizing them. Focusing only on the size of perception and action gaps, and not on their dynamics, can be short-sighted as it may preclude the individual from exploring new environments and acquiring new skills. For instance, playing one level of a multilevel computer game over and over again would soon provide minimal perception and action gaps as event sequences and action outcomes become fully known. However, sticking to one level would preclude the player from discovering new environments and acquiring new skills. Players’ general eagerness to progress to new levels suggests that people are motivated by progressive decreases in perception and action gaps and not only by their low levels. The nonlinearity of the predictability and competence motives may therefore help maintain a balance between exploiting and exploring the environment (Cohen, McClure, & Yu, 2007; Friston et al., 2015).

As they emerge from distributed valuation systems, predictability and competence motives can give rise to affective feelings. Conscious reflections of the predictability motive include the feelings of surprise, confusion and curiosity that have been associated with directing cognitive resources toward understanding (D’Mello, Lehman, Pekrun, & Graesser, 2014; Loewenstein, 1994; Silvia, 2008; Wessel, Danielmeier, Morton, & Ullsperger, 2012). Our perspective suggests that these feelings reflect to-be-minimized gaps within perception loops. Surprise, elicited by unexpected events, should correspond to perception gaps caused by sensory evidence contradicting recent predictions about the future. Confusion and curiosity, by contrast, should correspond to perception gaps caused by sensory evidence contradicting predictions about the present, i.e., difficulties in finding mental models that would explain the current state of the world.

Conscious reflections of the competence motive may include the feelings of frustration and boredom that have been associated with regulation of effort and exploration (Geana, Wilson, Daw, & Cohen, 2016; Louro, Pieters, & Zeelenberg, 2007; Westgate & Wilson, 2018). Our perspective relates these feelings to gaps within action loops. Frustration should arise from gaps remaining within action loops because of difficulties in detecting feasible action affordances or means-end chains that would take the individual from the current state of affairs toward some end state. Boredom, by contrast, should arise when the gaps in action loops are minimized to such a high degree that the individual runs the risk of missing opportunities to learn new skills, i.e., of sacrificing exploration to exploitation (Geana et al., 2016).

Predictability and competence motives form the first level of motivation to emerge from distributed valuation systems. This level is relatively low on the gradient of complexity as predictability and competence motives arise from simple aggregation of the gap reduction imperatives within perception and action loops. Over and above predictability and competence, people prefer to understand some things more than others and to be competent in some activities more than in others. We argue that these motives result from the more complex forms of motivation relating to goals and identity, which we will consider in the next two subsections.

Intentional Motivation: From Goal Commitment to Goal Pursuit

The motivational phenomena emerging on the second level along the gradient of complexity range from goal commitment to goal pursuit. Goal commitment, or incentive salience (Berridge, 2018), is what distinguishes the few goals that dominate behavior at a given time from the many other potential goals or ends that action loops also consider. Goal pursuit is the relatively coherent and persistent behavior aimed at reducing goal gaps between the current and desired states of the world (Moskowitz & Grant, 2009). In this section, we suggest that synchronous certainty and valence tagging across several valuation systems give rise to committed goals and goal pursuit cycles.

At any given time, people are committed to pursue only a subset of activated action tendencies—the future states with valence tags suggesting they should be approached or avoided (Elliot & Fryer, 2008; Klein, Wesson, Hollenbeck, & Alge, 1999). For example, a person in a café might exhibit action tendencies to “talk to people” as well as to “read the news” but become committed to only one of these goals. What determines which one? Expectancy-value accounts of motivation suggest that people generally commit to end states that are sufficiently valuable as well as sufficiently probable (Atkinson, 1957; Eccles & Wigfield, 2002; Hull, 1932; Steel & König, 2006; Weiner, 1985). Expressed in terms of our perspective, committed goals are therefore predictions with sufficiently strong certainty and valence tags. For a prediction such as “talk to people” to emerge as a goal, it thus needs to be considered sufficiently probable by perception loops as well as a sufficiently feasible means toward some end by action loops. Crucially, the more ends a prediction serves, the stronger its valence tag can be. For instance, “talk to people” may win commitment over “read the news” because even as both action tendencies are feasible means to the end of “avoid boredom,” only talking to people is also a feasible means to the end of “find a companion.” We therefore suggest that predictions generally become goals through synchronized consideration by several valuation systems.
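The café example can be made concrete with a small expectancy-value sketch. The multiplicative combination of certainty and summed valence is a classic expectancy-value assumption rather than a formula stated in this chapter, and all numbers and names below are hypothetical.

```python
def commitment_strength(certainty, valence_by_end):
    """Combine a prediction's certainty tag with the valence it earns
    from every end it serves (assumed here to be simply additive)."""
    return certainty * sum(valence_by_end.values())


# Hypothetical tags: (certainty, valence contributed by each end served).
candidates = {
    "talk to people": (0.8, {"avoid boredom": 0.5, "find a companion": 0.6}),
    "read the news": (0.9, {"avoid boredom": 0.5}),
}

scores = {
    name: commitment_strength(certainty, valences)
    for name, (certainty, valences) in candidates.items()
}
committed = max(scores, key=scores.get)
print(committed)
```

Although reading the news is slightly more certain in this illustration, talking to people serves two ends rather than one and therefore carries the stronger combined tag.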

The emergence of a goal can in turn amplify the synchronization between different valuation systems. Goal commitment is often accompanied by substantial prioritization of goal-relevant perception and action at the expense of alternatives (Landhäußer & Keller, 2012; Shah, Friedman, & Kruglanski, 2002). Our framework explains this by the synchronizing impact a prediction with strong certainty and valence tags can have on perception and action loops. As a prediction with a strong certainty tag, a committed goal activates a number of goal-relevant predictions within perception loops. For instance, someone committed to drinking tea may be imagining what drinking tea feels like, thinking about where to get tea, and recalling a recent article about the health effects of drinking tea. As a prediction with a strong valence tag, a committed goal also generates further goal-relevant action tendencies within action loops. For instance, the person wanting tea may imagine walking to one café, driving to another, and preparing tea at the office. The simultaneous impacts on perception and action loops manifest in goal-relevant information becoming more easily detected, more thoroughly processed, and more difficult to ignore, often at the expense of models that are less relevant for the goal, contributing to the related phenomena of motivated attention (Pessoa, 2015; Vuilleumier, 2015) and goal shielding (Shah et al., 2002).

Another consequence of increased synchrony between perception and action loops is the reliable emergence of a previously unavailable signal of goal gap. Goal gap represents the distance between how the world is perceived to be and how it is desired to be according to the goal (Chang & Jolly, 2018; Elliot & Fryer, 2008; Kruglanski et al., 2002). This signal is distinct from both the perception and action gaps that are computed within valuation systems. A goal gap compares the world as it is according to the most certain models to how it should be according to the committed goal. By contrast, a perception gap compares the world as it might be according to various predictions to how it is according to sensory evidence, and an action gap compares the world as it would be in some end state to how it would be owing to some action. A goal gap is an important additional piece of information that complements the value (valence tag) and expectancy (certainty tag) associated with a goal. The goal gap indicates how much more work and time might be needed before a goal can be attained. Integration of recent goal gap changes can further function as a speedometer indicating whether success in goal pursuit is accelerating or decelerating. These pieces of information are known to be pivotal to the force and direction with which people strive for goals (Carver & Scheier, 2011; Chang & Jolly, 2018; Louro et al., 2007).
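The speedometer idea can be sketched as differences over a short history of goal gaps. The gap values and function names are hypothetical, and treating velocity as a simple first difference is an assumption made for illustration.

```python
def gap_velocities(gaps):
    """Reduction in goal gap between consecutive observations
    (positive values mean the goal is getting closer)."""
    return [earlier - later for earlier, later in zip(gaps, gaps[1:])]


def is_accelerating(gaps):
    """True if the most recent gap reduction exceeds the one before it."""
    velocities = gap_velocities(gaps)
    return len(velocities) >= 2 and velocities[-1] > velocities[-2]


# Hypothetical goal gaps observed over four successive moments.
gaps = [10, 8, 5, 1]
velocities = gap_velocities(gaps)
print(velocities, is_accelerating(gaps))
```

The gap itself indicates how much work remains, its first difference indicates the rate of progress, and the change in that rate indicates whether success is accelerating or decelerating.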

The final unique property to emerge from distributed valuation systems on the second level of complexity is the goal pursuit cycle that implements descending feedback control to minimize goal gaps (Fig. 6.7a). Recall that descending feedback control, which is also operative within action loops, involves iteratively changing an input to minimize a gap between the input and a target. Within the goal pursuit cycle, the target position is occupied by the goal, the input position by the current state of the world, and the output position by a desired change to the world. As it iterates, the goal pursuit cycle seeks to minimize the goal gap by changing the world. This function emerges from the operation of distributed valuation systems in the sense that the goal pursuit cycle relies on perception loops for its input and action loops for its output. The current state of the world, which is compared to the goal, is produced by the collective operation of perception loops. Likewise, the change to the world that the goal pursuit cycle outputs is produced by the collective operation of action loops that translate the desired change to the world into action tendencies by valence tagging increasingly specific predictions. The goal pursuit feedback control cycle is therefore an emergent process that recapitulates the structure of descending feedback control.

Emergence of goal pursuit. (a) Goal pursuit as an emergent feedback control cycle that takes the perceived reality produced by perception loops as input, compares it to the committed goal as its target, and uses action loops to change the world as its output. (b) A schematic rendering of the processes in (a) that focuses on four iterative steps of the goal pursuit cycle

The goal pursuit process can be redrawn as a simpler cycle consisting of four key steps of World, Perception, Valuation, and Action (Fig. 6.7b). Consider for instance someone committed to a goal to assemble a piece of furniture such as a shelf. The World step of the goal pursuit cycle denotes the current state of the world with a disassembled shelf. At the Perception step, mental models are found to capture goal-relevant information such as pieces of the shelf and affordances for connecting them to each other. At the Valuation step, the perceived disassembled shelf is compared to the committed goal of an assembled shelf, and the gaps between the two are detected. At the Action step, action tendencies intended to reduce the goal gap are generated and, as long as the pursuit of the given goal remains a priority, enacted. Next, all steps of the loop are repeated to adjust behavior to the outcomes of the actions and other changes in the world. The loop generally iterates until the goal gap has been minimized, unless people are also motivated to maintain the absence of the gap (Ecker & Gilead, 2018). The loop can also disintegrate when the goal loses its committed status (Carver & Scheier, 2005).
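The four steps can be sketched as a simple loop using the shelf example. The part names, the representation of the world as a set of attached parts, and all function names are assumptions introduced for illustration; perception is treated as perfectly accurate and every action as successful, which real goal pursuit does not guarantee.

```python
# Committed goal: the set of parts that should be attached (hypothetical).
GOAL = {"left panel", "right panel", "top board", "bottom board"}


def perceive(world):
    """Perception step: model the goal-relevant state of the world."""
    return set(world["attached"])


def evaluate(perceived, goal):
    """Valuation step: the goal gap is the set of parts still missing."""
    return goal - perceived


def act(world, gap):
    """Action step: change the world by attaching one missing part."""
    world["attached"].add(sorted(gap)[0])


def pursue(world, goal, max_iterations=20):
    """Iterate World -> Perception -> Valuation -> Action until the goal
    gap is closed. Returns the gap size observed on each iteration."""
    history = []
    for _ in range(max_iterations):
        gap = evaluate(perceive(world), goal)
        history.append(len(gap))
        if not gap:
            break
        act(world, gap)
    return history


world = {"attached": set()}  # World step: a fully disassembled shelf
history = pursue(world, GOAL)
print(history)
```

The recorded history shrinks by one part per iteration until the gap reaches zero, at which point the loop stops, corresponding to a cycle that iterates until the goal gap has been minimized.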

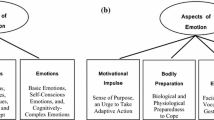

These intentional motivational phenomena can be reflected in awareness through their contributions to achievement emotions such as hope and anxiety, contentment and disappointment, or relief and despair (Harley, Pekrun, Taxer, & Gross, 2019; Pekrun, 2006; Weiner, 1985). This is because emotions rely on appraisal processes that represent the relationship between a situation and goals (Moors, 2010; Smith & Lazarus, 1993), leading to loosely orchestrated changes in the mind and the body (Barrett, Mesquita, Ochsner, & Gross, 2007; Moors, Ellsworth, Scherer, & Frijda, 2013; Mulligan & Scherer, 2012). In terms of our framework, the appraised relationships between a situation and goals overlap with the goal gaps computed at the Valuation step of the feedback control goal pursuit cycle (Chang & Jolly, 2018; Moors, Boddez, & De Houwer, 2017; Uusberg, Taxer, Yih, Uusberg, & Gross, 2019). In particular, goal gaps are closely aligned with the appraisal of goal congruence that is strongly associated with the valence of affective feelings (Scherer, Dan, & Flykt, 2006). Specifically, positive affect is generated when the world is helpful for goals and negative affect is generated when the world is unhelpful for goals. The helpfulness assessment may also take into account the rate of goal progress, leading to positive affect when a goal is getting closer and negative affect when it is not (Carver & Scheier, 1990). Other important appraisal dimensions such as accountability and coping potential can be thought of as abstract features of the mental models that valuation systems have applied to explain the situation.

We have now seen how intentional motivation ranging from goal commitment to goal pursuit can emerge from distributed valuation systems. The synchronized combination of valence and certainty tags produces committed goals and goal pursuit feedback control cycles. These cycles focus perception loops on the extraction of goal-relevant information and action loops on the implementation of desired changes to the world. One consequence of the emergence of goal pursuit is the temporal and cross-situational durability of the impact a committed goal has on behavior. For instance, someone who has already spent some time queuing for a concert ticket may be more resistant to giving up than someone who has not yet begun. However, the temporal durability of some goals exceeds what can be explained by intentional motivation alone, suggesting a role for a third level of motivation to emerge along the gradient of complexity that we discuss next.

Identity Motivation: From Self to Self-Regulation

The motivational phenomena to emerge on the identity motivation level include identity, or a valued sense of self (Berkman, Livingston, & Kahn, 2017), and self-regulation, or biasing of behavioral impulses serving more imminent goals in favor of pursuits of more distant goals (Berkman et al., 2017; Kotabe & Hofmann, 2015; O’Leary, Uusberg, & Gross, 2017). We propose that these motivational phenomena emerge from distributed valuation systems on the third level along the gradient of complexity. In this section, we will argue that identity and self-regulation can be seen as meta-level versions of goal commitment and goal pursuit. Specifically, we view identity as a commitment to attain certain goals and self-regulation as a feedback control pursuit of certain goal pursuits.

Identity as well as self-regulation revolve around highly abstract mental models that denote the self. Mental models of the self can be viewed as conjunctions of various other models that represent self-related information such as personal characteristics, social roles, long-term goals, and personal narratives (Dweck, 2013; Gillihan & Farah, 2005; McAdams, 2013). These self-related mental models arise within perception loops to help make sense of what is going on inside and outside of the person. Self-models with a sufficiently good match to evidence populate a person’s self-awareness. Over time, some self-models can obtain persistent certainty tags and become part of perceived reality irrespective of momentary evidence, underlying a person’s self-concept.

Self-models can also function as committed goals or end states that an individual seeks to turn into reality. We refer to such goals as identity. Our perspective suggests that self-models amount to identity the same way any mental model becomes a goal: by acquiring sufficiently strong valence and certainty tags. Identity includes parts of the self-concept that are persistently tagged with positive or negative valence, giving rise to the phenomenon of self-esteem (Mann, Hosman, Schaalma, & de Vries, 2004). We suggest that the need for self-coherence or the self-verification motive can be understood as a commitment to positively valenced aspects of the self-concept (Dweck, 2017; Leary, 2007; Swann, 1982). A related but distinct component of identity is the ideal self (Higgins, 1987), which can be viewed as a set of self-models that are strongly valence tagged, insufficiently certainty tagged to already belong to the self-concept, but sufficiently certainty tagged to emerge as a committed goal. The striving for this aspect of identity overlaps with motivational phenomena such as self-enhancement and self-protection (Alicke & Sedikides, 2009; Leary, 2007).

We suggest that identity can synchronize valuation systems the same way all committed goals do and should therefore produce goal shielding and goal gaps. This prediction aligns with findings that self-related information is prioritized in various information processing stages, indicating that identity can indeed produce goal shielding (Alexopoulos, Muller, Ric, & Marendaz, 2012). Identity can also produce goal gaps, or representations of the distance between the self as it is perceived to be and how it is desired to be. For instance, people can have a strong sense of being incongruent with their self-concept (Swann, 1982) and not living up to their ideal selves (Alicke & Sedikides, 2009).

The unique feature to emerge on the third level of the gradient of complexity is the recursive, or meta-level, nature of identity and self-regulation. Identity, a type of goal, can be thought of as a goal about other goals. The intentional-level goals are often in competition as people regularly juggle different pursuits in parallel. For instance, a meeting with a colleague can involve working on several agenda items, maintaining the relationship, handling phone notifications, and dealing with bodily signals such as thirst. The number of parallel goals people care about increases with the temporal window of analysis, as we move from a moment to a day, to a week, to a year, or to the foreseeable future. Identity can be seen as one mechanism through which some goals will become prioritized over others (Berkman et al., 2017). For instance, when the meeting described above grows overwhelming, a person who identifies with being an efficient manager but not with being a nice person may sacrifice the goal of managing the relationship in service of managing the agenda. The person’s identity has therefore functioned as a goal to prioritize one goal over another.

The recursiveness of identity motivation is also visible when self-regulation is viewed as an identity pursuit gap reduction feedback loop. Self-regulation is what is needed to stop oneself from consuming pleasant substances that are harmful in the long run or to sacrifice activities with a short-term payoff such as watching TV for activities with a long-term payoff such as exercising. A common element in these situations, and a defining feature of self-regulation, is the competition between the pursuit of shorter-term goals and the pursuit of longer-term goals (Berkman et al., 2017; Duckworth, Gendler, & Gross, 2016; Kotabe & Hofmann, 2015; Van Tongeren et al., 2018). The goals or end states that self-regulation seeks to alter thus amount not to any state in the external environment but to the state of competition between different goal pursuits within the individual.

Viewing self-regulation as a form of goal pursuit suggests that self-regulation can also be analyzed as a feedback control process involving the World, Perception, Valuation, and Action steps (see Fig. 6.8). Self-regulation as a feedback control goal pursuit cycle seeks to reduce the gap between some component of identity and the perceived self. A key difference between regular goal pursuit emerging on the intentional motivation level and the meta-level goal pursuit of self-regulation is the nature of the world that these cycles seek to change. Whereas goal pursuit seeks to change the state of the environment, both external and internal, self-regulation seeks to change the state of other goal pursuits. For instance, consider someone trying to overcome a craving for a tasty burger in favor of a healthy and environmentally friendly salad. Self-regulation is needed in this situation not for actually ordering the salad, which would be trivial for action loops within an intentional-level goal pursuit cycle. Rather, self-regulation is needed to shift the balance among the motivational processes emerging on the intentional level to commit to the salad instead of the burger. Thus, the world that self-regulation seeks to change is the state of other goal pursuits (Fig. 6.8).

Self-regulation as identity pursuit. Self-regulation is a feedback control goal pursuit cycle that seeks to minimize a gap between an aspect of identity and the perceived state of the self. It represents the state of relevant ongoing goal pursuits at the Perception step, evaluates them in relation to identity at the Valuation step, and launches regulation strategies at the Action step

The focus on other goals is then propagated to the remaining steps of the self-regulation goal pursuit cycle (Gross, 2015; O’Leary et al., 2017). The Perception step of self-regulation involves perception loops using interoceptive and other evidence to populate self-awareness with appropriate mental models, such as the concept of craving for the burger (Barrett, 2017; Seth, 2013). At the Valuation step, gaps are detected between the perceived state of ongoing goal pursuits and aspects of identity such as being a healthy and ethical person. Finally, the Action step of self-regulation includes overt and covert actions that are directed at changing the state of ongoing goal pursuits, such as deliberately focusing on the negative consequences of eating the burger with the aim of reappraising its allure (Duckworth et al., 2016; Lazarus, 1993; O’Leary et al., 2017). Self-regulation can go through multiple iterations before the identity gap is minimized.
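
The iterative character of this cycle can be illustrated with a minimal sketch. The code below is only a toy illustration of the burger and salad example, not a model proposed in this chapter: the numeric pursuit strengths, the proportional reappraisal rule, and all names (PursuitState, perceive, evaluate, act, self_regulate, identity_target, gain, tolerance) are assumptions introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class PursuitState:
    """The 'world' of self-regulation: strengths of competing goal pursuits.

    The numeric strengths are hypothetical quantities used only for illustration.
    """
    burger: float  # strength of the shorter-term pursuit
    salad: float   # strength of the longer-term pursuit


def perceive(world: PursuitState) -> float:
    """Perception step: represent the balance among ongoing goal pursuits."""
    return world.salad - world.burger


def evaluate(perceived_balance: float, identity_target: float) -> float:
    """Valuation step: gap between an aspect of identity and the perceived self."""
    return max(0.0, identity_target - perceived_balance)


def act(world: PursuitState, gap: float, gain: float) -> PursuitState:
    """Action step: reappraisal weakens the burger pursuit in proportion to the gap.

    The proportional rule is an assumption, chosen because it is the simplest
    form of feedback control.
    """
    weakened = max(0.0, world.burger - gain * gap)
    return PursuitState(burger=weakened, salad=world.salad)


def self_regulate(world: PursuitState, identity_target: float = 0.2,
                  gain: float = 0.5, tolerance: float = 0.01,
                  max_iterations: int = 50):
    """Repeat Perception, Valuation, and Action until the identity gap is small."""
    iterations = 0
    gap = evaluate(perceive(world), identity_target)
    while gap > tolerance and iterations < max_iterations:
        world = act(world, gap, gain)
        gap = evaluate(perceive(world), identity_target)
        iterations += 1
    return world, gap, iterations


if __name__ == "__main__":
    final_world, final_gap, n = self_regulate(PursuitState(burger=0.8, salad=0.4))
    print(final_world, final_gap, n)
```

In this sketch the action never touches the external environment. It changes only the relative strength of the competing pursuits, which is the sense in which the world of self-regulation is the state of other goal pursuits.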

Identity motivation can also give rise to unique emotional episodes. One class of emotions emerging on this level consists of self-conscious emotions such as pride, shame, and guilt (Leary, 2007; Tracy & Robins, 2004). The appraisal process underlying these emotions assesses the congruence between the situation and aspects of identity, such as one’s social standing. Another class of emotions unique to this level consists of meta-emotions, or emotions arising in response to another emotion. For instance, people can feel negatively about an emotion they experience, such as anxiety, if they have appraised this emotion to be incongruent with a relevant goal such as giving a good presentation (Tamir, 2015). The affective feelings in response to internal states are important triggers of self-regulatory processes such as emotion regulation (Gross, 2015).

We have drawn parallels between committed goals and identity and between goal pursuit and self-regulation. In fact, the structure of the feedback control goal pursuit process can also help us understand the action tendencies produced by the higher-order self-regulatory process (Gross, 1998, 2015). As the goal of identity pursuit is to alter the state of concurrent goal pursuits, it can in principle alter each of the four phases of goal pursuit. First, the self-regulatory loop can alter or modify the world states that goal pursuit processes take as their input. For instance, someone wishing to avoid eating too many sweets may remove sweets from their home. Second, self-regulation can interfere with the Perception step of goal pursuit by directing attention away from thinking about sweets. Third, the self-regulatory loop can interfere with the Valuation step of goal pursuit, for instance by thinking about how a recent meal already provided a sweet experience, thereby making the goal gaps seem smaller. Finally, the self-regulatory loop can launch actions that directly target the tendencies produced by goal pursuit processes, such as suppressing the urge to get some sweets.
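
The four entry points can be made concrete with a small sketch. It is a toy illustration only: the multiplicative rule for the urge, the numeric values, and all names (SweetsPursuit, urge, STRATEGIES) are assumptions introduced here rather than part of the account above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SweetsPursuit:
    """A hypothetical lower-level goal pursuit that self-regulation can target."""
    sweets_at_home: int = 5     # World: what the environment affords
    attention: float = 1.0      # Perception: share of attention on sweets
    perceived_gap: float = 1.0  # Valuation: how large the goal gap seems
    suppression: float = 0.0    # Action: how strongly the urge is inhibited

    def urge(self) -> float:
        """Action tendency produced by the pursuit (assumed multiplicative)."""
        available = 1.0 if self.sweets_at_home > 0 else 0.0
        return (available * self.attention * self.perceived_gap
                * (1.0 - self.suppression))


# One illustrative regulation strategy per step of the targeted goal pursuit.
STRATEGIES = {
    "world": lambda p: replace(p, sweets_at_home=0),                  # remove sweets
    "perception": lambda p: replace(p, attention=p.attention * 0.5),  # look away
    "valuation": lambda p: replace(p, perceived_gap=p.perceived_gap * 0.5),
    "action": lambda p: replace(p, suppression=0.5),                  # suppress urge
}


if __name__ == "__main__":
    baseline = SweetsPursuit()
    print("baseline urge:", baseline.urge())
    for step, strategy in STRATEGIES.items():
        print(step, "->", strategy(baseline).urge())
```

Each strategy leaves the other three steps untouched, which mirrors the idea that self-regulation can intervene at any single phase of the goal pursuit it targets.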

Conclusion

In this chapter, we have presented a valuation systems perspective on motivation. This account relies on a functional analysis of valuation systems that combine mental models with hierarchical feedback control to solve the perception and action problems associated with producing adaptive behavior in a dynamic, rapidly changing world. The perception problem is solved by perception loops that populate perceptual reality with predictions that do the best job of explaining sensory evidence. The action problem is solved by action loops that generate action tendencies by identifying the means that do the best job of approximating end states. Motivational force and direction emerge from the dynamic interactions within and between valuation systems at three broad levels along a gradient of complexity. Inherent motives of predictability and competence arise from aggregated gaps within perception and action loops, respectively. Intentional motivation arises as predictions with sufficient certainty and valence tags become committed goals that synchronize valuation systems and give rise to a goal pursuit cycle that uses descending feedback control to minimize goal gaps. Identity motivation arises from further synchronization of valuation systems into identity, or goals about goals, and self-regulation, or feedback control of goal pursuits. Each of these levels can also give rise to affective feelings that can regulate distributed valuation systems and function as teaching signals.

Motivation as viewed from the valuation systems perspective has three broad characteristics. First, motivation is emergent. There is no stage in the unfolding of behavior at which the motive to act is fully formed and then merely implemented. Instead, action affordances detected by valuation systems are converted to action tendencies across several competing valuation systems. Second, motivation is constructive, as it arises neither from the environment nor from the person in isolation but from an active negotiation between the two within valuation systems. Our perception of the world, of our own goals, and of afforded actions relies on the mental models that perception systems have generated over time and in the moment. This suggests that the mental models we bring to a situation have a substantial impact on the motivation we experience (Dweck, 2017). Third, motivation is allostatic. While homeostatic control seeks to maintain a fixed state of a system, allostatic control seeks to flexibly adjust the state of the system in anticipation of changes in the world (Barrett, 2017; Sterling, 2012; Toomela, 2016). Action loops enact allostatic control by guiding behavior toward predictive mental models across multiple layers of complexity. Taken together, we hope these ideas help move us toward an integrative perspective on motivation.

References

Abramson, L. Y., Seligman, M. E., & Teasdale, J. D. (1978). Learned helplessness in humans: Critique and reformulation. Journal of Abnormal Psychology, 87, 49–74.

Abuhamdeh, S., & Csikszentmihalyi, M. (2012). The importance of challenge for the enjoyment of intrinsically motivated, goal-directed activities. Personality and Social Psychology Bulletin, 38, 317–330. https://doi.org/10.1177/0146167211427147

Adams, R. A., Shipp, S., & Friston, K. J. (2013). Predictions not commands: Active inference in the motor system. Brain Structure and Function, 218, 611–643. https://doi.org/10.1007/s00429-012-0475-5

Alexopoulos, T., Muller, D., Ric, F., & Marendaz, C. (2012). I, me, mine: Automatic attentional capture by self-related stimuli. European Journal of Social Psychology, 42, 770–779. https://doi.org/10.1002/ejsp.1882

Alicke, M. D., & Sedikides, C. (2009). Self-enhancement and self-protection: What they are and what they do. European Review of Social Psychology, 20, 1–48. https://doi.org/10.1080/10463280802613866

Ashby, W. R. (1954). Design for a brain. New York, NY: John Wiley & Sons Inc.

Atkinson, J. W. (1957). Motivational determinants of risk-taking behavior. Psychological Review, 64, 359–372. https://doi.org/10.1037/h0043445

Baldassano, C., Hasson, U., & Norman, K. A. (2018). Representation of real-world event schemas during narrative perception. Journal of Neuroscience, 38, 9689–9699. https://doi.org/10.1523/JNEUROSCI.0251-18.2018

Ballard, D. H. (2017). Brain computation as hierarchical abstraction. Cambridge, MA: The MIT Press.

Bandura, A. (1977). Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review, 84, 191–215. https://doi.org/10.1037/0033-295X.84.2.191

Bar, M. (2007). The proactive brain: Using analogies and associations to generate predictions. Trends in Cognitive Sciences, 11, 280–289. https://doi.org/10.1016/j.tics.2007.05.005

Bargh, J. A., Chaiken, S., Govender, R., & Pratto, F. (1992). The generality of the automatic attitude activation effect. Journal of Personality and Social Psychology, 62, 893–912. https://doi.org/10.1037/0022-3514.62.6.893

Barrett, L. F. (2017). The theory of constructed emotion: An active inference account of interoception and categorization. Social Cognitive and Affective Neuroscience, 12, 1–23. https://doi.org/10.1093/scan/nsw154

Barrett, L. F., Mesquita, B., Ochsner, K. N., & Gross, J. J. (2007). The experience of emotion. Annual Review of Psychology, 58, 373–403. https://doi.org/10.1146/annurev.psych.58.110405.085709

Berkman, E. T., Livingston, J. L., & Kahn, L. E. (2017). Finding the “self” in self-regulation: The identity-value model. Psychological Inquiry, 28, 77–98. https://doi.org/10.1080/1047840X.2017.1323463

Berridge, K. C. (2018). Evolving concepts of emotion and motivation. Frontiers in Psychology, 9, 1647. https://doi.org/10.3389/fpsyg.2018.01647

Binder, J. R. (2016). In defense of abstract conceptual representations. Psychonomic Bulletin & Review, 23, 1096–1108. https://doi.org/10.3758/s13423-015-0909-1

Binder, J. R., Conant, L. L., Humphries, C. J., Fernandino, L., Simons, S. B., Aguilar, M., & Desai, R. H. (2016). Toward a brain-based componential semantic representation. Cognitive Neuropsychology, 33, 130–174. https://doi.org/10.1080/02643294.2016.1147426

Bogacz, R. (2007). Optimal decision-making theories: Linking neurobiology with behaviour. Trends in Cognitive Sciences, 11, 118–125. https://doi.org/10.1016/j.tics.2006.12.006

Braver, T. S. (Ed.). (2016). Motivation and cognitive control. New York, NY: Routledge.

Burger, J. M., & Cooper, H. M. (1979). The desirability of control. Motivation and Emotion, 3, 381–393. https://doi.org/10.1007/BF00994052

Cacioppo, J. T., & Petty, R. E. (1982). The need for cognition. Journal of Personality and Social Psychology, 42, 116–131. https://doi.org/10.1037/0022-3514.42.1.116

Carruthers, P. (2018). Valence and value. Philosophy and Phenomenological Research, 97, 658–680. https://doi.org/10.1111/phpr.12395

Carver, C. S., & Scheier, M. F. (1990). Origins and functions of positive and negative affect: A control-process view. Psychological Review, 97, 19–35. https://doi.org/10.1037/0033-295X.97.1.19

Carver, C. S., & Scheier, M. F. (2005). Engagement, disengagement, coping, and catastrophe. In A. J. Elliot & C. S. Dweck (Eds.), Handbook of competence and motivation (pp. 527–547). New York, NY: Guilford Press.

Carver, C. S., & Scheier, M. F. (2011). Self-regulation of action and affect. In K. D. Vohs & R. F. Baumeister (Eds.), Handbook of self-regulation: Research, theory, and applications (2nd ed., pp. 13–39). New York, NY: Guilford Press.

Chanes, L., & Barrett, L. F. (2016). Redefining the role of limbic areas in cortical processing. Trends in Cognitive Sciences, 20, 96–106. https://doi.org/10.1016/j.tics.2015.11.005

Chang, L. J., & Jolly, E. (2018). Emotions as computational signals of goal error. In A. S. Fox, R. C. Lapate, A. J. Shackman, & R. J. Davidson (Eds.), The nature of emotion: Fundamental questions (2nd ed., p. 21). New York, NY: Oxford University Press.

Cialdini, R. B., & Goldstein, N. J. (2004). Social influence: Compliance and conformity. Annual Review of Psychology, 55, 591–621. https://doi.org/10.1146/annurev.psych.55.090902.142015

Cisek, P. (2007). Cortical mechanisms of action selection: The affordance competition hypothesis. Philosophical Transactions of the Royal Society B: Biological Sciences, 362, 1585–1599. https://doi.org/10.1098/rstb.2007.2054

Cisek, P. (2012). Making decisions through a distributed consensus. Current Opinion in Neurobiology, 22, 927–936. https://doi.org/10.1016/j.conb.2012.05.007

Clark, A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. The Behavioral and Brain Sciences, 36, 181–204. https://doi.org/10.1017/S0140525X12000477

Cohen, J. D., McClure, S. M., & Yu, A. J. (2007). Should I stay or should I go? How the human brain manages the trade-off between exploitation and exploration. Philosophical Transactions of the Royal Society B: Biological Sciences, 362, 933–942. https://doi.org/10.1098/rstb.2007.2098

Colton, J., Bach, P., Whalley, B., & Mitchell, C. (2018). Intention insertion: Activating an action’s perceptual consequences is sufficient to induce non-willed motor behavior. Journal of Experimental Psychology: General, 147, 1256–1263. https://doi.org/10.1037/xge0000435

Corr, P. J., DeYoung, C. G., & McNaughton, N. (2013). Motivation and personality: A neuropsychological perspective. Social and Personality Psychology Compass, 7, 158–175. https://doi.org/10.1111/spc3.12016

Cosmides, L., & Tooby, J. (2013). Evolutionary psychology: New perspectives on cognition and motivation. Annual Review of Psychology, 64, 201–229. https://doi.org/10.1146/annurev.psych.121208.131628

Cunningham, W. A., Zelazo, P. D., Packer, D. J., & Van Bavel, J. J. (2007). The iterative reprocessing model: A multilevel framework for attitudes and evaluation. Social Cognition, 25, 736–760. https://doi.org/10.1521/soco.2007.25.5.736

D’Mello, S., Lehman, B., Pekrun, R., & Graesser, A. (2014). Confusion can be beneficial for learning. Learning and Instruction, 29, 153–170. https://doi.org/10.1016/j.learninstruc.2012.05.003

De Dreu, C. K. W., Nijstad, B. A., & van Knippenberg, D. (2008). Motivated information processing in group judgment and decision making. Personality and Social Psychology Review, 12, 22–49. https://doi.org/10.1177/1088868307304092

de Lange, F. P., Heilbron, M., & Kok, P. (2018). How do expectations shape perception? Trends in Cognitive Sciences, 22, 764–779. https://doi.org/10.1016/j.tics.2018.06.002

Deci, E. L., & Ryan, R. M. (2000). The “what” and “why” of goal pursuits: Human needs and the self-determination of behavior. Psychological Inquiry, 11, 227–268. https://doi.org/10.1207/S15327965PLI1104_01

Dixon, M. L., Thiruchselvam, R., Todd, R., & Christoff, K. (2017). Emotion and the prefrontal cortex: An integrative review. Psychological Bulletin, 143, 1033–1081. https://doi.org/10.1037/bul0000096

Duckworth, A. L., Gendler, T. S., & Gross, J. J. (2016). Situational strategies for self-control. Perspectives on Psychological Science, 11, 35–55. https://doi.org/10.1177/1745691615623247

Dunning, D. (Ed.). (2011). Social motivation. New York, NY: Psychology Press.

Dweck, C. S. (Ed.). (2013). Self-theories: Their role in motivation, personality, and development (2nd ed.). New York, NY: Psychology Press.