Abstract

In this paper, we study the edge metric dimension problem (EMDP). We establish a potential function and give a corresponding greedy algorithm with approximation ratio \(1+\ln n+\ln (\log _2 n)\), where n is the number of vertices in the graph G.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The concepts of metric generators (originally called locating sets) and the concepts of metric dimension (originally called the location number) were introduced by Slater in [17] in connection with uniquely determining the position of an intruder in a network. Harary and Melter [11] discovered the same concepts independently.

We now recall the definition of the metric dimension. Let \(G = (V, E)\) be a simple connected undirected graph. A vertex \(v \in V \) is called to resolve or distinguish a pair of vertices \(u, w \in V\) if \(d(v, u)\ne d(v, w)\), where \(d(\cdot ,\cdot )\) denotes the distance between two vertices in G. A metric generator of G is a subset \(V^{\prime }\subseteq V\) such that for each pair \(u, w\in V\) there exists some vertex \(v\in V^{\prime }\) that distinguishes u and w. The minimum cardinality of a metric generator is called the metric dimension of G, denoted by \(\dim (G)\).

The metric dimension problem (MDP) has been widely investigated from the graph theoretical point of view. Cáceres et al. [3] studied the metric dimension of cartesian products \(G \Box H\), and proved that the metric dimension of \(G \Box G \) was tied in a strong sense to the minimum order of a so-called doubly resolving set in G. They established bounds on \(G \Box H\) for many examples of G and H. Chartrand et al. [7] studied resolvability in graphs and the metric dimension of a graph. It was shown that \(\dim (H)\le \dim (H \Box K_2)\le \dim (H) + 1\) for every connected graph H. Moreover, it was shown that for every positive real number \(\varepsilon \), there exists a connected graph G and a connected induced subgraph H of G such that \(\frac{\dim (G)}{\dim (H)}\le \varepsilon \). Saputro et al. [16] studied the metric dimension of regular bipartite graphs, and determined the metric dimension of k-regular bipartite graphs G(n, n) where \(k=n-1\) or \(k=n-2\). Chappell et al. [6] studied relationships between metric dimension, partition dimension, diameter, and other graph parameters. They constructed “universal examples” of graphs with given partition dimension, and they used these to provide bounds on various graph parameters based on metric and partition dimensions. They formed a construction showing that for all integers \(\alpha \) and \(\beta \) with \(3\le \alpha \le \beta +1\) there exists a graph G with partition dimension \(\alpha \) and \(\beta \). Cáceres et al. [5] studied the metric dimension of infinite locally finite graphs, i.e. those infinite graphs such that all its vertices have finite degree. They gave some necessary conditions for an infinite graph to have finite metric dimension and characterized infinite trees with finite metric dimension.

So far only a few papers have discussed the computational complexity issues of the MDP. The NP-hardness of the MDP was mentioned by Garey and Johnson [10]. An explicit reduction from the 3-SAT problem was given by Khuller et al. [14]. They also obtained for the Metric Dimension problem a \((2 \ln (n) +\Theta (1))\)-approximation algorithm based on the well-known greedy algorithm for the Set Cover problem and showed that the MDP is polynomial-time solvable for trees. Beerliova et al. [1] showed that the MDP (which they call the Network Verification problem) cannot be approximated within a factor of \(O(\log (n))\) unless \(P=NP\). Hauptmann et al. [12] gave a \((1+\ln (|V|)+\ln (\log _2 (|V|)))\)-approximation algorithm for the MDP in graphs.

The concept of a doubly resolving set of a graph G was introduced by Caceres et al. [4]. We say vertices u, v of the graph G doubly resolve vertices x, y of G, if \(d(u, x)-d(u, y)\ne d(v, x)-d(v, y)\). A vertex set S is called a doubly resolving set of G if every two distinct vertices of G are doubly resolved by some two vertices of S.

Kratica et al. [15] proved that the minimal doubly resolving sets problem is NP-hard. Chen et al. [8] designed an \((1+ o(1))\ln n\)-approximation algorithm for the weighted minimum doubly resolving set problem.

The edge metric dimension is a variant of the metric dimension. We now recall the definition of the edge metric dimension. For any \(v \in V\) and \(e=uw\in E\), we use \(d(e, v)=\min \{d(u, v), d(w, v)\}\) to denote the distance between the vertex v and the edge e. We say that two distinct edges \(e_1, e_2 \in E\) are distinguished by the vertex \(v \in V \) if \( d(v, e_1)\ne d (v, e_2)\). A subset \(S\subseteq V\) is said to be an edge metric generator of G if every two distinct edges of G can be distinguished by some vertex in S. An edge metric basis of G is an edge metric generator of G of the minimum cardinality and its cardinality is called the edge metric dimension, denoted by \(\dim _e(G)\).

Kelenc et al. [13] proved that computing the edge metric dimension of connected graphs is NP-hard. As a response to an open problem presented in [13], Zhu et al. [18] considered the maximum edge metric dimension problem on graphs. Zubrilina [19] classified the graphs on n vertices for which \(\dim _e(G)=n-1\) and showed that \(\frac{\dim _e(G)}{\dim (G)}\) is not bounded from above (here \(\dim (G)\) is the standard metric dimension of G). They computed \(\dim _e(G \Box P_m)\) and \(\dim _e(G + K_1)\). Zubrilina [20] discussed the edge metric dimension of the random graph G(n, p) and obtained \(\dim _e(G(n, p)) = (1 + o(1)) \frac{4 \log (n)}{\log (\frac{1}{q})}\), where \(q=1-2p{(1-p)^2}(2-p)\). In this paper, we discuss the edge metric dimension problem.

The paper is organized as follows: In Sect. 2, we construct a normalized, monotone increasing and submodular potential function and give a greedy algorithm for the edge metric dimension problem. In Sect. 3, we show that the algorithm presented in this paper has approximation ratio \(1+\ln n+\ln (\log _2 n)\), where n is the number of vertices in the graph G.

2 Approximation Algorithm

Throughout this paper we assume that the graph \(G=(V,E)\) is simple connected and undirected. In this section, we first construct a potential function and study the properties of the potential function. Then we give a greedy algorithm for the edge metric dimension of G.

Definition 2.1

Let \(\Gamma \) be a subset of V. We define the \(equivalence \ relation\) \(\equiv _{\Gamma }\) for E as follows: for edges \(e_1,e_2\in E\),

Definition 2.2

Let \(\Gamma \) be a subset of V and \(\{E_1,E_2,\ldots ,E_k\}\) be the set of equivalence classes of \(\equiv _\Gamma \) for E. We call the value \(H(\Gamma )=\log _2 ({\prod _{i=1}^k {|E_i|!}})\) the entropy of \(\Gamma \).

For any \(v\in V\), let

It is direct to see that any equivalence class of \(\equiv _\Gamma \) is either an equivalent class of \(\equiv _{\Gamma \cup \{v\}}\) or a union of several equivalence classes of \(\equiv _{\Gamma \cup \{v\}}\).

Lemma 2.3

Let \(\Gamma \) be a subset of V and \(v\in V\). Then \(\Delta _v H(\Gamma )=0\) if each equivalence class of \(\equiv _\Gamma \) is one of \(\equiv _{(\Gamma \cup \{v\})}\); and \(\Delta _v H(\Gamma )>0\) otherwise.

Lemma 2.4

Let \(\Gamma \) be a subset of V. Then \(\Gamma \) is an edge metric generator of G if and only if \(H(\Gamma )=0\).

Proof

Observe that each of the two assertions is equivalent with the assertion that every equivalent class of \( \equiv _\Gamma \) is a singleton. The result follows. \(\square \)

Lemma 2.5

For any two sets \(\Gamma _0\subseteq \Gamma _1\subseteq V\) and any vertex \(v\in V\backslash \Gamma _1\), we have

Proof

If \(\Gamma _ 0= \Gamma _1\), then the lemma holds. If \(\Gamma _0 \subset \Gamma _1\), we divide the proof into two cases: case 1, the vertex v partitions each equivalence class of \(\equiv _{\Gamma _0}\) into at most two equivalence classes; case 2, the vertex v partitions some equivalence class of \(\equiv _{\Gamma _0}\) into at least three equivalence classes.

Case 1. Since \(\Delta _vH(\Gamma _0)=H(\Gamma _0)-H(\Gamma _0\cup \{v\}=\log _2 \frac{|\prod _{\Gamma _0}|}{|\prod _{\Gamma _0\cup \{v\}}|}\), it suffices to show

Write \(S=\Gamma _1\setminus \Gamma _0\). Let \(\{E_1, E_2,\cdots , E_k\}\) be the equivalence classes of \(\equiv _{\Gamma _0}\), \(\{A_1, A_2,\cdots ,A_n\}\) the equivalence classes of \(\equiv _{\Gamma _0 \cup \{v\}}\) and \(\{B_1, B_2,\cdots ,B_t\}\) the equivalence classes of \(\equiv _{\Gamma _1 }\). By the comments above Lemma 2.3 and the assumption, for each i, \(E_i=A_{i_1}\cup A_{i_2}\) and \(E_i\) is a union of some \(B_{i_1},\cdots , B_{i_t}\). Without loss of generality, assume \(t=2\). Let \(F_i=A_{i_1}\cap B_{i_{1}}\), \(H_i= A_{i_2}\cap B_{i_{1}}\), \(C_i=(E_i\cap A_{i_1})\backslash F_i\), \(D_i=(E_i\cap A_{i_2})\backslash H_i\). Let \(|F_i|=f_i\), \(|H_i|=h_i\), \(|C_i|=c_i\), \(|D_i|=d_i\). Then \(|E_i|=f_i+h_i+c_i+d_i\). Since \({{f_i+c_i}\atopwithdelims (){f_i+h_i+c_i+d_i}}\ge {{f_i}\atopwithdelims (){h_i+f_i}}{{c_i}\atopwithdelims (){c_i+d_i}}\), we have

i.e.

Thus

Case 2. Assume that the vertex v partitions each \(E_j\) into \(k_j\) equivalence classes, where \(j=1,2,\ldots ,m\). Let \(k=\max _{j} \{k_j\}\). Then by assumption, \(k\ge 3\). For \(1\le j\le m\), there exist the vertices \(x_1\), \(x _2\),..., \(x _k\) such that \(x_1\) divides \(E_j\) into \(E_{j_1}\) and \(E_j\setminus E_{j_1}\), vertex \(x_2\) divides \(E_j\setminus E_{j_1}\) into \(E_{j_2}\) and \(E_j\setminus { (E_{j_1}\cup E_{j_2}})\), ..., vertex \(x_k\) divides \(E_j\setminus ({E_{j_1}\cup E_{j_2}\cup \ldots \cup E_{j_{k_j-1}}})\) into \(E_{j_{k_j}}\) and \(\emptyset \). Then by the argument in Case 1, we have

\(\square \)

Let \(\mathbb {R}\) be the real number field. We define a function \(f: 2^{V}\rightarrow \mathbb {R}\) by

Lemma 2.6

The function f defined above is normalized, monotone increasing and submodular.

Proof

It is easy to know that \(f(\emptyset )=0\), that is to say, the function f is normalized. By Lemma 2.3, f is monotone increasing. By Lemma 2.5 f is submodular. \(\square \)

Based on the above lemmas, we give a greedy approximation algorithm for the EMDP.

3 Theoretical Analysis

To obtain the ratio of Algorithm 1. We first prove the following lemma.

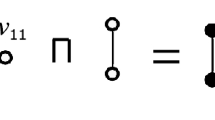

Lemma 3.1

Let \(v_1, v_2,\cdots , v_k\) be the elements in \(\Gamma _g\) in the order of their selection into the set \(\Gamma _g\). Denote \(\Gamma _0 = \emptyset \) and \(\Gamma _i = \{v_1, v_2,\cdots , v_i\}\), for \(i = 1,\cdots , k\). Then for \(i =2, \cdots , k\), we have

Proof

By [2, Lemma 6], it is sufficient to prove \(\Delta _{v_i}f(\Gamma _{i-1})> 0\). Assume \(\Delta _{v_i} f(\Gamma _{i-1})=0\) for some i \((2\le i\le k)\), for a contradiction. Then \(H(\Gamma _{i-1}\cup \{v_i\})=H(\Gamma _{i-1})\). By the greedy strategy, the vertex \(v_i\) can not be chosen in \(\Gamma _g\). A contradiction. \(\square \)

Theorem 3.2

Algorithm 1 produces an approximate solution within a ratio \(1+\ln n+\ln (\log _2 n)\).

Proof

Let \(\Gamma ^{*}\) denote an optimal solution to the edge metric dimension problem. By Lemmas 2.6 and 3.1 and [9, Theorem 3.7], and since \(f(\Gamma ^*)=f(V)=\log _2 (n!)\), the approximation ratio of Algorithm 1 is

\(\square \)

References

Beerliova, Z., et al.: Network discovery and verification. IEEE J. Sel. Area Commun. 24, 2168–2181 (2006)

Berman, P., DasGupta, B., Kao, M.: Tight approximability results for test set problems in bioinformatics. J. Comput. Syst. Sci. 71(2), 145–162 (2005)

Cáceres, J., et al.: On the metric dimension of Cartesian products of graphs. SIAM J. Discret. Math. 21, 423–441 (2007)

Cáceres, J., Hernando, C., Mora, M., Pelayo, I.M., Puertas, M.l., Seara, C., et al.: On the metric dimension of Cartesian products of graphs. SIAM J. Discret. Math. 21(2), 423–441 (2007)

Cáceres, J., Hernando, C., Mora, M., Pelayo, I., Puertas, M.: On the metric dimension of infinite graphs. Electron. Notes Discret. Math. 35, 15–20 (2009)

Chappell, G., Gimbel, J., Hartman, C.: Bounds on the metric and partition dimensions of a graph. Ars Combin. 88, 349–366 (2008)

Chartrand, G., Eroh, L., Johnson, M., Oellermann, O.: Resolvability in graphs and the metric dimension of a graph. Discret. Appl. Math. 105, 99–133 (2000)

Chen, X.J., Hu, X.D., Wang, C.J.: Approximation for the minimum cost doubly resolving set problem. Theor. Comput. Sci. 609, 526–543 (2016)

Du, D.Z., Ko, K.I., Hu, X.D.: Design and Analysis of Approximation Algorithms, vol. 62. Springer, New York (2011). https://doi.org/10.1007/978-1-4614-1701-9

Garey, M.R., Johnson, D.S.: Computers and Intractability: A Guide to the Theory of NP-Completeness. Freeman, New York (1979)

Harary, F., Melter, R.A.: On the metric dimension of a graph. Ars Combin. 2, 191–195 (1976)

Hauptmann, M., Schmied, R., Viehmann, C.: Approximation complexity of metric dimension problem. J. Discret. Algorithms 14, 214–222 (2012)

Kelenc, A., Tratnik, N., Yero, I.G.: Uniquely identifying the edges of a graph: the edge metric dimension. Discret. Appl. Math. 251, 204–220 (2018)

Khuller, S., Raghavachari, B., Rosenfeld, A.: Landmarks in graphs. Discret. Appl. Math. 70, 217–229 (1996)

Kratica, J., C̈angalović, M., Kovac̈ević-Vujc̈ić, V.: Computing minimal doubly resolving sets of graphs. Comput. Oper. Res. 36, 2149–2159 (2009)

Baca, M., Baskoro, E.T., Salman, A.N.M., Saputro, S.W., Suprijanto, D.: The metric dimension of regular bipartite graphs. Bull. Math. Soc. Sci. Math. Roumanie 54(1), 15–28 (2011)

Slater, P.J.: Leaves of trees. In: Southeastern Conference on Combinatorics, Graph Theory, and Computing, Congressus Numerantium, vol. 14, pp. 549–559 (1975)

Zhu, E.Q., Taranenko, A., Shao, Z.H., Xu, J.: On graphs with the maximum edge metric dimension. Discret. Appl. Math. 257, 317–324 (2019)

Zubrilina, N.: On edge dimension of a graph. Discret. Math. 341, 2083–2088 (2018)

N. Zubrilina. On the edge metric dimension for the random graph (2016). arXiv:1612.06936 [math.CO]

Acknowledgement

The authors would like to thank Professor Ding-Zhu Du for his many valuable advices during their study of approximation algorithm. This work was supported by the NSF of China (No. 11471097), Hebei Province Foundation for Returnees (CL201714) and Overseas Expertise Introduction Program of Hebei Auspices (25305008).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Huang, Y., Hou, B., Liu, W., Wu, L., Rainwater, S., Gao, S. (2019). On Approximation Algorithm for the Edge Metric Dimension Problem. In: Du, DZ., Li, L., Sun, X., Zhang, J. (eds) Algorithmic Aspects in Information and Management. AAIM 2019. Lecture Notes in Computer Science(), vol 11640. Springer, Cham. https://doi.org/10.1007/978-3-030-27195-4_13

Download citation

DOI: https://doi.org/10.1007/978-3-030-27195-4_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-27194-7

Online ISBN: 978-3-030-27195-4

eBook Packages: Computer ScienceComputer Science (R0)