Abstract

We investigate a non-submodular maximization problem subject to a p-independence system constraint, where the non-submodularity of the utility function is characterized by a series of parameters, such as submodularity (supmodularity) ratio, generalized curvature, and zero order approximate submodularity coefficient, etc. Inspired by Feldman et al. [15] who consider a non-monotone submodular maximization with a p-independence system constraint, we extend their Repeat-Greedy algorithm to non-submodular setting. While there is no general reduction to convert algorithms for submodular optimization problems to non-submodular optimization problems, we are able to show the extended Repeat-Greedy algorithm has an almost constant approximation ratio for non-monotone non-submodular maximization.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Submodular optimization is widely studied in optimization, computer science, and economics, etc. Submodularity is a very powerful tool in many optimization applications such as viral marketing [9, 18], recommendation system [12, 15, 21], nonparametric learning [1, 16], and document summarization [20], etc.

The greedy algorithm introduced by Nemhauser et al. [22] gave the first \((1-e^{-1})\)-approximation for monotone submodular maximization with a cardinality constraint (SMC). Feige [13] considered a maximal k-cover problem, which is a special case of SMC, and showed that there is no algorithms with approximation ratio greater than \((1-e^{-1}+\epsilon )\) for any \(\epsilon >0\), under the assumption P \(\ne \) NP. Sviridenko [23] considered a monotone submodular maximization with a knapsack constraint, and provided a tight \((1-e^{-1})\)-approximation algorithm with time complexity \(O(n^5)\). Calinescu et al. [8] provided a \((1-e^{-1})\)-approximation algorithm for monotone submodular maximization with a matroid constraint. All extant results of constrained submodular maximization assume monotonicity of the objective functions. In this paper, we consider a non-monotonic and non–submodular maximization problem subject to a more general p-independence system constraint.

1.1 Our Contributions

In this work, we consider a non-submodular maximization with an independence system constraint. Specifically, all feasible solutions associated with this model generate a p-independence system and the objective function is characterized by a series of parameters, such as submodularity (supmodularity) ratio, generalized curvature, and zero order approximate submodularity coefficient, etc. Our main results can be summarized as follows.

-

We first investigate the efficiency of a greedy algorithm with two scenarios. Firstly, the objective function is non-submodularity and non-monotonic. Secondly, the feasible solution belongs to a p-independence system. We show that some good properties are still retained in the non-submodular setting (Theorem 1).

-

Second we study the non-monotone non-submodular maximization problem without any constraint. Based on a simple approximate local search, for any \(\epsilon >0\) we show that there exists a polynomial time \((3/c^2+\epsilon )\)-approximation algorithm, where c is the zero order approximate submodularity coefficient of objective function (Theorem 2).

-

Finally, we apply the first two algorithms as the subroutines to solve a non-monotone non-submodular maximization problem with p-independence system constraint. Our algorithm is an extension of the Repeat-Greedy introduced in [15]. Based on a multiple times rounding of the above subroutine algorithms, we derived a nearly constant approximation ratio algorithm (Theorem 3).

1.2 The Organization

We give a brief summary of related work in Sect. 2. The necessary preliminaries and definitions are presented in Sect. 3. The main algorithms and analyses are provided in Sect. 4. We present a greedy algorithm in Sect. 4.1, an approximate local search in Sect. 4.2, and the core algorithm is provided in Sect. 4.3. In Sect. 5, we offer a conclusion for our work.

2 Related Works

Non-monotone Submodular Optimization. Unlike the monotone submodular optimization, there exists a natural obstacle in the study of non-monotonic case. For example, direct application of the greedy algorithm introduced by Nemhauser et al. [22] to the non-monotonic case does not yield a constant approximation guarantee. Some previous work is summarized below. For the non-monotone submodular maximization problem without any constraint (USM), Feige et al. [14] presented a series of algorithms. They first showed a uniform random algorithm has a \(1/4\)-approximation ratio. Second, they gave a deterministic local search \(1/3\)-approximation algorithm and a random \(2/5\)-approximation algorithm. For symmetric submodular functions, they derived a \(1/2\)-approximation algorithm and showed that any \((1/2+\epsilon )\)-approximation for symmetric submodular functions must need an exponential number of queries for any fixed \(\epsilon >0\). Based on local search technique, Buchbinder et al. [7] provided a random linear time \(1/2\)-approximation algorithm.

Optimization of non-monotone submodular with complex constraints are also considered previously. Buchbinder and Feldman [5] gave a deterministic \(1/2\)-approximation algorithm for USM with time complexity \(O(n^2)\). For non-monotone submodular maximization with cardinality constraint, they derived a deterministic \(1/e\)-approximation algorithm, which has a slightly better approximation ration than the random \((1/e+0.004)\)-approximation ratio by [6]. Buchbinder and Feldman [4] considered a more general non-monotone submodular maximization problem with matroid constraint and presented the currently best random 0.385-approximation algorithm. Lee et al. [19] derived a \(1/(p+1+1/(p-1)+\epsilon )\)-approximation algorithm as well as non-monotone submodular maximization algorithm with a constraint of the intersection of p matroids. For a more general p-independence system constraint, Gupta et al. [17] derived a \(1/3p\)-approximation, which needs \(O(np\ell )\) function value oracles, where \(\ell \) is the maximum size of feasible solutions. Mirzasoleiman et al. [21] improved the approximation ratio to \(1/2k\), while the time complexity was still bounded by \(O(np\ell )\). Recently, with improved time complexity of \(O(n\ell \sqrt{p})\), the approximation ratio was improved to \(1/(p+\sqrt{p})\) by Feldman et al. [15].

Non-submodular Maximization. There are also many problems in optimization and machine learning whose utility functions do not possess submodularity. Das and Kempe [11] introduced a definition of submodularity ratio \(\gamma \) to measure the magnitude of submodularity of the utility function. For the maximization of monotone non-submodular function with cardinality constraint (NSMC), they showed the greedy algorithm can achieve a \((1-e^{-\gamma })\)-approximation ratio. Conforti and Cornuéjols [10] studied the efficiency of the greedy algorithm by defining curvature \(\kappa \) of submodular objective functions for SMC and showed the approximation could be improved it to \(1/\kappa (1-e^{-\kappa })\). Bian et al. [2] introduced a more expressive formulation by providing a definition of the generalized curvature \(\alpha \) of any non-negative set function. Combining the submodularity ratio with the generalized curvature, they derived the tight \(1/\alpha (1-e^{1/(\alpha \gamma )})\)-approximation ratio of the greedy algorithm for NSMC. Inspired by these work, Bogunovic et al. [3] introduced further parameters, such as supmodularity ratio, inverse generalized curvature, etc., to characterize the utility function. They derived the first constant approximation algorithm for monotone robust non-submodular maximization problem with cardinality constraint.

3 Preliminaries

In this section, we present some necessary notations. We are given a ground set \({\mathcal {V}}=\{u_1,...,u_n\}\), and a utility function \(f:2^{{\mathcal {V}}}\rightarrow R_+\). The function f may not be submodular; namely the following zero order condition of submodular may not hold

For our purpose, we define a parameter c to approximate the submodularity of our utility function.

Definition 1

Given an integer k, let \(\{A_i\}^{k}_{i=1}\) be a collection of subsets of \({\mathcal {V}}\). The zero order approximate submodularity coefficient is the largest \(c_k\in [0,1]\) such that

For any \(A, B\subseteq {\mathcal {V}}\), we set \(f_{A}(B)=f(A\cup \{B\})-f(A)\) as the amount of change by adding B to A. For the sake of brevity and readability, we set \(f_{A}(u)=f(A+u)-f(A)\) for any singleton element \(u\in {\mathcal {V}}\). Then we restate the submodularity ratio \(\gamma \) in the following definition. The submodularity ratio measures how close of f being submodular.

Definition 2

([2, 3, 11]). Given an integer k, the submodularity ratio of a non-negative set function f with respect to \({\mathcal {V}}\) is

Let k be the maximum size of any feasible solution, and omit signs \(k, {\mathcal {V}}\) and f for clarity. Bian et al. [2] introduced an equivalent formulation of submodularity ratio \(\gamma \) by the largest \(\gamma \) such that

Bogunovic et al. [3] defined supmodularity ratio \({\check{\gamma }}\) that measures how close a utility function is supermodular.

Definition 3

([3]). The supmodularity ratio of a non-negative set function f is the largest \({\check{\gamma }}\) such that

For a monotone submodular function, Conforti and Cornuéjols [10] introduced the definition of total curvature \(\kappa _f\) and curvature \(\kappa _f(S)\) w.r.t. a set \(S\subseteq {\mathcal {V}}\) as follows. Denote \(\kappa _f=1-\min _{u\in {\mathcal {V}}}f_{{\mathcal {V}}\setminus \{u\}}(u)/f(u)\), and \(\kappa _f(S)=1-\min _{u\in S}f_{S\setminus \{u\}}(u)/f(u)\). Sviridenko et al. [24] provided a definition of curvature from submodular to non-submodular functions. The expanded curvature is defined as \(\kappa ^o=1-\min _{u\in {\mathcal {V}}}\min _{A, B\subseteq {\mathcal {V}}\setminus \{u\}}f_{A}(u)/f_{B}(u)\). Bian et al. [2] presented a more expressive formulation of curvature, which measures how close a set function is to being supmodular.

Definition 4

([2]). The generalized curvature of a non-negative function f is the smallest scalar \(\alpha \) such that

Recently, Bogunovic et al. [3] introduced the concept of inverse generalized curvature \({\check{\alpha }}\), which can be described as follows:

Definition 5

([3]). The inverse generalized curvature of a non-negative function f is the smallest scalar \({\check{\alpha }}\) such that

The above parameters are used to characterize a non-negative set function from different points of view. We provide a lower bound of zero order approximate submodularity coefficient c by inverse generalized curvature \({\check{\alpha }}\). I.e., \(c\ge 1-{\check{\alpha }}\). The proof is referred to the full version. We omit the relation of the other parameters as they can be found in [2, 3]. In the rest of this part, we restate the concept of the p-independence system.

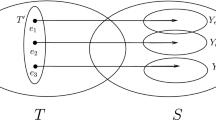

Let \({\mathcal {I}}=\{A_i\}_{i}\) be a finite collection of subsets chosen from \({\mathcal {V}}\). We say the tuple \(({\mathcal {V}}, {\mathcal {I}})\) is an independence system if for any \(A\in {\mathcal {I}}\), \(A^\prime \subseteq A\) implies that \(A^\prime \in {\mathcal {I}}\). The sets of \({\mathcal {I}}\) are called the independent sets of the independence system. An independent set B contained in a subset \(X\subseteq {\mathcal {V}}\) is a base (basis) of X if no other independent set \(A\subseteq X\) strictly contains B. By the above terminologies we restate the definition of p-independence system as follows.

Definition 6

([15]). An independence system \(({\mathcal {V}}, {\mathcal {I}})\) is a p-independence system if, for every subset \(X\subseteq {\mathcal {V}}\) and for any two bases \(B_1, B_2\) of X, we have \(|B_1|/|B_2|\le p\).

In our model, assume the utility function is characterized by these parameters, and the collection of all feasible subsets constructs a p-independence system. We also assume that there exist a utility function value oracle and an independence oracle; i.e., for any \(A\subseteq {\mathcal {V}}\), we can obtain the value of f(A) and know if A in \({\mathcal {I}}\) or not. The model can be described as follows:

where \(({\mathcal {V}},{\mathcal {I}})\) is a p-independence system.

4 Algorithms

In this section, we present some algorithms in dealing with non-submodular maximization. Before providing our main algorithm, we first investigate the efficiency of two sub-algorithms. In Subsect. 4.1, we restate the greedy algorithm for submodular maximization with p-independence system constraint, and show that some good properties are still retained in the non-submodular setting. In Subsect. 4.2, we present a local search for non-monotone non-submodular maximization without any constraint. Finally, we provide the core algorithm in Subsect. 4.3.

4.1 Greedy Algorithm Applied to Non-submodular Optimization

The pseudo codes of the greedy algorithm are presented in Algorithm 1. Let \(S^G=\{u_1,...,u_\ell \}\) be the returned set by Algorithm 1. We start with \(S^G=\emptyset \). In each iteration, we choose the element u with maximum gain, and add it to the current solution if it satisfies \(S^G+u\in {\mathcal {I}}\). For clarity, we let OPT be any optimum solution set of maximizing the utility function under p-independence system constraint. Then we can derive a lower bound of \(f(S^G)\) by the following theorem.

Theorem 1

Let \(S^G\) be the returned set of Algorithm 1, then we have

Proof

Refer to the full version of this paper.

Let \(B\in {\mathcal {I}}\) be any independent set and set \(S^G_{i}=\{u_1,...,u_i\}\) be the set of the first i elements added by Algorithm 1. We can iteratively construct a partition of B according to \(S^G\). We start with \(B_0=B\) and set \(B_i=\{u\in B\setminus S^G_i|S^G_i+u\in {\mathcal {I}}\}\) for iteration \(i\in [\ell ]=\{1,...,\ell \}\), where \(\ell \) denotes the size of \(S^G\) in the end. Then the collection of \(\{B_{i-1}\setminus B_i\}^\ell _{i=1}\) derives a partition of B. Let \(C_i=B_{i-1}\setminus B_i\) for any \(i\in [\ell ]\). The construction can be summarized as Algorithm 2. The properties of the above partition are presented in the following lemma.

Lemma 1

Let \(\{C_i\}^\ell _{i=1}\) be the returned partition of Algorithm 2, then

-

for each \(i\in [\ell ]\), we have \(\sum ^i_{j=1}p_j\le p\cdot i\) where \(p_j=|C_j|\); and

-

for each \(i\in [\ell ]\), we have \(p_i\cdot \delta _i\ge \gamma (1-{\check{\alpha }})f_{S^G}(C_i)\).

Proof

Refer to the full version of this paper.

4.2 Local Search Applied to Non-submodular Optimization

In this subsection, we present a local search algorithm for the non-monotone non-submodular maximization problem without any constraint. The main pseudo codes are provided by Algorithm 3. Feige et al. [14] introduced the local search approach to deal with the non-monotone submodular optimization problem. We extend their algorithm to the non-submodular setting, and show that the algorithm still keeps a near constant approximation ratio by increasing a factor.

In order to implement our algorithm in polynomial time, we relax the local search approach and find an approximate local solution. Let \(S^{LS}\) be the returned set of Algorithm 3 and let \(OPT^o\) be any optimum solution without any constraint. We restate the definition of approximate local optimum as follows.

Definition 7

([14]). Given a set function \(f:2^{{\mathcal {V}}}\rightarrow R\), a set \(A\subseteq {\mathcal {V}}\) is called a \((1+\lambda )\)-approximate local optimum if, \(f(A-u)\le (1+\lambda )\cdot f(A)\) for any \(u\in A\) and \(f(A+u)\le (1+\lambda )\cdot f(A)\) for any \(u\notin A\).

By the definition of the approximate local optimum solution, we show that there exists a similar performance guarantee in the non-submodular case. The details are summarized in the following theorem.

Theorem 2

Given \(\epsilon >0, c\) and \({\check{\alpha }}\in [0,1)\). Let \(S^{LS}\) be the returned set of Algorithm 3 by setting set \(\lambda = \frac{c^2\epsilon }{(1-{\check{\alpha }})n}\). We have

Proof

Refer to the full version of this paper.

Before proving the above theorem, we need the following lemma.

Lemma 2

If S is a \((1+\lambda )\)-approximate local optimum for a non-submodular function f, then for any set T such that \(T\subseteq S\) or \(T\supseteq S\), we have

Proof

Let \(S=\{u_1,...,u_q\}\) be a \((1+\lambda )\)-approximate local optimum solution returned by Algorithm 3. W.l.o.g., we assume \(T\subseteq S\), then we construct \(T_i\) such that \(T=T_1\subseteq T_2\subseteq \cdots \subseteq T_r=S\) and \(u_i=T_i\setminus T_{i-1}\). For each \(i\in \{2,...,q\}\), we have

where the first inequality follows by the definition of the inverse generalized curvature and the second inequality follows by the definition of the approximate local optimum. Summing up the above inequalities, we have

implying that \(f(T)\le [1+\lambda q(1-{\check{\alpha }})]f(S)\le [1+\lambda n(1-{\check{\alpha }})]f(S)\), where the second inequality follows from \(q\le n\). Simultaneously, the case of \(T\supseteq S\) can be similarly derived by the above process.

4.3 The Core Algorithm

In this subsection, we present the main algorithm, which is an extension of the Repeat-Greedy algorithm introduced in [15]. The pseudo codes are presented as Algorithm 4. We run the main algorithm in r rounds. Let \({\mathcal {V}}_i\) be the set of candidate elements set at the start of round \(i\in [r]\). We first run the greedy step of Algorithm 1. Then, we proceed with the local search step of Algorithm 3 on the set returned from the first step. Simultaneously, we update the candidate ground set as \({\mathcal {V}}_i={\mathcal {V}}\setminus {\mathcal {V}}_{i-1}\). Finally, we output the best solution among all returned sets. We can directly obtain two estimations of the utility function by Theorems 1 and 2, respectively. The results are summarized as follows.

Lemma 3

For any iteration \(i\in [r]\) of Algorithm 4, we have

-

1.

\(f(S_i\cup (OPT\cap {\mathcal {V}}_i))\le \left( \frac{p}{\gamma ^2{\check{\gamma }}(1-{\check{\alpha }})}+1\right) f(S_i)\), and

-

2.

\(f(S_i\cap OPT )\le \left( \frac{3}{c^2}+\epsilon \right) f(S^\prime _i)\).

Buchbinder et al. [6] derived an interesting property in dealing with non-monotone submodular optimization problems. Now, we extend this property to the non-submodular case, as summarized in the following lemma.

Lemma 4

Let \(g: 2^{\mathcal {V}} \rightarrow R_+\) be a set function with inverse generalized curvature \({\check{\alpha }}_g\), and S be a random subset of \({\mathcal {V}}\) where each element appears with probability at most \(\mathrm {pr}\) (not necessarily independent). Then \({\mathbf {E}}[g(S)]\ge \left[ 1-(1-{\check{\alpha }}_g)\mathrm {pr}\right] \cdot g(\emptyset )\).

Proof

Refer to the full version of this paper.

Using this result, we can derive an estimation of f(OPT). Let S be a random set of \(\{S_i\}^r_{i=1}\) with probability \(\mathrm {pr}=\frac{1}{r}\) and set \(g(S)=f(OPT\cup S)\) for any \(S\subseteq {\mathcal {V}}\). Then we have \({\check{\alpha }}={\check{\alpha }}_f={\check{\alpha }}_g\). By Lemma 4, we yield

Multiplying both sides of the last inequality by r, we get

The following lemma presents a property based on zero order approximate submodularity coefficient of the objective function.

Lemma 5

([15]). For any subsets \(A, B, C\subseteq {\mathcal {V}}\), we have

Proof

To prove this lemma, we have the following

where the first inequality follows from the nonnegativity of the objective function and the second inequality is derived by the definition of the zero order approximate submodularity coefficient c.

From these lemmas and choosing properly the number of rounds, we conclude that if the parameters of the utility function are fixed, or have a food estimation, then Algorithm 4 yieds a near constant performance guarantee for problem (1). The details are presented in the following theorem.

Theorem 3

Give an objective function \(f:2^V\rightarrow R_+\) with parameters \(c, \gamma , {\check{\gamma }}, {\check{\alpha }}\), and a real number \(\epsilon >0\), let S be the returned set of Algorithm 4. Set \(r=\left\lceil \varDelta \right\rceil \). Then we have

where

Proof

Refer to the full version of this paper.

5 Conclusion

We consider the non-submodular and non-monotonic maximization problem with a p-independence system constraint, where the objective utility function is characterized by a set of parameters such as submodularity (supmodularity) ratio, inverse generalized curvature, and zero order approximate submodularity coefficient. We study a greedy algorithm applied to non-submodular optimization with p-independence system constraint, and show the algorithm preserves some good properties even though the objective function is non-submodularity. Then, we investigate the unconstrained non-submodular maximization problem. Utilizing an approximate local search technique, we derive an \(O(3/c^2+\epsilon )\)-approximation algorithm, where c is the zero order approximate submodularity coefficient. Finally, combining these two algorithms, we obtain an almost constant approximation algorithm for the non-monotone non-submodular maximization problem with p-independence system constraint.

References

Badanidiyuru, A., Mirzasoleiman, B., Karbasi, A., Krause, A.: Streaming submodular maximization: massive data summarization on the fly. In: 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 671–680. ACM (2014)

Bian, A.-A., Buhmann, J.-M., Krause, A., Tschiatschek, S.: Guarantees for greedy maximization of non-submodular functions with applications. In: 34th International Conference on Machine Learning, pp. 498–507. JMLR (2017)

Bogunovic, I., Zhao, J., Cevher, V.: Robust maximization of non-submodular objectives. In: 21st International Conference on Artificial Intelligence and Statistics, pp. 890–899. Playa Blanca, Lanzarote (2018)

Buchbinder, N., Feldman, M.: Constrained submodular maximization via a non-symmetric technique arXiv:1611.03253 (2016)

Buchbinder, N., Feldman, M.: Deterministic algorithms for submodular maximization problems. ACM Trans. Algorithms 14(3), 32 (2018)

Buchbinder, N., Feldman, M., Naor, J.-S., Schwartz, R.: Submodular maximization with cardinality constraints. In: 25th Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 1433–1452. Society for Industrial and Applied Mathematics (2014)

Buchbinder, N., Feldman, M., Seffi, J., Schwartz, R.: A tight linear time \(1/2\)-approximation for unconstrained submodular maximization. SIAM J. Comput. 44(5), 1384–1402 (2015)

Calinescu, G., Chekuri, C., Pál, M., Vondrák, J.: Maximizing a monotone submodular function subject to a matroid constraint. SIAM J. Comput. 40(6), 1740–1766 (2011)

Chen, W., Zhang, H.: Complete submodularity characterization in the comparative independent cascade model. Theor. Comput. Sci. (2018)

Conforti, M., Cornuéjols, G.: Submodular set functions, matroids and the greedy algorithm: tight worst-case bounds and some generalizations of the Rado-Edmonds theorem. Discrete Appl. Math. 7(3), 251–274 (1984)

Das, A., Kempe, D.: Submodular meets spectral: greedy algorithms for subset selection, sparse approximation and dictionary selection. In: 28th International Conference on Machine Learning, pp. 1057–1064. Omnipress (2011)

El-Arini, K., Veda, G., Shahaf, D., Guestrin, C.: Turning down the noise in the blogosphere, In: 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 289–298. ACM (2009)

Feige, U.: A threshold of \(\ln n\) for approximating set cover. J. ACM 45(4), 634–652 (1998)

Feige, U., Mirrokni, V.-S., Vondrák, J.: Maximizing non-monotone submodular functions. SIAM J. Comput. 40(4), 1133–1153 (2011)

Feldman, M., Harshaw, C., Karbasi, A.: Greed is good: near-optimal submodular maximization via greedy optimization. In: 30th Annual Conference on Learning Theory, pp. 758–784. Springer (2017)

Gomes, R., Krause, A.: Budgeted nonparametric learning from data streams. In: 27th International Conference on Machine Learning, pp. 391–398. Omnipress (2010)

Gupta, A., Roth, A., Schoenebeck, G., Talwar, K.: Constrained non-monotone submodular maximization: offline and secretary algorithms. In: Saberi, A. (ed.) WINE 2010. LNCS, vol. 6484, pp. 246–257. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-17572-5_20

Kempe, D., Kleinberg, J., Tardos, É.: Maximizing the spread of influence through a social network. In: 9th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 137–146. ACM (2003)

Lee, J., Sviridenko, M., Vondrák, J.: Submodular maximization over multiple matroids via generalized exchange properties. Math. Oper. Res. 35(4), 795–806 (2010)

Lin, H., Bilmes, J.: A class of submodular functions for document summarization. In: 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, vol. 1, pp, 510–520. Association for Computational Linguistics (2011)

Mirzasoleiman, B., Badanidiyuru, A., Karbasi, A.: Fast constrained submodular maximization: personalized data summarization. In: 33rd International Conference on Machine Learning, pp. 1358–1366. JMLR (2016)

Nemhauser, G.-L., Wolsey, L.-A., Fisher, M.-L.: An analysis of approximations for maximizing submodular set functions–I. Math. Program. 14(1), 265–294 (1978)

Sviridenko, M.: A note on maximizing a submodular set function subject to a knapsack constraint. Oper. Res. Lett. 32(1), 41–43 (2004)

Sviridenko, M., Vondrák, J., Ward, J.: Optimal approximation for submodular and supermodular optimization with bounded curvature. Math. Oper. Res. 42(4), 1197–1218 (2017)

Acknowledgments

The first two authors are supported by Natural Science Foundation of China (Nos. 11531014, 11871081). The third author is supported by Natural Sciences and Engineering Research Council of Canada (No. 283106). The fourth author is supported by China Postdoctoral Science Foundation funded project (No. 2018M643233) and Natural Science Foundation of China (No. 61433012). The fifth author is supported by Natural Science Foundation of Shanxi province (No. 201801D121022).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Yang, R., Xu, D., Du, D., Xu, Y., Yan, X. (2019). Maximization of Constrained Non-submodular Functions. In: Du, DZ., Duan, Z., Tian, C. (eds) Computing and Combinatorics. COCOON 2019. Lecture Notes in Computer Science(), vol 11653. Springer, Cham. https://doi.org/10.1007/978-3-030-26176-4_51

Download citation

DOI: https://doi.org/10.1007/978-3-030-26176-4_51

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-26175-7

Online ISBN: 978-3-030-26176-4

eBook Packages: Computer ScienceComputer Science (R0)