Abstract

This chapter introduces an overland snowfall rate algorithm for passive microwave radiometers. The algorithm relies on an embedded algorithm to detect the presence of snowfall. Its foundation is the retrieval of cloud properties, which is accomplished with a one-dimensional variational (1DVAR) model. The snowfall rate derived with this physical algorithm is then adjusted through calibration against a combined ground radar and gauge precipitation product. The snowfall detection and snowfall rate algorithms have been validated against ground observations and combined radar and gauge analyses, respectively, over the contiguous United States with satisfactory results. In particular, the validation data reach a correlation coefficient of 0.50 with the radar analyses. The snowfall rate product is currently generated operationally in near real time at the US National Oceanic and Atmospheric Administration.

Keywords

- Snow

- Ice

- Snowfall detection

- Snowfall rate

- NOAA

- Logistic regression

- 1DVAR

- Microwave humidity sounders

- Brightness temperature

- MRMS

- GFS

- Radiative transfer model

- Particle distribution function

- Ice water content

1 Introduction

Satellite remote sensing of snowfall is challenging because of the complexity of the atmospheric processes involved, the limited understanding of these processes, and the lack of information on the forcing variables. Estimating snowfall rate is more difficult than estimating rainfall rate because a physically based snowfall rate algorithm requires additional information, such as ice particle shape and density, that is generally unavailable. Consequently, the development of snowfall retrieval algorithms has lagged significantly behind that of satellite rain rate algorithms (Ferraro et al. 2005; Kummerow et al. 2011). However, snowfall retrieval from passive microwave (PMW) instruments has gained momentum in recent years. PMW observations are uniquely suited to snowfall detection and retrieval: because microwave radiation penetrates clouds, the measurements directly carry the signature of the snow particles within them. High frequencies, such as window channels around 160 GHz and water vapor sounding channels around 183 GHz, are particularly sensitive to the radiance depression caused by ice scattering (Bennartz and Bauer 2003). Several snowfall rate algorithms have exploited this property (Noh et al. 2006; Kim et al. 2008; Liu and Seo 2013; Skofronick-Jackson et al. 2013; Kummerow et al. 2015; Kidd et al. 2016; You et al. 2017; Meng et al. 2017; Kongoli et al. 2015, 2018). Indeed, many microwave algorithms that retrieve precipitation (rainfall or snowfall) rely on sensing ice scattering signatures.

The algorithm introduced in this chapter retrieves snowfall rate over land (hereafter denoted SFR). It consists of two components: snowfall detection (SD) and snowfall rate estimation. The former is a statistical algorithm, while the latter employs a 1DVAR-based approach to retrieve snowfall rate. Owing to its physically based framework, the SFR algorithm can cover a wide range of snowfall conditions. The SFR product is currently generated operationally in near real time at the US National Oceanic and Atmospheric Administration (NOAA).

2 Data and Models

2.1 Instruments and Data

The SFR algorithm uses measurements from two sets of PMW instruments: the Advanced Microwave Sounding Unit-A (AMSU-A) and Microwave Humidity Sounder (MHS) pair, and the Advanced Technology Microwave Sounder (ATMS). AMSU-A and MHS are aboard four POES and Metop satellites, and ATMS is aboard the S-NPP and NOAA-20 satellites. These are cross-track scanning PMW sounders with channels ranging from 23.8 GHz up to 190.31 GHz (MHS) or 183.31 GHz (ATMS), i.e., a combination of window, temperature sounding, and water vapor sounding frequencies. Footprint size varies with frequency and scan position. The nadir resolution at the high frequencies is about 16 km, which is also the resolution of the SFR product.

Numerical Weather Prediction (NWP) model data are also used where the required observations of the environmental state are unavailable. The model data come from the Global Forecast System (GFS) produced at the NOAA National Centers for Environmental Prediction (NCEP). The GFS forecast data meet the latency requirement of near-real-time SFR production.

The SD algorithm was trained with in-situ weather observations from the Quality Controlled Local Climatology Data (QCLCD) product. This dataset is generated from surface meteorological observations at approximately 1600 U.S. locations. In addition to automated quality control, the data undergo interactive and manual quality control at the NOAA National Centers for Environmental Information (NCEI). QCLCD provides direct hourly observations of present weather, including snowfall occurrence, and of other related variables, which makes it a valuable independent ground truth reference for satellite snowfall identification studies.

The SFR algorithm was calibrated against the NCEP Stage IV precipitation analysis. This dataset takes the Multi-Radar Multi-Sensor (MRMS) precipitation data (Zhang et al. 2016) as input, further incorporates gauge, model, and satellite data, and applies human quality control. The hourly Stage IV data have a 4-km resolution over the contiguous United States (CONUS). Stage IV is a high-quality, standard radar-based precipitation analysis used by the US National Weather Service.

2.2 Logistic Regression

The SD algorithm applies a Logistic Regression (LR) technique to derive the probability of snowfall (POS). LR models the probability of a binary outcome Y as a continuous, exponential-based function of a set of predictor variables:

$$
P = \frac{\exp(\beta_0 + \beta_1 X_1 + \cdots + \beta_k X_k)}{1 + \exp(\beta_0 + \beta_1 X_1 + \cdots + \beta_k X_k)} \tag{17.1}
$$

where P is the probability of success (Y = 1) of the binary variable Y; X = (X1, …, Xk) is the vector of independent variables; and β = (β0, β1, …, βk) is the vector of regression coefficients. The logarithm of the odds of Y, P/(1 − P), called the logit, is a linear combination of the independent variables, as in multiple regression:

$$
B = \ln\frac{P}{1 - P} = \beta_0 + \beta_1 X_1 + \cdots + \beta_k X_k \tag{17.2}
$$

The inverse of the logit function is called the logistic function:

$$
P = \frac{1}{1 + e^{-B}} \tag{17.3}
$$

where B is the logit, i.e., the multiple linear regression term in Eq. (17.2). The regression coefficients are found iteratively by maximum likelihood estimation, i.e., by maximizing the joint probability of the observed responses:

$$
L(\boldsymbol{\beta}) = \prod_{i=1}^{n} p_i^{\,y_i} (1 - p_i)^{1 - y_i} \tag{17.4}
$$

where y_i ∈ {0, 1} is an individual observed value of Y, and p_i is the probability that y_i = 1, computed using Eq. (17.1). This differs from ordinary least squares regression, where a unique analytic solution can be found in closed form; maximizing Eq. (17.4) has no closed-form solution and must be done iteratively.
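The maximum likelihood fit of Eqs. (17.1) to (17.4) can be illustrated with a minimal, dependency-free sketch. It maximizes the logarithm of the likelihood by plain gradient ascent, a simpler stand-in for the Newton-type iterations that statistical packages typically use; the toy predictor values and labels are invented for illustration and are not QCLCD or satellite data.

```python
import math


def logistic(b):
    """Logistic function P = 1 / (1 + exp(-B)), as in Eq. (17.3)."""
    return 1.0 / (1.0 + math.exp(-b))


def linear_term(beta, x):
    """Logit B = beta0 + beta1*x1 + ... + betak*xk, as in Eq. (17.2)."""
    return beta[0] + sum(bj * xj for bj, xj in zip(beta[1:], x))


def log_likelihood(beta, xs, ys):
    """Logarithm of the Bernoulli likelihood of Eq. (17.4)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = logistic(linear_term(beta, x))
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total


def fit_logistic(xs, ys, step=0.5, n_iter=5000):
    """Maximize the log-likelihood by batch gradient ascent (beta[0] = intercept)."""
    beta = [0.0] * (len(xs[0]) + 1)
    for _ in range(n_iter):
        grad = [0.0] * len(beta)
        for x, y in zip(xs, ys):
            resid = y - logistic(linear_term(beta, x))  # d(log L)/dB for one sample
            grad[0] += resid
            for j, xj in enumerate(x):
                grad[j + 1] += resid * xj
        beta = [bj + step * gj / len(xs) for bj, gj in zip(beta, grad)]
    return beta


# Usage with a single toy predictor (overlapping classes, so the MLE is finite).
xs = [[-2.0], [-1.5], [-1.0], [-0.5], [-0.2], [0.2], [0.5], [1.0], [1.5], [2.0]]
ys = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]
beta = fit_logistic(xs, ys)
```

Because the classes overlap, the likelihood has a finite maximum and the iteration settles on bounded coefficients.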

2.3 Radiative Transfer Model and 1DVAR

A 1DVAR approach (Yan et al. 2008) is used in the SFR algorithm to retrieve cloud properties. The model retrieves ice cloud properties and snow emissivity using five microwave window and water vapor sounding channels: 23.8, 31.4, 89.0/88.2, 157.0/165.5, and 190.31/183.31 ± 7 GHz, where paired values refer to AMSU-A/MHS and ATMS, respectively. The inversion is iterative: at each iteration, brightness temperatures (Tbs) at the five frequencies are simulated with a radiative transfer model (RTM; Weng et al. 2001) from the current atmospheric and surface parameters (i.e., the control vector). The differences between simulated and observed Tbs (ΔTbs) are then compared with preset thresholds: 1.5 K for the first four frequencies and 3 K for 190.31/183.31 ± 7 GHz. If the ΔTbs fall below the thresholds, the retrieval has converged and the iteration terminates. Otherwise, the elements of the control vector are adjusted and the iteration continues; if the maximum allowed number of iterations is reached without convergence, the retrieval is deemed nonconvergent. The atmospheric and surface parameters from a converged run become the retrieved properties. They include land surface emissivity at the five frequencies, total precipitable water (TPW), ice water path (Iw), effective diameter of ice particles (De), surface temperature (Ts), and cloud temperature (Tc).
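The iterate-and-check logic of the inversion can be sketched as follows. Only the convergence thresholds (1.5 K and 3 K) come from the text; the forward model and update step are hypothetical stand-ins (a linear toy model and a damped Newton step), not the two-stream RTM or the actual 1DVAR minimization of Yan et al. (2008).

```python
# Convergence thresholds from the text: 1.5 K for the first four channels,
# 3 K for the 190.31/183.31 +/- 7 GHz channel.
THRESHOLDS_K = (1.5, 1.5, 1.5, 1.5, 3.0)


def converged(tb_sim, tb_obs, thresholds=THRESHOLDS_K):
    """True when every |simulated - observed| Tb falls below its threshold."""
    return all(abs(s - o) < t for s, o, t in zip(tb_sim, tb_obs, thresholds))


def run_1dvar(forward, update, x0, tb_obs, max_iter=10):
    """Iterate-and-check loop; returns (control vector, converged?, iterations)."""
    x = list(x0)
    for k in range(max_iter):
        tb_sim = forward(x)
        if converged(tb_sim, tb_obs):
            return x, True, k
        x = update(x, tb_sim, tb_obs)
    # Check the last update as well before declaring non-convergence.
    return x, converged(forward(x), tb_obs), max_iter


# Hypothetical toy forward model: each channel's Tb is depressed linearly by
# one control-vector element (NOT the real two-stream RTM).
SLOPE_K = 40.0


def toy_forward(x):
    return [250.0 - SLOPE_K * xi for xi in x]


def toy_update(x, tb_sim, tb_obs, damping=0.5):
    # Damped Newton step for the toy model, where dTb/dx = -SLOPE_K per channel.
    return [xi + damping * (s - o) / SLOPE_K for xi, s, o in zip(x, tb_sim, tb_obs)]
```

With the damping factor of 0.5, each toy iteration halves the Tb residuals, so the loop needs several iterations before all channels fall inside their thresholds.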

The RTM used in this 1DVAR algorithm is a two-stream, one-layer model (Weng et al. 2001). The simulation errors caused by these simplifications are mitigated with a correction procedure developed by Weng and Grody (2000): simulated Tbs are corrected with a set of empirical equations derived from a dataset of observed Tbs and the corresponding uncorrected simulations. According to Yan et al. (2008), the standard deviations of the corrected Tbs at 23.8, 31.4, 89, and 150 GHz are 0.21, 0.33, 0.72, and 0.68 K, respectively. An additional study shows that the standard deviations at 190.31 GHz and 183 ± 7 GHz are 4.06 and 2.62 K, respectively.

This 1DVAR model was adopted for the SFR algorithm for several reasons. First, although the RTM assumes a simple one-layer atmosphere, this assumption allows model parameters such as Iw to be expressed in analytic form, which provides both qualitative and quantitative understanding of how the model parameters are connected. Second, the two-stream formulation makes the computation efficient, so retrievals can be completed quickly even for full-orbit data; computational efficiency and product latency can be important for operational applications such as weather forecasting. Finally, the 1DVAR model was originally developed to retrieve snow emissivity, so its parameterization, such as the bias correction and emissivity initialization, is consistent with the emissivity properties of cold conditions. The close connection between snow emissivity and snowfall makes the 1DVAR an appropriate model for a snowfall rate algorithm.

2.4 Ice Particle Terminal Velocity

An important component of the SFR algorithm is the ice particle terminal velocity. Heymsfield and Westbrook (2010) modified Mitchell's (1996) formula for ice particle fall speed and developed a new equation:

$$
V = \frac{\eta}{\rho_a D}\,\frac{\delta_0^2}{4}\left[\left(1 + \frac{4X^{1/2}}{\delta_0^2\, C_0^{1/2}}\right)^{1/2} - 1\right]^2 \tag{17.5}
$$

where V is the ice particle terminal velocity, D the maximum dimension of the ice particle, η the dynamic viscosity of air, ρa the air density, δ0 and C0 the fitting parameters (δ0 = 8.0 and C0 = 0.35), and the modified Best number X is defined as

$$
X = \frac{\rho_a}{\eta^2}\,\frac{8 m g}{\pi A_r^{1/2}} \tag{17.6}
$$

where m is the particle mass (m = πD³ρI/6 for spherical particles), ρI the ice particle density, assumed to be 0.6 g cm−3, g the gravitational acceleration, and Ar the area ratio (the area of the particle projected normal to the flow divided by the area of a circumscribing disc), which equals 1 for a spherical particle. Heymsfield and Westbrook (2010) used this approach to compute terminal velocities of ice particles of various shapes and compared them with measurements. The predicted and measured fall velocities agreed well for all particle shapes examined, and this result is the basis for selecting the Heymsfield and Westbrook (2010) model to compute ice particle terminal velocity in the SFR algorithm.
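A minimal sketch of the Heymsfield and Westbrook (2010) terminal velocity for a spherical particle, written as V = η Re/(ρa D) with Re = (δ0²/4)[(1 + 4X^(1/2)/(δ0² C0^(1/2)))^(1/2) − 1]² and X = ρa 8mg/(η² π Ar^(1/2)). SI units are used throughout (0.6 g cm−3 = 600 kg m−3); the default air density and dynamic viscosity are assumed illustrative near-surface values, not values taken from the SFR algorithm.

```python
import math

DELTA0 = 8.0  # fitting parameter (Heymsfield and Westbrook 2010)
C0 = 0.35     # fitting parameter (Heymsfield and Westbrook 2010)
G = 9.81      # gravitational acceleration, m s-2


def best_number(d, rho_ice=600.0, rho_air=1.2, eta=1.7e-5, area_ratio=1.0):
    """Modified Best number X for a sphere of diameter d (m)."""
    mass = math.pi * d**3 * rho_ice / 6.0  # kg, spherical particle
    return rho_air * 8.0 * mass * G / (eta**2 * math.pi * math.sqrt(area_ratio))


def terminal_velocity(d, rho_ice=600.0, rho_air=1.2, eta=1.7e-5, area_ratio=1.0):
    """Terminal velocity (m s-1) via the Reynolds number Re = V * rho_air * d / eta."""
    x = best_number(d, rho_ice, rho_air, eta, area_ratio)
    reynolds = (DELTA0**2 / 4.0) * (
        math.sqrt(1.0 + 4.0 * math.sqrt(x) / (DELTA0**2 * math.sqrt(C0))) - 1.0
    ) ** 2
    return reynolds * eta / (rho_air * d)


# Usage: fall speeds of 0.5, 1 and 2 mm spheres with the illustrative air properties.
speeds = [terminal_velocity(d) for d in (0.5e-3, 1e-3, 2e-3)]
```

Solving for the Reynolds number first and converting to V afterwards mirrors how the Best-number formulation is usually applied, and it keeps the particle habit confined to the mass and area-ratio terms.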

3 Snowfall Detection

Snowfall detection is an integral part of the snowfall rate retrieval: the SFR algorithm is applied to retrieve snowfall rate only where snowfall is detected. Hourly QCLCD data from two winter seasons between 2012 and 2014 were taken as ground truth to train the SD algorithm. The 'present weather' field reported by QCLCD contains the type of precipitation, which was used to classify cases as falling snow or no precipitation. When constructing the training dataset, the maximum time offset between collocated satellite and station observations was set to 30 min, with the station time following the satellite time. The maximum separation distance between the satellite footprint centroid and the station location was set to 25 km, and only the closest station within this 25-km radius was matched.
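The collocation rule for the training data can be sketched as below. The dictionary field names are hypothetical, distances are great-circle (haversine) distances on a spherical Earth, and "station time following satellite time" is interpreted here as a report time between 0 and 30 min after the overpass.

```python
import math
from datetime import datetime, timedelta

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance (km) between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2.0 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def match_station(fov, reports, max_dist_km=25.0, max_lag=timedelta(minutes=30)):
    """Closest report within max_dist_km whose time is 0..max_lag after the overpass."""
    best, best_dist = None, None
    for rep in reports:
        lag = rep["time"] - fov["time"]
        if lag < timedelta(0) or lag > max_lag:
            continue  # station time must follow satellite time by at most 30 min
        dist = haversine_km(fov["lat"], fov["lon"], rep["lat"], rep["lon"])
        if dist <= max_dist_km and (best_dist is None or dist < best_dist):
            best, best_dist = rep, dist
    return best


# Usage with hypothetical records.
t0 = datetime(2014, 2, 5, 12, 0)
fov = {"lat": 40.0, "lon": -75.0, "time": t0}
reports = [
    {"id": "A", "lat": 40.1, "lon": -75.0, "time": t0 + timedelta(minutes=10)},
    {"id": "B", "lat": 40.05, "lon": -75.0, "time": t0 - timedelta(minutes=5)},
    {"id": "C", "lat": 40.3, "lon": -75.0, "time": t0 + timedelta(minutes=5)},
]
match = match_station(fov, reports)
```

Here station B is closer but reports before the overpass, and station C is more than 25 km away, so only station A qualifies.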

The SD model is a hybrid algorithm that combines the output from a statistical model utilizing satellite passive microwave measurements with the output from a statistical model using meteorological variable forecasts from GFS. Both models were trained with the QCLCD in-situ data (Kongoli et al. 2018). Each module of the combined algorithm is described below.

3.1 Satellite Module

The satellite SD module computes POS using the LR technique with the principal components (PCs) of the five (MHS) or seven (ATMS) high-frequency Tbs at 89.0/88.2 GHz and above as predictors (Kongoli et al. 2015). PCs are used instead of Tbs as LR predictors because the high-frequency measurements are highly correlated with each other, which can make the regression coefficients unstable. The satellite local zenith angle (LZA) is an additional predictor that accounts for the scan angle effect in satellite observations. The limb-corrected Tb of the 53.6 GHz oxygen absorption channel, Tb53L (AMSU-A channel 5 and ATMS channel 6), is used as an atmospheric temperature proxy to define two retrieval regimes: cold and warm. To optimize retrievals, the PC weights and logistic regression coefficients are trained with in-situ station observations of snowfall and no-snowfall occurrence and precomputed separately for the warmer (Tb53L between 244 and 252 K) and colder (Tb53L between 240 and 244 K) weather regimes. This is a major advance over the earlier algorithm of Kongoli et al. (2003) because it allows snowfall retrievals in much colder environments, down to near-surface temperatures of about −15 °C. In addition, the probabilistic statistical approach is more robust than the earlier decision tree approach.
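The regime selection and PC-based probability can be sketched as follows. The per-regime channel means, PC weight vectors, and LR coefficients are hypothetical placeholders for the trained quantities; centering the Tbs before projection, entering LZA as a linear term, and assigning exactly 244 K to the warm regime are assumptions about details the text does not specify.

```python
import math


def select_regime(tb53l_k):
    """Retrieval regime from the limb-corrected 53.6 GHz Tb (K), or None."""
    if 244.0 <= tb53l_k <= 252.0:
        return "warm"
    if 240.0 <= tb53l_k < 244.0:
        return "cold"
    return None  # outside both trained regimes: no retrieval in this sketch


def satellite_pos(tbs_k, tb53l_k, lza_deg, models):
    """POS from PC scores of the high-frequency Tbs plus the local zenith angle.

    `models` maps a regime name to a dict of hypothetical pre-trained values:
    "tb_mean", "pc_weights", "pc_coefs", "intercept", "lza_coef".
    """
    regime = select_regime(tb53l_k)
    if regime is None:
        return None
    m = models[regime]
    anomalies = [tb - mu for tb, mu in zip(tbs_k, m["tb_mean"])]
    pcs = [sum(w * a for w, a in zip(vec, anomalies)) for vec in m["pc_weights"]]
    logit = (m["intercept"]
             + sum(c * pc for c, pc in zip(m["pc_coefs"], pcs))
             + m["lza_coef"] * lza_deg)
    return 1.0 / (1.0 + math.exp(-logit))
```

Training separate weights per regime simply means the same function is evaluated with a different parameter set, which is why the regime lookup comes first.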

3.2 Weather Module

A similar probabilistic LR approach was adopted for snowfall detection using GFS meteorological forecasts as predictors. Experience with satellite PMW instruments and theoretical investigations (e.g., Munchak and Skofronick-Jackson 2013) indicate that the detectability of precipitation over snow-covered surfaces deteriorates significantly compared with bare land. In addition, analyzing and estimating snowfall from physical parameters is valuable in its own right, because it provides alternative retrieval strategies and insight into the relative importance of these parameters in snowfall processes. You et al. (2017) explored the influence of several environmental parameters on rainfall and snowfall estimation and found that relative humidity and vertical velocity are more closely related to the occurrence of snowfall than to that of rainfall. Moreover, experience with the AMSU-A/MHS and ATMS satellite algorithms has shown that cloud thickness computed from forecast data is an efficient filter for reducing false alarms (Meng et al. 2017). The forecast variables considered in developing the algorithm therefore include relative humidity at 2 m and at 1-, 2-, and 3-km height, cloud thickness, and vertical velocity at 2- and 3-km height.

3.3 Hybrid Algorithm

The hybrid algorithm (Kongoli et al. 2018) computes its output as a weighted average of the outputs from the satellite and the weather-based SD algorithms:

$$
P_{\mathrm{hyb}} = W_{\mathrm{sat}} P_{\mathrm{sat}} + W_{\mathrm{wea}} P_{\mathrm{wea}} \tag{17.7}
$$

where P refers to POS, W refers to weight, and the subscripts hyb, sat, and wea refer to the hybrid, satellite, and weather-based SD algorithms, respectively. Because Wsat + Wwea = 1, Eq. (17.7) can be written as:

$$
P_{\mathrm{hyb}} = W_{\mathrm{sat}} P_{\mathrm{sat}} + (1 - W_{\mathrm{sat}})\, P_{\mathrm{wea}} \tag{17.8}
$$

where Wsat is between 0 and 1. Given a set of brightness temperatures and ancillary data, the POS of the satellite and weather models are computed first. The POS of the hybrid algorithm is then derived from Eq. (17.8) for a specified weighting parameter Wsat, and the result (Phyb) is classified as "snowfall" if it exceeds an a priori threshold probability.
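Eq. (17.8) and the threshold decision reduce to a few lines. The default Wsat = 0.5 corresponds to the equal weighting used in the validation below; the 0.5 decision threshold is a hypothetical placeholder, since the a priori threshold value is not given here.

```python
def hybrid_pos(p_sat, p_wea, w_sat=0.5):
    """Eq. (17.8): weighted average of satellite and weather-based POS."""
    if not 0.0 <= w_sat <= 1.0:
        raise ValueError("w_sat must lie between 0 and 1")
    return w_sat * p_sat + (1.0 - w_sat) * p_wea


def is_snowfall(p_sat, p_wea, w_sat=0.5, threshold=0.5):
    """Classify as snowfall when the hybrid POS exceeds the (hypothetical) threshold."""
    return hybrid_pos(p_sat, p_wea, w_sat) > threshold
```

For example, a pixel with a satellite POS of 0.3 and a weather-based POS of 0.9 receives a hybrid POS of 0.6 with equal weights, so the weather module can rescue detections that the satellite module alone would miss.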

3.4 SD Filters

Rainfall and snow cover can produce PMW signatures similar to those of snowfall and can therefore contaminate the output of the statistical SD model. In addition, because the SD algorithm does not exclude rainfall from its detections, temperature-based screening is necessary to filter out rainfall. These considerations led to the development of a set of filters that further screen the snowfall identified by the SD model. Most filters use atmospheric and surface information from the GFS forecasts; the meteorological variables considered include 2-m temperature, maximum atmospheric temperature, 2-m relative humidity, Tb53L, and cloud thickness (CT). For instance, one filter checks the 2-m temperatures at the two consecutive GFS forecast times that bracket the satellite overpass. If both temperatures are above 1 °C, the surface environment is persistently warm and generally cannot sustain snowfall (Meng et al. 2017). Another filter requires surface relative humidity to be above 60%, because analysis has shown that this condition is almost always met during snowfall (Kongoli et al. 2015). The most important filter requires CT to be at least 1500 m. Although this requirement is likely to remove shallow snowfall such as lake effect snow, it does not significantly lower the Probability of Detection (POD), because the SFR algorithm is insensitive to very shallow snowfall anyway, owing to its weak effect on PMW measurements. Imposing a CT threshold eliminates most of the confusion between true snowfall and snow cover on the surface, and our analysis has shown that this filter is very effective at reducing false snowfall detection (Meng et al. 2017).
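The three filters whose thresholds are stated above can be sketched as a single screening function. The filters on maximum atmospheric temperature and Tb53L are omitted because their thresholds are not given here, and the argument names are hypothetical.

```python
def passes_filters(t2m_before_c, t2m_after_c, rh_pct, cloud_thickness_m):
    """Return True if an SD snowfall pixel survives the GFS-based screens.

    t2m_before_c / t2m_after_c: 2-m temperatures (deg C) at the two GFS
    forecast times bracketing the overpass; rh_pct: surface relative
    humidity (%); cloud_thickness_m: cloud thickness (m).
    """
    # Persistently warm surface: both bracketing 2-m temperatures above 1 deg C.
    if t2m_before_c > 1.0 and t2m_after_c > 1.0:
        return False
    # Snowfall almost always occurs with surface relative humidity above 60 %.
    if rh_pct <= 60.0:
        return False
    # Cloud thickness must be at least 1500 m.
    if cloud_thickness_m < 1500.0:
        return False
    return True
```

Note that a single warm forecast time does not trigger the temperature screen; only a persistently warm surface does.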

4 Snowfall Rate

The SFR algorithm (Meng et al. 2017) is composed of three main elements: (i) retrieval of cloud properties from a 1DVAR, (ii) derivation of initial SFR, i.e. SFRi, and (iii) SFRi calibration to obtain the final SFR product. This section details the theoretical basis of the algorithm and the calibration approach.

4.1 Methodology

The Particle Distribution Function (PDF) of ice particles adopts the modified gamma distribution from Yan et al. (2008) and Weng et al. (2001):

where D is the diameter of the ice particle (assuming spherical habit), Dm the nominal diameter of the particle, N0 the intercept parameter, ν the shape parameter for the gamma distribution, and Γ the gamma function. Furthermore,

and

where De is the effective diameter of the ice particles and Iw the ice water path. If the shape parameter is set to 1, the PDF in Eq. (17.9) reduces to an exponential distribution:

Assuming the number of ice particles follows the above exponential distribution, the water equivalent snowfall rate, SFRi, can be expressed as (Meng et al. 2017):

or

where ρw is the density of water.

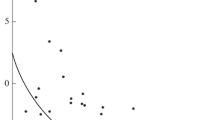

An implicit assumption in the above equation is that ice water content (IWC) is uniformly distributed in the cloud column. This is consistent with the one-layer RTM (Yan et al. 2008), but it does not represent actual cloud physics. In snowing clouds, ice crystals grow in mass as they fall through the cloud by two mechanisms: (i) the Bergeron process, i.e., deposition of water vapor that is supersaturated with respect to ice, and (ii) accretion (riming), i.e., freezing of supercooled liquid droplets onto the surface of ice crystals. These processes generally lead to higher IWC in the lower portion of the cloud than in the upper portion. Figure 17.1 presents ten randomly selected, normalized IWC profiles retrieved from CloudSat (Stephens et al. 2002). In general, these profiles increase from cloud top to cloud base, supporting the notion that IWC is not uniformly distributed in most clouds. As a first step toward mitigating the bias caused by the uniform-IWC assumption, IWC is assumed to vary linearly through the cloud column. A simple geometric calculation shows that this effectively doubles the SFRi in Eq. (17.14) at cloud base.
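The doubling follows from a short derivation. It assumes that IWC increases linearly from zero at cloud top to IWC_b at cloud base while the column total Iw is unchanged, and that SFRi scales linearly with IWC for a fixed effective diameter De; z is the depth below cloud top and H the cloud thickness.

```latex
% Uniform IWC (one-layer assumption):
\mathrm{IWC}_u = \frac{I_w}{H}
% Linear IWC, zero at cloud top (z = 0), IWC_b at cloud base (z = H):
\mathrm{IWC}(z) = \mathrm{IWC}_b \, \frac{z}{H}
\quad\Rightarrow\quad
I_w = \int_0^H \mathrm{IWC}_b \, \frac{z}{H} \, dz = \frac{\mathrm{IWC}_b \, H}{2}
% Same column total I_w in both cases:
\mathrm{IWC}_b = \frac{2 I_w}{H} = 2\,\mathrm{IWC}_u
\quad\Rightarrow\quad
\mathrm{SFR}_i(\text{cloud base}) = 2\,\mathrm{SFR}_i(\text{uniform})
```

Geometrically, the linear profile is a triangle with the same area (Iw) as the uniform rectangle, so its height at the base must be twice the rectangle's height.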

By adopting Heymsfield and Westbrook’s (2010) model (Eqs. 17.5 and 17.6) and assuming spherical ice habit, SFRi can then be expressed as:

The above integral has no closed-form solution and is therefore evaluated numerically using Romberg's method.
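Romberg's method applies Richardson extrapolation to successively halved trapezoid estimates. The sketch below is a generic implementation; the SFRi integrand itself is not reproduced here, so the usage example integrates a toy mass-weighted integrand D³ exp(−D/Dm) of the exponential distribution (Dm = 1, truncated at 40 Dm), whose exact value over [0, ∞) is 6 Dm⁴.

```python
import math


def romberg(f, a, b, tol=1e-10, max_levels=20):
    """Integrate f over [a, b] by Romberg's method."""
    h = b - a
    prev = [0.5 * h * (f(a) + f(b))]  # R(0, 0): a single trapezoid
    for k in range(1, max_levels):
        h *= 0.5
        # Refine the trapezoid rule by evaluating only the new midpoints.
        new_points = sum(f(a + (2 * i + 1) * h) for i in range(2 ** (k - 1)))
        row = [0.5 * prev[0] + h * new_points]
        # Richardson extrapolation:
        # R(k, j) = R(k, j-1) + (R(k, j-1) - R(k-1, j-1)) / (4**j - 1)
        for j in range(1, k + 1):
            row.append(row[j - 1] + (row[j - 1] - prev[j - 1]) / (4 ** j - 1))
        if abs(row[-1] - prev[-1]) < tol:
            return row[-1]
        prev = row
    return prev[-1]


# Usage: toy integrand D^3 exp(-D / Dm) with Dm = 1, truncated at 40 Dm.
third_moment = romberg(lambda d: d**3 * math.exp(-d), 0.0, 40.0)
```

Romberg's method suits smooth integrands such as particle size distribution moments, because each extrapolation level cancels a further even power of the step size.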

4.2 Calibration

Because the IWC profile cannot be obtained from either the PMW measurements or the GFS model, SFRi was calibrated against the Stage IV precipitation analysis to improve retrieval accuracy. SFRi data were collocated with Stage IV data from large snowstorms in the two winter seasons between 2015 and 2017. Spatial collocation was performed by convolving the higher-resolution Stage IV data to the lower-resolution satellite field of view (FOV). The SFRi estimates were matched with Stage IV analyses within 1 h of the satellite overpass. Because Stage IV does not include precipitation phase, the snowfall detected by the SD algorithm was used to identify snowfall pixels in Stage IV.
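The convolution of Stage IV data to a satellite FOV can be sketched as a weighted average of the 4-km grid values under the footprint. The circular Gaussian antenna pattern, the 16-km full width at half maximum, and the cutoff radius are assumptions (real cross-track footprints grow and become elliptical away from nadir), and grid coordinates are taken in a local kilometer frame.

```python
import math


def gaussian_fov_average(points, center_x_km, center_y_km, fwhm_km=16.0, cutoff_km=None):
    """Weighted average of (x_km, y_km, value) grid points under a circular Gaussian FOV."""
    sigma = fwhm_km / (2.0 * math.sqrt(2.0 * math.log(2.0)))  # FWHM -> standard deviation
    if cutoff_km is None:
        cutoff_km = fwhm_km  # ignore points farther than one FWHM from the centroid
    num = den = 0.0
    for x, y, value in points:
        r2 = (x - center_x_km) ** 2 + (y - center_y_km) ** 2
        if r2 > cutoff_km ** 2:
            continue
        w = math.exp(-r2 / (2.0 * sigma ** 2))
        num += w * value
        den += w
    return num / den if den > 0.0 else float("nan")


# Usage: a 4-km grid around a footprint centred at the origin.
grid = [(x, y, 2.0) for x in range(-20, 21, 4) for y in range(-20, 21, 4)]
fov_value = gaussian_fov_average(grid, 0.0, 0.0)
```

Normalizing by the sum of weights keeps the average unbiased even when the cutoff truncates part of the footprint.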

The calibration adopts the histogram matching technique described in Kidder and Jones (2007). This approach matches the cumulative distribution functions (CDFs) of two products (here, SFRi and Stage IV) using the least squares method to achieve optimal overall agreement. The equation for estimating S-NPP ATMS SFR is:

Table 17.1 compares the S-NPP SFR statistics against Stage IV before and after calibration. The calibration substantially reduces the bias of the initial SFR retrievals while largely maintaining the root mean square error (RMSE) and the correlation with Stage IV. Notably, with only the simple assumption of a linear IWC distribution, the 1DVAR-based SFR retrieval already achieves a correlation coefficient of 0.52 with Stage IV, which demonstrates the merit of this physical algorithm.
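A minimal sketch of the histogram-matching calibration, assuming it is implemented by pairing SFRi and Stage IV values at common cumulative probability levels and fitting a least-squares cubic, consistent with the cubic adjustment function noted in the summary. The number of quantile levels and the toy data are hypothetical, and the fitted coefficients are not the operational ones.

```python
def quantile(sorted_vals, q):
    """Linearly interpolated quantile of an already sorted list, 0 <= q <= 1."""
    pos = q * (len(sorted_vals) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1.0 - frac) + sorted_vals[hi] * frac


def matched_quantiles(src, ref, n_q=99):
    """Pair quantiles of two samples at common cumulative probability levels."""
    s, r = sorted(src), sorted(ref)
    qs = [(i + 1) / (n_q + 1) for i in range(n_q)]
    return [quantile(s, q) for q in qs], [quantile(r, q) for q in qs]


def fit_cubic(xs, ys):
    """Least-squares cubic y = c0 + c1 x + c2 x^2 + c3 x^3 via normal equations."""
    n = 4
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    for col in range(n):  # Gaussian elimination with partial pivoting
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= f * a[col][c]
            b[r] -= f * b[col]
    coef = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        coef[r] = (b[r] - sum(a[r][c] * coef[c] for c in range(r + 1, n))) / a[r][r]
    return coef


def apply_cubic(coef, x):
    return sum(c * x ** i for i, c in enumerate(coef))


# Usage with toy data in which the "reference" is a linear rescaling of SFRi.
sfri = [0.1 * i for i in range(1, 51)]
stage4 = [2.0 * v + 0.05 for v in sfri]
coef = fit_cubic(*matched_quantiles(sfri, stage4))
```

Because only the two CDFs are matched, the samples need not be paired point by point; the cubic then maps any SFRi value onto the reference distribution.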

Figures 17.2 and 17.3 present, respectively, the scatter plots and the probability density functions (PDFs) for the same datasets as in Table 17.1. The calibration partly mitigates the underestimation of SFRi below 1 mm h−1, as well as the overestimation above about 3.5 mm h−1 (Fig. 17.2). The two PDFs also agree much better after calibration (Fig. 17.3). A lower limit of 0.05 mm h−1 is imposed on the SFR product because the false alarm rate (FAR) increases drastically for very light snowfall. This lower limit causes the slight dip at the low end of the SFR PDF (Fig. 17.3).

5 Validation

Separate validation studies were conducted to verify the performance of the SD and the SFR algorithms, respectively, since they are independent of each other.

5.1 SD Validation

The QCLCD in-situ hourly ground data were employed for SD algorithm validation. Periods during the three winter seasons between 2014 and 2017 were selected to sample snowfall events over CONUS. The satellite, weather-based and hybrid SD outputs at the satellite FOV scale were matched with in-situ data following the same spatio-temporal collocation criteria as for constructing the SD training data.

Table 17.2 presents the statistics of the S-NPP ATMS satellite-only and hybrid algorithms, the latter using a weighting coefficient Wsat of 0.5, i.e., treating the GFS-based and satellite outputs as equally uncertain. Compared with the satellite-only algorithm, the hybrid algorithm substantially improves POD, FAR, and the Heidke Skill Score (HSS). It should be emphasized that these statistics come from comparisons with in-situ measurements in which light snowfall cases (reported as "trace" or with zero surface accumulation) dominate. Light snowfall is generally much more difficult to detect than heavier snowfall because of its weak impact on satellite measurements.
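POD, FAR, and HSS follow from the 2 × 2 contingency table of detections against station reports. The sketch assumes that FAR denotes the false alarm ratio, false alarms / (hits + false alarms), and uses the standard two-category HSS formula; the counts in the usage example are invented.

```python
def contingency_scores(hits, false_alarms, misses, correct_negatives):
    """Return (POD, FAR, HSS) for a 2 x 2 detection contingency table."""
    a, b, c, d = hits, false_alarms, misses, correct_negatives
    pod = a / (a + c)  # fraction of observed snowfall that was detected
    far = b / (a + b)  # fraction of detections that were not observed snowfall
    # Heidke Skill Score: accuracy relative to random chance.
    hss = 2.0 * (a * d - b * c) / ((a + c) * (c + d) + (a + b) * (b + d))
    return pod, far, hss


# Usage with invented counts.
pod, far, hss = contingency_scores(hits=50, false_alarms=10, misses=20, correct_negatives=120)
```

HSS equals 1 for a perfect detector and 0 for one with no skill beyond chance, which makes it a convenient single number for comparing the satellite-only and hybrid algorithms.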

Figure 17.4 demonstrates the improvement of the hybrid model for a major snowfall event hitting the Northeast US on 5 February 2014. As shown in Fig. 17.4, a considerable snowfall area of this significant event was missed by the satellite algorithm but captured by the hybrid algorithm.

Fig. 17.4 S-NPP ATMS SFR using (a) the satellite-only SD algorithm and (b) the hybrid SD algorithm during a major snowfall event on 5 February 2014 in the US. Image (c) is the near-coincident radar reflectivity, which covers both snowfall and rainfall (the latter in the southern part of CONUS). The oval areas in (a) and (b) mark legitimate snowfall that was missed by the satellite-only algorithm but captured by the hybrid algorithm.

5.2 SFR Validation

The SFR algorithm was validated against the Stage IV analyses from October 2016 to April 2017. SFR was retrieved with the algorithm described above (Eqs. 17.15 and 17.16) for all snowfall FOVs over CONUS during this period, and the retrievals were collocated with Stage IV data by convolution over the sensor footprints. Table 17.3 lists the statistics of the S-NPP SFR validation data. The correlation and bias are comparable to those of the calibration data, while the RMSE is somewhat higher. The scatter plot and PDF of the validation data are displayed in Fig. 17.5. Consistent with the higher RMSE, the validation data scatter more than the calibration data. Note that, unlike the calibration dataset, which consists of data from large snowstorm systems, the validation data include all SFR retrievals that could be collocated with Stage IV from winter 2017. That the validation data still perform reasonably well demonstrates the strength of the SFR algorithm for both large-scale and localized snowfall.

As part of the validation study, the 3-month average S-NPP SFR for January–March 2017 was calculated and compared with the corresponding Stage IV average for the same period (Fig. 17.6). The snowfall detected by the SD algorithm was again used to determine snowfall pixels in Stage IV. Stage IV does not cover most of the western US because of poor radar coverage in this region. This illustrates one advantage of satellite snowfall rate products such as SFR: they can fill gaps in radar coverage. The overall intensity patterns of the two images in Fig. 17.6 are quite similar, with heavier snowfall in the northeastern US, around the Great Lakes, and along the Rocky Mountains, and lighter snowfall in between. It should be stressed that the 3-month snowfall average is based on the snowfall detected by the SFR algorithm. The current algorithm has a low detection rate for shallow snowfall such as lake effect snow and shallow orographic snow, so the snowfall represented in Fig. 17.6 most likely includes only a portion of such shallow snowfall. The statistics of the 3-month SFR average against the Stage IV data are given in Table 17.4. As expected, because temporal averaging reduces the random errors of individual retrievals, the seasonal-average metrics are better than those of both the validation data and even the calibration data.

6 Summary and Conclusions

An overland SFR algorithm has been developed for PMW radiometers (the AMSU-A/MHS pair aboard the NOAA POES and EUMETSAT Metop satellites, and ATMS aboard the S-NPP and NOAA-20 satellites). The algorithm relies on an embedded SD model to detect snowfall. Only when snowfall is detected will the SFR algorithm be applied to retrieve snowfall rate.

The SD model is a hybrid algorithm that combines the output from a satellite-based statistical model and the output from a GFS model-based statistical model. Both statistical algorithms were trained with QCLCD in-situ observations of snowfall and no-snowfall. The satellite model couples the PCs of high-frequency PMW measurements with LR to produce the satellite-based POS. The other statistical model takes a set of meteorological variables from GFS forecasts as predictors and produces the weather-based POS with an LR model. The final POS is a weighted average of these two probabilities and is classified as "snowfall" if it exceeds an a priori threshold. The SD algorithm is further enhanced with a set of filters, mostly based on NWP model data. These filters, especially the cloud thickness threshold, are effective at removing false alarms from the SD model output.

The SFR algorithm is physically based and is composed of three main elements: retrieving cloud properties, estimating the initial SFR (SFRi), and determining the final SFR through an adjustment. The cloud properties Iw and De are retrieved using a 1DVAR approach that uses a two-stream RTM as the forward model to simulate Tbs at five window and water vapor sounding channels. An existing model is adopted to calculate ice particle terminal velocity. The retrieved cloud properties are used to compute the terminal velocity and IWC, and eventually SFRi. Finally, SFR is determined by adjusting SFRi with a cubic regression function, which was derived by matching the SFRi histogram with that of the Stage IV precipitation data. The adjustment reduces the overall bias in SFRi, which is plausibly due to the uncertainty associated with the assumption of a linear IWC distribution. The adjustment has little impact on the RMSE and on the correlation of SFRi with Stage IV analyses.

The SD algorithm was validated against hourly in-situ ground data. Compared with the satellite-only model, the hybrid SD algorithm performs much better in terms of POD, FAR, and HSS; for instance, the satellite-only model has an HSS of 0.23, whereas the hybrid model reaches 0.44. The SFR product was validated using the Stage IV precipitation analyses, with both point and seasonal (January–March 2017) average SFR compared against the corresponding Stage IV data. While the calibration dataset consists of data from large snowstorm systems in winters 2015 and 2016, the validation data include all SFR retrievals that could be collocated with Stage IV from winter 2017. The validation data still perform well, with correlation and bias comparable to those of the calibration data and a somewhat higher RMSE. These results demonstrate the strength of the SFR algorithm for both large-scale and localized snowfall.

References

Bennartz, R., & Bauer, P. (2003). Sensitivity of microwave radiances at 85–183 GHz to precipitating ice particles. Radio Science, 38, 8075. https://doi.org/10.1029/2002RS002626.

Ferraro, R. R., Weng, F., Grody, N. C., Zhao, L., Meng, H., Kongoli, C., Pellegrino, P., Qiu, S., & Dean, C. (2005). NOAA operational hydrological products derived from the advanced microwave sounding unit. IEEE Transactions on Geoscience and Remote Sensing, 43, 1036–1049. https://doi.org/10.1109/TGRS.2004.843249.

Heymsfield, A. J., & Westbrook, C. D. (2010). Advances in the estimation of ice particle fall speeds using laboratory and field measurements. Journal of the Atmospheric Sciences, 67, 2469–2482. https://doi.org/10.1175/2010JAS3379.1.

Kidd, C., Matsui, T., Chern, J., Mohr, K., Kummerow, C., & Randel, D. (2016). Precipitation estimates from cross-track passive microwave observations using a physically based retrieval scheme. Journal of Hydrometeorology, 17, 383–400. https://doi.org/10.1175/JHM-D-15-0051.1.

Kidder, S. Q., & Jones, A. S. (2007). A blended satellite total precipitable water product for operational forecasting. Journal of Atmospheric and Oceanic Technology, 24(1), 74–81. https://doi.org/10.1175/JTECH1960.1.

Kim, M.-J., Weinman, J. A., Olson, W. S., Chang, D.-E., Skofronick-Jackson, G., & Wang, J. R. (2008). A physical model to estimate snowfall over land using AMSU-B observations. Journal of Geophysical Research, 113, D09201. https://doi.org/10.1029/2007JD008589.

Kongoli, C., Pellegrino, P., Ferraro, R., Grody, N. C., & Meng, H. (2003). A new snowfall detection algorithm over land using measurements from the Advanced Microwave Sounding Unit (AMSU). Geophysical Research Letters, 30(14), 1756. https://doi.org/10.1029/2003GL017177.

Kongoli, C., Meng, H., Dong, J., & Ferraro, R. (2015). A snowfall detection algorithm over land utilizing high-frequency passive microwave measurements—application to ATMS. Journal of Geophysical Research, 120, 1918–1932. https://doi.org/10.1002/2014JD022427.

Kongoli, C., Meng, H., Dong, J., & Ferraro, R. (2018). A hybrid snowfall detection method from satellite passive microwave measurements and global weather forecast models. Quarterly Journal of the Royal Meteorological Society, 144(S1), 120–132. https://doi.org/10.1002/qj.3270.

Kummerow, C. D., Ringerud, S., Crook, J., Randel, D., & Berg, W. (2011). An observationally generated a priori database for microwave rainfall retrievals. Journal of Atmospheric and Oceanic Technology, 28, 113–130. https://doi.org/10.1175/2010JTECHA1468.1.

Kummerow, C. D., Randel, D., Kulie, M., Wang, N.-Y., Ferraro, R. R., Munchak, S. J., & Petkovic, V. (2015). The evolution of the Goddard profiling algorithm to a fully parametric scheme. Journal of Atmospheric and Oceanic Technology, 32, 2265–2280. https://doi.org/10.1175/JTECH-D-15-0039.1.

Liu, G., & Seo, E.-K. (2013). Detecting snowfall over land by satellite high-frequency microwave observations: The lack of scattering signature and a statistical approach. Journal of Geophysical Research, 118, 1376–1387. https://doi.org/10.1002/jgrd.50172.

Meng, H., Dong, J., Ferraro, R. R., Yan, B., Zhao, L., Kongoli, C., Wang, N.-Y., & Zavodsky, B. (2017). A 1DVAR-based snowfall rate retrieval algorithm for passive microwave radiometers. Journal of Geophysical Research, 122. https://doi.org/10.1002/2016JD026325.

Mitchell, D. L. (1996). Use of mass- and area-dimensional power laws for determining precipitation particle terminal velocities. Journal of the Atmospheric Sciences, 53, 1710–1723. https://doi.org/10.1175/1520-0469(1996)053<1710:UOMAAD>2.0.CO;2.

Munchak, S. J., & Skofronick-Jackson, G. (2013). Evaluation of precipitation detection over various surfaces from passive microwave imagers and sounders. Atmospheric Research, 131, 81–94. https://doi.org/10.1016/j.atmosres.2012.10.011.

Noh, Y.-J., Liu, G., Seo, E.-K., Wang, J. R., & Aonashi, K. (2006). Development of a snowfall retrieval algorithm at high microwave frequencies. Journal of Geophysical Research, 111, D22216. https://doi.org/10.1029/2005JD006826.

Skofronick-Jackson, G., Johnson, B. T., & Munchak, S. J. (2013). Detection thresholds of falling snow from satellite-borne active and passive sensors. IEEE Transactions on Geoscience and Remote Sensing, 51, 4177–4189. https://doi.org/10.1109/TGRS.2012.2227763.

Stephens, G. L., Vane, D. G., Boain, R. J., Mace, G. G., Sassen, K., Wang, Z., Illingworth, A. J., O’Connor, E. J., Rossow, W. B., Durden, S. L., Miller, S. D., Austin, R. T., Benedetti, A., Mitrescu, C., & the CloudSat Science Team. (2002). The CloudSat mission and the A-train: A new dimension of space-based observations of clouds and precipitation. Bulletin of the American Meteorological Society, 83, 1771–1790. https://doi.org/10.1175/BAMS-83-12-1771.

Weng, F., & Grody, N. (2000). Retrieval of ice cloud parameters using a microwave imaging radiometer. Journal of the Atmospheric Sciences, 57, 1069–1081. https://doi.org/10.1175/1520-0469(2000)057<1069:ROICPU>2.0.CO;2.

Weng, F., Yan, B., & Grody, N. (2001). A microwave land emissivity model. Journal of Geophysical Research, 106, 20,115–20,123. https://doi.org/10.1029/2001JD900019.

Yan, B., Weng, F., & Meng, H. (2008). Retrieval of snow surface microwave emissivity from the advanced microwave sounding unit. Journal of Geophysical Research, 113, D19206. https://doi.org/10.1029/2007JD009559.

You, Y., Wang, N.-Y., Ferraro, R. R., & Rudlosky, S. (2017). Quantifying the snowfall detection performance of the GPM microwave imager channels over land. Journal of Hydrometeorology, 18, 729–751. https://doi.org/10.1175/JHM-D-16-0190.1.

Zhang, J., Howard, K., Langston, C., Kaney, B., Qi, Y., Tang, L., Grams, H., Wang, Y., Cocks, S., Martinaitis, S., Arthur, A., Cooper, K., Brogden, J., & Kitzmiller, D. (2016). Multi-Radar Multi-Sensor (MRMS) quantitative precipitation estimation: Initial operating capabilities. Bulletin of the American Meteorological Society, 97. https://doi.org/10.1175/BAMS-D-14-00174.1.

Acknowledgements

The authors would like to acknowledge Jun Dong of the University of Maryland for conducting the SFR calibration and validation studies, and Banghua Yan and Limin Zhao of NOAA for providing support during the development of the SFR algorithm. This research has been supported by NOAA through grant NA09NES4400006 (Cooperative Institute for Climate and Satellites, CICS) at the University of Maryland, Earth System Science Interdisciplinary Center (ESSIC). The views, opinions, and findings contained in this report are those of the authors and should not be construed as an official National Oceanic and Atmospheric Administration or U.S. Government position, policy, or decision.

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Meng, H., Kongoli, C., Ferraro, R.R. (2020). A 1DVAR-Based Snowfall Rate Algorithm for Passive Microwave Radiometers. In: Levizzani, V., Kidd, C., Kirschbaum, D.B., Kummerow, C.D., Nakamura, K., Turk, F.J. (eds) Satellite Precipitation Measurement. Advances in Global Change Research, vol 67. Springer, Cham. https://doi.org/10.1007/978-3-030-24568-9_17

DOI: https://doi.org/10.1007/978-3-030-24568-9_17

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-24567-2

Online ISBN: 978-3-030-24568-9

eBook Packages: Earth and Environmental Science (R0)