Abstract

Most of existing event prediction approaches consider event prediction problems within a specific application domain while event prediction is naturally a cross-disciplinary problem. This paper introduces a generic taxonomy of event prediction approaches. The proposed taxonomy, which oversteps the application domain, enables a better understanding of event prediction problems and allows conceiving and developing advanced and context-independent event prediction techniques.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

An event is defined as a timestamped element in a temporal sequence [39]. Examples of events include earthquakes, flooding, and business failure. Event prediction aims to assess the future aspects of event features, e.g. occurrence time and probability, frequency, intensity, duration and spatial occurrence. Event prediction problem is encountered in different research and practical domains, and a large number of event prediction approaches have been proposed in the literature [46, 51, 60]. However, most of existing event prediction approaches have been initially designed and used within a specific application domain [16, 17, 59] while event prediction is naturally a cross-disciplinary problem.

Events can be categorized into either simple or complex [24]. A complex event is a collection of simple or complex events that can be linearly ordered in event streams or partially ordered in event clouds [24]. In this paper, we distinguish between two types of complex events. Type 1 of complex events represents a collection of events where only the collection characteristics are accessible and measurable. This is due to the fact that the access to the characteristics of simple events is costly, difficult or non-relevant. Earthquakes are good examples of this type of complex events. The predicative analysis of Type 1 complex events can be handled using the classical event prediction approaches. Type 2 complex events represents a collection of events where the characteristics of simple events as well as events collection are accessible and measurable. Examples of Type 2 complex events include computer system and Internet of Things failures. The predicative analysis of Type 2 complex events is essentially based on Complex Event Processing (CEP) techniques [24, 58].

The objective of this paper is to identify and classify the main mature and classical approaches of event prediction. It introduces a generic taxonomy of event prediction approaches that oversteps application domain. This generic cross-disciplinary view enables a better understanding of event prediction problems and opens road for the design and development of advanced and context-independent techniques. The proposed taxonomy distinguishes first three main categories of event prediction approaches, namely generative, inferential and hybrid. Each of these categories contains several event prediction methods, whose characteristics are presented in this paper.

The paper is organized as follows. Section 2 introduces the taxonomy. Sections 3–5 detail the main categories of event prediction approaches. Section 6 discusses some existing approaches. Section 7 concludes the paper.

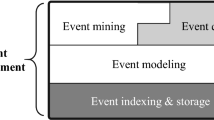

2 General View of the Taxonomy

The taxonomy in Fig. 1 presents a generic classification of event prediction approaches in time series. This taxonomy includes only classical and mature approaches that are well established in the literature. Furthermore, this taxonomy has been constructed based on some commonly studied event types from several fields, namely finance, geology, hydrology, medicine and computer science. Three main categories of event prediction approaches can be distinguished in Fig. 1:

-

Generative approaches. These approaches build theoretical models of the system generating the target event and predict future events through simulation. The term generative refers to the strategy adopted by generative science [18] consisting in the modelling of natural phenomena and social behavior through mathematical equations [12] or computational agents [21]. They are adapted to predict events where specific simulation frameworks are accessible, for instance flood modeling and simulation frameworks [12, 14]. Generative approaches are mature and well proven. These approaches require a strong expertise in the target event field.

-

Inferential approaches. Real world is complex and even though physics and mathematics have greatly evolved, our knowledge of rules that control observed phenomena is still superficial [38]. Thus, generative approaches still deficient in cases where the knowledge of the system generating the event is insufficient. Inferential approaches fill this gap. These approaches literally learn and infer patterns from past data.

-

Hybrid approaches. These approaches combine models constructed from observed data with models based on physics laws. Hence, they employ generative and inferential approaches. The authors in [30] design hybrid approaches by ‘conceptual approaches’. The basic idea of hybrid approaches is to use inferential methods to prepare the considerable amount of historical data required as input to generative methods.

These categories will be further detailed in the rest of this paper:

3 Generative Approaches to Event Prediction

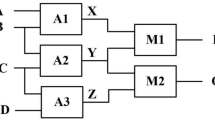

The flowchart in Fig. 2 illustrates graphically the working principle of generative approaches. Three main steps can be distinguished. First, the theoretical structure of the system generating the event is modelled. Second, the obtained model is calibrated and validated using real-world datasets. This step consists in the estimation of the model parameters that fit at best the available data. Finally, simulation is performed, the future states of the system are generated and future event characteristics are deduced.

There are two main sub-categories of generative approaches:

-

Dynamical system modeling. A dynamical system can be described as a set of states S and a rule of change R that determines the future state of the system over time T. In other words, the rule of change \(R:S\times T \longrightarrow S\) gives the consequent states of the system for each \(s\in S\). These approaches build the theoretical model, then construct a computational model that implements the theoretical mathematical model [12]. In hydrology field, these models are called hydrodynamic models [37]. Dynamical system modeling approaches depend on the model robustness. They are mainly applied in weather forecast and flood prediction.

-

Agent-based simulation. These approaches consist in the modelling of the system components behavior as interacting agents. They are effective when human social behavior need to be considered [21].

The main difference between these two sub-categories concerns the model conception foundation. In the first case, differential equations govern the system evolution, whereas, in the second case, logical statements establish the rules and interaction between agents [11].

4 Inferential Approaches to Event Prediction

The working principle of inferential approaches is shown in Fig. 3, where three main steps are involved. First, data is created and analyzed. Second, predictive modelling (i.e. inference) is conducted. Inference may be based on expert opinion or on a quantitative predictive model, as detailed in what follows. Finally, the model is tested over unseen datasets. The event characteristics are deduced from obtained results.

There are two main trends within inferential approaches: qualitative and quantitative. The first case is conducted through human experts while the second relies on statistical or machine learning techniques.

4.1 Qualitative Approaches

Qualitative approaches relies on Human expertise. Experts in the target event field analyze the data in order to deduce common patterns. Then, they construct mathematical or logical relations between studied variables and event probable occurrence. These relations are commonly called indexes in finance context. Unlike generative approaches where each model must have sound theoretical foundation, qualitative approaches allow subjectivity in the constructed model. According to [2], subjectivity is accepted when human behavior is under study.

4.2 Quantitative Approaches

Within quantitative approaches, data is processed through algorithms and statistical techniques. There is a large number of quantitative approaches. The main difference between them concerns the format of data used to carry out the study. Hence, quantitative approaches are further subdivided according to data format into three subgroups, which are detailed in the following paragraphs. We design by \(e^t\) a target event and by \(e_o^t\) the occurrence o of the event \(e^t\) and \(t_o\) its time of occurrence with \(o\in [1..n]\); n is the number of past events considered in the study.

Matrix Data Structure-Based Approaches. In this case, data has the format of a matrix. This format is commonly used in statistics. Cases (i.e. observations or learning set) representing the matrix rows are event instances \(e_o^t\). Matrix rows can also be control-cases \(c_z^t\) representing random situations that take place at time \(t_z\) such that \(t_o \ne t_z\) with \(z\in [1..m]\); m is the total number of control-cases. The matrix columns are variables (i.e. features, biomarkers, attributes) \({X_k}\) with \(k\in [1..K]\); K is the total number of variables. Finally, data used has the following format: \(M=(x_{ij})\) with \(i\in [1..n+m]\) and \(j\in [1..K]\); and \(x_{ij}\) is the value of variable \(X_j\) for each observation. Approaches dealing with such format are classification approaches and event frequency analysis approaches.

Classification Approaches. The prediction process involves predictor variables referenced above as \({X_k}\). Classification can be supervised or unsupervised. For supervised classification, a decision variable D such that \(D\in \{X_k\}\) specifies the predicted outcome. The decision variable can be the event magnitude or simply a binary valued variable specifying the actual occurrence of the event or not [32]. In unsupervised classification, clusters are deduced and interpreted as prediction outcomes [32]. Classification techniques for prediction purpose can be applied as single classifiers [3, 47] or as hybrid classifiers [13, 15] which is the recent trend in this area. The authors in [32] give a summary of hybrid classifiers for business failure prediction.

Event Frequency Analysis Approaches. The event occurrences are described by a unique random variable or a set of variables \({X_o}\). This can be the event intensity (i.e. magnitude for earthquakes) or other characteristics such as the volume and duration for floods [61]. In this category of approaches, the variable outcomes are estimated by analysing frequency distribution of event occurrences. The estimation of outcomes relies on descriptive statistics and consists practically in approximating the variables distribution then deducing their statistical descriptions.

There are two cases for this type of approaches: (i) rare events with a focus on maximum values for \({X_o}\) (i.e. extreme events) [25]; and (ii) frequent events. For the first case, extreme value theory has become a reference. It involves the analysis of the tail of the distribution. For the second case, known distributions such as Poisson, Gamma or Weibull are considered. Studies extending the extreme value theory for the multivariate case exist but are rather difficult to apply for non-statisticians [17].

Temporal Approaches. In temporal approaches, the time dimension is explicitly considered. Here, \(e_o^t\) will be identified on a set of K time series, each time series represents a variable X measured at equal intervals over a time period T such that the time of occurrence of \(e_o^t\), namely \(t_o\), is included in T (see Fig. 4). The set of time series which actually represent the studied data is denoted by \(S_T=\{X_k (t);t \in T\}\), \(k \in [1..K]\). Temporal approaches may consider unique time series (i.e. univariate time series) or several time series. Two types of methodologies are possible.

Approaches Dealing with Univariate Time Series. In this special case, a unique time series is under consideration. The event prediction can follow two patterns:

-

Time series forecasting and event detection. For this case, time series values are forecasted. Then the target event is detected. It is important to note that event prediction on the basis of time series data is different from time series forecasting. The difference consists in the nature of the predicted outcome. For event prediction, the outcome is an event, hence the goal is to identify the time of occurrence of the event through the analysis of the effect the precursor factors have on time series data. For time series forecasting, the outcome consists in the future values of the time series. The authors in [54] applied this approach for computer systems failure prediction.

-

Time series event prediction. We refer to time series event [43, 45] as a notable variation in the time series values that characterizes the occurrence of the target event under study. In this special case, researchers analyze variations, mainly trends, in time series data, preceding the time series event and deduce temporal patterns that can be used for prediction.

Approaches Dealing with Multivariate Time Series. Most works under this category deduce temporal patterns from multiple time series followed by clustering or classification of these patterns in order to deduce future events [8, 41]. These approaches adopt the same strategy as with time series event prediction but they are more adapted to the complex aspect of multivariate time series.

Event Oriented Approaches. When the available data is a collection of events, event prediction strategy follows a different path, where the central focus becomes the chronological interrelations between events data and a special target event, the latter can a simple or complex event. In what follows, we will detail two cases: the first is event sequence identification, which is adapted for simple events, and the second is complex event processing which is adapted to complex events.

Event Sequence Identification. Within event sequence identification, we consider a set L of secondary events \(\{e_l^s\}\) with \(l\in [1..L]\) (see Fig. 5). These events are events occurring around the target event and can be used to predict the target event. The secondary event \(e_l^s\) occurrences are denoted by \(\{e_{lz}^s\} \)with \(z\in [1..Z_l]\); \(Z_l\) the total number of occurrences for the secondary event with index l. The secondary event \(e_l^s\) is also described by a set of K variables \(\{X_k\}\) with \(k\in [1..K]\) (the authors in [59] described these variables as a set feature value pairs). All events set \(\{e_{lz}^s \}\) (with \(l\in [1..L]\) and \(z\in [1..Z_l]\)), in addition to \(\{e_i^t\}\) (with \(i\in [1..n]\)), are considered over a common time interval T and temporal sequences are deduced. These events and the corresponding variables describing each event occurrence represent the data format for event sequence identification approaches.

This category of approaches is mainly used in online system failure prediction [34, 49] where secondary events are identified from computer log files.

Complex Event Processing. A complex event is a sequence \({<}e_1,\ldots ,e_m{>}\) of different simple events chronologically related. The CEP aim at detecting complex events on the basis of an event space as a dataset. The CEP solutions has been applied successfully to predict heart failures [36], where simple events like symptoms are detected and hence an alert predicting the heart stroke (complex event) is enabled. Other applications include computer system failure prediction [6], Internet of things failure prediction [56] and bad traffic prediction [1].

5 Hybrid Approaches to Event Prediction

The working principle of hybrid approaches is given in Fig. 6. As shown in this figure, hybrid approaches combine steps from generative and inferential approaches. The starting point is both available historical data (like inferential approaches) and knowledge about system generating the event (like generative approaches). The outputs of these two parallel steps are combined into a general model. The next step consists in model calibration and validation against real-world datasets (similarly to generative approaches). Finally, simulation of the future states of the system is performed and the predicted outcome is deduced.

This category can be further subdivided into two sub-groups:

-

Scenario based approaches. These approaches construct a mathematical model of the system generating the target event. Then, they vary the model input data according to different possible scenarios extracted from the historical data records. The various outputs of the model represent all possible results. Scenario based approaches are often seen as solution to uncertainty issues [28]. A classic example of scenario based approaches is ensemble streamflow prediction [23], which is mainly used in flood prediction.

-

Mixed data models approaches. These approaches combine models constructed from observed data with models based on physics laws. Hence they employ generative and inferential modeling techniques. The authors in [30] design this type of approaches by ’conceptual models’. Generally, generative models involve a considerable amount of historical data, so inferential models, such as time series modelling techniques, are used to generate the required input data.

6 Discussion

Table 1 provides some examples illustrating the application of discussed categories of methods in different application domains. This table shows that some approaches are devoted to some specific event types. For example, hybrid approaches are widely applied in hydrology, especially for flood prediction. Event sequence identification is mainly applied for computer system failure prediction. This is due to the availability of secondary events through system logs. Multi-agent simulation requires strong knowledge in computer science and may be too complex for non-specialists. This explains its application for restrained fields. Qualitative prediction approaches are well adapted to predict low risk related events such as in financial context. However, they can be unreliable for major events such as floods and earthquakes.

The approaches depicted in the taxonomy have several drawbacks. For instance, generative and hybrid approaches fail to produce a model that generates exactly the real-world outcomes of the studied systems [38]. Dynamical system modeling approaches perform the prediction under the assumption that the system generating the event is deterministic. However errors due to the incomplete modeling of the system make this assumption very strong in some cases. For instance, earthquake prediction studies until now fail to model the dynamics of tectonic plaques accurately [39].

Within inferential approaches, the authors in [25] argue that in event frequency analysis, fitting event characteristic variables to a known probability law can lead to inaccurate results [25]. The predictive ability of event frequency analysis approaches is relatively limited but they can be used to assist the prediction process by analysing the studied phenomenon. In addition, the authors in [2] remark that inferential approaches fail to analyze the data holistically and they mainly focus on a truncated aspect of the data. In addition, they fail to take into account the system dynamics and interactions between variables [2]. At this level, one should observe that classification approaches can take into account interactions between variables. Furthermore stream mining models, prepared to deal with concept drift, can address system dynamics by evolving the machine learning model.

The authors in [2] advocate that qualitative approaches are more effective if they are combined with quantitative analysis, since a holistic consideration of event context, its dynamics but also temporal evolution by experts may overcome the restrictive view of data by quantitative approaches. The advantage of classification based approaches as a prediction technique is the availability of a range of proven tools and computing packages, making its application open to large public. However, classification based approaches fail to handle uncertainty in data and do not take into account preferences in input variables. In addition, classification based approaches neglect time dimension, as stated in [5]. Furthermore, the approximate time to event occurrence defined by [59] as lead time can be inaccurately estimated, which is a drawback for risk prevention procedures, especially concerning major events such as floods and earthquakes. At this level, we should mention that the proposal of [26] presents some solutions to address this issue by using composed labels having the form (Event, Time) for predicating event occurrence time and (Event,Intensity,Time) for predicating event occurrence time and intensity.

An interesting approach to reduce the effect of these shortcomings is to combine classification and pattern identification techniques, as suggested by [5]. In this respect, the authors in [19, 20] combine self-organising maps and temporal patterns to predict firms failure. More specifically, the authors in [20] use the term ‘failure trajectory’ to refer to temporal patterns representing the firm health over time while the author in [19] uses the term ‘failure process’ as a temporal pattern, and refers to it as a typology of firm behaviour over time. In both cases the patterns are used to classify firms; hence predict business failure event. More recently, the authors in [26] introduce rough set based classification techniques with an explicit support of temporal patterns identification.

7 Conclusion

This paper introduces a taxonomy of event prediction approaches. It represents a generic view of event prediction approaches that oversteps the problem considered and application domain. The proposed taxonomy has several practical and theoretical benefits. First, it extends the application domain of existing and new event prediction approaches. Second, opens road for designing and developing more advanced and context-independent techniques. Third, it helps users in selecting the appropriate approach to use in a given problem.

Several points need to be investigated in the future. First, the proposed taxonomy is far from exhaustive. We then intend to extend the present work by considering additional application domains and event types. Second, several event prediction approaches can be used for the same event type. Then, it would be interesting to design a generic guideline or some rules permitting to select the event prediction method to be used in a given problem, which will reduce the cognitive effort required from the expert.

References

Akbar, A., Khan, A., Carrez, F., Moessner, K.: Predictive analytics for complex IoT data streams. IEEE Internet Things J. 4(5), 1571–1582 (2017)

Alaka, H., Oyedele, L., Owolabi, H., Ajayi, S., Bilal, M., Akinade, O.: Methodological approach of construction business failure prediction studies: a review. Constr. Manag. Econ. 34(11), 808–842 (2016)

Austin, P., Lee, D., Steyerberg, E., Tu, J.: Regression trees for predicting mortality in patients with cardiovascular disease: what improvement is achieved by using ensemble-based methods? Biometrical J. 54(5), 657–673 (2012)

Aydin, I., Karakose, M., Akin, E.: The prediction algorithm based on fuzzy logic using time series data mining method. World Acad. Sci. Eng. Technol. 51(27), 91–98 (2009)

Balcaen, S., Ooghe, H.: 35 years of studies on business failure: an overview of the classic statistical methodologies and their related problems. Br. Acc. Rev. 38, 63–93 (2006)

Baldoni, R., Montanari, L., Rizzuto, M.: On-line failure prediction in safety-critical systems. Future Gener. Comput. Syst. 45, 123–132 (2015)

Barbot, S., Lapusta, N., Avouac, J.P.: Under the hood of the earthquake machine: toward predictive modeling of the seismic cycle. Science 336(6082), 707–710 (2012)

Batal, I., Cooper, G., Fradkin, D., Harrison Jr., J., Moerchen, F., Hauskrecht, M.: An efficient pattern mining approach for event detection in multivariate temporal data. Knowl. Inf. Syst. 46(1), 115–150 (2015)

Bergstrom, S.: Development and application of a conceptual runoff model for Scandinavian catchments. Techncial report, SMHI RHO 7 (1976)

Blazkov, S., Beven, K.: Flood frequency prediction for data limited catchments in the Czech Republic using a stochastic rainfall model and topmodel. J. Hydrol. 195(1–4), 256–278 (1997)

Bosse, T., Sharpanskykh, A., Treur, J.: Integrating agent models and dynamical systems. In: Baldoni, M., Son, T.C., van Riemsdijk, M.B., Winikoff, M. (eds.) DALT 2007. LNCS (LNAI), vol. 4897, pp. 50–68. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-77564-5_4

Brunner, G.: HEC-RAS river analysis system hydraulic reference manual. version 5.0. Technical report, Hydrologic Engineering Center, Davis, CA (2016)

Cabedo, J., Tirado, J.: Rough sets and discriminant analysis techniques for business default forecasting. Fuzzy Econ. Rev. 20(1), 3–37 (2015)

Casulli, V., Stelling, G.: Numerical simulation of 3D quasi-hydrostatic, free-surface flows. J. Hydraul. Eng. 124(7), 678–686 (1998)

Cheng, M.Y., Hoang, N.D.: Evaluating contractor financial status using a hybrid fuzzy instance based classifier: case study in the construction industry. IEEE Trans. Eng. Manag. 62(2), 184–192 (2015)

Damle, C., Yalcin, A.: Flood prediction using time series data mining. J. Hydrol. 333, 305–316 (2006)

Denny, M., Hunt, L., Miller, L., Harley, C.: On the prediction of extreme ecological events. Ecol. Monogr. 93(3), 397–421 (2009)

Dodig-Crnkovic, G., Giovagnoli, R.: Computing nature-a network of networks of concurrent information processes. In: Dodig-Crnkovic, G., Giovagnoli, R. (eds.) Computing Nature, vol. 7, pp. 1–22. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-37225-4_1

du Jardin, P.: Bankruptcy prediction using terminal failure processes. Eur. J. Oper. Res. 242(1), 286–303 (2015)

du Jardin, P., Séverin, E.: Predicting corporate bankruptcy using a self-organizing map: an empirical study to improve the forecasting horizon of a financial failure model. Decis. Support Syst. 51(3), 701–711 (2011)

Epstein, J.: Generative Social Science: Studies in Agent-Based Computational Modeling. Princeton University Press, Princeton (2006)

Florido, E., Martínez-Álvarez, F., Morales-Esteban, A., Reyes, J., Aznarte-Mellado, J.: Detecting precursory patterns to enhance earthquake prediction in Chile. Comput. Geosci. 76, 112–120 (2015)

Franz, K., Hartmann, H., Sorooshian, S., Bales, R.: Verification of national weather service ensemble streamflow predictions for water supply forecasting in the colorado river basin. J. Hydrometeorol. 4(6), 1105–1118 (2003)

Fülöp, L., Beszédes, A., Tóth, G., Demeter, H., Vidács, L., Farkas, L.: Predictive complex event processing: a conceptual framework for combining complex event processing and predictive analytics. In: Proceedings of the Fifth Balkan Conference in Informatics, BCI 2012, pp. 26–31. ACM, New York (2012)

Ghil, M., et al.: Extreme events: dynamics, statistics and prediction. Nonlinear Process. Geophys. 18, 295–350 (2011)

Gmati, F.E., Chakhar, S., Lajoued Chaari, W., Chen, H.: A rough set approach to events prediction in multiple time series. In: Mouhoub, M., Sadaoui, S., Ait Mohamed, O., Ali, M. (eds.) IEA/AIE 2018. LNCS (LNAI), vol. 10868, pp. 796–807. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-92058-0_77

Hamerly, G., Elkan, C.: Bayesian approaches to failure prediction for disk drives. In: Proceedings of the Eighteenth International Conference on Machine Learning, ICML 2001, pp. 202–209. Morgan Kaufmann Publishers Inc., San Francisco (2001)

Hull, T.: A deterministic scenario approach to risk management. In: Enterprise Risk Management Symposium, Society of Actuaries, April edn, Chicago, IL, pp. 1–7 (2010)

Iturriaga, F., Sanz, I.: Bankruptcy visualization and prediction using neural networks: a study of U.S. commercial banks. Expert Syst. Appl. 42(6), 2857–2869 (2015)

Devia, G.K., Ganasri, B., Dwarakish, G.: A review on hydrological models. Aquatic Procedia 4, 1001–1007 (2015)

Li, Y., Lawley, M.A., Siscovick, D.S., Zhang, D., Pagán, J.A.: Agent-based modeling of chronic diseases: a narrative review and future research directions. Preventing Chronic Dis. 13 (2016). https://doi.org/10.5888/pcd13.150561

Lin, W.Y., Hu, Y., Tsai, C.F.: Machine learning in financial crisis prediction: a survey. IEEE Trans. Syst. Man Cybern. 42(4), 421–436 (2012)

Hopson, T.M., Webster, P.: A 1–10-day ensemble forecasting scheme for the major river basins of Bangladesh: forecasting severe floods of 2003–07. J. Hydrometeorol. 11(3), 618–641 (2010)

Mannila, H., Toivonen, H., Verkamo, A.I.: Discovery of frequent episodes in event sequences. Data Min. Knowl. Discov. 1(3), 259–289 (1997)

Martínez-Álvarez, F., Troncoso, A., Morales-Esteban, A., Riquelme, J.: Computational intelligence techniques for predicting earthquakes. In: International Conference on Hybrid Artificial Intelligence Systems, pp. 287–294 (2011)

Mdhaffar, A., Rodriguez, I., Charfi, K., Abid, L., Freisleben, B.: CEP4HFP: complex event processing for heart failure prediction. IEEE Trans. NanoBiosci. 16(8), 708–717 (2017)

Merkuryeva, G., Merkuryev, Y., Sokolov, B., Potryasaev, S., Zelentsov, V., Lektauers, A.: Advanced river flood monitoring, modelling and forecasting. J. Comput. Sci. 10, 77–85 (2014)

Meyers, R.: Extreme Environmental Events: Complexity in Forecasting and Early Warning. Springer, New York (2010)

Mitsa, T.: Temporal Data Mining. CRC Press, Boca Raton (2010)

Morales-Esteban, A., Martínez-Álvarez, F., Troncoso, A., Justo, J., Rubio-Escudero, C.: Pattern recognition to forecast seismic time series. Expert Syst. Appl. 37, 8333–8342 (2010)

Morchen, F., Ultsch, A.: Discovering temporal knowledge in multivariate time series. In: Weihs, C., Gaul, W. (eds.) Classification - The Ubiquitous Challenge, pp. 272–279. Springer, Heidelberg (2005). https://doi.org/10.1007/3-540-28084-7_30

Nwogugu, M.: Decision-making, risk and corporate governance: new dynamic models/algorithms and optimization for bankruptcy decisions. Appl. Math. Comput. 179(1), 386–401 (2006)

Povinelli, R.: Time series data mining: identifying temporal patterns for characterization and prediction of time series events. Ph.D. thesis, Marquette University, Milwaukee, WI (1999)

Povinelli, R.J.: Identifying temporal patterns for characterization and prediction of financial time series events. In: Roddick, J.F., Hornsby, K. (eds.) TSDM 2000. LNCS (LNAI), vol. 2007, pp. 46–61. Springer, Heidelberg (2001). https://doi.org/10.1007/3-540-45244-3_5

Povinelli, R., Feng, X.: A new temporal pattern identification method for characterization and prediction of complex time series events. IEEE Trans. Knowl. Data Eng. 15(2), 339–352 (2003)

Preston, D., Protopapas, P., Brodley, C.: Event discovery in time series. In: Apte, C., Park, H., Wang, K., Zaki, M. (eds.) Proceedings of the 2009 SIAM International Conference on Data Mining, pp. 61–72. SIAM (2009). https://doi.org/10.1137/1.9781611972795.6

Rafiei, M., Adeli, H.: NEEWS: a novel earthquake early warning model using neural dynamic classification and neural dynamic optimization. Soil Dyn. Earthq. Eng. 100, 417–427 (2017)

Razmi, A., Golian, S., Zahmatkesh, Z.: Non-stationary frequency analysis of extreme water level: application of annual maximum series and peak-over threshold approaches. Water Resour. Manag. 31(7), 2065–2083 (2017)

Sahoo, R., et al.: Critical event prediction for proactive management in large-scale computer clusters. In: Proceedings of the Ninth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 426–435. ACM, New York (2003)

Samuel, O., Grace, G., Sangaiah, A., Fang, P., Li, G.: An integrated decision support system based on ANN and Fuzzy\(\_\)AHP for heart failure risk prediction. Expert Syst. Appl. 68, 163–172 (2017)

Tak-chung, F.: A review on time series data mining. Eng. Appl. Artif. Intell. 24(1), 164–181 (2011)

Tamari, M.: Financial ratios as a means of forecasting bankruptcy. Manag. Int. Rev. 6(4), 15–21 (1966)

Thielen, J., Bartholmes, J., Ramos, M.H., de Roo, A.: The European flood alert system-part 1: concept and development. Hydrol. Earth Syst. Sci. 13(2), 125–140 (2009)

Vilalta, R., Apte, C., Hellerstein, J., Ma, S., Weiss, S.: Predictive algorithms in the management of computer systems. IBM Syst. J. 41(3), 461–474 (2002)

Vrugt, J., ter Braak, C., Clark, M., Hyman, J.M., Robinson, B.: Treatment of input uncertainty in hydrologic modeling: doing hydrology backward with Markov chain Monte Carlo simulation. Water Resour. Res. 44(12) (2008). https://doi.org/10.1029/2007WR006720

Wang, C., Vo, H., Ni, P.: An IoT application for fault diagnosis and prediction. In: 2015 IEEE International Conference on Data Science and Data Intensive Systems, pp. 726–731. IEEE (2015)

Wang, S.: Online monitoring and prediction of complex time series events from nonstationary time series data. Ph.D. thesis, Rutgers University-Graduate School-New Brunswick (2012)

Wang, Y., Gao, H., Chen, G.: Predictive complex event processing based on evolving Bayesian networks. Pattern Recogn. Lett. 105, 207–216 (2018)

Weiss, G., Hirsh, H.: Learning to predict rare events in categorical time-series data. Techncal report, AAAI (1998). www.aaai.org

Yan, X.B., Lu, T., Li, Y.J., Cui, G.B.: Research on event prediction in time-series data. In: Proceedings of International Conference on Machine Learning and Cybernetics, Shanghai, vol. 5, pp. 2874–2878, August 2004

Yue, S., Ouarda, T., Bobee, B., Legendre, P., Bruneau, P.: The Gumbel mixed model for flood frequency analysis. J. Hydrol. 226, 88–100 (1999)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Gmati, F.E., Chakhar, S., Chaari, W.L., Xu, M. (2019). A Taxonomy of Event Prediction Methods. In: Wotawa, F., Friedrich, G., Pill, I., Koitz-Hristov, R., Ali, M. (eds) Advances and Trends in Artificial Intelligence. From Theory to Practice. IEA/AIE 2019. Lecture Notes in Computer Science(), vol 11606. Springer, Cham. https://doi.org/10.1007/978-3-030-22999-3_2

Download citation

DOI: https://doi.org/10.1007/978-3-030-22999-3_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22998-6

Online ISBN: 978-3-030-22999-3

eBook Packages: Computer ScienceComputer Science (R0)