Abstract

A key 2005 collection of papers (Royer 2008) showed how complex the study of mathematical cognition (MC) had already become by the early 2000s, incorporating a broad range of scientific, educational, and humanistic perspectives into its modus operandi. Studies published in the journal Mathematical Cognition have also revealed how truly expansive the field is, bringing together researchers and scholars from diverse disciplines, from neuroscience to semiotics. This volume has aimed to provide a contemporary snapshot of how the study of MC is developing. The objective of this final chapter is to provide a selective overview of past approaches as a concluding historical assessment.

Introduction

The interdisciplinary study of MC became a concrete plan of action after the publication of Lakoff and Núñez’s 2000 book, Where Mathematics Comes From, which followed closely on intriguing works by Dehaene (1997) and Butterworth (1999). Lakoff and Núñez argued that MC is no different neurologically from linguistic cognition, since both involve blending information from different parts of the brain to produce concepts. This is why we use language to learn math. The most salient manifestation of blending in both linguistic and mathematical cognition is metaphor (as studies in this volume have shown). If metaphor is indeed at the core of MC, then it brings mathematics directly into the sphere of language and culture, where it is shaped symbolically and textually. The American philosopher Max Black reached the same conclusion in his groundbreaking 1962 book, Models and Metaphors, in which he argued that science and mathematics spring from the same kind of metaphorical thinking that characterizes discourse. With this idea, radical for its era, Black indirectly laid the foundations for a humanistic-linguistic study of MC.

The interdisciplinary study of MC has produced a huge database of findings, theories, and insights into how mathematics intersects with other neural faculties such as language and drawing. The field has not just produced significant findings about how math is processed in the brain, but also reopened long-standing philosophical debates about the nature of mathematics. This chapter first discusses a general characterization of MC that extends the classic views. It then selectively discusses various works and findings that can be used to determine whether math is separate from language, neurologically and cognitively. Finally, on the basis of these findings, it revisits the Platonist-versus-constructivist debate, which is intrinsically a cognitive debate.

Mathematical Cognition: A Selective Historical Survey

Mathematical cognition is defined in two main ways—first, it is defined as the awareness of structural patterns among quantitative and spatial concepts; second, it is defined as the awareness of how symbols stand for these concepts and how they encode them (for example, Radford 2010).

A historical point of departure for investigating MC is Immanuel Kant’s (1790: 278) assertion that thinking mathematically involves “combining and comparing given concepts of magnitudes, which are clear and certain, with a view to establishing what can be inferred from them.” He argued further that the combination and comparison become explicit through the “visible signs” that we use to represent them—thus integrating the two definitions above predictively. So, a diagram of a triangle (a visible sign) compared to that of a square (another visible sign) will show the differentiation between the two concretely. As trivial as Kant’s definition might seem, upon further consideration it is obvious that the kind of visualization that he describes now falls under the rubric of spatial cognition, and the claim that visible signs guide it is consistent with various psychological and semiotic theories of MC (see, for example, Stjernfelt 2007, Danesi 2013). As we know today, mental visualization stems from the brain’s ability to synthesize scattered bits of information into holistic entities that can be understood consciously through representations such as diagrams.

Kant’s main idea that diagrams reveal thought patterns was given a semiotic-theoretical formulation by Charles Peirce’s existential graph theory (Peirce 1931–1958, vol. 2: 398–433, vol. 4: 347–584). An existential graph is a diagram that displays how the parts of some concept are visualized as related to each other. For example, a Venn diagram can be used to show how sets are related to each other in a holistic visual way. Such graphs do not portray information directly, but rather the process of thinking about it (Peirce, vol. 4: 6). Peirce called his existential graphs “moving pictures of thought” (Peirce, vol. 4: 8–11). As Kiryuschenko (2012: 122) has aptly observed, for Peirce “graphic language allows us to experience a meaning visually as a set of transitional states, where the meaning is accessible in its entirety at any given ‘here and now’ during its transformation.”

The gist of the foregoing discussion is that diagrams and visual signs might mirror the nature of MC itself—an idea that has been examined empirically in abundance (Shin 1994; Chandrasekaran et al. 1995; Hammer 1995; Hammer and Shin 1996, 1998; Allwein and Barwise 1996; Barker-Plummer and Bailin 1997, 2001; Kulpa 2004; Stjernfelt 2007; Roberts 2009). The main implication is that the study of MC must take semiotic notions, such as those by Peirce, into account in order to better explain the findings of neuroscientists in this domain. In effect, diagrams represent our intuitions about quantity, space, and relations in a visually expressive way that appears to mirror the actual imagery in the brain, or more specifically what Lakoff and Johnson (1980) call image schemata—mental outlines of abstractions. The intuitions are probably universal (first type of definition); the visual representations, which include numerals, are products of historical processes (second type of definition).

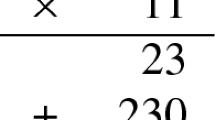

Algebraic notation, too, is a diagrammatic strategy for compressing information, much like pictography does in reproducing referents in compressed semiotic forms (Danesi and Bockarova 2013). An equation is an existential graph consisting of signs (letters, numbers, symbols) organized in such a way as to reflect the structure of events that it aims to represent. It may show that some parts are tied to a strict order, whereas others may be unconstrained as to sequential structure. As Kauffman (2001: 80) observes, Peirce’s existential graphs contain arithmetical information in an economical form:

Peirce’s Existential Graphs are an economical way to write first order logic in diagrams on a plane, by using a combination of alphabetical symbols and circles and ovals. Existential graphs grow from these beginnings and become a well-formed two dimensional algebra. It is a calculus about the properties of the distinction made by any circle or oval in the plane, and by abduction it is about the properties of any distinction.

An equation such as the Pythagorean one ($c^2 = a^2 + b^2$) is an existential graph, since it is a visual representation of the relations among the variables (originally standing for the sides of a right triangle). But, being a graph, it also tells us that the parts relate to each other in many ways other than in terms of the initial triangle referent. It reveals hidden structure, such as the fact that there are infinitely many Pythagorean triples, or sets of three positive integers that satisfy the equation. Were the relation expressed only in language (“the square on the hypotenuse is equal to the sum of the squares on the other two sides”), we would literally not be able to see this hidden implication. Once the equation exists as a graph, it becomes the source for further inferences and insights. As is well known, it gave rise to the conjecture known as Fermat’s Last Theorem, which states that the general formula $c^n = a^n + b^n$ has no solutions in positive integers for any integer $n > 2$ (Taylor and Wiles 1995). This, in turn, has led to many other discoveries (Danesi 2013). To use Susanne Langer’s (1948) distinction between discursive and presentational cognition, the equation tells us much more than the statement (a discursive act) because it “presents” inherent structure holistically, as an abstract form. We do not read a diagram, a melody, or an equation as individual bits and pieces (notes, shapes, symbols), but presentationally, as a totality which encloses and reveals much more meaning. Mathematical notation is visually presentational, which, as research has shown, may be the source for how abstract ideas emerge (Barwise and Etchemendy 1994; Allwein and Barwise 1996; Cummins 1996; Chandrasekaran et al. 1995).
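
The claim that the Pythagorean equation admits infinitely many integer triples can be illustrated with a short sketch. It relies on Euclid's classical parametrization, which is not discussed in the text and is brought in here only as an illustration; the function name `euclid_triples` is hypothetical.

```python
from math import gcd

def euclid_triples(limit):
    """Yield primitive Pythagorean triples (a, b, c) for every m up to `limit`.

    Euclid's formula (an assumption of this sketch, not taken from the chapter):
    for coprime integers m > n > 0 of opposite parity,
    a = m^2 - n^2, b = 2mn, c = m^2 + n^2 satisfy a^2 + b^2 = c^2.
    """
    for m in range(2, limit + 1):
        for n in range(1, m):
            if (m - n) % 2 == 1 and gcd(m, n) == 1:
                yield (m * m - n * n, 2 * m * n, m * m + n * n)

triples = list(euclid_triples(4))

# Every generated triple satisfies the Pythagorean equation.
assert all(a * a + b * b == c * c for a, b, c in triples)

print(triples)  # [(3, 4, 5), (5, 12, 13), (15, 8, 17), (7, 24, 25)]
```

Because `limit` can be raised without bound, the supply of triples never runs out, which is one concrete reading of the "hidden structure" that the equation makes visible and the verbal statement does not.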

Needless to say, mathematicians have always used diagrams to carry out their craft. Some diagrammatic practices, such as Cartesian geometry, become actual fields of mathematics in themselves; set theory, for example, is an ipso facto theory of mathematics, based on Venn diagrams (1880, 1881) which were introduced so that mathematicians could literally see the logical implications of mathematical patterns and laws. These are, as mentioned, externalized image schemata (Lakoff and Johnson 1980, 1999; Lakoff 1987; Johnson 1987; Lakoff and Núñez 2000) which allow us to gain direct cognitive access to hidden structure in mathematical phenomena. Actually, the shift from sentential logic to diagram logic started with Euler, who was the first to represent categorical sentences as intersecting circles, embedded circles, and so on (Hammer and Shin 1996, 1998). It actually does not matter whether the schema is a circle, a square, a rectangle, or a freely drawn form; it is the way it portrays pattern that cuts across language (and languages) and allows us to envision a relation or concept in outline form. The power of the diagrams over sentences lies in the fact that no additional conventions, paraphrases, or elaborations are needed—the relationships holding among sets are shown by means of the same relationships holding among the schemata representing them. Euler was aware, however, of both the strengths and weaknesses of visual representation. For instance, in the statement “No A is B. Some C is A. Therefore, Some C is not B,” no single diagram can be envisioned to represent the two premises, because the relationship between sets B and C cannot be fully specified in one single diagram. Venn (1881: 510) tackled Euler’s dilemma by showing how partial information can be visualized (such as overlaps or intersections among circles). 
But Peirce pointed out that Venn’s system had no way of representing existential statements, disjunctive information, probabilities, and some specific kinds of logical relations. He argued that “All A are B or some A is B” cannot be represented by either the Euler or the Venn system in a single diagram.
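
Euler's difficulty with the syllogism "No A is B. Some C is A. Therefore, some C is not B" can be made concrete with a small brute-force check. The sketch below is only an illustration under stated assumptions: it models the terms as sets over a three-element universe, and the helper name `models` is hypothetical. It confirms that the conclusion holds in every situation satisfying the premises, while the relation between B and C stays open, which is why no single Euler diagram can depict the premises.

```python
from itertools import product

def models(universe_size=3):
    """Enumerate every way of placing each element in or out of A, B and C."""
    # Each element is described by a triple of booleans (in_A, in_B, in_C).
    memberships = list(product([False, True], repeat=3))
    for assignment in product(memberships, repeat=universe_size):
        A = {i for i, (a, _, _) in enumerate(assignment) if a}
        B = {i for i, (_, b, _) in enumerate(assignment) if b}
        C = {i for i, (_, _, c) in enumerate(assignment) if c}
        yield A, B, C

# Keep only the situations in which both premises are true:
# "No A is B" (A and B are disjoint) and "Some C is A" (C and A overlap).
satisfying = [(A, B, C) for A, B, C in models() if not (A & B) and (C & A)]

# "Some C is not B" means that C minus B is non-empty.
conclusion_always_holds = all(C - B for A, B, C in satisfying)

# The premises leave the B-C relation undetermined: both cases occur.
b_and_c_can_overlap = any(C & B for A, B, C in satisfying)
b_and_c_can_be_disjoint = any(not (C & B) for A, B, C in satisfying)

print(conclusion_always_holds, b_and_c_can_overlap, b_and_c_can_be_disjoint)
# True True True
```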

Among the first to investigate the relation between imagery and mathematical reasoning was Jean Piaget, who sought to understand the development of number sense in relation to symbolism (summarized in Piaget 1952). In one experiment, he showed a 5-year-old child two matching sets of six eggs placed in six separate egg cups. He then asked the child whether there were as many eggs as egg cups (or not)—the child usually replied in the affirmative. Piaget then took the eggs out of the cups, bunching them together, leaving the egg cups in place. He then asked the child whether or not all the eggs could be put into the cups, one in each cup and none left over. The child answered negatively. Asked to count both eggs and cups, the child would correctly say that there was the same amount. But when asked if there were as many eggs as cups, the child would again answer “no.” Piaget concluded that the child had not grasped the relational properties of numeration, which are not affected by changes in the positions of objects. Piaget showed, in effect, that 5-year-old children have not yet established in their minds the symbolic connection between numerals and number sense (Skemp 1971: 154).

A key study by Yancey et al. (1989) has shown that training students how to use visualization (diagrams, charts, etc.) to solve problems results in improved performance. As Musser, Burger, and Peterson (2006: 20) have aptly put it: “All students should represent, analyze, and generalize a variety of patterns with tables, graphs, words, and, when possible, symbolic rules.” Another study by Ambrose (2002) suggests, moreover, that students who are taught appropriately with concrete strategies, but not allowed to develop their own abstract representational grasp of arithmetic, are less likely to develop arithmetical fluency.

Is Math Cognition Species Specific?

The study of MC has led to a whole series of existential-philosophical questions. For example, intuitive number sense may be a cross-species faculty, but the use of symbols to represent numbers is a specifically human trait. As the philosopher Ernst Cassirer (1944) once put it, we are “a symbolic species,” incapable of establishing knowledge without symbols. So is math cognition specific to the human species?

Neuroscientist Brian Butterworth (1999) is well known for his investigation of this question. He starts with the premise that we all possess a fundamental number sense, which he calls “numerosity.” Numbers do not exist in the brain in the same way verbal signs such as words do; they constitute a separate kind of intelligence with its own brain module, located in the left parietal lobe. But this does not guarantee that mathematical competence will emerge homogeneously in all individuals. It is a phylogenetic trait that varies ontogenetically. The reason a person falters at math is thus not a “wrong gene” or “engine part” in the brain, but the fact that the individual has not fully developed numerosity, owing to environmental and personal psychological factors.

Finding hard evidence to explain why numerosity emerged in the course of human evolution is a difficult venture. Nevertheless, there is a growing body of research that is supportive of Butterworth’s basic thesis that number sense is instinctual and that it may be separate from language. In one study, Izard et al. (2011) looked at Euclidean concepts in an indigenous Amazonian society, called the Mundurucu. The team tested the hypothesis that certain aspects of non-perceptible Euclidean geometry map onto intuitions of space that are present in all humans (such as intuitions of points, lines, and surfaces), even in the absence of formal mathematical training. The subjects included adult and age-matched child controls from the United States and France as well as younger American children without training in geometry. The responses of Mundurucu adults and children converged with those of mathematically educated adults and children and revealed an intuitive understanding of essential properties of Euclidean geometry. For instance, on a surface described to them as perfectly planar, the Mundurucu’s estimations of the internal angles of triangles added up to ∼180 degrees, and when asked explicitly they stated that there exists one single parallel line to any given line through a given point. These intuitions were also present in the group of younger American participants. The researchers concluded that, during childhood, humans develop geometrical intuitions that spontaneously accord with the principles of Euclidean geometry, even in the absence of training in such geometry. There is, however, contrary evidence suggesting that geometric notions are not innate, but subject to cultural influences (Núñez et al. 1999). In one study, Lesh and Harel (2003) got students to develop their own models of a problem space, guided by prompts. Without the prompts, the students were incapable of coming up with such models. It might be that Euclidean notions are universal but are concretized in specific cultural ways. For now, there is no definitive answer to the issue one way or the other.

The emergence of abilities such as speaking and counting is a consequence of four critical evolutionary events: bipedalism, a brain enlargement unparalleled among species, an extraordinary capacity for toolmaking, and the advent of the tribe as a basic form of human collective life (Cartmill et al. 1986). Bipedalism liberated the fingers to count and gesture. Although other species, including some non-primate ones, are capable of tool use, only in the human species did complete bipedalism free the hand sufficiently to allow it to become a supremely sensitive and precise manipulator and grasper, thus permitting proficient toolmaking and tool use in the species. The neuro-paleontological evidence suggests that, shortly after becoming bipedal, the human species underwent rapid brain expansion. The large brain of modern-day Homo is more than double the size of that of early toolmakers. This increase was achieved by the process of neoteny, that is, by the prolongation of the juvenile stage of brain and skull development in neonates. Like most other species, humans have always lived in groups. Group life enhances survivability by providing a collective form of life. The early tribal collectivities have left evidence that gesture (as inscribed on surfaces through pictography) and counting skills occurred in tandem. This supports the co-development of language and numerosity that Lakoff and Núñez (2000) suggest is part of brain structure.

Keith Devlin (2000, 2005) entered the debate with the notion of an innate “math instinct.” If there is some innate capacity for mathematical thinking, which there must be, otherwise no one could do it, why does it vary so widely, both among individuals in a specific culture and across cultures? Devlin connects the math ability to language, since both are used by humans to model the world symbolically. But this then raises another question: Why, then, can we speak easily, but not do math so easily (in many cases)? The answer, according to Devlin, is that we can and do, but we do not recognize that we are doing math when we do it. As he argues, our prehistoric ancestors’ brains were essentially the same as ours, so they must have had the same underlying abilities. But those brains could hardly have imagined how to multiply 15 by 36 or prove Fermat’s Last Theorem.

One can argue that there are four orders involved in learning how to go from counting to, say, equations. The first is the instinctive ability itself to count. This is probably innate. Using signs to stand for counting constitutes a second order. It is the level at which counting concepts are represented by numeral symbols. The third order is the level at which numerals are organized into a code of operations based on counting processes (adding, taking away, comparing, dividing, and so on). Finally, a fourth order inheres in the capacity to generalize the features and patterns of counting and numeral representations. This is where representations such as equations come into the developmental-evolutionary picture.

Stanislas Dehaene’s (1997) work brings forth experimental evidence to suggest that the human brain and that of some chimps come with a wired-in aptitude for math. The difference in the case of the latter is an inability to formalize this innate knowledge and then use it for invention and discovery. Dehaene has catalogued evidence that rats, pigeons, raccoons, and chimpanzees can perform simple calculations, describing ingenious experiments that show that human infants also show a parallel manifestation of number sense. This rudimentary number sense is as basic to the way the brain understands the world as is the perception of color. But how then did the brain leap from this ability to trigonometry, calculus, and beyond? Dehaene shows that it was the invention of symbolic systems that started us on the climb to higher mathematics. He argues this by tracing the history of numbers, from early times when people indicated a number by pointing to a part of their body (even today, in many societies in New Guinea, the word for six is “wrist”), to early abstract numbers such as Roman numerals (chosen for the ease with which they could be carved into wooden sticks), to modern numerals and number systems. Dehaene argues, finally, that the human brain does not work like a computer, and that the physical world is not based on mathematics—rather, mathematics evolved to explain the physical world the way that the eye evolved to provide sight.

Studies inspired by both Butterworth’s and Dehaene’s ideas have become widespread in MC circles (for example, Ardila and Rosselli 2002; Dehaene 2004; Isaacs et al. 2001; Dehaene et al. 2003; Butterworth et al. 2011). Dehaene (1997) himself showed that when a rat is trained to press a bar 8 or 16 times to receive a food reward, the number of bar presses will approximate a Gaussian distribution with peak around 8 or 16 bar presses. When rats are more hungry, their bar-pressing behavior is more rapid, so by showing that the peak number of bar presses is the same for either well-fed or hungry rats, it is possible to disentangle time from number of bar presses. Similarly, researchers have set up hidden speakers in the African savannah to test natural (untrained) behavior in lions (McComb et al. 1994). The speakers play a number of lion calls, from 1 to 5. If a single lioness hears, for example, three calls from unknown lions, she will leave, but if she is with four of her sisters, they will go and explore. This suggests that not only can lions tell when they are “outnumbered” but also that they can do this on the basis of signals from different sensory modalities, suggesting that numerosity involves a multisensory neural substratum.

Blending Theory

As mentioned above, the study of MC started proliferating and diversifying after Lakoff and Núñez (2000) claimed that the proofs and theorems of mathematics are arrived at via the same cognitive mechanisms that underlie language—analogy, metaphor, and metonymy. This claim has been largely substantiated with neurological techniques such as fMRI and other scanning devices, which have led to adopting the notion of blending, whereby concepts in the brain are sensed as “informing” each other in a common neural substrate (Fauconnier and Turner 2002). Determining the characteristics of this substrate is an ongoing goal of research on MC (Danesi 2016).

Blending can be used, for example, to explain negative numbers. These are derived from two basic metaphors, which Lakoff and Núñez call grounding and linking. Grounding metaphors encode basic ideas, being directly “grounded” in experience. For example, addition develops from the experience of counting objects and then inserting them in a collection. Linking metaphors connect concepts within mathematics that may or may not be based on physical experiences. Some examples of this are the number line, inequalities, and absolute value properties within an epsilon-delta proof of limit. Linking metaphors are the source of negative numbers, which emerge from a connective form of reasoning within the system of mathematics. They are linkage blends, as Alexander (2012: 28) elaborates:

Using the natural numbers, we made a much bigger set, way too big in fact. So we judiciously collapsed the bigger set down. In this way, we collapse down to our original set of natural numbers, but we also picked up a whole new set of numbers, which we call the negative numbers, along with arithmetic operations, addition, multiplication, subtraction. And there is our payoff. With negative numbers, subtraction is always possible. This is but one example, but in it we can see a larger, and quite important, issue of cognition. The larger set of numbers, positive and negative, is a cognitive blend in mathematics … The numbers, now enlarged to include negative numbers, become an entity with its own identity. The collapse in notation reflects this. One quickly abandons the (minuend, subtrahend) formulation, so that rather than (6, 8) one uses -2. This is an essential feature of a cognitive blend; something new has emerged.
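
The construction that Alexander describes can be sketched in code. The sketch assumes the standard textbook reading of the passage: an integer is represented as a (minuend, subtrahend) pair of natural numbers, and pairs standing for the same difference are "collapsed" into one canonical representative. The function names `normalize`, `add`, `subtract`, `multiply` and `to_signed` are hypothetical.

```python
def normalize(pair):
    """Collapse a (minuend, subtrahend) pair to its canonical representative."""
    minuend, subtrahend = pair
    common = min(minuend, subtrahend)
    return (minuend - common, subtrahend - common)

def add(p, q):
    """(a, b) + (c, d) = (a + c, b + d), using only natural-number addition."""
    return normalize((p[0] + q[0], p[1] + q[1]))

def subtract(p, q):
    """Subtracting (c, d) is adding (d, c), so subtraction is always possible."""
    return add(p, (q[1], q[0]))

def multiply(p, q):
    """(a, b) * (c, d) = (ac + bd, ad + bc)."""
    a, b = p
    c, d = q
    return normalize((a * c + b * d, a * d + b * c))

def to_signed(pair):
    """The 'collapse in notation': write the pair as an ordinary signed number."""
    minuend, subtrahend = normalize(pair)
    return minuend - subtrahend

six, eight = (6, 0), (8, 0)
print(subtract(six, eight))             # (0, 2)
print(to_signed((6, 8)))                # -2
print(multiply((0, 2), (0, 3)))         # (6, 0)
```

In this reading the blend is visible in `to_signed`: the pair (6, 8) and the single symbol -2 name the same entity, and the pair notation can be dropped once the new numbers have an identity of their own.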

This kind of metaphorical (connective) thinking occurs because of gaps that are felt to inhere in the system. As Godino, Font, Wilhelmi, and Lurduy (2011: 250) cogently argue, notational systems are practical (experiential) solutions to the problem of counting:

As we have freedom to invent symbols and objects as a means to express the cardinality of sets, that is to say, to respond to the question, how many are there?, the collection of possible numeral systems is unlimited. In principle, any limitless collection of objects, whatever its nature may be, could be used as a numeral system: diverse cultures have used sets of little stones, or parts of the human body, etc., as numeral systems to solve this problem.

Fauconnier and Turner (2002) have proposed arguments along the same lines, giving substance to the notion that ideas in mathematics are based on blends deriving from experiences and associations within these experiences. Interestingly, the idea that metaphor plays a role in mathematical logic seems to have never been held seriously until very recently, even though, as Marcus (2012: 124) observes, mathematical terms are mainly metaphors:

For a long time, metaphor was considered incompatible with the requirements of rigor and preciseness of mathematics. This happened because it was seen only as a rhetorical device such as “this girl is a flower.” However, the largest part of mathematical terminology is the result of some metaphorical processes, using transfers from ordinary language. Mathematical terms such as function, union, inclusion, border, frontier, distance, bounded, open, closed, imaginary number, rational/irrational number are only a few examples in this respect. Similar metaphorical processes take place in the artificial component of the mathematical sign system.

Actually, already in the 1960s, a number of structuralist linguists prefigured blending theory, by suggesting that mathematics and language shared basic structural properties (Hockett 1967; Harris 1968). Their pioneering writings were essentially exploratory investigations of structural analogies between mathematics and language. They argued, for example, that both possessed the feature of double articulation (the use of a limited set of units to make complex forms ad infinitum), ordered rules for interrelating internal structures, among other things. Many interesting comparisons emerged from these writings, which contained an important subtext—by exploring the structures of mathematics and language in correlative ways, we might hit upon deeper points of contact and thus at a common cognitive origin for both. Those points find their articulation in the work of Lakoff and Núñez and others working within the blending paradigm. Mathematics makes sense when it encodes concepts that fit our experiences of the world—experiences of quantity, space, motion, force, change, mass, shape, probability, self-regulating processes, and so on. The inspiration for new mathematics comes from these experiences as it does for new language.

A classic example of this was Gödel’s famous proof, which Lakoff has argued (see Bockarova and Danesi 2012: 4–5) was inspired by Cantor’s diagonal method. As is well known, Gödel proved that within any consistent formal system rich enough to express arithmetic there are statements that can be neither proved nor disproved. Gödel found a statement in a set of statements that could be extracted by going through them in a diagonal fashion (now called Gödel’s diagonal lemma). That produced a statement, S, like Cantor’s C, that does not exist in the set of statements. Cantor’s diagonal and one-to-one matching proofs are mathematical metaphors, that is, associations linking different domains in a specific way (one-to-one correspondences). This insight led Gödel to envision three metaphors of his own: (1) the “Gödel number of a symbol,” which is evident in the argument that a symbol in a system is the corresponding number in the Cantorian one-to-one matching system (whereby any two sets of symbols can be put into a one-to-one relation); (2) the “Gödel number of a symbol in a sequence,” which is manifest in the demonstration that the nth symbol in a sequence is the nth prime raised to the power of the Gödel number of the symbol; and (3) “Gödel’s central metaphor,” which was Gödel’s proof that a symbol sequence is the product of the Gödel numbers of the symbols in the sequence.
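
The three metaphors can be illustrated with a minimal sketch of prime-power coding. The symbol table `SYMBOL_CODES` is hypothetical and chosen only for the example; it is not Gödel's own assignment. The nth symbol contributes the nth prime raised to the symbol's code, and the sequence as a whole is the product of those prime powers.

```python
# Hypothetical symbol-to-number table (metaphor 1); not Gödel's original coding.
SYMBOL_CODES = {"0": 1, "s": 2, "=": 3, "+": 4, "(": 5, ")": 6}

def primes():
    """Yield 2, 3, 5, ... by trial division (adequate for short sequences)."""
    found = []
    candidate = 2
    while True:
        if all(candidate % p for p in found):
            found.append(candidate)
            yield candidate
        candidate += 1

def godel_number(sequence):
    """Encode a symbol sequence as a product of prime powers (metaphors 2 and 3)."""
    number = 1
    for prime, symbol in zip(primes(), sequence):
        number *= prime ** SYMBOL_CODES[symbol]
    return number

def decode(number):
    """Recover the symbol sequence by reading off the exponent of each prime."""
    code_to_symbol = {code: symbol for symbol, code in SYMBOL_CODES.items()}
    symbols = []
    for prime in primes():
        if number == 1:
            break
        exponent = 0
        while number % prime == 0:
            number //= prime
            exponent += 1
        symbols.append(code_to_symbol[exponent])
    return "".join(symbols)

print(godel_number("0=0"))  # 2**1 * 3**3 * 5**1 = 270
print(decode(270))          # 0=0
```

Because prime factorization is unique, the number and the symbol sequence determine each other, which is what lets a statement about numbers double as a statement about formulas.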

The proof exemplifies how blending works. When the brain identifies two distinct entities in different neural regions as the same entity in a third neural region, they are blended together. Gödel’s metaphors come from neural circuits linking a number source to a symbol target. In each case, there is a blend, with a single entity composed of both a number and a symbol sequence. When the symbol sequence is a formal proof, a new mathematical entity appears—a “proof number.”

It is relevant to turn to the ideas of René Thom (1975, 2010), who called discoveries in mathematics “catastrophes,” that is, mental activities that subvert or overturn existing knowledge. He called the process “semiogenesis,” which he defined as the emergence of “pregnant” forms within symbol systems themselves, that is, as forms that emerge by happenstance through contemplation and manipulation of the previous forms. As this goes on, every so often, a catastrophe occurs that leads to new insights, disrupting the previous system. Discovery is indeed catastrophic, but why does the brain produce catastrophes in the first place? Perhaps the connection between the brain, the body, and the world is so intrinsic that the brain cannot really understand itself.

Epilogue: Selected Themes

The chapters of this book span the interdisciplinary scope of MC study, from the empirical to the educational and speculative, as well as examining aspects of mathematical method, such as proof, and what this tells us about the nature of MC. The objective has been twofold: to show how this line of inquiry can be enlarged profitably through an expanded pool of participating disciplines and to shed some new light on math cognition itself from within this pool. Only in this way can progress be made in grasping what math cognition truly is. Together, the chapters of this book constitute a mixture of views, findings, and theories that, when collated, do hopefully give us a better sense of what math cognition is.

References

Alexander, J. (2012). On the cognitive and semiotic structure of mathematics. In: M. Bockarova, M. Danesi, and R. Núñez (eds.), Semiotic and cognitive science essays on the nature of mathematics, pp. 1–34. Munich: Lincom Europa.

Allwein, G. and Barwise, J. (eds.) (1996). Logical reasoning with diagrams. Oxford: Oxford University Press.

Ambrose, C. (2002). Are we overemphasizing manipulatives in the primary grades to the detriment of girls? Teaching Children Mathematics 9: 16–21.

Ardila, A. and Rosselli, M. (2002). Acalculia and dyscalculia. Neuropsychology Review 12: 179–231.

Barker-Plummer, D. and Bailin, S. C. (1997). The role of diagrams in mathematical proofs. Machine Graphics and Vision 8: 25–58.

Barker-Plummer, D. and Bailin, S. C. (2001). On the practical semantics of mathematical diagrams. In: M. Anderson (ed.), Reasoning with diagrammatic representations. New York: Springer.

Barwise, J. and Etchemendy, J. (1994). Hyperproof. Stanford: CSLI Publications.

Black, M. (1962). Models and metaphors. Ithaca: Cornell University Press.

Bockarova, M., Danesi, M., and Núñez, R. (eds.) (2012). Semiotic and cognitive science essays on the nature of mathematics. Munich: Lincom Europa.

Butterworth, B., Varma, S., and Laurillard, D. (2011). Dyscalculia: From brain to education. Science 332: 1049–1053.

Butterworth, B. (1999). What counts: How every brain is hardwired for math. Michigan: Free Press.

Cartmill, M., Pilbeam, D., and Isaac, G. (1986). One hundred years of paleoanthropology. American Scientist 74: 410–420.

Cassirer, E. (1944). An essay on man. New Haven: Yale University Press.

Chandrasekaran, B., Glasgow, J., and Narayanan, N. H. (eds.) (1995). Diagrammatic reasoning: Cognitive and computational perspectives. Cambridge: MIT Press.

Cummins, R. (1996). Representations, targets, and attitudes. Cambridge: MIT Press.

Danesi, M. (2013). Discovery in mathematics: An interdisciplinary perspective. Munich: Lincom Europa.

Danesi, M. (2016). Language and mathematics: An interdisciplinary guide. Berlin: Mouton de Gruyter.

Danesi, M. and Bockarova, M. (2013). Mathematics as a modeling system. Tartu: University of Tartu Press.

Dehaene, S. (1997). The number sense: How the mind creates mathematics. Oxford: Oxford University Press.

Dehaene, S. (2004). Arithmetic and the brain. Current Opinion in Neurobiology 14: 218–224.

Dehaene, S., Piazza, M., Pinel, P., and Cohen, L. (2003). Three parietal circuits for number processing. Cognitive Neuropsychology 20: 487–506.

Devlin, K. J. (2000). The math gene: How mathematical thinking evolved and why numbers are like gossip. New York: Basic.

Devlin, K. J. (2005). The math instinct: Why you’re a mathematical genius (along with lobsters, birds, cats and dogs). New York: Thunder’s Mouth Press.

Fauconnier, G. and Turner, M. (2002). The way we think: Conceptual blending and the mind’s hidden complexities. New York: Basic.

Gödel, K. (1931). Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme, Teil I. Monatshefte für Mathematik und Physik 38: 173–189.

Godino, J. D., Font, V., Wilhelmi, R., and Lurduy, O. (2011). Why is the learning of elementary arithmetic concepts difficult? Semiotic tools for understanding the nature of mathematical objects. Educational Studies in Mathematics 77: 247–265.

Hammer, E. (1995). Reasoning with sentences and diagrams. Notre Dame Journal of Formal Logic 35: 73–87.

Hammer, E. and Shin, S. (1996). Euler and the role of visualization in logic. In: J. Seligman and D. Westerståhl (eds.), Logic, language and computation, Volume 1. Stanford: CSLI Publications.

Hammer, E. and Shin, S. (1998). Euler’s visual logic. History and Philosophy of Logic 19: 1–29.

Harris, Z. (1968). Mathematical structures of language. New York: John Wiley.

Hockett, C. F. (1967). Language, mathematics and linguistics. The Hague: Mouton.

Isaacs, E. B., Edmonds, C. J., Lucas, A., and Gadian, D. G. (2001). Calculation difficulties in children of very low birthweight: A neural correlate. Brain 124: 1701–1707.

Izard, V., Pica, P., Spelke, E. S., and Dehaene, S. (2011). Flexible intuitions of Euclidean geometry in an Amazonian indigene group. PNAS 108: 9782–9787.

Johnson, M. (1987). The body in the mind: The bodily basis of meaning, imagination and reason. Chicago: University of Chicago Press.

Kant, I. (1790). Critique of pure reason, trans. J. M. D. Meiklejohn. CreateSpace Platform.

Kauffman, L. K. (2001). The mathematics of Charles Sanders Peirce. Cybernetics & Human Knowing 8: 79–110.

Kiryushchenko, V. (2012). The visual and the virtual in theory, life and scientific practice: The case of Peirce’s quincuncial map projection. In: M. Bockarova, M. Danesi, and R. Núñez (eds.), Semiotic and cognitive science essays on the nature of mathematics. Munich: Lincom Europa.

Kulpa, Z. (2004). On diagrammatic representation of mathematical knowledge. In: A. Asperti, G. Bancerek, and A. Trybulec (eds.), Mathematical knowledge management. New York: Springer.

Lakoff, G. (1987). Women, fire and dangerous things: What categories reveal about the mind. Chicago: University of Chicago Press.

Lakoff, G. and Johnson, M. (1980). Metaphors we live by. Chicago: University of Chicago Press.

Lakoff, G. and Johnson, M. (1999). Philosophy in the flesh: The embodied mind and its challenge to western thought. New York: Basic.

Lakoff, G. and Núñez, R. (2000). Where mathematics comes from: How the embodied mind brings mathematics into being. New York: Basic Books.

Langer, S. K. (1948). Philosophy in a new key. New York: Mentor Books.

Lesh, R. and Harel, G. (2003). Problem solving, modeling, and local conceptual development. Mathematical Thinking and Learning 5: 157.

Marcus, S. (2012). Mathematics between semiosis and cognition. In: M. Bockarova, M. Danesi, and R. Núñez (eds.), Semiotic and cognitive science essays on the nature of mathematics, pp. 98–182. Munich: Lincom Europa.

McComb, K., Packer, C., and Pusey, A. (1994). Roaring and numerical assessment in contests between groups of female lions, Panthera leo. Animal Behavior 47: 379–387.

Musser, G. L., Burger, W. F., and Peterson, B. E. (2006). Mathematics for elementary teachers: A contemporary approach. Hoboken: John Wiley.

Núñez, R., Edwards, L. D., and Matos, F. J. (1999). Embodied cognition as grounding for situatedness and context in mathematics education. Educational Studies in Mathematics 39: 45–65.

Peirce, C. S. (1931–1958). Collected papers of Charles Sanders Peirce, ed. by C. Hartshorne, P. Weiss, and A. W. Burks, vols. 1–8. Cambridge: Harvard University Press.

Piaget, J. (1952). The child’s conception of number. London: Routledge and Kegan Paul.

Radford, L. (2010). Algebraic thinking from a cultural semiotic perspective. Research in Mathematics Education 12: 1–19.

Roberts, D. D. (2009). The existential graphs of Charles S. Peirce. The Hague: Mouton.

Royer, J. (ed.) (2008). Mathematical cognition. Charlotte, NC: Information Age Publishing.

Shin, S. (1994). The logical status of diagrams. Cambridge: Cambridge University Press.

Skemp, R. R. (1971). The psychology of learning mathematics. Harmondsworth: Penguin.

Stjernfelt, F. (2007). Diagrammatology: An investigation on the borderlines of phenomenology, ontology, and semiotics. New York: Springer.

Taylor, R. and Wiles, A. (1995). Ring-theoretic properties of certain Hecke algebras. Annals of Mathematics 141: 553–572.

Thom, R. (1975). Structural stability and morphogenesis: An outline of a general theory of models. Reading: John Benjamins.

Thom, R. (2010). Mathematics. In: T. A. Sebeok and M. Danesi (eds.), Encyclopedic dictionary of semiotics, 3rd edition. Berlin: Mouton de Gruyter.

Venn, J. (1880). On the employment of geometrical diagrams for the sensible representation of logical propositions. Proceedings of the Cambridge Philosophical Society 4: 47–59.

Venn, J. (1881). Symbolic logic. London: Macmillan.

Yancey, A., Thompson, C., and Yancey, J. (1989). Children must learn to draw diagrams. Arithmetic Teacher 36: 15–19.

© 2019 Springer Nature Switzerland AG

Cite this chapter

Danesi, M. (2019). Epilogue: So, What Is Math Cognition?. In: Danesi, M. (eds) Interdisciplinary Perspectives on Math Cognition. Mathematics in Mind. Springer, Cham. https://doi.org/10.1007/978-3-030-22537-7_20

DOI: https://doi.org/10.1007/978-3-030-22537-7_20

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22536-0

Online ISBN: 978-3-030-22537-7

eBook Packages: Mathematics and Statistics (R0)