Abstract

This paper presents an improved particle swarm optimization algorithm named SCPSO. To counter premature convergence, SCPSO introduces a new approach to the social coefficient: instead of randomly selected social coefficients, the author proposes coefficients that change dynamically and are affected by the experience of the particles. The presented method was tested on a set of benchmark functions, and the results were compared with those obtained through MPSO-TVAC, standard PSO (SPSO) and DPSO. The simulation results indicate that SCPSO is an effective optimization method.

1 Introduction

Particle swarm optimization (PSO) is a method modeled on the behavior of a swarm of insects in their natural environment. It was developed by Kennedy and Eberhart in 1995 [1,2,3] and has since been successfully applied in many areas of science and engineering connected with optimization [4,5,6,7,8,9,10]. However, like other evolutionary optimization methods, PSO can experience problems related to convergence speed and escaping from local optima [11]. Important parameters that affect the effectiveness of PSO are the acceleration coefficients, called the cognitive and social coefficients. The cognitive coefficient affects local search [12], whereas the social coefficient maintains the right velocity and direction of global search. Fine-tuning the social coefficient can help overcome the disadvantages connected with premature convergence and improve the efficiency of PSO.

Many approaches to choosing the coefficients have been studied. Eberhart and Kennedy [13] recommended a fixed value of the acceleration coefficients. In their opinion, the social and cognitive coefficients should be the same and equal to 2.0. Ozcan [14] and Clerc [15] agreed that the coefficients should be the same but showed that they should rather be equal to 1.494, which results in faster convergence. According to Venter and Sobieszczanski-Sobieski [16], the algorithm performs better when the coefficients are different, and they proposed applying a small cognitive coefficient (c1 = 1.5) and a large social coefficient (c2 = 2.5). A different approach was proposed by Ratnaweera et al. [17]. The authors examined the efficiency of a self-organizing PSO with time-varying acceleration coefficients. They concluded that PSO performance can be greatly improved by simultaneously decreasing the cognitive coefficient and increasing the social coefficient. A hierarchical PSO with jumping time-varying acceleration coefficients for real-world optimization was proposed by Ghasemi et al. [18]. Particle swarm optimization with time-varying acceleration coefficients was also explored in [19,20,21,22]. A different approach, based on a nonlinear acceleration coefficient affected by the algorithm performance, was recommended by Borowska [23]. According to Mehmood et al. [24], PSO performs faster with fitness-based acceleration coefficients. A PSO based on a self-adaptation strategy for the acceleration coefficients was suggested by Guo and Chen [25]. A new model of the social coefficient was presented by Cai et al. [26]. In order not to lose useful information inside the swarm, they proposed using a dispersed social coefficient with an information index describing the differences between particles. Additionally, to maintain the diversity of the particles, a mutation strategy was introduced. A novel particle swarm concept based on the idea of two types of agents in the swarm with adaptive acceleration coefficients was considered by Ardizzon et al. [27].

This paper presents an improved effective particle swarm optimization algorithm named SCPSO. In SCPSO, a new approach connected with the social coefficient is proposed in order to better determine the velocity value and search direction of the particles and, consequently, to improve the convergence speed as well as to find a better solution. The presented method was tested on a set of benchmark functions and the results were compared with those obtained through MPSO-TVAC with a time-varying acceleration coefficient [17], the standard PSO (SPSO) and DPSO with the dispersed strategy [26]. The simulation results indicate that SCPSO is an effective optimization method.

2 The Standard PSO Method

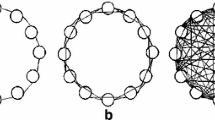

In the PSO method, the optimization process is based on the behavior of a swarm of individuals. In practice, a swarm represents a set of particles, each of which is a point in the coordinate system that has a position represented by a vector Xi = (xi1, xi2, …, xiD) and a velocity represented by a vector Vi = (vi1, vi2, …, viD). In the first step of the algorithm, both the position and the velocity of each particle are randomly generated. In subsequent iterations, their positions and velocities in the search space are updated according to the formula:

$$V_i(t+1) = w\,V_i(t) + c_1 r_1 \bigl(pbest_i - X_i(t)\bigr) + c_2 r_2 \bigl(gbest - X_i(t)\bigr)$$

$$X_i(t+1) = X_i(t) + V_i(t+1)$$

where the inertia weight w controls the impact of the particle's previous velocity on its current velocity. Factors c1 and c2 are the cognitive and social acceleration coefficients, respectively. They decide how much the particles are influenced by their own best found positions (pbest) and by the best position found by the swarm (gbest). The variables r1 and r2 are random real numbers selected between 0 and 1. In each iteration, the locations of the particles are evaluated with the objective function, whose form depends on the optimization problem. Based on this assessment, each particle keeps its knowledge about the best position that it has found so far, and the highest-quality particle is selected and recorded in the swarm. This knowledge is updated in each step of the algorithm. In this way, particles move in the search space towards the optimum.
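The update rule described above can be illustrated with a short sketch. It is a minimal illustration rather than the implementation used in the paper: the function names `pso_step` and `minimize`, the default settings (20 particles, w = 0.7, fixed c1 = c2 = 1.494, bounds of ±5), and the choice to draw r1 and r2 separately for every dimension are all assumptions made for the example.

```python
import random


def pso_step(positions, velocities, pbest, gbest, w, c1, c2, rng=random):
    """Update every particle's velocity and position in place (one iteration)."""
    for i, (x, v) in enumerate(zip(positions, velocities)):
        for d in range(len(x)):
            # Assumption: r1 and r2 are redrawn for each particle and dimension.
            r1 = rng.random()
            r2 = rng.random()
            v[d] = (w * v[d]
                    + c1 * r1 * (pbest[i][d] - x[d])   # cognitive term
                    + c2 * r2 * (gbest[d] - x[d]))     # social term
            x[d] += v[d]


def minimize(objective, dim, n_particles=20, iterations=100,
             bounds=(-5.0, 5.0), w=0.7, c1=1.494, c2=1.494, seed=0):
    """Hypothetical driver: random start, then repeated pso_step calls."""
    rng = random.Random(seed)
    lo, hi = bounds
    positions = [[rng.uniform(lo, hi) for _ in range(dim)]
                 for _ in range(n_particles)]
    velocities = [[rng.uniform(lo - hi, hi - lo) for _ in range(dim)]
                  for _ in range(n_particles)]
    pbest = [p[:] for p in positions]
    pbest_val = [objective(p) for p in positions]
    best = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[best][:], pbest_val[best]
    for _ in range(iterations):
        pso_step(positions, velocities, pbest, gbest, w, c1, c2, rng)
        for i, p in enumerate(positions):
            val = objective(p)
            if val < pbest_val[i]:          # particle improved its own best
                pbest[i], pbest_val[i] = p[:], val
                if val < gbest_val:         # and possibly the swarm's best
                    gbest, gbest_val = p[:], val
    return gbest, gbest_val
```

For example, `minimize(lambda x: sum(t * t for t in x), dim=2)` runs the sketch on a two-dimensional sphere function. Because the recorded swarm best is only replaced by a strictly better value, it never gets worse from one iteration to the next.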

3 The SCPSO Strategy

The proposed SCPSO is a new variant of the PSO algorithm in which a new approach to the social acceleration coefficient has been introduced. In the original PSO, to find an optimal value, particles follow two best values: the best position found by the particle itself and the best value achieved by the swarm. The direction and magnitude of the particle velocity are affected by the acceleration coefficients, which are real numbers, the same for the whole swarm, and randomly selected between 0 and 1. This implies that some information connected with swarm behaviour can be lost or not taken into account. For this reason, a new approach for calculating the social coefficient has been introduced. The value of this coefficient is not constant but changes dynamically as a function of the maximal and minimal fitness of the particles. It also depends on the current and total numbers of iterations. In each iteration, a different social coefficient is established. The equations describing this relationship are as follows:

where the parameter k denotes the current iteration number, fmin and fmax are the minimal and maximal fitness values in the current iteration, respectively, and itermax is the maximal number of iterations. To secure the diversity of the particles and help avoid local optima, a mutation operator is applied. The proposed approach helps maintain the right velocity values and search direction of the particles and improves the convergence speed.

4 Simulation Results

The presented SCPSO method was tested on the benchmark functions described in Table 1. The results of these tests were compared with the simulation results of the dispersed particle swarm optimization (DPSO) [26], the standard PSO and the modified version (MPSO-TVAC) with time-varying acceleration coefficients [17].
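Table 1 is not reproduced in this text, so as a point of reference the sketch below gives commonly used textbook definitions of two of the benchmark problems named in the results discussion, Rastrigin and Ackley. The exact forms, constants and search ranges used in the paper are not confirmed by the source and may differ; the function names are chosen for the example.

```python
import math


def rastrigin(x):
    """Common form of the Rastrigin function; global minimum 0 at the origin."""
    return 10.0 * len(x) + sum(t * t - 10.0 * math.cos(2.0 * math.pi * t)
                               for t in x)


def ackley(x):
    """Common form of the Ackley function; global minimum 0 at the origin."""
    n = len(x)
    mean_sq = sum(t * t for t in x) / n
    mean_cos = sum(math.cos(2.0 * math.pi * t) for t in x) / n
    return (-20.0 * math.exp(-0.2 * math.sqrt(mean_sq))
            - math.exp(mean_cos) + 20.0 + math.e)
```

Both functions are multimodal with many local minima, which is why problems of this kind are typically used to probe premature convergence.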

For all tested functions, the population consisted of 100 particles, and four dimension sizes were used: D = 30, 50, 100 and 200. The inertia weight decreased linearly from 0.9 to 0.4. All settings (the acceleration coefficients and their values) for MPSO-TVAC and DPSO were adopted from Ratnaweera [17] and Cai [26], respectively. For MPSO-TVAC, the c1 coefficient decreases from 2.5 to 0.5 and the c2 coefficient increases from 0.5 to 2.5. In the DPSO algorithm, the c1 and c_up coefficients are equal to 2.0, while the c_low coefficient is set to 1.0. Detailed parameter settings of all tested methods are collected in Table 2. The details of MPSO-TVAC and DPSO used for comparison can be found in [17] and [26], respectively. For each case, the simulations were run 20 times. The maximum number of iterations depended on the dimension and was equal to 1500 for 30 dimensions, 2500 for 50 dimensions, 5000 for 100 dimensions, and 10000 for 200 dimensions.
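The parameter settings above can be written down as simple schedules. In this sketch the inertia weight follows the linear decrease stated in the text, while the linear form of the c1 and c2 schedules is an assumption (the text gives only their start and end values). The names `linear_schedule`, `ITER_MAX_BY_DIM` and `tvac_parameters` are introduced for illustration only.

```python
def linear_schedule(start, end, k, iter_max):
    """Linearly interpolate from start (at k = 0) to end (at k = iter_max)."""
    return start + (end - start) * k / iter_max


# Iteration budget per problem dimension, as listed in the text.
ITER_MAX_BY_DIM = {30: 1500, 50: 2500, 100: 5000, 200: 10000}


def tvac_parameters(k, dim):
    """Return (w, c1, c2) at iteration k for the given dimension."""
    iter_max = ITER_MAX_BY_DIM[dim]
    w = linear_schedule(0.9, 0.4, k, iter_max)    # stated as linear in the text
    c1 = linear_schedule(2.5, 0.5, k, iter_max)   # linear form assumed
    c2 = linear_schedule(0.5, 2.5, k, iter_max)   # linear form assumed
    return w, c1, c2
```

Halfway through a 30-dimensional run (k = 750), these schedules give approximately w = 0.65 and c1 = c2 = 1.5, the point at which the cognitive and social terms carry equal weight.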

Example results of the simulations are shown in Tables 3, 4, 5, 6 and 7. The presented values have been averaged over 20 trials.
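Averaging over repeated trials, as described above, can be sketched as follows. The helper `summarize_trials` and its `run_once` callback are hypothetical names, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
import statistics


def summarize_trials(run_once, n_trials=20, base_seed=0):
    """Run an optimizer n_trials times and return (mean, standard deviation).

    run_once is any callable that takes a seed and returns the best
    objective value found in that run.
    """
    results = [run_once(base_seed + t) for t in range(n_trials)]
    # Assumption: sample standard deviation (n - 1 in the denominator).
    return statistics.mean(results), statistics.stdev(results)
```

Giving each trial its own seed keeps the runs independent while leaving the whole experiment reproducible.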

The results of the performed simulations show that the SCPSO algorithm with the proposed approach achieves better optimization performance than the remaining tested algorithms. In almost all considered cases, the average function values found by the SCPSO algorithm were lower than those achieved by the remaining tested methods. The mean values for the Rastrigin, Brown and Schwefel problems achieved by SCPSO are lower, despite higher (in most cases) standard deviations. For the Ackley function, in almost all cases (except the case with 30 dimensions), the mean values achieved by SCPSO were lower than those achieved by the other algorithms. The standard deviations were also lower, which indicates greater stability of SCPSO. For 30 dimensions, the outcomes found by SCPSO were slightly worse than the mean values found by MPSO-TVAC but better than the results achieved by DPSO and SPSO.

The proposed approach extends the adaptive capability of the particles and improves their search direction. The mutation strategy helps SCPSO maintain diversity between particles in the search space and helps avoid premature convergence.

An increase in the number of particles (with the same dimension) resulted in faster convergence of the algorithms and allowed better optimal values to be found. In turn, a decrease in the number of particles in the swarm increased the dispersion of the results and worsened them.

5 Summary

In this paper, a novel version of the particle swarm optimization algorithm called SCPSO has been presented. The changes are connected with social cooperation and the movement of the particles in the search space and have been introduced to improve the convergence speed and to find a better-quality solution. The influence of the introduced changes on swarm motion and on the performance of the SCPSO algorithm was studied on a set of known benchmark functions.

The results of the described investigations were compared with those obtained through MPSO-TVAC with time-varying acceleration coefficients, the standard PSO and the dispersed particle swarm optimization (DPSO). The proposed strategy improved the algorithm performance. The new algorithm was more effective than MPSO-TVAC, SPSO and DPSO in almost all cases.

References

1. Kennedy, J., Eberhart, R.C.: Particle swarm optimization. In: IEEE International Conference on Neural Networks, Perth, Australia, pp. 1942–1948 (1995)

2. Kennedy, J., Eberhart, R.C., Shi, Y.: Swarm Intelligence. Morgan Kaufmann Publishers, San Francisco (2001)

3. Robinson, J., Rahmat-Samii, Y.: Particle swarm optimization in electromagnetics. IEEE Trans. Antennas Propag. 52, 397–407 (2004)

4. Guedria, N.B.: Improved accelerated PSO algorithm for mechanical engineering optimization problems. Appl. Soft Comput. 40, 455–467 (2016)

5. Dolatshahi-Zand, A., Khalili-Damghani, K.: Design of SCADA water resource management control center by a bi-objective redundancy allocation problem and particle swarm optimization. Reliab. Eng. Syst. Saf. 133, 11–21 (2015)

6. Mazhoud, I., Hadj-Hamou, K., Bigeon, J., Joyeux, P.: Particle swarm optimization for solving engineering problems: a new constraint-handling mechanism. Eng. Appl. Artif. Intell. 26, 1263–1273 (2013)

7. Yildiz, A.R., Solanki, K.N.: Multi-objective optimization of vehicle crashworthiness using a new particle swarm based approach. Int. J. Adv. Manuf. Technol. 59, 367–376 (2012)

8. Hajforoosh, S., Masoum, M.A.S., Islam, S.M.: Real-time charging coordination of plug-in electric vehicles based on hybrid fuzzy discrete particle swarm optimization. Electr. Power Syst. Res. 128, 19–29 (2015)

9. Yadav, R.D.S., Gupta, H.P.: Optimization studies of fuel loading pattern for a typical Pressurized Water Reactor (PWR) using particle swarm method. Ann. Nucl. Energy 38, 2086–2095 (2011)

10. Puchala, D., Stokfiszewski, K., Yatsymirskyy, M., Szczepaniak, B.: Effectiveness of fast Fourier transform implementations on GPU and CPU. In: Proceedings of the 16th International Conference on Computational Problems of Electrical Engineering (CPEE), Ukraine, pp. 162–164 (2015)

11. Shi, Y., Eberhart, R.C.: Empirical study of particle swarm optimization. In: Proceedings of the Congress on Evolutionary Computation, vol. 3, pp. 1945–1950 (1999)

12. Shi, Y., Eberhart, R.C.: Parameter selection in particle swarm optimization. In: Porto, V.W., Saravanan, N., Waagen, D., Eiben, A.E. (eds.) EP 1998. LNCS, vol. 1447, pp. 591–600. Springer, Heidelberg (1998). https://doi.org/10.1007/BFb0040810

13. Eberhart, R.C., Kennedy, J.: A new optimizer using particle swarm theory. In: Proceedings of the 6th International Symposium on Micro Machine and Human Science, Japan, pp. 39–43 (1995)

14. Ozcan, E., Mohan, C.K.: Particle swarm optimization: surfing the waves. In: Proceedings of IEEE Congress on Evolutionary Computation, pp. 1944–1999 (1999)

15. Clerc, M.: The swarm and the queen: towards a deterministic and adaptive particle swarm optimization. In: Proceedings of ICEC, Washington, DC, pp. 1951–1957 (1999)

16. Venter, G., Sobieszczanski-Sobieski, J.: Particle swarm optimization. In: Proceedings of 43rd AIAA/ASME/ASCE/AHS/ASC Structure, Structure Dynamics and Materials Conference, pp. 22–25 (2002)

17. Ratnaweera, A., Halgamuge, S.K., Watson, H.C.: Self-organizing hierarchical particle swarm optimizer with time-varying acceleration coefficients. IEEE Trans. Evol. Comput. 8(3), 240–255 (2004)

18. Ghasemi, M., Aghaei, J., Hadipour, M.: New self-organising hierarchical PSO with jumping time-varying acceleration coefficients. Electron. Lett. 53, 1360–1362 (2017)

19. Chaturvedi, K.T., Pandit, M., Srivastava, L.: Particle swarm optimization with time varying acceleration coefficients for non-convex economic power dispatch. Electr. Power Energy Syst. 31, 249–257 (2009)

20. Mohammadi-Ivatloo, B., Moradi-Dalvand, M., Rabiee, A.: Combined heat and power economic dispatch problem solution using particle swarm optimization with time varying acceleration coefficients. Electr. Power Syst. Res. 95, 9–18 (2013)

21. Jordehi, A.R.: Time Varying Acceleration Coefficients Particle Swarm Optimization (TVACPSO): a new optimization algorithm for estimating parameters of PV cells and modules. Energy Convers. Manag. 129, 262–274 (2016)

22. Beigvand, S.D., Abdi, H., Scala, M.: Optimal operation of multicarrier energy systems using time varying acceleration coefficient gravitational search algorithm. Energy 114, 253–265 (2016)

23. Borowska, B.: Social strategy of particles in optimization problems. In: Advances in Intelligent Systems and Computing. Springer (2019, in Press)

24. Mehmood, Y., Sadiq, M., Shahzad, W.: Fitness-based acceleration coefficients to enhance the convergence speed of novel binary particle swarm optimization. In: Proceedings of International Conference on Frontiers of Information Technology (FIT), pp. 355–360 (2018)

25. Guo, L., Chen, X.: A novel particle swarm optimization based on the self-adaptation strategy of acceleration coefficients. In: Proceedings of International Conference on Computational Intelligence and Security, pp. 277–281. IEEE (2009)

26. Cai, X., Cui, Z., Zeng, J., Tan, Y.: Dispersed particle swarm optimization. Inf. Process. Lett. 105, 231–235 (2008)

27. Ardizzon, G., Cavazzini, G., Pavesi, G.: Adaptive acceleration coefficients for a new search diversification strategy in particle swarm optimization algorithms. Inf. Sci. 299, 337–378 (2015)

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Borowska, B. (2019). Influence of Social Coefficient on Swarm Motion. In: Rutkowski, L., Scherer, R., Korytkowski, M., Pedrycz, W., Tadeusiewicz, R., Zurada, J. (eds) Artificial Intelligence and Soft Computing. ICAISC 2019. Lecture Notes in Computer Science(), vol 11508. Springer, Cham. https://doi.org/10.1007/978-3-030-20912-4_38

DOI: https://doi.org/10.1007/978-3-030-20912-4_38

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20911-7

Online ISBN: 978-3-030-20912-4

eBook Packages: Computer Science, Computer Science (R0)