Abstract

Technology provides Virtual Reality (VR) with increasing levels of realism: high-performance head-mounted displays enable delivering immersive experiences that provide users with higher levels of engagement. Moreover, VR platforms, treadmills, and motion tracking systems add a physical dimension to interaction that aims at increasing realism by enabling users to use their body to control characters’ movements in a virtual scenario. However, current systems suffer from one main limitation: the physical simulation space is confined, whereas VR supports rendering infinitely large scenarios. In this paper, we investigate the human factors involved in the design of physically-immersive VR environments, with specific regard to the perception of virtual and physical space in locomotion tasks. Finally, we discuss strategies for designing experiences that enable optimizing the use of the available physical space and support larger virtual scenarios without impacting realism.

1 Introduction

In recent years, the increasing quality and efficiency of Virtual Reality (VR), combined with the development of high-resolution Head-Mounted Displays (HMD), resulted in novel paradigms such as Immersive Virtual Reality, which are pushing the boundaries of VR experiences. Several studies demonstrated the importance of locomotion in VR [1] and suggested that actual movement results in better immersion and realism than traditional screen-based or immersive VR [2]. Physically-Immersive Virtual Reality (PIVR) enables individuals to walk on omnidirectional platforms and treadmills [3]. Alternatively, higher levels of realism can be achieved using systems that enable users to move in a contained physical space where a low-latency motion-capture infrastructure acquires their position, orientation, and movement, and represents them in the simulated environment in real time. As a result, this type of wearable technology for immersive virtual reality detaches the infrastructure from the user [4], who can explore a sophisticated virtual world by walking in an empty room while experiencing the VR scene through a headset; users can also interact with the environment thanks to specific controllers such as wands, wearable devices [5, 6], and objects equipped with appropriate markers. Consequently, PIVR is especially suitable for applications that require higher levels of engagement. For instance, previous studies proposed its use for training law enforcement officers and emergency responders [7] and for simulating safety- or mission-critical tasks [4, 8]. In addition, it can enhance gameplay and experiences involving digital art.

Nevertheless, one of the main limitations of PIVR based on motion tracking is the physical space in which users can move, which is constrained by the area of the motion-capture infrastructure and by the size of the room where the system is installed: although virtual environments are potentially infinite, boundaries are set in the VR scene to prevent users from walking out of the motion-sensing area or into a wall. Several devices (e.g., concave omnidirectional platforms that keep users walking on the spot) attempt to address this discrepancy, though they reduce realism because they introduce restraints that prevent free movement and physical interaction between multiple players.

In this paper, we focus on the relationship between physical and virtual spaces in PIVR. Specifically, we analyze the main challenges in the design of scalable VR simulations that are congruent with the limitations of their fixed-size motion-capture infrastructure. Furthermore, we study the human factors involved in the perception of physical and virtual areas, and we detail several methods, such as folding, layering, and masking, that can be utilized for reducing the perceived size discrepancy. Finally, we discuss the findings of an experiment in which we demonstrate how multiple techniques and geometries can be combined to generate the illusion of a much larger physical area that matches the size of the virtual scene. As a result, small-scale motion-capture systems can support large PIVR simulations without affecting the user experience.

2 Related Work

Physically-Immersive Virtual Reality based on low-latency motion tracking has the potential of taking VR beyond the barriers and limitations of current immersive omnidirectional locomotion platforms. The authors of [7] introduce a modular system based on PIVR for training emergency responders and law enforcement officers with higher levels of realism. The advantage of the system is two-fold: scenarios can be created and loaded dynamically without requiring any modification to the physical space; moreover, as the system is portable, it represents an affordable alternative to travelling to disaster cities and training grounds. Similarly, [9] describes the concept of a museum that uses PIVR to create a walkable virtual space for artwork. Given its depth and realism, physically immersive VR can open new opportunities for currently available software: in [10], the authors evaluate users’ reaction to alternative input methods and dynamics, such as motion sickness, in the context of a port of Minecraft as a tool for promoting user-generated virtual environments [11]. Novel applications include sports, where physically-immersive VR can be utilized as a simulation platform as well as a data acquisition system for evaluating and improving the performance of athletes [12]. Similarly, embodied experiences [13] can be utilized to increase physical engagement of eSports practitioners [14].

Unfortunately, one of the limitations of PIVR is that the size of the virtual scenario is limited by the physical space where the simulation takes place. Although current motion tracking technology enables covering larger areas, this type of infrastructure is associated with high assembly, running, and maintenance costs. Conversely, more affordable commercially-available technology supports tracking users over smaller areas (typically 5 × 5 m). Ultimately, regardless of the potential size of a virtual world, the VR scenario is limited by the infrastructure, which, in turn, defines the boundaries of the physical simulation area. Traditional approaches based on virtual locomotion have been studied in the context of PIVR and primarily consist of techniques for enabling users to navigate large VR environments using controllers [14]. Although they are especially useful when the physical space of the simulation is limited, they affect realism and engagement. Potential solutions based on the use of GPS for tracking the user over open spaces [15] are not suitable for real-time applications. Alternative techniques confine users in the simulation space by creating virtual boundaries that they are not supposed to cross. For example, they position players on a platform where they can move and interact with elements located beyond its edges. Appropriate skybox and scenario design give the illusion of being in a larger space, though the walkable area is limited.

Conversely, the aim of our research is to leverage the physical space of the simulation area in ways that support designing infinitely large virtual scenarios that reuse the same physical space without users realizing it. By doing this, a small simulation area can support a much larger virtual scenario. Different strategies might be suitable for dynamically reconfiguring the virtual scenario in order to reuse the same walkable physical space without the user noticing, or at least without affecting the user experience. For instance, the virtual space could be organized over multiple levels stacked vertically or horizontally, and elements in the scenario (e.g., elevators, corridors, portals, and doors) or narrative components could be utilized to transport the user from one level to another.
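The layering idea can be illustrated with a minimal sketch, which is not part of the system described in this paper. It assumes a square tracked area of 5 m per side (the typical figure quoted above) and vertically stacked levels; the names `LayeredSpace`, `level_pitch_m`, and `take_transition` are hypothetical.

```python
from dataclasses import dataclass

AREA_SIDE_M = 5.0  # assumed side of the square tracked area, in metres


@dataclass
class LayeredSpace:
    """Reuses one physical floor for several stacked virtual levels."""

    level: int = 0                # virtual level currently mapped onto the room
    level_pitch_m: float = 10.0   # hypothetical vertical spacing between levels

    def to_virtual(self, x: float, z: float) -> tuple:
        """Map a tracked floor position (x, z) to virtual (x, y, z) coordinates."""
        if not (0.0 <= x <= AREA_SIDE_M and 0.0 <= z <= AREA_SIDE_M):
            raise ValueError("position outside the tracked area")
        return (x, self.level * self.level_pitch_m, z)

    def take_transition(self, delta: int) -> None:
        """Called when an elevator, portal, or door moves the user between levels."""
        self.level += delta
```

The user's physical coordinates never leave the tracked area; only the level index changes, so the same floor is walked again under a different part of the virtual world.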

3 Experimental Study

We developed a preliminary pilot study aimed at evaluating whether it is possible to implement techniques that programmatically change the virtual scenario in a way that tricks users into thinking that they are moving in a physical space that is much larger than the actual simulation area. To this end, we designed an experiment that compares a static scenario with a dynamic environment that programmatically changes as the user walks in the simulation area. Our purpose was to test the concept and study individuals’ awareness of the relation between virtual space and physical space, and to evaluate their reaction and user experience. The goal of this pilot study was to determine the efficacy of the experimental design and the applicability of the instrument.

The experimental software consisted of a set of virtual scenarios implemented using Unity3D, one of the most popular VR engines. For the purpose of this study, two scenarios were utilized, each representing a simple, square maze surrounded by walls (see Fig. 2), so that subjects could explore them.

3.1 Participants

We recruited 21 participants (8 females and 13 males) to conduct a preliminary study and test our hypothesis. All participants were aged 18–24 and healthy, drawn from a student population at a medium-sized university in the American Midwest. Most of them were gamers but none had any significant experience with immersive headsets or PIVR before the experiment, other than testing the device for a very short time at exhibitions. Institutional Review Board (IRB) approval was granted for this protocol.

3.2 Hardware and Software Setup

The experiment was hosted in an empty space in which we created a dedicated simulation area of 6 × 6 m. Two base stations were located at two opposite corners facing one another so that they covered the entire area. We utilized an HTC Vive Pro head-mounted display (HMD) equipped with a wireless adapter. This allowed subjects to move freely, minimizing the spatial cues and safety issues related to corded setups, and made it easier for subjects to be immersed in the virtual environment. The equipment was connected to a desktop PC supporting the requirements of the VR setup. Figure 1 shows the infrastructure of the experiment and its configuration.

The mazes were the same size (approximately 4 × 4 m) and their structure was similar, because they were built using the same type of components. We built three reusable building blocks: each module consisted of a square structure measuring 2 × 2 m that was surrounded by walls and had two openings located on two adjacent sides. The inner configuration of each module was different, in that it contained walls varying in shape and position.

The modules were designed so that they could be attached next to one another by appropriately rotating them and connecting their openings, creating the illusion of a never-ending path. Four modules were utilized to create a walkable 4 × 4 m maze enclosed in walls. The height of all the walls, including those surrounding the maze, was the same and it was set at 2.75 m so that subjects could not see over them. The VR scenario was built using 1:1 scale and was placed in the middle of the simulation area. The two mazes utilized in the experiment (see Fig. 3) were different: the static maze was obtained by assembling four blocks that did not change during the experiment. The dynamic maze was implemented by adding a software component that, upon certain events, programmatically replaced the opposite module of the maze with a building block selected at random and appropriately rotated to be consistent with the rest of the structure of the maze. Each module was designed so that the participant could not see the “exit” opening while standing near the “entrance” opening. This allowed for manipulating two modules out of sight of the participant, which made it possible to dynamically generate changes to the maze layout. Colliders (a particular type of trigger in the Unity3D software) were placed in each of the openings: walking through an opening resulted in intersecting a collider which, in turn, triggered the event for replacing the module opposite to the position of the user. This was to prevent the user from witnessing changes happening in the structure of the scenario. As a result, by dynamically changing no more than two pieces at a time, the dynamic maze resulted in a never-ending sequence of randomly-selected modules.
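The replacement logic can be sketched as follows. This is an illustrative Python sketch, not the Unity3D component used in the experiment. It assumes the four quadrants are indexed 0 to 3 around the maze and that a module is oriented by rotating it 90° per quadrant index; the block names and the `DynamicMaze` class are hypothetical.

```python
import random

BLOCKS = ("A", "B", "C")  # placeholder names for the three building blocks


class DynamicMaze:
    """Four 2 x 2 m slots arranged in a ring; slot q is opposite slot (q + 2) % 4."""

    def __init__(self, rng=None):
        self.rng = rng or random.Random()
        self.slots = [self._random_module(q) for q in range(4)]

    def _random_module(self, quadrant):
        # Assumption: rotating a block by 90 degrees per quadrant index aligns
        # its two openings with those of the neighbouring modules.
        return (self.rng.choice(BLOCKS), 90 * quadrant)

    def on_opening_crossed(self, entered_quadrant):
        """Handle a collider hit: swap the module opposite to the user."""
        opposite = (entered_quadrant + 2) % 4
        self.slots[opposite] = self._random_module(opposite)
        return opposite
```

Because only the slot diagonally opposite to the user is swapped, the modules adjacent to the user stay untouched and the change happens out of the user's line of sight.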

The experimental software was designed to track the subject in real time and collect data from the simulation. Specifically, the position of the VR camera (representing the location of the subject’s head) and its orientation (representing the rotation and line-of-sight of the subject’s head) over three axes were recorded at 1 Hz, which was enough for the purpose of the experiment. This, in turn, was utilized to calculate the approximate distance travelled by the subject. Moreover, the simulation software recorded data associated with events (e.g., hitting a collider) when they occurred, including time of the event, subject’s position, and configuration of the maze. In addition to acquiring experimental data, a debriefing mode was developed to enable importing the data points from the experiment and reviewing the path walked by the subject, their orientation, and the events triggered during the test.
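One plausible way to derive the approximate distance from the recorded positions is to sum the straight-line steps between consecutive samples. The sketch below assumes positions are stored as (x, y, z) tuples in metres with y as the vertical axis (the Unity convention) and ignores vertical head movement; the function name is hypothetical and the paper does not specify the actual computation.

```python
import math


def distance_travelled(samples):
    """Sum the horizontal distance between consecutive head positions.

    samples: list of (x, y, z) positions in metres, ordered by time.
    """
    total = 0.0
    for (x0, _, z0), (x1, _, z1) in zip(samples, samples[1:]):
        total += math.hypot(x1 - x0, z1 - z0)  # floor-plane step length
    return total
```

Since each step is a straight segment between samples taken one second apart, turns made within that second are cut short, so the result is a lower bound on the path actually walked.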

3.3 Experimental Protocol and Tasks

Participants entered the experiment room blindfolded (so that they could not see the size of the simulation area) and they were seated on a chair positioned in the simulation area, where they were equipped with the VR headset. Their ears were covered by the earphones of the HMD to avoid auditory cues that could help them estimate the size of the room or their position and orientation. Two assistants closely supervised subjects during the experiment to avoid any incidents and to prevent them from accidentally walking out of the simulation area. The experiment consisted of two different tasks, one involving the static maze (Task 1) and one realized in the programmatically generated maze (Task 2).

Task 1 - static maze. In this task, subjects were asked to keep walking in a direction until they passed a total of six slanted walls (see Fig. 2, item B), which corresponded to 3 laps of the maze.

Task 2 - dynamic maze. This task involved a dynamically-generated maze and asked subjects to walk past six slanted walls as in Task 1. However, as the software was programmed to change one module in the scenario and replace it with a random one, the number of laps depended on the dynamic configuration of the maze.

Tasks were divided into trials, each consisting of walking past two slanted walls. After they completed a trial, subjects were interrupted, and they were asked to solve a simple arithmetic problem (i.e., calculate a sum) before continuing. Then, they were asked to indicate the spot in which they started the task by pointing in its direction with their finger. In the third trial, subjects were asked to close their eyes and they were taken to a different location of the maze, before being asked to point to the starting spot.

At the beginning of each task, subjects were placed in the same spot, located in an inset between two walls, so they could not see the full width of the maze. At the end of each task, subjects were asked to point (with their finger) to the spot in which they started, and to estimate the distance that they walked. At the end of the experiment, subjects were asked to estimate whether the physical space in which they moved was larger or smaller than the virtual space, using a Likert scale, and to report the perceived differences between the two mazes. The experiment had no time limit, but duration was recorded.

4 Results and Discussion

All the subjects were able to successfully complete the experiment and commented that the experience was very realistic, despite the simple structure of the scenario. None of them deviated from the path even though they did not receive any specific instructions, and they avoided inner and outer walls. Table 1 shows a summary of the experimental results. Regardless of individuals’ accuracy in the perception of space, 15 participants (71%) reported that they walked further in the dynamic maze than in the static maze. This is consistent with the structure of the dynamic maze, in which slanted walls were further apart. As a result, participants walked a longer distance, as measured by the experiment software.

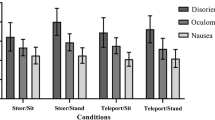

Data show that subjects were able to preserve their spatial awareness in the static maze, as the difference between the actual starting point and the area they indicated was approximately 30°, on average. Conversely, changing the configuration of the maze resulted in a shift of more than 60° in the first trial. In the second trial, subjects’ spatial awareness degraded further and orientation dispersion increased; however, the static maze resulted in a 10° increase, whereas the programmatically-generated scenario had the most impact (50° increase). Both trials 1 and 2 show that the dynamic maze is twice as effective in disorienting subjects compared to the static maze. Figure 4 shows the increase in orientation dispersion. Specifically, changing the virtual structure of the maze produced results similar to the physical disorientation obtained by blindfolding subjects and physically moving them to a different location of the maze.
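An angular difference of this kind can be computed as the unsigned angle between the direction in which the subject points and the true direction from the subject to the starting spot. The sketch below works on the floor plane and wraps the result into the 0° to 180° range; the function name and the 2D tuple representation are assumptions, as the paper does not describe how the pointing direction was measured.

```python
import math


def pointing_error_deg(position, pointed_dir, start):
    """Unsigned angle (degrees) between the pointed and the true direction.

    position, start: (x, z) floor coordinates of the subject and the start spot.
    pointed_dir: (dx, dz) direction in which the subject is pointing.
    """
    true_angle = math.atan2(start[1] - position[1], start[0] - position[0])
    pointed_angle = math.atan2(pointed_dir[1], pointed_dir[0])
    diff = math.degrees(pointed_angle - true_angle)
    diff = (diff + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return abs(diff)
```

Wrapping before taking the absolute value matters: without it, pointing 10° to one side of the target could be reported as 350° instead of 10°.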

Figure 5 represents the angle distribution of orientation diversion. Data show that the static structure had minor impact on spatial awareness, whereas changing the configuration of the maze disoriented individuals. A one-way between-subjects ANOVA was conducted to compare the differences between task 1 and task 2 (first two trials): results show that changing the structure of the maze has a statistically significant effect at the p < 0.05 level [F(1, 82) = 4.11, p = 0.046].
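For reference, the F statistic of a one-way ANOVA is the ratio of the between-group to the within-group mean square. The sketch below is a plain-Python illustration of that formula, not the authors' analysis script, and the function name is hypothetical. The reported degrees of freedom (1, 82) correspond to two groups and 84 observations in total, which would match 21 participants × 2 trials in each of the two tasks, although the paper does not state this explicitly.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of numeric samples."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n

    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the observations around their own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

The function returns only the statistic and its degrees of freedom; obtaining the p-value additionally requires the survival function of the F distribution, which a statistics package would provide.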

Moreover, when they were asked if they noticed any difference between the first and second maze, only 3 subjects (14%) were able to identify the difference between the two mazes, whereas most of the subjects perceived the dynamic maze as larger. All participants associated the static maze with a square shape. In contrast, the dynamic maze was perceived as having a polygonal shape with more than 4 sides by 23% of the subjects.

5 Conclusion and Future Work

PIVR provides incredible opportunities for improving the user experience of virtual environments and opens new possibilities for designing innovative applications studying interaction dynamics, although infrastructure constraints limit the available physical space, which, in turn, prevents creating infinitely large, walkable virtual environments.

In this paper, we introduced a new technique for overcoming some of the issues that affect locomotion in PIVR, especially in small simulation areas. Specifically, we explored the possibility of designing dynamic scenarios that programmatically reconfigure the environment without the users realizing it, so that a new piece of the virtual world unfolds as they walk over the same physical space. Although the small size of the sample might limit the applicability of this study, our findings demonstrate the feasibility of the proposed technique and suggest it as an interesting direction for improving the design of immersive spaces. Our experiment showed that the proposed method can be utilized to modify individuals’ spatial awareness and, consequently, their ability to correctly perceive the dimensional relationship between physical and virtual space without disrupting the user experience. This, in turn, makes it possible to seamlessly generate the feeling of a much larger walkable physical space that matches the size of the virtual world. Additionally, the technique described in this paper can be combined with other methods to further modify users’ perception of the size of the simulation area. In our future work, we will explore factors such as size and configuration of the environment, lighting, visual and auditory cues, and cognitive aspects. Furthermore, we will incorporate data from spatial ability tests (e.g., visuospatial imagery, mental rotations test, and topographical memory) to evaluate the relationship between spatial ability in physical and in simulated worlds, to be able to compare our data with previous research [16]. Finally, in a follow-up paper, we will report data regarding users’ orientation while exploring the maze: as suggested by previous studies on immersive content [17], users might show similar navigation patterns, which, in turn, can be leveraged to introduce changes in the environment that contribute to modifying perception of space.

References

1. Nabiyouni, M., Saktheeswaran, A., Bowman, D.A., Karanth, A.: Comparing the performance of natural, semi-natural, and non-natural locomotion techniques in virtual reality. In: 2015 IEEE Symposium on 3D User Interfaces (3DUI), pp. 3–10, March 2015. IEEE (2015)

2. Huang, H., Lin, N.C., Barrett, L., Springer, D., Wang, H.C., Pomplun, M., Yu, L.F.: Analyzing visual attention via virtual environments. In: SIGGRAPH ASIA 2016 Virtual Reality Meets Physical Reality: Modelling and Simulating Virtual Humans and Environments, p. 8, November 2016. ACM (2016)

3. Warren, L.E., Bowman, D.A.: User experience with semi-natural locomotion techniques in virtual reality: the case of the Virtuix Omni. In: Proceedings of the 5th Symposium on Spatial User Interaction, p. 163, October 2017. ACM (2017)

4. Grabowski, M., Rowen, A., Rancy, J.P.: Evaluation of wearable immersive augmented reality technology in safety-critical systems. Saf. Sci. 103, 23–32 (2018)

5. Scheggi, S., Meli, L., Pacchierotti, C., Prattichizzo, D.: Touch the virtual reality: using the leap motion controller for hand tracking and wearable tactile devices for immersive haptic rendering. In: ACM SIGGRAPH 2015 Posters, p. 31, July 2015. ACM (2015)

6. Caporusso, N., Biasi, L., Cinquepalmi, G., Trotta, G.F., Brunetti, A., Bevilacqua, V.: A wearable device supporting multiple touch- and gesture-based languages for the deaf-blind. In: International Conference on Applied Human Factors and Ergonomics, pp. 32–41, July 2017. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-60639-2_4

7. Carlson, G., Caporusso, N.: A physically immersive platform for training emergency responders and law enforcement officers. In: International Conference on Applied Human Factors and Ergonomics, pp. 108–116, July 2018. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-93882-0_11

8. Caporusso, N., Biasi, L., Cinquepalmi, G., Bevilacqua, V.: An immersive environment for experiential training and remote control in hazardous industrial tasks. In: International Conference on Applied Human Factors and Ergonomics, pp. 88–97, July 2018. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-94619-1_9

9. Hernández, L., Taibo, J., Seoane, A., López, R., López, R.: The empty museum. Multi-user interaction in an immersive and physically walkable VR space. In: Proceedings of 2003 International Conference on Cyberworlds, pp. 446–452, December 2003. IEEE (2003)

10. Porter III, J., Boyer, M., Robb, A.: Guidelines on successfully porting non-immersive games to virtual reality: a case study in Minecraft. In: Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play, pp. 405–415, October 2018. ACM (2018)

11. Lenig, S., Caporusso, N.: Minecrafting virtual education. In: International Conference on Applied Human Factors and Ergonomics, pp. 275–282, July 2018. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-94619-1_27

12. Cannavò, A., Pratticò, F.G., Ministeri, G., Lamberti, F.: A movement analysis system based on immersive virtual reality and wearable technology for sport training. In: Proceedings of the 4th International Conference on Virtual Reality, pp. 26–31, February 2018. ACM (2018)

13. Ekdahl, D., Ravn, S.: Embodied involvement in virtual worlds: the case of eSports practitioners. Sport Ethics Philos. 1–13 (2018)

14. Bozgeyikli, E., Raij, A., Katkoori, S., Dubey, R.: Locomotion in virtual reality for room scale tracked areas. Int. J. Hum.-Comput. Stud. 122, 38–49 (2019)

15. Hodgson, E., Bachmann, E.R., Vincent, D., Zmuda, M., Waller, D., Calusdian, J.: WeaVR: a self-contained and wearable immersive virtual environment simulation system. Behav. Res. Methods 47(1), 296–307 (2015)

16. Coxon, M., Kelly, N., Page, S.: Individual differences in virtual reality: Are spatial presence and spatial ability linked? Virtual Real. 20(4), 203–212 (2016)

17. Caporusso, N., Ding, M., Clarke, M., Carlson, G., Bevilacqua, V., Trotta, G.F.: Analysis of the relationship between content and interaction in the usability design of 360° videos. In: International Conference on Applied Human Factors and Ergonomics, pp. 593–602, July 2018. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-94947-5_60

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Caporusso, N., Carlson, G., Ding, M., Zhang, P. (2020). Immersive Virtual Reality Beyond Available Physical Space. In: Ahram, T. (eds) Advances in Human Factors in Wearable Technologies and Game Design. AHFE 2019. Advances in Intelligent Systems and Computing, vol 973. Springer, Cham. https://doi.org/10.1007/978-3-030-20476-1_32

DOI: https://doi.org/10.1007/978-3-030-20476-1_32

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20475-4

Online ISBN: 978-3-030-20476-1

eBook Packages: Engineering, Engineering (R0)