Zusammenfassung

Routing a connection from its source to its destination is a fundamental component of network design. The choice of route affects numerous properties of a connection, most notably cost, latency, and availability, as well as the resulting level of congestion in the network. This chapter addresses various algorithms, strategies, and tradeoffs related to routing.

At the physical optical layer, connections are assigned a unique wavelength on a particular optical fiber, a process known as wavelength assignment (). Together with routing, the combination of these two processes is commonly referred to as . In networks based on all-optical technology, WA can be challenging. It becomes more so when the physical properties of the optical signal need to be considered. This chapter covers several WA algorithms and strategies that have produced efficient designs in practical networks.

A recent development in the evolution of optical networks is flexible networking, where the amount of spectrum allocated to a connection can be variable. Spectrum assignment is analogous to, though more complex than, wavelength assignment; various heuristics have been proposed as covered in this chapter. Flexible (or elastic) networks are prone to more contention issues as compared to traditional optical networks. To maintain a high degree of capacity efficiency, it is likely that spectral defragmentation will be needed in these networks; several design choices are discussed.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Routing a connection (or circuit) from its source to its destination is a fundamental component of network design. The choice of route affects numerous properties of a connection, most notably cost, latency, and availability. Furthermore, from a more macro perspective, routing determines the resulting level of congestion in a network, which may impact the amount of traffic that ultimately can be carried. This chapter addresses various algorithms, strategies, and tradeoffs related to routing. While much of the discourse applies to networks in general, the emphasis is on routing in the physical optical layer. Relevant network terminology and some of the fundamentals of optical systems are introduced in Sect. 12.1.

Network routing is a well-researched topic. Several commonly used routing algorithms have been developed to find an optimal path through the network with respect to a particular property. These algorithms are reviewed in Sect. 12.2. For a connection of relatively low criticality, it is sufficient to calculate a single path from source to destination. However, a failure that occurs along that path will bring the connection down. To guard against such failures, more critical connections are established with some level of protection. Some commonly used protection schemes rely on calculating two or more diverse paths between the source and destination. Optimal disjoint-path routing algorithms exist for this purpose as well, as covered in Sect. 12.3.

As noted above, the routing strategy that is utilized affects the congestion level in the network. Poor routing may lead to unbalanced load in the network, with some sections heavily utilized whereas others are lightly loaded. Such imbalances result in premature blocking in the network where it may not be possible to accommodate a new connection request due to insufficient capacity in portions of the network. Various routing strategies and their effect on network load are discussed in Sect. 12.4.

If multiple connections are being routed at once, then the order in which they are processed may also affect the blocking level. For example, it may be desirable to give precedence to geographically longer connections or connections that must traverse congested areas of the network, as it is likely more challenging to find a suitable path for these connections. Selecting the routing order is, in general, an arbitrary procedure; with rapid design runtimes, several orderings can be tested and the best result selected. Routing order is covered in Sect. 12.5.

Historically, connections in telecommunications networks have been unicast, with a single source and a single destination. However, with services such as video distribution growing in importance, connections directed from one source to multiple destinations are desirable. Establishing a multicast tree from the source to the set of destinations is more capacity efficient than utilizing multiple unicast connections. Furthermore, the optical layer is well suited for delivering multicast services. Section 12.6 discusses routing algorithms that find the optimal, or near-optimal, multicast tree.

The end-to-end path is the high-level view of how a connection is carried in the network. When examined more closely at the physical optical layer, it is necessary to assign a portion of the electromagnetic spectrum to carry the connection at each point along its path. Optical networks typically employ wavelength-division multiplexing () technology, where connections are assigned a unique wavelength on a particular optical fiber and the various wavelengths are multiplexed together into a single WDM signal on that fiber. Selecting the wavelengths to assign to each connection is the process known as wavelength assignment; this topic is introduced in Sect. 12.7. The combination of the routing and wavelength assignment processes is commonly referred to as RWA.

The underlying optical system technology determines the level of difficulty involved with wavelength assignment. With one class of technology, known as optical-electrical-optical (O-E-O), where connections repeatedly go from the optical domain to the electrical domain and back, the wavelengths assigned to connections on one link have no impact on the wavelengths that can be assigned on any other link. With such technology, wavelength assignment is a simple process. O-E-O technology, however, does have numerous drawbacks, as is outlined in Sect. 12.1.

In contrast, optical-optical-optical (O-O-O), or all-optical, technology potentially enables connections to remain in the optical domain from source to destination. While there are many advantages to this technology, it does lead to challenges in the wavelength assignment process. In practice, most networks fall somewhere in between pure O-E-O and O-O-O, where connections enter the electrical domain at intermediate points of the path, but do so infrequently. It is common practice to refer to these networks as all-optical, despite this term being a misnomer; this chapter makes use of this naming convention as well. The respective properties of O-E-O and all-optical networks drive the routing and wavelength assignment processes and are discussed further in Sect. 12.1.

Poor wavelength assignment in all-optical networks can lead to blocking, where the capacity exists to carry a new connection but no wavelength is free to assign to that connection. This is known as wavelength contention. Various wavelength assignment heuristics that are simple to implement yet effective in minimizing wavelength contention have been developed, as outlined in Sect. 12.8.

Another important design decision is whether routing and wavelength assignment should be treated as separate processes or whether they should be combined into a single operation. This dichotomy is discussed in Sect. 12.9.

In an all-optical network, there are numerous practical challenges of wavelength assignment that are tied to the physical optical system. Some of the more important effects are introduced in Sect. 12.10.

A recent development in the evolution of optical networks is flexible networking, introduced in Sect. 12.11. In traditional optical networks, there is a fixed number of wavelengths that can be carried on each optical fiber, and each such wavelength occupies a fixed amount of spectrum. In contrast, with flexible (or elastic) networks, the amount of spectrum allocated to a connection can be variable, potentially leading to a more efficient use of the fiber capacity. Selecting the portion of the spectrum to assign to a given connection is known as spectrum assignment. As elucidated in Sect. 12.12, spectrum assignment is analogous to, though more complex than, wavelength assignment. Various heuristics have been proposed to specifically address the nuances of spectrum assignment.

Flexible networks tend to lead to more contention issues as compared to traditional optical networks. This is especially true if the network is dynamic, such that connections are frequently established and then torn down. Dynamism is expected to result in fragmented spectrum utilization where many small blocks of unutilized spectrum exist as opposed to large contiguous blocks of free spectrum. To maintain a high degree of capacity efficiency, it is likely that spectral defragmentation would need to be performed on a periodic basis, where connections are shifted to different regions of the spectrum (and possibly rerouted) to create larger chunks of available spectrum. Defragmentation techniques are discussed in Sect. 12.12.

Network design can be performed on different time scales. In long-term network planning, there is sufficient time between the planning and provisioning processes such that any additional equipment required by the plan can be deployed. The planning emphasis is on determining the optimal strategy for accommodating a set of demands; the runtime of the design algorithms is not critical. In real-time operation, there is little time between planning and provisioning. It is assumed that the traffic must be accommodated using whatever equipment is already deployed in the network, even if that sacrifices optimality. Any design calculations must be performed quickly (milliseconds to minutes time scale, depending on the requirements of the network). The long-term and real-time dichotomy is also referred to in the literature as offline and online design, respectively. Methodologies for both time scales are discussed in this chapter.

A number of algorithms are introduced in this chapter. Implementation of many of these algorithms, using the C programming language, can be found in [12.1].

1 Terminology

Network terminology that is relevant to the topics of this chapter is defined here. Additionally, a high-level comparison of O-E-O and all-optical networks is provided, with an emphasis on the aspects that affect routing and wavelength assignment.

Network nodes are the sites in the network that source, terminate, and/or switch traffic. Sites that serve only to amplify the optical signal are not considered nodes. Network nodes are depicted as circles in the figures contained here. Network links are the physical optical fibers that run between the nodes, with the fibers typically deployed in pairs. Links are almost always bidirectional, with one fiber of the pair carrying traffic in one direction and the other fiber carrying traffic in the opposite direction. Network links are depicted in figures as a single line. In discussions on routing, links are often referred to as hops.

The interconnection pattern among the nodes represents the network topology. The nodal degree is the number of fiber-pairs incident on a node. The nodal degree may also be defined as the number of links incident on a node. A link may be populated with multiple fiber-pairs, such that the two definitions are not always equivalent.

In a network that employs O-E-O technology (i. e., an O-E-O network), the connection signal is carried in the optical domain on the links but is converted to the electrical domain at each node that is traversed by the connection. An end-to-end path looks as depicted in Fig. 12.1a-ca. The process of O-E-O conversion at each node cleans up the signal; more precisely, the signal is re-amplified, re-shaped, and re-timed. This is known as 3R regeneration. (Note that passing a signal through a line optical-amplifier is considered 1R regeneration, as it only re-amplifies the signal.) Regeneration equipment is needed for each individual signal that traverses a node; i. e., the WDM signal is demultiplexed into its constituent wavelengths and each one of the wavelengths that is routed through the node is passed through a regenerator. (Terminal equipment, as opposed to a regenerator, is used for a wavelength that is destined for the node as opposed to being routed through the node; a regenerator often comprises back-to-back terminal equipment, as discussed further in Sect. 12.7.1.) Thus, a network node may be equipped with hundreds of regenerators. (Limited multiwavelength regeneration has been demonstrated but is still in the early stages of research.)

The connection path from node A to node Z is shown by the dotted line. (a) In an O-E-O network, regeneration occurs at each node along the path of the connection. (b) In a true all-optical network, there is no regeneration along the path. The connection remains in the optical domain from end to end. (c) With an optical reach of \({\mathrm{2000}}\,{\mathrm{km}}\) and the link distances as shown, two regenerations are required for the connection. (© Monarch Network Architects LLC)

The large amount of equipment required for regeneration poses drawbacks in terms of cost, power consumption, reliability, and space requirements. In O-E-O networks, regeneration is the major contributor to these factors in the optical layer. Minimizing the number of nodes that a signal must traverse is desirable, as it minimizes the number of regenerations.

Conversely, a true all-optical signal that remains in the optical domain is illustrated in Fig. 12.1a-cb. No regenerations are present along the end-to-end path. The network nodes are equipped with elements such as reconfigurable optical add/drop multiplexers (s) that allow the signal to remain in the optical domain. This is known as optical bypass of the node.

An important property of all-optical networks is the optical reach, which is the distance that an optical signal can travel before it needs to be regenerated. (In practice, there are numerous factors other than distance that affect where regeneration is required [12.1]. For simplicity, we only consider distance here.) Most optical-bypass-enabled systems have an optical reach on the order of \(1500--3000\,{\mathrm{km}}\). If the length of the end-to-end path is longer than the optical reach, then the signal needs to be regenerated, possibly more than once, as illustrated in Fig. 12.1a-cc. This is more often the case in core networks (also known as backbone networks, long-haul networks, or cross-country networks), where some of the end-to-end paths may be a few thousand kilometers in extent.

Regeneration is almost always performed in a network node as opposed to at an intermediate point along a link. Thus, regeneration typically occurs prior to the signal traveling exactly a distance equal to the optical reach. This may lead to more regeneration being required than predicted by the connection distance. For example, in Fig. 12.1a-cc, the total end-to-end path distance is \({\mathrm{3900}}\,{\mathrm{km}}\), but with an optical reach of \({\mathrm{2000}}\,{\mathrm{km}}\), two regenerations are required, not one. Furthermore, a study performed on several realistic backbone networks indicated that the shortest path was not the path with the fewest number of required regenerations for roughly \({\mathrm{1}}\%\) of the source/destination pairs in those networks [12.1].

Taking advantage of extended optical reach and ROADMs to remove all, or at least most, of the required regeneration provides significant advantages in terms of cost, power consumption, reliability, and space. The drawback is that if a signal traverses a node in the optical domain, then it must be carried on the same wavelength into and out of the node. This is known as the wavelength continuity constraint. (Wavelength conversion in the optical domain is possible; however, it is costly and complex and has not been widely commercialized.) Thus, the presence of optical bypass creates an interdependence among the links of a network, where the wavelength assigned to a connection on one link affects the wavelengths that can be assigned to connections on other links. This leads to wavelength assignment being a critical aspect of network design in all-optical networks. Note that such an interdependence does not exist in O-E-O networks where regeneration at every node along a path allows the wavelengths to be assigned independently on each link.

2 Shortest-Path Routing Algorithms

We first consider algorithms that find a single path that is the shortest between a given source and destination [12.2]. If multiple paths are tied for the shortest, then one of the paths is found. Depending on the algorithm used, ties may be broken differently.

The class of shortest-path algorithms discussed here assumes that each link is assigned a cost metric and that the metric is additive. With an additive metric, the metric for a path equals the sum of the metrics of each link composing that path. Furthermore, there can be no cycles in the network where the sum of the link metrics around the cycle is negative. With these assumptions, a shortest-path algorithm finds the path from source to destination that minimizes the total cost metric.

These algorithms can be applied whether or not the links in the network are bidirectional. A link is bidirectional if traffic can be routed in either direction over the link. Different metrics can be assigned to the two directions of a bidirectional link. However, if the network is bidirectionally symmetric, such that the traffic flow is always two-way and such that the cost metric is the same for the two directions of a link, then a shortest path from source to destination also represents, in reverse, a shortest path from destination to source. In this scenario, which is typical of telecommunications networks, it does not matter which endpoint is designated as the source and which is designated as the destination.

There are numerous metrics that are useful for network routing. If the link metric is the geographic distance of the link, then the shortest-path algorithm does, indeed, find the path with the shortest distance. If all links are assigned a metric of unity (or any constant positive number), then the shortest-path algorithm finds the path with the fewest hops. Using the negative of the logarithm of the link availability as the metric produces the most reliable path, assuming that the failure rate of a path is dominated by independent link failures. (Note that the logarithm function can be used in general to convert a multiplicative metric to an additive metric.) Other useful metrics for routing in an all-optical network are the link noise figure and the link optical signal-to-noise ratio () [12.1].

In spite of the link metric not necessarily being associated with distance, these algorithms are commonly still referred to as shortest-path algorithms.

2.1 Dijkstra Algorithm

The best known shortest-path algorithm is the Dijkstra algorithm [12.2]. The algorithm works by tracking the shortest path discovered thus far to a particular node, starting with a path length of zero for the source node. It considers the resulting length if the path is extended to a neighboring node. If the path to the neighboring node is shorter than any previously discovered paths to that node, then the path to that neighbor is updated. Dijkstra is classified as a greedy algorithm because it makes the optimal decision at each step without looking ahead to the final outcome. Unlike many greedy algorithms, it is guaranteed to find the optimal solution; i. e., the shortest path from source to destination.

2.2 Breadth-First-Search Algorithm

An alternative shortest-path algorithm is breadth-first search () [12.3]. It works by discovering nodes that are one hop away from the source, then the nodes that are two hops away from the source, then the nodes that are three hops away from the source, etc., until the destination is reached.

As with Dijkstra, BFS produces the shortest path from source to destination. Additionally, if there are multiple paths that are tied for the shortest, BFS breaks the tie by finding the one that has the fewest number of hops. This can be useful in all-optical networks where the likelihood of wavelength contention increases with the number of hops in a path. Note that Dijkstra does not have a similar tie-breaking property; its tie-breaking is somewhat more arbitrary (e. g., it may depend on the order in which the link information is stored in the database).

2.3 Constrained Shortest-Path Routing

A variation of the shortest-path problem arises when one or more constraints are placed on the desired path; this is known as the constrained shortest path () problem. Some constraints are straightforward to handle. For example, if one is searching for the shortest path subject to all links of the path having at least \(N\) wavelengths free, then prior to running a shortest-path algorithm, all links with fewer than \(N\) free wavelengths are removed from the topology. As another example, the intermediate steps of the BFS shortest-path algorithm can be readily used to determine the shortest path subject to the number of path hops being less than \(H\), for any \(H> 0\) (similar to [12.4]). However, more generally, the CSP problem can be difficult to solve; for example, determining the shortest path subject to the availability of the path being greater than some threshold, where the availability is based on factors other than distance. Various heuristics have been proposed to address the CSP problem [12.5]. Some heuristics have been developed to specifically address the scenario where there is just a single constraint; this is known as the restricted shortest path () problem. Additionally, a simpler version of the multiconstraint problem arises when any path satisfying all of the constraints is desired, not necessarily the shortest path; this is known as the multiconstrained path () problem. An overview, including a performance comparison, of various heuristics that address the RSP and MCP problems can be found in [12.6].

2.4 \(K\)-Shortest-Paths Routing Algorithms

Routing all connection requests between a particular source and destination over the shortest path can lead to network congestion, as is discussed more fully in Sect. 12.4. It is generally advantageous to have a set of paths to choose from for each source/destination pair. A class of routing algorithms that is useful for finding a set of \(K\) possible paths between a given source and destination is the \(K\)-shortest-paths algorithm. Such an algorithm finds the shortest path, the second shortest path, the third shortest path, etc., up until the \(K\)-th shortest path or until no more paths exist. A commonly used \(K\)-shortest-paths algorithm is Yen’s algorithm [12.7]. As with shortest-path algorithms, the link metrics must be additive along the path.

It is important to note that the \(K\) paths that are found are not necessarily completely diverse with respect to the links that are traversed; i. e., some links may appear in more than one of the \(K\) paths. \(K\)-shortest-paths routing is typically used for purposes of load balancing, not for protection against failures. Explicitly routing for failure protection is covered in Sect. 12.3.

2.5 Shortest-Distance Versus Minimum-Hop Routing

As stated earlier, a variety of link metrics can be used in a shortest-path algorithm. Two of the most common metrics in a telecommunications network are link distance and unity (i. e., 1), producing the path of shortest geographic distance and the path of fewest hops, respectively. The most effective metric to use depends on the underlying network technology.

With O-E-O networks, the cost of a connection in the optical layer is dominated by the number of required regenerations. Regeneration occurs at every node that is traversed by the connection. Thus, utilizing 1 as the metric for all links in order to find the path with the least number of hops (and hence the fewest traversed nodes) is advantageous from a cost perspective. This is illustrated in Fig. 12.2 for a connection between nodes A and Z. Path 1 is the shortest-distance path at \({\mathrm{900}}\,{\mathrm{km}}\) but includes four hops. Path 2, though it has a distance of \({\mathrm{1200}}\,{\mathrm{km}}\), is typically lower cost in an O-E-O network because it has only two hops and, thus, requires fewer regenerations.

Selecting a metric to use with all-optical networks is not as straightforward. Assuming that the number of regenerations required for a connection is dominated by the path distance, then searching for the shortest-distance path will typically minimize the number of regenerations. However, wavelength assignment for a connection becomes more challenging as the number of path hops increases. For all-optical networks, one effective strategy is to generate candidate paths by invoking a \(K\)-shortest-paths algorithm twice, once with distance as the link metric and once with 1 as the link metric. Assuming that link load is not a concern, the most critical factor in selecting one of the candidate paths is typically cost. Thus, the candidate path that requires the fewest number of regenerations is selected. If there are multiple paths tied for the fewest regenerations, then the one with the fewest number of hops is selected.

This strategy is illustrated in Fig. 12.3. Three of the possible paths between nodes A and Z are: Path 1: A–B–C–Z of distance \({\mathrm{2500}}\,{\mathrm{km}}\) and three hops; Path 2: A–D–E–F–G–Z of distance \({\mathrm{1500}}\,{\mathrm{km}}\) and five hops; and Path 3: A–H–I–J–Z of distance \({\mathrm{1600}}\,{\mathrm{km}}\) and four hops. Assume that the optical reach is \({\mathrm{2000}}\,{\mathrm{km}}\), such that there can be no all-optical segment that is longer than this distance. With this assumption, Path 1 requires one regeneration, whereas Paths 2 and 3 do not require any regeneration. Of the latter two paths, Path 3 has fewer hops and is, thus, more desirable despite it being longer than Path 2 (again, assuming that link load is not a factor).

Assume that this is an all-optical network with an optical reach of \({\mathrm{2000}}\,{\mathrm{km}}\). Path 1, A–B–C–Z, has the fewest hops but requires one regeneration. Path 2, A–D–E–F–G–Z, and Path 3, A–H–I–J–Z, require no regeneration. Of these two lowest-cost paths, Path 3 is preferred because it has fewer hops (© Monarch Network Architects LLC)

3 Disjoint-Path Routing for Protection

Network customers desire a certain level of availability for each of their connections. Availability is defined as the probability of being in a working state at a given instant of time. The desired availability is typically specified contractually in the service level agreement () with the network provider. A common cause of connection failure is the failure of one or more links in the end-to-end path. Link failures are typically caused by fiber cuts or optical amplifier failures. Being able to route around the failed link allows the connection to be restored to the working state.

In some protection schemes, it is necessary to first identify which link has failed; the detour route around that failed link is then utilized. Determining the location of a failure can be time consuming. To expedite the restoration process and improve availability, network providers often utilize protection schemes where the same backup path is utilized regardless of where the failure has occurred in the original (i. e., primary) path. This allows the protection process to commence prior to the completion of the fault location process. In order to implement such a failure-independent protection scheme, also known as path protection, it is necessary that the backup path be completely link-disjoint from the primary path. If a very high level of availability is desired for a connection, then it may be necessary to protect against node failures in addition to link failures. In this scenario, the backup path must be completely link and node disjoint from the primary path. However, it should be noted that node failures occur much less frequently than link failures, such that node disjointness is often not required.

Shortest-path algorithms find the single shortest path from source to destination. With path protection, it is desirable to find two disjoint paths where the overall sum of the link metrics on the two paths is minimized. One of the paths is used as the primary path (typically the shorter one) with the other serving as the backup path.

It may seem reasonable to find the desired two paths by invoking a shortest-path algorithm twice. After the first invocation, the links (and intermediate nodes if node disjointness is also required) composing the first path are removed from the network topology that is used in the second call of the algorithm. Clearly, if a path is found by the second invocation, then that path is guaranteed to be disjoint from the first path that was found.

Although this strategy may appear to be reasonable, there are potential pitfalls. First, it inherently assumes that the shortest disjoint pair of paths includes the shortest path. As shown in Fig. 12.4a-c, this is not always the case. In this example, the source is node A and the destination is node Z. Assume that this is an O-E-O network, with all links assigned a metric of 1. The shortest path (i. e., the fewest-hops path) is A–B–C–Z, as shown in Fig. 12.4a-ca. If the links of this path are removed and the shortest-path algorithm is invoked again, then the resulting path is A–F–H–I–J–K–L–Z; see Fig. 12.4a-cb. The sum of the metrics of these two paths is 10. However, the shortest disjoint pair of paths is actually A–B–D–E–Z and A–F–G–C–Z, as illustrated in Fig. 12.4a-cc; this pair of paths has a total metric of 8. Note that the shortest path, A–B–C–Z, is not part of the optimal solution.

The pair of disjoint paths with the fewest number of total hops is desired between nodes A and Z. (a) The first call to the shortest-path algorithm returns the path shown by the dotted line. (b) The network topology after pruning the links composing the shortest path. The second call to the shortest-path algorithm finds the path indicated by the dashed line. The total number of hops in the two paths is ten. (c) The shortest pair of disjoint paths between nodes A and Z is shown by the dotted and dashed lines; the total number of hops in these two paths is only eight (© Monarch Network Architects LLC)

Not only may the two-invocation method produce a suboptimal result, it may fail completely. This is illustrated in Fig. 12.5a-c. Assume that this is an all-optical network, and the metric is the physical distance as shown next to each link. In this figure, the shortest path from A to Z is A–D–C–Z, as shown in Fig. 12.5a-ca. If the links of this path are removed from the topology, as shown in Fig. 12.5a-cb, then no other paths exist between nodes A and Z. Thus, the process fails to find a disjoint pair of paths despite the existence of such a pair, as shown in Fig. 12.5a-cc: A–B–C–Z and A–D–E–Z. This type of scenario, where the two-invocation methodology fails despite the existence of disjoint paths, is called a trap topology.

The shortest pair of disjoint paths is desired between nodes A and Z. (a) The first call to the shortest-path algorithm returns the path shown by the dotted line. (b) The network topology after pruning the links composing the shortest path. The second call to the shortest-path algorithm fails, as no path exists between nodes A and Z in this pruned topology. (c) The shortest pair of disjoint paths between nodes A and Z, shown by the dotted and dashed lines (© Monarch Network Architects LLC)

Rather than using the two-invocation method, it is recommended that an algorithm specifically designed to find disjoint paths be used. The two most commonly used shortest-disjoint-paths algorithms are the Suurballe algorithm [12.8, 12.9] and the Bhandari algorithm [12.3]. Both algorithms make use of a shortest-path algorithm; however, extensive graph transformations are performed as well to ensure that the shortest pair of disjoint paths is found, assuming such a pair exists. Both algorithms require that the link metric be additive and both algorithms are guaranteed to produce the optimal result. They can be utilized to find the shortest pair of link-disjoint paths or the shortest pair of link-and-node-disjoint paths. The runtimes of the Suurballe and Bhandari algorithms are about the same; however, the latter may be more easily adapted to various network routing applications. We focus on its use here.

The Bhandari algorithm is readily extensible. For example, a mission-critical connection may require a high level of availability such that three disjoint paths are needed, one primary with two backups. If a failure occurs on the primary path, the first backup path is used. If a failure occurs on the backup path prior to the primary path being repaired, then the connection is moved to the second backup path. The Bhandari algorithm can be used to find the shortest set of three disjoint paths, if they exist. More generally, the Bhandari algorithm can be used to find the shortest set of \(N\) disjoint paths, for any \(N\), assuming \(N\) such paths exist. (In most optical networks, however, there are rarely more than just a small number of disjoint paths between a given source and destination, especially in a backbone network.)

In some scenarios, completely disjoint paths between the source and destination do not exist. In such cases, the Bhandari algorithm can be utilized to find the shortest maximally-disjoint set of paths. The resulting paths minimize the number of links (and optionally nodes) that are common to multiple paths. This is illustrated in Fig. 12.6, where the shortest maximally-disjoint pair of paths is A–C–D–G–H–Z and A–E–F–D–G–I–J–K–Z. Note that link DG is common to both paths.

3.1 Disjoint-Path Routing with Multiple Sources and/or Destinations

An important variation of the shortest-disjoint-paths routing problem exists when there is more than one source and/or destination, and each of the source/destination paths must be mutually disjoint for protection purposes. (Note that this is different from multicast routing, where the goal is to create a tree from one source to multiple destinations.) This protection scenario arises, for example, when backhauling traffic to multiple sites, utilizing redundant data centers in cloud computing, and routing through multiple gateways in a multidomain topology [12.1]. The example of Fig. 12.7a is used to illustrate the basic strategy. We assume that this is a backhauling example, where node A backhauls its traffic to two diverse sites, Y and Z. (Backhauling refers to the general process of transporting traffic from a minor site to a major site for further distribution.) Additionally, it is required that the paths from A to these two sites be disjoint.

(a) A disjoint path is desired between one source (node A) and two destinations (nodes Y and Z). (b) A dummy node is added to the topology and connected to the two destinations via links that are assigned a metric of zero. A shortest-disjoint-paths algorithm is run between node A and the dummy node to implicitly generate the desired disjoint paths, as shown by the dotted line and the dashed line (© Monarch Network Architects LLC)

In order to apply the shortest-disjoint-paths algorithm, a dummy node is added to the network topology as shown in Fig. 12.7b. Links are added from both Y and Z to the dummy node, and these links are assigned a metric of 0. The shortest-disjoint-paths algorithm is then run with node A as the source and the dummy node as the destination. This implicitly finds the desired disjoint paths as shown in the figure.

A similar strategy is followed if there are \(D\) possible destinations, with \(D\) > 2, and disjoint paths are required from the source to \(M\) of the destinations, with \(M{\leq}D\). Each of the \(D\) destinations is connected to a dummy node through a link of metric 0. An extensible shortest-disjoint-paths algorithm such as the Bhandari algorithm is invoked between the source and the dummy node to find the desired \(M\) disjoint paths. Note that this procedure implicitly selects \(M\) of the \(D\) destinations that produce the shortest such set of disjoint paths.

If the scenario is such that there are multiple sources and one destination, then the dummy node is connected to each of the sources via links with a metric of 0. The shortest-disjoint-paths algorithm is then run between the dummy node and the destination. If there are both multiple sources and multiple destinations, then two dummy nodes are added, one connected to the sources and one connected to the destinations. The shortest-disjoint-paths algorithm is then run between the two dummy nodes. The algorithm does not allow control over which source/destination combinations will result.

3.2 Shared-Risk Link Groups

One challenge of routing in practical networks is that the high-level network topology may not reveal interdependencies among the links. Consider the network topology shown in Fig. 12.8a and assume that it is desired to find the shortest pair of disjoint paths from node A to node Z. From this figure, it appears that the paths A–B–Z and A–Z are the optimal solution. However, the fiber-level depiction of the network, shown in Fig. 12.8b, indicates that links AB and AZ are not fully disjoint. The corresponding fibers partially lie in the same conduit exiting node A such that a rupture to the conduit likely causes both links to fail. These two links are said to constitute a shared-risk link group (). Links that are a member of the same SRLG are not fully disjoint. It is desirable to avoid a solution where an SRLG link is in the primary path and another link in that same SRLG is in the backup path.

(a) In the link-level view of the topology, links AB and AZ appear to be disjoint. (b) In the fiber-level view, these two links lie in the same conduit exiting node A and are, thus, not fully diverse. A single cut to this section of conduit can cause both links to fail. (c) Graph transformation to account for the SRLG extending from node A (© Monarch Network Architects LLC)

For the SRLG configuration depicted in Fig. 12.8b, it is straightforward to find truly disjoint paths. First, a graph transformation is performed as shown in Fig. 12.8c, where a dummy node is added and each link belonging to the SRLG is modified to have this dummy node as its endpoint instead of node A. A link of metric 0 is added between node A and the dummy node. The shortest-disjoint-paths algorithm is run on the modified topology to find the desired solution: paths A–B–Z and A–C–D–Z. (While it is not necessary in this example, for this type of SRLG graph transformation, the shortest-disjoint-paths algorithm should be run with both link and node disjointness required [12.1].)

The scenario of Fig. 12.8 is one class of SRLG, known as the fork configuration. There are several other SRLG configurations that appear in practical networks [12.1, 12.3]. There are no known computationally efficient algorithms that are guaranteed to find the optimal pair of disjoint paths in the presence of any type of SRLG. In some cases, it may be necessary to employ heuristic algorithms that are not guaranteed to find the optimal (shortest) set of disjoint paths [12.10].

4 Routing Strategies

Monitoring network load is an essential aspect of network design. If too much traffic is carried on a small subset of the links, it may result in an unnecessarily high blocking rate, where future connections cannot be accommodated despite the presence of free capacity on most network links. The routing strategy clearly affects the network load. We discuss three of the most common routing strategies here.

4.1 Fixed-Path Routing

The simplest routing strategy is known as fixed-path routing. One path is calculated for each source/destination pair, and that path is utilized for every connection request between those two nodes. If any link along that path has reached its capacity (e. g., 80 connections are already routed on a link that supports 80 wavelengths), then further requests between the source/destination pair are blocked.

This strategy is very simple to implement, as it requires no calculations to be performed on an ongoing basis; all calculations are performed up front. Routing is a simple binary decision: either all links of the precalculated path have available capacity for the new connection, or they do not.

The drawback to fixed-path routing is that it is completely nonadaptive. The same path is selected regardless of the load levels of the links along that path. Such load-blind routing typically leads to uneven load distribution among the links, which ultimately may lead to premature blocking.

Despite this drawback, fixed-path routing is employed by many (if not most) network service providers, where the shortest-distance path is the one path selected for each source/destination pair. The rationale for this strategy is that it minimizes the latency of the connection. (Latency is the propagation delay from source to destination; in the optical layer, latency is typically dominated by the distance of the path.) While there are some network customers where latency differences on the order of microseconds can be critical (e. g., enterprises involved with electronic financial trading [12.11]), for most applications, a tolerance of a few extra milliseconds is acceptable. In most realistic networks, it is possible to find alternative paths that are very close in distance to the shortest path such that the increase in latency is acceptably small. By not considering such alternative paths, fixed-path routing is overly restrictive and can lead to poor blocking performance.

4.2 Alternative-Path Routing

A second routing strategy is alternative-path routing. In contrast to fixed-path routing, a set of candidate paths is calculated for each source/destination pair. At the time of a connection request, one of the candidate paths is selected, typically based on the current load in the network. For example, the candidate path that results in the lowest load on the most heavily loaded link of the path is selected. This enables adaptability to the current load levels in the network.

It is well known that alternative-path routing can lead to lower blocking levels as compared to fixed-path routing [12.12, 12.13]. One study performed by a service provider on its own large backbone network demonstrated one to two orders of magnitude lower blocking probability using alternative-path routing, even when limiting the length of the alternative paths for purposes of latency [12.14].

Furthermore, a large number of candidate paths is not required to achieve significant blocking improvements. Generating on the order of three candidate paths is often sufficient to provide effective load balancing. The key to the success of this strategy lies in the choice of the candidate paths. There is typically a relatively small set of links in a network that can be considered the hot links. These are the links that are likely to be in high demand and, thus, likely to become congested. An effective strategy to determine the expected hot links is to perform a design for a typical traffic profile where all connections in the profile follow the shortest path. The links that are the most heavily loaded in this exercise are generally the hot links.

With knowledge of the likely hot links, the candidate paths for a given source/destination pair should be selected such that the paths exhibit diversity with respect to these links. It is not desirable to have a particular hot link be included in all of the candidate paths, if possible, as this will not provide the opportunity to avoid that link in the routing process. Note that there is no requirement that the candidate paths for a given source/destination be completely diverse. While total path disjointness is necessary for protection, a less severe diversity requirement is sufficient for purposes of load balancing [12.15].

For completeness, we mention one variation of alternative-path routing known as fixed alternative-path routing. In this scheme, a set of candidate paths is generated and ordered. For each connection request, the first of the candidate paths (based on the fixed ordering) that has free capacity to accommodate the request is selected. Thus, load balancing is implemented only after one or more links in the network are full, thereby providing limited benefit as compared to fixed-path routing.

4.3 Dynamic Routing

With dynamic routing, no routes are precalculated. Rather, when a connection request is received by the network, a search is performed for a path at that time. Typically, path selection is based on cost and/or load. For example, consider assigning each link in an O-E-O network a value of \(\mathrm{LARGE}+L_{j}\), where LARGE is a very large constant and \(L_{j}\) is a metric that reflects the current load level on link \(j\). (Any load-related metric can be used, as long as the metric is additive.) Running a shortest-path algorithm with these link values will place the first priority on minimizing the number of hops in the path, due to the dominance of the LARGE component. In an O-E-O network, minimizing the number of hops also minimizes the number of regenerations required. The second priority is selecting a path of minimal load, as defined by the metric. Once the network contains several full or close-to-full links, the priorities may shift such that each link \(j\) is assigned a metric of simply \(L_{j}\). With this assignment, the path of minimal load is selected regardless of the number of hops.

Dynamic routing clearly provides the most opportunity for the path selection process to adapt to the current state of the network. This may appear to be the optimal routing strategy; however, there are important ramifications in an all-optical network. By allowing any path to be selected as opposed to limiting the set of candidate paths to a small set of precalculated paths, connections between a given source/destination pair will tend to follow different paths. This has the effect of decreasing the network interference length, which can potentially lead to more contention in the wavelength assignment process. The interference length is the average number of links shared by two paths that have at least one link in common [12.16]. In addition, if the all-optical network makes use of wavebands, where groups of wavelengths are treated as a single unit, then the diversity of paths produced by a purely dynamic strategy can be detrimental from the viewpoint of efficiently packing the wavebands. Note that wavebands may be required with some of the technology that has been proposed for achieving large fiber capacity in future networks, as is discussed in Sect. 12.12.5 [12.17, 12.18].

Another drawback to dynamic routing is the additional delay inherent in the scheme. This is especially true in a centralized architecture where any route request must be directed to a single network entity (e. g., a path computation element () [12.19, 12.20]). Delays incurred due to communication to and from the PCE, as well as possible queuing delays within the PCE, may add tens of milliseconds to the route calculation process. For dynamic applications that require very fast connection setup, this additional delay may be untenable.

To summarize this section on routing strategies, studies have shown that alternative-path routing provides a good compromise between adaptability and calculation time, and is more amenable to wavelength assignment in an all-optical network. If all candidate paths between a source/destination pair are blocked, then dynamic routing can be invoked.

5 Routing Order

In real-time network operation, connection requests are processed as soon as they are received. Thus, the routing process typically consists of searching for a path for just one connection (if batched routing is employed, then there may be a small number of connections to be routed at one time). In contrast, with long-term network design, routing is likely to be performed on a large traffic matrix comprising hundreds of connections. The order in which the connections are routed may have a significant impact on the ultimate level of network loading and blocking.

An effective ordering approach is to give routing priority to the source/destination pairs for which finding a path is likely to be more problematic. Thus, factors such as the relative locations of the two endpoints, whether or not a disjoint protection path is required for the connection, and whether or not the candidate paths for the connection contain a large number of hot links should be considered when assigning the routing order. For example, finding a path for a source/destination pair that is located at opposite ends of a network is likely to be more difficult than finding a path between an adjacent source and destination. It is typically advantageous to route such a connection early in the process when the links are relatively lightly loaded to minimize the constraints. Similarly, requiring disjoint paths for purposes of protection is already a significant constraint on the possible number of suitable paths. Giving priority to routing connections that require protection may enhance the likelihood that a feasible pair of disjoint paths can be found.

One enhancement of this strategy is to combine it with a metaheuristic such as simulated annealing [12.21]. A baseline solution is first generated based on the priority ordering method described above. The ordering that generated the baseline result is passed to the simulated annealing process. In each step of simulated annealing, the orderings of two connections are swapped, and the routing process rerun. If the result is better (e. g., less blocking or lower levels of resulting load), this new ordering is accepted. If the result is worse, the new ordering is accepted with some probability (this allows the process to extricate itself from local minima). The probability threshold becomes lower as the simulated annealing process progresses. If simulated annealing is run long enough, the overall results are likely to improve as compared to the baseline solution.

Other ordering schemes can be found in [12.1]. With rapid design runtimes, several orderings can be tested and the best result selected.

6 Multicast Routing

Video distribution is one of the major drivers of traffic growth in networks. Such services are characterized by a single source delivering the same traffic to a set of destinations. One means of delivering these services is to establish multiple unicast connections between the source and each of the destinations. Alternatively, a single multicast tree that includes each of the destinations can be established [12.22]. These two options are illustrated in Fig. 12.9. The multicast tree eliminates duplicate routing on various links of the network (e. g., link QR in Fig. 12.9) and is thus more capacity efficient. One study in a realistic backbone network showed that multicast provides a factor of roughly two to three improvement in capacity as compared to multiple unicast connections, where capacity was measured as the average number of wavelengths required on a link [12.1].

A tree that interconnects the source to all of the destinations is known as a Steiner tree (where it is assumed that all links are bidirectionally symmetric; i. e., two-way links with the same metric in both directions). The weight of the tree is the sum of the metrics of all links that compose the tree. As with point-to-point connections, it is often desirable to find the routing solution that minimizes the weight of the tree. Finding the Steiner tree of minimum weight is, in general, a difficult problem to solve unless the source is broadcasting to every node in the network. However, several heuristics exist to find good approximate solutions to the problem [12.23]. Two such heuristics are minimum spanning tree with enhancement () [12.24, 12.25] and minimum paths () [12.26]. Examples of these heuristics, along with their C-code implementation, can be found in [12.1]. Based on studies utilizing realistic backbone networks, MP tends to produce better results, although the relative performance of MSTE improves as the number of destinations increases [12.1]. In the case of broadcast, an algorithm such as Prim’s or Kruskal’s can be used to optimally find the tree of minimum weight [12.2].

The multicast algorithms enumerated above provide a single path from the source to any of the destinations. If a failure occurs along that path, connectivity with that destination is lost. Furthermore, due to the tree topology, a link failure along the tree often disconnects several destinations. Providing protection for a multicast tree, where connectivity with all destinations is maintained regardless of any single link failure, can be cumbersome and may require a large amount of protection resources.

Various strategies have been devised for providing multicast protection [12.27, 12.28, 12.29]. One approach common to several of these strategies is to make use of segment-based protection, where the multicast tree is conceptually partitioned into segments, and each segment is protected separately. A very different concept that has been applied to multicast protection is network coding [12.30, 12.31, 12.32]. With this approach, which has applications beyond just multicast protection, the destinations receive independent, typically linear, combinations of various optical signals rather than the individual optical signals that originated at the source. Processing at one or more nodes is required to create these signal combinations. The processing is preferably performed in the optical domain, but electrical processing may be required to generate more complex signal combinations. With proper processing of the received data, the destination can recreate the original signals. For protection purposes, the transmissions are sent over diverse paths and the signal combinations are such that if one signal is lost due to a failure, it can be recovered (almost) immediately from the other signals that are received. To mine the full benefits of network coding, there must be multiple signals that can be advantageously combined, as is often the case when routing a large amount of multicast traffic. Network coding may result in a more efficient use of network resources; however, even if the amount of required capacity is approximately the same as in a more conventional shared-mesh restoration approach, the recovery time is typically much faster.

Another important design aspect of multicast routing in an all-optical network is the selection of regeneration sites, where judicious use of regeneration may reduce the cost of the multicast tree. For example, it may be advantageous to favor the branching points of a multicast tree for regeneration. Refer to the tree of Fig. 12.10a,b, where the source is node Q, and the multicast destinations are nodes W, X, Y, and Z. Assume that the optical reach is \({\mathrm{2000}}\,{\mathrm{km}}\). In Fig. 12.10a,ba, the signal is regenerated at the furthest possible node from the source without violating the optical reach. This results in regenerations at nodes S and T. If, however, the regeneration occurs at the branching point node R as in Fig. 12.10a,bb, then no other regeneration is needed, resulting in a lower cost solution.

6.1 Manycast Routing

In one variation of multicast routing, only \(N\) of the \(M\) destinations must be reached by the multicast tree, where \(N<M\). The routing goal is still to produce the lowest-weight multicast tree; however, the routing algorithm must incorporate the selection of the \(N\) destinations as well. This scenario is known as manycast.

Manycast is useful in applications such as distributed computing. For example, there may be \(M\) processing centers distributed in the network, but an end user requires the use of only \(N\) of the processors. The goal of the end user is typically to minimize latency; it does not have a preference as to which \(N\) data centers are utilized. Optimal, or near-optimal, manycast routing, where the link metric is distance, is useful for such a scenario.

Various heuristic algorithms have been proposed for manycast routing, where the goal is to find the manycast tree of lowest weight. One effective heuristic in particular is a variation of the MP multicast algorithm [12.33].

7 Wavelength Assignment

Routing is one critical aspect of network design. Another important component is wavelength assignment, where each routed connection is assigned to a portion of the spectrum centered on a particular wavelength. Each time a connection enters the electrical domain, the opportunity exists (typically) to change the wavelength to which the connection has been assigned. The wavelength assignment process must satisfy the constraint that no two connections can be assigned the same wavelength on a given fiber. If this constraint were to be violated, the connections would occupy the same portion of the spectrum and interfere with each other. Note that when a link is populated with multiple fiber pairs, there can be multiple connections carried on the same wavelength on the link as long as each of the connections is routed on a different fiber.

7.1 Interaction Between Regeneration and Wavelength Assignment

As discussed in Sect. 12.1, all traffic that is routed through a node in an O-E-O network is regenerated. Regeneration is most commonly accomplished through the use of two back-to-back transponders, as shown in Fig. 12.11. A signal enters and exits a transponder in the optical domain but is converted to the electrical domain internally. The transponders interface to each other on a common wavelength (typically \({\mathrm{1310}}\,{\mathrm{nm}}\)) through what is known as the short-reach interface. At the opposite end, the transponder receives and transmits a WDM-compatible wavelength, where the wavelength is generally in the \({\mathrm{1500}}\,{\mathrm{nm}}\) range. The WDM-compatible wavelengths that are received/transmitted by the two transponders (i. e., \(\lambda_{j}\) and \(\lambda_{k}\) in Fig. 12.11) can be selected independently, thereby enabling wavelength conversion. (Wavelengths are also referred to as lambdas, with wavelength \(j\) represented by \(\lambda_{j}\).)

Because of the flexibility afforded by regeneration, wavelengths can be assigned arbitrarily in an O-E-O network as long as each connection on a fiber is assigned a unique wavelength. This is illustrated in Fig. 12.12, where the lower most connection enters the node from the West link on wavelength 1 and exits the node on the East link on wavelength 4. In the reverse direction, wavelength 4 is converted to wavelength 1. (By convention, the nodal fibers at a degree-two node are referred to as West and East; it may have no correlation to the actual geography of the node.) There is no requirement that the wavelength must be changed. As shown in Fig. 12.12, another connection enters and exits the node on \(\lambda_{2}\).

A representative node in an O-E-O network. Wavelengths can be assigned independently to the connections on each link as long as each wavelength assigned on a fiber is unique. The lower-most connection is carried on \(\lambda_{1}\) on the West link and carried on \(\lambda_{4}\) on the East link (© Monarch Network Architects LLC)

In contrast, signals in an all-optical network are not regenerated at every node along the path. It is only required that a signal be regenerated prior to it traveling a distance that is longer than the system's optical reach. If a signal is not regenerated at a node (i. e., it traverses the node in the optical domain), then it is carried on the same wavelength into and out of the node. More generally, if the signal traverses \(N\) consecutive nodes in the optical domain, then it is carried on the same wavelength on \(N+1\) links. The wavelength assignment process consists of finding a single wavelength that is available on each of these links. We use the term all-optical segment to refer to a portion of a connection that rides in the optical domain without any conversion to the electrical domain.

The difficulty of assigning wavelengths clearly depends on two factors: the number of links in an all-optical segment and the utilization level on those links. As the number of links in an all-optical segment increases, the difficulty in finding a wavelength that is free on each one of the links typically increases as well. Similarly, as the utilization level of a link increases, fewer wavelengths are available, thus making it less likely that a free wavelength can be found on the entire all-optical segment.

This presents an interesting tradeoff. Each regeneration requires the deployment of two transponders, adding to the cost, power consumption, and failure rate of the associated connection. However, wavelength assignment becomes simpler the more regeneration that is present. This is illustrated in Fig. 12.13. Figure 12.13a shows a true all-optical connection that remains in the optical domain from node A to node Z. In order to find a suitable wavelength to assign to this connection, the same wavelength must be available on all seven links that compose this end-to-end path. Figure 12.13b shows that same connection with an intermediate regeneration at node D. The presence of regeneration simplifies the wavelength assignment process. One needs to find a wavelength that is free on the three links from node A to node D and a wavelength that is free on the four links from node D to node Z. There is no requirement that these two wavelengths be the same.

(a) The entire end-to-end connection is carried in the optical domain. The same wavelength must be available on all seven links traversed by the connection. (b) The connection is regenerated at node D, creating two all-optical segments: AD and DZ. These two segments can be assigned a different wavelength. (c) Assume that an available wavelength cannot be found from A to D, but \(\lambda_{j}\) is available from A to C, and \(\lambda_{k}\) is available from C to D. An additional regeneration can be added at node C to make wavelength assignment feasible for this connection (© Monarch Network Architects LLC)

If wavelength assignment fails for a connection, where a suitable wavelength cannot be found for one or more of the all-optical segments composing the connection’s path, then at least four design options exist. First, the connection request can be blocked. Second, it may be possible to select different regeneration locations for the connection, thereby producing a different set of all-optical segments. Wavelength assignment may be feasible on this alternate set of segments. For example, in Fig. 12.1a-cc (this figure appeared in Sect. 12.1), the two required regenerations are chosen to be at nodes C and F, producing the all-optical segments AC, CF, and FZ. Alternatively, the two regenerations could be placed at nodes B and E without violating the optical-reach constraint (there are other alternatives as well). This choice produces a completely different set of all-optical segments: AB, BE, and EZ. If moving the regenerations does not produce a feasible wavelength assignment, or if there are no regenerations for the connection, then a third option is to route the connection on a different path and re-attempt the wavelength assignment process on the new path. As a fourth option, the connection can remain on the same path, but one or more regenerations can be added even though they are not required due to optical-reach concerns, thus incurring greater cost. This last option is illustrated in Fig. 12.13c. Assume that a wavelength is available along the DZ segment, but no single wavelength is available on all of the links of the AD segment. Furthermore, assume that \(\lambda_{j}\) is available on the links from node A to node C, and \(\lambda_{k}\) is available on the link between node C and node D. By adding a regeneration at node C (and its attendant costs), wavelength assignment for this connection becomes feasible.

Numerous studies have been performed to study the level of blocking that results due to a failure of the wavelength assignment process (assuming extra regenerations cannot be added to alleviate wavelength contention). The consensus of the majority of these studies is that sparse regeneration provides enough opportunities for wavelength conversion, resulting in a relatively low level of blocking due to wavelength contention [12.1, 12.12, 12.34, 12.35]. In a continental-scale network, the regeneration that is required based on optical reach is minimal (e. g., three regenerations in a connection that extends from the East coast to the West coast in a United States backbone network) but sufficient to achieve low levels of wavelength contention. In networks of smaller geographic extent, e. g., metro networks, the optical reach may be longer than any end-to-end connection such that regeneration is not required. However, there are other network functionalities that limit the extent of any all-optical segment. For example, low-data-rate traffic may need to be processed periodically by a grooming switch or router to make better use of the fiber capacity. Currently, grooming devices operate in the electrical domain such that the grooming process concurrently regenerates the signal. Thus, sparse grooming translates to sparse regeneration, which, in turn, allows sparse wavelength conversion. The net effect is that wavelength contention can remain low in a range of networks. Furthermore, it has been shown that just a small amount of extra regeneration effectively eliminates wavelength contention in a typical network [12.35].

It should be noted that some studies appear to indicate that wavelength contention is a major problem that results in excessive blocking. Further investigation of the details of these studies may reveal flaws in the underlying assumptions. For example, the study may not take advantage of regeneration as an opportunity to convert the wavelength. Another possible weakness is that effective wavelength assignment algorithms may not be employed. Wavelength assignment algorithms are covered in the next section.

8 Wavelength Assignment Algorithms

In this section, we assume that routing and wavelength assignment are two separate steps; Sect. 12.9 considers integrated approaches. It is assumed that one or more new connection requests are passed to the network design process. In the first step, each connection in the set is routed. Once a path has been selected for a connection, the required regeneration locations along that path are determined. This yields a set of all-optical segments (i. e., the portions of the paths that lie between an endpoint and a regeneration or between two regenerations). The list of all-optical segments is passed to the wavelength assignment algorithm, which is responsible for selecting a feasible wavelength for each segment. In order for a wavelength assignment to be feasible, any two all-optical segments that are routed on the same fiber at any point along their respective paths must be assigned different wavelengths. Furthermore, the wavelength assignment must be compatible with the technology of the underlying optical system. An optical system supports a limited number of wavelengths on a fiber, thereby placing a bound on the number of different wavelengths that can be used in the assignment process. For example, a backbone network system may support 80 wavelengths on a fiber, whereas a metro network deployment may support only 40 wavelengths (there are typically fewer wavelengths needed in a metro network and 40-wavelength technology is of lower cost than 80-wavelength technology).

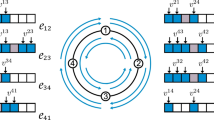

The wavelength assignment problem is analogous to the graph coloring problem, where each node of a graph must be assigned a color subject to the constraint that any two nodes that are adjacent in the graph topology must be assigned different colors. The objective is to color the graph using as few colors as possible. To elucidate the analogy with wavelength assignment, let each all-optical segment in a network design correspond to a node in the coloring graph. Links are added between any two nodes of the coloring graph if the paths of the two corresponding all-optical segments have any fibers in common. The resulting graph is known as the conflict graph, which is illustrated for a small example with four all-optical segments in Fig. 12.14. (This graph is also called the auxiliary graph.) Solving the graph coloring problem on this graph produces the wavelength assignment; i. e., the color assigned to a node represents the wavelength assigned to the all-optical segment corresponding to that node. The resulting wavelength assignment is such that the minimum number of wavelengths is used.

(a) Four all-optical segments, each one numbered, are routed as indicated by the dotted lines. It is assumed that there is one fiber-pair on each link. (b) The resulting conflict graph, where each node represents one of the all-optical segments. A link exists between two nodes if the corresponding all-optical segments are routed on the same fiber on any link in the original graph

There are no known polynomial-time algorithms for optimally solving the graph coloring problem for general instances of the problem. This implies that there are no corresponding efficient algorithms that can optimally solve general instances of the wavelength assignment problem. Thus, heuristic algorithms are typically used. The heuristics encompass two aspects: (1) generating the order in which the all-optical segments are assigned a wavelength and (2) selecting a wavelength for each of the segments. As with routing order, there are many strategies that can be used for ordering the all-optical segments. Some of the strategies developed for ordering the nodes in a graph coloring can readily be extended to this problem, most notably the Dsatur strategy [12.36].

Developing heuristics to select which wavelength to assign to an all-optical segment is a well-researched topic, and numerous such heuristics have been proposed [12.37]. They differ in factors such as complexity and the amount of network-state information that needs to be monitored. In spite of the array of proposals, two of the simplest heuristics, both proposed in the very early days of optical-network research, remain the algorithms most commonly used for wavelength assignment. These heuristics are first-fit and most-used [12.38], described in further detail below. Both of these algorithms are suitable for any network topology and provide relatively good performance in realistic networks. For example, wavelength contention does not generally become an issue until there are at least a few links in the network with roughly \({\mathrm{85}}\%\) of the wavelengths used. Whether first-fit or most-used performs better for a particular network design depends on the network topology and the traffic. In general, the differences in performance are small. One advantage of first-fit is that, in contrast to most-used, it does not require any global knowledge, making it more suitable for distributed implementation.

Either of the schemes can be applied whether there is a single fiber-pair or multiple fiber-pairs on a link. Note that there are wavelength assignment schemes specifically designed for the multiple fiber-pair scenario, most notably the least-loaded scheme [12.12]. This has been shown to perform better than first-fit and most-used when there are several fiber-pairs per link [12.37]. As fiber capacities have increased, however, systems with several fiber-pairs on a link have become a less common occurrence.

8.1 First-Fit Algorithm

In the first-fit algorithm, each wavelength is assigned an index from 1 to \(W\), where \(W\) is the maximum number of wavelengths supported on a fiber. No correlation is required between the order in which a wavelength appears in the spectrum and the index number assigned. The indices remain fixed as the network design evolves. Whenever wavelength assignment is needed for an all-optical segment, the search for an available wavelength proceeds in an order from the lowest index to the highest index. The first wavelength that is available on all links that compose the all-optical segment is assigned to the segment. First-fit is simple to implement and requires only that the status of each wavelength on each link be tracked.

Due to the interdependence of wavelength assignment across links, the presence of failure events, and the presence of network churn (i. e., the process of connections being established and then later torn down), the indexing ordering does not guarantee the actual assignment order on a particular link. Thus, relying on the indexing scheme to enforce a particular assignment ordering on a link for performance purposes is not prudent; for more details, see [12.1].

8.2 Most-Used Algorithm

The most-used algorithm is more adaptive than first-fit but requires more computation. Whenever a wavelength needs to be assigned to an all-optical segment, a wavelength order is established based on the number of link-fibers on which each wavelength has already been assigned in the network. The wavelength that has been assigned on the most link-fibers already is given the lowest index, and the wavelength that has been assigned on the second-most link-fibers is given the second lowest index, etc. Thus, the indexing order changes depending on the current state of the network. After the wavelengths have been indexed, the assignment process proceeds as in first-fit. The motivation behind this scheme is that a wavelength that has already been assigned on many fibers will be more difficult to use again. Thus, if a scenario arises where a heavily-used wavelength can be used, it should be assigned.

9 One-Step RWA

When routing and wavelength assignment are treated as separate steps in network design, it is possible that the routing process produces a path on which the wavelength assignment process fails (assuming extra regenerations are not added to alleviate the encountered wavelength contention). Alternatively, one can consider integrated routing and wavelength assignment methodologies such that if a path is selected, it is guaranteed to be feasible from a wavelength assignment perspective as well.

Various one-step RWA methodologies are discussed below, all of which impose additional processing and/or memory burdens. When the network is not heavily loaded, implementing routing and wavelength assignment as independent steps typically produces feasible solutions. Thus, under these conditions, the multistep process is favored, as it is usually faster. However, under heavy load, using a one-step methodology can provide a small improvement in performance [12.1]. Furthermore, under heavy load, some of the one-step methodologies may be more tractable, as the scarcity of free wavelengths should lead to lower complexity.

9.1 Topology Pruning

One of the earliest advocated one-step algorithms starts with a particular wavelength and reduces the network topology to only those links on which this wavelength is available. The routing algorithm (e. g., a shortest-path algorithm) is run on this pruned topology. If no path can be found, or if the path is too circuitous, another wavelength is chosen and the process run through again on the corresponding pruned topology. The process is repeated with successive wavelengths until a suitable path is found. With this approach, it is guaranteed that there will be a free wavelength on any route that is found. If a suitable path cannot be found after repeating the procedure for all of the wavelengths, the connection request is blocked.

In a network with regeneration, using this combined routing and wavelength assignment procedure makes the problem unnecessarily more difficult because it implicitly searches for a wavelength that is free along the whole extent of the path. As discussed earlier, it is necessary to find a free wavelength only along each all-optical segment, not along the end-to-end connection. One variation of the scheme is to select ahead of time where the regenerations are likely to occur for a connection and apply the combined routing and wavelength assignment approach to each expected all-optical segment individually. However, the route that is ultimately found could be somewhat circuitous and require regeneration at different sites than where was predicted, so that the process may need to be run through again. Overall, this strategy is less than ideal.

9.2 Reachability-Graph Transformation

A more direct integrated RWA approach is to create a transformed graph whenever a new connection request needs to be routed and assigned a wavelength, where the newly-formed graph represents feasible all-optical segments; i. e., feasible with respect to both optical reach and available wavelengths [12.1, 12.39]. The process to form this graph, specifically selecting the nodes that are added to this graph, depends on the timeframe of the network design. In long-term network planning, it is assumed that there is time to deploy any equipment that may be required for a design. Under these planning conditions, every network node of the real network topology appears in the transformed graph. In contrast, with real-time network operation, the required connection setup times do not allow for equipment to be deployed; only equipment that is already available in the network can be utilized for the new traffic. In this scenario, only nodes with available regeneration equipment, plus the source and the destination of the new connection request, are added to the transformed graph.

After adding the nodes to the transformed graph, a link is added between a pair of nodes in this graph only if there exists a regeneration-free path between the nodes in the true topology and there exists at least one wavelength that is available along the path. Even if there are multiple regeneration-free paths between a node pair, or multiple wavelengths free on a path, at most one link is added between a node pair. This transformed graph is referred to as the reachability graph.