Abstract

In this expository paper we give an overview of the statistical properties of Hamilton-Jacobi Equations and Scalar Conservation Laws. The first part (Sects. 2–4) is devoted to the recent proof of the Menon-Srinivasan Conjecture. This conjecture provides a Smoluchowski-type kinetic equation for the evolution of a Markovian solution of a scalar conservation law with convex flux. In the second part of the paper (Sects. 5 and 6) we discuss the question of homogenization for Hamilton-Jacobi PDEs and Hamiltonian ODEs with deterministic and stochastic Hamiltonian functions.

1 Introduction

The primary goal of these notes is to give an overview of the statistical properties of solutions to the Cauchy problem for the Hamilton-Jacobi Equation

or, the scalar conservation law

where either H or \(\rho ^0=\rho ^0(x)\) is random. Note that if u satisfies (1.1) and \(d=1\), then \(\rho =u_x\) satisfies (1.2). As is well-known, the PDE (1.1) or (1.2) does not possess classical solutions even when the initial data is smooth. In the case of Eq. (1.1), we may consider viscosity solutions to guarantee the uniqueness under some standard assumptions on the initial data and H. In the case of (1.2) with \(d=1\), we consider the so-called entropy solutions.

We will be mostly concerned with the following two scenarios:

(1) \(d=1\), \(H(x,t,p)=H(p)\) is convex in p and independent of (x, t), with initial data \(\rho ^0\) that is either a white noise, or a Markov process.

(2) \(d\ge 1\), and H(x, t, p) is a stationary ergodic process in (x, t), and may not be convex in p.

Our aim is to give an overview of various classical and recent results and formulate a number of open problems. Sections 2–4 are devoted to (1), where we derive an evolution equation for the Markovian law of \(\rho \) as a function of x or t. Sections 5 and 6 are devoted to (2), where we address the question of homogenization for such Hamiltonian functions.

2 Scalar Conservation Law with Random Initial Data

We first recall the following important features of the solutions to (1.2) when \(d=1\), \(H(x,t,p)=H(p)\) is convex in p, and independent of (x, t):

(i) If a discontinuity of \(\rho \) occurs at \(x=x(t)\), and \(\rho _{\pm }=\rho (x(t)\pm ,t)\) represent the right and left limits of \(\rho \) at x(t), then for a weak solution of (1.2) we must have the Rankine-Hugoniot equation:

$$ \frac{dx}{dt}=-H[\rho _-,\rho _+]=:-\frac{H(\rho _+)-H(\rho _-)}{\rho _+-\rho _-}. $$

(ii) By an entropy solution, we mean a weak solution for which the entropy condition is satisfied. In the case of convex H, the entropy condition is equivalent to the requirement

$$ \rho _-<\rho _+. $$

(iii) If \(\rho ^0\) has a discontinuity with \(\rho _->\rho _+\), then such a discontinuity disappears instantaneously by inserting a rarefaction wave between \(\rho _-\) and \(\rho _+\), that is, a solution of the form

$$ G\left( \frac{x-c}{t}\right) , $$

where \(G=(H')^{-1}\).
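As a small numerical illustration of (i) and (ii), the following Python sketch evaluates the divided difference \(H[\rho _-,\rho _+]\), the corresponding shock speed, and the entropy check. It assumes that (1.2) has the form \(\rho _t=H(\rho )_x\), which is what the sign in the Rankine-Hugoniot relation in (i) suggests; the function names are illustrative and do not come from the text.

```python
def divided_difference(H, rho_minus, rho_plus):
    """H[rho_-, rho_+] = (H(rho_+) - H(rho_-)) / (rho_+ - rho_-)."""
    return (H(rho_plus) - H(rho_minus)) / (rho_plus - rho_minus)


def shock_speed(H, rho_minus, rho_plus):
    """Rankine-Hugoniot speed dx/dt = -H[rho_-, rho_+] (sign convention assumed)."""
    return -divided_difference(H, rho_minus, rho_plus)


def is_entropic(rho_minus, rho_plus):
    """Entropy condition for convex H: only upward jumps are admissible."""
    return rho_minus < rho_plus


def burgers(p):
    """Burgers flux H(p) = p^2 / 2."""
    return 0.5 * p * p


# A shock joining rho_- = 1 (left) to rho_+ = 3 (right) for the Burgers flux.
speed = shock_speed(burgers, 1.0, 3.0)
admissible = is_entropic(1.0, 3.0)
```

For the Burgers flux the divided difference reduces to the average \((\rho _-+\rho _+)/2\), so in this example the shock moves to the left with speed 2.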

We next state three results.

(i) (Burgers Equation with Lévy Initial Data)

When \(H(p)= \frac{1}{2}{p^2}\), (1.2) is the well-known inviscid Burgers’ equation, which has often been considered with random initial data. Burgers studied (1.2) in his investigation of turbulence [5]. Carraro and Duchon [6] defined a notion of statistical solution to Burgers’ equation and realized that it was natural to consider Lévy process initial data. This statistical solution approach was further developed in 1998 by the same authors [7] and by Chabanol and Duchon [8]. In fact any (random) entropy solution is also a statistical solution, but the converse is not true in general. In 1998, Bertoin [4] proved a closure theorem for Lévy initial data.

Theorem 1

Consider Burgers’ equation with initial data \(\rho ^0(x)\) which is a Lévy process without negative jumps for \(x \ge 0\), and \(\rho ^0(x) = 0\) for \(x < 0\). Assume that the expected value of \(\rho ^0(1)\) is non-positive, \({\mathbb E}\rho ^0(1) \le 0\). Then, for each fixed \(t > 0\), the process \(x\mapsto \rho (x,t) -\rho (0,t)\) is also a Lévy process with

where the exponent \(\psi \) solves the following equation:

Remark 2.1 (i) The requirement \({\mathbb E}\rho ^0(1) \le 0\) can be relaxed with minor modifications to the theorem, in light of the following elementary fact. Suppose that \(\rho ^0(x)\) and \(\hat{\rho }^0(x)\) are two different initial conditions for Burgers’ equation, which are related by \(\hat{\rho }^0(x) = \rho ^0(x) + cx\). It is easy to check that the corresponding solutions \(\rho (x,t)\) and \(\hat{\rho }(x,t)\) are related for \(t > 0\) by

Using this we can adjust a statistical description for a case where \({\mathbb E}\rho ^0(1) >0\) to cover the case of a Lévy process with general mean drift.

(ii) Sinai [26] and Aurell, Frisch, She [3] considered Burgers’ equation with Brownian motion initial data, relating the statistics of solutions to convex hulls and addressing pathwise properties of solutions. \(\square \)

(ii) (Burgers Equation with white noise initial data)

Groeneboom [15] considers white noise initial data. In other words, take two independent Brownian motions \(B^{\pm }\), and take a two-sided Brownian motion for the initial data

Theorem 2

Let \(\rho =u_x\), where u is a viscosity solution of the PDE \(u_t=\frac{1}{2}u_x^2\), subject to the initial condition \(u(x,0)=u^0(x)\), with \(u^0\) given as in (2.2). Then the process \(x\mapsto \rho (x,t)\) is a Markov jump process with drift \(-t^{-1}\) and a suitable jump measure \(\nu (t,\rho _-,\rho _+)\ d\rho _+\).

We also refer to [13] for an explicit and simple formula expressing the one-point distribution of \(\rho \) in terms of Airy functions.
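The structure described in Theorem 2 can be observed in a crude discretization. The sketch below is not the method of [15]; it assumes the standard Hopf-Lax representation \(u(x,t)=\sup _y\big [u^0(y)-(x-y)^2/(2t)\big ]\) for \(u_t=\frac{1}{2}u_x^2\), which is not stated in the text, and it replaces the two-sided Brownian motion by a random walk on a grid. All names and parameters are illustrative. If \(y^*(x)\) denotes the maximizer, then \(\rho (x,t)=(y^*(x)-x)/t\), so \(\rho \) has slope \(-t^{-1}\) wherever \(y^*\) is constant and jumps upward wherever \(y^*\) jumps.

```python
import math
import random


def two_sided_brownian(n, dx, rng):
    """Random-walk approximation of u0 on the grid x_i = (i - n) * dx, i = 0..2n."""
    def walk():
        path = [0.0]
        for _ in range(n):
            path.append(path[-1] + rng.gauss(0.0, math.sqrt(dx)))
        return path

    left, right = walk(), walk()
    xs = [(i - n) * dx for i in range(2 * n + 1)]
    # u0(x) = B^-(-x) for x < 0 and u0(x) = B^+(x) for x >= 0.
    u0 = left[:0:-1] + right
    return xs, u0


def hopf_lax_rho(u0, xs, t):
    """rho(x_i, t) = (y* - x_i) / t, with y* the grid maximizer of u0(y) - (x_i - y)^2 / (2t)."""
    rho = []
    for x in xs:
        j_star = max(range(len(xs)),
                     key=lambda j: u0[j] - (x - xs[j]) ** 2 / (2.0 * t))
        rho.append((xs[j_star] - x) / t)
    return rho


rng = random.Random(0)
xs, u0 = two_sided_brownian(50, 0.1, rng)
rho = hopf_lax_rho(u0, xs, 1.0)
```

The brute-force maximization costs a number of operations quadratic in the grid size, which is adequate for a small illustration only.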

(iii) A different particular case,

corresponds to the problem of determining Lipschitz minorants, and has been investigated by Abramson and Evans [1].

3 Menon-Srinivasan Conjecture

In 2007 Menon and Pego [19] used the Lévy-Khintchine representation for the Laplace exponent and observed that the evolution according to Burgers’ equation in (2.1) corresponds to a Smoluchowski coagulation equation [2], with additive collision kernel, for the jump measure of the Lévy process \(\nu (\cdot , t)\). The jumps of \(\nu (\cdot , t)\) correspond to shocks in the solution \(\rho (\cdot , t)\). Regarding the sizes of the jumps as the usual masses in the Smoluchowski equation, it is plausible that the Smoluchowski equation with additive kernel should be relevant.

It is natural to wonder whether this evolution through Markov processes with simple statistical descriptions is specific to the Burgers-Lévy case, or an instance of a more general phenomenon. The biggest step toward understanding the problem for a wide class of H is found in a 2010 paper of Menon and Srinivasan [20]. Here it is shown that when the initial condition \(\rho ^0\) is a strong Markov process with positive jumps only, the solution \(\rho (\cdot , t)\) remains Markov for fixed \(t > 0\). The argument is adapted from that of [4] and both [20] and [4] use the notion of splitting times (due to Getoor [14]) to verify the Markov property according to its bare definition. In the Burgers-Lévy case, the independence and homogeneity of the increments can be shown to survive, from which additional regularity is immediate using standard results about Lévy processes. As [20] points out, without these properties it is not clear whether a Feller process initial condition leads to a Feller process in x at later times. Nonetheless, [20] presents a very interesting conjecture for the evolution of the generator of \(\rho (\cdot , t)\), which has a remarkably nice form.

To prepare for the statement of the Menon-Srinivasan Conjecture, we first examine the following simple scenario for the solutions of the PDE

Imagine that the initial data \(\rho ^0\) satisfies an ODE of the form

for some \(C^1\) function \(b^0:{\mathbb R}\rightarrow {\mathbb R}\). We may wonder whether or not this feature of \(\rho ^0\) survives at later times. That is, for some function \(b(\rho ,t)\), we also have

for \(t>0\). For (3.3) to be consistent with (3.1), observe

and as we calculate mixed derivatives, we arrive at

As a result b must satisfy

For a classical solution, all we need to do is solve the ODE (3.4) with the initial data \(b(\rho ,0)=b^0(\rho )\) for each \(\rho \). When H is convex, the solution may blow up in finite time. More precisely,

- If \(b^0(\rho )\le 0\), then \(b^0(\rho )\le b(\rho ,t)\le 0\) for all t and there would be no blow-up.

- If \(b^0(\rho )>0\), then there exists some finite \(T(\rho )>0\) such that \(b(\rho ,t)\) is finite in the interval \([0,T(\rho ))\), and \(b(\rho ,T(\rho ))=\infty \).

In fact, Eq. (3.4) is really “the method of characteristics” in disguise, and the blow-up of solutions is equivalent to the occurrence of a shock discontinuity.
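For the reader's convenience, here is a sketch of the computation behind this dichotomy. It assumes, consistently with the Rankine-Hugoniot relation of Sect. 2, that (3.1) reads \(\rho _t=H(\rho )_x\) and that (3.3) reads \(\rho _x=b(\rho ,t)\); under a different reading of these equations the signs below would have to be adjusted. Computing the mixed derivative in two ways gives

$$ \rho _t=H'(\rho )\, b(\rho ,t),\qquad \rho _{xt}=b_\rho \, \rho _t+b_t=H'\, b\, b_\rho +b_t,\qquad \rho _{tx}=\big (H'\, b\big )_\rho \, \rho _x=\big (H''\, b+H'\, b_\rho \big )\, b. $$

Equating the last two expressions leaves a Riccati equation in t for each fixed \(\rho \), which can be solved explicitly:

$$ b_t=H''(\rho )\, b^2,\qquad b(\rho ,t)=\frac{b^0(\rho )}{1-t\, H''(\rho )\, b^0(\rho )}. $$

If \(H''(\rho )\ge 0\) and \(b^0(\rho )\le 0\), the denominator is at least 1, so \(b^0(\rho )\le b(\rho ,t)\le 0\). If \(H''(\rho )>0\) and \(b^0(\rho )>0\), the denominator vanishes at \(T(\rho )=\big (H''(\rho )\, b^0(\rho )\big )^{-1}\), which is the blow-up time.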

To go beyond what (3.4) offers, we now take a jump kernel \(f^0(\rho ,d\rho _*)\) and assume that \(\rho ^0(x)\) is a realization of a Markov process with infinitesimal generator

In words, \(\rho ^0\) solves the ODE (3.3), with some occasional random jumps with rate \(f^0\). We are assuming that the jumps are all positive to avoid rarefaction waves. We may wonder whether the same picture is valid at later times. That is, for fixed \(t>0\), the solution \(\rho (x,t)\), as a function of x is a Markov process with the generator

The Menon-Srinivasan Conjecture roughly suggests that if H is convex, and we start with a Markov process with generator \({\mathcal L}^0\), then we have a Markov process at a later time with a generator of the form \({\mathcal L}^t\). Moreover, the drift of the generator satisfies (3.4), and the jump kernel \(f(\rho ,d\rho _*,t)\) solves an integral equation. Before we derive an equation for the evolution of f, observe that when we assert that \(\rho (x,t)\) is a Markov process in x, we are specifying a direction for x. More precisely, we are asserting that if \(\rho (a,t)\) is known, then the law of \(\rho (x,t)\) can be determined uniquely for all \(x>a\). We are doing this for all \(t>0\). In practice, we may try to determine \(\rho (x,t)\) for \(x>a(t)\), provided that \(\rho (a(t),t)\) is specified. For example, we may wonder whether or not we can determine the law of \(\rho (x,t)\) with the aid of the following procedure:

- The process \(t\mapsto \rho (a(t),t)\) is a Markov process and its generator can be determined. Using this Markov process, we take a realization of \(\rho (a(t),t)\), with some initial choice for \(\rho (a(0),0)\).

- Once \(\rho (a(t),t)\) is selected, we use the generator \({\mathcal L}^t\) to produce a realization of \(\rho (x,t)\) for \(x\ge a(t)\).
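The second step of this procedure, producing a profile in x from a drift and a jump kernel, can be sketched with a simple Euler scheme. The code below is only an illustration of a process that follows an ODE in x and occasionally jumps upward; the drift `b`, the total jump rate `total_rate`, and the sampler `sample_target` are hypothetical stand-ins for \(b(\cdot ,t)\) and the kernel \(f(\rho ,d\rho _*,t)\) at a fixed time, and none of these choices comes from the text.

```python
import random


def simulate_markov_profile(rho0, b, total_rate, sample_target, x_end, dx, rng):
    """Euler scheme for d(rho)/dx = b(rho), interrupted by jumps.

    total_rate(rho) is the total mass of the jump kernel at rho, and
    sample_target(rho, rng) draws the post-jump value from the normalized kernel.
    """
    n_steps = int(round(x_end / dx))
    rho = rho0
    path = [rho]
    for _ in range(n_steps):
        rho += b(rho) * dx                        # deterministic drift
        if rng.random() < total_rate(rho) * dx:   # a jump occurs with probability about rate * dx
            rho = sample_target(rho, rng)
        path.append(rho)
    return path


# Illustrative choices: linear drift, unit jump rate, exponentially distributed upward jumps.
rng = random.Random(1)
path = simulate_markov_profile(
    rho0=1.0,
    b=lambda r: -r,
    total_rate=lambda r: 1.0,
    sample_target=lambda r, g: r + g.expovariate(1.0),
    x_end=5.0,
    dx=1e-3,
    rng=rng,
)
```

Because the sampler only returns values above the current one, all jumps are positive, in line with the assumption that rules out rarefaction waves.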

To materialize the above procedure, we need to make sure that for some choice of a(t), the process \(\rho (a(t),t)\) is Markovian with a generator that can be described. For a start, we may wonder whether or not we can even choose \(a(t)=a\) a constant function. Put differently, we ask whether, in addition to \(x\mapsto \rho (x,t)\) being a Markov process for fixed \(t\ge 0\), the process \(t\mapsto \rho (x,t)\) is a Markov process for fixed x. As it turns out, this is the case if H is also increasing. In general, if we can find a negative constant c such that \(H'(\rho )> c\), then \(\hat{\rho }(x,t):=\rho (x-ct,t)\) satisfies

for \(\hat{H}(\rho )=H(\rho )-c\rho \), which is increasing. Hence, the process \(t\mapsto \hat{\rho }(x,t)=\rho ( x-ct,t)\) is expected to be Markovian. In summary

- If H is increasing in the range of \(\rho \), then \(\rho \) is also Markovian on vertical lines \(x=constant\).

- If \(H'\) is bounded below by a negative constant c, then \(\rho \) is Markovian on straight lines that are tilted to the right with the slope \(-c\).
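The change of frame behind the second statement can be checked in one line. Assuming, as the Rankine-Hugoniot relation of Sect. 2 suggests, that (3.1) reads \(\rho _t=H(\rho )_x\), and writing \(\hat{\rho }(x,t)=\rho (x-ct,t)\) and \(\hat{H}(\rho )=H(\rho )-c\rho \), we have for a classical solution

$$ \hat{\rho }_t(x,t)=\rho _t(x-ct,t)-c\, \rho _x(x-ct,t)=\big (H'(\rho )-c\big )\rho _x(x-ct,t)=\hat{H}(\hat{\rho })_x(x,t),\qquad \hat{H}'(\rho )=H'(\rho )-c>0. $$

So \(\hat{\rho }\) solves the same type of equation with an increasing flux, and for fixed x the map \(t\mapsto \hat{\rho }(x,t)\) records \(\rho \) along the line \(\{(x-ct,t):\ t\ge 0\}\), which moves to the right because \(c<0\).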

To simplify the matter, from now on, we make two assumptions on H:

The main consequences of these two assumptions are

- All the jump discontinuities are positive, i.e. \(\rho _-<\rho _+\).

- The shock speeds are always negative.

We now argue that in fact the process \(t\mapsto \rho (x,t)\) is a (time-inhomogeneous) Markov process with a generator \({\mathcal M}_t\) that is independent of x because the PDE (3.1) is homogeneous (i.e. H is independent of x). Indeed

To explain the form of \({\mathcal M}_t\) heuristically, observe that the ODE \(\frac{d\rho }{dx}=b(\rho ,t)\) leads to the ODE

On the other hand, if we fix x, then \(\rho (x,t)\) experiences a jump discontinuity when a shock on the right of x crosses x. Given any \(t>0\), a shock would occur at some \(s>t\) because all shock speeds are negative; it is just a matter of time for a shock on the right of x to cross x. We can also calculate the rate at which this happens because we have the law of the first shock on the right of x, and its speed. Observe

- The process \(x\mapsto \rho (x,t)\) is a homogeneous Markov process with a generator that changes with time.

- The process \(t\mapsto \rho (x,t)\) is an inhomogeneous Markov process with a generator that does not depend on x. It is only the initial data \(\rho (x,0)\) that is responsible for the changes of the statistics of \(\rho (x,t)\), as x varies.

We are now in a position to derive formally an evolution equation for the generator \({\mathcal L}^t\), under the assumption (3.6). Indeed if we define

for \(t<T\), then we expect

Differentiating these equations yields

As a result

As we match the drift parts of both sides of (3.8), we simply get (3.4). Matching the jump parts yields a kinetic-type equation of the form

for a quadratic operator Q and a linear operator C. The operator Q is independent of b and is given by

If we set

then \(Q=Q^+-Q^-\), with

To define the operator C we need to assume that \(f(\rho _-,d\rho _+)=f(\rho _-,\rho _+)d\rho _+\) has a \(C^1\) density. With a slight abuse of notation, we write \(f(\rho _-,\rho _+)\) for the density of the measure \(f(\rho _-,d\rho _+)\), and write C again for the action of the operator C on the density f:

The Menon-Srinivasan Conjecture has been established in [16] and [17]:

Theorem 3

Assume H is a \(C^2\) function that satisfies (3.6). Let \(\rho \) be an entropic solution of (3.1) such that \(\rho (x,0)=0\), for \(x\le 0\), and \(\rho (x,0)\) is a Markov process with generator \({\mathcal L}^0\), for \(x\ge 0\). Assume that b and f satisfy (3.4) and (3.9) respectively. Then the processes \(t\mapsto \rho (0,t)\) and \(x\mapsto \rho (x,t)\) are Markov processes with generators \({\mathcal M}_t\) and \({\mathcal L}^t\) respectively.

The typical situation for Smoluchowski and other kinetic equations is that we have some (stochastic or deterministic) dynamics defined on a finite system, and these kinetic equations emerge upon passage to a scaling limit. The dynamics might not be definable for the infinite system, and the kinetic equation should describe statistics only approximately for a large but finite system. In the setting of Theorems 1, 2 and 3, the kinetic equations give statistics exactly without passage to a rescaled limit. We view this unusual circumstance as demanding an explanation. Further, our treatment in Sect. 4 below (tracking shocks as inelastically colliding particles) seems quite at home in the kinetic context.

4 Heuristics for the Proof of Theorem 3

Let us write \(x_i(t)\) for the location of the i-th shock and \(\rho _i(t)=\rho (x_i(t)+,t)\). We also write \(\phi _x(m_0;t)\) for the flow associated with the velocity b; the function \(m(x)=\phi _x(m_0;t)\) satisfies

We can readily find the evolution \(\mathbf q =(x_i,\rho _i:i\in {\mathbb Z})\), and \(\varvec{\hat{q}}=(z_i,\rho _i:i\in {\mathbb Z})\), with \(z_i=x_{i+1}-x_i\):

- $$ \dot{x}_i=-v^i:=-H[\hat{\rho }_{i-1},\rho _i],\ \ \ \ \ \ \dot{z}_i=-(v^{i+1}-v^i), $$

  where \(\hat{\rho }_{i-1}(t)=\phi _{z_{i-1}}(\rho _{i-1}(t),t)\).

- $$ \dot{\rho }_i=w^i:=\big (H'(\rho _i)-H[\hat{\rho }_{i-1},\rho _i]\big )b(\rho _i,t). $$

- When \(z_i\) becomes 0, the pair \((\rho _i,z_i)\) is omitted from \(\varvec{\hat{q}}(t)\). The outcome after a relabeling is denoted by \(\varvec{\hat{q}}^i(t)\).
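In the special case \(b=0\) these rules reduce to a deterministic system of sticky particles: \(\rho \) is piecewise constant, \(\hat{\rho }_{i-1}=\rho _{i-1}\), each \(\rho _i\) is frozen, and only the shock positions move until two of them meet. The following Python sketch implements that special case for finitely many shocks. It is an illustration under these assumptions rather than part of the proof; the names are hypothetical and the indices are zero-based, so shock k sits at `xs[k]` and separates the values `rhos[k]` (left) and `rhos[k + 1]` (right).

```python
def divided_difference(H, a, b):
    """H[a, b] = (H(b) - H(a)) / (b - a)."""
    return (H(b) - H(a)) / (b - a)


def evolve_shocks(xs, rhos, H, t_end):
    """Move shocks with velocity -H[rho_left, rho_right] and merge them on collision.

    xs   : increasing shock positions
    rhos : len(xs) + 1 increasing constant states (only positive jumps)
    """
    xs, rhos, t = list(xs), list(rhos), 0.0
    while True:
        v = [-divided_difference(H, rhos[k], rhos[k + 1]) for k in range(len(xs))]
        # Find the first pair of neighbouring shocks whose gap closes.
        next_dt, next_k = None, None
        for k in range(len(xs) - 1):
            closing = v[k] - v[k + 1]          # rate at which the gap xs[k+1] - xs[k] shrinks
            if closing > 0.0:
                dt = (xs[k + 1] - xs[k]) / closing
                if next_dt is None or dt < next_dt:
                    next_dt, next_k = dt, k
        if next_dt is None or t + next_dt > t_end:
            return [x + vk * (t_end - t) for x, vk in zip(xs, v)], rhos
        xs = [x + vk * next_dt for x, vk in zip(xs, v)]
        t += next_dt
        # The gap has closed: drop the intermediate state and one of the two coincident shocks.
        del xs[next_k + 1]
        del rhos[next_k + 1]


def burgers(p):
    return 0.5 * p * p


# Two shocks joining the states 0 < 1 < 2; they meet at time 1 and continue as a single shock.
final_xs, final_rhos = evolve_shocks([0.0, 1.0], [0.0, 1.0, 2.0], burgers, 2.0)
```

For convex H and increasing states the divided differences increase from left to right, so every gap shrinks, which is the sign appearing in the equation for \(\dot{z}_i\).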

Write

We think of \(\varvec{\hat{q}}(t)\) as a deterministic process that has an infinitesimal generator

in the interior of \(\varDelta \). We only take those G such that on the boundary face of \(\varDelta \) with \(z_i=0\), we have \(G\big (\varvec{\hat{q}}\big )=G\big (\varvec{\hat{q}}^i\big )\). This stems from the fact that we are interested in the function \(\rho (x)=\rho (x;{\hat{\mathbf{q }}})\) associated with \({\hat{\mathbf{q }}}\) (or \(\mathbf q \)) that is defined by

Note that \(\rho (x;{\hat{\mathbf{q }}})=\rho (x;{\hat{\mathbf{q }}}^i)\) whenever \(z_i=0\).

We make an ansatz that the law of \(\varvec{\hat{q}}(t)\) is of the form:

For this to be the case, we need to have

This equation should determine f and \(\lambda \) if our ansatz is correct. To determine  , we take a test function G and carry out the following calculation: After some integration by parts, we formally have

where

where \(\varOmega _i^3\) represents the boundary contribution associated with \(z_i=0\), and

To explain the form of \(\varOmega ^3_i\), observe that when \(z_i=0\), we remove the ith-particle and relabel the particles to its right. The expression \(f\big (\hat{\rho }_{i-1},\rho _{i},t\big ) f\big (\rho _{i},\rho _{i+1},t\big )\ d\rho _i\), that appears in \(\mu \), can be rewritten as \(f\big (\hat{\rho }_{i-1},\rho _{*},t\big ) f\big (\rho _{*},\rho _{i+1},t\big )\ d\rho _*\). The variable \(\rho _{i+1}\) becomes \(\rho _i\) after our relabeling, and its integral with respect to \(\rho _*\) is a function of \(\big (\hat{\rho }_{i-1},\rho _{i},t\big )\). If we replace this function with \(f\big (\hat{\rho }_{i-1},\rho _{i},t\big )\), we recover the measure \(\mu \).

On the other hand

To make the above formal calculation rigorous, we switch from the infinite sum to a finite sum. For this, we restrict the dynamics to an interval, say [0, L]. The configuration now belongs to

with \(\varDelta ^L_n\) denoting the set

Again, what we have in mind is that \(\rho _i(t)=\rho (x_i(t)+,t)\) with \(x_1,\dots ,x_n\) denoting the location of all shocks in (0, L). For our purposes, we need to come up with a candidate for the law \(\mu (t,d\mathbf q )\) of \(\mathbf q (t)\) in \(\varDelta _L\). The restriction of \(\mu \) to \(\varDelta _L^n\) is denoted by \(\mu ^n\) and is given by

where f solves (3.9) and \(\ell \) is the law of \(\rho (0,t)\), which is a Markov process with generator \({\mathcal M}={\mathcal M}_t\):

To simplify the presentation, we assume

As for the dynamics of q, we have the following rules:

(i) So long as \(x_i\) remains in \((x_{i-1},x_{i+1})\), it satisfies

$$ \dot{x}_i=-v^i:=-H[\hat{\rho }_{i-1},\rho _i], $$

where \(\hat{\rho }_{i-1}(t)=\phi _{z_{i-1}}(\rho _{i-1}(t),t)\).

(ii) We have \(\dot{\rho }_0=w^0:=H'(\rho _0)b(\rho _0,t)\) and for \(i>0\),

$$ \dot{\rho }_i=w^i:=\big (H'(\rho _i)-H[\hat{\rho }_{i-1},\rho _i]\big )b(\rho _i,t). $$

(iii) When \(z_i=x_{i+1}-x_i\) becomes 0, then \(\mathbf q (t)\) becomes \(\mathbf q ^i(t)\), which is obtained from \(\mathbf q (t)\) by omitting \((\rho _i,x_i)\).

(iv) With rate

$$ H[\hat{\rho }_n,\rho _{n+1}]f\big (\hat{\rho }_n,\rho _{n+1},t\big ), $$

the configuration \(\mathbf q \) gains a new particle \((x_{n+1},\rho _{n+1})\), with \(x_{n+1}=L\). This new configuration is denoted by \(\mathbf q (\rho _{n+1})\).

We note that since H is increasing, all velocities are negative. Moreover, when the first particle of location \(x_1\) crosses the origin, a particle is lost.

We wish to establish (4.1). We write \(G^n\) for the restriction of a smooth function \(G:\varDelta ^L\rightarrow {\mathbb R}\) to \(\varDelta ^L_n\). Recall that we only consider those test functions G that cannot differentiate between \(\mathbf q \) and \(\mathbf q ^i\) (respectively \(\mathbf q (\rho _{n+1})\)), when \(x_i=x_{i+1}\) (respectively \(x_{n+1}=L\)). We need to verify

for all \(n\ge 0\). Here and below, we write \(\nu ^n\) for the restriction of a measure \(\nu \) to \(\varDelta ^L_n\). Also, given \(H:\varDelta _L\rightarrow {\mathbb R}\), we write \(H^n\) for the restriction of the function H to the set \(\varDelta ^L_n\). To verify (4.3), we show

for every \(C^1\) function G. It is instructive to see why (4.3) (or its integrated version (4.4)) is true when \(n=0\) and 1 before treating the general case. As we will see below, the cases \(n=0,1\) are already equivalent to the Eq. (3.9). As a warm-up, we first assume that \(n=0\) and \(b=0\). In this case the Eq. (4.3) is equivalent to the fact that the law \(\ell \) of \(\rho (0,\cdot )\) is governed by a Markov process with generator \({\mathcal M}_t\). The case \(n=0\) and general b leads to the general form of \({\mathcal M}_t\) for the evolution of \(\rho (0,\cdot )\), and an equation for \(\lambda \) that is a consequence of (3.9). The full Eq. (3.9) shows up when we consider the case \(n=1\).

The case \(n=0\) and \(b=0\) . As it turns out, the function \(\lambda (\rho ,t)=\lambda (\rho )\) is independent of time when \(b=0\). We simply have

On the other hand, the right-hand side of (4.4) is of the form \(\varOmega ^1_0+\varOmega ^2_0\), where \(\varOmega ^1_0\) comes from rule (i), and \(\varOmega ^2_0\) comes from the stochastic boundary dynamics. Indeed

which we get from the boundary terms when we apply an integration by parts to the integral

We note that the other terms of the integration by parts formula contribute to the case \(n=1\) and do not contribute to our \(n=0\) case. Moreover,

From this and (4.6) we learn

as desired. \(\square \)

The case \(n=0\) and general b. To ease the notation, we write

When \(n=0\), the right-hand side of (4.4) equals

This simplifies to

where \(\varLambda (\rho _0,t)\) equals

We need to match \(\varLambda (\rho _0,t)\) with the corresponding term on left-hand side of (4.4), which, by (4.2) takes the form

where

We are done if we can verify

Equivalently

For this, it suffices to check

Note that if \(u(y,\rho )=A\big (\phi _y(\rho ;t),t\big )\), then

Hence for (4.7), it suffices to show

To have a more tractable formula, let us write \(T_yh(m)=h(\phi _y(m;t))\). The family of operators \(\{T_y:\ y\in {\mathbb R}\}\), is a group in y. Moreover, if \(({\mathcal B}h)(m)=b(m,t)h'(m)\), then

Using this, we may rewrite (4.8) as

This for \(y=0\) takes the form

Because of our choice of \(\lambda \), namely

we can deduce (4.10) from (3.9) after integrating both sides of (3.9) with respect to \(\rho _-\). On account of (4.11), the claim (4.10) would follow if we can show

This is true for \(y=0\). Differentiating with respect to y yields

where we used (3.4) for the third equality. As a result,

This completes the proof of (4.2), when \(n=0\). \(\square \)

As we have seen so far, the case \(n=0\) is valid if (4.11), a consequence of the kinetic equation (3.9), is true. On the other hand, the case \(n=1\) is equivalent to the kinetic equation. Before embarking on the verification of (4.3) for \(n=1\), let us introduce some compact notation for some of the expressions that come into the proof. Given a realization \(\mathbf q =\big (0,\rho _0,x_1,\rho _1,\dots ,x_n,\rho _n\big )\in \varDelta _n^L,\) we define

where \(\lambda \) is defined by (4.12). Note that by (4.13),

The case \(n=1\). We have \(\dot{\mu }^1=X_1\mu ^1\), where

On the other hand  , with

where

Here,

- The term \(Y_{11}\) comes from an integration by parts with respect to the variable \( \rho _0\). The dynamics of \(\rho _0\) as in rule (ii) is responsible for this contribution.

- The terms \(Y_{12}\) and \(Y_{13}\) come from an integration by parts with respect to the variable \( \rho _1\). The dynamics of \(\rho _1\) as in rule (ii) is responsible for these two contributions.

- The term \(Y_{14}\) comes from an integration by parts with respect to the variable \(x_1\). The dynamics of \(x_1\) as in rule (i) is responsible for this contribution.

- The term \(Y_{15}\) comes from the boundary term \(x_1=0\) in the integration by parts with respect to the variable \(x_1\) when there are two particles at \(x_1\) and \(x_2\). This boundary condition represents the event that \(x_1\) has reached the origin, after which \(\rho _0\) becomes \(\rho _1\), and \((x_2,\rho _2)\) is relabeled \((x_1,\rho _1)\).

- The term \(Y_{16}\) comes from the boundary term \(x_2=L\) in the integration by parts with respect to the variable \(x_2\), and the stochastic boundary dynamics as in rule (iv). The boundary term \(x_2=L\) cancels part of the contribution of the boundary dynamics, as we have already seen in our calculation in the case \(n=0\).

- Rule (iii) is responsible for the term \(Y_{17}\). When \(n=2\), the particles at \(x_1\) and \(x_2\) travel towards each other with speed \(H[\hat{\rho }_1,\rho _2]-H[\hat{\rho }_0,\rho _1]\). As \(x_2\) catches up with \(x_1\), the particle \(x_1\) disappears and its density \(\rho _1=\hat{\rho }_1\) is renamed \(\rho _*\), and is integrated out. We then relabel \((x_2,\rho _2)\) as \((x_1,\rho _1)\).

We wish to show that \(X_1=Y_1\). After some cancellation, this simplifies to

where

(The same cancellation led to the Eq. (4.7).) Observe that \(\varGamma (\mathbf q ,t)=\varGamma (\rho _0,x_1,t)+\varGamma (\rho _1,L-x_1,t)\). Moreover, by (4.7),

As a result,

Using this, we learn that the equality \(X'_1=Y'_1\) is equivalent to the identity

By the group property (4.9), we can assert that for any \(C^1\) function h,

We use this and the definition of the quadratic operator Q in (3.10) to deduce that \(X'_1=Y'_1\) is equivalent to the identity

Here we let the quadratic operator Q act on functions because we are assuming that \(f(\rho _-,d\rho _+,t) =f(\rho _-,\rho _+,t) \ d\rho _+\) is absolutely continuous with respect to the Lebesgue measure. We now use (4.13) to assert that \(X'_1=Y'_1\) is equivalent to the identity

On the other hand, by the definition of \(\varGamma \),

where we used the group property (4.9) for the second equality. This leads to

which is exactly our kinetic equation! \(\square \)

General n. We write \(\dot{\mu }^n=X_n\mu ^n\). We have,

From this, (4.14) and (3.10) we deduce

For the right-hand side of (4.3) we write  , where

with \(Y_{15}\) independent of n and defined before, \(Y_{n6}=-A(\hat{\rho }_n,t)\), and

where we used (4.15) for the first and fifth equality. We wish to show that \(X_n=Y_n\). From

and some cancellation, the equality \(X_n=Y_n\) simplifies to \(X'_n=Y'_n\), where

and \(Y'_n=Y''_{11}+Y_{n3}+Y'_{n4}\ \). Observe that \(Y'_{n4}=Y'_{n41}+Y'_{n42}+Y'_{n43}\), and \(Y_{n3}=Y_{n31}+Y_{n32}\), where

From these decompositions, we learn that \(X'_n=Y'_n\) is equivalent to \(X''_n=Y''_n\), where

and \(Y''_n=Y''_{11}+Y_{n32}+Y'_{n42}+Y'_{n43}.\) By the group property (4.9),

This allows us to write

Hence

as desired. For the third equality, we have used (4.9). This completes the proof. \(\square \)

So far, we have been able to formally show that the law of \(\mathbf q (t)\) is \(\mu (d\mathbf q ,t)\), by verifying the forward Eq. (4.3) for every n (recall that n represents the number of particles/shock discontinuities in the interval (0, L)). Our verification of (4.3) is rather tedious but elementary. Our verification is formal at this point because the evolution of \(\mathbf q \) is governed by a discontinuous deterministic dynamics that is interrupted by stochastic Markovian entrance of new particles at the boundary point L. By selecting a pair \(\ell \) and f that are differentiable with respect to time, it is not hard to justify our calculation for the left-hand side of (4.3), as it appeared in (4.16). It is the justification of the right-hand side as in (4.17) that requires additional work.

Writing \(\varPhi _s^t(\mathbf q )\) for \(\mathbf q (t)\) with initial condition \(\mathbf q (s)=\mathbf q \), it suffices to show that for every nice function \(G:\varDelta _L\rightarrow {\mathbb R}\),

Clearly (4.18) implies

which means that the law of \(\mathbf q (t)\) is given by our candidate \(\mu (d\mathbf q ,t)\). For a rigorous proof of this, we calculate the left time-derivative of \({\mathbb E}\ G\big (\varPhi _s^t(\mathbf q )\big )\) by hand and show that this left-derivative equals

5 Homogenizations for Hamiltonian ODEs

The Hamilton-Jacobi PDE may be used to model the growth of an interface that is described as a graph of a height function. More precisely, the graph of a solution

of the Hamilton-Jacobi equation

describes an interface at time t in microscopic coordinates. If the ratio of micro to macro scale is a large number n, then

is the corresponding macroscopic height function. In practice n is large and we may obtain a simpler description of our model if the large n limit of \(u^n\) exists and satisfies a simple equation. Indeed \(u^n\) satisfies

and this equation must be solved for an initial condition of the form \(u^n(x,0)=g(x)\), where g represents the initial macroscopic height function. Let us define

the job of the operator \(\varGamma _n\) is to turn a macroscopic height function into its associated microscopic height function. We also write \(T_t=T_t^H\) for the semigroup associated with the PDE (5.1). More precisely, \(T_tu^0(x)=u(x,t)\) means

In terms of the operators \(T_t\) and \(\varGamma _n\), we simply have \(u^n=\big (\varGamma _n^{-1}\circ T_{nt}\circ \varGamma _n\big )(g).\) Put differently,

where \(\gamma _n(x,p)=(nx,p)\). If we write T(H) for \(T^H_1\), then in particular we have

The hope is that under some assumptions on H, the large-n limit of \(u^n\) exists and the limit \(\bar{u}\) provides a reduced and simpler description of the growth model under study. For example, when H is 1-periodic in the x-variable, the high oscillations of \(H\circ \gamma _n\) may result in the convergence of \(u^n\) to a function \(\bar{u}\) that solves the homogenized equation

When this happens, we write  .
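The rescaling used in this section can be verified by the chain rule. The following sketch assumes that the macroscopic height function is \(u^n(x,t)=n^{-1}u(nx,nt)\), which is the scaling consistent with \(\gamma _n(x,p)=(nx,p)\), and that (5.1) has the form \(u_t=H(x,u_x)\); with the opposite sign convention the same computation goes through unchanged. Since \(u^n_x(x,t)=u_x(nx,nt)\), we get

$$ u^n_t(x,t)=u_t(nx,nt)=H\big (nx,u_x(nx,nt)\big )=H\big (nx,u^n_x(x,t)\big )=(H\circ \gamma _n)\big (x,u^n_x(x,t)\big ). $$

Thus \(u^n\) solves the Hamilton-Jacobi equation with Hamiltonian \(H\circ \gamma _n\), and all of the n-dependence sits in the fast variable nx, which is what makes an averaged Hamiltonian \(\bar{H}\) plausible in the limit.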

More generally, write \({\mathcal H}\) for the space of all \(C^1\) Hamiltonian functions and define the natural translation operator

for every \(a\in {\mathbb R}^d\). We then take a probability measure \({\mathbb P}\) on \({\mathcal H}\) that is translation invariant and ergodic. We wish to take advantage of the ergodicity to assert that \(T^{H\circ \gamma _n}_t\rightarrow T^{\bar{H}}_t\), \({\mathbb P}\)-almost surely, as \(n\rightarrow \infty \). If this happens for a deterministic function \(\bar{H}\), then we write  . We note

- If \({\mathbb P}\) is supported on the set

  $$ A:=\big \{\tau _aH^0:\ a\in {\mathbb R}^d\big \}, $$

  for some 1-periodic Hamiltonian function \(H^0\), then A is isomorphic to the d-dimensional torus and we are back to the periodic scenario.

- If \({\mathbb P}\) is supported on the topological closure \(\bar{A}\) (with respect to the uniform norm) of the set

  $$ A:=\big \{\tau _aH^0:\ a\in {\mathbb R}^d\big \}, $$

  for some Hamiltonian function \(H^0\), and this closure is a compact set, then \(H^0\) is almost periodic and the homogenization would give \(T^{H\circ \gamma _n}_t\rightarrow T^{\bar{H}}_t\) as \(n\rightarrow \infty \), for almost all choices of H in the compact support of \({\mathbb P}\). In this case \(\bar{A}\) has the structure of an Abelian Lie group and \({\mathbb P}\) is the corresponding Haar measure.

To explore the homogenization question further, we discuss the connection between the Hamiltonian ODE and the Hamilton-Jacobi PDE. For a classical solution, the method of characteristics suggests that, at least for short times, we can solve (5.2) in terms of the flow of the Hamiltonian ODE

$$ \dot{x}=H_p(x,p),\qquad \dot{p}=-H_x(x,p). \tag{5.5} $$

Equivalently we write \(\dot{z}=J\nabla H(z)\), where \(z=(x,p)\), and

$$ J=\begin{bmatrix} 0 &amp; I\\ -I &amp; 0 \end{bmatrix}, $$

with I denoting the \(d\times d\) identity matrix. Writing \(\phi _t=\phi ^H_t\) for the flow of (5.5), we have

provided that the left-hand side remains the graph of a function. As we mentioned earlier, Eq. (5.2) does not possess \(C^1\) solutions in general. This has to do with the fact that if \(\phi _t\) folds the graph of \(\nabla u^0\), then the left-hand side of (5.6) is no longer the graph of a function and (5.6) has no chance to be true. One possibility is that we trim the left-hand side of (5.6) and hope for

For this to work, we have to give up the differentiability of u. This geometric and rather naive idea does not suggest how the trimming should be carried out.
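
The folding of the graph can be seen in a toy case. The following is a minimal sketch under assumptions that are ours and not the text's: \(d=1\), the free Hamiltonian \(H(p)=p^2/2\), and the initial datum \(u^0(x)=-\cos x\), so that the initial graph is \(p=\sin x\). For this H the flow is explicit, \(\phi _t(x,p)=(x+tp,p)\), and the image of the graph of \(\nabla u^0\) stays a graph exactly when \(x\mapsto x+t\sin x\) is increasing, that is, for \(t<1\). The function names are illustrative.

```python
import math

def push_forward_graph(t, n=2000):
    """Image of the graph p = sin(x), x in [0, 2*pi], under the flow of
    the assumed Hamiltonian H(p) = p^2/2, i.e. phi_t(x, p) = (x + t*p, p)."""
    xs = [2.0 * math.pi * k / n for k in range(n + 1)]
    return [(x + t * math.sin(x), math.sin(x)) for x in xs]

def is_graph(points):
    """True if the position coordinate is strictly increasing along the
    sampled curve, so that the curve is the graph of a function of x."""
    return all(b[0] > a[0] for a, b in zip(points, points[1:]))

if __name__ == "__main__":
    for t in (0.5, 1.5):
        # Before t = 1 the image is still a graph; after t = 1 it has folded.
        print(t, is_graph(push_forward_graph(t)))
```

For times past the folding time, any single-valued \(u_x\) can only account for part of the folded curve, which is the trimming problem described above.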

Alternatively, we may add a small viscosity term of the form \(\varepsilon \varDelta u\) to the right-hand side of (5.1) to guarantee the existence of a unique classical solution, and pass to the limit \(\varepsilon \rightarrow 0\). The outcome is known as a viscosity solution (see [12]). As it turns out, under some coercivity assumption on H, we can guarantee the existence of a solution that is differentiable almost everywhere. We can now modify the right-hand side of (5.7) accordingly and wonder whether or not

is true. The answer is affirmative if H is convex in p. However, (5.8) may fail if we drop the convexity assumption. To explain this in the case of piecewise smooth solutions, we recall that if H is convex in p, the only discontinuity we can have is a shock discontinuity. In this case, at every point (a, t) with \(t>0\), we can find a solution \(\big ((x(s),p(s)):\ s\in [0,t]\big )\) of the Hamiltonian ODE (the so-called backward characteristic) such that \(x(t)=a\). If \(\rho =u_x\) is continuous at a, this backward characteristic is unique and \(p(t)=\rho (a,t)\). If \(\rho \) is discontinuous at (a, t), then \(\rho (a,t)\) is multi-valued and, for each possible value p of \(\rho (a,t)\), there is a solution to the Hamiltonian ODE with \((x(t),p(t))=(a,p)\). In both cases, we still have (5.8).

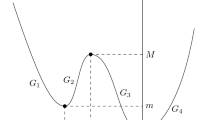

The situation is far more complex when H is not convex. What may cause the violation of (5.8) is the occurrence of rarefaction-type solutions. To explain this, let us assume that \(d=1\) and H depends on p only. Suppose there are three momenta (or densities) \(a_1<a_2<a_3\) such that

- The graph of H is convex and below its chord in \([a_1,a_2]\).

- The graph of H is concave and above its chord in \([a_2,a_3]\).

- The graph of H is below its chord in the interval \([a_1,a_3]\).

Now imagine that we have two discontinuities at x(t) and y(t) with \(x(t)<y(t)\), and both are shock discontinuities. Assume

- The left and right values of \(\rho \) at x(t) are \(a'_2(t)<a'_3(t)\).

- The left and right values of \(\rho \) at y(t) are \(a'_3(t)>a'_1(t)\).

- These two shock discontinuities meet at some instant \(t_0\) with \(a_i'(t_0)=a_i\).

As a result, at the moment \(t_0\) the two shock discontinuities are replaced with a rarefaction wave. Now if we take a point (x, t) inside the fan of this rarefaction wave (for which necessarily \(t>t_0\)), then at such (x, t) the connection with the initial data is lost and \((x,u_x(x,t))\) does not belong to the left-hand side of (5.8).

Motivated by the failure of (5.8) for viscosity solutions, we formulate a question.

Question 5.1:

Is there a notion of generalized solution for (5.1) for which (5.8) is always true?

Using some ideas from topology and symplectic geometry, the notion of geometric solution has been developed by Chaperon [9,10,11], Sikorav [25] and Viterbo [27]. The main features of this solution are as follows:

- (i) The geometric solution always satisfies (5.8).

- (ii) The geometric solution satisfies (5.2) at every differentiability point of u.

- (iii) The geometric solution coincides with the viscosity solution when H is convex in p.

- (iv) Writing \(\hat{T}_tu^0\) for the geometric solution of (5.2) with the initial condition \(u^0\), we do not in general have \(\hat{T}_t\circ \hat{T}_s=\hat{T}_{t+s}\) (except when H is convex in p).

Needless to say, the last feature of the geometric solution is a serious flaw, and so the geometric solution does not provide a satisfactory answer to Question 5.1. Nonetheless the geometric solution is a useful notion that helps us to connect Eq. (5.2) to the Hamiltonian ODEs.

Because of the intimate relation between the Hamilton-Jacobi Equation and the Hamiltonian ODE, we may wonder whether a homogenization phenomenon occurs for the latter. More precisely, does the high-n limit of

exist in a suitable sense? Note that \(H\circ \gamma _n\) has no pointwise limit and the existence of pointwise limit of \(\phi ^{H\circ \gamma _n}_t\) is not expected either. Writing \(\phi _H\) for \(\phi ^H_1\), we may wonder in what sense, if any, the sequence \(\phi _{H\circ \gamma _n}\) has a limit. We note

$$ \phi _{H\circ \gamma _n}=\gamma _n^{-1}\circ \phi _H^n\circ \gamma _n. $$

We now discuss the existence of some interesting metrics on the space \({\mathcal H}\) that are weaker than the uniform norm and are closely related to the flow properties of the Hamiltonian ODEs. More importantly, there is a chance that \(H\circ \gamma _n\) converges with respect to such metrics.

There are two metrics on \({\mathcal H}\) that are well-suited for our purposes. These metrics were defined by Hofer and Viterbo; the proofs of non-triviality of these metrics are highly non-trivial. Let us write down a wish-list for what our metric should satisfy.

Let us write \({\mathcal D}\) for the space of maps \(\varphi \) such that \(\varphi =\phi _H\) for some smooth Hamiltonian function \(H: {\mathbb R}^{2d}\times [0,1]\rightarrow {\mathbb R}\). (Any such map is symplectic as we will see later.) Assume that there exists a function \(E:{\mathcal D}\rightarrow [0,\infty )\) with the following properties: For \(\varphi ,\psi ,\tau \in {\mathcal D}\),

- (i) \(E(\varphi )=E(\varphi ^{-1})\).

- (ii) \(E(\varphi )=E\big (\tau ^{-1}\varphi \tau \big )\).

- (iii) \(E(\varphi \psi )\le E(\varphi )+E(\psi )\).

- (iv) \(E(\varphi )=0\) if and only if \(\varphi = id\).

- (v) \(E\big (\gamma _{\ell }^{-1}\varphi \gamma _\ell \big )=\ell ^{-1}E(\varphi )\), where \(\gamma _\ell (x,p)=(\ell x,p)\) and \(\ell \in (0,\infty )\).

Here and below we simply write \(\varphi \psi \) for \(\varphi \circ \psi \) and think of \({\mathcal D}\) as a group with multiplication given by the map composition.

From E, we build a metric D on \({\mathcal D}\) by \(D(\varphi ,\psi )=E\big (\varphi \psi ^{-1}\big )\). This metric has the following properties:

Proposition 1

(i) \(D(\varphi \tau ,\psi \tau )=D(\tau \varphi ,\tau \psi )=D(\varphi ,\psi )\) for \(\varphi ,\psi ,\tau \in {\mathcal D}\).

(ii) For \(\varphi _1,\psi _1,\dots ,\varphi _k,\psi _k\in {\mathcal D}\), we have

$$ D(\varphi _1\cdots \varphi _k,\psi _1\cdots \psi _k)\le \sum _{i=1}^kD(\varphi _i,\psi _i). $$

(iii) For \(S_n(\varphi )=\gamma _{n}^{-1}\circ \varphi ^n\circ \gamma _n,\) we have

$$ D\big (S_n(\varphi ),S_n(\psi )\big )\le D(\varphi ,\psi ). $$

In the case of a homogenization, we expect \(S_n(\varphi )\rightarrow \bar{\varphi }\), where \(\bar{\varphi }=\phi _{\bar{H}}\), for a Hamiltonian function \(\bar{H}\) that is independent of x. Write \({\mathcal D}_0\) for the space of such \(\bar{\varphi }\). We note that \(S_n(\bar{\varphi })=\bar{\varphi }\). As a result, for any \(\bar{\varphi } \in {\mathcal D}_0\),

$$ D\big (S_n(\varphi ),\bar{\varphi }\big )\le D(\varphi ,\bar{\varphi }), \tag{5.9} $$

by Proposition 1(iii). As was noted by Viterbo [28], (5.9) implies that the set of limit points of the sequence \((S_n(\varphi ):\ n\in {\mathbb N})\) is a singleton: If \(\bar{\varphi }\) and \(\bar{\psi }\) are two limit points, then given \(\delta >0\), we find \(n,m\in {\mathbb N}\) such that

$$ D\big (S_n(\varphi ),\bar{\varphi }\big )\le \delta ,\qquad D\big (S_m(\varphi ),\bar{\psi }\big )\le \delta . $$

From this and (5.9) we learn,

$$ D\big (S_{nm}(\varphi ),\bar{\varphi }\big )\le \delta ,\qquad D\big (S_{nm}(\varphi ),\bar{\psi }\big )\le \delta , $$

because \(S_{nm}=S_n\circ S_m\). Hence \(D(\bar{\varphi },\bar{\psi })\le 2\delta \). By sending \(\delta \rightarrow 0\) we deduce that \(\bar{\varphi }=\bar{\psi }\).
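
The estimates used in this argument follow from the wish-list (i)–(v) alone; the sketch below spells this out. It assumes that Proposition 1(ii) is the subadditivity estimate and Proposition 1(iii) is the contraction estimate for \(S_n\), which is our reading of the statement rather than a quotation.

```latex
% Triangle inequality: D(\varphi,\psi)=E(\varphi\tau^{-1}\,\tau\psi^{-1})
%   \le D(\varphi,\tau)+D(\tau,\psi) by (iii).
% Bi-invariance (Proposition 1(i)) then gives, for k=2,
\begin{align*}
D(\varphi_1\varphi_2,\psi_1\psi_2)
 &\le D(\varphi_1\varphi_2,\psi_1\varphi_2)+D(\psi_1\varphi_2,\psi_1\psi_2)
  = D(\varphi_1,\psi_1)+D(\varphi_2,\psi_2),
\end{align*}
% and induction on k yields
% D(\varphi_1\cdots\varphi_k,\psi_1\cdots\psi_k)\le\sum_i D(\varphi_i,\psi_i).
% In particular D(\varphi^n,\psi^n)\le n\,D(\varphi,\psi), so by (v) with \ell=n,
\begin{align*}
D\big(S_n(\varphi),S_n(\psi)\big)
 &= E\big(\gamma_n^{-1}\varphi^n\psi^{-n}\gamma_n\big)
  = n^{-1}E\big(\varphi^n\psi^{-n}\big)
  = n^{-1}D(\varphi^n,\psi^n)\le D(\varphi,\psi).
\end{align*}
% The identity S_{nm}=S_n\circ S_m follows from \gamma_n\gamma_m=\gamma_{nm}:
\begin{align*}
S_m\big(S_n(\varphi)\big)
 = \gamma_m^{-1}\big(\gamma_n^{-1}\varphi^n\gamma_n\big)^m\gamma_m
 = \gamma_{nm}^{-1}\varphi^{nm}\gamma_{nm}
 = S_{nm}(\varphi).
\end{align*}
```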

A natural question is whether we have homogenization with respect to such a metric.

Question 5.2:

Given \(\varphi \in {\mathcal D}\), does the large n limit of the sequence \(\big \{S_n(\varphi )\big \}\) exist with respect to a metric D as above? \(\square \)
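
To make the rescaled maps \(S_n\) concrete, the following sketch checks numerically that the time-one map of \(H\circ \gamma _n\) agrees with \(S_n(\phi _H)=\gamma _n^{-1}\circ \phi _H^n\circ \gamma _n\). The assumptions are ours: \(d=1\), H is autonomous (so \(\phi _H^n\) is the time-n flow), and \(H(x,p)=p^2/2+\cos (2\pi x)/(2\pi )\) is chosen only for illustration. The integrator is a plain Runge-Kutta scheme, and all function names are hypothetical.

```python
import math

def rk4_flow(field, z, t_final, steps):
    """Integrate dz/dt = field(z) from time 0 to t_final with classical RK4."""
    h = t_final / steps
    x, p = z
    for _ in range(steps):
        k1 = field((x, p))
        k2 = field((x + 0.5 * h * k1[0], p + 0.5 * h * k1[1]))
        k3 = field((x + 0.5 * h * k2[0], p + 0.5 * h * k2[1]))
        k4 = field((x + h * k3[0], p + h * k3[1]))
        x += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        p += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
    return (x, p)

def hamiltonian_field(n):
    """J grad(H o gamma_n) for the assumed H(x,p) = p^2/2 + cos(2 pi x)/(2 pi):
    dx/dt = p, dp/dt = n sin(2 pi n x)."""
    def field(z):
        x, p = z
        return (p, n * math.sin(2.0 * math.pi * n * x))
    return field

def rescaled_map(n, z, steps_per_unit=2000):
    """S_n(phi_H)(z) = gamma_n^{-1} phi_H^n gamma_n (z), gamma_n(x,p) = (n x, p)."""
    x, p = z
    X, P = rk4_flow(hamiltonian_field(1), (n * x, p), float(n), n * steps_per_unit)
    return (X / n, P)

def time_one_map_of_rescaled_H(n, z, steps=6000):
    """phi_{H o gamma_n}(z): time-one map of the rescaled Hamiltonian."""
    return rk4_flow(hamiltonian_field(n), z, 1.0, steps)

if __name__ == "__main__":
    z0 = (0.2, 0.7)
    print(rescaled_map(3, z0))
    print(time_one_map_of_rescaled_H(3, z0))
```

The two printed points should agree up to integration error, reflecting the change of variables \(X(t)=x(nt)/n\), \(P(t)=p(nt)\).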

6 Lagrangian Manifolds and Viterbo’s Metric

Question 5.2 has been answered affirmatively by Viterbo [28] when the Hamiltonian H is periodic in x and the metric D is Viterbo’s metric. We continue with a brief discussion of Viterbo’s metric.

To simplify our presentation, let us assume that H is 1-periodic in x. We may also regard \(u(\cdot ,t)\) as a function on the d-dimensional torus \({\mathbb T}^d\).

To examine the left-hand side of (5.8), assume that initially the solution of the ODE (5.5) satisfies the relationship \(p=\nabla u^0(x)\), for some smooth function \(u^0\). Whenever (5.6) is true, then at time t we have a similar relationship between the components of \(\phi _t(x,p)\). Let us write \(M^t:=\phi _t(M^0)\), where

$$ M^0:=\big \{(x,\nabla u^0(x)):\ x\in {\mathbb T}^d\big \}. $$

To get a feel for \(M^t=\phi _t^H(M^0)\), observe that \(M^0\) is the graph of an exact derivative. Let us refer to such a manifold as an exact Lagrangian graph. In general, if

$$ M=\big \{(x,X(x)):\ x\in {\mathbb T}^d\big \}, $$

for a \(C^1\) vector field X with derivative \(A=DX\), then vectors of the form

$$ \big (v,A(x)v\big ),\qquad v\in {\mathbb R}^d, $$

are tangent to M at the point \((x,X(x))\). What makes M exact is that if \(X=\nabla u\), then the matrix \(A=DX=D^2u\) is symmetric. To state this directly in terms of the tangent vectors, observe

Hence the symmetry of A is equivalent to  identically. (Here \(\bar{\omega }\) is the standard symplectic 2-form of \({\mathbb R}^{2d}\).) Motivated by this we call a manifold M Lagrangian if the restriction of \(\bar{\omega }\) to M is identically 0. The point of this definition is that if \(M^0\) is the graph of an exact derivative, then \(\varphi (M^0)\) may not be a graph of a function. However, when \(\varphi \) preserves the form \(\bar{\omega }\), then \(\varphi (M^0)\) is always a Lagrangian. We say a map \(\varphi \) is symplectic if it preserves \(\bar{\omega }\) in the following sense:


for every \(x\in {\mathbb T}^d\) and every pair of vectors \(a,b\in {\mathbb R}^{2d}\).
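
These notions can be checked by hand in a linear example. The sketch below works under assumptions that are ours: the convention \(\bar{\omega }(a,b)=Ja\cdot b\) with \(J=\begin{bmatrix}0&I\\ -I&0\end{bmatrix}\), dimension \(d=2\), and the linear flow of \(H(x,p)=(|x|^2+|p|^2)/2\). It verifies that this flow preserves \(\bar{\omega }\), and that \(\bar{\omega }\) vanishes on tangent vectors \((v,Av)\) precisely in the symmetric example and not in the non-symmetric one.

```python
import math

D_DIM = 2  # d = 2: phase space R^4 with coordinates (x1, x2, p1, p2)

def apply_J(a):
    """J a for J = [[0, I], [-I, 0]]: (x, p) -> (p, -x)."""
    x, p = a[:D_DIM], a[D_DIM:]
    return p + [-c for c in x]

def omega(a, b):
    """Assumed convention: omega(a, b) = (J a) . b."""
    return sum(u * v for u, v in zip(apply_J(a), b))

def rotation_flow(t, a):
    """Time-t flow of H = (|x|^2 + |p|^2)/2, i.e. x' = p, p' = -x.
    The flow is linear, so it also acts on tangent vectors."""
    x, p = a[:D_DIM], a[D_DIM:]
    c, s = math.cos(t), math.sin(t)
    new_x = [c * xi + s * pi for xi, pi in zip(x, p)]
    new_p = [-s * xi + c * pi for xi, pi in zip(x, p)]
    return new_x + new_p

def tangent_vector(A, v):
    """Tangent vector (v, A v) to the graph {(x, A x)} of a linear map A."""
    Av = [sum(A[i][j] * v[j] for j in range(D_DIM)) for i in range(D_DIM)]
    return v + Av

if __name__ == "__main__":
    a, b = [1.0, 2.0, 0.5, -1.0], [0.3, -0.7, 2.0, 1.5]
    print(omega(a, b), omega(rotation_flow(0.9, a), rotation_flow(0.9, b)))
```

Since the flow preserves \(\bar{\omega }\), the image of a graph with symmetric A is again Lagrangian, even at times when it is no longer a graph.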

It is well-known that the correct topology for the viscosity solution comes from the uniform norm; this has to do with the fact that the viscous approximation of the Hamilton-Jacobi Equation satisfies a maximum principle that survives as we send the viscous term to 0. Since we are now interested in the Hamiltonian ODE, we may try to define some kind of metric on Lagrangian manifolds of the form \(\phi _t^H\big (M^0\big )\), where \(M^0\) is an exact Lagrangian graph. Let us write \({\mathcal L}_0\) for the set of exact Lagrangian graphs, and define

When M is the graph of \(\nabla u\), for some \(C^1\) function \(u:{\mathbb T}^d\rightarrow {\mathbb R}\), we refer to u as the generating function of M. When this is the case, we write \({\mathcal G}(M)=u\). We also write

Viterbo defines a metric on \({\mathcal L}\) that is a generalization of the \(L^\infty \)-metric on its generating function. In other words, the metric D is defined in such way that if \(M^0\) and \(M^1\) are two exact Lagrangian graphs, then

where by \(\Vert \cdot \Vert _\infty \) we really mean the total oscillation:

$$ \Vert u\Vert _\infty :=\max _{x}u(x)-\min _{x}u(x). $$

This definition is quite natural because \({\mathcal L}(u)={\mathcal L}(u+c)\), for any constant c.
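
As a small illustration of why the oscillation is the right quantity, the sketch below computes the distance between two exact Lagrangian graphs on the circle (\(d=1\)) as the oscillation of the difference of their generating functions, and checks that adding a constant to a generating function does not change it. Reading the metric on exact graphs this way is our assumption based on the description above; the grid approximation and the function names are illustrative.

```python
import math

def oscillation(f, n=1000):
    """max f - min f over a uniform grid on the circle R/Z (grid approximation)."""
    values = [f(k / n) for k in range(n)]
    return max(values) - min(values)

def graph_distance(u0, u1, n=1000):
    """Oscillation of u1 - u0, taken here as the distance between the
    graphs of u0' and u1' (an assumed reading of the metric)."""
    return oscillation(lambda x: u1(x) - u0(x), n)

if __name__ == "__main__":
    u0 = lambda x: math.sin(2.0 * math.pi * x)
    u1 = lambda x: math.cos(2.0 * math.pi * x)
    shifted = lambda x: u1(x) + 3.0  # same graph of the derivative as u1
    print(graph_distance(u0, u1), graph_distance(u0, shifted))
```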

To guess how to extend the definition of this metric to \({\mathcal L}\), we need to develop a better understanding of the Hamiltonian ODEs. First, we claim that there exists a functional \({\mathcal I}={\mathcal I}^H\) on the space of paths \(z(\cdot )=(x,p)(\cdot )\), such that \(\dot{z}=J\nabla H(z,t)\) if and only if \(z(\cdot )\) is a critical point of \({\mathcal I}\). Writing the Hamiltonian ODE as \(J\dot{z}+\nabla H(z,t)=0\), it is not hard to come up with an example for \({\mathcal I}\); we use a quadratic term to produce the linear part \(J\dot{z}\), and H to produce \(\nabla H\). The following function \({\mathcal I}:C^1([0, 1];{\mathbb T}^d\times {\mathbb R}^d)\rightarrow {\mathbb R}\) is the integral of the celebrated Cartan-Poincaré form:

$$ {\mathcal I}(z)=\int _0^1\big [p\cdot \dot{x}-H(z,t)\big ]\ dt. $$

Formally, \(\partial {\mathcal I}(z)=-J\dot{z}-\nabla H(z,t)\). More precisely, if \(\eta :[0,1]\rightarrow {\mathbb T}^d\times {\mathbb R}^d\) satisfies \(\eta (0)=\eta (1)=0\), then \(\psi (\delta )={\mathcal I}(z+\delta \eta )\) satisfies

$$ \psi '(0)=\int _0^1\big (-J\dot{z}-\nabla H(z,t)\big )\cdot \eta \ dt. $$
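
The first variation can be computed in a few lines. The sketch below assumes \({\mathcal I}(z)=\int _0^1[p\cdot \dot{x}-H(z,t)]\,dt\) and the convention \(J=\begin{bmatrix}0&I\\ -I&0\end{bmatrix}\), and writes \(\eta =(\hat{x},\hat{p})\).

```latex
\begin{align*}
\psi'(0)
 &=\int_0^1\big[\hat p\cdot\dot x+p\cdot\dot{\hat x}
      -H_x(z,t)\cdot\hat x-H_p(z,t)\cdot\hat p\big]\,dt\\
 &=\big[p\cdot\hat x\big]_{t=0}^{t=1}
   +\int_0^1\big[(-\dot p-H_x(z,t))\cdot\hat x
      +(\dot x-H_p(z,t))\cdot\hat p\big]\,dt\\
 &=\int_0^1\big(-J\dot z-\nabla H(z,t)\big)\cdot\eta\,dt .
\end{align*}
% The boundary term vanishes because \eta(0)=\eta(1)=0, and
% J\dot z=(\dot p,-\dot x), so -J\dot z-\nabla H=(-\dot p-H_x,\ \dot x-H_p).
```

In particular \(\psi '(0)=0\) for all such \(\eta \) exactly when \(\dot{x}=H_p\) and \(\dot{p}=-H_x\).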

We now use this to come up with a generating-like function for \(M^1=\phi _H\big (M^0\big )\), where \(M^0={\mathcal G}(u^0)\). To this end, let us define

In words, \(\varGamma (a)\) consists of position/momentum paths with the position component reaching a at time 1. We note that if \(z\in \varGamma (a)\) and \(\eta \in \varGamma (0)\), then \(z+\delta \eta \in \varGamma (a)\) for all \(\delta \in {\mathbb R}\). We then define \(\hat{\mathcal I}:\varGamma (a)\rightarrow {\mathbb R}\) by

Since we want to use \(\hat{\mathcal I}\) to build a generating function for \(M^1\), observe that \(\varGamma (0)\) is an infinite dimensional vector space and any \(z\in \varGamma (a)\) can be written as

with \(\xi \in \varGamma (0)\). If \(M^1\) is still a graph of function and has a generating function \(u^1\), then what is happening is that we have a solution z satisfying \(\dot{z}=J\nabla H(z,t)\) with

Moreover, if u solves (1.1), then \(u^1(x)=u(x,1)\). Note that if \(w(t)=u(x(t),t)\), then \(\dot{w}=p\cdot \dot{x}-H(z,t)\), or

To separate x(1) from the rest of information in the path \(z(\cdot )\), we define \({\mathcal J}:{\mathbb T}^d\times \varGamma (0)\rightarrow {\mathbb R}\), by

In other words, if \(z=(a,0)+\xi =(x,p)\), and \(\xi =(x',p)\), then \(x'(t)=x(t)-x(1)=x(t)-a\). Now, if we set

for \(z\in \varGamma (a)\), and \(\eta =(\hat{x},\hat{p})\in \varGamma (0)\), then

We can now assert

On the other hand, if we set \(\bar{\psi }(\delta )=\hat{\mathcal I}\big (z+(\delta b,0)\big ) ={\mathcal J}(a+\delta b;\xi )\), then

As a result, if \(\partial _{\xi }{\mathcal J}(a;\xi )=0\), then

From this we deduce

where \(a=x(1)\) represents the position at time 1. We think of \({\mathcal J}(a;\xi )\) as a generalized generating function (or in short GG function) of \(M=M^1\). The Lagrangian \(M^1\) is exact if for every \((a,p)\in {\mathbb T}^d\times {\mathbb R}^d\), there is at most one solution z to the Hamiltonian ODE with \(x(1)=a, p(1)=p.\) Our aim is to associate a nonnegative number E(M) to \(M\in {\mathcal L}\) that in the case of an exact Lagrangian \(M={\mathcal G}(u)\),

where \(E^\pm (M)\) are two critical values of u, namely the maximum and minimum of u. In the case of a non-exact M, we may use the functional \({\mathcal J}={\mathcal J}_M\) to select two critical points \(z^\pm =(a^\pm ,\xi ^\pm )\) of the functional \({\mathcal J}_M\) to define

The main question now is how to select the critical paths \(z^\pm \). The classical theories of Morse and Lusternik-Schnirelman would provide us with systematic ways of selecting critical values of a scalar-valued function on a manifold. (See for example Appendix E of [21] for an introduction on LS Theory.) These theories are applicable if the underlying manifold is finite-dimensional and their generalizations to infinite dimensional setting are highly nontrivial. (Floer Theory is a prime example of such generalization.) However in our setting it is possible to approximate the functional \({\mathcal I}\) or \({\mathcal J}\) with a function that is defined on \({\mathbb T}^d\times {\mathbb R}^N\) for a suitable N that depends on H and \(u^0\) and could be large. More precisely, we may try to find a generating function \(S:{\mathbb T}^d\times {\mathbb R}^N\rightarrow {\mathbb R}\) such that

In fact any manifold of this form is automatically a Lagrangian manifold, simply because the tangent vectors at a point of the form \((x, S_x(x,\xi ))\) are still of the form \(\big (v, A(x,\xi )v\big ); v\in {\mathbb R}^d\), where \(A=S_{xx}\) is a symmetric matrix.
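
The path-space computation that motivates the GG function \({\mathcal J}\) can be sketched as follows. This is a reconstruction under assumptions: we take \(\hat{\mathcal I}(z)=u^0(x(0))+\int _0^1[p\cdot \dot{x}-H(z,t)]\,dt\) on \(\varGamma (a)\), set \({\mathcal J}(a;\xi )=\hat{\mathcal I}\big ((a,0)+\xi \big )\), and use the convention \(J=\begin{bmatrix}0&I\\ -I&0\end{bmatrix}\).

```latex
% Variation inside \varGamma(a): \eta=(\hat x,\hat p)\in\varGamma(0), so \hat x(1)=0.
\begin{align*}
\frac{d}{d\delta}\hat{\mathcal I}(z+\delta\eta)\Big|_{\delta=0}
 &=\big(\nabla u^0(x(0))-p(0)\big)\cdot\hat x(0)
   +\int_0^1\big(-J\dot z-\nabla H(z,t)\big)\cdot\eta\,dt .
\end{align*}
% Hence \partial_\xi\mathcal J(a;\xi)=0 if and only if
% \dot z=J\nabla H(z,t) and p(0)=\nabla u^0(x(0)).
% Variation of the endpoint: z+(\delta b,0) shifts x(\cdot) by the constant \delta b.
\begin{align*}
\frac{d}{d\delta}\hat{\mathcal I}\big(z+(\delta b,0)\big)\Big|_{\delta=0}
 &=\nabla u^0(x(0))\cdot b-\int_0^1 H_x(z,t)\cdot b\,dt
  =\big(p(0)+p(1)-p(0)\big)\cdot b
  =p(1)\cdot b,
\end{align*}
% using \dot p=-H_x and p(0)=\nabla u^0(x(0)) at a critical point. Consequently
\begin{align*}
M^1=\big\{\big(a,\partial_a\mathcal J(a;\xi)\big):\
      \partial_\xi\mathcal J(a;\xi)=0\big\}.
\end{align*}
```

This has the same shape as the finite-dimensional description by a generating function \(S(x,\xi )\), with the path \(\xi \) playing the role of the auxiliary variable.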

To explain the existence of such finite dimensional generating functions, we need to make another observation about the flows of Hamiltonian ODEs.

We may regard the symplectic property of \(\varphi =\phi _1^H\), as saying that its graph

is Lagrangian with respect to the 2-form \(\omega \oplus (-\omega )\) in \({\mathbb R}^{4d}\). This Lagrangian manifold is an exact graph when the set \(Gr(\varphi )\) can be expressed as a graph of the gradient of a scalar-valued function. But now because of the form of the symplectic form \(\omega \oplus (-\omega )\), this must be done in a twisted way. More precisely, if \(\varphi (x,p)=(X,P)\), then the generating function would depend for example on (X, p). In the case of an exact symplectic map, we may find a scalar-valued function S(X, p) such that

$$ x=S_p(X,p),\qquad P=S_X(X,p). $$

The identity map has the generating function \(p\cdot X\). This suggests writing \(S(X,p)=X\cdot p-w(X,p)\) with w periodic in X. In terms of w,

$$ x=X-w_p(X,p),\qquad P=p-w_X(X,p). $$

Now imagine that \(M=\varphi (M^0)\), where both \(M^0\) and \(\varphi \) are exact with generating functions \(u^0\) and \(S(X,p)=X\cdot p-w(X,p)\). Then

is a GG function for \(M^1\): If \(\xi =(x,p)\), then

As a result

because \(\hat{S}_X=p-w_X(X,p)=P\).
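
A concrete map of this type may help. The sketch below assumes the relations \(x=X-w_p(X,p)\), \(P=p-w_X(X,p)\) for a generating function \(S(X,p)=X\cdot p-w(X,p)\), takes \(d=1\), and uses \(w(X,p)=p^2/2+K\cos (2\pi X)/(2\pi )\) with a coupling K of our choosing. For this w the relations can be solved explicitly, and the resulting map is checked to be area preserving (which is what symplectic means when \(d=1\)) by finite differences.

```python
import math

K = 0.3  # illustrative coupling strength (our choice, not from the text)

def w_X(X, p):
    """Partial derivative in X of w(X,p) = p^2/2 + K cos(2 pi X)/(2 pi)."""
    return -K * math.sin(2.0 * math.pi * X)

def w_p(X, p):
    """Partial derivative in p of the same w."""
    return p

def symplectic_map(x, p):
    """Solve x = X - w_p(X,p), P = p - w_X(X,p) for (X, P).
    For this w the first relation gives X = x + p explicitly."""
    X = x + p
    P = p - w_X(X, p)
    return X, P

def jacobian_determinant(x, p, h=1e-6):
    """det of D(X,P)/D(x,p) by central finite differences."""
    Xa, Pa = symplectic_map(x + h, p)
    Xb, Pb = symplectic_map(x - h, p)
    Xc, Pc = symplectic_map(x, p + h)
    Xd, Pd = symplectic_map(x, p - h)
    dXdx, dPdx = (Xa - Xb) / (2 * h), (Pa - Pb) / (2 * h)
    dXdp, dPdp = (Xc - Xd) / (2 * h), (Pc - Pd) / (2 * h)
    return dXdx * dPdp - dXdp * dPdx

if __name__ == "__main__":
    print(symplectic_map(0.1, 0.25), jacobian_determinant(0.1, 0.25))
```

With \(K=0\) the map reduces to the shear \((x,p)\mapsto (x+p,p)\), the time-one map of \(H(p)=p^2/2\).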

As we mentioned earlier, the identity map has a generating function. Using the Implicit Function Theorem, it is not hard to show that any symplectic map that is \(C^1\)-close to the identity also possesses a generating function. Now if \(\varphi =\phi _H\) is the time-one map associated with a smooth Hamiltonian, then we can find \(\delta >0\) sufficiently small, such that the map \(\phi ^H_\delta \) is sufficiently close to the identity map and possesses a generating function. In general, each \(\phi _H\) can be expressed as \(\varphi ^1\circ \dots \circ \varphi ^N\) with each \(\varphi ^i\) possessing a generating function as above. If each \(\varphi ^i\) has a generating function of the form \(X\cdot p-w^i(X,p)\), then \(M=\varphi (M^0)\) has a generating function of the form

We refer to [22] and Chapter 9 of [21] for more details on generating functions.

So far we know that our Lagrangian manifolds possess finite-dimensional generating functions. The next question to address is how to select appropriate critical values \(E^\pm (M)\) for \(\hat{S}(X;\xi )\).

For the rest of this section, we assume that M is a Lagrangian manifold with a generating function \(S(x,\xi )\). More precisely,

and \(S(x,\xi )\) is a nice perturbation of a quadratic function in \(\xi \). By this we mean that there exists a quadratic function \(B(\xi )= A\xi \cdot \xi \) such that A is an invertible symmetric matrix, and

We wish to put a metric on the space \({\mathcal L}\) of such Lagrangians. For this, we first wish to define the size E(M) of a Lagrangian manifold M. If M is an exact Lagrangian graph with generating function u, we simply set

$$ E(M):=\max u-\min u. $$

If M can be represented as in (6.1), then E(M) is defined by

$$ E(M):=E^+(M)-E^-(M), $$

where \(E^-(M)\) and \(E^+(M)\) are two critical values of the generating function S that are the analogs of \(\min u\) and \(\max u\). To explain our strategy for selecting \(E^{\pm }(M)\), first imagine that \(S(x,\xi )=u(x)+B(\xi )\). Then we still have \(E^-(M)=\min u=u(x_-)\) and \(E^+(M)=\max u=u(x_+)\), because both \((x_{\pm },0)\) are critical points of S. After all, 0 is a critical point of B. We may apply Lusternik-Schnirelman (LS) Theory to assert that the function S also has two critical points that are very much the analogs of \((x_\pm ,0)\). (See [28] and Appendix E of [21].) We are now ready to define a metric on the space of Lagrangian manifolds that possess a generating function as in (6.1). If M and \(M'\) are two Lagrangian manifolds with generating functions S and \(S'\) respectively, then we define a new generating function

This new generating function produces a new Lagrangian manifold

This generating function is a bounded perturbation of \(\big (B\ominus B'\big )(\xi _1,\xi _2)=B(\xi _1)-B'(\xi _2)\). We set

We now would like to use the above metric to define a metric for Hamiltonian functions or their corresponding flows that was defined by Viterbo:

Theorem 4

(Viterbo [28]) The large n-limit of \(H\circ \gamma _n\) exists with respect to the Viterbo Metric D. Moreover, if the limit is denoted by \({\mathcal B}(H)\), then \({\mathcal B}\) satisfies the following properties

- (i) For every symplectic \(\varphi \in {\mathcal D}\), we have \({\mathcal B}(H\circ \varphi )={\mathcal B}(H)\).

- (ii) If \(\{H,K\}:=J\nabla H\cdot \nabla K=0\), then \({\mathcal B}(H+K)={\mathcal B}(H)+{\mathcal B}(K)\).

This should be compared with the Lions-Papanicolaou-Varadhan [18] homogenization result.

Theorem 5

Assume that H(x, p) is a \(C^1\), x-periodic Hamiltonian function with

Then the large n limit of \(T^{H\circ \gamma _n}\) exists. The limit is of the form \(T^{\bar{H}}\), for a Hamiltonian function  that is independent of x.


In fact  when H is convex in p; otherwise they could be different. Moreover, Theorem 5 has been extended to the random ergodic setting when H is convex in p in Rezakhanlou-Tarver [23] and Souganidis [24]. A natural question is whether or not Theorem 4 can be extended to the random setting.


Question 6.1:

Can we extend Viterbo’s metric (or Hofer’s metric) to the random setting and does the large n limit of \(H\circ \gamma _n\) exist for a stationary ergodic Hamiltonian H? \(\square \)

References

Abramson, J., Evans, S.N.: Lipschitz minorants of Brownian motion and Lévy processes. Probab. Theory Relat. Fields 158, 809–857 (2014)

Aldous, D.J.: Deterministic and stochastic models for coalescence (aggregation and coagulation): a review of the mean-field theory for probabilists. Bernoulli 5, 3–48 (1999)

Aurell, E., Frisch, U., She, Z.-S.: The inviscid Burgers equation with initial data of Brownian type. Commun. Math. Phys. 148, 623–641 (1992)

Bertoin, J.: The Inviscid Burgers Equation with Brownian initial velocity. Commun. Math. Phys. 193, 397–406 (1998)

Burgers, J.M.: A mathematical model illustrating the theory of turbulence. In: Von Mises, R., Von Karman, T. (eds.) Advances in Applied Mechanics, vol. 1, pp. 171–199. Elsevier Science (1948)

Carraro, L., Duchon, J.: Solutions statistiques intrinsèques de l’équation de Burgers et processus de Lévy. Comptes Rendus de l’Académie des Sciences. Série I. Mathématique 319, 855–858 (1994)

Carraro, L., Duchon, J.: Équation de Burgers avec conditions initiales à accroissements indépendants et homogènes. Annales de l’Institut Henri Poincaré (C) Non Linear Analysis 15, 431–458 (1998)

Chabanol, M.-L., Duchon, J.: Markovian solutions of inviscid Burgers equation. J. Stat. Phys. 114, 525–534 (2004)

Chaperon, M.: Une idée du type géodésiques brisées pour les systèmes hamiltoniens. C. R. Acad. Sci. Paris Sér. I Math. 298, 293–296 (1984)

Chaperon, M.: Familles Génératrices. Cours donné à l’école d’été Erasmus de Samos (1990)

Chaperon, M.: Lois de conservation et géométrie symplectique. C. R. Acad. Sci. Paris Sér. I Math. 312, 345–348 (1991)

Evans, L.C.: Partial Differential Equations. Graduate Studies in Mathematics. American Mathematical Society, USA (2010)

Frachebourg, L., Martin, Ph.A.: Exact statistical properties of the Burgers equation. J. Fluid Mech. 417, 323–349 (2000)

Getoor, R.K.: Splitting times and shift functionals. Z. Wahrscheinlichkeitstheorie verw. Gebiete 47, 69–81 (1979)

Groeneboom, P.: Brownian motion with a parabolic drift and Airy functions. Probab. Theory Relat. Fields 81, 79–109 (1989)

Kaspar, D., Rezakhanlou, F.: Scalar conservation laws with monotone pure-jump Markov initial conditions. Probab. Theory Related Fields 165, 867–899 (2016)

Kaspar, D., Rezakhanlou, F.: Kinetic statistics of scalar conservation laws with piecewise-deterministic Markov process data (preprint)

Lions, P.L., Papanicolaou, G., Varadhan, S.R.S.: Homogenization of Hamilton-Jacobi equations (unpublished)

Menon, G., Pego, R.L.: Universality classes in Burgers turbulence. Commun. Math. Phys. 273, 177–202 (2007)

Menon, G., Srinivasan, R.: Kinetic theory and Lax equations for shock clustering and Burgers turbulence. J. Statist. Phys. 140, 1–29 (2010)

Rezakhanlou, F.: Lectures on Symplectic Geometry. https://math.berkeley.edu/rezakhan/symplectic.pdf

Rezakhanlou, F.: Hamiltonian ODE, Homogenization, and Symplectic Topology. https://math.berkeley.edu/rezakhan/WKAM.pdf

Rezakhanlou, F., Tarver, J.E.: Homogenization for stochastic Hamilton-Jacobi equations. Arch. Ration. Mech. Anal. 151, 277–309 (2000)

Souganidis, P.E.: Stochastic homogenization of Hamilton-Jacobi equations and some applications. Asymptot. Anal. 20(1), 1–11 (1999)

Sikorav, J.-C.: Sur les immersions lagrangiennes dans un fibré cotangent admettant une phase génératrice globale. C. R. Acad. Sci. Paris Sér. I Math. 302, 119–122 (1986)

Sinai, Y.G.: Statistics of shocks in solutions of inviscid Burgers equation. Commun. Math. Phys. 148, 601–621 (1992)

Viterbo, C.: Solutions of Hamilton-Jacobi equations and symplectic geometry. Addendum to: Séminaire sur les Equations aux Dérivées Partielles. 1994–1995 [école Polytech., Palaiseau, 1995]

Viterbo, C.: Symplectic Homogenization (2014). arXiv:0801.0206v3

Acknowledgments

These notes are based on the mini course that was given by the author at Institut Henri Poincaré, Centre Emile Borel, during the trimester Stochastic Dynamics Out of Equilibrium. The author thanks this institution for hospitality and support. He is also very grateful to the organizers of the program for the invitation and an excellent research environment. Special thanks to two anonymous referees for their careful reading of these notes and their many insightful comments and suggestions.


© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Rezakhanlou, F. (2019). Stochastic Solutions to Hamilton-Jacobi Equations. In: Giacomin, G., Olla, S., Saada, E., Spohn, H., Stoltz, G. (eds) Stochastic Dynamics Out of Equilibrium. IHPStochDyn 2017. Springer Proceedings in Mathematics & Statistics, vol 282. Springer, Cham. https://doi.org/10.1007/978-3-030-15096-9_5


DOI: https://doi.org/10.1007/978-3-030-15096-9_5


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-15095-2

Online ISBN: 978-3-030-15096-9

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)