Abstract

Over the earlier decades of the last century, researchers began to explore the use of people as “measuring instruments.” For food, this systematized effort began in earnest with ratings of liking of different products, with such ratings being analyzed for differences (Peryam and Pilgrim 1957). Measurement by itself, no matter how well executed, was not sufficient. It would take a change of world view, from the measurement of single items or the happenstance discovery of relations, to the world of systematics: DOE, the design of experiments.

Introduction: Systematics

Over the earlier decades of the last century, researchers began to explore the use of people as “measuring instruments.” For food, this systematized effort began in earnest with ratings of liking of different products, with such ratings being analyzed for differences (Peryam and Pilgrim 1957). Measurement by itself, no matter how well executed, was not sufficient. It would take a change of world view, from the measurement of single items or the happenstance discovery of relations, to the world of systematics: DOE, the design of experiments.

The new psychophysics has had a direct impact on the world of product design and perhaps one that was anticipated. More enterprising researchers realized, however, that they had a tool by which to understand how ingredients drove responses, whether the response be the perceived sensory intensity of the food or beverage (e.g., the sweetness of cola) or the degree of liking. Psychophysicists, specializing in the study of the relations between sensory magnitude and physical intensity, soon began to contribute to this effort, especially with simple systems, such as colas and some foods (Moskowitz et al. 1979). What is important to keep in mind is that these stimulus-response curves provided foundational knowledge. It was quickly discovered that the same percent change in different ingredients could very well produce radically different perceived changes. Doubling the concentration of a flavor ingredient, for example, was seen to be less effective than doubling the concentration of sugar for the same food. We will elaborate on this type of thinking below, when we deal with stimulus-response analysis and response surface designs.

Stimulus-Response Analysis

Product design becomes far more powerful when we move from testing one product or several unrelated products to a set of prototypes that are systematically varied. The original thinking comes from both statisticians who promoted the idea of DOE (design of experiments) and from psychophysicists who promoted the idea of uncovering lawful relations between physical stimuli and subjective responses.

Beyond the design of experiments, that is, the creation of the systematically varied prototypes, lies an entire body of statistics known as regression analysis or curve fitting. With regression analysis one discovers how a physical ingredient or set of ingredients drives a response, the most important response being overall liking. Furthermore, with nonlinear regression analysis, it becomes easier to uncover optimal or most highly liked product formulations that lie within the range of prototypes tested but are not necessarily among the prototypes actually created (Box et al. 2005; Cochran 1950).

The author’s experience is that a reasonably simple polynomial fits the data. Regression modeling typically creates either a linear plot, described by a linear equation and shown schematically in Fig. 1 (left panels), or a curved plot, described by a quadratic equation and shown in Fig. 1 (right panels). The plot can either reflect a one-ingredient system (panel A, panel B) or a two-or-more ingredient system (panel C, panel D). We present a visual only of the two-ingredient system, but the mathematical modeling can accommodate many more independent variables, even when we cannot easily visualize the model. The plots represent the type of relations one might observe when the dependent variable (ordinate) continues to increase linearly with increases in the independent variable (panel A, panel C) or when the dependent variable reaches a maximum at some intermediate point of one or both independent variables (panel B, panel D).
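To make the quadratic case concrete, here is a minimal sketch in Python of fitting a one-ingredient quadratic and locating its optimum. The data are invented for illustration (they were constructed from the equation liking = 20 + 10x − x²), and the function names `fit_quadratic` and `optimum_level` are hypothetical, not taken from the cited sources.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit of y = b0 + b1*x + b2*x**2 via the normal equations."""
    # Power sums of x and cross-products with y form the 3x3 normal equations.
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    m = [[s[i + j] for j in range(3)] + [t[i]] for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(3):
            if r != col:
                f = m[r][col]
                m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    return [m[i][3] for i in range(3)]


def optimum_level(coefs, low, high):
    """Ingredient level in [low, high] with the highest predicted liking."""
    b0, b1, b2 = coefs

    def predict(x):
        return b0 + b1 * x + b2 * x * x

    candidates = [low, high]
    if b2 < 0:  # inverted U: the vertex is a maximum
        vertex = -b1 / (2 * b2)
        if low <= vertex <= high:
            candidates.append(vertex)
    return max(candidates, key=predict)


# Invented data: five prototypes differing only in sugar level (percent).
sugar = [2, 4, 6, 8, 10]
liking = [36, 44, 44, 36, 20]

coefs = fit_quadratic(sugar, liking)
best = optimum_level(coefs, min(sugar), max(sugar))
print("coefficients (b0, b1, b2):", [round(c, 3) for c in coefs])
print("estimated optimum sugar level:", round(best, 2))
```

With these invented ratings the vertex of the fitted curve falls at a sugar level of 5, a level that is not among the five prototypes tested. The search is deliberately restricted to the tested range, since the model says nothing reliable outside it.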

Regression modeling plays an important role in product design for at least two reasons:

1. Regression provides insights into what might be important, thus giving direction for product design. The developer creates a quantitative structure to discover what operationally varied factor might be important, rapidly providing insights that could not be obtained were the effort to be focused on one product.

2. The second, perhaps more important, is that regression analysis forces the necessary shift from focusing on one product to focusing on many products. One soon realizes the futility of efforts to understand the drivers of liking by relating the rating of liking for one product to the rating of sensory attributes for the same product, using as inputs both the sensory and the liking ratings assigned to a single product by many respondents. The reliance on one product alone produces a fallacious approach which confuses the “noise- or variability-based” information one learns from the variation in responses to one product with the “signal-based” information from the variation in responses to many products. As the intellectual development of the field proceeded, this change in focus, from the study of variability to the study of patterns, would inevitably lead to more powerful tools.

The question often arises as to the reason for the late adoption of experimental design and modeling in the world of food development. Almost 50 years ago, the author had the opportunity to meet and chat with two leaders in experimental design, Mr. Al May of Pillsbury Inc., and Dr. Robert Carbonell of Standard Brands, Inc. (later a division of Nabisco, Inc.). The discussions of both of those meetings, one in Minneapolis with Al May, the other in New Jersey with Bob Carbonell, focused on this topic. Both suggested quite separately that the field of product design was simply not ready. Both were strong proponents of design and could show success, but the “Zeitgeist” was strongly influenced by the spirit of expert panels and descriptive analysis. It would take a generational shift, pushed by quite young researchers in their first real jobs, these being Dr. Herbert Meiselman (editor of this volume) and Dr. Howard Moskowitz (author of this chapter). Both researchers would pull the world of evaluation out from the cloister of expert panel to the world of consumers, whether these be soldiers (studied by Meiselman) or civilians (studied by Moskowitz, first for the US Army, and later for the food industry).

1970s–2000: Large-Scale Response Surface Modeling and Subsequent Business Success

As we proceed with the history, we come to new developments, best called “systematics.” Product development would evolve to a systematic exploration of alternative prototypes. The logic of the approach might seem obvious today, since it has produced many corporate successes in its wake, but the approach was new to managers at the time of these efforts, the 1970s–2000s. There were four main obstacles, cultural barriers that would have to be overcome. It is relevant to note these barriers because they apply to many approaches which may disrupt conventionally accepted methods, even though these new approaches produced better results:

1. Effort deflected from the standard procedures: To produce the prototypes often required plant runs, taking away valuable plant time from producing products.

2. Resistance to experimentation because some prototypes are clearly not optimal: Product developers were accustomed to presenting one or two prototypes to be tested. The notion of testing “nonoptimal products” was simply not reasonable.

3. Fear of technically sophisticated methods: Many researchers were not familiar with the thinking and statistics of systematic experimentation.

4. Expansion of responsibilities requires breaking down conventional corporate silos: Sensory analysts worked with in-house panels, not with external panels, and positioned themselves as a testing service rather than as a design service.

Food design would change beginning in the 1970s, primarily because of the cooperation of market research and psychophysics, consumer researchers, and the functionalist agenda. During the 1970s and 1980s sensory researchers took a different path, enjoying acceptance as the in-house specialists. Their in-house roles entailed building their staff, conducting the preliminary in-house testing of products for early-stage development, and occasionally vying with market researchers. Furthermore, food research done by the in-house specialists moved away from representative consumer panelists, who were left to the market research world. The strategy moved the sensory world away from consumer research, toward a focus on execution rather than on business problem-solving.

At the same time, market researchers, focusing as they did on consumer responses, began to recognize the value of psychophysics, not so much as a science but rather as a tool by which to design products. In the world of consumers, marketing researchers already enjoyed a dramatic competitive advantage versus the sensory professional. They did not experience the existential crisis facing the sensory world, namely, the prospect of abandoning one’s expertise (descriptive analysis) for another world view (product design). Rather, the problem faced by market researchers was to understand how these new methods, collectively called RSM, response surface methods, could improve what the researcher was already doing, namely, testing one or two products with consumers in order to drive market success.

Some original work had been done in the early 1970s with the sensory professionals at Fermco Biochemics, Inc., on the sweetness and likeability of mixtures of aspartame, cyclamate, and saccharin in a cola beverage, the aforementioned study (Moskowitz et al. 1979).

The 1970s witnessed the beginning of a three-decade use of large-scale studies to design new food products. These studies involved products as varied as Pizza Hut pizzas (traditional, pan), General Foods’ Maxwell House Coffee (both roast and ground and instant), Tropicana’s Grovestand® Orange Juice, Vlasic’s Zesty Pickle®, and indeed the full range of pickles (from level 1–4), Campbell Soup’s Prego® (pasta sauce), Campbell Chicken Soup®, Hormel Foods’ Spam®, Oscar Mayer low fat deli meats, and Diet Dr. Pepper Cherry®, a carbonated beverage.

Reading the above, one might get the impression that the world of the 1970s to about 2010 was open to these new methods of experimental design. The answer is a definite yes as well as a definite no. Several large-scale RSM studies, run to optimize products, generated new products and, in some cases, major new business opportunities. But the story is more complex. The studies were funded by top management, usually requested either by marketing research convincing R&D or by R&D product development requesting the opportunity to try these new approaches to create products.

The Zeitgeist of the 1970s: Openness and Optimism

Now that we have heard about the actual experience, the interesting things to share are the “why” and the “what”: why did the Zeitgeist encourage or, perhaps better, allow these studies, and what did these studies comprise?

The Zeitgeist of the 1970s was one of optimism. The Vietnam War was finishing, computers were coming on the scene with the first microcomputers to appear in 1976, and many of the top managers in companies realized that the next decade would see more competitors arise to grab revenues and share. Added to that was the generation of young, better educated top managers, and a few middle-managers as well.

Food design in the 1970s might be summarized as optimistic, characterized by open competition, bubbling up with technology that could be used after a short stint of studying, and perhaps most important, an openness to exploration. The business climate was such that the market research suppliers were able to meet with clients and with top management, to present the latter with their arguments about why these new RSM methods and the testing of multiple products were a good thing to try. The deadening effect of preferred supplier contracts “uber alles” had not yet taken hold to destroy the spirit of exploration. In short, people were open to ideas, not wedded to control through process.

What Was and Remains Involved in the RSM Effort

One cannot disguise the reality that creating an array of systematically varied products according to an experimental design demands concentration, resources, and hope. Marketing management must restrain its desire for “quick” hits. Product developers must accept the job of making products, even if in the heart they just “know” from experience that some prototypes in the design will be no good and must stop themselves from rejecting the efforts by their complaints that it is a waste of time and effort to create prototypes which are clearly going to score poorly in a test.

In the end, RSM dictated that the developer should create from as few as 6–8 prototypes to as many as 45 or more. The effort takes time and resources, but enlightened management accepted the necessity of disciplined effort. Once the prototypes were developed, it was a matter of testing the products among consumers, a task that turned into a modest extension of what was already being done.

Unexpected Benefits from RSM: Sensory Preference Segmentation and Cost-Based Optimizations

Although the skeptic might criticize what would become relatively high expenses for these tests compared to the in-house preference tests with employees or compared to the competitively bid preference tests or acceptance tests run by market researchers, the outcomes of these large-scale RSM studies proved to management how valuable the results were in terms of business. In fact, the success of corporations, especially the Campbell Soup Company and Prego, led to one of the most popular TED talks, which was given by Malcolm Gladwell. The talk was a first, celebrating the marriage of psychophysical thinking, product design, and corporate vision, and was repeated and updated 8 years later (Gladwell 2012).

We can enumerate at least three different uses and thus business benefits of the RSM data, beyond simply getting the single best product. These different benefits, often ignored today in the haste to launch, and in the slavery of timelines and budgets, have provided corporations with decades of revenue and powerful business intelligence.

1. Sensory preference segmentation. We know that people vary. As noted above, the sensory specialist involved in training descriptive panels ignored this issue as simply not relevant. The conventional market researcher, working in the world of paired preference tests or even single product tests (so-called pure monadic tests), treated the variability as interesting, not as something directly relevant to business but as an “insight,” something to include in the report and perhaps some signal of “something going on that could be understood.” In other words, no one knew what to do with the variability.

Psychophysicists had also found individual variability in what people liked. In fact, when one instructed the respondent to rate the “liking” of a set of sugar solutions of different concentrations presented in irregular order, the results looked like the modal curve in the left panel of Fig. 2. The modal curve may have been an average curve, but it was hiding a very important message from nature. It quickly became obvious that people were different in a consistent manner. Some people liked increasing concentrations of sugar water, i.e., they preferred higher sweetness. Others preferred lower concentrations of sugar water, i.e., they preferred lower sweetness. These individual differences appeared to be systematic in the context of the evaluation of an array of different products. When the results from RSM were analyzed to reveal different groups of consumers with clearly different sensory preferences in actual foods, the newly revealed segmentation, now intuitively so obvious, drove the development of profitable new lines of related products in the same category, in order to satisfy the preferences of the different taste-preference segments.

Sensory-liking curves. The figure shows the typical sensory-liking curve with a single optimum (so-called bliss point), obtained from averaging the results from the total panel (left figure), and the underlying set of sensory-liking curves, one per respondent, which combine to generate the total panel data (right figure)

-

2.

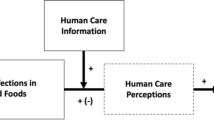

Optimizing products subject to imposed constraints. Experimental designs allow the research to create equations relating the ingredients to acceptance, to sensory perceptions, to objective measures (e.g., calories, nutrition), and even to image attributes that one does not ordinary think of as sensory or liking attributes, e.g., “perceived healthfulness.” Table 1 shows a large-scale experimental design, used to deal with solving the conundrum of how the company could discover a mixture of ingredients, so that the addition of that mixture to a flagship product allowed it to reduce salt in the product. By using various ingredients, combined by design using RSM, the company could empirically develop the necessary knowledge to reduce the sodium content to the desired level, and yet maintain product acceptability. Such efforts generated many different in-market products, such as orange juice, pizza, coffee, and others mentioned above.

Creating the experimental design and developing products are followed by the evaluation of the prototypes, then the creation of equations relating the ingredients, and combinations of ingredients, to each of the ratings and physical measures (e.g., Na level). The corporate effort to create the prototypes and test these prototypes with relatively few respondents per prototype (e.g., 300 respondents, each tasting five to eight samples) generates the necessary data.

The equations enable the product designer to identify the precise combination of ingredients that satisfies specific objectives, even when the actual combination of ingredients had not been directly tested but lies within the range of ingredients covered by the prototypes. The approach is described extensively in Moskowitz (1994).

Table 2 shows the estimated formulation for four products, with formula levels constrained to lie within the range tested of the six ingredients. The estimated formulations were required to obey imposed constraint(s), on the objective amount of sodium (Na), and the estimated subjective ratings, respectively.

3. Reverse engineering. The RSM approach enables the product designer and developer to specify a sensory and/or objective profile (e.g., profile of physical measures) and, in turn, identify the approximate formulation which will generate the desired sensory profile as closely as possible. At the same time, the independent variables and the dependent variables must all lie within imposed constraints. The imposed constraint may be simply the levels achieved in the study (e.g., low versus high ingredient level for ingredients or low versus high sensory levels achieved in the study). The imposed constraint may be more demanding, such as duplicating a sensory profile or objective profile, remaining within the limits of the independent variables (ingredients) already tested but achieving some predetermined benchmark, such as a basic, high level of acceptance.

The approach, reverse engineering, was suggested by the author as a cost-efficient, rapid, evidence-based method to translate an “image” profile into an ingredient profile, without need to profoundly understand the product from years of prior experience (Moskowitz 1994). Image profiles might be complicated attributes with specific levels for the rating of “for adults,” “for evening,” and “seems expensive,” respectively. These three attributes (adult, evening, expensive) are neither sensory attributes nor evaluative, liking or purchase attributes. They are something else, a new type of description. Yet, they can be incorporated into the RSM study, optimized, and reverse engineered, respectively.

In some cases, the reverse engineering approach revealed that the self-profiled ideal or the product with so-called perfect JAR scores (i.e., just about right on everything) did not produce the desired, optimal products (author’s observations). The experimental design comprised the necessary array of prototypes to create the model. The respondents rated the products on JAR scales for specific sensory attributes. The respondents also rated the desired “ideal product” on the same scale that they used to profile the products. A product configured to produce the self-designed ideal or a product configured to produce JAR values close to 0 did not end up being the optimal product (Moskowitz 2001), although one might think so from the very simplicity and directness of the experiment (Moskowitz 1972) (Table 3).

Table 3 Estimated formulations, liking, sensory profile, and Na level for a product specified in terms of a goal sensory profile to be matched
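The constrained optimization and reverse engineering described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the author's software: the two ingredients, the four fitted equations, and every coefficient are invented, and a simple grid search over the tested range stands in for whatever optimizer was actually used.

```python
from itertools import product

# Hypothetical fitted equations for two ingredients, each tested from 1.0 to 5.0.
# Every coefficient below is invented for illustration.
def liking(salt, herb):
    return 40 + 8 * salt + 6 * herb - salt ** 2 - herb ** 2

def sodium_mg(salt, herb):
    return 100 + 60 * salt

def saltiness(salt, herb):
    return 10 + 12 * salt + 2 * herb

def aroma(salt, herb):
    return 5 + 9 * herb

# Candidate formulations: a grid that stays inside the tested range.
LEVELS = [1 + 0.5 * i for i in range(9)]
GRID = list(product(LEVELS, LEVELS))

def constrained_optimum(max_sodium):
    """Most-liked formulation whose predicted sodium does not exceed the limit."""
    feasible = [f for f in GRID if sodium_mg(*f) <= max_sodium]
    return max(feasible, key=lambda f: liking(*f))  # ValueError if none is feasible

def reverse_engineer(target, min_liking):
    """Formulation whose predicted sensory profile is closest to the target,
    among formulations that reach a minimum predicted liking."""
    def distance(f):
        return ((saltiness(*f) - target["saltiness"]) ** 2
                + (aroma(*f) - target["aroma"]) ** 2)
    feasible = [f for f in GRID if liking(*f) >= min_liking]
    return min(feasible, key=distance)

unconstrained = max(GRID, key=lambda f: liking(*f))
low_sodium = constrained_optimum(max_sodium=280)
matched = reverse_engineer({"saltiness": 40, "aroma": 32}, min_liking=60)

print("unconstrained optimum (salt, herb):", unconstrained)
print("optimum with sodium <= 280 mg:", low_sodium)
print("formulation matching the target profile:", matched)
```

In this toy example the sodium limit pushes the salt level below its unconstrained optimum at a small cost in predicted liking, which is the trade-off the equations are meant to expose. None of the returned formulations has to coincide with a prototype that was actually made.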

The Industry Pivots to Efficient Designs

The early 1990s witnessed the proliferation of personal computers, the increasing sophistication of company employees, and also a fight between the corporate staff and the increasingly powerful purchasing department. These developments would wreak havoc on the development of smarter, more effective product design. Companies would become more focused on survival in an increasingly competitive environment, where knowledge was created and deployed increasingly faster. To maintain corporate stock prices, most visible and funded efforts focused on cutting costs. Perhaps the most devastating effect came from the actions of the corporate purchasing departments, which, in their attempt to cut costs, insisted on standardizing consumer measurements, limiting knowledge about markets and consumers to a few “approved” vendors.

The immediate effects of these corporate changes were to reduce almost all efforts in product design to small, easy-to-run, inexpensive studies. The foundational studies which ended up making hundreds of millions of dollars, and even in some cases more, were abandoned in favor of so-called beauty contests, to pick the “best performer” from a set of competitors. Optimization turned into these beauty contests, so that when one “optimized” a product, one simply picked the best from a set of candidates, rather than synthesizing the best product using an underlying model embedding one’s knowledge.

Smaller Experiments, Agile Thinking

In 2014 the author was invited to participate in a panel on creating healthier products. The request was to present to the audience a feasible method for improving the chances of product success, based upon experimental design. The key was “feasible.” The invitation in 2014 was extended during the retrenchment of corporate efforts. The rationale for the invitation was that a few professionals recognized the need for more effective product design which would create products that people wanted, rather than just products introduced by nervous marketers as a knee-jerk reaction to the action of competitors which had just launched a new entry into the market.

It was at the 2014 IFT meeting on health, held in the winter in Chicago, that the author suggested a new version of experimental design, requiring exactly 8 prototypes and 25 respondents who would test all prototypes in a random order, rating each prototype on a battery of up to 20 attributes (see Fig. 3, Panel 1, Input Data). These rating attributes would comprise a key evaluative criterion (e.g., overall liking), sensory attributes (strength of an attribute such as sweetness), liking attributes (liking of the attribute, e.g., liking of texture), and “image attributes” (more complex attributes such as perceived healthfulness or perceived caloric density). The actual work for developing the paradigm had begun in 2007, but the nature of the scientific and business communities was such that the relevance of “accurate, affordable, fast” was only really accepted at the start of the second decade of the twenty-first century.

Fig. 3 The rapid development approach, limited to 3–7 variables, each tested at two levels, with eight prototypes and 25 respondents, each respondent evaluating every one of the eight prototypes. The figure shows the process from (1) data input (summarized across respondents), to (2) schematic curves showing how sensory attributes drive liking for total and for segments, to (3) models showing how the ingredients drive liking and sensory perceptions, and finally (4) a map of the products for Segment 2, with the size of the letter proportional to the degree of liking assigned by Segment 2 to the particular prototype
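As a rough sketch of how such an eight-prototype layout might be generated, the Python below builds a full two-level factorial in three variables and derives a fourth column as the product of the first two, then draws a random serving order for each of 25 respondents. The −1/+1 coding, the function names, and the fixed random seed are assumptions made for illustration; the source does not specify them.

```python
import random
from itertools import product

def build_design():
    """Eight prototypes: full factorial in A, B, C (coded -1/+1), with D = A x B."""
    return [{"A": a, "B": b, "C": c, "D": a * b}
            for a, b, c in product((-1, 1), repeat=3)]

def serving_orders(n_prototypes, n_respondents, seed=0):
    """One independently shuffled presentation order per respondent."""
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    orders = []
    for _ in range(n_respondents):
        order = list(range(n_prototypes))
        rng.shuffle(order)
        orders.append(order)
    return orders

design = build_design()
orders = serving_orders(len(design), n_respondents=25)

for i, row in enumerate(design):
    print("prototype", i + 1, row)
print("serving order for respondent 1:", [p + 1 for p in orders[0]])
```

Because D is defined from A and B, every column is balanced (four prototypes at each level) and every pair of columns is uncorrelated, so four main effects can be estimated from only eight prototypes. The price of this economy is that the effect of D cannot be separated from a genuine A × B interaction.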

The process appears in Fig. 3, which provides a sense of the data inputs and the key analyses:

1. Section 1 shows part of the data table, revealing the eight prototypes, the formulations assuming that we are working with four variables (A, B, C, D = A × B), and 25 respondents who evaluated each of the prototypes on liking and on sensory attributes. Section 1 also shows the results from two sensory preference segments, emerging from clustering the 25 respondents based on the patterns of ingredients which drive the rating of overall liking.

2. Section 2 shows how two sensory attributes “drive” the key evaluative criterion of overall liking, for both the total panel of 25 respondents, and for the two sensory preference segments, respectively.

3. Section 3 shows how the ratings can be deconstructed into the contributions of the independent variables. In our case we are dealing with four independent variables, A, B, C, and D, respectively. The additive constant corresponds to the product defined as all four independent variables set to option 0. Thus, Section 3 shows how the change from option 0 to option 1 “drives” the ratings.

4. Section 4 shows a two-dimensional map. The coordinates of the map are created from the first two factors of the sensory attributes. These factors can be thought of as sensory primaries, although they are of purely mathematical nature. The factors are orthogonal to each other, meaning that they are uncorrelated with each other. The factors are estimated after the structure is rotated according to a criterion which makes the solution simple to understand. The convention is a quartimax rotation, but any other rotation will do. Finally, the products can be embedded in this map because each product has two coordinates, for Factor A and for Factor B, respectively. The size of the letters corresponds to the degree of liking of the product. We see the map for Segment 2. The objective of the mapping is to identify the “white space,” defined as areas near high levels of liking for the segment but not occupied by a product. The actual mapping exercise generates three maps, one for Total Panel, and one map each for Segment 1 and for Segment 2, respectively. The actual mechanics of the mapping are easy to carry out with standard statistical programs currently available.
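The deconstruction described for Section 3 can be illustrated with a short Python sketch. It assumes a balanced design with options coded 0/1, with D set to option 1 whenever A and B take the same option (one possible reading of D = A × B), and it uses invented mean ratings. For a design of this kind the least-squares effect of each variable reduces to a difference of two means, so no regression library is needed.

```python
from itertools import product

FACTORS = ("A", "B", "C", "D")

# Eight prototypes with options coded 0/1; D is option 1 when A and B match
# (an assumed coding, chosen so that all four columns stay balanced).
design = [{"A": a, "B": b, "C": c, "D": int(a == b)}
          for a, b, c in product((0, 1), repeat=3)]

def additive_model(design, ratings):
    """Return (additive constant, effects) for a balanced two-level design.

    Each effect is the mean rating at option 1 minus the mean rating at
    option 0. The constant is the predicted rating with every variable at
    option 0.
    """
    grand_mean = sum(ratings) / len(ratings)
    effects = {}
    for f in FACTORS:
        at_1 = [y for row, y in zip(design, ratings) if row[f] == 1]
        at_0 = [y for row, y in zip(design, ratings) if row[f] == 0]
        effects[f] = sum(at_1) / len(at_1) - sum(at_0) / len(at_0)
    # Half of the prototypes carry option 1 of each variable, hence the / 2.
    constant = grand_mean - sum(effects.values()) / 2
    return constant, effects

# Invented mean liking ratings, one per prototype, built from known effects.
ratings = [50 + 6 * r["A"] - 4 * r["B"] + 2 * r["C"] + 3 * r["D"] for r in design]

constant, effects = additive_model(design, ratings)
print("additive constant:", constant)
print("effect of moving from option 0 to option 1:", effects)
```

The same function could be applied separately to the mean ratings of the total panel and of each segment, giving one set of coefficients per group. The shortcut of differencing means holds only because the design is balanced and its columns are uncorrelated; an unbalanced design would need a full least-squares fit.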

The foregoing approach is certainly small, agile, fast, and cost-effective. It represents a different way of thinking, one more appropriate to the realities which are emerging during the latter part of the second decade of this twenty-first century. The old adage “the perfect is the enemy of the good” has never been truer:

1. Cost-effective “good” is better than expensive “perfect.” In the interests of project costs, give valuable data, always keeping budget in mind. Appropriate sampling is important but not as important as speed and quality of results. The result of today’s emphasis on speed is that companies are forced to accept convenience samples for product testing. Thus, the approach we provide uses convenience samples, not so much to identify the best product, as to identify the best formulation areas, and the nature of the segments. To get a “sense” of what’s important, the convenience sample is adequate. This sense of what’s important is to guide product developers.

2. Better is “good.” With one product to test, the researcher either must have remarkable insight about what to recommend or be able to skirt the issue. On the other hand, with eight products, systematically varied, the likelihood is much higher that some of the products will be either good or point the way to “better.”

3. Fast is good. Speed has never been more important. There is a business adage that it is better to be 80% right and on time than 100% right but late. With the proliferation of computers, artificial intelligence, and do it yourself research, the demands for speed ever increase. What was acceptable a decade ago seems interminably slow. Tomorrow is no excuse. Now is the new “on time.” Furthermore, being able to do a study of this type on a weekly basis ensures that the product designer/developer will quickly discover the “right product” or, sadly, discover that there is no “right product.” Discovering that it’s impossible to do what is requested is as valuable as actually doing what is requested.

The Migration of Systematic Thinking to the Up-Front Design of Ideas

No history of product design can leave out the important topic of design through product description, also known as concept testing and concept optimization. The foregoing history presented the tapestry of product design through the creation of actual designs, the more traditional history to which product designers and developers are accustomed. Product design through description, so-called promise testing, concept testing, and concept optimization, shares in this history, but in comparison to the foregoing efforts with actual products, the history of design through description is shorter and far less a history of competing ideas and world views. Our treatment of design at the concept level will not require much space, simply because it has not emerged in a tortured way out of competing histories, nor out of competing groups in the corporation (Moskowitz et al. 2008).

Promise Testing and Concept Testing

Our short history begins with the evaluation of needs and opportunities. Whether the evaluation was/is formal or informal, the process is pretty much the same. Ideas emerge, whether these ideas deal with the product features, the product benefits to the user, the nature of how the product is purchased, stored, prepared, and so forth. The ideas can even touch upon reasons to buy, generating marketing-oriented rather than design-and-development-oriented ideas.

These ideas are scored, either internally or externally, to provide a set of resource material. From the ratings of these different ideas, so-called promise testing, two streams flow. The first is a product concept; the second is a prototype. Both the concept and the prototypes may be created by the same group, R&D, or the product concept may be created by the marketers and delivered to the R&D developers, who put the prototype(s) together, based upon the concept. The path of concept and prototype may be intimately linked through the process or may proceed separately until a final concept/product test is done. That final test measures how well the product performs, as well as how well the product fits the concept or the concept fits the product.

Whether the product developer should work from the specifications provided by the concept or the marketer should work from the product created by the developer’s best efforts remains a bone of contention in corporations. The traditional approach follows the typical sequence of marketing dictating to developers, but sometimes the developers themselves are far better at sensing the aspects of a “great product,” leaving the marketers to describe and market the “newer and better product.”

Often the promise tests (also called idea screening), whether done formally or informally, lead quickly to the creation of full test concepts. These concepts are descriptions of products. They may be simple phrases (Fig. 4a) or a densely written paragraph (Fig. 4b).

Fig. 4 (a): Example of a concept comprising a simple set of phrases, stacked one atop the other. The respondent reads the concept as a totality and rates the concept on a set of scales. This type of concept format is often used to guide developers, because they need to know what parts of the concept (i.e., product description) are acceptable, and what parts are not and need to be changed. (b): Example of a densely worded concept. This type of concept is often tested by marketing research, primarily to determine the likelihood of product success in the marketplace.

The analysis of responses to concepts is usually done by calculating the percent of the respondents who assigned a specific rating denoting high acceptance. For example, one of the most popular ways to identify a good product idea instructs respondents to rate the likelihood of purchase using an anchored five-point scale, with the key evaluative criterion being the percent of respondents who assign a purchase intent rating of either 5 (definitely would purchase) or 4 (probably would purchase). The full scale ranges from 1 (definitely not purchase) to 3 (might/might not purchase) to 5 (definitely purchase). To get a sense of the promise of the product from the concept scores, the researcher compares the distribution of ratings assigned to the test concepts against the distribution for other products, whose market history is known. This comparison allows an estimate of the potential for business success, based upon the previous data, so-called “norms.”
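The percent of respondents rating a concept 4 or 5 can be computed in a few lines. The following is a minimal sketch, not a prescribed procedure: the function name `top_two_box`, the ten ratings, and the norm value of 50 are all hypothetical and chosen only for illustration.

```python
def top_two_box(ratings, top=(4, 5)):
    """Percent of respondents whose purchase-intent rating is in the top boxes."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    hits = sum(1 for r in ratings if r in top)
    return 100.0 * hits / len(ratings)


# Hypothetical ratings from ten respondents on the anchored 1-5 scale.
ratings = [5, 4, 3, 2, 5, 4, 1, 3, 4, 5]
score = top_two_box(ratings)

# Hypothetical norm: the top-two-box percent of a comparable product
# whose market history is known.
norm = 50.0
beats_norm = score >= norm
print(score, beats_norm)
```

In practice the comparison against norms usually involves the whole distribution of ratings rather than a single cutoff; the single threshold here is a simplifying assumption.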

The rating scale need not be a 1–5 scale but might be a scale of value, e.g., select the dollar value that would be appropriate for the product described in the vignette. The respondent would select a dollar value from an array of different dollar values, with these dollar values presented in irregular order. Instead of a value for purchase intent or liking, the respondent assigns a “fair economic value” to the concept.

Experimentally Designed Concepts

We finish this chapter with the use of experimental design, this time to create product ideas rather than to create product prototypes. The method uses the group of research approaches known as conjoint measurement (Moskowitz et al. 2008). In simple terms, the researcher identifies the general features of the products (called variables or silos) and the options or alternatives of each feature (called levels or elements). Coming up with the variables and levels can be done either by marketing or by R&D.

There are many different experimental designs that one can use. The common feature is that the experimental design mixes and matches the elements, creating different combinations, to produce a variety of product descriptions. The respondent inspects each combination as a new product idea and rates interest or some other evaluative rating. In some newly emerging efforts, the respondent might inspect the combination, rating it on price the respondent would pay, or selecting an end use from a set of different uses, or selecting a feeling or emotion from a list of different feelings.

The analysis proceeds in a straightforward manner, much in the same way as the analysis of the products in Fig. 3, Section 1. The experimental design defines the different combinations or concepts. The design may be one of several types, with the proviso that the elements or messages of the concept are presented in a way that makes the elements statistically independent of each other. This statistical property of independence results from the ability to create concepts which have “true zeros.” A true zero is the total absence of any element from a variable (e.g., no message about taste or no message about ingredient), so that in a given test combination an entire variable or silo might be absent. The absence makes no difference to the respondent, who simply proceeds, giving a “gut reaction.” The analysis uses ordinary least-squares regression to relate the presence/absence of each element to the rating of purchase intent, price, or the selection of an emotion or an end use.
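The regression step can be illustrated with a small sketch. Everything specific in it is an assumption made for illustration: two hypothetical silos (A and B) with two elements each, a full 3 x 3 layout in which each silo may be absent, an additive constant in the model, and synthetic ratings built from made-up part-worths so that the recovery can be checked. Real studies typically use larger, incomplete designs and a statistics package rather than hand-written normal equations.

```python
from itertools import product

ELEMENTS = ["A1", "A2", "B1", "B2"]  # hypothetical elements in silos A and B


def design_rows():
    """Each silo shows one element or is absent (a true zero): 3 x 3 = 9 concepts."""
    rows = []
    for a, b in product([None, "A1", "A2"], [None, "B1", "B2"]):
        present = {a, b}
        # Leading 1.0 is the additive constant; the rest are 0/1 presence codes.
        rows.append([1.0] + [1.0 if e in present else 0.0 for e in ELEMENTS])
    return rows


def solve(a, b):
    """Solve the square system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular design: elements are not independent")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(x, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(x, y)) for i in range(k)]
    return solve(xtx, xty)


X = design_rows()
# Made-up "true" values: constant, then part-worths for A1, A2, B1, B2.
true_b = [2.0, 0.5, -0.25, 1.0, 0.0]
# Synthetic, noise-free ratings generated from the additive model.
y = [sum(c * v for c, v in zip(true_b, row)) for row in X]

coefs = ols(X, y)
for name, c in zip(["constant"] + ELEMENTS, coefs):
    print(f"{name}: {c:+.2f}")
```

Because every silo can be absent, the four presence columns are not linearly tied to the constant, which is what lets the regression estimate an absolute part-worth for each element. If the true zeros were removed (every concept forced to carry one A and one B element), `solve` would raise the singular-design error.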

There are a variety of alternative ways to set up the design. In the end, however, what is desired is simply the decomposition of the response to the combinations (test product ideas) into the part-worth contribution of each element, each level. From that, one can identify mind-sets (people who respond to different aspects of the product) and can synthesize new-to-the-world combinations of elements or features, satisfying different, marketing-imposed or technology-imposed constraints.
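Synthesizing a new combination from part-worths can be sketched as a simple selection rule. The rule below is an assumption, not the chapter's stated procedure: it treats part-worths as purely additive (no interactions between elements), picks the highest-scoring allowed element in each silo, and leaves a silo empty when nothing allowed scores above zero. The silo names, element names, part-worth values, and the `banned` constraint are all hypothetical.

```python
def best_concept(part_worths, silos, banned=frozenset()):
    """Per silo, choose the allowed element with the highest part-worth.

    A silo is left empty (None) when no allowed element has a positive
    part-worth, on the assumption that an empty silo contributes zero.
    """
    chosen = {}
    for silo, elements in silos.items():
        allowed = [e for e in elements if e not in banned]
        best = max(allowed, key=lambda e: part_worths[e], default=None)
        if best is not None and part_worths[best] > 0:
            chosen[silo] = best
        else:
            chosen[silo] = None
    return chosen


# Hypothetical part-worths for two silos with two elements each.
part_worths = {"A1": 0.5, "A2": -0.25, "B1": 1.0, "B2": 0.0}
silos = {"A": ["A1", "A2"], "B": ["B1", "B2"]}

unconstrained = best_concept(part_worths, silos)
# Suppose a technology constraint rules out element B1.
constrained = best_concept(part_worths, silos, banned={"B1"})
print(unconstrained, constrained)
```

Running the same selection on part-worths estimated separately for each mind-set would yield one candidate concept per mind-set.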

Let us finish this discussion by considering a study of yogurt, whose results are shown in Table 4. The respondents evaluated a set of 48 vignettes or test concepts, created by experimental design, rating each test concept by selecting a dollar value believed to be appropriate. Table 4 shows the results of deconstructing the experimentally designed concepts for yogurt into the dollar values of the different elements.

The product designer can now look at the data, either for the total panel of 312 respondents or for subgroups of respondents whose data (dollar values for specific elements) suggest that they attend to different types of elements in the concept. There are respondents who value health, versus respondents who value sensory pleasure, versus respondents who value science. From these groups, the product designer can identify the nature of the product to appeal to each mind-set. The next step consists of translating the winning ideas into actual prototypes.

Conclusion: Where Have We Been, and Where Are We Heading?

Ask a nontechnical person, someone not in the food or beverage industry, how products are designed, and one is likely to get a stare of incomprehension. To the ordinary consumer “out there” in the population, there is no notion of how products are designed, at least not food products. The same person might have a mental “model” of how cars or electronics are designed, because the media often feature a “look behind the product.”

We have summarized a century of design in two chapters (also see Chap. 1, “The Origin and Evolution of Human-Centered Food Product Research” by Moskowitz and Meiselman in this volume). The upshot is that we have progressed from a quick “cook and look” in the early days to the involvement of professionals, ranging from culinary professionals to statisticians to sensory specialists and market researchers. In a phrase, we have almost the entire corporate “kitchen sink” involved in design, in one way or another.

With all these people involved, one might expect that the odds of a product being successful in the market would be very high. Yet, high failure rates continue to plague the food industry, with numbers above 50% and often far higher bandied about. We would not accept a washing machine with such a failure rate and certainly not an automobile. Any lawyer or doctor who suffers from such a failure rate for “ordinary clients” would probably be hounded out of the profession, accused of malpractice.

The question then is why the failures persist and where we are heading in the world of product design. As of this writing, 2019, the tools are in place for increased success in product design. The procedures for testing are efficient after more than 60 years of experience by corporate researchers as well as by contract testing services. Segmentation to open new opportunities now enjoys almost 35 years of scientific and business acceptance. Thus, all the “pieces are in place.”

What is needed now is the willingness to bring product design to the next level, to treat design as one treats architecture, whether of a house or of a computer chip. That is, the time has come in product design to move beyond the ever-so-attractive “cook and look,” limited to the creative mind of the developers and marketers. The time has come to institute formal experimental design, simple, inexpensive but powerful research with the focus on the patterns of acceptance created by an array of related products and no longer to rely on the clever, intuitive, insightful researcher whose job is to “connect the dots” and weave together a “story.” We need fewer dots, fewer stories, and in simple terms, more structure, more books of systematized results that can be used again and again to guide development. Science and foundational research should guide us about what to change and how to change the features of products and, in turn, tell us ahead of time what to expect. Testing should confirm the up-front, foundational design “driving” the in-going design, and not end up providing data which needs a storyteller and a story to render the results coherent and compelling to management.

References

Box, G. E., Hunter, J. S., & Hunter, W. G. (2005). Statistics for experimenters: Design, innovation, and discovery (Vol. 2). New York: Wiley-InterScience.

Cochran, W. G. (1950). Experimental design. New York: Wiley.

Gladwell, M. (2012). TED radio hour. https://www.npr.org/2012/05/04/151899611/malcolm-gladwell-what-does-spaghetti-sauce-have-to-do-with-happiness

Moskowitz, H. R. (1972). Subjective ideals and sensory optimization in evaluating perceptual dimensions in food. Journal of Applied Psychology, 56, 60–66.

Moskowitz, H. R. (1994). Food concepts and products: Just-in-time development. Trumbull: Food and Nutrition Press.

Moskowitz, H. R. (2001). Sensory directionals for pizza: A deeper analysis. Journal of Sensory Studies, 16, 583–600.

Moskowitz, H. R., Wolfe, K., & Beck, C. (1979). Sweetness and acceptance optimization in cola flavored beverages using combinations of artificial sweeteners—A psychophysical approach. Journal of Food Quality, 2, 17–26.

Moskowitz, H. R., Porretta, S., & Silcher, M. (2008). Concept research in food product design and development. New York: Wiley.

Peryam, D. R., & Pilgrim, F. J. (1957). The hedonic scale method of measuring food preference. Food Technology, 11(January), 32.

Copyright information

© 2020 Springer Nature Switzerland AG

About this entry

Cite this entry

Moskowitz, H.R. (2020). Commercial Product Design: Psychophysics, Systematics, and Emerging Opportunities. In: Meiselman, H. (eds) Handbook of Eating and Drinking. Springer, Cham. https://doi.org/10.1007/978-3-030-14504-0_151

DOI: https://doi.org/10.1007/978-3-030-14504-0_151

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-14503-3

Online ISBN: 978-3-030-14504-0

eBook Packages: Behavioral Science and Psychology; Reference Module Humanities and Social Sciences; Reference Module Business, Economics and Social Sciences