Abstract

A linear gyroscopic system is of the form:

$$\displaystyle \begin{aligned} M\ddot x(t) + G\dot x(t) + Kx(t) = 0, \end{aligned} $$

where the mass matrix M is a symmetric positive definite real matrix, the gyroscopic matrix G is real and skew symmetric, and the stiffness matrix K is real and symmetric. The system is stable if and only if the quadratic eigenvalue problem \(\det (\lambda ^2 M+\lambda G + K)=0\) has all eigenvalues on the imaginary axis.

In this chapter, we are interested in evaluating robustness of a given stable gyroscopic system with respect to perturbations. In order to do this, we present an ODE-based methodology which aims to compute the closest unstable gyroscopic system with respect to the Frobenius distance.

A few examples illustrate the effectiveness of the methodology.


1 Introduction

Gyroscopic systems play an important role in a wide variety of engineering and physics applications, ranging from the design of urban structures (buildings, highways, and bridges) to the aircraft industry and the motion of fluids in flexible pipes.

In its most general form, a gyroscopic system is modeled by means of a linear differential system on a finite-dimensional space, as follows:

$$\displaystyle \begin{aligned} M\ddot x(t) + (D+G)\dot x(t) + (K+N)x(t) = 0. \end{aligned} $$(1)

Here, x(t) corresponds to the generalized coordinates of the system, \(M = M^T\) represents the mass matrix, \(G = -G^T\) and \(K = K^T\) are related to gyroscopic and potential forces, and \(D = D^T\) and \(N = -N^T\) are related to dissipative (damping) and nonconservative positional (circulatory) forces, respectively. Therefore, the gyroscopic system (1) is not conservative when D and N are nonzero matrices.

The stability of the system is determined by its associated quadratic eigenvalue problem:

$$\displaystyle \begin{aligned} \left(\lambda^2 M + \lambda (D+G) + K + N\right)x = 0. \end{aligned} $$(2)

In particular, the system is said to be strongly stable if all eigenvalues of (2) lie in the open left half plane, and weakly stable if all eigenvalues of (2) lie in the closed left half plane, at least one of them is purely imaginary, and all purely imaginary eigenvalues are semi-simple. It is unstable otherwise.

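Although nonconservative systems are of great interest, especially in the context of nonlinear mechanics (see [8] for reference), this work is confined to conservative systems, that is, D = 0 and N = 0. Thus, the equation of motion is given by:

$$\displaystyle \begin{aligned} M\ddot x(t) + G\dot x(t) + Kx(t) = 0. \end{aligned} $$(3)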

In particular, the spectrum of (2) is characterized by Hamiltonian symmetry. We note indeed that for any eigenvalue λ with a corresponding pair of left and right eigenvectors (y, x), that is:

also \(\overline \lambda ,-\lambda ,-\overline \lambda \) are eigenvalues with corresponding pairs of left and right eigenvectors \((\overline y,\overline x), (x,y), (\overline x,\overline y)\), respectively.

Let us define the matrix pencil \(\mathcal Q(\lambda )= M\lambda ^2 +G \lambda +K\) such that the associated quadratic eigenvalue problem reads

$$\displaystyle \begin{aligned} \mathcal Q(\lambda)x = 0. \end{aligned} $$(4)

In the absence of gyroscopic forces, it is well known that the system \( M\ddot x(t) + Kx(t) = 0\) is stable for K positive definite and unstable otherwise. When G is nonzero, the system is weakly stable (see [11]) if the stiffness matrix K is positive definite, and may be unstable if K ≤ 0 and K is singular. In the latter case, indeed, the 0 eigenvalue can be either semi-simple (thus the system is stable) or defective (unstable). As numbers in the complex plane, the eigenvalues are symmetrically placed with respect to both the real and imaginary axes. This property has two important consequences. On the one hand, the eigenvalues can only move along the axis they belong to unless coalescence occurs; on the other hand, system (3) is stable only if all eigenvalues are purely imaginary.

In short, for a conservative gyroscopic system, strong stability is impossible, since the presence of an eigenvalue in the open left half plane would imply the existence of its symmetric counterpart in the right half plane. The only possibility for the system to be stable is to be marginally stable (a particular case of weak stability), which requires that all eigenvalues lie on the imaginary axis, and the only way to lead the system to instability is a strong interaction (coalescence of two or more eigenvalues, necessary for them to leave the imaginary axis). The stiffness matrix K, for which no information about its signature is provided, plays a fundamental role in the stability of the system, and many stability results are available in the literature, based on the mutual relationship of G and K, as reported in [6, 7, 10] and references therein, and summarized in [12]. Given a marginally stable system of the form (3), the aim of this work is to find a measure of robustness of the system, that is, the maximal perturbation that retains stability.
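The role of coalescence can be seen on a small numerical experiment. The following is a minimal sketch, not taken from the chapter: it assumes a hypothetical two-degree-of-freedom system with M = I, K = −I, and G = g·J (J the 2×2 rotation generator), and the helper names `quadratic_eigenvalues` and `is_marginally_stable` are illustrative. The quadratic eigenvalue problem is solved through a standard companion linearization, which is valid because M is invertible.

```python
import numpy as np


def quadratic_eigenvalues(M, G, K):
    """Eigenvalues of det(lambda^2 M + lambda G + K) = 0.

    Uses the companion linearization z' = A z with z = [x, x'],
    which requires M to be invertible.
    """
    n = M.shape[0]
    A = np.block([
        [np.zeros((n, n)), np.eye(n)],
        [-np.linalg.solve(M, K), -np.linalg.solve(M, G)],
    ])
    return np.linalg.eigvals(A)


def is_marginally_stable(M, G, K, tol=1e-8):
    """True if all eigenvalues lie on the imaginary axis (up to tol).

    Note: this only tests the location of the eigenvalues; it does not
    check semi-simplicity of multiple eigenvalues.
    """
    lam = quadratic_eigenvalues(M, G, K)
    return bool(np.max(np.abs(lam.real)) < tol)


# Hypothetical toy data (an assumption, not an example from the chapter).
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
I2 = np.eye(2)
for g in (3.0, 1.0):
    print(g, is_marginally_stable(I2, g * J, -I2))
```

For this toy family the characteristic polynomial is λ⁴ + (g² − 2)λ² + 1, so the eigenvalues are purely imaginary for |g| > 2, two pairs coalesce at ±i when |g| = 2, and a complex quadruple leaves the imaginary axis for |g| < 2.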

The paper is organized as follows. In Sect. 2, we phrase the problem in terms of structured distance to instability and present the methodology we adopt. In Sect. 3, we illustrate the system of ODEs for computing the minimal distance between pairs of eigenvalues. In Sect. 4, we derive a variational formula to compute the distance to instability. In Sect. 5, we present the method, and in Sect. 6, some experiments.

2 Distance to Instability

Distance to instability is the measure of the smallest additive perturbation which leads the system to be unstable. To estimate the robustness of (3), we will use the Frobenius norm. In order to preserve the Hamiltonian symmetry of the system, we will allow specific classes of perturbations. Indeed, gyroscopic forces and potential energy will be subject to additive skew-symmetric and symmetric perturbations, respectively. In [9], such a measure of robustness is called strong stability, which seems to be misleading according to the definitions in Sect. 1. Nevertheless, the author’s aim was to find a neighboring system, that is an arbitrarily close system which retains stability and symmetry properties. Interesting results on stability are presented, allowing sufficiently small perturbations. However, our goal is to characterize these perturbations, and give a measure of “how small” they need to be to avoid instability. The distance to instability is related to the ε-pseudospectrum of the system.

In particular, we assume that M is fixed and we allow specific additive perturbations on G and K.

Therefore, let us define the structured ε-pseudospectrum of [G, K] as follows:

We will call ε ⋆ the sought measure, meaning that for every ε < ε ⋆ the system remains marginally stable. Moreover, as mentioned in Sect. 1, the only way to lead the system to instability is a strong interaction, which means that at least two ε ⋆-pseudoeigenvalues coalesce. Exploiting this property, we will compute the distance to instability in two phases: an outer iteration will change the measure ε of the perturbation, and an inner iteration will allow the ε-pseudoeigenvalues to move on the imaginary axis, according to the fixed ε, until the candidates for coalescence are determined. The following remark suggests limiting our attention to systems in which the stiffness matrix is not positive definite.

Remark 1

When K is positive definite, the distance to instability of the system coincides with the distance to singularity of the matrix K, which is trivially equal to the absolute value of the smallest eigenvalue of K, because of the Hamiltonian symmetry.
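As a small illustration of the quantity in Remark 1, the sketch below computes the Frobenius distance to singularity of a symmetric positive definite matrix together with a symmetric perturbation that attains it. The function name `distance_to_singularity_spd` and the sample matrix are assumptions made for illustration only.

```python
import numpy as np


def distance_to_singularity_spd(K):
    """Smallest eigenvalue of an SPD matrix K and a minimal symmetric
    perturbation dK (in Frobenius norm) such that K + dK is singular."""
    K = np.asarray(K, dtype=float)
    if not np.allclose(K, K.T):
        raise ValueError("K must be symmetric")
    w, V = np.linalg.eigh(K)          # eigenvalues in ascending order
    if w[0] <= 0.0:
        raise ValueError("K must be positive definite")
    v = V[:, 0]                       # unit eigenvector of the smallest eigenvalue
    dK = -w[0] * np.outer(v, v)       # symmetric, rank one, norm equal to w[0]
    return float(w[0]), dK


# Hypothetical sample matrix with eigenvalues 3 and 5.
K = np.array([[4.0, 1.0], [1.0, 4.0]])
dist, dK = distance_to_singularity_spd(K)
print(dist, np.linalg.norm(dK, "fro"))
```

The rank-one perturbation removes exactly the smallest eigenvalue, so it is symmetric and stays within the admissible class of stiffness perturbations.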

2.1 Methodology

We make use of a two-level methodology.

First, we fix as ε the Frobenius norm of the admitted perturbation [ΔG, ΔK]. Then, given a pair of (close) eigenvalues λ 1, λ 2 on the imaginary axis, we look for the perturbations associated to a minimum of the distance |λ 1 − λ 2| on the imaginary axis. This is obtained by integrating a suitable gradient system for the functional |λ 1 − λ 2|, preserving the norm of the perturbation [ΔG, ΔK].

The external method controls the perturbation level ε with the aim of finding the minimal value ε ⋆ for which λ 1 and λ 2 coalesce. The method is based on a fast Newton-like iteration.

Two-level iterations of a similar type have previously been used in [4, 5] for other matrix-nearness problems.

To formulate the internal optimization problem, we introduce the functional, for ε > 0:

where λ 1,2(ΔG, ΔK) are the closest eigenvalues on the imaginary axis of the quadratic eigenvalue problem \(\det (M \lambda ^2 + (G+\varDelta G) \lambda + (K + \varDelta K))=0\).

Thus, we can recast the problem of computing the distance to instability as follows:

(1) For fixed ε, compute

$$\displaystyle \begin{aligned}{}[\varDelta G(\varepsilon), \varDelta K(\varepsilon)] \longrightarrow \mathop{\min}\limits_{\varDelta G, \varDelta K: \| [\varDelta G, \varDelta K] \|_F = \varepsilon} f_\varepsilon(\varDelta G,\varDelta K) := f(\varepsilon) \end{aligned} $$(6)

with

$$\displaystyle \begin{aligned} \varDelta G + \varDelta G^T = 0 \quad \mbox{and} \quad \varDelta K - \varDelta K^T=0. \end{aligned} $$(7)

(2) Compute

$$\displaystyle \begin{aligned} \varepsilon^\star \longrightarrow \mathop{\min}\limits_{\varepsilon > 0} \{\varepsilon: f(\varepsilon)=0\}, \end{aligned} $$(8)

which means computing a pair (ΔG ∗, ΔK ∗) of norm ε ⋆ such that λ 1(ε ⋆) is a double eigenvalue of the quadratic eigenvalue problem \(\det (M\lambda ^2 + (G+\varDelta G_*) \lambda + (K + \varDelta K_*))=0\).
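To make the inner objective concrete, the following sketch evaluates a gap functional for one given structured perturbation. It assumes that the functional is the smallest distance |λ 1 − λ 2| between eigenvalues on the imaginary axis, as described in Sect. 2.1, and it adopts the convention (an assumption of this sketch) of returning 0 once any eigenvalue has left the axis. The name `imaginary_axis_gap` and the toy data are hypothetical.

```python
import numpy as np


def imaginary_axis_gap(M, G, K, dG, dK, tol=1e-8):
    """Smallest gap between eigenvalues i*theta of the perturbed problem
    det(lambda^2 M + lambda (G + dG) + (K + dK)) = 0.

    dG is expected to be skew-symmetric and dK symmetric.
    Returns 0.0 if some eigenvalue is off the imaginary axis.
    """
    n = M.shape[0]
    A = np.block([
        [np.zeros((n, n)), np.eye(n)],
        [-np.linalg.solve(M, K + dK), -np.linalg.solve(M, G + dG)],
    ])
    lam = np.linalg.eigvals(A)
    if np.max(np.abs(lam.real)) > tol:
        return 0.0                     # already past coalescence
    theta = np.sort(lam.imag)          # positions on the imaginary axis
    return float(np.min(np.diff(theta)))


# Hypothetical toy data: M = I, G = 3J, K = -I.
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
I2 = np.eye(2)
Z = np.zeros((2, 2))
print(imaginary_axis_gap(I2, 3.0 * J, -I2, Z, Z))
print(imaginary_axis_gap(I2, 3.0 * J, -I2, -1.5 * J, Z))
```

Minimizing such a function over all admissible perturbations of norm ε gives f(ε) in (6); the outer iteration then looks for the smallest ε at which this minimum vanishes.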

2.2 Algorithm

In order to perform the internal minimization at (6), we locally minimize the functional f ε(ΔG, ΔK) over all [ΔG, ΔK] of at most unit Frobenius norm, by integrating a steepest-descent differential equation (the gradient system associated with the functional (5)) until a stationary point is reached. The key instrument to deal with eigenvalue optimization is a classical variational result concerning the derivative of a simple eigenvalue of a quadratic eigenvalue problem.

In order to perform the minimization at (8), instead, denoting the minimum value of f ε(ΔG, ΔK) by f(ε), we then determine the smallest perturbation size ε ⋆ > 0 such that f(ε ⋆) = 0, by making use of a quadratically convergent iteration.

Remark 2

Given a fixed ε, we compute all the possible distances between the eigenvalues, in order to identify the eigenpair which coalesces first (global optimum).

The whole method is summarized later by Algorithm 2.

3 The Gradient System of ODEs

In this section, the goal is to design a system of differential equations that, for a given ε, will find the closest pair of ε-pseudoeigenvalues on the imaginary axis. Indeed, this turns out to be a gradient system for the considered functional, which yields a useful monotonicity property along its analytic solutions.

To this intent, let us define the two-parameter operator:

Let λ = λ(τ), and let λ 0 satisfy the quadratic eigenvalue problem (4).

Assuming that λ 0 is a simple eigenvalue, then by Theorem 3.2 in [1]:

where x 0 and y 0 are the right and left eigenvectors of Q at λ 0, respectively.

Under this assumption, therefore, by the variational result (5.3) in [1], the derivative of λ with respect to τ is well defined and given by:
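The first-order behavior of a simple eigenvalue can be checked numerically. The sketch below assumes that the operator has the form \(Q(\tau,\lambda) = \lambda^2 M + \lambda G(\tau) + K(\tau)\) and uses the standard formula obtained by differentiating \(Q(\tau,\lambda(\tau))x(\tau)=0\) and multiplying by \(y_0^*\) from the left:

$$\displaystyle \begin{aligned} \frac{d\lambda}{d\tau} = -\frac{y_0^*\left(\lambda_0 G' + K'\right)x_0}{y_0^*\left(2\lambda_0 M + G\right)x_0}. \end{aligned} $$

The helper names `eig_triple` and `eig_derivative`, as well as the test matrices, are illustrative assumptions; the result is compared against a central finite difference.

```python
import numpy as np


def eig_triple(M, G, K, target):
    """Eigenvalue of Q(lam) = lam^2 M + lam G + K closest to `target`,
    with right and left null vectors of Q(lam) taken from an SVD."""
    n = M.shape[0]
    A = np.block([
        [np.zeros((n, n)), np.eye(n)],
        [-np.linalg.solve(M, K), -np.linalg.solve(M, G)],
    ])
    lam = np.linalg.eigvals(A)
    lam0 = lam[np.argmin(np.abs(lam - target))]
    Q = lam0 ** 2 * M + lam0 * G + K
    U, s, Vh = np.linalg.svd(Q)
    x = Vh[-1].conj()                  # Q x is (numerically) zero
    y = U[:, -1]                       # y^* Q is (numerically) zero
    return lam0, x, y


def eig_derivative(M, G, K, dG, dK, target):
    """Derivative of a simple eigenvalue along G + tau*dG, K + tau*dK at tau = 0."""
    lam0, x, y = eig_triple(M, G, K, target)
    num = y.conj() @ (lam0 * dG + dK) @ x
    den = y.conj() @ (2.0 * lam0 * M + G) @ x
    return lam0, -num / den


# Hypothetical toy data with a skew-symmetric dG and a symmetric dK.
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
M, G, K = np.eye(2), 3.0 * J, -np.eye(2)
dG, dK = J, np.array([[1.0, 0.5], [0.5, -2.0]])
lam0, d = eig_derivative(M, G, K, dG, dK, 0.38j)
h = 1e-6
fd = (eig_triple(M, G + h * dG, K + h * dK, lam0)[0]
      - eig_triple(M, G - h * dG, K - h * dK, lam0)[0]) / (2.0 * h)
print(lam0, d, fd)
```

For a simple purely imaginary eigenvalue and a structure-preserving direction, the computed derivative is purely imaginary, which reflects the fact that the eigenvalue can only slide along the imaginary axis.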

Next, let us consider the matrix-valued functions G ε(t) = G + εΔG(t) and K ε(t) = K + εΔK(t), where the augmented matrix [ΔG, ΔK] satisfies (7), and

The corresponding quadratic eigenvalue problem is Q ε(t, λ)x = 0, where:

Moreover, let λ 1(t) = i θ 1(t) and λ 2(t) = i θ 2(t), with θ 1(t) > θ 2(t), be two purely imaginary eigenvalues of Q ε(t, λ)x = 0, namely the pair of eigenvalues at minimal distance from each other.

Let λ 1 = i θ 1 and λ 2 = i θ 2 with \(\theta _1, \theta _2 \in {\mathbb R}\).

Conventionally assume θ 1 > θ 2.

For i = 1, 2, let y i such that

be real and positive. This is naturally possible by suitably scaling the eigenvectors. Then, applying (10) gives

where—for a pair of matrices A, B—we denote the Frobenius inner product:

The derivative of [ΔG(t), ΔK(t)] must be chosen in the direction that gives the maximum possible decrease of the distance between the two closest eigenvalues, along the manifold of matrices [ΔG, ΔK] of unit Frobenius norm. Notice that constraint (11) is equivalent to

We have the following optimization result, which allows us to determine the constrained gradient of f ε(ΔG, ΔK).

Theorem 3

Let \([\varDelta G, \varDelta K]\in {\mathbb R}^{n,2n}\) be a real matrix of unit norm satisfying conditions (7)–(11), and let x i and y i be right and left eigenvectors relative to the eigenvalues λ i = i θ i , for i = 1, 2, of Q ε(t, λ)x = 0. Moreover, let γ i , with i = 1, 2, be two real and positive numbers and consider the optimization problem:

with

where \(\mathcal {M}_{\mathit{\text{Skew}}}\) is the manifold of skew-symmetric matrices and \(\mathcal {M}_{\mathit{\text{Sym}}}\) the manifold of symmetric matrices.

The solution \(Z^\star =[Z_G^\star ,Z_K^\star ]\) of (14) is given by:

where μ > 0 is a suitable scaling factor, and

where Skew(B) denotes the skew-symmetric part of B and Sym(B) denotes the symmetric part of B.

Proof

Preliminarily, we observe that for a real matrix:

the orthogonal projections (with respect to the Frobenius inner product) onto the manifolds \(\mathcal {M}_{\text{Sym}}\) of symmetric matrices and \(\mathcal {M}_{\text{Skew}}\) of skew-symmetric matrices are, respectively, Sym(B) and Skew(B). In fact:

and

Looking at (14), we set the free gradients:

The proof is obtained by considering the orthogonal projection (with respect to the Frobenius inner product) of the matrices (which can be considered as vectors) − ϕ G and − ϕ K onto the real manifold \(\mathcal {M}_{\text{Skew}}\) of skew-symmetric matrices and onto the real manifold \(\mathcal {M}_{\text{Sym}}\) of symmetric matrices, and further projecting the obtained rectangular matrix onto the tangent space to the manifold of real rectangular matrices with unit norm.
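The two projection steps used in the proof can be sketched as follows. This is an illustration under stated assumptions: the free gradients are passed in as generic real matrices `phi_G` and `phi_K` (their actual expressions are those set in the proof), the scalar `eta` is a name chosen here for the multiplier that enforces tangency to the unit sphere, and the final normalization by μ is omitted.

```python
import numpy as np


def sym(B):
    """Symmetric part of a real square matrix."""
    return 0.5 * (B + B.T)


def skew(B):
    """Skew-symmetric part of a real square matrix."""
    return 0.5 * (B - B.T)


def frob_inner(A, B):
    """Frobenius inner product trace(A^T B) for real matrices."""
    return float(np.sum(A * B))


def project_structured_tangent(phi_G, phi_K, dG, dK):
    """Project -[phi_G, phi_K] onto Skew x Sym, then onto the tangent
    space of the unit Frobenius sphere at [dG, dK] (assumed of unit norm)."""
    pG, pK = skew(phi_G), sym(phi_K)
    eta = frob_inner(dG, pG) + frob_inner(dK, pK)
    return -(pG - eta * dG), -(pK - eta * dK)


rng = np.random.default_rng(0)
B = rng.standard_normal((3, 3))
print(frob_inner(sym(B), skew(B)))     # orthogonality of the two parts
```

Since Sym(B) and Skew(B) are orthogonal in the Frobenius inner product and sum to B, each is the nearest matrix to B within its own subspace, which is the property the proof relies on.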

3.1 The System of ODEs

Following Theorem 3, we consider the following system of ODEs, where we omit the dependence on t:

with η, f G, and f K as in (16).

This is a gradient system, which implies that the functional f ε(ΔG(t), ΔK(t)) decreases monotonically along solutions of (17), until a stationary point is reached, which is generically associated to a local minimum of the functional.

4 The Computation of the Distance to Instability

As mentioned in Sect. 1, the only way to break the Hamiltonian symmetry is a strong interaction, that is two (or more) eigenvalues coalesce. This property allows us to reformulate the problem of distance to instability in terms of distance to defectivity (see [3]). In particular, since the matrices G and K must preserve their structure, we will consider a structured distance to defectivity. Because of the coalescence, we do not expect the distance between the eigenvalues to be a smooth function with respect to ε when f ε = 0.

As an illustrative example, consider the gyroscopic system described by the equation:

The minimal distance between eigenvalues of this system is achieved by the conjugate pair closest to the origin, that is, |θ 1| = |θ 2|, and coalescence occurs at the origin, as shown in Fig. 1 (left).

Let us substitute the stiffness matrix in (18) with − I, that is:

Although |θ 1| = |θ 2| still holds, strong interaction does not occur at the origin. Here, two pairs coalesce at the same time, as shown in Fig. 1 (right).

4.1 Variational Formula for the ε-Pseudoeigenvalues with Respect to ε

We consider here the minimizers ΔG(ε) and ΔK(ε) computed as stationary points of the system of ODEs (17) for a given ε, and the associated eigenvalues λ i(ε) = i θ i(ε) of the quadratic eigenvalue problem with ε < ε ∗ (which implies θ 1(ε) ≠ θ 2(ε)). We assume that all the abovementioned quantities are smooth functions with respect to ε, which we expect to hold generically.

Formula (10) is useful to compute the derivative of the ε-pseudoeigenvalues with respect to ε. We need the derivative of the operator Q w.r.t. ε, which appears to be given by:

Here, the notation \( A'= \frac {d A}{d \varepsilon }\) is adopted. Assuming that λ = λ 0 is a simple eigenvalue, and x 0 and y 0 are the right and left eigenvectors of Q at λ 0 respectively, then

Claim

\(y_0^* (\varDelta G' \lambda +\varDelta K') x_0 =0\).

The norm conservation \(\|[\varDelta G, \varDelta K]\|_F = 1\), which is equivalent to \( \| \varDelta G \|_F^2 + \|\varDelta K \|_F^2=1\), implies that 〈ΔG, ΔG′〉 = 0 = 〈ΔK, ΔK′〉. Also:

and

Therefore:

and

The previous expression provides f′(ε). Hence, for ε < ε ⋆ we can exploit its knowledge. Since generically coalescence gives rise to a defective pair on the imaginary axis, we have that the derivative of f(ε) is singular at ε ⋆.

Our goal is to approximate ε ⋆ by solving f(ε) = δ with δ > 0 a sufficiently small number. For ε close to ε ⋆, ε < ε ⋆, we have generically (see [3])

which corresponds to the coalescence of two eigenvalues. For an iterative process, given ε k, we use formula (20) to compute f′(ε) and estimate γ and ε ⋆ by solving (21) with respect to γ and ε ⋆. We denote the solution as γ k and \(\varepsilon ^\star _k\), that is:

and then compute

Algorithm 2 is based on the previous formulæ. The Newton-like update adds no significant cost, since the computation of f′(ε k) is very cheap.

Unfortunately, since the function f(ε) is not smooth at ε ⋆, and vanishes identically for ε > ε ⋆, the fast algorithm has to be complemented by a slower bisection technique to provide a reliable method to approximate ε ⋆.
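One step of the fast outer update can be sketched as below. The sketch assumes that the local model is the square-root law \(f(\varepsilon) \approx \gamma\sqrt{\varepsilon^\star - \varepsilon}\), which is the generic behavior of an eigenvalue gap near a coalescence; under this assumption, matching f(ε k) and f′(ε k) gives \(\varepsilon^\star_k = \varepsilon_k - f/(2f')\) and \(\gamma_k = \sqrt{-2ff'}\), and the next iterate solves \(\gamma_k\sqrt{\varepsilon^\star_k - \varepsilon} = \delta\). The function name `sqrt_model_update` is hypothetical.

```python
import math


def sqrt_model_update(eps_k, f_k, df_k, delta):
    """One update of the outer iteration under the assumed model
    f(eps) = gamma * sqrt(eps_star - eps).

    Requires f_k > 0 and df_k < 0 (the gap is positive and decreasing).
    Returns (gamma_k, eps_star_k, eps_next) with f_model(eps_next) = delta.
    """
    if f_k <= 0.0 or df_k >= 0.0:
        raise ValueError("model needs f_k > 0 and df_k < 0; fall back to bisection")
    eps_star_k = eps_k - f_k / (2.0 * df_k)      # estimated coalescence point
    gamma_k = math.sqrt(-2.0 * f_k * df_k)       # estimated amplitude
    eps_next = eps_star_k - (delta / gamma_k) ** 2
    return gamma_k, eps_star_k, eps_next


# Synthetic check on an exact square-root law with gamma = 2, eps_star = 1.
eps = 0.5
f = 2.0 * math.sqrt(1.0 - eps)
df = -2.0 / (2.0 * math.sqrt(1.0 - eps))
print(sqrt_model_update(eps, f, df, delta=1e-3))
```

When the inputs violate the model assumptions, for instance because the iterate has overshot ε ⋆ and f vanishes, a bisection step between the last stable and unstable values of ε is the natural safeguard described in the text.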

5 The Complete Algorithm

The whole Algorithm 2 follows:

Algorithm 2: Algorithm for computing ε ⋆

6 Numerical Experiments

We consider here some illustrative examples with M = I, from [2, 10, 13]. In the following, ε u is chosen as the distance between the largest and the smallest eigenvalues, whereas ε 0 = 0 and ε 1 is obtained by (23).

6.1 Example 1

In this example, the stiffness matrix is positive definite, and the distance to singularity is ε ⋆ = 3, which coincides with the distance to instability.

6.2 Example 2

Let us consider the equation of motion \(M\ddot x(t) + G \dot x(t) + K x(t) = 0\), with:

Here, the two closest eigenvalues of the system are the complex conjugate pair i θ 1, i θ 2 with θ 1 = −θ 2 = 2.1213e−02, and coalescence occurs at the origin, with ε ⋆ = 4.6605e−01. Figure 2 illustrates these results. On the left, a zoom-in of the eigenvalues of system (24) near the origin is provided. In the center, coalescence occurs for the perturbed system \(M\ddot x(t) + (G+ \varepsilon ^\star \varDelta G )\dot x(t) + (K+\varepsilon ^\star \varDelta K) x(t) = 0\). On the right, the two eigenvalues become real after the strong interaction, namely, for ε > ε ⋆, and the positive one leads the system to instability.

Fig. 2 A zoom-in before, during, and after strong interaction for system (24)

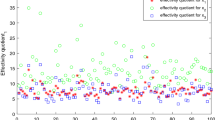

6.3 Example 3

This problem arises in the vibration analysis of a wiresaw. Let n be the dimension of the matrices. Let

and G = (g jk) where \(g_{jk}= \frac {4jk}{j^2-k^2}v\) if j + k is odd, and 0 otherwise.

The parameter v is a real nonnegative number representing the speed of the wire. For v ∈ (0, 1), the stiffness matrix is positive definite. Here, we present two cases in which v > 1, and K is negative definite.
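The entry-wise definition of G translates directly into code. The sketch below builds only the gyroscopic matrix from the formula stated above, using 1-based indices j, k as in the text; the function name `wiresaw_gyroscopic` is an illustrative choice.

```python
import numpy as np


def wiresaw_gyroscopic(n, v):
    """Gyroscopic matrix G = (g_jk) with g_jk = 4 j k v / (j^2 - k^2)
    when j + k is odd, and 0 otherwise (j, k = 1, ..., n)."""
    G = np.zeros((n, n))
    for j in range(1, n + 1):
        for k in range(1, n + 1):
            if (j + k) % 2 == 1:       # j + k odd implies j != k
                G[j - 1, k - 1] = 4.0 * j * k / (j ** 2 - k ** 2) * v
    return G


G = wiresaw_gyroscopic(4, 1.1)
print(np.allclose(G, -G.T))
```

Swapping j and k changes the sign of the denominator only, so the matrix is skew-symmetric by construction, as required of a gyroscopic matrix.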

First, consider n = 4 and v = 1.1. Then, the system is marginally stable, and the distance to instability is given by ε ⋆ = 4.6739e−02. The eigenvalues i θ 1 = 3.4653i and i θ 2 = 2.5859i coalesce, as well as their respective conjugates.

References

Andrew, A.L., Chu, K.W.E., Lancaster, P.: Derivatives of eigenvalues and eigenvectors of matrix functions. SIAM J. Matrix Anal. Appl. 14(4), 903–926 (1993)

Betcke, T., Higham, N.J., Mehrmann, V., Schröder, C., Tisseur, F.: NLEVP: a collection of nonlinear eigenvalue problems. ACM Trans. Math. Softw. 39(2), Article 7 (2013)

Buttà, P., Guglielmi, N., Manetta, M., Noschese, S.: Differential equations for real-structured (and unstructured) defectivity measures. SIAM J. Matrix Anal. Appl. 36(2), 523–548 (2015)

Guglielmi, N., Overton, M.L.: Fast algorithms for the approximation of the pseudospectral abscissa and pseudospectral radius of a matrix. SIAM J. Matrix Anal. Appl. 32(4), 1166–1192 (2011)

Guglielmi, N., Kressner, D., Lubich, C.: Low rank equations for Hamiltonian nearness problems. Numer. Math. 129(2), 279–319 (2015)

Huseyin, K., Hagedorn, P., Teschner, W.: On the stability of linear conservative gyroscopic systems. J. Appl. Maths. Phys. (ZAMP) 34(6), 807–815 (1983)

Inman, D.J., Saggio III, F.: Stability analysis of gyroscopic systems by matrix methods. AIAA J. Guid. Control Dyn. 8(1), 150–152 (1985)

Kirillov, O.N.: Destabilization paradox due to breaking the Hamiltonian and reversible symmetry. Int. J. Non Linear Mech. 42(1), 71–87 (2007)

Lancaster, P.: Strongly stable gyroscopic systems. Electron. J. Linear Algebra 5, 53–66 (1999)

Lancaster, P.: Stability of linear gyroscopic systems: a review. Linear Algebra Appl. 439, 686–706 (2013)

Merkin, D.R.: Gyroscopic Systems. Gostekhizdat, Moscow (1956)

Seyranian, A., Stoustrup, J., Kliem, W.: On gyroscopic stabilization. Z. Angew. Math. Phys. 46(2), 255–267 (1995)

Yuan, Y., Dai, H.: An inverse problem for undamped gyroscopic systems. J. Comput. Appl. Math. 236, 2574–2581 (2012)

Acknowledgements

N. Guglielmi thanks the Italian M.I.U.R. and the INdAM GNCS for financial support and also the Center of Excellence DEWS.


© 2019 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Guglielmi, N., Manetta, M. (2019). Stability of Gyroscopic Systems with Respect to Perturbations. In: Bini, D., Di Benedetto, F., Tyrtyshnikov, E., Van Barel, M. (eds) Structured Matrices in Numerical Linear Algebra. Springer INdAM Series, vol 30. Springer, Cham. https://doi.org/10.1007/978-3-030-04088-8_13


DOI: https://doi.org/10.1007/978-3-030-04088-8_13


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-04087-1

Online ISBN: 978-3-030-04088-8

eBook Packages: Mathematics and Statistics (R0)