Abstract

In a multi-objective game, each individual’s payoff is a vector-valued function of everyone’s actions. Under such vectorial payoffs, Pareto-efficiency is used to formulate each individual’s best-response condition, inducing Pareto-Nash equilibria as the fundamental solution concept. In this work, we follow a classical game-theoretic agenda to study equilibria. Firstly, we show in several ways that numerous pure-strategy Pareto-Nash equilibria exist. Secondly, we propose a more consistent extension to mixed-strategy equilibria. Thirdly, we introduce a measurement of the efficiency of multiple objectives games, which purpose is to keep the information on each objective: the multi-objective coordination ratio. Finally, we provide algorithms that compute Pareto-Nash equilibria and that compute or approximate the multi-objective coordination ratio.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Game theory and microeconomics assume that individuals evaluate outcomes into scalars. However, bounded rationality can hardly be modeled consistently by agents simply comparing scalars: “The classical theory does not tolerate the incomparability of oranges and apples” [25]. Money is another case of scalarization of the values of outcomes. For instance, while ‘making money’ theoretically creates value [26], the tobacco industry making money and killing approximately six million people every year [31] is hardly a creation of valueFootnote 1.

In this work, we assume that agents evaluate outcomes over a finite set of distinct objectivesFootnote 2; hence, agents have vectorial payoffs. For instance, in the case of tobacco consumers, this slightly more informative model would keep the information on these three objectives [4]: smoking pleasure, cigarette cost and consequences on life expectancy. In literature, this model was called games with vectorial payoffs, multi-objective games or multi-criteria games; and several applications were considered (see e.g. [30, 32]). Indeed, behaviors are less assumptively modeled by a partial preference: the Pareto-dominance. Using Pareto-efficiency in place of best-response condition induces Pareto-Nash (PN) equilibria as the solution concept for stability, without even assuming that individuals combine the objectives in a precise manner. Pareto-Nash equilibria encompass the outcomes, even under unknown, uncertain or inconsistent preferences.

This paper more particularly addresses two unexplored issues.(1) The algorithmic aspects of multi-objective games have never been studied. (2) Also, the efficiency of Pareto-Nash equilibria has never been a concern.

Related Literature on Mixed-Strategies and Similar Strategy Spaces. Games with vectorial payoffs, or multi-objective games, were firstly introduced in the late fifties by Blackwell and Shapley [1, 24]. The former shows the existence of a mixed-strategy Pareto-Nash equilibrium in finite two-player zero-sum multi-objective games. The later generalizes this existence result to finite multi-objective games. Both use a definition of mixed-strategy Pareto-Nash equilibria that suffers an inconsistency: pure-strategy Pareto-Nash equilibria are not included in the set of mixed-strategy Nash equilibria (see Sect. 4). Nonetheless, there is an established literature on games with vector payoffs that uses this definition. Deep formal works generalized known existence results [24] to individual action-sets being compact convex subsets of a normed space [29]. Weak Pareto-Nash equilibria can be approximated [16].

Works Related to Pure Strategies and Algorithms. [30] achieves to characterize the entire set of Pareto-Nash equilibria by mean of augmented Tchebycheff norms. However, the number of dimensions that parameterize these Tchebycheff norms is algorithmically prohibitive. [20] shows that a MO potential function guarantees that a Pareto-Nash equilibrium exists in finite MO games.

In Sect. 3, we show in three different settings that pure-strategy Pareto-Nash equilibria are guaranteed to exist, or very likely to be numerous. In Sect. 4, we show an inconsistency in the current concept of mixed-strategy PN equilibrium, and propose an extension to solve this flaw. In Sect. 5, in the fashion of the price of anarchy [14], we define a measurement of the worst-case efficiency of individualistic behaviors in games, compared to the optimum. In the multi-objective case, it is far from trivial, as worst-case equilibria and optima are not uniquely defined. In Sect. 6, we show how to compute the set of (worst) pure-strategy Pareto-Nash equilibria for several game structures, and provide algorithms to compute and approximate our multi-objective coordination ratioFootnote 3.

2 Preliminaries

Definition 1

A multi-objective game (MO game, or MOG) is defined by the following tuple \(\left( N,\{A^{i}\}_{i\in N},{\mathcal {D}},\{\varvec{u}^{i}\}_{i\in N}\right) \):

-

The agents set is \(N=\lbrace 1,\ldots ,n\rbrace \). Agent i decides action \(a^{i}\) in action-set \(A^{i}\).

-

The shared list of objectives is denoted by \({\mathcal {D}}=\lbrace 1,\ldots ,d\rbrace \) and every agent \(i\in N\) gets her payoff from function \(\varvec{u}^{i}:A=A^{1}\times \ldots \times A^{n}\rightarrow \mathbb {R}^{d}\) which maps every overall action to a vector-valued payoff; e.g., real \(u_{k}^{i}(\varvec{a})\) is the payoff of agent i on objective k for action-profile \(\varvec{a}=(a^{1},\ldots ,a^{n})\).

In the subjective theory of value, every individual evaluates her endowment \((u_{1}^{i},\ldots ,u_{d}^{i})\) however she wants based on an utility function \(v^{i}:\mathbb {R}^d\rightarrow \mathbb {R}\). The theory of multi-objective games [1, 24] aims at allowing for individuals that behave according to several unknown, uncertain, or inconsistent utility functions. These utility functions are reduced to their common denominator: the Pareto-dominance, as defined below. That vector \(\varvec{y}\in \mathbb {R}^d\) weakly-Pareto-dominates and respectively Pareto-dominates vector \(\varvec{x}\in \mathbb {R}^d\) is denoted and defined by:

For the preferences of individuals, given an adversary action-profile\(\varvec{a}^{-i}=(a^j\mid j\ne i)\), this defines a partial rationality on set\(\varvec{u}^{i}(A^{i},\varvec{a}^{-i})=\{\varvec{u}^{i}(b^{i},\varvec{a}^{-i})\mid b^i\in A^i\}\), which is less assumptive than complete orders, since it does not presume any individual utility function \(v^{i}:\mathbb {R}^{d}\rightarrow \mathbb {R}\). Formally, given a finite set of vectors \(X\subseteq \mathbb {R}^d\), the set of Pareto-efficient vectors is defined as the following set of non-Pareto-dominated vectors:

Since Pareto-dominance is a partial order, it induces a multiplicity of Pareto-efficient vectors. These are the best compromises between objectives. Similarly, let \(\text{ WST }[X]=\{\varvec{y} \in X|\forall \varvec{x}\in X,\text{ not }(\varvec{y}\succ \varvec{x})\}\) denote the worst vectors.

In a multi-objective game, individuals behave according to the Pareto -dominance, inducing the solution concept Pareto-Nash equilibrium (\(\text{ PN }\)), formally defined as any action-profile \(\varvec{a}\in A\) such that for every agent \(i\in N\):

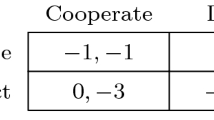

We call these conditions Pareto-efficient responses. Let \(\text{ PN }\subseteq A\) denote the set of Pareto-Nash equilibria. For instance, in Fig. 1, action-profile \((b^{1},b^{2},a^{3},b^{4},b^{5})\) is a PN equilibrium, since each action, given the adversary local action profile (column), is Pareto-efficient among the given agent’s two actions (rows). In this example, there are 13 Pareto-Nash equilibria (depicted in Fig. 2).

Such an encompassing solution concept provides the first phase for bounding the efficiency of games. It is well-known that individualistic behaviors can be far from the optimum/maximum in terms of utilitarian evaluation \(u(\varvec{a})=\sum _{i\in N}u^{i}(\varvec{a})\). In single-objective gamesFootnote 4, this inefficiency is measured by the Coordination Ratio \(\text{ CR }=\frac{\min [u(PN)]}{\max [u(A)]}\) [14], which is more commonly known as the Price of Anarchy [23]. However, in the multi-objective case, the utilitarian social welfare \(\varvec{u}(\varvec{a})=\sum _{i\in N}\varvec{u}^{i}(\varvec{a})\) is a vector-valued function \(\varvec{u}:A\rightarrow \mathbb {R}^d\) with respect to d objectives. To study the efficiency of Pareto-Nash equilibria, we introduce:

-

set of equilibria outcomes \(\quad \mathcal {E}\quad =\quad \varvec{u}(\text{ PN })\quad (\subset \mathbb {R}^d),\)

-

set of efficient outcomes \(\quad \mathcal {F}\quad =\quad \text{ EFF }[\varvec{u}(A)]\quad (\subset \mathbb {R}^d)\).

3 Numerous Pure Strategy Pareto-Nash Equilibria Exist

This section demonstrates the existence of pure strategy Pareto-Nash equilibria. Firstly, we write how the existence results from single-objective (SO) games can be retrieved in MO games. Secondly, we generalize the equilibria existence results of single-objective potential games to multi-objective potential games. Thirdly, we show that on average, numerous Pareto-Nash equilibria exist.

3.1 Reductions from MO Games to SO Games

In the literature, most rationalities are constructed by means of a utility function \(v^{i}:\mathbb {R}^{d}\rightarrow \mathbb {R}\), which is monotonic with respect to the Pareto-dominance, that is:

Such functions are called Pareto-monotonic. For instance, these include positive weighted sums, Cobb-Douglas utilities, and utility functions in general as assumed by the Arrow-Debreu theorem.

A straightforward consequence is that the set of Pareto-efficient vectors contains the optima of any Pareto-monotonic utility function. Formally, given a MOG \(\varGamma \), from Pareto-monotonic utility functions \(V=(v^{i}:\mathbb {R}^d\rightarrow \mathbb {R}|i\in N)\) the single-objective game \(V\circ \varGamma =(N,\{A^{i}\}_{i\in N},\{v^{i}\circ \varvec{u}^{i}\}_{i\in N})\) results from the given utilities, and one has: \(\text{ PN }(V\circ \varGamma )\subseteq \text{ PN }(\varGamma ).\) In other words, Pareto-Nash equilibria encompass the game’s outcome, regardless of the unknown preferences.

Also, inclusion \(\text{ PN }(V\circ \varGamma )\subseteq \text{ PN }(\varGamma )\) argues for the guaranteed existence of numerous PN equilibria in MO games, under the following assumptions:

-

1.

the structure of the SO game on every objective is the same,

-

2.

equilibria are guaranteed in that structure of SO game,

-

3.

and a positive linear combination of the MO game induces that SO game.

This remark is the canonical argument used in previous results (e.g. [20, 24]).

3.2 Multi-objective Potentials

We now explore potential games, as introduced for congestion games by Robert Rosenthal [15, 22] and recently generalized to MO games [20]. The existence of an MO potential function guarantees that at least one Pareto-Nash equilibrium exists [20]. We go further and completely characterize the set of PN equilibria.

Definition 2

An MO game \(\varGamma =\left( N,\{A^{i}\}_{i\in N},{\mathcal {D}},\{\varvec{u}^{i}\}_{i\in N}\right) \) admits (exact) potential function \(\varvec{\varPhi }:A\rightarrow \mathbb {R}^{d}\) if and only if for every action-profile \(\varvec{a}\in A\), for every agent \(i\in N\) and for every action \(b^{i}\in A^{i}\), one has:

That is, function \(\varvec{\varPhi }\) additively accumulates the vectorial values of each deviation.

Definition 3

Given a vector valued function \(\varvec{\varPhi }:A\rightarrow \mathbb {R}^{d}\), let the set of locally efficient action-profiles \(\text{ LOC }(\varvec{\varPhi })\) be the set of action-profiles \(\varvec{a}\in A\) such that:

Set \(\text{ LOC }(\varvec{\varPhi })\) corresponds to a generalization of local optima for function \(\varvec{\varPhi }\), and is non-empty if sets N, \({\mathcal {D}}\) and A are finite. Moreover, due to the loose requirement for local efficiency, set \(\text{ LOC }(\varvec{\varPhi })\) is likely to contain numerous action-profiles.

Theorem 1

Let \(\varGamma =\left( N,\{A^{i}\}_{i\in N},{\mathcal {D}},\{\varvec{u}^{i}\}_{i\in N}\right) \) be a finite multi-objective gameFootnote 5 that admits potential function \(\varvec{\varPhi }\). Then, it holds that:

This theorem completely characterizes the set of Pareto-Nash equilibria as the set of locally efficient action-profiles for function \(\varvec{\varPhi }\), which is a non-empty set with numerous action-profiles. More generally, Theorem 1 also holds when sets N and \({\mathcal {D}}\) are finite and sets \(A^i\) are just compact.

3.3 Likelihood of Equilibrium in Random Games

Another manner to study whether a \(\text{ PN }\)-equilibrium exists is to provide a probability distribution on a family of finite games and then discuss the probability of \(\text{ PN }\)-equilibrium existence. A similar methodology was successfully applied [7, 9, 21] to SO games in several settings where every SO payoff \(u^{i}(\varvec{a})\) is independently and identically distributed by a uniform distribution on continuous intervals [0, 1]. At the heart of this subsection, let random variable Z denote the number of pure Nash-equilibria action-profiles in the game. In the SO case, there is almost surely only one best response. However, when considering MO games, a main technical difference lies in the average number of “best responses” (or here, Pareto-efficient responses), which in most cases exceeds 1, due to the surface-like shape of the Pareto-efficient set in \(\mathbb {R}^{d}\), surface which is \((d-1)\) dimensional. Here, we assume a probability distribution \(\mathbb {P}_{n,\alpha ,\beta }\), that builds randomly the Pareto-efficient response tables of an n-agent normal form game with \(\alpha \) actions-per-agent: for every agent i and every adversary action-profile \(\varvec{a}^{-i}\in \prod _{j\ne i}A^{j}\), there is a fixed number \(\beta :1<\beta \le \alpha \) of Pareto-efficient responses, for the sake of simplicity.

Theorem 2

Given numbers \(n\ge 2\) of agents, \(\alpha \ge 2\) of actions-per-agent and \(\beta \le \alpha \) of Pareto-efficient responses, based on probability distribution \(\mathbb {P}_{n,\alpha ,\beta }\), the number Z of Pareto-Nash equilibria satisfies \(\mathbb {E}[Z] = \beta ^n\) and:

It argues for the existence of numerous Pareto-Nash equilibria when there are enough agents and efficient responses, and follows from the Bienaymé-Tchebychev inequality. For instance, (given \(\gamma =1/2\)) the probability that the number of Pareto-Nash equilibria Z is between \((1/2)\beta ^{n}\) and \((3/2)\beta ^{n}\), is at least \(1-4\beta ^{-n}\), which for \(\beta =2\) efficient responses and \(n=5\) agents, gives \(\mathbb {P}(16\le Z\le 48)\ge 7/8\).

4 Consistent Extension to Mixed Strategies

To guarantee equilibrium existence by means of fixed-point theorems on compact sets [17, 27], the finite action sets of every agent are expanded to include mixed strategies. That is: every agent i decides a probability distribution \(p^{i}\) in the set \(\varDelta (A^{i})\) of probability distributions over his action-set \(A^{i}\). Each payoff function \(\varvec{u}^{i}\) is redefined to be the expected utility

under the mixed-strategy profile \(\varvec{p}=(p^{1},\ldots ,p^{n})\in \prod _{i\in N}\varDelta (A^{i})\). This defines a mixed-extension of the original game. The stability concept induced is called a mixed-strategy Nash equilibrium.

In MOGs, Pareto-Nash equilibria based on their original definition by Blackwell [1] and Shapley [24] (below) are those usually considered [2, 5, 28, 32].

Definition 4

Given finite MO game \(\varGamma =\left( N,\{A^{i}\}_{i\in N},\{{\mathcal {D}}\},\{\varvec{u}^{i}\}_{i\in N}\right) \), a mixed-strategy profile \(\varvec{p}=(p^{1},\ldots ,p^{n})\in \prod _{i\in N}\varDelta (A^{i})\) is a mixed-strategy Pareto-Nash equilibrium if and only if it satisfies for every agent i:

The rational behind this first definition is the following. For every agent i, mixed-strategy \(p^{i}\in \varDelta (A^{i})\) acts as a convex-combination of set of vectorial payoffs \(\varvec{u}^{i}(A^{i},\varvec{p}^{-i})\) and the best-response condition is replaced by the fact that mixed-strategy \(p^{i}\) should have a Pareto-efficient evaluation \(\varvec{u}^{i}(p^{i},\varvec{p}^{-i})\) among the elements of this convex set of evaluations \(\{\varvec{u}^{i}(q^{i},\varvec{p}^{-i})\in \mathbb {R}^{d}\mid q^{i}\in \varDelta (A^{i})\}\). That is, a mixed-strategy Pareto-Nash equilibrium is a pure-strategy Pareto-Nash equilibrium in finite game \(\varGamma \)’s mixed extension. However, as depicted in Fig. 3, Definition 1 fails to fulfill two fundamental requirements:

-

1.

Pure-strategy equilibria must be included in mixed-strategy equilibria.

-

2.

Mixed-strategies also enable to model a risk-averse agent.

Proof

Figure 3 demonstrates these side effects.

To fulfill the two requirements, instead of efficient mixed actions, we consider mixtures of efficient pure-actions. As in Fig. 3, it corrects both side effects.

Definition 5

Given a finite multi-objective game \(\left( N,\{A^{i}\}_{i\in N},\{{\mathcal {D}}\},\{\varvec{u}^{i}\}_{i\in N}\right) \), a mixed-strategy Pareto-Nash equilibrium is a mixed-strategy profile\(\varvec{p}=(p^{1},\ldots ,p^{n})\in \prod _{i\in N}\varDelta (A^{i})\), such that for every agent i and action \(a^{i}\in A^{i}\) if \(a^{i}\) is played with positive probability \(p^{i}(a^{i})>0\), then it holds that

This generalized definition connects in the single-objective case to a less know definition of Nash-equilibria (see [18], p. 30, Theorem 2.1). In this alternative definition, each mixed strategy must be a mixture of pure-strategies that are best-responses. In other words, the support of each mixed strategy must be included in the set of pure-strategy best-responses. Furthermore, concerning existence, since this revised definition contains the former one, (which is guaranteed to exist) the new definition is guaranteed to exist too.

5 Multi-objective Coordination Ratio

In the single-objective case, the coordination ratio measures the efficiency loss of equilibria compared to the optimum. In MO games, we claim that it is critical to study efficiency with respect to every objective. Even after the actions, the game analyst still has access to the vectorial payoffs. In this section, we follow the agenda outlined in the introduction, to define a multi-objective coordination ratio \(\text {MO-CR}[\mathcal {E},\mathcal {F}]\) of the set of equilibria outcomes \(\mathcal {E}\) to the set of efficient outcomes \(\mathcal {F}\), that fills the critical purpose to keep information on each objective.

First, we state the list of desirable properties that we want the ratio to satisfy. For the purpose of having meaningful divisions and ratios, some vectors are positive in this section. Given vectors \(\varvec{\rho },\varvec{y}\in \mathbb {R}^d\) and \(\varvec{z}\in \mathbb {R}^d_{+}\), vector \(\varvec{\rho }\star \varvec{y}\in \mathbb {R}^d\) is defined by \(\forall k\in {\mathcal {D}},(\varvec{\rho }\star \varvec{y})_{k}=\rho _{k}y_{k}\). Vector \(\varvec{y}/\varvec{z}\in \mathbb {R}^d\) is defined by \(\forall k\in {\mathcal {D}},(\varvec{y}/\varvec{z})_{k}=y_{k}/z_{k}\). Given vector \(\varvec{r}\in \mathbb {R}^d\) and set of vectors Y, set \(\varvec{r}\star Y\) is defined by \(\{\varvec{r}\star \varvec{y}\in \mathbb {R}^d_{+}|\varvec{y}\in Y\}\) and for \(\varvec{r}\in \mathbb {R}^d_{+}\), set \(Y/\varvec{r}\) is defined by \(\lbrace \varvec{y}/\varvec{r}\in \mathbb {R}^d|\varvec{y}\in Y\rbrace \). Given \(\varvec{x}\in \mathbb {R}^d\), cone \(\mathcal {C}(\varvec{x})\) denotes \(\{\varvec{y}\in \mathbb {R}^d~|~\varvec{x}\succsim \varvec{y}\}\), and given \(X\subset \mathbb {R}^d\), cone-union \(\mathcal {C}(X\text {)}\) is defined by \(\cup _{\varvec{x}\in X}\mathcal {C}(\varvec{x})\). Vector \(\varvec{0}\) denotes a vector with d zeros, and \(\varvec{1}\) denotes a vector with d ones.

The first property that we require from \(\text{ MO-CR }[\mathcal {E},\mathcal {F}]\) is to be on a multi-objective ratio scale. Given \(\mathcal {E},\mathcal {F}\subset \mathbb {R}^d_{+}\) and \(\varvec{r}\in \mathbb {R}^d_{+}\), the following shall hold.

To fix these ideas one can think of \(d=1\) and given two positive numbers e, f, to the properties of ratio e / f. Equation (1) states that MO-CR is expressed in a multi-objective space. Equations (2), (3) and (4) state that MO-CR is well-centered and sensitive on each objective to multiplications of outcomes, which is what we want. For instance, if \(\mathcal {E}\) is three times better on objective k, then so is MO-CR. If there are two times more efficient opportunities in \(\mathcal {F}\) on objective \(k'\), then MO-CR is one half on objective \(k'\). In other words, the efficiency of each objective independently reflects on MO-CR in a ratio-scale. Equation (5) states that if all equilibria outcomes are efficient (i.e. \(\mathcal {E}\subseteq \mathcal {F}\)), then this amounts to \(\varvec{1}\in \text {MO-CR}[\mathcal {E},\mathcal {F}]\), i.e. the MO game is fully efficient.

These requirements rule out a set of first ideas. For instance, we can rule out comparisons of equilibria outcomes to ideal vector \({\mathcal {I}}=(\max _{z\in \mathcal {F}}\lbrace z_{k}\rbrace |k\in {\mathcal {D}})\) does not satisfy requirement (5) to have \(\varvec{1}\in \text {MO-CR}[\mathcal {E},\mathcal {F}]\) when \(\mathcal {E}\subseteq \mathcal {F}\). By starting from a social welfare \(f:\mathbb {R}^d_{+}\rightarrow \mathbb {R}_+\), taking ratio \(\min f(\mathcal {E})/\max f(\mathcal {F})\), induces the same problem.

This measurement should also be non-dictatorial, in the sense that no point of view should be imposed on what the overall efficiency is: no prior choice must be done on the set of efficient outcomes. Formally, if two sets of efficient outcomes \(\mathcal {F},\mathcal {F}'\subset \mathbb {R}^d_{+}\) differ even slightly, then this must reflect at least for some numerator set \(\mathcal {E}\) onto ratio \(\text {MO-CR}[\mathcal {E},\mathcal {F}]\). This amounts to a disjunction on efficient outcomes. Finally \(\text {MO-CR}[\mathcal {E},\mathcal {F}]\) must provide guaranteed efficiency ratios that hold for every equilibrium outcome \(\varvec{y}\in \mathcal {E}\), which amounts to a conjunction on equilibria outcomes. The definition below follows from these requirements.

Firstly, the efficiency of one equilibrium \(\varvec{y}\in \mathcal {E}\) is quantified without prior choices on what efficient outcome should we compare it to, as required:

The idea is that we do not take sides with any efficient outcome. Instead, we define with flexibility and without a dictatorship a disjunctive set of guaranteed efficiency ratios, which lets the differences between two sets of efficient outcomes \(\mathcal {F},\mathcal {F}'\subset \mathbb {R}^d_{+}\) reflect onto ratio \(\text {MO-CR}[\mathcal {E},\mathcal {F}]\).

Secondly, in MOGs, on average, there are many Pareto-Nash equilibria. An efficiency guarantee \(\varvec{\rho }\in \mathbb {R}^d\) should hold for every equilibrium outcome. It induces this conjunctive definition of the set of guaranteed vectorial ratios:

In fact, because of the conjunction on equilibria outcomes, the set \(R[\mathcal {E},\mathcal {F}]\) only depends on sets \(\text{ WST }[\mathcal {E}]\) (instead of set \(\mathcal {E}\)) and \(\mathcal {F}\).

Finally, if two bounds on efficiencies \(\varvec{\rho }\) and \(\varvec{\rho }'\) are such that \(\varvec{\rho }\succ \varvec{\rho }'\) (e.g. the former guarantees fraction \(\varvec{\rho }=(0.75,0.75)\) of efficiency and the later fraction \(\varvec{\rho }'=(0.5,0.5)\)), then \(\varvec{\rho }'\) brings no more information; hence, MO-CR is defined using \(\text{ EFF }\) on the guaranteed efficiency ratios \(R[\text{ WST }[\mathcal {E}],\mathcal {F}]\). These points are summed up in the following definition:

Definition 6

(MO-CR). Given an MO game, vector \(\varvec{\rho }\in \mathbb {R}^d\) bounds its inefficiency (i.e. \(\varvec{\rho }\in R[\mathcal {E},\mathcal {F}]\)) if and only if the following holds (see Fig. 4):

The multi-objective coordination ratio \(\text {MO-CR}[\mathcal {E},\mathcal {F}]\) is then defined as:

The most famous results of the coordination ratio (or price of anarchy) are stated analytically on families of games, for instance on congestion games [3, 23]. Such results would also be desirable in the multi-objective case. However, the underlying proofs do not survive this generalization: while best response inequalities can be summed in single-objective cases, here, non-Pareto-dominances cannot. This issue is independent of the chosen efficiency measurement and motivates numerical approaches, as proposed in the next section.

6 Computation

In this section, we provide algorithms for computing the set of pure-strategy Pareto-Nash equilibria and for computing the multi-objective coordination ratio.

6.1 Computing Pure-Strategy Pareto-Nash Equilibria

If the MO game is given in normal form, then it is made of the MO payoffs of every agent \(i\in N\) on every action-profile \(\varvec{a}\in A\). Since there are \(n\alpha ^{n}\) such vectors, where recall that n is the number of agents, \(\alpha \) the number of actions per agent and d the number of objectives, the length of this input is \(L(n)=n\alpha ^{n}d\). Then, enumeration of the action-profiles works efficiently with respect to length function L, using a simple argument similar to [11].

Theorem 3

Given a MO game in normal form, computing the set of the best (resp. worst) equilibria outcomes \(\text{ EFF }[\mathcal {E}]\) (resp. \(\text{ WST }[\mathcal {E}]\)) takes polynomial time

Moreover, if \(d=2\), this complexity is lowered to quasi-linear-time

Graphical games provide compact representations of massive multi-agent games when the payoff functions of the agents only depend on a local subset of the agents [13]. Graphical games can be generalized in a straightforward manner to assuming vectorial payoffs. Formally, there is a support graph \(G=(N,E)\) where each vertex represents an agent, and an agent i’s evaluation function only depends on the actions of the agents in his inner-neighbourhood \(\mathcal {N}(i)=\{j\in N|(j,i)\in E\}\). That is \(\varvec{u}^i:A^{\mathcal {N}(i)}\rightarrow \mathbb {R}^d\) maps each local action-profile \(\varvec{a}^{\mathcal {N}(i)}\in A^{\mathcal {N}(i)}\) to a multi-objective payoff \(\varvec{u}^i(\varvec{a}^{\mathcal {N}(i)})\in \mathbb {R}^d\).

Definition 7

(Multi-objective graphical game (MOGG)). An MOGG is a tuple \(\left( G=(N,E), \{A^i\}_{i\in N}, {\mathcal {D}}, \{\varvec{u}^i\}_{i\in N}\right) \). N is the set of agents. \(\{A^i\}_{i\in N}\) are their individual action-sets. \({\mathcal {D}}\) is the set of all objectives. Every function \(\varvec{u}^i:A^{\mathcal {N}(i)}\rightarrow \mathbb {R}^d\) is vector-valued, and its scope is vertex i’s neighborhood.

Figure 1 pictures a didactic instance of an MOGG. In the same manner as computing equilibria in graphical games was reduced to junction-tree algorithms [6], it is also possible to exploit a generalized MO junction-tree algorithm [8, 10]. However, even though this MO junction-tree algorithm is not in polynomial time (but rather pseudo-polynomial time), it still remains faster than browsing the Cartesian product of action-sets and is tractable on average, as experimented in the appendix. Symmetric games [12] can also be generalized to MOGs:

Definition 8

In a multi-objective symmetric game, individual payoffs are not impacted by the agents’ identities. There is one sole action-set \(A^{*}\) for every agent i. So, when deciding action \(a^{*}\in A^{*}\), the multi-objective reward only depends on the number of agents that decided every action. Consequently, the game is not specified for every action-profile \(\varvec{a}\in A=\prod _{i\in N}A^{*}\) and every agent i, but rather for every action \(a^{*}\in A^{*}\) and every configuration \(c:A^{*}\rightarrow \mathbb {N}\), where number \(c(a^{*})\in \mathbb {N}\) indicates the number of agents deciding action \(a^{*}\). Therefore, the utility is given by a function \(\varvec{u}^{*}\) such that \(\varvec{u}^{*}(a^{*},c)\in \mathbb {R}^{d}\) is the payoff for deciding action \(a^{*}\) when configuration c occurs.

There is a number \({n+\alpha -1 \atopwithdelims ()\alpha -1}\) of configurationsFootnote 6 to which the MO symmetric game associates MO vectors. As a consequence, generalizing to vectorial payoffs, the representation length is \(L=\alpha {n+\alpha -1 \atopwithdelims ()\alpha -1}d\), and when the numbers \(\alpha \) and d are fixed constant, length is \(L(n)\in \varTheta \left( \alpha n^{\alpha }d\right) \). Quite simply, for computing \(\mathcal {E}\), \(\text{ EFF }[\mathcal {E}]\) and \(\text{ WST }[\mathcal {E}]\), configurations enumeration already takes polynomial time.

Theorem 4

Given a multi-objective symmetric game with fixed \(\alpha \),

-

computing \(\text{ PN }\) and \(\mathcal {E}\) takes time \(O(n^{\alpha }\alpha ^{2}d)=O(L\alpha )\);

-

computing \(\text{ EFF }[\mathcal {E}]\) and \(\text{ WST }[\mathcal {E}]\) takes time \(O(n^{2\alpha }d)=O(L^{2})\). If \(d=2\), this lowers to \(O(L(\alpha +\log _{}(L)))\).

6.2 Computing MO-CR

In this subsection, we address the problem of computing the set \(\text {MO-CR}[\mathcal {E},\mathcal {F}]\), given sets of worst equilibria outcomes \(\text{ WST }[\mathcal {E}]\) and efficient outcomes \(\mathcal {F}\). Algorithm 1 (below) computes such set. In the algorithm, set \(D^{t}\) denotes a set of vectors. Given two vectors, \(\varvec{x},\varvec{y}\in \mathbb {R}^d_{+}\), let \(\varvec{x}\wedge \varvec{y}\) denote the vector defined by \(\forall k\in {\mathcal {D}},~(\varvec{x}\wedge \varvec{y})_{k}=\min \{x_{k},y_{k}\}\), let \(\varvec{x}^{\varvec{y}}\in \mathbb {R}^d_{+}\) be the vector defined by \(\forall k\in {\mathcal {D}}, (\varvec{x}^{\varvec{y}})_k=(x_k)^{y_k}\), and recall that \(\forall k\in {\mathcal {D}},~(\varvec{x}/\varvec{y})_{k}=x_{k}/y_{k}\).

Theorem 5

Algorithm 1 outputs \(\text {MO-CR}[\mathcal {E},\mathcal {F}]\) in poly-time \(O((qm)^{2d-1}d),\) where \(q=|\text{ WST }[\mathcal {E}]|\) and \(m=|\mathcal {F}|\) denote the size of the inputs, and d is fixed.

Proof

Algorithm 1 calculates product \(\cap _{\varvec{y}\in \text{ WST }[\mathcal {E}]}\cup _{\varvec{z}\in \mathcal {F}}\mathcal {C}(\varvec{y}/\varvec{z})\), where there could be \(m^q\) terms in the output. This set-algebra of cone-unions is compact.

A decisive corollary is that given an MO game with length L that satisfies \(q=O(\text {poly}(L))\), \(m=O(\text {poly}(L))\) and both sets \(\text{ WST }[\mathcal {E}]\) and \(\mathcal {F}\) are computable in time \(O(\text {poly}(L))\), then one can compute \(\text {MO-CR}\) in polynomial time \(O(\text {poly}(L))\). For instance, it is the case with MO normal forms or MO symmetric games. So this approach is not intractable in the most basic cases.

6.3 Approximation of the MO-CR for MO Compact Representations

Unfortunately, Algorithm 1 is not practical when the MO game has a compact form and cardinalities q, m are exponentials with respect to the compact size of the game’s representation. For instance, this is the case for multi-objective graphical games. Theorem 6 below answers this issue by taking only a small and approximate representation of sets \(\text{ WST }[\mathcal {E}]\) and \(\mathcal {F}\), in order to output a guaranteed approximation of sets \(\text {MO-CR}\) or \(R[\text{ WST }[\mathcal {E}],\mathcal {F}]\). This suggests the following general method:

-

1.

Given a compact MOG representation, compute quickly an approximation \(E^{(\varepsilon )}\) of \(\text{ WST }[\mathcal {E}]\) and an approximation \(F^{(\varepsilon ')}\) of \(\mathcal {F}\).

-

2.

Then, given \(E^{(\varepsilon )}\) and \(F^{(\varepsilon ')}\), use Algorithm 1 to approximate the MO-CR.

For this general method to be implemented rigorously, we must specify the precise definitions of the two approximations required in input, for the desired output to be indeed some approximation of the MO-CR.

Firstly, let us specify the output. The ratios in \(R[\text{ WST }[\mathcal {E}],\mathcal {F}]\) must be represented, even approximately, but only by using valid ratios of efficiency, as below.

Definition 9

(\((1+\varepsilon )\)-covering). Given \(R\subset \mathbb {R}^d_{+}\) and \(\varepsilon >0\), \(R^{(\varepsilon )}\subset R\) is a \((1+\varepsilon )\)-covering of R, if and only if:

For instance, \(R[\text{ WST }[\mathcal {E}],\mathcal {F}]\) is \((1+0)\)-covered by \(\text {MO-CR}=\text{ EFF }[R[\text{ WST }[\mathcal {E}],\mathcal {F}]]\). Denote \(\varvec{\varphi }:\mathbb {R}^d_{+}\rightarrow \mathbb {N}^d\) the discretization into the \((1+\varepsilon )\)-logarithmic grid. Given a vector \(\varvec{x}\in \mathbb {R}^d_{+}\), \(\varvec{\varphi }(x)\) is defined by: \(\forall k\in {\mathcal {D}},~~\varphi _k(x)=\lfloor \log _{(1+\varepsilon )}(x_k)\rfloor \). A typical implementation of \((1+\varepsilon )\)-coverings are the logarithmic \((1+\varepsilon )\)-coverings, which consist in taking one vector of R in each reciprocal image of \(\varvec{\varphi }(R)\). That is, for each \(\varvec{l}\in \varvec{\varphi }(R)\), take one \(\varvec{\rho }\) in \(\varvec{\varphi }^{-1}(\varvec{l})\). The logarithmic grid is depicted in Fig. 5.

Now we must specify rigorously what approximate representations \(E^{(\varepsilon _1)}\) of set \(\text{ WST }[\mathcal {E}]\), and \(F^{(\varepsilon _2)}\) of set \(\mathcal {F}\) we should take in input, in order to guarantee that \(R[E^{(\varepsilon _1)},F^{(\varepsilon _2)}]\) is an \((1+\varepsilon )\)-covering of \(R[\text{ WST }[\mathcal {E}],\mathcal {F}]\). Definitions 10 and 11 come from the need of specific approximate representations that will carry the guarantees to the approximate final output \(R[E^{(\varepsilon _1)},F^{(\varepsilon _2)}]\).

Definition 10

(\((1+\varepsilon )\)-under-covering). Given \(\varepsilon >0\), \(E\subset \mathbb {R}^d_{+}\) and \(E^{(\varepsilon )}\subset \mathbb {R}^d_{+}\), \(E^{(\varepsilon )}\) \((1+\varepsilon )\)-under-covers E if and only if:

The first condition states that \(E^{(\varepsilon )}\) bounds E from below. The second condition states that this lower bound is precise within a multiplicative \((1+\varepsilon )\). Given E, one can implement Definition 10 by using the log-grid (see e.g. Fig. 5):

where \(\varvec{\varphi }(\text{ WST }[\mathcal {E}])=\{\varvec{\varphi }(\varvec{y})\in \mathbb {N}^d\mid \varvec{y}\in \text{ WST }[\mathcal {E}]\}\), and given \(\varvec{l}\in \mathbb {N}^d\), the vector \(\varvec{e}^{\varvec{l}}\) is defined by \((\varvec{e}^{\varvec{l}})_k=(1+\varepsilon )^{l_k}\). Now let us state what approximation is required on the set of efficient outcomes \(\mathcal {F}\).

Definition 11

(\((1+\varepsilon )\)-stick-covering). Given \(\varepsilon >0\), \(F\subset \mathbb {R}^d_{+}\) and \(F^{(\varepsilon )}\subset \mathbb {R}^d_{+}\), \(F^{(\varepsilon )}\) \((1+\varepsilon )\)-stick-covers F if and only if:

The first condition is easily satisfiable by \(F^{(\varepsilon )}\subseteq F\). The second condition states that \(F^{(\varepsilon )}\) sticks to F. Given F, one can implement Definition 11 as in Fig. 5: Take one element of \(\mathcal {F}\) per cell of the logarithmic grid, and then take \(\text{ WST }\) of this set of elements. Now we can state that with an approximate Phase 1, the precision transfers to Phase 2 in polynomial time, as follows.

Lemma 1

Given \(\varepsilon _1,\varepsilon _2>0\) and approximations E of \(\mathcal {E}\) and F of \(\mathcal {F}\), if

holds, then it follows that \(R[E,F]\subseteq R[\mathcal {E},\mathcal {F}]\) and:

Equations (6) and (7) state approximation bounds as in Definitions 10 and 11. Equations (6) state that \((1+\varepsilon _1)^{-1}\mathcal {E}\) bounds below E which bounds below \(\mathcal {E}\). Equations (7) state that \(\mathcal {F}\) bounds below F which bounds below \((1+\varepsilon _2)\mathcal {F}\). Crucially, whatever the sizes of \(\mathcal {E}\) and \(\mathcal {F}\), there exist such approximations E and F with respective sizes \(O((1/\varepsilon _1)^{d-1})\) and \(O((1/\varepsilon _2)^{d-1})\) [19], yielding the approximation scheme below.

Theorem 6

(Approximation Scheme for MO-CR). Given a compact MOG of representation length L, precisions \(\varepsilon _1,\varepsilon _2>0\) and two algorithms to compute approximations E of \(\mathcal {E}\) and F of \(\mathcal {F}\) in the sense of Eqs. (6) and (7) that take time \(\theta _{\mathcal {E}}(\varepsilon _1,L)\) and \(\theta _{\mathcal {F}}(\varepsilon _2,L)\), one can approximate \(R[\mathcal {E},\mathcal {F}]\) in the sense of Eq. (8) in time \(O\left( \theta _{\mathcal {E}}(\varepsilon _1,L) \quad +\quad \theta _{\mathcal {F}}(\varepsilon _2,L) \quad +\quad {(\varepsilon _1 \varepsilon _2)^{-(d-1)(2d-1)}}\right) \).

For MO graphical games, Phase 1 could be instantiated with approximate junction-tree algorithms on MO graphical models [8]. For MO symmetric action-graph games, in the same fashion, one could generalize existing algorithms [12]. More generally, for the worst equilibria \(\text{ WST }[\mathcal {E}]\) and the efficient outcomes \(\mathcal {F}\), one could also use meta-heuristics with experimental guarantees.

7 Conclusion: Discussion and Prospects

Along with equilibrium existence, potential functions also usually guarantee the convergence of best-response dynamics. This easily generalizes to dynamics where every deviation step is an individual Pareto-improvement. However, when studying a dynamics based on a refinement of the Pareto-dominance, convergence is not always guaranteed.

Pareto-Nash equilibria, which encompass the possible outcomes of MO games, very likely exist. The precision of PN-equilibria inevitably relies on the uncertainty on preferences. A promising research path would be to linearly constrain the utility functions of agents. This would induce a polytope and would boil down to another MO game where every objective corresponds to an extreme point of the induced polytope. The efficiency of several multi-objective games could be analyzed by using the contributions in this paper.

Notes

- 1.

Tobacco consumers are free to value and choose cigarettes how it pleases them. However, is value the same when they inhale, as when they die suffocating?

- 2.

It is a backtrack from the subjective theory of value, which typically aggregates values on each objective/commodity into a single scalar by using an utility function.

- 3.

All the proofs are in the long paper on arxiv.

- 4.

In the single-objective case, Pareto-Nash and Nash equilibria coincide.

- 5.

In a finite multi-objective game, sets N, \(\{A^{i}\}_{i\in N}\) and \({\mathcal {D}}\) are finite.

- 6.

To enumerate the number of ways to distribute number n of symmetric agents into \(\alpha \) parts, one enumerates the ways to choose \(\alpha -1\) “separators” in \(n+\alpha -1\) elements.

References

Blackwell, D., et al.: An analog of the minimax theorem for vector payoffs. Pac. J. Math. 6(1), 1–8 (1956)

Borm, P., Tijs, S., van den Aarssen, J.: Pareto equilibria in multiobjective games. Methods Oper. Res. 60, 303–312 (1988)

Christodoulou, G., Koutsoupias, E.: The price of anarchy of finite congestion games. In: Proceedings of the Thirty-seventh Annual ACM Symposium on Theory of Computing, pp. 67–73. ACM (2005)

Conover, C.: Is Smoking Irrational? Frobes/Healthcare, Fiscal, And Tax (2014)

Corley, H.: Games with vector payoffs. J. Optim. Theory an Appl. 47(4), 491–498 (1985)

Daskalakis, C., Papadimitriou, C.: Computing pure Nash equilibria in graphical games via Markov random fields. In: ACM-EC, pp. 91–99 (2006)

Dresher, M.: Probability of a pure equilibrium point in n-person games. J. Combin. Theory 8(1), 134–145 (1970)

Dubus, J.P., Gonzales, C., Perny, P.: Multiobjective optimization using GAI models. In: IJCAI, pp. 1902–1907 (2009)

Goldberg, K., Goldman, A., Newman, M.: The probability of an equilibrium point. J. Res. Nat. Bureau Stand. B. Math. Sci. 72(2), 93–101 (1968)

Gonzales, C., Perny, P., Dubus, J.P.: Decision making with multiple objectives using GAI networks. Artif. Intell. 175(7), 1153–1179 (2011)

Gottlob, G., Greco, G., Scarcello, F.: Pure Nash equilibria: hard and easy games. J. Artif. Intell. Res. 24, 357–406 (2005)

Jiang, A.X., Leyton-Brown, K.: Computing pure Nash equilibria in symmetric action graph games. In: AAAI, vol. 1, pp. 79-85 (2007)

Kearns, M., Littman, M.L., Singh, S.: Graphical models for game theory. In: Proceedings of the Seventeenth Conference on Uncertainty in Artificial Intelligence, pp. 253–260. Morgan Kaufmann Publishers Inc. (2001)

Koutsoupias, E., Papadimitriou, C.: Worst-case equilibria. In: Meinel, C., Tison, S. (eds.) STACS 1999. LNCS, vol. 1563, pp. 404–413. Springer, Heidelberg (1999). https://doi.org/10.1007/3-540-49116-3_38

Monderer, D., Shapley, L.S.: Potential games. Games Econ. Behav. 14(1), 124–143 (1996)

Morgan, J.: Approximations and well-posedness in multicriteria games. Ann. Oper. Res. 137(1), 257–268 (2005)

Nash, J.: Equilibrium points in n-person games. Proc. Nat. Acad. Sci. 36(1), 48–49 (1950)

Papadimitriou, C.: The complexity of finding Nash equilibria. Algorithmic Game Theory 2, 30 (2007)

Papadimitriou, C.H., Yannakakis, M.: On the approximability of trade-offs and optimal access of web sources. In: Proceedings of the 41st Annual Symposium on Foundations of Computer Science, pp. 86–92. IEEE (2000)

Patrone, F., Pusillo, L., Tijs, S.: Multicriteria games and potentials. Top 15(1), 138–145 (2007)

Rinott, Y., Scarsini, M.: On the number of pure strategy Nash equilibria in random games. Games Econ. Behav. 33(2), 274–293 (2000)

Rosenthal, R.W.: A class of games possessing pure-strategy Nash equilibria. Int. J. Game Theory 2(1), 65–67 (1973)

Roughgarden, T.: Intrinsic robustness of the price of anarchy. In: Proceedings of the Forty-First Annual ACM Symposium on Theory of Computing, pp. 513–522. ACM (2009)

Shapley, L.S.: Equilibrium points in games with vector payoffs. Naval Res. Logist. Q. 6(1), 57–61 (1959)

Simon, H.A.: A behavioral model of rational choice. Q. J. Econ. 69, 99–118 (1955)

Smith, A.: An Inquiry into the Nature and Causes of the Wealth of Nations. Edwin Cannan’s annotated edition (1776)

Von Neumann, J., Morgenstern, O.: Theory of Games and Economic Behavior. Princeton University Press, Princeton (1944)

Voorneveld, M.: Potential games and interactive decisions with multiple criteria. Center for Economic Research, Tilburg University (1999)

Wang, S.: Existence of a Pareto equilibrium. J. Optim. Theory Appl. 79(2), 373–384 (1993)

Wierzbicki, A.P.: Multiple criteria games - theory and applications. J. Syst. Eng. Electr. 6(2), 65–81 (1995)

World-Health-Organization: WHO report on the global tobacco epidemic (2011)

Zeleny, M.: Games with multiple payoffs. Int. J. Game Theory 4(4), 179–191 (1975)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Ismaili, A. (2018). On Existence, Mixtures, Computation and Efficiency in Multi-objective Games. In: Miller, T., Oren, N., Sakurai, Y., Noda, I., Savarimuthu, B.T.R., Cao Son, T. (eds) PRIMA 2018: Principles and Practice of Multi-Agent Systems. PRIMA 2018. Lecture Notes in Computer Science(), vol 11224. Springer, Cham. https://doi.org/10.1007/978-3-030-03098-8_13

Download citation

DOI: https://doi.org/10.1007/978-3-030-03098-8_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03097-1

Online ISBN: 978-3-030-03098-8

eBook Packages: Computer ScienceComputer Science (R0)