Abstract

We explore order reduction techniques to solve the algebraic Riccati equation (ARE), and investigate the numerical solution of the linear-quadratic regulator problem (LQR). A classical approach is to build a low dimensional surrogate model of the dynamical system, for instance by means of balanced truncation, and then solve the corresponding ARE. Alternatively, iterative methods can be used to directly solve the ARE and use its approximate solution to estimate quantities associated with the LQR. We propose a class of Petrov-Galerkin strategies based on Krylov subspaces that simultaneously reduce the dynamical system while approximately solving the ARE by projection. This methodology significantly generalizes a recently developed Galerkin method, based on Krylov subspaces, by using a pair of projection spaces, as it is often done in model order reduction (MOR) of dynamical systems. Numerical experiments illustrate the advantages of the new class of methods over classical approaches when dealing with large matrices.

Part of this work was supported by the Indam-GNCS 2017 Project “Metodi numerici avanzati per equazioni e funzioni di matrici con struttura”. The author “Valeria Simoncini” is a member of the Italian INdAM Research group GNCS.

Access provided by CONRICYT-eBooks. Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

Optimal control problems for partial differential equations (PDEs) are an extremely important topic for many industrial applications in different fields, from aerospace engineering to economics. The problem has been investigated with different strategies as open-loop (see e.g. [23]) or closed-loop (see e.g. [18, 19]).

In this work we are interested in feedback control for linear dynamical systems and quadratic cost functionals which is known as the Linear Quadratic Regulator (LQR) problem. Although most models are nonlinear, LQR is still a very interesting and powerful tool, for instance in the stabilization of nonlinear models under perturbations, where a control in feedback form can be employed.

The computation of the optimal policy in LQR problems requires the solution of an algebraic Riccati equation (ARE), a quadratic matrix equation with the dimension of the dynamical system. This is a major bottleneck in the numerical treatment of the optimal control problem, especially for high dimensional systems such as those stemming from the discretization of a PDE.

Several powerful solution methods for the ARE have been developed throughout the years for small dynamical systems, based on spectral decompositions. The large scale case is far more challenging, as the whole spectral space of the relevant matrices cannot be determined because of memory and computational resource limitations. For these reasons, this algebraic problem is a very active research topic, and major contributions have been given in the past decade. Different approaches have been explored: variants of the Newton method have been largely employed in the past [11, 27], while only more recently reduction type methods based on Krylov subspaces have emerged as a feasible effective alternative; see, e.g., [12, 24, 38] and references therein. The recent work [36] shows that a Galerkin class of reduction methods onto Krylov subspaces for solving the ARE can be naturally set into the MOR framework for the original dynamical system. This fact is particularly striking because most literature has so far treated the solution of the Riccati equation as a distinct problem from the reduction process, whereas it is now clear that the MOR perspective provides a natural setting also for the solution of the Riccati equation. In the context of linear-quadratic optimal regulator problems, H 2 and H ∞ controller design and balancing-related model reduction often an approximation to the Riccati solution matrix is assumed to be available, from which other key quantities are determined; see the discussion in [36]. By exploiting the same space for the reduction of the model and the projection of the Riccati equation, it is possible to determine an approximate control function satisfying certain optimality property [36]. Here we deepen the MOR connection by using the Petrov-Galerkin Krylov subspaces commonly used in MOR to directly approximate the Riccati solution.

As already mentioned, the LQR problem is more complicated when dealing with PDEs because its discretization leads to a very large system of ODEs and, as a consequence, the numerical solution of the ARE is computationally more demanding. To significantly lower these computational costs and memory requirements, model order reduction techniques can be employed. Here, we distinguish between two different concepts of reduction approaches.

A first methodology projects the dynamical system into a low dimensional system whose dimensions are much smaller than the original one; see, e.g., [14]. Therefore, the corresponding reduced ARE is practical and feasible to compute on a standard computer. The overall methodology thus performs a first-reduce-then-solve strategy. This approach has been investigated with different model reduction techniques like Balanced Truncation (BT) in e.g.[4], Proper Orthogonal Decomposition (POD, [39, 40]) in e.g. [5, 28] and via the interpolation of the rational functions, see e.g. [7, 15, 20]. A different approach has been proposed in [35] where the basis functions are computed from the solution of the high dimensional Riccati equation in a many query context. For the sake of completeness, we would like to mention that model order reduction has been applied to the Linear Quadratic Gaussian (LQG) problem which may involve the solution of two Riccati equations (see e.g. [9, 10, 16]).

We note that basis generation in the context of model order reduction for optimal control problems is an active research topic (see e.g. [2, 3, 28, 30]). Furthermore, the computation of the basis functions is made by a Singular Value Decomposition (SVD) of the high dimensional data which can be very expensive. One way to overcome this issue was proposed in [1] by means of randomized SVD which is a fast and accurate alternative to the SVD, and it is based on random samplings.

A second methodology follows a reduce-while-solve strategy. In this context, recent developments aim at reducing the original problem by subspace projection, and determining an approximate solution in a low dimensional approximation space. Proposed strategies either explicitly reduce the quadratic equation (see, e.g., [38] and references therein), or approximately solve the associated invariant subspace problem (see, e.g., [8] and its references). As already mentioned, these recently developed methods have shown to be effective alternatives to classical variants of the Newton method, which require the solution of a linear matrix equation at each nonlinear iteration; see, e.g., [13] for a general description.

The aim of this paper is to discuss and compare the aforementioned MOR methodologies for LQR problems. In particular, we compare the two approaches of reducing the dynamical system first versus building surrogate approximation of the ARE directly, using either Galerkin or Petrov-Galerkin projections. The idea of using a Petrov-Galerkin method for the ARE appears to be new, and naturally expands the use of two-bases type as typically employed for transfer function approximation.

To set the paper into perspective we start recalling LQR problem and its order reduction in Sect. 2. In Sect, 3 we describe reduction strategies of dynamical systems used in the small size case, such as proper orthogonal decomposition and balanced truncation. Section 4 discusses the new class of projection strategies that attack the Riccati equation, while delivering a reduced model for the dynamical system. Finally, numerical experiments are shown in Sect. 5 and conclusions are derived in Sect. 6.

2 The Linear-Quadratic Regulator Problem and Model Order Reduction

In this section we recall the mathematical formulation of the LQR problem. We refer the reader for instance to classical books such as e.g. [31] for a comprehensive description of what follows. We consider a linear time invariant system of ordinary differential equations of dimension n:

with \(A\in \mathbb R^{n\times n}, B \in \mathbb R^{n\times m}, C \in \mathbb R^{p\times n}\) and \(D \in \mathbb R^{p\times m}\). Usually, \(x(t):[0,\infty ]\rightarrow \mathbb R^n\) is called the state, \(u(t):[0,\infty ]\rightarrow \mathbb R^m\) the input or control and \(y(t): [0,\infty ]\rightarrow \mathbb R^p\) the output. Furthermore, we assume that A is passive. This may be viewed as a restrictive hypothesis, since the problems we consider only require that (A, B) are stabilizable and (A T, C T) controllable, however this is convenient to ensure that the methods we analyze are well defined. In what follows, without loss of generality, we will consider D ≡ 0. We also define the transfer function for later use:

Next, we define the quadratic cost functional for an infinite horizon problem:

where \(R\in \mathbb R^{m\times m}\) is a symmetric positive definite matrix. The optimal control problem reads:

The goal is to find a control policy in feedback form as:

with the feedback gain matrix \(K\in \mathbb R^{m\times n}\) and \(P\in \mathbb R^{n\times n}\) is the unique symmetric and positive (semi-)definite matrix that solves the following ARE:

which is a quadratic matrix equation for the unknown P.

We note that the numerical approximation of Eq. (6) can be very expensive for large n. Therefore, we aim at the reduction of the numerical complexity by projection methods.

Let us consider a general class of tall matrices \(V,W\in \mathbb R^{n\times r}\), whose columns span some approximation spaces. We chose these two matrices such that they are biorthogonal, that is W T V = I r. Let us now assume that the matrix P, solution of (6), can be approximated as

Then the residual matrix can be defined as

The small dimensional matrix P r can be determined by imposing the so-called Petrov-Galerkin condition, that is orthogonality of the residual with respect to range(V ), which in matrix terms can be stated as \(V^T \mathcal {R}(P_r)V = 0\). Substituting the residual matrix and exploiting the bi-orthogonality of V and W we obtain:

where

It can be readily seen that Eq. (7) is again a matrix Riccati equation, in the unknown matrix \(P_r\in \mathbb R^{r\times r}\), of much smaller dimension than P, provided that V and W generate small spaces. We refer to this equation as the reduced Riccati equation. The computation of P r allows us to formally obtain the approximate solution WP r W T to the original Riccati equation (6), although the actual product is never computed explicitly, as the approximation is kept in factorized form.

The optimal control for the reduced problem reads

with the reduced feedback gain matrix given by \(K_r = KV \in \mathbb R^{m\times r}\). Note that this u r(t) is different from the one obtained by first approximately solving the Riccati equation and with the obtained matrix defining an approximation to u(t); see [36] for a detailed discussion.

The Galerkin approach is obtained by choosing V = W with orthonormal columns when imposing the condition on the residual.

To reduce the dimension of the dynamical system (1), we assume to approximate the full state vector as x(t) ≈ V x r(t) with a basis matrix \(V \in \mathbb R^{n\times r}\), where \(x_r(t):[0,\infty )\rightarrow \mathbb R^r\) are the reduced coordinates. Plugging this ansatz into the dynamical system (1), and requiring a so called Petrov-Galerkin condition yields

The reduced transfer function is then given by:

The presented procedure is a generic framework for model reduction. It is clear that the quality of the approximation depends on the approximation properties of the reduced spaces. In the following sections, we will distinguish between the methods that directly compute V, W upon the dynamical systems (see Sect. 3) and those that readily reduce the ARE (see Sect. 4). In particular, for each method we will discuss both Galerkin and Petrov-Galerkin projections, to provide a complete overview of the methodology. The considered general Petrov-Galerkin approach for the ARE appears to be new.

3 Reduction of the Dynamical System

In this section we recall two well-known techniques as POD and BT to compute the projectors W, V starting from the dynamical systems.

3.1 Proper Orthogonal Decomposition

A common approach is based on the snapshot form of POD proposed in [39], which works as follows. We compute a set of snapshots x(t 1), …, x(t k) of the dynamical system (1) corresponding to a prescribed input \(\bar u(t)\) and different time instances t 1, …, t k and define the POD ansatz of order r for the state x(t) by

where the basis vectors \(\{\psi _i\}_{i=1}^r\) are obtained from the SVD of the snapshot matrix X = [x(t 1), …, x(t k)], i.e. X = ΨΣΓ T, and the first r columns of Ψ = (Ψ 1, …, Ψ n) form the POD basis functions of rank r. Hence we choose the basis vectors V = W = (Ψ 1, …, Ψ r) for the reduction in (8). Then, the evolution dynamics can be projected using a Galerkin method as in [29].

This technique strongly relies on the choice of a given input u, whose optimal selection is usually unknown. In this work, we decide to collect snapshots following the approach suggested in [28] as considers a linearization of the ARE (which corresponds to a Lyapunov equation). Therefore, the snapshots are computed by the following equation:

where c i is the i-th column of the matrix C. The advantage of this approach is that Eq. (11) is able to capture the dynamics of the adjoint equation which is directly related to the optimality conditions, and we do not have to choose a reference input \(\bar u(t).\) In order to obtain the POD basis, one has to simulate the high dimensional system and subsequently perform a SVD. As a consequence, the computational cost may become prohibitive for large scale problems. Algorithm 1 summarizes the method.

Algorithm 1 POD method to compute the reduced Riccati

Require: A, C, r

1: for i = 1, …, p do

2: Simulate system (11) with initial condition c i.

3: Build the snapshots matrix X = [X, x i(t 1), …, x i(t k)]

4: end for

5: Compute the reduced SVD of X = V ΣW T

6: Solve the reduced Riccati equation (7) for P r.

3.2 Balanced Truncation

The BT method is a well-established model order reduction technique for linear time invariant systems (1). We refer to [4] for a complete description of the topic. It is based on the solution of the reachability Gramian R and the observability Gramian O which solve, respectively, the following Lyapunov equations

We determine the Cholesky factorization of the Gramians

Then, we compute the reduced SVD of the Hankel operator Υ T Φ and set

where \(U, V\in \mathbb R^{n\times r}\) are the first r columns of the left and right singular vectors of the Hankel operator and Σ = diag(σ 1, …, σ r) matrix of the first r singular values.

The idea of BT is to neglect states that are both, hard to reach and hard to observe. This is done by eliminating states that correspond to low Hankel singular values σ i. This method is very popular in the small case regime (e.g. n ≈ O(103)) also because the whole procedure can be verified by a-priori error bounds in several system norms, and the Lyapunov equations can be solved very efficiently. In the large scale these equations need to be solved approximately; see, e.g., [12, 17].

In summary, the procedure first solves the two Lyapunov equations at a given accuracy for large matrices and then determines biorthogonal bases for the reduction spaces by using a combined spectral decomposition of the obtained solution matrices. For consistency with the other projection strategies, in the large scale setting we solve Eq. (12) using adaptive rational Krylov subspaces as in [17]. We refer to [37] for alternatives. The algorithm is summarized in Algorithm 2.

Algorithm 2 BT method to compute the reduced Riccati

Require: A, B, C and the dimension of the reduced problem r

1: Compute R, O from (12) and their Cholesky factorization (13)

2: Compute the reduced SVD of the Hankel operator

3: Set W = ΥUΣ 1∕2, V = ΥV Σ 1∕2,

4: Solve the reduced Riccati equation (7) for P r.

4 Adaptive Reduction of the Algebraic Riccati Equation

In the previous section the reduced problem was obtained by a sequential procedure: first system reduction of a fixed order r and then solution of the reduced Riccati equation (7). A rather different strategy consists of determining the reduction bases while solving the Riccati equation. In this way, we combine both the reduction of the original system and of the ARE. While the reduction bases V and W are being generated by means of some iterative strategy, it is immediately possible to obtain a reduced Riccati equation by projecting the problem onto the current approximation spaces. The quality of the two spaces can be monitored by checking how well the Riccati equation is solved by means of its residual; if the approximation is not satisfactory, the spaces can be expanded and the approximation improved.

The actual space dimensions are not chosen a-priori, but tailored with the accuracy of the approximate Riccati solution. Mimicking what is currently available in the linear equation literature, the reduced problem can be obtained by imposing some constraints that uniquely identify an approximation. The idea is very natural and it was indeed presented in [24], where a standard Krylov basis was used as approximation space. However, only more recently, with the use of rational Krylov bases, a Galerkin approach has shown its real potential as a solver for the Riccati equation; see, e.g., [22, 38]. A more general Petrov-Galerkin approach was missing. We aim to fill this gap. In the following we give more details on these procedures.

Given an approximate solution \(\tilde P\) of (6) written as \(\tilde P = W Y W^T\) for some Y to be determined, the former consists to require that the residual matrix is orthogonal to this same space, range(W), so that in practice V = W. The Petrov-Galerkin procedure imposes orthogonality with respect to the space range(V ), where V is different from W, but with the same number of columns.

In [24] a first implementation of a Galerkin procedure was introduced, and the orthonormal columns of V spanning the (block) Krylov subspace \(\mathcal {K}_r(A^T, C^T)= \mathrm {range}([ C^T, A^T C^T, \ldots , (A^T)^{r-1} C^T])\); see also [26] for a more detailed treatment and for numerical experiments. Clearly, this definition generates a sequence of nested approximation spaces, that is \(\mathcal {K}_r(A^T, C^T)\subseteq \mathcal {K}_{r+1}(A^T, C^T)\), whose dimension can be increased iteratively until a desired accuracy is achieved. More recently, in [22] and [38], rational Krylov subspaces have been used, again in the Galerkin framework. In particular, the special case of the extended Krylov subspace \(\mathcal {K}_r(A^T, C^T) + \mathcal {K}_r((A^T)^{-1}, (A^T)^{-1} C^T)\) was discussed in [22], while the fully rational space

was used in [38]. The rational shift parameters σ = {σ 2, …, σ r} can be computed on the fly at low cost, by adapting the selection to the current approximation quality [17]. Note that dim(\(\mathcal {K}_r(A^T, C^T, {\boldsymbol \sigma }) ) \le r p\), where p is the number of columns of C T. In [38] it was also shown that a fully rational space can be more beneficial than the extended Krylov subspace for the Riccati equation. In the following section we are going to recall the general procedure associated with the Galerkin approach, and introduce the algorithm for the Petrov-Galerkin method, which to the best of the authors’ knowledge is new. In both cases we use the fully rational Krylov subspace with adaptive choice of the shifts.

It is important to realize that \(\mathcal {K}_r(A^T, C^T, {\boldsymbol \sigma })\) does not depend on the coefficient matrix BB T of the second-order term in the Riccati equation. Nonetheless, experimental evidence shows good performance. This issue was analyzed in [33, 36] where however the use of the matrix B during the computation of the parameters was found to be particularly effective; a justification of this behavior was given in [36]. In the following we thus employ this last variant when using rational Krylov subspaces. More details will be given in the next section.

4.1 Galerkin and Petrov-Galerkin Riccati

In the Galerkin case, we will generate a matrix W whose columns span the rational Krylov subspace \(\mathcal {K}_r(A^T, C^T, {\boldsymbol \sigma })\) in an iterative way, that is one block of columns at the time. This can be obtained by an Arnoldi-type procedure; see, e.g., [4]. The algorithm, hereafter GARK for Galerkin Adaptive Rational Krylov, works as follows:

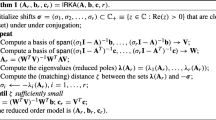

Algorithm 3 GARK method to compute the reduced Riccati equation

Require: A, C, σ

1: for r = 1, 2, … do

2: Expand the space \(\mathcal {K}_r(A^T,C^T, {\boldsymbol \sigma })\);

3: Update the reduced matrices A r, B r and C r with the newly generated vectors;

4: Solve the reduced Riccati equation for P r;

5: Check the norm of the residual matrix \(\mathcal {R}(P_r)\)

6: If satisfied stop with P r and the basis W of \(\mathcal {K}_r(A^T, C^T, {\boldsymbol \sigma })\).

7: end for

The residual norm can be computed cheaply without the actual computation of the residual matrix; see, e.g., [38]. The parameters σ j can be computed adaptively as the space grows; we refer the reader to [17] and [36] for more details.

In the general Petrov-Galerkin case, the matrix W is generated the same way, while we propose to compute the columns of V as the basis for the rational Krylov subspace \(\mathcal {K}_r(A,B, {\boldsymbol \sigma })\); note that the starting block is now B, and the coefficient matrix is the transpose of the previous one. The two spaces are now constructed and expanded at the same time, so that the two bases can be enforced to be biorthogonal while they grow. For completeness, we report the algorithm in the Petrov-Galerkin setting in Algorithm 4 (hereafter PGARK for Petrov-Galerkin Adaptive Rational Krylov).

Algorithm 4 PGARK method to compute the reduced Riccati equation

Require: A, B, C, σ

1: for r = 1, 2, … do

2: Expand the spaces \(\mathcal {K}_r(A^T, C^T, {\boldsymbol \sigma })\), \(\mathcal {K}_r(A, B, {\boldsymbol \sigma })\);

3: Update the reduced matrices A r, B r and C r with the newly generated vectors;

4: Solve the reduced Riccati equation for P r;

5: Check the norm of the residual matrix \(\mathcal {R}(P_r)\)

6: If satisfied stop with P r and the basis W of \(\mathcal {K}_r(A^T, C^T, {\boldsymbol \sigma })\).

7: end for

The parameters σ j are computed for one space and used also for the other space. In this more general case, the formula for the residual matrix norm is not as cheap as for the Galerkin approach. We suggest the following procedure. We first recall that for rational Krylov subspace \(\mathcal {K}_r(A, B, {\boldsymbol \sigma })\) the following relation holds (we assume here full dimension of the generated space after r iterations):

for certain vector \(\hat w\) orthogonal to W, which changes as the iterations proceeds, that is as the number of columns W grows; we refer the reader to [32] for a derivation of this relation, which highlights that the distance of range(W) from an invariant subspace of A is measured in terms of a rank-one matrix. We write

where we also used the fact that C T = WE for some matrix E. If \([W, \hat w]\) had orthonormal columns, as is the case for Galerkin, then \(\|{\mathcal R}(P_r)\|{ }^2 = 2 \|P_r a_r\|{ }^2\), which can be cheaply computed.

To overcome the nonorthogonality of \([W, \hat w]\), we suggest to perform a reduced QR factorization of \([W, \hat w]\) that maintains its columns orthogonal. This QR factorization does not have to be redone from scratch at each iteration, but it can be updated as the matrix W grows. If \([W, \hat w] = Q_W R_W\) with \(R_W\in \mathbb R^{(r+1)m\times (r+1)m}\) upper triangular, then

The use of coupled (bi-orthogonal) bases has the recognized advantage of explicitly using both matrices C and B in the construction of the reduced spaces. This coupled basis approach has been largely exploited in the approximation of the dynamical system transfer function by solving a multipoint interpolation problem; see, e.g., [4] for a general treatment and [6] for a recent implementation. In addition, coupled bases can be used to simultaneously approximate both system Gramians leading to a large-scale BT strategy; see, e.g., [25] for early contributions using bi-orthogonal standard Krylov subspaces.Footnote 1 On the other hand, a Petrov-Galerkin procedure has several drawbacks associated with the construction of the two bases. More precisely, the two bi-orthogonal bases are generated by means of a Lanczos-type recurrence, which is known to have both stability and breakdown problems in other contexts such as linear system and eigenvalue solving. At any iteration it may happen that the new basis vectors w j and v j are actually orthogonal or quasi-orthogonal to each other, giving rise to a possibly incurable breakdown [21]. We have occasionally experienced this problem in our numerical tests, and it certainly occurs whenever CB = 0. In our specific context, an additional difficulty arises. The projected matrix A r = W T A T V is associated with a bilinear rather than a linear form, so that its field of values may be unrelated to that of A T. As a consequence, it is not clear the type of hypotheses we need to impose on the data to ensure that the reduced Riccati equation (7) admits a unique stabilizable solution. Even in the case of A symmetric, the two bases will be different as long as C≠B T. All these questions are crucial for the robustness of the procedure and deserve a more throughout analysis which will be the topic of future research.

From an energy-saving standpoint, it is worth remarking that the Petrov-Galerkin approach uses twice as many memory allocations than the Galerkin approach, while performing about twice the number of floating point operations. In particular, constructing the two bases requires two system solves, with A T − s j I and with \(A-\bar s_jI\) respectively, at each iteration. Therefore, unless convergence is considerably faster, the Petrov-Galerkin approach may not be superior to the Galerkin method in the solution of the ARE.

5 Numerical Experiments

In this section we present and discuss our numerical tests. We first compare the methods we have introduced in the previous sections on two test cases. Then we linger over the large scale implementation of BT we have adopted to make comparisons with projection based strategies, to highlight the difficulties that may arise when the approximation is performed in two separate steps.

We consider the discretization of the following linear PDE

where \(\varOmega \subset \mathbb R^2\) is an open interval, \(w:\varOmega \times [0, \infty ]\rightarrow \mathbb R^2\) denotes the state, and the parameters ε, γ and c are real positive constants. The initial value is w 0 and the function \({\mathbf {1}}_{\varOmega _B}\) is the indicator function over the domain \(\varOmega _B\subset \mathbb R^2\). Note that we deal with zero Dirichlet boundary conditions. The problem in (14) includes the heat equation, for ε ≠ 0, γ = 0, c = 0, reaction-diffusion equations for ε ≠ 0, γ = 0, c ≠ 0 and a class of convection-diffusion equations for ε ≠ 0, γ ≠ 0 and c = 0. Furthermore, we define an output of interest by:

where \(\varOmega _C\subset \mathbb R^2.\) Space discretization of Eq. (14) by standard centered finite differences together with a rectangular quadrature rule for (15) lead to a system of the form (1). In general, the dimension n of the dynamical system (1) is rather large (i.e., n ≫ 1000) and the numerical treatment of the corresponding ARE is computationally expensive or even unfeasible. Therefore, model order reduction is appropriate to lower the dimension of the optimal control problem (4). We will report experiments with small size problems, where all discussed methods can be employed, and with large size problems, where only the Krylov subspace based strategies are applied.

The numerical simulations reported in this paper were performed on a Mac-Book Pro with 1 CPU Intel Core i5 2.3 GHz and 8GB RAM and the codes are written in MatlabR2013a. In all our experiments, small dimensional Lyapunov and Riccati equations are solved by means of built-in functions of the Matlab Control Toolbox.

Whenever appropriate, the quality of the current approximation of the ARE is monitored by using the relative residual norm:

and the dimension of the surrogate model r is chosen such that R P < 10−6. After the ARE solution is approximated the ultimate goal is to compute the feedback control (5). Therefore, we also report the error in the computation of feedback gain matrix K as the iterations proceed:

and we measure the quality of our surrogate model also by the \(\mathcal {H}_2\)-error

where

In particular, the approximation of the transfer function is one of the main targets of MOR, where the reduced system is used for analysis purposes, while the approximation of the feedback gain matrix is monitored to obtain a good control function.

5.1 Test 1: 2D Linear Heat Equation

In the first example we consider the linear reaction-diffusion equation. In (14) we chose γ = 0, ε = 1, c = 400, Ω = [0, 1] × [0, 1], and Ω B = [0.4, 0.6] × [0.4, 0.6]. In (1), the matrix A is obtained by centered five points finite difference discretization. We consider a small problem stemming from a spatial discretization step Δx = 0.05, leading to a system of dimension n = 441. The matrix C in (1) is given by the indicator function over the domain Ω C = [0.3, 0.7] × [0.3, 0.7] and R ≡ I m in (3).

The left panel of Fig. 1 shows the residual norm history of the reduced ARE (7) when the two projection matrices V, W are computed by each of the four algorithms explained in the previous sections. We can thus appreciate how the approximation proceeds as the reduced space is enlarged. We note that POD requires more basis functions to achieve the desired tolerance for R P than the other approaches, while the BT algorithm is the fastest. The POD method is a snapshot dependent method and thus it is crucially influenced by the choice of the initial input u(t) and the results may be different for other choices of the snapshots set. All the other proposed techniques are, on the contrary, input/output independent. We note that under this setting the PGARK is not stable for the first reduced coordinates; see some additional comments on the issue in Test 2.

In the middle panel of Fig. 1, we show how well the feedback gain matrix K can be approximated with reduction methods. It is interesting to see that the basis functions computed by GARK and PGARK are able to approximate the matrix K very well. Furthermore, we note that BT does not decrease monotonically.

Finally, we would like to show the quality of the computed basis functions in the approximation of the dynamical system in the right panel of Fig. 1. In this example, BT approximates the transfer function very well with a lower number of basis functions.

Last remark goes to the iterative methods GARK and PGARK . We showed that, although the basis functions are built upon information of the ARE, they are also able to approximate the dynamical systems and the feedback gain matrix. This is clearly not unexpected, as the generated approximation spaces are tightly related to classical model order reduction strategies with (rational) Krylov subspaces [4]. Nonetheless, as opposed to MOR methods, the quality of the generated spaces all leans on solving the Riccati equation, which also provides important quantities for the dynamical systems. This is a crucial point that motivates us to further investigate these methods in the context of the LQR problem. Projection methods are specifically designed to handle large dimension n. Together with the large scale version of BT, in Fig. 2 we report the CPU time of the iterative methods, for Δx ∈{0.1, 0.05, 0.025, 0.0125, 0, 00625, 0.003125}. We note that the methods reach the desired accuracy in a few seconds even for n = O(105). On the contrary, POD would be way more expensive since its cost heavily depends on the original dimension of the problem n, e.g., via the computation of the snapshots.

5.2 Test 2: 2D Linear Convection-Diffusion Equation

We consider the linear convection-diffusion equation in (14) with γ = 50, ε = 1, c = 0, Ω = [0, 2] × [0, 2] and Ω B = [0.2, 0.8] × [0.2, 0.8]. In (1), the matrix A is given by centered five points finite difference discretization plus an upwind approximation of the convection term (see e.g. [34]). The spatial discretization step is Δx = 0.1 and leads to a system of dimension n = 441. The matrix C in (1) is given by the indicator function over the domain Ω C = [0.1, 0.9] × [0.1, 0.9], and R ≡ I m in (3).

The left panel of Fig. 3 shows the residual norm history associated with the reduced ARE (7), with different projection techniques. We note that the methods converge with a different number of basis functions. We also note that in this example we can observe instability of the PGARK method as discussed in Sect. 4.1. As it is typically done in the algebraic linear system setting, in case of incidental quasi-orthogonality of the basis vectors in PGARK, one could “look a-ahead” and generate subsequent vectors, by accordingly modifying the implementation; see, e.g., [21] and references therein. We have not pursued this here, but it should be implemented if a robust piece of software is desired.

Middle panel of Fig. 3 reports the error in the approximation of the feedback gain matrix K. It is very interesting to observe that even on this convection dominated problem all the methods can reach an accuracy of order 10−6.

Finally, we show the error in the approximation of the transfer function in the right panel of Fig. 3. In this example, we can see that the PGARK method performs better than the others but it is rather unstable; this well known instability problem will be analyzed in future work. The discussion upon the quality of the basis function we had in Test 1 still hold true. The iterative methods are definitely a feasible alternative to well-known techniques as BT and POD.

In the right panel of Fig. 2, we show the CPU time of BT, GARK and PGARK for different dimensions n of the dynamical system. We note that, again, the Galerkin projection reaches the desired accuracy faster than the two Petrov-Galerkin methods (PGARK and BT). This is an interesting result, since it seems to show that generating two spaces is not strictly necessary to achieve good accuracy at high performance.

5.3 Test 3: A Discussion on Large Scale Balanced Truncation

In this experiment we report on some of the shortcomings we have experienced with the version of balanced truncation that we have implemented for handling the large scale setting; here the Lyapunov equations were solved using projection onto rational Krylov spaces. These problems mainly arise because of the two-step procedure: first the approximate solution of the two Lyapunov equations in (12), then the projection of the Riccati equation onto the spaces of the two obtained approximate Gramians. This is precisely what can be avoided in the projected Petrov-Galerkin approach. Indeed, while constructing the same spaces as those used by the Gramian solvers, GARK readily obtains an approximation to the sought after Riccati solution, without the intermediate approximation of the Gramians.

The first difficulty consists of choosing the stopping tolerance for iteratively solving the two Lyapunov equations, so that the two Gramians are good enough to produce an acceptable Riccati approximate solution. A tolerance simply smaller than that used in the stopping criterion for the Riccati equation is not sufficient. Rather, it should be a few orders of magnitude higher. In Table 1 we report what happens to the Riccati solution for the data in the two previous examples (here n = 103, 041), for different values of the Lyapunov solvers tolerance.

For the sake of the analysis, we also considered a matrix A stemming from the five point stencil finite difference discretization of the operator

in the unit square, and the same values of B and C as in Test 1. Results are reported in the rightmost group of columns in Table 1.

Clearly, the Lyapunov equation tolerance granting convergence to the Riccati equation is data dependent. For the data in Test 2, requiring a tolerance one order of magnitude lower than the final one is enough, whereas this is not so for Test 1. For the operator \(\mathcal {L}\) the situation is even more severe.

The second difficulty arises in case the iterative schemes for approximately computing the two Gramians converge to the requested accuracy in a quite different number of iterations. In this case, the Riccati equation can be projected onto a space whose dimension is at most the one obtained by the lowest rank basis. The projection procedure then stops, irrespective of the final accuracy of the obtained approximate Riccati solution. This phenomenon is reported in Fig. 4, for the data associated with (19) and the selections C = 1 and B = [−1, 1, −1, 1, …, 1] (alternating ones). The employed Lyapunov solver for B converges in 14 iterations, with a solution of rank 10, while the solver for C takes 28 iterations, and yields a solution of rank 27. The balanced truncation procedure terminates after 10 iterations, with an unsatisfactory relative residual norm above 10−3. The figure also reports the convergence of the projection methods, which are able to reach the desired accuracy with similar convergence history.

Convergence history for GARK, PGARK and BT for \(\mathcal {L}(w)\) in (19) and specific B and C

6 Conclusions

We have proposed a comparison of different model order reduction techniques for the ARE. We distinguished between two different strategies: (1) First reduction of the dynamical system complexity and then the solution of the corresponding reduced ARE whereas; (2) Simultaneous solution of the ARE and determination of the reduction spaces. The strength of the second strategy is its flexibility for very high dimensional problem, where problems in the class (1) may have memory and computational difficulties, or, in the case of large scale BT, parameter tuning issues. Experiments on both small and large dimensional problems confirm the promisingly good approximation properties of rational Krylov methods as compared to more standard approaches for the approximation of more challenging quantities in the LQR context.

Notes

- 1.

We are unaware of any available implementation of rational Krylov subspace based approaches for large scale BT either with single or coupled bases, that simultaneously performs the balanced truncation while approximating the Gramians.

References

Alla, A., Kutz, J.: Randomized model order reduction, submitted (2017). https://arxiv.org/pdf/1611.02316.pdf

Alla, A., Graessle, C., Hinze, M.: A posteriori snapshot location for POD in optimal control of linear parabolic equations. ESAIM Math Model. Numer Anal. (2018). https://doi.org/10.1051/m2an/2018009

Alla, A., Schmidt, A., Haasdonk, B.: Model order reduction approaches for infinite horizon optimal control problems via the HJB equation. In: Benner, P., Ohlberger, M., Patera, A., Rozza, G., Urban, K. (eds.) Model Reduction of Parametrized Systems, pp. 333–347. Springer International Publishing, Cham (2017)

Antoulas, A.C.: Approximation of Large-Scale Dynamical Systems, Advances in Design and Control. SIAM, Philadelphia (2005)

Atwell, J., King, B.: Proper orthogonal decomposition for reduced basis feedback controllers for parabolic equations. Math. Comput. Model. 33, 1–19 (2001)

Barkouki, H., Bentbib, A.H., Jbilou, K.: An adaptive rational method Lanczos-type algorithm for model reduction of large scale dynamical systems. J. Sci. Comput. 67, 221–236 (2015)

Beattie, C., Gugercin, S.: Model reduction by rational interpolation. In: Model Reduction and Approximation: Theory and Algorithms. SIAM, Philadelphia (2017)

Benner, P., Bujanović, Z.: On the solution of large-scale algebraic Riccati equations by using low-dimensional invariant subspaces. Linear Algebra Appl. 488, 430–459 (2016)

Benner, P., Heiland, J.: LQG-balanced truncation low-order controller for stabilization of laminar flows. In: King, R. (ed.) Active Flow and Combustion Control 2014, vol. 127 of Notes on Numerical Fluid Mechanics and Multidisciplinary Design, pp. 365–379. Springer International Publishing, Cham (2015)

Benner, P., Hein, S.: MPC/LQG for infinite-dimensional systems using time-invariant linearizations. In: Tröltzsch, F., Hömberg, D. (eds.) System Modeling and Optimization. IFIP AICT, vol. 291, pp. 217–224. Springer, Berlin (2013)

Benner, P., Saak, J.: A Galerkin-Newton-ADI method for solving large-scale algebraic Riccati equations. Tech. Rep. 1253, DF Priority program (2010)

Benner, P., Saak, J.: Numerical solution of large and sparse continuous time algebraic matrix Riccati and Lyapunov equations: a state of the art survey. GAMM-Mitteilungen 36, 32–52 (2013)

Benner, P., Li, J.-R., Penzl, T.: Numerical solution of large-scale Lyapunov equations, Riccati equations, and linear-quadratic optimal control problems. Numer. Linear Algebra Appl. 15, 1–23 (2008)

Benner, P., Cohen, A., Ohlberger, M., Willcox, K. (eds.): Model Reduction and Approximation: Theory and Algorithms. Computational Science & Engineering. SIAM, Philadelphia (2017)

Borggaard, J., Gugercin, S.: Model reduction for DAEs with an application to flow control. In: King, R. (ed.) Active Flow and Combustion Control 2014. Notes on Numerical Fluid Mechanics and Multidisciplinary Design, vol. 127, pp. 381–396. Springer, Cham (2015)

Braun, P., Hernández, E., Kalise, D.: Reduced-order LQG control of a Timoshenko beam model. Bull. Braz. Math. Soc. N. Ser. 47, 143–155 (2016)

Druskin, V., Simoncini, V.: Adaptive rational Krylov subspaces for large-scale dynamical systems. Syst. Control Lett. 60, 546–560 (2011)

Falcone, M., Ferretti, R.: Semi-Lagrangian Approximation Schemes for Linear and Hamilton-Jacobi Equations. SIAM, Philadelphia (2014)

Grüne, L., Pannek, J.: Nonlinear Model Predictive Control. Theory and Algorithms. Springer, Berlin (2011)

Gugercin, S., Antoulas, A.C., Beattie, C.: \(\mathcal {H}_2\) model reduction for large-scale linear dynamical systems. SIAM J. Matrix Anal. Appl. 30, 609–638 (2008)

Gutknecht, M.H.: A completed theory of the unsymmetric Lanczos process and related algorithms. I. SIAM J. Matrix Anal. Appl. 13, 594–639 (1992)

Heyouni, M., Jbilou, K.: An extended Block Krylov method for large-scale continuous-time algebraic Riccati equations. Electron. Trans. Numer. Anal. 33, 53–62 (2008–2009)

Hinze, M., Pinnau, R., Ulbrich, M., Ulbrich, S.: Optimization with PDE Constraints. Mathematical Modelling: Theory and Applications. Springer, New York (2009)

Jaimoukha, I.M., Kasenally, E.M.: Krylov subspace methods for solving large Lyapunov equations. SIAM J. Numer. Anal. 31, 227–251 (1994)

Jaimoukha, I.M., Kasenally, E.M.: Oblique projection methods for large scale model reduction. SIAM J. Matrix Anal. Appl. 16, 602–627 (1995)

Jbilou, K.: Block Krylov subspace methods for large algebraic Riccati equations. Numer. Algorithms 34, 339–353 (2003)

Kleinman, D.: On an iterative technique for Riccati equation computations. IEEE Trans. Autom. Control 13, 114–115 (1968)

Kramer, B., Singler, J.: A POD projection method for large-scale algebraic Riccati equations. Numer. Algebra Control Optim. 4, 413–435 (2016)

Kunisch, K., Volkwein, S.: Galerkin proper orthogonal decomposition methods for a general equation in fluid dynamics. SIAM J. Numer. Anal. 40, 492–515 (2006)

Kunisch, K., Volkwein, S.: Proper orthogonal decomposition for optimality systems. Math. Model. Numer. Anal. 42, 1–23 (2008)

Lancaster, P., Rodman, L.: Algebraic Riccati Equations. Oxford University Press, Oxford (1995)

Lin, Y., Simoncini, V.: Minimal residual methods for large scale Lyapunov equations. Appl. Numer. Math. 72, 52–71 (2013)

Lin, Y., Simoncini, V.: A new subspace iteration method for the algebraic Riccati equation. Numer. Linear Algebra Appl. 22, 26–47 (2015)

Quarteroni, A., Valli, A.: Numerical Approximation of Partial Differential Equations. Springer Series in Computational Mathematics. Springer, Berlin (1994)

Schmidt, A., Haasdonk, B.: Reduced basis approximation of large scale parametric algebraic Riccati equations. ESAIM Control Optim. Calc. Var. (2017). https://doi.org/10.1051/cocv/2017011

Simoncini, V.: Analysis of the rational Krylov subspace projection method for large-scale algebraic Riccati equations. SIAM J. Matrix Anal. Appl. 37, 1655–1674 (2016)

Simoncini, V.: Computational methods for linear matrix equations. SIAM Rev. 58, 377–441 (2016)

Simoncini, V., Szyld, D.B., Monslave, M.: On two numerical methods for the solution of large-scale algebraic Riccati equations. IMA J. Numer. Anal. 34, 904–920 (2014)

Sirovich, L.: Turbulence and the dynamics of coherent structures. Parts I-II. Q. Appl. Math. XVL, 561–590 (1987)

Volkwein, S.: Model reduction using proper orthogonal decomposition. Lecture notes, University of Konstanz (2011)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Alla, A., Simoncini, V. (2018). Order Reduction Approaches for the Algebraic Riccati Equation and the LQR Problem. In: Falcone, M., Ferretti, R., Grüne, L., McEneaney, W. (eds) Numerical Methods for Optimal Control Problems. Springer INdAM Series, vol 29. Springer, Cham. https://doi.org/10.1007/978-3-030-01959-4_5

Download citation

DOI: https://doi.org/10.1007/978-3-030-01959-4_5

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01958-7

Online ISBN: 978-3-030-01959-4

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)