Abstract

Recent advances in Virtual Reality (VR) technologies are likely to have a significant impact on everyday life. VR makes it possible to explore a computer-generated environment as a different reality and to immerse oneself in the past or in other virtual environments without leaving one’s current real-life situation. Cultural heritage (CH) monuments are ideally suited both for thorough multi-dimensional geometric documentation and for realistic interactive visualisation in immersive VR applications. Furthermore, VR is increasingly used to present virtual versions of real places, enhancing the visitor’s experience by providing access to additional material for review and for deepening knowledge either before or after the actual visit. With today’s 3D technologies, a virtual place is no longer just a presentation of geometric environments on the Internet or a virtual tour based on panoramic photography. Additionally, the game industry offers tools for the interactive visualisation of objects that motivate users to visit objects and places virtually. This paper presents the generation of virtual 3D models of different cultural heritage monuments and their processing for integration into the two game engines Unity and Unreal. The workflow from data acquisition to VR visualisation with the HTC Vive VR system is described, including the programming required for navigation and interaction. Finally, the use of such VR visualisations for CH monuments is discussed, including simultaneous use by multiple end-users.

1 Introduction

Virtual Reality (VR) is defined as the representation and simultaneous perception of reality and its physical characteristics in an interactive virtual environment generated by a computer in real time. This technology is expected to change both everyday life and working life. It already offers great opportunities in many fields, such as medicine, technology, engineering, computer science, architecture and cultural heritage. VR typically refers to computer technologies that use software to generate realistic images, sounds and interactions that replicate a real environment and simulate a user’s physical presence in that environment. VR has also been defined as a realistic and immersive simulation of a three-dimensional environment, created using interactive software and hardware and experienced or controlled by movements of the user’s body, or simply as an immersive, interactive experience generated by a computer. VR offers an attractive opportunity to visit objects from the past [1] or places that are not easily accessible, often from viewpoints that are impossible in real life. Moreover, these possibilities are increasingly being implemented through so-called “serious games”, which embed information in a virtual world and create an entertaining experience (edutainment) through the flow of, and interaction with, the game [2, 3]. One of the first virtual museums using the HTC Vive VR system as a head-mounted display (HMD) for immersive experiences was introduced in [4].

In this contribution the workflow from 3D data recording to the generation of a VR application is presented using different practical examples, which were created by the Photogrammetry & Laser Scanning lab of HafenCity University (HCU) Hamburg.

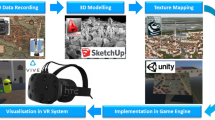

2 Workflow

The workflow for the production of a VR application at HCU Hamburg is shown schematically in Fig. 1. Real objects are recorded with digital photogrammetry and/or with terrestrial (TLS) and airborne laser scanning (ALS). For objects that no longer exist, historical sources such as maps and perspective views are used instead. The acquired 3D data are mainly modelled in AutoCAD with geometric primitives, which avoids memory-intensive surface models with a high number of triangles. Texture mapping of the CAD models is carried out both in Autodesk 3ds Max and directly in the game engine. After being imported into the game engine, the data are further processed and prepared for visualisation. Their implementation in the game engine also includes programming the navigation and interactions for the user in the VR environment. The resulting VR environment is then visualised interactively with the HTC Vive VR system. Edler et al. [5] present a different workflow, based on open-source software, for constructing an interactive cartographic VR environment to explore urban landscapes.
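The stages above can be summarised as an ordered pipeline. The following Python sketch is purely illustrative. The `Stage` type and the `plan_workflow` function are hypothetical names, and the tool lists simply restate the text. Nothing here is code used at HCU Hamburg.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One step of the (hypothetical) production pipeline and the tools it uses."""
    name: str
    tools: tuple


def plan_workflow(object_exists: bool) -> list:
    """Return the ordered stages from data acquisition to VR visualisation.

    Existing objects are recorded by measurement; vanished objects are
    reconstructed from historical sources instead.
    """
    if object_exists:
        sources = ("digital photogrammetry", "TLS", "ALS")
    else:
        sources = ("historical maps", "perspective views")
    return [
        Stage("data acquisition", sources),
        Stage("3D modelling with geometric primitives", ("AutoCAD",)),
        Stage("texture mapping", ("Autodesk 3ds Max", "game engine")),
        Stage("import and preparation", ("Unity", "Unreal Engine 4")),
        Stage("navigation and interaction programming", ("Unity", "Unreal Engine 4")),
        Stage("interactive VR visualisation", ("HTC Vive",)),
    ]
```

For example, `[s.name for s in plan_workflow(False)]` lists the stages for a monument that no longer exists. Only the acquisition stage differs between the two cases.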

3 Data Recording and Processing

Digital photogrammetry and terrestrial laser scanning are used for the 3D recording of historic buildings. The photogrammetric data acquisition and 3D modelling of North German castles and manor houses, for example, are described in [6]. The combined evaluation of photogrammetric and terrestrial laser scanning data for the construction of a CAD model of the Old-Segeberg Town House in Bad Segeberg, Germany, is published in [7] (Fig. 2). Another example is the wooden model of the main building of Solomon’s Temple. It was constructed in Hamburg between 1680 and 1692 and is exhibited today in the museum for Hamburg’s history. A photo series was taken with a Nikon D800 DSLR camera from the outside, and also from the inside after the model had been dismantled into its individual parts. From these photo sequences, scaled point clouds were generated in Agisoft PhotoScan and subsequently used for the detailed 3D construction of the temple building in AutoCAD (Fig. 2).
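The text does not describe how PhotoScan scales the point clouds internally. As a hedged illustration of what "scaled" means, the sketch below rescales an arbitrarily scaled photogrammetric point cloud so that the distance between two reference points matches their known length. The reference points could be, for example, the two ends of a scale bar, assumed here to have been measured independently. The function name and its inputs are hypothetical.

```python
import math


def scale_point_cloud(points, ref_a, ref_b, true_length):
    """Uniformly scale a point cloud so that |ref_a - ref_b| equals true_length.

    ref_a and ref_b are coordinates in the unscaled model (e.g. the two ends
    of a scale bar); true_length is their measured distance in metres.
    Scaling is about the origin, so shapes and relative positions are kept.
    """
    model_length = math.dist(ref_a, ref_b)
    if model_length == 0:
        raise ValueError("reference points must be distinct")
    s = true_length / model_length
    return [(x * s, y * s, z * s) for x, y, z in points]
```

A full georeferencing would also rotate and translate the cloud with a similarity (seven-parameter) transformation. Only the scale component is shown here.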

The Almaqah temple in Yeha in northern Ethiopia exists today only as ruins. The foundation walls of the temple still stand above ground, while only ancient remains of the monumental building are visible. These are the subject of a current excavation by the Sana’a branch office of the Orient Department of the German Archaeological Institute. An architect and a geomatics engineer used point clouds from terrestrial laser scanning as the basis for a virtual reconstruction of the two buildings (Fig. 2 right) [8].

The virtual 3D reconstruction of the entire town of Segeberg as it was in 1600 drew on several sources (Fig. 3). Previously constructed, detailed building models of the Old-Segeberg Town House and of St. Marien church were combined with historical sources such as maps and perspective views and with the expert knowledge of a historian. Twelve different variants were derived from the previously constructed Town House and distributed virtually throughout the town. Special buildings such as the castle, town hall, monastery and storehouse were constructed using information from the Braun-Hogenberg perspective view of the town in 1588 and the historian’s expert knowledge [9]. The positions of the buildings, shown overlaid onto Google Earth in Fig. 3 (left), originate from historical maps. The approximately 300 buildings were reconstructed in AutoCAD, and their texture mapping was carried out in 3ds Max. The terrain model of Segeberg and its surroundings is based on airborne laser scanning data provided by the Federal State Office for Surveying and Geo-Information Schleswig-Holstein in Kiel, Germany. The historical lime mountain, which no longer exists, was physically modelled on the basis of historical sources from 1600, first in butter (initial model) and then in gypsum. The gypsum model was later recorded photogrammetrically from image sequences, and a scaled model of the historical lime mountain was derived from the resulting point clouds (Fig. 3 right). This model was finally integrated into the latest terrain model derived from laser scanning [10].
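The final step, merging the reconstructed lime mountain into the laser scanning terrain model, can be pictured as replacing terrain cells with the heights of the reconstructed landform. The sketch below makes some hypothetical assumptions:

- both datasets are regular height grids with the same cell size and orientation;
- the patch's offset within the DTM grid is known;
- patch cells set to `None` keep the original terrain height.

This is an illustration only, not the procedure described in [10].

```python
from typing import List, Optional


def integrate_patch(dtm: List[List[float]],
                    patch: List[List[Optional[float]]],
                    row0: int, col0: int) -> List[List[float]]:
    """Return a copy of dtm in which the cells covered by patch take its heights.

    Assumes dtm and patch share cell size and orientation; (row0, col0) is the
    DTM cell under the patch's first cell. None in patch means "keep terrain".
    """
    n_rows, n_cols = len(dtm), len(dtm[0])
    if row0 < 0 or col0 < 0 or row0 + len(patch) > n_rows:
        raise ValueError("patch does not fit inside the DTM")
    if any(col0 + len(prow) > n_cols for prow in patch):
        raise ValueError("patch does not fit inside the DTM")
    merged = [row[:] for row in dtm]  # leave the input grid untouched
    for i, prow in enumerate(patch):
        for j, height in enumerate(prow):
            if height is not None:
                merged[row0 + i][col0 + j] = height
    return merged
```

In practice the patch would first have to be resampled onto the DTM grid. Blending its border into the surrounding terrain would also avoid visible steps.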

4 Game Engines and VR System

4.1 Game Engines Used

A game engine is a software framework designed for the creation and development of video games for consoles, mobile devices and personal computers. The core functionality typically provided by a game engine includes:

- a rendering engine for 2D or 3D graphics to display textured 3D models (spatial data);
- a physics engine or collision detection (and collision response) for the interaction of objects;
- an audio system to emit sound;
- scripting, animation, artificial intelligence, networking, streaming, memory management, threading, localisation support and a scene graph.

It may also include video support for cinematics. For the projects at HCU Hamburg, Unreal Engine 4 (UE4) from Epic Games and Unity from Unity Technologies are used. Both products allow the development of computer games and other interactive 3D graphics applications for various operating systems and platforms, and all necessary computations run in real time. UE4 was selected because application and interaction logic can be developed with its visual programming language, Blueprints. Visual programming with Blueprints does not require writing source code, so non-computer scientists can program all functions of a VR application using graphical elements. Both game engines are free for non-commercial use and very well suited to the development of VR applications for cultural heritage monuments.

4.2 VR System Used

The VR applications are visualised with the HTC Vive VR system (Fig. 4 left). The HTC Vive, developed by HTC and Valve Corporation, was released on April 5, 2016. It offers a room-scale VR experience by tracking the head-mounted display (HMD) and the two motion controllers, which control interactions in the virtual environment, using “Lighthouse” technology. The Lighthouse technology determines the current position and orientation of the user very precisely and at a high temporal resolution within an interaction area of 4.6 m × 4.6 m (Fig. 4 right). Images are presented in the VR headset at a resolution of 1080 × 1200 pixels per eye and a frame rate of 90 Hz.

Components (left) and schematic setup (right) of the VR system HTC Vive (www.vive.com).
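The display figures above imply a tight real-time budget, which is one reason why the data reduction described in Sect. 5.1 matters. The short calculation below uses only the numbers from the text: 1080 × 1200 pixels per eye, two eyes and 90 Hz. In practice the engine usually renders at a higher internal resolution to compensate for lens distortion, so the pixel counts are a lower bound.

```python
# Display parameters of the HTC Vive as given in the text.
WIDTH_PER_EYE = 1080  # pixels
HEIGHT = 1200         # pixels
EYES = 2
REFRESH_HZ = 90       # frames per second

# Time available to render one stereo frame, in milliseconds.
frame_budget_ms = 1000 / REFRESH_HZ

# Panel pixels that must be filled per frame and per second.
pixels_per_frame = WIDTH_PER_EYE * HEIGHT * EYES
pixels_per_second = pixels_per_frame * REFRESH_HZ

print(f"{frame_budget_ms:.1f} ms per frame, "
      f"{pixels_per_frame:,} pixels per frame, "
      f"{pixels_per_second:,} pixels per second")
# prints: 11.1 ms per frame, 2,592,000 pixels per frame, 233,280,000 pixels per second
```

All per-frame work must therefore fit into roughly 11 ms, including scene traversal, physics and interaction logic.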

5 Implementation and Generation of VR Applications

5.1 Data Reduction for VR Applications

All modelled and textured objects are transferred from 3ds Max into the game engine using suitable file formats such as FBX. The CAD models are built by solid modelling, based mainly on photogrammetric and TLS data. After the transfer to 3ds Max and/or into the engine, these CAD elements are tessellated into triangles and quads, which significantly increases the data volume. In principle, more triangles and/or quads require more computing power, which in turn calls for high-performance PCs with powerful graphics cards for VR visualisation. There is therefore a general tendency to reduce the data set on the one hand, and a conflicting drive to obtain a visually attractive representation of the object on the other. For data reduction, it can therefore be essential to replace geometric details with appropriate textures so that the required details are still represented. In addition, the export/import settings of the different software packages should be compatible with the modelling and texture mapping of the objects.
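A small count shows why tessellation inflates the data volume. A convex polygon with n vertices splits into n − 2 triangles, so a quad becomes two. A CAD cylinder exported with n side segments becomes n side quads plus two n-gon caps. The Python sketch below uses simple fan triangulation for illustration. It is not the tessellation algorithm of 3ds Max or of either game engine, and the function names are hypothetical.

```python
def fan_triangulate(face):
    """Split a convex polygon, given as vertex indices, into len(face) - 2 triangles."""
    if len(face) < 3:
        raise ValueError("a face needs at least three vertices")
    return [(face[0], face[i], face[i + 1]) for i in range(1, len(face) - 1)]


def triangle_count(faces):
    """Number of triangles a mixed triangle/quad/n-gon mesh turns into."""
    return sum(len(face) - 2 for face in faces)


def cylinder_triangles(segments):
    """Triangles of a capped cylinder with the given number of side segments.

    Each side quad gives 2 triangles; each n-gon cap gives n - 2 triangles.
    """
    return 2 * segments + 2 * (segments - 2)
```

Doubling the segment count of a single column from 32 to 64 raises it from 124 to 252 triangles. Replacing such details with textures, as suggested above, avoids this growth.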

For example, the number of polygons of the Selimiye mosque had to be substantially reduced to ensure smooth, latency-free movement and interaction of the user in the virtual 3D model with the HTC Vive (Fig. 5). In the first step, the number of polygons was reduced from 6.5 million to 1.5 million. Interactive visualisation also allows the 3D data to be investigated intuitively and in previously unseen quality. Because the viewing angle can be chosen freely, much like in real human movement, the 3D objects can be observed from different distances, so that modelling errors are easily identified. The visual inspection of the virtual Selimiye mosque in the HTC Vive revealed the following modelling errors:

(A) gaps and shifts in textures;
(B) double surfaces of object elements;
(C) missing elements that are present in the original data structure of the mosque;
(D) errors in the proportions of items compared to the original structure of the mosque.

After all detected errors had been corrected, the number of polygons could be reduced to 0.9 million, which was acceptable for the visualisation of the virtual Selimiye mosque in the VR system [11].
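Error type (B), double surfaces, could also be screened for automatically before a VR inspection. The sketch below flags triangles that cover the same three positions, regardless of vertex order or winding, after rounding coordinates to a tolerance. The function name and the rounding approach are assumptions for illustration and were not part of the reported workflow.

```python
from collections import defaultdict


def find_double_surfaces(vertices, triangles, decimals=4):
    """Group indices of triangles that occupy the same three positions.

    vertices: list of (x, y, z); triangles: list of vertex-index triples.
    Positions are rounded to `decimals` places and sorted, so duplicated
    faces are found even if they use separate vertices or flipped winding.
    """
    groups = defaultdict(list)
    for t_index, tri in enumerate(triangles):
        key = tuple(sorted(tuple(round(c, decimals) for c in vertices[v])
                           for v in tri))
        groups[key].append(t_index)
    return [indices for indices in groups.values() if len(indices) > 1]
```

Coordinates that fall on either side of a rounding boundary will not be matched. A spatial search with a distance tolerance would therefore be more robust for real models.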

5.2 Landscape Design

Another substantial aspect of VR is the environment of the building or monument to be visualised. If a digital terrain model (DTM) of the real terrain is available, it can be integrated into the engine as a height-coded greyscale image, a so-called height map. The terrain is generated with the game engine’s Landscape module. In UE4, this module allows intelligent and fast presentation through adaptive terrain resolution (level of detail), including vegetation objects such as trees. However, game engines generally do not work with geodetic coordinate systems, so both the horizontal extent of the terrain sections and the height data must be rescaled. Geodetic professionals nevertheless demand that all geodata be represented in correct relative positions and with correct dimensions. This is essential for VR applications, since all objects are presented to the viewer at a scale of 1:1.
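The scale adjustment mentioned above starts with how heights are encoded. The sketch below maps metric DTM heights linearly onto 16-bit grey values. It returns the height offset and the metres per grey level that the engine-side vertical scale must reproduce. How these values translate into a specific engine’s landscape scale settings is engine-specific and not shown; UE4, for instance, uses centimetres as its default unit. The function names are hypothetical.

```python
def encode_heightmap(heights, bits=16):
    """Map metric heights linearly onto integer grey values 0 .. 2**bits - 1.

    Returns (grey_grid, h_min, metres_per_level); the last two are needed to
    restore metric heights, i.e. to set the vertical scale in the engine.
    """
    h_min = min(min(row) for row in heights)
    h_max = max(max(row) for row in heights)
    levels = 2 ** bits - 1
    span = h_max - h_min
    if span == 0:  # flat terrain: every cell gets grey value 0
        return [[0 for _ in row] for row in heights], h_min, 0.0
    grey = [[round((h - h_min) / span * levels) for h in row] for row in heights]
    return grey, h_min, span / levels


def decode_height(grey_value, h_min, metres_per_level):
    """Recover the metric height represented by one grey value."""
    return h_min + grey_value * metres_per_level
```

For a terrain with 80 m of relief, one 16-bit grey level corresponds to about 1.2 mm. An 8-bit image would give steps of about 31 cm, which is why 16-bit height maps are preferable.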

5.3 Locomotion and Navigation in VR

Most of the VR applications use the HTC Vive for natural, human locomotion, in which real movements are converted into virtual motion. Teleportation overcomes the spatial restriction of the interaction area. The teleportation function is operated with the motion controller and enables VR visitors to bridge larger distances (Fig. 6 left). For very large distances, the speed of bridging space can be increased. The user can also click on configured hotspots to jump directly to the requested, pre-defined place in VR.
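The text does not describe how the teleport target is picked. A common approach in VR, assumed here purely for illustration, is to cast a parabolic arc from the controller and teleport to where it meets the floor, optionally snapping to a nearby pre-defined hotspot. The Python sketch below uses hypothetical names, an assumed launch speed and a flat floor at height `floor_z`, with z pointing up. It is not the SteamVR or Unreal implementation.

```python
import math

G = 9.81  # gravity used to bend the arc, m/s^2 (assumed)


def arc_landing_point(origin, direction, launch_speed=8.0, floor_z=0.0):
    """Where a parabolic arc cast from the controller hits a flat floor (z up)."""
    norm = math.sqrt(sum(c * c for c in direction))
    if norm == 0:
        raise ValueError("direction must be non-zero")
    vx, vy, vz = (launch_speed * c / norm for c in direction)
    x0, y0, z0 = origin
    drop = z0 - floor_z
    if drop < 0:
        raise ValueError("controller is below the floor")
    # Positive root of z0 + vz*t - G*t^2/2 = floor_z.
    t = (vz + math.sqrt(vz * vz + 2 * G * drop)) / G
    return (x0 + vx * t, y0 + vy * t, floor_z)


def snap_to_hotspot(point, hotspots, radius=1.5):
    """Return the nearest hotspot within radius of point, else point itself."""
    best = min(hotspots.values(), key=lambda h: math.dist(h, point), default=None)
    if best is not None and math.dist(best, point) <= radius:
        return best
    return point
```

Raising `launch_speed` lengthens the arc, which is one way to let users bridge larger distances per teleport.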

Many of the generated VR environments, such as the mosque or the temple, have an immense spatial extent. A flight option was therefore developed as a further artificial mode of movement. Using the motion controller, the user controls the direction and speed of the flight, and the flight mode stays active while the trigger button of the motion controller is held down (Fig. 6 right). The movement functions for the VR applications of the mosque in Edirne and of Solomon’s Temple were implemented based on the SteamVR package from the Unity Asset Store.
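A per-frame update for such a flight mode might look like the following sketch. The speed input in [0, 1] is an assumption; it could come, for example, from the touchpad or an analogue trigger. The maximum speed and all names are also assumptions. The actual applications were built with the SteamVR package in Unity, whose API is not reproduced here.

```python
import math


def fly_step(position, controller_forward, trigger_held, speed_input, dt,
             max_speed=20.0):
    """Move the user rig for one frame of the (hypothetical) flight mode.

    The rig moves along the controller's pointing direction only while the
    trigger is held; speed_input in [0, 1] scales the speed up to max_speed
    (metres per second), and dt is the frame time in seconds.
    """
    if not trigger_held:
        return position
    norm = math.sqrt(sum(c * c for c in controller_forward))
    if norm == 0:
        return position
    speed = max(0.0, min(1.0, speed_input)) * max_speed
    return tuple(p + speed * dt * c / norm
                 for p, c in zip(position, controller_forward))
```

Scaling by the frame time `dt` keeps the flight speed independent of the frame rate.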

5.4 Interactions and Animations in VR

To control interactions and trigger animations, the HTC Vive supplies two controllers whose button assignments are programmed accordingly. The following interactions and animations were integrated into the VR application of the virtual museum Old-Segeberg Town House (Fig. 7):

- 52 info boards with text and photos;
- an animation of the architectural history of the building over six epochs;
- animations of objects that no longer exist in the building, such as fireplaces [4].

These are controlled with the right hand, while the left hand is used only for the mobile display of information.

Interactions and animations in the VR application – f.l.t.r. Virtual Museum Old-Segeberg Town House, using the controller to request information by clicking the info sign and animating the historical construction phases of the building; the Meridian Circle building of the astronomical observatory Hamburg-Bergedorf with the interactively opened dome for watching the starlit sky through the telescope.
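The division of labour between the two controllers can be expressed as a small input dispatcher. In this hypothetical Python sketch, the button names, the `MuseumState` fields and the rule that one button advances the construction epoch are all assumptions for illustration. Only the six epochs, the info boards and the right-hand/left-hand split come from the text.

```python
from dataclasses import dataclass
from typing import Optional

EPOCHS = 6  # construction phases animated in the virtual museum


@dataclass
class MuseumState:
    epoch: int = 0                      # currently displayed construction phase
    open_board: Optional[int] = None    # info board whose text/photos are shown


def handle_input(state, hand, button, target_board=None):
    """Apply one controller event to the museum state (hypothetical mapping)."""
    if hand != "right":
        # The left controller only carries the mobile information display.
        return state
    if button == "trigger" and target_board is not None:
        state.open_board = target_board           # clicked an info sign
    elif button == "menu":
        state.epoch = (state.epoch + 1) % EPOCHS  # next construction phase
    return state
```

Keeping all actions on one hand leaves the other controller free to act as a stable "tablet" for reading information.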

For the VR application of the astronomical observatory Hamburg-Bergedorf, the two buildings Meridian Circle and Equatorial were constructed (Fig. 7 right). Both modelled buildings are no longer in active use, since their telescopes are no longer in situ. Therefore, two non-original telescopes were integrated in each virtual building, so that the starlit sky can be watched through the telescope after the dome of the building has been opened interactively.

5.5 Multiple Users in VR

Using an HMD for a VR application significantly increases the immersive experience, since the user’s senses are largely sealed off from the surrounding real world. To add a social component, several users can meet in the VR environment and investigate the virtual object together, while each user is at a different location in the real world (Fig. 8). Each of the other users appears as an avatar at the corresponding virtual position. The independent network solution Photon (Photon Unity Networking) is used to synchronise the movements of the different users. The voices are recorded by the microphone integrated in the HMD and played back to all users relative to the position of the speaker (spatial audio). Photon also provides server infrastructure, which is free of charge up to a certain number of users. The multi-user functionality was used for the VR applications of the Selimiye mosque and of the city model Duisburg 1566. In each case, four users visited the virtual model at the same time from different locations to examine and discuss the geometric quality of the modelled 3D data together. During the joint virtual visit, the VR visitors could communicate via the integrated microphones. The user groups were located at two different places in each case (Hamburg and Istanbul, and Hamburg and Bochum, respectively), so travel expenses could be saved for these meetings.
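Photon Unity Networking handles the serialisation of synchronised objects itself, and its API is not reproduced here. To illustrate what has to travel between the sites, the Python sketch below packs one user’s head pose into a compact binary message. It then interpolates received positions so that remote avatars move smoothly between updates. The message layout (`<H7f`: user id, position, rotation quaternion) and all names are hypothetical.

```python
import struct

# Hypothetical layout: little-endian uint16 user id, position (x, y, z) and
# rotation quaternion (x, y, z, w) as 32-bit floats -> 30 bytes per message.
POSE_FORMAT = "<H7f"


def pack_pose(user_id, position, rotation):
    """Serialise one user's head pose for sending to the other participants."""
    return struct.pack(POSE_FORMAT, user_id, *position, *rotation)


def unpack_pose(payload):
    """Inverse of pack_pose: returns (user_id, position, rotation)."""
    user_id, *values = struct.unpack(POSE_FORMAT, payload)
    return user_id, tuple(values[:3]), tuple(values[3:])


def lerp_position(previous, latest, t):
    """Blend between the last two received positions, t in [0, 1].

    Rotations should be blended with quaternion slerp instead (not shown).
    """
    return tuple(a + (b - a) * t for a, b in zip(previous, latest))
```

With four users and, for example, 20 updates per second, such pose messages would amount to only a few kilobytes per second. Voice data for the spatial audio would dominate the bandwidth.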

5.6 Developed Virtual Applications

Since August 2016, several VR applications have been developed in projects of the Laboratory for Photogrammetry & Laser Scanning of HafenCity University Hamburg and in lectures; they are illustrated in Fig. 9. Selected VR applications are currently presented at scientific conferences and trade fairs, in demos at the Photogrammetry & Laser Scanning lab and in the museum Old-Segeberg Town House (virtual museum and Segeberg 1600).

Different VR applications of cultural heritage monuments developed at HCU Hamburg – f.l.t.r. Selimiye mosque Edirne, Duisburg 1566, bailiwick Pinneberg, Solomon’s Temple (top), astronomical observatory Hamburg-Bergedorf, West Tower Duderstadt and Segeberg 1600, and Almaqah temple in Yeha, Ethiopia (bottom).

6 Conclusions and Outlook

This contribution presented, with different examples, the workflow from the 3D data acquisition of an object through 3D modelling and texture mapping to the immersive, interactive visualisation of the generated objects in a programmed VR application. The following lessons have been learned:

- Both game engines, Unity and Unreal, demonstrate great potential for the development of VR applications. However, Unreal offers non-programmers an easy-to-use visual programming option that avoids writing code directly.
- The FBX file format proved to be the best choice for data transfer into the game engine.
- The HTC Vive provides a truly immersive experience. Users can dive into a virtual environment and experience an object at real scale without ever having seen it in real life.
- The multi-user functionality enables simultaneous visits by several users. This opens up new possibilities such as joint discussions within the virtual model about architecture, structural analysis, history, virtual restoration of the object and many other aspects.
- The VR application permits a detailed geometric quality inspection of the 3D models during a visual inspection, thanks to the viewer’s high degree of mobility and unusual perspectives.

VR applications also offer capabilities for difficult areas such as complex buildings, dangerous zones, destroyed cultural heritage monuments and tunnels, for example to simulate and train disaster control operations. Beyond that, such VR applications can also offer possibilities for the therapy of people with claustrophobia, acrophobia and aviophobia.

Not only individual cultural heritage monuments, (historic) buildings or building ensembles are suitable for interactive VR visualisations, but also whole towns and cities, as soon as they are available as 3D models. Since the winter semester 2016/2017, Virtual Reality has also been part of the lecture module Visualisation in the master’s programme Geodesy and Geoinformatics at HafenCity University Hamburg.

References

Gaitatzes, A., Christopoulos, D., Roussou, M.: Reviving the past: cultural heritage meets virtual reality. In: Proceedings of the Conference on Virtual Reality, Archaeology and Cultural Heritage, pp. 103–110 (2001)

Anderson, E.F., McLoughlin, L., Liarokapis, F., Peters, C., Petridis, P., De Freitas, S.: Developing serious games for cultural heritage: a state-of-the-art review. Virtual Reality 14(4), 255–275 (2010)

Mortara, M., Catalano, C.E., Bellotti, F., Fiucci, G., Houry-Panchetti, M., Petridis, P.: Learning cultural heritage by serious games. J. Cult. Herit. 15(3), 318–325 (2014)

Kersten, T., Tschirschwitz, F., Deggim, S.: Development of a virtual museum including a 4D presentation of building history in virtual reality. In: The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 42-2/W3, pp. 361–367 (2017)

Edler, D., Husar, A., Keil, J., Vetter, M., Dickmann, F.: Virtual Reality (VR) and Open Source Software: A Workflow for Constructing an Interactive Cartographic VR Environment to Explore Urban Landscapes. Kartographische Nachrichten – Journal of Cartography and Geographic Information, issue 1, pp. 5–13, Bonn, Kirschbaum Verlag (2018)

Kersten, T., Acevedo Pardo, C., Lindstaedt, M.: 3D Acquisition, Modelling and Visualization of North German Castles by Digital Architectural Photogrammetry. In: The International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, 35(5/B2), pp. 126–132 (2004)

Kersten, T., Hinrichsen, N., Lindstaedt, M., Weber, C., Schreyer, K., Tschirschwitz, F.: Architectural Historical 4D Documentation of the Old-Segeberg Town House by Photogrammetry, Terrestrial Laser Scanning and Historical Analysis. In: Lecture Notes in Computer Science, vol. 8740, pp. 35–47, Springer Internat. Publishing Switzerland (2014)

Lindstaedt, M., Mechelke, K., Schnelle, M., Kersten, T.: Virtual reconstruction of the Almaqah temple of Yeha in Ethiopia by terrestrial laser scanning. In: The Internat. Archives of Photogrammetry, Remote Sensing & Spatial Inform. Sciences, 38(5/W16) (2011)

Deggim, S., Kersten, T., Tschirschwitz, F., Hinrichsen, N.: Segeberg 1600 – reconstructing a historic town for virtual reality visualisation as an immersive experience. In: The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 42(2/W8), pp. 87–94 (2017)

Deggim, S., Kersten, T., Lindstaedt, M., Hinrichsen, N.: The return of the Siegesburg - 3D-reconstruction of a disappeared and forgotten monument. In: The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 42(2/W3), pp. 209–215 (2017)

Kersten, T., et al.: The Selimiye Mosque of Edirne, Turkey – an immersive and interactive virtual reality experience using HTC Vive. In: The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, XLII-5/W1, pp. 403–409 (2017)

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Kersten, T.P., Tschirschwitz, F., Deggim, S., Lindstaedt, M. (2018). Virtual Reality for Cultural Heritage Monuments – from 3D Data Recording to Immersive Visualisation. In: Ioannides, M., et al. Digital Heritage. Progress in Cultural Heritage: Documentation, Preservation, and Protection. EuroMed 2018. Lecture Notes in Computer Science(), vol 11197. Springer, Cham. https://doi.org/10.1007/978-3-030-01765-1_9

DOI: https://doi.org/10.1007/978-3-030-01765-1_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01764-4

Online ISBN: 978-3-030-01765-1

eBook Packages: Computer Science, Computer Science (R0)