Abstract

We propose a new bio-plausible model, based on the visual system of Drosophila, for estimating the angular velocity of image motion in insects’ eyes. The model implements both preferred direction motion enhancement and non-preferred direction motion suppression, two mechanisms recently discovered in Drosophila’s visual neural circuits, to give stronger directional selectivity. In addition, the angular velocity detecting model (AVDM) produces a response largely independent of spatial frequency in grating experiments, which could enable insects to estimate flight speed in cluttered environments. This also agrees with behavioural experiments in which honeybees fly through tunnels lined with stripes of different spatial frequencies.



1 Introduction

Although insects have miniature brains, they possess very complex visual processing systems, which form the basis of motion detection. How visual information is processed, and especially how insects estimate flight speed, has long attracted strong interest. Here we take Drosophila as an example, since its visual processing pathways have been studied more than those of any other insect, using anatomy, two-photon imaging and electron microscopy, to explain in general terms how signals are processed in insects’ visual systems; this in turn inspired us to build a new bio-plausible neural network for estimating the angular velocity of image motion.

Drosophila has about 750 ommatidia in each compound eye. Each ommatidium has its own small lens and eight photoreceptors, R1-R8, which send their axons into the optic lobe to form a visual column. The optic lobe, the most important part of the visual system, consists of four retinotopically organized layers: the lamina, medulla, lobula and lobula plate. The number of columns in the optic lobe equals the number of ommatidia [1]. Each column contains roughly one hundred neurons and processes light-intensity increments (ON) and decrements (OFF) in parallel [2]. In each column, these ON and OFF pathways transform signals of light change into motion signals [3] (see Fig. 1).

Visual system of Drosophila with ON and OFF pathways. In each column of the visual system, motion information is mainly captured by photoreceptors R1-R6 and processed by lamina cells L1-L3, medulla neurons (Mi1, Mi9, Tm1, Tm2, Tm3, Tm9) and T4 and T5 neurons. The lobula plate functions as a map of visual motion, with four layers representing the four cardinal directions (front to back, back to front, upward and downward). T4 and T5 cells, which show both preferred direction enhancement and non-preferred direction suppression, are the first neurons to show strong directional selectivity [5]. Adapted from figures by Takemura et al. and Arenz et al. [3, 4].

How the visual system described above detects motion has been studied for a long time. Hassenstein and Reichardt proposed the elementary motion detector (EMD) model to describe how animals sense motion [6]. This HR detector uses two neighbouring viewpoints as a pair to form a detecting unit: the delayed signal from one input is multiplied by the undelayed signal from the other to give a directional response (Fig. 2a), so that motion in the preferred direction produces a higher response than motion in the non-preferred direction. A competing model proposed by Barlow and Levick, the BL model, instead implements non-preferred direction suppression [7]: the BL detector divides the undelayed signal from one input by the delayed signal from the other to give a directionally selective response (Fig. 2b). Both models could be implemented in Drosophila’s visual system, since patch-clamp recordings showed a temporal delay of Mi1 relative to Tm3 in the ON pathway and of Tm1 relative to Tm2 in the OFF pathway [8]. This provides a neural basis for the delay-and-correlate mechanism.
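The two classic detectors can be compared on sampled signals. The sketch below is a minimal illustration, not code from the cited papers: it assumes a pure delay of 100 samples (100 ms at 1 ms per sample), chosen to equal the time the grating takes to cross an assumed 10° receptor spacing at 100 deg/s, and feeds each detector one preferred-direction (PD) and one null-direction (ND) stimulus, following the arrangement of Fig. 2a and 2b. The names `receptor_pair`, `hr_half` and `bl_half` are hypothetical.

```python
import math

def receptor_pair(direction, v=100.0, period=60.0, sep=10.0, i0=1.0, m=0.5,
                  dt=0.001, delay_steps=100, cycles=10):
    """Sampled intensities (left, right) of two receptors `sep` deg apart viewing
    a sinusoidal grating (spatial period `period` deg) moving at v deg/s.
    direction=+1: left-to-right motion (right lags left); -1: right-to-left."""
    omega = 2 * math.pi * v / period            # angular frequency (rad/s)
    lag = direction * sep / v                   # arrival lag at the right receptor (s)
    # Whole grating periods after the delay, so oscillating terms average out exactly.
    n = cycles * round(period / v / dt) + delay_steps
    left = [i0 + m * math.sin(omega * k * dt) for k in range(n)]
    right = [i0 + m * math.sin(omega * (k * dt - lag)) for k in range(n)]
    return left, right

def hr_half(left, right, d):
    """HR half-detector (Fig. 2a): delayed left multiplied by undelayed right."""
    out = [left[k - d] * right[k] for k in range(d, len(left))]
    return sum(out) / len(out)

def bl_half(left, right, d):
    """BL half-detector (Fig. 2b): undelayed left divided by delayed right."""
    out = [left[k] / right[k - d] for k in range(d, len(left))]
    return sum(out) / len(out)

D = 100  # delay in samples: 100 ms, equal to the travel time sep / v (assumed)
pd_left, pd_right = receptor_pair(+1, delay_steps=D)
nd_left, nd_right = receptor_pair(-1, delay_steps=D)
hr_pd, hr_nd = hr_half(pd_left, pd_right, D), hr_half(nd_left, nd_right, D)
bl_pd, bl_nd = bl_half(pd_left, pd_right, D), bl_half(nd_left, nd_right, D)
print(f"HR: PD {hr_pd:.4f}  ND {hr_nd:.4f}")   # HR: PD 1.1250  ND 0.9375
print(f"BL: PD {bl_pd:.4f}  ND {bl_nd:.4f}")   # BL: PD 1.2321  ND 1.0000
```

Multiplication lifts the PD response above the ND response, while division leaves the ND response at 1 because, in the null direction, the delayed right signal matches the left signal sample by sample; this flat output is the null direction suppression of the BL scheme.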

Comparison of motion detectors. (a) In the Hassenstein-Reichardt detector, the delayed signal from the left photoreceptor is multiplied by the signal from the right to give a preferred direction enhancement response. (b) In the Barlow-Levick detector, the signal from the left is divided by the delayed signal from the right to suppress the null direction response. (c) The recently proposed Full T4 detector combines preferred direction (PD) enhancement and null direction (ND) suppression. (d) The proposed angular velocity detecting unit (AVDU) combines enhancement and suppression in a different structure.

Recently, an HR/BL hybrid model called the Full T4 model has been proposed, based on the finding that both preferred direction enhancement and non-preferred direction suppression operate in Drosophila’s visual circuits [9]. Their motion detector has three input elements: the delayed signal from the left arm is multiplied by the undelayed signal from the middle arm, and the product is divided by the delayed signal from the right arm to give the final response (Fig. 2c). The anatomy of the circuits connecting to T4 and T5 cells, which could implement both mechanisms, also supports this hybrid model [3]. According to their simulations, this structure produces stronger directional selectivity than either the HR or the BL model. However, a problem shared by all the models mentioned above is that they are tuned to a particular temporal frequency, which creates an ambiguity: a given response can correspond to two different speeds. Although they give a directional response to motion, they cannot easily estimate motion speed. These models therefore explain only part of motion detection, whereas Ibbotson’s recordings show that the responses of some descending neurons grow monotonically as angular velocity increases [10]. Moreover, these responses are largely independent of the spatial frequency of the stimulus, which is consistent with corridor experiments on honeybee behaviour [11].
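The speed ambiguity can be made concrete with the standard steady-state result for an opponent HR detector whose delay is a first-order low-pass filter of time constant \(\tau \). This filter, the 10° receptor spacing and \(\tau = 50\) ms are illustrative assumptions, not parameters of the models discussed here, and `hr_mean_response` is a hypothetical name. Because the temporal term \(x/(1+x^2)\) takes the same value at \(x\) and \(1/x\), a speed half of the optimum and a speed twice the optimum give identical outputs.

```python
import math

def hr_mean_response(v, period, sep=10.0, tau=0.05, m=1.0):
    """Mean output of an opponent HR detector whose delay is a first-order
    low-pass filter (time constant tau, s), for a sinusoidal grating of spatial
    period `period` (deg) drifting at v (deg/s) past receptors `sep` deg apart:
        R = m^2 * sin(2*pi*sep/period) * x / (1 + x^2),  x = 2*pi*(v/period)*tau
    """
    x = 2 * math.pi * (v / period) * tau
    return m ** 2 * math.sin(2 * math.pi * sep / period) * x / (1 + x * x)

period, tau = 36.0, 0.05
v_peak = period / (2 * math.pi * tau)   # speed at which x = 1 (maximum response)
slow, fast = v_peak / 2, 2 * v_peak     # x = 0.5 and x = 2
r_slow = hr_mean_response(slow, period, tau=tau)
r_fast = hr_mean_response(fast, period, tau=tau)
print(round(slow, 1), round(fast, 1), round(r_slow, 4), round(r_fast, 4))
```

For a 36° grating this prints 57.3 and 229.2 deg/s with the same response (about 0.394), so the detector output alone cannot distinguish the two speeds.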

To solve this problem, Riabinina presents an angular velocity detector based mainly on the HR model [12]. The key point of this model is that it divides by the sum of the absolute values of the excitation produced by differentiating the signal intensity over time; this sum is strongly related to the temporal frequency rather than to the angular velocity, so using it as the denominator eliminates the temporal-frequency dependence of the final output. Cope argues that this model simulates a circuit separate from the optomotor circuit, which would require additional neurons and cost more energy. Instead, Cope proposes a more bio-plausible model, an extension of the optomotor circuit, that uses the ratio of two HR models with different delays [13]. The main idea is that the ratio of two bell-shaped response curves with different optimal temporal frequencies can give a monotonic response that removes the ambiguity. The problem is that the delays are chosen by the method of undetermined coefficients and need to be finely tuned, which may weaken the robustness of the model.
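The ratio idea can be illustrated with the same simplified HR temporal term. This is only a sketch of why dividing two bell-shaped curves with different optimal temporal frequencies removes the ambiguity; it is not Cope’s spiking model, and the two time constants and the helper names are hypothetical. For \(\tau _1 < \tau _2\) the ratio equals \((\tau _1/\tau _2)(1 + x_2^2)/(1 + x_1^2)\), which rises monotonically with temporal frequency even though each curve on its own rises and then falls.

```python
import math

def temporal_factor(f_hz, tau):
    """Temporal part of the mean HR response with a first-order low-pass delay
    of time constant tau (s): x / (1 + x^2), x = 2*pi*f*tau. Bell-shaped on a
    log-frequency axis, peaking at f = 1 / (2*pi*tau)."""
    x = 2 * math.pi * f_hz * tau
    return x / (1 + x * x)

TAU_SHORT, TAU_LONG = 0.01, 0.08                 # hypothetical delays (s)
freqs = [0.5 * 1.25 ** i for i in range(20)]     # 0.5 Hz to about 35 Hz

short = [temporal_factor(f, TAU_SHORT) for f in freqs]   # peaks near 16 Hz
long_ = [temporal_factor(f, TAU_LONG) for f in freqs]    # peaks near 2 Hz
ratio = [s / l for s, l in zip(short, long_)]

def is_increasing(values):
    """True if every value is strictly larger than the one before it."""
    return all(a < b for a, b in zip(values, values[1:]))

print(is_increasing(short), is_increasing(long_), is_increasing(ratio))  # False False True
```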

Recent research on these neural structures inspired us to build a new angular velocity detection model. We agree that visual motion detection systems are complex and, as the new research indicates, should have three or more input elements, like the Full T4 model. However, models that implement both enhancement and suppression can be structured very differently from the Full T4 model. Here we give one example, the AVDU (Fig. 2d). The AVDU (angular velocity detecting unit) divides the product of the delayed signal from the left arm and the undelayed signal from the middle arm by the product of the delayed signal from the middle arm and the undelayed signal from the right arm. This structure combines the HR and BL models to give a directional motion response. Moreover, according to our simulations, the AVDU is a suitable fundamental unit for an angular velocity detection model that is largely independent of the spatial frequency of the grating pattern.
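The structural difference between the Full T4 detector and the AVDU is easiest to see when both are written as functions of the instantaneous arm signals. In this minimal sketch the function names are hypothetical; the 0.01 lower bound on the AVDU denominator is taken from the Methods, while the Full T4 quotient is written without a bound because none is specified for it.

```python
FLOOR = 0.01  # lower bound on the AVDU denominator (from the Methods)

def full_t4(s1_d, s2, s3_d):
    """Full T4 (Fig. 2c): delayed left x undelayed middle, divided by delayed right."""
    return (s1_d * s2) / s3_d

def avdu(s1_d, s2, s2_d, s3):
    """AVDU (Fig. 2d): (delayed left x undelayed middle) divided by
    (delayed middle x undelayed right), with the denominator floored at FLOOR."""
    return (s1_d * s2) / max(s2_d * s3, FLOOR)

print(full_t4(2.0, 3.0, 2.0))        # 3.0
print(avdu(2.0, 3.0, 1.5, 4.0))      # 1.0
print(avdu(1.0, 1.0, 0.001, 1.0))    # 100.0 (floor active: 1.0 / 0.01)
```

Written this way, the AVDU denominator has the same delayed-times-undelayed form as its numerator, shifted one receptor to the right.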

2 Results

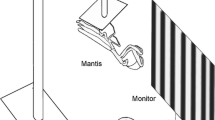

Based on the proposed AVDU detector, we build the angular velocity detecting model (AVDM) to estimate the velocity of visual motion in insects’ eyes. AVDM consists of an ommatidial array of 27 horizontal by 36 vertical ommatidia per eye, covering a field of view of 270\(^\circ \) horizontally by 180\(^\circ \) vertically. Every three horizontally adjacent ommatidia form a detector for horizontal progressive image motion. Each detector consists of two AVDUs with different sampling rates and produces a directional response to preferred progressive motion (i.e. front-to-back image motion, as on the left eye during forward flight). The ratio of the two AVDUs with different sampling rates then produces a response largely independent of the spatial frequency of the sinusoidal grating. The outputs of all detectors are then summed and averaged to give a response representing the velocity of the visual image motion (see Fig. 3).
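The layout numbers above fix the sampling geometry of the model. This short calculation derives the inter-ommatidial angles from the stated array size and field of view. Whether the three-ommatidium detectors overlap is not stated, so both sliding and non-overlapping triplets are counted, and `field_average` is a hypothetical helper for the final summing-and-averaging step.

```python
N_H, N_V = 27, 36              # ommatidia per eye (horizontal x vertical), from the text
FOV_H, FOV_V = 270.0, 180.0    # field of view in degrees, from the text

dphi_h = FOV_H / N_H           # horizontal inter-ommatidial angle (deg)
dphi_v = FOV_V / N_V           # vertical inter-ommatidial angle (deg)

# Horizontal detectors built from three adjacent ommatidia in each row.
sliding = (N_H - 2) * N_V      # overlapping triplets (A, B, C), (B, C, D), ...
tiled = (N_H // 3) * N_V       # non-overlapping triplets

def field_average(detector_outputs):
    """Sum the detector outputs and divide by their count (final AVDM output)."""
    return sum(detector_outputs) / len(detector_outputs)

print(dphi_h, dphi_v, sliding, tiled)   # 10.0 5.0 900 324
```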

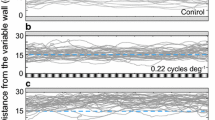

We simulated the OFF pathway of Drosophila’s visual neural circuits with the sinusoidal grating moving in the preferred direction. The normalized responses of AVDM over different velocities and spatial periods, compared with experimental results [14], are shown in Fig. 4. The response curves of AVDM generally agree with the experimental data. In particular, at a spatial period of 14\(^\circ \) the curve shows a notably lower response than at other spatial periods. This might be caused by the suppression of high temporal frequencies in T4/T5 cells [4], since the descending neurons are located downstream of the optomotor circuit. It is also consistent with Dyhr and Higgins’ study of spatial frequency tuning in the bumblebee Bombus impatiens, which indicates that high spatial frequencies affect speed estimation [15]. This will be examined in future work.

To obtain more general results, the spatial period of the grating and the angular velocity of the image motion were varied over wide ranges (Fig. 5). The response curves for all periods increase nearly monotonically, and the responses depend only weakly on the spatial period of the grating. This agrees with the responses of the descending neurons recorded by Ibbotson [10, 14], and is important for insects estimating flight speed or gauging the distance of foraging journeys in cluttered environments.

Comparison of AVDM and experimental recordings at different angular velocities. (a) The responses of AVDM for different spatial periods. (b) The responses of one type of descending neuron (DNIII\(_{4}\)) for different spatial periods, based on Ibbotson’s recordings [14].

Responses of two other models. (a) Riabinina’s model uses rad/s as the velocity metric, and the spatial frequencies are 10 \({\text {m}}^{-1}\) (solid), 20 \({\text {m}}^{-1}\) (dashed), 30 \({\text {m}}^{-1}\) (dotted) and 40 \({\text {m}}^{-1}\) (dot-dashed) [12]. (b) Cope’s model uses spikes to represent the model response and uses the method of undetermined coefficients to determine the two delays of the correlation system [13].

Although Riabinina’s model uses different velocity and spatial frequency metrics and Cope’s model uses spikes as its final output, the trends of the curves still show how the models perform, so we give their results here for reference (Fig. 6). In general, AVDM performs better than Riabinina’s model, whose response curves for 4 different spatial frequencies are separated from each other [12]. Cope’s model, which is based on the optomotor circuit, is more bio-plausible than Riabinina’s model. However, it performs well only at speeds around 100 deg/s, a range that the semilog axis emphasizes, whereas honeybees mainly maintain a constant angular velocity of 200–300 deg/s in open flight [16]. Another problem with Cope’s model is that, according to Ibbotson’s recordings of descending neurons [10, 14], the response to gratings of very high spatial frequency should be lower rather than remaining independent of spatial frequency. AVDM deals with this problem by using a band-pass temporal frequency filter based on experimental data [4]. As a result, AVDM produces a lower response at a spatial period of 14\(^\circ \) and a response largely independent of spatial period from 36\(^\circ \) to 72\(^\circ \) (Fig. 5).

3 Methods

All simulations were carried out in MATLAB (© The MathWorks, Inc.); the layout of the AVDM neural layers is given below.

3.1 Input Signals Simulation

The input signal is simulated as two-dimensional image frames of a sinusoidal grating moving across the visual field. AVDU1 processes every input frame, whereas AVDU2 samples only half of the frames. The spatial period \(\lambda \) (deg) of the grating and the moving speed V (deg/s) are treated as variables. This gives a temporal frequency of V/\(\lambda \) (Hz) and an angular frequency \(\omega = 2\pi V/\lambda \).
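As a worked example of these relations, take V = 180 deg/s and \(\lambda = 36^\circ \) (illustrative values): the temporal frequency is 5 Hz and \(\omega = 10\pi \) rad/s. The sketch also lists the frames each unit sees, assuming 1 ms per frame as in step (4) of Sect. 3.2 and reading "half of the frames" as every second frame; `grating_frequencies` is a hypothetical name.

```python
import math

def grating_frequencies(v_deg_s, period_deg):
    """Temporal frequency V/lambda (Hz) and angular frequency 2*pi*V/lambda (rad/s)."""
    f = v_deg_s / period_deg
    return f, 2 * math.pi * f

f, omega = grating_frequencies(180.0, 36.0)
print(f, round(omega, 3))      # 5.0 31.416

# Frame indices of a 10-frame clip at 1 ms per frame.
# Taking "half of the frames" as every second frame is an assumption.
frames = list(range(10))
avdu1_frames = frames          # AVDU1: all frames
avdu2_frames = frames[::2]     # AVDU2: [0, 2, 4, 6, 8]
```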

Consider a sinusoidal grating moving across the visual field of a detecting unit with three receptors A, B and C. Let \(I_{0}\) be the mean light intensity and m the modulation amplitude; then the signal in receptor A can be expressed as \(I_{0} + m \cdot sin(\omega t)\). Let \(\varDelta \phi \) denote the angular separation between neighbouring receptors; then the signal of receptor B is \(I_{0} + m \cdot sin(\omega (t-\varDelta \phi /V))\), and the signal of receptor C is \(I_{0} + m \cdot sin(\omega (t-2\varDelta \phi /V))\). The input signal of one eye can therefore be expressed as:

\[I(x, y, t) = I_{0} + m \cdot sin(\omega (t - x\varDelta \phi /V))\]

where (x, y) denotes the location of the ommatidium, with x counted in ommatidia from receptor A (x = 0).
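The receptor signals generalize to a whole row of ommatidia by adding a phase lag of \(\varDelta \phi /V\) per ommatidium. The sketch below generates one input frame under that reading; the 10° spacing (270° over 27 ommatidia), the intensity scale (\(I_{0} = m = 0.5\)) and the function names `grating_input` and `eye_frame` are assumptions for illustration.

```python
import math

def grating_input(x, t, v=180.0, period=36.0, dphi=10.0, i0=0.5, m=0.5):
    """Intensity at horizontal ommatidium x (x = 0 is receptor A) at time t (s):
    I0 + m * sin(w * (t - x * dphi / v)), with w = 2 * pi * v / period."""
    w = 2 * math.pi * v / period
    return i0 + m * math.sin(w * (t - x * dphi / v))

def eye_frame(t, n_h=27, n_v=36, **grating):
    """One input frame of n_v rows by n_h columns. For horizontal motion the row
    index y does not enter the signal, so every row is identical."""
    row = [grating_input(x, t, **grating) for x in range(n_h)]
    return [list(row) for _ in range(n_v)]

frame = eye_frame(0.1)
print(len(frame), len(frame[0]))   # 36 27
```

Receptor B reproduces receptor A’s signal \(\varDelta \phi /V\) seconds later, which is the delay the AVDU arms are built to exploit.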

3.2 AVDM Neural Layers

(1) Photoreceptor. The first layer of the AVDM neural network receives the input signals of light-intensity change to obtain the primary information about visual motion:

(2) ON & OFF Pathways. Following the neural structure of the Drosophila visual system, the luminance changes are separated into two pathways, with ON representing light increments and OFF representing light decrements:

(3) Delay and Correlation. The signals are delayed and correlated following the structure of the AVDU. Taking one AVDU as an example, let \(S_1, S_2, S_3\) denote the input signals of photoreceptors A (left), B (middle) and C (right), and let \(S_1^D, S_2^D\) denote the temporally delayed signals of A and B. Then we have:

\[S_1 \approx M \cdot cos(\omega t), \qquad S_1^D \approx M \cdot cos[\omega (t-\varDelta T)]\]

Similarly, \(S_2 \approx M \cdot cos[\omega (t-\varDelta \phi /V)]\), \(S_2^D \approx M \cdot cos[\omega (t-\varDelta \phi /V-\varDelta T)]\) and \(S_3 \approx M \cdot cos[\omega (t-2\varDelta \phi /V)]\), where \(\varDelta T\) is the temporal delay of the model.

According to the structure of the AVDU, the response of the detector can be expressed as \(\overline{(S_1^D \cdot S_2)/(S_2^D \cdot S_3)}\), where the bar denotes averaging over a time period to remove the fluctuation caused by the oscillatory input. In addition, we set a lower bound of 0.01 on the denominator to keep the output from becoming too high; this bound can also be interpreted as the tonic firing rate of neurons.

(4) Ratio and Average. With a temporal delay of 6 ms and two sampling rates of 1 ms per frame and 2 ms per frame, we obtain the responses of the AVDU for different angular velocities, spatial periods and sampling rates. According to our simulations, although the response curves for the two sampling rates differ in value, their shapes are very similar. Taking the ratio of the responses at the two sampling rates therefore largely removes the influence of spatial frequency. The outputs of the detectors, each composed of three neighbouring photoreceptors, are then summed and averaged over the whole visual field.

(5) Band-Pass Temporal Frequency Filter. We use recordings of the temporal tuning of Drosophila motion detectors to simulate the band-pass temporal frequency filter [4]. In Arenz et al.’s experiments, application of the octopamine agonist CDM (which mimics the transition from rest to flight) shifts the optimum of the temporal frequency tuning from 1 Hz to 5 Hz. We therefore model the filter as a bell-shaped response curve that peaks at 5 Hz on a logarithmic frequency axis. In fact, the complete HR model could serve as such a temporal frequency filter with little modification, since its response is a bell-shaped curve with a preferred temporal frequency.
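Steps (1)-(5) can be chained into a compact simulation. This is a sketch under several stated assumptions, not the authors’ MATLAB code: the photoreceptor is taken as a frame-to-frame difference and the OFF pathway as half-wave rectification of decrements (forms this section does not specify); intensities use a 0-255 scale; only one row of 27 ommatidia is simulated, since for horizontal motion all 36 rows receive the same signal; detectors are sliding triplets; the 6 ms delay is kept fixed in milliseconds (6 frames for AVDU1, 3 for AVDU2); the ratio is taken as AVDU1/AVDU2 per detector before averaging; and the band-pass filter is a Gaussian on a log10 frequency axis with a placeholder width. All function names are hypothetical, and the printed values illustrate the mechanics only; they are not the results reported in Sect. 2.

```python
import math

FLOOR = 0.01  # lower bound on the AVDU denominator, as stated in step (3)

def grating_row(t, n=27, v=180.0, period=36.0, dphi=10.0, i0=127.5, m=127.5):
    """One horizontal row of ommatidia at time t (s); 0-255 intensity scale assumed."""
    w = 2 * math.pi * v / period
    return [i0 + m * math.sin(w * (t - x * dphi / v)) for x in range(n)]

def off_pathway(frames):
    """Steps (1)-(2), assumed forms: the photoreceptor takes the frame-to-frame
    intensity change and the OFF pathway keeps only decrements (half-wave rectified)."""
    return [[max(p - c, 0.0) for p, c in zip(prev, cur)]
            for prev, cur in zip(frames, frames[1:])]

def avdu_row(sig, delay):
    """Step (3): time-averaged (S1^D * S2) / max(S2^D * S3, FLOOR) for every
    triplet of neighbouring ommatidia (x, x + 1, x + 2); delay is in frames."""
    n_det = len(sig[0]) - 2
    totals = [0.0] * n_det
    steps = range(delay, len(sig))
    for k in steps:
        now, old = sig[k], sig[k - delay]
        for x in range(n_det):
            num = old[x] * now[x + 1]                   # delayed left x undelayed middle
            den = max(old[x + 1] * now[x + 2], FLOOR)   # delayed middle x undelayed right
            totals[x] += num / den
    return [total / len(steps) for total in totals]

def avdm(v, period, duration_ms=1200, delay_ms=6):
    """Step (4): ratio of AVDU1 (1 ms per frame) to AVDU2 (2 ms per frame),
    averaged over all detectors in the row."""
    frames = [grating_row(k / 1000.0, v=v, period=period) for k in range(duration_ms)]
    avdu1 = avdu_row(off_pathway(frames), delay_ms)             # every frame
    avdu2 = avdu_row(off_pathway(frames[::2]), delay_ms // 2)   # every second frame
    ratios = [a1 / a2 for a1, a2 in zip(avdu1, avdu2)]
    return sum(ratios) / len(ratios)

def bandpass_gain(f_hz, f_opt=5.0, width_decades=0.5):
    """Step (5): bell-shaped gain on a log-frequency axis, peaking at f_opt.
    The Gaussian shape and its width are placeholders, not a fit to [4]."""
    return math.exp(-0.5 * (math.log10(f_hz / f_opt) / width_decades) ** 2)

for v in (90.0, 180.0, 360.0):
    print(v, round(avdm(v, 36.0), 3))
```

Swapping `max(p - c, 0.0)` for `max(c - p, 0.0)` in `off_pathway` gives the corresponding ON-pathway variant under the same assumptions.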

4 Discussion

We proposed a bio-plausible model, the angular velocity detecting model (AVDM), for estimating image motion velocity using the latest discoveries about the neural circuits of the Drosophila visual system. We presented a new structure, the AVDU, as part of the model to implement both preferred direction motion enhancement and non-preferred direction motion suppression, which together give the stronger directional selectivity found in Drosophila’s neural circuits. We use the ratio of two AVDUs with different sampling rates to give spatial-frequency-independent responses for estimating angular velocity. In addition, integrating the output of the AVDM could form the basis of a visual odometer. This also provides a possible explanation of how visual motion detection circuits connect to the descending neurons in the ventral nerve cord.

There are two reasons for using the ratio of two AVDUs with different sampling rates. First, it can be realized naturally in neural circuits, since one AVDU only needs to process part of the visual information, while the structure, and even the delay, of the two AVDUs are the same. This is simpler than using the ratio of two HR detectors with different delays, as Cope’s model does [13], because passing signals with two different delays would require either two neurotransmitters in one circuit or two separate circuits. Second, the response of an individual AVDU depends strongly on the spatial frequency of the grating, and, according to our simulations, the ratio of responses at different sampling rates removes the influence of spatial frequency.

Here we simulate only the ON pathway of the visual system, with T4 cells; the OFF pathway, which handles brightness decrements, is similar. Furthermore, detectors for forward, upward and downward motion can be constructed with the same structure, since these directions can be processed in parallel.

References

[1] Fischbach, K.F., Dittrich, A.P.M.: The optic lobe of Drosophila melanogaster. I. A Golgi analysis of wild-type structure. Cell Tissue Res. 258(3), 441–475 (1989). https://doi.org/10.1007/BF00218858

[2] Joesch, M., Weber, F., Raghu, S.V., Reiff, D.F., Borst, A.: ON and OFF pathways in Drosophila motion vision. Nature 468, 300–304 (2010). https://doi.org/10.1038/nature09545

[3] Takemura, S.Y., Nern, A., Chklovskii, D.B., Scheffer, L.K., Rubin, G.M., Meinertzhagen, I.A.: The comprehensive connectome of a neural substrate for ‘ON’ motion detection in Drosophila. eLife 6, e24394 (2017). https://doi.org/10.7554/eLife.24394

[4] Arenz, A., Drews, M.S., Richter, F.G., Ammer, G., Borst, A.: The temporal tuning of the Drosophila motion detectors is determined by the dynamics of their input elements. Curr. Biol. 27, 929–944 (2017). https://doi.org/10.1016/j.cub.2017.01.051

[5] Haag, J., Mishra, A., Borst, A.: A common directional tuning mechanism of Drosophila motion-sensing neurons in the ON and in the OFF pathway. eLife 6, e29044 (2017). https://doi.org/10.7554/eLife.29044

[6] Hassenstein, B., Reichardt, W.: Systemtheoretische analyse der zeit-, reihenfolgen- und vorzeichenauswertung bei der bewegungsperzeption des rüsselkäfers chlorophanus. Zeitschrift für Naturforschung B 11(9–10), 513–524 (1956). https://doi.org/10.1515/znb-1956-9-1004

[7] Barlow, H.B., Levick, W.R.: The mechanism of directionally selective units in rabbit’s retina. J. Physiol. 178, 477–504 (1965). https://doi.org/10.1113/jphysiol.1965.sp007638

[8] Behnia, R., Clark, D.A., Carter, A.G., Clandinin, T.R., Desplan, C.: Processing properties of ON and OFF pathways for Drosophila motion detection. Nature 512, 427–430 (2014). https://doi.org/10.1038/nature13427

[9] Haag, J., Arenz, A., Serbe, E., Gabbiani, F., Borst, A.: Complementary mechanisms create direction selectivity in the fly. eLife 5, e17421 (2016). https://doi.org/10.7554/eLife.17421

[10] Ibbotson, M.R.: Evidence for velocity-tuned motion-sensitive descending neurons in the honeybee. Proc. Biol. Sci. 268(1482), 2195 (2001). https://doi.org/10.1098/rspb.2001.1770

[11] Srinivasan, M.V., Lehrer, M., Kirchner, W.H., Zhang, S.W.: Range perception through apparent image speed in freely flying honeybees. Vis. Neurosci. 6(5), 519–535 (1991). https://www.ncbi.nlm.nih.gov/pubmed/2069903

[12] Riabinina, O., Philippides, A.O.: A model of visual detection of angular speed for bees. J. Theor. Biol. 257(1), 61–72 (2009). https://doi.org/10.1016/j.jtbi.2008.11.002

[13] Cope, A., Sabo, C., Gurney, K.N., Vasilaki, E., Marshall, J.A.R.: A model for an angular velocity-tuned motion detector accounting for deviations in the corridor-centering response of the Bee. PLoS Comput. Biol. 12(5), e1004887 (2016). https://doi.org/10.1371/journal.pcbi.1004887

[14] Ibbotson, M.R., Hung, Y.S., Meffin, H., Boeddeker, N., Srinivasan, M.V.: Neural basis of forward flight control and landing in honeybees. Sci. Rep. 7(1), 14591 (2017). https://doi.org/10.1038/s41598-017-14954-0

[15] Dyhr, J.P., Higgins, C.M.: The spatial frequency tuning of optic-flow-dependent behaviors in the bumblebee Bombus impatiens. J. Exp. Biol. 213(10), 1643–1650 (2010). https://doi.org/10.1242/jeb.041426

[16] Baird, E., Srinivasan, M.V., Zhang, S., Cowling, A.: Visual control of flight speed in honeybees. J. Exp. Biol. 208(20), 3895–3905 (2005). https://doi.org/10.1242/jeb.01818

Acknowledgments

This research is supported by EU FP7-IRSES Project LIVCODE (295151), HAZCEPT (318907) and HORIZON project STEP2DYNA (691154).


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, H., Peng, J., Baxter, P., Zhang, C., Wang, Z., Yue, S. (2018). A Model for Detection of Angular Velocity of Image Motion Based on the Temporal Tuning of the Drosophila. In: Kůrková, V., Manolopoulos, Y., Hammer, B., Iliadis, L., Maglogiannis, I. (eds) Artificial Neural Networks and Machine Learning – ICANN 2018. ICANN 2018. Lecture Notes in Computer Science, vol 11140. Springer, Cham. https://doi.org/10.1007/978-3-030-01421-6_4


DOI: https://doi.org/10.1007/978-3-030-01421-6_4


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01420-9

Online ISBN: 978-3-030-01421-6

eBook Packages: Computer Science, Computer Science (R0)