Abstract

MOOCs have attracted a large number of learners with different educational backgrounds from all over the world. Despite their increasing popularity, MOOCs still suffer from high drop-out rates. One important reason may be the difficulty of understanding learning demand and user interests. To help users find the most suitable courses, personalized course recommendation has become a hot research topic in the e-learning and data mining communities. One of the keys to successful personalized course recommendation is a good user modeling method. Previous work in course recommendation often focuses on user modeling methods that learn latent user interests from historical learning data. Recently, interactive course recommendation has become increasingly popular. In this paradigm, recommender systems can directly query user interests through survey tables or questionnaires, and thus the learned interests may be more accurate. In this paper, we study the user interest acquisition problem based on the interactive course recommendation framework (ICRF). Under this framework, we systematically discuss different settings for querying user interests. To reduce the performance-cost score, we propose the ICRF user interest acquisition algorithm, which combines representative sampling with an interest propagation algorithm to acquire user interests in a cost-effective way. With extensive experiments on real-world MOOC course enrollment datasets, we empirically demonstrate that our selective acquisition strategy is very effective: it reduces the performance-cost score by 30.25% compared to the traditional aggressive acquisition strategy.

1 Introduction

Since 2012, MOOCs (massive open online courses) have attracted a large number of learners all over the world. Every day, millions of people with different educational and demographic backgrounds study free online courses on popular MOOC platforms, such as Coursera and edX. Compared with traditional university-level education, MOOCs dramatically speed up the delivery of college courses to the general public. From the view of higher education, MOOCs may have the potential to greatly reduce the cost of higher education in the near future.

However, despite their increasing popularity, MOOCs still suffer from high drop-out rates (or low completion rates). According to the annual report of Coursera, the drop-out rate in Coursera’s start-up stage once reached 91% [1]. Such a high drop-out rate not only wastes a huge amount of human learning effort but also creates negative opinions of the MOOC industry. Why do the vast majority of students fail to finish free online courses? One reason may be the difficulty of understanding learning demand and user interests. On the one hand, a good model of user interests can help identify what kind of courses students may like. On the other hand, a good model of learning motivation can help estimate how likely a student is to finish a course and thus avoid unnecessary drop-outs. However, due to the limitations of current MOOC platforms, it is usually difficult for users to explicitly reveal their true interests and motivation.

To tackle this problem, personalized course recommendation has become a hot research topic in the e-learning and data mining communities. One of the key components of classic personalized course recommendation is user modeling, which tries to learn latent user interests from historical behavior data so that the decision module of the recommender system, i.e., a machine learning system, can estimate the likelihood of course preferences more accurately. Many approaches have been proposed, such as course enrollment pattern analysis [4, 13], profile text mining [5] and resource visiting log analysis [6]. These methods have shown good recommendation performance. However, they are all passive: they do not actively query users about their interests but only collect user behavior data to learn latent user interests. Because such data are sparse and noisy, the resulting user models may not be satisfactory.

Recently, interactive course recommendation approaches have become increasingly popular. Mainstream MOOC platforms, such as Coursera, have adopted such techniques to help students choose online courses (see Fig. 1). In these systems, before a user selects courses, the platform shows a list of survey tables (or questionnaires) that let her choose the topics she likes most. After the user submits the survey tables, the recommender system integrates these explicit interest data with user demographics data for better recommendation. For example, in Fig. 1, after a user selects the topics “Data Science” and “Machine Learning”, the Coursera platform recommends related courses, such as Machine Learning Foundations and Probabilistic Machine Learning. This recommendation mechanism has been shown to produce more accurate course recommendations. However, very few research works have systematically studied the effect of human-system interaction in user interest acquisition.

In this paper, we study the user interest acquisition problem based on the interactive course recommendation framework (ICRF). We first give a formal model of this framework. After that, we systematically discuss different settings for integrating user interests into the recommender system. We propose the ICRF user interest acquisition algorithm, which selectively acquires interests from a small group of representative users and propagates the acquired interests to all other users. With extensive experiments on real-world MOOC course enrollment datasets, we find that selectively requesting users to state their interests before course selection does help improve the recommendation performance. On average, our strategy reduces the performance-cost score by 30.25% compared to the traditional aggressive acquisition strategy.

The rest of this paper is organized as follows. Section 2 reviews previous work in the field of course recommendation with a focus on user modeling methods. Section 3 describes the model of ICRF and proposes the ICRF user interest acquisition algorithm. Section 4 discusses the dataset, experimental setting and results. Section 5 concludes this paper and presents future work.

2 Related Works

In this section, we review the recommender system literature in the course recommendation domain with a focus on user modeling methods. Different from movie or music recommendation, the course recommendation domain usually has complex context [1, 2, 7, 10].

Traditional data mining methods were the first to be explored for user modeling in course recommendation. Aher et al. [4] use k-means clustering and the Apriori association rule algorithm. Zhang et al. [13] develop a course recommendation system that implements a distributed Apriori algorithm on computer clusters. These user modeling methods can reveal students’ course enrollment patterns and show reasonable recommendation performance. However, they rely on analyzing historical course enrollment behavior. Thus, traditional rule-based methods may suffer from the cold-start problem when serving new users without any historical behavior [3].

To tackle the cold-start problem, several works explore contextual information, such as user profiles, course prerequisites and other auxiliary information. Piao et al. [5] propose a similarity-based user modeling method for MOOC course recommendation based on mining profile keywords such as job titles and skills. Jing et al. [6] propose a hybrid user modeling framework that mixes three item-based collaborative filtering scores based on user access behavior, user demographics and course prerequisites. Yu et al. [8] propose a method to mine discussion comments for user recommendation. Salehi et al. [11] propose a method combining sequential pattern mining and attribute-based collaborative filtering. Social network based methods are also discussed in Zhang et al. [12]. These methods can be viewed as extensions of neighborhood-based collaborative filtering. They are all based on complex similarity metrics learned from contextual data. However, a major limitation of these methods is the lack of a differentiable model that can be mathematically optimized to improve generalization ability.

Recently, latent factor models have become a popular research direction in the field of course recommendation. Due to their solid mathematical foundation and their ability to model latent contextual behavior, these methods have shown better performance than item-based collaborative filtering methods. For example, Elbadrawy et al. [9] propose an ensemble of matrix factorization models, each of which learns latent factors of students and courses at a different level of granularity. Sahebi et al. [14] propose a tensor factorization model for quiz recommendation. Their method can model the temporal relationship between student knowledge and skill improvement.

Due to the effectiveness of latent factor models in course recommendation, we also use this type of recommendation method in this paper. More specifically, we use the popular factorization machine [15], which learns the second-order interactions between features. This is especially useful for datasets with extreme sparsity, such as course recommendation datasets.

3 Interactive Course Recommendation Framework

In this section, we first describe the general model of the interactive course recommendation framework (ICRF). Second, we discuss various settings of user interest acquisition. Last, we propose the ICRF user interest acquisition algorithm to acquire user interest in a cost-effective way.

3.1 Framework Modeling

In this paper, we adopt the popular rating based recommendation approach. The goal of rating based recommendation is to learn a regression function that can accurately estimate user ratings on different courses.

Different from traditional rating-based recommendation, in ICRF we explicitly model user interests. Specifically, we use U to denote the set of all users, C to denote the set of all courses, I to denote the domain of user interests and R to denote the rating interval (usually between zero and one; see Note 3). The framework can be defined as

\[ f:U \times C \times I \to R \]

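We use the factorization machine (FM) [15] as the basic regression function. The regression function of FM of degree two in ICRF is defined as

\[ \hat{y}\left( \varvec{x} \right) = w_{0} + \sum\limits_{i = 1}^{n} {w_{i} x_{i} } + \sum\limits_{i = 1}^{n} {\sum\limits_{j = i + 1}^{n} {\left\langle {\varvec{v}_{i} ,\varvec{v}_{j} } \right\rangle x_{i} x_{j} } } \]

where \( w_{0} \), w and V are the model parameters, n is the number of features and x is the feature vector that concatenates u, c and Iu as \( \left[ {\varvec{u}^{T} ,\varvec{c}^{T} ,\varvec{I}_{\varvec{u}}^{T} } \right] \). The most important part of FM is the inner product of \( \varvec{v}_{i} \) and \( \varvec{v}_{j} \), which weights the product of the i-th feature and the j-th feature. This has been shown to allow high quality estimation of pairwise feature interactions under sparsity [15].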
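As an illustration, the degree-two FM score can be sketched in plain Python. This is a minimal sketch rather than the libFM implementation: the function names and the toy parameter values are hypothetical, and the pairwise term uses the linear-time reformulation from [15], checked here against a direct enumeration of feature pairs.

```python
from typing import List


def fm_predict(x: List[float], w0: float, w: List[float], V: List[List[float]]) -> float:
    """Degree-2 FM score; V[i] is the latent vector v_i of feature i."""
    n, k = len(x), len(V[0])
    score = w0 + sum(w[i] * x[i] for i in range(n))
    for f in range(k):
        # sum_{i<j} v_if v_jf x_i x_j = 0.5 * ((sum_i v_if x_i)^2 - sum_i (v_if x_i)^2)
        s = sum(V[i][f] * x[i] for i in range(n))
        s_sq = sum((V[i][f] * x[i]) ** 2 for i in range(n))
        score += 0.5 * (s * s - s_sq)
    return score


def fm_predict_naive(x: List[float], w0: float, w: List[float], V: List[List[float]]) -> float:
    """Reference version that enumerates every feature pair i < j."""
    n = len(x)
    score = w0 + sum(w[i] * x[i] for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            dot = sum(a * b for a, b in zip(V[i], V[j]))
            score += dot * x[i] * x[j]
    return score


# Toy feature vector x = [u, c, I_u]: one-hot user (2 users),
# one-hot course (2 courses) and a 2-topic interest vector (all values hypothetical).
x = [1.0, 0.0, 0.0, 1.0, 0.7, 0.3]
w0 = 0.1
w = [0.2, -0.1, 0.05, 0.3, 0.4, -0.2]
V = [[0.1, 0.2], [0.0, -0.1], [0.3, 0.1], [-0.2, 0.4], [0.5, 0.1], [0.2, -0.3]]
score = fm_predict(x, w0, w, V)
```

Because the interest entries of x interact with both the user and the course entries through the latent vectors, the acquired interests can influence the estimated rating even for sparsely observed user-course pairs.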

In a typical ICRF application scenario, the recommendation process usually contains four steps (as shown in Fig. 2). First, a user tags a set of topic options in the interest survey table. The survey tables usually contain many topic options, which include, for example, academic topics, habit topics and professional career topics. Second, the system acquires interest data from the survey table. Third, the system conducts rating estimation on the triplet (u, c, Iu) for any \( c \in C \). Finally, the courses with the highest estimated ratings are shown to the user as a ranked list for further selection.

The most important part of ICRF is the second step (user interest acquisition). To represent user interests I in a computational way, we assume that any user's interests can be modeled as a k-dimensional random vector that follows a multivariate normal distribution

\[ \varvec{I}_{u} = \left( {I_{u}^{\left( 1 \right)} , \ldots ,I_{u}^{\left( k \right)} } \right)^{T} \sim \mathcal{N}\left( {\varvec{\mu },\varvec{\Sigma }} \right) \]

where \( I_{u}^{\left( i \right)} \) represents the degree of likelihood of user interest in the i-th application-specific topic and \( \varvec{\mu } \) and \( \varvec{\Sigma } \) are the parameters.

3.2 User Interest Acquisition

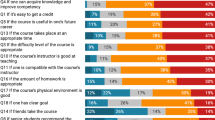

Based on the above discussion, many interesting research questions can be discussed in ICRF. In this paper, we systematically study the user interest acquisition problem by asking how the user and the recommender system interact with each other. In real-world ICRF applications, we can find four settings of user interest acquisition (see Table 1).

From the view of the recommender system, there are two types of acquisition strategies: aggressive acquisition and selective acquisition.

Aggressive acquisition. The recommender system sends survey tables to every user. By doing so, the recommender system can build a user interest database for all users. Therefore, with the supplementary user interest information, the recommender system can model users better.

Selective acquisition. From the view of cost-sensitive learning, acquiring user interests is not free. Answering professional or privacy-related questions takes users’ time and may even confuse them. To reduce the acquisition cost, it may be more realistic to acquire user interests only from a subset of representative users. Other users’ interests can be deduced from these representative users. Thus, a significant amount of acquisition cost can be saved.

On the other side, from the view of users, there are two types of answering strategies: cooperative answering and reluctant answering.

Cooperative answering. When users receive survey tables, either through emails or a web portal, they are always willing to provide their course preferences and interests. For recommender systems, this means that users cooperate in providing personal interest data. Thus, the cost of sending survey tables is not wasted.

Reluctant answering. A user may be reluctant to fill in survey tables, either because she is too busy or because she is uncertain about the questions. In such a case, the cost that the recommender system spends on sending survey tables is wasted.

3.3 ICRF User Interest Acquisition Algorithm

Understanding the advantages and limitations of different settings of user interest acquisition is important for ICRF. In this paper, we propose the ICRF user interest acquisition algorithm (see Algorithm 1 and Note 4) to empirically study this problem. As the SR setting (selective acquisition + reluctant answering) is the most complex, we mainly discuss the ICRF algorithm for SR in this section. The other settings can be easily derived from SR. The algorithm consists of an initialization part, a selective acquisition part and an interest propagation part.

In the initialization part, as it is very costly to collect true user interests online (step 1 and step 2 in Fig. 2), we have to resort to simulation. Specifically, we simulate the interest acquisition procedure by designing an oracle component (see Note 5; i.e., an oracle in the active learning literature [18]) that can generate the true user interests. For each user, we assume that her interests can be estimated as the average topic distribution (learned by the LDA model [20]) over all her enrolled courses (step 1). Second, we define the probabilistic function e as a reluctant trigger that mimics the reluctant behavior (i.e., a user answers only when \( e\left( u \right) > h \)) for the AR (aggressive acquisition + reluctant answering) and SR (selective acquisition + reluctant answering) settings (step 2).
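The initialization part can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used in our experiments: the per-course topic distributions are taken as given (in the paper they come from LDA), the helper names are hypothetical, and the reluctant trigger e is assumed to draw one uniform value in [0, 1) per user, since its exact distribution is not specified in the text.

```python
import random
from typing import Callable, Dict, List, Optional


def oracle_interest(enrolled: List[str], course_topics: Dict[str, List[float]]) -> List[float]:
    """O(u): average topic distribution over the user's enrolled courses.

    Assumes the user has at least one enrolled course.
    """
    k = len(course_topics[enrolled[0]])
    total = [0.0] * k
    for course in enrolled:
        for t, p in enumerate(course_topics[course]):
            total[t] += p
    return [v / len(enrolled) for v in total]


def make_reluctant_trigger(seed: int = 0) -> Callable[[str], float]:
    """Return e(u); ASSUMPTION: one uniform draw in [0, 1) per user, cached."""
    rng = random.Random(seed)
    cache: Dict[str, float] = {}

    def e(user: str) -> float:
        if user not in cache:
            cache[user] = rng.random()
        return cache[user]

    return e


def query_user(user: str, enrolled: List[str], course_topics: Dict[str, List[float]],
               e: Callable[[str], float], h: float) -> Optional[List[float]]:
    """Return the oracle interests if the user answers (e(u) > h), else None."""
    if e(user) > h:
        return oracle_interest(enrolled, course_topics)
    return None


# Hypothetical two-topic distributions for two courses.
course_topics = {"c1": [0.8, 0.2], "c2": [0.4, 0.6]}
e = make_reluctant_trigger(seed=0)
answer = query_user("u1", ["c1", "c2"], course_topics, e, h=0.5)
```

A higher threshold h makes more users decline, so the returned value is None more often while the query cost is still paid.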

In the selective acquisition part, we first adopt the representative sampling strategy to build the selective user pool \( U^{\left( s \right)} \) (step 3). Specifically, we use k-means clustering to group users into different sets and choose the users that are closest to the cluster centroids as representative users. A recommender system with the selective acquisition strategy queries interest data only for users in \( U^{\left( s \right)} \). Second, for each user, we generate her interests as either \( O\left( u \right) \) or \( \emptyset \), depending on the in-pool condition and the reluctant trigger condition (step 5). We also add an acquisition cost to the total cost V (step 6). The acquired user interests Iu are added to a new training set \( \tilde{D}^{T} \) (step 7).
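Representative sampling can be sketched with a small pure-Python k-means. This is an illustrative sketch only: the function names are hypothetical, the initialization (random sample of the points) and the fixed iteration count are our assumptions, and a production setup would more likely rely on an existing clustering library.

```python
import random
from typing import List, Sequence


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Sequence[float]], k: int, iters: int = 20, seed: int = 0) -> List[List[float]]:
    """Plain Lloyd's algorithm; returns the k centroids."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        clusters: List[List[Sequence[float]]] = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            clusters[nearest].append(p)
        for c, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster is empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def representative_users(points: List[Sequence[float]], k: int, seed: int = 0) -> List[int]:
    """Indices of the users closest to each centroid (the pool U^(s))."""
    centroids = kmeans(points, k, seed=seed)
    pool = set()
    for c in centroids:
        pool.add(min(range(len(points)), key=lambda i: sq_dist(points[i], c)))
    return sorted(pool)


# Six hypothetical users described by two numeric features, forming two groups.
users = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
pool = representative_users(users, k=2)
```

Only the users in the returned pool would be sent a survey table; every query, answered or not, would add to the total cost.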

In the interest propagation part (from step 8 to step 11), we propose a simple interest propagation algorithm that iteratively updates the interests of users who are not queried (i.e., \( u{ \in }U - U^{\left( s \right)} \)). The idea is borrowed from the well-known PageRank algorithm [19], which has been applied to many domains, such as keyword extraction [16]. The interest propagation algorithm relies on the user nearest neighbor graph. We form the graph edges by connecting each pair of users who are among each other’s k nearest neighbors (measured by Euclidean distance on demographics data). The damping factor d plays the role of absorbing interests from other random users.
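A sketch of the propagation step is given below. The exact update rule of Algorithm 1 is not reproduced here, so the rule in `propagate` is an assumption modeled on PageRank: a not-queried user takes d times the mean interest of her graph neighbors plus (1 - d) times the mean of all acquired interests, while queried users keep their acquired interests. All function names are hypothetical.

```python
from typing import Dict, List, Sequence, Set


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mutual_knn_graph(features: List[Sequence[float]], k: int) -> Dict[int, Set[int]]:
    """Connect two users only if each is among the other's k nearest neighbors."""
    n = len(features)
    knn = []
    for i in range(n):
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: sq_dist(features[i], features[j]))
        knn.append(set(others[:k]))
    return {i: {j for j in knn[i] if i in knn[j]} for i in range(n)}


def propagate(graph: Dict[int, Set[int]], acquired: Dict[int, List[float]],
              d: float = 0.85, iters: int = 30) -> Dict[int, List[float]]:
    """ASSUMED update: I_u <- (1 - d) * base + d * mean of neighbor interests."""
    dim = len(next(iter(acquired.values())))
    base = [sum(v[t] for v in acquired.values()) / len(acquired) for t in range(dim)]
    interests = {u: list(acquired.get(u, base)) for u in graph}
    for _ in range(iters):
        updated = {}
        for u, nbrs in graph.items():
            if u in acquired or not nbrs:
                updated[u] = interests[u]  # queried or isolated users stay fixed
                continue
            mean = [sum(interests[v][t] for v in nbrs) / len(nbrs) for t in range(dim)]
            updated[u] = [(1 - d) * base[t] + d * mean[t] for t in range(dim)]
        interests = updated
    return interests


# Six hypothetical users on a line; users 0 and 3 were queried.
features = [[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]]
graph = mutual_knn_graph(features, k=2)
acquired = {0: [1.0, 0.0], 3: [0.0, 1.0]}
interests = propagate(graph, acquired)
```

In this toy example the not-queried users end up close to the interests of the representative user in their own neighborhood, pulled slightly toward the global mean by the (1 - d) term.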

4 Experiments

In this section, we first describe the datasets used in our experiments. Second, we present the experimental configuration. Third, we conduct experiments to study the following research questions:

- RQ1. Is the selective acquisition strategy better than the aggressive acquisition strategy in terms of the performance-cost score?

- RQ2. How does the interest propagation method improve the performance-cost score?

- RQ3. Is the reluctant answering behavior harmful?

4.1 Dataset

The dataset used in this work is provided by a MOOC platform deployed at our university (see Note 6). As there are usually no explicit rating data for MOOC courses, we use video watching progress as pseudo ratings. Generally speaking, the higher the rating, the more likely a student is to finish all videos. Thus, the goal of course recommendation becomes recommending courses whose videos students are likely to finish.

This dataset contains 319,408 course enrollment events spanning from September 2014 to April 2015. During this period, 93,063 students enrolled in 79 MOOC courses. For each student and each course, the dataset provides categorical features and text features (see Table 2). For example, an undergraduate student in the College of Computer Science can be represented as a sparse 0/1 feature vector in which only the entries for “Undergraduate”, “Student” and “College of Computer Science” are one. For each course, the dataset also provides the title, introduction and syllabus text, which can be used to learn a topic distribution.
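The 0/1 encoding of categorical features described above can be illustrated with a short sketch. The vocabulary below is hypothetical except for the three values named in the text, and the helper names are ours.

```python
from typing import Dict, Iterable, List


def build_index(values: Iterable[str]) -> Dict[str, int]:
    """Assign one vector position to every distinct categorical value."""
    return {value: position for position, value in enumerate(sorted(set(values)))}


def encode(active: Iterable[str], index: Dict[str, int]) -> List[int]:
    """Return a 0/1 vector with ones at the positions of the active values."""
    vector = [0] * len(index)
    for value in active:
        vector[index[value]] = 1
    return vector


# "Graduate", "Employee" and "College of Mathematics" are made-up extra values.
vocabulary = ["Undergraduate", "Graduate", "Student", "Employee",
              "College of Computer Science", "College of Mathematics"]
index = build_index(vocabulary)
student = encode(["Undergraduate", "Student", "College of Computer Science"], index)
```

With many categorical values the resulting vectors are very sparse, which is the regime in which factorization machines are intended to work well.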

In our experiments, we split the full dataset into six groups using a time window of three months (see Table 3). Specifically, in each time window, the first two months of data are used as the training set and the remaining month is used as the testing set. Thus, in total, six groups of datasets are used in our experiments.
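One way to obtain six three-month windows from the eight months between September 2014 and April 2015 is to slide the window by one month at a time. The sketch below makes that stride assumption explicit; Table 3 remains the authoritative description of the windows, and the function names are hypothetical.

```python
from typing import List, Tuple

Month = Tuple[int, int]  # (year, month)


def month_range(start: Month, end: Month) -> List[Month]:
    """All months from start to end, inclusive."""
    months = []
    year, month = start
    while (year, month) <= end:
        months.append((year, month))
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
    return months


def sliding_splits(months: List[Month], window: int = 3, train_len: int = 2):
    """ASSUMED stride of one month: each window gives (train months, test months)."""
    splits = []
    for start in range(len(months) - window + 1):
        chunk = months[start:start + window]
        splits.append((chunk[:train_len], chunk[train_len:]))
    return splits


months = month_range((2014, 9), (2015, 4))
splits = sliding_splits(months)
```

Under this assumption the first group trains on September and October 2014 and tests on November 2014, and the last group tests on April 2015.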

4.2 Configuration

Experimental Protocols. For each group of datasets, we use the ICRF user interest acquisition algorithm to generate explicit user interests for users in both the training and testing sets. For the selective acquisition strategy, we set the number of clusters in the k-means algorithm to 500. The parameter k used to build the nearest neighbor graph is set to 100. Following [16], we set the damping factor d in the interest propagation algorithm to 0.85. Each time the oracle queries a user, we increase the total cost by one.

We use the well-known libFM library [17] as the implementation of FM. Stochastic gradient descent (SGD) is used to train the FM regression functions on the training set. In our pilot experiments, we find that setting the learning rate to 0.001 and the regularization parameter to 0.0001 for SGD gives reasonable performance. Thus, we use these hyper-parameters to train FM. The number of training iterations is limited to 50.
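To make the training procedure concrete, the sketch below runs plain SGD on a degree-two FM with squared loss, using the learning rate, regularization parameter and iteration count quoted above. It is not libFM: the latent dimension k, the Gaussian initialization with standard deviation 0.1, the unregularized bias and the toy data are all our assumptions.

```python
import random
from typing import List, Tuple

Sample = Tuple[List[float], float]  # (feature vector x, pseudo rating y)


def predict(x: List[float], w0: float, w: List[float], V: List[List[float]]) -> float:
    n, k = len(x), len(V[0])
    y = w0 + sum(w[i] * x[i] for i in range(n))
    for f in range(k):
        s = sum(V[i][f] * x[i] for i in range(n))
        s_sq = sum((V[i][f] * x[i]) ** 2 for i in range(n))
        y += 0.5 * (s * s - s_sq)
    return y


def rmse(data: List[Sample], w0: float, w: List[float], V: List[List[float]]) -> float:
    return (sum((predict(x, w0, w, V) - y) ** 2 for x, y in data) / len(data)) ** 0.5


def train_sgd(data: List[Sample], n: int, k: int = 4, lr: float = 0.001,
              reg: float = 0.0001, iters: int = 50, seed: int = 0):
    """SGD on 0.5 * (y_hat - y)^2 with L2 regularization on w and V."""
    rng = random.Random(seed)
    w0, w = 0.0, [0.0] * n
    V = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n)]
    for _ in range(iters):
        for x, y in data:
            err = predict(x, w0, w, V) - y
            w0 -= lr * err  # bias left unregularized (assumption)
            for i in range(n):
                if x[i] != 0.0:
                    w[i] -= lr * (err * x[i] + reg * w[i])
            for f in range(k):
                s = sum(V[j][f] * x[j] for j in range(n))
                # d y_hat / d v_if = x_i * (s - v_if * x_i)
                grads = [err * x[i] * (s - V[i][f] * x[i]) for i in range(n)]
                for i in range(n):
                    if x[i] != 0.0:
                        V[i][f] -= lr * (grads[i] + reg * V[i][f])
    return w0, w, V


# Toy data: one-hot user (2 users) + one-hot course (2 courses), hypothetical ratings.
data = [([1.0, 0.0, 1.0, 0.0], 0.9), ([1.0, 0.0, 0.0, 1.0], 0.2),
        ([0.0, 1.0, 1.0, 0.0], 0.7), ([0.0, 1.0, 0.0, 1.0], 0.4)]
params = train_sgd(data, n=4)
train_rmse = rmse(data, *params)
```

With such a small learning rate, 50 passes move the parameters only modestly, which is why the iteration budget and the learning rate have to be tuned together.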

To evaluate each strategy in a cost-sensitive way, we compare the performance-cost score (PCS) of different strategies. Intuitively, a good user interest acquisition strategy should have not only good recommendation performance (e.g., a low RMSE score) but also a low acquisition cost. We therefore define PCS as a new evaluation metric that combines RMSE and acquisition cost; the lower the PCS score, the better the strategy performs.

Implementation of Other Settings. We summarize the differences between the four settings in Table 4. By default, the ICRF algorithm implements the SR setting. The ICRF algorithm can be easily modified to implement the other settings by removing the components that are not used.

4.3 Aggressive Acquisition VS Selective Acquisition (RQ1)

Table 5 compares the PCS scores of AC (aggressive acquisition + cooperative answering) and SC (selective acquisition + cooperative answering). We can see that on all six datasets, SC outperforms AC significantly. On average, the SC strategy reduces the PCS score by 30.25% compared to AC. This means that in real-world interactive course recommendation applications, the selective acquisition strategy, which acquires interests from representative users and propagates them to other similar users, is much better than the aggressive acquisition strategy, which blindly acquires interests from all users.

4.4 Effect of Interest Propagation (RQ2)

The selective acquisition strategy relies on the interest propagation (IP) algorithm to propagate interests to users not in the selected pool. How does SC perform without IP? To study this issue, we compare SC with two alternatives. For each user not in the selected pool, the SC-IP strategy assigns no interests, while the SC-IP+Center strategy assigns the interests of the center of the cluster to which the user belongs.

From Table 6, we can see that on all six datasets the SC strategy outperforms the other two variants. This demonstrates that propagating interests to similar users is important in the ICRF user interest acquisition algorithm.

4.5 Is Reluctant Answering Harmful (RQ3)

When users refuse to answer survey tables, the cost that the recommender system spends on querying them is wasted. How harmful is such reluctant behavior to ICRF? To study this issue, we conduct experiments for SR (selective acquisition + reluctant answering) and AR (aggressive acquisition + reluctant answering) on the I and V datasets, where we find the smallest and the biggest PCS improvement of SC over AC (neither of these two strategies considers reluctant behavior). The reluctant thresholds are set from 0.3 to 0.7.

Table 7 tabulates the PCS scores at different reluctant thresholds. We can see that as the reluctant threshold increases, the PCS scores of both AR and SR continue to increase. This means that reluctant answering behavior does have a negative impact on the performance-cost score. However, the PCS scores of SR are lower than those of AR in all cases. This means that the selective acquisition strategy is more robust than the aggressive acquisition strategy when reluctant users exist.

5 Conclusion

In this paper, we study the problem of user interest acquisition in interactive course recommendation. Different from traditional course recommendation methods, where user modeling is conducted by mining historical user behavior data, we study a new paradigm where recommender systems can directly query user interests through survey tables or questionnaires. We describe the formal model of ICRF (Interactive Course Recommendation Framework) and propose the ICRF user interest acquisition algorithm to reduce the performance-cost score. Specifically, we use k-means clustering to choose representative users for querying oracles and propose an interest propagation algorithm to deduce the interests of users who are not selected. With extensive experiments on real-world MOOC enrollment datasets, we empirically demonstrate that our selective acquisition strategy is very effective: it outperforms the traditional aggressive acquisition strategy by 30.25% in terms of the performance-cost score.

In future work, we plan to study ICRF with reinforcement learning, where interest acquisition actions are decided by a policy agent that learns the optimal policy from the environment to maximize the expected recommendation reward.

Notes

- 1.

- 2.

- 3. The ratings in real-world MOOC platforms can have different numerical intervals. To simplify our work, we assume those ratings can be normalized to the interval between zero and one.

- 4. In the rest of this paper, the ICRF user interest acquisition algorithm and the ICRF algorithm will be used interchangeably.

- 5. The oracle refers to a virtual agent that is assumed to be omniscient, i.e., to know the true user interests.

- 6. The MOOC platform provides free online courses for both on-campus students and off-campus professional employees in China.

References

1. Koller, D.: MOOCs on the move: how Coursera is disrupting the traditional classroom. Knowledge@Wharton Podcast (2012)

2. Parameswaran, A., Venetis, P., Garcia-Molina, H.: Recommendation systems with complex constraints: a course recommendation perspective. ACM Trans. Inf. Syst. 29(4), 20:1–20:33 (2011)

3. Schein, A.I., Popescul, A., Ungar, L.H., Pennock, D.M.: Methods and metrics for cold-start recommendations. In: Proceedings of the 25th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 253–260. ACM, Tampere (2002)

4. Aher, S.B., Lobo, L.M.R.J.: Combination of machine learning algorithms for recommendation of courses in E-Learning System based on historical data. Knowledge-Based Syst. 51, 1–14 (2013)

5. Piao, G., Breslin, J.G.: Analyzing MOOC entries of professionals on LinkedIn for user modeling and personalized MOOC recommendations. In: Proceedings of the 2016 Conference on User Modeling Adaptation and Personalization, pp. 291–292. ACM, Halifax (2016)

6. Jing, X., Tang, J.: Guess you like: course recommendation in MOOCs. In: 2017 Proceedings of the International Conference on Web Intelligence, pp. 783–789. ACM, Leipzig (2017)

7. Hou, Y., Zhou, P., Wang, T., Yu, L., Hu, Y., Wu, D.: Context-aware online learning for course recommendation of MOOC big data. arXiv preprint (2016)

8. Yu, Y., Wang, H., Yin, G., Wang, T.: Reviewer recommendation for pull-requests in GitHub. Inf. Softw. Technol. 74(C), 204–218 (2016)

9. Elbadrawy, A., Karypis, G.: Domain-aware grade prediction and top-n course recommendation. In: Proceedings of the 10th ACM Conference on Recommender Systems, pp. 183–190. ACM, Boston (2016)

10. Klašnja-Milićević, A., Ivanović, M., Nanopoulos, A.: Recommender systems in e-learning environments: a survey of the state-of-the-art and possible extensions. Artif. Intell. Rev. 44(4), 571–604 (2015)

11. Salehi, M., Nakhai Kamalabadi, I., Ghaznavi Ghoushchi, M.B.: Personalized recommendation of learning material using sequential pattern mining and attribute based collaborative filtering. Educ. Inf. Technol. 19(4), 713–735 (2014)

12. Zhang, Y., Wang, H., Yin, G., Wang, T., Yu, Y.: Social media in GitHub: the role of @-mention in assisting software development. Sci. China Inf. Sci. 60(3), 032102 (2017)

13. Zhang, H., Huang, T., Lv, Z., et al.: MCRS: a course recommendation system for MOOCs. Multimed. Tools Appl. 77(6), 7051–7069 (2018)

14. Sahebi, S., Lin, Y., Brusilovsky, P.: Tensor factorization for student modeling and performance prediction in unstructured domain, pp. 502–506. Raleigh, NC, USA (2016)

15. Rendle, S.: Factorization machines, pp. 995–1000. Sydney, Australia (2010)

16. Mihalcea, R., Tarau, P.: TextRank: bringing order into text. In: Proceedings of the 2004 Conference on Empirical Methods in Natural Language Processing, pp. 404–411 (2004)

17. Rendle, S.: Factorization machines with libFM. ACM Trans. Intell. Syst. Technol. 3(3), 1–22 (2012)

18. Settles, B.: Active learning. Synth. Lect. Artif. Intell. Mach. Learn. 6(1), 1–114 (2012)

19. Page, L.: PageRank: bringing order to the web. Stanford Digital Library Project (2002)

20. Blei, D., Ng, A., Jordan, M.: Latent Dirichlet allocation. J. Mach. Learn. Res. 3, 993–1022 (2003)

Acknowledgments

We thank the reviewers for their helpful comments. This work is supported by the National Key Research and Development Program of China (2018YFB1004502), the National Natural Science Foundation of China (61702532) and the Key Program of National Natural Science Foundation of China (61532001, 61432020).

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Li, X., Wang, T., Wang, H., Tang, J. (2018). Understanding User Interests Acquisition in Personalized Online Course Recommendation. In: U, L., Xie, H. (eds) Web and Big Data. APWeb-WAIM 2018. Lecture Notes in Computer Science, vol 11268. Springer, Cham. https://doi.org/10.1007/978-3-030-01298-4_20

DOI: https://doi.org/10.1007/978-3-030-01298-4_20

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01297-7

Online ISBN: 978-3-030-01298-4

eBook Packages: Computer Science, Computer Science (R0)