Abstract

The human visual system consists of a large, yet unknown number of cortical areas. We summarize the efforts which have led to the identification of 19 retinotopic areas in human occipital cortex, using the macaque visual cortex as a guide. In this process retinotopic mapping has proven far superior to the study of functional properties. Macaques and humans share early areas (V1, V2, and V3), a motion-sensitive middle temporal (MT/V5) cluster as well as six other areas. The remaining human occipital areas either result from reorganization of a group of monkey areas or seem to be specifically human. Several regions sensitive to motion and even higher-order motion have been described in parietal cortex, the retinotopic organization of which is still under debate. On the other hand, both dorsal and ventral regions are sensitive to shape, which is most pronounced in the lateral occipital complex (LOC) extending into the fusiform gyrus. The anterior part of this complex is flanked by specialized regions devoted to processing faces and bodies and represents “visual objects” rather than image properties. Its exact organization requires further investigation.



1 Introduction

The human visual system is located in the occipital lobe and extends rostrally into the parietal and temporal lobes. It is estimated to encompass 30 % of human cortex [1]. Functional imaging gives us direct access to the function of this important part of human cortex. One way to study this system is to consider a number of perceptual or visual cognitive functions and to localize their neural correlates. An alternative is to consider the visual system as an anatomically organized collection of cortical areas and subcortical centers that process retinal information and transform it into messages appropriate for processing in the nonvisual cerebral regions to which the visual system projects. A critical aim in visual neuroscience is to define the different cortical areas that make up the human visual system. In other species, such as the nonhuman primates, cortical areas are defined by the combination of four criteria: (1) cyto- and myelo-architectonics, (2) anatomical connections with other (known) areas, (3) topographic organization, i.e., retinotopic organization, and (4) functional properties. It is important to note that while not all criteria may apply to each area, it is essential to obtain as much converging information as possible. In the nonhuman primate, 30 or more visual cortical areas have been identified using these criteria, although it is fair to state that even in these species there is discussion about the exact definition of areas, especially those at the higher levels in the system [1]. The definition of the visual cortical areas is only a first step in understanding the visual system; next is the investigation of the nature of the processing performed by these areas and the flow of information through the areas as a function of the behavioral context and task demands.

Recent advances in brain imaging have provided powerful tools for the definition and mapping of cortical areas. Functional magnetic resonance imaging (fMRI) provides insights into the functional characteristics of cortical areas by means of specific contrasts of brain activity that isolate a functional property. For example, in the monkey in which a number of visual areas have been identified using anatomical and neurophysiological measurements, fMRI has shown that a small number of functional characteristics, defined by a few subtractions, allow the definition of six motion-sensitive regions in the monkey superior temporal sulcus (STS) [2]. Functional MRI can also provide evidence for retinotopic organization. It actually is more powerful than single cell studies in this respect, as it is less biased in its sampling and the measure required is simply responsiveness. It has been suggested that the topology of an area, that is, its localization with respect to neighboring areas, might be a valuable addition for the identification of areas [3]. Imaging has not yet provided clear means to obtain histological structure, although at high field (7.0 T) the stria of Gennari becomes visible, and myelin density can be measured indirectly at 3 T [4]. The situation is slightly better for anatomical connections, as diffusion tensor imaging (DTI) [5, 6] is increasingly seen as a potential measure of connectivity between areas, although the methodological issues remain formidable [7]. In the present chapter we will provide an overview of how these two fMRI strategies, functional specialization and retinotopic organization, have been used for defining cortical areas.

Despite all its strengths functional imaging has severe limitations due to its limited temporal and spatial resolution. With the present 3 T systems a few millimeters can be resolved. While this is ample to define cortical regions it is a long way from the resolution of the single neuron. In fact, fMRI signals are only indirect, hemodynamic reflections of average activity of thousands of neurons. Hence, fMRI is very sensitive at detecting average activity levels, but it has great difficulty in measuring neuronal selectivity. It has been proposed that repetition suppression can be used to measure neuronal tuning, but the case for it might be overstated as in single neurons the tuning of the adaptation is narrower than the response tuning [8–10]. Recent developments using multivoxel pattern analysis (MVPA) [11] provide sensitive tools for studying neural representations beyond the resolution of conventional fMRI approaches. Yet the estimation provided by this analysis depends heavily on the clustering of neurons with similar properties, like those in cortical columns, and the discrimination provided falls quite short of what single neurons can achieve. For example, single V1 neurons can signal orientation differences of 5°–10° with an 84 % chance of success [12]. MVPA of human V1 has so far yielded values of 35° [13]. Therefore, much can be gained by combining functional imaging in humans with knowledge derived from invasive studies, such as single cell recordings in nonhuman primates. The combination has become possible with the advent of fMRI in the awake monkey [14]. Indeed this allows parallel imaging experiments leading to the definition of cortical regions and their characteristics in the two species, paving the way for establishing homologies. Once a homology is established, one can test whether the neuronal properties in that area apply to the human homolog. 
Indeed, comparison of the single cell recordings and fMRI in the monkey using similar stimuli allows one to derive an fMRI signature of a neuronal property. One can then verify that the human homolog also exhibits this fMRI signature [15, 16]. Hence, the definition of cortical areas in both species is a critical step for knowledge transfer from animal models to the human visual system.

2 Methodological Issues

2.1 Stimulus Definition

Definition of the visual stimulus is important as it determines to a large degree the brain activation pattern and thus the experimental findings reported. It is important to note that a precise stimulus description is crucial for repeating an experiment and replicating the results. For example, very different stimuli are used for defining motion-responsive areas. A motion localizer used to localize human middle temporal (hMT/V5) region often consists of random dots, but may also consist of gratings, either rectangular or circular. Random dots may have different densities, sizes, luminance, etc., or the whole pattern may be of a different size. Random dots may translate in one or several directions, but may also rotate or move radially. All these paradigms, using very different stimuli, are referred to as motion localizers, but because of their differences they may result in activation of different cortical regions, reducing the value of the localization.

2.2 Tasks

One of the main challenges in brain imaging is investigating the link between neural activity and human behavior. Recent studies using parametric stimulus manipulation employ detection or discrimination tasks [17–19] rather than passive viewing of the stimuli. These paradigms allow correlation between behavioral data (psychometric functions) and fMRI activations. This approach is important for discerning the functional role of different cortical areas and evaluating their contribution to behavior. Further, attentionally demanding tasks (e.g., detection of changes in the fixation target, 1-back matching task) are used during scanning to ensure that observers pay attention across all stimulus conditions and that activation differences across conditions are not simply due to differences in the general arousal of the participants or the task difficulty across conditions. For example, when mapping the lateral occipital complex (LOC), participants view intact and scrambled images of objects. It is possible that higher activations for intact images of objects are due to the fact that these images attract the participants’ attention more than scrambled images. To control for this potential confound, observers are instructed to perform a task on different properties of the fixation target or the stimulus (e.g., dimming of the fixation point or part of the shape) [20] that entails similar attention across all stimulus conditions. Another task that has been adopted for controlling attentional confounds is the 1-back matching task (detect a repeat of an intact or scrambled image) [21]. This task is more demanding for scrambled than intact images, thus excluding the possibility that higher activations for intact images of objects are due to attentional differences.

2.3 Control of Eye Movements

Control of fixation is mandatory in motion response studies, retinotopic mapping experiments, and in spatial attention studies. Although in the past it was acceptable to show that the subjects fixated well based on off-line measurements, standards have evolved. In addition, precise eye movement records, provided by infrared corneal reflection methods, allow one to remove the effect of residual eye movements that occur despite fixation. In general, in all visual experiments, control of fixation will ensure that the part of visual field stimulated is known and will remove eye movements as a source of unwanted and uncontrolled activations.

2.4 fMRI Designs and Paradigms

The conventional fMRI approach for identifying cortical areas involved in different processes and cognitive tasks entails a subtraction of activations between different stimulus types that are presented in blocked or event-related designs.

One of the limitations of these fMRI paradigms is that they average across neural populations that may respond homogeneously across stimulus properties or may be differentially tuned to different stimulus attributes. Thus, in most cases, it is impossible to infer the properties of the underlying imaged neural populations. fMRI adaptation (or repetition suppression) paradigms [22–27] have recently been employed to study the properties of neuronal populations beyond the limited spatial resolution of fMRI. These paradigms capitalize on the reduction of neural responses for stimuli that have been presented for prolonged time or repeatedly [28, 29]. A change in a specific stimulus dimension that elicits increased responses (i.e., rebound of activity) identifies neural populations that are tuned to the modified stimulus attributes. fMRI adaptation paradigms have been used in both monkey and human fMRI studies as a sensitive tool that allows us to investigate: (a) the sensitivity of the neural populations to stimulus properties, and (b) the invariance of their responses within the imaged voxels. Adaptation across a change between two stimuli suggests a common neural representation invariant to that change, while recovery from adaptation suggests neural representations sensitive to specific stimulus properties. For example, recent imaging studies tested whether fMRI measurements can reveal neural populations in early visual areas sensitive to elementary visual features, e.g., orientation, color, and direction of motion [30–34]. Consider the case of motion direction: after prolonged exposure to the adapting motion direction, observers were tested with the same stimulus in the same or in an orthogonal motion direction. Decreased fMRI responses were observed in MT when the test stimuli were at the same motion direction as the adapting stimulus. However, recovery from this adaptation effect was observed for stimuli presented at an orthogonal direction. 
These studies suggest that the neural populations in human MT are sensitive to direction of motion [31, 34]. Using the same procedure in the monkey, Nelissen et al. [2] indeed observed adaptation in MT/V5 but also in other motion-sensitive regions, such as the medial superior temporal (MST) region. Similarly, recent studies have shown stronger adaptation in hMT/V5+ for coherently than transparently moving plaid stimuli. These findings provide evidence that fMRI adaptation responses are linked to the activity of pattern-motion rather than component-motion cells in MT/MST [32]. Thus, these studies suggest that the fMRI signal can reveal neural selectivity consistent with the selectivity established by neurophysiological methods. However, recent studies comparing fMRI adaptation and neurophysiology in monkeys call for cautious interpretation of the relationship between fMRI adaptation effects and neural selectivity or invariance at higher levels in the system [10]. In particular, fMRI adaptation in a given cortical area may be the result of adaptation at earlier or later stages of processing that is propagated along the visual areas. Hence, in higher-order areas receiving multiple inputs, fMRI adaptation might reflect adaptation of one of the inputs, while recordings show that local neuronal responses driven by the other inputs are not adapted.
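The logic of an adaptation experiment (reduced response to a repeated stimulus attribute, rebound when the attribute changes) can be summarized in a single number per region. The sketch below is only an illustration: the function name `adaptation_index` and the normalized-difference formula are assumptions made for this example, not the measure used in the cited studies, and responses are assumed to be positive percent signal changes.

```python
def adaptation_index(resp_same, resp_different):
    """Normalized rebound from adaptation (hypothetical index for illustration).

    resp_same: responses to test stimuli identical to the adapter
               (e.g., same motion direction).
    resp_different: responses to test stimuli that differ from the adapter
                    (e.g., orthogonal motion direction).
    Values near 0 suggest no recovery (no measurable selectivity for the
    changed attribute); values approaching 1 suggest strong recovery.
    """
    mean_same = sum(resp_same) / len(resp_same)
    mean_diff = sum(resp_different) / len(resp_different)
    denominator = mean_diff + mean_same
    if denominator == 0:
        raise ValueError("responses sum to zero; index is undefined")
    return (mean_diff - mean_same) / denominator


# Made-up percent signal changes for one region of interest.
same_direction = [0.4, 0.6]
orthogonal_direction = [0.9, 1.1]
index = adaptation_index(same_direction, orthogonal_direction)
```

As the paragraph above cautions, a positive index in a higher-order area does not by itself show that the local neurons are selective, since the rebound may be inherited from an adapted input.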

Interestingly, novel MVPA methods [11, 35, 36] provide an alternative approach for investigating neural selectivity based on fMRI signals. Unlike conventional univariate analysis, MVPA takes advantage of the information across multiple voxels in a cortical area and allows us to characterize neural representations of features that are encoded at a higher spatial resolution in the brain than the typical resolution of fMRI. These classification analyses have been used successfully for the decoding of elementary visual features (e.g., orientation [13, 37] and motion direction [38]) and of object categories [39–42]. The weakness of the MVPA approach is its dependence on the clustering of neurons with similar properties. This is also the case for a third technique which has been proposed to infer neuronal selectivity from fMRI measurements: measuring the tuning of individual voxels [43]. Just as with MVPA, tuning of voxels is prone to false negative results, as the grouping of neurons for higher-order selectivity is frequently unknown. In contrast, fMRI adaptation is prone to false positives, as the inputs may adapt rather than the local neuronal activity. For all these methods greater caution is required at higher levels in the cortex.
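To make the idea of decoding from multivoxel patterns concrete, the following is a minimal sketch of one simple MVPA variant: a correlation-based nearest-centroid classifier with leave-one-run-out cross-validation. It is not the specific classifier of any cited study; the data layout (one pattern per condition per run), the function names, and the toy values are all assumptions for illustration.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length voxel patterns."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # flat pattern carries no information
    return cov / math.sqrt(var_a * var_b)


def leave_one_run_out_accuracy(runs):
    """runs: list of dicts mapping condition label -> voxel pattern (list)."""
    correct = total = 0
    for i, test_run in enumerate(runs):
        train = [run for j, run in enumerate(runs) if j != i]
        # Average training pattern (centroid) for each condition.
        centroids = {
            label: [sum(v) / len(v) for v in zip(*(run[label] for run in train))]
            for label in test_run
        }
        for label, pattern in test_run.items():
            # Predict the condition whose centroid correlates best.
            predicted = max(centroids, key=lambda c: pearson(pattern, centroids[c]))
            correct += predicted == label
            total += 1
    return correct / total


# Toy data: 3 runs, 2 orientations, 4 voxels with weak opposite biases.
toy_runs = [
    {"45deg": [1.0, 0.2, 0.9, 0.1], "135deg": [0.1, 1.0, 0.2, 0.8]},
    {"45deg": [0.9, 0.3, 1.0, 0.2], "135deg": [0.2, 0.9, 0.1, 1.0]},
    {"45deg": [1.1, 0.1, 0.8, 0.3], "135deg": [0.3, 0.8, 0.3, 0.9]},
]
accuracy = leave_one_run_out_accuracy(toy_runs)
```

The classifier succeeds here only because each toy voxel carries a consistent bias for one orientation. If neurons with similar preferences were not clustered, the voxel biases would vanish and accuracy would fall to chance, which is the false-negative risk noted above.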

2.5 Whole Brain Versus Region of Interest Analyses

The statistical evaluation of activation differences between stimuli and tasks is typically conducted by comparing responses for each voxel using the general linear model. Analysis of activation patterns across the whole brain (whole brain analysis) reveals clusters of activations in different anatomical regions that show significant differences in their functional processing. This approach has allowed researchers to identify and localize cortical regions with different functions and evaluate their involvement in various cognitive tasks. In contrast, region of interest (ROI) analysis focuses on specific cortical areas identified anatomically or functionally following standard mapping procedures (e.g., retinotopic mapping). The advantage of this approach is that it allows us to zoom in on specific cortical regions and investigate their neural computations using parametric stimulus manipulations. Such manipulations result in fine stimulus variations and differences in behavioral performance. Identifying fMRI activations that reflect these fine differences in neural processing may require the high signal-to-noise ratio that is possible when scanning and analyzing smaller regions of cortex. However, ROI analyses are limited in two respects: (a) the ROI may be outside the volume scanned or analyzed, (b) the voxels of interest (i.e., voxels that show differential activations across conditions) may cover a smaller cortical volume than the ROI; as a result, the differential activations may be averaged out within the ROI. Taken together, whole brain and ROI analyses can be used as complementary tools for studying the functional roles of cortical regions. Whole brain analyses search the entire brain for regions involved in the analysis of a given stimulus or a cognitive task, while ROI methods are more appropriate for finer investigation of the neural processing in these cortical regions [44, 45].
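Limitation (b) can be illustrated with a deliberately simplified sketch. It fits a one-regressor linear model (task boxcar plus intercept) to a single voxel and to the ROI-averaged time series. The function names, the noise-free toy data, and the omission of hemodynamic convolution and of any statistical test are assumptions made to keep the example short; a real general linear model analysis would include them.

```python
def fit_task_beta(timeseries, regressor):
    """Least-squares slope for: timeseries = beta * regressor + intercept."""
    n = len(timeseries)
    mean_x = sum(regressor) / n
    mean_y = sum(timeseries) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(regressor, timeseries))
    sxx = sum((x - mean_x) ** 2 for x in regressor)
    return sxy / sxx


def roi_mean_timeseries(voxel_timeseries):
    """Average the time series of all voxels in an ROI, time point by time point."""
    return [sum(values) / len(values) for values in zip(*voxel_timeseries)]


# Block design: 5 volumes rest, 5 volumes stimulation, repeated 4 times.
boxcar = ([0] * 5 + [1] * 5) * 4

# Hypothetical ROI of 4 voxels in which only one voxel responds to the task.
responsive = [100.0 + 2.0 * b for b in boxcar]
silent = [100.0 for _ in boxcar]
roi = [responsive, silent, silent, silent]

beta_voxel = fit_task_beta(responsive, boxcar)
beta_roi = fit_task_beta(roi_mean_timeseries(roi), boxcar)
```

In this toy case the effect estimated from the ROI average is a quarter of the effect in the responsive voxel, which shows how differential activations confined to a small part of an ROI are diluted by averaging.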

3 Retinotopic Organization

3.1 Early Visual Areas (V1, V2, V3)

Initially, positron emission tomography (PET) studies concentrated on the retinotopy of V1 [46], which is a large area of known localization in the calcarine sulcus. With the advent of fMRI, mapping was extended to areas neighboring V1 [47] (but see also [48]). An additional step was the introduction of angular and eccentricity periodic sweeping stimuli that generate eccentricity and polar angle maps based on phase encoding of stimulus position [49]. This allowed the mapping of all three early areas (V1, V2, V3, Fig. 1) [51–53], in which polar angle and eccentricity vary along orthogonal directions on the cortical surface. The eccentricity varies from the central representation at the posterior tip of the calcarine fissure to that of large eccentricities rostrally along the calcarine. Polar angle varies in dorsoventral direction with the lower field being represented above the calcarine and the upper field below (Fig. 1). The three early visual areas are also shown on the flatmaps of Fig. 2 which cover a smaller eccentricity range (0.25°–7.75°) compared to that in Fig. 1 (0°–12°). Figures 1 and 2 show retinotopic maps of individual subjects and these maps exhibit considerable variability. To derive a more general representation one generates maximum probability maps (MPM), which assign to each voxel the area with the highest probability across a given set of subjects. These maps depend heavily on the quality of the inter-subject alignment [20, 56, 57] and this is dramatically improved by using the novel multimodal surface matching technique [57]. The resulting MPM of the left hemisphere is shown on the inflated brain in Fig. 3a, b, and on the flatmap in Fig. 3c. These maps are freely available in Caret [56], and can be used to identify activations without the need to spend valuable time mapping the retinotopic areas in the subjects under study. Of course, when investigating the actual properties of retinotopic areas, the direct mapping remains a superior strategy.
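The maximum probability map described above can be sketched in a few lines. This is an illustration under stated assumptions, not the Caret implementation: it assumes the subjects' area labels have already been brought into a common space (one label per vertex or voxel, `None` where no area was assigned), and the function name and the optional `min_probability` threshold are hypothetical.

```python
from collections import Counter


def maximum_probability_map(subject_labels, min_probability=0.0):
    """Assign to each vertex the area label most frequent across subjects.

    subject_labels: list (one entry per subject) of label lists, all of the
                    same length, aligned to a common surface or volume.
    min_probability: the winning label must be present in more than this
                     fraction of subjects, otherwise the vertex stays None.
    Ties are broken in favor of the label encountered first.
    """
    n_subjects = len(subject_labels)
    n_vertices = len(subject_labels[0])
    mpm = []
    for v in range(n_vertices):
        counts = Counter(
            labels[v] for labels in subject_labels if labels[v] is not None
        )
        if not counts:
            mpm.append(None)
            continue
        area, count = counts.most_common(1)[0]
        mpm.append(area if count / n_subjects > min_probability else None)
    return mpm


# Toy example: 3 subjects, 4 vertices.
labels = [
    ["V1", "V1", "V2", None],
    ["V1", "V2", "V2", None],
    ["V1", "V2", "V3", "V3"],
]
mpm_all = maximum_probability_map(labels)
mpm_majority = maximum_probability_map(labels, min_probability=0.5)
```

The fourth vertex is labeled in only one of three subjects, so it is kept in the unthresholded map and dropped once a majority is required. Poor inter-subject alignment spreads labels across vertices in the same way, which is why the quality of the registration matters so much for these maps.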

Fig. 1 The sweeping stimulus retinotopy paradigm. Two stimuli are used to measure the retinotopic maps in the cortex. Expanding ring stimuli map eccentricity, and rotating wedge stimuli map polar angle. The phase of the best-fitting sinusoid for each voxel indicates the position in the visual field that produces maximal activation for that voxel. Thus, these pseudocolor phase maps are used to visualize the retinotopic maps. Data are shown for the left hemisphere (medial view) of one subject. Because of the heavy folding of human cortex, these retinotopic maps are best seen on flattened hemispheres (from Dougherty et al. [50])
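The phase estimate mentioned in this caption can be sketched as follows. The example computes, for one voxel, the amplitude and phase of the Fourier component at the stimulus frequency, which is equivalent to fitting a sinusoid at that frequency. The function name, the toy time series, and the omission of any correction for hemodynamic delay are assumptions made for illustration.

```python
import cmath
import math


def phase_at_stimulus_frequency(timeseries, n_cycles):
    """Amplitude and phase of the response at n_cycles per scan.

    The voxel is modeled as A * cos(2*pi*n_cycles*t/N - phase) + baseline,
    so the returned phase (0 to 2*pi) is the response delay within a
    stimulus cycle and maps onto polar angle (rotating wedge) or
    eccentricity (expanding ring).
    """
    n = len(timeseries)
    mean = sum(timeseries) / n
    coeff = sum(
        (x - mean) * cmath.exp(-2j * math.pi * n_cycles * t / n)
        for t, x in enumerate(timeseries)
    )
    amplitude = 2 * abs(coeff) / n
    phase = (-cmath.phase(coeff)) % (2 * math.pi)
    return amplitude, phase


# Simulated voxel: 120 volumes, 6 stimulus cycles, response phase of 1 rad.
simulated = [
    100 + 2 * math.cos(2 * math.pi * 6 * t / 120 - 1.0) for t in range(120)
]
amplitude, phase = phase_at_stimulus_frequency(simulated, n_cycles=6)
```

Repeating this for every voxel and color-coding the phase yields the pseudocolor maps. In practice the hemodynamic delay adds a constant offset to the phase, which is commonly handled by combining sweeps in opposite directions.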

Fig. 2 The 18 retinotopic areas defined in the polar angle (a) and eccentricity (b) maps by Georgieva et al. [54], and Kolster et al. [55]; right hemisphere of subject 1. Stars: central visual field, purple: eccentricity ridge, white dotted lines: horizontal meridian, black full and dashed lines: lower and upper vertical meridian (from Abdollahi et al. [56])

Fig. 3 (a and b) MPMs of the retinotopic areas (MSM-retino registration) in the left hemisphere on the inflated cortical surface, lateral (a) and medial (b) views; (c) schematic representation of retinotopic organization of the 18 areas shown on the retinotopic MPM: upper (+) and lower (−) fields and central vision (stars); same color code as in (a). The location of V6 and V6A in the parieto-occipital sulcus is indicated in (b). Note that the MPM of areas V2 and V3 does not include the large eccentricities, otherwise V3 would abut V6

The three early visual cortical areas all have a large, complete representation of the contralateral hemifield, with the upper quadrant projecting ventrally and lower quadrant dorsally. The representation of the vertical meridian (VM) constitutes the boundary between V1 and V2 as well as the anterior boundary of V3. The representations of the horizontal meridian (HM) split the V1 representation and constitute the boundary between V2 and V3. The central representations of the three areas are fused in the central confluence (Figs. 1, 2, and 3). This retinotopic organization is very similar in humans and macaques (Fig. 4). This is not surprising as the presence of three early visual areas is a feature of primates [59, 60]. In all three areas the central representation is magnified compared to that of the periphery [51]. Duncan and Boynton [61] observed a correlation between magnification factor in V1 of human subjects and Vernier acuity but not grating acuity. The surface of V1 has been estimated from histological specimens to range between 2000 and 4500 mm², while the central 12° occupy 2200 mm² according to one imaging study [50]. Comparison between histological and fMRI estimates is difficult because of the difficulty of estimating the shrinkage in the histological specimens and the portion of V1 occupied by the central representation [62]. In both types of studies large variations between individuals (a factor of 2) were observed. A similar range of variation has been observed in the macaque, in which the average surface of V1 is roughly half the size of its human counterpart [63]. The surface of human V2 is estimated to be 80 % of that of V1, and that of V3 60 %. Hence, cortical magnification is somewhat lower in V2 and V3 than in V1 [50, 51], but magnification factors decrease with eccentricity at similar rates in V1, V2, and V3 [50]. In fact the relative sizes of V1, V2, and V3 depend on the eccentricity range explored, e.g., in the Abdollahi et al. study [56] V2 is actually slightly larger than V1.

Fig. 4 Comparison of retinotopic layout of monkey (a) and human (b) visual cortex. Adapted with permission from Vanduffel et al. [58]. Stars indicate central representations

The retinotopic maximum probability maps also allow comparison with other parcellations of the same region, in particular those based on morphological features. Figure 5a compares the retinotopic regions with the average myelin density maps based on the T1/T2 ratio [4]. It shows that the three early visual cortical areas are heavily myelinated. The three early areas correspond relatively closely to the cytoarchitectonic areas hOc1, hOc2, and the combination of hOc3d and hOc3v, respectively (Fig. 5b). On the other hand, the retinotopic parcellation has little in common with earlier attempts to parcel occipital cortex using DTI [65]. The comparisons in Fig. 5 also indicate that the central 7.75° of the visual field are represented in roughly half of the V1–V3 surface.

Fig. 5 (a) Outlines (black lines) of retinotopic MPMs superimposed on myelin density (blue to red color) maps of left hemisphere (L) of 196 subjects. Color code: myelin content in percentiles of the normalized T1w/T2w distribution. (b) Outlines (white) of retinotopic MPM superimposed on the cytoarchitectonic MPM of left (L) hemisphere. Black lines: 50 % contours of PAs of hOc1, hOc2, hOc5 from Fischl et al. [64]. Inset color code of cytoarchitectonic areas (from Abdollahi et al. [56])

3.2 Two Middle-Level Areas: Human MT/V5 and V3A

V5 or the Middle Temporal (MT) area in humans was initially localized in the ascending branch of the inferior temporal sulcus (ITS) [66, 67]. This identification was supported by the fMRI study of Tootell et al. [68], showing that this region of human cortex has properties, such as luminance and color contrast sensitivity, similar to those of macaque MT/V5. Subsequently this region has been referred to as human MT/V5+ [52] to indicate that probably it corresponds not just to MT/V5 of the macaque but also to several of its satellites. It has proven difficult to demonstrate a retinotopic organization in this region. Huk et al. [69] have suggested that the MT/V5 complex in humans contains a posterior retinotopic part, considered the homolog of MT/V5, and an anterior part driven by ipsilateral stimuli [70], considered the homolog of MST. One of the drawbacks of this parcellation was the absence of an homolog of the fundus of the superior temporal (FST) area. Also the retinotopic organization of what was believed to be MT in humans [69, 71] seems opposite to that of macaque MT in which the lower visual field projects in the dorsal part of MT [72, 73]. The breakthrough occurred when refining the sweeping technique proved that MT and its satellites could be mapped in the macaque (Fig. 4) [74]. Applying the same strategy to humans yielded a MT cluster organized exactly as in the monkey and including four retinotopic areas, considered homologues of MT, MSTv, FST, and V4t (Figs. 2 and 3). The critical point was to identify the central visual field representation in the eccentricity maps, as it corresponds to the center of the cluster from which the four areas radiate. This center is distinct from the central confluence (Fig. 2) and separated from it by a representation of the periphery, the so-called peripheral edge (purple in Fig. 2), which was initially noted by Tootell and coworkers [75]. 
It is noteworthy that in both species the cluster does not include MSTd, which is involved in optic flow processing [76]. There is at present little consensus on the criteria to define the human counterpart of this MST component [69, 77].

In humans, V3A has a retinotopic organization similar to that in macaque: it is defined by a hemifield representation in which the representations of the two quadrants, separated by the HM, are neighbors, and it occupies the banks of the transverse sulcus [78]. The posterior quadrant is the lower quadrant, separated from that of V3d by a lower VM. In contrast to macaque V3A, hV3A is motion sensitive [14, 78, 79]. In the initial mapping study [68] the central representation of V3A was considered to be fused with that of V1–V2–V3. Subsequent studies [80–82] have shown that the central representation is separated from and located more dorsal than that of the V1–3 confluence, as it generally is in monkeys (5/8 hemispheres in [73]). It has also been noted in humans that this foveal projection, which V3A shares with V3B (see below), can vary considerably in clarity, being well defined in about half (13/30) of hemispheres [83]. In fact the retinotopic organization of dorsal occipital cortex is more complex than initially assumed based on the monkey model, and this part of cortex includes one or more retinotopic areas in addition to V3A (see below). It is noteworthy that in all primates the visual cortex includes an MT area, but that the presence of an area V3A in new world monkeys is unclear [60, 84].

3.3 The Fate of V4 in Human Visual Cortex

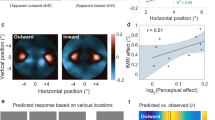

In their 1995 study, Sereno et al. [51] reported an upper quadrant representation anterior to V3v, which they labeled V4v as it occupied the same position as ventral V4 in macaque. Many studies have replicated that finding of an upper quadrant in front of V3v, but it has proven difficult to identify a corresponding dorsal V4 quadrant in front of dorsal V3 [75]. One possible explanation was that the standard mapping technique of locating meridians did not apply. Indeed, in the macaque the horizontal meridian, which represents the anterior border of ventral V4, forms the boundary of dorsal V4 only over a short distance, as it curves to join the HM splitting MT/V5 into two halves [73, 85]. Hence, we [3] and others [75] have suggested that the region between V3/V3A and hMT/V5+, which we refer to as LOS [20], is the homolog of macaque dorsal V4. Indeed it is located in a position similar to that of dorsal V4 and has functional properties relatively similar to those of macaque dorsal V4; for example, it is sensitive to 3D shape from motion (Fig. 7), to 2D shape [20], and to kinetic boundaries [87, 88].

Yet, subsequent mapping studies concentrating on the central 6° of the visual field have suggested that the two halves of macaque V4 have become separated in humans and are each integrated into a separate representation of the contralateral hemifield. Brewer et al. [89] have shown that a lower quadrant was located in front of the upper quadrant initially labeled V4v, with the eccentricity running at right angles to the polar variations. They proposed that this hemifield, located in front of V3v (Figs. 2 and 3), should be considered human V4. They went on to describe two additional maps located in front of hV4: ventral occipital (VO)1 and VO2, each supposedly containing a hemifield representation. Interestingly, the two face areas, the fusiform and occipital face areas, are located just lateral to hV4 and VO2, respectively. In the same vein, Larsson and Heeger [83] have described a complete hemifield representation in front of V3d, which they refer to as lateral occipital (LO)1. The posterior half of this region is a lower quadrant that was initially described by Smith et al. [90] as V3B. Thus, the posterior parts of hV4 and LO1 seem more responsive, explaining why they were discovered first. Just as is the case ventrally, a second hemifield representation has been described in front of LO1: LO2, of which the anterior border is close to hMT/V5+. The LO1–2/hV4 scheme led to the suggestion that beyond V1–3 the monkey occipital cortex was not an adequate model for human cortex [81], prompting some [91] to attempt to rescue the monkey model by suggesting that human V4 was similar to that of the monkey.

Our mapping results also favor the LO1–2/hV4 scheme [54, 92]. The final resolution of this problem came with the recognition that the retinotopic organization of occipito-temporal cortex in the monkey is more complex than initially appreciated. A recent study showed that cytoarchitectonic area TEO, located just in front of V4, and which initially was thought to contain a single retinotopic map [93], in fact corresponds to four retinotopic areas: V4A, OTd, PITv, and PITd (Fig. 4 [94, 95]). This resolved the problem in the sense that human cortex also includes a cluster similar to the PIT cluster [55] and considered homologous to the monkey PITs, and that the region between V3 and the PIT cluster contains six quadrant representations in both species. In the monkey four of these quadrants are part of a split organization and only two combine into a hemifield, while in humans all quadrants form hemifield representations.

3.4 Dorsal Occipital and Intraparietal Areas

Human V3A has been suggested to share its central representation with an area referred to as V3B, located in front of V3A and dorsally from the LO1/LO2 pair [83, 96]. V3B occupied in this scheme a position initially referred to as V7 [97]. V7 is now instead described as an area rostro-dorsal to a complex of dorsal occipital areas, the V3A complex, which includes four hemifields organized pairwise (Figs. 3 and 4). The lower pair, V3A/V3B shares its peripheral representation (P-cluster) like hV4 and VO1, while the upper pair V3C/V3D shares a central representation (C-cluster). Area V7 instead is a parietal area corresponding to IPS0 of Swisher et al. [98] and seems to correspond to the ventral intraparietal sulcus (VIPS) motion-sensitive region [79, 82, 99], located in the most ventral part of the occipital part of human intraparietal sulcus (IPS) [100]. In fact V7 is part of another C-cluster sharing its center with V7A [101], corresponding to IPS1 and likely the homologue of the pair CIP1–2 described in the monkey [102, 103].

In the human parieto-occipital sulcus (POS), Pitzalis et al. [104] have described human V6, which borders the dorsal parts of V2 and V3, representing large eccentricities in the lower visual field (Fig. 3b), and seems to be homologous to monkey V6. It represents the contralateral hemifield, but with an emphasis on the periphery of the visual field rather than the center. Pitzalis et al. [105] described a lower-field-only representation in the opposite bank of the POS (Fig. 3b), which they labeled human V6A. This area shows strong pointing responses, unlike V6, and likely belongs to parietal cortex.

Finally, several attempts have been made to parcel visual regions in human IPS. Using standard retinotopic mapping, Swisher et al. [98] described four retinotopic maps, labeled IPS1–4, separated by VM representations. Konen and Kastner [106] added IPS5 and SPL1, relying again only on polar angle maps. Responses to standard retinotopic stimuli are weak in this region, and within anterior parts of IPS moving stimuli are more appropriate for mapping retinotopic organization than are black and white flickering checkerboards (Fig. 1). Others have used attentional stimuli [107], delayed saccade stimuli [108–110], or stereoscopic stimuli [101] to map retinotopic organization. Progress will come not just from using more appropriate stimuli but also from recognizing that eccentricity has to be mapped in addition to polar angle in order to correctly identify retinotopic clusters, which seem to be the dominant organization. Our preliminary results suggest that human IPS includes two additional C-clusters, together comprising four to eight areas. Further work is needed to understand the retinotopic organization of this part of human parietal cortex and its relationship to the monkey organization in four areas (LIPv, LIPd, VIP, and AIP).
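To make the distinction between polar angle and eccentricity maps concrete, the sketch below illustrates one common analysis, the phase-encoded (traveling-wave) approach. This is an assumption for illustration only, since this section does not specify the analysis used. A periodic stimulus (a rotating wedge for polar angle, an expanding ring for eccentricity) drives each voxel at the stimulus frequency, and the phase of that Fourier component indicates the preferred position. The function name, run length, and cycle count are hypothetical, the voxel time series is simulated, and hemodynamic delay is ignored.

```python
import numpy as np


def phase_at_stimulus_frequency(time_series, n_cycles):
    """Return (amplitude, phase) of the Fourier component at n_cycles per run.

    Hypothetical helper: the phase is in radians and is defined for a signal
    of the form A * cos(2*pi*n_cycles*t/N + phase).
    """
    ts = np.asarray(time_series, dtype=float)
    spectrum = np.fft.rfft(ts - ts.mean())   # remove the mean (DC) first
    component = spectrum[n_cycles]           # bin index = cycles per run
    amplitude = 2.0 * np.abs(component) / ts.size
    phase = np.angle(component)
    return amplitude, phase


# Simulated voxel (assumed values): 120 volumes, 6 stimulus cycles per run.
n_vols, n_cycles = 120, 6
t = np.arange(n_vols)
true_phase = 1.0  # radians; stands in for the voxel's preferred position
voxel = 100.0 + 2.0 * np.cos(2 * np.pi * n_cycles * t / n_vols + true_phase)

amp, phase = phase_at_stimulus_frequency(voxel, n_cycles)

# With a wedge stimulus the phase maps onto polar angle; with a ring stimulus
# the same computation would map onto eccentricity instead.
polar_angle_deg = np.degrees(phase) % 360.0
```

Running the same computation on wedge and ring runs would give two independent coordinates per voxel, which is what allows clusters sharing a central or peripheral representation to be recognized rather than inferred from polar angle reversals alone.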

3.5 Conclusions

Human occipital cortex is now almost completely mapped and includes 19 areas: early areas V1–V3, middle areas LO1–2, hV4, ventral areas VO1–2, dorsal areas V3A–D and hV6, plus the occipito-temporal MT and PIT clusters. The competing scheme using only polar angle maps to define areas [111] only lists 12 occipital retinotopic areas.
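The count of 19 can be checked with a short tally. The grouping of the individually named areas follows the sentence above; the membership of the MT and PIT clusters is not spelled out in this section, so the four-area MT cluster and two-area PIT cluster used below are an assumption based on the nomenclature of Kolster et al. (2010), listed in the references.

```python
# Areas named individually in the Conclusions sentence.
named_areas = {
    "early": ["V1", "V2", "V3"],
    "middle": ["LO1", "LO2", "hV4"],
    "ventral": ["VO1", "VO2"],
    "dorsal": ["V3A", "V3B", "V3C", "V3D", "hV6"],
}

# ASSUMPTION: cluster membership is not given in this section; these names
# follow the Kolster et al. (2010) nomenclature.
clusters = {
    "MT cluster": ["MT/V5", "pMSTv", "pFST", "pV4t"],
    "PIT cluster": ["phPITd", "phPITv"],
}

n_named = sum(len(areas) for areas in named_areas.values())
n_clustered = sum(len(areas) for areas in clusters.values())
total_areas = n_named + n_clustered

print(f"named: {n_named}, in clusters: {n_clustered}, total: {total_areas}")
```

Under these assumptions the individually named areas contribute 13 and the two clusters contribute 6, which matches the stated total.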

Most (13/19) areas are similar to those in the monkey (Fig. 4), if we admit the proposal of Orban et al. [112] that TFO1–2, located ventral to V4/PITv in the monkey, are the homologues of VO1–2. The main inter-species differences are the reorganization of V4/V4A/OTd into LO1–2/hV4, perhaps related to the separation of the PITs from the central confluence [55], and the emergence of areas V3B–D. These latter areas seem to have no counterpart in the monkey, in which V3A neighbors CIP1–2, and may relate to the expansion of IPL in humans giving rise to the occipital part of IPS. It is noteworthy that clear homologies are present at both early and higher-order levels in the occipital cortex, refuting the idea that the human visual system diverges more and more from its monkey counterpart as one ascends the hierarchy. Homologous areas may also differ in functional properties, e.g., V3A is motion sensitive in humans and not in monkeys.

In human occipital cortex all areas beyond V1–3 have a hemifield organization, while in macaque hemifield representations seemed for a long time the exception and split representations, with separate dorsal and ventral quadrants, the rule. Indeed most initially known areas (V1–4) had a split organization, with MT/V5 and V3A being the exceptions. With most areas mapped, only 5/16 areas have a split representation in the monkey (Fig. 4), still a larger proportion than in humans (3/19). What is the benefit of the hemifield arrangement? As noted earlier, the dorsal region between V3/V3A and hMT/V5+, in macaque as well as in human, has some particular functional characteristics, such as sensitivity to 3D shape from motion. The advantage of the human arrangement is that this sensitivity applies to the whole visual field, while in macaque it applies only to the lower field. This might be an evolutionary advantage explaining the changes in this region, which has expanded considerably in humans. More generally, the hemifield organization shortens the distance between neurons with RFs in the upper and lower fields, allowing better integration across the visual field. This apparently outweighs the need for shorter distances across neighboring areas, which favors split representations.
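As a quick arithmetic check on the comparison above, the two proportions of split representations quoted in the text (5/16 in the monkey, 3/19 in humans, the latter corresponding to V1–3) can be put on a common scale. The variable names are illustrative only.

```python
from fractions import Fraction

# Proportions of areas with a split (quadrant) representation, as quoted.
split_fraction = {
    "macaque": Fraction(5, 16),
    "human": Fraction(3, 19),
}

for species, frac in split_fraction.items():
    print(f"{species}: {frac} = {float(frac):.3f}")

# How many times more common split representations are in the macaque.
ratio = split_fraction["macaque"] / split_fraction["human"]
print(f"macaque/human ratio: {ratio} = {float(ratio):.2f}")
```

On these figures, split representations are roughly twice as frequent among the mapped monkey areas as among the human ones.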

Finally it is worth mentioning that most if not all of occipital cortex is retinotopically organized in both species, and that this organization, again in both species, is maintained in the visual parts of parietal cortex but not temporal cortex [103], with the exception of parahippocampal cortex [113].

4 Motion-Sensitive Regions

4.1 Low-Level Motion Regions

The two most prominent motion-sensitive regions in human visual cortex are human MT/V5+ and V3A (see earlier). They display the highest z scores in a contrast between moving and static random dots. Their activation remains significant at low stimulus contrasts typical of the magnocellular stream [78]. In the occipital cortex motion responses have also been noted in lingual gyrus, probably corresponding to ventral V2, V3, and in parts of LOS [20, 79, 114, 115]. This activation pattern depends heavily on the size of the stimuli. With large stimuli, lower-order motion additionally recruits hV6 [116].

In the early studies it was noted that some parietal regions were also responsive to motion in a contrast between moving and static random dots. Sunaert et al. [79] described four motion-sensitive regions in the IPS. The ventral IPS (VIPS) region is located at the bottom of the IPS near hV3A. This region, we believe, corresponds to V7 (see above). The parieto-occipital IPS (POIPS) region is located dorsally with respect to VIPS, at the junction of the parieto-occipital sulcus and IPS, in the vicinity of hV6. Not surprisingly, it represents mainly the peripheral visual field [82] (Fig. 6). The dorsal IPS medial and anterior (DIPSM and DIPSA) regions are located in the horizontal part of IPS, and both represent mainly the central visual field [82] (Fig. 6). They are considered the homologs of the anterior part of the lateral intraparietal (LIP) region (DIPSM) and the posterior part of the anterior intraparietal (AIP) region (DIPSA), and indeed DIPSA is located just behind the region referred to as the human homolog of AIP based on activation by grasping actions [117]. All these regions are also activated by 3D shape from motion [100], which, just like motion itself, has a much more extensive representation in human IPS than in macaque IPS (Fig. 7) [86, 118]. We have speculated that this might in part be due to the more extensive tool use in humans than in monkeys, and using a tool indeed activates DIPSM and DIPSA [119]. These different parietal regions may be engaged in different visuomotor control circuits, for example, in the control of heading [120] or tracking [121]. Furthermore, it has been shown that flicker is rejected gradually from hMT/V5+ to the more anterior IPS regions [72, 99, 122].

Human motion-sensitive regions: distinction between central and peripheral visual field. (a) Stimulus configuration in experiment 1: the randomly textured pattern (RTP) was positioned either centrally or 5° into left and right visual field (red dot indicates fixation point). (b and c) Statistical parametric maps (SPMs) showing voxels significant (yellow: p < 0.0001 uncorrected for multiple comparisons, corresponding to a false discovery rate of less than 5 % false positives; red: p < 0.001 uncorrected) in the group random-effects analysis (experiment 1, n = 16) for the subtraction moving minus stationary conditions for the centrally (b) and peripherally (right visual field) (c) positioned stimulus, rendered on the posterior and superior views of the standard human brain. Further statistical testing revealed that the interaction between type of stimulus (motion, stationary) and location (center, periphery) was significant (random effects analysis) in DIPSA (Z = 3.12, p < 0.001 uncorrected and Z = 3.58, p < 0.001 uncorrected for right and left, respectively), DIPSM (Z = 3.58, p < 0.001 uncorrected and Z = 4.35, p < 0.0001 uncorrected for right and left, respectively) and weakly in POIPS (Z = 2.69, p < 0.01 uncorrected and Z = 2.24, p < 0.01 uncorrected for right and left, respectively). (d) Overlap of voxels (p < 0.001 uncorrected; yellow) in the group random-effects analysis for the subtraction moving minus stationary conditions for the centrally (red) and peripherally (right and left visual field; green) positioned stimulus (experiment 1), rendered on the posterior and superior views of the standard human brain. (e) Stimulus configuration in experiment 2: RTP was positioned centrally or at 5° eccentricity on upper or lower vertical or horizontal meridian. 
(f–i) SPMs showing voxels significant (p < 0.05 corrected) in experiment 2 (n = 3) for the subtraction moving minus stationary conditions for the stimuli positioned in the central visual field (f, red), peripherally left and right on the horizontal meridian (g, green), and on the lower (h, blue) and upper vertical meridian (i, white), rendered on the superior view of the standard human brain (posterior part). (j) SPM showing voxels that are active only in the central condition (obtained by exclusive masking of the subtraction in (f) with those in (g–i)). The opposite procedure, subtractions (g–i) masked by that in (f), yielded no active voxels. R right, L left, VF visual field. White and yellow numbers in (a) and (e) indicate eccentricity and diameter, respectively. Numbers in (b–d) correspond to the activation sites listed: 1 and 8: hV3A; 2 and 9: lingual gyrus; 3, 10, and 11: hMT/V5+; 4: LOS; 5 and 12: VIPS; 13: POIPS; 6: DIPSM; and 7: DIPSA [82]

Visual cortical regions sensitive to 3D shape from motion in human and macaque. Statistical parametric maps (SPMs) for the subtraction viewing of 3D rotating lines minus viewing of 2D translating lines (p < 0.05, corrected) of a single human (a) and monkey (M4) (b) subject projected on the posterior part of the flattened right hemisphere. White stippled and solid lines: vertical and horizontal meridian projections (from separate retinotopic mapping experiments); black stippled lines: motion-responsive regions from separate motion localizing tests; purple stippled lines: region of interspecies difference encompassing V3 and intraparietal sulcus. PCS post-central sulcus, IPS intraparietal sulcus, LaS lateral sulcus, POS parieto-occipital sulcus, CAS calcarine sulcus, STS superior temporal sulcus, ITS inferior temporal sulcus, CoS collateral sulcus, IOS inferior occipital sulcus, OTS occipito-temporal sulcus, PMTS posterior middle temporal sulcus, AMTS anterior middle temporal sulcus (modified from Vanduffel et al. [86])

Further regions sensitive to motion, but not to 3D shape from motion, are V6 and premotor regions corresponding to the frontal eye field (FEF) [79, 100, 116], as well as a region in the posterior insula, caudal to the somatosensory opercular complex [123], which we refer to as posterior insular cortex (PIC) region [79, 118] and which might be the homolog of a visual region located next to the posterior insular vestibular cortex (PIVC) in macaques [14, 124, 125].

4.2 The Kinetic Occipital (KO) Region

Using kinetic gratings, that is, stimuli in which random dots move in opposite directions in alternate stripes, and comparing them to luminance gratings or uniform motion, our group [87, 126, 127] discovered a region located between V3/V3A and hMT/V5+ that appeared selective for kinetic boundaries and that we referred to as the kinetic occipital (KO) region. Recent work by Zeki et al. [128] has proposed that KO also responds to boundaries defined by other cues (e.g., color). These findings do not dispute the responsiveness of KO to kinetic gratings, as several groups have observed these responses [83, 129]. Although they have been presented differently, these findings are in fact consistent with our PET [127] and fMRI studies [87] showing responses in KO for both kinetic and luminance gratings, suggesting that KO responds to contours of different natures, not just kinetic contours. However, it is important to emphasize that, in contrast with hMT/V5+ and other motion-sensitive regions, KO is selective for kinetic contours as opposed to uniform motion. Thus, we meant selectivity in the motion domain, not in the domain of cues defining contours, when we stated [87] that KO is selective for kinetic boundaries. In the Van Oostende et al. study [87] we observed overlap of the KO region with the response to the LO localizer. Indeed, Larsson and Heeger [83], in their study identifying LO1/2, showed that the response to kinetic gratings compared to transparent motion, the contrast most sharply defining KO [87], was strongest in LO1 and V3A/B. The coordinates of LO1 [83] are very similar to those of KO (±31, −91, 0, and −32, −92, 0 [87]), supporting the identification of LO1 as the core region of KO. Thus KO is another functionally defined region that has been incorporated into retinotopic regions as those became known, the human motion area [66], or hMT/V5+, being the primary example, and EBA [130] another one [92].

4.3 High-Level Motion Area

All these motion-sensitive regions are low-level motion regions in the sense that they are driven by motion of light over the retina. Claeys et al. [99] provided evidence for an attention-based motion-sensitive region in the inferior parietal lobule (IPL). This region is activated by equiluminant color gratings in which one of the colors is more salient than the other, a paradigm tapping third-order motion [131, 132]. In addition, this region has a bilateral representation of the visual field, while all other motion-sensitive areas have mainly a contralateral representation.

5 Shape-Sensitive Regions

There is accumulating evidence that neuronal processes supporting object recognition are coarsely localized in the ventral visual stream [133] that contains a hierarchy of cortical processing stages (V1 → V2 → V4 → IT). The highest stages of this stream (i.e., anterior inferior temporal cortex, AIT or anterior TE in the monkey, and the rostral part of LOC in the human [20, 21, 134, 135]) are thought to be involved in shape processing and support object recognition (Fig. 8). But how are these neuronal representations that support object recognition constructed in the brain? In the monkey, the visual system has been suggested to recruit a hierarchical network of areas across the ventral visual pathway [133, 145] with selectivity for features of increasing complexity from early to later stages of processing [146]. Recent neuroimaging studies suggest a similar organization in the human brain. That is, local image features (e.g., position, orientation) are shown to be processed at the first stage of cortical processing (V1) [11, 37] while complex shapes and even abstract object categories (faces, bodies, places) are represented toward the end of the pathway in the LOC [147–150]. Combined monkey and human fMRI studies showed that the perception of global shapes involves both early (retinotopic) and higher (occipito-temporal) visual areas that may integrate local elements to global shapes at different spatial scales [151, 152]. However, unlike neurons in early visual areas that integrate local information about global shapes within the neighborhood of their receptive fields, neural populations in the LOC represent the perceived global form of objects. In particular, recent imaging studies [153] have shown fMRI adaptation in LOC when the perceived shape of visual stimuli was identical but the image contours differed (because occluding bars occurred in front of the shape in one stimulus and behind the shape in the other). 
In contrast, recovery from adaptation was observed when the contours were identical but the perceived shapes were different (because of a figure-ground reversal).

(a) Shape-sensitive regions in human occipital, temporal, and parietal cortex, adapted from Sawamura et al. [136]: yellow: voxels significant (p < 0.05, corrected) in the subtraction 32-objects minus identical condition; black and blue lines: borders of shape-sensitive regions (i.e., voxels significant in the subtraction intact vs. scrambled images) obtained by Sawamura et al. [136] and Denys et al. [20], respectively. Numbers: local maxima listed in black. (b) Face, place, and body patches in human occipital and occipito-temporal cortex: activations are projected onto the flattened right hemisphere of the fsaverage atlas. Face (red)- and body (dark blue)-selective regions: approximate probabilistic data of Engell and McCarthy [137]; dynamic facial expressions (ochre): real data from Zhu et al. [138]; ATFP: approximate data from Rajimehr et al. [139]; aSTS: approximate data from Pitcher et al. [140], showing Talairach coordinates of individual activations (local maxima). Scene patches (light blue) from Nasr et al. [141]. Biological motion sensitivity (green shape main effect, white kinematics main effect, white interaction): approximate data from Jastorff and Orban [142]. Regions sensitive to primate vocalizations (black outlines) and intelligible speech (dashed yellow outlines): real data from Joly et al. [143]. Retinotopy: approximate probabilistic data from PALS-B12 atlas; VIPS, POIPS, DIPSM, DIPSA, and phAIP (green dashed outlines) are approximate locations from Jastorff et al. [144]. White star is the central representation in V6

The idea of a single, general ventral stream processing objects has been contradicted by the recent findings of multiple specialized regions processing faces, bodies, and scenes (Fig. 8b [58]). This has led to the view that, in addition to a general-purpose object processing system housed in LOC, the human ventral pathway also includes category-specific processing regions [154]. This compromise is not very satisfactory, as it implies a dissociation between semantic and visual definitions of categories and is at odds with the fact that general-purpose mechanisms for categorization have been located in prefrontal and parietal cortex [155] and not in inferotemporal cortex of the monkey [156]. Therefore, we have recently proposed that the ventral visual pathway is organized in three stages [103]: first, a retinotopic stage, which includes the phPIT cluster, processing visual features of the image; second, the anterior part of LOC, corresponding to monkey TE, processing real world entities (RWE), a general term covering objects, faces, and bodies; and third, the temporal pole, processing known, complete RWEs. Furthermore, the second stage operates in parallel with more dorsal regions processing actions and more ventral regions processing scenes. This middle stage is subdivided into a more dorsal substream processing shape and a more ventral substream processing material properties (color, texture, etc.).

The parallel streams and substreams for general shape, faces, bodies, and material properties start in the rostral part of the retinotopic cortex, as shown by the overlap between the caudal face, body, and color patches and retinotopic cortex. For example, OFA overlaps with retinotopic cortex, as do the posterior two thirds of EBA [92]. At more anterior levels, i.e., the second and third stages, retinotopy is absent, as stated above. Central-periphery organization has been reported at this level [157, 158], but this is the simple consequence of the fact that faces require detail available in central vision while scenes require at least moderately large eccentricities. Finally, along these streams and substreams the visual information is gradually abstracted away from the image properties. This is best documented for the LOC and its monkey counterpart TE [23, 136, 146, 148, 159], i.e., the general shape substream, but likely applies to all (sub)streams [160]. In particular, representations in the anterior subregion of the LOC in the fusiform gyrus (pFs) were shown to be largely invariant to size and position, but not invariant to the direction of illumination and rotation around the vertical axis. In contrast, representations in the posterior subregion of the LOC in the lateral occipital (LO) cortex did not show size or position invariance [23, 24].

6 Depth Processing and 3D Shape Perception

Neurophysiological studies have revealed selectivity for binocular disparity at multiple levels of the visual hierarchy in the monkey brain from early visual areas, to object- and motion-selective areas and the parietal cortex (for reviews: [161–164]). Imaging studies have identified multiple human brain areas in the visual, temporal, and parietal cortex that show stronger activations for stimuli defined by binocular or monocular depth cues than for 2D versions of these stimuli. In particular, areas V3A [165–168] and V3B/LO1/KO [87, 128, 129, 169] have been implicated in the analysis of disparity-defined surfaces and boundaries. Furthermore, studies have employed parametric manipulations to investigate the neural correlates of surface depth (i.e., near vs. far) judgments [167, 170] and 3D shape perception [19]. Finally, several recent studies suggest that areas involved in disparity processing, primarily in the temporal and parietal cortex, are also engaged in the processing of monocular cues to depth (e.g., texture, motion, shading) [20, 86, 100, 171–178] and the combination of binocular and monocular cues for depth perception [179].

Depth relates to the distance from the fixation point and needs to be combined with eye position information to yield distance from the observer. The derivatives of depth provide information about surface orientation and object shape. Gradient-selective neurons extracting these derivatives from monocular or binocular image(s) have been amply documented in parietal and temporal cortex of the monkey [15]. A systematic set of fMRI studies [15] has documented the parietal and temporal regions involved in this extraction in humans. While 3D shape from texture, motion, and disparity is extracted in both dorsal and ventral pathways, 3D shape from shading is predominantly processed in ventral regions close to the phPIT cluster. The systematic combination of single-cell recordings and monkey and human fMRI with identical stimuli has made it possible to infer the presence of gradient-selective neurons in some of the human regions, such as pFST or DIPSA [15, 58].

7 Processing of Observed Actions

The visual processing of actions performed by others has been largely neglected in studies of the visual system [180]. Recent studies [144, 181] have shown that this information is processed in regions homologous to the upper and lower bank of middle and rostral STS of the monkey [112, 182]: posterior MTG/pSTG and posterior OTS/posterior fusiform cortex respectively. These areas are also involved in processing biological motion [112, 142, 183].

8 Conclusions

The human visual system likely includes about 40–45 cortical areas. About two thirds of these have been identified so far using retinotopic mapping, which proved more efficient than functional properties or morphological features. Further progress can be expected from mapping retinotopic organization with functionally more specific stimuli than black and white checkerboards and from mapping higher-order visual attributes, such as 3D shape or actions, combined with detection of gradients in maps relying on morphological features and/or connections [184].

References

Van Essen DC (2004) Organization of visual areas in macaque and human cerebral cortex. In: Chalupa LM, Werner JS (eds) The visual neurosciences, vol 1. MIT Press, Cambridge, MA, pp 507–521

Nelissen K, Vanduffel W, Orban GA (2006) Charting the lower superior temporal region, a new motion-sensitive region in monkey superior temporal sulcus. J Neurosci 26:5929–5947

Orban GA, Van Essen D, Vanduffel W (2004) Comparative mapping of higher visual areas in monkeys and humans. Trends Cogn Sci 8:315–324

Glasser MF, Van Essen DC (2011) Mapping human cortical areas in vivo based on myelin content as revealed by T1- and T2-weighted MRI. J Neurosci 31:11597–11616

Dougherty RF et al (2005) Occipital-callosal pathways in children: validation and atlas development. Ann N Y Acad Sci 1064:98–112

Schmahmann JD et al (2007) Association fibre pathways of the brain: parallel observations from diffusion spectrum imaging and autoradiography. Brain 130:630–653

Van Essen DC, Jbabdi S, Sotiropoulos SN, Chen C, Dikranian K, Coalson T, Harwell J, Behrens TE, Glasser MF (2013) Mapping connections in humans and non-human primates: aspirations and challenges for diffusion imaging. In: Johansen-Berg H, Behrens TE (eds) Diffusion MRI: from quantitative measurement to in vivo neuroanatomy. Academic, Amsterdam

Krekelberg B, Boynton GM, van Wezel RJA (2006) Adaptation: from single cells to BOLD signals. Trends Neurosci 29:250–256

Kovács G, Kaiser D, Kaliukhovich DA, Vidnyánszky Z, Vogels R (2013) Repetition probability does not affect fMRI repetition suppression for objects. J Neurosci 33:9805–9812

Sawamura H, Orban GA, Vogels R (2006) Selectivity of neuronal adaptation does not match response selectivity: a single-cell study of the fMRI adaptation paradigm. Neuron 49:307–318

Haynes J-D, Rees G (2006) Decoding mental states from brain activity in humans. Nat Rev Neurosci 7:523–534

Vogels R, Orban GA (1990) How well do response changes of striate neurons signal differences in orientation: a study in the discriminating monkey. J Neurosci 10:3543–3558

Haynes J-D, Rees G (2005) Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nat Neurosci 8:686–691

Vanduffel W et al (2001) Visual motion processing investigated using contrast agent-enhanced fMRI in awake behaving monkeys. Neuron 32:565–577

Orban GA (2011) The extraction of 3D shape in the visual system of human and nonhuman primates. Annu Rev Neurosci 34:361–388

Orban GA (2002) Functional MRI in the awake monkey: the missing link. J Cogn Neurosci 14:965–969

Boynton GM, Demb JB, Glover GH, Heeger DJ (1999) Neuronal basis of contrast discrimination. Vision Res 39:257–269

Zenger-Landolt B, Heeger DJ (2003) Response suppression in V1 agrees with psychophysics of surround masking. J Neurosci 23:6884–6893

Chandrasekaran C, Canon V, Dahmen JC, Kourtzi Z, Welchman AE (2007) Neural correlates of disparity-defined shape discrimination in the human brain. J Neurophysiol 97:1553–1565

Denys K et al (2004) The processing of visual shape in the cerebral cortex of human and nonhuman primates: a functional magnetic resonance imaging study. J Neurosci 24:2551–2565

Kourtzi Z, Kanwisher N (2000) Cortical regions involved in perceiving object shape. J Neurosci 20:3310–3318

Buckner RL et al (1998) Functional-anatomic correlates of object priming in humans revealed by rapid presentation event-related fMRI. Neuron 20:285–295

Grill-Spector K et al (1999) Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron 24:187–203

Grill-Spector K, Malach R (2001) fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol (Amst) 107:293–321

Grill-Spector K, Henson R, Martin A (2006) Repetition and the brain: neural models of stimulus-specific effects. Trends Cogn Sci 10:14–23

Koutstaal W et al (2001) Perceptual specificity in visual object priming: functional magnetic resonance imaging evidence for a laterality difference in fusiform cortex. Neuropsychologia 39:184–199

Vuilleumier P, Henson RN, Driver J, Dolan RJ (2002) Multiple levels of visual object constancy revealed by event-related fMRI of repetition priming. Nat Neurosci 5:491–499

Lisberger SG, Movshon JA (1999) Visual motion analysis for pursuit eye movements in area MT of macaque monkeys. J Neurosci 19:2224–2246

Müller JR, Metha AB, Krauskopf J, Lennie P (1999) Rapid adaptation in visual cortex to the structure of images. Science 285:1405–1408

Tootell RB et al (1995) Visual motion aftereffect in human cortical area MT revealed by functional magnetic resonance imaging. Nature 375:139–141

Huk AC, Ress D, Heeger DJ (2001) Neuronal basis of the motion aftereffect reconsidered. Neuron 32:161–172

Huk AC, Heeger DJ (2002) Pattern-motion responses in human visual cortex. Nat Neurosci 5:72–75

Engel SA, Furmanski CS (2001) Selective adaptation to color contrast in human primary visual cortex. J Neurosci 21:3949–3954

Tolias AS, Smirnakis SM, Augath MA, Trinath T, Logothetis NK (2001) Motion processing in the macaque: revisited with functional magnetic resonance imaging. J Neurosci 21:8594–8601

Norman KA, Polyn SM, Detre GJ, Haxby JV (2006) Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn Sci 10:424–430

Cox DD, Savoy RL (2003) Functional magnetic resonance imaging (fMRI) “brain reading”: detecting and classifying distributed patterns of fMRI activity in human visual cortex. Neuroimage 19:261–270

Kamitani Y, Tong F (2005) Decoding the visual and subjective contents of the human brain. Nat Neurosci 8:679–685

Kamitani Y, Tong F (2006) Decoding seen and attended motion directions from activity in the human visual cortex. Curr Biol 16:1096–1102

Williams MA, Dang S, Kanwisher NG (2007) Only some spatial patterns of fMRI response are read out in task performance. Nat Neurosci 10:685–686

O’Toole AJ, Jiang F, Abdi H, Haxby JV (2005) Partially distributed representations of objects and faces in ventral temporal cortex. J Cogn Neurosci 17:580–590

Hanson SJ, Matsuka T, Haxby JV (2004) Combinatorial codes in ventral temporal lobe for object recognition: Haxby (2001) revisited: is there a “face” area? Neuroimage 23:156–166

Haxby JV et al (2001) Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293:2425–2430

Serences JT, Saproo S, Scolari M, Ho T, Muftuler LT (2009) Estimating the influence of attention on population codes in human visual cortex using voxel-based tuning functions. Neuroimage 44:223–231

Friston KJ, Rotshtein P, Geng JJ, Sterzer P, Henson RN (2006) A critique of functional localisers. Neuroimage 30:1077–1087

Saxe R, Brett M, Kanwisher N (2006) Divide and conquer: a defense of functional localizers. Neuroimage 30:1088–1096

Fox PT et al (1986) Mapping human visual cortex with positron emission tomography. Nature 323:806–809

Schneider W, Noll DC, Cohen JD (1993) Functional topographic mapping of the cortical ribbon in human vision with conventional MRI scanners. Nature 365:150–153

Shipp S, Watson JD, Frackowiak RS, Zeki S (1995) Retinotopic maps in human prestriate visual cortex: the demarcation of areas V2 and V3. Neuroimage 2:125–132

Engel SA et al (1994) fMRI of human visual cortex. Nature 369:525

Dougherty RF et al (2003) Visual field representations and locations of visual areas V1/2/3 in human visual cortex. J Vis 3:586–598

Sereno MI et al (1995) Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science 268:889–893

DeYoe EA et al (1996) Mapping striate and extrastriate visual areas in human cerebral cortex. Proc Natl Acad Sci U S A 93:2382–2386

Engel SA, Glover GH, Wandell BA (1997) Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cereb Cortex 7:181–192

Georgieva S, Peeters R, Kolster H, Todd JT, Orban GA (2009) The processing of three-dimensional shape from disparity in the human brain. J Neurosci 29:727–742

Kolster H, Peeters R, Orban GA (2010) The retinotopic organization of the human middle temporal area MT/V5 and its cortical neighbors. J Neurosci 30:9801–9820

Abdollahi RO et al (2014) Correspondences between retinotopic areas and myelin maps in human visual cortex. Neuroimage 99:509–524

Robinson EC et al (2013) Multimodal surface matching: fast and generalisable cortical registration using discrete optimisation. Lect Notes Comput Sci 7917:475–486

Vanduffel W, Zhu Q, Orban GA (2014) Monkey cortex through fMRI glasses. Neuron 83:533–550

Lyon DC, Kaas JH (2002) Evidence for a modified V3 with dorsal and ventral halves in macaque monkeys. Neuron 33:453–461

Rosa MGP, Tweedale R (2005) Brain maps, great and small: lessons from comparative studies of primate visual cortical organization. Philos Trans R Soc Lond B Biol Sci 360:665–691

Duncan RO, Boynton GM (2003) Cortical magnification within human primary visual cortex correlates with acuity thresholds. Neuron 38:659–671

Adams DL, Sincich LC, Horton JC (2007) Complete pattern of ocular dominance columns in human primary visual cortex. J Neurosci 27:10391–10403

Van Essen DC, Newsome WT, Maunsell JHR (1984) The visual field representation in striate cortex of the macaque monkey: asymmetries, anisotropies, and individual variability. Vision Res 24:429–448

Fischl B et al (2008) Cortical folding patterns and predicting cytoarchitecture. Cereb Cortex 18:1973–1980

Hagmann P et al (2008) Mapping the structural core of human cerebral cortex. PLoS Biol 6:1479–1493

Zeki S et al (1991) A direct demonstration of functional specialization in human visual cortex. J Neurosci 11:641–649

Watson JD et al (1993) Area V5 of the human brain: evidence from a combined study using positron emission tomography and magnetic resonance imaging. Cereb Cortex 3:79–94

Tootell RB et al (1995) Functional analysis of human MT and related visual cortical areas using magnetic resonance imaging. J Neurosci 15:3215–3230

Huk AC, Dougherty RF, Heeger DJ (2002) Retinotopy and functional subdivision of human areas MT and MST. J Neurosci 22:7195–7205

Dukelow SP et al (2001) Distinguishing subregions of the human MT+ complex using visual fields and pursuit eye movements. J Neurophysiol 86:1991–2000

Smith AT, Wall MB, Williams AL, Singh KD (2006) Sensitivity to optic flow in human cortical areas MT and MST. Eur J Neurosci 23:561–569

Van Essen DC, Maunsell JH, Bixby JL (1981) The middle temporal visual area in the macaque: myeloarchitecture, connections, functional properties and topographic organization. J Comp Neurol 199:293–326

Fize D et al (2003) The retinotopic organization of primate dorsal V4 and surrounding areas: a functional magnetic resonance imaging study in awake monkeys. J Neurosci 23:7395–7406

Kolster H et al (2009) Visual field map clusters in macaque extrastriate visual cortex. J Neurosci 29:7031–7039

Tootell RB, Hadjikhani N (2001) Where is “dorsal V4” in human visual cortex? Retinotopic, topographic and functional evidence. Cereb Cortex 11:298–311

Tanaka K et al (1986) Analysis of local and wide-field movements in the superior temporal visual areas of the macaque monkey. J Neurosci 6:134–144

Morrone MC et al (2000) A cortical area that responds specifically to optic flow, revealed by fMRI. Nat Neurosci 3:1322–1328

Tootell RB et al (1997) Functional analysis of V3A and related areas in human visual cortex. J Neurosci 17:7060–7078

Sunaert S, Van Hecke P, Marchal G, Orban GA (1999) Motion-responsive regions of the human brain. Exp Brain Res 127:355–370

Press WA, Brewer AA, Dougherty RF, Wade AR, Wandell BA (2001) Visual areas and spatial summation in human visual cortex. Vision Res 41:1321–1332

Wandell BA, Dumoulin SO, Brewer AA (2007) Visual field maps in human cortex. Neuron 56:366–383

Orban GA et al (2006) Mapping the parietal cortex of human and non-human primates. Neuropsychologia 44:2647–2667

Larsson J, Heeger DJ (2006) Two retinotopic visual areas in human lateral occipital cortex. J Neurosci 26:13128–13142

Lyon DC, Kaas JH (2002) Evidence from V1 connections for both dorsal and ventral subdivisions of V3 in three species of new world monkeys. J Comp Neurol 449:281–297

Gattass R, Sousa AP, Gross CG (1988) Visuotopic organization and extent of V3 and V4 of the macaque. J Neurosci 8:1831–1845

Vanduffel W et al (2002) Extracting 3D from motion: differences in human and monkey intraparietal cortex. Science 298:413–415

Van Oostende S, Sunaert S, Van Hecke P, Marchal G, Orban GA (1997) The kinetic occipital (KO) region in man: an fMRI study. Cereb Cortex 7:690–701

Nelissen K, Vanduffel W, Sunaert S, Janssen P, Tootell RB, Orban GA (2000) Processing of kinetic boundaries investigated using fMRI and double-label deoxyglucose technique in awake monkeys. Soc Neurosci Abstr 26:1584

Brewer AA, Liu J, Wade AR, Wandell BA (2005) Visual field maps and stimulus selectivity in human ventral occipital cortex. Nat Neurosci 8:1102–1109

Smith AT, Greenlee MW, Singh KD, Kraemer FM, Hennig J (1998) The processing of first- and second-order motion in human visual cortex assessed by functional magnetic resonance imaging (fMRI). J Neurosci 18:3816–3830

Hansen KA, Kay KN, Gallant JL (2007) Topographic organization in and near human visual area V4. J Neurosci 27:11896–11911

Ferri S, Kolster H, Jastorff J, Orban GA (2013) The overlap of the EBA and the MT/V5 cluster. Neuroimage 66:412–425

Boussaoud D, Desimone R, Ungerleider LG (1991) Visual topography of area TEO in the macaque. J Comp Neurol 306:554–575

Janssens T, Zhu Q, Popivanov ID, Vanduffel W (2014) Probabilistic and single-subject retinotopic maps reveal the topographic organization of face patches in the macaque cortex. J Neurosci 34:10156–10167

Kolster H, Janssens T, Orban GA, Vanduffel W (2014) The retinotopic organization of macaque occipitotemporal cortex anterior to V4 and caudoventral to the middle temporal (MT) cluster. J Neurosci 34:10168–10191

Wandell BA, Brewer AA, Dougherty RF (2005) Visual field map clusters in human cortex. Philos Trans R Soc Lond B Biol Sci 360:693–707

Tootell RBH, Tsao D, Vanduffel W (2003) Neuroimaging weighs in: humans meet macaques in “primate” visual cortex. J Neurosci 23:3981–3989

Swisher JD, Halko MA, Merabet LB, McMains SA, Somers DC (2007) Visual topography of human intraparietal sulcus. J Neurosci 27:5326–5337

Claeys KG, Lindsey DT, De Schutter E, Orban GA (2003) A higher order motion region in human inferior parietal lobule: evidence from fMRI. Neuron 40:631–642

Orban GA, Sunaert S, Todd JT, Van Hecke P, Marchal G (1999) Human cortical regions involved in extracting depth from motion. Neuron 24:929–940

Kolster H, Peeters R, Orban GA (2011) Ten retinotopically organized areas in human parietal cortex. Soc Neurosci Abstr 851.10

Arcaro MJ, Pinsk MA, Li X, Kastner S (2011) Visuotopic organization of macaque posterior parietal cortex: a functional magnetic resonance imaging study. J Neurosci 31:2064–2078

Orban GA, Zhu Q, Vanduffel W (2014) The transition in the ventral stream from feature to real-world entity representations. Front Psychol 5:695

Pitzalis S et al (2006) Wide-field retinotopy defines human cortical visual area V6. J Neurosci 26:7962–7973

Pitzalis S et al (2013) The human homologue of macaque area V6A. Neuroimage 82:517–530

Konen CS, Kastner S (2008) Two hierarchically organized neural systems for object information in human visual cortex. Nat Neurosci 11:224–231

Silver MA, Ress D, Heeger DJ (2005) Topographic maps of visual spatial attention in human parietal cortex. J Neurophysiol 94:1358–1371

Sereno MI, Pitzalis S, Martinez A (2001) Mapping of contralateral space in retinotopic coordinates by a parietal cortical area in humans. Science 294:1350–1354

Schluppeck D, Curtis CE, Glimcher PW, Heeger DJ (2006) Sustained activity in topographic areas of human posterior parietal cortex during memory-guided saccades. J Neurosci 26:5098–5108

Schluppeck D, Glimcher P, Heeger DJ (2005) Topographic organization for delayed saccades in human posterior parietal cortex. J Neurophysiol 94:1372–1384

Wang L, Mruczek REB, Arcaro MJ, Kastner S (2015) Probabilistic maps of visual topography in human cortex. Cereb Cortex 25:3911–3931

Orban GA, Jastorff J (2014) Functional mapping of motion regions in human and nonhuman primates. In: Chalupa LM, Werner JS (eds) The new visual neuroscience, vol 1. MIT Press, Cambridge, MA, pp 777–791

Arcaro MJ, McMains SA, Singer BD, Kastner S (2009) Retinotopic organization of human ventral visual cortex. J Neurosci 29:10638–10652

Sunaert S, Van Hecke P, Marchal G, Orban GA (2000) Attention to speed of motion, speed discrimination, and task difficulty: an fMRI study. Neuroimage 11:612–623

Rees G, Friston K, Koch C (2000) A direct quantitative relationship between the functional properties of human and macaque V5. Nat Neurosci 3:716–723

Pitzalis S et al (2010) Human V6: the medial motion area. Cereb Cortex 20:411–424

Binkofski F et al (1998) Human anterior intraparietal area subserves prehension: a combined lesion and functional MRI activation study. Neurology 50:1253–1259

Orban GA et al (2003) Similarities and differences in motion processing between the human and macaque brain: evidence from fMRI. Neuropsychologia 41:1757–1768

Stout D, Chaminade T (2007) The evolutionary neuroscience of tool making. Neuropsychologia 45:1091–1100

Peuskens H et al (2001) Human brain regions involved in heading estimation. J Neurosci 21:2451–2461

Gori M et al (2012) Long integration time for accelerating and decelerating visual, tactile and visuo-tactile stimuli. Multisens Res 26:53–68

Braddick OJ, O’Brien JMD, Wattam-Bell J, Atkinson J, Turner R (2000) Form and motion coherence activate independent, but not dorsal/ventral segregated, networks in the human brain. Curr Biol 10:731–734

Eickhoff SB, Grefkes C, Zilles K, Fink GR (2007) The somatotopic organization of cytoarchitectonic areas on the human parietal operculum. Cereb Cortex 17:1800–1811

Grüsser OJ, Pause M, Schreiter U (1990) Vestibular neurones in the parieto-insular cortex of monkeys (Macaca fascicularis): visual and neck receptor responses. J Physiol 430:559–583

Grüsser O-J, Guldin WO, Mirring S, Salah-Eldin A (1994) Comparative physiological and anatomical studies of the primate vestibular cortex. In: Albowitz B, Albus K, Kuhnt U, Nothdurft H-C, Wahle P (eds) Structural and functional organization of the neocortex. Proceedings of a Symposium in the Memory of Otto D. Creutzfeldt, May 1993, Exp Brain Res Series 24. pp 358–371

Orban GA et al (1995) A motion area in human visual cortex. Proc Natl Acad Sci U S A 92:993–997

Dupont P et al (1997) The kinetic occipital region in human visual cortex. Cereb Cortex 7:283–292

Zeki S, Perry RJ, Bartels A (2003) The processing of kinetic contours in the brain. Cereb Cortex 13:189–202

Tyler CW, Likova LT, Kontsevich LL, Wade AR (2006) The specificity of cortical region KO to depth structure. Neuroimage 30:228–238

Downing PE, Jiang Y, Shuman M, Kanwisher N (2001) A cortical area selective for visual processing of the human body. Science 293:2470–2473

Lu ZL, Sperling G (1995) Attention-generated apparent motion. Nature 377:237–239

Lu ZL, Lesmes LA, Sperling G (1999) The mechanism of isoluminant chromatic motion perception. Proc Natl Acad Sci U S A 96:8289–8294

Ungerleider LG, Mishkin M (1982) Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW (eds) Analysis of visual behavior. MIT Press, Cambridge, MA, pp 549–586

Malach R et al (1995) Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci U S A 92:8135–8139

Kanwisher N, Chun MM, McDermott J, Ledden PJ (1996) Functional imaging of human visual recognition. Cogn Brain Res 5:55–67

Sawamura H, Georgieva S, Vogels R, Vanduffel W, Orban GA (2005) Using functional magnetic resonance imaging to assess adaptation and size invariance of shape processing by humans and monkeys. J Neurosci 25:4294–4306

Engell AD, McCarthy G (2013) Probabilistic atlases for face and biological motion perception: an analysis of their reliability and overlap. Neuroimage 74:140–151

Zhu Q et al (2012) Dissimilar processing of emotional facial expressions in human and monkey temporal cortex. Neuroimage 66:402–411

Rajimehr R, Young JC, Tootell RBH (2009) An anterior temporal face patch in human cortex, predicted by macaque maps. Proc Natl Acad Sci U S A 106:1995–2000

Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N (2011) Differential selectivity for dynamic versus static information in face-selective cortical regions. Neuroimage 56:2356–2363

Nasr S et al (2011) Scene-selective cortical regions in human and nonhuman primates. J Neurosci 31:13771–13785

Jastorff J, Orban GA (2009) Human functional magnetic resonance imaging reveals separation and integration of shape and motion cues in biological motion processing. J Neurosci 29:7315–7329

Joly O et al (2012) Processing of vocalizations in humans and monkeys: a comparative fMRI study. Neuroimage 62:1376–1389

Jastorff J, Begliomini C, Fabbri-Destro M, Rizzolatti G, Orban GA (2010) Coding observed motor acts: different organizational principles in the parietal and premotor cortex of humans. J Neurophysiol 104:128–140

Felleman DJ, Van Essen DC (1991) Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex 1:1–47

Tanaka K, Saito H, Fukada Y, Moriya M (1991) Coding visual images of objects in the inferotemporal cortex of the macaque monkey. J Neurophysiol 66:170–189

Grill-Spector K, Malach R (2004) The human visual cortex. Annu Rev Neurosci 27:649–677

Quiroga RQ, Reddy L, Kreiman G, Koch C, Fried I (2005) Invariant visual representation by single neurons in the human brain. Nature 435:1102–1107

Reddy L, Kanwisher N (2006) Coding of visual objects in the ventral stream. Curr Opin Neurobiol 16:408–414

Privman E et al (2007) Enhanced category tuning revealed by intracranial electroencephalograms in high-order human visual areas. J Neurosci 27:6234–6242

Altmann CF, Bülthoff HH, Kourtzi Z (2003) Perceptual organization of local elements into global shapes in the human visual cortex. Curr Biol 13:342–349

Kourtzi Z, Tolias AS, Altmann CF, Augath M, Logothetis NK (2003) Integration of local features into global shapes: monkey and human fMRI studies. Neuron 37:333–346