Abstract

The brain must solve a wide range of different temporal problems, each of which can be defined by a relevant time scale and specific functional requirements. Experimental and theoretical studies suggest that some forms of timing reflect general and inherent properties of local neural networks. Like the ripples on a pond, neural networks represent rich dynamical systems that can produce time-varying patterns of activity in response to a stimulus. State-dependent network models propose that sensory timing arises from the interaction between incoming stimuli and the internal dynamics of recurrent neural circuits. A wide variety of time-dependent neural properties, such as short-term synaptic plasticity, are important contributors to the internal dynamics of neural circuits. In contrast to sensory timing, motor timing requires that a network actively generate appropriately timed spikes even in the absence of sensory stimuli. Population clock models propose that motor timing arises from the internal dynamics of recurrent networks capable of self-perpetuating activity.

Introduction

The nervous system evolved to allow animals to adapt to and anticipate events in a dynamic world. Thus the need to tell time was among the earliest forces shaping the evolution of the nervous system. But telling time is not a singular biological problem: estimating the speed of moving objects, determining the interval between syllables, or anticipating when the sun will rise, are all temporal problems with distinct computational requirements. Because of the inherent complexity, diversity, and importance of time to animal evolution, biology has out of necessity devised numerous solutions to the problem of time.

Humans and other animals time events across a wide range of temporal scales, ranging from microsecond differences in the time it takes sound to arrive at the right and left ears, to our daily sleep-wake cycles, and beyond if we consider the timing of infradian rhythms such as menstrual cycles. At a societal and technological level humans also keep track of time over many orders of magnitude, from the nanosecond accuracy of the atomic clocks used for global-positioning systems to the clocking of our yearly trip around the sun. It is noteworthy that in the technological realm we can use the same devices to tell the time across the full spectrum of time scales: for example, atomic clocks are used to time nanosecond delays in the arrival of signals from different satellites, as well as to make adjustments to the calendar year. Virtually all modern man-made clocks, from an atomic clock to a grandfather clock, rely on the same simple principle: an oscillator that generates events at some fixed interval and a counter that integrates events (“ticks”) to provide an estimate of time with a resolution equal to the period of the oscillator. In stark contrast, evolution has devised fundamentally different mechanisms for timing across different time scales, and even multiple mechanisms to solve temporal problems within the same time scale. The fact that there are numerous biological solutions to the problem of telling time likely reflects a number of factors. First, the biological building blocks of the brain lack the speed, accuracy, and counting precision of the electronic components that underlie modern man-made clocks. Second, the features required of a biological timer vary depending on whether its function is to process speech or to control the circadian fluctuations of sleep-wake cycles. Third, different temporal problems, such as sound localization, capturing the temporal structure of animal vocalizations, or estimating when the sun will rise, emerged hundreds of millions of years apart during evolution, and were thus subject to entirely different evolutionary pressures and potential solutions. The result is that while animals need to discriminate both the microsecond differences between the arrival of sounds at each ear and the hours that govern their sleep-wake cycles, the timing mechanisms responsible for these two tasks have nothing in common. In other words, the “clock” responsible for millisecond timing does not have an hour hand, and our circadian clock does not have a second hand.

For the above reasons, any discussion of timing should be constrained to specific scales and tasks. This chapter will focus on the scale of tens of milliseconds to a few seconds. It is within this range that the most sophisticated forms of timing lie. Computationally speaking, timing on the shortest and longest scales is mostly limited to the detection of isolated intervals and durations. But within the scale of tens of milliseconds to seconds, the brain must process and generate complex temporal patterns. It is within this range that most animals generate and decode the complex temporal structure of the auditory signals used for communication. For example, in human language, the durations of and intervals between different speech segments are critical on many different levels, from the timing of the intervals between syllables and words [1–4] to the overall prosody, in which the rhythm and speed of speech influence our interpretation of affect [5]. For example, the pauses between words contribute to the interpretation of ambiguous sentences such as “Kate or Pat and Tony will come to the party” (i.e., will Kate or Pat, as well as Tony, come, versus will Kate, or Pat and Tony, come) [2]. Additionally, on the motor side, the complex motor patterns necessary for speech production, for playing the piano, or for the highly coordinated movements animals must perform to hunt are heavily dependent on the brain’s ability to produce timed motor outputs [6]. Perhaps the easiest way to express the unique sophistication of temporal processing on the scale of tens of milliseconds to seconds is by pointing out that human language can be effectively reduced to a purely temporal code. In Morse code there is a single communication channel and all information is transmitted in the order, interval, duration, and pattern of events. It is a testament to the brain’s ability to process temporal information that humans can learn to communicate with Morse code, but this ability is constrained to a specific time scale: the brain simply does not have the hardware to understand Morse code with ‘dot’ and ‘dash’ durations of a few milliseconds or of many seconds. The ability to process complex patterns is lost at both very fast and very slow speeds!

In this chapter we will focus on a class of models mentioned in the fourth chapter (Hass and Durstewitz, this volume), termed state-dependent networks, that offers a general framework for the mechanisms underlying timing on the scale of tens of milliseconds to a few seconds. This class of models is unique in that it provides a framework for both simple forms of interval and duration discrimination and the processing of complex spatiotemporal patterns characteristic of speech or Morse code. A key principle in this framework is that, precisely because timing is such an important computational problem, neurons and neural circuits are proposed to have evolved to solve temporal problems, and thus timing on the scale of tens of milliseconds to a few seconds should be seen as an intrinsic, as opposed to a dedicated (fifth chapter), computation. Under this framework, timing is simply one of the main computational tasks neural networks were “designed” to perform.

Timing with Neural Dynamics

The principle underlying most man-made clocks is that by counting the cycles of an oscillator that ticks at a fixed frequency it is possible to keep track of time. It is important to note, however, that there are ways to tell time that do not rely on oscillators. In principle, any dynamic system, regardless of whether it exhibits periodicity or not, can potentially be used to tell time. Indeed, this statement is a truism, since dynamics refers to systems that change over time. Consider a child sliding down a water slide: if she goes down from the same initial position every time, she will take approximately the same amount of time to reach the bottom every time. We could mark the slide to represent 1 s intervals, with smaller spacings at the top and larger spacings at the bottom, where the velocity is higher. Thus as the child crosses the different lines we could tell if she started approximately 1, 2, 3, or 4 s ago. The point is that any dynamical system that can follow the same trajectory again and again has information about time. Indeed, in his famous experiments on motion Galileo applied this same concept when analyzing the speed at which a ball rolls down an inclined plane.
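The water-slide example can be made concrete with a small sketch. It assumes, purely for illustration, a frictionless slide with constant acceleration starting from rest; the value of `ACCEL` and the function names are hypothetical and not taken from the text. Under that assumption the position along the slide is a reproducible function of time, so a position can be inverted to read off elapsed time.

```python
import math

ACCEL = 2.0  # m/s^2, assumed constant acceleration along the slide (illustrative)

def position(t):
    """Distance travelled (m) after t seconds, starting from rest at the top."""
    return 0.5 * ACCEL * t ** 2

def elapsed_time(x):
    """Invert the trajectory: read elapsed time (s) off a position on the slide."""
    return math.sqrt(2.0 * x / ACCEL)

# Marks painted on the slide at 1 s intervals.
marks = [position(t) for t in range(1, 5)]

# Distance between successive marks: small near the top, larger near the bottom.
spacings = [b - a for a, b in zip([0.0] + marks, marks)]

print("marks (m):   ", marks)
print("spacings (m):", spacings)
print("time at the third mark (s):", elapsed_time(marks[2]))
```

The spacings grow down the slide because the velocity increases, which is why equal time steps correspond to unequal distances. No oscillator or counter is involved: the state of the system itself carries the time.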

A slightly more appropriate analogy to prepare us for how neural dynamics can be used to tell time is a liquid. A pebble thrown into a pond will create a spatiotemporal pattern of ripples: the concentric waves that travel outwards from the point of entry. If you were shown two pictures of these ripples you could easily tell which picture was taken first based on the diameter of the ripple pattern, and importantly, with some knowledge of the system and a bit of math, you could estimate how long after the pebble was thrown in each picture was taken. Now let’s consider what happens when we throw in a second pebble: the pattern produced by the second pebble will be a complex interaction between the internal state of the liquid (the current pattern of ripples) and the perturbation produced by the new pebble. In other words, the ripple pattern produced by the second pebble will be a function of the inter-pebble interval, because of the interaction between the internal state of the system and subsequent “inputs”. As we will see below, this notion of an evolving internal state, and of the interaction between that internal state and new inputs, is key to state-dependent network models, particularly in the context of sensory timing.

Networks of neurons are complex dynamic systems; not just any dynamic systems, but arguably among the most complex dynamic systems known. Defining the internal state of a neural network, however, is not as straightforward as it might seem, so it will be useful to distinguish between two components that characterize the state of neural networks: the active state and the hidden state.

Active States

Traditionally, the state of a neural network is defined by which neurons are firing at a given point in time. I will refer to this as the active state. We can formally define the active state of a network composed of N neurons as an N-dimensional vector composed of zeros and ones, where a zero signifies that a neuron is not firing and a one means that it is (depending on the size of the time bin we can also represent each value as a real number representing the firing rate). Such a vector forms a point in N-dimensional space, and defines which neurons are active at a time point t. Over the course of multiple time bins these points form a path (a neural trajectory) through state space (Fig. 1A). Because the trajectory plays out in time, each point can potentially be used to tell time. One of the first models to suggest that the changing population of active neurons can be used to encode time was put forth by Michael Mauk in the context of the cerebellum [7–9]. The cerebellum has a class of neurons termed granule cells, and these are the most common type of neuron in your brain: more than half the neurons in the brain are granule cells [10]. Mauk proposed that one reason there are so many granule cells is that they code not only for a particular stimulus or body position but also for the amount of time that has elapsed since any given stimulus was presented. The model assumes that a stimulus will trigger a certain population of active granule cells, and that at each time point t + 1 this neuronal population will change, effectively creating a neural trajectory that plays out in time. Why would the population of granule cells change in time in the presence of a constant (non-time-varying) stimulus? The answer lies in the recurrency, or feedback, that is characteristic of many of the brain’s circuits. As we will see below, the recurrency can ensure that which neurons are active at time t depends not only on the synapses that are directly activated by the input, but also on the ongoing activity within the network; thus the neurons active at t + 1 are a function of both the input and which neurons were active at t. Under the appropriate conditions feedback mechanisms can create continuously changing patterns of activity (neural trajectories) that encode time.
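The idea that the active state at t + 1 depends on both the input and the active state at t can be illustrated with a deliberately minimal sketch. The network below is a hand-wired chain of six binary threshold units, which is an assumption made for clarity and is not Mauk's cerebellar model; real recurrent circuits are far richer. All names and parameter values are hypothetical. Even though the input is constant, the population of active units changes at every step, so each state along the trajectory identifies how many steps have elapsed.

```python
N = 6          # number of units (illustrative)
THRESHOLD = 1  # a unit fires when its summed drive reaches this value

# W[i][j] is the weight from unit j onto unit i; here each unit excites the next.
W = [[1 if j == i - 1 else 0 for j in range(N)] for i in range(N)]

# Constant (non-time-varying) stimulus that drives only the first unit.
INPUT = [1] + [0] * (N - 1)

def step(state, inp):
    """Active state at t + 1 as a function of the input and the state at t."""
    new_state = []
    for i in range(N):
        drive = inp[i] + sum(W[i][j] * state[j] for j in range(N))
        new_state.append(1 if drive >= THRESHOLD else 0)
    return new_state

def run(n_steps):
    """Return the neural trajectory: the list of active states over time."""
    state = [0] * N
    trajectory = [state]
    for _ in range(n_steps):
        state = step(state, INPUT)
        trajectory.append(state)
    return trajectory

def decode_time(state, trajectory):
    """Read out elapsed time as the position of a state along the trajectory."""
    return trajectory.index(state)

trajectory = run(N)
for t, state in enumerate(trajectory):
    print(t, state)
```

Once every unit is active the state stops changing, so this toy network can only encode time over its first `N` steps. That limit is a property of the sketch, not a claim about biological circuits.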

Neural trajectories. A) The changing patterns of activity of a neural network can be represented as neural trajectories. Any pattern of activity can be represented in a space where the number of dimensions corresponds to the number of units. In the simple case of two neurons, trajectories can be plotted in two-dimensional space, where each point corresponds to the number of spikes within a chosen time window. In this schematic two different trajectories (blue and red) are elicited by two different stimuli, and because the trajectories evolve in time, the location of each point in space codes for the amount of time that has elapsed since the onset of either stimulus (from Buonomano and Maass [73])

Numerous in vivo electrophysiology studies have recorded reproducible neural trajectories within neural circuits. These neural trajectories have been observed in response to either a brief stimulus or prolonged time-varying stimuli [11–14]. Other studies have demonstrated that these trajectories contain temporal information [15–20]. While these results support the notion that time can be encoded in the active state of networks of neurons, it has not yet been clearly demonstrated that the brain is actually using these neural trajectories to tell time.

Hidden States

Defining the state of a neural network is more complicated than simply focusing on the active state. Even a perfectly silent network can respond to the same input in different manners depending on its recent history of activity. Put another way, even a silent network can contain a memory of what happened in the recent past. This is because neurons and synapses have a rich repertoire of time-dependent properties that influence the behavior of neurons and thus of networks. On the time scale of tens of milliseconds to a few seconds, time-dependent neural properties include short-term synaptic plasticity [21, 22], slow inhibitory postsynaptic potentials [23, 24], metabotropic glutamate currents [25], ion channel kinetics [26, 27], Ca2+ dynamics in synaptic and cellular compartments [28, 29], and NMDA channel kinetics [30]. I refer to these neuronal and synaptic properties as the hidden network state, because they are not accessible to the downstream neurons (or to the neuroscientist performing extracellular recordings) but will nevertheless strongly influence the response of neurons to internally or externally generated inputs.

Much of the work on the hidden states of neural networks has focused on short-term synaptic plasticity, which refers to the fact that the strength of a synapse is not constant but varies in time in a use-dependent fashion. For example, if an action potential is triggered in a cortical pyramidal neuron after a long silent period (many seconds), it might produce a postsynaptic potential (PSP) of 1 mV in a postsynaptic neuron. Now if a second spike is triggered 100 ms after the first spike, the PSP could be 1.5 mV. Thus the same synapse can have multiple different strengths depending on its recent activity. This short-term plasticity can take the form of either depression or facilitation, depending on whether the second PSP is smaller or larger than the ‘baseline’ PSP, respectively. An example of short-term facilitation between cortical pyramidal neurons is shown in Fig. 2. Most of the brain’s synapses undergo depression or facilitation on a time scale of hundreds of milliseconds [21, 31–33], but some forms of short-term synaptic plasticity can last for seconds [21, 34, 35].

Short-term synaptic plasticity. Each trace represents the voltage of a postsynaptic neuron during a paired recording of two connected layer 5 pyramidal neurons from the auditory cortex of a rat. The amplitude of the EPSP (that is, the synaptic strength) changes as a function of use. In this case facilitation is observed. The second EPSP is larger than the first, and the degree of facilitation depends on the interval; the largest degree of facilitation is observed at 25 ms (from Reyes and Sakmann [31])

It is important to note that short-term synaptic plasticity is a type of very short-lasting memory. The change in synaptic strength is in effect a memory that a given synapse was recently used. Furthermore, the memory is time-dependent: the change in synaptic strength varies smoothly in time. For example, in the case of short-term facilitation of EPSPs between cortical pyramidal neurons, the amplitude of the second of a pair of EPSPs generally increases over the first few tens of milliseconds after the first EPSP and then decays over the next few hundred milliseconds. Because of this temporal signature, short-term plasticity provides a potential ‘clock’, in the sense that it contains information about the passage of time. But as we will see, it is unlikely that individual synapses are literally telling time; rather, theoretical and experimental evidence suggests that short-term synaptic plasticity contributes to time-dependent changes in the active states of neural networks, which do code for time.
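The time course described above, a build-up over tens of milliseconds followed by a decay over hundreds of milliseconds, can be sketched with a difference of two exponentials. This functional form and the values of `TAU_RISE`, `TAU_DECAY`, and `GAIN` are assumptions chosen for illustration; they are not fitted to the recordings in Fig. 2 or to any model cited in the text.

```python
import math

TAU_RISE = 10.0    # ms, assumed build-up time constant of facilitation
TAU_DECAY = 200.0  # ms, assumed decay time constant of facilitation
GAIN = 0.6         # assumed scale factor for the size of the facilitation

def paired_pulse_ratio(interval_ms):
    """Second EPSP amplitude divided by the first, for a given inter-spike interval."""
    rise = math.exp(-interval_ms / TAU_RISE)
    decay = math.exp(-interval_ms / TAU_DECAY)
    return 1.0 + GAIN * (decay - rise)

for interval in (5, 30, 100, 300, 1000):
    print(f"{interval:5d} ms -> ratio {paired_pulse_ratio(interval):.2f}")
```

Because the ratio first rises and then falls, a single value of the ratio can correspond to two different intervals. In this sketch, at least, one synapse alone is therefore an ambiguous readout of elapsed time.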

Hidden and Active States, and Sensory and Motor Timing

Consider the highly sophisticated temporal task of communicating using Morse code. As mentioned above, Morse code is a temporal code, in the sense that there is only a single spatial channel: all information is conveyed in the interval, order, and number of the “dots” (short elements) and “dashes” (long elements). Understanding Morse code requires that our auditory system parse the intervals and durations of the signals, but generating Morse code requires that the motor system produce essentially these same durations and intervals. Does the brain use the same timing circuits for both the sensory and motor modalities? This important question relates to one discussed throughout this book. Are the mechanisms underlying timing best described as dedicated, i.e., is there a specialized and centralized mechanism responsible for timing across multiple time scales and processing modalities? Or, conversely, is timing intrinsic, i.e., is timing a general property of neural circuits and processed in a modality-specific fashion [36]? State-dependent network models are examples of intrinsic models of timing, and argue that because virtually all neural circuits exhibit active and hidden states, most neural circuits can potentially tell time. But different circuits are likely to be more or less specialized to tell time. Additionally, different circuits likely rely on the active or hidden states to different degrees to tell time. This point is particularly important when considering the difference between sensory and motor timing. In a sensory task, such as interval discrimination, you might be asked to judge if two tones were separated by 400 ms or not; in a motor production task you might be asked to press a button twice with an interval as close to 400 ms as possible. Note that in the sensory task the critical event is the arrival of the second externally generated tone. Your brain must somehow record the time of this external event and determine whether it occurred 400 ms after the first. But in the motor task your brain must actively generate an internal event at 400 ms. This difference is potentially very important because sensory timing can be achieved ‘passively’: time is only read out when the network is probed by an external stimulus. Because the network could be silent during the inter-tone interval, it is entirely possible that the time is ‘kept’ entirely by the hidden state (until the arrival of the second tone, when the hidden state is translated into an active state). In contrast, motor timing cannot rely exclusively on the hidden state: in order to generate a timed response there should be a continuously evolving pattern of activity (although there are some exceptions to this statement). Thus, although sensory and motor timing may in some cases rely on the same mechanisms and circuits, it is useful to consider them separately because of the potential differences between the contributions of hidden and active states to sensory and motor timing.

Sensory Timing

The central tenet of state-dependent network models of sensory timing is that most neural circuits can tell time as a result of the interaction between the internal state of networks and incoming sensory information. Computer simulations have demonstrated how both the hidden and active states of neural networks can underlie the discrimination of simple temporal intervals and durations, as well as of complex spatiotemporal patterns such as speech [37–43]. These models have been based on spiking models of cortical networks that incorporate hidden states, generally short-term synaptic plasticity. The networks are typically recurrent in nature, that is, the excitatory units synapse back onto one another. Critically, however, in these models the recurrent connections are generally relatively weak, meaning that the positive feedback is not strong enough to generate self-perpetuating activity. In other words, in the absence of input these networks will return to a silent (or baseline spontaneous activity) active state.

To understand the contributions of the hidden and active states it is useful to consider the discrimination of intervals versus that of durations or complex time-varying stimuli. Interval discrimination must rely primarily on the hidden state. For example, consider the discrimination of two very brief auditory tones presented 400 ms apart. After the presentation of the first tone the network rapidly returns to a silent state, so the active state cannot “carry” the timing signal, but the hidden state can “remember” the occurrence of the first tone (provided the second tone is presented within the time frame set by the time constants of short-term synaptic plasticity). But for a continuous stimulus, as in duration discrimination or the discrimination of words spoken forwards or backwards, the temporal information can be encoded in both the hidden and active states because the stimulus itself is continuously driving network activity.

To understand the contribution of hidden states alone to temporal processing we will first consider very simple feedforward networks (that is, there are no excitatory recurrent connections capable of driving activity in the absence of input). These simple circuits rely primarily on short-term synaptic plasticity to tell time, and while they cannot account for the processing of complex temporal patterns, experimental data suggest they contribute to interval selectivity in frogs, crickets, and electric fish [44–48].

Sensory Timing in a Simple Circuit

Figure 3 provides an example of a very simple feedforward circuit that can discriminate a 100 ms interval from 50 and 200 ms intervals. The circuit reflects a virtually universal architecture in neural circuits: feedforward excitation and disynaptic inhibition [49, 50]. The prototypical disynaptic circuit is composed of a single Input, an excitatory (Ex) neuron, and an inhibitory (Inh) neuron, where both neurons receive excitatory synapses from Input, and the excitatory neuron also receives inhibition from the inhibitory neuron, for a total of three synapses: Input → Ex, Input → Inh, and Inh → Ex. There are many ways short-term synaptic plasticity can generate interval selectivity. In this example the excitatory synapses onto the excitatory and inhibitory neurons exhibit paired-pulse facilitation (the second EPSP will be stronger than the first). Selectivity arises from dynamic changes in the balance of excitation and inhibition imposed by short-term synaptic plasticity. In this example the short-term facilitation onto the Inh neuron is sufficient to make it fire to the second pulse at the 50 ms interval but not at the 100 or 200 ms intervals. The short-term facilitation onto the Ex neuron is strong enough to bring it to threshold at the 50 and 100 ms intervals, but it does not fire at the 50 ms interval because the spike in the Inh neuron prevents the spike in the Ex neuron. Note that this assumes the inhibition is fast enough to prevent the spike in the Ex neuron even though it must travel through an additional neuron. Experimental evidence clearly demonstrates this is the case [50, 51]: inhibitory neurons have faster time constants and synapse on the cell soma of pyramidal neurons (thus avoiding the dendritic conduction delay). Simply changing the synaptic strength of the Input → Ex and Input → Inh synapses can cause the Ex unit to fire selectively to the 50 or 200 ms interval.
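The logic of this disynaptic circuit can be sketched in a few lines. This is a minimal abstraction under stated assumptions, not the simulation behind Fig. 3: facilitation is modeled as a single decaying exponential, neurons are reduced to a threshold comparison, and a spike in Inh is treated as an absolute veto on Ex. The function names, `TAU_FAC`, `THRESHOLD`, and the weight values are all hypothetical.

```python
import math

THRESHOLD = 1.0  # normalized spike threshold for both neurons (assumed)
TAU_FAC = 100.0  # ms, assumed decay constant of paired-pulse facilitation

def facilitation(interval_ms):
    """Factor by which the second EPSP exceeds the first (assumed form)."""
    return 1.0 + math.exp(-interval_ms / TAU_FAC)

def ex_fires_to_second_pulse(interval_ms, w_ex=0.8, w_inh=0.68):
    """True if the Ex neuron spikes to the second pulse of a pair.

    w_ex and w_inh are the baseline strengths of the Input -> Ex and
    Input -> Inh synapses; both are below threshold for a single pulse.
    """
    gain = facilitation(interval_ms)
    inh_spikes = w_inh * gain >= THRESHOLD               # Input -> Inh
    ex_drive_suprathreshold = w_ex * gain >= THRESHOLD   # Input -> Ex
    # Inh -> Ex: a spike in Inh vetoes the spike in Ex (simplifying assumption).
    return ex_drive_suprathreshold and not inh_spikes

for interval in (50, 100, 200):
    print(interval, "ms:", ex_fires_to_second_pulse(interval))
```

With the default weights the Ex unit responds only at 100 ms. In this sketch, calling the function with `w_ex=0.68, w_inh=0.5` shifts the selectivity to 50 ms, and `w_ex=0.9, w_inh=0.8` shifts it to 200 ms, which mirrors the point that changing only the two input strengths retunes the circuit.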

Simulation of interval selectivity based on short-term plasticity. (a) Schematic of a feedforward disynaptic circuit. Such circuits are almost universally observed throughout the brain. They are characterized by an input that excites both an inhibitory and an excitatory neuron (for example, thalamocortical axons synapse on both excitatory and inhibitory neurons), and by feedforward inhibition (the excitatory unit receives inhibition from the inhibitory neuron). Each of the three synapses exhibits short-term synaptic plasticity. (b) Short-term synaptic plasticity (the hidden state) can potentially be used to generate interval-selective neurons. Perhaps the simplest scenario is one in which both the excitatory and inhibitory neurons receive inputs that exhibit paired-pulse facilitation. In this example, the Ex unit spikes selectively to the 100 ms interval because at 50 ms it is inhibited by the spike in the inhibitory neuron, and at the 200 ms interval short-term facilitation is no longer strong enough to drive it to threshold

This simple model provides an example of how dynamic changes in the balance of excitation and inhibition produced by short-term synaptic plasticity could potentially underlie the discrimination of intervals in simple feedforward circuits. Importantly, there is experimental evidence suggesting that this is precisely the mechanism underlying interval selectivity in some cases. Some species of frogs communicate through a series of “pulses”, and the rate and number of pulses provide species-specific signals. The neuroscientist Gary Rose and his colleagues have identified neurons in the midbrain of these species that respond with some degree of selectivity to the interval between the pulses [44, 46, 52, 53]. Similarly, the intervals between brief auditory or bioelectrical pulses in crickets and electric fish, respectively, are important for communication [47, 48]. In these animals frequency- and interval-selective neurons have also been identified. Figure 4 shows an example of a fish midbrain neuron that generally does not spike to sequences of electrical discharges presented at intervals of 10 or 100 ms, but responds robustly to intervals of 50 ms. Analysis of the mechanisms underlying these examples of temporal selectivity indicates that it arises from dynamic changes in the balance of excitation and inhibition produced by short-term synaptic plasticity [44, 46–48]. In other words, in these simple feedforward networks the hidden state of the network (in the form of short-term synaptic plasticity) seems to account for the experimentally observed temporal selectivity.

Temporal selectivity in midbrain neurons. (a) Voltage traces from a neuron in the midbrain of an electric fish. Each trace represents the delivery of trains of electrical pulses presented at intervals of 100 (left), 50 (middle), and 10 (right) ms. The rows represent three separate repetitions of the trains. The electrical pulses were delivered in the chamber, picked up by the fish’s electroreceptors and indirectly transmitted to the neuron in the exterolateral nucleus. This neuron was fairly selective to pulses delivered at intervals of 50 ms. (b) The temporal tuning can be represented by plotting the mean number of spikes (normalized) per electrical pulse or the normalized mean PSP amplitude over a range of different intervals (10–100 ms). From Carlson [47]

Sensory Timing in Recurrent Circuits

While theoretical and experimental studies suggest that simple feedforward circuits can perform simple types of temporal discrimination, it is unlikely that such circuits can account for the flexibility, diversity, and complexity characteristic of the discrimination of complex time-varying patterns typical of speech, music, or Morse code. For complex forms of temporal and spatiotemporal processing, complex recurrent networks that contain a rich repertoire of connectivity patterns and hidden states are likely necessary.

Let’s consider what might happen in the auditory cortex or an early auditory sensory area during a simple interval discrimination task, and the role of the active and hidden states. The main input layer of the sensory cortex is Layer IV, but neurons in all layers can be activated by the tone, and there is a high degree of both feedforward and recurrent connectivity within any given cortical circuit. Thus in response to a brief tone some complex pattern of active neurons will be elicited, and this pattern will comprise the active state. Generally speaking, within tens of milliseconds after the end of the tone, neurons in the auditory cortex will return to their baseline levels of activity, suggesting that the active state does not encode the presentation of the tone after it is over. Now during an interval discrimination task a second tone of the same frequency will be presented at a specific interval after the onset of the first; let’s assume the intertone interval is 100 ms. If there were no ‘memory’ of the first tone, the second one should activate the same population of neurons. However, because of short-term synaptic plasticity (the hidden state), the strength of many of the synapses may be different at the arrival of the first and second tones, resulting in the activation of distinct subsets of neurons. This is illustrated in Fig. 5a, which shows a computer simulation of a network composed of 400 excitatory and 100 inhibitory neurons. Even when the exact same input pattern is presented at t = 0 and t = 100 ms, many neurons respond differentially to the first and second tones because of the state-dependency of the network (in this case as a result of the hidden state). As shown in the lower panels, the change in the network state (defined by both the active and hidden states) can be represented in 3D space to allow for the visualization of the time-dependent changes in network state. The difference in these populations can be used to code for the interval between the tones [37, 39]. State-dependent network models predict that as information flows through different cortical areas, the encoding of temporal and spatiotemporal information may increase, but could begin in early sensory areas such as the primary auditory cortex. Indeed, a number of studies have reported that a small percentage of primary auditory cortex neurons are sensitive to the interval between pairs of tones of the same or different frequencies [54–56]; however, there is as yet no general agreement as to the mechanisms underlying this form of temporal sensitivity.
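The readout idea, that the population of units responding to the second tone differs from the population responding to the first and depends on the interval, can be sketched with a toy population. This is not the 500-neuron spiking simulation of Fig. 5: it ignores recurrence and inhibition entirely and keeps only a shared facilitating hidden state. The facilitation form, `TAU_FAC`, the weights in `WEIGHTS`, and the nearest-template decoder are all assumptions made for illustration.

```python
import math

THRESHOLD = 1.0  # normalized spike threshold (assumed)
TAU_FAC = 100.0  # ms, assumed decay constant of the hidden state (facilitation)

# Baseline input strength onto each unit; all are subthreshold for a lone pulse.
WEIGHTS = [0.60, 0.65, 0.70, 0.75, 0.80, 0.85, 0.90, 0.95]

def response_to_second_pulse(interval_ms):
    """Binary active state evoked by the second of two identical pulses."""
    gain = 1.0 + math.exp(-interval_ms / TAU_FAC)
    return [1 if w * gain >= THRESHOLD else 0 for w in WEIGHTS]

# Active state evoked by the first pulse, before any facilitation has built up.
first_response = [1 if w >= THRESHOLD else 0 for w in WEIGHTS]

# Templates learned, in this sketch, from three reference intervals.
templates = {ms: response_to_second_pulse(ms) for ms in (50, 100, 200)}

def decode_interval(active_state):
    """Return the reference interval whose template is closest (Hamming distance)."""
    def distance(ms):
        return sum(a != b for a, b in zip(active_state, templates[ms]))
    return min(templates, key=distance)

print("first pulse:", first_response)
for ms, pattern in templates.items():
    print(f"second pulse at {ms} ms:", pattern)
print("decoded interval for a 90 ms pair:", decode_interval(response_to_second_pulse(90)))
```

The same input produces a different active state depending on how much of the hidden state remains, so a downstream reader that sees only the active state can still recover the interval.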

Simulation of a state-dependent network. (a) Each line represents the voltage of a single neuron in response to two identical events separated by 100 ms. The first 100 lines represent 100 excitatory units (out of 400), and the remaining lines represent 25 inhibitory units (out of 100). Each input produces a depolarization across all neurons in the network, followed by inhibition. While most units exhibit subthreshold activity, some spike (white pixels) to both inputs, or selectively to the 100 ms interval. The Ex units are sorted according to their probability of firing to the first (top) or second (bottom) pulse. This selectivity to the first or second event arises because of the difference in network state at t = 0 and t = 100 ms. (b) Trajectory of the network in response to a single pulse (left panel). The trajectory incorporates both the active and hidden states of the network. Principal component (PC) analysis is used to visualize the state of the network in 3D space. There is an abrupt and rapidly evolving response beginning at t = 0, followed by a slower trajectory. The fast response is due to the depolarization of a large number of units (changes in the active state), while the slower change reflects the short-term synaptic dynamics (the hidden state). The speed of the trajectory in state-space can be visualized by the rate of change of the color code and by the distance between the 25 ms marker spheres. Because synaptic properties cannot be rapidly “reset,” the network cannot return to its initial state (arrow) before the arrival of a second event. The right panel shows the trajectory in response to a 100 ms interval. Note that the same stimulus produces a different fast response to the second event, in other words the same input produced different responses depending on the state of the network at the arrival of the input (modified from Karmarkar and Buonomano [43])

An elegant aspect of the state-dependent network model is that it provides a general framework for temporal and spatiotemporal processing: it addresses not only the mechanisms of interval selectivity, but also the processing of complex temporal patterns and speech [37, 38, 40, 42]. This robustness arises from the fact that any stimulus will be naturally and automatically encoded in the context of the sensory events that preceded it. But this robustness is both a potential computational advantage and disadvantage. It is an advantage because it provides a robust mechanism for the encoding of temporal and spatiotemporal information; for example, in speech the meaning of the syllable tool is entirely different if it is preceded by an s (stool). But the strength of this framework is also its potential downside: sometimes it is necessary to encode or identify sensory events independently of their context. For example, if you hear one-two-three or three-two-one, the two in the middle still has the same value regardless of whether it was preceded by one or three.

The state-dependent nature of these networks has led to a number of experimental predictions. One such prediction is that in an interval discrimination task, timing should be impaired if the gap between the two intervals being compared is itself short. One can think of this as being a result of the network not having sufficient time to ‘reset’ in between stimuli. This prediction has been experimentally tested. When the two intervals being judged (100 ms standard) were presented 250 ms apart, the ability to determine which was longer was significantly impaired compared to when they were presented 750 ms apart [43]. Importantly, if the two intervals were presented 250 ms apart, but the tones of the first and second intervals were presented at different frequencies (e.g., 1 and 4 kHz), interval discrimination was not impaired. The interpretation is that the preceding stimuli can ‘interfere’ with the encoding of subsequent stimuli when all the tones are of the same frequency, because all tones activate the same local neural network (as a result of the tonotopic organization of the auditory system); but if the first interval is presented at a different frequency there is less ‘interference’ because the low frequency tones do not strongly change the state of the local high frequency network. These results provide strong support for the hypothesis that timing is locally encoded in neural networks and that it relies on the interaction between incoming stimuli and the internal state of local cortical networks.
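The ‘reset’ argument can be made concrete with a deliberately simple toy calculation. The sketch below is not the network model of [43]; it assumes a single hidden-state variable that jumps by a fixed amount at each tone and decays exponentially, and the 300 ms decay constant and the function name `residual_hidden_state` are illustrative assumptions only. It also assumes the gap is measured from the last tone of the first interval to the first tone of the second.

```python
import math

def residual_hidden_state(gap_ms, interval_ms=100.0, tau_ms=300.0, jump=1.0):
    """Hidden-state level left over when the second interval begins.

    Tones occur at t = 0 and t = interval_ms (the first interval); the second
    interval starts gap_ms after the last tone of the first interval.
    tau_ms and jump are hypothetical values chosen for illustration.
    """
    h = jump                                  # first tone perturbs the state
    h *= math.exp(-interval_ms / tau_ms)      # decay during the first interval
    h += jump                                 # second tone perturbs it again
    return h * math.exp(-gap_ms / tau_ms)     # decay during the gap

short_gap = residual_hidden_state(250.0)
long_gap = residual_hidden_state(750.0)
print(f"residual state after a 250 ms gap: {short_gap:.3f}")
print(f"residual state after a 750 ms gap: {long_gap:.3f}")
```

Under these assumptions the residual state is several times larger after the 250 ms gap than after the 750 ms gap, so the second interval is encoded from a different starting state than the first, which is the intuition behind the predicted impairment.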

These results are not inconsistent with the notion that we can learn to process intervals, speech, or Morse code patterns independent of the preceding events. But they do suggest that the computational architecture of the brain might be to naturally encode the spatiotemporal structure of sensory events occurring together on the time scale of a few hundred milliseconds, and that learning might be necessary in order to disentangle events or “temporal objects” that are temporally proximal. Indeed, this view is consistent with the observation that during the early stages of learning a language, words are easier to understand if they are presented at a slow rate; if the words are presented at a fast rate, we lose the ability to parse speech and grasp the independent meaning of each one.

Motor Timing

If you are asked to press a button 1 s after the onset of a tone, there must be an active internal ‘memory’ that leads to the generation of a movement after the appropriate delay. In contrast to sensory timing, where an external event can be used to probe the state of a network, motor timing seems to require an active ongoing internal signal. Thus, motor timing cannot be accomplished exclusively through the hidden state of a network. Rather, motor timing is best viewed as being generated by ongoing changes in the active state of a neural network.

Motor timing on the scale of hundreds of milliseconds to a few seconds encompasses a wide range of phenomena studied with a number of different tasks, including the following. (1) Tapping, where subjects are asked to tap a finger with a fixed period [57, 58]. (2) Eyeblink conditioning: many animals, including mice, rabbits, and humans, can be conditioned to blink at a certain interval (generally less than 1 s) after the onset of a conditioned stimulus such as a tone, by pairing the tone with the presentation of an aversive stimulus [59, 60]. (3) Spatiotemporal reproduction: motor timing has also been studied using a slightly more complex task in which humans are asked to reproduce a spatiotemporal pattern using their fingers, much as one would while playing the piano [61]. An example of such a task is shown in Fig. 6a. This task is of interest because it requires that multiple intervals be produced in succession, i.e., the end of one interval is the beginning of the next. The fact that this task is easily performed constrains the mechanisms underlying timing; for example, it makes it unlikely that motor timing relies on a single timer that requires a significant amount of time to be reset. Indeed, analysis of this task has been used to argue that motor timing relies on a timer that times continuously from the first element (t = 0) throughout the entire pattern, as opposed to being reset at each interval [61].

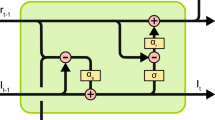

Simulation of a population clock in a recurrent neural network. (a) Network architecture. The recurrent network is composed of 1,800 randomly connected firing rate units and receives a single input. The four outputs are used to generate a spatiotemporal pattern, and can be interpreted as four fingers that must press the keys of a piano in a specific spatiotemporal pattern. (b) The output units were trained to produce the pattern shown (three different runs overlaid) in response to a brief input (black line). Training consists of adjusting the weights of the recurrent units onto the readout units (red lines in panel a). Output traces are shifted vertically for visual clarity. The dashed black trace represents a constant tonic input to the recurrent network. Colored rasters represent a subset (20) of the recurrent units. In these units activity ranges from −1 (blue) to 1 (red) (modified from Laje et al. [61]).

It seems likely that there are multiple neural mechanisms contributing to different types of motor timing; of particular importance may be the distinction between motor timing tasks that require the generation of simple intervals and those that require periodic or aperiodic patterns. But models based on dynamic changes in the population of active neurons can potentially account not only for a wide range of motor timing tasks, but also for the generation of complex spatiotemporal motor patterns. Such models have been referred to as population clocks [62, 63]. Specifically, in these models timing emerges from the internal dynamics of recurrently connected neural networks, and time is inherently encoded in the evolving activity pattern of the network [6, 62].
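To illustrate what it means for time to be encoded in an evolving activity pattern, the following minimal sketch decodes elapsed time from a single noisy population vector by finding the closest point on a stored trajectory. The trajectory here is synthetic (each hypothetical unit has a Gaussian bump of activity at a different time), and the unit count, bump width, noise level, and function names are assumptions made for illustration, not properties of any model cited in this chapter.

```python
import numpy as np

def make_templates(n_units=50, n_steps=1000, width=0.1):
    """Synthetic population trajectory over 1 s: one row per time step.

    Each hypothetical unit peaks at a different time (Gaussian bump of the
    given width, in seconds), so every time step has a distinct population vector.
    """
    t = np.linspace(0.0, 1.0, n_steps, endpoint=False)
    peaks = np.linspace(0.0, 1.0, n_units)
    templates = np.exp(-0.5 * ((t[:, None] - peaks[None, :]) / width) ** 2)
    return t, templates

def decode_time(population_vector, t, templates):
    """Return the time whose stored population vector is nearest (Euclidean)."""
    distances = np.linalg.norm(templates - population_vector, axis=1)
    return t[np.argmin(distances)]

rng = np.random.default_rng(0)
t, templates = make_templates()
true_index = 400                                   # 0.4 s after onset
noisy = templates[true_index] + rng.normal(0.0, 0.05, templates.shape[1])
estimate = decode_time(noisy, t, templates)
print(f"true time {t[true_index]:.3f} s, decoded time {estimate:.3f} s")
```

Nearest-template decoding is only one possible readout; it is used here because it makes explicit that each point along the trajectory acts as a time stamp.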

As mentioned above, one of the first examples of such a population clock was proposed in the context of timing in the cerebellum [7, 8]. Specifically, it was proposed that in response to a continuous tonic input, a continuously varying population of granule (Gr) cells will be active as a result of a negative feedback loop in which granule cells excite Golgi (Go) neurons, which in turn inhibit the granule cells. In response to a constant stimulus, conveyed via the mossy fibers, the Gr cell population response is not only a function of the current stimulus, but is also dependent on the current state of the Gr-Go network. As a result of the feedback loop, simulations reveal that a dynamically changing trajectory of active Gr cells is created in response to a stimulus [7, 64, 65]. This pattern will trace a complex trajectory in neuron space, and since each point of the trajectory corresponds to a specific population vector of active Gr cells, the network inherently encodes time. Time can then be read out by the Purkinje cells (the ‘readout’ neurons), which sample the activity from a large population of Gr cells. Importantly, the Purkinje cells can learn to generate timed motor responses through conventional associative synaptic plasticity coupled to the reinforcement signal from the inferior olive [60]. In this framework, the pattern of Gr cell activity would be expected not only to encode all potentially relevant stimuli, but also to be capable of generating a specific time stamp of the time that has elapsed since the onset of each potential stimulus. This scheme clearly requires a very high number of distinct Gr cell patterns. Indeed, the fact that there are over 5 × 10^10 Gr cells in the human cerebellum [49] suggests that they are uniquely well-suited and indeed designed to encode the large number of representations that would arise from having to encode the time from onset for each potential stimulus.

There is strong experimental evidence that the cerebellum is involved in motor timing [59, 64, 66]. But it is also clear that other areas of the brain are capable of motor timing; indeed, even in the presence of large cerebellar lesions timing is often only mildly impaired, not abolished. Additionally, because the cerebellum lacks any recurrent excitation, it is not capable of generating self-perpetuating activity or timed responses in the absence of a continuous input. Cortical circuits, however, have abundant excitatory recurrent connections, and are able to operate in a truly self-perpetuating regime.

To understand how a network can generate self-perpetuating activity that can be used for timing, it is useful to consider simpler and less biologically realistic models. An example of such a model is shown in Fig. 6. The units of the network do not spike but can vary their “activity” levels according to an analog input–output function. These “firing rate” units are typically represented by a sigmoid, and the output can take on any value between −1 and 1 [62, 67]. The network is composed of 1,800 sparsely connected units, each with a time constant of 10 ms. The time constant of the units is important: if the longest hardwired temporal property in the network is 10 ms, yet the network is capable of timing many seconds, then timing must arise as an emergent property of the network. As shown in Fig. 6, a brief input can trigger a complex spatiotemporal pattern of activity within the recurrent network, and this pattern can be used to generate multiple, complex spatiotemporal output patterns several seconds in duration. Different output patterns can be triggered by different brief input stimuli. The results shown are from a network with four outputs (each representing a finger). The network is trained to reproduce the desired target pattern every time the corresponding “go” signal is activated. In this scenario learning takes place by adjusting the weights onto the readout units.
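The following is a minimal sketch of this kind of model, not a reproduction of the simulation in Fig. 6. It assumes a smaller network (300 units rather than 1,800), a tanh input–output function, a connection probability of 0.1, a gain of 1.5, and a single output trained to produce a pulse 700 ms after the input. The readout weights are fit with ridge regression on one noise-free run, which is a stand-in chosen for brevity; the chapter does not specify the training rule, and all parameter values and function names below are illustrative assumptions.

```python
import numpy as np

def simulate_rates(n_units=300, g=1.5, p_connect=0.1, tau=0.010, dt=0.001,
                   t_total=1.0, pulse_dur=0.05, seed=0):
    """Euler-integrate tau * dx/dt = -x + W tanh(x) + w_in * u(t).

    Times are in seconds. u(t) is a brief input pulse; after it ends the
    network runs on its own recurrent dynamics. Returns rates (steps x units).
    """
    rng = np.random.default_rng(seed)
    mask = rng.random((n_units, n_units)) < p_connect        # sparse connectivity
    scale = g / np.sqrt(p_connect * n_units)
    w_rec = mask * rng.normal(0.0, scale, (n_units, n_units))
    w_in = rng.normal(0.0, 1.0, n_units)
    n_steps = int(round(t_total / dt))
    x = np.zeros(n_units)
    rates = np.empty((n_steps, n_units))
    for k in range(n_steps):
        u = 1.0 if k * dt < pulse_dur else 0.0               # brief "go" input
        x = x + (dt / tau) * (-x + w_rec @ np.tanh(x) + w_in * u)
        rates[k] = np.tanh(x)                                # activity in [-1, 1]
    return rates

def fit_readout(rates, target, ridge=1e-4):
    """Ridge-regression readout weights (only these weights are trained)."""
    n = rates.shape[1]
    return np.linalg.solve(rates.T @ rates + ridge * np.eye(n), rates.T @ target)

dt = 0.001
rates = simulate_rates(dt=dt)
time = np.arange(rates.shape[0]) * dt
target = np.exp(-0.5 * ((time - 0.7) / 0.03) ** 2)           # pulse at 700 ms
w_out = fit_readout(rates, target)
output = rates @ w_out
rel_err = np.mean((output - target) ** 2) / np.mean(target ** 2)
print(f"relative error of the trained readout: {rel_err:.4f}")
```

Each unit in this sketch has a 10 ms time constant, yet the readout is asked to mark a time 700 ms after the input, so any timing the output achieves comes from the recurrent dynamics rather than from a slow hardwired element. Because the fit uses a single noise-free trajectory, it says nothing about how well the readout would tolerate perturbations.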

A potential problem with this class of models, which will not be addressed in detail here, is that they tend to exhibit chaotic behavior; that is, they are very sensitive to noise. However, a number of studies have begun to address this limitation through feedback and by training the weights of the recurrent networks [68, 69].

Note that the population clock framework shown in Fig. 6 does not simply encode time, but accounts for both the spatial and temporal aspects of complex spatiotemporal patterns. That is, the spatial pattern, the timing, and the order of the fingers are all encoded in a multiplexed fashion in the recurrent network plus the readout.

Conclusion

Humans time events on scales that span microseconds to days and beyond. And in contrast to the clocks in our pockets and on our wrists, which tell time across scales from milliseconds to years, biology has devised fundamentally different mechanisms for timing across scales. The framework proposed in this chapter holds that, within the range of tens or hundreds of milliseconds to a few seconds, timing is fundamentally unlike man-made clocks that rely on oscillators and counters. Rather, theoretical and experimental studies suggest that timing on this scale is fundamentally related to dynamics: the changing states and patterns of activity that networks inevitably undergo as a consequence of the physical properties of neurons and circuits.

An important concept within this framework is that timing can be a local and inherent computation performed by neural networks. Yet these networks can operate in different modes or regimes, relying more on hidden states in the case of sensory timing, and more on active states in motor timing. A powerful feature of the state-dependent network framework is its generality: it is not limited to simple intervals or durations but is equally well suited to complex sensory and motor patterns.

While there is not yet any concrete experimental data regarding the mechanisms underlying any form of timing, there is mounting experimental evidence supporting the notion of state-dependent mechanisms and the view that timing relies on neural dynamics. For example, in the sensory domain there are numerous examples of interval and frequency selectivity that seem to clearly rely on the hidden state, particularly short-term synaptic plasticity [44–48]. Similarly, in vivo studies in birds, rats, and monkeys have demonstrated that there is a population code for time. That is, in relation to an onset event it is possible to use the population activity of neurons to determine how much time has elapsed [15–17, 19, 20, 70]; however, it remains to be proven that this information is causally used by the brain to tell time. Furthermore, in vitro data suggest that timed responses can also be observed in isolated cortical networks [71, 72].

Although the notion that timing is not the product of a central clock may run counter to our intuitions about the passage of time, it is entirely consistent with the fact that in most cases time is not an independent dimension of sensory stimuli; rather, spatial and temporal processing are often intimately entwined components of sensory and motor processing. Given the biological importance of time, it seems fitting that timing on the scale of hundreds of milliseconds in particular would rely on local and general properties of the brain’s hardware, rather than on a dedicated architecture that would require communication between a central clock and the diverse sensory and motor circuits that require timing.

Section Summary

These last three chapters on models of timing do not provide a comprehensive picture of all theoretical and computational work on the neural mechanisms of timing, but they nevertheless highlight the diversity and complexity of the potential mechanisms of timing. A common theme in all three chapters is the issue of whether timing should be viewed as relying on dedicated or intrinsic neural processes. The fourth chapter (Hass and Durstewitz, this volume) provided a sample of different models, including both dedicated and intrinsic models, while the last two chapters contrasted the prototypical examples of dedicated and intrinsic models. The fifth chapter (Meck and co-workers) reviewed the main instantiation of a dedicated model, one based on pacemaker-accumulator mechanisms, and subsequent extensions of this approach, including the Striatal Beat-Frequency model. This chapter described an example of an intrinsic model in which most neural circuits could perform some temporal computations as an inherent consequence of neural dynamics and time-dependent neural properties.

As highlighted in the fourth chapter, the models discussed above are in no way mutually exclusive. Timing encompasses a large range of different computations, which likely rely on a collection of different mechanisms. Of particular relevance is the issue of time scale: it is possible that dedicated mechanisms contribute to timing on the scale of many seconds, while intrinsic mechanisms underlie timing on the subsecond scale. Indeed, such a dichotomy resonates with the notion that timing on the longer scale engages subjective and cognitive mechanisms, while timing on the shorter scale is unconscious and perceptual in nature.

References

Liberman AM, Delattre PC, Gerstman LJ, Cooper FS. Tempo of frequency change as a cue for distinguishing classes of speech sounds. J Exp Psychol. 1956;52:127–37.

Scott DR. Duration as a cue to the perception of a phrase boundary. J Acoust Soc Am. 1982;71(4):996–1007.

Schirmer A. Timing speech: a review of lesion and neuroimaging findings. Brain Res Cogn Brain Res. 2004;21(2):269–87.

Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science. 1995;270(5234):303–4.

Breitenstein C, Van Lancker D, Daum I. The contribution of speech rate and pitch variation to the perception of vocal emotions in a German and an American sample. Cogn Emot. 2001;15(1):57–79.

Mauk MD, Buonomano DV. The neural basis of temporal processing. Annu Rev Neurosci. 2004;27:307–40.

Buonomano DV, Mauk MD. Neural network model of the cerebellum: temporal discrimination and the timing of motor responses. Neural Comput. 1994;6:38–55.

Mauk MD, Donegan NH. A model of Pavlovian eyelid conditioning based on the synaptic organization of the cerebellum. Learn Mem. 1997;3:130–58.

Medina JF, Mauk MD. Computer simulation of cerebellar information processing. Nat Neurosci. 2000;3(Suppl):1205–11.

Herculano-Houzel S. The human brain in numbers: a linearly scaled-up primate brain. Front Hum Neurosci. 2009;3:32.

Broome BM, Jayaraman V, Laurent G. Encoding and decoding of overlapping odor sequences. Neuron. 2006;51(4):467–82.

Engineer CT, Perez CA, Chen YH, Carraway RS, Reed AC, Shetake JA, et al. Cortical activity patterns predict speech discrimination ability. Nat Neurosci. 2008;11:603–8.

Churchland MM, Yu BM, Sahani M, Shenoy KV. Techniques for extracting single-trial activity patterns from large-scale neural recordings. Curr Opin Neurobiol. 2007;17(5):609–18.

Schnupp JW, Hall TM, Kokelaar RF, Ahmed B. Plasticity of temporal pattern codes for vocalization stimuli in primary auditory cortex. J Neurosci. 2006;26(18):4785–95.

Itskov V, Curto C, Pastalkova E, Buzsáki G. Cell assembly sequences arising from spike threshold adaptation keep track of time in the hippocampus. J Neurosci. 2011;31(8):2828–34.

Jin DZ, Fujii N, Graybiel AM. Neural representation of time in cortico-basal ganglia circuits. Proc Natl Acad Sci U S A. 2009;106(45):19156–61.

Lebedev MA, O’Doherty JE, Nicolelis MAL. Decoding of temporal intervals from cortical ensemble activity. J Neurophysiol. 2008;99(1):166–86.

Crowe DA, Averbeck BB, Chafee MV. Rapid sequences of population activity patterns dynamically encode task-critical spatial information in parietal cortex. J Neurosci. 2010;30(35):11640–53.

Hahnloser RHR, Kozhevnikov AA, Fee MS. An ultra-sparse code underlies the generation of neural sequence in a songbird. Nature. 2002;419:65–70.

Long MA, Jin DZ, Fee MS. Support for a synaptic chain model of neuronal sequence generation. Nature. 2010;468(7322):394–9. doi:10.1038/nature09514.

Zucker RS. Short-term synaptic plasticity. Annu Rev Neurosci. 1989;12:13–31.

Zucker RS, Regehr WG. Short-term synaptic plasticity. Annu Rev Physiol. 2002;64:355–405.

Newberry NR, Nicoll RA. A bicuculline-resistant inhibitory post-synaptic potential in rat hippocampal pyramidal cells in vitro. J Physiol. 1984;348(1):239–54.

Buonomano DV, Merzenich MM. Net interaction between different forms of short-term synaptic plasticity and slow-IPSPs in the hippocampus and auditory cortex. J Neurophysiol. 1998;80:1765–74.

Batchelor AM, Madge DJ, Garthwaite J. Synaptic activation of metabotropic glutamate receptors in the parallel fibre-Purkinje cell pathway in rat cerebellar slices. Neuroscience. 1994;63(4):911–5.

Johnston D, Wu SM. Foundations of cellular neurophysiology. Cambridge: MIT Press; 1995.

Hooper SL, Buchman E, Hobbs KH. A computational role for slow conductances: single-neuron models that measure duration. Nat Neurosci. 2002;5:551–6.

Berridge MJ, Bootman MD, Roderick HL. Calcium signalling: dynamics, homeostasis and remodelling. Nat Rev Mol Cell Biol. 2003;4(7):517–29.

Burnashev N, Rozov A. Presynaptic Ca2+ dynamics, Ca2+ buffers and synaptic efficacy. Cell Calcium. 2005;37(5):489–95.

Lester RAJ, Clements JD, Westbrook GL, Jahr CE. Channel kinetics determine the time course of NMDA receptor-mediated synaptic currents. Nature. 1990;346(6284):565–7.

Reyes A, Sakmann B. Developmental switch in the short-term modification of unitary EPSPs evoked in layer 2/3 and layer 5 pyramidal neurons of rat neocortex. J Neurosci. 1999;19:3827–35.

Markram H, Wang Y, Tsodyks M. Differential signaling via the same axon of neocortical pyramidal neurons. Proc Natl Acad Sci U S A. 1998;95:5323–8.

Dobrunz LE, Stevens CF. Response of hippocampal synapses to natural stimulation patterns. Neuron. 1999;22(1):157–66.

Fukuda A, Mody I, Prince DA. Differential ontogenesis of presynaptic and postsynaptic GABAB inhibition in rat somatosensory cortex. J Neurophysiol. 1993;70(1):448–52.

Lambert NA, Wilson WA. Temporally distinct mechanisms of use-dependent depression at inhibitory synapses in the rat hippocampus in vitro. J Neurophysiol. 1994;72(1):121–30.

Ivry RB, Schlerf JE. Dedicated and intrinsic models of time perception. Trends Cogn Sci. 2008;12(7):273–80.

Buonomano DV, Merzenich MM. Temporal information transformed into a spatial code by a neural network with realistic properties. Science. 1995;267:1028–30.

Lee TP, Buonomano DV. Unsupervised formation of vocalization-sensitive neurons: a cortical model based on short-term and homeostatic plasticity. Neural Comput. 2012;24:2579–603.

Buonomano DV. Decoding temporal information: a model based on short-term synaptic plasticity. J Neurosci. 2000;20:1129–41.

Maass W, Natschläger T, Markram H. Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 2002;14:2531–60.

Maass W, Natschläger T, Markram H. A model of real-time computation in generic neural microcircuits. Adv Neural Inf Process Syst. 2003;15:229–36.

Haeusler S, Maass W. A statistical analysis of information-processing properties of lamina-specific cortical microcircuit models. Cereb Cortex. 2007;17(1):149–62.

Karmarkar UR, Buonomano DV. Timing in the absence of clocks: encoding time in neural network states. Neuron. 2007;53(3):427–38.

Edwards CJ, Leary CJ, Rose GJ. Counting on inhibition and rate-dependent excitation in the auditory system. J Neurosci. 2007;27(49):13384–92.

Edwards CJ, Leary CJ, Rose GJ. Mechanisms of long-interval selectivity in midbrain auditory neurons: roles of excitation, inhibition, and plasticity. J Neurophysiol. 2008;100(6):3407–16.

Rose G, Leary C, Edwards C. Interval-counting neurons in the anuran auditory midbrain: factors underlying diversity of interval tuning. J Comp Physiol A Neuroethol Sens Neural Behav Physiol. 2011;197(1):97–108.

Carlson BA. Temporal-pattern recognition by single neurons in a sensory pathway devoted to social communication behavior. J Neurosci. 2009;29(30):9417–28.

Kostarakos K, Hedwig B. Calling song recognition in female crickets: temporal tuning of identified brain neurons matches behavior. J Neurosci. 2012;32(28):9601–12.

Shepherd GM. The synaptic organization of the brain. New York: Oxford University; 1998.

Carvalho TP, Buonomano DV. Differential effects of excitatory and inhibitory plasticity on synaptically driven neuronal input–output functions. Neuron. 2009;61(5):774–85.

Pouille F, Scanziani M. Enforcement of temporal fidelity in pyramidal cells by somatic feed-forward inhibition. Science. 2001;293:1159–63.

Edwards CJ, Alder TB, Rose GJ. Auditory midbrain neurons that count. Nat Neurosci. 2002;5(10):934–6.

Alder TB, Rose GJ. Long-term temporal integration in the anuran auditory system. Nat Neurosci. 1998;1:519–23.

Sadagopan S, Wang X. Nonlinear spectrotemporal interactions underlying selectivity for complex sounds in auditory cortex. J Neurosci. 2009;29(36):11192–202.

Zhou X, de Villers-Sidani É, Panizzutti R, Merzenich MM. Successive-signal biasing for a learned sound sequence. Proc Natl Acad Sci U S A. 2010;107(33):14839–44.

Brosch M, Schreiner CE. Sequence sensitivity of neurons in cat primary auditory cortex. Cereb Cortex. 2000;10(12):1155–67.

Keele SW, Pokorny RA, Corcos DM, Ivry R. Do perception and motor production share common timing mechanisms: a correlational analysis. Acta Psychol (Amst). 1985;60(2–3):173–91.

Ivry RB, Hazeltine RE. Perception and production of temporal intervals across a range of durations: evidence for a common timing mechanism. J Exp Psychol Hum Percept Perform. 1995;21(1):3–18.

Perrett SP, Ruiz BP, Mauk MD. Cerebellar cortex lesions disrupt learning-dependent timing of conditioned eyelid responses. J Neurosci. 1993;13:1708–18.

Raymond J, Lisberger SG, Mauk MD. The cerebellum: a neuronal learning machine? Science. 1996;272:1126–32.

Laje R, Cheng K, Buonomano DV. Learning of temporal motor patterns: an analysis of continuous vs. reset timing. Front Integr Neurosci. 2011;5:61.

Buonomano DV, Laje R. Population clocks: motor timing with neural dynamics. Trends Cogn Sci. 2010;14(12):520–7.

Buonomano DV, Karmarkar UR. How do we tell time? Neuroscientist. 2002;8(1):42–51.

Medina JF, Garcia KS, Nores WL, Taylor NM, Mauk MD. Timing mechanisms in the cerebellum: testing predictions of a large-scale computer simulation. J Neurosci. 2000;20(14):5516–25.

Yamazaki T, Tanaka S. The cerebellum as a liquid state machine. Neural Netw. 2007;20(3):290–7.

Ivry RB, Keele SW. Timing functions of the cerebellum. J Cogn Neurosci. 1989;1:136–52.

Sussillo D, Toyoizumi T, Maass W. Self-tuning of neural circuits through short-term synaptic plasticity. J Neurophysiol. 2007;97(6):4079–95.

Jaeger H, Haas H. Harnessing nonlinearity: predicting chaotic systems and saving energy in wireless communication. Science. 2004;304(5667):78–80.

Sussillo D, Abbott LF. Generating coherent patterns of activity from chaotic neural networks. Neuron. 2009;63(4):544–57.

Pastalkova E, Itskov V, Amarasingham A, Buzsaki G. Internally generated cell assembly sequences in the rat hippocampus. Science. 2008;321(5894):1322–7.

Buonomano DV. Timing of neural responses in cortical organotypic slices. Proc Natl Acad Sci U S A. 2003;100:4897–902.

Johnson HA, Goel A, Buonomano DV. Neural dynamics of in vitro cortical networks reflects experienced temporal patterns. Nat Neurosci. 2010;13(8):917–9. doi:10.1038/nn.2579.

Buonomano DV, Maass W. State-dependent computations: spatiotemporal processing in cortical networks. Nat Rev Neurosci. 2009;10:113–25.

Copyright information

© 2014 Springer Science+Business Media New York

Cite this chapter

Buonomano, D.V. (2014). Neural Dynamics Based Timing in the Subsecond to Seconds Range. In: Merchant, H., de Lafuente, V. (eds) Neurobiology of Interval Timing. Advances in Experimental Medicine and Biology, vol 829. Springer, New York, NY. https://doi.org/10.1007/978-1-4939-1782-2_6

DOI: https://doi.org/10.1007/978-1-4939-1782-2_6

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4939-1781-5

Online ISBN: 978-1-4939-1782-2

eBook Packages: Biomedical and Life Sciences (R0)