Abstract

Monitoring can aid in diagnosis and treatment. Historically, monitoring has progressed from physical observation to technological innovation. Fundamental physical principles both guide and limit the design and scope of monitors. Without a therapeutic intervention and an understanding of what exactly is being monitored and how, the impact of any monitoring is lessened. Computers have enabled real-time processing of data to assist our treatment.

What Is the Purpose of Monitoring?

Why do we monitor? Monitoring, in the best circumstances, results in an improved diagnosis, allowing for more efficacious therapy. This was recognized over a century ago by the noted neurosurgeon Harvey Cushing, who wrote:

In all serious or questionable cases the patient's pulse and blood-pressure, their usual rate and level having been previously taken under normal ward conditions, should be followed throughout the entire procedure, and the observations recorded on a plotted chart. Only in this way can we gain any idea of physiological disturbances—whether given manipulations are leading to shock, whether there is a fall of blood-pressure from loss of blood, whether the slowed pulse is due to compression, and so on. [1]

Monitoring also allows titration of medication to a specific effect, whether it is a specific blood pressure, pain level, or electroencephalogram (EEG) activity. Despite all our uses of monitoring and technologies, clear data on their benefit are limited [2, 3]. Use of monitoring may not markedly change outcomes, despite changing intermediary events. However, simple logic dictates that we still need to monitor our patients; that is, we do not need a randomized controlled trial (RCT) to continue our practice. This was humorously pointed out in a British Medical Journal article regarding RCTs and parachutes: to paraphrase, those who don’t believe parachutes are useful because they haven’t been studied in an RCT should jump out of a plane without one [4]. Our ability to monitor has improved over the years, changing from simple observation and basic physical exam to highly sophisticated technologies. No matter how simple or complicated our monitoring devices or strategies, all rely on basic physical and physiological principles.

History of Monitoring

Historically, patients were monitored by simply observing or palpating or listening: Is the skin pink? Or blue? Or pale? Palpating the pulse, is it strong, thready, etc.? Are respirations audible as well as visible? Monitoring has progressed from these large, grossly observable signals, recorded on pen and paper, to much smaller, insensible signals, and finally to complex analyzed signals, able to be stored digitally and used in control loops.

Pressure Monitoring

These first observations as referenced by Dr. Cushing involved large signals that are easy to observe without amplification (e.g., inspiratory pressure, arterial pressure, venous pressure via observation of neck veins). Pressure was one of the first variables to be monitored as the signal is fairly large, either in centimeters of water or millimeters of mercury. These historical units were physically easy to recognize and had a real-world correlate, i.e., the central venous pressure rose to a particular height in a tube marked with a scale, or the Korotkoff sounds were auscultated when the mercury column was at a specific height. The use of the metric system and the Système International is slowly replacing these units.

Monitoring pressure, while a large observable signal, is actually quite complex. In 1714 when Stephen Hales first directly measured arterial pressure in a horse by using a simple manometer, the column of blood rose to 8 ft 3 in. above the left ventricle [5]. Due to the height of the column, inertial forces, and practicality, this method is not used today. Pressures in the living organism are not static, but are dynamic and changing. Simple manometry as shown in Fig. 1.1 (allowing fluid to reach its equilibrium state against gravity in a tube) worked well for slowly changing pressures such as venous pressure, but the inertia of the fluid does not allow for precise measurement of the dynamic changes in arterial pressure [7]. Indirect measurement of blood pressure was pioneered by Scipione Riva-Rocci in 1896, whose method determined the systolic blood pressure by inflating a cuff linked to a mercury manometer until the radial pulse was absent. In 1905 Nicolai Korotkoff discovered that by auscultation, one could infer the diastolic pressure as well [5, 8]. In current use, arterial blood pressure is measured using computer-controlled, automated, noninvasive devices [9] or arterial cannulation [10] as well as the older methods. These methods may not agree completely: noninvasive blood pressure (NIBP) tends to read higher than arterial blood pressure during hypotension and lower during hypertension [11]. Use of the Riva-Rocci method (modified by using a Doppler ultrasound probe for detection of flow) has been reported to measure systolic blood pressure in patients with continuous flow left ventricular assist devices as the other noninvasive methods cannot be used [12]. What is old (measuring only systolic blood pressure by an occlusive method) is new again.

Fig. 1.1 Manometry. A difference in gas pressure (P) in the two arms of the manometer tube performs work by moving the indicator fluid out of the higher-pressure arm until it reaches that point where the gravitational force (g) on the excess fluid in the low-pressure arm balances the difference in pressure. If the diameters of the two arms are matched, then the difference in pressure is a simple function of the difference in height (h) of the two menisci (Reproduced from Rampil et al. [6]; with kind permission from Springer Science + Business Media B.V.)

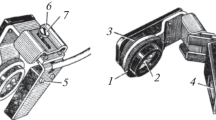
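The manometer relation described above (pressure balanced by the weight of a fluid column) can be sketched numerically. The following is a minimal illustration, not a clinical tool: it assumes the standard hydrostatic formula P = ρgh and a typical blood density of about 1060 kg/m³, a value that does not come from the text. The function name is hypothetical.

```python
# Hydrostatic pressure of a fluid column: P = rho * g * h.
G = 9.80665              # standard gravity, m/s^2
PA_PER_MMHG = 133.322    # pascals per millimeter of mercury
BLOOD_DENSITY = 1060.0   # kg/m^3, assumed typical value (not from the text)


def column_pressure_mmhg(height_m, density=BLOOD_DENSITY):
    """Pressure (mmHg) needed to support a fluid column of the given height."""
    return density * G * height_m / PA_PER_MMHG


# Hales's column: 8 ft 3 in = 99 in, at 0.0254 m per inch.
hales_height_m = 99 * 0.0254
hales_pressure = column_pressure_mmhg(hales_height_m)
print(f"{hales_height_m:.2f} m of blood is about {hales_pressure:.0f} mmHg")
```

Under these assumptions the 8 ft 3 in. column corresponds to a pressure just under 200 mmHg, which shows why a blood-filled tube is impractical and why denser mercury became the indicator fluid of choice.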

Development of pressure transducers as shown in Fig. 1.2 allowed analysis of the waveform to progress. Multiple technologies for pressure transducers exist. A common method is to use a device that changes its electrical resistance in response to pressure. This transducer is incorporated into an electronic circuit termed a Wheatstone bridge, wherein the changing resistance can be accurately measured and displayed as a graph of pressure versus time. Piezoelectric pressure transducers also exist, which change their voltage output directly in response to pressure. These technologies, along with simple manometry, can be used to measure other pressures such as central venous pressure, pulmonary artery pressure, and intracranial pressure. The physiological importance and clinical relevance of a reading depend, of course, on which of these pressures is measured as well as on the method of measurement.
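To make the Wheatstone bridge idea concrete, here is a small sketch of how a change in one resistor appears as a measurable voltage. The resistor values, excitation voltage, and function name are hypothetical, chosen only for illustration; real transducers differ in topology and calibration.

```python
def bridge_output(v_excitation, r1, r2, r3, r_sensor):
    """Differential output voltage of a Wheatstone bridge.

    Left divider:  r1 (top) over r2 (bottom).
    Right divider: r3 (top) over r_sensor (bottom).
    The output is V(right midpoint) - V(left midpoint).
    """
    v_left = v_excitation * r2 / (r1 + r2)
    v_right = v_excitation * r_sensor / (r3 + r_sensor)
    return v_right - v_left


# Hypothetical values: 5 V excitation, 350-ohm arms.
balanced = bridge_output(5.0, 350.0, 350.0, 350.0, 350.0)
# Pressure raises the sensing resistor by 0.2 % (assumed for illustration).
loaded = bridge_output(5.0, 350.0, 350.0, 350.0, 350.7)

print(f"balanced: {balanced * 1000:.3f} mV, loaded: {loaded * 1000:.3f} mV")
```

With all four arms equal the output is zero, so the circuit reports only the change in resistance. That small differential voltage (a few millivolts here) is what gets amplified and plotted as pressure versus time.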

Fig. 1.2 Variable capacitance pressure transducers. Most pressure transducers depend on the principle of variable capacitance, in which a change in pressure alters the distance between the two plates of a capacitor, resulting in a change in capacitance. Deflection of the diaphragm depends on the pressure difference, diameter to the fourth power, thickness to the third power, and Young’s modulus of elasticity (Reproduced from Cesario et al. [13]; with kind permission from Springer Science + Business Media B.V.)

Information about the state of the organism can be contained in both the instantaneous and long epoch data. Waveform analysis of the peripheral arterial signal, the pulse contour, has been used to try to determine stroke volume and cardiac output [7, 14]. Looking at a longer time frame, the pulse pressure variation induced by the respiratory signal has been analyzed to determine the potential response to fluid therapy [15]. Electronic transducers changed the pressure signal into an electronic one that could be amplified, displayed, stored, and analyzed.
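As an illustration of the longer-epoch analysis, pulse pressure variation is conventionally computed as the difference between the largest and smallest pulse pressure over a respiratory cycle, divided by their mean. The sketch below assumes that conventional definition. The beat values are invented, and no interpretive threshold is implied.

```python
def pulse_pressure_variation(systolic, diastolic):
    """PPV (%) over one respiratory cycle from per-beat pressures in mmHg."""
    pulse_pressures = [s - d for s, d in zip(systolic, diastolic)]
    pp_max = max(pulse_pressures)
    pp_min = min(pulse_pressures)
    pp_mean = (pp_max + pp_min) / 2
    return 100.0 * (pp_max - pp_min) / pp_mean


# Hypothetical beats across one ventilator breath.
systolic = [120, 124, 118, 114, 117]
diastolic = [70, 70, 69, 69, 70]
ppv = pulse_pressure_variation(systolic, diastolic)
print(f"PPV = {ppv:.1f} %")
```

For these invented beats the pulse pressures range from 45 to 54 mmHg, giving a variation of about 18 %.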

Electrical Monitoring

With the advent of technology and electronics (and the elimination of flammable anesthetic agents) in the twentieth century, monitoring accelerated. Within the technological aspects of monitoring, the electromagnetic spectrum has become one of the most fruitful avenues for monitoring. Electrical monitoring yields the electrocardiogram (ECG), electroencephalogram (EEG) (raw and processed), somatosensory-evoked potentials (SSEP), and neuromuscular block monitors (simple twitch and acceleromyography). We could now measure the electrical activity of the patient, both for cardiac and neurologic signals. Computers facilitate analysis of complex signals from these monitors.

The first electrocardiogram was recorded using a capillary electrometer (which involved observing the meniscus of liquid mercury and sulfuric acid under a microscope) by AD Waller who determined the surface field lines of the electrical activity of the heart [16]. Einthoven used a string galvanometer in the early 1900s, improving the accuracy and response time over the capillary electrometer [17]. In 1928, Ernstene and Levine compared a vacuum tube amplifier to Einthoven’s string galvanometer for ECG [18], concluding that the vacuum tube device was satisfactory. The use of any electronics in the operating theater was delayed until much later because the electronics were an explosion hazard in the presence of flammable anesthetics such as ether. Early intraoperative ECG machines were sealed to prevent any flammable gases or vapors from entering the area where ignition could occur.

The electroencephalogram (EEG) records the same basic physiology as the ECG (electrical activity summated by numbers of cells). However, the amplitude is tenfold smaller and the resistance much greater, creating larger technological hurdles. Using a string galvanometer, Berger in 1924 recorded the first human EEG from a patient who had a trepanation resulting in exposure of the cortex [19]. Further refinements led to development of scalp electrodes for the more routine determination of EEG. Processing the EEG can take the complex signal and via algorithms simplify it to a single, more easily interpreted number. The raw, unprocessed EEG still has value in determining the fidelity of the simple single number often derived from processed EEG measurements [20]. Somatosensory-evoked potentials can be used to evaluate potential nerve injury intraoperatively by evaluating the tiny signals evoked in sensory pathways and summating them over time to determine a change in the latency or amplitude of the signal [21].

The simple “twitch” monitor used to detect the degree of neuromuscular blockade caused by administration of either depolarizing or non-depolarizing muscle relaxants is a form of active electrical monitoring. Four supramaximal input stimuli at 0.5-s intervals (2 Hz) stimulate the nerve and the response is observed. Rather than simply seeing or feeling the “twitch,” a piezoelectric wafer can be attached to the thumb and the acceleration recorded electronically. Acceleromyography may improve the reliability by decreasing the “human factor” of observation as well as optimizing the muscle response if combined with preloading of the muscle being stimulated [22]. Understanding that electromagnetic waves can interfere with each other explains some of the modes of interference between equipment [23].
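The train of four stimuli described above lends itself to a simple numeric summary once the responses are recorded electronically. The sketch below assumes the conventional fade metric, the ratio of the fourth response to the first, which the text does not state explicitly. The acceleration values are invented.

```python
def train_of_four_ratio(twitch_responses):
    """Ratio of the fourth to the first response in a train of four."""
    if len(twitch_responses) != 4:
        raise ValueError("expected exactly four responses")
    first, fourth = twitch_responses[0], twitch_responses[3]
    if first <= 0:
        raise ValueError("first response must be positive")
    return fourth / first


STIMULUS_INTERVAL_S = 0.5  # four stimuli at 2 Hz, as in the text
stimulus_times = [i * STIMULUS_INTERVAL_S for i in range(4)]

# Hypothetical accelerations, normalized to the first twitch.
ratio = train_of_four_ratio([1.00, 0.85, 0.70, 0.60])
print(f"stimuli at {stimulus_times} s, ratio = {ratio:.2f}")
```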

Light Monitoring

Many gases of interest absorb light energy in the infrared range. Since multiple gases can absorb in this range, there can be interference, most notably for nitrous oxide [24], as well as false identifications. Intestinal gases such as methane can interfere as well [25]. Capnography has multiple uses in addition to detecting endotracheal intubation in the operating room, such as detection of cardiac arrest, effectiveness of resuscitation, and detection of hypoventilation [26]. In the arrest situation, it must be remembered that less CO2 is produced, and other modalities may be indicated, such as bronchoscopy, which uses anatomic determination of correct endotracheal tube placement rather than physiological [27].

Pulse oximetry utilizes multiple wavelengths, both visible and IR, and complex processing to result in the saturation number displayed. In its simplest form, pulse oximetry can be understood as a combination of optical plethysmography, i.e., measuring the volume (or path length the light is traveling), correcting for the non-pulsatile (non-arterial) signal, and measuring the absorbances of the different species of hemoglobin (oxygenated, deoxygenated). The ratio of absorbances obtained is empirically calibrated to determine the percent saturation [28]. The use of multiple wavelengths can improve the accuracy of pulse oximetry and potentially provide for the measurement of other variables of interest (carboxyhemoglobin, methemoglobin, total hemoglobin) [29, 30].
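The idea of correcting for the non-pulsatile signal and then comparing absorbances can be sketched as a "ratio of ratios". This is only an illustration: the linear mapping 110 − 25R is a commonly quoted textbook approximation assumed here, not the empirical calibration of any real device, and the signal amplitudes are invented.

```python
def ratio_of_ratios(ac_red, dc_red, ac_ir, dc_ir):
    """Pulsatile (AC) absorbance normalized by the non-pulsatile (DC) part,
    compared between the red and infrared wavelengths."""
    return (ac_red / dc_red) / (ac_ir / dc_ir)


def spo2_linear_estimate(r):
    """Illustrative linear calibration only; devices use empirical curves."""
    return max(0.0, min(100.0, 110.0 - 25.0 * r))


# Hypothetical signal amplitudes in arbitrary units.
r = ratio_of_ratios(ac_red=0.02, dc_red=1.0, ac_ir=0.04, dc_ir=1.0)
spo2 = spo2_linear_estimate(r)
print(f"R = {r:.2f}, estimated saturation = {spo2:.1f} %")
```

Dividing each pulsatile component by its baseline is what removes the contribution of tissue and venous blood, so that only the arterial pulse contributes to the ratio.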

Acoustic Monitoring

Sound is a longitudinal pressure wave. Auscultation with a stethoscope still has a place in modern medicine: An acute pneumothorax can be diagnosed by auscultation of decreased breath sounds, confirmed by percussion and hyperresonance, and treated by needle decompression (completing the process). A “simple” stethoscope actually has complex physics behind its operation. The bell and diaphragm act as acoustic filters, enhancing transmission of some sounds and impeding others to allow better detection of abnormalities [31].

Modern uses of sound waves have increased the frequency of the sound waves used to improve the spatial and temporal resolution, providing actual images of the internal structures in three dimensions [32]. Now only of historical use, A-mode ultrasonography (standing for amplitude mode) displayed the amplitude of the signal versus distance, useful for detecting a pericardial effusion or measuring fetal dimensions. B-mode ultrasound stands for “brightness mode” and produced a “picture” where the amplitude was converted to brightness. Multiple B-mode scans combine to produce the now common two-dimensional ultrasonography. M-mode echo displays the “brightness” over time, giving very fine temporal resolution [33]. These are still subject to physical limitations, i.e., sound transmission is relatively poor through air or bone (hence, the advantage of transesophageal echocardiography (TEE) vs. surface echocardiography), and fast-moving objects are better resolved using M-mode echo. The Doppler principle, involving the shift in wavelength by moving objects, can be used to detect and measure blood velocity in various vessels.

Temperature Monitoring

Common household thermometers use liquid (or a combination of metals) that expands with heat, obviously impractical for intraoperative monitoring. For continual monitoring, a thermistor is convenient. A thermistor works as part of a Wheatstone bridge, wherein the change in resistance of the thermistor is easily calibrated and converted into a change in temperature. Small, intravascular thermistors are used in pulmonary artery catheters for thermodilution monitoring of cardiac output as shown in Fig. 1.3. Newer electronic thermometers use IR radiation at the tympanic membrane or temporal artery. Unfortunately, despite ease of use, the accuracy is not as good as other methods [35].

Fig. 1.3 Thermodilution for cardiac output measurement via the Stewart-Hamilton indicator-dilution formula. The integral of change in temperature (area under the curve) is inversely related to cardiac output. A smooth curve with a rapid upstroke and slower decay to baseline should be sought. Sources of error include ventilatory variation, concurrent rapid-volume fluid administration, arrhythmias, significant tricuspid or pulmonary regurgitation, intracardiac shunt, and incomplete injection volume (causing overestimation of cardiac output). Lower cardiac output states result in relative exaggeration of these errors. Intraoperatively, ventilation can be temporarily suspended to measure cardiac output during exhalation, and several measurements should be averaged. If a second peak in the thermodilution curve is seen, a septal defect with recirculation of cooled blood through a left-to-right shunt should be suspected (Reproduced from Field [34]; with kind permission from Springer Science + Business Media B.V.)
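The inverse relation between the area under the curve and cardiac output can be sketched as follows. This is a simplified form of the Stewart-Hamilton calculation under stated assumptions: the constant `k` is a placeholder for the density, specific-heat, and catheter correction factors that real monitors apply, and the temperature curve is invented.

```python
def trapezoid_area(samples, dt):
    """Area under evenly spaced samples by the trapezoid rule."""
    return sum((a + b) / 2 * dt for a, b in zip(samples, samples[1:]))


def thermodilution_co(injectate_ml, blood_temp_c, injectate_temp_c,
                      delta_t_curve, dt_s, k=1.0):
    """Cardiac output (L/min) from a thermodilution curve.

    delta_t_curve holds the drop in blood temperature (degC) at each sample.
    k is a hypothetical lumped correction constant (placeholder value 1.0).
    """
    area = trapezoid_area(delta_t_curve, dt_s)            # degC * s
    flow_ml_per_s = injectate_ml * (blood_temp_c - injectate_temp_c) * k / area
    return flow_ml_per_s * 60 / 1000


# Hypothetical curve sampled once per second after a 10 mL iced bolus.
curve = [0.0, 0.3, 0.8, 1.0, 0.8, 0.6, 0.4, 0.3, 0.15, 0.08, 0.0]
co = thermodilution_co(10.0, 37.0, 0.0, curve, dt_s=1.0)

# Halving every temperature change halves the area and doubles the result.
co_small_curve = thermodilution_co(10.0, 37.0, 0.0,
                                   [x / 2 for x in curve], dt_s=1.0)
print(f"CO = {co:.2f} L/min, with half the area = {co_small_curve:.2f} L/min")
```

The second call illustrates the error direction noted in the caption: anything that shrinks the recorded temperature change, such as an incomplete injection that the calculation still treats as a full bolus, makes the computed output too high.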

Chemical Monitoring

Glucose was one of the first chemistries monitored in medicine, being related to diabetes [36]. The evolution of glucose measurements parallels that of many other measurement values, starting with chemical reagents, such as “Benedict’s solution,” mixed in actual test tubes, to miniaturization, to enzyme associated assays. Current point-of-care glucometers were primarily designed for home use and self-monitoring and their accuracy can be suspect [37]. But controversy and disagreement between different methods of measurement is a long-standing tradition in medicine [38].

Blood gas analysis began with the Clark electrode for oxygen in the early 1950s [39], followed by the Severinghaus electrode for CO2 in the late 1950s [40, 41]. Other ions (calcium, sodium, etc.) can be measured by using ion-selective barriers and similar technologies. Most chemical measurements involve removing a sample from the patient. Optode technology allows continuous, invasive measurement directly in the patient. This technology uses an optically sensitive reagent exposed to body fluids via a membrane, with the information transmitted via a fiberoptic cable [42–44]. Advantages to these continuous techniques have yet to be seen.

Respiratory carbon dioxide was identified by chemist Joseph Black in the 1700s. He had previously discovered the gas in other products of combustion. Most respiratory analysis is done by infrared absorption. (Oxygen is a diatomic gas and does not absorb in the infrared range so either amperometric fuel cell measurements or paramagnetism is used.) Exhaled carbon dioxide can be detected and partially quantitated in the field by pH-induced color changes, akin to litmus paper. Of note, gastric acid can produce color changes suggestive of respiratory CO2, providing a false assurance that the endotracheal tube is in the trachea, not in the esophagus [45]. More information is provided using formal capnography [27].

Point-of-care testing uses different reactions than standard laboratory tests, and results may not be directly comparable [46]. A test may be both accurate and precise, but not clinically useful in a particular situation. Measurement of a single value in the coagulation cascade may contain insufficient information to predict the outcome of an intervention. For example, antiphospholipid antibodies can increase the measured prothrombin time, while the patient is actually hypercoagulable [47].

Flow Monitoring

It is a source of regret that the measurement of flow is so much more difficult than the measurement of pressure. This has led to an undue interest in the blood pressure manometer. Most organs, however, require flow rather than pressure…

Jarisch, 1928 [48]

Flow is one of the most difficult variables to measure. The range of interest can vary greatly, from milliliters per minute in blood vessels to dozens of liters per minute in ventilation. Multiple techniques can be used to attempt to measure flow. Flow in the respiratory system and the anesthetic machine can be measured using variations on industrial and aeronautical devices (pitot tubes, flow restrictors combined with pressure sensors) and have an advantage that the flow can be directed through the measuring device. Cardiac output and organ flow are much more difficult to measure.

Adolf Fick proposed measuring cardiac output in the late 1800s using oxygen consumption and the arterial and venous oxygen difference [49]. A variation of this method uses partial rebreathing of CO2. Most measures of cardiac output are done with some variation of the indicator-dilution technique [14, 50]. Most techniques do not measure flow directly, but measure an associated variable. Understanding the assumptions of measurement leads to a better understanding of the accuracies and inaccuracies of the measurement. Indicator-dilution techniques work via integrating the concentration change over time and can work for various indicators (temperature, carbon dioxide, dyes, lithium, and oxygen) with different advantages and disadvantages. Temperature can be either a room temperature or ice-cold fluid bolus via a pulmonary artery catheter (with a thermistor at the distal end) or a heat pulse via a coil built into the catheter. Similar to injecting a hot or cold bolus, chemicals, such as lithium, can be injected intravenously and measured in an arterial catheter and the reading converted to a cardiac output [51]. An easy conceptual way to picture the thermodilution techniques (and to determine the direction of an injectate error) is to imagine trying to measure the volume of a teacup versus a swimming pool by placing an ice cube in each. The temperature change will be much greater in the teacup because of its smaller volume (correlates to the flow or cardiac output) than in the swimming pool. Decreasing the amount of injectate or increasing its temperature will overestimate the volume.
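Fick's proposal reduces to a single division: flow equals oxygen uptake divided by the arteriovenous oxygen content difference. The sketch below assumes that standard form of the Fick principle. The numbers are round illustrative values, not measurements.

```python
def fick_cardiac_output(vo2_ml_per_min, cao2_ml_per_l, cvo2_ml_per_l):
    """Cardiac output (L/min) from oxygen consumption and the
    arterial-venous oxygen content difference (mL O2 per L of blood)."""
    av_difference = cao2_ml_per_l - cvo2_ml_per_l
    if av_difference <= 0:
        raise ValueError("arterial content must exceed venous content")
    return vo2_ml_per_min / av_difference


# Hypothetical values: 250 mL O2/min consumed, 200 vs. 150 mL O2 per liter.
co = fick_cardiac_output(250.0, 200.0, 150.0)
print(f"Fick cardiac output = {co:.1f} L/min")
```

The same structure explains why the method measures an associated variable rather than flow itself: any error in oxygen consumption or in either content value passes straight through to the computed output.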

Measuring cardiac output by the Doppler technique involves measuring the Doppler shift, calculating the velocity of the flow, measuring the cross-sectional area and ejection time, and calculating the stroke volume. Then cardiac output is simply stroke volume times heart rate, assuming the measurement is made at the aortic root. Most clinical devices measure the velocity in the descending aorta and use a nomogram or other correction factors to determine total output [52].
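The chain of calculations in this paragraph can be sketched step by step. The example assumes the standard Doppler equation v = cΔf / (2 f₀ cos θ) and a conventional speed of sound in tissue of 1540 m/s. The probe frequency, shift, vessel diameter, and timing values are invented, and no nomogram or correction factor is applied.

```python
import math

SPEED_OF_SOUND_TISSUE = 1540.0  # m/s, conventional assumed value


def doppler_velocity(freq_shift_hz, transmit_freq_hz, angle_deg):
    """Blood velocity (m/s) from the Doppler shift and insonation angle."""
    cos_theta = math.cos(math.radians(angle_deg))
    return SPEED_OF_SOUND_TISSUE * freq_shift_hz / (2 * transmit_freq_hz * cos_theta)


def cardiac_output_l_min(mean_velocity_m_s, ejection_time_s,
                         diameter_cm, heart_rate_per_min):
    """Stroke volume = velocity * ejection time * area; CO = SV * heart rate."""
    area_cm2 = math.pi * (diameter_cm / 2) ** 2
    stroke_distance_cm = mean_velocity_m_s * 100 * ejection_time_s
    stroke_volume_ml = stroke_distance_cm * area_cm2
    return stroke_volume_ml * heart_rate_per_min / 1000


# Hypothetical measurement: 4 MHz probe aligned with flow (angle 0 degrees).
velocity = doppler_velocity(3636.0, 4.0e6, 0.0)
co = cardiac_output_l_min(velocity, ejection_time_s=0.3,
                          diameter_cm=2.0, heart_rate_per_min=75)
print(f"velocity = {velocity:.2f} m/s, CO = {co:.2f} L/min")
```

Because the diameter enters as a square, a small error in the measured diameter has a disproportionate effect on the result, and the cosine term makes the velocity estimate sensitive to probe alignment.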

Processed Information

Monitoring has progressed from large, grossly observable signals, recorded on pen and paper, to much smaller, imperceptible signals, and finally to complex analyzed signals, able to be stored digitally and used in control loops.

Data when obtained from monitoring can be stored using information systems or further analyzed in multiple manners. Processed data can reveal information that is not otherwise apparent. The SSEP can use data summation to elucidate a signal from a very noisy EEG background. Other processed EEG methods to measure depth of anesthesia use combinations of Fourier transform, coherence analysis, and various proprietary algorithms to output a single number indicating depth. Pulse oximetry and NIBP are two common examples of a complex signal being simplified into simpler numbers. Pulse contour analysis attempts to extract stroke volume from the arterial waveform [53].
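The data summation used for evoked potentials can be sketched with a simulation. It assumes that the evoked response is identical in every sweep and that the background is independent Gaussian noise, a simplification of real EEG. The half-sine response shape, noise level, and sweep count are all invented for illustration.

```python
import math
import random


def evoked_response(i, n_samples):
    """Hypothetical evoked waveform: a half-sine of amplitude 1."""
    return math.sin(math.pi * i / (n_samples - 1))


def make_sweep(rng, n_samples, noise_sd):
    """One stimulus-locked sweep: the fixed response plus random background."""
    return [evoked_response(i, n_samples) + rng.gauss(0.0, noise_sd)
            for i in range(n_samples)]


def average_sweeps(sweeps):
    """Point-by-point average across sweeps."""
    n = len(sweeps)
    return [sum(column) / n for column in zip(*sweeps)]


def rms_error(trace):
    """Root-mean-square difference between a trace and the true response."""
    n = len(trace)
    return math.sqrt(sum((x - evoked_response(i, n)) ** 2
                         for i, x in enumerate(trace)) / n)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
N_SAMPLES, NOISE_SD, N_SWEEPS = 200, 5.0, 400
sweeps = [make_sweep(rng, N_SAMPLES, NOISE_SD) for _ in range(N_SWEEPS)]

single_error = rms_error(sweeps[0])
averaged_error = rms_error(average_sweeps(sweeps))
print(f"single sweep error {single_error:.2f}, averaged error {averaged_error:.2f}")
```

Under these assumptions the noise in the average falls roughly with the square root of the number of sweeps, so a response five times smaller than the background becomes visible after a few hundred stimuli.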

Interactive monitors (where the system is “pinged”), either via external means (NMB monitor, SSEP) or internal changes (systolic pressure variation, pulse pressure variation, respiratory variation), can be thought of as “dynamic indices” wherein the information is increased by monitoring the system in several states or under conditions of various stimulation [54].

Automated feedback loops have been studied for fluid administration, blood pressure, glucose, and anesthetic control [55–57]. Even if automated loops provide superior control under described conditions, clinically humans remain in the loop.

Conclusion

Although monitoring of patients had been ongoing for years, the ASA standards for basic anesthetic monitoring were first established in 1986 and periodically revised. Individual care units (obstetric, neuro intensive care, cardiac intensive care, telemetry) may have their own standards, recommendations, and protocols.

While not all monitoring may need to be justified by RCT, not all monitoring may be beneficial. The data may be in error and affect patient treatment in an adverse manner. Automated feedback loops can accentuate this problem. Imagine automated blood pressure control when the transducer falls to the floor: Sudden artifactual hypertension is immediately treated resulting in actual hypotension and hypoperfusion.
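The size of the artifact in the fallen-transducer example can be estimated from the hydrostatic relation. The worked figure below assumes saline-filled tubing with a density of about 1000 kg/m³ and a drop of 1 m below the patient. Both values are assumptions chosen for illustration.

```latex
\Delta P = \rho g h
         = (1000\ \mathrm{kg/m^3})(9.81\ \mathrm{m/s^2})(1\ \mathrm{m})
         = 9810\ \mathrm{Pa}
         \approx \frac{9810}{133.3}\ \mathrm{mmHg}
         \approx 74\ \mathrm{mmHg}
```

Under these assumptions a normal arterial pressure would be displayed roughly 74 mmHg too high, which an automated controller would treat as severe hypertension.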

All measured values have some variation. Understanding the true accuracy and precision of a device is difficult. We have grown accustomed to looking at correlations, which provide some information but can give a “good” value merely by virtue of correlation over a wide range, without reflecting true accuracy or precision. A Bland-Altman analysis provides more information and a better method to compare two monitoring devices, by showing the bias and the precision. The Bland-Altman analysis still has limitations, as evidenced by proportional bias, wherein the bias and precision may be different at different values [58]. Receiver operating characteristic (ROC) curves are yet another manner of assessment for tests that have predictive values.
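A Bland-Altman comparison can be sketched in a few lines. The example assumes the usual convention of reporting the mean difference (bias) and limits of agreement at the bias plus or minus 1.96 standard deviations of the differences. The paired readings are invented and are not data from any cited study.

```python
import statistics


def bland_altman(method_a, method_b):
    """Return (bias, sd, (lower, upper)) for paired measurements.

    bias is the mean of the differences a - b; the limits of agreement
    are bias +/- 1.96 * sd of those differences.
    """
    differences = [a - b for a, b in zip(method_a, method_b)]
    bias = statistics.mean(differences)
    sd = statistics.stdev(differences)
    return bias, sd, (bias - 1.96 * sd, bias + 1.96 * sd)


# Hypothetical paired mean pressures (mmHg): noninvasive cuff vs. arterial line.
nibp = [62, 75, 90, 110, 135, 150]
arterial = [55, 70, 90, 112, 140, 158]

bias, sd, limits = bland_altman(nibp, arterial)
print(f"bias {bias:.1f} mmHg, limits {limits[0]:.1f} to {limits[1]:.1f} mmHg")
```

In this invented data set the two methods would correlate almost perfectly, yet the differences run from +7 at low pressures to −8 at high pressures. The overall bias of −0.5 mmHg hides that trend, which is the proportional bias limitation described above.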

The ultimate patient monitor would measure all relevant parameters of every organ, displayed in an intuitive and integrated manner; aid in our differential diagnosis; track ongoing therapeutic interventions; and reliably predict the future. The ultimate patient monitor is a physician.

References

1. Cushing H. Technical methods of performing certain operations. Surg Gynecol Obstet. 1908;6:237–46.

2. Pedersen T, Moller AM, Pedersen BD. Pulse oximetry for perioperative monitoring: systematic review of randomized, controlled trials. Anesth Analg. 2003 Feb;96(2):426–31.

3. Moller JT, Pedersen T, Rasmussen LS, Jensen PF, Pedersen BD, Ravlo O, et al. Randomized evaluation of pulse oximetry in 20,802 patients: I. Design, demography, pulse oximetry failure rate, and overall complication rate. Anesthesiology. 1993 Mar;78(3):436–44.

4. Smith GC, Pell JP. Parachute use to prevent death and major trauma related to gravitational challenge: systematic review of randomised controlled trials. BMJ. 2003 Dec 20;327(7429):1459–61.

5. Karnath B. Sources in error in blood pressure measurement. Hospital Physician. 2002;38(3):33–7.

6. Rampil I, Schwinn D, Miller R. Physics principles important in anesthesiology, Atlas of anesthesia, vol. 2. New York: Current Medicine; 2002.

7. Thiele RH, Durieux ME. Arterial waveform analysis for the anesthesiologist: past, present, and future concepts. Anesth Analg. 2011 Oct;113(4):766–76.

8. Segall HN. How Korotkoff, the surgeon, discovered the auscultatory method of measuring arterial pressure. Ann Intern Med. 1975 Oct;83(4):561–2.

9. Tholl U, Forstner K, Anlauf M. Measuring blood pressure: pitfalls and recommendations. Nephrol Dial Transplant. 2004 Apr;19(4):766–70.

10. Brzezinski M, Luisetti T, London MJ. Radial artery cannulation: a comprehensive review of recent anatomic and physiologic investigations. Anesth Analg. 2009 Dec;109(6):1763–81.

11. Wax DB, Lin HM, Leibowitz AB. Invasive and concomitant noninvasive intraoperative blood pressure monitoring: observed differences in measurements and associated therapeutic interventions. Anesthesiology. 2011 Nov;115(5):973–8.

12. Wieselthaler G. Non-invasive blood pressure monitoring in patients with continuous flow rotary LVAD. ASAIO. 2000;46(2):196.

13. Cesario D, Reynolds D, Swerdlow C, Shivkumar K, Weiss J, Fonarow G, et al. Novel implantable nonpacing devices in heart failure, Atlas of heart diseases, vol. 15. New York: Current Medicine; 2005.

14. Funk DJ, Moretti EW, Gan TJ. Minimally invasive cardiac output monitoring in the perioperative setting. Anesth Analg. 2009 Mar;108(3):887–97.

15. Cannesson M, Le Manach Y, Hofer CK, Goarin JP, Lehot JJ, Vallet B, et al. Assessing the diagnostic accuracy of pulse pressure variations for the prediction of fluid responsiveness: a "gray zone" approach. Anesthesiology. 2011 Aug;115(2):231–41.

16. Sykes AH. A D Waller and the electrocardiogram, 1887. Br Med J (Clin Res Ed). 1987 May 30;294(6584):1396–8.

17. Rivera-Ruiz M, Cajavilca C, Varon J. Einthoven's string galvanometer: the first electrocardiograph. Tex Heart Inst J. 2008;35(2):174–8.

18. Ernstene AC. A comparison of records taken with the einthoven string galvanometer and the amplifier-type electrocardiograph. Am Heart J. 1928;4:725–31.

19. Collura TF. History and evolution of electroencephalographic instruments and techniques. J Clin Neurophysiol. 1993 Oct;10(4):476–504.

20. Bennett C, Voss LJ, Barnard JP, Sleigh JW. Practical use of the raw electroencephalogram waveform during general anesthesia: the art and science. Anesth Analg. 2009 Aug;109(2):539–50.

21. Tsai SW, Tsai CL, Wu PT, Wu CY, Liu CL, Jou IM. Intraoperative use of somatosensory-evoked potential in monitoring nerve roots. J Clin Neurophysiol. 2012 Apr;29(2):110–7.

22. Claudius C, Viby-Mogensen J. Acceleromyography for use in scientific and clinical practice: a systematic review of the evidence. Anesthesiology. 2008 Jun;108(6):1117–40.

23. Patel SI, Souter MJ. Equipment-related electrocardiographic artifacts: causes, characteristics, consequences, and correction. Anesthesiology. 2008 Jan;108(1):138–48.

24. Severinghaus JW, Larson CP, Eger EI. Correction factors for infrared carbon dioxide pressure broadening by nitrogen, nitrous oxide and cyclopropane. Anesthesiology. 1961 May–Jun;22:429–32.

25. Mortier E, Rolly G, Versichelen L. Methane influences infrared technique anesthetic agent monitors. J Clin Monit Comput. 1998 Feb;14(2):85–8.

26. Kodali BS. Capnography outside the operating rooms. Anesthesiology. 2013 Jan;118(1):192–201.

27. Cardoso MM, Banner MJ, Melker RJ, Bjoraker DG. Portable devices used to detect endotracheal intubation during emergency situations: a review. Crit Care Med. 1998 May;26(5):957–64.

28. Alexander CM, Teller LE, Gross JB. Principles of pulse oximetry: theoretical and practical considerations. Anesth Analg. 1989 Mar;68(3):368–76.

29. Aoyagi T, Fuse M, Kobayashi N, Machida K, Miyasaka K. Multiwavelength pulse oximetry: theory for the future. Anesth Analg. 2007 Dec;105(6 Suppl):S53–8.

30. Shamir MY, Avramovich A, Smaka T. The current status of continuous noninvasive measurement of total, carboxy, and methemoglobin concentration. Anesth Analg. 2012 May;114(5):972–8.

31. Rappaport M. Physiologic and physical laws that govern auscultation, and their clinical application. Am Heart J. 1941;2(3):257–318.

32. Hung J, Lang R, Flachskampf F, Shernan SK, McCulloch ML, Adams DB, et al. 3D echocardiography: a review of the current status and future directions. J Am Soc Echocardiogr. 2007 Mar;20(3):213–33.

33. Feigenbaum H. Role of M-mode technique in today's echocardiography. J Am Soc Echocardiogr. 2010 Mar;23(3):240–57. 335–7.

34. Field L. Electrocardiography and invasive monitoring of the cardiothoracic patient, Atlas of cardiothoracic anesthesia, vol. 1. New York: Current Medicine; 2009.

35. Sessler DI. Temperature monitoring and perioperative thermoregulation. Anesthesiology. 2008 Aug;109(2):318–38.

36. Clarke SF, Foster JR. A history of blood glucose meters and their role in self-monitoring of diabetes mellitus. Br J Biomed Sci. 2012;69(2):83–93.

37. Rice MJ, Pitkin AD, Coursin DB. Review article: glucose measurement in the operating room: more complicated than it seems. Anesth Analg. 2010 Apr 1;110(4):1056–65.

38. Davison JM, Cheyne GA. History of the measurement of glucose in urine: a cautionary tale. Med Hist. 1974 Apr;18(2):194–7.

39. Clark Jr LC, Wolf R, Granger D, Taylor Z. Continuous recording of blood oxygen tensions by polarography. J Appl Physiol. 1953 Sep;6(3):189–93.

40. Severinghaus JW, Bradley AF. Electrodes for blood pO2 and pCO2 determination. J Appl Physiol. 1958 Nov;13(3):515–20.

41. Severinghaus JW. First electrodes for blood PO2 and PCO2 determination. J Appl Physiol. 2004 Nov;97(5):1599–600.

42. Halbert SA. Intravascular monitoring: problems and promise. Clin Chem. 1990 Aug;36(8 Pt 2):1581–4.

43. Wahr JA, Tremper KK. Continuous intravascular blood gas monitoring. J Cardiothorac Vasc Anesth. 1994 Jun;8(3):342–53.

44. Ganter M, Zollinger A. Continuous intravascular blood gas monitoring: development, current techniques, and clinical use of a commercial device. Br J Anaesth. 2003 Sep;91(3):397–407.

45. Srinivasa V, Kodali BS. Caution when using colorimetry to confirm endotracheal intubation. Anesth Analg. 2007 Mar;104(3):738. Author reply 9.

46. Douglas AD, Jefferis J, Sharma R, Parker R, Handa A, Chantler J. Evaluation of point-of-care activated partial thromboplastin time testing by comparison to laboratory-based assay for control of intravenous heparin. Angiology. 2009 Jun–Jul;60(3):358–61.

47. Perry SL, Samsa GP, Ortel TL. Point-of-care testing of the international normalized ratio in patients with antiphospholipid antibodies. Thromb Haemost. 2005 Dec;94(6):1196–202.

48. Prys-Roberts C. The measurement of cardiac output. Br J Anaesth. 1969 Sep;41(9):751–60.

49. Geerts BF, Aarts LP, Jansen JR. Methods in pharmacology: measurement of cardiac output. Br J Clin Pharmacol. 2011 Mar;71(3):316–30.

50. Reuter DA, Huang C, Edrich T, Shernan SK, Eltzschig HK. Cardiac output monitoring using indicator-dilution techniques: basics, limits, and perspectives. Anesth Analg. 2010 Mar 1;110(3):799–811.

51. Garcia-Rodriguez C, Pittman J, Cassell CH, Sum-Ping J, El-Moalem H, Young C, et al. Lithium dilution cardiac output measurement: a clinical assessment of central venous and peripheral venous indicator injection. Crit Care Med. 2002 Oct;30(10):2199–204.

52. Schober P, Loer SA, Schwarte LA. Perioperative hemodynamic monitoring with transesophageal Doppler technology. Anesth Analg. 2009 Aug;109(2):340–53.

53. Lahner D, Kabon B, Marschalek C, Chiari A, Pestel G, Kaider A, et al. Evaluation of stroke volume variation obtained by arterial pulse contour analysis to predict fluid responsiveness intraoperatively. Br J Anaesth. 2009 Sep;103(3):346–51.

54. Marik P. Hemodynamic parameter to guide fluid therapy. Transfusion Alter Transfusion Med. 2010;11(3):102–12.

55. Rinehart J, Liu N, Alexander B, Cannesson M. Review article: closed-loop systems in anesthesia: is there a potential for closed-loop fluid management and hemodynamic optimization? Anesth Analg. 2012 Jan;114(1):130–43.

56. Liu N, Chazot T, Hamada S, Landais A, Boichut N, Dussaussoy C, et al. Closed-loop coadministration of propofol and remifentanil guided by bispectral index: a randomized multicenter study. Anesth Analg. 2011 Mar;112(3):546–57.

57. Luginbuhl M, Bieniok C, Leibundgut D, Wymann R, Gentilini A, Schnider TW. Closed-loop control of mean arterial blood pressure during surgery with alfentanil: clinical evaluation of a novel model-based predictive controller. Anesthesiology. 2006 Sep;105(3):462–70.

58. Morey TE, Gravenstein N, Rice MJ. Assessing point-of-care hemoglobin measurement: be careful we don't bias with bias. Anesth Analg. 2011 Dec;113(6):1289–91.

Copyright information

© 2014 Springer Science+Business Media New York

About this chapter

Cite this chapter

Szocik, J.F. (2014). Overview of Clinical Monitoring. In: Ehrenfeld, J., Cannesson, M. (eds) Monitoring Technologies in Acute Care Environments. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-8557-5_1

DOI: https://doi.org/10.1007/978-1-4614-8557-5_1

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-8556-8

Online ISBN: 978-1-4614-8557-5

eBook Packages: Medicine (R0)