Abstract

This chapter presents two methodologies, Selection-Integrated Optimization (SIO) and Comprehensive Product Platform Planning (CP3), which convert the inherently combinatorial product family optimization problem into continuous optimization problems. These conversions enable one-step product family optimization without presuming the choice of platform and scaling design variables. Such approaches also enable taking full advantage of continuous optimization methods.

Portions of this paper appeared in S. Chowdhury, A. Messac, and R. A. Khire (2011) Comprehensive Product Platform Planning (CP^3) Framework, ASME Journal of Mechanical Design, 133(11), Paper No. 101004 (© ASME 2011), reprinted with permission.

1 Introduction

In developing a successful product family, designers must translate the qualitative leveraging strategies into useful customer requirements to guide platform-based product development (Simpson et al. 2006). To this end, the engineering design community has developed and employed effective quantitative methods over the last two decades. The majority of these quantitative methods use some form of numerical optimization. A classification of these quantitative methods and a brief description of each class are provided in the book chapter by Simpson (2006). In this chapter, product platform planning refers to such quantitative design of a family of products.

Among the optimization-based product family design (PFD) methods, the class that promises to avoid suboptimal solutions is the single-stage approach in which the platform variables are determined during optimization (Khire et al. 2006). A brief summary of this class of methods is provided in the book chapter by Simpson (2006). Quantifying the product design is generally a continuous process, whereas identifying the platform is inherently a discrete process. Simultaneously (1) identifying the platform and scaling design variables and (2) determining the corresponding variable values is a challenging task (Khajavirad and Michalek 2008). The likely presence of complex nonlinear system functions adds further to this challenge, which calls for powerful mixed-discrete nonlinear optimization methods. As discussed in the book chapter by Simpson (2006), genetic algorithms (GA) are suitable for this purpose.

An effective GA-based product family design method was developed by Khajavirad et al. (2009). In this method, a decomposition solution strategy is developed using the binary-coded non-dominated sorting genetic algorithm II (NSGA-II) (Deb et al. 2002). The method allows a platform to form whenever a design variable (value) is shared by more than one product, not necessarily by all products in the family. This strategy eliminates the “all or none” restriction (Simpson 2006). The significant computational expense of the binary GA approach, especially for large-scale problems, is addressed using a parallelized sub-GA solution strategy. Chen and Wang (2008) present similar flexibility in platform creation. Their PFD method uses a 2-level chromosome genetic algorithm (2-LCGA) and an information-theoretic approach that incorporates fuzzy clustering and Shannon’s entropy to identify platform design variables. However, in this method, platform creation precedes performance optimization of the product family. Consequently, the method of Chen and Wang (2008) exhibits the limiting attributes of the “two-step” approach. Jiao et al. (2007a) presented another GA-based product family design method, which addresses various product family design scenarios. This method also includes a hybrid constraint-handling technique for complex and distinguishing constraints at different stages of the evolutionary process. Jiao et al. (2007a) illustrated its efficiency by applying it to the design of a family of electric motors.

Product family design is a complex combinatorial optimization problem that is known to be intractable, or NP-hard (Jiao et al. 2007b). Discrete (integer) design variables appreciably increase the burden on the optimization method. This is particularly true for large-scale real-life products (e.g., automobiles) and/or a large number of product variants (e.g., electric motors). In addition, the choice of optimization algorithms becomes limited when solving such a mixed-discrete optimization problem. Hence, an effective continuous approximation of the single-step platform planning model could provide a welcome reprieve. In recent years, two such promising methods have been reported, which:

- Develop a tractable continuous approximation of the one-step product platform planning problem.
- Solve the approximated problem using standard continuous optimization methods.

This chapter presents the development of the two one-step continuous PFD approaches: (1) the Selection-Integrated Optimization [SIO (Khire 2006)] and (2) the Comprehensive Product Platform Planning [CP3 (Chowdhury et al. 2011)]. Section 12.2 provides the formulations of the integrated product platform planning models in these two approaches. Section 12.3 presents the continuous optimization strategies developed and implemented in these two approaches. Section 12.4 discusses the results from the application of these approaches to design families of universal electric motors. Closing remarks are offered in Sect. 12.5.

2 Integrated Product Planning Models

2.1 Selection-Integrated Optimization (SIO) Model

The SIO method, introduced by Khire and Messac (2008), solves a continuous approximation of the product family design problem. A penalty function is formulated to represent the lack of commonality among products. Subsequently, product family optimization is performed by implementing the Variable-Segregating Mapping-Function (VSMF) scheme in the order shown in Fig. 12.1. The motivation of the SIO methodology is to eliminate a significant source of suboptimality involved in the adaptive system optimization (or product family design) procedure caused by the separation of the (1) selection and (2) optimization processes. The next section discusses the development of the VSMF strategy and how it is implemented within the SIO framework to create and optimize a continuous approximation of the product family design problem.

The example of the universal electric motor family is used to illustrate the formulation and application of the SIO method. As discussed in the book chapter by Simpson (2006), the design configuration of the motor changes to satisfy different output torque requirements. For the motor, a change in design configuration involves scaling the following design variables:

- cross-sectional area of the armature wire (\( {A_{wa }} \))
- cross-sectional area of the field pole wire (\( {A_{wf }} \))
- number of wire turns on each field pole (\( {N_s} \))
- number of wire turns on the armature (\( {N_c} \))
- stack length of the motor (\( L \))
- radius of the stator (\( {r_0} \))
- thickness of the stator (\( t \))

Scaling these design variables is likely to incur additional manufacturing cost because of factors such as complex tooling requirements. These additional costs are captured by the penalty, which is measured in terms of the differences in the values of the above scaling variables.

2.1.1 Penalty Function Formulation

The penalty function measure is applicable mostly to scale-based product families and is defined in terms of the design variables. Reducing the penalty function increases the commonality among the products in a family. Mathematically, the penalty function, \( {f_{\mathrm{ pen}}} \), is the sum, over the participating design variables, of the ratio of the standard deviation to the mean of each design variable within the product family (i.e., its coefficient of variation):

\[ f_{\mathrm{pen}} = \sum_{i=1}^{n} \frac{\sigma_{x_i}}{\mu_{x_i}} \]

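where n is the number of design variables that participate in platform planning, the parameter \( {\sigma_{{{x_i}}}} \) represents the standard deviation of the generic ith design variable across all the product variants, and \( {\mu_{{{x_i}}}} \) represents the mean of the generic ith design variable across all the product variants. In the case of the motor family, the penalty function is therefore expressed as

\[ f_{\mathrm{pen}} = \frac{\sigma_{A_{wa}}}{\mu_{A_{wa}}} + \frac{\sigma_{A_{wf}}}{\mu_{A_{wf}}} + \frac{\sigma_{N_s}}{\mu_{N_s}} + \frac{\sigma_{N_c}}{\mu_{N_c}} + \frac{\sigma_L}{\mu_L} + \frac{\sigma_{r_0}}{\mu_{r_0}} + \frac{\sigma_t}{\mu_t} \]
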
where the subscripts indicate which variable each standard deviation and mean is associated with. For example, \( {\sigma_L} \) represents the standard deviation of the motor stack length across all the motor variants, and \( {\mu_L} \) represents the corresponding mean. This penalty function can be extended by weighting the term for each design variable (a weighted sum). Minimizing the penalty function drives down the standard deviations relative to the means, which encourages the design variables to become part of the platform.
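The penalty function above is straightforward to evaluate. The Python sketch below computes it for a family stored as a list of products, with one list of design-variable values per product. It assumes the population standard deviation, because the text does not say whether the population or the sample form is used. It also includes the optional per-variable weights mentioned above. The function name `penalty` is illustrative.

```python
from statistics import mean, pstdev


def penalty(family, weights=None):
    """Sum of (weighted) coefficients of variation, one term per design variable.

    family: list of products; each product is a list of n design-variable values
            in the same order (e.g., A_wa, A_wf, N_s, N_c, L, r_0, t for the motor).
    weights: optional per-variable weights; unweighted sum if omitted.
    Assumes positive means and uses the population standard deviation.
    """
    n = len(family[0])
    if weights is None:
        weights = [1.0] * n
    total = 0.0
    for i in range(n):
        values = [product[i] for product in family]   # variable i across all variants
        total += weights[i] * pstdev(values) / mean(values)
    return total
```

A variable that takes the same value in every product contributes zero to the sum. For example, `penalty([[1.0, 2.0], [3.0, 2.0]])` is 0.5, and the entire contribution comes from the first variable.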

2.1.2 SIO Problem Formulation

In the bi-objective problem for the electric motor product family (with \( N \) motor variants), the performance and the penalty objective functions are combined into an aggregate objective function (AOF). The optimization problem is formulated as

where \( {T^k} \), \( P_{out}^k \), \( M_{total}^k \), \( {H^k} \), and \( {\eta^k} \), respectively, represent the torque, the power output, the total mass, the magnetization intensity, and the efficiency of the kth motor; \( {w_1} \) and \( {w_2} \) are the weights for the performance and the penalty objectives, respectively; and \( {f_{\mathrm{ per}}} \) is the overall performance objective of the family. In the case of the motor family, Khire (2006) used the average of the individual motor efficiencies as the performance objective:

\[ f_{\mathrm{per}} = \frac{1}{N} \sum_{k=1}^{N} \eta^k \]

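The sketch below shows how the two objectives might combine: it evaluates \( {f_{\mathrm{ per}}} \) and a weighted-sum AOF. The full problem statement (Eq. 12.3) is not reproduced here, so the form of the AOF is an assumption. The AOF is taken to be minimized, with the performance term negated so that a higher average efficiency lowers the AOF. The default weights are placeholders.

```python
from statistics import mean


def f_per(efficiencies):
    """Family performance: average of the individual motor efficiencies."""
    return mean(efficiencies)


def aof(efficiencies, f_pen, w1=0.5, w2=0.5):
    """ASSUMED weighted-sum aggregate objective, to be minimized.

    Performance is negated (higher efficiency is better); the penalty
    (lack of commonality) enters with a positive sign.
    """
    return -w1 * f_per(efficiencies) + w2 * f_pen
```

With two motors at efficiencies 0.6 and 0.8 and a penalty of 0.2, equal weights give an AOF of -0.35 + 0.1 = -0.25.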
2.2 Comprehensive Product Platform Planning (CP3) Model

Several existing PFD methodologies [including recent methods (Chen and Wang 2008)] do not readily represent the platform planning process by a mathematical model that is independent of the optimization process. In other words, the models are formulated such that a particular class of optimization algorithms must be leveraged to effectively optimize the PFD. The development of generalized models of the platform planning process would provide the leverage to choose from different classes of optimization algorithms to solve the problem; this choice practically depends on the complexity of the concerned system and the user’s experience with particular optimization methods. Moreover, a generalized mathematical model can facilitate helpful investigation of the underlying mathematics (complex and combinatorial) of product platform planning.

Existing PFD models also tend to make a clear distinction between scale-based and module-based product families. Table 8 in the book chapter by Simpson (2006) shows that a majority of the existing PFD methods are reported to be suitable for “either scale-based or module-based” product families. The scale-based PFD methods assume that each product comprises all the design variables involved in the family; as a result, these methods do not readily apply to modular product families. On the other hand, typical modular PFD methods often cannot readily account for the simultaneous presence of modular and scalable product attributes without platform/scaling assumptions. Such a combination is common in complex real-life systems/products. For example, a laptop's DVD drive can have both modular (DVD, DVD-RW, Blu-ray Disc) and scalable (drive speeds = 1×, 2×, …, N× 1.35 MB/s) properties.

The Comprehensive Product Platform Planning (CP3) method, developed by Chowdhury et al. (2011), formulates a generalized model that (1) is independent of the eventual solution strategy and (2) seeks to avoid traditional distinctions between modular and scalable families. This model not only allows effective design optimization of product families but also supports further investigation of the underlying processes in product platform planning. Examples include (1) how sensitive the commonality indices are to the number of product variants in each platform and (2) how the production volume of each product variant affects optimal product platform planning. Other important features of the CP3 model are:

- This model avoids the “all or none” restriction (Simpson 2006); it thus allows the formation of subfamilies.
- This model facilitates simultaneous (1) selection of platform/scaling design variables and (2) optimization of the physical design of the product variants.
- This model yields a mixed-integer nonlinear programming (MINLP) problem for the optimization process.

In the subsequent sections, the formulation of the CP3 model is presented and is followed by an illustration of this model using the example of a representative “4 products-5 variables” family. This section is concluded with the definition of the overall optimization problem yielded by the CP3 model.

2.2.1 Formulation of the CP3 Model

The CP3 model is founded on the concept of a mixed-integer nonlinear equality constraint (Chowdhury et al. 2011). This formulation is illustrated using a representative family of two products, each comprising three design variables. Table 12.1 shows the variables involved in this example. For a design variable \( x_j^k \) in Table 12.1, the superscript (\( k \)) and the subscript (\( j \)) represent the product number and the variable number, respectively. The binary-integer variables (represented by \( \lambda \)) given in Table 12.1 are defined as

\[ \lambda_j^{kl} = \begin{cases} 1, & \text{if product-}k\text{ and product-}l\text{ share the }j\text{th design variable } (x_j^k = x_j^l) \\ 0, & \text{otherwise} \end{cases} \]

Hence, the \( \lambda \) variables determine the commonality among products, with respect to the system design variables. The general MINLP problem, formulated to represent the design optimization of the product family example (shown in Table 12.1), is given by

where \( {f_{\mathrm{ perf}}} \) and \( {f_{\mathrm{ cost}}} \) are the objective functions that represent the performance and the cost of the product family, respectively. In Eq. (12.6), \( {g_i} \) and \( {h_i} \), respectively, represent the inequality and equality constraints related to the physical design of the product. The first equality constraint in Eq. (12.6), which involves the generic parameters \( \lambda_j^{kl } \), is termed the commonality constraint. This constraint can be represented in a compact matrix format as

This formulation can be extended to a general product family, comprising \( N \) products and \( n \) design variables, as given by

The matrix \( \varLambda \) is called the commonality constraint matrix. This matrix is a symmetric block diagonal matrix, where the \( j \)th block corresponds to the \( j \)th design variable. An explicit representation of each block of the \( \varLambda \) matrix is given by

The commonality constraint matrix can be derived from the generalized commonality matrix \( \lambda \) that is expressed as

It can be observed from Eq. (12.10) that the commonality matrix is also a symmetric block diagonal matrix. The off-diagonal elements of the commonality matrix (\( \lambda_j^{kl } \)) are called the commonality variables in the remainder of this chapter. The diagonal elements of the commonality matrix (\( \lambda_j^{kk } \)) determine whether the \( j \)th variable is included in product-\( k \). This commonality matrix definition is similar to that presented by Khajavirad and Michalek (2008). Every block of the commonality constraint matrix can be expressed as a function of the corresponding commonality matrix block:

In typical modular product families, different products might comprise different types and different numbers of modules. Each module consists of a particular set of design variables, also known as module attributes; these attributes may be shared by more than one module in a complex system. Consequently, different products can comprise physically different sets of design variables. To address modular product family design, a product platform planning model should therefore account for the inclusion, the exclusion, and the substitution of design variables. The novel commonality matrix can capture these three possibilities. In this context, one of the following three distinct scenarios can occur:

1. The \( j \)th design variable is required in product-\( k \); consequently, \( \lambda_j^{kk }=1 \) is known a priori (fixed).
2. The \( j \)th design variable is not required for product-\( k \); consequently, \( \lambda_j^{kk }=0 \) is known a priori (fixed).
3. Inclusion of the \( j \)th design variable is optional for product-\( k \); the corresponding \( \lambda_j^{kk } \) element is determined during the course of product family optimization (treated as a variable).

If the third scenario occurs, the CP3 model does not make any prior assumptions regarding the attribute values or whether a module (or the corresponding attributes) is shared by multiple products (forms a platform or not). If the first scenario occurs, the module attributes themselves may scale among the products that must include them. The commonality matrix blocks representing the scaling design variables (attributes included in all product variants) should have their diagonal elements fixed at one. Therefore, careful specification of the diagonal elements of the commonality matrix automatically addresses the modularity/scalability properties of the design variables, without imposing limiting distinctions. The model can thus treat scaling and modular attributes coherently within a single product family, which is a distinctive strength.

A family of laptops illustrates how the CP3 model flexibly addresses families that combine modular and scaling attributes. Three laptops (a 15 in., a 13 in., and a netbook) are being designed. The manufacturer decides that the 15 in. will have a DVD drive and the netbook will not (due to space constraints). The 13 in. may or may not have one, depending on the ensuing overall product cost. A key constituent design variable of the DVD-drive module is the drive speed/data rate, which is a scaling attribute: e.g., “1× or 2× 1.35 MB/s.” In this case, the “3 × 3” commonality matrix block corresponding to this design variable (drive speed/data rate) can be expressed as

\[ \lambda_j = \begin{bmatrix} 1 & \lambda^{12} & 0 \\ \lambda^{21} & \lambda^{22} & 0 \\ 0 & 0 & 0 \end{bmatrix} \]

where the order of the products is [15 in., 13 in., netbook] and the commonality variables \( \lambda^{12} \), \( \lambda^{21} \) (equal to \( \lambda^{12} \), since the block is symmetric), and \( \lambda^{22} \) are to be determined during optimization. This commonality matrix formulation (Eq. 12.10) thus provides a generalized product platform planning model. The model can address a wide variety of product families without making limiting distinctions between scalable and modular attributes.
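The laptop block can be encoded directly, and the Python sketch below builds it from the two free commonality variables. It then forms a commonality constraint block \( \varLambda_j \) from that block. The construction of \( \varLambda_j \) used here is an assumption, not necessarily the exact form of Eq. (12.11). Off-diagonal entries are set to \( -\lambda_j^{kl} \), and each diagonal entry is the sum of \( \lambda_j^{kl} \) over \( l \neq k \). This construction is chosen because its quadratic form equals \( \sum_{k<l} \lambda_j^{kl}(x_j^k - x_j^l)^2 \). The rule that the 13 in. laptop can share the drive speed only if it includes a drive is also an assumption, and all function names are hypothetical.

```python
from itertools import combinations


def laptop_block(lam12, lam22):
    """3x3 commonality block for drive speed; product order [15 in., 13 in., netbook].

    lam12 (= lam21 by symmetry) and lam22 are the free binary variables; the other
    entries are fixed a priori (15 in. must include the drive, netbook cannot).
    """
    if lam12 not in (0, 1) or lam22 not in (0, 1):
        raise ValueError("commonality variables are binary")
    if lam12 > lam22:
        # ASSUMED consistency rule: sharing requires the 13 in. model to include a drive.
        raise ValueError("lam12 = 1 requires lam22 = 1")
    return [[1, lam12, 0],
            [lam12, lam22, 0],
            [0, 0, 0]]


def constraint_block(lam):
    """ASSUMED Laplacian-style constraint block built from off-diagonal lambdas.

    Diagonal (inclusion) entries of lam are not used here.
    """
    n = len(lam)
    block = [[0] * n for _ in range(n)]
    for k in range(n):
        for l in range(n):
            if k != l:
                block[k][l] = -lam[k][l]
                block[k][k] += lam[k][l]
    return block


def quadratic_form(m, x):
    """x^T m x for a square matrix m given as nested lists."""
    n = len(x)
    return sum(x[k] * m[k][l] * x[l] for k in range(n) for l in range(n))


def pairwise_penalty(lam, x):
    """Sum over product pairs k < l of lam^{kl} * (x^k - x^l)^2."""
    return sum(lam[k][l] * (x[k] - x[l]) ** 2
               for k, l in combinations(range(len(x)), 2))
```

With `lam = laptop_block(1, 1)` and drive speeds `x = [2.0, 1.0, 0.0]` (the netbook entry is a placeholder, since that product has no drive), both `quadratic_form(constraint_block(lam), x)` and `pairwise_penalty(lam, x)` evaluate to 1.0. Requiring this quantity to be zero forces the two larger laptops to share the drive speed whenever \( \lambda^{12} = 1 \).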

Some factors demand additional considerations that the current CP3 model does not explicitly address. Examples are (1) the product-module architecture and (2) the availability of module options in a modular family. For example, technologically differing/substitutable types of DVD drives are commercially available for laptops: e.g., DVD, DVD-RW, and Blu-ray Disc. Although these DVD-drive options have the same drive-speed attribute, the CP3 commonality representation would not readily apply if two laptop variants used two different DVD-drive types. Moreover, the modules in a product are often not independent of each other from a design and/or operation perspective. Inclusion/exclusion/substitution of modules can then be mutually dependent (e.g., web camera and microphone), which leads to dependent commonality matrix blocks. The current CP3 model does not address such “module interdependency,” which can be defined within the conceived product architecture. Appropriate consideration of the underlying product architecture and module options should further advance the applicability of the CP3 model and is a key area for future research.

2.2.2 Representative Illustration of the CP3 Model

The proposed CP3 model is illustrated using an example product family comprising four products, which together involve five distinct design variables. It is helpful at this point to define a generalized product platform carefully: “A product platform is said to be created when more than one product variant in a family share a particular design variable.” In this case, “sharing a design variable between two products” means that the products have the same value of the concerned design variable. Table 12.2 shows the platform plan of the sample product family. Each uppercase letter in Table 12.2 represents a platform. Blocks in Table 12.2 that display identical letters indicate that the corresponding products belong to a particular variable-based platform. A block displaying the “−” symbol represents a non-platform (scaling) design variable value, i.e., a value that is not shared by more than one product. A blank block indicates that the particular variable is not included in the corresponding product.

A product platform plan, as shown in the example (in Table 12.2), entails classifying design variables (in the entire family) into the following three categories:

1. Platform design variable: A design variable that is shared by all the different kinds of products in the family, e.g., variable \( {x_1} \) in Table 12.2.
2. Sub-platform design variable: A design variable that is shared by a particular subset of the different kinds of products in the family, leading to a subfamily, e.g., variable \( {x_5} \) in Table 12.2. Sub-platforms may also lead to multiple subfamilies with respect to a design variable, e.g., variable \( {x_3} \) in Table 12.2.
3. Non-platform design variable: A design variable that is not shared by more than one product in the family, e.g., variables \( {x_2} \) and \( {x_4} \) in Table 12.2.
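The three categories can be checked mechanically from the values of a single design variable across the family. The Python sketch below assumes that "sharing" means equal values, optionally within a tolerance `tol`. A product that does not include the variable is marked with `None`. The function name is illustrative. With `tol > 0`, the greedy grouping compares each value against the first member of a group, so the result can depend on ordering.

```python
def classify(values, tol=0.0):
    """Classify one design variable as platform, sub-platform, or non-platform.

    values: the variable's value in each product, or None if the product
            does not include the variable.
    tol:    values within tol of a group's first value count as shared.
    """
    included = [v for v in values if v is not None]
    groups = []  # each group collects values treated as one shared value
    for v in included:
        for group in groups:
            if abs(group[0] - v) <= tol:
                group.append(v)
                break
        else:
            groups.append([v])
    # Platform: every product in the family includes the variable and shares one value.
    shared_by_all = (len(values) > 1 and len(included) == len(values)
                     and len(groups) == 1)
    if shared_by_all:
        return "platform"
    # Sub-platform: at least one value is shared by two or more (but not all) products.
    if any(len(group) > 1 for group in groups):
        return "sub-platform"
    return "non-platform"
```

For four products, `[5.0, 5.0, 5.0, 5.0]` is a platform variable and `[1.0, 1.0, 2.0, 2.0]` is a sub-platform variable with two subfamilies, like \( {x_3} \). `[1.0, 2.0, 3.0, 4.0]` is a non-platform variable.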

The diagonal blocks of the commonality matrix, corresponding to each design variable for the product family illustrated in Table 12.2, are given by

The corresponding five diagonal blocks of the commonality constraint matrix (\( \varLambda \)), determined from the commonality matrix, are given by

2.2.3 The Generalized MINLP Problem

The generalized MINLP problem for a family of \( N \) products, comprising a global set of \( n \) design variables, can be expressed as

where the matrices \( \varLambda \) and \( \lambda \) are given by Eqs. 12.8 and 12.10, respectively. Although the matrix \( \lambda \) is a variable for the MINLP problem, certain diagonal elements (\( \lambda_j^{kk } \)) are known a priori (see Sect. 12.2).

3 One-Step Continuous Optimization Frameworks

3.1 Product Family Design Using Selection-Integrated Optimization (SIO)

The SIO method was introduced by Khire and Messac (2008) primarily to solve the optimization of adaptive systems. The Variable-Segregating Mapping Function (VSMF) provides a continuous approximation of the discrete problem posed by adaptive systems. In adaptive system optimization, the objective is to minimize the change/variation in the design variables while maximizing the overall system performance. The analogy between adaptive system design and product family design is evident (Khire 2006). A product family design problem can therefore be reformulated into a similar problem, where the penalty function to be minimized represents the overall variance in the design variables in the product family.

In conventional two-step PFD approaches, the difference in the values of a design variable among the product variants, \( \Delta {x_k} \), is compared with a prespecified threshold difference, \( \Delta x_k^{th } \) (Fellini et al. 2005). If \( \Delta {x_k} \) is smaller than the threshold, the corresponding design variable (\( {x_k} \)) is fixed across all product variants (a platform variable); otherwise, it is made scalable. Figure 12.2 provides a graphical representation of this mapping.

The conventional mapping is not continuous and therefore is not suitable for tractable application of gradient-based algorithms or other standard continuous optimization strategies. More importantly, the threshold value (\( \Delta x_k^{th } \)) is usually heuristically chosen, which again introduces a source of suboptimality. The Variable-Segregating Mapping Function (VSMF) overcomes these two issues. The VSMF facilitates the automatic segregation of the platform variables from the scaling design variables. By including the VSMF in the optimization problem, the SIO methodology segregates the design variables in the course of the optimization process.
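The discontinuity is easy to see in code. The Python sketch below writes the conventional two-step rule as a mapping from actual variation to mapped variation. As in the rule described above, a variation below the threshold is mapped to zero (a fixed, platform variable) and is otherwise kept unchanged. The function name and the strict inequality at the threshold are illustrative choices.

```python
def conventional_mapping(dx_act, dx_th):
    """Two-step rule: variation below the threshold is fixed (mapped to zero)."""
    return 0.0 if dx_act < dx_th else dx_act


# The mapped value jumps from 0 to the threshold value across dx_th.
jump = conventional_mapping(1.0, 1.0) - conventional_mapping(1.0 - 1e-9, 1.0)
```

The mapping is flat (zero derivative) below the threshold and jumps by the full threshold value at it. A gradient-based optimizer therefore receives no useful information about when a variable should switch between platform and scaling status.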

3.1.1 Variable-Segregating Mapping Functions (VSMF)

The Variable-Segregating Mapping Function (VSMF) is a family of continuous functions that progressively approximates the discontinuous mapping shown in Fig. 12.2. A generic VSMF is defined for all design variables. Details of the VSMF are provided in Fig. 12.3b. The VSMF is defined in terms of two normalized and nondimensional variables: (1) \( {{\overline{{\Delta x}}}_{\mathrm{ act}}} \), representing actual variation, and (2) \( {{\overline{{\Delta x}}}_{\mathrm{ map}}} \), representing mapped variation. In the case of PFD, variation represents the difference between the highest and the lowest values of a design variable among the product variants.

The VSMF is defined such that it satisfies the following properties:

1. It is a monotonically increasing smooth function (continuous first derivative).
2. The threshold value is set at \( {{\overline{{\Delta x}}}_{\mathrm{ act}}}=1 \), at point a in Fig. 12.3b.
3. At \( {{\overline{{\Delta x}}}_{\mathrm{ act}}}=0 \) (point O), \( {{\overline{{\Delta x}}}_{\mathrm{ map}}}=0 \).
4. At \( {{\overline{{\Delta x}}}_{\mathrm{ act}}}=1 \) (point b), \( {{\overline{{\Delta x}}}_{\mathrm{ map}}}=1 \).
5. For \( {{\overline{{\Delta x}}}_{\mathrm{ act}}}\geq 1 \) (segment b–c), \( {{\overline{{\Delta x}}}_{\mathrm{ map}}}={{\overline{{\Delta x}}}_{\mathrm{ act}}} \).
6. The VSMF contains a point s between \( {{\overline{{\Delta x}}}_{\mathrm{ act}}}=0 \) and \( {{\overline{{\Delta x}}}_{\mathrm{ act}}}=1 \); this point has an important property, which is discussed next.

The point s divides the VSMF into two parts, depicted by O-s and s-b in Fig. 12.3b. The coordinates of point s are governed by a special parameter \( \alpha \) as given by

By varying \( \alpha \) between 1 and 0, a family of VSMFs can be obtained—a property exploited for segregating the fixed from the adaptive design variables. It is important to note that \( \alpha \) is not a design variable in the SIO methodology and is instead a VSMF parameter that facilitates the progressive approximation of the discreteness involved in the design variable selection process. For \( \alpha =1 \), the VSMF follows the straight line O-a1-b-c in Fig. 12.3b. If the value of \( \alpha \) is progressively lowered towards zero, point s travels from point a1 to point a, thereby causing the VSMF to progressively approximate the original discontinuous mapping depicted by O-a-b-c in Fig. 12.3b. This progression bears a significant similarity to homotopy-based approaches (Watson and Haftka 1989).
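The role of \( \alpha \) can be illustrated with a simplified stand-in for the VSMF. The Python sketch below replaces the smooth O-s and s-b segments with straight lines. It therefore satisfies properties 2 to 6 but not the smoothness required by property 1. The vertical coordinate of s, \( 0.5\alpha \), follows the text. The horizontal coordinate \( 1-0.5\alpha \) is an assumption, chosen so that s lies on the line O-b when \( \alpha = 1 \) and coincides with point a, taken to be (1, 0), when \( \alpha = 0 \).

```python
def separating_point(alpha):
    """Coordinates (x_s, y_s) of point s.

    y_s = 0.5 * alpha follows the text; x_s = 1 - 0.5 * alpha is an ASSUMPTION
    that places s on the line O-b at alpha = 1 and at point a = (1, 0) at alpha = 0.
    """
    return 1.0 - 0.5 * alpha, 0.5 * alpha


def vsmf_linear(dx_act, alpha):
    """Piecewise-linear stand-in for the VSMF (kinked, not smooth, at s and b)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if dx_act < 0.0:
        raise ValueError("normalized variation must be non-negative")
    if dx_act >= 1.0:
        return dx_act                                   # segment b-c: identity
    x_s, y_s = separating_point(alpha)
    if dx_act <= x_s:
        return y_s * dx_act / x_s                       # segment O-s
    return y_s + (1.0 - y_s) * (dx_act - x_s) / (1.0 - x_s)  # segment s-b
```

At \( \alpha = 1 \) the stand-in reduces to the identity (the straight line O-a1-b-c). At \( \alpha = 0 \), every variation below the threshold maps to zero, which reproduces the discontinuous mapping O-a-b-c.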

The point s can be called the separating point. Based on this point, Khire (2006) proposed the following segregation criterion:

where \( 0.5\alpha \) is the coordinate of the separating point s along the vertical axis, which vanishes as \( \alpha \) goes to zero. If the condition in Eq. (12.17) is not satisfied, then the design variable (\( {x_k} \)) is considered to be a scaling variable.

3.1.2 Implementation of VSMF in SIO-Based PFD

In order to implement VSMF, the penalty function is redefined as

where each variable with the bar overhead represents the mapped variation in the corresponding motor design variable. The above defined penalty objective function is used as one of the objectives in the bi-objective PFD problem presented in Eq. (12.3), with the design variables modified according to the VSMF:

For the \( k \)th variable, the VSMF-based modification defined in Eq. (12.19) is performed using the following set of expressions:

In Eq. (12.21), \( {{\left( {\Delta {x_k}} \right)}_{\mathrm{ th}}} \) is the threshold value of the \( k \)th variable, which can be defined as

where \( x_k^{{\max ^*}} \) and \( x_k^{{\min ^*}} \), respectively, represent the highest and the lowest values of the \( k \)th variable among the ten motor variants, after each product has been individually optimized for maximum performance. In Eq. (12.22), \( {{\left( {{{{\overline{{\Delta x}}}}_k}} \right)}_{\mathrm{ map}}} \) is estimated from \( {{\left( {{{{\overline{{\Delta x}}}}_k}} \right)}_{\mathrm{ act}}} \) using the generic VSMF mapping, as defined in the previous section.

In SIO-based PFD, the optimal product platform plan is obtained through an iterative solution process in which the bi-objective optimization problem (Eq. 12.3) is solved at each iteration. The value of \( \alpha \) is lowered by 0.1 (i.e., \( \Delta \alpha =0.1 \)) at the beginning of every iteration except the first. It starts at \( \alpha =0.9 \) and ends at \( \alpha =0.1 \), which gives nine iterations. The optimal solution from the previous iteration serves as the starting guess for the design variables in the current iteration, and these variables are then modified using the VSMF. Figure 12.4 illustrates how the VSMF-based SIO method identifies platform and scaling variables.
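The continuation schedule and the segregation test can be sketched in Python as follows. The schedule uses the stated settings (start 0.9, step 0.1, end 0.1) and yields nine values of \( \alpha \). The form of the criterion is an assumption consistent with the description of Eq. (12.17), whose exact form is not reproduced here. A variable is classed as platform when its mapped variation does not exceed \( 0.5\alpha \), the vertical coordinate of point s. The optimization solved at each iteration is omitted.

```python
def alpha_schedule(start=0.9, stop=0.1, step=0.1):
    """Values of alpha, lowered by `step` each iteration (rounded to suppress float drift)."""
    count = round((start - stop) / step) + 1
    return [round(start - i * step, 10) for i in range(count)]


def is_platform(dx_map, alpha):
    """ASSUMED segregation criterion: mapped variation at or below 0.5 * alpha."""
    return dx_map <= 0.5 * alpha


schedule = alpha_schedule()  # [0.9, 0.8, ..., 0.1]
```

The threshold \( 0.5\alpha \) shrinks as \( \alpha \) decreases. Consequently, a mapped variation of 0.3 passes the test at \( \alpha = 0.9 \) but fails it at \( \alpha = 0.1 \), and only variables driven essentially to zero variation remain classed as platform at the final iteration.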

In Fig. 12.4, the stars show typical locations of the optimal solutions obtained in successive repetitions of the optimization problem. As shown in Fig. 12.4a, as \( \alpha \) is lowered in each repetition, the design variables that will eventually be fixed (platform variables) move closer to segment O-a0 on their corresponding VSMFs. On segment O-a0, the mapped change in the design variable is zero; this segment therefore represents a fixed design variable. Likewise, with each repetition, the scaling design variables move toward or beyond point b (see Fig. 12.4b). Hence, the SIO methodology segregates the platform variables from the scaling variables within the optimization process. It thereby avoids the suboptimality introduced when selection and optimization are performed as separate steps.

3.2 Comprehensive Product Platform Planning (CP3) Optimization

The combination of binary variables (\( \lambda_j^{kl } \)) and continuous design variables (\( x_j^k \)) in the CP3 model presents a classical mixed-integer problem. The large number of binary commonality variables (\( \lambda_j^{kl } \)) in the platform planning model demands extensive computational resources. A new Platform Segregating Mapping Function (PSMF) is therefore proposed to convert the mixed-integer problem into a tractable continuous optimization problem. Unlike the VSMF, the PSMF avoids the “all or none” assumption. Chowdhury et al. (2011) subsequently implemented Particle Swarm Optimization (PSO) to solve the resulting continuous optimization problem.

3.2.1 Platform Segregating Mapping Function (PSMF)

Prior to the investigation of this new solution methodology, the commonality constraint (from Eq. 12.8) is reformulated as

where \( \varepsilon \) is the aggregate tolerance specified to allow for platform creation. A careful analysis of the modified commonality constraint (Eq. 12.24) indicates that, for any two products \( k \) and \( l \), a commonality matrix variable (\( \lambda_j^{kl } \)) is a decreasing function of the square of the corresponding design variable difference, \( \Delta x_j^{kl }=\left| {x_j^k-x_j^l} \right| \). At the same time, the design variable differences between various pairs of products are often not independent of each other. A function representing the commonality between two products (\( k \) and \( l \)) with respect to a particular design variable (\( j \)) is therefore analogous to a function relating the commonality variable \( \lambda_j^{kl } \) to the design variables \( x_j^k \) and \( x_j^l \). Such a function must have the following properties:

1. The function must be continuous (defined at \( \Delta x_j^{kl }=0 \)) and well behaved.
2. The function must have a maximum at \( \Delta x_j^{kl }=0 \) and must decrease as \( \Delta x_j^{kl } \) increases, asymptotically tending to zero.
3. These functions, collectively, must allow a coherent consideration of the commonalities between various pairs of products with respect to a particular design variable.

A set of Gaussian distribution functions, collectively called the Platform Segregating Mapping Function (PSMF), is developed to approximate the relationship between the commonality variables and the corresponding physical design variables. The required properties listed above are inherent in this set of Gaussian functions. Interestingly, each Gaussian function in the set also provides a measure of the probability (\( p_{kl}^j \)) that the \( j \)th design variable is shared between product-\( k \) and product-\( l \). Other functions with similar properties can also be used to construct the PSMF. The PSMF that relates product-\( k \) and product-\( l \) with respect to the \( j \)th design variable is given by

\[ p_{kl}^j=a\exp \left( {-\frac{{{{\left( {x_j^k-x_j^l} \right)}^2}}}{{2\sigma_j^2}}} \right) \qquad (12.25) \]

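where the coefficient \( a \) is assumed to be equal to 1. In Eq. (12.25), the design variable value (\( x_j^l \)) serves as the mean of the Gaussian kernel, and the parameter \( {\sigma_j} \) represents the standard deviation of the Gaussian kernel for the \( j \)th design variable. This standard deviation can be determined by specifying the full width at one-tenth maximum (\( \Delta {x_{10 }} \)), as expressed by

\[ p\left( {x_j^l\pm \frac{\Delta {x_{10 }}}{2}} \right)=\frac{1}{10}\,p\left( {x_j^l} \right)\quad \Rightarrow \quad {\sigma_j}=\frac{\Delta {x_{10 }}}{2\sqrt{2\ln 10}}\approx \frac{\Delta {x_{10 }}}{4.29} \]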

where \( p(x) \) represents the probability at \( x \). Subsequently, the commonality matrix can be expressed as

A representative plot of the PSMF for a particular design variable (\( j \)th variable, normalized), in a sample family of five products, is shown in Fig. 12.5. In this figure, the design variable for product-\( k \), \( x_j^k \), can be mapped onto the Gaussian kernel of any other product-\( l \) (where \( l\ne k \)), yielding the corresponding commonality variable, \( \lambda_j^{kl } \). By providing a pairwise representation of commonality among products, the PSMF mapping allows a product platform to be formed whenever a design variable value is the same across more than one product. It can be readily observed that this set of distribution functions (PSMF) provides a unique representation of the product commonalities by converting an essentially combinatorial problem into a tractable continuous problem. This representation can also be used to further investigate the underlying mathematics of product platform planning.
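The PSMF kernel, the standard deviation implied by a given full width at one-tenth maximum, and the resulting pairwise kernel values for a single design variable can be sketched as follows. This is an illustrative sketch, not the code of Chowdhury et al. (2011): the function names are hypothetical, the five design variable values are made up to mimic the kind of family shown in Fig. 12.5, and the raw kernel value is used directly as the pairwise commonality measure (any further processing in the chapter's commonality-matrix expression is not reproduced).

```python
import math
from typing import List, Sequence


def sigma_from_fwtm(dx10: float) -> float:
    """Standard deviation of a Gaussian whose full width at one-tenth maximum is dx10."""
    # exp(-(dx10 / 2)**2 / (2 * sigma**2)) = 1/10  =>  sigma = dx10 / (2 * sqrt(2 ln 10))
    return dx10 / (2.0 * math.sqrt(2.0 * math.log(10.0)))


def psmf(x_k: float, x_l: float, sigma: float, a: float = 1.0) -> float:
    """Gaussian kernel with mean x_l, evaluated at x_k (coefficient a = 1 as in the text)."""
    return a * math.exp(-((x_k - x_l) ** 2) / (2.0 * sigma ** 2))


def pairwise_kernel_matrix(x: Sequence[float], sigma: float) -> List[List[float]]:
    """Kernel value for every ordered pair (k, l) of products, for one design variable."""
    return [[psmf(x_k, x_l, sigma) for x_l in x] for x_k in x]


if __name__ == "__main__":
    # Hypothetical normalized values of one design variable in a five-product family.
    x = [0.10, 0.12, 0.50, 0.52, 0.90]
    sigma = sigma_from_fwtm(0.1)
    for row in pairwise_kernel_matrix(x, sigma):
        print(" ".join(f"{v:5.2f}" for v in row))
```

In the printed matrix, products whose values are close (0.10 and 0.12, or 0.50 and 0.52) receive kernel values well above zero, while distant pairs receive values that are effectively zero.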

In the CP3 optimization, an optimal design that maximizes performance is first obtained separately for each product variant in the family. The optimized design variable values, thus determined, are used to set a modified range, \( \Delta {x_j} \), for the application of the PSMF on each design variable (\( j \)th design variable), similar to the approach in the SIO technique (Khire and Messac 2008). This modified range is used to evaluate the full width at one-tenth maximum (\( {{\left( {\Delta {x_{10 }}} \right)}_j} \)) for the \( j \)th design variable, using

\[ {{\left( {\Delta {x_{10 }}} \right)}_j}={{\overline{{\Delta x}}}_{10 }}\,\Delta {x_j} \]

where \( {{\overline{{\Delta x}}}_{10 }} \) represents the normalized full width at one-tenth maximum, which is explicitly specified during the execution of the algorithm.
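The preprocessing step described above, in which the individually optimized designs define a modified range for each variable, can be sketched as follows. The sketch assumes that the per-variable width is the normalized width multiplied by the modified range, \( (\Delta x_{10})_j=\overline{\Delta x}_{10}\,\Delta x_j \); the function names and the sample design values are hypothetical.

```python
from typing import List, Sequence, Tuple


def modified_limits(designs: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """(lowest, highest) value of each design variable over the individually optimized products.

    designs[k][j] is design variable j of product k, optimized on its own.
    """
    # zip(*designs) regroups the values column by column, i.e., per design variable.
    return [(min(column), max(column)) for column in zip(*designs)]


def fwtm_per_variable(limits: Sequence[Tuple[float, float]], dx10_bar: float) -> List[float]:
    """Assumed per-variable width: (Delta x_10)_j = dx10_bar * (highest_j - lowest_j)."""
    return [dx10_bar * (hi - lo) for lo, hi in limits]


if __name__ == "__main__":
    # Hypothetical individually optimized designs: 3 products, 2 design variables.
    designs = [[1.0, 10.0], [3.0, 12.0], [2.0, 16.0]]
    limits = modified_limits(designs)
    print(limits, fwtm_per_variable(limits, 0.5))
```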

The CP3 model is solved using a sequence of \( {N_{\mathrm{ stage}}} \) Particle Swarm Optimizations (PSOs), with the parameter \( {{\overline{{\Delta x}}}_{10 }} \) decreasing in a geometric progression. This multistage optimization yields sharper Gaussian kernels with every subsequent stage, so that the commonality constraint is applied progressively more strictly; this process is illustrated in Fig. 12.6. This figure shows a five-stage application of the PSMF, with specified initial and final normalized full widths at one-tenth maximum of \( {{\overline{{\Delta x}}}_{10}}^{\max }=1.0 \) and \( {{\overline{{\Delta x}}}_{10}}^{\min }=0.1 \), respectively. In the final stage, design variable values (e.g., \( x_j^k \) and \( x_j^l \)) residing within the same Gaussian kernel (the sharp dome in Fig. 12.6) indicate, in practice, that the corresponding products (product-\( k \) and product-\( l \)) share the \( j \)th design variable.
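The stage-wise reduction of \( \overline{\Delta x}_{10} \) described above is a geometric progression between the specified maximum and minimum widths. A minimal sketch, with a hypothetical function name, follows; it only generates the schedule and leaves the per-stage PSO run out.

```python
from typing import List


def geometric_schedule(v_max: float, v_min: float, n_stage: int) -> List[float]:
    """n_stage values decreasing geometrically from v_max to v_min."""
    if n_stage == 1:
        return [v_min]
    # Common ratio chosen so that the last stage lands exactly on v_min.
    ratio = (v_min / v_max) ** (1.0 / (n_stage - 1))
    return [v_max * ratio ** i for i in range(n_stage)]


if __name__ == "__main__":
    # Five stages between 1.0 and 0.1, as in the example described in the text.
    print([round(v, 3) for v in geometric_schedule(1.0, 0.1, 5)])
```

For the five-stage example, the common ratio is \( 0.1^{1/4}\approx 0.562 \), giving widths of approximately 1.0, 0.562, 0.316, 0.178, and 0.1; since \( \sigma_j \) is proportional to the width, each stage sharpens the kernels by the same factor.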

Optimization is performed on the continuous approximation of the MINLP problem to minimize a simplified weighted sum of the performance and cost objectives. The modified optimization problem is defined as

In this problem definition, a value of 0.5 is used for the objective weight \( {w_1} \), and the term \( \mathrm{ PSMF}(X) \) is given by Eq. (12.25). The objective \( {f_{\mathrm{ per}}} \) is given by Eq. (12.4), and the objective \( {f_{\mathrm{ cost}}} \) is evaluated using a cost decay function that accounts for the volume of production; details of this function can be found in Chowdhury et al. (2011). As is well known, the weighted-sum method cannot capture Pareto-optimal solutions that lie on non-convex portions of the Pareto frontier. Importantly, the CP3 optimization approach can also be implemented using other powerful methods that aggregate multiple objectives into one, such as Physical Programming (Messac et al. 2002a, b). The process of applying the PSMF technique using PSO is summarized by the pseudocode in Fig. 12.7.
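The limitation of the weighted-sum method mentioned above can be seen on a toy bi-objective problem with three Pareto-optimal points, where the middle point lies on a non-convex part of the frontier. This example is hypothetical and unrelated to the motor problem; both objectives are minimized, and the weight `w1` plays the role of \( w_1 \).

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (f1, f2), both objectives minimized


def dominates(p: Point, q: Point) -> bool:
    """True if p is no worse than q in both objectives and better in at least one."""
    return p[0] <= q[0] and p[1] <= q[1] and p != q


def weighted_sum_choice(points: Sequence[Point], w1: float) -> Point:
    """Point minimizing the aggregate w1 * f1 + (1 - w1) * f2."""
    return min(points, key=lambda p: w1 * p[0] + (1.0 - w1) * p[1])


if __name__ == "__main__":
    # Hypothetical Pareto set; B bulges above the line joining A and C.
    A, B, C = (0.0, 1.0), (0.6, 0.6), (1.0, 0.0)
    pts = [A, B, C]
    assert not any(dominates(p, B) for p in pts)  # B is Pareto-optimal
    picked = {weighted_sum_choice(pts, i / 100) for i in range(101)}
    print(picked)  # B never appears
```

For every weight, the aggregate value of point B (0.6) exceeds that of A or C (at most 0.5), so B is never selected even though no other point dominates it.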

3.2.2 Choice of Optimization Algorithm

The optimization process in the CP3 method is performed using an effective variation of the standard Particle Swarm Optimization (PSO) algorithm. Particle Swarm Optimization is one of the most popular heuristic optimization algorithms, introduced by Kennedy and Eberhart (1995). Conceptually, the search characteristics of PSO mimic the natural collective movement of animals, such as bird flocks, bee swarms, and fish schools. In the recent literature, PSO has been used to address various aspects of product family design (Yadav et al. 2008; Moon et al. 2011).
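For readers unfamiliar with PSO, the basic global-best algorithm with an inertia weight can be sketched as follows. This is the textbook form of the method, not the specific PSO variation used in the CP3 work; the parameter values (`w`, `c1`, `c2`, swarm size, iteration count) are common illustrative choices assumed here, and out-of-bound positions are simply clipped to the variable limits.

```python
import random
from typing import Callable, List, Sequence, Tuple


def pso(f: Callable[[List[float]], float], lower: Sequence[float], upper: Sequence[float],
        n_particles: int = 30, n_iter: int = 200, w: float = 0.7,
        c1: float = 1.5, c2: float = 1.5, seed: int = 0) -> Tuple[List[float], float]:
    """Minimize f over a box with a basic global-best, inertia-weight PSO."""
    rng = random.Random(seed)
    dim = len(lower)
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                    # best position found by each particle
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]   # best position found by the swarm
    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + attraction to personal best + attraction to swarm best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move, then clip back into the variable limits.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lower[d]), upper[d])
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)
    print(pso(sphere, [-5.0] * 3, [5.0] * 3))
```

The sphere function is only a smoke test; in CP3 the function being minimized would be the PSMF-based aggregate objective.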

It is, however, important to note that neither the optimization of the CP3 model nor the application of the PSMF-based solution strategy is restricted to the PSO algorithm. Owing to the generic formulation of the PFD process provided by the CP3 model, the approximated continuous optimization problem obtained using the PSMF technique can also be solved using other standard algorithms, such as sequential quadratic programming (SQP), the real-coded NSGA-II algorithm (Deb et al. 2002), the strength Pareto evolutionary algorithm (SPEA: Zitzler et al. 2004), the differential evolution algorithm (Price et al. 2005), and the single-objective modified predator–prey (MPP) algorithm (Chowdhury and Dulikravich 2010).

Population-based heuristic algorithms are, however, preferable in this case, since the commonality constraint is expected to be multimodal. Heuristic algorithms such as NSGA-II (Deb et al. 2002) and MPP (Chowdhury et al. 2009) are also well suited to multi-objective optimization; these algorithms can be leveraged to explore a bi-objective optimization scenario with performance and cost as separate objectives. The original MINLP problem yielded by the CP3 model can also be solved directly using standard MINLP techniques, such as branch-and-bound and cutting-plane methods, or using binary genetic algorithms (e.g., bin-NSGA-II). However, solving the MINLP problem directly may prove to be unreasonably challenging and computationally expensive owing to the high dimensionality of the commonality variables.
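The dimensionality argument above can be made concrete by counting commonality variables. The sketch assumes that the commonality matrix for each design variable is symmetric, so only pairs with \( k<l \) are independent. As an illustration not taken from the chapter, it also counts the platform plans that are transitively consistent (if product-\( k \) shares a variable with product-\( l \), and product-\( l \) with product-\( m \), then \( k \) shares it with \( m \)); these correspond to set partitions of the products and are counted by Bell numbers. The function names are hypothetical.

```python
from typing import List


def n_pairwise_commonality_vars(n_vars: int, n_products: int) -> int:
    """Independent binary variables lambda_j^{kl} with k < l, assuming a symmetric matrix."""
    return n_vars * n_products * (n_products - 1) // 2


def bell(n: int) -> int:
    """Bell number B_n (ways to partition n products into sharing groups), via the Bell triangle."""
    row: List[int] = [1]
    for _ in range(n):
        # Each new row starts with the last entry of the previous row; every next
        # entry adds the entry above-left to its left neighbour.
        nxt = [row[-1]]
        for v in row:
            nxt.append(nxt[-1] + v)
        row = nxt
    return row[0]


if __name__ == "__main__":
    n_vars, n_products = 7, 10  # motor family: 7 platform-planning variables, 10 motors
    print(n_pairwise_commonality_vars(n_vars, n_products), "binary commonality variables")
    print(bell(n_products) ** n_vars, "transitively consistent platform plans")
```

For the motor family (seven variables, ten motors), this gives 315 binary commonality variables; even when restricted to consistent plans, there are \( 115975^7 \) (about \( 2.8\times 10^{35} \)) combinations, far too many to enumerate.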

4 Application to Family of Electric Motors

4.1 Application of the SIO Method

The SIO method was applied by Khire (2006) to design a family of ten electric motors that satisfy ten different torque requirements, as specified in the book-chapter by Simpson (2006). Sequential quadratic programming (SQP) was used to perform the optimization. The SIO method converged to a product family design in which the variables \( {A_{wa }} \), \( {A_{wf }} \), \( {N_s} \), \( {N_c} \), \( L \), and \( t \) were shared by all the motors (i.e., platform variables), and the variable \( {r_0} \) scaled across the family (scaling variable). The design variable values of the ten motors in the optimized family are provided in Table 12.3 [reported by Khire (2006)]. In this table, the \( i \)th motor is denoted by M-\( i \); the variables \( {A_{wa }} \) and \( {A_{wf }} \) are expressed in mm²; the variables \( L \), \( {r_0} \), and \( t \) are expressed in cm; and the variable \( I \) is expressed in amperes (A).

The overall platform plan of the motors obtained by the SIO method is similar to that obtained using the two-stage method [described in Sect. 3.3 of the book-chapter by Simpson (2006)]. Motor designers have confirmed that varying the stator radius is indeed one of the most effective ways of achieving torque variations among motor variants. However, according to Black and Decker [Sect. 3.3 of the book-chapter by Simpson (2006)], varying the stack length is generally more cost-effective from a manufacturing perspective.

Khire (2006) also investigated the robustness of the SIO methodology by analyzing its application for different starting values of the VSMF parameter \( \alpha \) and for different values of the specified step between iterations (\( \Delta \alpha \)). Khire (2006) concluded that, to save computation time, it is advisable to start at \( \alpha =0.9 \) and to terminate the iterations once the trend of design variable segregation becomes apparent. The value of \( \Delta \alpha \) must be selected to satisfy two criteria: (1) convergence of the solution and (2) manageable computation cost. Based on numerical experimentation, Khire (2006) suggested a favorable range of 0.06 to 0.1 for \( \Delta \alpha \). Note that these suggested values of the initial \( \alpha \) and of \( \Delta \alpha \) were derived for the family of motors and are therefore not necessarily universal.

4.2 Application of the CP3 Method

The CP3 method was applied by Chowdhury et al. (2011) to design the same family of ten electric motors (with ten different torque requirements) specified in the book-chapter by Simpson (2006). Initially, optimization is performed on each motor individually (using PSO) to maximize the individual motor efficiency, subject only to the physical design constraints (specified in Eq. 12.3). A new set of variable limits is then determined from the highest and lowest values of each optimized design variable among the ten motors. These new design variable limits are used to execute steps 4 to 9 of the pseudocode given in Fig. 12.7.

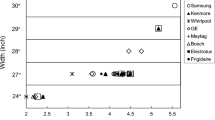

The optimized platform plan obtained for the motor family is illustrated in Table 12.4. Each uppercase letter in Table 12.4 represents a platform; blocks displaying the same letter imply that the corresponding products are members of that platform (i.e., they share the corresponding design variable). A block displaying the “−” symbol represents a scaling design variable value, implying that the corresponding design variable value is not shared with any other product. The efficiencies of the motors in the optimized family are also provided in Table 12.4.

Ten sub-platforms are formed in the motor family. The design of Motor-10 is completely unique (no variable is shared with other motors). On the other hand, Motor-3 shares the largest number of variables with one or more other motors. None of the design variables is shared across all the products. The stator thickness exhibits a strong platform property: Motor-1 and Motor-3 have the same stator thickness, and Motor-2 and Motor-4 to Motor-9 have the same stator thickness. The number of wire turns on each field pole is different for each motor, making it a typical scaling variable.
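The number of unique parts used in the commonality comparison can be read off a platform-plan table of the kind described above. The sketch below is a hypothetical helper, assuming that platform letters are interpreted separately for each design variable and that each “−” entry (written as an ASCII "-" in the code) contributes one part of its own; the stator-thickness row encodes the sharing pattern described in the text, with arbitrary letters.

```python
from typing import Dict, List


def count_unique_parts(plan: Dict[str, List[str]]) -> int:
    """Unique parts in a family, given a platform-plan table.

    plan[var][k] is a platform letter for product k, or "-" if product k's
    value of that variable is not shared with any other product.
    """
    u = 0
    for row in plan.values():
        platforms = {entry for entry in row if entry != "-"}
        unshared = sum(1 for entry in row if entry == "-")
        # Each platform contributes one part; each unshared entry contributes its own part.
        u += len(platforms) + unshared
    return u


if __name__ == "__main__":
    # Stator thickness: Motor-1 and Motor-3 share; Motor-2 and Motor-4..9 share; Motor-10 unique.
    t_row = ["A", "B", "A", "B", "B", "B", "B", "B", "B", "-"]
    print(count_unique_parts({"t": t_row}))
```

The stator-thickness row contributes three parts (two platforms plus the unshared value of Motor-10), and an “all or none” plan with six shared variables and one scaling variable across ten motors contributes \( 6+10=16 \) parts.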

4.3 Comparison of CP3 and SIO Results

The optimal motor family produced by SIO offered a significantly higher degree of commonality than that produced by CP3. On the other hand, the efficiencies of the ten individual motors are higher in the family yielded by CP3. Note that SIO and CP3 involve different commonality objectives; hence, a direct comparison of the performances of these two methods is not feasible.

A comparison of their results can, however, be made if the commonality in the final optimized motor families is expressed in terms of the standard commonality index [CI (Martin and Ishii 1996)], which is mathematically defined as

\[ \mathrm{CI}=1-\frac{u-\max \left( {{n_k}} \right)}{\sum\nolimits_k {{n_k}} -\max \left( {{n_k}} \right)} \]

where \( u \) represents the actual number of unique parts in the entire product family and \( {n_k} \) represents the number of parts in the \( k \)th product. The “\( -\max \left( {{n_k}} \right) \)” term is included in the definition to ensure that the CI varies between 0 and 1. According to this definition:

- When the product variants in a family are identical (i.e., all parts are shared among all products), the value of CI is a maximum of 1.
- When the product variants are completely different from each other (i.e., no parts are shared among the product variants), the value of CI is a minimum of zero.

In the case of the motor family, each motor variant comprises seven variables that participate in platform planning, i.e., \( {n_k}=7 \). For the optimized motor family obtained by the SIO method, the actual number of unique parts (\( u \)) is 16. The actual number of unique parts (\( u \)) in the optimized motor family obtained by the CP3 method is 52. In order to facilitate easy comparison, the results of the optimized motor families obtained by the two methods are summarized in Table 12.5.
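With the commonality index written in the standard Martin and Ishii form, \( \mathrm{CI}=1-(u-\max n_k)/(\sum_k n_k-\max n_k) \), the comparison above reduces to a short calculation. The function name is hypothetical; the inputs are the values stated in the text (\( n_k=7 \) for each of ten motors, \( u=16 \) for SIO, and \( u=52 \) for CP3).

```python
from typing import Sequence


def commonality_index(u: int, n_parts: Sequence[int]) -> float:
    """Martin and Ishii (1996) commonality index: 1 - (u - max n_k) / (sum n_k - max n_k)."""
    total, largest = sum(n_parts), max(n_parts)
    return 1.0 - (u - largest) / (total - largest)


if __name__ == "__main__":
    n_parts = [7] * 10  # ten motors, seven platform-planning variables each
    ci_sio = commonality_index(16, n_parts)
    ci_cp3 = commonality_index(52, n_parts)
    print(f"CI(SIO) = {ci_sio:.3f}, CI(CP3) = {ci_cp3:.3f}, ratio = {ci_sio / ci_cp3:.2f}")
```

This gives CI ≈ 0.857 for SIO and CI ≈ 0.286 for CP3, i.e., a ratio of 3.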

Table 12.5 shows that the average motor efficiency of the optimized family obtained by CP3 is approximately 9% higher than that obtained by SIO. On the other hand, the commonality (in terms of CI) of the optimized motor family obtained by SIO is three times that obtained by CP3. Hence, the optimized motor families obtained by the two methods are trade-off solutions with respect to each other. It is also important to note that both methods use an aggregate objective function in which the performance objective has a weight of 0.5 (the second objective being different in each method); if lower weights are used for the performance objective, the total number of unique parts in the optimized family is expected to decrease in both methods.

Overall, since the CP3 method is not restricted by the “all or none” assumption (as the results in Table 12.4 show), it is applicable to a wider variety of commercial product families than SIO. An “all or none” approach also demands a greater compromise in product performance to achieve commonality among products, as was the case when the SIO method was applied. However, the optimization involved in the SIO method is more tractable than that involved in the CP3 method, since the latter yields multimodal commonality constraints; this attribute makes SIO relatively easier to implement. Therefore, SIO can be a particularly useful product family design method when the user desires an “all or none” platform plan.

5 Closing Remarks

Quantitative design of product families should be an integral part of the entire concept-to-shelf process for a line of products. Unfortunately, the planning of product platforms together with the quantification of the individual product attributes is often a complex and system-dependent mathematical problem, involving a mix of integer and continuous variables and highly nonlinear functions. Simplification of the product platform planning process and the development of generic protocols are therefore necessary to take quantitative PFD approaches from research labs to industrial applications.

This chapter summarizes the formulation of two methods that seek to develop and solve a more tractable continuous approximation of the PFD problem. The Selection-Integrated Optimization (SIO) method uses a continuous mapping function to quantify the tendency of a variable to become common among products. An optimization-based iterative process is used to simultaneously segregate the design variables into platform and scaling types and quantify the optimal variable values. Similarly, the Comprehensive Product Platform Planning (CP3) method uses a set of continuous kernel functions to map each design variable’s tendency towards commonality for each pair of products in the family. Together with the mapping scheme, Particle Swarm Optimization is implemented through a multistage process to segregate the design variables into platform and scaling types. The pairwise mapping strategy allows the CP3 method to avoid the “all or none” assumption. More importantly, the CP3 method formulates a generic product platform planning model, one that can be solved using any standard continuous optimization method.

The conversion of a complex PFD problem into a tractable continuous form helps the designer better exploit the potential of quantitative optimization. We encourage interested researchers to build on the foundation laid down by these two PFD methods and develop more comprehensive (yet tractable) one-step approaches that can be readily applied to a wider variety of problems.

References

Chen C, Wang LA (2008) Modified genetic algorithm for product family optimization with platform specified by information theoretical approach. J Shanghai Jiaotong Univ (Sci) 13:304–311

Chowdhury S, Dulikravich GS (2010) Improvements to single-objective constrained predator–prey evolutionary optimization algorithm. Struct Multidiscip Optim 41:541–554

Chowdhury S, Dulikravich GS, Moral RJ (2009) Modified predator–prey algorithm for constrained and unconstrained multi-objective optimisation. Int J Math Model Numer Optim 1:1–38

Chowdhury S, Messac A, Khire R (2011) Comprehensive product platform planning (CP3) framework. ASME J Mech Des (special issue on Designing Complex Engineered Systems) 133:101004-1–101004-15

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multi-objective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6:182–197

Fellini R, Kokkolaras M, Papalambros PY, Perez-Duarte A (2005) Platform selection under performance bounds in optimal design of product families. ASME J Mech Des 127:524–535

Jiao J, Zhang Y, Wang Y (2007a) A generic genetic algorithm for product family design. J Intell Manuf 18:233–237

Jiao J, Simpson TW, Siddique Z (2007b) Product family design and platform-based product development: a state-of-the-art review. J Intell Manuf 18:5–29

Kennedy J, Eberhart RC (1995) Particle swarm optimization. IEEE Int Conf Neural Netw 4:1942–1948

Khajavirad A, Michalek JJ (2008) A decomposed gradient-based approach for generalized platform selection and variant design in product family optimization. ASME J Mech Des 130:071101-1–071101-8

Khajavirad A, Michalek JJ, Simpson TW (2009) An efficient decomposed multiobjective genetic algorithm for solving the joint product platform selection and product family design problem with generalized commonality. Struct Multidiscip Optim 39:187–201

Khire R (2006) Selection-integrated optimization (SIO) methodology for adaptive systems and product family optimization. PhD Thesis, Rensselaer Polytechnic Institute, Troy, NY

Khire R, Messac A (2008) Selection-integrated optimization (SIO) methodology for optimal design of adaptive systems. ASME J Mech Des 130:101401-1–101401-13

Khire R, Messac A, Simpson TW (2006) Optimal design of product families using selection integrated optimization (SIO) methodology. In: 11th AIAA/ISSMO multidisciplinary analysis and optimization conference, AIAA-2006-6924, Portsmouth, VA, September

Martin M, Ishii K (1996) Design for variety: a methodology for understanding the costs of product proliferation. In: ASME design engineering technical conferences and computers in engineering conference, ASME, Irvine, CA, 96-DETC/DTM-1610

Messac A, Martinez MP, Simpson TW (2002a) Effective product family design using physical programming. Eng Optim 34:245–261

Messac A, Martinez MP, Simpson TW (2002b) Introduction of a product family penalty function using physical programming. ASME J Mech Des 124:164–172

Moon SK, Park KJ, Simpson TW (2011) Platform strategy for product family design using particle swarm optimization. In: ASME 2011 international design engineering technical conferences, Washington, DC

Price K, Storn RM, Lampinen JA (2005) Differential evolution: a practical approach to global optimization, 1st edn. Springer, New York

Simpson TW (2006) Methods for optimizing product platforms and product families. In: Simpson TW, Siddique Z, Jiao J (eds) Product platform and product family design. Springer, New York, pp 133–156

Simpson TW, Siddique Z, Jiao RJ (2006) Platform-based product family development. In: Simpson TW, Siddique Z, Jiao RJ (eds) Product platform and product family design. Springer, New York, pp 1–15

Watson LT, Haftka RT (1989) Modern homotopy methods in optimization. Comput Meth Appl Mech Eng 74:289–305

Yadav SR, Dashora Y, Shankar R, Chen FTS, Tiwari MK (2008) An interactive particle swarm optimisation for selecting a product family and designing its supply chain. Int J Comput Appl Technol 31:168–186

Zitzler E, Laumanns M, Bleuler S (2004) A tutorial on evolutionary multiobjective optimization. In: Metaheuristics for multiobjective optimisation. Springer, Berlin, pp 3–37

Acknowledgements

This work has been supported by the National Science Foundation under Awards no. CMMI 0946765 and CMMI 1100948. Any opinions, findings, conclusions, and recommendations presented in this chapter are those of the authors and do not reflect the views of the National Science Foundation.

Copyright information

© 2014 Springer Science+Business Media New York

About this chapter

Cite this chapter

Messac, A., Chowdhury, S., Khire, R. (2014). One-Step Continuous Product Platform Planning: Methods and Applications. In: Simpson, T., Jiao, J., Siddique, Z., Hölttä-Otto, K. (eds) Advances in Product Family and Product Platform Design. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-7937-6_12

DOI: https://doi.org/10.1007/978-1-4614-7937-6_12

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-7936-9

Online ISBN: 978-1-4614-7937-6
