Abstract

Fast and accurate models are indispensable in contemporary microwave engineering. Kernel-based machine learning methods applied to the modeling of microwave structures have recently attracted substantial attention; these include support vector regression and Gaussian process regression. Among them, Bayesian support vector regression (BSVR) with automatic relevance determination (ARD) has proved to perform particularly well when modeling input characteristics of microwave devices. In this chapter, we apply BSVR to the modeling of microwave antennas and filters. Moreover, we discuss a more efficient version of BSVR-based modeling exploiting variable-fidelity electromagnetic (EM) simulations, where coarse-discretization EM simulation data is used to find a reduced number of fine-discretization training points for establishing a high-fidelity BSVR model of the device of interest. We apply the BSVR models to design optimization. In particular, embedding the BSVR model obtained from coarse-discretization EM data into a surrogate-based optimization framework exploiting space mapping yields an optimized design at a low computational cost corresponding to a few evaluations of the high-fidelity EM model of the considered device. The presented techniques are illustrated using several examples of antennas and microstrip filters.

Keywords

- Bayesian support vector regression

- Computer-aided design (CAD)

- Electromagnetic (EM) simulation

- Microwave engineering

- Space mapping

- Surrogate-based optimization

- Surrogate

1 Introduction

Full-wave electromagnetic (EM) simulations based on the method of moments and/or finite elements play a ubiquitous part in microwave engineering, as they permit highly accurate evaluation of microwave structures such as planar antennas and filters. Such simulations, however, are costly in computational terms, and their use for tasks requiring numerous analyses (e.g., statistical analysis and parametric design optimization) might become infeasible under certain conditions (for instance, a genetic algorithm optimization might necessitate thousands of full-wave analyses of candidate geometries of the structure to be optimized). Hence, surrogate models are used instead. Trained on a training set consisting of a limited number of input-output pairs (such as adjustable antenna geometry parameters and frequency as input, and the magnitude of the input reflection coefficient |S 11| obtained from full-wave simulations as output), these models, by virtue of their ability to generalize over the input space, make it possible to quickly obtain the desired performance characteristics for inputs not previously presented to the model.

The kernel-based machine learning method most widely used for microwave modeling tasks has been support vector regression (SVR) utilizing an isotropic Gaussian kernel [1]. It has recently been shown [2] that Bayesian support vector regression (BSVR) [3] using a Gaussian kernel with automatic relevance determination (ARD) significantly outperforms the above standard SVR with an isotropic kernel in modeling |S 11| versus the frequency of CPW-fed slot antennas with multiple tunable geometry variables. BSVR is in essence a version of Gaussian process regression (GPR) [4]; the Bayesian framework enables efficient training of the multiple hyperparameters of the ARD kernel by minimizing the negative log probability of the data given the hyperparameters. Such training of multiple hyperparameters is intractable under standard SVR, which employs a grid-search/cross-validation approach towards this end. In addition to its advantageous Bayesian-based features, BSVR also exhibits certain desirable properties of standard SVR, such as quadratic programming and sparseness of solutions, i.e., solutions that are fully characterized by the set of SVs, which is a subset of the training set.

In this chapter, we explore BSVR within both global and local modeling contexts. Global, or “library-type,” surrogate models aim at giving accurate predictions over the entire input space, and can be used for a variety of applications (e.g., optimization and statistical analysis). In contrast, local/trust region models only apply to a subregion of input space specified by the optimization algorithm within which the model usually is embedded.

Similar to many other global modeling methods, a drawback of BSVR is the high starting cost of gathering the fine-discretization full-wave simulation data necessary to train the model so that it has high predictive accuracy. We address this problem by exploiting the sparseness property of BSVR to reduce the amount of expensive high-fidelity data required for training (see Sects. 3–5). Earlier methods aimed at optimal data selection for microwave modeling problems include various adaptive sampling techniques that aim, within optimization contexts, to reduce the number of samples necessary to ensure the desired modeling accuracy. This is done by iterative identification of the model and the addition of new training samples based on the actual model error at selected locations (e.g., [5]) or expected error values (statistical infill criteria, e.g., [6]); [5, 6] were local/trust region models.

Our approach entails first training an auxiliary BSVR model using fast, inexpensive coarse-discretization data selected by means of traditional experimental design procedures, and then taking the support vectors of this model simulated at a high mesh density as training data for the actual (high-fidelity) BSVR model. (A similar approach was adopted in [7], but only standard SVR with an isotropic kernel was used to model comparatively uncomplicated underlying functions.) The role of the auxiliary model can be viewed as locating regions of the design space where more samples are needed compared to other regions—for example, because the response is more variable with respect to the design and/or frequency variables. Our modeling approach is demonstrated using both planar antenna and microstrip filter examples (see Sects. 4 and 5, respectively). We also evaluate the accuracy of our reduced-data BSVR surrogates by using them within a space mapping (SM) optimization framework.

As to local BSVR modeling, we consider surrogates for variable-fidelity EM-driven optimization (see Sect. 6). In this approach, the optimization is carried out using SM, whereas the underlying coarse model is created by approximating coarse-discretization EM simulation data using BSVR. The high-fidelity EM simulation is only launched to verify the design produced by the space-mapped BSVR coarse model and obtain the data for its further correction. This allows us to significantly reduce the computational cost of the design optimization process as illustrated using two antenna examples.

The above sections are preceded by a short overview in Sect. 2 of the BSVR framework, and followed by some summary remarks (see Sect. 7).

2 Modeling Using Bayesian Support Vector Regression

In this section, we briefly give an overview of the formulation of Bayesian support vector regression (BSVR).

Consider a training data set of n observations, \(\mathcal {D} = \{(\boldsymbol{u}_{i}, y_{i} )\mid i = 1, \dots, n\}\). The BSVR formulation, which is explained at length in [3], follows the standard Bayesian regression framework for GPR in which training targets \(y_{i}\) corresponding to input vectors \(\boldsymbol{u}_{i}\) are expressed as \(y_{i} = f(\boldsymbol{u}_{i}) + \delta_{i}\), where the \(\delta_{i}\) are independent, identically distributed noise variables, and the underlying function f is a random field. If \(\mathbf{f} = [f(\boldsymbol{u}_{1})\ f(\boldsymbol{u}_{2})\ \dots\ f(\boldsymbol{u}_{n})]\), then Bayes’s theorem gives the posterior probability of \(\mathbf{f}\) given the training data \(\mathcal {D}\) as

\[ p(\mathbf{f}\mid\mathcal{D}) = \frac{p(\mathcal{D}\mid\mathbf{f})\,p(\mathbf{f})}{p(\mathcal{D})}, \tag{1} \]

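with \(p(\mathbf{f})\) the prior probability of \(\mathbf{f}\), \(p(\mathcal {D}|\mathbf{f})\) the likelihood, and \(p(\mathcal {D})\) the evidence. The likelihood is given by

\[ p(\mathcal{D}\mid\mathbf{f}) = \prod_{i=1}^{n} p\bigl(y_{i} - f(\boldsymbol{u}_{i})\bigr) = \prod_{i=1}^{n} p(\delta_{i}), \tag{2} \]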

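where \(p(\delta_{i}) \propto \exp(-\zeta L(\delta_{i}))\), with \(L(\delta_{i})\) the loss function and ζ a constant. In standard GPR [4] the loss function is quadratic; the crucial point in the BSVR formulation is that a new loss function, the soft insensitive loss function, is used that combines advantageous properties of both the ε-insensitive loss function of standard SVR [8] (sparseness of solutions) and Huber’s loss function (differentiability). It is defined as [3]:

\[ L_{\varepsilon,\beta}(\delta) = \begin{cases} -\delta - \varepsilon, & \delta \in (-\infty,\, -(1+\beta)\varepsilon), \\ \dfrac{(\delta + (1-\beta)\varepsilon)^{2}}{4\beta\varepsilon}, & \delta \in [-(1+\beta)\varepsilon,\, -(1-\beta)\varepsilon], \\ 0, & \delta \in (-(1-\beta)\varepsilon,\, (1-\beta)\varepsilon), \\ \dfrac{(\delta - (1-\beta)\varepsilon)^{2}}{4\beta\varepsilon}, & \delta \in [(1-\beta)\varepsilon,\, (1+\beta)\varepsilon], \\ \delta - \varepsilon, & \delta \in ((1+\beta)\varepsilon,\, +\infty), \end{cases} \tag{3} \]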

where 0<β≤1, and ε>0.
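As an illustration only, the soft insensitive loss can be sketched in a few lines of Python. The sketch assumes the piecewise form of [3]: zero for |δ| up to (1−β)ε, quadratic up to (1+β)ε, and linear beyond that. The function name `silf` is ours and not part of any library.

```python
def silf(delta, eps, beta):
    """Soft insensitive loss, assuming the piecewise form given in [3]."""
    if not (0.0 < beta <= 1.0) or eps <= 0.0:
        raise ValueError("require 0 < beta <= 1 and eps > 0")
    a = abs(delta)                      # the loss is symmetric in delta
    inner = (1.0 - beta) * eps          # edge of the insensitive (zero-loss) zone
    outer = (1.0 + beta) * eps          # edge of the quadratic zone
    if a <= inner:
        return 0.0
    if a <= outer:
        return (a - inner) ** 2 / (4.0 * beta * eps)
    return a - eps                      # linear tails, as in the eps-insensitive loss


if __name__ == "__main__":
    for d in (0.0, 0.04, 0.1, 0.15, 1.0):
        print(d, silf(d, eps=0.1, beta=0.5))
```

The quadratic and linear branches meet at |δ| = (1+β)ε with the common value βε, which is what makes the loss differentiable while keeping a flat zone that promotes sparseness.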

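Solving for the maximum a posteriori (MAP) estimate of the function values entails solving the primal problem [3, Eqs. (19)–(21)], with the corresponding dual problem given by

\[ \min_{\boldsymbol{\alpha},\boldsymbol{\alpha}^{*}}\ \frac{1}{2}(\boldsymbol{\alpha}-\boldsymbol{\alpha}^{*})^{T}\boldsymbol{\Sigma}(\boldsymbol{\alpha}-\boldsymbol{\alpha}^{*}) - \sum_{i=1}^{n} y_{i}(\alpha_{i}-\alpha_{i}^{*}) + \sum_{i=1}^{n}(\alpha_{i}+\alpha_{i}^{*})(1-\beta)\varepsilon + \frac{\beta\varepsilon}{C}\sum_{i=1}^{n}\bigl(\alpha_{i}^{2}+\alpha_{i}^{*2}\bigr) \tag{4} \]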

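subject to \(0 \le \alpha_{i},\alpha_{i}^{*} \le C\), i=1,…,n. In the above, \(\boldsymbol{\Sigma}\) is an n×n matrix with \(\Sigma_{ij} = k(\boldsymbol{u}_{i}, \boldsymbol{u}_{j})\), and k(⋅) is the kernel function. In particular, the Gaussian kernel with ARD (used throughout this work) is given by

\[ k(\boldsymbol{u}_{i}, \boldsymbol{u}_{j}) = \sigma_{f}^{2}\exp\Biggl(-\frac{1}{2}\sum_{k}\frac{(u_{ik}-u_{jk})^{2}}{\tau_{k}^{2}}\Biggr) + \kappa, \tag{5} \]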

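where \(u_{ik}\) and \(u_{jk}\) are the kth elements of the ith and jth training input vectors. The hyperparameter vector θ, which includes \(\sigma_{f}^{2}\), \(\tau_{k}\), κ, C, and ε, can be determined by minimizing the negative log probability of the data given the hyperparameters [3],

\[ -\ln p(\mathcal{D}\mid\boldsymbol{\theta}) = \frac{1}{2}\mathbf{f}_{\mathrm{MP}}^{T}\boldsymbol{\Sigma}^{-1}\mathbf{f}_{\mathrm{MP}} + C\sum_{i=1}^{n} L_{\varepsilon,\beta}\bigl(y_{i} - f_{\mathrm{MP}}(\boldsymbol{u}_{i})\bigr) + \frac{1}{2}\ln\Bigl|\mathbf{I} + \frac{C}{2\beta\varepsilon}\boldsymbol{\Sigma}_{M}\Bigr| + n\ln Z_{s}, \tag{6} \]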

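with \(\boldsymbol{\Sigma}_{M}\) an m×m submatrix of \(\boldsymbol{\Sigma}\) corresponding to the off-bound support vectors, \(\mathbf{I}\) the m×m identity matrix, \(\mathbf{f}_{\mathrm{MP}} = \boldsymbol{\Sigma}(\boldsymbol{\alpha}-\boldsymbol{\alpha}^{*})\), and \(Z_{s}\) defined as in [3, Eq. (15)]. The length scale \(\tau_{k}\) associated with the kth input dimension can be considered the distance that has to be traveled along that dimension before the output changes significantly [4]. The regression estimate at a test input \(\boldsymbol{u}^{*}\) can be expressed as

\[ f(\boldsymbol{u}^{*}) = \sum_{i=1}^{n}(\alpha_{i}-\alpha_{i}^{*})\,k(\boldsymbol{u}_{i}, \boldsymbol{u}^{*}). \tag{7} \]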

Training points corresponding to \(|\alpha_{i} - \alpha_{i}^{*}| > 0\) are the support vectors (SVs); of these, points corresponding to \(0 < |\alpha_{i} - \alpha_{i}^{*}| < C\) are termed off-bound SVs. Usually, the lower the parameter β in the loss function, the smaller the number of SVs [3]; β determines the density function of the additive noise associated with training targets.
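The pieces described in this section (ARD kernel, regression estimate, and the split into support vectors and off-bound support vectors) can be sketched as follows. This is a minimal illustration, not the implementation of [3]: the kernel parameterization (squared exponential with per-dimension length scales plus a constant offset κ) is an assumption consistent with the hyperparameters listed above, the dual variables are taken as already computed, and all function names and the tolerance `tol` are hypothetical.

```python
import math


def ard_gaussian_kernel(u, v, sigma_f2, tau, kappa):
    """Assumed ARD form: sigma_f^2 * exp(-0.5 * sum_k ((u_k - v_k) / tau_k)^2) + kappa."""
    s = sum(((a - b) / t) ** 2 for a, b, t in zip(u, v, tau))
    return sigma_f2 * math.exp(-0.5 * s) + kappa


def predict(u_star, train_inputs, alpha, alpha_star, kernel):
    """Regression estimate: sum_i (alpha_i - alpha_i*) k(u_i, u*)."""
    return sum((a - a_s) * kernel(u_i, u_star)
               for u_i, a, a_s in zip(train_inputs, alpha, alpha_star))


def support_vector_indices(alpha, alpha_star, C, tol=1e-8):
    """Return (all SVs, off-bound SVs); tol guards against round-off in the solver."""
    diffs = [abs(a - a_s) for a, a_s in zip(alpha, alpha_star)]
    svs = [i for i, d in enumerate(diffs) if d > tol]
    off_bound = [i for i in svs if diffs[i] < C - tol]
    return svs, off_bound


if __name__ == "__main__":
    tau = [1.0, 5.0, 0.2]               # one length scale per input dimension
    k = lambda u, v: ard_gaussian_kernel(u, v, 1.0, tau, 0.0)
    inputs = [[5.0, 30.0, 2.1], [7.5, 40.0, 2.4], [9.0, 48.0, 2.6]]
    alpha, alpha_star = [0.0, 0.3, 1.0], [0.0, 0.0, 0.0]
    print(support_vector_indices(alpha, alpha_star, C=1.0))
    print(predict([7.5, 40.0, 2.4], inputs, alpha, alpha_star, k))
```

Only training points with a nonzero coefficient contribute to the sum in `predict`, which is the sparseness property exploited later in the chapter.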

3 BSVR Modeling with Reduced Data Sets

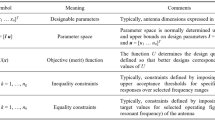

In this section, we discuss a method of exploiting EM simulations of variable fidelity in order to reduce the computational cost of creating the BSVR model. The model response of interest typically is \(|S_{11}|\) or \(|S_{21}|\) over a specified frequency range for a particular antenna or filter geometry. A model input (column) vector \(\boldsymbol{u}\) consists of the set of adjustable geometry parameters \(\boldsymbol{x}\) and a frequency value f; thus we have \(\boldsymbol{u} = [\boldsymbol{x}^{T}\ f]^{T}\). The (scalar) response of a model at a specific frequency, for example that of the fine-discretization full-wave model \(R_{f}\) (the simulated S-parameters), is denoted as \(R_{f}(\boldsymbol{u})\), or \(R_{f}(\boldsymbol{x}, f)\). Suppose now that a BSVR surrogate \(R_{s}\) of the CPU-intensive high-fidelity model \(R_{f}\) has to be constructed. As noted earlier, the computational cost of gathering sufficient data to train \(R_{s}\) typically is high. To address this, first, an auxiliary BSVR model \(R_{s,\mathrm{aux}}\) of the antenna (or filter) is set up with training data obtained from coarse-discretization full-wave simulations (these simulations are referred to as the low-fidelity full-wave model \(R_{c}\)). The training set for \(R_{s,\mathrm{aux}}\) consists of n input vectors \(\boldsymbol{u}_{i}\), i=1,…,n, and associated targets \(y_{i} = R_{c}(\boldsymbol{u}_{i})\), where \(\boldsymbol{u}_{i}\) contains geometry parameters and a frequency value as noted above, and \(y_{i}\) is the corresponding simulated \(|S_{11}|\) (or \(|S_{21}|\)) value. The SVs obtained from \(R_{s,\mathrm{aux}}\) are then simulated at the (high) mesh density of \(R_{f}\), providing the reduced fine-discretization training set for \(R_{s}\).

As experience has shown that the coarsely simulated targets R c and finely simulated targets R f of microwave structures such as antennas or filters are usually reasonably well correlated (in Sects. 4 and 5 we give coarse and fine meshing densities for specific examples to indicate by how much they can differ), we assume that the regions of the input space which support the crucial variations in the coarse response surface will also support the crucial variations in the fine response surface. Hence, the SVs of the coarse model should largely capture the crucial variations in the fine data as well, and along with target values obtained through fine-discretization simulations should make an adequate reduced-data training set for a high-fidelity BSVR model, i.e., R s .
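The data-reduction step described in this section can be sketched as below. The sketch assumes that the auxiliary model has already been trained on coarse data and that its dual coefficients are available; `fine_model` is a hypothetical callable standing in for a fine-discretization EM simulation, and the toy response in the usage part is invented purely for illustration.

```python
import math


def build_reduced_training_set(inputs, alpha, alpha_star, fine_model, tol=1e-8):
    """Keep only the support vectors of the auxiliary (coarse-data) model and
    attach targets obtained from the fine model at those inputs."""
    sv_idx = [i for i, (a, a_s) in enumerate(zip(alpha, alpha_star))
              if abs(a - a_s) > tol]
    reduced_inputs = [inputs[i] for i in sv_idx]
    # Only these points incur the cost of a high-fidelity evaluation.
    reduced_targets = [fine_model(u) for u in reduced_inputs]
    return reduced_inputs, reduced_targets


if __name__ == "__main__":
    # Toy stand-in for the fine model: u = [x, f], response depends on both.
    toy_fine = lambda u: 1.0 / (1.0 + 25.0 * (u[1] - 0.1 * u[0]) ** 2)
    inputs = [[x, f] for x in (20.0, 25.0) for f in (2.0, 2.4, 2.7)]
    # Hypothetical dual coefficients from training the auxiliary model.
    alpha = [0.0, 0.4, 0.0, 1.0, 0.0, 0.2]
    alpha_star = [0.0, 0.0, 0.0, 0.0, 0.0, 0.2]
    X_red, y_red = build_reduced_training_set(inputs, alpha, alpha_star, toy_fine)
    print(len(X_red), "of", len(inputs), "points need fine simulation")
```

The reduced set would then be used to train the high-fidelity surrogate in the same way as the auxiliary model, only with fewer and more expensive samples.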

4 Modeling and Optimization of Antennas Using BSVR

In this section, we present examples illustrating how global BSVR models for the reflection coefficients of planar slot antennas can be set up based on reduced finely discretized data sets. We then use these models for design optimization. We consider three examples of antennas with highly nonlinear |S 11| responses as a function of tunable geometry parameters and frequency: a narrowband coplanar waveguide (CPW)-fed slot dipole antenna, an ultra-wideband (UWB) CPW-fed T-shaped slot antenna, and a broadband probe-fed microstrip patch with two U-shaped parasitic elements. We furthermore evaluate the accuracy of our reduced-data BSVR surrogates by using them within a space mapping (SM) optimization framework [9–14].

4.1 Slot Dipole Antenna (Antenna 1)

Figure 1 shows the geometry of a CPW-fed slot dipole antenna on a single-layer dielectric substrate. The design variables were x=[W L]T mm, and the input space was specified as 5≤W≤10 mm and 28≤L≤50 mm. Other dimensions/parameters were w 0=4.0 mm, s=0.5 mm, h=1.6 mm, and ε r =4.4. We were concerned with |S 11| over the frequency band 2.0–2.7 GHz (visual inspection revealed that |S 11|-versus-frequency responses over this band varied substantially throughout the above geometry input space). Using CST Microwave Studio [15] on a dual-core 2.33 GHz Intel CPU with 2 GB RAM, we considered a high-fidelity model R f (∼130,000 mesh cells, simulation time 12 min) and a low-fidelity model R c (∼5,000 mesh cells, simulation time 30 s).

For training input data, 99 geometries were selected at random from the input space using Latin hypercube sampling (LHS), with three frequencies per geometry uniformly randomly sampled from the above frequency range such that, in general, each geometry had a different set of frequencies. The total number of training points was n=99×3=297; training input vectors had the form \(\{\boldsymbol{u}_{i} = [\boldsymbol{x}_{i}^{T}\ f_{i}]^{T} = [W_{i}\ L_{i}\ f_{i}]^{T} | i = 1,\ldots, n\}\), with W i and L i the design variables corresponding to the ith input vector, and f i a frequency value within the range of interest. Test data consisted of 100 new geometries, also obtained via LHS, with 71 equally spaced frequencies per geometry. The training data were simulated at the R c mesh density, and used to train the BSVR model R s,aux for three different values of β at the low end of its possible range (β∈{0.05,0.15,0.25}; as noted earlier, usually the smaller the value of β, the smaller the number of SVs). Each R s,aux was used to predict the test data (also simulated at the R c mesh density). %RMSE (percentage root mean square error normalized to the target range) values were in the vicinity of 1.1 %; this high predictive accuracy confirmed that the training set was sufficiently large.
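The construction of the training inputs for Antenna 1 can be sketched as follows: a simple Latin hypercube over the geometry variables, with a few frequencies drawn uniformly at random for each geometry. This is an assumed, basic LHS variant (one random point per stratum, strata shuffled independently per dimension), not necessarily the one used for the reported results; the function names and the seed are illustrative.

```python
import random


def latin_hypercube(n, bounds, rng):
    """n points in a box; each dimension is cut into n equal strata and
    every stratum is used exactly once."""
    columns = []
    for lo, hi in bounds:
        strata = list(range(n))
        rng.shuffle(strata)
        width = (hi - lo) / n
        columns.append([lo + (s + rng.random()) * width for s in strata])
    return [list(point) for point in zip(*columns)]


def make_training_inputs(n_geom, bounds, f_range, n_freq, seed=0):
    """Input vectors u = [x..., f]: n_freq random frequencies per LHS geometry."""
    rng = random.Random(seed)
    inputs = []
    for x in latin_hypercube(n_geom, bounds, rng):
        for _ in range(n_freq):
            inputs.append(x + [rng.uniform(*f_range)])
    return inputs


if __name__ == "__main__":
    # Antenna 1: 5 <= W <= 10 mm, 28 <= L <= 50 mm, 2.0-2.7 GHz, 3 frequencies each.
    train = make_training_inputs(99, [(5.0, 10.0), (28.0, 50.0)], (2.0, 2.7), 3)
    print(len(train))  # 99 geometries x 3 frequencies = 297 input vectors
```

Drawing the frequencies independently for each geometry means that, in general, no two geometries share the same frequency set, as described above.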

For each R s,aux model, the SVs were identified and simulated at the R f mesh density. BSVR models fitted to these fine-discretization data gave the desired surrogate models R s . For comparison, surrogate models R s,full trained on the full fine-discretization training data set (n=297) were also set up. Table 1 gives, for each of the β values, the %RMSE values obtained with R s,aux on the coarse-discretization test data, and R s and R s,full on the fine-discretization test data.

Also given in the table is n SV, the number of SVs associated with R s,aux (and therefore the number of training points for R s ), and n SV/n, the proportion of the full training data that were SVs of R s,aux. The %RMSE values obtained for R s and R s,full were either the same, or only marginally higher in the case of R s , indicating that reducing the number of training points from n to n SV by using the SVs of R s,aux as training points for R s incurred insignificant accuracy loss. In all cases, the reduction in training data was considerable: for example, for β=0.15 the number of SVs was 176, which is 59 % of the original training data set. For ready comparison, Table 1 also explicitly lists the computational cost of generating the training data for the models, expressed in terms of the number of fine-discretization simulations R f (for each model it simply equals the number of training points). In terms of total CPU time (which was proportional to the costs in the table), these numbers translate to about 12 h for R s (β=0.15), and 20 h for R s,full.

4.2 UWB T-Shaped Slot Antenna (Antenna 2)

Figure 2 shows the antenna layout [16]. The design variables were x=[a x a y a b]T mm, with design space 35≤a x ≤45 mm, 20≤a y ≤35 mm, 2≤a≤12 mm, and 10≤b≤30 mm (w 0=4.0 mm, s 0=0.3 mm, s 1=1.7 mm; the single-layer substrate had height h=0.813 mm and dielectric constant ε r =3.38). The frequency band of interest was 2–8 GHz (as before, visual inspection confirmed that |S 11|-versus-frequency responses varied substantially throughout the geometry input space). Using CST Microwave Studio [15], we considered a high-fidelity model R f (∼2,962,000 mesh cells, simulation time 21 min) and a low-fidelity model R c (∼44,500 mesh cells, simulation time 20 s).

The training data consisted of 294 geometries obtained by LHS, with 12 frequencies per geometry, randomly selected as before (n=3,528). The test data comprised 49 new LHS geometries, with 121 equally spaced frequencies per geometry (as before, the value of n was determined by the performance of R s,aux on the test data simulated at the coarse mesh density).

The surrogate models \(R_{s,\mathrm{aux}}\), \(R_{s}\), and \(R_{s,\mathrm{full}}\) were set up similarly to those for Antenna 1. Table 1 gives, for three values of β, the %RMSEs obtained with \(R_{s,\mathrm{aux}}\) on the coarse test data and with \(R_{s}\) and \(R_{s,\mathrm{full}}\) on the fine test data, as well as the counts of SVs.

In general, %RMSE values of R s were only somewhat higher than those of R s,full, suggesting as before that reducing the number of training points from n to n SV by using the SVs of R s,aux as fine-discretization training points for R s has little effect on prediction accuracy. The CPU time required to generate fine-discretization training data for R s in the case β=0.05 (i.e., the model used in the optimization below) was approximately 56 h; the CPU time for R s,full was 103 h.

4.3 Microstrip Antenna with Parasitic Elements (Antenna 3)

Figure 3 shows the antenna geometry [17]. The design variables were x=[a b c d e]T mm, with design space 14≤a≤22 mm, 0.4≤b≤2 mm, 0.4≤c≤2 mm, 0.4≤d≤2 mm, and 0.4≤e≤2 mm. The main patch had dimensions a 0=5.8 mm and b 0=13.1 mm. The lateral dimensions of the dielectric material and the metal ground were l x =20 mm and l y =25 mm. The dielectric substrate height, h, was 0.4 mm, and its relative permittivity, ε r , was 4.3. The feed pin offset from the main patch center, l 0, was 5.05 mm, and the pin was 0.5 mm in diameter. The frequency band of interest was 4–7 GHz.

The training data were 400 geometries obtained by LHS, with 16 randomly selected frequencies per geometry (n=6,400). The test data comprised 50 new LHS geometries with 121 equally spaced frequencies per geometry. We considered a high-fidelity model R f (∼440,500 mesh cells, simulation time 12 min) and a low-fidelity model R c (∼25,700 mesh cells, simulation time 15 s). It is instructive to consider three randomly picked responses from the training data, shown in Fig. 4. In spite of what appears to be a narrowly circumscribed input space (cf. the boundaries on the b, c, d, and e dimensions), the responses show considerable variety from one training point to the next. Furthermore, while within-training point coarse and fine responses agreed to some extent for some regions of the frequency band, there were considerable differences for others.

Surrogate models were constructed as before. The %RMSE values obtained with \(R_{s,\mathrm{aux}}\) on the coarse test data, and with \(R_{s}\) and \(R_{s,\mathrm{full}}\) on the fine test data, for β∈{0.15,0.20,0.25} are shown in Table 1, as well as \(n_{\mathrm{SV}}\). The greatest data reduction, by 43 %, occurred for β=0.15, while the %RMSE only increased from 5.53 % (full model) to 5.77 % (reduced model). The CPU time necessary to simulate fine-discretization training data for \(R_{s}\) for β=0.15 (i.e., the model used for the optimization) was approximately 49 h; for \(R_{s,\mathrm{full}}\) it was 80 h.

To further explore the influence of mesh density on our method, a second coarse model R cc (∼8,000 mesh cells, simulation time 8 s), i.e., coarser than R c , was generated, and corresponding surrogate models constructed. The predictive results for the new R s were similar to previous results; e.g., for β=0.2 the predictive %RMSE was 5.82 %, although the number of SVs increased somewhat to 3,992 (see Table 1). In order to evaluate the general similarity between the coarsely and finely simulated data, Pearson product-moment correlation coefficients were computed for the respective |S 11| values, i.e., for |S 11| of R c and R f ; and also for R cc and R f (using all training geometries with 121 equally spaced frequency points per geometry). The correlation coefficients were 0.74 and 0.51, respectively, suggesting some robustness to our procedure.
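For reference, the Pearson product-moment correlation coefficient used above to compare coarse and fine responses can be computed as in the following sketch. The function name and the short sample vectors are illustrative only; they are not the simulated data.

```python
import math


def pearson_r(a, b):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


if __name__ == "__main__":
    coarse = [0.91, 0.62, 0.20, 0.55, 0.88]   # illustrative |S11| samples
    fine = [0.93, 0.70, 0.35, 0.41, 0.90]
    print(round(pearson_r(coarse, fine), 3))
```

In the setting of this section, `a` and `b` would hold the coarse and fine \(|S_{11}|\) values at the same geometries and frequency points.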

4.4 Application Examples: Antenna Optimization

The full and reduced BSVR models were used to perform design optimization of the antenna structures considered in Sects. 4.1 through 4.3. We again note that our models are intended as multipurpose global models that give accurate predictions for the whole of the input space; multiple optimization runs corresponding to any number of sets of design specifications constitute one kind of repeated-use application. The initial design in each case is the center of the region of interest, \(\boldsymbol{x}^{(0)}\). The design process starts by directly optimizing the BSVR model. Because the models have limited accuracy in this design context (linear responses were modeled, the preferred choice given the Gaussian kernel, whereas logarithmic responses in decibels are optimized), the design is further refined by means of the SM iterative process [14]

\[ \boldsymbol{x}^{(i+1)} = \arg\min_{\boldsymbol{x}} U\bigl(\boldsymbol{R}_{\mathrm{su}}^{(i)}(\boldsymbol{x})\bigr), \tag{8} \]

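where \(\boldsymbol{R}_{\mathrm{su}}^{(i)}\) is a surrogate model, enhanced by frequency and output SM [14]. The surrogate model setup is performed using an evaluation of \(\boldsymbol{R}_{f}\) at \(\boldsymbol{x}^{(i)}\). U implements the design specifications. For simplicity, we use the symbol \(\boldsymbol{R}_{\mathrm{co}}\) to denote either of \(R_{s,\mathrm{full}}\) or \(R_{s}\), which can be considered the “coarse” models in the SM context. Let \(\boldsymbol{R}_{\mathrm{co}}(\boldsymbol{x}, F)\) denote the explicit dependency of the model on the frequency (F is the set of frequencies of interest at which the model is evaluated). The surrogate model is defined as

\[ \boldsymbol{R}_{\mathrm{su}}^{(i)}(\boldsymbol{x}) = \boldsymbol{R}_{\mathrm{co}}\bigl(\boldsymbol{x}, \alpha^{(i)}F\bigr) + \boldsymbol{d}^{(i)}, \tag{9} \]

with

\[ \boldsymbol{d}^{(i)} = \boldsymbol{R}_{f}\bigl(\boldsymbol{x}^{(i)}\bigr) - \boldsymbol{R}_{\mathrm{co}}\bigl(\boldsymbol{x}^{(i)}, \alpha^{(i)}F\bigr), \tag{10} \]

and

\[ \alpha^{(i)}F = \alpha_{0}^{(i)} + \alpha_{1}^{(i)}F \tag{11} \]

the affine frequency scaling (shift and scaling). The frequency scaling parameters are calculated as

\[ \bigl[\alpha_{0}^{(i)}\ \alpha_{1}^{(i)}\bigr] = \arg\min_{[\alpha_{0}\ \alpha_{1}]} \bigl\| \boldsymbol{R}_{f}\bigl(\boldsymbol{x}^{(i)}\bigr) - \boldsymbol{R}_{\mathrm{co}}\bigl(\boldsymbol{x}^{(i)}, \alpha_{0} + \alpha_{1}F\bigr) \bigr\|, \tag{12} \]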

i.e., to minimize the misalignment between the high-fidelity and the scaled low-fidelity model responses at x (i). Although the models are evaluated at a discrete set of frequencies, the information at other frequencies can be obtained through interpolation. The misalignment is further reduced by the output SM (10); this ensures zero-order consistency (i.e., \(\boldsymbol{R}_{\mathrm{su}}^{(i)}(\boldsymbol{x}^{(i)}) = \boldsymbol{R} _{f}(\boldsymbol{x}^{(i)})\)) between the surrogate and R f [18]. The algorithm (8) working with the SM surrogate model (9)–(12) typically requires only three to four iterations to yield an optimized design, with the cost of each iteration effectively equal to a single evaluation of the high-fidelity model.
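The surrogate construction described above (affine frequency scaling followed by an additive output correction) can be sketched as follows. Several simplifications are assumptions of this sketch rather than part of the method in [14]: the scaling parameters are found by a plain grid search instead of a proper optimizer, the coarse model is a callable `coarse(x, f)` that can be evaluated at any frequency (so no interpolation is needed), and the two toy responses are invented for illustration.

```python
def extract_frequency_scaling(fine, coarse_at, freqs, shifts, scales):
    """Grid search for the (shift, scale) pair that minimizes the squared
    misalignment between fine and frequency-scaled coarse responses."""
    best = None
    for shift in shifts:
        for scale in scales:
            err = sum((rf - coarse_at(shift + scale * f)) ** 2
                      for rf, f in zip(fine, freqs))
            if best is None or err < best[0]:
                best = (err, shift, scale)
    return best[1], best[2]


def build_sm_surrogate(coarse, x_i, fine_at_x_i, freqs, shifts, scales):
    """Return (surrogate, (shift, scale)); surrogate(x) is a list over freqs."""
    shift, scale = extract_frequency_scaling(
        fine_at_x_i, lambda f: coarse(x_i, f), freqs, shifts, scales)
    # Output correction: residual left after frequency scaling at design x_i.
    d = [rf - coarse(x_i, shift + scale * f)
         for rf, f in zip(fine_at_x_i, freqs)]

    def surrogate(x):
        return [coarse(x, shift + scale * f) + dk for f, dk in zip(freqs, d)]

    return surrogate, (shift, scale)


# Toy stand-ins (hypothetical): a resonance whose position depends on x[0].
def toy_coarse(x, f):
    return 1.0 - 0.9 / (1.0 + 25.0 * (f - x[0]) ** 2)


def toy_fine(x, f):
    return 1.0 - 0.8 / (1.0 + 25.0 * (f - x[0] - 0.1) ** 2)


freqs = [2.0 + 0.05 * k for k in range(21)]
x0 = [2.5]
fine0 = [toy_fine(x0, f) for f in freqs]
shifts = [-0.2 + 0.05 * k for k in range(9)]
scales = [0.9 + 0.05 * k for k in range(5)]
surrogate, (shift, scale) = build_sm_surrogate(
    toy_coarse, x0, fine0, freqs, shifts, scales)
```

By construction, `surrogate(x0)` reproduces `fine0` up to round-off, which is the zero-order consistency property mentioned above. In an optimization loop the surrogate would be minimized with respect to the design specifications, the fine model evaluated at the new design, and the surrogate rebuilt there.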

Figure 5 shows the responses of the reduced BSVR and fine models at the initial designs, as well as the responses of the fine models at the final designs obtained for all three antenna structures. The reduced BSVR models correspond to β values in Table 1 of 0.15 (Antenna 1), 0.05 (Antenna 2), and 0.15 (Antenna 3). Table 2 summarizes the results. One can see that the design quality and cost (expressed in terms of the number of \(R_{f}\) evaluations) are very similar for the BSVR models obtained using full and reduced data sets. The CPU times associated with three \(R_{f}\) evaluations (Antennas 1 and 2) and four \(R_{f}\) evaluations (Antenna 3) were 36 min, 63 min, and 48 min, respectively.

For comparison, we also optimized the three antennas using a conventional (not surrogate-based) method, namely a state-of-the-art pattern search algorithm [19, 20] that directly relied on fine-discretization full-wave simulations (i.e., R f ) for its objective function evaluations. While the maximum |S 11| values at the final designs obtained for Antennas 1, 2, and 3 (−21.6 dB, −11.6 dB, and −10.7 dB, respectively) were similar to those obtained using our BSVR models and the above SM procedure, the computational expense for the conventional optimization was at least an order of magnitude larger (i.e., 40, 148, and 201 R f evaluations for Antennas 1, 2, and 3, respectively, compared to the 3, 3, and 4 R f evaluations reported in Table 2). This accentuates how much faster optimization can be realized when accurate models such as BSVR models are available: our approach reduces by up to 43 % the high initial cost of setting up these multipurpose global models (in comparison the cost of the optimization using SM is insignificant).

5 Modeling and Optimization of Filters Using BSVR

Here we discuss global BSVR models based on reduced finely discretized data sets for the |S 21| responses of two microstrip filters: a capacitively coupled dual-behavior resonator microstrip bandpass filter, and an open-loop ring resonator (OLRR) bandpass filter. As before, we use these models for design optimization.

5.1 Capacitively Coupled Dual-Behavior Resonator (CCDBR) Microstrip Bandpass Filter

Consider the capacitively coupled dual-behavior resonator (CCDBR) bandpass filter [21] implemented in microstrip lines, shown in Fig. 6(a). The three design variables were x=[L 1 L 2 L 3]T. The design variable space for the BSVR models was defined by the center vector x 0=[3 5 1.5]T mm and size vector δ=[1 1 0.5]T mm such that the variable ranges were x 0±δ mm (x 0 and δ were guesses, guided to some extent by expert knowledge of the filters and a very rudimentary exploration of the design space). The substrate height was h=0.254 mm and the relative permittivity was ε r =9.9; the value of S was 0.05 mm, while the microstrip line widths w 1 and w 2 were 0.25 mm and 0.5 mm, respectively. We were interested in the filter response over the frequency range 2 to 6 GHz. The high-fidelity model R f of the filter was simulated using FEKO [22] (total mesh number 715, simulation time about 15 s per frequency). The low-fidelity model R c was also simulated in FEKO (total mesh number 136, simulation time 0.6 s per frequency).

In order to set up the training data input vectors, 400 geometries were randomly selected from the design space using Latin hypercube sampling (LHS) [23]. For each geometry, 12 simulation frequencies were selected by uniform random sampling from the above frequency range, yielding a total of n=400×12=4,800 training input vectors of the form \(\{\boldsymbol{u}_{i} = [L_{1i}\ L_{2i}\ L_{3i}\ f_{i}]^{T} \mid i = 1,\ldots,n\}\), with \(L_{1i}\), \(L_{2i}\), and \(L_{3i}\) the design variables corresponding to the ith input vector, and \(f_{i}\) a frequency value within the range of interest. The corresponding output scalars, obtained from FEKO simulations, were \(y_{i} = |S_{21i}|\). The test data consisted of 50 new geometries, also obtained via LHS, with 41 equally spaced frequencies per geometry. The training data were simulated at the \(R_{c}\) mesh density and used to train the BSVR model \(R_{s,\mathrm{aux}}\) for β=0.1, 0.2, and 0.3 (β is the loss function parameter in Eq. (3) discussed above). \(R_{s,\mathrm{aux}}\) was used to make predictions on the test data (also simulated at the \(R_{c}\) mesh density).

The %RMSE (root mean square error normalized to the target range expressed as a percentage) was around 4.13 % for the three β values, which was acceptable for this highly nonlinear problem, and indicated that the training set was sufficiently large. Next, for each value of β the n SV SVs of R s,aux were simulated at the R f mesh density. BSVR models fitted to these reduced training sets gave the desired surrogate models R s .
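The error measure used throughout (root mean square error normalized to the target range, expressed as a percentage) can be written compactly as in the sketch below. The function name is ours, and the sample values are illustrative.

```python
import math


def percent_rmse(targets, predictions):
    """RMSE normalized to the range of the targets, as a percentage."""
    if len(targets) != len(predictions) or not targets:
        raise ValueError("need two non-empty sequences of equal length")
    span = max(targets) - min(targets)
    if span == 0.0:
        raise ValueError("target range is zero; %RMSE is undefined")
    mse = sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)
    return 100.0 * math.sqrt(mse) / span


if __name__ == "__main__":
    y_true = [0.10, 0.45, 0.80, 0.95]   # illustrative |S21| test targets
    y_pred = [0.12, 0.40, 0.83, 0.93]
    print(round(percent_rmse(y_true, y_pred), 2))
```

Normalizing by the target range makes the values comparable across structures whose responses span different intervals.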

For comparison, surrogates \(R_{s,\mathrm{full}}\) trained on the full fine-discretization training data (n=4,800) were also set up. Table 3 gives, for the three β values, the %RMSEs obtained with \(R_{s,\mathrm{aux}}\) on the coarse test data, and with \(R_{s}\) and \(R_{s,\mathrm{full}}\) on the fine test data, as well as the number of SVs obtained in each instance. The highly similar %RMSEs obtained with \(R_{s}\) and \(R_{s,\mathrm{full}}\) indicate that reducing the number of expensive fine-discretization training points from n to \(n_{\mathrm{SV}}\) incurred negligible accuracy loss, even for a reduction in data as large as 48 % (β=0.1). Figure 7(a) shows typical predictive results for \(|S_{21}|\) versus frequency obtained for the test geometry \(\boldsymbol{x} = [2.794\ 4.407\ 1.491]^{T}\) mm. Some discrepancy can be observed when comparing the %RMSE values for \(R_{s,\mathrm{aux}}\) in Table 3 to those for \(R_{s}\) (and \(R_{s,\mathrm{full}}\)). This occurs because the coarse model responses are slightly smoother as functions of frequency than the fine model ones (i.e., they do not contain as much detail, particularly in the passband), which makes them easier to model and results in a lower %RMSE. Using a finer mesh for the \(R_{c}\) model would reduce this discrepancy. The %RMSE values for \(R_{s}\) and \(R_{s,\mathrm{full}}\) nevertheless were good given the highly nonlinear nature of the modeling problem, and of sufficient accuracy to yield good optimization results, as we show in Sect. 5.3. The total computational time necessary to gather the training data for setting up \(R_{s,\mathrm{full}}\) was 20 h, whereas the corresponding time for setting up \(R_{s}\) (including both low- and high-fidelity model evaluations) was 11.2, 11.4, and 12.8 h (for β=0.1, 0.2, and 0.3, respectively) on a quad core PC with a 2.66 GHz Intel processor and 4 GB RAM. Thus the computational savings due to the proposed technique range from 36 % (for β=0.3) to 44 % (for β=0.1).
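The quoted savings follow directly from the reported data-gathering times; a short check, with a helper name of our choosing, is given below.

```python
def savings_percent(t_reduced_h, t_full_h):
    """Relative reduction in data-gathering time, as a percentage."""
    return 100.0 * (1.0 - t_reduced_h / t_full_h)


if __name__ == "__main__":
    t_full = 20.0                                   # hours for the full data set
    for beta, t_red in [(0.1, 11.2), (0.2, 11.4), (0.3, 12.8)]:
        print(beta, round(savings_percent(t_red, t_full)))
```

The reduced-data times already include the coarse simulations needed for the auxiliary model, so the percentages reflect the net saving.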

5.2 Open-Loop Ring Resonator (OLRR) Bandpass Filter

The filter geometry [24] is shown in Fig. 6(b). The seven design variables were x=[L 1 L 2 L 3 L 4 S 1 S 2 G]T. The design space was described by x 0=[40 8 6 4 0.2 0.1 1]T mm and δ=[2 1 0.4 0.4 0.1 0.05 0.2]T mm. The substrate parameters were h=0.635 mm and ε r =10.2, while the microstrip line widths were W 1=0.4 mm and W=0.6 mm. The frequency range of interest was 2 to 4 GHz. High- and low-fidelity models were simulated in FEKO [22] (total mesh number 1,084 and simulation time 40 s per frequency for R f ; total mesh number 148 and simulation time 0.8 s per frequency for R c ). The training data comprised 400 geometries obtained by LHS [23], with 12 randomly selected frequencies per geometry (n=4,800), while the test data were 50 new LHS geometries with 81 equally spaced frequencies per geometry. Setting up R s,aux, R s , and R s,full proceeded in a manner similar to the earlier filter. Table 3 gives the %RMSE values obtained with R s,aux on the coarse test data and with R s and R s,full on the fine test data; as well as the SV counts. In general, %RMSE values of R s were only marginally higher than those of R s,full, suggesting as before that reducing the number of training points from n to n SV by using the SVs of R s,aux as fine-discretization training points for R s has little effect on the prediction accuracy. The greatest reduction in data (51 %) was obtained for β=0.1. Figure 7(b) shows representative predictive results for |S 21| versus frequency, in particular for the test geometry x=[38.088 8.306 5.882 4.029 0.193 0.061 0.985]T mm. The computational time necessary to gather the training data for setting up R s.full was 53.3 h. The corresponding time for setting up R s (including both low- and high-fidelity model evaluations) was 27.2, 29.3, and 31.4 h (β=0.1, 0.2, and 0.3, respectively). The computational savings due to the proposed technique range from 41 % (for β=0.3) to 49 % (for β=0.1).

5.3 Filter Optimization Using BSVR Surrogates

The BSVR surrogates developed in Sects. 5.1 and 5.2 were used to perform design optimization of the filters. The optimization methodology is essentially the same as that described in Sect. 4.4 and involves iterative correction and optimization of the surrogates in order to (locally) improve their accuracy [25].

The design process starts by directly optimizing the BSVR model (for each filter, we used the BSVR surrogates corresponding to β=0.1). Each iteration (see Eq. (8)) requires only one evaluation of the high-fidelity model. The CCDBR bandpass filter had design specifications |S 21|≥−3 dB for 3.8≤f≤4.2 GHz, and |S 21|≤−20 dB for 2.0≤f≤3.2 GHz and 4.8≤f≤6.0 GHz (f denotes frequency). Figure 8 shows the responses of the high-fidelity model R f as well as the responses of R s and R s,full at the initial design x (0)=[3 6 1.5]T mm. The high-fidelity model responses at the optimized designs found using both R s and R s,full are shown in Fig. 9 (these designs were [3.47 4.79 1.01]T mm and [3.21 4.87 1.22]T mm, respectively). In both cases, the design process is accomplished in three iterations, which corresponds to a design cost of four high-fidelity model evaluations.
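Specifications of this kind are commonly turned into a scalar objective through a minimax specification error. The Python sketch below assumes that formulation (the text does not spell out the objective in this section) and evaluates it for the CCDBR specifications on a made-up response. The function name `spec_error` and the sample response values are hypothetical.

```python
def spec_error(freqs_ghz, s21_db, lower_specs, upper_specs):
    """Minimax specification error: the largest violation over all bands (dB).
    A value <= 0 means every specification is met at the sampled frequencies.
    Returns -inf if no sampled frequency falls inside any band."""
    worst = float("-inf")
    for f, s in zip(freqs_ghz, s21_db):
        for f_lo, f_hi, level in lower_specs:   # requirement |S21| >= level
            if f_lo <= f <= f_hi:
                worst = max(worst, level - s)
        for f_lo, f_hi, level in upper_specs:   # requirement |S21| <= level
            if f_lo <= f <= f_hi:
                worst = max(worst, s - level)
    return worst

# CCDBR specifications from the text: (f_min GHz, f_max GHz, level dB).
ccdbr_lower = [(3.8, 4.2, -3.0)]
ccdbr_upper = [(2.0, 3.2, -20.0), (4.8, 6.0, -20.0)]

# Made-up |S21| samples, for illustration only.
freqs = [2.0, 3.0, 3.8, 4.0, 4.2, 5.0, 6.0]
s21 = [-35.0, -24.0, -2.5, -0.8, -2.9, -22.0, -30.0]

error = spec_error(freqs, s21, ccdbr_lower, ccdbr_upper)
print("specifications met" if error <= 0 else f"violated by {error:.2f} dB")
```

Minimizing this quantity pushes the worst-case sample toward (and then beyond) the specification level, which is why a negative value indicates a design with some margin.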

For the OLRR bandpass filter, the design specifications were |S 21|≥−1 dB for 2.85≤f≤3.15 GHz, and |S 21|≤−20 dB for 2.0≤f≤2.5 GHz and 3.5≤f≤4.0 GHz. Figure 10 shows the responses of R f , R s , and R s,full at the initial design x (0)=[40.0 8.0 6.0 4.0 0.1 0.1 1.0]T mm. The responses at the optimized designs obtained using R s and R s,full are shown in Fig. 11 (these designs were [39.605 8.619 6.369 3.718 0.300 0.069 0.986]T mm and [39.010 8.219 5.786 4.260 0.268 0.050 1.068]T mm, respectively). In both cases, the design process is accomplished in two iterations, which corresponds to a design cost of three high-fidelity model evaluations.

6 Variable-Fidelity Optimization Using Local BSVR Surrogates

In this section, we discuss the application of BSVR surrogates defined locally (i.e., in a specific region of the input space) towards low-cost design optimization of antennas [26].

As we have shown, BSVR models can be accurate; however, similarly to other approximation-based modeling methods, considerable computational overhead is necessary to acquire the training data. This is not convenient when using approximation surrogates for ad hoc optimization of a specific structure.

Here, we describe a computationally efficient antenna design methodology that combines space mapping (as the optimization engine) with a response surface approximation of the coarse model implemented through BSVR. BSVR serves to create a fast coarse model of the antenna structure. To reduce the computational cost of creating this coarse model, it is obtained from coarse-discretization EM simulation data.

6.1 Optimization Algorithm

As mentioned before, the main optimization engine is space mapping (SM) [26]. The generic SM optimization algorithm produces a sequence of approximate solutions to the problem (1), x (0),x (1),…, as follows:

where \(\boldsymbol{R}_{s}^{(i)}\) is the SM surrogate model at iteration i, and x (0) is the initial design. The surrogate model is constructed using the underlying coarse model R c and suitable auxiliary transformations [27]. The elementary SM transformations include input SM [28], with the surrogate defined as \(\boldsymbol{R} _{s}^{(i)}(\boldsymbol{x}) = \boldsymbol{R}_{c}(\boldsymbol{x} + \boldsymbol{c}^{(i)})\); multiplicative output SM [27], \(\boldsymbol{R}_{s}^{(i)}(\boldsymbol{x}) = \boldsymbol{A}^{(i)}\cdot \boldsymbol{R}_{c}(\boldsymbol{x})\); additive output SM [28], \(\boldsymbol{R} _{s}^{(i)}(\boldsymbol{x}) = \boldsymbol{R}_{c} (\boldsymbol{x}) + \boldsymbol{d}^{(i)}\); and frequency scaling [27], \(\boldsymbol{R}_{s}^{(i)}(\boldsymbol{x}) = \boldsymbol{R}_{c.f}(\boldsymbol{x};\boldsymbol{F}^{(i)})\). In frequency scaling, it is assumed that the coarse model is an evaluation of a given performance parameter over a certain frequency range, i.e., R c (x)=[R c (x;ω 1)…R c (x;ω m )]T, and the frequency-scaled model is then given as R c.f (x;F (i))=[R c (x;s(ω 1))…R c (x;s(ω m ))]T, where s is a scaling function dependent on the set of parameters F (i). Typically, a linear scaling function \(s(\omega) = f_{0}^{(i)}+ f_{1}^{(i)}\omega\) is used.
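The four elementary transformations can be sketched in a few lines of Python. The toy coarse model below (a single resonance-like dip with a scalar design variable) and all function names are hypothetical, and the matrix A is assumed to be diagonal so that it can be stored as a list of per-frequency factors.

```python
import math

def coarse(x, omega):
    """Toy coarse model at one frequency: a dip centred at omega = x (hypothetical)."""
    return -20.0 * math.exp(-((omega - x) ** 2))

def r_c(x, omegas):
    """Coarse model response vector [R_c(x; w_1) ... R_c(x; w_m)]."""
    return [coarse(x, w) for w in omegas]

def input_sm(x, omegas, c):
    """Input SM: R_s(x) = R_c(x + c)."""
    return r_c(x + c, omegas)

def multiplicative_output_sm(x, omegas, a):
    """Multiplicative output SM with diagonal A (assumption): R_s(x) = A . R_c(x)."""
    return [a_k * r for a_k, r in zip(a, r_c(x, omegas))]

def additive_output_sm(x, omegas, d):
    """Additive output SM: R_s(x) = R_c(x) + d."""
    return [r + d_k for r, d_k in zip(r_c(x, omegas), d)]

def frequency_scaled(x, omegas, f0, f1):
    """Frequency scaling with linear s(w) = f0 + f1 * w: R_c.f(x; F)."""
    return [coarse(x, f0 + f1 * w) for w in omegas]

omegas = [1.0, 2.0, 3.0]
base = r_c(2.0, omegas)

# With neutral parameters every transformation reduces to the coarse model itself.
print(input_sm(2.0, omegas, 0.0) == base)
print(frequency_scaled(2.0, omegas, 0.0, 1.0) == base)
```

Neutral parameter values (c=0, A=I, d=0, f 0=0, f 1=1) are the natural starting point for parameter extraction, since they leave the coarse model unchanged.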

Parameters of SM transformations are obtained using the parameter extraction (PE) process, which, in the case of input SM, takes the form

Formulation of PE for the other transformations is similar [28].
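To make the PE step concrete, the following Python sketch extracts the input SM shift for a toy problem. It assumes that PE minimizes the Euclidean norm of the difference between the fine model response and the input-mapped coarse model response at the current design; multi-point variants that sum over earlier designs are also used in the SM literature. The models, the grid search, and all names are hypothetical stand-ins.

```python
import math

def r_coarse(x, omegas):
    """Toy coarse model response over the frequency grid (hypothetical)."""
    return [-20.0 * math.exp(-((w - x) ** 2)) for w in omegas]

def r_fine(x, omegas):
    """Toy fine model: the coarse model with the design variable shifted by 0.3."""
    return r_coarse(x + 0.3, omegas)

def misalignment(c, x_i, omegas):
    """|| R_f(x_i) - R_c(x_i + c) || for a candidate shift c."""
    fine = r_fine(x_i, omegas)
    surrogate = r_coarse(x_i + c, omegas)
    return math.sqrt(sum((f - s) ** 2 for f, s in zip(fine, surrogate)))

def extract_input_shift(x_i, omegas, c_min=-1.0, c_max=1.0, steps=201):
    """Parameter extraction by exhaustive search over a grid of shifts."""
    candidates = [c_min + k * (c_max - c_min) / (steps - 1) for k in range(steps)]
    return min(candidates, key=lambda c: misalignment(c, x_i, omegas))

omegas = [2.0 + 0.1 * k for k in range(21)]
c_hat = extract_input_shift(3.0, omegas)
print(f"extracted shift: {c_hat:.2f}")
```

The grid search is affordable here only because the coarse model is cheap, which is exactly the point made in the next paragraph about why a fast R c matters.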

Because PE and surrogate model optimization may require a large number of coarse model evaluations, it is beneficial that R c is fast. This is usually not possible for antenna structures, where the only universally available (and yet accurate) type of coarse model is the output of coarse-discretization EM simulations. To alleviate this problem, we use a fixed number of such low-fidelity simulations as training data for the coarse model, so that, once set up, the coarse model can be used by the SM algorithm without further reference to the EM solver.

In order to improve the convergence properties of the algorithm, it is embedded in the trust region framework [29], so that the new design x (i+1) is found only in the vicinity of the current one, x (i), as follows:

where δ (i) is the trust region radius updated in each iteration according to the standard rules [29]. Within this framework, designs that do not improve the specification error are rejected, and the search is repeated with a reduced value of δ (i).
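The accept/reject logic can be illustrated with a small one-dimensional Python sketch. Everything in it is an assumption made for illustration: the two objective functions are toy stand-ins for the specification error of the fine and coarse models, the surrogate uses only an additive correction, and the radius update (double on good agreement, halve on rejection) is a simplified version of the standard rules of [29].

```python
import math

def u_fine(x):
    """Hypothetical high-fidelity objective (stands in for the fine model error)."""
    return (x - 2.0) ** 2 + 0.3 * math.sin(3.0 * x)

def u_coarse(x):
    """Hypothetical cheap objective (stands in for the coarse model error)."""
    return (x - 2.3) ** 2

def minimize_on_interval(func, lo, hi, steps=401):
    """Exhaustive search on a grid; adequate because func is cheap."""
    grid = [lo + k * (hi - lo) / (steps - 1) for k in range(steps)]
    return min(grid, key=func)

def trust_region_sm(x0, radius=1.0, min_radius=1e-3, max_iter=50):
    x, fx = x0, u_fine(x0)
    history = [(x, fx)]
    for _ in range(max_iter):
        if radius < min_radius:
            break
        offset = fx - u_coarse(x)            # surrogate matches fine objective at x
        surrogate = lambda z: u_coarse(z) + offset
        x_new = minimize_on_interval(surrogate, x - radius, x + radius)
        f_new = u_fine(x_new)                # one high-fidelity evaluation
        predicted = fx - surrogate(x_new)
        actual = fx - f_new
        if actual > 0:                       # improvement: accept the design
            if predicted > 0 and actual / predicted > 0.75:
                radius *= 2.0                # good agreement: enlarge the region
            x, fx = x_new, f_new
            history.append((x, fx))
        else:                                # no improvement: reject and shrink
            radius *= 0.5
    return x, fx, history

x_best, f_best, history = trust_region_sm(0.0)
print(f"accepted designs: {len(history)}, final objective: {f_best:.3f}")
```

By construction the accepted designs form a monotonically improving sequence, which is the convergence safeguard the trust region is meant to provide.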

The steps in the modeling procedure are as follows. First, we find an approximate optimum of the coarse model R cd (i.e., the low-fidelity full-wave EM simulations). Then we construct a BSVR surrogate R c using only relatively densely spaced training data in the vicinity of this optimum, which further enhances the computational efficiency. Once constructed, R c is used as the basis for the iterative optimization (13)–(15).
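The display equations referred to as (13)–(15) are not reproduced in the text of this section. Assuming the notation that is standard in the SM literature, with U denoting the objective function of problem (1) (an assumption here, since its definition lies outside this section), they would plausibly read as follows. The equation numbers are inferred from the order of presentation, and the single-point form of PE shown is only the simplest variant.

```latex
\begin{align}
\boldsymbol{x}^{(i+1)} &= \arg\min_{\boldsymbol{x}}
    U\bigl(\boldsymbol{R}_{s}^{(i)}(\boldsymbol{x})\bigr) \tag{13}\\
\boldsymbol{c}^{(i)} &= \arg\min_{\boldsymbol{c}}
    \bigl\| \boldsymbol{R}_{f}(\boldsymbol{x}^{(i)})
    - \boldsymbol{R}_{c}(\boldsymbol{x}^{(i)} + \boldsymbol{c}) \bigr\| \tag{14}\\
\boldsymbol{x}^{(i+1)} &= \arg\min_{\boldsymbol{x}:\ \|\boldsymbol{x}-\boldsymbol{x}^{(i)}\| \le \delta^{(i)}}
    U\bigl(\boldsymbol{R}_{s}^{(i)}(\boldsymbol{x})\bigr) \tag{15}
\end{align}
```

Under this reading, (15) differs from (13) only in the added constraint that keeps the new design within distance δ (i) of the current one.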

6.2 Antenna Optimization Examples

As examples, we consider again the CPW-fed slot dipole antenna of Sect. 4.1 (Antenna 1, Fig. 1) and the CPW-fed T-shaped slot antenna of Sect. 4.2 (Antenna 2, Fig. 2).

For Antenna 1, we have two design variables, x=[W L]T mm. Using CST Microwave Studio [15], we consider a high-fidelity model R f (∼130,000 mesh cells, simulation time 12 min), and a low-fidelity model R cd (∼5,000 mesh cells, simulation time 30 s). The initial design is x init=[7.5 39.0]T mm. The starting point of the SM optimization is the approximate optimum of the low-fidelity model, x (0)=[5.0 43.36]T mm, found using a pattern search algorithm [19]. The computational cost of this step was 26 evaluations of R cd. The BSVR coarse model has been created using 100 low-fidelity model samples allocated using LHS [23] in the vicinity of x (0) defined by deviation d=[1 3]T mm. The size of this vicinity must be sufficiently large to allow the coarse model to “absorb” the misalignment between the low- and high-fidelity models at x (0) through appropriate SM transformations.

The low-fidelity model at the initial design, as well as the low- and high-fidelity model responses at x (0), are shown in Fig. 12. In this case, the major discrepancy between the models is a frequency shift. Therefore, the primary SM transformation used for this example is frequency scaling applied to all designs, x (0),x (1),…,x (i), considered during the optimization run. The SM surrogate is then enhanced using a local additive output SM [27] so that the entire SM model has the form \(\boldsymbol{R}_{s}^{(i)}(\boldsymbol{x}) = \boldsymbol{R}_{c.f} (\boldsymbol{x};\boldsymbol{F}^{(i)}) + [\boldsymbol{R}_{f}(\boldsymbol{x}^{(i)}) - \boldsymbol{R}_{c}(\boldsymbol{x}^{(i)})]\).

The final design, x (4)=[5.00 41.56]T mm, is obtained in four SM iterations. The high-fidelity model response at x (4) is shown in Fig. 13. At this design, we have |S 11|≤−18.3 dB over the entire frequency band of interest. The design cost is summarized in Table 4 and corresponds to about ten evaluations of the high-fidelity model. Figure 14 shows the convergence plot and the evolution of the specification error versus iteration index.

For Antenna 2, the design variables are x=[a x a y a b]T mm. The design specifications are |S 11|≤−12 dB for 2.3 to 7.6 GHz. The high-fidelity model R f is evaluated with the CST MWS transient solver [15] (3,556,224 mesh cells, simulated in 60 min). The low-fidelity model R cd is also evaluated in CST MWS but with a coarser mesh: 110,208 mesh cells, simulation time 1.5 min. The initial design is x init=[40 30 10 20]T mm. The approximate low-fidelity model optimum, x (0)=[40.33 25.6 8.4 20.8]T mm, has been found using a pattern search algorithm, at the cost of 85 evaluations of R cd. The BSVR coarse model has been created using 100 low-fidelity model samples allocated using LHS in the vicinity of x (0) defined by deviation d=[2 2 1 1]T mm. The BSVR model R c was subsequently used as a coarse model for the SM algorithm. Figure 15 shows the low-fidelity model at the initial design, as well as the low- and high-fidelity model responses at x (0). Because the major discrepancy between the models is a vertical shift, the primary SM transformation used for this example is the multiplicative response correction applied to all designs, x (0),x (1),…,x (i), considered during the optimization run. The SM surrogate is then enhanced using an additive output SM with the SM model having the form \(\boldsymbol{R}_{s}^{(i)}(\boldsymbol{x}) = \boldsymbol{A}^{(i)}\cdot \boldsymbol{R}_{c} (\boldsymbol{x}) + [\boldsymbol{R} _{f}(\boldsymbol{x}^{(i)}) - \boldsymbol{R}_{c}(\boldsymbol{x}^{(i)})]\).

The final design, x (7)=[39.84 24.52 8.84 21.40]T mm, is obtained in seven SM iterations. The high-fidelity model response at x (7) is shown in Fig. 16. At that design, we have |S 11|≤−10.9 dB for 2.3 GHz to 7.6 GHz. The overall design cost is summarized in Table 5 and corresponds to about 15 evaluations of the high-fidelity model. The convergence of the algorithm (Fig. 17) is consistent with that for the previous example.
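The low-fidelity part of the design cost in both examples can be expressed in equivalent high-fidelity evaluations using the simulation times quoted above. The Python sketch below does only that conversion; the number of high-fidelity evaluations used by the SM iterations themselves is listed in Tables 4 and 5, which are not reproduced here, and must be added to obtain the totals. The function name is hypothetical.

```python
def equivalent_high_fidelity_cost(n_low, t_low_min, t_high_min):
    """Convert n_low low-fidelity runs into equivalent high-fidelity runs."""
    return n_low * t_low_min / t_high_min

# Antenna 1: 26 pattern-search runs + 100 BSVR training samples, 30 s vs 12 min.
antenna1 = equivalent_high_fidelity_cost(26 + 100, 0.5, 12.0)

# Antenna 2: 85 pattern-search runs + 100 BSVR training samples, 1.5 min vs 60 min.
antenna2 = equivalent_high_fidelity_cost(85 + 100, 1.5, 60.0)

print(f"Antenna 1 low-fidelity cost: {antenna1:.2f} x R_f")
print(f"Antenna 2 low-fidelity cost: {antenna2:.2f} x R_f")
```

In both cases all of the low-fidelity work (coarse optimization plus BSVR training data) costs roughly as much as five high-fidelity simulations.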

7 Conclusion

In this chapter, we presented a Bayesian support vector regression methodology for accurate modeling of microwave components and structures. We demonstrated that the number of fine-discretization training points can be reduced by performing BSVR modeling on coarse-discretization EM simulation data (selected by standard experimental design) and then obtaining high-fidelity simulations only for the points that contribute to the initial BSVR model in a nontrivial way. The computational savings thus obtained came at little cost in modeling accuracy. We also demonstrated that the reduced-training-set BSVR models perform as well as the full-training-set models in parametric optimization of antenna structures. A notable advantage of BSVR is that only a single parameter must be set by the user, namely β (hyperparameters are initialized randomly during training). This is in contrast to, for instance, neural network-based methodologies for regression, which might require the tuning of a variety of architectural/performance parameters (e.g., number of hidden units, learning rate, momentum). We also discussed the use of BSVR surrogates for variable-fidelity design optimization of antennas, where the main optimization engine is space mapping and the underlying coarse model is obtained by approximating low-fidelity EM simulation data. As a result, the optimization can be accomplished at a low computational cost corresponding to a few high-fidelity EM simulations of the structure under design.

References

Angiulli, G., Cacciola, M., Versaci, M.: Microwave devices and antennas modelling by support vector regression machines. IEEE Trans. Magn. 43, 1589–1592 (2007)

Jacobs, J.P.: Bayesian support vector regression with automatic relevance determination kernel for modeling of antenna input characteristics. IEEE Trans. Antennas Propag. 60, 2114–2118 (2012)

Chu, W., Keerthi, S.S., Ong, C.J.: Bayesian support vector regression using a unified loss function. IEEE Trans. Neural Netw. 15, 29–44 (2004)

Rasmussen, C.E., Williams, C.K.I.: Gaussian Processes for Machine Learning. MIT Press, Cambridge (2006)

Devabhaktuni, V.K., Yagoub, M.C.E., Zhang, Q.J.: A robust algorithm for automatic development of neural network models for microwave applications. IEEE Trans. Microw. Theory Tech. 49, 2282–2291 (2001)

Couckuyt, I., Declercq, F., Dhaene, T., Rogier, H., Knockaert, L.: Surrogate-based infill optimization applied to electromagnetic problems. Int. J. RF Microw. Comput.-Aided Eng. 20, 492–501 (2010)

Tokan, N.T., Gunes, F.: Knowledge-based support vector synthesis of the microstrip lines. Prog. Electromagn. Res. 92, 65–77 (2009)

Schölkopf, B., Smola, A.J.: Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond. MIT Press, Cambridge (2002)

Bandler, J.W., Georgieva, N., Ismail, M.A., Rayas-Sánchez, J.E., Zhang, Q.J.: A generalized space mapping tableau approach to device modeling. IEEE Trans. Microw. Theory Tech. 49, 67–79 (2001)

Rayas-Sánchez, E., Gutierrez-Ayala, V.: EM-based Monte Carlo analysis and yield prediction of microwave circuits using linear-input neural-output space mapping. IEEE Trans. Microw. Theory Tech. 54, 4528–4537 (2006)

Koziel, S., Bandler, J.W.: Recent advances in space-mapping-based modeling of microwave devices. Int. J. Numer. Model. 23, 425–446 (2010)

Zhang, L., Zhang, Q.J., Wood, J.: Statistical neuro-space mapping technique for large-signal modeling of nonlinear devices. IEEE Trans. Microw. Theory Tech. 56, 2453–2467 (2011)

Bandler, J.W., Cheng, Q.S., Koziel, S.: Simplified space mapping approach to enhancement of microwave device models. Int. J. RF Microw. Comput.-Aided Eng. 16, 518–535 (2006)

Koziel, S., Bandler, J.W., Madsen, K.: A space mapping framework for engineering optimization: theory and implementation. IEEE Trans. Microw. Theory Tech. 54, 3721–3730 (2006)

CST Microwave Studio, ver. 2011. CST AG, Bad Nauheimer Str. 19, D-64289 Darmstadt, Germany (2012)

Jiao, J.-J., Zhao, G., Zhang, F.-S., Yuan, H.-W., Jiao, Y.-C.: A broadband CPW-fed T-shape slot antenna. Prog. Electromagn. Res. 76, 237–242 (2007)

Wi, S.-H., Lee, Y.-S., Yook, J.-G.: Wideband microstrip patch antenna with U-shaped parasitic elements. IEEE Trans. Antennas Propag. 55, 1196–1199 (2007)

Alexandrov, N.M., Lewis, R.M.: An overview of first-order model management for engineering optimization. Optim. Eng. 2, 413–430 (2001)

Kolda, T.G., Lewis, R.M., Torczon, V.: Optimization by direct search: new perspectives on some classical and modern methods. SIAM Rev. 45, 385–482 (2003)

Koziel, S.: Multi-fidelity multi-grid design optimization of planar microwave structures with Sonnet. In: International Review of Progress in Applied Computational Electromagnetics, April 26–29, Tampere, Finland, pp. 719–724 (2010)

Manchec, A., Quendo, C., Favennec, J.-F., Rius, E., Person, C.: Synthesis of capacitive-coupled dual-behavior resonator (CCDBR) filters. IEEE Trans. Microw. Theory Tech. 54, 2346–2355 (2006)

FEKO® User’s Manual, Suite 6.0. EM Software & Systems-S.A. (Pty) Ltd, 32 Techno Lane, Technopark, Stellenbosch, 7600, South Africa (2010)

Beachkofski, B., Grandhi, R.: Improved distributed hypercube sampling. American Institute of Aeronautics and Astronautics. Paper AIAA 2002-1274 (2002)

Chen, C.Y., Hsu, C.Y.: A simple and effective method for microstrip dual-band filters design. IEEE Microw. Wirel. Compon. Lett. 16, 246–248 (2006)

Koziel, S., Echeverría-Ciaurri, D., Leifsson, L.: Surrogate-based methods. In: Koziel, S., Yang, X.S. (eds.) Computational Optimization, Methods and Algorithms. Studies in Computational Intelligence, pp. 33–60. Springer, Berlin (2011)

Koziel, S., Ogurtsov, S., Jacobs, J.P.: Low-cost design optimization of slot antennas using Bayesian support vector regression and space mapping. In: Loughborough Antennas and Propagation Conf. (2012). doi:10.1109/LAPC.2012.6402988

Koziel, S., Cheng, Q.S., Bandler, J.W.: Space mapping. IEEE Microw. Mag. 9, 105–122 (2008)

Bandler, J.W., Cheng, Q.S., Dakroury, S.A., Mohamed, A.S., Bakr, M.H., Madsen, K., Sondergaard, J.: Space mapping: the state of the art. IEEE Trans. Microw. Theory Tech. 52, 337–361 (2004)

Conn, A.R., Gould, N.I.M., Toint, P.L.: Trust Region Methods. MPS-SIAM Series on Optimization. Springer, Berlin (2000)

© 2013 Springer Science+Business Media New York

About this chapter

Cite this chapter

Jacobs, J.P., Koziel, S., Leifsson, L. (2013). Bayesian Support Vector Regression Modeling of Microwave Structures for Design Applications. In: Koziel, S., Leifsson, L. (eds) Surrogate-Based Modeling and Optimization. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-7551-4_6

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-7550-7

Online ISBN: 978-1-4614-7551-4

eBook Packages: Mathematics and Statistics (R0)