Abstract

In general, average cognitive function declines across much of the adult life span. This decline has come to be understood as normative, though the rates of decline and the ages at which they commence vary across different aspects of function. Decline takes place in the context of much larger variation among individuals of any given age, and the rates of decline show individual differences as well. Although characterization of these normative patterns is now quite good, understanding of what drives the changes is much more limited. In this chapter, we review studies that have used a variety of different behavior genetic analytical approaches to investigate some of the thorniest questions facing cognitive aging, but we also highlight areas ripe for future behavior genetic approaches. We review quantitative genetic studies that have taken both cross-sectional and longitudinal approaches, as well as studies that have examined the extent to which different aspects of cognitive function and variables with which it is associated show common genetic influences. We then turn to behavior genetic contributions to special topics in cognitive aging including intra-individual variability and terminal decline, the problems of sample selectivity, and gene-environment correlation. Following these topics involving aggregate genetic contributions to individual differences, we consider molecular genetic approaches to identifying individual genes involved in cognitive aging.

Keywords

- Telomere Length

- Genetic Influence

- Cognitive Aging

- General Cognitive Ability

- Shared Environmental Influence

Over the past 20 years or so, characterization of the nature of age differences and changes with age in cognitive function has improved dramatically. In general, average cognitive function declines across much of the adult life-span, and this decline has come to be understood as normative, though the rates of decline and the ages at which they commence vary across different domains of function. This decline takes place in the context of much larger variation among individuals of any given age and the rates of decline show individual differences as well. Although characterization of these normative patterns is now quite good, understanding of what drives the changes is much more limited. Some researchers posit that inherent neurobiological processes are of primary importance, while others focus on psychosocial factors. Behavior genetic approaches to investigating possible explanations offer unique opportunities to distinguish among these kinds of possibilities and to explore their interplay. In this chapter, we review studies that have used a variety of different behavior genetic analytical approaches to investigate some of the thorniest questions facing cognitive aging, but we also highlight areas ripe for future behavior genetic approaches.

We do not directly address dementia (see Chap. 7) or mild cognitive impairment (see Chap. 4). Dementia may, nonetheless, have affected many of the studies we discuss. It becomes very common at older ages, reaching rates of 25–30 % for Alzheimer’s disease alone in those over age 85 (e.g., Blennow et al. 2006). This implies that rates of all-cause dementia are considerably higher. Although most studies of cognitive aging screen for dementia, the methods used focus on thresholds of cognitive impairment and many are quite insensitive to declines in function in people who have had particularly high function in midlife. Moreover, decline develops over a period as long as a decade, so that many who do not yet qualify for diagnosis may still show incipient symptoms. Thus many aging samples likely contain participants in early and undiagnosed stages of dementia. Because dementia shows genetic influences, this could have its own biasing effects on estimates of genetic influences on normative cognitive aging.

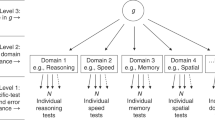

Cognitive function is not a unitary construct. Molecular genetic studies sometimes address general cognitive ability, especially general reasoning ability. This is warranted because much research shows that the common factor that may be derived from diverse cognitive tests declines with age. Sometimes studies also or instead address more specific cognitive domains, such as aspects of memory or processing speed for which decline with age is especially sharp. Some of these functions appear to be affected by age, even after accounting for age effects on general cognitive ability. Some studies examine even more specific cognitive tests and tasks and components. Because of differences in the normative patterns of aging across these different aspects of cognitive function, replication or generalization of specific estimates of magnitudes of influences or specific effects should not necessarily be expected.

In this chapter, we review quantitative genetic studies that have taken both cross-sectional and longitudinal approaches, as well as studies that have examined the extent to which different aspects of cognitive function and variables with which it is associated show common genetic influences. We then turn to behavior genetic contributions to special topics in cognitive aging including intra-individual variability and terminal decline, the problems of sample selectivity, and gene–environment correlation. Following these topics involving aggregate genetic contributions to individual differences, we consider molecular genetic approaches to identifying individual genes involved in cognitive aging.

1 Quantitative Genetic Approaches

Quantitative genetic approaches involve the analysis of twin and family data in order to identify, quantify, and characterize the factors that contribute to individual differences in a trait (i.e., phenotypic variability). At the initial stages of inquiry, the focus is often on quantifying the independent contributions of three major factors: additive genetic influences (commonly termed “A”), shared environmental influences that act to make people who live together similar (termed “C”), and nonshared environmental influences, including measurement error, that act to make people different (termed “E”). As research on a specific phenotype advances, there is increasing emphasis on the exploration of models of gene–environment interplay. Quantitative genetic research on late-life cognitive function is generally at the initial stage of decomposing phenotypic variance into underlying biometric components and has relied almost exclusively on the analysis of monozygotic (MZ) and dizygotic (DZ) twin similarity. These twin studies have sought to address three major questions. First, in cross-sectional research, what are the contributions of genetic and environmental factors to cognitive function at various ages? Second, in longitudinal research, what are the genetic and environmental contributions to both stability and change in cognitive function? Third, in multivariate research, what are the factors that underlie the genetic and environmental components of variance?
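To make the A, C, and E components concrete, the following minimal sketch applies Falconer's classical formulas, which approximate the three variance shares directly from MZ and DZ twin correlations. It is an illustration only: the studies reviewed here fit biometric models by maximum likelihood rather than using these shortcuts, the formulas assume purely additive genetic effects, equal environments for MZ and DZ pairs, and no assortative mating, and the function name and the correlations used are hypothetical.

```python
def falconer_ace(r_mz: float, r_dz: float) -> dict:
    """Approximate ACE variance shares from twin correlations (Falconer's formulas)."""
    a2 = 2.0 * (r_mz - r_dz)  # A: additive genetic share (heritability)
    c2 = 2.0 * r_dz - r_mz    # C: shared environmental share
    e2 = 1.0 - r_mz           # E: nonshared environment, including measurement error
    return {"A": a2, "C": c2, "E": e2}


# Hypothetical twin correlations, chosen only for illustration
estimates = falconer_ace(r_mz=0.75, r_dz=0.40)
for component, share in estimates.items():
    print(f"{component}: {share:.2f}")
```

By construction the three shares sum to one. A negative value for C would suggest that the simple additive model does not fit the correlations well, which is one reason formal model fitting is preferred in practice.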

1.1 Cross-Sectional Twin Research

1.1.1 General Cognitive Ability

One of the most robust findings in the behavioral genetics literature is that genetic factors contribute to individual differences for most behavioral traits (Turkheimer 2000). Late-life cognitive function does not provide an exception to this general rule. Table 5.1 summarizes major cross-sectional twin studies in this area (Lee et al. 2010). Several features of the information summarized in the table are worthy of comment. First, as compared to many areas within behavioral genetics, there are few twin samples and the sizes of these samples are small relative to twin samples for many other behavioral traits. Even the samples derived from the large Scandinavian registries are modest when compared to behavioral genetic research on other phenotypes. This is a reflection of the challenges (e.g., mortality, frailty, emigration) associated with ascertaining and assessing large representative twin samples in late life. Second, despite the modest number and sizes of the relevant twin studies, a consistent pattern of findings is evident. In late life, MZ twins are consistently more similar in general cognitive ability than DZ twins, resulting in heritability estimates that are moderate to large (i.e., 50–80 %), and comparable to those from younger adult samples. Moreover, the estimates of the proportion of variance attributable to shared environmental factors have been consistently low. Indeed, in only one study was it estimated to be anything other than zero.

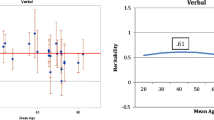

The failure to observe evidence for shared environmental influences on late-life cognitive function is perhaps to be expected. The magnitudes of shared environmental influences on a broad array of behavioral phenotypes drop off markedly during the transition from late adolescence to early adulthood (Bergen et al. 2007), the period when twins are likely to establish separate residences. Little, if any, shared environmental influence on the cognitive function of older twins, many of whom have not lived together for 50 years or more, may simply be consistent with this more general observation, though it has implications for theories positing that cognitive function is strongly shaped by early-life environmental circumstances. Of theoretical interest is also whether heritability estimates for late-life cognitive function differ from estimates at other adult ages. It is well known that the heritability of general cognitive ability increases from childhood through early adulthood (Haworth et al. 2010). It is less clear whether heritability changes from early through late adulthood. Finkel and Reynolds (2010) plotted estimates of the heritability of general cognitive ability from cross-sectional twin studies as a function of sample age. Because not every study reported heritability estimates based on the same biometric model (i.e., some reported heritability for the AE model and others used the ACE model), we instead plot, in Fig. 5.1, the MZ and DZ twin correlations for general cognitive ability reported in cross-sectional studies of adult twins as a function of age. The figure clearly shows that both the MZ and DZ correlations tend to decrease with age, with MZ correlations decreasing at a slightly more rapid rate than DZ correlations. This pattern is consistent with the conclusion drawn by Finkel and Reynolds (2010), that late-life reductions in the heritability of general cognitive ability reflect an increasing importance of nonshared environmental factors.

Despite this general consistency, there is considerable variation in the sizes of the twin correlations. Some of this can be attributed to differences in the measures of general cognitive ability used. Although different measures tend to be well correlated (Johnson et al. 2004), they can differ when some measures tap the general construct much more broadly and/or reliably than others (Johnson et al. 2008).

1.1.2 Specific Cognitive Abilities

It is beyond the scope of this chapter to survey systematically the vast research literature on the myriad specific cognitive abilities that have been investigated in studies of older twins. We instead focus our discussion on memory, an ability that is seen to be fundamentally linked with aging (Craik and McDowd 1987) and consequently has been the most extensively investigated specific cognitive ability in late life. Table 5.2 summarizes major cross-sectional twin studies of memory function. Again, there are considerable differences among the specific estimates of magnitudes of genetic influences, partly due to differences in the specific aspects of memory measured in the various studies. Nevertheless, the patterns evident with general cognitive ability in Table 5.1 can also be seen with memory. That is, it is moderately heritable, albeit somewhat less so than general cognitive ability. The lower heritability of memory measures likely reflects the abbreviated nature of many of the memory assessments, which generally have lower reliability than the broad measures of general cognitive ability. Also, consistent with general cognitive ability, there is no evidence for shared environmental influences on memory function in late life. The largest source of variance is the nonshared environment, which typically has accounted for 50–60 % of the variance in memory measures, but which includes variance due to measurement error.

1.2 Longitudinal Twin Research

Only a longitudinal study can assess change in cognitive function at the individual level, and thus allow for an investigation of the factors that contribute to individual cognitive aging. Undertaking a longitudinal study in a late-life sample is, however, challenging, much more so than cross-sectional research. To be informative, longitudinal studies should ideally assess large samples and include multiple follow-up assessments, requiring time spans of many years. The costs of longitudinal research, in both funding and researcher time, are considerable. These challenges are further compounded by sample loss to follow-up due to illness or mortality, which can greatly diminish their size, and in twin samples by the need to recruit most participants in pairs. Because of the logistical challenges associated with undertaking longitudinal twin research in late life, the number of relevant data sources for studies of genetic influences on general cognitive ability is limited. Those available, however, have been quite extensively utilized, and two have been maintained over many assessments.

Most longitudinal twin studies of aging have been analyzed by fitting latent growth curve models (Lee et al. 2010; Neale and McArdle 2000). Briefly, latent growth curve analysis involves using the individual sequences of observed phenotypes to estimate the components of longitudinal curves. In studies of cognitive aging, these curves might more accurately be called decay rather than growth curves, although we retain the standard nomenclature. At a minimum, two components are estimated. The first is the initial value or intercept, which in effect captures individual differences that are stable over the multiple assessments. Because the intercept is estimated as a latent variable and moreover is a function of the multiple phenotypic observations, the impact of measurement error is minimized. The second component is the slope, or rate of linear change across time. The slope is arguably the component of greatest interest, as it captures how individuals are changing on average across the observation period. In some cases, nonlinear models of change are investigated by estimating a quadratic component, which reflects acceleration/deceleration in the rate of change. Alternatively, a nonlinear model might involve estimating a change point, after which the rate of linear change is different from the original. Reliable estimates of these additional components, however, require relatively large numbers of longitudinal observations (Bryk and Raudenbush 2002). There have thus been only a few attempts in the behavioral genetics literature to characterize individual differences in nonlinear components of change.
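The components just described can be written compactly. The notation below is assumed here for illustration and is not taken from the cited studies: $y_{it}$ is the observed score of individual $i$ at time $t$, $I_i$, $S_i$, and $Q_i$ are that individual's latent intercept, linear slope, and quadratic component, and $\varepsilon_{it}$ is occasion-specific residual. In a twin design, the variance of each latent component is then decomposed into additive genetic, shared environmental, and nonshared environmental parts, shown here for the slope.

```latex
\begin{align}
  y_{it} &= I_i + S_i\,t + Q_i\,t^{2} + \varepsilon_{it} \\
  \operatorname{Var}(S) &= \sigma^{2}_{A_S} + \sigma^{2}_{C_S} + \sigma^{2}_{E_S},
  \qquad
  h^{2}_{S} = \frac{\sigma^{2}_{A_S}}{\operatorname{Var}(S)}
\end{align}
```

Dropping the $Q_i\,t^{2}$ term gives the minimal two-component linear model. Under this sketch, a heritability reported for "the slope" or "the quadratic component" corresponds to the ratio $h^{2}$ computed for that latent component rather than for the observed scores at any single occasion.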

The most extensive longitudinal twin study of cognitive aging is the Swedish Adoption/Twin Study of Aging (SATSA). SATSA began in 1984 with a sample of 303 reared-together and reared-apart twins aged 50 years and older. It includes up to six waves of assessments spanning nearly 20 years (Finkel and Pedersen 2004). Plomin et al. (1994) provided the first longitudinal analysis of SATSA cognitive data. However, their analysis was restricted to just the first two waves of cognitive assessment, which were separated by only 3 years. As a consequence, rather than focus on cognitive change, which was minimal over this time span, they focused on cognitive stability, which was substantial as reflected by a longitudinal correlation of 0.92. They estimated the heritability of general cognitive ability to be 80 % and concluded that 90 % of the stability of cognitive function across the two time points could be ascribed to genetic influences.

Since this initial publication, the SATSA sample has been assessed cognitively an additional two times, bringing the maximal retest interval to 13 years. Reynolds et al. (2005) provided the most comprehensive and up-to-date longitudinal analysis of the SATSA cognitive data. We focus here on findings for their measure of general cognitive ability, the first principal component of a battery of ten tests of specific cognitive ability. In their growth curve analysis of up to four assessments on a sample of 362 pairs of twins, they concluded that the intercept was highly heritable (91 %), but that the rate of linear change was not (heritability estimate 1 %). However, they did report a significant heritable effect on the quadratic component (43 %). The finding of genetic influences on the quadratic but not the linear component is somewhat counterintuitive, especially because it was observed in the context of overall decreasing twin similarity with age. A possible face-value interpretation is that genetic influences are important to the large variance in stable individual differences, but the variance in cognitive change (primarily decline) that takes place in “early old age” is much smaller by comparison so that there is little power to identify its sources. There is greater variance, of which some is genetic, in the rate and timing of acceleration in decline in “late old age” that may be associated with overtly declining health.

The second major longitudinal twin study of cognitive aging is the Longitudinal Study of Aging Danish Twins (LSADT). LSADT utilizes a cohort-sequential design. It was begun in 1995 and includes up to six waves of in-person assessment spanning 10 years (Christensen et al. 1999). A total of 1,112 same-sex twin pairs of known zygosity aged 70 years and older have participated in LSADT. McGue and Christensen (2007) provide the most comprehensive and up-to-date longitudinal analysis of the LSADT cognitive data. We focus here on their analysis of LSADT’s measure of general cognitive ability, which is a composite of five brief cognitive measures: fluency, forward and backward digit span, and immediate and delayed word recall. This measure is somewhat more limited than SATSA’s, as it emphasizes several aspects of memory. The heritability of the intercept (39 %) was significant but more moderate than that reported in SATSA. There was also a significant shared environmental effect on the intercept (30 %), unlike in SATSA. However, in agreement with SATSA, they reported nonsignificant estimates for both the genetic (18 %) and shared environmental (2 %) contributions to the slope. They did not fit a quadratic component, which might explain the larger, though nonsignificant, estimate of genetic influence on the slope.

Longitudinal twin studies of cognitive aging are in general agreement. Although the heritabilities of cognitive abilities broadly construed at any point in adulthood are significant and at least moderate (with estimates generally at least 50 %), change in cognitive performance appears to be predominantly due to nonshared environmental factors. Despite the consistency of findings, several factors caution against drawing strong conclusions about the absence of genetic influences on cognitive change. First, retest intervals have generally fallen in the 4–10-year range, which may be too limited a time period to allow for reliable assessment of individual change. Second, late-life cognitive assessment can be confounded by the effects of impending death (Bosworth and Schaie 1999), which could attenuate twin similarity for cognitive ability when twins are not concordant for time at death (Johansson et al. 2004). Third, practice effects, which have been shown to exist and to vary in older samples even when retest intervals are long (Rabbitt et al. 2004; Singer et al. 2003), probably need more consideration than they have received to date. Finally, and perhaps most significantly, change has typically been assessed linearly. Yet the two studies that investigated nonlinear models of change (McArdle and Plassman 2009; Reynolds et al. 2005) did find evidence for genetic influences on these higher-order moments of cognitive change.

1.3 Multivariate Twin Research

Multivariate methods have been used to explore the nature of genetic effects on late-life cognitive function. A reasonable guiding hypothesis is that genetic factors influence cognitive function because they influence the brain structures and processes upon which higher-level cognitive function depends (Fjell and Walhovd 2010). For example, the speed with which individuals process information is thought to reflect the integrity of underlying neural systems that support higher-level cognitive function (Kennedy and Raz 2009). Processing speed is typically measured using psychometric tests such as digit symbol coding, experimental tests such as reaction time, or psychophysical tests such as inspection time (Deary 2000). Processing speed shows marked decreases with age. It is also moderately to highly heritable. In an early report from SATSA, the estimated heritabilities for measures of speed ranged from 51 to 64 % (Pedersen et al. 1992). Similarly, in a recent publication based on the Older Australian Twin Study, the estimated heritability for five speed measures ranged from 0.35 to 0.62 (Lee et al. 2012). Of interest, the lowest heritability estimate in this study was for Choice Reaction Time, which another twin study had also reported to have low heritability (Finkel and McGue 2007).

Some consider processing speed to be simply another domain of cognitive function, both with respect to the hierarchical structure of cognitive abilities and within cognitive aging. From this perspective, processing speed has its own variance like other cognitive ability tests, as well as variance shared with all other cognitive ability tests, as captured by its loading on the general cognitive factor (Carroll 1993; Salthouse 2004). Consistent with this, one bivariate behavior genetic study found common genetic variance between processing speed and general intelligence, but neither appeared to be causal to the other (Luciano et al. 2005). Others hypothesize that the observed declines in speed underlie declines in other cognitive functions, especially so-called fluid abilities (Finkel et al. 2007; Salthouse 1996). Indeed, a meta-analysis concluded that speed can account statistically for a large share of the variance in a broad array of cognitive measures (Verhaeghen and Salthouse 1997), and the few studies that have appeared have been consistent in indicating common genetic variance between measures of speed and other aspects of cognitive function (Finkel et al. 2005; Lee et al. 2012). More directly corroborating the hypothesis that declines in speed underlie cognitive aging, a SATSA study found that the genetic contribution to processing speed appeared to drive age-related changes in memory and spatial but not verbal ability (Finkel et al. 2009). Processing speed may, of course, not be the only leading indicator of more general cognitive aging, though it is the only one to have been examined to date in behavior genetic studies.

2 Special Topics in Cognitive Aging

2.1 Intraindividual Variability in Cognitive Abilities

Many cognitive tasks are designed to include a series of items that tap the same basic skills, knowledge, or perceptual or manipulative capacities at different levels of difficulty. Items on these tasks are usually scored as correct or incorrect, and a single score consisting of the correct number is generated. With scores of this type, the ideal is that, if a person were to carry out the same set of items again, s/he would generate the same score. Of course, this ideal is never attained. Differences in scores with repeated assessments over even short time periods always occur. Some of these are practice effects; some might reflect state differences such as recent caffeine consumption or relative fatigue; and some are outright error of measurement. Despite this, differences in scores over extended time spans are considered to reflect change in true score (plus the other sources of change as relevant). For tasks such as this, it is most common to examine means for a study group overall, and variance in scores reflects interindividual differences. Tasks that are intended to assess fundamental cognitive processes, however, are generally designed differently. The idea in designing such tasks is that any complex cognitive task must require execution of several simpler cognitive processes. Identification and measurement of such processes would facilitate understanding of individual differences in performance on the more complex cognitive tasks to which they contribute.

The kinds of very simple tasks used to reflect fundamental cognitive processes take very little time to carry out and almost everyone can do them. For example, one common task measuring reaction time requires the participant to push a button when s/he sees a symbol flash onto a screen. That’s all. The measure taken is the time from presentation of the symbol flash on the screen to the participant’s button press. As this task (like most tasks of fundamental processes) is so simple, it is possible to get participants to do it many times without fatigue. Doing so reveals variance in response times both across and within individual participants. Reliability of estimates of variance across individuals can be increased dramatically by using the average within-person variance for participants across many task trials. This is typically done, and such averages generally show substantial correlations with age as well as with performance on more complex cognitive tasks.

However, the intraindividual variation also tends to show stability both over time (Hultsch et al. 2000; Rabbitt et al. 2001; Ram et al. 2005) and across tasks of fundamental cognitive processes and more complex cognitive tasks (Fuentes et al. 2001; Hultsch et al. 2000; Li et al. 2001). Moreover, people who tend to perform poorly on cognitive tests of all kinds tend to show greater variability on tests of fundamental cognitive processes (Li et al. 2001; Li et al. 2001). This is true both when cognitive ability has been low throughout life, and when pre-existing cognitive ability has been impaired by dementia (e.g., Hultsch et al. 2000), schizophrenia (e.g., Winterer and Weinberger 2004), brain injury (e.g., Stuss et al. 1994), or even just normal aging (e.g., Deary and Der 2005; West et al. 2002). Moreover, increases in variability have been linked to decreases in performance in the same individuals over time (MacDonald et al. 2003). Greater performance variability on fundamental cognitive tasks also appears to be associated with concurrent lower performance on more complex cognitive tasks, independent of the association between mean level performance on fundamental cognitive tasks and performance on more complex cognitive tasks (e.g., Li et al. 2004). In addition, increases in variability on fundamental cognitive tasks over time have been linked to decreases over the same period in performance on more complex cognitive tasks more strongly than vice versa (e.g., Bielak et al. 2010; Lovden et al. 2007).

Taken together, these observations suggest that variation around the average within-person performance level is systemic and thus potentially meaningful, that greater variability in performance on fundamental cognitive tasks may be related to impairments in central nervous system function that also impair performance on more complex cognitive tasks, and that this performance serves as a sort of leading indicator, or canary-in-the-coal-mine warning, of coming general cognitive decline.

If so, variability in performance on these tasks should show genetic influences, as do mean levels of performance, as well as most other psychological measures that show stability over periods of days or weeks. To our knowledge, this has been investigated twice, once in a small younger sample, and once in a larger older sample. Vernon (1989) administered eight reaction time tasks to a sample of 102 twin pairs ranging in age from 15 to 37 years. The tasks generated 11 measures of intraindividual variability, with heritability estimates ranging from 41 to 98 %. Finkel and McGue (2007) carried out a much more extensive examination. They used a sample of 738 participants including 316 twin pairs, ranging in age from 27 to 95 years, with median 62 years. The participants completed simple and four-choice reaction time tasks, though the number of trials administered for each task (15) was much smaller than is customary for such tasks, limiting the reliability of the means and standard deviations. In both tasks, Finkel and McGue (2007) estimated genetic influences on mean and intraindividual standard deviation separately for decision and movement times, under the presumption that decision time directly reflects central nervous system function, and movement time motor processes that are more peripheral to cognitive function.
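The distinction between a participant's mean level and intraindividual variability can be illustrated with a minimal sketch. The function name and the reaction times below are hypothetical and are not data from either study; the sketch simply computes, for one participant, the mean and the sample standard deviation across repeated trials of a single task.

```python
from statistics import mean, stdev


def summarize_reaction_times(trials_ms):
    """Return one participant's mean and intraindividual SD across trials (in ms)."""
    return {"mean": mean(trials_ms), "isd": stdev(trials_ms)}


# Hypothetical reaction times (ms) for two participants on the same task
steady = summarize_reaction_times([300, 310, 295, 305, 290])
variable = summarize_reaction_times([220, 380, 260, 350, 290])

print(steady)
print(variable)
```

The two hypothetical participants have the same mean reaction time but very different trial-to-trial variability, which is why the two quantities can be analyzed as separate phenotypes. With few trials per task, both summaries, and especially the standard deviation, are estimated imprecisely.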

Univariate estimates of genetic and environmental influences indicated that genetic influences accounted for 20–35 % of the variance in movement and decision time means and movement time standard deviation, but effectively none of the variance in decision time standard deviation. For the latter, shared environmental influences accounted for 13 % of the variance; these influences accounted for 0–7 % of the variance in the other measures. Age accounted for 3–12 % of the variance in all the measures. Multivariate analyses found genetic and age influences common to the four measures, even decision time standard deviation. Some nonshared environmental influences were common to all but decision time standard deviation, but all measures except movement time standard deviation also showed nonshared environmental influences unique to each measure. There was little consistency in the results of these two studies, and both suffered from substantial limitations that likely contributed to this lack of consistency. For the Vernon (1989) study, small sample size was a primary limitation, and its age range rendered it irrelevant to cognition in old age. Its tasks and the kind of analysis carried out also differed. The analysis in the Finkel and McGue (2007) study was considerably more sophisticated, but the wide range and strongly negative skew of the sample’s age distribution likely introduced sources of variance that undermine the relevance of its results to cognition in old age. Moreover, the number of trials, which would now be considered rather small, undoubtedly contributed to error variance. Clearly, given the gathering evidence that intraindividual variability in fundamental cognitive task performance is an early indicator of cognitive decline, additional behavior genetic studies in this area are warranted.

2.2 Terminal Decline

The concept of terminal decline has attracted considerable research attention in the area of cognitive aging research. It emerged from clinical observations, and generates interest because it offers hope of practical applications to cope with the social burden presented by cognitive declines in aging populations. It is burdened by measurement problems that make even confirming its existence difficult, and has not been well studied from a behavior genetic perspective. Still, its importance as a current topic of investigation in the field of cognitive aging implies that anyone interested in the behavior genetics of cognition should be familiar with it and how behavior genetics might contribute to our understanding of it.

The concept of terminal decline, or drop, grew out of observations that cross-sectional analyses of age differences in cognitive function suggested much sharper declines in function with age than did longitudinal studies and that study participation appeared to be biased towards higher-performing individuals in better physical health. The idea of terminal decline is that, beginning some period before death, cognitive functions begin to decline very sharply. This idea has great appeal because, if the period and rate of terminal decline could be identified, aging individuals and clinicians could have forewarning of impending death. Early researchers on the topic in the 1960s and early 1970s (Jarvik and Falek 1963; Kleemeier 1962; Lieberman 1966; Riegel and Riegel 1972) speculated that all observed differences in average cognitive function with age might be attributed to sharp declines in the performance of those who did not survive the next few years after test administration, with survivors remaining stable until they too reached their last few years of life. That is, in cross-sectional samples, decreasing average cognitive function with age may result from increasing proportions of these samples being in this period of terminal decline at older ages.

The subsequent 50 years have seen development of longitudinal databases and new statistical techniques that make it possible to track individual changes over time much more closely than was possible then. These developments have generally indicated that the idea of complete stability until some sharp decline shortly before death is too optimistic, but they generally support the idea that cognitive decline is steeper in later old age than in earlier old age. This makes for a rather blurry image of terminal decline. Unfortunately, this is at least partly because these research developments have also soundly confirmed the omnipresence of two measurement problems: a tendency for both longitudinal and cross-sectional samples of older adults to be increasingly biased with increasing participant age, to varying degrees in different samples, towards healthier and better-performing individuals (e.g., Rabbitt et al. 2008), and the need for measurement to continue until most of the sample population has died. Because of the difficulties these create in assessing and summarizing patterns of intraindividual change accurately, questions of rates of normative change, the length of some period of terminal decline and even its existence remain hotly debated (e.g., Batterham et al. 2011; Gerstorf et al. 2011; MacDonald et al. 2011; Piccinin et al. 2011; Rabbitt et al. 2011). The debate is fueled by focus on change-point analytical methods that are based on an assumption that cognitive decline can be best modeled as linear, with one constant slope pertaining prior to the beginning of the terminal period, and another pertaining afterwards (e.g., Sliwinski et al. 2006; Wilson et al. 2003), rather than, for example, gradually accelerating with age such as might be described by a quadratic function. That is, the methods most commonly used to measure the length of the terminal period and the rates of change before and after it rely on the assumption that the phenomenon of terminal drop is real.
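The contrast between a change-point model and a gradually accelerating model can be made concrete with a small sketch. The Python functions below are illustrative only: the function and parameter names (`slope_pre`, `slope_post`, `change_point`, `accel`) and all numerical values are assumptions chosen for the example, not estimates from any study cited here.

```python
import numpy as np

def change_point_score(years, intercept, slope_pre, slope_post, change_point):
    """Piecewise-linear trajectory: slope_pre before change_point, slope_post after."""
    years = np.asarray(years, dtype=float)
    # Years elapsed since the change point (zero until it is reached).
    years_past = np.maximum(0.0, years - change_point)
    return intercept + slope_pre * years + (slope_post - slope_pre) * years_past

def quadratic_score(years, intercept, linear, accel):
    """Gradually accelerating trajectory with no fixed change point."""
    years = np.asarray(years, dtype=float)
    return intercept + linear * years + accel * years ** 2

# Hypothetical values, chosen only so that the two curves are easy to compare.
years = np.arange(0, 16)
cp_curve = change_point_score(years, 100.0, -0.5, -2.0, change_point=10)
quad_curve = quadratic_score(years, 100.0, -0.2, -0.03)
```

Over a limited follow-up window the two curves can lie close together, which is one way to see why data of modest length and precision may struggle to distinguish a discrete terminal period from smooth acceleration.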

To our knowledge, only one study has attempted to investigate how genetic influences may be involved in declines in cognitive function when linked directly to time to death. Johansson et al. (2004) first used latent growth models to observe that time to death predicted rate of change in several different aspects of cognitive function in a sample of twins over age 80 at study inception, considering the twins as individuals. They went on to examine the patterns of intraclass twin correlations for initial levels and rates of change in the different aspects of cognitive function. For levels, these showed the typical pattern of large correlations in MZ twin pairs and smaller but still substantial correlations in DZ twins, indicating substantial genetic influences. For rate of change, the correlations were generally small in absolute magnitude and many were negative for MZ twins. For DZ twins, many were negative, one even strongly so, and those that were positive were generally small. There was no meaningful evidence of genetic influence on rates of change. Johansson et al. (2004) also examined the individual assessment correlations separately in MZ and DZ twin pairs both of whom participated in all four of the assessments for which they had data, comparing them to those for twin pairs who were intact at only three or two assessments or the first assessment. Results were very mixed, but there was a small tendency for the correlations to be lower at the last assessment for which the pairs were intact, suggesting that they were becoming less similar in the period before at least one of them died. This would be consistent with the existence of some form of terminal decline to which genetic influences did not contribute, especially since there was no evidence of differences in this (very tentative) pattern between MZ and DZ twins.
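The logic of comparing MZ and DZ correlations can be sketched with Falconer's classical approximations. This is a back-of-envelope illustration and is not claimed to be the procedure Johansson et al. (2004) used; the correlations passed in are hypothetical.

```python
def falconer_estimates(r_mz, r_dz):
    """Classical approximations from MZ and DZ twin correlations."""
    h2 = 2.0 * (r_mz - r_dz)   # additive genetic variance
    c2 = 2.0 * r_dz - r_mz     # shared environmental variance
    e2 = 1.0 - r_mz            # nonshared environment plus measurement error
    return h2, c2, e2

# Hypothetical correlations for a cognitive level score, not values from the study.
h2, c2, e2 = falconer_estimates(r_mz=0.6, r_dz=0.4)
```

When the MZ correlation is near zero or negative, as described above for rates of change, these approximations yield genetic estimates near zero or outside the admissible range, which is in keeping with the conclusion that there was no meaningful evidence of genetic influence on change.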

Clearly, more research is needed on the topic of terminal decline, or perhaps more generally on the topic of the shapes of the typical trajectories of decline in different cognitive functions in old age. Most research on this topic is driven by empirical observations rather than theory, and it may be helpful to develop clearer theoretical rationales for one form of decline or another so that models of alternative hypothesized processes can be pitted against each other (Platt 1964). For example, it is reasonable to postulate that some aspect of cognitive decline accelerates once at a point some time before death that is the same for all or most individuals, as assumed in implementing change-point models. But it is just as reasonable to posit that this decline accelerates at some point, say at age 70, that is similar for all, regardless of when they will die, and also reasonable to postulate that decline is continuous in old age, but accelerates. In this latter case, there is no fixed “change point” but rather decline that is much faster for people close to death than for people further from death, whatever their specific ages. This is more consistent with studies that have implemented models with linear and quadratic terms. Most difficult to evaluate of all, it is also just as reasonable to postulate that decline accelerates just once sometime before death, but that the timing of this acceleration varies from individual to individual, depending on cause of death (Rabbitt et al. 2011) and perhaps on other factors. It is interesting that, as discussed earlier, several SATSA studies to date that have modeled decline using quadratic functions have suggested that genetic influences are more apparent on the term representing quadratic than on the term representing linear change. Is this real? Does this generalize or is it unique to SATSA? Is it specific to certain aspects of cognitive function and not to others? The pattern should be observed in additional samples before we draw any conclusions. Complicating things still further, Pedersen et al. (2003) demonstrated that failure to model terminal decline can inflate the apparent heritability of linear change in SATSA, though they did not consider nonlinear (quadratic) change. But if the observation that accelerating (quadratic) change is more heritable than linear change replicates, does it imply that accelerating decline is a better model than terminal decline? Does it imply that genetic variation contributes primarily to factors related to mortality and not to cognitive aging prior to inception of mortality-related deterioration? At this point, there is simply not enough evidence to form even a tentative conclusion.

2.3 Selection Effects and Gene–Environment Correlation

Most samples in studies of cognitive aging, whether cross-sectional or longitudinal, tend to show higher average cognitive function and socioeconomic status compared to the comparably aged population at large. There are two reasons for this. First, study samples in general tend to show somewhat higher average cognitive function and socioeconomic status than the otherwise-comparable population because these factors contribute to understanding the importance and relevance of research (e.g., Nishiwaki et al. 2005); that is, they tend to be somewhat select. Second, the resulting sample range restriction or selectivity is exaggerated in aging samples because cognitive functions and mortality tend to be negatively related (24 % reduction in hazard rate for each standard deviation increase in intelligence; Calvin et al. 2011), and older participants who are close to death and thus in poor physical health and potentially suffering terminal decline are less likely to participate in research studies regardless of their original levels of cognitive function and socioeconomic status. This has the effect that, within cross-sectional study samples and initial samples in longitudinal studies that have large age ranges, older participants tend to have had higher midlife socioeconomic status and general cognitive function, often represented by tests of crystallized knowledge such as word-reading accuracy, than younger participants, thus leading to underestimates of the extent of cognitive decline with age (Rabbitt et al. 2008).
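To give a sense of the scale of the association between intelligence and mortality, the per-standard-deviation figure can be compounded across larger differences. The sketch below assumes that the 24 % reduction applies multiplicatively for each additional standard deviation, as it would under a log-linear proportional hazards model; that assumption, and the function name, are ours and are not taken from Calvin et al. (2011).

```python
def hazard_ratio(sd_difference, reduction_per_sd=0.24):
    """Hazard ratio for a given difference in intelligence, in SD units.

    Assumes the per-SD reduction compounds multiplicatively
    (log-linear proportional hazards).
    """
    return (1.0 - reduction_per_sd) ** sd_difference

one_sd = hazard_ratio(1.0)   # 0.76
two_sd = hazard_ratio(2.0)   # 0.76 squared, roughly 0.58
```

Under this assumption, a person scoring two standard deviations above another would have a hazard about 42 % lower at any given time, which illustrates how survival alone can make the oldest participants in a sample progressively more select.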

Longitudinal studies with narrow age ranges can help to mitigate these problems. But, since the primary reason for attrition from such studies is often death or disability, the samples still become increasingly selected for high midlife cognitive function (Lachman et al. 1982) no matter how measured. Thus, even narrow age-range longitudinal studies can underestimate the extent of normative cognitive decline. To the extent that genetic influences on cognitive ability vary with levels of socioeconomic status, estimates of genetic influences on all aspects of the processes involved may be affected. Offsetting this, however, is the possibility that some study participants were in early stages of undiagnosed dementia.

Sample selectivity brings with it other challenges in understanding cognitive aging, some of which twin samples intended for behavior genetic analyses are especially well positioned to address. There is high interest in the “use it or lose it” hypothesis, or the idea that maintenance of intellectual, or even physical, activity in old age may slow the rate of cognitive decline, and substantial evidence at least for an association between greater activity and slower decline (Schooler and Mulatu 2001). Establishing that such activity is actually causal in reducing the rate of cognitive decline is not, however, straightforward. There are two basic reasons for this. First, the causal influences may flow in the opposite direction. That is, people who are suffering cognitive decline may withdraw from activities because they have become too difficult. Longitudinal samples, whether of twins or not, are the best means to address this possibility, though in practice it is difficult to sequence exposure and outcome measures in order to resolve it conclusively (e.g., Hoffman et al. 2011). Second, other variables may create the association through confounding. Confounding takes place when some third variable(s): (1) actually causes the outcome, (2) is correlated with the exposure, and (3) is not affected by the exposure (McNamee 2003). Although almost any kind of variable could act as a confounder, one of the most likely possibilities, given the pervasive presence of genetic influences on behavioral traits, is that the genetic and environmental influences that contribute to motivation towards and enjoyment of engagement in activities may also contribute to preservation of good cognitive function, thus creating what behavior geneticists consider gene–environment correlation. That is, individuals may actively select, consciously or unconsciously, environments that reinforce the genetically influenced characteristics that originally led them to seek those environments (see Chap. 6 for additional discussion). Two sets of behavior genetic models can be of particular help in addressing this possibility.

The first is the co-twin control model. Because MZ twins share a common genotype and, generally, early rearing environment, one twin within a pair can provide control for genetic and familial environmental background for the other. Thus, if, in twin pairs where one is exposed to some environment and the other is not, the exposed twins have an outcome that the nonexposed twins do not, this provides unusually strong evidence that the environmental exposure is actually causative. Control is weaker when discordant DZ pairs are compared because they are less genetically similar, but DZ pairs can still provide important information, and many studies have included them because discordant MZ pairs are rare for many kinds of environments, rendering sample sizes small. There are always some qualifications to this, of course. Twins may not be completely representative of the more general population, cause may actually flow in the opposite direction unless some longitudinal control is in place, or some unmeasured third variable may confound the association through nonshared environmental influences (McGue et al. 2010). There may be inaccuracies even when results appear to refute the causal inference, due to lack of reliability of the measure of difference between co-twins in the outcome. Despite all this, the model provides one of the best tests of confounding by gene–environment correlation.
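The reasoning behind the co-twin control design can be illustrated with a small simulation. The sketch below is a deliberately simplified toy model, not an analysis from any cited study: the function names, the single shared "familial" factor standing in for genotype and rearing environment, and all parameter values are assumptions made for the example.

```python
import numpy as np

def simulate_mz_pairs(n_pairs, causal_effect, seed=0):
    """Simulate exposure and outcome for MZ pairs sharing a familial factor."""
    rng = np.random.default_rng(seed)
    familial = rng.normal(size=(n_pairs, 1))          # shared genes and rearing
    exposure = familial + rng.normal(size=(n_pairs, 2))
    outcome = familial + causal_effect * exposure + rng.normal(size=(n_pairs, 2))
    return exposure, outcome

def slope(x, y):
    """Ordinary least-squares slope of y on x."""
    x, y = np.ravel(x), np.ravel(y)
    return np.cov(x, y)[0, 1] / np.var(x, ddof=1)

# No true causal effect: the association arises only from the shared factor.
exposure, outcome = simulate_mz_pairs(n_pairs=5000, causal_effect=0.0)
individual_slope = slope(exposure, outcome)               # twins as individuals
within_pair_slope = slope(exposure[:, 0] - exposure[:, 1],
                          outcome[:, 0] - outcome[:, 1])  # co-twin control
```

In this toy setting the individual-level slope is clearly positive even though the exposure has no effect, whereas the within-pair slope is close to zero because differencing removes everything the twins share. Setting `causal_effect` above zero makes the within-pair slope recover that value, which is the pattern the design is intended to detect.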

The co-twin control model has been applied in several studies involving cognitive function in old age. For example, Potter et al. (2006) investigated the association between occupational complexity and cognitive function in a large sample of US male veterans who were on average in their mid-60s at time of baseline assessment. Although the intellectual complexity of the jobs these men held before retirement was significantly associated with their cognitive function, this association did not hold up within MZ twin pairs who were discordant for job complexity. This suggests that rather than reflecting solely an environmental influence, the association of job complexity with cognitive function likely arises at least partly because intellectually demanding jobs are filled by individuals who are cognitively able (Finkel et al. 2009).

McGue and Christensen (2007) had somewhat better luck in demonstrating potentially causal effects. They examined differences in cognitive, primarily memory function in aging Danish MZ twins discordant for level of social activity. They observed that, within MZ pairs, the twin with the greater amount of social activity also showed better cognitive function at any assessed point in time, but there were no differences between the twins in rate of decline in function over time. The effect of social activity in discordant MZ twins was smaller, however, than the effect in the overall sample, indicating that gene–environment correlation was also important in understanding the association. This kind of result, where evidence for both directly causal effects and gene–environment correlation is present, is probably most typical of adequately powered studies investigating many different phenotypes. In the cognitive aging literature, different studies have not yet measured either phenotypes or environments in similar enough ways to draw overall conclusions.

The second behavior genetic model that is useful in evaluating the possibility that gene–environment correlation confounds apparent risk–outcome associations is Purcell’s (2002) model of gene–environment interaction in the presence of gene–environment correlation. Although this model has received criticism (Rathouz et al. 2008), it is useful in many situations. Its primary advantage is that it makes it possible to get some sense of the processes underlying and linking gene–environment interaction and correlation (Johnson 2007, 2011). This is because it reveals both when genetically and environmentally influenced variance differs with level of environmental exposure, and when and to what degree the genetic and environmental influences on environmental exposures and outcome phenotypes are linked. For example, Johnson et al. (2009) used this model to explore the associations among educational attainment and primarily memory-related cognitive and physical function in Danish twins aged 70 and over. General biological aging, chronic illnesses that affect both physical and cognitive function such as diabetes, and high lifetime-stable cognitive ability that facilitates lifestyle choices and health habits have been offered as (not mutually exclusive) possible explanations for the widely observed link between physical and cognitive function in old age, with education generally assumed to be a protective factor.

Study results were complex, but likely indicative of the sorts of intertwined processes we should expect to be involved in cognitive aging. Physical function did not moderate genetic or environmental influences on cognitive function, though both their genetic and environmental influences were substantively linked. This suggested that physical deterioration did not precede or cause deterioration in cognitive function, but that, instead, they declined together for some of the same reasons. Cognitive function, however, did moderate genetic and both shared and nonshared environmental influences on physical function, with greater variance from all sources associated with lower cognitive function. This, in conjunction with the basic association between cognitive and physical function, suggested that lifetime-stable cognitive ability supported the development of lifestyle factors that maintained both physical and cognitive function, especially because the pattern of genetic and nonshared environmental correlations suggested that the lifestyle factors acted to minimize expression of genetic vulnerabilities. There was no evidence that educational attainment provided resources to minimize or prevent the sorts of chronic illnesses that affect physical function because it did not moderate physical function. It did, however, moderate variance in cognitive function, suggesting that education acted in ways similar to lifetime-stable cognitive function in facilitating lifestyles that helped to maintain health. Results of this kind are at best suggestive of processes, however, and this area of research badly needs additional methods that can more rigorously distinguish among the kinds of possibilities this study addressed.

3 Molecular Genetic Approaches

The principal molecular genetic approaches to studying cognitive abilities in old age are candidate gene and genome-wide association studies (GWAS). In candidate gene association studies, associations between particular genes and traits are investigated, while in GWAS hundreds of thousands or even a million genetic markers throughout the genome are scanned for association. To date, there have been few GWAS studies of cognitive aging, and those that have been carried out require replication and have offered very little with respect to mechanistic pathways that might be associated with differential cognitive aging. There have, however, been several genetic studies of cognitive aging that have gone far beyond candidate gene and GWAS studies.

3.1 Candidate Gene Studies

In candidate gene studies, researchers consider whether variants in specific individual genes might be associated with people’s differences in cognitive aging. To carry out such studies, some choice must be made of which genes might hold variants that could be associated with differences in cognitive aging from the 20,000+ protein-coding genes in the human genome. Typically, to date, single nucleotide polymorphisms (SNPs) have been chosen, because these can be tested easily and the minor allele will be possessed by reasonable numbers of subjects in most samples. Harris and Deary (2011), Deary et al. (2009), and Payton (2009) have recently reviewed these studies. As with most other phenotypes, they have provided few replicable results, with the exception of small effects from the gene for apolipoprotein E (APOE). Payton found total agreement for none of the 50 genes that had been studied with respect to normal cognitive aging in the 14-year period between 1995 and 2009, concluding that the field is “largely bereft of consensus and adequate research design…. Sadly however, if the question were to be asked ‘after 14 years of cognitive research what genes can we conclusively say are responsible for the variation in general cognition or its decline with age in healthy individuals?’ the answer would have to be ‘none’” (p. 465). Problems he identified in many studies included poor and varying assessments of the cognitive phenotype, especially in those studies using the Mini-Mental State Exam, with its commonly observed ceiling effects; the possibility of sex-specific effects; small sample sizes; population stratification; and failure to adjust for vascular risk factors that are known to be associated with dementia and cognitive decline. He also addressed the failure to consider either gene–environment or gene–gene interaction, citing the example of how variation in the FADS2 gene, in interaction with breastfeeding, appeared to affect children’s intelligence (Caspi et al. 2007) and the example of how the brain-derived neurotrophic factor (BDNF) and REST gene variants interacted in their association with general intelligence in a group of older people without dementia (Miyajima et al. 2008).

Obvious candidate genes for normative cognitive aging are those that have been associated with Alzheimer’s disease because of its long period of development. There are three genes that show mutations that are strongly associated with early onset of this disease: amyloid precursor protein (APP), and presenilin 1 and 2 (PS1, PS2; see Hamilton et al. 2011), but these account for only a very small percentage of Alzheimer’s cases. The much more common form of Alzheimer’s that may confound studies of normative cognitive aging has an older age of onset. The best known and replicated genetic risk for this form of the disease is possession of the epsilon 4 allele of the gene for APOE (Corder et al. 2003). Close to this gene on human chromosome 19 is the gene for translocase of the outer mitochondrial membrane 40 homolog (TOMM40), and variation in this gene, too, is associated with late-onset Alzheimer’s-type dementia (Roses et al. 2010). Large-scale GWAS studies of Alzheimer’s disease have also found replicated associations between the disease and genetic variation in the following genes: BIN1, CLU, CR1, PICALM, and the genetic region BLOC1S3/EXOC3L2/MARK4 (Hamilton et al. 2011; Seshadri et al. 2010).

These genes were examined for associations with verbal declarative memory, abstract reasoning, and executive function in the Lothian Birth Cohorts of 1921 (mean age 79) and 1936 (mean age 70; Hamilton et al. 2011). The tests, involving 158 SNPs, were done without adjusting for childhood IQ score to examine cognition in old age, and with this adjustment to examine cognitive aging, and with and without adjustment for APOE e4 status. After adjusting for multiple testing, no single SNP was associated with any cognitive ability. However, one haplotype from TRAPPC6A was associated with abstract reasoning in those lacking an APOE e4 allele. Also suggested, but with less strong evidence, was an interaction between APP and BIN1 in affecting verbal declarative memory in older people who carried the APOE e4 allele.

Given the robust association of the APOE e4 allele with Alzheimer’s disease, some have suggested that this allele may be associated with better cognition at younger ages. This would be an example of antagonistic pleiotropy (Williams 1957), or effects of one gene on more than one trait, at least one of which is advantageous and one disadvantageous. A meta-analysis of 20 studies that compared general cognitive function in APOE e4 carriers and noncarriers in children, adolescents and young adults, however, found no significant differences (Ihle et al. 2012). This null finding casts doubt on the antagonistic pleiotropy hypothesis, at least with respect to general cognitive function (Tuminello and Han 2011), though samples in many of the studies meta-analyzed were small, limiting ability to detect small effects. On the other hand, it is now clear that possession of the e4 allele of APOE is associated not just with Alzheimer’s disease but with lower cognitive function in old age more generally. Wisdom et al. (2011) carried out a meta-analysis including 40,942 nondemented adults in 77 studies. They found that e4 carriers scored more poorly on tests of episodic memory (often tests of verbal declarative memory; d = −0.14, p < 0.01), executive function (d = −0.06, p < 0.05), perceptual speed (d = −0.07, p < 0.05), and general cognitive ability (d = −0.05, p < 0.05). The detriment in episodic memory and general cognitive ability associated with the e4 allele increased with age, consistent with observations of increasing genetic variance in memory with age (Reynolds et al. 2005). There were no consistently significant differences in attention, primary memory, verbal ability, or visuospatial skill, though patterns were similar and there were fewer studies testing these domains. For example, the effect size (d) for primary memory was −0.11, but was not significant owing to smaller sample size. It should be noted that there was variability in the tests used to assess the same-named domain, and that many of the studies testing what was termed general cognitive ability used the Mini-Mental State Exam (MMSE), with its marked ceiling effect. In the Lothian Birth Cohort 1921, APOE e4 carriers scored significantly lower than noncarriers on a well-validated test of general intelligence at age 79 years, despite the two groups’ showing no significant difference at age 11 (Deary et al. 2002). When cognitive aging was studied in the same individuals from age 79 to ages 83 and 87, e4 carriers showed more deterioration in verbal declarative memory and abstract reasoning, but there was no significant difference in executive function (Schiepers et al. 2012). Those individuals with a longer allelic variant of TOMM40, which is linked with APOE, showed similar results. These small effects could have resulted from presence in the sample of preclinical or undiagnosed cases of Alzheimer’s disease.
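For readers less familiar with the effect size d reported above, the sketch below shows one common way to compute a standardized mean difference, using the pooled standard deviation. The scores are made up for illustration, and the exact estimator used in the meta-analysis may differ (for example, by applying a small-sample correction).

```python
import numpy as np

def cohens_d(group_a, group_b):
    """Standardized mean difference (a minus b) using the pooled SD."""
    a = np.asarray(group_a, dtype=float)
    b = np.asarray(group_b, dtype=float)
    pooled_var = ((a.size - 1) * a.var(ddof=1) + (b.size - 1) * b.var(ddof=1)) / (a.size + b.size - 2)
    return (a.mean() - b.mean()) / np.sqrt(pooled_var)

# Hypothetical test scores, not data from any cited study.
carriers = [1, 2, 3, 4, 5]
noncarriers = [2, 3, 4, 5, 6]
d = cohens_d(carriers, noncarriers)   # negative: carriers score lower
```

A value such as d = −0.14 therefore means that the carrier mean lies about one seventh of a standard deviation below the noncarrier mean, a difference that is small relative to the spread of scores within either group.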

Beyond genes that have been associated with Alzheimer’s disease, three genes stand out as having been extensively studied in relation to cognition, including in older people. Interest in these genes derives substantially from the view that the cognitive decrements seen in psychiatric disorders, especially schizophrenia, are integral to those disorders, together with the suspicion that genetic susceptibility to a disorder may affect general cognition even if an individual escapes the disorder itself (e.g., Autry and Monteggia 2012). One is the gene for BDNF, which has a common functional polymorphism (Val66Met). This variant has been linked to memory function in humans and other species. A review of this genetic variant’s association with cognitive abilities, including memory phenotypes, found that results to date were inconsistent but that, “the general consensus from the numerous studies has been that in healthy white populations, when challenged with various cognitive or motor learning behavioral tasks, humans with one or more copies of the BDNF Met allele have altered performance suggestive of a decrease in plasticity [ability to retain new information]” (Dincheva et al. 2012, p. 36). Another is the gene for catechol-O-methyl transferase (COMT), which has a functional polymorphism (Val158Met). The enzyme degrades dopamine and other catecholamine neurotransmitters, especially in the frontal cortex; the Met allele is associated with lower enzyme activity and thus with higher prefrontal dopamine levels. This gene has been extensively studied in people with schizophrenia as well as in healthy subjects, and has been associated with cognitive functions involving prefrontal cortex: executive function, working memory, fluid-type intelligence, and attention (Dickinson and Elvevåg 2009). A meta-analysis concluded that there was evidence that people who were homozygous for the Met allele might score higher on general IQ-type tests (Barnett et al. 2008). Finally, variants in the gene for dystrobrevin-binding protein 1 (DTNBP1) were originally but inconsistently associated with schizophrenia. A meta-analysis of nine SNPs in this gene, across 10 cohorts (total N = 7,592), found that, overall, minor allele carriers had lower general cognitive ability or IQ-type scores (Zhang et al. 2010). The subjects’ ages ranged from young to older adults, so this does not refer specifically to cognitive aging. It also requires replication.

Many other individual genes have been discussed by Payton (2009), Reinvang et al. (2010), and Harris and Deary (2011), though none has produced consistent findings. Beyond individual genes, which, given their action, have emerged as candidate genes for cognitive aging, there are studies of groups of many genes which are associated with given functions. For example, there have been studies of cognitive aging with respect to genes that are associated with oxidative stress (Harris et al. 2007) and longevity (Lopez et al. 2011). From the former, the prion protein gene (PRNP) emerged as being possibly associated with cognitive aging. From the latter, the gene synaptojanin-2 (SYNJ2) emerged as being possibly linked with cognitive abilities. Telomere length is related to oxidative stress. Telomeres are nucleoprotein complexes at the ends of chromosomes, and they tend to be shorter in the presence of oxidative stress. Telomere length is thought to act as a biomarker of successful aging. However, a large study on age-homogeneous individuals found no association between telomere length at age 70 and either cognitive change since childhood or other cognitive and physical phenotypes (Harris et al. 2010). Others have found, in a sample of younger adult women, telomere length to be associated with level of cognitive ability (Valdes et al. 2010).

3.2 Genome-Wide Association Studies

The currently available main methodological alternative to testing specific genetic variants for association with cognitive functions in old age is to record each participant’s alleles at a large number of SNPs placed throughout the genome and to test for associations between any of these markers and cognitive abilities. This GWAS approach typically uses hundreds of thousands of SNPs. Moreover, based on assumed-known haplotype patterns, studies often use the measured alleles at these markers to impute the alleles at additional genetic loci, often increasing the number of associations considered to be well over a million. Therefore, type 1 statistical errors present a large problem, though potential inaccuracies in the imputation process should not be disregarded. Because of the large probability of type 1 errors, and because it has become clear that, for almost all complex quantitative traits, individual SNP effects are very small, these studies demand large sample sizes. The p value taken to be genome-wide significant in such studies is on the order of 10⁻⁸ (conventionally p < 5 × 10⁻⁸), and replication is expected to be sought in independent cohorts prior to initial publication.
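The stringency of genome-wide significance thresholds follows from simple arithmetic on the number of tests. The sketch below uses a Bonferroni correction with one million tests as a round, assumed figure; actual studies differ in the number of effectively independent tests, and the function name is ours.

```python
def bonferroni_threshold(alpha, n_tests):
    """Per-test p-value threshold that keeps the family-wise error rate at alpha."""
    return alpha / n_tests

# Assumed round number of tests, for illustration only.
n_tests = 1_000_000
threshold = bonferroni_threshold(alpha=0.05, n_tests=n_tests)   # about 5e-8

# Expected number of false positives at an uncorrected 0.05 threshold
# if no SNP had any true effect.
expected_false_positives = 0.05 * n_tests
```

With a million tests, an uncorrected threshold of 0.05 would be expected to yield tens of thousands of spurious associations, which is why thresholds near 5 × 10⁻⁸ and independent replication have become standard expectations.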

To date, there is only one published GWAS study of general cognitive abilities in old age (Davies et al. 2011). This measured approximately 500,000 SNPs in over 3,500 older people (from late-middle age to 79 years old). They came from five cohorts in Scotland and England, the so-called CAGES consortium: Cognitive Aging Genetics in England and Scotland. The cognitive phenotypes tested were fluid- and crystallized-type intelligence. For crystallized intelligence, the National Adult Reading test was used in the Scottish cohorts and the Mill Hill Vocabulary test in the English cohorts. Fluid intelligence was based on a principal components analysis of diverse tests in the Scottish cohorts, and on a combination of Alice Heim 4 and Cattell Culture Fair tests in the English cohorts. This points out an additional difficulty with GWAS studies: in forming consortia of studies to increase sample size, it is common to combine cognitive test scores reflecting constructs that are only superficially similar, thus blurring the measurement of the intended phenotype and offsetting the increased power to detect effects provided by the combined samples.

In the CAGES consortium study, there was no genome-wide significant SNP for fluid or crystallized intelligence. Considering all SNPs located within single genes rather than individual SNPs, one gene was significantly associated with fluid intelligence: formin-binding protein 1-like (FNBP1L). This did not replicate in a Norwegian sample ranging in age from 18 to 78 years. The next analysis in this study used the so-called Genome-wide Complex Trait Analysis (GCTA) method (Visscher et al. 2010; Yang et al. 2010). This uses all ~500,000 measured SNPs simultaneously in a model that creates an association matrix and allows estimation of the correlation between the phenotype and the extent of genetic similarity in the sample, which consists of conventionally unrelated individuals. Thus, for the first time, estimates based on DNA testing were provided for the narrow-sense (purely additive) heritability of fluid (0.51, s.e. = 0.11, p = 1.2 × 10⁻⁷) and crystallized (0.40, s.e. = 0.11, p = 5.7 × 10⁻⁵) intelligence in older age. Further analysis using this method found that there was a tendency for longer chromosomes to explain more cognitive ability variance. Finally, the study attempted to predict intelligence in each cohort by using the genetic information from all autosomal SNPs in the others. The correlations had means of 0.110 and 0.081 for fluid and crystallized intelligence, respectively, and were 0.076 and 0.092, respectively, in a separate Norwegian sample that had not been used in the GWAS. Therefore, this study suggests that a substantial proportion of the variance in cognitive ability in older ages is accounted for by genetic variants in linkage disequilibrium with common SNPs.
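The logic of relating phenotypic similarity to genome-wide genetic similarity among unrelated people can be illustrated with a toy simulation. The sketch below is not the GCTA software or its estimation procedure: GCTA fits a mixed model by restricted maximum likelihood, whereas this example substitutes the simpler Haseman-Elston regression of phenotype cross-products on pairwise relatedness. All data are simulated, and the sample size, number of SNPs, allele frequencies, simulated heritability, and function names are assumptions chosen for illustration.

```python
import random
from statistics import mean, pstdev


def standardize_snps(genotypes):
    """Scale each SNP column (allele counts 0/1/2) to mean 0 and SD 1.

    Monomorphic SNPs carry no information about relatedness and are dropped.
    Returns one row of standardized values per person.
    """
    n_people = len(genotypes)
    columns = []
    for j in range(len(genotypes[0])):
        col = [genotypes[i][j] for i in range(n_people)]
        mu, sd = mean(col), pstdev(col)
        if sd > 0:
            columns.append([(x - mu) / sd for x in col])
    return [[col[i] for col in columns] for i in range(n_people)]


def relationship_matrix(z):
    """Genetic relationship matrix: mean product of standardized genotypes."""
    n_snps = len(z[0])
    return [[sum(a * b for a, b in zip(row_i, row_k)) / n_snps for row_k in z]
            for row_i in z]


def haseman_elston_h2(grm, phenotype):
    """Slope (through the origin) of standardized phenotype cross-products
    on pairwise relatedness, taken over all distinct pairs of people."""
    mu, sd = mean(phenotype), pstdev(phenotype)
    y = [(v - mu) / sd for v in phenotype]
    num = den = 0.0
    for i in range(len(y)):
        for k in range(i + 1, len(y)):
            num += grm[i][k] * y[i] * y[k]
            den += grm[i][k] ** 2
    return num / den


# Simulated data; every value here is an assumption for illustration.
rng = random.Random(1)
n_people, n_snps, true_h2 = 100, 200, 0.5
freqs = [rng.uniform(0.1, 0.5) for _ in range(n_snps)]
genotypes = [[(rng.random() < f) + (rng.random() < f) for f in freqs]
             for _ in range(n_people)]

z = standardize_snps(genotypes)
effects = [rng.gauss(0, (true_h2 / len(z[0])) ** 0.5) for _ in z[0]]
phenotype = [sum(b * x for b, x in zip(effects, row))
             + rng.gauss(0, (1 - true_h2) ** 0.5)
             for row in z]

grm = relationship_matrix(z)
h2_hat = haseman_elston_h2(grm, phenotype)
print(f"Estimated SNP heritability: {h2_hat:.2f} (simulated value {true_h2})")
```

Because pairwise relatedness among unrelated people varies only slightly around zero, estimates from a sample this small are very imprecise, which is one way to see why analyses of this kind need thousands of participants.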

The GCTA method applied to the GWAS data in the study by Davies et al. (2011) was extended to study cognitive aging and lifetime cognitive stability in the three Scottish cohorts (total N = 1,940) of the CAGES consortium (Deary et al. 2012), in a demonstration of the method’s potential as well as its extensive data requirements and limitations. Participants in all three cohorts had taken the same general cognitive ability test, the Moray House Test No. 12, at age 11. They also took various cognitive tests in old age: at age 65 for the Aberdeen Birth Cohort 1936, age 70 for the Lothian Birth Cohort 1936, and age 79 for the Lothian Birth Cohort 1921. The GCTA method was used to estimate the genetic contribution to general fluid intelligence in old age after adjusting for childhood intelligence, thus providing an estimate of the proportion of genetic influence on lifetime cognitive change. This was 0.24, though with a large standard error of 0.20, meaning that it was far from significant. In this study, the Lothian cohorts had also taken the same Moray House Test in childhood and old age. Using this test score in both childhood and old age, the ~500,000 SNPs accounted for 7.4% (s.e. = 0.24) of variation in the residual change score. Clearly, these estimates carry little meaning as they were not significant and their confidence intervals contained both 0 and 1. The analysis method requires much larger sample sizes and likely more direct measures of change from peak cognition in adulthood.
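Two quantities in this paragraph lend themselves to a short worked example: the residual change score and the ratio of an estimate to its standard error. The sketch below is a minimal illustration under stated assumptions. The test scores are invented, the function names are hypothetical, and the estimate-to-standard-error ratio relies on a normal approximation that may differ from the test used in the original analysis.

```python
from statistics import mean


def residual_change(baseline, follow_up):
    """Residuals from a simple linear regression of follow-up scores on
    baseline scores: the part of later performance that earlier
    performance does not predict."""
    mx, my = mean(baseline), mean(follow_up)
    sxx = sum((x - mx) ** 2 for x in baseline)
    sxy = sum((x - mx) * (y - my) for x, y in zip(baseline, follow_up))
    slope = sxy / sxx
    intercept = my - slope * mx
    return [y - (intercept + slope * x) for x, y in zip(baseline, follow_up)]


def wald_ratio(estimate, standard_error):
    """Estimate divided by its standard error (normal approximation)."""
    return estimate / standard_error


# Invented scores for six hypothetical people, for illustration only.
age11 = [38, 45, 52, 47, 60, 41]
old_age = [35, 47, 50, 40, 62, 44]

change = residual_change(age11, old_age)
print("Residual change scores:", [round(r, 2) for r in change])

# Estimate and standard error as reported in the text above.
print("Estimate / s.e.:", round(wald_ratio(0.24, 0.20), 2))
```

By construction the residuals average zero and are uncorrelated with the childhood score, so they index relative change rather than absolute decline. The ratio of 0.24 to 0.20 is 1.2, well short of the value of about 1.96 needed for two-sided significance at the 0.05 level under a normal approximation.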

The first GWAS study of cognitive aging, based on repeated measures of 17 tests on almost 750 subjects in the Religious Orders Study who were at least 75 years old at enrollment, found that APOE was significantly associated with cognitive change in old age (De Jager et al. 2012). Replication was conducted in three cohorts providing over 2,000 additional subjects. Also replicated was an SNP that affected the expression of the genes PDE7A and MTFR1, which are, respectively, involved in inflammation and oxidative stress.

Because processing speed is considered by some to be fundamental to cognitive function in general and cognitive aging in particular, it has been the subject of its own GWAS (Luciano et al. 2011). The cohorts involved in the GWAS of processing speed were mostly in older age, including the Lothian Birth Cohorts of 1921 (age 82) and 1936 (age 70), and the Helsinki Birth Cohort Study (age 64). The Brisbane study was younger, at 16 years. The total N for the study was almost 4,000 subjects. The four cohorts included were remarkable for having experimental (reaction time) and psychophysical (inspection time) measures of processing speed, and not just psychometric tests. Processing speed in each case was assessed using a factor analysis-derived general factor of processing speed from multiple tests. There were no genome-wide significant associations. There were some suggestively significant results (p < 10⁻⁵) and some plausible candidate genes (e.g., TRIB3). Biological pathways analysis, which examines whether SNPs that have suggestive significance in a GWAS analysis are over-represented in biological pathways of interest, suggested association with the gene processes of cell junction, focal adhesion, receptor binding, and cellular metabolic processes. Several of the GWAS-identified genes apparently involved in these processes were also considered relevant to Alzheimer disease mechanisms.
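One simple form of over-representation analysis asks whether more of the suggestively associated genes fall in a given pathway than chance alone would produce. The sketch below uses a gene-level, one-sided hypergeometric test as an illustration. It is not the pathway method used in the study described above; the gene counts are invented and the function name is hypothetical.

```python
from math import comb


def overrepresentation_p(n_genes, n_in_pathway, n_selected, n_overlap):
    """Upper-tail hypergeometric probability of seeing at least n_overlap
    pathway genes among n_selected genes drawn at random without
    replacement from n_genes, of which n_in_pathway belong to the pathway."""
    total = comb(n_genes, n_selected)
    upper = min(n_in_pathway, n_selected)
    favourable = sum(
        comb(n_in_pathway, k) * comb(n_genes - n_in_pathway, n_selected - k)
        for k in range(n_overlap, upper + 1)
    )
    return favourable / total


# Invented counts for illustration: 20,000 genes tested, 150 annotated to
# a pathway, 200 genes carrying suggestive signals, 6 of them in the pathway.
n_genes, n_in_pathway, n_selected, n_overlap = 20_000, 150, 200, 6

expected_overlap = n_selected * n_in_pathway / n_genes
p_value = overrepresentation_p(n_genes, n_in_pathway, n_selected, n_overlap)
print(f"Expected overlap by chance: {expected_overlap:.1f}")
print(f"P(overlap >= {n_overlap}): {p_value:.4g}")
```

A test of this kind treats genes as independent draws, which ignores gene size and linkage disequilibrium between neighbouring genes. It is therefore best read as a screening heuristic and not as confirmation that a pathway is involved.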

Integrity of the myelin-sheathed brain white matter might provide one mechanism through which processing speed could be involved in cognitive aging, as these myelin sheaths allow faster neural transmission. Diffusion-tensor magnetic resonance brain imaging of a relatively large subsample of the Lothian Birth Cohort 1936 (N = 535) revealed a general factor of brain white matter integrity across many major tracts (Penke et al. 2010). Furthermore, this general factor was significantly associated with processing speed: older people with brain white matter of higher integrity had faster processing speed, as assessed using a general factor based on reaction and inspection time measures. This was the basis for a GWAS of brain white matter integrity, the phenotype being the same general factor of white matter integrity (Lopez et al. 2012). There were no genome-wide significant associations. There was suggestive significance for ADAMTS18, which has roles in tumor suppression and hemostasis, and LOC388630, whose function was unknown. Biological pathways analysis found over-representation of genes related to cell adhesion and neural transmission pathways.

3.3 The Future and Other Molecular Genetic Approaches

The general absence of replicable associations, and the very small effect sizes of most of those that were replicable, in the first reports of GWAS studies of complex quantitative traits led to realism about the likely effect sizes of individual genetic variants. There was concern about the “missing heritability” for such traits, because the heritability accounted for by common SNPs was so far below that which had been estimated by behavior genetic studies using twin and adoption designs. The development and application of the GCTA method, in which heritability is estimated by fitting all SNPs (typically hundreds of thousands) simultaneously, has revised this. It seems that there is less missing heritability, but the new problem is that, for many quantitative traits, there will be very large numbers of very small genetic contributions. This makes mechanistic studies in their traditional form very difficult, and calls into question the very premise of underlying, clearly identifiable causal mechanisms, as usually implicitly defined. The GWAS studies of cognitive abilities that will appear in the near future will be larger, with samples in the tens of thousands, to try to find some individual, replicable contributions. The CHARGE consortium will soon report large GWAS studies of memory, processing speed, executive function, and general cognitive ability, mostly in older people. The COGENT consortium will also report a GWAS on general cognitive ability of mostly older people. The CAGES consortium will report a GWAS on age-related cognitive change.

These are just larger GWAS studies, of the same design as Davies et al. (2011). Future studies are also likely to be designed to consider gene-by-environment and gene-by-gene interactions at the GWAS level. There will be studies of additional types of genetic variation, beyond SNPs, such as copy number variations. One such small study has already appeared (Yeo et al. 2011). It found that people who had more rare genetic deletions had lower intelligence. There will be studies that use SNP arrays with increasingly large numbers of SNPs, including greater numbers of SNPs imputed from information based on whole-genome sequencing. There will soon be studies based on whole-genome sequencing and sequencing of only protein-coding regions throughout the genome, which will look for genetic associations with rare and even private mutations (i.e., those unique to particular families).

There will be studies relating intelligence and cognitive aging to individual differences in DNA methylation and other forms of gene expression. DNA methylation changes with age as well as with environmental experiences (including in important brain areas: Hernandez et al. 2011). To the extent that the age changes are regular, individual differences in DNA methylation can provide, in part, records of environmental effects on gene expression, which in turn can affect phenotypes and their successful aging, including cognition (Feil and Fraga 2012). DNA methylation and gene expression can both be examined at the genome-wide level, on arrays with hundreds of thousands of markers. Both will take the study of intelligence and cognitive aging and genetics to more mechanistic levels. However, both come with a problem that does not affect SNP testing: tissue specificity. DNA methylation and other forms of control of gene expression vary across tissues, and even within the brain there is region-by-region variation in gene expression. It remains to be discovered how much overall individual differences in expression are common across tissues, how much these differences relate to cognitive abilities and cognitive aging, and thus how much it will be necessary to study brain tissues to understand associations between gene expression and cognition. Gene expression studies certainly promise more by way of understanding mechanisms because they capture actual gene function, not just presence of polymorphisms (Geschwind and Konopka 2009). Another emerging avenue of investigation is the output of genetic expression in the form of protein concentrations. A small pilot study of the urinary proteome and general intelligence has already appeared. It indicated some proteins that might have roles in cognition (Lopez et al. 2011). Noncoding RNAs and their regulatory networks (Qureshi and Mehler 2011) are also emerging as potentially relevant to cognitive aging, and to aging-related phenotypes and processes such as the brain’s plasticity and its response to stress.

4 Conclusions

Genetic studies of normal cognitive aging have made clear that genetic influences continue to be involved in late-life cognitive function. It is far less clear, however, to what degree they are involved in the declines in function with age that have come to be considered normative. And, despite huge technological advances in probing the human genome, we remain far from understanding how they are involved or which particular genes contribute, with the striking exception of APOE. The open questions from the genetic perspective, however, closely parallel those in all approaches to the study of cognitive aging. As populations throughout the world continue to “gray,” growing older due to declining birth rates as well as increased longevity, meeting the challenge of understanding cognitive aging is of tremendous social importance. In many ways, genetic studies are well-positioned to offer important insights into the processes involved. They face the same measurement and sample selection difficulties as the rest of the field, but generally to no worse degree. And they afford unique opportunities to disentangle some of the causal knots faced by other approaches. We are excited by these challenges and opportunities and look forward to future progress in the field.

References

Autry, A. E., & Monteggia, L. M. (2012). Brain-derived neurotrophic factor and neuropsychiatric disorders. Pharmacological Reviews, 64, 238–258.

Barnett, J. H., Scoriels, L., & Munafo, M. R. (2008). Meta-analysis of the cognitive effects of the catechol-O-methyltransferase gene Val158/108Met polymorphism. Biological Psychiatry, 64, 137–144.

Batterham, P. J., Mackinnon, A. J., & Christensen, H. (2011). The effect of education on the onset and rate of terminal decline. Psychology and Aging, 26, 339–350.

Bergen, S. E., Gardner, C. O., & Kendler, K. S. (2007). Age-related changes in heritability of behavioral phenotypes over adolescence and young adulthood: A meta-analysis. Twin Research and Human Genetics, 10(3), 423–433.

Bielak, A. A., Hultsch, D. F., Strauss, E., MacDonald, S. W., & Hunter, M. A. (2010). Intraindividual variability in reaction time predicts cognitive outcomes 5 years later. Neuropsychology, 24, 731–741.

Blennow, K., de Leon, M. J., & Zetterberg, H. (2006). Alzheimer’s disease. Lancet, 368, 387–403.

Bosworth, H. B., & Schaie, K. W. (1999). Survival effects in cognitive function, cognitive style, and sociodemographic variables in the Seattle Longitudinal Study. Experimental Aging Research, 25(2), 121–139.

Bryk, A. S., & Raudenbush, S. W. (2002). Hierarchical linear models: Applications and data analysis methods (2nd ed.). Thousand Oaks: Sage.

Calvin, C. M., Deary, I. J., Fenton, C., Roberts, B. A., Der, G., Leckenby, N., & Batty, G. D. (2011). Intelligence in youth and all-cause mortality: Systematic review with meta-analysis. International Journal of Epidemiology, 40, 626–644.

Carmelli, D., DeCarli, C., Swan, G. E., Jack, L. M., Reed, T., Wolf, P. A., & Miller, B. L. (1998). Evidence for genetic variance in white matter hyperintensity volume in normal elderly male twins. Stroke, 29(6), 1177–1181.

Carroll, J. B. (1993). Human cognitive abilities: A survey of factor analytic studies. Cambridge: Cambridge University Press.

Caspi, A., Williams, B., Kim-Cohen, J., Craig, I. W., Milne, B. J., Poulton, R., et al. (2007). Moderation of breastfeeding effects on the IQ by genetic variation in fatty acid metabolism. Proceedings of the National Academy of Sciences of the United States of America, 104, 18860–18865.