Abstract

Our conception of attention is intricately linked to limited processing capacity and the consequent requirement to select, in both space and time, what objects and actions will have access to these limited resources. Seminal studies by Treisman(Cognitive Psychology, 12, 97-136, 1980) and Broadbent (Perception and Psychophysics, 42, 105-113, 1987; Raymond et al. Journal of Experimental Psychology: Human Perception and Performance, 18, 849-860, 1992) offered the field tasks for exploring the properties of attention when searching in space and time. After describing the natural history of a search episode we briefly review some of these properties. We end with the question: Is there one attentional “beam” that operates in both space and time to integrate features into objects? We sought an answer by exploring the distribution of errors when the same participant searched for targets presented at the same location with items distributed over time (McLean et al. Quarterly Journal of Experimental Psychology, 35A, 171–186, 1982) and presented all at once with items distributed over space (Snyder Journal of Experimental Psychology, 92, 428–431, 1972). Preliminary results revealed a null correlation between spatial and temporal slippage suggesting separate selection mechanisms in these two domains.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

A taxonomy of Attention

With roots in a program of research begun by Michael Posner over 40 years ago (Posner and Boies 1971 ) three isolable functions of attention —alertness, orienting, and executive control—have been identified and linked to specific neural networks (Posner and Peterson 1990; Fan et al. 2005 ). In the domain of space , where selection has been referred to as orienting and most of the research has been on visual orienting, two important distinctions were first made by Posner (1980) and have since been highlighted in work from Klein’s laboratory (for a review, see Klein 2009 ). One concerns whether selection is accomplished by an overt reorientation of the receptor surface (an eye movement) or by a covert reorientation of internal information processing mechanisms. The other concerns whether the eye movement system or attention is controlled primarily by exogenous (often characterized as bottom-up or reflexive) means or by endogenous (often characterized as top-down or voluntary) means.

Helmholtz provided the first demonstration that attention could be shifted covertly and consequently independently of the direction of gaze. When control is purely endogenous, (Klein 1980 ; Klein and Pontefract 1994) and others (e.g., Hunt and Kingstone 2003 ; Schall and Thompson 2011 ) have demonstrated that such shifts of attention are not accomplished via sub-threshold programming of the oculomotor system . On the other hand, when orienting is controlled exogenously, by bottom-up stimulation, it is difficult to disentangle activation of covert orienting from activation of the oculomotor programs.

In the domain of covert orienting, Klein has emphasized the importance of distinguishing between whether control is (primarily) endogenous or exogenous because different resources or mechanisms seem to be recruited to the selected location or object when the two different control systems are employed. This assertion was first supported by the following double dissociation: (1) When exogenously controlled, attention interacts with opportunities for illusory conjunctions and is additive with non-spatial expectancies, and (2) when endogenously controlled, attention is additive with opportunities for illusory conjunctions and interacts with non-spatial expectancies (Briand and Klein 1987; Briand 1998 ; Handy et al. 2001 ; Klein and Hansen 1990; Klein 1994 ). Several other dissociations discovered by others reinforce Klein’s conclusion that different resources are recruited when orienting is controlled endogenously versus exogenously (for reviews, see Klein 2004, 2009).

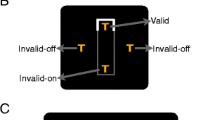

Thinking about the importance of this distinction in the world of orienting led Klein and Lawrence to propose an alternative taxonomy (Klein and Lawrence 2011 ), illustrated in Fig. 1, in which two modes of control (endogenous and exogenous) operate in different domains time, space, modality, task, etc.). Searching entails the endogenous and exogenous control of attention in space and time . In contrast to the literature using Posner’s cuing paradigm, however, in typical search tasks the endogenous/exogenous distinction is often not made explicit. In spatial search, for example, perhaps this is because even when search is hard (the target does not exogenously capture attention) we typically do not experience volitional control of the search process—of the sequence of decisions about where to look next for the target . It has been suggested that these “decisions” are typically made by low-level subroutines (Klein and Dukewich 2006 ). It seems likely that the endogenous control of search is instantiated before the search episode begins based on the observer’s knowledge about properties of the target (setting up a template matching process) and distractors (e.g. establishing attentional control settings to implement guided search).

A taxonomy of attention proposed by Klein and Lawrence (2011)

Natural History of a Search Episode

A typical search episode begins with some specification of what the target is; usually some information about the nature of the material to be searched through for the target; perhaps some useful information on how to find it; and, critically, what to do when it is found. The human searcher is thought to incorporate these tasks- or goal-oriented elements into a mental set, program or strategy so that their performance will optimize their payoffs. In Broadbent’s theory (1958 ) an important component of this process was “setting the filter” so that task-relevant items (targets) would have access to limited capacity processing mechanisms while task irrelevant items would be excluded. Duncan (1981) would later provide a useful recasting of Broadbent’s ideas. Instead of “filtering” he referred to a “selection schedule” and, recognizing the many empirical demonstrations that an unselected stimulus could nevertheless activate complex internal representations, he suggested that the limitation has more to do with availability for reporting an item than the quality or nature of an item’s internal representation. We see subsequently proposed endogenous control mechanisms such as attentional control settings (ACS) (Folk et al. 1992 ) and “task-set reconfiguration” (Monsel 1996) as firmly rooted in these earlier ideas.

During the search episode the efficient performer must represent the target and the feature(s) that will distinguish the target from the distractors. Representations activated by the spatial search array or temporal search stream are compared against these representations to determine if the target is present and if so to report its properties according to observer’s goals. This comparison process might take place one at a time or in parallel across the items in the search array or stream.

Two paradigms for exploring the information processing dynamics of searching will be emphasized in this chapter. These paradigms were developed to study, in relatively pure form, searching in space and in time . Searching in space entails the allocation of attention to items distributed in space and presented at the same time. Searching in time entails the allocation of attention to items distributed in time and presented at the same location. With a few exceptions (e.g., Arend et al. 2009 ; Keele et al. 1988 ; McLean et al. 1982 ; Vul and Rich 2010 ) searching in space and time has been studied separately, usually in studies with a similar objective: understanding the role of attention in detecting, identifying, or localizing targets . We believe that it will be empirically fruitful and theoretically timely for these somewhat separate efforts to be combined. And, it will be useful, because in the real world searching often combines these two pure forms.

Searching in Space

There are many studies from before 1980 that used a wide variety of spatial search tasks, The spatial search paradigm emphasized here (see Fig. 2) was imbued with excitement by Anne Treisman’s (Treisman and Gelade 1980 ; Treisman and Schmidt 1982) use of it to provide support for her feature integration theory in which spatial attention is the binding agent for otherwise free-floating features . When observers are asked to indicate whether a target is present in an array of distractors, two dramatically different patterns are frequently reported. In one case (i.e., difficult search—target is not defined by a single unique feature), illustrated in Fig. 2, reaction time for both target absent and target present trials is a roughly linear function of the number of distractors and the slope for the target absent trials is approximately twice that of the present trials . This pattern is intuitively compatible with (indeed predicted by) a serial self-terminating search (SSTS) process in which each item (or small groups of items) is compared against a representation of the target and this process is repeated until a match is found or until the array has been exhausted . In the other case (not illustrated) (i.e., easy search—target is defined by a single unique feature), reaction time is unaffected by the number of distractor items. Phenomenologically, instead of having to search for the target, it “pops out” of the array.

A prototypical “present/absent” search task (is there a solid “O” in the display?) is illustrated on the left. Typical results illustrated on the right showing reaction time to make the decision (open symbols = target absent trials; filled symbols = target present trials) as a function of the number of items in the display. (Adapted from Treisman 1986)

This model task and the theory Treisman inferred from its use have been remarkably fruitful in generating: modifications of the model task (e.g., the preview-search paradigm of Watson and Humphreys 1997 ; the dynamic search paradigm of Horowitz and Wolfe 1998 ), theoretical debates (such as: are so-called “serial” search patterns like that illustrated in Fig. 2 caused by truly sequential or by parallel processes; and, when search is a sequential process of inspections, how much memory is there about rejected distractors, see Klein and Dukewich 2006, for a review), empirical generalizations (e.g., Wolfe’s 1998, re view; the search surface of Duncan and Humphreys 1989 ), and conceptual contributions (e.g., the guided search proposal of Wolfe et al. 1989; the foraging facilitator proposal of Klein 1988 ).

The model task and the theory of Treisman encouraged Klein and Dukewich (2006) to address the question whether search is primarily driven by serial or parallel mechanisms. While rooted in basic research on spatial search , we believe that their advice applies equally to searching in time and to real-world search behavior:

When there is more than one good strategy to solve a problem it seems reasonable to assume that nature may have figured out a way to take advantage of both….We recommend that future research seek to determine, rather than which strategy characterizes search, ‘‘when’’ and ‘‘how’’ the two strategies combine. (Klein and Dukewich 2006, p. 651 )

Searching in Time

In the mid-1960’s Molly Potter discovered that people could read when the text was presented using rapid serial visual presentation (RSVP) , that is with words presented one after the other at the same location in a rapid sequence. A few decades later this mode of stimulus presentation began to be used as a tool for exploring the consequences of limited processing capacity , particularly for dealing with multiple “targets” in streams of unrelated items (Broadbent and Broadbent 1987 ; Weichselgartner and Sperling 1987 ). Broadbent and Broadbent (1987), for example, showed how difficult it is to identify two targets when they are in close succession.

The difficulty identifying subsequent items after successfully identifying an earlier one was subsequently named an “attentional blink ” by Raymond et al. (1992 ). The blink and the task for exploring it that was developed by Broadbent, Raymond and Shapiro propelled this paradigm to the center stage of attention research. In the seminal paradigm of Raymond et al. (1992) (see Fig. 3, left/bottom), multiple letters are presented rapidly and sequentially at the same location (in RSVP). In the sequence of letters, all but one of which are black, there are two targets (separated by varying numbers of distractors) and the observer has two tasks: Report the identity of the white letter and report if there was an X in the stream of letters after the white letter.

Two different methods that have been used to explore the attentional blink. Both entail presenting a sequence of individual alphanumeric items using RSVP (with about 100 ms separating item onsets). The stream on the left illustrates the “detect-X” task pioneered by Raymond et al. (1992). After a random number of black letters the first target, a white letter (T1), is displayed. Then, at varying lags after the presentation of T1 an X (T2 or probe) might or might not (this alternative is shown in the box with the dashed line) be presented. At the end of the stream the observer reports the identity of the white letter and whether or not an X had appeared in the stream. Typical results from this task are shown in the inset at the bottom. Open symbols show the probability of correctly reporting that an X was present as a function of its position following a white letter when that letter had been correctly identified. Filled symbols show the same results when there was no requirement to report the white letter. The stream on the right illustrates the paradigm developed by Chun and Potter (1995) and used by many others. Here there is a stream of items in one category (digits) in which two targets from another category (letters) are embedded. At the end of the stream the observer’s task is to report the identities of the two targets. Typical results (accuracy of T2 reports when T1 was identified correctly) are shown in the inset at the top

One possible weakness of this particular paradigm (often called “detect X”) is that the “blink” it generates and measures may have quite different sources: double speeded identification and switching the mental set (the selection schedule or filter setting) from color to form (“white” to “X”). A more general paradigm (that is more like Broadbent’s) is often used to avoid such switching. Chun and Potter (1995) used one version of this paradigm (Fig. 3, right/top) in which the observers task is to report the identity of two letter targets that are embedded in a stream of digits.

As with the spatial search paradigm, these methods for exploring “searching in time” using one or more targets embedded in a stream of rapidly presented items, have been remarkably fruitful in generating: modifications of the model task, theoretical debates, empirical generalizations , and conceptual contributions (e.g., Dux and Marios 2009 for a review ).

Searching in Space and Time: Some Comparisons

The Nature of the Stimuli

It seems likely that if a certain kind of stimulus pops out in a spatial search it might also do so in temporal search and vice versa. Duncan and Humphreys (1989) identified two principles that interact in determining the difficulty of searching in space for a target among distractors. One factor is: How similar is the target to the distractors? The other is: How heterogeneous are the distractors? How these factors interact to determine search difficulty (see Fig. 4) was described by Duncan and Humphreys (1989) ; neither factor alone makes searching particularly hard, but when combined they interact and conspire to make search extremely difficult. Would searching in time (in RSVP ) show the same relationship? While there are hints that this might be true, there are no dedicated studies that we are aware of.

Center: The “search surface” (adapted from Duncan and Humphreys 1989) represents the difficulty of finding a target (height of the surface is the predicted slope of the reaction time/set size function) as a function of two properties of the search array: target distractor similarity and distractor heterogeniety. Corners: Sample search arrays illustrating the four corners of the search surface. The line with the obviously unique slope in the lower left panel is the target in all four panels. The target is easily found when it is accompanied by a homogenous array of distractors of a very different orientation (lower left)

There are a variety of other stimulus features for which we could pose a similar question: If your own name pops out of an RSVP stream and even escapes the attentional blink will it also pop out in spatial search? Will socially important stimuli such as faces (emotional or otherwise) capture attention in both space and time? Given the history of this symposium, we can ask “What does motivation have to do with it?” For example, would pictures of food be easier to find when you are hungry than after you have just eaten? Will attention be captured by stimuli that have been previously rewarded?

The Participants

There are many participant factors that could be explored. We would expect searching in space and time to show similar benefits from training and expertise , for example. The same expectation would apply to developmental changes. Exploring the efficiency of spatial search across the lifespan, Hommel et al. (2004) found a U-shaped function with less efficient performance at the extremes. Based on their findings, if you have recently turned 25 or so, you are at your peak. A similar pattern, though perhaps with a slightly older “optimum” age, was reported for the magnitude of the attentional blink by Georgiou-Karistianis et al. (2007) Looking at patients with focal brain damage or known neurological problems would provide an arena for comparison that could have relevance to the neural systems involved in search. Examples described here are from studies of patients with unilateral neglect, a disorder commonly associated with parietal lesions. In spatial search tasks patients with neglect are slower and less likely to find targets, particularly when these are present in the neglected hemifield (e.g., Butler et al. 2009 ; Eglin et al. 1989 ). The right-to-left gradient of increasing omissions (see Fig. 5a) might be related to a difficulty disengaging attention from attended items toward items in the neglected field (for a review, see Losier and Klein 2001 ). Poor performance, particularly repeated reports of targets (cf Butler et al. 2009), might be attributed, in part, to defective spatiotopic coding of inhibition of return (IOR) which depends on an intact right parietal lobe (Sapir et al. 2004 ). This would converge with the proposal that the function of IOR is to encourage orienting to novelty (Posner and Cohen 1984 ) and, consequently, to discourage reinpsections (Klein 1988 ). Using an RSVP task , Husain et al. (1997) showed that the attentional blink was longer and deeper in patients suffering from visuo-spatial neglect due to damage to the right hemipshere. In this study, all the items were presented at fixation ., Consequently, this temporal deficit might be a more general version of the aforementioned disengage deficit: difficulty disengaging attention from any item on which it is engaged.

Spatial and temporal processing in patients suffering from neglect and control participants. a Probability of report [by normal controls (NC), control patients with right hemisphere lesions (RHC), and patients suffering from neglect following damage to the right hemisphere (NEG)] of target letters and numbers among non-alphanumeric distractors presented in a 20 by 30 degree spatial array in peripersonal and extrapersonal space (from Butler et al., 2009). b and c Probability of detecting an X in the “detect X” paradigm illustrated in Fig. 3. Unfilled squares represent performance when participants were not required to report the white letter in the stream (single task). Filled squares represent performance on the “detect X” task (second target) when participants were required to report the white letter (first target). b data from normal controls. c data from patients with neglect (Data in b and c are from Husain et al. (1997); figures b and c are adapted from Husain and Rorden (2003)

The role(s) of Endogenous Attention in Time and Space

As noted earlier the concept of limited capacity seems to play an important role in both kinds of search. When searching in space , one reflection of this limit is seen in the relatively steep slopes that characterize difficult searches (searches for which the target does not pop-out). As noted earlier, one way to explain steep slopes is in terms of the amount of time required for an attentional operator to sequentially inspect individual items in the array or to sequentially inspect regions (when it is possible for small sets of nearby items to be checked simultaneously) until the target is located. When searching in time this is seen as an attentional blink—in the period immediately following the successful identification of a target, some important target-identifying resources appear to be relatively unavailable.

An interesting difference that characterizes at least the standard versions of these tasks is that stimuli in RSVP are data limited : every item is both brief and masked while in a typical spatial search episode the stimulus array is neither brief nor masked. With multiple items displayed all at the same time , spatial search is characteristically resource limited. That noted, several researchers (e.g., Dukewich and Klein 2005 ; Eckstein 1998 ) have explored spatial search using limited exposure durations. And, while in this chapter we are concentrating on relatively pure examples of searching in space and time , there have also been some highly productive hybrids (such as the dynamic search condition of Horowitz and Wolfe 1998, 2003, ).

The ideas of attentional control settings and contingent capture seem to operate similarly in both space and time . In spatial search it has been demonstrated that attentional capture is contingent on the features one is searching for (Folk et al. 1992 ) as well as the locations where targets will be found (Ishigami et al. 2009 ; Yantis and Jonides 1990 ). Capture by distracting non-targets that share features with the target has also been demonstrated in temporal search (Folk et al. 2008 ).

Another aspect of attentional control concerns its intensity (Kahneman, 1973 ). For example, in his review of IOR, Klein (2000) proposed that the strength of attentional capture by task-irrelevant peripheral cues would depend directly on the degree to which completing the target task requires attention to peripheral onsets . As a consequence of increased capture, attentional disengagement from the cue and therefore the appearance of IOR would be delayed .

A similar mechanism was uncovered in our studies of the attentional blink . The initial question we (McLaughlin et al. 2001 ) posed was whether difficulty to identify the first target (T1), when varied randomly from trial-to-trial, would affect blink magnitude. We used the target-mask, target-mask paradigm (which, it must be noted, demonstrates that it is not necessary to use RSVP streams to explore searching in time) pioneered by Duncan et al. (1994) . As shown in the bottom panel of Fig. 6 we varied how much data was available about either T1 or T2 (second target) in order to implement an objective, quantifiable and data-driven difference in target identification difficulty. We designed the experiment so as to avoid any location or task switching (the task was simply to report the two letters). Despite the success of our data-driven manipulation of T1 difficulty, the answer to this question was a resounding “NO” (see top panel of Fig. 7)Footnote 1. When we manipulated the difficulty of T2, this had dramatic effects on T2 performance and no effect on T1 (bottom panel of Fig. 7).

Methods used by McLaughlin et al. (2001) to explore the effect of the difficulty of target (T) processing upon the magnitude of the blink using a target-mask, target-mask paradigm to induce and measure the blink. The difficulty of either T1 (first target) or T2 (second target) was manipulated by varying the relative durations of the target and mask (M)

Why would such a dramatic difference in difficulty of T1 have no effect on the blink? We suggested that this was because the blink is about the effort the participant expects to have to exert in advance of the trial—an ACS that is about how much processing resources might be needed to perform the task. Because we randomly intermixed the 3 difficulty levels, and because (apparently) resources are not (or cannot be) re-allocated in real time when T1 is presented, all trials would have been subjected to the same ACS. We tested this proposal, in a subsequent paper (Shore et al. 2001 ), by comparing the results when the same data-driven manipulation of T1 difficulty was mixed or blocked. As predicted by an ACS view, when we blocked difficulty there was a significant effect of T1 difficulty on the magnitude of the AB (particularly between the hard and medium/easy conditions, See Fig. 8).

There may be a related “strategic” effect in both the spatial and temporal search literatures. Smilek et al. (2006, ), in a paper entitled: “Relax! Cognitive strategy influences visual search” seemed to show that simply telling their participants not to try so hard reduced their slopes (i.e., increased their search efficiency). Similarly, Olivers and Nieuwenhuis (2005) reported that relaxing by listening to music could reduce the attentional blink .

Binding of Targets in Space and Time

We will end this section by describing one empirical strategy for comparing searching in space and in time . The background comes from two papers that reported interesting “slippage” of targets in space and time. The first, by Snyder (1972 ), was about searching in space; the second by McLean et al. (1982), was about searching in time. In Snyder’s study multiple items were presented briefly at the same time in different locations whereas in McLean et al. (1982) multiple items were presented rapidly in time at the same location. For present purposes we will emphasize the conditions in which the participant’s task was to report the identity of a target letter that was defined by color. As we will see, both studies reported a certain amount of sloppiness of the attentional beam (or window); whether the errors were true illusory conjunctions is not so important as their distribution in space and time .

In Snyder’s spatial search task, 12 letters were placed in a circular arrangement on cards for presentation using a tachistoscope Footnote 2. On each trial the participant had to verbally report the name of a uniquely colored letter and then report its position (using an imaginary clockface: 1–12). Stimulus duration was adjusted on an individual basis so that accuracy of the letter identification was about 50 % (regardless of accuracy of the letter localization). The key finding for present purposes was that when reporting identitiesFootnote 3, errors were more likely to be spatially adjacent to the target letter than further away. Snyder found a similar pattern of spatial slippage when the feature used to identify the target was form-based (a broken or inverted letter).

In McLean et al.’s temporal search task, the target color varied from trial to trial and the participant’s task was to report the identity of the single item presented in the target color. (In another condition the participant reported the color of a target defined by its identity.) Each stream, created photographically using movie film, consisted of 17 letters rendered using 5 different identities and 5 different colors. Films were projected on the screen with SOAs of 67 ms (15 flames/s). The key finding for present purposes was an excess of temporally adjacent intrusion errors relative to reports of items in the stream temporally more distant from the target (interestingly, immediate post-target intrusions were more likely than immediate pre-target intrusions).

If there were one attentional beam that operates in both space and time to integrate features into objectsFootnote 4, and if there are individual differences in the efficacy of this beam, then we would expect the spatial and temporal sloppiness that was reported by Snyder (1972) and McLean et al. (1982) to be correlated across individuals. To test this idea, data on spatial and temporal search must be obtained from the same participants. We have begun to explore this possibility and will briefly report some of our preliminary findings.

In our first project we tested 46 participants on spatial and temporal search tasks that were closely matched to those of Snyder (1972) and McLean et al. (1982) . The order of tasks was counterbalanced. In order to ensure that there would be a sufficient number of errors while performance would be substantially above chance, for each task we titrated the exposure duration so that overall accuracy in reporting the target’s identity was in the 50–60 % correct range. The key results are illustrated in Fig. 9.

Results from Ishigami and Klein (2011). Observers were searching in space (left panel) and time (right panel) for a target of a pre-specified color. Accurate reports of the target’s identity are indicated in the percentages indicated above relative position = 0. The remaining data are the percentage of erroneous reports of items from the array (that were not the target) as a function of the distance (in space and time) of these items relative to the target. Positive positions are, relative to the target, clockwise in space and after in time

We were quite successful in achieving the overall level of accuracy we were aiming for (50–60 % correct). While the scales are different (there were fewer errors in the spatial task) the patterns are similar in space and time , and the key findings from Snyder (1972) and McLean et al. (1982) were replicated: errors are more likely to come from positions adjacent to the target. Moreover, in space there were more counter clockwise than clockwise errors; and in time there were more post- than pre-target errors. For each participant and task we computed a measure of “slippage” that was the average rate of near errors (± 1) minus the average rate of far errors (all other erroneous reports from the presented array). The correlation between spatial and temporal slippage was very close to zero (r 44 = 0.03) suggesting that the attentional beam that attaches identities to locations may not be the same beam that attaches identities to timeFootnote 5.

Conclusion

We have discussed the concept of attention —selection made necessary by limited processing capacity —and some of its manifestations in spatial and in temporal search behavior. As described in the chapter, searching in space and time has been typically studied separately predominantly with an objective to understand the role of attention in detecting, identifying, or localizing targets . However, in the real-world, we are often searching for targets that are surrounded by distractors in space and all of this happens in scenes that unfold over time (e.g., looking for a particular exit on a highway when driving; or your child in a busy playground). We described above our first attempt to compare searching in time and space in the same individuals. Preliminary results revealed a null correlation between spatial and temporal slippage suggesting different selection mechanisms in these two domains. We plan next to experimentally balance two tasks (space and time) so that we can have firmer conclusion about this relationship and merge our two tasks so that we can explore searching in space and time simultaneously.

In the course of this chapter we have raised several questions: Will the principles (Duncan and Humphreys 1989 ) that determine the difficulty of searching in space generalize to searching in time? Are the same brain regions responsible for spatial and temporal search (e.g., Arend et al. 2009 )? Do attentional control settings work in the same way in spatial and temporal search? Is the binding of features to space and to time implemented by one “beam” or by independent “beams,” each operating in its own domain? Answers to these questions which, in some cases, the literature is beginning to provide, will have important theoretical and practical implications.

Notes

- 1.

Also note the absence of lag-1 sparing. This occurred, despite the very short amount of time between T1 and T2 at lag 1, because T1 had nevertheless been masked—see Fig. 6).

- 2.

Some readers may find this surprising, but even though Posner’s laboratory (which is where these experiments were conducted) was in the forefront of using computers for psychological research, in 1971 there was almost no possibility of computerized presentation with color displays.

- 3.

Snyder (1972) used ‘legitimate’ trials for the analyses reported in his paper. By his definition legitimate trials are trials for which the reported location falls within ± 1 of the location of reported identity.

- 4.

This is the beam controlled exogenously by bottom-up stimulation (see also, Briand and Klein 1987). To be sure, and as described earlier, the ACS or selection schedule was put into operation by endogenous control mechanisms.

- 5.

When we applied Snyder’s exclusion criteria (i.e., legitimate trials, see footnote 3) to both our spatial and temporal tasks, the correlation was marginally significant, r = 0.34, p = 0.051, but becomes non-significant when a single outlier is removed (r = 0.20). For a confident conclusion, further research is required.

References

Arend, I., Rafal, R., & Ward, R. (2009). Spatial and temporal deficits are regionally dissociable in patients with pulvinar lesions. Brain: A journal of neurology, 131(Pt 8), 2140–2152.

Berbaum, K. S., Franken Jr., E. A., Dorfman, D. D., Rooholamini, S. A., Kathol, M. H., Barloon, T. J., Behlke, F. M., et al. (1990). Satisfaction of search in diagnostic radiology. Investigative Radiology, 25(2), 133.

Briand, K. A. (1998). Feature integration and spatial attention: More evidence of a dissociation between endogenous and exogenous orienting. Journal of Experimental Psychology: Human Perception and Performance, 24,1243–1256.

Briand, K., & Klein, R. M. (1987). Is Posner’s beam the same as Treisman’s glue?: On the relationship between visual orienting and feature integration theory. Journal of Experimental Psychology: Human Perception & Performance, 13(2), 228–247.

Broadbent, D.E. (1958). Perception and communication. London: Pergamon Press.

Broadbent, D.E., & Broadbent, M.H. (1987). From detection to identification: Response to multiple targets in rapid serial visual presentation. Perception & Psychophysics, 42,105–113.

Butler, B. C., Lawrence, M., Eskes, G. A., & Klein, R. M. (2009) Visual search patterns in neglect: Comparison of peripersonal and extrapersonal space. Neuropsychologia, 47,869–878.

Chun, & Potter (1995) A two-stage model for multiple target detection in rapid serial visual presentation. Journal of Experimental Psychology: Human Perception and Performance, 21, 109–127

Dukewich, K. & Klein, R. M. (2005) Implications of search accuracy for serial self-terminating models of search. Visual Cognition, 12,1386–1403.

Duncan, J. (1981) Directing attention in the visual field. Perception & Psychophysics, 30(1), 90–93.

Duncan, J., & Humphreys, G. (1989). Visual search and stimulus similarity. Psychological Review, 96,433–458.

Duncan, J., Ward, R., & Shapiro, K. (1994). Direct measurement of attentional dwell time in human vision. Nature, 369(6478), 313–5.

Dux, P. E., & Marois, R. (2009) The attentional blink: A review of data and theory. Attention, Perception & Psychophysics, 71(8), 1685–1700.

Eckstein, M. P. (1998). The lower visual search efficiency for conjunctions is due to noise and not serial attentional processing. Psychological Science, 9(2), 111–118.

Eglin, M., Robertson, L. C., & Knight, R. T. (1989). Visual search performance in the neglect syndrome. Journal of Cognitive Neuroscience, 12, 542–5.

Fan, J., McCandliss, B. D., Fossella, J., Flombaum, J. I., & Posner, M. I. (2005). The activation of attentional networks. NeuroImage, 26, 471–479.

Folk, C. L., Leber, A. B., & Egeth, H. E. (2008) Top-down control settings and the attentional blink: Evidence for nonspatial contingent capture. Visual Cognition, 16(5), 616–642.

Folk, C. L., Remington, R. W., & Johnston, J. C. (1992). Involuntary covert orienting is contingent on attentional control settings. Journal of Experimental Psychology: Human Perception and Performance, 18(4), 1030–1044.

Georgiou-Karistianis, N., Tang, J., Vardy, Y., Sheppard, D., Evans, N., Wilson, M., Gardner, B. Farrow, M., & Bradshaw, J. (2007) Progressive age-related changes in the attentional blink paradigm. Aging, Neuropsychology, and Cognition, 14, 213–226.

Handy, T. C., Green, V., Klein, R. M., & Mangun, G. R. (2001). Combined expectancies: event-related potentials reveal the early benefits of spatial attention that are obscured by reaction time measures. Journal of Experimental Psychololgy: Human Perception Performance, 27(2), 303–317.

Hommel, B., Li, K. X. H., & Li, S.-C. (2004) Visual search across the lifespan. Developmental Psychology, 40(4), 545–558.

Horowitz, T. S., & Wolfe, J. M. (1998). Visual search has no memory. Nature, 394(6693), 575–576.

Horowitz, T., & Wolfe, J. (2003). Memory for rejected distractors in visual search? Visual Cognition, 10(3), 257–298.

Hunt, A., & Kingstone, A. (2003). Covert and overt voluntary attention: Linked or independent? Cognitive Brain Research, 18,102–105.

Husain, M., & Rorden, C. (2003) Nonspatially lateralized mechanisms in hemispatial neglect. Nature Reviews Neuroscience, 4,26–36.

Husain, M., Shapiro, K., Martin, J., & Kennard, C. (1997) Abnormal temporal dynamics of visual attention in spatial neglect patients. Nature, 385,154–156.

Ishigami, Y., & Klein, R. M. (2011). Attending in space and time: Is there just one beam? Canadian Journal of Experimental Psychology, 65(4): 30.

Ishigami, Y., Klein, R. M. & Christie, J. (2009) Using illusory line motion to explore attentional capture. Visual Cognition, 17(3), 431–456.

Kahneman, D. (1973). Attention and effort. Englewood Cliffs: Prentice Hall.

Keele, S. W., Cohen, A., Ivry, R., Liotti, M., & Yee, P. (1988). Tests of a temporal theory of attentional binding. Journal of Experimental Psychology: Human Perception and Performance, 14(3), 444–452.

Klein, R. M. (1980). Does oculomotor readiness mediate cognitive control of visual attention. In R. Nickerson (Ed.), Attention and Performance VIII (pp. 259–276). Hillsdale: Erlbaum.

Klein, R. M. (1988). Inhibitory tagging system facilitates visual search. Nature, 334(6181), 430–431.

Klein, R. M. (1994) Perceptual-motor expectancies interact with covert visual orienting under endogenous but not exogenous control. Canadian Journal of Experimental Psychology, 48, 151–166.

Klein, R. M. (2000) Inhibition of return. Trends in Cognitive Sciences, 4(4), 138–147.

Klein, R. M. (2009) On the control of attention. Canadian Journal of Experimental Psychology, 63, 240–252.

Klein, R. M., & Dukewich, K. (2006) Does the inspector have a memory? Visual Cognition, 14,648–667.

Klein, R. M., & Hansen, E. (1990). Chronometric analysis of spotlight failure in endogenous visual orienting. Journal of Experimental Psychology: Human Perception & Performance, 16(4), 790–801.

Klein, R. M., & Lawrence, M. A. (2011) The modes and domains of attention. In M. I. Posner (Ed.) Cognitive Neuroscience of Attention (2nd edn.). New York: Guilford Press.

Klein, R. M., & Pontefract, A. (1994) Does oculomotor readiness mediate cognitive control of visual attention? Revisited! In C. Umiltà & M. Moscovitch (Eds.) Attention & Performance XV: Conscious and Unconscious Processing (p. 333–350). Cambridge: MIT Press.

Losier, B. J., & Klein, R. M. (2001) A review of the evidence for a disengage operation deficit following parietal lobe damage. Neuroscience and Biobehavioral Reviews, 25,1–13.

McLean, J. P., Broadbent, D. E., & Broadent, M. H. P. (1982) Combining attributes in rapid serial visual presentation tasks. Quarterly Journal of experimental Psychology, 35A,171–186.

McLaughlin, E.N., Shore, D. I., & Klein, R. M. (2001) The attentional blink is immune to masking induced data limits. Quarterly Journal of Experimental Psychology, 54A,169–196.

Monsell, S. (1996). Control of mental processes. In V. Bruce (Ed.), Unsolved mysteries of the mind: Tutorial essays in cognition (pp. 93- 148). Howe: Erlbaum.

Moray, N.,(1993). Designing for attention. In A. Baddeley & L. Weiskrantz (Eds.) Attention: selection, awareness, and control (pp. 111–134). Oxford: Oxford University Press.

Olivers, C. N. L., & Nieuwenhuis, S. (2005). The beneficial effect of concurrent task-irrelevant mental activity on temporal attention. Psychological Science, 16(4), 265–269.

Posner M. I. (1980) Orienting of attention. Quarterly Journal of Experimental Psycholology A, 32, 3–25

Posner, M. I., & Boies, S. (1971). Components of attention. Psychological Review, 78, 391–408.

Posner, M. I., & Cohen, Y. (1984) Components of attention. In H. Bouma & D. Bowhuis (Eds), Attention and Performance X (p. 531–556). Hillside: Erlbaum.

Posner, M.I., & Petersen, S.E. (1990). The attention system of the human brain. Annual Review of Neuroscience, 13, 25–42.

Raymond, J. E., Shapiro, K. L., & Arnell, K. M. (1992). Temporary suppression of visual processing in an RSVP task: An attentional blink? Journal of Experimental Psychology: Human Perception and Performance, 18, 849–860.

Sapir, A., Hayes, A., Henik, A., Danziger, S., & Rafal, R. (2004). Parietal lobe lesions disrupt saccadic remapping of inhibitory location tagging. Journal of Cognitive Neuroscience, 16(4), 503–509.

Schall, J. D. & Thompson, K. G. (2011) Neural mechanisms of saccade target selection evidence for a stage theory of attention and action. In M. I. Posner (Ed.) Cognitive Neuroscience of Attention (2nd edn.). New York: Guilford Press.

Shore, D. I., McLaughlin, E. N, & Klein, R. M. (2001) Modulation of the attentional blink by differential resource allocation. Canadian Journal of Experimental Psychology, 55,318–324.

Smilek, D., Enns, J. T., Eastwood, J. D., & Merikle, P. M. (2006). Relax! Cognitive strategy influences visual search. Visual Cognition, 14, 543–564.

Snyder, C. R. R. (1972) Selection, inspection and naming in visual search. Journal of eperimental Psychology, 92, 428–431.

Townsend, J. T. (1971). A note of the identifiability of parallel and serial processes. Perception and Psychophysics, 10,161–163.

Treisman, A. (1986) Features and objects in visual processing. Scientific American 255, 114B–125B.

Treisman, A. M., & Gelade, G. (1980). A feature integrauon theoryof perception. Cognitive Psychology, 12, 97–136

Treisman, A., & Schmidt, H. (1982). Illusory conjunctions in the perception of objects. Cognitive Psychology, 14(1), 107–141.

Vul, E., & Rich, A. N. (2010) Independent sampling of features enables conscious perception of bound objects. Psychological Science, 21(8) 1168–1175.

Watson, D. G., & Humphreys, G. W. (1997) Visual marking: Prioritizing selection for new objects by top-down attentional inhibition of old objects. Psychological Review, 104, 90–122

Weichselgartner, E., & Sperling, G. (1987). Dynamics of automatic and controlled visual attention. Science, 238, 778–780.

Wolfe, J. M. (1998). What can 1 million trials tell us about visual search? Psychological Science, 9(1) 33–39.

Wolfe, J. M., Cave, K. R., & Franzel, S. L. (1989) Guided search: an alternative to the feature integration model for visual search. Journal of Experimental Psychology: Human Perception and Performance, 15, 419–433.

Wolfe, J. M., Horowitz, T. S., Van Wert, M. J., Kenner, N. M., Place, S. S., & Kibbi, N. (2007). Low target prevalence is a stubborn source of errors in visual search tasks. Journal of experimental psychology. General, 136(4), 623–38.

Yantis, S., & Jonides, J. (1990). Abrupt visual onsets and selective attention: Voluntary versus automatic allocation. Journal of Experimental Psychology: Human Perception & Performance, 16(1), 121–134.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2012 Springer Science+Business Media New York

About this chapter

Cite this chapter

Klein, R., Ishigami, Y. (2012). Searching in Space and in Time. In: Dodd, M., Flowers, J. (eds) The Influence of Attention, Learning, and Motivation on Visual Search. Nebraska Symposium on Motivation. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-4794-8_2

Download citation

DOI: https://doi.org/10.1007/978-1-4614-4794-8_2

Published:

Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4614-4793-1

Online ISBN: 978-1-4614-4794-8

eBook Packages: Behavioral ScienceBehavioral Science and Psychology (R0)