Abstract

To create effective immersive training experiences, it is important to provide intuitive interfaces that allow users to move around and interact with virtual content in a manner that replicates real world experiences. However, natural locomotion remains an implementation challenge because the dimensions of the physical tracking space restrict the size of the virtual environment that users can walk through. To relax these limitations, redirected walking techniques may be employed to enable walking through immersive virtual environments that are substantially larger than the physical tracking area. In this chapter, we present practical design considerations for employing redirected walking in immersive training applications and recent research evaluating the impact of redirection on spatial orientation. We also describe an alternative implementation of redirection that is more appropriate for mixed reality environments. Finally, we discuss challenges and future directions for research in redirected walking with the goal of transitioning these techniques into practical training simulators.



Keywords

- Virtual environments

- Redirected walking

- Locomotion

- Mixed reality

- Augmented reality

- Redirection

- Reorientation

- Human-computer interaction

1 Locomotion in Virtual Environments

To create effective immersive training experiences, it is important to engage users in simulated environments that convincingly replicate the mental, physical, and emotional aspects of a real world situation. Such applications seek to invoke a sense of presence: the feeling of “being there” in the virtual world even though the user knows that the environment is a simulation. For users to respond realistically in a virtual environment, the system must support the sensorimotor actions that allow them to walk around and perceive the simulated content as if it were real [18]. Indeed, experiments have demonstrated that walking yields benefits over less natural forms of locomotion such as joystick travel. When walking naturally, users experience a greater sense of presence [28] and less cognitive demand on working memory and attention [23, 30]. Studies have also shown that walking results in superior performance on travel and search tasks [16, 24].

The military has long been interested in providing immersive training experiences that attempt to replicate the energy and motions of natural locomotion. For example, the U.S. Naval Research Laboratory developed Gaiter, an immersive system that uses a harness and full-body tracking to allow users to locomote by walking-in-place [27]. Other examples include omnidirectional treadmills (e.g. [4, 17]) and mechanical human-sized “hamster balls” (e.g. [9]). While recent research has shown that walking on an omnidirectional treadmill can be very close to real walking [19], these devices introduce translational vestibular conflicts when users alter their walking speed. Furthermore, omnidirectional treadmills are generally expensive, mechanically complicated, and only usable by a single person at a time. Thus, many immersive systems continue to rely on handheld devices to simulate walking through the virtual environment. For example, the U.S. Army recently awarded a $57 million contract to develop the Dismounted Soldier Training System, a multi-person immersive virtual reality training simulator using head-mounted displays and joysticks mounted on each soldier’s weapon for locomotion [13].

In the past few years, advances in wide-area motion tracking technology have made natural walking feasible in larger physical workspaces than were previously supported by traditional electromagnetic trackers. Additionally, portable rendering devices have made it possible to provide untethered movement through large-scale immersive spaces, such as the HIVE system developed at Miami University [29], and even outdoor environments with the integration of GPS tracking. Although it is now feasible to design virtual environments that can be explored entirely through natural walking, the finite dimensions of the physical tracking space will ultimately limit the size of the virtual environment that can be represented. To address this limitation, researchers developed redirected walking, a technique that subtly manipulates the mapping between physical and virtual motions, thereby enabling real walking through potentially expansive virtual worlds in comparably limited physical workspaces. In this chapter, we present design considerations for employing redirected walking techniques in immersive training applications. We also demonstrate an alternative redirection technique designed for mixed reality environments that is drastically different from previous implementations, and discuss the challenges and future work we have identified based on feedback from demonstrations and military personnel.

2 Redirected Walking

Redirected walking exploits imperfections in human perception of self-motion by either amplifying or diminishing a component of the user’s physical movements [14]. For example, when the user rotates his head, the change in head orientation is measured by the system, and a scale factor is applied to the rotation in the virtual world. The net result is a gradual rotation of the entire virtual world around the user’s head position, which in turn alters their walking direction in the real world. These rotation gains are most effective when applied during head turns while the user is standing still [6] or during body turns as the user is walking around [3]. However, the virtual world can also be rotated as the user walks in a straight line in the virtual world, a manipulation known as curvature gains. If these manipulations are applied slowly and gradually, the user will unknowingly compensate for the rotation, resulting in a walking path that is curved in the real world. Studies have shown that the magnitude of path curvature that can be applied without becoming perceptible depends on the user’s velocity [11].
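To make the two manipulations concrete, the following minimal Python sketch tracks the accumulated rotation of the virtual world about the user's head. It is an illustration under simplifying assumptions (yaw-only rotation, angles in degrees, a hypothetical `RedirectionState` class), not the implementation used in any particular system. A rotation gain scales each frame's physical head turn, and the difference between the virtual and the physical turn is absorbed by rotating the world. A curvature gain injects a rotation proportional to the distance walked, so that a straight virtual path corresponds to a physical arc of the chosen radius.

```python
import math
from dataclasses import dataclass


@dataclass
class RedirectionState:
    # Accumulated rotation (degrees) of the virtual world about the user's head.
    world_offset_deg: float = 0.0

    def on_head_turn(self, physical_delta_deg: float, rotation_gain: float) -> float:
        """Scale one frame's physical yaw change and return the virtual yaw change.

        A gain above 1 makes the virtual turn larger than the physical one;
        the difference is absorbed by rotating the virtual world.
        """
        virtual_delta = rotation_gain * physical_delta_deg
        self.world_offset_deg += virtual_delta - physical_delta_deg
        return virtual_delta

    def on_forward_step(self, distance_m: float, radius_m: float, direction: int = 1) -> float:
        """Curvature gain: inject a world rotation proportional to distance walked.

        Walking straight in the virtual world then maps to a physical arc of
        radius_m. direction is +1 or -1; the sign convention is arbitrary here.
        """
        injected_deg = direction * math.degrees(distance_m / radius_m)
        self.world_offset_deg += injected_deg
        return injected_deg
```

For example, with a gain of 1.5, a 90° physical head turn produces a 135° virtual turn, and the world absorbs the remaining 45°. Consistent with the text, a real system would apply such gains slowly and gradually rather than switching them abruptly.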

The illusion induced by redirected walking works perceptually because vision tends to dominate over vestibular sensations when these two modalities are in conflict [1]. In practice, redirection is useful in preventing the user from exiting the boundaries of the physical workspace. From the user’s perspective, it will appear as though they are proceeding normally through the virtual environment, but in reality they will be unknowingly walking in circles. Thus, when applied properly, redirected walking can be leveraged to allow a user to physically walk through a large and expansive virtual world using a real world tracking area that is substantially smaller in size, an advantage that is especially useful for training environments.

Redirected walking is a promising method for enabling natural locomotion in virtual environments; however, there is a perceptual limit to the magnitude of conflict between visual and vestibular sensations. Excessive manipulation can become noticeable to the user, or at worst cause simulator sickness or disorientation. As a result, an important focus of research has been to quantify the detection thresholds for redirected walking techniques. Psychophysical studies have found that users can be physically turned approximately 49% more or 20% less than the perceived virtual rotation, and can be curved along a circular arc with a radius of at least 22 m while believing they are walking in a straight line [21]. Because of these limits, deploying redirected walking in arbitrary environment models remains a practical challenge: if the users’ motions are not easily predictable, redirection may not be applied quickly enough to prevent them from walking outside the boundaries of the tracked space.
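The thresholds above can be turned into simple checks and a back-of-the-envelope calculation. The sketch below hard-codes the values quoted from [21]: a physical-to-virtual rotation ratio between 0.80 and 1.49, and a minimum curvature radius of 22 m. The function names are hypothetical, and the thresholds are averages from one study rather than guarantees for every user.

```python
import math

# Values quoted in the text from [21]; population averages, not per-user guarantees.
MIN_PHYSICAL_TO_VIRTUAL = 0.80  # physically turned 20% less than the virtual rotation
MAX_PHYSICAL_TO_VIRTUAL = 1.49  # physically turned 49% more than the virtual rotation
MIN_CURVATURE_RADIUS_M = 22.0   # smallest arc radius reported as unnoticed


def rotation_is_subtle(physical_deg: float, virtual_deg: float) -> bool:
    """True if the physical/virtual rotation ratio lies inside the reported range."""
    if virtual_deg == 0:
        raise ValueError("virtual rotation must be non-zero")
    ratio = physical_deg / virtual_deg
    return MIN_PHYSICAL_TO_VIRTUAL <= ratio <= MAX_PHYSICAL_TO_VIRTUAL


def max_curvature_deg_per_m(radius_m: float = MIN_CURVATURE_RADIUS_M) -> float:
    """Largest world rotation per meter walked on an arc of the given radius."""
    return math.degrees(1.0 / radius_m)


def arc_length_for_turn(turn_deg: float, radius_m: float = MIN_CURVATURE_RADIUS_M) -> float:
    """Distance a user must walk to be redirected by turn_deg using curvature alone."""
    return radius_m * math.radians(turn_deg)
```

With a 22 m radius, `max_curvature_deg_per_m()` evaluates to roughly 2.6° per meter, and `arc_length_for_turn(90.0)` to about 34.6 m. A circle of that radius is far larger than a tracking area of approximately 10 × 10 m such as the one described in Sect. 3.1, which illustrates why curvature gains alone are hard to rely on and why head and body turns are valuable opportunities for redirection.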

3 Practical Considerations for Training Environments

While redirected walking has been shown to work perceptually, these techniques have yet to be transitioned into active training simulators. In this section, we present practical considerations for using redirected walking techniques in training environments, and discuss recent research and design mechanics that have begun to address the challenges of deploying redirected walking in practical applications.

3.1 Impact of Redirection on Spatial Orientation

It is important to consider how soldiers will react when self-motion manipulation techniques are used in an immersive training simulator. Such applications often require users to rapidly assess the environment and respond quickly to perceived threats. However, when users are redirected, the mapping between the real and virtual worlds is manipulated. If a user reacts instinctively based on the spatial model of the real world, this could lead to confusion or poor performance in the virtual simulator, which may negatively impact the training value. Thus, we performed a study of the effect of redirection on spatial orientation relative to both the real and virtual worlds to determine which world model users would favor in spatial judgments [26].

Fig. 14.1 Walkthrough of the pointing task in the spatial orientation experiment. a Participants pointed at a target in the real world. b Next, they put on the head-mounted display and pointed at a visible target in the virtual world. c–d After being redirected by \(90^{\circ }\), participants pointed back to where they thought the real and virtual targets were. Participants generally pointed to target positions as if they had been rotated along with the virtual world, instead of to their original locations

Participants in the study performed a series of pointing tasks while wearing a Fakespace Wide 5 head-mounted display in our tracking area of approximately \(10 \times 10\,\mathrm{m}\). First, they were asked to remove the display and point at a real target using a Wiimote mounted on a pistol grip, which was also tracked by the motion capture system (see Fig. 14.1a). Next, they put the display back on and pointed to a different target that was visible in the virtual environment (see Fig. 14.1b). Participants were then asked to walk down a virtual road and visit a virtual room, during which they were redirected by \(90^{\circ }\). After leaving the room and walking to the end of the road, they were asked to point back to where they thought the original real and virtual targets were, even though these were no longer visible (see Fig. 14.1c–d).

The results from this experiment showed that participants pointed to the real targets as if they had been rotated along with the virtual world. In other words, their spatial models of the real world also appeared to be updated by the manipulations to the virtual world. In general, we speculate that users will often trust what they see in virtual environments, and will therefore tend to rely on visual information over vestibular sensation for spatial judgments. Our observations, both from this experiment and from our own informal tests, suggest that it is very difficult to hold on to both the real world and virtual world spatial models simultaneously. These findings suggest that redirected walking is highly promising for use in immersive training environments, as we can expect users to respond correctly to the virtual content with little or no spatial interference from residual memories of the real world.
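One way to score such pointing trials is to compare each pointing direction against two hypotheses: the target's original location, and that location rotated by the amount of redirection. The sketch below is a hypothetical analysis, not the procedure reported in [26]. In particular, it approximates the redirection as a single rotation about one pivot point, whereas redirected walking actually rotates the world gradually about the user's moving head. Coordinates are assumed to be in meters and angles in degrees, counterclockwise from the +x axis.

```python
import math


def bearing_deg(origin, target):
    """Bearing from origin to target in degrees, counterclockwise from +x."""
    dx, dy = target[0] - origin[0], target[1] - origin[1]
    return math.degrees(math.atan2(dy, dx))


def wrap_deg(angle):
    """Wrap an angle to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def rotate_about(point, pivot, angle_deg):
    """Rotate a 2D point counterclockwise about a pivot by angle_deg."""
    a = math.radians(angle_deg)
    x, y = point[0] - pivot[0], point[1] - pivot[1]
    return (pivot[0] + x * math.cos(a) - y * math.sin(a),
            pivot[1] + x * math.sin(a) + y * math.cos(a))


def closer_hypothesis(user_pos, pointed_deg, target, pivot, redirect_deg):
    """Return 'original' or 'rotated', whichever target location the pointing matches better."""
    err_original = abs(wrap_deg(pointed_deg - bearing_deg(user_pos, target)))
    rotated_target = rotate_about(target, pivot, redirect_deg)
    err_rotated = abs(wrap_deg(pointed_deg - bearing_deg(user_pos, rotated_target)))
    return "original" if err_original <= err_rotated else "rotated"
```

For a user at the origin, a target at (5, 0), and a 90° rotation about the origin, pointing at 90° is classified as `rotated`, the pattern of responses described above, while pointing at 0° is classified as `original`.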

3.2 Augmenting Effectiveness of Redirected Walking

In order to increase the utility of redirected walking in immersive training scenarios, we have explored possibilities for augmenting the magnitude of redirection that can be achieved without becoming noticeable to the user. In particular, the interface between the ground and the user’s feet is frequently taken for granted, but if floor contact can be manipulated, it may be possible to curve the user’s walking path more strongly. To probe this effect, we constructed shoe attachment prototypes designed to introduce an angular bias to users’ footsteps. These attachments were cut from a section of NoTrax rubber finger mat in the shape of the sole and were designed to be worn around the user’s existing shoes (see Fig. 14.2a). The bottom of the mat contains a multitude of floor-contact elements that were trimmed at 45–60\(^{\circ }\) diagonals in a uniform direction (see Fig. 14.2b). During forward steps, the user’s heel typically makes contact with the floor first. As the rest of the foot rolls forward, the floor-contact elements that begin to bear new weight buckle and pull towards one side due to the diagonal trim. This effect progresses towards the front of the foot, resulting in a net rotation of the shoe attachment about the heel.

Fig. 14.2 a Attachments were designed to fit around the user’s existing shoes and induce an angular rotation of the user’s foot about the heel. b The bottom of each shoe attachment comprised a multitude of rubber floor-contact elements that were diagonally trimmed, causing them to buckle and pull towards one side as weight is applied

Informal testing with our left-biased prototype has provided anecdotal evidence that haptic foot manipulation may be useful in conjunction with redirected walking. In blindfolded walking tests, users appeared to gravitate slightly more towards the left than when walking normally. To quantify the degree of bias, we attached LED markers to the user’s foot and tracked its orientation before and after each footstep. Wearing the attachments resulted in an average foot rotation of approximately \(4^{\circ }\) per step when walking slowly. When walking quickly or running, however, the effect is reduced because the foot hits the ground with too much speed and force for the progressive buckling of the floor-contact elements to induce an angular bias.
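The per-step measurement described above amounts to averaging the signed yaw change of the tracked foot across steps. The sketch assumes yaw angles in degrees for the foot immediately before and after each step (the function name and input format are hypothetical, and positive values are taken to mean counterclockwise rotation). Each difference is wrapped so that a step crossing the ±180° seam is not misread as a near-full turn.

```python
def mean_step_rotation_deg(yaw_before, yaw_after):
    """Average signed foot rotation per step from tracked yaw angles (degrees).

    yaw_before[i] and yaw_after[i] are the foot's yaw just before and just
    after step i. Each difference is wrapped to [-180, 180).
    """
    if len(yaw_before) != len(yaw_after) or not yaw_before:
        raise ValueError("need equal-length, non-empty sequences")
    deltas = [((after - before + 180.0) % 360.0) - 180.0
              for before, after in zip(yaw_before, yaw_after)]
    return sum(deltas) / len(deltas)
```

For instance, the made-up readings `[0, 10, 178]` before and `[4, 14, -178]` after each correspond to a 4° rotation, so the function returns 4.0; these values are illustrative, not measured data.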

These prototypes have indicated that it is possible to introduce a bias into a user’s walking path using an intermediary between the ground and the user’s shoes. However, formal study is required to determine whether this effect can be used to augment redirected walking techniques. Our long-term vision is to design a pair of “active shoes” that can dynamically alter the rotational bias as the user explores a virtual environment. Such devices, if proven effective, would be useful for training simulators that need to maximize the effectiveness of redirected walking techniques. We also suggest that other, often neglected sensory modalities may be useful for augmenting redirected walking. For example, previous studies have shown that spatialized audio can influence the perception of self-motion [15], but it has yet to be explored in conjunction with redirection techniques.

3.3 Designing Experiences for Redirected Walking

The effectiveness of redirected walking depends largely upon the users’ motions: the virtual world can be rotated faster to redirect the user’s walking path during turns of the head or body. Unfortunately, user behavior in immersive simulators is often unpredictable, and if the user chooses to follow an unexpected route, the redirected walking technique may fail to prevent them from exiting the tracking space. However, the content of the virtual world can be designed to support redirected walking by subtly influencing and guiding user behavior. We have identified three design mechanics that can be leveraged to maximize the effectiveness of redirected walking: attractors, detractors, and distractors. We further suggest that in the context of immersive training, these mechanics should be ecologically valid and seamlessly merged into the content of the training scenario, so that the redirection will be as unobtrusive as possible.

Attractors are designed to make the user walk towards a specific location or direction. For example, an environment to train soldiers how to search for improvised explosive devices may include suspicious objects or containers that attract users to come investigate them. Alternatively, avatars have also been shown to be highly promising for supporting redirected walking (e.g. [11]). This may be especially useful in the context of training, since soldiers typically operate in squads. A virtual squadmate can move around the virtual environment and communicate with users to entice them to walk towards locations that would be advantageous for redirection.

Detractors are obstacles in the virtual environment that can be employed to prevent users from approaching inaccessible areas or force them to take a less direct route through the environment. For example, researchers have used partially opened doors to elicit body turns while navigating through doorways instead of walking straight through, thus allowing greater amounts of redirection to be applied [2]. Again, we suggest that an avatar may be ideally suited for such a role. Studies of proxemics have shown that people will attempt to maintain a comfortable distance from another person, and this social behavior has also been replicated when interacting with a virtual character [8]. If the user is approaching the boundaries of the tracking space, a virtual squadmate might walk in front, thereby forcing the user to turn and providing an opportunity for redirection.
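Deciding when a detractor should step in requires some estimate of whether the user is about to reach the edge of the tracking space. The sketch below is one simple, hypothetical way to do this: it computes the distance from the user's physical position to the wall of a rectangular tracking area along the current walking heading, and triggers when that distance falls below a margin. It assumes the user is inside the area; the 10 × 10 m default mirrors the tracking area described in Sect. 3.1, and the 2 m margin is an arbitrary placeholder.

```python
import math


def distance_to_boundary(x, y, heading_deg, width, depth):
    """Distance (m) from (x, y) to the edge of a [0, width] x [0, depth] area along heading.

    Assumes (x, y) lies inside the area; heading is counterclockwise from +x.
    """
    dx = math.cos(math.radians(heading_deg))
    dy = math.sin(math.radians(heading_deg))
    candidates = []
    if dx > 1e-9:
        candidates.append((width - x) / dx)
    elif dx < -1e-9:
        candidates.append(-x / dx)
    if dy > 1e-9:
        candidates.append((depth - y) / dy)
    elif dy < -1e-9:
        candidates.append(-y / dy)
    # dx and dy cannot both be ~0 for a unit heading vector, so candidates is non-empty.
    return min(candidates)


def should_trigger_detractor(x, y, heading_deg, width=10.0, depth=10.0, margin_m=2.0):
    """Hypothetical trigger: fire when the user would reach a wall within margin_m."""
    return distance_to_boundary(x, y, heading_deg, width, depth) < margin_m
```

A user standing at (9, 5) and heading along +x is 1 m from the wall, so `should_trigger_detractor(9, 5, 0)` returns `True`. A fuller system would also account for walking speed and the time needed for the virtual character to move into position.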

Distractors were first introduced by Peck et al. to capture the user’s attention and elicit head turns during reorientation [12]. This design mechanic has many potential uses in training environments. For example, explosions, gunfire, and vehicles are just a few events that might be encountered in a military combat simulator. These phenomena provide opportune moments for redirection, while the user’s attention has been diverted away from the act of walking. We suggest that any practical training scenario seeking to use redirected walking techniques should be designed to make maximal use of these opportunities for distraction.

4 Redirection in Mixed Reality Environments

Mixed reality experiences that combine elements from the physical and virtual worlds have also been a focus for training applications, such as the Infantry Immersion Trainer at the Marine Corps Base Camp Pendleton [10]. Traditionally, mixed reality is often used to refer to the visual merging of real and virtual elements into a single scene. However, in the Mixed Reality Lab at the Institute for Creative Technologies, we are particularly interested in enhancing virtual experiences using modalities beyond just visuals, such as passive haptic feedback using real objects that are aligned with virtual counterparts. Experiments have shown that the ability to reach out and physically touch virtual objects substantially enhances the experience of the environment when using head-mounted displays [5].

Because redirected walking requires a continuous rotation of the virtual environment about the user, it disrupts the spatial alignment between virtual objects and their real world counterparts in mixed reality scenarios. While researchers have demonstrated that it is possible to combine redirected walking with passive haptic feedback, solutions have been limited in their applicability. For example, Kohli et al. presented an environment that redirects users to walk between multiple virtual cylindrical pedestals that are aligned with a single physical pedestal [7]. Unfortunately, this solution does not generalize to other types of geometry that are not perceptually invariant to rotation (e.g. non-cylindrical objects). Steinicke et al. extended this approach by showing that multiple virtual objects can be mapped to proxy props that need not match the haptic properties of the virtual object identically [20]. However, due to the gradual virtual world rotations required by redirected walking, synchronizing virtual objects with corresponding physical props remains a practical challenge.

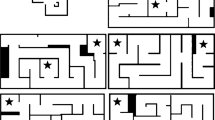

Recent research has presented a drastically different approach to redirection that does not require gradual rotations of the virtual world. This technique, known as change blindness redirection, reorients the user by applying instantaneous alterations to the architecture of the virtual world behind the user’s back. So long as the user does not directly observe the manipulation visually, minor structural changes to the virtual environment, such as the physical orientation of doorways (see Fig. 14.3), are difficult to detect and most often go completely unnoticed. Perceptual studies of this technique have shown it to provide a compelling illusion—out of 77 users tested across two experiments, only one person noticed that a scene change had occurred [22]. Furthermore, because this technique shifts between discrete environment states, it is much easier to deploy in mixed reality applications that provide passive haptic feedback [25].
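The key constraint in change blindness redirection is that the manipulation happens only while the modified element is out of sight. A minimal sketch of that check compares the horizontal bearing to a door against the user's view direction and a field-of-view value supplied by the caller. The `margin_deg` safety band, the layout names, and the dictionary-based state are hypothetical, and a real system may need additional conditions, such as the user being engaged in a task.

```python
import math


def is_outside_view(user_pos, view_yaw_deg, object_pos, fov_deg, margin_deg=10.0):
    """True if object_pos lies outside the horizontal field of view (plus a margin)."""
    dx, dy = object_pos[0] - user_pos[0], object_pos[1] - user_pos[1]
    bearing = math.degrees(math.atan2(dy, dx))
    # Unsigned angle between the view direction and the object, in [0, 180].
    offset = abs((bearing - view_yaw_deg + 180.0) % 360.0 - 180.0)
    return offset > fov_deg / 2.0 + margin_deg


def maybe_swap_door(state, user_pos, view_yaw_deg, door_pos, fov_deg):
    """Switch to the alternate layout only while the door is out of view.

    state is a hypothetical dict holding the current layout name.
    Returns True if the scene change was applied on this call.
    """
    if state["layout"] == "original" and is_outside_view(
            user_pos, view_yaw_deg, door_pos, fov_deg):
        state["layout"] = "door_moved"
        return True
    return False
```

With the user at the origin facing +x and a 100° field of view, a door at (-3, 0) lies directly behind, so the swap is applied; a door at (3, 0) is in plain view, so it is not.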

Fig. 14.4 (top row) Overhead map views of the virtual environment through the three stages of change blindness redirection, with the user’s location indicated by the yellow marker. The blue rectangle indicates the boundaries of the tracking area, and the user’s path through the virtual world is plotted in red. (middle row) The view from an overhead camera mounted in the tracking space. (bottom row) The virtual environment as viewed through the head-mounted display. a The user walks down the gravel path and enters the first virtual building. b The user enters the back room of the building. When the user searches through the crates, the door behind him is moved. c The user exits and continues down the same gravel path. The original layout is restored as the user approaches the second virtual building

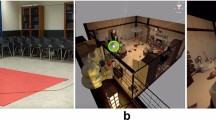

Figure 14.4 demonstrates change blindness redirection being used in a mixed reality environment that combines synthetic visuals with a passive haptic gravel walking surface. In our example application, themed after environments that might be used for training scenarios, users are instructed to search for a cache of weapons hidden in a desert village consisting of a gravel road connecting a series of buildings (see Fig. 14.4a). Inside each building, one of the doors is moved while users are looking inside strategically placed containers (see Fig. 14.4b). Upon exiting the building, users are located at the opposite end of the gravel road from where they entered, allowing them to walk repeatedly over the same surface to enter the next building (see Fig. 14.4c). Thus, the gravel road could be extended indefinitely, so long as the user stops to explore each building along the way. Of course, if users choose to continue walking straight down the road without stopping, an intervention would be required to prevent them from exiting the physical workspace. Feedback from demonstrations and informal testing of this environment suggests that the physical sensation of walking on a gravel surface provides a compelling illusion that makes the virtual environment seem substantially more real.

Our vision is to provide a dynamic mixed reality experience with physical props that can be moved to a different location in the tracking space when the user is redirected. Dynamically moving props would be difficult and unpredictable with redirected walking techniques that use gradual, continuous rotations. However, because change blindness redirection shifts between predictable discrete environment states, it would be relatively simple to mark the correct locations for each prop on the floor, and have assistants move them to the appropriate place whenever a scene change is applied to the environment. Thus, we expect spatial manipulation techniques to prove useful for mixed reality training scenarios that incorporate a variety of passive haptic stimuli such as walking surfaces, furniture, and other physical props.
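Because each scene change moves the environment between a small number of known states, the assistants' task can be reduced to a lookup table of floor marks per state. The sketch below illustrates this bookkeeping with hypothetical state names, prop names, and coordinates (placeholders, not measurements from any real environment). It lists which props must be carried to a new mark when the environment switches from one state to another.

```python
# Hypothetical floor marks (meters, tracking-space coordinates) for each
# discrete environment state; the values are placeholders, not measured data.
PROP_LAYOUTS = {
    "state_a": {"crate": (2.0, 3.0), "table": (7.5, 1.0)},
    "state_b": {"crate": (8.0, 3.0), "table": (2.5, 9.0)},
}


def props_to_move(current, nxt, layouts=PROP_LAYOUTS):
    """List (prop, from_mark, to_mark) moves required for a scene change.

    from_mark is None when the prop does not appear in the current state.
    """
    moves = []
    for prop, target in layouts[nxt].items():
        source = layouts[current].get(prop)
        if source != target:
            moves.append((prop, source, target))
    return moves
```

Switching from `state_a` to `state_b` yields two moves, one for the crate and one for the table, while a transition to the same state yields an empty list. A prop present only in the new state is reported with `None` as its source, meaning it has to be brought in rather than moved.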

5 Challenges and Future Directions

In this section, we discuss the practical challenges and future directions for transitioning redirected walking into active training environments, based on feedback we have received from public demonstrations and discussions with domain experts and military personnel.

Automated redirection in arbitrary environments. Much of the previous research has focused on purpose-built environments with constrained scenarios designed to test individual techniques. In a practical training setting, however, users will not always follow the designer’s expectations. A deployable system would need to determine automatically how to redirect the user optimally without imposing unnatural restrictions on freedom of movement. Automating redirection is a non-trivial problem, as it requires analyzing the scene structure, predicting the user’s walking path within the virtual and physical spaces, selecting the most appropriate technique based on the current state of the user and system, and gracefully intervening when failure cases are encountered.

Redirection with multiple users. Small squad training is a notable topic of interest for the U.S. Army, and the possibility of employing redirected walking with multiple users is one of the most frequent questions we have received from military personnel. To the best of our knowledge, research in redirected walking has so far focused exclusively on the single-user experience. We believe that it is possible to redirect multiple users within a single virtual environment, but doing so would introduce new challenges when users need to interact and their virtual world coordinate systems do not align well with each other. Thus, redirected walking with multiple simultaneous users is an important area for future work.
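The coordinate-system problem can be illustrated by modeling each user's accumulated redirection as a separate 2D rigid transform (rotation plus translation) from physical to virtual coordinates. This is a simplifying, hypothetical model rather than a proposed multi-user method; the sketch maps both users into the shared virtual world and compares their physical and virtual separations, which can disagree once the transforms diverge.

```python
import math


def to_virtual(p, theta_deg, t):
    """Map a physical 2D point into the virtual world with one user's rigid transform.

    theta_deg is the user's accumulated world rotation; t is a (tx, ty) offset.
    """
    a = math.radians(theta_deg)
    return (p[0] * math.cos(a) - p[1] * math.sin(a) + t[0],
            p[0] * math.sin(a) + p[1] * math.cos(a) + t[1])


def frame_mismatch(p_a, p_b, xf_a, xf_b):
    """Return (physical_distance, virtual_distance) between two redirected users.

    xf_a and xf_b are (theta_deg, (tx, ty)) tuples, one per user.
    """
    va = to_virtual(p_a, *xf_a)
    vb = to_virtual(p_b, *xf_b)
    return math.dist(p_a, p_b), math.dist(va, vb)
```

If two users stand 1 m apart physically but one user's virtual frame has been shifted by 10 m, they appear 11 m apart in the virtual world: a physical collision risk that neither user can see.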

Evaluating impact on training value. While research thus far has shown promising results for redirected walking in virtual environments, an open question remains whether these techniques will be compelling and effective specifically in a training context. Many studies conducted by the virtual reality community draw their participants from either the general population or university students. Soldiers, however, are a self-selected population with specialized skills and training, and their experiences in an immersive training simulator could differ drastically from those of a person randomly drawn from the general population. Before redirected walking can be transitioned to these practical environments, there is a need to understand the impact that these techniques will have on learning gains and training outcomes. Thus, domain-specific evaluation remains an important focus for future studies.

References

Berthoz A (2000) The brain’s sense of movement. Harvard University Press, Cambridge

Bruder G, Steinicke F, Hinrichs K, Lappe M (2009) Arch-explore: a natural user interface for immersive architectural walkthroughs. In: IEEE symposium on 3D user interfaces, pp 145–152

Bruder G, Steinicke F, Hinrichs K, Lappe M (2009) Reorientation during body turns. In: Joint virtual reality conference of EGVE-ICAT-EuroVR, pp 145–152

Darken RP, Cockayne WR, Carmein D (1997) The omni-directional treadmill: a locomotion device for virtual worlds. In: ACM symposium on user interface software and technology, pp 213–221

Insko BE (2001) Passive haptics significantly enhances virtual environments. Ph.D. thesis, University of North Carolina, Chapel Hill

Jerald J, Peck TC, Steinicke F, Whitton MC (2008) Sensitivity to scene motion for phases of head yaws. In: Symposium on applied perception in graphics and visualization, ACM Press, New York, pp 155–162. doi:10.1145/1394281.1394310

Kohli L, Burns E, Miller D, Fuchs H (2005) Combining passive haptics with redirected walking. In: International conference on artificial reality and telexistence, ACM Press, New York, pp 253–254. doi:10.1145/1152399.1152451

Llobera J, Spanlang B, Ruffini G, Slater M (2010) Proxemics with multiple dynamic characters in an immersive virtual environment. ACM Trans Appl Percept 8(1):1–12. doi:10.1145/1857893.1857896

Medina E, Fruland R, Weghorst S (2008) Virtusphere: walking in a human size VR “hamster ball”. In: Human factors and ergonomics society annual meeting, pp 2102–2106

Muller P, Schmorrow D, Buscemi T (2008) The infantry immersion trainer: today’s holodeck. Marine Corps Gazette

Neth CT, Souman JL, Engel D, Kloos U, Bülthoff HH, Mohler BJ (2011) Velocity-dependent dynamic curvature gain for redirected walking. In: Proceedings of the IEEE virtual reality, pp 151–158

Peck TC, Fuchs H, Whitton MC (2009) Evaluation of reorientation techniques and distractors for walking in large virtual environments. IEEE Trans Vis Comput Graph 15(3):383–394. doi:10.1109/TVCG.2008.191

Quinn K (2011) US Army to get dismounted soldier training system. Defense News Train Simul J

Razzaque S (2005) Redirected walking. Ph.D. thesis, University of North Carolina, Chapel Hill

Riecke BE, Feuereissen D, Rieser JJ (2010) Spatialized sound influences biomechanical self-motion illusion (“Vection”). In: Symposium on applied perception in graphics and visualization, p 158

Ruddle RA, Lessels S (2009) The benefits of using a walking interface to navigate virtual environments. ACM Trans Comput Hum Interact 16(1):1–18. doi:10.1145/1502800.1502805

Schwaiger MC, Thümmel T, Ulbrich H (2007) A 2D-motion platform: the cybercarpet. In: Joint EuroHaptics conference and symposium on haptic interfaces for virtual environment and teleoperator systems. IEEE, pp 415–420. doi:10.1109/WHC.2007.1

Slater M (2009) Place illusion and plausibility can lead to realistic behaviour in immersive virtual environments. Philos Trans R Soc Lond B Biol Sci 364(1535):3549–3557. doi:10.1098/rstb.2009.0138

Souman JL, Robuffo Giordano P, Schwaiger MC, Frissen I, Thümmel T, Ulbrich H, De Luca A, Bülthoff HH, Ernst MO (2011) CyberWalk: enabling unconstrained omnidirectional walking through virtual environments. ACM Trans Appl Percept 8(4):1–22. doi:10.1145/2043603.2043607

Steinicke F, Bruder G, Hinrichs K, Jerald J, Frenz H, Lappe M (2009) Real walking through virtual environments by redirection techniques. J Virtual Real Broadcast 6(2)

Steinicke F, Bruder G, Jerald J, Frenz H, Lappe M (2010) Estimation of detection thresholds for redirected walking techniques. IEEE Trans Vis Comput Graph 16(1):17–27. doi:10.1109/TVCG.2009.62

Suma EA, Clark S, Finkelstein SL, Wartell Z, Krum DM, Bolas M (2011) Leveraging change blindness for redirection in virtual environments. In: Proceedings of the IEEE virtual reality, pp 159–166

Suma EA, Finkelstein SL, Clark S, Goolkasian P, Hodges LF (2010) Effects of travel technique and gender on a divided attention task in a virtual environment. In: IEEE symposium on 3D user interfaces. IEEE, pp 27–34. doi:10.1109/3DUI.2010.5444726

Suma EA, Finkelstein SL, Reid M, Babu SV, Ulinski AC, Hodges LF (2010) Evaluation of the cognitive effects of travel technique in complex real and virtual environments. IEEE Trans Vis Comput Graph 16(4):690–702. doi:10.1109/TVCG.2009.93

Suma EA, Krum DM, Bolas M (2011) Redirection on mixed reality walking surfaces. In: IEEE VR workshop on perceptual illusions in virtual environments, pp 33–35

Suma EA, Krum DM, Finkelstein SL, Bolas M (2011) Effects of redirection on spatial orientation in real and virtual environments. In: IEEE symposium on 3D user interfaces, pp 35–38

Templeman JN, Denbrook PS, Sibert LE (1999) Virtual locomotion: walking in place through virtual environments. Presence Teleoper Virtual Environ 8(6):598–617. doi:10.1162/105474699566512

Usoh M, Arthur K, Whitton MC, Bastos R, Steed A, Slater M, Brooks FP (1999) Walking \(>\) walking-in-place \(>\) flying, in virtual environments. In: ACM conference on computer graphics and interactive techniques (SIGGRAPH). ACM Press, New York, pp 359–364. doi:10.1145/311535.311589

Waller D, Bachmann E, Hodgson E, Beall AC (2007) The HIVE: a huge immersive virtual environment for research in spatial cognition. Behav Res Methods 39(4):835–843

Zanbaka CA, Lok BC, Babu SV, Ulinski AC, Hodges LF (2005) Comparison of path visualizations and cognitive measures relative to travel technique in a virtual environment. IEEE Trans Vis Comput Graph 11(6):694–705. doi:10.1109/TVCG.2005.92


Copyright information

© 2013 Springer Science+Business Media New York

About this chapter

Cite this chapter

Suma, E.A., Krum, D.M., Bolas, M. (2013). Redirected Walking in Mixed Reality Training Applications. In: Steinicke, F., Visell, Y., Campos, J., Lécuyer, A. (eds) Human Walking in Virtual Environments. Springer, New York, NY. https://doi.org/10.1007/978-1-4419-8432-6_14


DOI: https://doi.org/10.1007/978-1-4419-8432-6_14


Publisher Name: Springer, New York, NY

Print ISBN: 978-1-4419-8431-9

Online ISBN: 978-1-4419-8432-6

eBook Packages: Engineering, Engineering (R0)