Abstract

We consider an information theoretic model of a communication channel with a time-varying probability law. Specifically, our model consists of a state-dependent discrete memoryless channel, in which the underlying state process is independent and identically distributed with known probability distribution, and for which the channel output at any time instant depends on the inputs and states only through their current values. For this channel, we provide a strong converse result for its capacity, explaining the structure of optimal transmission codes. Exploiting this structure, we obtain upper bounds for the reliability function when the transmitter is provided channel state information causally and noncausally. Instrumental to our proofs is a new technical result which provides an upper bound on the rate of codes with code words that are “conditionally typical over large message-dependent subsets of a typical set of state sequences.” This technical result is a nonstraightforward extension of an analogous result for a discrete memoryless channel without states; the latter provides a bound on the rate of a good code with code words of a fixed composition.


Keywords

- State dependent channel

- Channel state information

- Strong converse

- Reliability function

- Capacity

- Probability of error

- Gelfand–Pinsker channel

- Type

- Typical set

- DMC

1 Introduction

The information theoretic model of a communication channel for message transmission is described by the conditional probability law of the channel output given the input. For instance, the binary symmetric channel is a model for describing the communication of binary data in which noise may cause random bit-flips with a fixed probability. A reliable encoded transmission of a message generally entails multiple uses of the channel. In several applications, such as mobile wireless communication, digital fingerprinting, and storage memories, the probability law characterizing the channel can change with time. This time-varying behavior of the channel probability is typically described in terms of the evolution of the underlying channel condition, termed "state." The availability of channel state information (CSI) at the transmitter or receiver can enhance overall communication performance (cf. [7, 1, 6]).

We consider a state-dependent discrete memoryless channel (DMC), in which the underlying state process is independent and identically distributed (i.i.d.) with known probability mass function (PMF), and for which the channel output at any time instant depends on the inputs and states only through their current values. We address the cases of causal and noncausal CSI at the transmitter. In the former case, the transmitter has knowledge of all the past channel states as well as the current state; this model was introduced by Shannon [8]. In the latter case, the transmitter is provided access at the outset to the entire state sequence prevailing during the transmission of a message; see Gelfand–Pinsker [5]. We restrict ourselves to the situation where the receiver has no CSI, for receiver CSI can be accommodated by considering the states, too, as channel outputs.

Two information theoretic performance measures are of interest: channel capacity and reliability function. The channel capacity characterizes the largest rate of encoded transmission for reliable communication. The reliability function describes the best exponential rate of decay of decoding error probability with transmission duration for coding rates below capacity. The capacities of the models above with causal and noncausal CSI were characterized in classic papers by Shannon [8] and Gelfand–Pinsker [5]. The reliability function is not fully characterized even for a DMC without states; however, good upper and lower bounds are known, which coincide at rates close to capacity [9, 10, 3].

Our contributions are twofold. First, we provide a strong converse for the capacity of state-dependent channels, which explains the structure of optimal codes. Second, exploiting this structure, we obtain upper bounds for the reliability functions of the causal and noncausal CSI models. Instrumental to our proofs is a new technical result which provides an upper bound on the rate of codes with code words that are “conditionally typical over large message-dependent subsets of a typical set of state sequences.” This technical result is a nonstraightforward analog of [3, Lemma 2.1.4] for a DMC without states; the latter provides a bound on the rate of a good code with codewords of a fixed composition. A preliminary conference version of this work is in [11].

In the next section, we compile pertinent technical concepts and tools that will be used to prove our results. These standard staples can be found, for instance, in [3, 2]. The channel models are described in Sect. 22.3. Sections 22.4–22.6 contain our main results.

2 Preliminaries: Types, Typical Sets and Image Sets

Let \(\mathcal{X}\) be a finite set. For a sequence \(\mathbf{x} \in {\mathcal{X}}^{n}\), the type of \(\mathbf{x}\), denoted by \({Q}_{\mathbf{x}}\), is a pmf on \(\mathcal{X}\), where \({Q}_{\mathbf{x}}(x)\) is the relative frequency of \(x\) in \(\mathbf{x}\). Similarly, joint types are pmfs on product spaces. For example, the joint type of two given sequences \(\mathbf{x} \in {\mathcal{X}}^{n}\) and \(\mathbf{s} \in {\mathcal{S}}^{n}\) is a pmf \({Q}_{\mathbf{x},\mathbf{s}}\) on \(\mathcal{X}\times \mathcal{S}\), where \({Q}_{\mathbf{x},\mathbf{s}}(x,s)\) is the relative frequency of the tuple \((x,s)\) among the tuples \(\left ({x}_{t},{s}_{t}\right )\), \(t = 1,\ldots ,n\). Joint types of several n-length sequences are defined similarly.
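The definitions of type and joint type translate directly into code. The following Python sketch is an illustration added here, not part of the original treatment; the helper names `seq_type` and `joint_type` are hypothetical, exact rational arithmetic is used so relative frequencies compare exactly, and symbols that do not occur are simply omitted (their relative frequency is 0).

```python
from collections import Counter
from fractions import Fraction


def seq_type(x):
    """Type Q_x of a sequence x: the relative frequency of each symbol in x."""
    n = len(x)
    return {a: Fraction(c, n) for a, c in Counter(x).items()}


def joint_type(x, s):
    """Joint type Q_{x,s}: relative frequency of each pair (x_t, s_t), t = 1..n."""
    if len(x) != len(s):
        raise ValueError("sequences must have equal length")
    return seq_type(list(zip(x, s)))


x = "aabca"
s = "01101"
print(seq_type(x))       # {'a': Fraction(3, 5), 'b': Fraction(1, 5), 'c': Fraction(1, 5)}
print(joint_type(x, s))  # the pair ('a', '1') has relative frequency 2/5
```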

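The number of types of sequences in \({\mathcal{X}}^{n}\) is bounded above by \({(n + 1)}^{\vert \mathcal{X}\vert }\). Denoting by \({\mathcal{T}}_{Q}^{(n)}\) the set of all sequences in \({\mathcal{X}}^{n}\) of type Q, we note that

$$\begin{array}{rcl} {(n + 1)}^{-\vert \mathcal{X}\vert }\exp [nH(Q)] \leq \vert {\mathcal{T}}_{Q}^{(n)}\vert \leq \exp [nH(Q)].& & (22.1)\\ \end{array}$$

For any pmf P on \(\mathcal{X}\) and any type Q of sequences in \({\mathcal{X}}^{n}\),

$$\begin{array}{rcl} {P}^{n}(\mathbf{x}) =\exp [-n(H(Q) + D(Q\|P))],\quad \mathbf{x} \in {\mathcal{T}}_{Q}^{(n)},& & \\ \end{array}$$

from which, along with (22.1), it follows that

$$\begin{array}{rcl} {(n + 1)}^{-\vert \mathcal{X}\vert }\exp [-nD(Q\|P)] \leq {P}^{n}\left ({\mathcal{T}}_{Q}^{(n)}\right ) \leq \exp [-nD(Q\|P)].& & \\ \end{array}$$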
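These counting bounds can be checked numerically for small n by enumerating every type. The sketch below is an added illustration under stated assumptions: exp and log are taken to base 2, the pmf P has strictly positive entries, and the helper names are hypothetical. It uses the fact that every sequence in a type class has the same \({P}^{n}\)-probability, so \({P}^{n}({\mathcal{T}}_{Q}^{(n)})\) is the class size times that common probability.

```python
import math


def compositions(n, k):
    """All count vectors (n_1, ..., n_k) with sum n, i.e., all types of length-n sequences."""
    if k == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in compositions(n - first, k - 1):
            yield (first,) + rest


def type_class_size(counts):
    """|T_Q| = n! / prod_a (n Q(a))!, a multinomial coefficient (exact integer arithmetic)."""
    size = math.factorial(sum(counts))
    for c in counts:
        size //= math.factorial(c)
    return size


def verify_type_bounds(n, p, tol=1e-9):
    """Check (22.1) and the bounds on P^n(T_Q) for every type Q (base-2 exp/log).
    Assumes all entries of the pmf p are positive. Returns the number of types."""
    k = len(p)
    types = list(compositions(n, k))
    assert len(types) <= (n + 1) ** k                                   # polynomially many types
    for counts in types:
        q = [c / n for c in counts]
        h = -sum(v * math.log2(v) for v in q if v > 0)                  # H(Q)
        d = sum(v * math.log2(v / pa) for v, pa in zip(q, p) if v > 0)  # D(Q||P)
        size = type_class_size(counts)
        # (n+1)^{-|X|} 2^{nH(Q)} <= |T_Q| <= 2^{nH(Q)}
        assert n * h - k * math.log2(n + 1) - tol <= math.log2(size) <= n * h + tol
        # every x in T_Q has P^n(x) = prod_a P(a)^{n Q(a)}
        prob = size * math.prod(pa ** c for pa, c in zip(p, counts))
        upper = 2.0 ** (-n * d)
        assert upper * (n + 1) ** (-k) * (1 - tol) <= prob <= upper * (1 + tol)
    return len(types)


print(verify_type_bounds(10, [0.2, 0.3, 0.5]))  # 66: the bounds hold for all 66 types
```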

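Next, for a pmf P on \(\mathcal{X}\) and δ > 0, a sequence \(\mathbf{x} \in {\mathcal{X}}^{n}\) is P-typical with constant δ if

$$\begin{array}{rcl} \vert {Q}_{\mathbf{x}}(x) - P(x)\vert \leq \delta ,\quad x \in \mathcal{X},& & \\ \end{array}$$

and P(x) = 0 implies \({Q}_{\mathbf{x}}(x) = 0\). The set of all P-typical sequences with constant δ is called the P-typical set, denoted \({\mathcal{T}}_{[P]}^{(n)}\) (the dependence on δ is not displayed explicitly). Thus,

$$\begin{array}{rcl} {\mathcal{T}}_{[P]}^{(n)} =\left \{\mathbf{x} \in {\mathcal{X}}^{n} :\max_{x\in \mathcal{X}}\vert {Q}_{\mathbf{x}}(x) - P(x)\vert \leq \delta ,\ \text{and } {Q}_{\mathbf{x}}(x) = 0 \text{ whenever } P(x) = 0\right \}.& & \\ \end{array}$$

In general, \(\delta = {\delta }_{n}\) is assumed to satisfy the "δ-convention" [3], namely

$$\begin{array}{rcl} {\delta }_{n} \rightarrow 0,\qquad \sqrt{n}\,{\delta }_{n} \rightarrow \infty \quad \text{as } n \rightarrow \infty .& & (22.2)\\ \end{array}$$

The typical set has large probability. Precisely, for \(\delta = {\delta }_{n}\) as in (22.2),

$$\begin{array}{rcl} {P}^{n}\left ({\mathcal{T}}_{[P]}^{(n)}\right ) \geq 1 -\frac{\vert \mathcal{X}\vert } {4n{\delta }_{n}^{2}} \rightarrow 1.& & (22.3)\\ \end{array}$$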
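For a binary source, the probability of the typical set can be computed exactly by summing over type classes and compared with the Chebyshev-type lower bound \(1 - \vert \mathcal{X}\vert /(4n{\delta }_{n}^{2})\). This is a minimal sketch added for illustration: the helper names are hypothetical, and \({\delta }_{n} = {n}^{-1/3}\) is just one assumed choice satisfying the δ-convention.

```python
import math


def is_typical(x, p, delta):
    """P-typicality with constant delta: |Q_x(a) - P(a)| <= delta for every symbol a,
    and Q_x(a) = 0 whenever P(a) = 0. The pmf p is a dict {symbol: probability}."""
    n = len(x)
    counts = dict.fromkeys(p, 0)
    for sym in x:
        if sym not in counts:          # symbol outside the alphabet of P
            return False
        counts[sym] += 1
    for a, pa in p.items():
        q = counts[a] / n
        if (pa == 0 and q > 0) or abs(q - pa) > delta:
            return False
    return True


def binary_typical_prob(n, p1, delta):
    """Exact P^n(T_[P]) for an i.i.d. Bernoulli(p1) source on {0, 1}, 0 < p1 < 1,
    summing the binomial pmf over the typical type classes (k = number of ones)."""
    total = 0.0
    for k in range(n + 1):
        representative = [1] * k + [0] * (n - k)  # typicality depends only on the type
        if is_typical(representative, {0: 1 - p1, 1: p1}, delta):
            log_prob = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                        + k * math.log(p1) + (n - k) * math.log(1 - p1))
            total += math.exp(log_prob)
    return total


n = 1000
delta_n = n ** (-1 / 3)                   # an assumed sequence obeying the delta-convention
exact = binary_typical_prob(n, 0.3, delta_n)
bound = 1 - 2 / (4 * n * delta_n ** 2)    # right side of (22.3) with |X| = 2
print(exact, bound)                       # exact >= bound; bound is about 0.95
```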

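Consider sequences \(\mathbf{x} \in {\mathcal{X}}^{n}\), \(\mathbf{y} \in {\mathcal{Y}}^{n}\) of joint type \({Q}_{\mathbf{x},\mathbf{y}}\). The sequence \(\mathbf{y}\) has conditional type V given \(\mathbf{x}\) if \({Q}_{\mathbf{x},\mathbf{y}} = {Q}_{\mathbf{x}}V\) for some stochastic matrix \(V : \mathcal{X} \rightarrow \mathcal{Y}\). Given a stochastic matrix \(W : \mathcal{X} \rightarrow \mathcal{Y}\) and \(\mathbf{x} \in {\mathcal{X}}^{n}\), a sequence \(\mathbf{y} \in {\mathcal{Y}}^{n}\) of conditional type V is W-conditionally typical if for all \(x \in \mathcal{X}\):

$$\begin{array}{rcl} {Q}_{\mathbf{x}}(x)\,\vert V (y\mid x) - W(y\mid x)\vert \leq \delta ,\quad y \in \mathcal{Y},& & \\ \end{array}$$

and W(y∣x) = 0 implies V(y∣x) = 0. The set of all W-conditionally typical sequences conditioned on \(\mathbf{x} \in {\mathcal{X}}^{n}\) is denoted by \({\mathcal{T}}_{[W]}^{(n)}(\mathbf{x})\). In a manner similar to (22.3), it holds that

$$\begin{array}{rcl} {W}^{n}\left ({\mathcal{T}}_{[W]}^{(n)}(\mathbf{x})\mid \mathbf{x}\right ) \geq 1 -\frac{\vert \mathcal{X}\vert \vert \mathcal{Y}\vert } {4n{\delta }_{n}^{2}}.& & \\ \end{array}$$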
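Because \({Q}_{\mathbf{x},\mathbf{y}} = {Q}_{\mathbf{x}}V\), the condition \({Q}_{\mathbf{x}}(x)\vert V(y\mid x) - W(y\mid x)\vert \leq \delta\) is the same as \(\vert {Q}_{\mathbf{x},\mathbf{y}}(x,y) - {Q}_{\mathbf{x}}(x)W(y\mid x)\vert \leq \delta\), which is convenient to test from pair counts. A minimal sketch (hypothetical helper name; W stored as a dict of rows, with a BSC as the example channel):

```python
from collections import Counter


def is_conditionally_typical(x, y, W, delta):
    """W-conditional typicality of y given x with constant delta, tested in the form
    |Q_{x,y}(a, b) - Q_x(a) W(b|a)| <= delta for all (a, b), together with
    'W(b|a) = 0 implies V(b|a) = 0'. W is a dict of rows: W[a][b] = W(b|a)."""
    n = len(x)
    if len(y) != n:
        raise ValueError("x and y must have equal length")
    nx = Counter(x)
    nxy = Counter(zip(x, y))
    # a pair (a, b) occurring in (x, y) with W(b|a) = 0 violates the support condition
    if any(W.get(a, {}).get(b, 0.0) == 0.0 for (a, b) in nxy):
        return False
    for a, ca in nx.items():           # rows with Q_x(a) = 0 satisfy the test trivially
        for b, wb in W[a].items():
            if abs(nxy[(a, b)] / n - (ca / n) * wb) > delta:
                return False
    return True


bsc = {0: {0: 0.9, 1: 0.1}, 1: {0: 0.1, 1: 0.9}}  # BSC with crossover 0.1 (example)
x = [0] * 10 + [1] * 10
y = [1] + [0] * 9 + [0] + [1] * 9                   # one flip in each half of x
print(is_conditionally_typical(x, y, bsc, 0.01))    # True
```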

For a subset A of \(\mathcal{X}\), we shall also require estimates of the minimum cardinality of sets in \(\mathcal{Y}\) with significant W-conditional probability given x ∈ A. Precisely, a set \(B \subseteq \mathcal{Y}\) is an ε-image (0 < ε ≤ 1) of \(A \subseteq \mathcal{X}\) under \(W : \mathcal{X} \rightarrow \mathcal{Y}\) if W(B∣x) ≥ ε for all x ∈ A. The minimum cardinality of ε-images of A is termed the image size of A (under W), and is denoted by \({g}_{W}(A,\epsilon )\). Coding theorems in information theory use estimates of the rates of the image size of \(A \subseteq {\mathcal{X}}^{n}\) under \({W}^{n}\), i.e., \((1/n)\log {g}_{{W}^{n}}(A,\epsilon )\). In particular, for multiterminal systems, we compare the rates of image sizes of \(A \subseteq {\mathcal{X}}^{n}\) under two different channels \({W}^{n}\) and \({V}^{n}\). Precisely, given stochastic matrices \(W : \mathcal{X} \rightarrow \mathcal{Y}\) and \(V : \mathcal{X} \rightarrow \mathcal{S}\), for every 0 < ε < 1, δ > 0 and for every \(A \subseteq {\mathcal{T}}_{[{P}_{X}]}^{(n)}\), there exists an auxiliary rv U and associated pmfs \({P}_{UXY} = {P}_{U\mid X}{P}_{X}W\) and \({P}_{UXS} = {P}_{U\mid X}{P}_{X}V\) such that, for all n sufficiently large,

$$\begin{array}{rcl} \left \vert \frac{1} {n}\log {g}_{{W}^{n}}(A,\epsilon ) - H(Y \mid U) - t\right \vert < \delta ,\qquad \left \vert \frac{1} {n}\log {g}_{{V}^{n}}(A,\epsilon ) - H(S\mid U) - t\right \vert < \delta ,& & (22.4)\\ \end{array}$$

where \(0 \leq t \leq \min \{ I(U \wedge Y ),I(U \wedge S)\}\).
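For very small n, the image size \({g}_{{W}^{n}}(A,\epsilon )\) can be found by brute force, which makes the definition concrete even though (22.4) is only useful asymptotically. A minimal sketch, assuming toy alphabets (the search is exponential in \(\vert \mathcal{Y}{\vert }^{n}\)) and hypothetical helper names:

```python
import math
from itertools import combinations, product


def channel_prob(W, x, y):
    """W^n(y|x) = prod_t W(y_t|x_t) for a DMC with rows W[a][b] = W(b|a)."""
    return math.prod(W[a].get(b, 0.0) for a, b in zip(x, y))


def image_size(A, W, out_alphabet, eps):
    """Brute-force g_{W^n}(A, eps): the smallest |B|, B a subset of Y^n, with
    W^n(B|x) >= eps for every x in A. Toy sizes only."""
    n = len(next(iter(A)))
    outputs = list(product(out_alphabet, repeat=n))
    for size in range(1, len(outputs) + 1):
        for B in combinations(outputs, size):
            # small tolerance guards against rounding when eps is close to 1
            if all(sum(channel_prob(W, x, y) for y in B) >= eps - 1e-12 for x in A):
                return size, B
    raise ValueError("no eps-image exists")


bsc = {0: {0: 0.9, 1: 0.1}, 1: {0: 0.1, 1: 0.9}}
A = [(0, 0), (1, 1)]
size, B = image_size(A, bsc, (0, 1), 0.5)
print(size, B)              # 2 ((0, 0), (1, 1))
print(math.log2(size) / 2)  # the image-size rate (1/n) log g with n = 2
```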

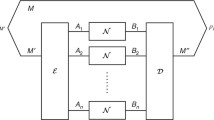

3 Channels with States

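Consider a state-dependent DMC \(W : \mathcal{X}\times \mathcal{S}\rightarrow \mathcal{Y}\) with finite input, state, and output alphabets \(\mathcal{X}\), \(\mathcal{S}\), and \(\mathcal{Y}\), respectively. The \(\mathcal{S}\)-valued state process \({\{{S}_{t}\}}_{t=1}^{\infty }\) is i.i.d. with known pmf \({P}_{S}\). The probability law of the DMC is specified by

$$\begin{array}{rcl} {W}^{n}(\mathbf{y}\mid \mathbf{x},\mathbf{s}) =\prod_{t=1}^{n}W({y}_{t}\mid {x}_{t},{s}_{t}),\quad \mathbf{x} \in {\mathcal{X}}^{n},\ \mathbf{s} \in {\mathcal{S}}^{n},\ \mathbf{y} \in {\mathcal{Y}}^{n}.& & \\ \end{array}$$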
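The product form of this law suggests a direct simulation: draw the state sequence i.i.d. from \({P}_{S}\), then pass each pair \(({x}_{t},{s}_{t})\) through W independently. The sketch below assumes alphabets small enough to store W as a dictionary of rows; the channel Y = X ⊕ S and all names are hypothetical illustrations.

```python
import math
import random


def sample_states(P_S, n, rng):
    """Draw an i.i.d. state sequence S_1, ..., S_n with pmf P_S (dict state -> prob)."""
    states, weights = zip(*P_S.items())
    return rng.choices(states, weights=weights, k=n)


def channel_output(W, x, s, rng):
    """Draw Y^n letter by letter: Y_t ~ W(.|x_t, s_t), with W[(a, st)] = {b: W(b|a, st)}."""
    y = []
    for a, st in zip(x, s):
        outs, probs = zip(*W[(a, st)].items())
        y.append(rng.choices(outs, weights=probs, k=1)[0])
    return y


def Wn(W, y, x, s):
    """Product law W^n(y|x, s) = prod_t W(y_t|x_t, s_t)."""
    return math.prod(W[(a, st)].get(b, 0.0) for a, st, b in zip(x, s, y))


# Hypothetical example: Y = X xor S with a fair binary state and no further noise.
W_xor = {(a, st): {a ^ st: 1.0} for a in (0, 1) for st in (0, 1)}
rng = random.Random(0)
s = sample_states({0: 0.5, 1: 0.5}, 8, rng)
x = [1, 0, 1, 1, 0, 0, 1, 0]
y = channel_output(W_xor, x, s, rng)
print(s, y, Wn(W_xor, y, x, s))   # y_t = x_t xor s_t, so W^n(y|x, s) = 1.0
```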

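An (M, n)-code with encoder CSI consists of the mappings (f, ϕ), where the encoder mapping \(f = \left ({f}_{1},\ldots ,{f}_{n}\right )\) is either causal, i.e.,

$$\begin{array}{rcl} {f}_{t} : \mathcal{M}\times {\mathcal{S}}^{t} \rightarrow \mathcal{X},\quad t = 1,\ldots ,n,& & \\ \end{array}$$

or noncausal, i.e.,

$$\begin{array}{rcl} {f}_{t} : \mathcal{M}\times {\mathcal{S}}^{n} \rightarrow \mathcal{X},\quad t = 1,\ldots ,n,& & \\ \end{array}$$

with \(\mathcal{M} =\{ 1,\ldots ,M\}\) being the set of messages. The decoder ϕ is a mapping

$$\begin{array}{rcl} \phi : {\mathcal{Y}}^{n} \rightarrow \mathcal{M}.& & \\ \end{array}$$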

We restrict ourselves to the situation where the receiver has no CSI. When the receiver, too, has CSI, our results apply in a standard manner by considering an associated DMC with augmented output alphabet \(\mathcal{Y}\times \mathcal{S}\).
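The causal/noncausal distinction is a constraint on which state symbols each \({f}_{t}\) may read. The following sketch (hypothetical helper and encoders; messages indexed from 0 rather than 1) checks causality by brute force: any two state sequences that agree up to time t must produce the same \({x}_{t}\).

```python
from itertools import product


def is_causal(f, messages, states, n):
    """Brute-force check that an encoder f(m, s) -> (x_1, ..., x_n) is causal, i.e.,
    each x_t depends on (m, s_1, ..., s_t) only."""
    for m in messages:
        for t in range(1, n + 1):
            seen = {}                                 # prefix s^t -> x_t seen so far
            for s in product(states, repeat=n):
                xt = f(m, s)[t - 1]
                if seen.setdefault(s[:t], xt) != xt:  # same prefix, different x_t
                    return False
    return True


def xor_encoder(m, s):
    """Hypothetical causal encoder: x_t = (t-th bit of m) xor s_t."""
    return tuple(((m >> t) & 1) ^ st for t, st in enumerate(s))


def lookahead_encoder(m, s):
    """Hypothetical noncausal encoder: every x_t uses the final state s_n."""
    return tuple(((m >> t) & 1) ^ s[-1] for t in range(len(s)))


print(is_causal(xor_encoder, range(4), (0, 1), 2))        # True
print(is_causal(lookahead_encoder, range(4), (0, 1), 2))  # False
```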

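The rate of the code is \((1/n)\log M\). The corresponding (maximum) probability of error is

$$\begin{array}{rcl} e(f,\phi ) =\max_{m\in \mathcal{M}}\sum_{\mathbf{s}\in {\mathcal{S}}^{n}}{P}_{S}^{n}(\mathbf{s})\,{W}^{n}\left ({\left ({\phi }^{-1}(m)\right )}^{c}\mid f(m,\mathbf{s}),\mathbf{s}\right ),& & \\ \end{array}$$

where \({\phi }^{-1}(m) =\{ \mathbf{y} \in {\mathcal{Y}}^{n} : \phi (\mathbf{y}) = m\}\) and \({(\cdot )}^{c}\) denotes complement.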
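To make the maximal error probability concrete, the sketch below evaluates e(f, ϕ) exactly for a toy code by summing over all state and output sequences, which is feasible only for very small n. The channel Y = X ⊕ S ⊕ Z with Z ~ Bernoulli(0.1), the encoders and the decoder are hypothetical examples, and messages are indexed 0, …, M − 1. An encoder that uses the CSI to cancel the state sees only the noise Z, whereas one that ignores the state does no better than guessing.

```python
import math
from itertools import product


def max_error_prob(f, phi, messages, P_S, W, out_alphabet, n):
    """Exact e(f, phi) = max_m sum_s P_S^n(s) W^n((phi^{-1}(m))^c | f(m, s), s),
    by exhaustive summation over S^n x Y^n (toy block lengths only).
    W[(x, s)] = {y: W(y|x, s)}; f(m, s) returns the whole codeword x^n."""
    worst = 0.0
    for m in messages:
        err = 0.0
        for s in product(P_S, repeat=n):
            p_s = math.prod(P_S[st] for st in s)
            x = f(m, s)
            for y in product(out_alphabet, repeat=n):
                if phi(y) != m:  # y lies outside the decoding set of m
                    err += p_s * math.prod(W[(a, st)].get(b, 0.0)
                                           for a, st, b in zip(x, s, y))
        worst = max(worst, err)
    return worst


def phi(y):
    """Hypothetical decoder: read the message bits back from y."""
    return sum(b << t for t, b in enumerate(y))


def uses_csi(m, s):
    """Hypothetical causal encoder that pre-cancels the state: x_t = bit_t(m) xor s_t."""
    return tuple(((m >> t) & 1) ^ st for t, st in enumerate(s))


def ignores_csi(m, s):
    """Hypothetical encoder that ignores the state: x_t = bit_t(m)."""
    return tuple((m >> t) & 1 for t in range(len(s)))


p = 0.1
W = {(a, st): {a ^ st: 1 - p, 1 - (a ^ st): p} for a in (0, 1) for st in (0, 1)}
P_S = {0: 0.5, 1: 0.5}
n, messages = 2, range(4)
print(max_error_prob(uses_csi, phi, messages, P_S, W, (0, 1), n))     # approximately 0.19
print(max_error_prob(ignores_csi, phi, messages, P_S, W, (0, 1), n))  # approximately 0.75
```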

Definition 22.1.

Given 0 < ε < 1, a number R > 0 is ε-achievable if for every δ > 0 and for all n sufficiently large, there exist (M, n)-codes (f, ϕ) with \((1/n)\log M > R - \delta \) and e(f, ϕ) < ε. The supremum of all ε-achievable rates is denoted by C(ε). The capacity of the DMC is

$$\begin{array}{rcl} C =\lim_{\epsilon \rightarrow 0}C(\epsilon ).& & \\ \end{array}$$

If C(ε) = C for 0 < ε < 1, the DMC is said to satisfy a strong converse [12]. This terminology reflects the fact that, for every 0 < ε < 1, codes of rate R > C must have e(f, ϕ) > ε for all n ≥ N(ε). (In contrast, a standard converse shows only that for R > C, e(f, ϕ) cannot be driven to 0 as n → ∞.)

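For a given pmf \({P}_{\tilde{X}\tilde{S}}\) on \(\mathcal{X}\times \mathcal{S}\) and an rv U with values in a finite set \(\mathcal{U}\), let \(\mathcal{P}\left ({P}_{\tilde{X}\tilde{S}},W\right )\) denote the set of all pmfs \({P}_{UXSY}\) on \(\mathcal{U}\times \mathcal{X}\times \mathcal{S}\times \mathcal{Y}\) with

$$\begin{array}{rcl} {P}_{UXSY} = {P}_{U\mid XS}{P}_{\tilde{X}\tilde{S}}W,& & (22.5)\\ \end{array}$$

i.e., \({P}_{XS} = {P}_{\tilde{X}\tilde{S}}\) and \(U -\!\!\circ\!\!- (X,S) -\!\!\circ\!\!- Y\) with \({P}_{Y\mid XS} = W\), and, for some mapping \(h : \mathcal{U}\times \mathcal{S}\rightarrow \mathcal{X}\),

$$\begin{array}{rcl} X = h(U,S).& & (22.6)\\ \end{array}$$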

For γ ≥ 0, let \({\mathcal{P}}_{\gamma }\left ({P}_{\tilde{X}\tilde{S}},W\right )\) be the subset of \(\mathcal{P}\left ({P}_{\tilde{X}\tilde{S}},W\right )\) with I(U ∧ S) ≤ γ; note that \({\mathcal{P}}_{0}\left ({P}_{\tilde{X}\tilde{S}},W\right )\) corresponds to the subset of \(\mathcal{P}\left ({P}_{\tilde{X}\tilde{S}},W\right )\) with U independent of S.

The classical results on the capacity of a state-dependent channel are due to Shannon [8] when the encoder CSI is causal and Gelfand and Pinsker [5] when the encoder CSI is noncausal.

Theorem 22.1.

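For the case with causal CSI, the capacity is

$$\begin{array}{rcl} {C}_{Sh} =\max_{{P}_{\tilde{X}\mid \tilde{S}}}\ \max_{{P}_{UXSY}\in {\mathcal{P}}_{0}({P}_{\tilde{X}\mid \tilde{S}}{P}_{S},W)}I(U \wedge Y ),& & \\ \end{array}$$

and the strong converse holds.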

Remark.

The capacity formula was derived by Shannon [8], and the strong converse was proved later by Wolfowitz [12].
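Theorem 22.1 can be evaluated numerically through a classical observation of Shannon [8]: with causal CSI, the channel acts like an ordinary DMC whose input letters are the strategies h : 𝒮 → 𝒳 (so that X = h(U, S) with U independent of S, as in \({\mathcal{P}}_{0}\)), and \({C}_{Sh}\) is the capacity of that derived DMC. The sketch below builds the derived channel and runs the Blahut–Arimoto algorithm on it. Blahut–Arimoto is a standard capacity algorithm swapped in here for illustration, not a method used in this chapter; the function names and the toy channel Y = X ⊕ S are hypothetical.

```python
import math
from itertools import product


def strategy_channel(P_S, W, X, Y):
    """Derived DMC whose inputs are strategies h: S -> X (tuples indexed like list(P_S)),
    with W'(y|h) = sum_s P_S(s) W(y | h(s), s). W[(x, s)] = {y: W(y|x, s)}."""
    states = list(P_S)
    return [[sum(P_S[st] * W[(h[i], st)].get(y, 0.0) for i, st in enumerate(states))
             for y in Y]
            for h in product(X, repeat=len(states))]


def blahut_arimoto(rows, iters=2000):
    """Capacity in bits of the DMC with transition rows[u][y], by Blahut-Arimoto."""
    k, m = len(rows), len(rows[0])

    def output_pmf(r):
        return [sum(r[u] * rows[u][y] for u in range(k)) for y in range(m)]

    r = [1.0 / k] * k                      # input pmf, initialized uniform
    for _ in range(iters):
        q = output_pmf(r)
        # multiplicative update r(u) <- r(u) exp(D(rows[u] || q)), then normalize
        z = [r[u] * math.exp(sum(w * math.log(w / q[y])
                                 for y, w in enumerate(rows[u]) if w > 0))
             for u in range(k)]
        total = sum(z)
        r = [v / total for v in z]
    q = output_pmf(r)
    # mutual information of the final input pmf, in bits
    return sum(r[u] * w * math.log2(w / q[y])
               for u in range(k) for y, w in enumerate(rows[u]) if w > 0)


P_S = {0: 0.5, 1: 0.5}
W_xor = {(a, st): {a ^ st: 1.0} for a in (0, 1) for st in (0, 1)}   # Y = X xor S
print(blahut_arimoto(strategy_channel(P_S, W_xor, (0, 1), (0, 1))))  # close to 1 bit
```

In this example the strategy h(s) = u ⊕ s cancels the state, so about 1 bit per use is achievable, whereas without CSI the output would be independent of the input.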

Theorem 22.2 (Gelfand–Pinsker [5]).

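For the case with noncausal CSI, the capacity is

$$\begin{array}{rcl} {C}_{GP} =\max_{{P}_{\tilde{X}\mid \tilde{S}}}\ \max_{{P}_{UXSY}\in \mathcal{P}({P}_{\tilde{X}\mid \tilde{S}}{P}_{S},W)}\left [I(U \wedge Y ) - I(U \wedge S)\right ].& & \\ \end{array}$$

One of our main results below shows that this result, too, holds with a strong converse.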
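For a fixed choice of \({P}_{U\mid S}\) and mapping h, the Gelfand–Pinsker objective I(U ∧ Y) − I(U ∧ S) can be evaluated directly. The sketch below (hypothetical names, toy channel Y = X ⊕ S with a fair state) shows the role of the penalty I(U ∧ S): letting U copy the state makes I(U ∧ Y) large, but this is fully offset by I(U ∧ S). It does not compute \({C}_{GP}\) itself, which would additionally require maximizing over \({P}_{U\mid S}\), h and the alphabet of U.

```python
import math
from collections import defaultdict


def mutual_info(joint):
    """I(A; B) in bits from a dict {(a, b): probability}."""
    pa, pb = defaultdict(float), defaultdict(float)
    for (a, b), p in joint.items():
        pa[a] += p
        pb[b] += p
    return sum(p * math.log2(p / (pa[a] * pb[b])) for (a, b), p in joint.items() if p > 0)


def gp_rate(P_S, P_U_given_S, h, W):
    """I(U; Y) - I(U; S) for the joint pmf P_S(s) P_{U|S}(u|s) 1{x = h(u, s)} W(y|x, s)."""
    p_us, p_uy = defaultdict(float), defaultdict(float)
    for s, ps in P_S.items():
        for u, pu in P_U_given_S[s].items():
            x = h(u, s)
            for y, w in W[(x, s)].items():
                p_us[(u, s)] += ps * pu * w
                p_uy[(u, y)] += ps * pu * w
    return mutual_info(p_uy) - mutual_info(p_us)


P_S = {0: 0.5, 1: 0.5}
W_xor = {(a, st): {a ^ st: 1.0} for a in (0, 1) for st in (0, 1)}  # Y = X xor S
indep = {s: {0: 0.5, 1: 0.5} for s in P_S}       # U independent of S
u_equals_s = {s: {s: 1.0} for s in P_S}          # U = S
print(gp_rate(P_S, indep, lambda u, s: u ^ s, W_xor))   # 1.0: U is received noiselessly
print(gp_rate(P_S, u_equals_s, lambda u, s: 0, W_xor))  # 0.0: I(U;Y) = I(U;S) = 1
```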

Definition 22.2.

The reliability function E(R), R ≥ 0, of the DMC W is the largest number E ≥ 0 such that for every δ > 0 and for all sufficiently large n, there exist n-length block codes (f, ϕ) with causal or noncausal CSI as above of rate greater than R − δ and \(e(f,\phi ) \leq \exp [-n(E - \delta )]\) (see, for instance, [3]).

4 A Technical Lemma

For a DMC without states, the result in [3, Corollary 6.4] provides, in effect, an image size characterization of a good codeword set; this does not involve any auxiliary rv. In the same spirit, our key technical lemma below provides an image size characterization for good codeword sets for the causal and noncausal DMC models, which now involves an auxiliary rv.

Lemma 22.1.

Let ε, τ > 0 be such that ε + τ < 1. Given a pmf \({P}_{\tilde{S}}\) on \(\mathcal{S}\) and a conditional pmf \({P}_{\tilde{X}\mid \tilde{S}}\), let (f, ϕ) be an (M, n)-code as above. For each \(m \in \mathcal{M}\), let A(m) be a subset of \({\mathcal{S}}^{n}\) which satisfies the following conditions:

Furthermore, let (f,ϕ) satisfy one of the following two conditions:

- (a) In the causal CSI case, for \(n \geq N(\|\mathcal{X}\|,\|\mathcal{S}\|,\|\mathcal{Y}\|,\tau ,\epsilon )\) (see Note 1), it holds that

  $$\begin{array}{rcl} \frac{1} {n}\log M \leq I(U \wedge Y ) + \tau ,& & \\ \end{array}$$

  for some \({P}_{UXSY } \in {\mathcal{P}}_{\tau }({P}_{\tilde{X}\mid \tilde{S}}{P}_{\tilde{S}},W)\).

- (b) In the noncausal CSI case, for \(n \geq N(\|\mathcal{X}\|,\|\mathcal{S}\|,\|\mathcal{Y}\|,\tau ,\epsilon )\), it holds that

  $$\begin{array}{rcl} \frac{1} {n}\log M \leq I(U \wedge Y ) - I(U \wedge S) + \tau ,& & \\ \end{array}$$

  for some \({P}_{UXSY } \in \mathcal{P}({P}_{\tilde{X}\mid \tilde{S}}{P}_{\tilde{S}},W)\).

Furthermore, in both cases it suffices to restrict the rv U to take values in a finite set \(\mathcal{U}\) with \(\|\mathcal{U}\|\leq \|\mathcal{X}\|\|\mathcal{S}\| + 1\) .

Proof.

Our proof below is for the case when (22.11a) holds. The case when (22.11b) holds can be proved similarly with minor modifications; specifically, in the latter case, we can find subsets A′(m) of A(m), \(m \in \mathcal{M}\), that satisfy (22.8)–(22.10) and (22.11a) for some ε′, τ′ > 0 with ε′ + τ′ < 1 for all n sufficiently large.

With (22.11a) holding, set

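Let \({P}_{\tilde{Y }} = {P}_{\tilde{X}\tilde{S}}W\) be a pmf on \(\mathcal{Y}\) defined by

$$\begin{array}{rcl} {P}_{\tilde{Y }}(y) =\sum_{(x,s)\in \mathcal{X}\times \mathcal{S}}{P}_{\tilde{X}\tilde{S}}(x,s)W(y\mid x,s),\quad y \in \mathcal{Y}.& & \\ \end{array}$$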

Consequently,

for all \(n \geq N(\|\mathcal{X}\|,\|\mathcal{S}\|,\|\mathcal{Y}\|,\tau ,\epsilon )\) (not depending on m and s in A(m)). Denoting

we see from (22.11a) and (22.12) that

so that

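where \({g}_{{W}^{n}}(B(m),\tau )\) denotes the smallest cardinality of a subset D of \({\mathcal{Y}}^{n}\) with

$$\begin{array}{rcl} {W}^{n}(D\mid \mathbf{x},\mathbf{s}) \geq \tau ,\quad (\mathbf{x},\mathbf{s}) \in B(m).& & (22.13)\\ \end{array}$$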

With \({m}_{0} =\arg \min_{1\leq m\leq M}\|C(m)\|\), we have

Consequently,

The remainder of the proof entails relating the “image size” of B(m 0), i.e., \({g}_{{W}^{n}}(B({m}_{0}),\tau )\), to \(\|A({m}_{0})\|\), and is completed below separately for the cases of causal and noncausal CSI.

First consider the causal CSI case. For an rv \(\hat{{S}}^{n}\) distributed uniformly over \(A({m}_{0})\), we have from (22.9) that

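Since

$$\begin{array}{rcl} \frac{1} {n}H(\hat{{S}}^{n}) = \frac{1} {n}\sum_{t=1}^{n}H(\hat{{S}}_{t}\mid \hat{{S}}^{t-1}) = H(\hat{{S}}_{I}\mid \hat{{S}}^{I-1},I),& & \\ \end{array}$$

where the rv I is distributed uniformly over the set {1, …, n} and is independent of all other rvs, the previous identity, together with (22.15), yields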

Next, denote by \(\hat{{X}}^{n}\) the rv \(f({m}_{0},\hat{{S}}^{n})\) and by \(\hat{{Y }}^{n}\) the rv which, conditioned on \(\hat{{X}}^{n}\), \(\hat{{S}}^{n}\), has (conditional) distribution \({W}^{n}\), i.e., \(\hat{{Y }}^{n}\) is the random output of the DMC W when the input is set to \(\left (\hat{{X}}^{n},\hat{{S}}^{n}\right )\). Then, using [3, Lemma 15.2], we get

for all n sufficiently large. Furthermore,

where the last-but-one equality follows from the DMC assumption, and the last equality holds since \(\hat{{X}}^{I-1} = f({m}_{0},\hat{{S}}^{I-1})\). The inequality above, along with (22.17) and (22.14) gives

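Denote by \(\hat{U}\) the rv \((\hat{{S}}^{I-1},I)\) and note that the following Markov property holds:

$$\begin{array}{rcl} \hat{U} -\!\!\circ\!\!- (\hat{{X}}_{I},\hat{{S}}_{I}) -\!\!\circ\!\!- \hat{{Y }}_{I}.& & \\ \end{array}$$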

Also, from the definition of \(B({m}_{0})\),

where \({Q}_{\mathbf{x},\mathbf{s}}(x,s)\) is the joint type of \(\mathbf{x},\mathbf{s}\), and the last equation follows upon interchanging the order of summation. It follows from (22.8) and (22.10) that \(\|{P}_{\hat{{X}}_{I},\hat{{S}}_{I}} - {P}_{\tilde{X}\tilde{S}}\| \leq {\delta }_{n}\) for some \({\delta }_{n} \rightarrow 0\) satisfying the δ-convention. Furthermore,

Let the rvs \(\tilde{X},\tilde{S},\tilde{Y }\) have a joint distribution \({P}_{\tilde{X}\tilde{S}\tilde{Y }}\). Define an rv U which takes values in the same set as \(\hat{U}\), has \({P}_{\hat{U}\mid \hat{{X}}_{I}\hat{{S}}_{I}}\) as its conditional distribution given \(\tilde{X},\tilde{S}\), and satisfies the Markov relation

$$\begin{array}{rcl} U -\!\!\circ\!\!- (\tilde{X},\tilde{S}) -\!\!\circ\!\!- \tilde{Y }.& & \\ \end{array}$$

Then using the continuity of the entropy function and the arguments above, (22.18) yields

and (22.16) yields

for all n sufficiently large, where \({P}_{UXSY } \in {\mathcal{P}}_{\tau }({P}_{\tilde{X}\tilde{S}},W)\).
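The single-letterization in the causal case rests on the chain rule \((1/n)H(\hat{{S}}^{n}) = (1/n){\sum }_{t}H(\hat{{S}}_{t}\mid \hat{{S}}^{t-1}) = H(\hat{{S}}_{I}\mid \hat{{S}}^{I-1},I)\) for a time-sharing rv I uniform on {1, …, n} and independent of \(\hat{{S}}^{n}\). The sketch below checks this numerically for \(\hat{{S}}^{n}\) uniform on a randomly drawn set A, a hypothetical stand-in for \(A({m}_{0})\), in which case the left side equals \((1/n)\log \|A\|\); each conditional entropy is computed from conditional pmfs rather than by differencing joint entropies.

```python
import math
import random
from collections import Counter, defaultdict
from itertools import product


def entropy_bits(counter):
    """Entropy in bits of the empirical pmf given by a Counter of counts."""
    total = sum(counter.values())
    return -sum(c / total * math.log2(c / total) for c in counter.values() if c)


def time_sharing_entropy(A):
    """H(S_I | S^{I-1}, I) for S^n uniform on the set A, with I uniform on {1, ..., n}
    and independent of S^n."""
    A = list(A)
    n, N = len(A[0]), len(A)
    total = 0.0
    for t in range(n):
        next_symbol = defaultdict(Counter)    # prefix s^t -> counts of the next symbol
        for s in A:
            next_symbol[s[:t]][s[t]] += 1
        # H(S_{t+1} | S^t) = sum over prefixes of P(prefix) H(S_{t+1} | prefix)
        h_t = sum(sum(c.values()) / N * entropy_bits(c) for c in next_symbol.values())
        total += h_t / n                      # average over the time-sharing rv I
    return total


rng = random.Random(1)
A = rng.sample(list(product(range(3), repeat=4)), 20)   # hypothetical A(m_0), n = 4
print(time_sharing_entropy(A), math.log2(len(A)) / 4)   # equal up to rounding
```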

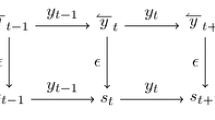

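Turning to the case with noncausal CSI, define a stochastic matrix \(V : \mathcal{X}\times \mathcal{S}\rightarrow \mathcal{S}\) with

$$\begin{array}{rcl} V ({s}^{\prime}\mid x,s) = \mathbf{1}({s}^{\prime} = s),\quad {s}^{\prime},s \in \mathcal{S},\ x \in \mathcal{X},& & \\ \end{array}$$

and let \({g}_{{V}^{n}}\) be defined in a manner analogous to \({g}_{{W}^{n}}\) above, with \({\mathcal{S}}^{n}\) in the role of \({\mathcal{Y}}^{n}\) in (22.13). For any \(m \in \mathcal{M}\) and subset E of \({\mathcal{S}}^{n}\), observe that

$$\begin{array}{rcl} {V }^{n}(E\mid \mathbf{x},\mathbf{s}) = \mathbf{1}(\mathbf{s} \in E),\quad \mathbf{x} \in {\mathcal{X}}^{n},\ \mathbf{s} \in {\mathcal{S}}^{n}.& & \\ \end{array}$$

In particular, if E satisfies

$$\begin{array}{rcl} {V }^{n}(E\mid \mathbf{x},\mathbf{s}) \geq \tau ,\quad (\mathbf{x},\mathbf{s}) \in B(m),& & (22.19)\\ \end{array}$$

it must be that A(m) ⊆ E, and since E = A(m) satisfies (22.19), we get that

$$\begin{array}{rcl} {g}_{{V }^{n}}(B(m),\tau ) = \|A(m)\|,& & (22.20)\\ \end{array}$$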

using the definition of B(m). Using the image size characterization in (22.4) [3, Theorem 15.11], there exists an auxiliary rv U and associated pmf \({P}_{UXSY } = {P}_{U\mid XS}{P}_{\tilde{X}\tilde{S}}W\) such that

where \(0 \leq t \leq \min \{ I(U \wedge Y ),I(U \wedge S)\}\). Then, using (22.14), (22.20), and (22.21) we get

which, by (22.9), yields

In (22.21), \({P}_{UXSY } = {P}_{U\mid XS}{P}_{\tilde{X}\tilde{S}}W\) need not satisfy (22.6). Finally, the asserted restriction to \({P}_{UXSY } \in \mathcal{P}({P}_{\tilde{X}\mid \tilde{S}}{P}_{\tilde{S}},W)\) follows from the convexity of I(U ∧ Y) − I(U ∧ S) in \({P}_{X\mid US}\) for a fixed \({P}_{US}\) (as observed in [5]).

Lastly, it follows from the support lemma [3, Lemma 15.4] that it suffices to consider those rvs U for which \(\|\mathcal{U}\|\leq \|\mathcal{X}\|\|\mathcal{S}\| + 1\).

5 The Strong Converse

Theorem 22.3 (Strong converse).

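Given 0 < ε < 1 and a sequence of \(({M}_{n},n)\)-codes \(({f}_{n},{\phi }_{n})\) with \(e({f}_{n},{\phi }_{n}) < \epsilon \), it holds that

$$\begin{array}{rcl} \limsup_{n\rightarrow \infty }\frac{1} {n}\log {M}_{n} \leq C,& & \\ \end{array}$$

where \(C = {C}_{Sh}\) and \(C = {C}_{GP}\) for the cases of causal and noncausal CSI, respectively.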

Proof.

Given 0 < ε < 1 and an (M, n)-code (f, ϕ) with e(f, ϕ) ≤ ε, the proof involves the identification of sets A(m), \(m \in \mathcal{M}\), satisfying (22.8)–(22.10) and (22.11a). The assertion then follows from Lemma 22.1. Note that e(f, ϕ) ≤ ε implies

$$\begin{array}{rcl} \sum_{\mathbf{s}\in {\mathcal{S}}^{n}}{P}_{S}^{n}(\mathbf{s})\,{W}^{n}\left ({\phi }^{-1}(m)\mid f(m,\mathbf{s}),\mathbf{s}\right ) \geq 1 - \epsilon & & \\ \end{array}$$

for all \(m \in \mathcal{M}\). Since \({P}_{S}^{n}\left ({\mathcal{T}}_{[{P}_{S}]}^{n}\right ) \rightarrow 1\) as n → ∞, we get that for every \(m \in \mathcal{M}\),

for all \(n \geq N(\|\mathcal{S}\|,\epsilon )\). Denoting the set \(\{ \cdot \}\) in (22.22) by \(\hat{A}(m)\), clearly for every \(m \in \mathcal{M}\),

and

for all \(n \geq N(\|\mathcal{S}\|,\epsilon )\), whereby for an arbitrary δ > 0, we get

for all \(n \geq N(\|\mathcal{S}\|,\delta )\). Partitioning \(\hat{A}(m)\), \(m \in \mathcal{M}\), into sets according to the (polynomially many) conditional types of f(m, s) given s in \(\hat{A}(m)\), we obtain a subset A(m) of \(\hat{A}(m)\) for which

for all \(n \geq N(\|\mathcal{S}\|,\|\mathcal{X}\|,\delta )\), where \({\mathcal{T}}_{m}^{n}(\mathbf{s})\) denotes the set of sequences in \({\mathcal{X}}^{n}\) that have a fixed conditional type given s (the conditional type depending only on m).

Once again, the polynomial number of such conditional types yields a subset \(\mathcal{M}^{\prime}\) of \(\mathcal{M}\) such that f(m, s) has a fixed conditional type (not depending on m) given s in A(m), and with

for all \(n \geq N(\|\mathcal{S}\|,\|\mathcal{X}\|,\delta )\). Finally, the strong converse follows by applying Lemma 22.1 to the subcode corresponding to \(\mathcal{M}{^\prime}\) and noting that δ > 0 is arbitrary.
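The extraction of A(m) and \(\mathcal{M}^{\prime}\) in this proof is a pigeonhole argument over polynomially many type classes. The minimal sketch below assumes a toy encoder and takes \(\hat{A}(m)\) to be all of \({\{0,1\}}^{n}\) (both hypothetical choices for illustration). It partitions by the joint type of (f(m, s), s), which determines the conditional type of f(m, s) given s, and keeps the largest class, which retains at least a 1/(number of classes) fraction of the set.

```python
from collections import Counter, defaultdict
from itertools import product


def joint_type_key(x, s):
    """Hashable key for the joint type Q_{x,s}; it fixes the type of s and the
    conditional type of x given s."""
    return tuple(sorted(Counter(zip(x, s)).items()))


def largest_type_class(f, m, A_hat):
    """Partition A_hat by the joint type of (f(m, s), s); return the largest class
    and the number of classes."""
    classes = defaultdict(list)
    for s in A_hat:
        classes[joint_type_key(f(m, s), s)].append(s)
    return max(classes.values(), key=len), len(classes)


def enc(m, s):
    """Hypothetical noncausal encoder: x_t = 1 iff s_t + m + (number of ones in s) is even."""
    ones = sum(s)
    return tuple(int((st + m + ones) % 2 == 0) for st in s)


n = 6
A_hat = list(product((0, 1), repeat=n))
best, num_classes = largest_type_class(enc, 1, A_hat)
# Pigeonhole: len(best) >= len(A_hat) / num_classes, and num_classes <= (n + 1)^{|X||S|}.
print(len(best), num_classes, len(A_hat))
```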

6 Outer Bound on Reliability Function

An upper bound for the reliability function E(R), 0 < R < C, of a DMC without states is derived in [3] using a strong converse for codes with codewords of a fixed type. The key technical Lemma 22.1 gives an upper bound on the rate of codes with codewords that are conditionally typical over large message-dependent subsets of the typical set of state sequences; it serves, in effect, as an analog of [3, Corollary 6.4] for state-dependent channels and is used below to derive an upper bound on the reliability function.

Theorem 22.4 (Sphere packing bound).

Given δ > 0, for 0 < R < C, it holds that

where

with

and

for the causal and noncausal CSI cases, respectively.

Remark.

In (22.23), the terms \(D({P}_{\tilde{S}}\|{P}_{S})\) and \(D(V \|W\mid {P}_{\tilde{S}}{P}_{\tilde{X}\mid \tilde{S}})\) account, respectively, for the shortcomings of a given code under a "bad" state pmf and under a "bad" channel.
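The two penalty terms named in the remark are an ordinary divergence and a conditional divergence, \(D(V\|W\mid P) = {\sum }_{z}P(z)D(V(\cdot \mid z)\|W(\cdot \mid z))\) with z = (x, s). A minimal sketch that evaluates both for a toy state-dependent channel; all numbers and names are hypothetical, and logarithms are base 2.

```python
import math


def kl(p, q):
    """D(p || q) in bits for pmfs given as dicts; infinite unless supp(p) lies in supp(q)."""
    total = 0.0
    for a, pa in p.items():
        if pa > 0:
            qa = q.get(a, 0.0)
            if qa == 0.0:
                return math.inf
            total += pa * math.log2(pa / qa)
    return total


def cond_kl(V, W, P):
    """Conditional divergence D(V || W | P) = sum_z P(z) D(V(.|z) || W(.|z)), z = (x, s)."""
    return sum(pz * kl(V[z], W[z]) for z, pz in P.items() if pz > 0)


# Hypothetical numbers: true state pmf, a "bad" state pmf, and a noisier "bad" channel V.
P_S = {0: 0.9, 1: 0.1}
P_S_bad = {0: 0.5, 1: 0.5}
P_XS = {(x, s): 0.5 * P_S_bad[s] for x in (0, 1) for s in (0, 1)}  # X uniform, indep. of S
W = {(x, s): {x ^ s: 0.9, 1 - (x ^ s): 0.1} for x in (0, 1) for s in (0, 1)}
V = {(x, s): {x ^ s: 0.7, 1 - (x ^ s): 0.3} for x in (0, 1) for s in (0, 1)}
print(kl(P_S_bad, P_S), cond_kl(V, W, P_XS))   # the state and channel penalty terms
```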

Proof.

Consider sequences of type \({P}_{\tilde{S}}\) in \({\mathcal{S}}^{n}\). Picking \(\hat{A}(m) = {\mathcal{T}}_{{P}_{\tilde{S}}}^{n}\), \(m \in \mathcal{M}\), in the proof of Theorem 22.3, and following the arguments therein to extract the subset A(m) of \(\hat{A}(m)\), we have for a given δ > 0 that for all \(n \geq N(\|\mathcal{S}\|,\|\mathcal{X}\|,\delta )\), there exists a subset \(\mathcal{M}{^\prime}\) of \(\mathcal{M}\) and a fixed conditional type, say \({P}_{\tilde{X}\mid \tilde{S}}\) (not depending on m), such that for every \(m \in \mathcal{M}{^\prime}\),

Then for every \(V \in \mathcal{V}(R,{P}_{\tilde{X}\tilde{S}})\), we obtain using Lemma 22.1 (in its version with condition (22.11b)), that for every δ′ > 0, there exists \(m \in \mathcal{M}{^\prime}\) (possibly depending on δ′ and V ) with

for all \(n \geq N(\|\mathcal{S}\|,\|\mathcal{X}\|,\|\mathcal{Y}\|,\delta {^\prime})\). Since the average V n-(conditional) probability of \({\left ({\phi }^{-1}(m)\right )}^{c}\) is large, its W n-(conditional) probability cannot be too small. To that end, for this m, apply [3, Theorem 10.3, (10.21)] with the choices

for (y, s) ∈ Z, to obtain

Finally,

for \(n \geq N(\|\mathcal{S}\|,\|\mathcal{X}\|,\|\mathcal{Y}\|,\delta ,\delta {^\prime})\), whereby it follows for the noncausal CSI case that

for every δ > 0. Similarly, for the case of causal CSI, for τ > 0, letting

we get

The continuity of the right side of (22.26), as shown in the Appendix, yields the claimed expression for \({E}_{SP}\) in (22.23) and (22.24).

7 Acknowledgement

The work of H. Tyagi and P. Narayan was supported by the U.S. National Science Foundation under Grants CCF0830697 and CCF1117546.

8 Appendix: Continuity of the Right Side of (22.26)

Let

Further, let

and

To show the continuity of g(τ) at τ = 0, first note that g(τ) ≥ g(0) for all τ ≥ 0. Next, let \({P}_{\tilde{S}}^{0}\) attain the minimum in (22.29) for τ = 0. Clearly,

Also, let \({P}_{U\tilde{X}\mid \tilde{S}}^{\tau }\) attain the maximum of \(g({P}_{\tilde{S}}^{0},\tau )\) in (22.28). For the associated joint pmf \({P}_{\tilde{S}}^{0}{P}_{U\tilde{X}\mid \tilde{S}}^{\tau }\), let \({P}_{U}^{\tau }\) denote the resulting U-marginal pmf, and consider the joint pmf \({P}_{U}^{\tau }{P}_{\tilde{S}}^{0}{P}_{\tilde{X}\mid U\tilde{S}}^{\tau }\). Then, using (22.28) and (22.29) and the observations above,

The continuity of g(τ) at τ = 0 will follow upon showing that

By Pinsker's inequality [3, 4], the constraint on the mutual information in (22.28) gives

i.e.,

For \({P}_{U\tilde{X}\tilde{S}} = {P}_{U}^{\tau }{P}_{\tilde{S}}^{0}{P}_{\tilde{X}\mid U\tilde{S}}^{\tau }\), let V 0 attain the minimum in (22.26), i.e.,

By (22.31), for \({P}_{U\tilde{X}\tilde{S}} = {P}_{\tilde{S}}^{0}{P}_{U\tilde{X}\mid \tilde{S}}^{\tau }\) and \({P}_{Y \mid \tilde{X}\tilde{S}} = {V }^{0}\), by standard continuity arguments, we have

and

where \(\nu = \nu (\tau ) \rightarrow 0\) as τ → 0. Consequently,

Finally, noting the continuity of \(f(R,{P}_{U\tilde{X}\tilde{S}})\) in R [3, Lemma 10.4], the proof is completed.
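Pinsker's inequality, used in the appendix, states \(D(P\|Q) \geq \|P - Q{\|}_{1}^{2}/(2\ln 2)\) when D is measured in bits. Since \(I(U \wedge S) = D({P}_{US}\|{P}_{U}{P}_{S})\), a constraint I(U ∧ S) ≤ τ therefore forces \(\|{P}_{US} - {P}_{U}{P}_{S}{\|}_{1} \leq \sqrt{2\tau \ln 2}\). The sketch below is only a numerical sanity check of the inequality on random pmfs (hypothetical helper names), not a proof.

```python
import math
import random


def pinsker_gap(p, q):
    """D(p || q) - ||p - q||_1^2 / (2 ln 2), with D in bits; Pinsker says this is >= 0."""
    d = sum(a * math.log2(a / b) for a, b in zip(p, q) if a > 0)
    l1 = sum(abs(a - b) for a, b in zip(p, q))
    return d - l1 ** 2 / (2 * math.log(2))


def random_pmf(k, rng):
    """A random pmf on k points with strictly positive entries."""
    w = [rng.random() + 1e-9 for _ in range(k)]
    total = sum(w)
    return [v / total for v in w]


rng = random.Random(0)
worst = min(pinsker_gap(random_pmf(4, rng), random_pmf(4, rng)) for _ in range(10000))
print(worst)   # expected to be nonnegative, as Pinsker's inequality predicts
```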

Notes

1. In our assertions, we indicate the validity of a statement "for all n ≥ N(·)" by showing the explicit dependencies of N; however, the standard step of picking the largest such N from (finitely many) such Ns is not indicated.

References

[1] Biglieri, E., Proakis, J., Shamai (Shitz), S.: Fading channels: information-theoretic and communications aspects. IEEE Trans. Inf. Theor. 44(6), 2619–2692 (1998)

[2] Csiszár, I.: The method of types. IEEE Trans. Inf. Theor. 44(6), 2505–2523 (1998)

[3] Csiszár, I., Körner, J.: Information Theory: Coding Theorems for Discrete Memoryless Channels, 2nd edn. Cambridge University Press, Cambridge (2011)

[4] Fedotov, A.A., Harremoës, P., Topsøe, F.: Refinements of Pinsker's inequality. IEEE Trans. Inf. Theor. 49(6), 1491–1498 (2003)

[5] Gelfand, S.I., Pinsker, M.S.: Coding for channels with random parameters. Prob. Contr. Inf. Theor. 9(1), 19–31 (1980)

[6] Keshet, G., Steinberg, Y., Merhav, N.: Channel coding in presence of side information. Foundations and Trends in Commun. Inf. Theor. 4(6), 445–586 (2008)

[7] Lapidoth, A., Narayan, P.: Reliable communication under channel uncertainty. IEEE Trans. Inf. Theor. 44(6), 2148–2177 (1998)

[8] Shannon, C.E.: Channels with side information at the transmitter. IBM J. Res. Dev. 2, 289–293 (1958)

[9] Shannon, C.E., Gallager, R.G., Berlekamp, E.R.: Lower bounds to error probability for coding on discrete memoryless channels. I. Inf. Contr. 65–103 (1966)

[10] Shannon, C.E., Gallager, R.G., Berlekamp, E.R.: Lower bounds to error probability for coding on discrete memoryless channels. II. Inf. Contr. 522–552 (1967)

[11] Tyagi, H., Narayan, P.: The Gelfand–Pinsker channel: strong converse and upper bound for the reliability function. In: Proceedings of the IEEE International Symposium on Information Theory, Seoul, Korea (2009)

[12] Wolfowitz, J.: Coding Theorems of Information Theory. Springer, New York (1978)


Copyright information

© 2013 Birkhäuser Boston

About this chapter

Cite this chapter

Tyagi, H., Narayan, P. (2013). State-Dependent Channels: Strong Converse and Bounds on Reliability Function. In: Andrews, T., Balan, R., Benedetto, J., Czaja, W., Okoudjou, K. (eds) Excursions in Harmonic Analysis, Volume 1. Applied and Numerical Harmonic Analysis. Birkhäuser, Boston. https://doi.org/10.1007/978-0-8176-8376-4_22


DOI: https://doi.org/10.1007/978-0-8176-8376-4_22


Publisher Name: Birkhäuser, Boston

Print ISBN: 978-0-8176-8375-7

Online ISBN: 978-0-8176-8376-4

eBook Packages: Mathematics and Statistics (R0)