Abstract

During the past decade, regulatory toxicology has been changing its general approach as it implements the “Toxicity Testing in the 21st Century” (Tox21) vision proposed by the US National Research Council (NRC), which aims to reduce and replace traditional safety testing in animals with more predictive toxicity data generated with human cells, tissues, and organs. The new Adverse Outcome Pathway (AOP) concept makes it possible to integrate non-animal data with existing knowledge into computational models that predict adverse effects in humans and the environment after exposure to hazardous chemicals with sufficient confidence for safety assessment. The OECD is collaborating closely with national regulatory agencies in Europe and the USA to implement the AOP concept in “Integrated Approaches to Testing and Assessment” (IATA), which allow regulators to assess the results generated with the new AOP approach for specific endpoints. The Tox21 concept, which relies on advanced technologies such as human stem cells, multiorgan-chips, and in silico models, will most probably prove that the future of toxicology is in vitro.


Keywords

- Adverse outcome pathway (AOP)

- Human cells and tissues

- Integrated testing

- IATA

- In vitro toxicology

- Bioprinting

- Multiorgan-chip

- Stem cells

- ESC

- hiPSC

- Regulatory testing

- Tox21

- Virtual organs

Challenges for Regulatory Testing in the Twenty-First Century

Regulators and the general public are facing increasingly complex challenges that require harnessing the best available science and technology on behalf of patients and consumers. Therefore, we need to develop new tools, standards, and approaches that efficiently and consistently assess the efficacy, quality, performance, and safety of products. However, up to now, the importance of regulatory science has not been sufficiently appreciated, and it remains underfunded. New scientific discoveries and technologies are not being sufficiently applied to ensure the safety of new chemicals, drugs, and other products to which consumers are likely to be exposed. In addition, members of the public are demanding that greater attention be paid to many more chemicals and products already in commercial use, but the existing testing systems do not have the capacity to deliver the in vivo data required. Thus, we must bring twenty-first-century approaches to twenty-first-century products and problems (Andersen and Krewski 2009).

Most of the toxicological methods used for regulatory assessment still rely on high-dose animal studies and default extrapolation procedures that have remained relatively unchanged for decades, despite the technological revolutions in the biosciences over the past 50 years. The new technologies make it possible to test tens of thousands of chemicals a year in high-throughput systems, and thousands of chemicals a year in organotypic cultures and low-throughput systems. Nevertheless, we still need to develop better predictive models in order to identify concerns earlier in the product development process, to reduce the time and costs involved in testing, and to reduce the loss of promising biological molecules due to false-positive results. We need to modernize the tools used to identify potential risks to consumers who are exposed to drugs, new food additives, and other chemical products.

The challenge today is that the toxicological evaluation of chemicals must take advantage of the on-going revolution in biology and biotechnology. This revolution now permits the study of the effects of chemicals by using cellular components, cells, and tissues – preferably of human origin – rather than whole animals.

The novel regulatory science would take advantage of new tools, including functional genomics, proteomics, metabolomics, high-throughput screening, human-organs-on-a-chip, and systems biology, and could then replace current toxicology assays with tests that incorporate the mechanistic underpinnings of disease and of toxic side-effects. This should allow the development, validation, and qualification of preclinical and clinical models that accelerate the evaluation of toxicity during the development of drugs and other chemicals to which humans are exposed. The goals include the development of biomarkers to predict toxicity and the screening of at-risk human subjects during clinical trials, as well as after new products have been placed on the market. The new methods should also enable the rapid screening of the large number of industrial chemicals that have not yet been evaluated under the current testing system, for example, under the EU chemicals regulation (REACH).

The new technologies described above generate large datasets (also termed “big data”), which can be exploited in computational toxicology by means of artificial intelligence tools and machine learning approaches. Currently, the global capacity to test chemicals thoroughly in traditional animal studies would probably not be more than 50–100 chemicals a year. By contrast, the new high-throughput methods developed in the US Environmental Protection Agency (EPA) CompTox program (Williams et al. 2017), which use the robotic systems at the National Center for Advancing Translational Sciences (NCATS) at the National Institutes of Health (NIH), could test 30,000 or more chemicals in several hundred functional tests within a year, using human cells and systems. This flood of new biological data should drive the development of more satisfactory, more predictive computer algorithms that could assist regulatory decision-making. With the addition of data from new human “multi-organ-chip” technologies (see below), the regulatory relevance of the data from high-throughput screening (HTS) systems could be further refined (Rowan and Spielmann 2019).
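To put the throughput figures in this paragraph into perspective, the back-of-the-envelope arithmetic below assumes, purely for illustration, that “several hundred functional tests” means 300 assays, and compares the number of chemicals with the upper and lower ends of the 50–100 chemicals a year quoted for animal studies:

$$
30\,000\ \text{chemicals} \times 300\ \text{assays} = 9 \times 10^{6}\ \text{chemical-assay combinations per year}
$$

$$
\frac{30\,000}{100} = 300 \qquad\text{and}\qquad \frac{30\,000}{50} = 600
$$

On these assumptions, the high-throughput approach would cover roughly 300 to 600 times more chemicals per year than traditional animal studies, although the two kinds of data are not equivalent in depth.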

Adapting Toxicity Testing to the Challenges of the Twenty-First Century in Europe

To adapt toxicity testing to progress in the life sciences and to end toxicity testing in animals, various government institutions of the European Commission (EC) and EU Member States began in the 1980s to promote and fund the development and validation of in vitro toxicity tests, which were accepted at the international level by the OECD in the early 2000s. Initially, the research activities in Europe were stimulated by the requirements of the EU Cosmetics Directive (EC 2009) and were aimed at ending the suffering of experimental animals in safety tests for cosmetics, especially in local toxicity tests on the skin and eye. The funding of research in the Alternative Testing Strategies Programme of the 6th (FP6) and 7th (FP7) EU Framework Programmes of the Research and Innovation Directorate General of the EC was quite successful: for this specific field of toxicology, in vitro tests were developed, validated, and accepted by regulators, and the full ban on animal testing for cosmetic products manufactured or marketed within the EU finally came into force on 11 March 2013 (EC 2013). Although this was a unique success story and represented a breakthrough from the scientific, regulatory, and ethical points of view that was acknowledged around the world, those in vitro toxicity tests were based on the progress in in vitro culture techniques achieved in the twentieth century.

To accelerate the response to the challenges of the twenty-first century, the EU FP7 multi-center project SEURAT-1 was established in collaboration with the cosmetics industry to replace in vivo repeated-dose systemic toxicity testing in animals. In addition, the EU launched another FP7 project, Accelerate (XLR8), to implement the transition to a toxicity pathway-based paradigm for chemical safety assessment, a concept proposed in 2007 in the US National Research Council (NRC) report Toxicity Testing in the 21st Century: A Vision and a Strategy (NRC 2007).

The US Vision: Toxicity Testing in the Twenty-First Century (Tox21) (US NRC 2007)

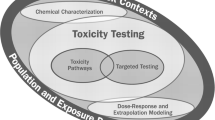

The new toxicity testing paradigm relies mainly on understanding “toxicity pathways”, the cellular response pathways that can result in adverse health effects when sufficiently perturbed (NRC 2007; Andersen and Krewski 2009; Krewski et al. 2010). In the new approach, biologically significant alterations are evaluated without relying on animal studies. In addition, “targeted testing” is to be conducted to clarify and refine information from toxicity pathway tests for chemical risk assessments. Targeted testing in animals will become less necessary as better systems are developed to understand, using only tests in cells and tissues, how chemicals are metabolized in the human body. Testing in animals may then be phased out in the next 10–15 years, provided that more resources are devoted to improving regulatory toxicology.

A toxicity pathway refers to a chemically induced chain of events at the cellular level that may ultimately lead to an adverse effect such as tumor formation. Such pathways ordinarily coordinate normal processes, such as hormone signaling or gene expression. For example, a protein that, once bound by a chemical, blocks or amplifies the signaling of a specific receptor could alter the pathway’s normal function and induce a “pathway perturbation.” Dose-response and extrapolation modeling will permit the translation of cellular test results to exposed humans. Specifically, the modeling will estimate the human exposures that would lead to the significant perturbations of toxicity pathways observed in cellular tests.
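The translation from a cellular test to an exposed human can be sketched in two steps, under assumptions stated here rather than taken from the text: a Hill model fitted to in vitro concentration-response data is inverted to find the concentration that produces a chosen level of pathway perturbation, and that concentration is converted into an oral equivalent dose by reverse dosimetry. The sketch assumes linear kinetics, so that the steady-state plasma concentration (Css) scales in proportion to dose; the function names and all numerical values are hypothetical.

```python
def hill(conc, top, ac50, n):
    """Hill concentration-response model: response at concentration `conc`."""
    return top * conc**n / (ac50**n + conc**n)


def conc_for_response(target, top, ac50, n):
    """Invert the Hill model: concentration giving response `target` (0 < target < top)."""
    if not 0 < target < top:
        raise ValueError("target response must lie strictly between 0 and top")
    return ac50 * (target / (top - target)) ** (1.0 / n)


def oral_equivalent_dose(conc_um, css_um_per_mg_kg_day):
    """Reverse dosimetry under an assumed linear-kinetics model: the daily oral
    dose (mg/kg/day) whose steady-state plasma level equals `conc_um`."""
    return conc_um / css_um_per_mg_kg_day


# Hypothetical fitted parameters and toxicokinetic value, for illustration only.
top, ac50, n = 100.0, 10.0, 1.0  # % of maximal perturbation, uM, Hill slope
c10 = conc_for_response(10.0, top, ac50, n)  # concentration for a 10 % perturbation
oed = oral_equivalent_dose(c10, css_um_per_mg_kg_day=2.0)
print(f"in vitro point of departure: {c10:.2f} uM; oral equivalent dose: {oed:.3f} mg/kg/day")
```

The choice of a 10 % perturbation as the point of departure is arbitrary here; in practice the benchmark response and the kinetic model would be set by the assessment context.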

The Adverse Outcome Pathway (AOP) Concept

The adverse outcome pathway (AOP) concept was proposed as an essential element of the Tox21 vision by the US Environmental Protection Agency (EPA), which defined an AOP as a sequence of key events linking a molecular initiating event (MIE) to an adverse outcome (AO) through different levels of biological organization (Ankley et al. 2010). AOPs span multiple levels of biological organization, and the AO can be at the level of the individual organism, the population, or the ecosystem. Each AOP is a set of chemical, biochemical, cellular, or physiological responses that characterize the biological effects cascade resulting from a specific exposure (Ives et al. 2017). The key events in an AOP should be both definable and meaningful from a physiological and biochemical perspective. The AOP approach makes it possible to identify endpoints of regulatory concern and to ask which toxicity mechanisms are most likely to lead to these outcomes. AOPs are the central element of a toxicological knowledge framework being built to support chemical risk assessment based on mechanistic reasoning.

Since then, the AOP concept has been accepted by the international scientific community, and in 2013 the OECD launched a new program on the development of AOPs (OECD 2013). Consequently, the OECD requires that the AOP concept be considered when new toxicity tests are introduced or existing ones are updated (OECD 2017a, b). The AOP knowledge base (AOP KB), set up by the OECD, is a formal Internet-based repository (http://aopkb.org) for information on AOPs. The content of the AOP KB, which contained 284 AOPs in March 2020, continues to evolve as more information is gained on AOPs, their key events (KEs), and key event relationships (KERs).

Approaches such as the development of AOPs and the identification of modes of action (MoA), together with the use of integrated approaches to testing and assessment (IATA) (OECD 2017b) as the means of combining multiple lines of evidence, are seen as the fundamental pathway to the hazard identification and characterization of a chemical. MoAs and AOPs are conceptually similar: MoAs include the chemical-specific kinetic processes of absorption, distribution, metabolism, and elimination (ADME) and describe the mechanism of action of the chemical in the human body, whereas AOPs focus on non-chemical-specific biological pathways that start with a molecular initiating event (MIE) (e.g., binding to an enzyme) and result in perturbations (KEs) leading to an AO at the organism level, as outlined in Fig. 1.

An AOP is a conceptual framework that links a molecular-level initiating event with adverse effects relevant for risk assessment. Each AOP consists of a set of chemical, biochemical, cellular, and physiological responses that characterize the biological effects cascade resulting from a specific toxic insult (Ankley et al. 2010). AOPs often start out being depicted as linear processes; however, the amount of detail and linearity characterizing the pathway between a molecular initiating event and an adverse outcome within an AOP can vary substantially, as a function of both existing knowledge and assessment needs (OECD 2017a).
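The difference between a linear AOP and a branched one can be made concrete by treating key events as nodes and KERs as directed edges. The sketch below is a minimal, hypothetical data structure (not the AOP KB data model), populated with a simplified version of the skin sensitization AOP; the extra KER from the MIE directly to dendritic cell activation is an illustrative assumption, included only to show how branching yields more than one route from MIE to AO.

```python
from dataclasses import dataclass, field


@dataclass
class AOP:
    """Minimal AOP representation: key events as nodes, KERs as directed edges."""
    levels: dict = field(default_factory=dict)  # event id -> level of biological organization
    kers: dict = field(default_factory=dict)    # upstream event id -> downstream event ids

    def add_event(self, eid, level):
        self.levels[eid] = level
        self.kers.setdefault(eid, [])

    def add_ker(self, upstream, downstream):
        """Add a key event relationship; both events must already exist."""
        if upstream not in self.levels or downstream not in self.levels:
            raise KeyError("add both key events before linking them")
        self.kers[upstream].append(downstream)

    def paths(self, start, end):
        """All directed paths from `start` (e.g. the MIE) to `end` (e.g. the AO).
        Assumes the graph is acyclic, as AOPs are usually drawn."""
        if start == end:
            return [[end]]
        found = []
        for nxt in self.kers[start]:
            for tail in self.paths(nxt, end):
                found.append([start] + tail)
        return found


# Simplified skin sensitization AOP (hypothetical short ids).
aop = AOP()
for eid, level in [("MIE", "molecular"),   # covalent binding to skin proteins
                   ("KC", "cellular"),     # keratinocyte activation
                   ("DC", "cellular"),     # dendritic cell activation
                   ("TC", "organ"),        # T-cell proliferation in lymph node
                   ("AO", "organism")]:    # skin sensitization
    aop.add_event(eid, level)
for up, down in [("MIE", "KC"), ("KC", "DC"), ("MIE", "DC"), ("DC", "TC"), ("TC", "AO")]:
    aop.add_ker(up, down)

for route in aop.paths("MIE", "AO"):
    print(" -> ".join(route))
```

A strictly linear AOP would yield a single route; each additional KER can add routes, which is one reason AOP networks need graph-based rather than list-based representations.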

Integrated Approaches to Testing and Assessment (IATA)

Complex endpoints cannot be predicted by a single stand-alone non-animal test as it will never be possible to reproduce a whole organism, mainly due to the lack of kinetic relationships and cross-talk among cells, tissues, and organs (Rowan and Spielmann 2019). It is instead necessary to use integrated approaches to testing and assessment (IATA) based on a weight-of-evidence (WoE) approach, where information and evidence from a battery of tests can be incorporated (OECD 2017b). Data can then be integrated by means of modeling. This will lead to a shift toward the use of more human data in terms of biologically significant perturbations in key toxicity pathways.

IATA are scientific approaches to hazard and risk characterization, based on an integrated analysis of existing information coupled with new information generated by testing strategies. IATA can combine methods and integrate results from one or many methodological approaches, ranging from flexible ones to rule-based ones, the so-called defined approaches. IATA should ideally be based on knowledge of the MoA by which chemicals induce their toxicity. Such information is, of course, quite often missing for complex endpoints, for example, carcinogenicity or developmental toxicity. The approach has been used successfully to partially replace skin sensitization testing in animals: the first three in vitro AOP-based OECD TGs have been adopted, covering the key events of the skin sensitization AOP, namely protein binding (TG 442C; OECD 2015a), keratinocyte activation (TG 442D; OECD 2015b), and dendritic cell activation (TG 442E; OECD 2016).
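A rule-based defined approach can combine the three key-event assays named above. One such rule is commonly described as “2 out of 3”: the hazard call follows the first two concordant results. The sketch below is a simplified illustration of that majority logic only; it assumes binary assay outcomes and ignores the borderline ranges and applicability-domain checks that an adopted defined approach applies. The function name is hypothetical.

```python
from typing import Optional


def two_out_of_three(protein_binding: Optional[bool],
                     keratinocyte: Optional[bool],
                     dendritic_cell: Optional[bool]) -> Optional[str]:
    """Majority vote over the three key-event assays (TG 442C, 442D, 442E).
    None means 'not tested or not usable'. Returns None while no two
    available results agree (i.e. a further assay would be needed)."""
    results = [r for r in (protein_binding, keratinocyte, dendritic_cell) if r is not None]
    positives = sum(results)              # True counts as 1
    negatives = len(results) - positives
    if positives >= 2:
        return "sensitizer"
    if negatives >= 2:
        return "non-sensitizer"
    return None


print(two_out_of_three(True, True, None))    # first two assays agree: no third needed
print(two_out_of_three(True, False, None))   # discordant: third assay required
print(two_out_of_three(True, False, True))   # third assay breaks the tie
```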

New Technologies

Significant advances in knowledge and technology during the last 30 years now allow us to conduct safety testing on human cells, 3D tissue models, and organoids rather than on animal models.

The first important step was the discovery and application of stem cells, in particular of embryonic stem cells (ESCs) and of induced pluripotent stem cells (iPSCs) derived from adult cells, from both animals and humans. At the same time, perfused human 3D cell and organ culture models, the multiorgan-chips (MOC), were developed to model human diseases and to study beneficial and adverse effects of drugs and other chemicals on human tissues and organs. Moreover, and quite independently, computer-based virtual models of organ systems have been developed, which incorporate biological structure and extend the data from in vitro toxicity testing to a higher level of biological organization.

Advancing toxicology in the twenty-first century by applying the new technologies with human tissues – stem cells, perfused organ chips, 3D-bioprinting, virtual models, and use of artificial intelligence (AI) – will be highlighted in this section.

Stem Cells as Advanced Tools in Predictive Toxicology

Stem cells have the ability to differentiate along different lineages and to renew themselves. Stem cells are broadly classified into embryonic stem cells (ESCs) found in the embryo, stem cells isolated from adult tissues, and induced pluripotent stem cells (iPSCs). Along with their self-renewal capacity, ESCs are pluripotent, with the ability to differentiate into all three embryonic germ layers. Almost 30 years ago, we developed the first stem cell-based toxicity test, the mouse embryonic stem cell test (mEST) (Laschinski et al. 1991), which became widely used after it had been successfully validated by ECVAM (Genschow et al. 2002) and we had provided a robust protocol (Seiler and Spielmann 2011). Since then, many variants of the EST have been developed, and high-throughput screening (HTS) variants are used during preclinical drug development.
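The EST reads out three concentration-response endpoints: cytotoxicity in ES cells and in 3T3 fibroblasts (representing differentiated cells), each expressed as an IC50, and inhibition of ES cell differentiation into contracting cardiomyocytes, expressed as an ID50. The sketch below estimates such 50 % values by log-linear interpolation between tested concentrations and computes the relative difference between 3T3 cytotoxicity and inhibition of differentiation, a term that, as the EST prediction model is usually described, enters it alongside the log IC50 values. The model’s discriminant functions themselves are not reproduced here, and the data and function names are hypothetical.

```python
import math
from typing import Optional, Sequence


def conc_at_50(concs: Sequence[float], responses: Sequence[float]) -> Optional[float]:
    """Concentration at which the response (% of untreated control) first drops
    to 50 %, by linear interpolation on log10(concentration) between the two
    tested concentrations that bracket 50 %. None if 50 % is not bracketed."""
    pairs = sorted(zip(concs, responses))
    for (c1, r1), (c2, r2) in zip(pairs, pairs[1:]):
        if r1 >= 50.0 >= r2 and r1 != r2:
            frac = (r1 - 50.0) / (r1 - r2)
            log_c = math.log10(c1) + frac * (math.log10(c2) - math.log10(c1))
            return 10 ** log_c
    return None


def relative_difference(ic50_3t3: float, id50_es: float) -> float:
    """(IC50_3T3 - ID50_ES) / IC50_3T3: how far below the concentration toxic to
    3T3 fibroblasts the concentration that blocks ES cell differentiation lies."""
    return (ic50_3t3 - id50_es) / ic50_3t3


# Hypothetical concentration-response data (concentrations in ug/ml).
concs = [1, 10, 100, 1000]
ic50_3t3 = conc_at_50(concs, [100, 95, 60, 10])  # 3T3 viability
id50_es = conc_at_50(concs, [100, 70, 20, 0])    # cardiomyocyte differentiation
print(ic50_3t3, id50_es, relative_difference(ic50_3t3, id50_es))
```

A relative difference close to 1 indicates that differentiation is inhibited at concentrations well below general cytotoxicity, which is the pattern an embryotoxicity test is designed to detect.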

The most apparent advantage of using hESCs instead of mESCs is that it limits the possibility of false negatives arising from species-specific differences. Advanced versions of the humanized hEST use reporter genes and other biomarkers as endpoints for embryotoxicity. In the US ToxCast program, the commercial hiPSC high-throughput assay (Stemina STM), which predicts developmental toxicity potential based on changes in cellular metabolism following chemical exposure (Palmer et al. 2013, 2017), was used to screen 1065 ToxCast phase I and II chemicals in single-concentration or concentration-response format for the targeted biomarker (the ratio of ornithine to cystine secreted into or consumed from the medium). The encouraging results of this extensive study support the application of the Stemina STM platform for predictive toxicology and further demonstrate its value in ToxCast as a novel resource that can generate testable hypotheses aimed at characterizing potential pathways for teratogenicity and at HTS prioritization of environmental chemicals for an exposure-based assessment of developmental hazard (Zurlinden et al. 2020).
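The biomarker readout described above can be illustrated as follows: the ornithine/cystine (o/c) ratio of each treated culture is normalized to the vehicle control, and the lowest tested concentration at which the normalized ratio falls below a cut-off is reported. The 0.88 default is an assumption used for illustration only and should be checked against the assay’s own prediction model; the published analysis also interpolates between concentrations, which this simplified sketch does not. Function names and data are hypothetical.

```python
from typing import Optional, Sequence


def oc_ratio(ornithine: float, cystine: float) -> float:
    """Ornithine/cystine ratio measured in the spent culture medium."""
    return ornithine / cystine


def lowest_active_conc(concs: Sequence[float], treated_oc: Sequence[float],
                       control_oc: float, threshold: float = 0.88) -> Optional[float]:
    """Lowest tested concentration whose o/c ratio, normalized to the vehicle
    control, falls below `threshold` (0.88 is an illustrative assumption).
    Returns None if no tested concentration crosses the threshold."""
    for conc, ratio in sorted(zip(concs, treated_oc)):
        if ratio / control_oc < threshold:
            return conc
    return None


# Hypothetical screening data (concentrations in uM).
control = oc_ratio(ornithine=3.0, cystine=2.0)
print(lowest_active_conc([0.1, 1, 10, 100], [1.50, 1.42, 1.20, 0.75], control))
```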

Ethical issues associated with obtaining hESCs from human embryos have led to the development of hiPSCs, which are generated by reprogramming mature somatic cells to a pluripotent state. hiPSCs possess properties of self-renewal and differentiation into many types of cell lineage that are similar to those of hESCs. Owing to their ability to differentiate into all lineages of the human body, including germ cells, stem cells, and in particular hiPSCs, can be used for the in vitro assessment of embryonic, developmental, reproductive, organ, and functional toxicities relevant to human physiology, without live animal tests and with the possibility of high-throughput applications. Notably, patient-derived, disease-specific hiPSCs with a genetic background sensitive to the disease pathology could provide evidence for understanding disease mechanisms and for developing and testing compounds. Although variations in the differentiation efficiency of different hESC lines may cause significant variability in experimental toxicity data, hESCs can help develop more reliable toxicity testing. Additional significant advantages of hESCs are their unlimited self-renewal capability and their differentiation into a variety of specialized cell types. Thus, stem cell toxicology will tremendously assist in the toxicological evaluation of the increasing number of synthetic chemicals to which we are exposed and for which toxicity information is limited.

Human-on-a-Chip (Multiorgan-Chip) Technology Applied to Toxicity Testing

Pressures to change from the use of traditional animal models to novel technologies arise from the limited value of these models for predicting human health effects and from animal welfare considerations (Andersen and Krewski 2009). This change depends on the availability of human organ models combined with the use of new technologies in the field of omics and systems biology, as well as suitable evaluation strategies. Ideally, this requires an appropriate in vitro model for each organ system.

In this context, it is important to consider combining individual organ models into systems. The miniaturization of such systems on the smallest possible chip-based scale is envisaged, to minimize the demand for human tissue and to meet the high-throughput needs of industry (Huh et al. 2011; Esch et al. 2015). A multiorgan-chip technology based on a self-contained, smartphone-sized chip format has been developed in a project funded by the German Federal Ministry of Education and Research (BMBF) (Marx et al. 2016, 2020). An integrated micro-pump supports microcirculation for 28 days under dynamic perfusion conditions. The inclusion of human organ equivalents for liver, intestine, kidney, and skin allowed ADME and toxicity (ADMET) testing in a four-organ-chip. The system holds promise for developing disease models for preclinical efficacy and toxicity testing of new drugs. An encouraging example is a human microfluidic two-organ-chip model of pancreatic islet micro-tissues and liver spheroids, which maintained a functional feedback loop between the liver and the insulin-secreting islet micro-tissues for up to 15 days in an insulin-free medium (Bauer et al. 2018) and is a promising model of human type 2 diabetes mellitus.
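Why perfusion matters for ADME testing on a chip can be sketched with a two-compartment model: a well-mixed medium reservoir that exchanges with a liver compartment at the pump flow rate, with the liver clearing the compound in proportion to its local concentration. This is a generic kinetic sketch under stated assumptions (well-mixed compartments, linear clearance, explicit Euler integration), not a model of any published chip; the function name and all volumes, flows, and clearance values are hypothetical.

```python
def simulate_chip(dose_ug, v_medium_ml, v_liver_ml, flow_ml_h, clint_ml_h,
                  hours, dt_h=0.01):
    """Explicit-Euler integration of a two-compartment recirculating chip.
    The medium exchanges with the liver compartment at `flow_ml_h`; the liver
    clears compound at `clint_ml_h` times its own concentration.
    Returns (times_h, medium_conc, liver_conc, eliminated_ug)."""
    c_med, c_liv, eliminated = dose_ug / v_medium_ml, 0.0, 0.0
    times, med, liv = [0.0], [c_med], [c_liv]
    for step in range(1, int(round(hours / dt_h)) + 1):
        exchange = flow_ml_h * (c_med - c_liv)  # net ug/h carried from medium to liver
        metabolism = clint_ml_h * c_liv         # ug/h removed by liver metabolism
        c_med -= exchange / v_medium_ml * dt_h
        c_liv += (exchange - metabolism) / v_liver_ml * dt_h
        eliminated += metabolism * dt_h
        times.append(step * dt_h)
        med.append(c_med)
        liv.append(c_liv)
    return times, med, liv, eliminated


# Hypothetical parameters; dt_h must stay small relative to v_liver/(flow + clint).
t, med, liv, gone = simulate_chip(dose_ug=10.0, v_medium_ml=0.5, v_liver_ml=0.05,
                                  flow_ml_h=0.5, clint_ml_h=0.2, hours=24.0)
remaining = med[-1] * 0.5 + liv[-1] * 0.05
print(f"medium conc after 24 h: {med[-1]:.3f} ug/ml; eliminated: {gone:.2f} ug")
```

Setting `flow_ml_h=0.0` leaves the compound unmetabolized in the medium, a crude stand-in for an organ that the circulation does not reach; at every step the amount in both compartments plus the amount eliminated equals the dose.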

It has been hypothesized that exposure of in vitro assembled premature iPSC-derived organoids to the physiological environment of a micro-physiological system (MPS) , such as perfusion, shear stress, electrical stimulation, and organoid cross talk in interconnected arrangements, might constitute the missing step for their final and complete in vitro differentiation. The final aim is to combine different “organoids” to generate a human-on-a-chip, an approach that would allow studies of complex physiological organ interactions. The recent advances in the area of induced pluripotent stem cells (hiPSCs, Ramme et al. 2019) provide a range of possibilities that include cellular studies of individuals with different genetic backgrounds, for example, human disease models. However, throughput remains a significant limitation and there will continue to be a need for emphasis on “fit-for-purpose” assays.

Since 2012, the NIH and the US Food and Drug Administration (FDA) have been funding a major multi-center program for the development of a technology platform that will mimic human physiological systems in the laboratory by using an array of integrated, interchangeable engineered human tissue constructs: a “human-body-on-a-chip” (NIH 2012). The program, which is coordinated by the National Center for Advancing Translational Sciences (NCATS), intends to combine the technologies to create a microfluidic platform that can incorporate up to 10 individual engineered human micro-physiological organ system modules in an interacting circuit. The goal of the program is to create a versatile platform capable of accurately predicting drug and vaccine efficacy, toxicity, and pharmacokinetics in preclinical testing.

Results obtained with chips and microfluidic systems indicate that static and dynamic in vitro culture conditions may provide significantly different predictions for some endpoints, and they also open new perspectives on the future in vitro assessment of the absorption, distribution, metabolism, and excretion of drugs and xenobiotics. The developers of chips and microfluidic systems anticipate that, once these systems have been validated for specific applications in toxicology, they will also be swiftly adopted in the preclinical stages of drug development. The new culture systems will be adaptable to the integration of future technologies, for example, advances in stem cell culture and 3D-bioprinting, and to personalized medicine using individual patient-derived tissue (Marx et al. 2020; Marrella et al. 2020).

3D-Bioprinting

3D bioprinting is a new type of tissue engineering technology that is expanding hand in hand with advances in materials engineering and biopolymer chemistry. In this technology, cell-laden biomaterials are used as “bio-inks” and raw materials. Compared with classic tissue engineering, which provided highly standardized in vitro skin and epithelial models, 3D bioprinting allows the production of highly organized 3D tissue models that are physiologically and morphologically similar to their in vivo biological counterparts (Weinhart et al. 2019). In addition to allowing vascularization of the 3D-printed in vitro models, bioprinting in combination with microfluidic and micro-physiological systems offers a very promising platform for precisely monitored, long-term toxicity studies. It is expected that 3D bioprinting technology will be used to construct tissues and organs with complex responses and will be applied especially in safety and efficacy studies of novel drugs.

The use of 3D bioprinted tissues and organs will also provide new approaches for high-throughput toxicity testing that will improve the prediction of human responses to chemicals and drugs. In order to implement these technologies in the regulatory framework, it will be necessary to adopt the concept of “open-source” models and to integrate them into existing TGs. The standardization and validation of these systems will be challenging but are inevitable.

Virtual Organ Models

Cell agent-based models are useful for modeling developmental toxicity by virtue of their ability to accept data on many linked components and to implement a morphogenetic series of events. These data may be simulated (e.g., what is the effect of localized cell death on the system?) or derived from in vitro studies. In the latter case, perturbed parameters are introduced as simple lesions or combinations of lesions identified from the data, where the assay features have been annotated and mapped to a pathway or cellular process implemented in the virtual model. Whereas in the EPA ToxCast program predictive models are built with computer-assisted mapping of chemical-assay data to chemical endpoint effects (Judson et al. 2010), the virtual tissue models incorporate biological structure and thus extend the in vitro data to a higher level of biological organization. A developing system can be modeled and perturbed “virtually” with toxicological data, and the predictions on growth and development can then be mapped against real experimental findings.
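The simulated question mentioned above (what is the effect of localized cell death on the system?) can be explored with a deliberately minimal cell-agent sketch: cells on a grid divide into empty neighboring sites, and a lesion removes a square block of cells at the start. Everything here (grid size, division probability, neighborhood rule, function name) is a hypothetical toy assumption; real virtual-tissue models encode far richer morphogenetic rules.

```python
import random


def simulate_lesion_recovery(size=20, steps=30, lesion=None, p_divide=0.5, seed=0):
    """Toy cell-agent model on a size x size grid (True = site occupied by a cell).
    `lesion` = (row, col, width) kills a square block of cells at t = 0; afterwards
    each cell divides, with probability `p_divide` per step, into a randomly chosen
    empty orthogonal neighbor. Returns the total cell count after each step."""
    rng = random.Random(seed)
    grid = [[True] * size for _ in range(size)]  # start from a confluent tissue
    if lesion is not None:
        r0, c0, width = lesion
        for r in range(r0, r0 + width):
            for c in range(c0, c0 + width):
                grid[r][c] = False  # localized cell death
    history = [sum(map(sum, grid))]
    for _ in range(steps):
        cells = [(r, c) for r in range(size) for c in range(size) if grid[r][c]]
        rng.shuffle(cells)  # random update order avoids a directional bias
        for r, c in cells:
            if rng.random() >= p_divide:
                continue
            empty = [(r + dr, c + dc) for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
                     if 0 <= r + dr < size and 0 <= c + dc < size
                     and not grid[r + dr][c + dc]]
            if empty:
                nr, nc = rng.choice(empty)
                grid[nr][nc] = True  # daughter cell fills part of the gap
        history.append(sum(map(sum, grid)))
    return history


print(simulate_lesion_recovery(size=20, steps=10, lesion=(5, 5, 4), seed=1))
```

Varying the lesion size or `p_divide` and comparing recovery curves is the kind of “virtual” perturbation experiment the paragraph describes, in miniature.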

The goal of the US “Virtual Liver” project is to develop models for predicting liver injury due to chronic chemical exposure by simulating the dynamics of perturbed molecular pathways, their linkage with adaptive or adverse processes leading to alterations of cell state, and the integration of the responses into a physiological tissue model. When completed, the Virtual Liver Web portal and accompanying query tools will provide a framework for the incorporation of mechanistic information on hepatic toxicity pathways and for characterizing interactions spatially and across the various cell types that comprise liver tissue. The German BMBF-funded Virtual Liver Project focuses on the establishment of a 3D model of the liver that correctly recapitulates alterations of the complex micro-architecture, both in response to, and during regeneration from, chemically induced liver damage (https://fair-dom.org/partners/virtual-liver-network-vln/). The long-term goal is to integrate intracellular mechanisms into each cell of the model, although many of the critical intracellular key mechanisms still need to be elucidated.

The US EPA program, the Virtual Embryo Project (v-Embryo™ https://www.ehd.org/virtual-human-embryo/), is a computational framework for developmental toxicity, focused on the predictive toxicology of children’s health and developmental defects following prenatal exposure to environmental chemicals. The research is motivated by scientific principles in systems biology, as a framework for the generation, assessment, and evaluation of data, tools, and approaches in computational toxicology. The long-term objectives are: to determine the specificity and sensitivity of biological pathways relevant to human developmental health and disease; to predict and understand key events during embryogenesis leading to adverse fetal outcomes; and to assess the impacts of prenatal exposure to chemicals at various stages of development and scales of biological organization.

Artificial Intelligence (AI) and Machine Learning

Large amounts of newly generated in vitro data present an opportunity to use artificial intelligence (AI) and machine learning to improve knowledge of toxicity pathways and to offer broader insight into the safety assessment of chemicals and mixtures. The upcoming decade will certainly be an era of “big data” requiring novel approaches to traditional methods of data analysis. This will present both a challenge and an opportunity for toxicologists and regulators. While in the twentieth century the community only slowly accepted in silico toxicology as a discipline, in the twenty-first century we will be increasingly exposed to new quantitative structure-activity relationship (QSAR) models and to artificial intelligence and machine learning methods leveraging neural networks (Tang et al. 2019).

These technologies are very promising and are already in use in the pharmaceutical industry and at some regulatory agencies in the USA. They will reduce the use of in vivo and in vitro experiments, because predictions based on computational (“in silico”) modeling are risk-free, low-cost, and high-throughput. On the other hand, it will be a challenge to introduce these and more complex systems to the non-expert community.
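A simple in silico prediction of the kind referred to above is nearest-neighbor read-across: a query chemical is assigned the majority label of its most structurally similar training chemicals, with similarity measured by the Tanimoto coefficient on binary fingerprints. In the sketch below, the fingerprints are hypothetical sets of substructure keys rather than the output of a cheminformatics toolkit, the training labels are invented, and the similarity of the nearest neighbor is returned as a rough indication of whether the query lies within the applicability domain.

```python
def tanimoto(a: frozenset, b: frozenset) -> float:
    """Tanimoto (Jaccard) similarity of two binary fingerprints given as sets of 'on' bits."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def knn_predict(query, training, k=3):
    """Majority vote of the k training chemicals most similar to `query`.
    `training` is a list of (fingerprint, is_toxic) pairs.
    Returns (prediction, similarity of the nearest neighbor)."""
    ranked = sorted(training, key=lambda item: tanimoto(query, item[0]), reverse=True)[:k]
    positives = sum(1 for _, label in ranked if label)
    return positives * 2 > len(ranked), tanimoto(query, ranked[0][0])


# Hypothetical fingerprints (substructure keys) and toxicity labels.
training = [
    (frozenset({"a", "b", "c"}), True),
    (frozenset({"a", "b"}), True),
    (frozenset({"x", "y"}), False),
    (frozenset({"x", "z"}), False),
    (frozenset({"y", "z"}), False),
]
prediction, nearest = knn_predict(frozenset({"a", "b", "d"}), training)
print(prediction, round(nearest, 3))
```

A low nearest-neighbor similarity would suggest that the query is structurally unlike anything in the training set, so the prediction should carry little weight.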

The Future of Toxicology Is in Vitro

The process of validating new approaches needs to be reconsidered in terms of efficiency and time to completion (Rowan and Spielmann 2019). In particular, the scientific community needs to establish whether advanced non-animal methods can meet some or all regulatory needs. Furthermore, the fate of animal testing in this transitional phase toward IATA is unclear.
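Whatever form validation or qualification takes, the predictive performance of a non-animal method is commonly summarized against reference classifications by sensitivity, specificity, and accuracy (Cooper statistics). A minimal sketch, with a hypothetical five-chemical example and a hypothetical function name:

```python
def cooper_statistics(predicted, reference):
    """Sensitivity, specificity, and accuracy of a binary test (True = toxic)
    against reference classifications of the same chemicals."""
    pairs = list(zip(predicted, reference))
    tp = sum(1 for p, r in pairs if p and r)          # toxic, correctly flagged
    tn = sum(1 for p, r in pairs if not p and not r)  # non-toxic, correctly cleared
    fp = sum(1 for p, r in pairs if p and not r)      # false alarms
    fn = sum(1 for p, r in pairs if not p and r)      # missed toxicants
    nan = float("nan")
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else nan,
        "specificity": tn / (tn + fp) if tn + fp else nan,
        "accuracy": (tp + tn) / len(pairs) if pairs else nan,
    }


# Hypothetical predictions of a new method vs. reference classifications.
stats = cooper_statistics([True, True, False, False, True],
                          [True, False, False, True, True])
print(stats)
```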

Therefore, it is not surprising, but encouraging, that by the end of 2017 the FDA and the NIH in the USA had published new roadmaps for toxicity testing, based on the new principles of safety testing without animals and on novel molecular and computational techniques, for example, the FDA Predictive Toxicology Roadmap (FDA 2017) and the ICCVAM Strategic Roadmap for Establishing New Approaches to Evaluate the Safety of Chemicals and Medical Products in the United States (ICCVAM 2018). In this context, the FDA makes the most pragmatic proposal, suggesting that “Rather than validation, an approach we frequently take for biological (and toxicological) models and assays is qualification. Within the stated context of use, qualification is a conclusion that the results of an assessment using the model or assay can be relied on to have a specific interpretation and application in product development and regulatory decision-making” (FDA 2017).

It is also very encouraging that, early in the twenty-first century, the US regulatory agencies are giving the “long sought goal of refining, reducing, and replacing testing on animals” the high priority that it deserves, both for scientific and for animal welfare reasons, in accordance with the hopes expressed 60 years ago by the pioneers of the Three Rs concept, William Russell and Rex Burch (1959).

In conclusion, twenty-first-century technologies are providing multi-dimensional human data at the molecular and cellular level that will significantly advance regulatory toxicology.


References

Andersen ME, Krewski D (2009) Toxicity testing in the 21st century: bringing the vision to life. Toxicol Sci 107:324–330

Ankley GT, Bennett RS, Erickson RJ et al (2010) Adverse outcome pathways: a conceptual framework to support ecotoxicology research and risk assessment. Environ Toxicol Chem 29:730–741

Bauer S, Wennberg Huldt C, Kanebratt K et al (2018) Functional coupling of human pancreatic islets and liver spheroids on-a-chip: towards a novel human ex vivo type 2 diabetes model. Sci Rep 8:14620. https://doi.org/10.1038/s41598-017-14815-w

Esch EW, Bahinski A, Huh D (2015) Organs-on-chips at the frontiers of drug discovery. Nat Rev Drug Discov 14:248–260

European Commission (EC) (2009) Regulation (EC) No 1223/2009 of the European Parliament and of the Council of 30 November 2009 on cosmetic products. Off J Eur Union L342:59–210

European Commission (EC) (2013) Communication from the Commission to the European Parliament and the Council on the animal testing and marketing ban and on the state of play in relation to alternative methods in the field of cosmetics. 11.03.2013 COM 135 final. European Commission, Brussels, 15 p

FDA (US Food and Drug Administration) (2017) FDA’s predictive toxicology roadmap. US Food and Drug Administration, Silver Spring, 16 p

Genschow E, Spielmann H, Scholz G et al (2002) The ECVAM international validation study on in vitro embryotoxicity tests: results of the definitive phase and evaluation of prediction models. Altern Lab Anim 30:151–176

Huh D, Hamilton GA, Ingber DE (2011) From 3D cell culture to organs-on-chips. Trends Cell Biol 21:745–754

Interagency Coordinating Committee for the Validation of Alternative Methods (ICCVAM) (2018) A strategic roadmap for establishing new approaches to evaluate the safety of chemicals and medical products in the United States. National Toxicology Program, National Institute of Environmental Health Sciences, Research Triangle Park, 13 p

Ives C, Campia I, Wang RL et al (2017) Creating a structured adverse outcome pathway knowledgebase via ontology-based annotations. Appl In Vitro Toxicol 4:298–311

Judson RS, Houck KA, Kavlock RJ et al (2010) In vitro screening of environmental chemicals for targeted testing prioritization: the ToxCast project. Environ Health Perspect 118:485–492

Krewski D, Acosta D, Andersen M et al (2010) Toxicity testing in the 21st century: a vision and strategy. J Toxicol Environ Health B Crit Rev 13:51–138

Laschinski G, Vogel H, Spielmann H (1991) Cytotoxicity test using blastocyst-derived euploid embryonal stem cells: a new approach to in vitro teratogenesis screening. Reprod Toxicol 5:57–64

Marrella A, Buratti P, Markus J et al (2020) In vitro demonstration of intestinal absorption mechanisms of different sugars using 3D organotypic tissues in a fluidic device. ALTEX 37:255–264

Marx U, Andersson TB, Bahinski A et al (2016) Biology-inspired micro-physiological system approaches to solve the prediction dilemma of substance testing. ALTEX 33:272–321

Marx U, Akabane T, Andersson TB et al (2020) Biology-inspired micro-physiological systems to advance patient benefit and animal welfare in drug development. ALTEX 37:364–394

National Institutes of Health (NIH) (2012) Press release, 24: NIH funds development of tissue chips to help predict drug safety DARPA and FDA to collaborate on groundbreaking therapeutic development initiative. National Institutes of Health, Bethesda. 2 p

National Research Council (NRC) (2007) Toxicity testing in the 21st century: a vision and a strategy. The National Academies Press, Washington, DC. 216 p

OECD (2013) Guidance document on developing and assessing adverse outcome pathways. Series on testing and assessment no. 184. OECD, Paris. 33 p

OECD (2015a) OECD TG No. 442C: in chemico skin sensitisation: direct peptide reactivity assay (DPRA). OECD, Paris. 19 p

OECD (2015b) OECD TG No. 442E: in vitro skin sensitisation: human cell line activation test (h-CLAT). OECD, Paris. 20 p

OECD (2016) OECD TG No. 442D: in vitro skin sensitisation: ARE-Nrf2 luciferase test method. OECD, Paris. 20 p

OECD (2017a) Revised guidance document on developing and assessing adverse outcome pathways. Series on testing and assessment no. 184. OECD, Paris. 32 p

OECD (2017b) Guidance document for the use of adverse outcome pathways in developing integrated approaches to testing and assessment (IATA). Series on testing and assessment no. 260. OECD, Paris. 33 p

Palmer JA, Smith AM, Egnash LA et al (2013) Establishment and assessment of a new human embryonic stem cell-based biomarker assay for developmental toxicity screening. Birth Defects Res B Dev Reprod Toxicol 98:343–363

Palmer JA, Smith AM, Egnash LA et al (2017) A human induced pluripotent stem cell-based in vitro assay predicts developmental toxicity through a retinoic acid receptor mediated pathway for a series of related retinoid analogues. Reprod Toxicol 73:350–361

Ramme AP, Koenig L, Hasenberg T et al (2019) Autologous induced pluripotent stem cell-derived four-organ-chip. Future Sci OA 5:65. https://doi.org/10.2144/fsoa-2019-0065

Rowan A, Spielmann H (2019) The current situation and prospects for tomorrow: toward the achievement of historical ambitions. In: Balls M, Combes R, Worth A (eds) The history of alternative test methods in toxicology. Academic Press/Elsevier, London, pp 325–331. Chapter 6.2

Russell WMS, Burch RL (1959) The principles of humane experimental technique. Methuen, London. 238 p

Seiler A, Spielmann H (2011) The validated embryonic stem cell test to predict embryotoxicity in vitro. Nat Protoc 6:961–978

Tang W, Chen J, Wang Z et al (2019) Deep learning for predicting toxicity of chemicals: a mini review. J Environ Sci Health Part C 36:252–271

Weinhart M, Hocke A, Hippenstiel S et al (2019) 3D organ models – revolution in pharmacological research? Pharmacol Res 139:446–451

Williams A, Grulke CM, Edwards J et al (2017) The CompTox chemistry dashboard: a community data resource for environmental chemistry. J Cheminform 9:61. https://doi.org/10.1186/s13321-017-0247-6

Zurlinden TJ, Saili KS, Rush N et al (2020) Profiling the ToxCast library with a pluripotent human (H9) stem cell line-based biomarker assay for developmental toxicity. Toxicol Sci 174:189–209

Copyright information

© 2021 Springer Nature Switzerland AG

About this entry

Cite this entry

Spielmann, H., Kandarova, H. (2021). Integration of Advanced Technologies into Regulatory Toxicology. In: Reichl, FX., Schwenk, M. (eds) Regulatory Toxicology. Springer, Cham. https://doi.org/10.1007/978-3-030-57499-4_34

DOI: https://doi.org/10.1007/978-3-030-57499-4_34

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-57498-7

Online ISBN: 978-3-030-57499-4

eBook Packages: Biomedical and Life Sciences, Reference Module Biomedical and Life Sciences