Abstract

In the last years, rule-based systems have been widely employed in several different application domains. The performance of these systems is strongly affected by the process of information granulation, which defines in terms of specific information granules such as sets, fuzzy sets and rough sets, the labels used in the rules. Generally, information granules are either provided by an expert, when possible, or extracted from the available data. In the framework of rule-based classifiers, we investigate the importance of determining an effective information granulation from data, preserving the comprehensibility of the granules. We show how the accuracies of rule-based classifiers can be increased by learning number and parameters of the granules, which partition the involved variables. To perform this analysis, we exploit a multi-objective evolutionary approach to the classifier generation we have recently proposed. We discuss different levels of information granulation optimization employing both the learning of the number of granules per variable and the tuning of each granule during the evolutionary process. We show and discuss the results obtained on several classification benchmark datasets using fuzzy sets and intervals as types of information granules.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In all activities involving knowledge representation, reasoning and decision-making, people typically express themselves by resorting to some generic and conceptually meaningful entities, which are called information granules (Zadeh 1997; Yao et al. 2013). The meaning and purpose of information granulation depend on the specific application domain. For example, granulation may simply refer to variable quantization. On the other hand, granules may correspond to data clusters, or to modules of a software design, etc. Granules may, in turn, consist of finer granules based, e.g., on similarity and functionality criteria.

Various formalisms and processing platforms for information granulation exist, including sets (in particular, intervals Yao 2009), rough sets (Liu et al. 2014), fuzzy sets (Pedrycz et al. 2014), type-2 fuzzy sets (Mendel and John 2002), interval-valued fuzzy sets (Gorzałczany 1987), and shadowed sets (Pedrycz and Vukovich 2002). The choice of a suitable formalism is basically problem-dependent. Further, granules can be directly specified by a human expert or derived automatically from data.

The term granular computing (GC) is often used to refer to a common conceptual and algorithmic platform for granular information processing. GC can be seen as a general framework embracing all methodologies and techniques that make use of information granules in problem solving (Pedrycz 2013; Pedrycz et al. 2015).

In this paper, we will consider granular rule-based classifiers (GRBCs), i.e., rule-based systems aimed at performing classification and consisting of rules whose antecedent part includes information granules. In particular, we are interested in granules that form a partition of the universe of the variables involved in the rules. To this aim, we take into account two formalisms for representing information granules: sets (in particular, numeric intervals Yao 2009) and fuzzy sets (Zadeh 1997). A set (interval) realizes an information granule by allowing each element of the universe of discourse either to belong or not to belong to that granule. A fuzzy set generalizes this notion by allowing any number in the real unit interval to represent the membership degree of an element to the information granule. Actually, two further commonly used formalisms for dealing with GRBCs are type-2 fuzzy sets and interval-valued fuzzy sets (Pedrycz 2015). We will not discuss in detail these formalisms in the following, but we will give some indication on how they can be managed in our approach.

A granular rule-based system basically includes a rule base (RB), a database (DB) containing the definition of the granules used in the RB, and an inference engine. RB and DB comprise the knowledge base of the rule-based system. Of course, the input–output mapping performed by the granular rule-based system relies on the specific formal frameworks in which the various types of information granules are defined and processed.

The rules can be generated either by encoding an expert’s knowledge or automatically from data, typically exploiting a set of training samples consisting of input-target pairs. Once you choose the granulation type, the automatic generation of rules should be guided by a suitable trade-off between accuracy and rule interpretability so as to avoid, e.g., a too high number of rules and hardly comprehensible partitions of the involved variables. To this aim, multi-objective evolutionary algorithms can be profitably exploited. In particular, when fuzzy sets are used as information granules, the systems resulting from multi-objective evolutionary optimization are typically referred to as multi-objective evolutionary fuzzy systems (MOEFSs) in the literature (Ducange and Marcelloni 2011; Fazzolari et al. 2014).

Several papers, mainly related to MOEFSs, adopt multi-objective evolutionary algorithms for rule selection (Gacto et al. 2010) (e.g., from an initial RB heuristically generated) or rule learning (Cococcioni et al. 2007), DB tuning (Botta et al. 2009), rule learning/selection together with DB learning (in particular, partition granularity and membership function parameters) (Villar et al. 2012; Antonelli et al. 2009a, b). As regards other formalisms for representing information granules, in the last years, a number of evolutionary-based approaches, mainly based on single-objective optimization, have been proposed (Castillo and Melin 2012a, b; Sanz et al. 2010, 2011, 2013, 2015). Recently, researchers have particularly focused on GRBCs based on interval-valued fuzzy sets (Sanz et al. 2010, 2011, 2013, 2015), which can be considered a simplified version of type-2 fuzzy sets (Bustince Sola et al. 2015).

In this paper, within the framework of GRBCs, we will show how granulation tuning (i.e., tuning of the partitions) and granulation learning (i.e., learning of the most suitable number of information granules), used either separately or jointly, can sensibly influence performance.

More precisely, we will start from uniform partitions of the involved variables. Each of these partitions consists of equally sized information granules, whose number is chosen based on heuristic considerations. In particular, in case of intervals and fuzzy sets, we adopt, respectively, partitions consisting of consecutive, non-intersecting intervals, and overlapping triangular fuzzy sets.

In this context, we will exploit rule learning from data. We will use the performance obtained by the developed rule-based systems on classification benchmark datasets as a quantity of reference against which we will assess the improved results achieved by equipping the rule-based systems with granulation tuning and/or granulation learning.

To this aim, on the one hand, starting from fixed uniform initial partitions of the variables, we will perform, besides rule learning, partition tuning. The meaning of partition tuning depends, of course, on the specific granulation tool adopted, e.g., in the case of intervals, partition tuning consists in suitably moving the endpoints, whereas, in the case of fuzzy sets, partition tuning concerns the determination of the position of fuzzy sets by identifying the values of the parameters of the corresponding membership functions.

On the other hand, when dealing with uniform partitions, we may be interested in determining the suitable number of elements of each partition: thus, we will learn, besides the rules, the number of elements of the partitions (without performing any tuning). Finally, we will perform concurrently rule learning, learning of the number of elements making the partitions, and tuning of the partitions.

We will show that the introduction of tuning and learning of information granulation helps to improve performance in all the considered cases.

From an operation point of view, we will approach the generation of the GRBCs, including the number and the position of the granules, from data through a multi-objective evolutionary process, considering accuracy and interpretability as the objectives to be optimized. At the end of the optimization process, the decision maker will just have to choose the system representing the best trade-off between the considered objectives for the particular application.

Finally, we present and discuss the results obtained by applying the generated GRBCs to 24 well-known classification benchmark datasets. In particular, we show how granulation tuning and learning affect the accuracy and the interpretability of the solutions generated by the multi-objective evolutionary optimization process.

The paper is organized as follows. Section 2 introduces the GRBCs and discusses their interpretability. Section 3 describes the main features of multi-objective evolutionary granular rule-based classifiers, which are simply called multi-objective granular classifiers (MOGCs) from now on, such as chromosome coding, mating operators, objective functions and multi-objective evolutionary algorithm. In Sect. 4, we discuss experimental results obtained by applying MOGCs to classification problems. Finally, Sect. 5 draws conclusions.

2 Rule-based classifiers

Let \(X = \{ X_1, \ldots , X_F \}\) be the set of input variables and \(X_{F+1}\) be the output variable. Let \(U_f\), with \(f=1,\ldots ,F\), be the universe of the fth input variable \(X_f\). Let \(P_f = \{ A_{f,1}, \ldots , A_{f,T_f} \}\) be a partition of variable \(X_f\) consisting of \(T_f\) information granules. In classification problems, the output variable \(X_{F+1}\) is a categorical variable assuming values in the set \(\Gamma \) of K possible classes \(\Gamma = \{ C_1, \ldots , C_K \}\). Let \(\{({\mathbf{x}}_1,x_{F+1,1}), \ldots , ({\mathbf{x}}_N,x_{F+1,N})\}\) be a training set composed of N input–output pairs, with \({ {\mathbf x}}_t=[x_{t,1}\dots ,x_{t,F}] \in {\mathfrak {R}}^F\), \(t=1,\ldots , N\) and \(x_{F+1,t} \in \Gamma \).

With the aim of determining the class of a given input vector, we adopt an RB composed of M rules expressed as:

where \(C_{j_{m}}\) is the class label associated with the mth rule, and \({\rm RW}_{m}\) is the rule weight, i.e., a certainty degree of the classification in the class \(C_{j_{m}}\) for a pattern belonging to the subspace delimited by the antecedent of the rule \(R_m\).

In this paper, we consider only sets (intervals) and fuzzy sets as information granule types. A set A defined on a universe of discourse U is typically described by a characteristic function \(A(x):U \rightarrow \{0,1\}\): the value 1 (respectively, 0) means that the element belongs (does not belong) to the information granule represented by the set. The characteristic function is also used to define the three fundamental set operations, namely, union, intersection and complement. A particular type of sets are intervals, for which both set-theoretic and algebraic operations are defined (Moore 1966; Yao 2009).

The classical notion of set (or crisp set) can be extended by introducing fuzzy sets. A fuzzy set A defined on a universe of discourse U is characterized by a membership function \(A(x):U \rightarrow {[0,1]}\) which associates with each element \(\hat{x}\) of U a number \(A(\hat{x})\) in the interval [0, 1]: \(A(\hat{x})\) represents the membership degree of \(\hat{x}\) in A (Zadeh 1965). The support and the core of A are the crisp subsets of A with, respectively, nonzero membership degrees and membership degrees equal to 1.

Though different types of membership functions, such as Gaussian, triangular and trapezoidal, can be used for characterizing fuzzy sets, for the sake of simplicity, we will consider triangular fuzzy sets, which are identified by the tuples (a, b, c), where a and c correspond to the left and right extremes of the support, and b to the core. Formally, a triangular membership function can be defined as follows:

A variable whose values are linguistic terms is called linguistic variable (Zadeh 1965). A linguistic variable L is characterized by a term set T(L), with each term labeling an information granule defined on universe U. The set of terms \(P = \{ A_1, \ldots , A_{|T(L)|} \}\), where \(|\cdot |\) is the cardinality, constitutes a partition of the universe U.

Usually, a purposely defined granule \(A_{f,0}\) (\(f=1,\ldots ,F\)) is considered for all the F input variables. This granule, which represents the “don’t care” condition, is defined by a characteristic/membership function equal to 1 on the overall universe. The term \(A_{f,0}\) allows generating rules that contain only a subset of the input variables (Ishibuchi et al. 2004).

Given an input pattern \({\hat{\mathbf {x}}}\in {\mathfrak {R}}^F\), the strength of activation (matching degree of the rule with the input) of the rule \(R_m\) is computed as:

where \(A_{f,j_{m,f}}(X_f)\) is the characteristic/membership function associated with the granule \(A_{f,j_{m,f}}\). For the sake of simplicity, in the formula, we have only considered the product as t-norm for implementing the logical conjunction.

The estimated output class \(\hat{C}\) is obtained by first calculating the association degree \(h_m({\hat{\mathbf {x}}})\) with class \(C_{j_{m}}\) for each rule \(R_m\), and then by applying a reasoning method so as to take into account all the rules that constitute the classifier.

The association degree \(h_m({\hat{\mathbf {x}}})\) is computed as:

In this paper, we adopt as rule weight the certainty factor \({\mathrm{CF}}_m\) defined as (Cordon et al. 1999; Ishibuchi et al. 2004):

The reasoning method uses the information from the RB to determine the class label for a given input pattern. We adopt the maximum matching as reasoning method: an input pattern is classified into the class corresponding to the rule with the maximum association degree calculated for the pattern. In case of tie, the pattern is classified into the class associated with the most specific rule.

In the case of type-2 fuzzy sets and interval-valued fuzzy sets, the structure of the rule shown in (1) does not change. Obviously, the inference mechanism has to be adapted to the type of granule. Popular inference mechanisms used with these types of granules have been discussed in Sanz et al. (2010, 2011, 2013, 2015); Liang and Mendel (2000).

3 Multi-objective evolutionary granular rule-based systems

The adequate granulation of the input variables affects the accuracy of the rule-based system, but also its interpretability. Accuracy is typically expressed in terms of classification rate. As regards interpretability, it is quite difficult to find a universally accepted index for interpretability assessment since it is a rather subjective and application-dependent concept. Thus, researchers have focused their attention on some factors, which influence interpretability, and on some constraints that have to be satisfied for these factors (see, e.g., Guillaume 2001; de Oliveira 1999). Various semantic and syntactic interpretability issues regarding both the RB and the DB have been taken into account mainly in the framework of fuzzy rule-based systems (see, e.g., Mencar and Fanelli 2008; Alonso et al. 2009; Zhou and Gan 2008).

Recently, the most relevant measures and strategies exploited to design interpretable fuzzy rule-based systems have been reviewed in Gacto et al. (2011). Here, a taxonomy of the interpretability measures has been proposed by considering two different dimensions, namely semantics and complexity, at RB and DB levels. In particular, complexity at RB level is expressed in terms of number of rules, total rule length (TRL) and average rule length, while complexity at DB level is determined by the number of attributes and the number of granules. On the other hand, the semantic dimension at the RB level concerns aspects such as consistency of rules, number of rules fired at the same time, and transparency of the structure, while, at DB level, concepts such as coverage of the universes, normalization of the functions characterizing the granules, distinguishability and order of granules are taken into account. In this paper, we have used just a measure of RB complexity, namely TRL.

Accuracy and interpretability are objectives in competition with each other: an increase in the former corresponds typically to a decrease in the latter. The best trade-off between the two objectives generally depends on the application context and cannot be fixed a-priori. Thus, the generation of GRBCs from data taking both the objectives into consideration is a typical multi-objective optimization problem, which can be tackled using multi-objective evolutionary algorithms (MOEAs). The output of the MOEA is a set of rule-based systems with different trade-offs between accuracy and interpretability: the user can decide for the best solution on the basis of the specific application context.

During the evolutionary process, we focus on learning data and rule bases. We generalize to generic information granules the approaches we proposed in Antonelli et al. (2009a, b) for fuzzy sets and regression problems. As regards data base learning, we aim to learn both the number and the parameters of the information granules. According to psychologists, to preserve interpretability, the number of granules, expressed as linguistic terms, per variable should be \(7\pm 2\) due to a limit of human information processing capability (Miller 1956). Thus, we can fix an upper bound \(T_{\mathrm{max}}\) for the number of granules. \(T_{\mathrm{max}}\) is a user-defined parameter which, for specific application domains, might be lower, but should never be higher than 9 for preserving interpretability.

For each variable \(X_f\), we define initial partitions with the maximum possible number \(T_{\mathrm{max}}\) of granules. These partitions can be provided by an expert, when possible, or can be generated uniformly. These partitions are denoted as virtual partitions in the following Antonelli et al. (2009a, b). During the evolutionary process, rule generation and granule parameter tuning are performed on these virtual partitions. The actual granularity is used only in the computation of the objectives. In practice, we generate RBs, denoted as virtual RBs, and tune granule parameters using virtual partitions, but assess their quality using each time different “lens” depending on the actual number of granules used to partition the single variables. Thus, we do not worry about the actual number of granules in applying crossover and mutation operators. Obviously, to compute the fitness, we have to transform the virtual GRBC into the actual GRBC. This process requires to define appropriate mapping strategies, both for the RB and for the granule parameters.

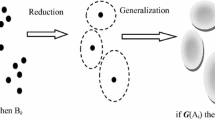

3.1 RB mapping strategy

To map the virtual RB defined on variables partitioned with \(T_{\mathrm{max}}\) granules into a concrete RB defined on variables partitioned with \(T_f\) granules, we adopt the following simple mapping strategy proposed in Antonelli et al. (2009a, b). Let “\(X_f\) is \(\widehat{A}_{f,h}\), \(h\in [1,T_{\mathrm{max}}]\)” be a generic proposition defined in a rule of the virtual RB. Then, the proposition mapped to \(X_f\) is \(\widetilde{A}_{f,s}\), with \(s\in [1,T_f]\), where \(\widetilde{A}_{f,s}\) is the granule most similar to \(\widehat{A}_{f,h}\) among the \(T_f\) granules \(\widehat{A}_{f,h}\) defined on \(X_f\). The definition of similarity depends on the specific type of granules considered in the rule-based system.

In the case of fuzzy sets, we can trivially consider as similarity measure the distance between the centers of the cores of the two fuzzy sets. If there are two fuzzy sets in the partition with centers of the cores at the same distance from the center of the core of \(\widehat{A}_{f,h}\), we choose randomly one of the two fuzzy sets. In the case of intervals, similar to the fuzzy case, we consider as similarity measure the distance between the centers of the two intervals. Since during the evolutionary process endpoints are constrained to vary within a pre-fixed range, this measure, although quite coarse, can be considered adequate. For the sake of completeness, we recall that, in the case of type-2 or interval-valued fuzzy sets, the RB mapping strategy can be easily extended using appropriate similarity functions between these types of granules. An interesting analysis on similarity measures for these granules can be found in Wu and Mendel (2009).

Note that different rules of the virtual RB can be mapped to equal rules in the concrete RB. This occurs because distinct granules defined on the partitions used in the virtual RB can be mapped to the same granule defined on the partitions used in the concrete RB. In the case of equal rules, only one of these rules is considered in the concrete RB. The original different rules are, however, maintained in the virtual RB. Indeed, when the virtual RB will be interpreted using different “lens”, all these rules can again be meaningful and contribute to increase the accuracy of the granular rule-based system. Thus, the concept of virtual RB allows us to explore the search space and concurrently exploit the optimal solutions achieved during the evolutionary process.

3.2 Granule parameter mapping strategy

As regards the granule parameter tuning, we approach the problem using a piecewise linear transformation (Pedrycz and Gomide 2007; Klawonn 2006; Antonelli et al. 2009a). We start from an initial partition of the input variables and tune the parameters of the granules, which compose the partition, by applying this transformation. Let \({\widetilde{P}}_f=\left\{ {\widetilde{A}}_{f,1},\ldots ,{\widetilde{A}}_{f,T_f}\right\} \) and \(P_f=\left\{ A_{f,1},\ldots ,A_{f,T_f}\right\} \) be the initial and the transformed partitions, respectively. In the following, we assume that the universes \(\widetilde{U}_f\) and \(U_f\) of the two partitions are identical. Further, we consider each variable normalized in [0, 1].

Let \(t(x_f): U_f \rightarrow \widetilde{U}_f\) be the piecewise linear transformation. We have that \(A_{f,j}(x_f)= \widetilde{A}_{f,j}\left( t \left( x_f \right) \right) =\widetilde{A}_{f,j}\left( \widetilde{x}_f \right) \), where \(\widetilde{A}_{f,j}\) and \(A_{f,j}\) are two generic granules from the initial and transformed partitions, respectively. As observed in Klawonn (2006), the transformation must be non-decreasing. We define the piecewise linear transformation by considering one representative for each granule. In the case of fuzzy sets, we assume that the representative coincides with the center of the core. In the case of intervals, it corresponds to the center of the interval. Similarly, in the case of type-2 and interval-valued fuzzy sets, the representative may coincide with the center of the core of the primary membership function and of the interval-valued fuzzy set, respectively. The representatives determine the change of slopes of the piecewise linear transformation \(t(x_f)\) for each variable \(X_f\). Let \(\widetilde{b}_{f,1}, \ldots ,\widetilde{b}_{f,T_f}\) and \(b_{f,1}, \ldots , b_{f,T_f}\) be the representatives of \(\widetilde{A}_{f,1}, \ldots ,\widetilde{A}_{f,T_f}\) and \(A_{f,1}, \ldots , A_{f,T_f}\), respectively. Transformation \(t(x_f)\) is defined as:

with \(b_{f,j-1}\le x_f \le b_{f,j}\).

Once defined transformation \(t(x_f)\), all the parameters which define the granules are transformed using \(t(x_f)\). As an example, we consider triangular fuzzy sets as granules. Further, we assume that the initial partition is a uniform partition (see Fig. 1). Thus, \(b_{f,1}\) and \(b_{f,T_f}\) coincide with the extremes of the universe \(U_f\) of \(X_f\). It follows that \(t(x_f)\) depends on \(T_f - 2\) parameters, that is, \(t\left( (x_f;b_{f,2},\ldots ,b_{f,T_f-1})\right) \) (Antonelli et al. 2009a). Once fixed \(b_{f,2},\ldots ,b_{f,T_f-1}\), the partition \(P_f\) can be obtained simply by transforming the three points \((\widetilde{a}_{f,j},\widetilde{b}_{f,j},\widetilde{c}_{f,j})\), which describe the generic fuzzy set \(\widetilde{A}_{f,j}\), into \((a_{f,j},b_{f,j},c_{f,j})\) applying \(t^{-1}(\widetilde{x}_f)\). In those regions where \(t(x_f)\) has a high value of the derivative (high slope of the lines), the fuzzy sets are narrower; otherwise, the fuzzy sets \({A}_{f,j}\) are wider. We define the piecewise linear transformation on the maximum granularity \(T_{\mathrm{max}}\).

In the case of intervals, we adopt a similar strategy. Each interval \(A_{f,j}\) is defined by its endpoints \([a_{f,j},c_{f,j}]\). For similarity to the fuzzy case, we assume that \(b_{f,1}\) and \(b_{f,T_f}\) coincide with the extremes of the universe \(U_f\) of \(X_f\). The partition \(P_f\) can be obtained by simply transforming the two endpoints \((\widetilde{a}_{f,j},\widetilde{c}_{f,j})\), which describe the generic interval \(\widetilde{A}_{f,j}\), into \((a_{f,j},c_{f,j})\) applying \(t^{-1}(\widetilde{x}_f)\). In the case of triangular interval-valued fuzzy sets like those used in Sanz et al. (2010, 2011, 2013, 2015), five parameters are needed for describing the granules: two for the lower and upper bounds of the lower membership function, two for the lower and upper bounds of the upper membership function, and one for the core. The transformed partition \(P_f\) can be obtained by transforming the five parameters applying \(t^{-1}(\widetilde{x}_f)\). As regards the type-2 fuzzy sets, the transformation of the partitions using the piecewise function is not trivial and requires additional studies, especially for the parameters of the secondary membership functions. On the other hand, recently, in the framework of GRBCs, interval-valued fuzzy sets are considered the most suitable alternative to type-2 fuzzy sets.

When we reduce the granularity, to maintain the original shape of the granules, we apply \(t^{-1}(\widetilde{x}_f)\) for \(j = 2,\ldots , T_f-1\), where \(T_f \ge 3\) is the actual granularity, only to the parameters which describe the granule. In the case of fuzzy sets, we apply the transformation only to the three points \((\widetilde{a}_{f,j},\widetilde{b}_{f,j},\widetilde{c}_{f,j})\), which describe the generic fuzzy set \(\widetilde{A}_{f,j}\). Figure 2 shows an example of this transformation for granularity \(T_f = 5\) using the piecewise linear transformation in Fig. 1, defined with granularity \(T_{\mathrm{max}}\) = 7. In the case of intervals, we apply the transformation only to the two endpoints \((\widetilde{a}_{f,j},\widetilde{c}_{f,j})\), which describe the generic interval \(\widetilde{A}_{f,j}\).

3.3 Chromosome coding

As shown in Fig. 3, each solution is codified by a chromosome C composed of three parts \((C_R,C_G,C_T)\), which define the rule base, the number of granules, and the positions of the representatives of the granules in the transformed space, respectively.

In particular, \(C_R\) contains, for each rule \(R_m\), the index \(j_{m,f}\) of the antecedent, for each input variable \(X_f\), and the consequent class \(C_{j_{m}}\). Thus, \(C_R\) is composed by \(M\cdot (F+1)\) natural numbers where M is the number of rules currently present in the virtual RB. The RB (defined as concrete RB) used to compute the fitness is obtained by means of the RB mapping strategy using the actual granularities fixed by \(C_G\). We assume that at most \(M_{\mathrm{max}}\) rules can be contained in the RB.

\(C_G\) is a vector containing F natural numbers: the fth element of the vector contains the number \(T_f \in \left[ 2,T_{\mathrm{max}}\right] \) of granules, which partition variable \(X_f\). \(T_{\mathrm{max}}\) is fixed by the user and is the same for all the variables.

\(C_T\) is a vector containing F vectors of \(T_{\mathrm{max}}-2\) real numbers: the fth vector \(\left[ b_{f,2},\ldots ,b_{f,T_{\mathrm{max}}-1}\right] \) determines where the granule representatives are moved and consequently the piecewise linear transformation.

To preclude that the piecewise linear transformation can become decreasing, we force \(b_{f,j}\) to vary in \(\left[ \widetilde{b}_{f,j}-\frac{\widetilde{b}_{f,j}-\widetilde{b}_{f,j-1}}{2},\widetilde{b}_{f,j}+\frac{\widetilde{b}_{f,j}-\widetilde{b}_{f,j-1}}{2}\right] \), \(\forall j \in \left[ 2,T_{\mathrm{max}}-1\right] \).

3.4 Mating operators

To generate the offspring populations, we exploit both crossover and mutation. We apply separately the one-point crossover to \(C_R\) and \(C_G\) and the BLX-\(\alpha \)-crossover, with \(\alpha \) = 0.5 to \(C_T\). Let \(s_1\) and \(s_2\) be two selected parent chromosomes. The common gene for \(C_G\) is extracted randomly in [1, F]. The common gene for \(C_R\) is selected by extracting randomly a number in \([1,\rho _{\mathrm{min}}-1]\), where \(\rho _{\mathrm{min}}\) is the minimum number of rules in \(s_1\) and \(s_2\). The crossover point is always chosen between two rules and not within a rule. When we apply the one-point crossover to \(C_R\), we can generate GRBCs with one or more pairs of equal rules. In this case, we simply eliminate one of the rules from each pair. This allows us to reduce the total number of rules.

As regards mutation, we apply two mutation operators for \(C_R\). The first operator adds \(\gamma \) rules to the virtual RB, where \(\gamma \) is randomly chosen in \([1,\gamma _{\mathrm{max}}\)]. The upper bound \(\gamma _{\mathrm{max}}\) is fixed by the user. If \(\gamma +M > M_{\mathrm{max}}\), then \(\gamma =M_{\mathrm{max}}-M\). For each rule \(R_m\) added to the chromosome, we generate a random number \(v\in [1,F]\), which indicates the number of input variables used in the antecedent of the rule. Then, we generate v natural random numbers between 1 and F to determine the input variables, which compose the antecedent part of the rule. Finally, for each selected input variable f, we generate a random natural number \(j_{m,f}\) between 0 and \(T_{\mathrm{max}}\), which determines the granule \(A_{f,j_{m,f}}\) to be used in the antecedent of rule \(R_m\) in the virtual RB. To select the consequent, a random number between 1 and the number K of classes is generated.

The second mutation operator randomly changes \(\delta \) propositions of the virtual RB. The number \(\delta \) is randomly generated in \([1,\delta _{\mathrm{max}}]\). The upper bound \(\delta _{\mathrm{max}}\) is fixed by the user. For each element to be modified, a number is randomly generated in \([0,T_{\mathrm{max}}]\).

The mutation applied to \(C_G\) randomly chooses a gene \(f\in [1,F]\) and changes the value of this gene by randomly adding or subtracting 1. If the new value is lower than 2 or larger than \(T_{\mathrm{max}}\), then the mutation is not applied.

The mutation applied to \(C_T\) first chooses randomly a variable \(X_f\), then extracts a random value \(j\in [2,T_{\mathrm{max}}-1]\) and changes the value of \(b_{f,j}\) to a random value in the allowed interval. We experimentally verified that these mating operators ensure a good balancing between exploration and exploitation, thus allowing the MOEA described in the next subsection to create good approximations of the Pareto fronts.

3.5 Multi-objective evolutionary algorithm

As MOEA we use the (2+2)M-PAES that has been successfully employed in our previous works (Antonelli et al. 2011a, b, 2012, 2014). Each chromosome is associated with a bi-dimensional objective vector. The first element of the vector measures the complexity of the granular rule-based system as TRL, that is the number of propositions used in the antecedents of the rules contained in the concrete RB (the number of rules may be different between the virtual and concrete RBs). The second element assesses the accuracy in terms of classification rate.

(2+2)M-PAES, which is a modified version of the well-known (2+2)PAES introduced in Knowles and Corne (2000), is a steady-state multi-objective evolutionary algorithm which uses two current solutions \(s_1\) and \(s_2\) and stores the non-dominated solutions in an archive. Unlike the classical (2+2)PAES, which maintains the current solutions until they are not replaced by solutions with particular characteristics, we randomly extract, at each iteration, the current solutions. If the archive contains a unique solution, \(s_1\) and \(s_2\) correspond to this unique solution.

At the beginning, the archive is initialized as an empty structure and two initial current solutions \(s_1\) and \(s_2\) are randomly generated. At each iteration, the application of crossover and mutation operators produces two new candidate solutions, \(o_1\) and \(o_2\), from the current solutions \(s_1\) and \(s_2\). These candidate solutions are added to the archive only if they are dominated by no solution contained in the archive; possible solutions in the archive dominated by the candidate solutions are removed. Typically, the size of the archive is fixed at the beginning of the execution of the (2+2)M-PAES. In this case, when the archive is full and a new solution \(o_i\), where \(i=1,2\), has to be added to the archive, if it dominates no solution in the archive, then we insert \(o_i\) into the archive and remove the solution (possibly \(o_i\) itself) that belongs to the region with the highest crowding degree. The crowding degree is calculated using an adaptive grid defined on the objective space. If the region contains more than one solution, then the solution to be removed is randomly chosen. (2+2)M-PAES terminates after a given number Z of iterations. The candidate solution acceptance strategy generates an archive that contains only non-dominated solutions. On (2+2)M-PAES termination, the archive includes the set of solutions which are an approximation of the Pareto front.

We would like to point out that the chromosome coding, the mating operators and the MOEA described in this section are applied in the following to learn GRBCs when the granules are fuzzy sets and intervals, but they can also be used without any change for the other types of granules (type-2 and interval-valued fuzzy sets) generally adopted in rule-based classifiers.

4 Experimental results

In this section, we aim to show how learning number and/or parameters of information granules affects the accuracy and interpretability of GRBCs. We will discuss the two types of granules investigated in this paper separately. The analysis has been carried out by executing (2+2)M-PAES in four different modalities. First, we have executed (2+2)M-PAES for learning only the rules using a uniform partition with \(T_f=5\), \(f=1, \ldots, F\), granules for each input variable. This value has proved to be the most effective in our previous works (Antonelli et al. 2009a, b). We have denoted this modality as PAES-R. In practice, we have used only the \(C_R\) part of chromosome C during the evolutionary process. Second, we have executed (2+2)M-PAES for learning the rules and the parameters of the granules, using an initial uniform partition with \(T_f=5\), \(f=1, \ldots, F\), granules for each input variable. We have denoted this modality as PAES-RT. In practice, we have used the \(C_R\) and \(C_T\) parts of chromosome C during the evolutionary process. Third, we have executed (2+2)M-PAES for learning the rules and the number of granules, using a uniform partition with \(T_{\mathrm{max}}=7\), \(f=1, \ldots , F\), granules for each input variable. This value has been suggested in Miller (1956). We have denoted this modality as PAES-RG. In practice, we have used the \(C_R\) and \(C_G\) parts of chromosome C during the evolutionary process. Fourth, we have executed (2+2)M-PAES for learning the rules, the number of granules and the parameters of the granules, using virtual uniform partitions with \(T_{\mathrm{max}}=7\), \(f=1, \ldots , F\), granules for each input variable. We have denoted this modality as PAES-RGT. In this last case, we have used the overall chromosome C during the evolutionary process. We decided to adopt these different modalities for evaluating the impact on accuracy and interpretability of each different level of granulation.

We aim to compare the different solutions on the Pareto fronts in the four different modalities, to evaluate how the different levels of granulation can affect the accuracy and the interpretability of the GRBCs. We executed the four modalities of (2+2)M-PAES on twenty-four classification datasets extracted from the KEEL repository.Footnote 1 As shown in Table 1, the datasets are characterized by different numbers of input variables (from 3 to 19), input/output instances (from 80 to 19,020) and classes (from 2 to 8). For the datasets CLE, HEP, MAM, and WIS, we removed the instances with missing values. The number of instances in the table refers to the datasets after the removing process. For each dataset, we performed a tenfold cross-validation and executed three trials for each fold with different seeds for the random function generator (30 trials in total). All the results presented in this section are obtained using the same folds for all the algorithms. Table 2 shows the parameters of (2+2)M-PAES used in the experiments.

Since several solutions can lie on the Pareto front approximations, typically only some representative solutions are considered in the comparison. In our previous papers (Alcalá et al. 2009; Antonelli et al. 2011b) and also in Gacto et al. (2010), for each fold and each trial, the Pareto front approximations of each algorithm are computed and the solutions are sorted in each approximation according to decreasing accuracies on the training set. Then, for each approximation, we select the first (the most accurate), the median and the last (the least accurate) solutions. We denote these solutions as FIRST, MEDIAN and LAST, respectively. Finally, for the three solutions, we compute the average values over all the folds and trials of the accuracy on both the training and the test sets, and of the TRL. On the one side, the three solutions allow us to graphically show the average trend of the Pareto front approximations obtained in the executions performed on the different folds. On the other side, we can analyze how these solutions are able to generalize when applied to the test set.

In the following, we discuss the results obtained by applying the four modalities of the (2+2)M-PAES execution on fuzzy sets and on intervals.

4.1 Fuzzy sets

Figures 4 and 5 show the FIRST, MEDIAN and LAST solutions obtained by the execution of PAES-R, PAES-RT, PAES-RG and PAES-RGT. In the figures, the x and y axes indicate the complexity calculated as TRL and the accuracy expressed in terms of classification rate. We can realize how the three solutions allow us to visualize the trend of the average Pareto front approximations. Further, by comparing the accuracies of the three solutions on the training and test sets, we can verify whether these solutions, especially the FIRST solution, suffer from overtraining. Indeed, the FIRST solution is in general the most prone to overtraining since it achieves the highest accuracy on the training set. We can observe from the plots that there exists some difference for all the three solutions between the classification rates obtained on the training set and the ones achieved on the test set. Thus, we can conclude that the decrease of performance between training and test sets does not occur only for the FIRST solution. In general, we observe that the fronts generated by PAES-R and PAES-RT are small and concentrated in an area of low TRL values. The fronts generated by PAES-RG and PAES-RGT are on average wider than the ones generated by PAES-R and PAES-RT and concentrated in an area with higher TRL values. This different distribution of the fronts is mainly due to the value of \(T_{\mathrm{max}}\) used for learning the number of granules during the evolutionary process. Indeed, we adopt \(T_{\mathrm{max}}=7\) for each input variable for PAES-RG and PAES-RGT, while we adopt \(T_f=5\) for each input variable for PAES-R and PAES-RT. A higher possible number of fuzzy sets in the partitions induces a higher number of rules. Indeed, since the rules can adopt more precise fuzzy sets at least for the most difficult attributes, they tend to be more specialized. Thus, a higher number of rules is needed to cover the dataset instances. On the other hand, this allows us to achieve in general higher accuracies. Anyway, this specialization occurs only for a limited number of attributes, which adopt a high value of granularity. The other attributes are characterized by a low value of granularity, thus highlighting the good characteristics of our granulation learning approach.

For the sake of fairness, we have executed the four algorithms using the same number of iterations. On the other hand, we have to consider that the granularity learning increases the search space and therefore would need a higher number of iterations. This is true, in particular, for PAES-RGT which has to cope with the highest search space.

Table 3 shows the numerical values for the FIRST solutions obtained by PAES-R, PAES-RT, PAES-RG and PAES-RGT. For the sake of brevity, we do not show the values of the MEDIAN and LAST solutions.

To statistically validate the results, we apply a non-parametric statistical test for multiple comparisons using all the datasets. First, we generate a distribution consisting of the mean values of the accuracies of the three solutions calculated on the test set. Then, we apply the Friedman test to compute a ranking among the distributions (Friedman 1937), and the Iman and Davenport test (Iman and Davenport 1980) to evaluate whether there exists a statistical difference among the distributions. If the Iman and Davenport p value is lower than the level of significance \(\alpha \) (in the experiments \(\alpha = 0.05\)), we can reject the null hypothesis and affirm that there exist statistical differences among the multiple distributions associated with each approach. Otherwise, no statistical difference exists. If there exists a statistical difference, we apply a post hoc procedure, namely the Holm test (Holm 1979). This test allows detecting effective statistical differences between the control approach, i.e., the one with the lowest Friedman rank, and the remaining approaches.

Table 4 shows the results of the statistical tests for the fuzzy set granules on the classification rate computed on the test set. We observe that for the FIRST solution the null hypothesis is rejected. The Holm post hoc procedure, executed using PAES-RGT as control algorithm, states that only PAES-RG is statistically equivalent to PAES-RGT. We can conclude that the learning of the number of granules allows generating solutions with higher accuracy. From Table 3 we can realize, however, that these solutions are obtained at the expense of a higher complexity. For the MEDIAN and LAST solutions, the null hypothesis is not rejected, thus stating that these solutions are statistically equivalent in terms of accuracy. However, the solutions generated by PAES-R and PAES-RT are typically characterized by a lower complexity, as we can derive from Figs. 4 and 5. Concluding, we can observe that the different levels of granulation actually affect the performance of the classifiers. When we learn the number of granules for each attribute and we learn the membership parameters together with the rules, we obtain the best performance in terms of accuracy, although with a higher complexity.

4.2 Intervals

Figures 6 and 7 show the FIRST, MEDIAN and LAST solutions obtained by the execution of PAES-R, PAES-RT, PAES-RG and PAES-RGT using intervals as granules.

As we have already observed with the fuzzy sets, the fronts generated by PAES-R and PAES-RT are small and concentrated in an area of low TRL values. The fronts generated by PAES-RG and PAES-RGT are on average wider than the ones generated by PAES-R and PAES-RT, and concentrated in an area with higher TRL values. This different distribution of the fronts can be explained using the same motivations advanced for the case of fuzzy sets.

Table 5 shows the numerical values for the FIRST solutions obtained by PAES-R, PAES-RT, PAES-RG and PAES-RGT. For the sake of brevity, we do not show the values of the MEDIAN and LAST solutions.

To statistically validate the results, we apply a non-parametric statistical test for multiple comparisons using all the datasets. Table 6 shows the results of the statistical tests for the interval granules on the classification rate computed on the test set. We observe that for the FIRST solution the null hypothesis is rejected. The Holm post hoc procedure, executed using PAES-RGT as control algorithm, states that PAES-RG is statistically equivalent to PAES-RGT. We can conclude that the learning of the number of granules allows generating solutions with higher accuracy. Also for the MEDIAN and LAST solutions, we have the same situation: The Holm post hoc procedure, executed using PAES-RGT as control algorithm, states that PAES-RG is statistically equivalent to PAES-RGT. The solutions generated by PAES-R and PAES-RT are, however, characterized by a lower complexity, as we can derive from Figs. 6 and 7. Concluding, we can again observe that by learning the number of granules for each attribute and the interval parameters together with the rules, we obtain the best performance in terms of accuracy, although with a higher complexity.

5 Conclusions

In this paper, we have dealt with the problem of developing accurate and easily comprehensible granular rule-based classifiers (GRBCs). The classification rules are learnt from data and describe the involved variables in terms of information granules, i.e., abstract entities that represent essential aspects of knowledge and system modeling. The considered GRBCs have been generated through a multi-objective evolutionary process, considering accuracy and interpretability as the objectives to be optimized. Information granules have been formalized in terms of sets (in particular, intervals) and fuzzy sets. With reference to well-known classification benchmark datasets, we have shown how granulation tuning (i.e., partition adaptation) and/or granulation learning (i.e., learning of the number of partition components) can effectively influence the classification performance and the interpretability of the GRBCs. In particular, we have observed that the best results in terms of accuracy are obtained when granulation tuning and learning are used simultaneously. On the other hand, the GRBCs so generated are characterized by a higher complexity in terms of total rule length and number of rules. This is basically due to the use of a maximum number of granules for each variable higher than the number of granules used when only granulation tuning is used. Although we have considered only intervals and fuzzy sets as granules in our experiments, we have highlighted throughout the paper that the approach can be easily employed for other types of granules such as type-2 and interval-valued fuzzy sets.

Notes

Available at http://sci2s.ugr.es/keel/datasets.php (Alcalá-Fdez et al. 2011).

References

Alcalá-Fdez J, Fernández A, Luengo J, Derrac J, García S, Sánchez L, Herrera F (2011) Keel data-mining software tool: data set repository, integration of algorithms and experimental analysis framework. Multiple Valued Logic Soft Comput 17(2–3):255–287

Alonso JM, Magdalena L, González-Rodríguez G (2009) Looking for a good fuzzy system interpretability index: an experimental approach. Int J Approx Reason 51(1):115–134

Antonelli M, Ducange P, Lazzerini B, Marcelloni F (2009a) Multi-objective evolutionary learning of granularity, membership function parameters and rules of Mamdani fuzzy systems. Evol Intell 2(1–2):21–37

Antonelli M, Ducange P, Lazzerini B, Marcelloni F (2009b) Learning concurrently partition granularities and rule bases of mamdani fuzzy systems in a multi-objective evolutionary framework. Int J Approx Reason 50(7):1066–1080

Antonelli M, Ducange P, Lazzerini B, Marcelloni F (2011a) Learning concurrently data and rule bases of Mamdani fuzzy rule-based systems by exploiting a novel interpretability index. Soft Comput 15(10):1981–1998

Antonelli M, Ducange P, Lazzerini B, Marcelloni F (2011b) Learning knowledge bases of multi-objective evolutionary fuzzy systems by simultaneously optimizing accuracy, complexity and partition integrity. Soft Comput 15(12):2335–2354

Antonelli M, Ducange P, Marcelloni F (2012) Genetic training instance selection in multi-objective evolutionary fuzzy systems: a co-evolutionary approach. IEEE Trans Fuzzy Syst 20(2):276–290

Antonelli M, Ducange P, Marcelloni F (2014) An experimental study on evolutionary fuzzy classifiers designed for managing imbalanced datasets. Neurocomputing 146:125–136

Botta A, Lazzerini B, Marcelloni F, Stefanescu DC (2009) Context adaptation of fuzzy systems through a multi-objective evolutionary approach based on a novel interpretability index. Soft Comput 13(5):437–449

Bustince Sola H, Fernandez J, Hagras H, Herrera F, Pagola M, Barrenechea E (2015) Interval type-2 fuzzy sets are generalization of interval-valued fuzzy sets: towards a wider view on their relationship. IEEE Trans Fuzzy Syst (in press)

Castillo O, Melin P (2012a) Optimization of type-2 fuzzy systems based on bio-inspired methods: a concise review. Inf Sci 205:1–19

Castillo O, Melin P (2012b) A review on the design and optimization of interval type-2 fuzzy controllers. Appl Soft Comput 12(4):1267–1278

Cococcioni M, Ducange P, Lazzerini B, Marcelloni F (2007) A Pareto-based multi-objective evolutionary approach to the identification of Mamdani fuzzy systems. Soft Comput 11(11):1013–1031

Cordon O, del Jesus MJ, Herrera F (1999) A proposal on reasoning methods in fuzzy rule-based classification systems. Int J Approx Reason 20(1):21–45

Ducange P, Marcelloni F (2011) Multi-objective evolutionary fuzzy systems. Lecture notes in computer science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol 6857 LNAI, pp 83–90

Fazzolari M, Alcalá R, Herrera F (2014) A multi-objective evolutionary method for learning granularities based on fuzzy discretization to improve the accuracy-complexity trade-off of fuzzy rule-based classification systems: D-mofarc algorithm. Appl Soft Comput 24:470–481

Friedman M (1937) The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J Am Stat Assoc 32(200):675–701

Gacto MJ, Alcalá R, Herrera F (2010) Integration of an index to preserve the semantic interpretability in the multiobjective evolutionary rule selection and tuning of linguistic fuzzy systems. IEEE Trans Fuzzy Syst 18(3):515–531

Gacto MJ, Alcalá R, Herrera F (2011) Interpretability of linguistic fuzzy rule-based systems: an overview of interpretability measures. Inf Sci 181(20):4340–4360

Gorzałczany MB (1987) A method of inference in approximate reasoning based on interval-valued fuzzy sets. Fuzzy Sets Syst 21(1):1–17

Guillaume S (2001) Designing fuzzy inference systems from data: an interpretability-oriented review. IEEE Trans Fuzzy Syst 9(3):426–443

Holm S (1979) A simple sequentially rejective multiple test procedure. Scand J Stat 6:65–70

Iman RL, Davenport JM (1980) Approximations of the critical region of the fbietkan statistic. Commun Stat 9(6):571–595

Ishibuchi H, Nakashima T, Nii M (2004) Classification and modeling with linguistic information granules: advanced approaches to linguistic data mining (advanced information processing). Springer, Secaucus

Klawonn F (2006) Reducing the number of parameters of a fuzzy system using scaling functions. Soft Comput 10(9):749–756

Knowles JD, Corne DW (2000) Approximating the nondominated front using the Pareto archived evolution strategy. Evol Comput 8(2):149–172

Liang Q, Mendel JM (2000) Interval type-2 fuzzy logic systems: theory and design. IEEE Trans Fuzzy Syst 8(5):535–550

Liu C, Miao D, Qian J (2014) On multi-granulation covering rough sets. Int J Approx Reason 55(6):1404–1418

Mencar C, Fanelli AM (2008) Interpretability constraints for fuzzy information granulation. Inf Sci 178(24):4585–4618

Mendel JM, John RIB (2002) Type-2 fuzzy sets made simple. IEEE Trans Fuzzy Syst 10(2):117–127

Miller GA (1956) The magical number seven, plus or minus two: some limits on our capacity for processing information. Psychol Rev 63(2):81–97. doi:10.1037/h0043158

Moore RE (1966) Interval analysis, vol 4. Prentice-Hall Englewood Cliffs

Pedrycz W (2015) From numeric models to granular system modeling. Fuzzy Inf Eng 7(1):1–13

Pedrycz W, Al-Hmouz R, Morfeq A, Balamash AS (2014) Building granular fuzzy decision support systems. Knowl Based Syst 58:3–10

Pedrycz W, Gomide F (2007) Fuzzy systems engineering: toward human-centric computing. Wiley, New York. doi:10.1002/9780470168967

Pedrycz W, Vukovich G (2002) Granular computing with shadowed sets. Int J Intell Syst 17(2):173–197

Pedrycz W, Succi G, Sillitti A, Joana I (2015) A general framework of information granules. Knowl Based Syst Data Descr

Pedrycz W (2013) Granular computing: analysis and design of intelligent systems. CRC Press

Rafael A, Ducange P, Herrera F, Lazzerini B, Marcelloni F (2009) A multiobjective evolutionary approach to concurrently learn rule and data bases of linguistic fuzzy-rule-based systems. IEEE Trans Fuzzy Syst 17(5):1106–1122

Sanz JA, Bernardo D, Herrera F, Bustince H, Hagras H (2015) A compact evolutionary interval-valued fuzzy rule-based classification system for the modeling and prediction of real-world financial applications with imbalanced data. IEEE Trans Fuzzy Syst 23(4):973–990

Sanz JA, Fernandez A, Bustince H, Herrera F (2013) Ivturs: a linguistic fuzzy rule-based classification system based on a new interval-valued fuzzy reasoning method with tuning and rule selection. IEEE Trans Fuzzy Syst 21(3):399–411

Sanz JA, Fernánde A, Bustince H, Herrera F (2010) Improving the performance of fuzzy rule-based classification systems with interval-valued fuzzy sets and genetic amplitude tuning. Inf Sci 180(19):3674–3685

Sanz J, Fernández A, Bustince H, Herrera F (2011) A genetic tuning to improve the performance of fuzzy rule-based classification systems with interval-valued fuzzy sets: degree of ignorance and lateral position. Int J Approx Reason 52(6):751–766

Valente de Oliveira J (1999) Semantic constraints for membership function optimization. IEEE Trans Syst Man Cybern Part A Syst Hum 29(1):128–138

Villar P, Fernandez A, Carrasco RA, Herrera F (2012) Feature selection and granularity learning in genetic fuzzy rule-based classification systems for highly imbalanced data-sets. Int J Uncert Fuzziness Knowl Based Syst 20(03):369–397

Wu D, Mendel JM (2009) A comparative study of ranking methods, similarity measures and uncertainty measures for interval type-2 fuzzy sets. Inf Sci 179(8):1169–1192

Yao JT, Vasilakos AV, Pedrycz W (2013) Granular computing: perspectives and challenges. IEEE Trans Cybern 43(6): 1977–1989

Yao Y (2009) Interval sets and interval-set algebras. In: ICCI’09. 8th IEEE international conference on cognitive informatics. IEEE, pp 307–314

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353

Zadeh LA (1997) Toward a theory of fuzzy information granulation and its centrality in human reasoning and fuzzy logic. Fuzzy Sets Syst 90(2):111–127

Zhou SM, Gan JQ (2008) Low-level interpretability and high-level interpretability: a unified view of data-driven interpretable fuzzy system modelling. Fuzzy Sets Syst 159(23):3091–3131

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Antonelli, M., Ducange, P., Lazzerini, B. et al. Multi-objective evolutionary design of granular rule-based classifiers. Granul. Comput. 1, 37–58 (2016). https://doi.org/10.1007/s41066-015-0004-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41066-015-0004-z