Abstract

Objective

A significant body of work has now amassed investigating the interaction between facial cues of sex and emotional expression. For instance, studies have found that male/more masculine faces are perceived more easily as angry, while female/more feminine faces are perceived more easily as happy. Two key mechanisms have been proposed to explain this interaction: a visual-structural account, where the interaction emerges due to physical overlap between facial cues of sex and emotion, and a stereotype-based account, where the interaction is driven by associations between men and women and particular emotions. Previous work often remains silent in regard to the mechanism/s underlying this interaction. This article aims to provide an up-to-date review of the literature, as this may provide insight into whether facial structure, stereotypes, or a combination of both mechanisms explains the interaction between sex and emotion in face perception.

Method

The review brings together research on the interaction between emotional expression and sex cues using a range of different methods.

Results

The existing literature suggests that unique influences of both structural overlap and stereotypes can be observed in circumstances where the influence of one mechanism is reduced or controlled. Studies sensitive to detecting both mechanisms have provided evidence that both can concurrently act to contribute to the interaction between sex and emotion.

Conclusion

These results are consistent with a role of selection in the physical appearance of facial signals of sex and particular emotional expressions and/or the cognitive structures involved in recognizing sex and emotion.

The human face is a rich source of social information. Facial structure and texture are quickly and effortlessly processed to recognize sex, age, and ethnicity (e.g., Ito and Urland 2003; Kubota and Ito 2007; Wiese et al. 2008). Given that humans are fundamentally a social species and that facial information is important in social interactions, it would follow that humans have evolved specialized face processing mechanisms. Indeed, social judgements based solely on facial information are made within 100 ms of viewing a face (Willis and Todorov 2006), and are often consistent across different individuals (Hehman et al. 2015), perhaps indicating that humans have evolved to attend to and efficiently process facial information useful for reproduction and survival.

There are around 40 facial muscles dedicated to manipulating the form of the face. Observers can quickly and automatically interpret these movements as signals of a person’s emotional states and intentions (Tracy and Robins 2008). Early models of face processing proposed that cues that remain stable from moment to moment (like sex) and cues that change from moment to moment (like facial expressions) were processed independently (Bruce and Young 1986). In this early conceptualization of face processing, sex cues present on a face should not influence recognition of speech or emotional expression or vice versa. However, subsequent empirical work has demonstrated that facial sex and emotion cues do interact to influence interpretation of the other. For example, work investigating cue interference (using Garner and dual task paradigms) provides a mix of evidence to suggest that the sex of a face is processed obligatorily and influences recognition of facial emotional expression (Atkinson et al. 2005; García-Gutiérrez et al. 2017; Karnadewi and Lipp 2011), and that facial emotional expressions may be processed obligatorily to influence processing of the sex of the face (Becker 2017). Studies investigating visual after-effects have provided further evidence for the interaction between sex and emotion when processing faces (Bestelmeyer et al. 2010; Harris and Ciaramitaro 2016; Pallett and Meng 2013).

When it comes to identifying the mechanism underlying this interaction between sex and emotion in face processing, two key mechanisms have been proposed. The first is a structural account, where the interaction emerges due to physical overlap between visual cues of sex and emotion (e.g., see Becker et al. 2007; Hess et al. 2009b). The second account proposes that the interaction between sex and emotion in face perception emerges due to the overlap between broadly held stereotypes or evaluative associations relating to men and women, and particular emotions (e.g., see Hugenberg and Sczesny 2006; Craig et al. 2017; Bijlstra et al. 2010). Evolutionarily, such a distinction is important as it provides insight into the cognitive structure that has evolved in interpreting sex and emotion from faces or even the co-evolution of the appearance of signals of sex and particular emotional expressions.

While a significant body of work has now amassed investigating the interaction between sex and emotional expression using a range of methods, this work often remains silent in regard to the mechanism/s underlying any Sex × Emotion interaction observed. As such, the aim of this article is to provide an up-to-date review, bringing together literature investigating the interaction between facial emotional expression and sex cues. First, we will describe structural and stereotype-based accounts. Second, we will summarize previous research investigating the interaction between sex and emotion using a range of different methodological approaches measuring behavior, physiology, and neural activity tapping into recognition of sex and emotion, as well as other related processes such as attention and face memory. Drawing together the broader literature may help to determine the mechanism/s underlying the Sex × Emotion interaction that has been observed across different contexts. A table summarizing the included literature investigating the interaction between sex cues and emotion is provided in the online supplementary materials.

Accounts for the Interaction between Sex and Emotion

Visual-Structural Account

Sexual dimorphism in a species (i.e., differences between males and females) can evolve when males and females face sex-specific evolutionary pressures. Often, this is due to sexual selection. In most species, males and females differ in the minimal investment required to produce offspring; as such, it is more evolutionarily advantageous for males and females to invest in differing mating strategies (Trivers 1972). Often, this can also lead to evolved differences in physical characteristics between the sexes (Larsen 2003), such as differences in body size or ornamentation (e.g., the peacock’s tail). As in any other species, we can expect sexual dimorphism to evolve in humans. While sexual dimorphism in humans exists in many physical characteristics, such as differences in overall muscle mass (Miller et al. 1993), sexual dimorphism in faces (i.e., the masculinity of men’s faces and the femininity of women’s faces) is of particular interest given the importance of faces in human social interaction (Perrett et al. 1998; Stirrat and Perrett 2010; Watkins et al. 2010).

For men, where the minimal investment in producing offspring is low, it is potentially more evolutionarily advantageous to invest in mating effort over parental effort (Trivers 1972). As such, a key evolutionary pressure for men is being intra-sexually competitive, either in having access to and/or control of resources, or access to and/or control of mates directly (Buss 1988). Therefore, facial traits that would have been beneficial in physical confrontations with other men would have been selected for. Typically masculine traits, such as a large jaw or deep-set brows, are advantageous in a fight, as these traits are better suited for bracing physical blows and protecting important areas of the face (e.g., eyes, nose). Exaggerated masculine facial traits, such as an elongated chin, lower forehead, and thicker eyebrows, can also act as a signal of formidability or fighting ability, dissuading would-be competitors. Indeed, facial masculinity, or traits thought to be associated with facial masculinity (e.g., facial width-to-height ratio; fWHR), have been associated with formidability or fighting prowess. fWHR has been reported to be associated with actual fighting performance in professional mixed martial arts fighters (Třebický et al. 2015; Zilioli et al. 2015), and a lower probability of being killed in a violent physical encounter (Stirrat et al. 2012). Facial sexual dimorphism in young men also predicts actual strength and perceived masculinity (Windhager et al. 2011). Men with masculine traits are also perceived as more aggressive, and are estimated to have higher fighting ability (Sell et al. 2009; Třebický et al. 2013).

On the other hand, for women, the minimal investment for producing offspring is much more costly (Trivers 1972), requiring around 40 weeks of gestation plus post-natal care. As such, a key evolutionary pressure faced by women is to attract and keep high quality men who will contribute to child rearing. Therefore, women may have evolved facial traits that exaggerate cues associated with fertility and future reproductive potential; in particular, those of youth and health. Typically feminine features, such as large facial features (particularly large eyes relative to overall face size), are associated with neoteny and youth (Jones and Hill 1993). These are traits thought to be associated with fertility and future reproductive potential (Bovet et al. 2018; Pflüger et al. 2012), as well as self-reported and objective health (Gray and Boothroyd 2012; Rhodes et al. 2007). Indeed, men generally find feminine features attractive (Perrett et al. 1998).

Just as sexually dimorphic facial features can be used to infer a person’s sex, combinations of facial movements are interpreted to infer emotional states. For example, the presence of the lip corners pulled back and the cheeks raised is interpreted as happiness, and lowered brows with wide eyes and pursed lips, as anger (Ekman et al. 2002). These emotions are recognized with above chance accuracy across the world, including by observers with limited exposure to people of other cultural backgrounds (Ekman et al. 1969; Ekman and Friesen 1971). This evidence has been taken to support the idea that the general appearance of a set of ‘basic’ emotional expressions has evolved (Ekman 1992), though cultural accents and display rules can influence the precise appearance and use of these expressions (Elfenbein and Ambady 2002; Elfenbein 2013; Marsh et al. 2003). It should be noted that there is also evidence disputing the cultural universality of emotional expression (Barrett 2006; Jack et al. 2012). Darwin (1872) proposed that the form and movements of emotional expressions in humans and other animals evolved through a number of routes. Some expressions represent actions that have been functional for survival in our ancestral past (though they may no longer serve a function). For example, the widening of the eyes in fear may allow the expresser to take in more visual information, and the baring of the teeth in anger is a preparation for a biting attack. Other expressions are movements opposite to those of another signal, used to communicate the opposite meaning (e.g., a dog crouching in submission opposing an erect stance of dominance), and others are actions of the nervous system.

As can be seen from these two separate literatures, the visual-structural cues involved in signalling sex and those signalling emotions overlap. For example, larger eye size is associated with femininity and also expressions of fear and surprise. The brow, lips, and jaw are important facial features for communicating anger, and are also related to masculinity (Adams Jr. et al. 2015; Becker et al. 2007; Marsh et al. 2005). This has led some researchers to propose that facial sex cues can influence emotion recognition and vice versa due to overlap between structural cues of sex and particular expressions (Becker et al. 2007; Hess et al. 2009b). Building on the earlier work of Darwin, researchers have also proposed that certain emotional expressions may have evolved to mimic or enhance evolutionarily relevant social signalling. They propose that the widening of the eyes in fear aims to mimic juvenile facial cues in order to signal submission. The narrowing of the eyes and the pursing of the lips in anger mimics the structure of more mature faces to signal strength and dominance (Marsh et al. 2007; Sacco and Hugenberg 2009). Although framed in regard to cues of youth and maturity, these signals are also those that co-vary with sex (Adams Jr. et al. 2015; Jones and Hill 1993; Tay 2015). Within this perspective, observed interactions between sex and emotion in behavior are attributed to facial structure; for instance, the faster recognition of anger on a male face is due to shared structural cues strengthening the anger signal and facilitating recognition of this expression.

Socio-Cognitive Account

Another perspective proposes that commonly held stereotypes (informational associations) or evaluations (valence-based associations) regarding the traits and behaviors of women and men influence processing of sex and emotion from the face. Adult women are stereotyped as more caring and submissive, and men as stronger and more agentic (Prentice and Carranza 2002). Women are also stereotyped as generally experiencing and expressing emotions more intensely than men, and in particular are expected to express more happiness, sadness, and fear, and men more anger (Fabes and Martin 1991; Plant et al. 2000). When expressions are congruent with gender-based stereotypes, this should facilitate recognition of a particular sex or emotion compared to when stereotypes and emotional expressions are incongruent. For example, the stereotype that women are more likely to experience happiness and men more likely to experience and express anger could lead to faster recognition of anger on male than on female faces. Women and men also differ in how they are implicitly evaluated, with women evaluated as more pleasant than men by both male and female observers (Eagly et al. 1991). If the interaction between sex and emotion is driven by implicit evaluations, observers may recognize happiness more quickly than anger on female faces as the relatively positive evaluation of females facilitates recognition of the evaluatively congruent happy expression (Hugenberg and Sczesny 2006; Craig et al. 2017).

Sex and Emotion Interactions in Social Perception

The Influence of Sex on Emotion Perception

The majority of studies investigating the interaction between sex and emotion have investigated how the sex of the face or sex-related cues influence emotion perception. Most studies in this area present participants with faces varying in sex (male and female) and emotion (usually happy and angry, but other combinations of expressions have also been investigated) and ask them to categorize these faces by their expression as quickly and accurately as possible. Using this method, studies find that participants are faster and/or more accurate at categorizing happiness on female faces compared to male faces (Aguado et al. 2009; Becker et al. 2007; Stebbins and Vanous 2015; Tipples 2019) and at categorizing anger on male compared to female faces (Aguado et al. 2009; Becker et al. 2007; Le Gal and Bruce 2002; Pixton 2011; Smith et al. 2017; Tipples 2019; Taylor 2017). Other studies have used the same method, but follow up significant Sex × Emotion interactions by considering response time differences between the two emotional expressions for each sex. These studies have found faster recognition of happiness than anger (and other negative emotions like fear and sadness) on female faces, but not male faces (Bijlstra et al. 2010; Craig and Lipp 2017, 2018; Craig et al. 2017; Hugdahl et al. 1993; Hugenberg and Sczesny 2006; Lipp et al. 2015). While relatively faster recognition of happiness on female faces and anger on male faces is consistent with both the stereotype and structural accounts, similar interaction patterns in studies using other negative emotional expressions (like sadness), which are not structurally associated with facial masculinity/femininity, perhaps suggest a more central role of gender-based evaluations in the resulting interaction patterns. Evidence from timed categorization tasks suggests that the relatively positive evaluation of women compared to men drives the influence of facial sex on emotional expression recognition in these tasks (Bijlstra et al. 2010; Craig and Lipp 2017, 2018; Hugenberg and Sczesny 2006).
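
The follow-up comparison described above (response time differences between the two expressions within each face sex) can be illustrated with a minimal sketch. The function name, data layout, and response times below are hypothetical and made up for illustration; they are not taken from any of the cited studies, which typically test the interaction with analysis of variance rather than a bare contrast of cell means.

```python
from statistics import mean


def interaction_contrast(rts):
    """Summarize a 2 x 2 Sex x Emotion design from response times (ms).

    `rts` maps (sex, emotion) -> list of response times. This layout is an
    assumption made for this sketch.
    """
    cell = {condition: mean(values) for condition, values in rts.items()}
    # "Happy advantage" within each face sex: angry RT minus happy RT
    female_adv = cell[("female", "angry")] - cell[("female", "happy")]
    male_adv = cell[("male", "angry")] - cell[("male", "happy")]
    # A non-zero difference between the two advantages indicates an interaction
    return female_adv, male_adv, female_adv - male_adv


# Made-up values for illustration only
example_rts = {
    ("female", "happy"): [520, 540, 530],
    ("female", "angry"): [590, 610, 600],
    ("male", "happy"): [560, 570, 580],
    ("male", "angry"): [575, 565, 585],
}
female_adv, male_adv, contrast = interaction_contrast(example_rts)
print(female_adv, male_adv, contrast)
```

In this toy data set the happy advantage is large for female faces and close to zero for male faces, which is the qualitative pattern the categorization studies report.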

Another approach to investigating the influence of sex on emotion recognition involves presenting faces morphed between two expressions. In these tasks, participants are asked to indicate when they first detect the onset or offset of a particular expression in videos of faces morphing from one expression to another, or to label ambiguous morphed expressions on male and female faces. Inzlicht et al. (2008) presented participants with computer generated male and female faces morphing between anger and happiness and found that participants were slower to detect the offset of anger on male than on female faces. Parmley and Cunningham (2014) found that female participants were faster to detect sadness on female than male faces morphing from a neutral to a sad expression. Participants judging the emotion present on still photographs taken from points along an angry-neutral-happy continuum indicated that male face morphs needed significantly more of the happy face incorporated into the morph to be perceived as neutral when compared to the true neutral face (Harris et al. 2016). These studies, again, find biases in perceiving particular sex-emotion combinations, with an angry advantage for male faces, and sad and happy advantages for female faces. Finding that anger is perceived more readily on male faces and that more happiness is required for a male face to appear neutral could be due to the participants’ stereotyped pairing of males and anger, or because the structure of the male face is more similar to anger, making detection of anger easier and requiring a stronger happy signal to make the face appear neutral rather than slightly angry.

Rather than focusing on the speed and accuracy of emotion recognition, other studies have measured participants’ self-report ratings of emotional male and female faces. When participants rated angry male and female faces in the context of a work-based conflict scenario, raters perceived angry males as experiencing more anger than angry females (Algoe et al. 2000). These findings were interpreted in line with the stereotype account, though it is possible that structural overlap of sex cues and expression may also have played a role.

Perception of emotion in neutral faces can also indicate particular combinations of sex and emotion that overlap in structure or stereotypes. Adams Jr. et al. (2012) found that neutral female faces were rated as appearing less angry and more fearful, sad, and joyful than male faces. Mignault and Chaudhuri (2003) also reported that participants were more likely to perceive happiness on neutral female faces and anger on neutral male faces. Other studies investigating social categorization with neutral faces have found that ratings of masculinity are correlated with perception of anger (Tskhay and Rule 2015; Young et al. 2018). Although some of these results could be explained under both structural and stereotype-based accounts, finding that structural masculinization facilitates the perception of anger in neutral faces perhaps implicates a role of facial structure over stereotypes in the interaction between sex and emotion in face processing.

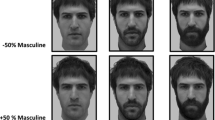

The Influence of Cues Associated with Sex on Emotion Perception

Another approach to investigating the interaction between sex and emotion has used digitally manipulated or computer generated faces to investigate the influence of manipulating facial structure and surrounding cues (e.g., hair, clothes) on the interaction between sex and emotion. For example, Becker et al. (2007) presented participants with six computer generated androgynous prototypes with neutral expressions. Each face prototype was presented in a range of contexts, including attached to a body wearing male or female clothes. Faces were also digitally altered to have a more square or round jaw, or with the brow ridge lowered or raised. Wearing male or female clothes increased the masculinity/femininity ratings of the faces but did not increase ratings of anger/happiness, whereas making the face rounder led participants to rate the faces as happier and more feminine. In another experiment, Becker et al. (2007) presented participants with pairs of faces including one either structurally masculinized or feminized face and one unaltered face with a neutral expression. Participants chose which of the two faces was more happy or angry. On trials where the masculinized face was presented with the unaltered face, it was always judged the angrier of the two. These particular findings provide evidence that facial structure contributed to the interaction between sex cues and emotion, as manipulation of gendered facial structure, but not of external cues of gender, influenced perception of emotion.

In another study aiming to more closely match the faces in structure by presenting the same faces with either male or female haircuts, participants recognized angry expressions more easily and rated them as more intense when expressed by an apparent female. Apparent men were rated as more often showing happiness and surprise (Hess et al. 2004). Steephen et al. (2018) also found that androgynous faces with male haircuts were perceived as happier than the same faces with female haircuts. Initially, this pattern is perhaps opposite to what we would predict given the stereotype account; however, as facial structure was matched in these studies, it was proposed that violating the expectation that women should be happy (and men angry) accounted for this role of face sex on emotion perception. As such, this finding is interpreted as support for the role of stereotypes in the absence of structure on the interaction between sex and emotion. Here, it is interesting to note that the influence of stereotypes on emotion perception was in the opposite direction to that typically observed (apparent males were perceived as happier rather than angrier). These findings demonstrate that expectations (stereotypes about gender) do influence emotion perception; however, given the direction of the effect observed, these results do not support the idea that activation of the male stereotype facilitates anger recognition.

Other studies have investigated the influence of specific cues related to sex on emotion perception. While these studies do not directly compare emotion perception on male and female faces, the findings are informative in the broader literature regarding the interaction between sex and emotion. For example, beards consistently enhance ratings of masculinity and dominance, as well as age in male faces (Dixson and Brooks 2013; Dixson and Vasey 2012). Beards enhance judgments of male facial masculinity, dominance, and aggressiveness compared to clean-shaven faces by augmenting the apparent size of underlying masculine facial features (Mefodeva et al. 2020; Sherlock et al. 2017), particularly the size of the jaw (Dixson et al. 2017). In a recent study, Craig et al. (2019) looked at the influence of beards on emotion recognition. Participants were faster to recognize angry expressions on bearded than clean-shaven faces. A subsequent experiment measuring participants’ explicit perceptions of the faces revealed that the presence of a beard enhanced the perceived aggressiveness of angry faces, but also enhanced the perceived prosociality of happy faces. The self-reported stereotypes were inconsistent with the response time patterns, suggesting that the influence of facial hair was more likely to be due to the beard augmenting facial features (such as the jaw area), resulting in facilitated recognition of anger. This evidence was taken to suggest that apparent facial structure rather than stereotypes accounted for the influence of sex cues on emotion recognition.

Related studies have investigated the influence of manipulating facial dominance on emotion perception. Masculinity is associated with dominance (Senior et al. 1999) and so too is the expression of anger (Montepare and Dobish 2003; Senior et al. 1999; Zebrowitz and Montepare 2008). Previous theoretical work on the interaction between sex and emotion has proposed that it is the intersection between the structure and social meaning of facial dominance and particular expressions that drives the interaction between sex and emotion (Adams Jr. et al. 2015). In support of this, Hess et al. (2009a) presented participants with an oddball task. Participants had to detect infrequently presented neutral faces (oddballs) amongst more frequently presented emotional faces. When presented with angry faces, participants were faster to detect oddballs that were rated as high in affiliation than those rated high in dominance, whereas in happiness blocks, participants were faster to detect dominant than affiliative oddballs.

Similarly, a high fWHR, thought to be associated with masculinity (Senior et al. 1999), is interpreted by perceivers as a signal of dominance and aggression (Alrajih and Ward 2014; Mileva et al. 2014). Deska et al. (2018) found that participants recognized anger more easily on (both male and female) faces with a high fWHR. Though evidence is mixed as to whether fWHR varies systematically between males and females (Geniole et al. 2015; Kramer et al. 2012), a meta-analysis suggests that if it does, the effect size is small (Geniole et al. 2015). Also, fWHR differences within men are unlikely to be dependent on androgens, further casting doubt as to whether fWHR is under sexual selection (Dixson 2018). Finding that fWHR increases the ease of anger perception suggests that cues either directly or indirectly associated with masculinity and those that increase perceived dominance facilitate recognition of anger.
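
For readers unfamiliar with the measure, a minimal sketch of how an fWHR value might be computed from facial landmarks follows. It assumes one common operationalization (bizygomatic width divided by upper-face height, measured from the brow to the upper lip); the studies cited above may define their landmarks differently, and the function name, landmark names, and coordinates here are hypothetical.

```python
import math


def fwhr(left_zygion, right_zygion, brow_mid, upper_lip_mid):
    """Return width / height from four (x, y) landmark coordinates in pixels.

    Landmark choice is an assumption of this sketch, not a definition taken
    from the cited studies.
    """
    width = math.dist(left_zygion, right_zygion)   # bizygomatic (cheekbone) width
    height = math.dist(brow_mid, upper_lip_mid)    # brow to upper lip
    if height == 0:
        raise ValueError("brow and upper-lip landmarks coincide")
    return width / height


# Made-up landmark coordinates for illustration only
ratio = fwhr((100, 200), (240, 200), (170, 160), (170, 235))
print(round(ratio, 2))  # 1.87
```

Because the ratio is dimensionless, it does not depend on image scale, but it is sensitive to head tilt and to which landmarks are chosen.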

Finding an influence of these structural/visual manipulations on perceived emotion suggests a possible role of facial structure in the interaction between sex and emotion. However, it is also possible that these manipulations alter the implicit stereotypes activated in response to the face. In the case of beardedness, participants’ explicit stereotypes about the faces suggested that a role of stereotypes is unlikely; however, manipulating face shape, dominance, or fWHR could also activate implicit associations. These may not be gender stereotypes per se, but they could be implicit associations relating to concepts like dominance or aggressiveness, and it could be these associations that facilitate recognition of particular emotions (rather than an influence of visual structural overlap alone).

The Influence of Emotion on Sex Perception

As in the area of emotion recognition, a number of studies have investigated the influence of emotion on sex recognition using timed categorization tasks, where participants categorize faces by their sex as quickly and accurately as possible. In these tasks, participants are generally faster (and/or more accurate) to label faces as ‘female’ when they are expressing happiness (Aguado et al. 2009; Bayet et al. 2015; Becker et al. 2007; Hess et al. 2009b; Lipp et al. 2015; Smith et al. 2017) and faster and/or more accurate to categorize male faces as ‘male’ when they have an angry expression (Aguado et al. 2009; Bayet et al. 2015; Becker et al. 2007; Le Gal and Bruce 2002; Smith et al. 2017; Švegar et al. 2018). Another study presented androgynous faces with various blends of happiness, fear and anger (Hess et al. 2009b). Faces with angry expressions were most likely to be labelled male. Faces presenting a blend of fear and happiness were most likely to be labelled female. Posing an emotional expression alters both the apparent structure of the face, and elicits particular sex stereotypes and associations; therefore, the influence of expression on perceived gender in these studies could be due to either stereotype based or structural mechanisms.

Another study manipulated the constituent features of an angry expression (e.g., lowered brow, enhanced chin) on computer generated male faces with a neutral expression (Sell et al. 2014), and measured how these manipulations influenced perception of physical strength, a trait related to masculinity (Windhager et al. 2011). These manipulations enhanced participants’ ratings of the perceived strength of the individuals when compared to faces altered in the opposite direction (Sell et al. 2014). Although the cues manipulated were derived from the facial movements involved in expressing anger, they are also features that signal masculinity (Adams Jr. et al. 2015; Becker et al. 2007; Marsh et al. 2005), suggesting a link between structural cues of anger, perceived formidability and masculinity.

Internal Representations Related to Sex and Emotion

Another approach to examining the overlap between sex and emotion in the face is to probe participants’ internal representations of faces. Becker et al. (2007) asked participants to imagine either an angry or a happy face, and found that participants were more likely to imagine an angry face as male. Other techniques, like reverse correlation, have also been used to tap into participants’ internal representations. Generally, the reverse correlation approach involves overlaying ambiguous face stimuli (for example, neutral faces, androgynous faces, or faces morphed between two expressions) with visual noise (for example, Gabor noise, which is similar to television static) that subtly alters the appearance of the face. Participants choose which face out of a pair best matches a given category (for example, selecting the face which appears most angry or happy, or most like a male or a female). After a large number of trials, averaging the visual noise patterns of the chosen faces generates a reflection of a participant’s internal representation of that category (see Brinkman et al. 2017).
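
The averaging step at the heart of reverse correlation can be sketched with a toy simulation. Everything here is an assumption made for illustration: the "face" is reduced to four pixels, the noise is simple Gaussian noise rather than the structured noise used in published work, each trial pairs a noise pattern with its inverse, and the simulated observer deterministically picks whichever pattern better matches a hypothetical internal template.

```python
import random


def classification_image(template, n_trials=500, seed=0):
    """Average the noise patterns chosen by a simulated observer.

    `template` stands in for the observer's internal representation
    (hypothetical; real templates are unknown and are what the method
    tries to estimate).
    """
    rng = random.Random(seed)
    n_pix = len(template)
    total = [0.0] * n_pix
    for _ in range(n_trials):
        noise = [rng.gauss(0.0, 1.0) for _ in range(n_pix)]
        # The observer sees base+noise and base-noise and picks the version
        # whose noise correlates better with the internal template.
        match = sum(t * x for t, x in zip(template, noise))
        chosen = noise if match >= 0 else [-x for x in noise]
        total = [s + c for s, c in zip(total, chosen)]
    # The mean of the chosen noise patterns is the classification image
    return [s / n_trials for s in total]


template = [1.0, 1.0, -1.0, -1.0]  # toy 4-pixel internal representation
ci = classification_image(template)
similarity = sum(t * c for t, c in zip(template, ci))
print(ci, similarity)
```

With enough trials, the averaged noise comes to resemble the template even though no single noise pattern does, which is why the resulting image is read as a reflection of the internal representation.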

Using this approach, Brooks et al. (2018) found that reverse correlated images constructed to represent the male category were rated by independent raters as angrier, and constructed female images were rated as appearing happier. Further, constructed images representing the angry category were rated as more masculine, and constructed happy images were rated as more feminine by independent raters. In the context of these experiments, participants are not responding to real faces. The averages generated purportedly reflect people’s internal representations of a given emotional expression or gender. As such, interactions between sex and emotion observed in the resulting averages were attributed to participants’ existing gender/emotion internal representations (stereotypes) rather than the structure of the faces in this study (Brooks et al. 2018). However, another consideration is that natural structural overlap between cues of sex and emotion could have influenced the judgements of sex/emotion provided by the independent raters.

Neural Activity during Social Perception

Research has also recorded brain activity while participants view faces varying in sex and emotion. Electroencephalography (EEG) has been adopted in a few studies as it is sensitive to the time course of processing faces. EEG involves recording electrical activity from the scalp while participants are presented with experimental stimuli. For example, participants are presented with many trials of each face type while electrical activity is recorded. Brain activity immediately after the presentation of the faces is averaged across trials and across electrodes at particular scalp locations to create signature patterns of activity called event related potentials (ERPs; Luck 2014). Particular ERP components are consistently observed in the presence of certain stimulus types or under certain task conditions. For example, in the context of face processing and emotion perception, the P1 is an early component involved in the early processing of visual stimuli (Woodman 2010), the N170 is a component involved in structural processing of faces (Bentin et al. 1996) and the LPC is a later component sensitive to evaluative congruity (Herring et al. 2011).
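
The trial-averaging step described above can be sketched as follows. This is a simplified illustration for a single electrode with made-up voltages; real pipelines also filter, baseline-correct, reject artifacts, and average across electrodes, none of which is shown. The function names and sample indices are hypothetical.

```python
def erp_average(epochs):
    """Average time-locked epochs (one list of voltages per trial) sample by sample."""
    n_trials = len(epochs)
    n_samples = len(epochs[0])
    if any(len(trial) != n_samples for trial in epochs):
        raise ValueError("all epochs must have the same number of samples")
    return [sum(trial[i] for trial in epochs) / n_trials for i in range(n_samples)]


def mean_amplitude(erp, start, stop):
    """Mean voltage of the averaged waveform in the sample window [start, stop)."""
    window = erp[start:stop]
    return sum(window) / len(window)


# Made-up microvolt values for three trials of one condition, one electrode
epochs = [
    [0, 2, -4, 1],
    [0, 4, -6, 1],
    [0, 3, -5, 1],
]
erp = erp_average(epochs)
component = mean_amplitude(erp, 1, 3)
print(erp, component)  # [0.0, 3.0, -5.0, 1.0] -1.0
```

A Sex × Emotion interaction in a component such as the N170 would then be tested by comparing such window amplitudes across the four face types.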

One study recording EEG activity while participants labelled the emotion on faces also varying in sex found an interaction between face sex and emotion in the N170 (Valdés-Conroy et al. 2014). A Sex × Emotion interaction in the N170 was also found by Liu et al. (2017) when participants judged emotional expressions on faces also varying in sex. Liu and colleagues also found a Sex × Emotion interaction at P1 and in the LPC when participants were tasked with judging the sex of the faces. However, other studies involving emotion categorization have not found a Sex × Emotion interaction at the N170 or P1, but have found an interaction at the LPC (Doi et al. 2010). Although findings are somewhat mixed, observing interactions between sex and emotion across multiple experiments in early visual structural components like the P1 and the N170 and in later evaluation related components like the LPC suggests that both early perceptual processes and later association based/evaluative processes may be involved, lending support to a role for both structure and stereotypes in the interaction between sex and emotion.

Functional Magnetic Resonance Imaging (fMRI) is another method of measuring neural activity. This technique has greater spatial resolution than EEG making it suitable for identifying specific brain regions or networks activated when viewing faces varying in sex and emotion. In studies using this technique, interactions between sex and emotion have been observed across a number of areas including the superior temporal gyrus, middle frontal gyrus, anterior insula, lateral occipital cortex, fusiform gyrus, dorsal anterior cingulate cortex, paracingulate gyrus, and thalamus (Kohn and Fernández 2017).

Some similar areas of activation were also identified in a recent study by Stolier and Freeman (2016). In this study, associations between social categories including race, sex, and emotion, were measured using an implicit association type task. These associations were correlated with brain activity as participants viewed computer generated faces varying in race, sex, and emotion. They found that the early visual cortex, right fusiform gyrus and orbital frontal cortex were brain areas with activation patterns that correlated with behavioral measures of the overlap between social categories. The areas identified include those involved in visual processing as well as conceptual knowledge (stereotypes). In their second study, only right frontal gyrus activity correlated with behaviourally measured conceptual associations when faces were more stringently matched on luminance and contrast, and visual similarity between faces of different types was statistically controlled for. These imaging results support the idea that interactions between sex and emotion occur in early visual processing, particularly when visual differences between the stimulus categories are not controlled for. A role of higher order conceptual knowledge (stereotypes) remains when visual differences are controlled for. In this study, however, neural activity was correlated with participants’ individual pattern of stereotypes measured in behaviour and race of the faces was also varied. While it does provide evidence for a role of both structure and stereotypes in social perception, it does not provide direct evidence that structure or stereotypes can account for facilitated perception of anger on male faces or happiness on female faces.

Other Evidence

Across the numerous studies described above, an association between facial masculinity and anger, and between femininity and happiness (as well as sadness, fear, and surprise in some cases) has been observed. However, an interaction between sex and emotion has not always been observed in ways that are consistent with either the structural or stereotype based explanations. For example, Rahman et al. (2004) found faster recognition of happy and sad expressions on male faces than on female faces expressing the same emotions. Trnka et al. (2007) presented participants with male and female faces expressing anger, contempt, disgust, fear, happiness, sadness, and surprise, and found that only fear was recognised more accurately on male faces compared to female faces. In another study, Wells et al. (2016) presented a range of expressions (happy, sad, angry, surprised, fearful, and disgusted, each at three different intensities) and found that participants were more accurate at recognising disgust on female than male faces, but more accurate at recognising happiness on male than female faces. These findings are inconsistent with the majority of evidence in this area, which finds facilitated recognition of anger on male faces and happiness on female faces. While it is possible that these are genuine effects arising from the particulars of the experimental method, they may also be spurious findings.

When faces vary by sex and emotion as well as an additional social dimension (e.g., race, attractiveness), the influence of sex on emotion recognition also becomes less consistent. For example, a number of studies have asked participants to categorize faces varying in race, sex, and emotional expression (Craig and Lipp 2018; Li and Tse 2016; Smith et al. 2017). These studies all found that the race and the sex of the faces influenced emotion recognition. Studies by Craig and Lipp (2018) and Li and Tse (2016) found the largest happy advantage for own-race female faces. Smith et al. (2017) found an anger advantage for other-race Black male faces (but not White male faces or female faces) and the largest happy advantage for other-race (Black) female faces. Using a different method, Marinetti et al. (2012) presented Chinese and European American participants with Asian and Caucasian male and female faces morphing between happiness and anger. European American participants were slower to indicate the offset of anger in the morph videos for female than for male Caucasian faces, but slower to indicate the offset of anger on male than on female Asian faces. Chinese participants were slower to indicate the offset of happiness on male than on female Caucasian faces but slower to indicate the offset of happiness on female than on male Asian faces. While there is some variability across studies, these studies suggest that a happy female bias is generally still observed when the race of the face is also manipulated.

On the other hand, in a study by Lindeberg et al. (2019), participants categorized happiness and anger on faces varying in sex (male, female) and their attractiveness (attractive, unattractive). Across two experiments, a moderating influence of attractiveness, but no influence of the sex of the face on emotion categorization was observed. This study does not provide clear evidence for a role of stereotypes or facial structure on the interaction between sex and emotion.

Regardless of the inconsistent findings mentioned above, a vast majority of research investigating the interaction between sex related cues and emotion in social perception suggests that femininity and happiness (and sometimes fear or surprise), as well as masculinity and anger, overlap in structure and stereotypes. Evidence for a role of facial structure over stereotypes has been observed in tasks where visual/structural information relevant to the masculinity/femininity of the face is manipulated (e.g., Becker et al. 2007; Craig et al. 2019; Deska et al. 2018). On the other hand, evidence for the influence of gender-based stereotypes is observed when the structure of the face is held constant, when positive and negative expressions on male and female faces are categorized, or when participants’ internal representations of gender or emotional expression are probed (e.g., Brooks et al. 2018; Craig et al. 2017; Hess et al. 2004). However, we note that when a role of stereotypes is observed, the direction of their influence does not always lead to facilitated recognition in stereotype congruent ways (i.e., faster recognition of anger on male faces). Together, these studies suggest that both structure and stereotypes can contribute to the influence of sex on emotion perception and vice versa, but whether an influence of each of these mechanisms is observed depends on the nature of the task.

Sex and Emotion Interactions on Other Processes

Attention

A number of studies have investigated the interaction between facial cues of sex and emotion on how participants allocate their attention in a range of different tasks. In visual search type tasks, participants are presented with groups of faces and are asked to indicate the presence or absence of a face that is different from the rest (e.g., finding the happy face in a crowd of neutral faces). In one study using this approach, Öhman et al. (2010) found that participants were faster to detect happy than angry female faces, and were also faster to detect angry male faces under some search conditions. Similarly, Williams and Mattingley (2006) found participants were more efficient at detecting angry than fearful targets when faces were male, but not when they were female. Amado et al. (2011) also found that angry expressions were detected more rapidly than happy or fearful expressions on male but not female faces. Consistent with the patterns observed in recognition, these studies suggest biased allocation of spatial attention to particular combinations of sex and emotion, and in particular to happy female and angry male faces.

Other studies measure participants’ eye-movements as they search through crowds of faces as eye movements and attention are closely related (Peterson et al. 2004). In one study, participants searched for fearful targets in neutral and happy backgrounds. Participants’ scan paths were shorter when searching for targets through happy female backgrounds, but participants were faster to find fearful targets in neutral backgrounds when the faces were male than when they were female (Horovitz et al. 2018). In another study where participants inspected crowds consisting of a mix of happy and neutral or angry and neutral expressions, participants spent more time fixating on female crowds when happy expressions were present, and more time fixating on male crowds when angry expressions were present (Bucher and Voss 2019). When faces were presented one at a time, Taylor (2017) also found that participants spent longer looking at angry male than angry female faces. These studies, too, generally suggest that attention is guided in ways that are consistent with overlapping facial structure or stereotypes relating to sex and emotion (i.e., an attentional bias towards happy female and angry male faces).

Another approach to investigating attention is to look at how well participants can attend to objects in their environment across time (rather than across space). To investigate whether facial sex and emotion information influence the allocation of attention across time, Stebbins and Vanous (2015) asked participants to pick out an upright and an inverted face from a rapid visual stream of scrambled faces. The first face target was either male or female with an angry, neutral, or happy expression. When the two faces were presented right after each other in the stream, participants were worse at identifying the second target when the first target was an angry male than when it was an angry female. This difference did not emerge when the faces were happy or when the two targets were presented further apart in the stream. This was taken to suggest an early attentional advantage for angry male faces, again demonstrating that facial sex and emotion information interact to influence allocation of attention.

The interaction between sex and emotion in attention has also been investigated by measuring the startle reflex. In these studies, participants are presented with white noise blasts designed to elicit a startle reflex while they view images. The size of a participant’s startle is commonly quantified by measuring blinks (blink startle) with electrodes placed below the eye. Using this approach, Hess et al. (2007) presented startle probes 3–5 s after the onset of faces varying in sex and emotion. Larger blink startle responses were observed for angry compared to happy and neutral expressions when the face was male, but not female. Other studies have also investigated modulation of blink startle, presenting the startle probes very shortly after the onset of the faces. At the shortest period between the image and the startle probe (300 ms), the sex and emotion of the faces interacted to influence the size of the blink. In one study, smaller blinks were found for angry male faces compared to angry female faces (Duval et al. 2013). In another, smaller blinks were found for disgust compared to neutral expressions on male faces, but smaller blinks to happy compared to disgust faces and a trend towards smaller blinks to happy compared to neutral expressions on female faces (Duval et al. 2018). Although the patterns of influence differed depending on the time between the image and the startle probe, based on the broader blink startle literature, these findings were all interpreted as evidence that attention is preferentially allocated to male faces with negative expressions (anger and disgust) and female faces with positive expressions (happiness).

Across these studies, attention is preferentially allocated to negative (angry) male faces, and happy female faces when measuring both spatial and temporal attention. This pattern is consistent with both stereotype and structural accounts. The stereotype that males are more angry/aggressive (Plant et al. 2000) could make the anger signal more important or relevant to attend to (in order to avoid threat). On the other hand, the anger expression on a masculine face could appear angrier due to overlapping visual-structural cues, so the attentional bias could be due to the greater perceived intensity of the expression.

Memory

A number of studies have also investigated how the interaction between sex and emotion might influence learning and memory. So far, results have been mixed. In one early study using a fear conditioning approach, participants were presented with faces varying in sex and emotion with some faces paired with a shock. In a subsequent phase where shocks were no longer presented, participants’ skin conductance responses were larger for a longer time for male angry faces paired with a shock than for female angry faces or faces presenting other expressions paired with a shock. This result suggests that participants anticipated the shock for longer for angry male faces (Öhman and Dimberg 1978), possibly suggesting that male angry faces are perceived as more threatening. This could be due to visual structural overlap (with the confluence of male and angry features making the face appear angrier) or due to the stereotype of men being more aggressive.

Another study taking a slightly different approach to look at associative learning presented participants with three face-name pairs to learn (with neutral expressions presented in the learning phase). After the learning phase, participants had to recall the name of these three individuals (this time presented with emotional expressions). Participants were faster to name the female than male faces when the face posed a happy or neutral expression, but not a fearful or angry expression (Hofmann et al. 2006), suggesting that particular combinations of sex and emotion (e.g. happy females) are processed with greater ease. In associative learning studies like these ones, learning and memory processes are involved as differential responding to the faces is due to participants’ memory of the association between the face and previously presented shocks or names; however participants see only one or a couple of exemplars of each sex or emotion category. This method differs from other approaches more typically applied to investigating face memory.

More commonly, learning and memory processes have been investigated in face identity recognition tasks where participants are presented with a number of faces to learn. They later encounter these faces along with new faces that they have not previously seen, and are asked to indicate whether each face is one that they have seen before or a new face. In these studies, both correct recognition of previously seen faces (hits), as well as incorrect ‘seen’ responses to new faces (false alarms) are considered to take into account a potential response bias (for example, where participants respond ‘seen’ to all faces), though depending on the nature of the task, this is not always possible. Within the broader face recognition literature, an own-gender bias has been identified, where participants are better at recognising faces of their own gender, particularly in female participants (see Herlitz and Loven 2013 for a review). When the emotional expression on the faces is also varied, a variety of findings have emerged.
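
As a minimal sketch of how hits and false alarms can be combined to separate recognition sensitivity from response bias, the following Python example computes two standard signal detection indices, sensitivity (d') and criterion (c). The studies reviewed here do not necessarily report these exact indices; the function name, the log-linear correction, and the response counts are illustrative assumptions.

```python
from statistics import NormalDist


def sdt_indices(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion) from old/new recognition counts."""
    # Log-linear correction (an assumption here) keeps rates away from 0 and 1,
    # where the inverse normal transform is undefined
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    d_prime = z(hit_rate) - z(fa_rate)            # sensitivity
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))  # negative = bias to say 'seen'
    return d_prime, criterion


# Hypothetical participant who responds 'seen' to every face:
# a perfect hit count, but no ability to tell old faces from new ones
always_seen = sdt_indices(hits=20, misses=0, false_alarms=20, correct_rejections=0)

# Hypothetical participant who discriminates old from new faces without bias
unbiased = sdt_indices(hits=15, misses=5, false_alarms=5, correct_rejections=15)
```

In the first hypothetical case sensitivity is zero even though every previously seen face was correctly endorsed, which is why hit rates alone cannot distinguish better memory from a greater willingness to respond 'seen'.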

In one study, participants correctly responded ‘seen’ more frequently for previously encountered angry male faces than happy male faces and for happy female faces more frequently than angry male faces (Tay and Yang 2017). Similarly, Becker et al. (2014) found that participants correctly responded ‘seen’ more frequently for previously encountered angry male faces than angry female, neutral female, or neutral male faces, but only in male participants. In both these experiments, emotion effects could only be detected in correct recognition of previously encountered faces as there were no comparable false alarm trials (where a participant can respond ‘seen’ to an unseen face). This means a role of response bias cannot be ruled out in these studies.

In studies where both hits and false alarms could be analysed, results contrary to the typical angry-male and happy-female bias have also been found. Armony and Sergerie (2007) found that female participants were better at remembering female than male faces when expressing fearful, but not happy or neutral expressions, but male participants’ face recognition performance was not influenced by the sex or expression on the face. Cortes et al. (2017) found that memory was better for female than male faces expressing disgust, fear, and neutral expressions, but not happy, sad, or angry expressions. Further, Wang (2013) found that male participants were more accurate at recognising female than male faces when they were neutral, but not happy or angry. Female participants were also better at recognizing female than male faces regardless of expression. In a study where the race of the faces was also varied, when collapsing across faces of different races, face recognition was better for male faces expressing fear compared to anger, and for female faces expressing anger compared to fear (Krumhuber and Manstead 2011). In these studies, where the accuracy of recognition could be teased apart from response bias, there was no consistent influence of sex and emotion on identity recognition. Though further research would be needed to determine which (if any) of these is a true effect that is generalizable beyond the participants and stimuli used in each study, all patterns observed to date are inconsistent with structural or stereotype effects observable when measuring other cognitive processes (e.g. emotion recognition, sex categorization, or attention). This suggests that these mechanisms are unlikely to be playing a role in face memory.

Together, these findings suggest that typical sex-based associations can influence participants’ responses in a face recognition memory task, but only when response bias cannot be taken into account. In these studies where response bias is not considered, participants are more willing to indicate that they have ‘seen’ structurally and/or stereotypically congruent happy female/angry male faces (Becker et al. 2014; Tay and Yang 2017). This is possibly due to participants’ expectations regarding gender and emotion shifting participants’ feelings of familiarity with faces in stereotype congruent ways. Angry males and happy females may feel more familiar or fluent leading to more ‘seen’ responses. In studies where both hits and false alarms could be analysed and the role of response bias could be accounted for, no benefit in recognition performance for any particular sex-emotion combination has been consistently found. Overlaps between sex and emotion in facial structure or in stereotypic associations do not seem to confer a benefit for face identity recognition despite the evidence described above that stereotype congruent faces are preferentially allocated attention.

Computer Based Image Classification

A number of studies measuring behavior, physiology, or neural activity described above have identified a unique role of facial structure in the interaction between facial sex and emotion (e.g., Becker et al. 2007). Other evidence for the role of facial structure comes not from human observers but from computer-based image classifiers. Image classifiers are computer programs trained to distinguish between stimuli of different types (such as faces of different emotions) based purely on the visual properties of the images they are provided. Although human biases can be trained into computer classifiers through biased selection of training stimuli (Fu et al. 2014), computers do not directly hold the stereotypes and associations of human observers. As such, biases observed in image classification are likely to reflect visual structural overlap in the stimuli being classified. Zebrowitz et al. (2010) trained a connectionist model-based computer classifier to recognize happy, angry, and surprised expressions. After training, the classifier was presented with neutral male and female faces. Neutral female faces activated the surprise network more than male faces and neutral male faces activated the anger network more than female faces. Bayet et al. (2015) have similarly revealed human-like Sex × Emotion biases in some types of computer-based image classifiers. As such, finding an influence of facial sex on emotion classification or a role of facial expression in sex classification in computer-based classifiers provides evidence of an overlap between structural cues of sex and particular emotions.

Summary

A number of studies have provided evidence for a unique role of facial structure in the interaction between sex and emotion in faces. This evidence is derived from studies manipulating facial structure (e.g., Becker et al. 2007; Hess et al. 2004), as well as studies using computer-based image classification to minimize the influence of stereotypes (Bayet et al. 2015; Zebrowitz et al. 2010). These studies suggest that facial cues of masculinity such as a strong brow and angular jaw facilitate recognition of anger, and cues of femininity such as larger eyes and a rounder face facilitate recognition of expressions like happiness, fear, and surprise (e.g., Becker et al. 2007; Hess et al. 2009a; Zebrowitz et al. 2010).

There is also evidence emerging from the literature for a unique role of higher order stereotypes and evaluations in the interaction between sex and emotion in person perception. For example, in studies where no faces are presented or participants’ internal representations are probed (e.g., using reverse correlation), associations between males and anger and between females and happiness can be found (Becker et al. 2007; Brooks et al. 2018). Where the role of structure is reduced by matching stimuli more closely and statistically controlling for visual similarity, brain activity in higher order conceptual areas still correlated with behavioural measures of stereotypes (Stolier and Freeman 2016). Where faces are matched in facial structure and other cues of sex are present (e.g. hair/clothes), a role of stereotypes on emotion perception is also observed; however, in these studies, the influence of stereotypes results in patterns opposite to the stereotype (e.g. perception of apparent male faces as happier; Hess et al. 2004; Steephen et al. 2018). Across these studies, there is evidence for a stereotypic association between men and anger and women and happiness, though the influence of these stereotypes does not always lead to facilitated recognition of these categories. Other studies have also provided evidence for a role of valence based associations, but not structure, in emotion categorization tasks. For example, studies have found that the influence of sex on emotion recognition is comparable when categorizing negative emotions that overlap with masculine facial structure (i.e., anger), but also those that do not (i.e., sadness and fear; Bijlstra et al. 2010; Craig et al. 2017; Hugenberg and Sczesny 2006).

Structural and stereotype accounts are not mutually exclusive. Both mechanisms could concurrently contribute to Sex × Emotion interactions observed in past research in tasks where the influence of one mechanism is not constrained through the task design. Recent studies using techniques able to detect the contribution of both structure and stereotypes with the same participants and within the same task have provided evidence for a concurrent role of both mechanisms. As described above, EEG studies have shown Sex × Emotion interactions emerge in early components related to visual and structural encoding as well as later components related to activation of existing associations (e.g., Liu et al. 2017). Stolier and Freeman (2016) also found brain regions related to lower level visual perception, as well as higher order associations, were activated in a way that correlated with the interaction between social categories and emotion observed in behavior. Finally, a recent study using response time modelling identified two processes involved in the interaction between sex and emotion in emotion recognition. Sex × Emotion interactions were observed in non-decision time and response caution but not drift rate (Tipples 2019). These results were interpreted as evidence for an early role of overlapping visual information (non-decision time) followed by an influence of stereotypes that resulted in more cautious responding for judgments counter to participants’ stereotypic/evaluative expectations.
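
To make the three response time modelling parameters concrete, the following Python sketch simulates a generic drift diffusion process. It is not the model fitted by Tipples (2019), whose specification is not given here; the function names, the unbiased starting point, the step size, and all parameter values are hypothetical. In this kind of model, non-decision time is added to every response time, boundary separation (response caution) sets how much evidence is required before responding, and drift rate sets how quickly evidence accumulates.

```python
import random


def simulate_trial(drift, boundary, non_decision, rng, dt=0.001, noise=1.0):
    """Simulate one trial; return (response_time_s, reached_upper_boundary)."""
    evidence = boundary / 2.0  # unbiased starting point (an assumption)
    elapsed = 0.0
    step_sd = noise * dt ** 0.5
    # Accumulate noisy evidence until either boundary is crossed
    while 0.0 < evidence < boundary:
        evidence += drift * dt + rng.gauss(0.0, step_sd)
        elapsed += dt
    return non_decision + elapsed, evidence >= boundary


def mean_rt(drift, boundary, non_decision, n_trials=300, seed=1):
    """Mean simulated response time (seconds) over n_trials trials."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    total = 0.0
    for _ in range(n_trials):
        rt, _ = simulate_trial(drift, boundary, non_decision, rng)
        total += rt
    return total / n_trials


# Hypothetical parameter sets
baseline = mean_rt(drift=1.0, boundary=1.0, non_decision=0.3)
longer_encoding = mean_rt(drift=1.0, boundary=1.0, non_decision=0.4)
more_cautious = mean_rt(drift=1.0, boundary=1.5, non_decision=0.3)
```

In this sketch, lengthening non-decision time shifts every response time by a constant, whereas widening the boundaries slows responses by requiring more evidence, which is the sense in which the two parameters can index different stages of processing.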

Conclusion

As to the ultimate cause of the Sex × Emotion interaction observed, evidence for structural overlap between particular combinations of sex and emotion suggests a possible role of evolution. The form of facial expressions may have evolved to mimic or enhance existing socially significant signals present on the face. For example, the form of the expression of anger may mimic or enhance facial cues of masculinity or dominance (Adams Jr. et al. 2015; Marsh et al. 2005). This may have afforded an evolutionary advantage, for example, by enhancing signals conveying interpersonal threat and increasing perceived formidability. This may confer a survival advantage by curtailing potentially costly physical conflicts (e.g., Craig et al. 2019; Tay 2015). Other expressions like happiness or fear may mimic or enhance signals of safety and submission, offering opportunities for seeking affiliation, coalition, and social support (Becker et al. 2011; Tay 2015).

Further, it is possible that widely held gender-based associations (stereotypes and evaluations) at least partly originate from shared signals for sex and particular emotional expressions (see Adams Jr. et al. 2015 for a theoretical review). Co-occurrence of facial cues signalling sex and emotion is one path by which masculinity and anger, and femininity and happiness and fear could have become more easily associated. These associations could subsequently be proliferated through other social and cultural processes. If this is the case, both the structural and the stereotypic influences of sex on emotion perception may be ultimately derived from the same source—the evolution of facial cues signalling sex and emotion and the cognitive processes to recognize these cues. It is also possible that the source of these gender based stereotypes and evaluations may be due to other biologically based sex differences not associated with the face (like the influence of hormones on behavior; Mehta and Josephs 2010), or socially/culturally prescribed gender differences in behavior and social roles (Eagly and Steffen 1984; Prentice and Carranza 2002). As these potential sources of gender stereotypes all co-exist today, the ultimate origin of widely held gender-based stereotypes and evaluations may not be possible to identify.

Together, the broader literature demonstrates that unique influences of each mechanism (structure and stereotypes) can be observed in circumstances where the potential influence of one mechanism is reduced or removed. There is also evidence for the concurrent influence of both stereotypes and structure on the interaction between sex and emotional expression in face perception, with the relative influence of these two mechanisms dependent on which processes are engaged by the task (e.g., Bijlstra et al. 2010). Although future research is needed to more systematically tease apart the roles of structure and stereotypes and to identify contexts where one or the other mechanism plays the largest role, there is good evidence that both mechanisms contribute to the sex-emotion biases observed in neural activity, physiology, and behavior.

References

Adams Jr., R. B., Nelson, A. J., Soto, J. A., Hess, U., & Kleck, R. E. (2012). Emotion in the neutral face: A mechanism for impression formation? Cognition and Emotion, 26, 431–441. https://doi.org/10.1080/02699931.2012.666502.

Adams Jr., R. B., Hess, U., & Kleck, R. E. (2015). The intersection of gender-related facial appearance and facial displays of emotion. Emotion Review, 7, 5–13. https://doi.org/10.1177/1754073914544407.

Aguado, L., García-Gutierrez, A., & Serrano-Pedraza, I. (2009). Symmetrical interaction of sex and expression in face classification tasks. Perception & Psychophysics, 71, 9–25. https://doi.org/10.3758/app.71.1.9.

Algoe, S. B., Buswell, B. N., & DeLamater, J. D. (2000). Gender and job status as contextual cues for the interpretation of facial expression of emotion. Sex Roles, 42, 183–208. https://doi.org/10.1023/A:1007087106159.

Alrajih, S., & Ward, J. (2014). Increased facial width-to-height ratio and perceived dominance in the faces of the UK's leading business leaders. British Journal of Psychology, 105, 153–161. https://doi.org/10.1111/bjop.12035.

Amado, S., Yildirim, T., & İyilikçi, O. (2011). Observer and target sex differences in the change detection of facial expressions: A change blindness study. Cognition, Brain, Behavior: An Interdisciplinary Journal, 15, 295–316.

Armony, J. L., & Sergerie, K. (2007). Own-sex effects in emotional memory for faces. Neuroscience Letters, 426, 1–5. https://doi.org/10.1016/j.neulet.2007.08.032.

Atkinson, A. P., Tipples, J., Burt, D. M., & Young, A. W. (2005). Asymmetric interference between sex and emotion in face perception. Perception & Psychophysics, 67, 1199–1213. https://doi.org/10.3758/bf03193553.

Barrett, L. F. (2006). Are emotions natural kinds? Perspectives on Psychological Science, 1, 28–58. https://doi.org/10.1111/j.1745-6916.2006.00003.x.

Bayet, L., Pascalis, O., Quinn, P. C., Lee, K., Gentaz, É., & Tanaka, J. W. (2015). Angry facial expressions bias gender categorization in children and adults: Behavioral and computational evidence. Frontiers in Psychology, 6, 346. https://doi.org/10.3389/fpsyg.2015.00346.

Becker, D. V. (2017). Facial gender interferes with decisions about facial expressions of anger and happiness. Journal of Experimental Psychology: General, 146, 457–463. https://doi.org/10.1037/xge0000279.

Becker, D. V., Kenrick, D. T., Neuberg, S. L., Blackwell, K. C., & Smith, D. M. (2007). The confounded nature of angry men and happy women. Journal of Personality and Social Psychology, 92, 179–190. https://doi.org/10.1037/0022-3514.92.2.179.

Becker, D. V., Anderson, U. S., Mortensen, C. R., Neufeld, S. L., & Neel, R. (2011). The face in the crowd effect unconfounded: Happy faces, not angry faces, are more efficiently detected in single- and multiple-target visual search tasks. Journal of Experimental Psychology: General, 140, 637–659. https://doi.org/10.1037/a0024060.

Becker, D. V., Mortensen, C. R., Anderson, U. S., & Sasaki, T. (2014). Out of sight but not out of mind: Memory scanning is attuned to threatening faces. Evolutionary Psychology, 12, 901–912. https://doi.org/10.1177/147470491401200504.

Bentin, S., Allison, T., Puce, A., Perez, E., & McCarthy, G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8, 551–565. https://doi.org/10.1162/jocn.1996.8.6.551.

Bestelmeyer, P. E. G., Jones, B. C., DeBruine, L. M., Little, A. C., & Welling, L. L. M. (2010). Face aftereffects suggest interdependent processing of expression and sex and of expression and race. Visual Cognition, 18, 255–274. https://doi.org/10.1080/13506280802708024.

Bijlstra, G., Holland, R. W., & Wigboldus, D. H. J. (2010). The social face of emotion recognition: Evaluations versus stereotypes. Journal of Experimental Social Psychology, 46, 657–663. https://doi.org/10.1016/j.jesp.2010.03.006.

Bovet, J., Barkat-Defradas, M., Durand, V., Faurie, C., & Raymond, M. (2018). Women's attractiveness is linked to expected age at menopause. Journal of Evolutionary Biology, 31, 229–238. https://doi.org/10.1111/jeb.13214.

Brinkman, L., Todorov, A., & Dotsch, R. (2017). Visualising mental representations: A primer on noise-based reverse correlation in social psychology. European Review of Social Psychology, 28, 333–361. https://doi.org/10.1080/10463283.2017.1381469.

Brooks, J. A., Stolier, R. M., & Freeman, J. B. (2018). Stereotypes bias visual prototypes for sex and emotion categories. Social Cognition, 36, 481–493. https://doi.org/10.1521/soco.2018.36.5.481.

Bruce, V., & Young, A. (1986). Understanding face recognition. British Journal of Psychology, 77, 305–327. https://doi.org/10.1111/j.2044-8295.1986.tb02199.x.

Bucher, A., & Voss, A. (2019). Judging the mood of the crowd: Attention is focused on happy faces. Emotion, 19, 1044–1059. https://doi.org/10.1037/emo0000507.

Buss, D. M. (1988). The evolution of human intrasexual competition: Tactics of mate attraction. Journal of Personality and Social Psychology, 54, 616–628. https://doi.org/10.1037/0022-3514.54.4.616.

Cortes, D., Laukka, P., Lindahl, C., & Fischer, H. (2017). Memory for faces and voices varies as a function of sex and expressed emotion. PLoS One, 12, e0178423. https://doi.org/10.1371/journal.pone.0178423.

Craig, B. M., & Lipp, O. V. (2017). The influence of facial sex cues on emotional expression recognition is not fixed. Emotion, 17, 28–39. https://doi.org/10.1037/emo0000208.

Craig, B. M., & Lipp, O. V. (2018). The influence of multiple social categories on emotion perception. Journal of Experimental Social Psychology, 75, 27–35. https://doi.org/10.1016/j.jesp.2017.11.002.

Craig, B. M., Koch, S., & Lipp, O. V. (2017). The influence of social category cues on the happy categorisation advantage depends on expression valence. Cognition and Emotion, 31, 1493–1501. https://doi.org/10.1080/02699931.2016.1215293.

Craig, B. M., Nelson, N. L., & Dixson, B. J. W. (2019). Sexual selection, agonistic signaling, and the effect of beards on recognition of men's anger displays. Psychological Science, 30, 728–738. https://doi.org/10.1177/0956797619834876.

Darwin, C. (1872). The expression of the emotions in man and animals. London: Murray.

Deska, J. C., Lloyd, E. P., & Hugenberg, K. (2018). The face of fear and anger: Facial width-to-height ratio biases recognition of angry and fearful expressions. Emotion, 18, 453–464. https://doi.org/10.1037/emo0000328.

Dixson, B. J. (2018). Is male facial width-to-height ratio the target of sexual selection? Archives of Sexual Behavior, 47, 827–828. https://doi.org/10.1007/s10508-018-1184-9.

Dixson, B. J., & Brooks, R. C. (2013). The role of facial hair in women's perceptions of men's attractiveness, health, masculinity and parenting abilities. Evolution and Human Behavior, 34, 236–241. https://doi.org/10.1016/j.evolhumbehav.2013.02.003.

Dixson, B. J., & Vasey, P. L. (2012). Beards augment perceptions of men's age, social status, and aggressiveness, but not attractiveness. Behavioral Ecology, 23, 481–490. https://doi.org/10.1093/beheco/arr214.

Dixson, B. J., Lee, A. J., Sherlock, J. M., & Talamas, S. N. (2017). Beneath the beard: Do facial morphometrics influence the strength of judgments of men's beardedness? Evolution and Human Behavior, 38, 164–174. https://doi.org/10.1016/j.evolhumbehav.2016.08.004.

Doi, H., Amamoto, T., Okishige, Y., Kato, M., & Shinohara, K. (2010). The own-sex effect in facial expression recognition. NeuroReport, 21, 564–568. https://doi.org/10.1097/WNR.0b013e328339b61a.

Duval, E. R., Lovelace, C. T., Aarant, J., & Filion, D. L. (2013). The time course of face processing: Startle eyeblink response modulation by face gender and expression. International Journal of Psychophysiology, 90, 354–357. https://doi.org/10.1016/j.ijpsycho.2013.08.006.

Duval, E. R., Lovelace, C. T., Gimmestad, K., Aarant, J., & Filion, D. L. (2018). Flashing a smile: Startle eyeblink modulation by masked affective faces. Psychophysiology, 55, e13012. https://doi.org/10.1111/psyp.13012.

Eagly, A. H., & Steffen, V. J. (1984). Gender stereotypes stem from the distribution of women and men into social roles. Journal of Personality and Social Psychology, 46, 735–754. https://doi.org/10.1037/0022-3514.46.4.735.

Eagly, A. H., Mladinic, A., & Otto, S. (1991). Are women evaluated more favorably than men? An analysis of attitudes, beliefs, and emotions. Psychology of Women Quarterly, 15, 203–216. https://doi.org/10.1111/j.1471-6402.1991.tb00792.x.

Ekman, P. (1992). An argument for basic emotions. Cognition and Emotion, 6, 169–200. https://doi.org/10.1080/02699939208411068.

Ekman, P., & Friesen, W. V. (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17, 124–129. https://doi.org/10.1037/h0030377.

Ekman, P., Sorenson, E. R., & Friesen, W. V. (1969). Pan-cultural elements in facial displays of emotion. Science, 164, 86–88. https://doi.org/10.1126/science.164.3875.86.

Ekman, P., Friesen, W. V., & Hager, J. C. (2002). Facial action coding system (FACS): Manual. Salt Lake City: A Human Face.

Elfenbein, H. A. (2013). Nonverbal dialects and accents in facial expressions of emotion. Emotion Review, 5, 90–96. https://doi.org/10.1177/1754073912451332.

Elfenbein, H. A., & Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychological Bulletin, 128, 203–235. https://doi.org/10.1037/0033-2909.128.2.203.

Fabes, R. A., & Martin, C. L. (1991). Gender and age stereotypes of emotionality. Personality and Social Psychology Bulletin, 17, 532–540. https://doi.org/10.1177/0146167291175008.

Fu, S., He, H., & Hou, Z. (2014). Learning race from face: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 36, 2483–2509. https://doi.org/10.1109/TPAMI.2014.2321570.

García-Gutiérrez, A., Aguado, L., Romero-Ferreiro, V., & Pérez-Moreno, E. (2017). Discrimination of face gender and expression under dual-task conditions. Attention, Perception, & Psychophysics, 79, 614–627. https://doi.org/10.3758/s13414-016-1236-y.

Geniole, S. N., Denson, T. F., Dixson, B. J., Carré, J. M., & McCormick, C. M. (2015). Evidence from meta-analyses of the facial width-to-height ratio as an evolved cue of threat. PLoS One, 10, e0132726. https://doi.org/10.1371/journal.pone.0132726.

Gray, A. W., & Boothroyd, L. G. (2012). Female facial appearance and health. Evolutionary Psychology, 10, 66–77. https://doi.org/10.1177/147470491201000108.

Harris, D. A., & Ciaramitaro, V. M. (2016). Interdependent mechanisms for processing gender and emotion: The special status of angry male faces. Frontiers in Psychology, 7, 1046. https://doi.org/10.3389/fpsyg.2016.01046.

Harris, D. A., Hayes-Skelton, S. A., & Ciaramitaro, V. M. (2016). What's in a face? How face gender and current affect influence perceived emotion. Frontiers in Psychology, 7, 1468. https://doi.org/10.3389/fpsyg.2016.01468.

Hehman, E., Flake, J. K., & Freeman, J. B. (2015). Static and dynamic facial cues differentially affect the consistency of social evaluations. Personality and Social Psychology Bulletin, 41, 1123–1134. https://doi.org/10.1177/0146167215591495.

Herlitz, A., & Loven, J. (2013). Sex differences and the own-gender bias in face recognition: A meta-analytic review. Visual Cognition, 21, 1306–1336. https://doi.org/10.1080/13506285.2013.823140.

Herring, D. R., Taylor, J. H., White, K. R., & Crites, S. L. (2011). Electrophysiological responses to evaluative priming: The LPP is sensitive to incongruity. Emotion, 11, 794–806. https://doi.org/10.1037/a0022804.

Hess, U., Adams Jr., R. B., & Kleck, R. E. (2004). Facial appearance, gender, and emotion expression. Emotion, 4, 378–388. https://doi.org/10.1037/1528-3542.4.4.378.

Hess, U., Sabourin, G., & Kleck, R. E. (2007). Postauricular and eyeblink startle responses to facial expressions. Psychophysiology, 44, 431–435. https://doi.org/10.1111/j.1469-8986.2007.00516.x.

Hess, U., Adams Jr., R. B., & Kleck, R. E. (2009a). The categorical perception of emotions and traits. Social Cognition, 27, 320–326. https://doi.org/10.1521/soco.2009.27.2.320.

Hess, U., Adams Jr., R. B., Grammer, K., & Kleck, R. E. (2009b). Face gender and emotion expression: Are angry women more like men? Journal of Vision, 9, 1–8. https://doi.org/10.1167/9.12.19.

Hofmann, S. G., Suvak, M., & Litz, B. T. (2006). Sex differences in face recognition and influence of facial affect. Personality and Individual Differences, 40, 1683–1690. https://doi.org/10.1016/j.paid.2005.12.014.

Horovitz, O., Lindenfeld, I., Melamed, M., & Shechner, T. (2018). Developmental effects of stimulus gender and the social context in which it appears on threat detection. British Journal of Developmental Psychology, 36, 452–466. https://doi.org/10.1111/bjdp.12230.

Hugdahl, K., Iversen, P. M., & Johnsen, B. H. (1993). Laterality for facial expressions: Does the sex of the subject interact with the sex of the stimulus face? Cortex, 29, 325–331.

Hugenberg, K., & Sczesny, S. (2006). On wonderful women and seeing smiles: Social categorization moderates the happy face response latency advantage. Social Cognition, 24, 516–539. https://doi.org/10.1521/soco.2006.24.5.516.

Inzlicht, M., Kaiser, C. R., & Major, B. (2008). The face of chauvinism: How prejudice expectations shape perceptions of facial affect. Journal of Experimental Social Psychology, 44, 758–766. https://doi.org/10.1016/j.jesp.2007.06.004.

Ito, T. A., & Urland, G. R. (2003). Race and gender on the brain: Electrocortical measures of attention to the race and gender of multiply categorizable individuals. Journal of Personality and Social Psychology, 85, 616–626. https://doi.org/10.1037/0022-3514.85.4.616.

Jack, R. E., Garrod, O. G. B., Yu, H., Caldara, R., & Schyns, P. G. (2012). Facial expressions of emotion are not culturally universal. Proceedings of the National Academy of Sciences, 109, 7241–7244. https://doi.org/10.1073/pnas.1200155109.

Jones, D., & Hill, K. (1993). Criteria of facial attractiveness in five populations. Human Nature, 4, 271–296. https://doi.org/10.1007/bf02692202.

Karnadewi, F., & Lipp, O. V. (2011). The processing of invariant and variant face cues in the Garner paradigm. Emotion, 11, 563–571. https://doi.org/10.1037/a0021333.

Kohn, N., & Fernández, G. (2017). Emotion and sex of facial stimuli modulate conditional automaticity in behavioral and neuronal interference in healthy men. Neuropsychologia. Advance Online Publication. https://doi.org/10.1016/j.neuropsychologia.2017.12.001.

Kramer, R. S. S., Jones, A. L., & Ward, R. (2012). A lack of sexual dimorphism in width-to-height ratio in white European faces using 2D photographs, 3D scans, and anthropometry. PLoS One, 7, e42705. https://doi.org/10.1371/journal.pone.0042705.

Krumhuber, E. G., & Manstead, A. S. R. (2011). When memory is better for out-group faces: On negative emotions and gender roles. Journal of Nonverbal Behavior, 35, 51–61. https://doi.org/10.1007/s10919-010-0096-8.

Kubota, J. T., & Ito, T. A. (2007). Multiple cues in social perception: The time course of processing race and facial expression. Journal of Experimental Social Psychology, 43, 738–752. https://doi.org/10.1016/j.jesp.2006.10.023.

Larsen, C. S. (2003). Equality for the sexes in human evolution? Early hominid sexual dimorphism and implications for mating systems and social behavior. Proceedings of the National Academy of Sciences, 100, 9103–9104. https://doi.org/10.1073/pnas.1633678100.

Le Gal, P. M., & Bruce, V. (2002). Evaluating the independence of sex and expression in judgments of faces. Perception & Psychophysics, 64, 230–243. https://doi.org/10.3758/bf03195789.

Li, Y., & Tse, C. S. (2016). Interference among the processing of facial emotion, face race, and face gender. Frontiers in Psychology, 7, 1700. https://doi.org/10.3389/fpsyg.2016.01700.

Lindeberg, S., Craig, B. M., & Lipp, O. V. (2019). You look pretty happy: Attractiveness moderates emotion perception. Emotion, 19, 1070–1080. https://doi.org/10.1037/emo0000530.

Lipp, O. V., Karnadewi, F., Craig, B. M., & Cronin, S. L. (2015). Stimulus set size modulates the sex–emotion interaction in face categorization. Attention, Perception, & Psychophysics, 77, 1285–1294. https://doi.org/10.3758/s13414-015-0849-x.

Liu, C., Liu, Y., Iqbal, Z., Li, W., Lv, B., & Jiang, Z. (2017). Symmetrical and asymmetrical interactions between facial expressions and gender information in face perception. Frontiers in Psychology, 8, 1383. https://doi.org/10.3389/fpsyg.2017.01383.

Luck, S. J. (2014). An introduction to the event-related potential technique. Cambridge, MA: MIT Press.