Abstract

The Schrödinger equation for a macroscopic number of particles is linear in the wave function, deterministic, and invariant under time reversal. In contrast, the concepts used and calculations done in statistical physics and condensed matter physics involve stochasticity, nonlinearities, irreversibility, top-down effects, and elements from classical physics. This paper analyzes several methods used in condensed matter physics and statistical physics and explains how they are in fundamental ways incompatible with the above properties of the Schrödinger equation. The problems posed by reconciling these approaches with unitary quantum mechanics are similar in kind to the quantum measurement problem. This paper, therefore, argues that rather than aiming to reconcile these contrasts, one should use them to identify the limits of quantum mechanics. The thermal wavelength and thermal time indicate where these limits are for (quasi-)particles that constitute the thermal degrees of freedom.



1 Introduction

Imagine a gas of \(10^{23}\) atoms at a finite temperature T. On the most microscopic level, how do you envision the gas? Is it a set of balls that follow classical trajectories and collide elastically with each other? Or a set of wave packets that are so small at high temperatures that the particles resemble hard objects? Or is it a set of plane waves, such as those used in the calculation of the canonical partition function of the ideal gas? Or is it an entangled mess in which the wave functions of all particles extend over the entire volume?

This question is closely related to that of the quantum–classical transition and the measurement process: the Schrödinger equation entangles all particles that have interacted with each other. However, the classical picture is that of localized particles that resemble small balls rather than broadly spread wave functions. In condensed matter physics and statistical physics, we are dealing with systems of \(10^{23}\) particles at finite temperatures, and, therefore, we encounter the question of the quantum–classical transition at every corner. Solids have a crystal structure with localized atoms; conduction electrons scatter locally from defects; biomolecules have a complex shape with their atoms at well-defined positions. Usually, however, this question of the quantum–classical transition is not made explicit in condensed matter physics. The student who learns the material simply wonders why the methods employed in condensed matter theory and statistical physics deviate so much from a many-particle Schrödinger equation and appear again and again to be incompatible with it.

In this paper, I want to address this topic and expose the many different ways in which condensed matter physics and statistical physics differ from a many-particle Schrödinger equation. This will teach us a lot about the nature and scale of the transition from quantum to classical behavior. Consequently, condensed matter physics and statistical physics should be used as key sciences when trying to solve the puzzles posed by the interpretation of quantum mechanics.

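Let us start with the Schrödinger equation, which many regard as the ‘Theory of Everything’ [26] for the nonrelativistic systems described by condensed matter theory and statistical physics. For a many-particle system, it has the general form

$$ i\hbar \frac{\partial }{\partial t}\psi (\mathbf {r}_1, \ldots , \mathbf {r}_N, t) = \hat{H}\,\psi (\mathbf {r}_1, \ldots , \mathbf {r}_N, t) \tag{1}$$

with

$$ \hat{H} = -\sum \limits _{\alpha =1}^{N}\frac{\hbar ^2}{2m_\alpha }\nabla _\alpha ^2 + \frac{1}{2}\sum \limits _{\alpha \ne \beta } V_{\alpha \beta }(\mathbf {r}_\alpha - \mathbf {r}_\beta ). $$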

Here, \(\alpha \) and \(\beta \) count the particles of the system, which are either the atoms or the electrons and nuclei or the ions and valence electrons, depending on the considered system. The potential \(V_{\alpha \beta }(\mathbf {r}_\alpha - \mathbf {r}_\beta )\) describes the interaction between particles \(\alpha \) and \(\beta \). Given the initial state \(\psi (\mathbf {r}_1, \cdots , \mathbf {r}_N,0)\), future and past states are given by the relation

$$ \psi (\mathbf {r}_1, \ldots , \mathbf {r}_N, t) = e^{-i\hat{H}t/\hbar }\,\psi (\mathbf {r}_1, \ldots , \mathbf {r}_N, 0) $$

if the interaction potentials are not explicitly time dependent.

The Schrödinger equation has the following three important properties:

1. It is linear. This means that the time evolution of a superposition of two wave functions is the superposition of the two time evolutions. It further means that all particles that have interacted in the past are entangled. If the initial state is not entangled, i.e., if it is a product state

$$\begin{aligned} \varPsi (\mathbf {r}_1, \ldots , \mathbf {r}_N,0) = \prod \limits _{\alpha =1}^N \phi _\alpha (\mathbf {r}_\alpha ), \end{aligned}$$

then the state at a later time will be a superposition of product states,

$$\begin{aligned} \varPsi (\mathbf {r}_1, \ldots , \mathbf {r}_N,t) = \sum \limits _{i_1,\ldots ,i_N}c_{i_1,\ldots ,i_N}\prod \limits _{\alpha =1}^N \phi _{i_\alpha }(\mathbf {r}_\alpha ). \end{aligned}$$

A further consequence of the linearity of the Schrödinger equation is that initially localized wave packets disperse and become delocalized.

2. It is deterministic. This means that the initial state, combined with the Hamiltonian, determines the future time evolution.

3. It is invariant under time reversal. The time-reversed Schrödinger equation is solved by the complex conjugate wave function: if \(\psi (t)\) is a solution, so is \(\psi ^*(-t)\), which has the same probability density \(|\psi |^2\) at every configuration.
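The three properties can be checked on a toy model. The following Python/NumPy sketch is an illustration added here, not a calculation from the cited literature: it takes two two-level systems with an assumed Ising-type coupling \(\hat H = J\,\sigma _z\otimes \sigma _z\) (\(\hbar = 1\)), starts from a product state, and shows that the evolved state is entangled (the purity of the reduced state drops from 1 to 1/2 at \(Jt=\pi /4\)), that the evolution is linear, and that evolving, complex conjugating, evolving again, and conjugating once more returns the initial state.

```python
import numpy as np

Z = np.diag([1.0, -1.0])                    # Pauli z matrix
plus = np.array([1.0, 1.0]) / np.sqrt(2.0)  # (|0> + |1>)/sqrt(2)

def evolve(H, psi, t):
    """Apply U(t) = exp(-iHt) (hbar = 1) using the eigendecomposition of Hermitian H."""
    w, V = np.linalg.eigh(H)
    return V @ (np.exp(-1j * w * t) * (V.conj().T @ psi))

def purity_of_first(psi):
    """Tr(rho_A^2) of the reduced state of system A; equals 1 for a product state."""
    M = psi.reshape(2, 2)        # M[a, b] = amplitude of |a>|b>
    rho_a = M @ M.conj().T       # partial trace over system B
    return float(np.real(np.trace(rho_a @ rho_a)))

J = 1.0
H = J * np.kron(Z, Z)            # assumed interaction, real and symmetric
psi0 = np.kron(plus, plus)       # initial product state
t = np.pi / 4

psi_t = evolve(H, psi0, t)
print("purity at t = 0:", round(purity_of_first(psi0), 6))   # 1.0 (not entangled)
print("purity at t:    ", round(purity_of_first(psi_t), 6))  # 0.5 (maximally entangled)

# Linearity: evolving a superposition equals superposing the evolutions
phi0 = np.kron(np.array([1.0, 0.0]), plus)
a, b = 0.6, 0.8j
linear_ok = np.allclose(evolve(H, a * psi0 + b * phi0, t),
                        a * evolve(H, psi0, t) + b * evolve(H, phi0, t))

# Time reversal for a real Hamiltonian: conjugate, evolve forward, conjugate again
psi_back = np.conj(evolve(H, np.conj(psi_t), t))
reversible_ok = np.allclose(psi_back, psi0)
print(linear_ok, reversible_ok)
```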

However, the world that surrounds us has none of these three properties. It consists of localized objects with classical properties that are not superpositions of states with different eigenvalues of the observables. The time evolution of macroscopic systems has nonlinear and stochastic elements, as becomes manifest in chaotic systems. Furthermore, when a movie of any process occurring in the real world is run backwards, the process looks completely unrealistic. In fact, all three properties of the Schrödinger equation are already violated within quantum mechanics, namely by the measurement process, without which no description of quantum mechanics is complete: during a measurement, an irreversible, stochastic ‘collapse’ to one of the possible measurement outcomes happens.

There are a number of interpretations of quantum mechanics that attempt to explain away this dichotomy between the Schrödinger equation and the measurement process; however, many people find these interpretations unsatisfactory. These interpretations, which consider the mathematical description by the Schrödinger equation to be the adequate description of the time evolution of a nonrelativistic system, include the many-worlds [13], relational [33], consistent (or decoherent) histories [20], modal, epistemic, de Broglie–Bohm, and statistical [2] interpretations. They differ in the ontological status that they ascribe to the wave function and in their explanation of the observed randomness. For instance, the consistent histories interpretation does not consider the wave function to be real but uses the formalism to calculate possible sequences of events and their probabilities, while Everett [13] considers the wave function to be real but claims that our consciousness takes a stochastic route through the various branchings that occur at each measurement event. Epistemic interpretations, such as the relational interpretation [33, 34] or QBism [14], argue that the actual values of the physical variables of a system are only meaningful in relation to another system with which this system interacts, or that they represent states of knowledge rather than objective reality. Similarly, modal interpretations are not concerned with an objective description independent of observers, but only with consistent classical descriptions of systems that are described on a microscopic level by quantum mechanics [21]. The statistical interpretation [2] suggests that wave functions do not describe single systems but ensembles of identically prepared systems.
The de Broglie–Bohm pilot wave theory is different in nature as it postulates hidden variables, namely the particle position and its deterministic time evolution, which depends non-locally on the wave function. The stochasticity of the measurements is encoded in the initial value of the hidden variable.

In contrast to these interpretations, I will in this paper pursue the idea that there are limits of validity to the Schrödinger equation and that this is the reason why macroscopic objects behave differently than suggested by the three properties of the Schrödinger equation listed above. This view is held by a variety of scientists, for instance by the condensed matter theorist and Nobel laureate Anthony Leggett [27]. In fact, the Copenhagen interpretation also presupposes such limits, as it maintains that a classical terminology for measurement outcomes and a classical environment are necessary for describing quantum systems. However, it remains ambiguous with respect to the ontological status of the classical and quantum worlds and the scale at which the ‘Heisenberg cut’ between the quantum and classical description occurs.

Limits of unitary time evolution are implemented explicitly in models for stochastic wave function collapse [3, 15]. They include stochasticity, localization, and irreversibility in the description of the time evolution of quantum systems from the outset. According to these models, the Schrödinger equation is only approximately correct, as it neglects this stochastic component. The attractiveness of this alternative description lies in the fact that spontaneous collapses are extremely rare in single-particle systems but frequent in macroscopic systems. The shortcoming is that these models contain ad hoc parameters that cannot be derived from other theories, because the specific context in which the collapse occurs does not feature in the description.
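A back-of-the-envelope estimate shows how such models can leave microscopic dynamics almost untouched while acting quickly on macroscopic bodies. The Python sketch below assumes the localization rate \(\lambda \approx 10^{-16}\,\mathrm {s}^{-1}\) per particle proposed in the original Ghirardi–Rimini–Weber model, together with the standard amplification argument that a superposition of macroscopically distinct positions of N rigidly connected particles is localized at the rate \(N\lambda \).

```python
# Assumed GRW localization rate per particle (value of the original 1986 proposal)
LAMBDA_PER_PARTICLE = 1e-16   # s^-1
SECONDS_PER_YEAR = 3.15576e7  # Julian year

def collapse_rate(n_particles: float) -> float:
    """Rate at which a superposition of macroscopically distinct positions of
    n rigidly connected particles is localized (GRW amplification: n * lambda)."""
    return n_particles * LAMBDA_PER_PARTICLE

single = collapse_rate(1)
macro = collapse_rate(6.022e23)  # roughly one mole of particles
print(f"single particle: one localization every {1 / single / SECONDS_PER_YEAR:.1e} years")
print(f"one mole:        one localization every {1 / macro:.1e} s")
```

The same parameter thus yields hundreds of millions of years for one particle and tens of nanoseconds for a mole, which is the asymmetry described above; the value of \(\lambda \) itself is exactly the kind of ad hoc input criticized in the text.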

The approach that appears most promising to me is to include top-down effects of the context on a quantum system in addition to the usual bottom-up approach of describing macroscopic systems in terms of their parts and the interactions between them. This approach has been advanced by Ellis [12] and more recently by Ellis and myself [9]. Owing to its realism, such an approach has the advantage that it does not require sophisticated interpretations of quantum mechanics to reconcile the properties of the Schrödinger equation with those of the classical world. Furthermore, it presumes that the existing descriptions of condensed matter systems are the most appropriate ones, as they have proven to be empirically adequate. What is lacking is thus not a new interpretation or a new, possibly more fundamental or exact, theory, but a fresh look at the calculations and concepts actually used for systems that consist of a macroscopic number of particles at a finite temperature.

For this reason, I will in the following consider those fields of theoretical physics that are most appropriate for describing macroscopic systems: condensed matter theory and statistical physics. My goal is to show that the methods used in these two areas of physics reveal a lot about the transition from quantum to classical behavior. As these theories were developed as empirically adequate descriptions of the phenomena observed in macroscopic systems, they implicitly implement the quantum–classical transition in various ways. In fact, these methods include elements from classical as well as from quantum physics, and they violate all three above-listed properties of the Schrödinger equation.

In the next two sections, I will first analyze several methods used in condensed matter theory, and then turn to statistical mechanics and its fundamental concepts of probabilities and entropies.

Some of the thoughts and arguments presented here have been discussed in my previous publications [7, 8].

2 Condensed matter theory

Condensed matter theory deals with phenomena such as superconductivity, magnetism, the quantum Hall effect, the metal–insulator transition, Anderson localization, and many more. Textbooks and research papers on condensed matter theory are full of quantum mechanical equations that capture the important features of these phenomena. However, these quantum mechanical equations are never the ‘Theory of Everything’ (1), but effective equations for only some of the degrees of freedom of the system. When setting up these equations, empirical knowledge about the phenomena to be described and a basic understanding of these phenomena in terms of a model are essential. This is beautifully summarized by Leggett in an article that he wrote on the occasion of the 90th birthday of Karl Popper [27]:

No significant advance in the theory of matter in bulk has ever come about through derivation from microscopic principles. (...) I would confidently argue further that it is in principle and forever impossible to carry out such a derivation. (...) The so-called derivations of the results of solid state physics from microscopic principles alone are almost all bogus, if ‘derivation’ is meant to have anything like its usual sense. Consider as elementary a principle as Ohm’s law. As far as I know, no-one has ever come even remotely within reach of deriving Ohm’s law from microscopic principles without a whole host of auxiliary assumptions (‘physical approximations’), which one almost certainly would not have thought of making unless one knew in advance the result one wanted to get, (and some of which may be regarded as essentially begging the question). This situation is fairly typical: once you have reason to believe that a certain kind of model or theory will actually work at the macroscopic or intermediate level, then it is sometimes possible to show that you can ‘derive’ it from microscopic theory, in the sense that you may be able to find the auxiliary assumptions or approximations you have to make to lead to the result you want. But you can practically never justify these auxiliary assumptions, and the whole process is highly dangerous anyway: very often you find that what you thought you had ‘proved’ comes unstuck experimentally (for instance, you ‘prove’ Ohm’s law quite generally only to discover that superconductors don’t obey it) and when you go back to your proof you discover as often as not that you had implicitly slipped in an assumption that begs the whole question. (...) Incidentally, as a psychological fact, it does occasionally happen that one is led to a new model by a microscopic calculation.
But in that case one certainly does not believe the model because of the calculation: on the contrary, in my experience at least one disbelieves or distrusts the calculation unless and until one has a flash of insight and sees the result in terms of a model. I claim then that the important advances in macroscopic physics come essentially in the construction of models at an intermediate or macroscopic level, and that these are logically (and psychologically) independent of microscopic physics. Examples of the kind of models I have in mind which may be familiar to some readers include the Debye model of a crystalline solid, the idea of a quasiparticle, the Ising or Heisenberg picture of a magnetic material, the two-fluid model of liquid helium, London’s approach to superconductivity .... In some cases these models may be plausibly represented as ‘based on’ microscopic physics, in the sense that they can be described as making assumptions about microscopic entities (e.g. ‘the atoms are arranged in a regular lattice’), but in other cases (such as the two-fluid model) they are independent even in this sense. What all have in common is that they can serve as some kind of concrete picture, or metaphor, which can guide our thinking about the subject in question. And they guide it in their own right, and not because they are a sort of crude shorthand for some underlying mathematics derived from ‘basic principles.’

In the following, I will highlight these characteristics of condensed matter theory by describing several widely used methods.

2.1 Born–Oppenheimer approximation

The Born–Oppenheimer approximation is the basis of quantum chemistry and solid state physics. It separates the quantum mechanical equation of the electrons from that of the ions.

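The following derivation closely follows the textbook ‘Quantum Mechanics’ by Schwabl [38]. The Born–Oppenheimer approximation starts from the Hamilton operator for all electrons and ions:

$$ \hat{H} = T_e + T_I + V_{ee}(\mathbf {x}) + V_{eI}(\mathbf {x},\mathbf {X}) + V_{II}(\mathbf {X}), $$

with the kinetic energy

$$ T_e = -\sum \limits _{i=1}^{n}\frac{\hbar ^2}{2m}\nabla _{\mathbf {x}_i}^2, \qquad T_I = -\sum \limits _{j=1}^{N}\frac{\hbar ^2}{2M}\nabla _{\mathbf {X}_j}^2 $$

of electrons and ions, respectively, and with the three contributions \(V_{ee}\), \(V_{eI}\), and \(V_{II}\) to the electrostatic energy between electrons and electrons, electrons and ions, and ions and ions. We focus on the time-independent Schrödinger equation

$$ \hat{H}\,\varPsi (\mathbf {x},\mathbf {X}) = E\,\varPsi (\mathbf {x},\mathbf {X}), $$

as this method is usually used to find the ground state. We make the ansatz

$$ \varPsi (\mathbf {x},\mathbf {X}) = \psi (\mathbf {x}|\mathbf {X})\,\varPhi (\mathbf {X}), $$

which is a product of a wave function \(\varPhi (\mathbf {X})\) for the ions and a wave function \(\psi (\mathbf {x}|\mathbf {X})\) of the electrons (for given ion positions). The vector \(\mathbf {X}\) denotes the positions \((\mathbf {X}_1, \dots , \mathbf {X}_N)\) of the N ions, and the vector \(\mathbf {x}\) the positions \((\mathbf {x}_1, \ldots , \mathbf {x}_n)\) of the n electrons. The wave function of the electrons for given positions \(\mathbf {X}\) of the ions is determined from the reduced Schrödinger equation

$$ \left[ T_e + V_{ee}(\mathbf {x}) + V_{eI}(\mathbf {x},\mathbf {X}) + V_{II}(\mathbf {X})\right] \psi (\mathbf {x}|\mathbf {X}) = \epsilon (\mathbf {X})\,\psi (\mathbf {x}|\mathbf {X}). $$

The energy eigenvalue has the contributions \(\epsilon (\mathbf {X}) = V_{II}(\mathbf {X}) + E^{el}(\mathbf {X})\), which are the interaction energy of the ions and the energy eigenvalue of the electrons in the potential of the ions. To obtain the ion wave function \(\varPhi (\mathbf {X})\), the ansatz is inserted into the full Schrödinger equation and the reduced Schrödinger equation is used, giving

$$ \psi (\mathbf {x}|\mathbf {X})\left[ T_I + \epsilon (\mathbf {X})\right] \varPhi (\mathbf {X}) - \sum \limits _{j}\frac{\hbar ^2}{M}\nabla _{\mathbf {X}_j}\psi (\mathbf {x}|\mathbf {X})\cdot \nabla _{\mathbf {X}_j}\varPhi (\mathbf {X}) - \sum \limits _{j}\frac{\hbar ^2}{2M}\varPhi (\mathbf {X})\,\nabla _{\mathbf {X}_j}^2\psi (\mathbf {x}|\mathbf {X}) = E\,\psi (\mathbf {x}|\mathbf {X})\,\varPhi (\mathbf {X}). $$

Multiplying by \(\psi ^*(\mathbf {x}|\mathbf {X})\), integrating over \(\mathbf {x}\), and neglecting the two terms that contain derivatives of \(\psi \) with respect to \(\mathbf {X}\) gives the Born–Oppenheimer equation

$$ \left[ T_I + \epsilon (\mathbf {X})\right] \varPhi (\mathbf {X}) = E\,\varPhi (\mathbf {X}). $$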

Here, the electronic contribution \(E^{el}(\mathbf {X})\) to \(\epsilon (\mathbf {X})\) is responsible for an effective attractive interaction between the ions. The equilibrium positions of the ions are obtained by minimizing \(\epsilon (\mathbf {X})\). When these minima are sufficiently pronounced, the ions are well localized. The second derivatives of \(\epsilon (\mathbf {X})\) at the positions of the minima determine the oscillation frequencies of the ions and yield the vibrational spectra of molecules or the phonon spectrum of crystals. The two neglected terms can be argued to be smaller than the leading terms by a factor m / M, with M being the mass of the ions and m the mass of the electrons. The intuitive justification given for this procedure is that the electrons move much faster than the ions and are thus at all times in equilibrium with the current positions of the ions. This is also called the adiabatic approximation. Philosophers of chemistry point out that the Born–Oppenheimer approximation is in fact a mixture of classical and quantum mechanics [5, 29, 31]. By making a product ansatz, the entanglement between electrons and ions is ignored. By presuming localized ions, spatial superpositions of different ion positions are ruled out. This is the basis for molecules assuming specific shapes that break the symmetry of the underlying full Schrödinger equation. Symmetry breaking is similar to the measurement process, since in both cases only one of several possible options is realized. In fact, it is well known that the ground-state wave function cannot break a symmetry of the Schrödinger equation if the degree of freedom that shows this symmetry occurs explicitly in the Hamilton operator.
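The procedure can be made concrete with a deliberately small model. The Python/NumPy sketch below is a hypothetical one-dimensional analogue of the H\(_2^+\) ion in atomic units, with a soft-Coulomb electron–ion attraction \(-1/\sqrt{(x\mp R/2)^2+1}\) and a bare repulsion 1/R between the ions (both are assumptions of the toy model). For each clamped ion separation R, it solves the reduced electronic problem on a grid, forms \(\epsilon (R) = V_{II}(R) + E^{el}(R)\), locates the minimum, and converts the curvature there into a harmonic vibrational frequency using the reduced mass M/2.

```python
import numpy as np

# Hypothetical 1D analogue of H2+ in atomic units (hbar = m_e = e = 1)
x = np.linspace(-20.0, 20.0, 401)   # electron grid
dx = x[1] - x[0]
M_ION = 1836.15                     # proton mass in units of the electron mass

def electronic_energy(R):
    """E^el(R): lowest eigenvalue of the electronic Hamiltonian for ions clamped at +-R/2."""
    v_ei = -1.0 / np.sqrt((x - R / 2) ** 2 + 1.0) - 1.0 / np.sqrt((x + R / 2) ** 2 + 1.0)
    diag = 1.0 / dx**2 + v_ei                  # finite-difference -1/2 d^2/dx^2 plus V_eI
    off = np.full(len(x) - 1, -0.5 / dx**2)
    h = np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)
    return np.linalg.eigvalsh(h)[0]

def bo_surface(R):
    """epsilon(R) = V_II(R) + E^el(R), the effective potential felt by the ions."""
    return 1.0 / R + electronic_energy(R)

R_grid = np.linspace(1.0, 6.0, 51)
eps = np.array([bo_surface(R) for R in R_grid])
i = int(np.argmin(eps))             # equilibrium separation = minimum of epsilon
R_eq = R_grid[i]
dR = R_grid[1] - R_grid[0]
k = (eps[i + 1] - 2.0 * eps[i] + eps[i - 1]) / dR**2  # second derivative at the minimum
omega = np.sqrt(k / (M_ION / 2.0))                    # harmonic frequency, reduced mass M/2
print(f"R_eq ~ {R_eq:.2f} bohr, omega ~ {omega:.4f} hartree")
```

Note that the classical ingredient enters twice: R is a parameter rather than a quantum variable, and the "frequency" comes from treating \(\epsilon (R)\) as a classical potential well.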

To summarize, the Born–Oppenheimer approximation violates the linear superposition principle of the Schrödinger equation. It mixes classical with quantum features to obtain molecular configurations and crystal structures. Most subfields of condensed matter theory take such structures for granted, for instance when they deal with the properties of electrons in crystals.

2.2 Nonlinear wave equations

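The linearity of the Schrödinger equation is essential for obtaining superpositions and entanglement. Despite the importance of this feature, condensed matter physicists use various equations that are nonlinear in the wave function. The best-known examples are the Hartree and Hartree–Fock equations. Both are used to approximate the ground state of a many-electron system by a variational calculation that expresses the many-particle wave function as a product of one-particle wave functions, which is additionally antisymmetrized in the Hartree–Fock approach. In the Hartree approach, the ansatz for the spatial part of the wave function is, therefore,

$$ \psi (\mathbf {r}_1, \ldots , \mathbf {r}_N) = \prod \limits _{i=1}^{N}\varphi _i(\mathbf {r}_i). $$

Minimizing the expectation value of the Hamilton operator with respect to the one-particle wave functions, with the constraint that all wave functions be normalized, gives

$$ \left[ -\frac{\hbar ^2}{2m}\nabla ^2 + V_{\text {ext}}(\mathbf {r}) + \sum \limits _{j\ne i}\int d^3r'\,|\varphi _j(\mathbf {r}')|^2\,V(\mathbf {r}-\mathbf {r}')\right] \varphi _i(\mathbf {r}) = \epsilon _i\,\varphi _i(\mathbf {r}), \tag{10}$$

where \(V_{\text {ext}}(\mathbf {r})\) is the potential of the nuclei and \(V(\mathbf {r}-\mathbf {r}')\) the interaction between the electrons.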

This is a nonlinear equation in the one-particle wave functions that is to be solved self-consistently with the condition that the states of the different particles are orthogonal to each other. These equations have the form of mean-field equations, where each particle is subject to a classical electrostatic potential that is caused by the charge density of the other particles. Just as for the Born–Oppenheimer approximation, we see here that elements from classical physics enter the quantum mechanical calculation. An additional classical feature was already put into the system at the beginning of the calculation: the product ansatz rules out entanglement.
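The self-consistency requirement is easiest to see in code: the operator acting on \(\varphi \) depends on \(\varphi \) itself, so one iterates until input and output agree. The Python/NumPy sketch below assumes a hypothetical one-dimensional system of two electrons with opposite spins sharing one spatial orbital (so the orthogonality is carried by the spin part) in a harmonic trap, interacting via a soft-Coulomb potential, in atomic units; the density-mixing factor 0.5 is an arbitrary choice for stability.

```python
import numpy as np

# Hypothetical 1D model in atomic units: two electrons of opposite spin share one
# spatial orbital phi in a harmonic trap and repel via a soft-Coulomb interaction.
x = np.linspace(-10.0, 10.0, 201)
dx = x[1] - x[0]
v_ext = 0.5 * x**2
v_int = 1.0 / np.sqrt((x[:, None] - x[None, :]) ** 2 + 1.0)   # V(x - x')

n = len(x)
kinetic = (np.diag(np.full(n, 1.0 / dx**2))                     # -1/2 d^2/dx^2
           + np.diag(np.full(n - 1, -0.5 / dx**2), 1)
           + np.diag(np.full(n - 1, -0.5 / dx**2), -1))

def lowest_orbital(v_eff):
    """Lowest eigenpair of -1/2 d^2/dx^2 + v_eff; phi normalized so that sum |phi|^2 dx = 1."""
    energies, vecs = np.linalg.eigh(kinetic + np.diag(v_eff))
    return energies[0], vecs[:, 0] / np.sqrt(dx)

eps, phi = lowest_orbital(v_ext)          # start from the non-interacting orbital
density = phi**2
converged = False
for iteration in range(200):
    v_hartree = v_int @ density * dx      # classical mean field of the other electron
    eps_new, phi = lowest_orbital(v_ext + v_hartree)
    density = 0.5 * density + 0.5 * phi**2   # simple linear mixing
    converged = abs(eps_new - eps) < 1e-10
    eps = eps_new
    if converged:
        break
print(f"Hartree eigenvalue {eps:.6f} after {iteration + 1} iterations (non-interacting: ~0.5)")
```

The loop makes the nonlinearity explicit: the potential in each step is built from \(|\varphi |^2\) of the previous step, exactly the "classical electrostatic potential of the other particles" described above.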

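In the Hartree–Fock method, Eq. (10) acquires an additional exchange term that comes from the antisymmetrization. A similar method is also used for calculating the ground state of interacting bosons, giving the Gross–Pitaevskii equation

$$ \left[ -\frac{\hbar ^2}{2m}\nabla ^2 + V_{\text {ext}}(\mathbf {r}) + g\,|\psi (\mathbf {r})|^2\right] \psi (\mathbf {r}) = \mu \,\psi (\mathbf {r}), $$

where \(V_{\text {ext}}(\mathbf {r})\) is the external (trap) potential and \(\mu \) the chemical potential.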

Here, the wave function \(\psi ({\mathbf {r}})\) is normalized such that \(\langle \psi |\psi \rangle = N\), with N being the particle number. Since bosons all go into the same one-particle ground state, only one state occurs in this equation. Since the atoms of a Bose gas have a short-range interaction, the potential has been approximated as \(V({\mathbf {r}}-{\mathbf {r}}') = g \delta ({\mathbf {r}}-{\mathbf {r}}')\). Here arises another remarkable feature: the interpretation of the wave function changes. The macroscopic wave function of the ground state of a bosonic system is not interpreted as a probability amplitude but as a partially classical object. In particular, the states of systems that contain N and \(N+1\) particles, respectively, are not assumed to be orthogonal to each other (as required by the quantum mechanical Fock-space formalism) but to be parallel to each other and to differ only by a factor \(\sqrt{(N+1)/N}\).
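The following Python/NumPy sketch (a hypothetical one-dimensional trapped Bose gas in oscillator units, with an assumed coupling g = 0.01) finds the ground state of the Gross–Pitaevskii equation by imaginary-time relaxation, i.e., repeated steps \(\psi \rightarrow \psi - \Delta \tau \,\hat{H}_{GP}\psi \) followed by renormalization to \(\langle \psi |\psi \rangle = N\). Comparing the solutions for N = 100 and N = 101 shows what the mean-field picture takes for granted: the two macroscopic wave functions are essentially parallel and differ mainly by their norm.

```python
import numpy as np

# Hypothetical 1D Gross-Pitaevskii model in oscillator units (hbar = m = omega = 1)
x = np.linspace(-8.0, 8.0, 161)
dx = x[1] - x[0]
v_ext = 0.5 * x**2

def apply_h(psi, g):
    """Apply -1/2 psi'' + (V_ext + g |psi|^2) psi, with psi ~ 0 at the box edges."""
    lap = np.zeros_like(psi)
    lap[1:-1] = (psi[2:] - 2.0 * psi[1:-1] + psi[:-2]) / dx**2
    return -0.5 * lap + (v_ext + g * psi**2) * psi

def gp_ground_state(n_particles, g, dtau=0.002, steps=10000):
    """Imaginary-time relaxation, rescaling to <psi|psi> = N after every step."""
    psi = np.exp(-x**2 / 2.0)
    for _ in range(steps):
        psi = psi - dtau * apply_h(psi, g)
        psi *= np.sqrt(n_particles / (np.sum(psi**2) * dx))
    mu = np.sum(psi * apply_h(psi, g)) * dx / n_particles   # chemical potential
    return psi, mu

g = 0.01
psi_n, mu_n = gp_ground_state(100, g)
psi_n1, mu_n1 = gp_ground_state(101, g)
# Overlap of the normalized shapes; close to 1 means psi_{N+1} ~ sqrt((N+1)/N) psi_N
overlap = np.sum(psi_n * psi_n1) * dx / np.sqrt(100 * 101)
print(f"mu(100) = {mu_n:.4f}, mu(101) = {mu_n1:.4f}, shape overlap = {overlap:.8f}")
```

In a Fock-space description, the N- and (N+1)-particle states would instead be exactly orthogonal; the near-unit overlap here is a property of the mean-field wave function, not of the many-particle states.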

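Similar nonlinear and classical features occur in density functional theory, which calculates the ground-state charge density of the electrons of a molecule or crystal. In the Kohn–Sham approach [24], the effective one-particle Schrödinger equation to be solved takes the form

$$ \left[ -\frac{\hbar ^2}{2m}\nabla ^2 + V_{\text {eff}}(\mathbf {r})\right] \varphi _i(\mathbf {r}) = \epsilon _i\,\varphi _i(\mathbf {r}), \qquad n(\mathbf {r}) = \sum \limits _{i=1}^{N}|\varphi _i(\mathbf {r})|^2. $$

The effective potential

$$ V_{\text {eff}}(\mathbf {r}) = V_{\text {ext}}(\mathbf {r}) + \frac{e^2}{4\pi \varepsilon _0}\int d^3r'\,\frac{n(\mathbf {r}')}{|\mathbf {r}-\mathbf {r}'|} + V_{\text {xc}}[n](\mathbf {r}) $$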

includes the external potential that comes from the nuclei, the long-range electrostatic repulsion of the electrons in the form of a Hartree term, and a short-range exchange-correlation potential that captures all effects neglected in the other terms. This means that entanglement and antisymmetry of electrons are only taken into account on short length scales, while the long-range part of the interaction is treated as classical. Even though the one-particle wave functions are interpreted only as auxiliary quantities for calculating the ground-state charge density and not as the real states of the electrons, this does not change the fact that density functional calculations successfully use nonlinearities and classical ingredients. The theory considers linear superposition and entanglement only over short distances.

2.3 Molecular dynamics simulations

Molecular dynamics (MD) simulations are successfully used to characterize the structure and dynamics of systems that consist of many atoms at a finite temperature [28, 40]. They include quantum mechanical features only in a very limited way, if at all. In purely classical simulations, molecules are represented as a collection of point masses and charges that obey Newton’s laws. When the formation and breaking of bonds, the polarization of atoms or molecules, or excited states are to be taken into account, the quantum mechanical properties of the electrons must be considered, employing ab initio MD simulations. For given positions of the nuclei, the electronic structure of atoms and molecules is calculated using density functional theory. The motion of the nuclei is then calculated classically based on the forces resulting from the electronic structure, and the electronic structure in turn is recalculated for the changed positions of the nuclei. When quantum mechanical properties of nuclei become important, for instance in proton transfer processes that involve tunneling, the Feynman path integral formalism of statistical mechanics is used to describe the nuclei. Again, quantum effects are only included on short length scales, and the state of N particles is calculated as a product, as if the particles were distinguishable and not entangled with each other.
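A purely classical MD run is little more than Newton's equations plus a good integrator. The following Python/NumPy sketch is an assumed minimal example, not a production force field: four atoms in two dimensions interact via a Lennard-Jones pair potential in reduced units (\(\varepsilon = \sigma = m = 1\)) and are propagated with the velocity Verlet algorithm; the near-conservation of total energy is the usual sanity check.

```python
import numpy as np

def lj_forces(pos):
    """Pairwise Lennard-Jones forces and potential energy, V(r) = 4 (r^-12 - r^-6)."""
    forces = np.zeros_like(pos)
    energy = 0.0
    n = len(pos)
    for i in range(n):
        for j in range(i + 1, n):
            d = pos[i] - pos[j]
            r2 = d @ d
            inv6 = 1.0 / r2**3
            energy += 4.0 * (inv6**2 - inv6)
            f = 24.0 * (2.0 * inv6**2 - inv6) / r2 * d   # force on atom i from atom j
            forces[i] += f
            forces[j] -= f
    return forces, energy

rng = np.random.default_rng(0)
a = 2.0 ** (1.0 / 6.0)                        # distance of the pair-potential minimum
pos = a * np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
vel = rng.normal(scale=0.1, size=pos.shape)   # small random velocities, unit masses
dt, steps = 0.002, 2000

forces, pot = lj_forces(pos)
e_start = pot + 0.5 * np.sum(vel**2)
for _ in range(steps):                        # velocity Verlet integration of Newton's laws
    vel += 0.5 * dt * forces
    pos += dt * vel
    forces, pot = lj_forces(pos)
    vel += 0.5 * dt * forces
e_end = pot + 0.5 * np.sum(vel**2)
print(f"relative energy drift: {abs(e_end - e_start) / abs(e_start):.2e}")
```

Each atom is a point with a definite position and velocity at every step; nothing in the state could represent a superposition or entanglement between atoms.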

In plasma physics, and when describing electrons in a conductor, another version of MD is used, which is called wave packet molecular dynamics [18]. This type of simulation assumes that the particles are wave packets at all times. These wave packets are modeled by their center-of-mass coordinate and their width, and they are assumed to have a Gaussian shape. The computer simulation, therefore, does not solve the Schrödinger equation, but a classical equation in which forces and velocities are obtained as quantum mechanical averages, which is justified by the Ehrenfest theorem.
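For a single free Gaussian packet, the width obeys the Newton-like equation \(\ddot{\sigma } = \hbar ^2/(4m^2\sigma ^3)\), whose solution is the familiar spreading law \(\sigma (t) = \sigma _0\sqrt{1 + (\hbar t/2m\sigma _0^2)^2}\). The Python sketch below assumes a free particle with \(\hbar = m = 1\) and \(\sigma \) the standard deviation of \(|\psi |^2\) (actual wave packet MD codes add interactions and further variational parameters). It integrates the width like a classical coordinate with velocity Verlet and compares the result with the exact law, which also illustrates the dispersion of localized wave packets noted in the introduction.

```python
import math

# Free Gaussian wave packet (hbar = m = 1, assumed for illustration). Its width sigma,
# the standard deviation of |psi|^2, is treated like a classical coordinate:
#     d^2 sigma / dt^2 = hbar^2 / (4 m^2 sigma^3)
HBAR, MASS, SIGMA0 = 1.0, 1.0, 0.5

def width_acceleration(sigma: float) -> float:
    return HBAR**2 / (4.0 * MASS**2 * sigma**3)

dt, steps = 1e-3, 2000
sigma, sigma_dot = SIGMA0, 0.0        # packet starts at its minimum width
for _ in range(steps):                # velocity Verlet, as for any MD coordinate
    sigma_dot += 0.5 * dt * width_acceleration(sigma)
    sigma += dt * sigma_dot
    sigma_dot += 0.5 * dt * width_acceleration(sigma)

t = steps * dt
sigma_exact = SIGMA0 * math.sqrt(1.0 + (HBAR * t / (2.0 * MASS * SIGMA0**2)) ** 2)
print(f"t = {t:.1f}: integrated sigma = {sigma:.6f}, exact sigma = {sigma_exact:.6f}")
```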

In all these different methods for performing MD simulations, the atoms are localized: they are points for classical MD simulations, they have the extension of the electronic shell for ab initio simulations, and the extension of the thermal wavelength for path integral ab initio simulations. In none of these approaches are the atoms or molecules entangled with each other, even though the time evolution of the system according to the full Schrödinger equation for all particles would yield such an entanglement. The success of MD calculations shows that they are adequate to capture the phenomena observed in these systems.
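To put numbers on the thermal wavelength \(\lambda _{\text {th}} = h/\sqrt{2\pi m k_B T}\), the short Python sketch below evaluates it at room temperature (T = 300 K, an assumed example value) for a proton, the lightest nucleus, and for an electron, using CODATA constants.

```python
import math

# CODATA values in SI units
H_PLANCK = 6.62607015e-34     # J s
K_B = 1.380649e-23            # J/K
M_PROTON = 1.67262192e-27     # kg
M_ELECTRON = 9.1093837e-31    # kg

def thermal_wavelength(mass_kg: float, temperature_k: float) -> float:
    """Thermal de Broglie wavelength lambda = h / sqrt(2 pi m k_B T)."""
    return H_PLANCK / math.sqrt(2.0 * math.pi * mass_kg * K_B * temperature_k)

T = 300.0
lam_p = thermal_wavelength(M_PROTON, T)
lam_e = thermal_wavelength(M_ELECTRON, T)
print(f"proton:   {lam_p * 1e10:.2f} angstrom")
print(f"electron: {lam_e * 1e10:.1f} angstrom")
```

The proton value, about 1 Å, is comparable to interatomic distances, which is why path integral treatments matter for light nuclei; for a nucleus of mass M, \(\lambda _{\text {th}}\) is smaller by the factor \(\sqrt{M/m_p}\).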

2.4 Linear response theory

Linear response theory is conceptually very interesting, as it breaks time reversal invariance and relies on the concept of causality in its old-fashioned sense: first the cause, then the effect. It is an important tool for connecting theory with experiments, as it is employed to calculate the response of a system to an applied field F, such as an electric or magnetic field or a pressure. If \({\hat{B}}\) is the observable to which the field couples, i.e., the perturbation is \(-\hat{B}F(t)\), and \({\hat{A}}\) the observable that is evaluated, the response of the expectation value of this observable to the field is obtained in the Heisenberg picture as [36]

$$ \langle \hat{A}\rangle (t) - \langle \hat{A}\rangle _0 = \int _{-\infty }^{\infty } dt'\,\chi _{AB}(t-t')\,F(t'), $$

with the susceptibility

$$ \chi _{AB}(t-t') = \frac{i}{\hbar }\,\theta (t-t')\,\big \langle [\hat{A}(t),\hat{B}(t')]\big \rangle _0. $$

Here, the expectation value \(\langle \cdots \rangle _0\) is evaluated in thermodynamic equilibrium using the canonical or grand canonical ensemble. The step function \(\theta (t-t')\) implements causality such that the effect of the field at time \(t'\) is only felt at times \(t > t'\). The derivation of these expressions presumes implicitly that the system is in thermal equilibrium when no field is applied and that it returns to this equilibrium after the field ceases to act. These assumptions are included as an additional feature, and they cannot be justified by the many-particle Schrödinger equation. They are in fact a version of the second law of thermodynamics.
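The Kubo expression can be evaluated directly for a system whose answer is known. The Python/NumPy sketch below assumes a harmonic oscillator (\(\hbar = m = \omega = 1\)) in a truncated number basis with \(\hat{A} = \hat{B} = \hat{x}\), computes \(\chi (t) = (i/\hbar )\theta (t)\langle [\hat{x}(t),\hat{x}(0)]\rangle _0\) in the canonical ensemble at \(k_BT = 1\), and compares it with the exact result \(\theta (t)\sin t\) (which for this quadratic Hamiltonian happens to be temperature independent). The step function has to be put in by hand, which is the causality assumption discussed above.

```python
import numpy as np

# Harmonic oscillator in a truncated number basis (hbar = m = omega = 1)
dim = 40
levels = np.arange(dim)
energies = levels + 0.5
a = np.diag(np.sqrt(levels[1:]), k=1)          # annihilation operator, a|n> = sqrt(n)|n-1>
x = (a + a.T) / np.sqrt(2.0)                   # position operator; here A = B = x

def susceptibility(t, k_b_t):
    """Kubo formula chi(t) = (i/hbar) theta(t) <[x(t), x(0)]>_0 in the canonical ensemble."""
    if t < 0:
        return 0.0                             # causality, imposed by hand
    weights = np.exp(-energies / k_b_t)
    rho = np.diag(weights / weights.sum())     # canonical density matrix
    phase = np.exp(-1j * energies * t)
    x_t = np.conj(phase)[:, None] * x * phase[None, :]   # Heisenberg picture x(t)
    comm = x_t @ x - x @ x_t
    return float(np.real(1j * np.trace(rho @ comm)))

times = np.linspace(-2.0, 10.0, 121)
chi = np.array([susceptibility(t, k_b_t=1.0) for t in times])
expected = np.where(times >= 0, np.sin(times), 0.0)      # exact theta(t) sin(t)
print("max deviation from theta(t) sin(t):", np.max(np.abs(chi - expected)))
```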

This leads us to the next section, which discusses in more detail how statistical physics is done and how it, too, violates the properties of the many-particle Schrödinger equation.

2.5 Interim discussion

The examples of condensed matter calculations given so far are only a small glimpse into the methods and concepts used in this field. In fact, one could write an entire book analyzing the methods and calculations used in condensed matter theory. Again and again, one can see that elements foreign to a many-particle Schrödinger equation are introduced. In fact, all three properties of the Schrödinger equation are violated in condensed matter theory: linearity, determinism, and time reversal invariance. Linear superposition and entanglement are taken into account only over short distances, as we have seen in the previous subsections. Determinism is broken wherever transition probabilities are used. This becomes most evident in calculations in (solid-state) quantum field theory and scattering theory, neither of which has been discussed explicitly so far. Transition probabilities are also implicit in statistical physics, which was mentioned in the context of linear response theory. Time reversal invariance is broken whenever response functions are used. In addition to the example mentioned above, this also happens in scattering theory, where the incoming wave knows nothing about the scattering potential, but the outgoing wave does, and in quantum field theory, where propagators are the microscopic equivalent of response functions.

In the next section, we will discuss how statistical mechanics also violates all three properties of the Schrödinger equation.

3 Statistical mechanics

3.1 Statistical independence

The textbook by Landau and Lifshitz on Statistical Physics [25] begins with the assumption that subsystems of a larger system are statistically independent. This means that the probability of a subsystem to be in a given microstate is independent of the microstate of neighboring subsystems and is determined only by the macrostate, i.e., by the state variables. This basic assumption is a necessary requirement for observables to be sum variables and for the extensivity of thermodynamic systems. The standard derivation of the Boltzmann probabilities in the canonical ensemble, as done for instance by Schwabl [37] or Kittel and Kroemer [23], relies on this statistical independence, as it makes a product ansatz for the probabilities of a subsystem and the remaining system to be in their different possible microstates.

The assumption of statistical independence is logically incompatible with entanglement, as entanglement implies that specific states of one subsystem are correlated with specific states of the other subsystem. Statistical physics is, therefore, incompatible with a unitary time evolution of a many-particle system, as such an evolution always leads to entangled states. We have already seen in the previous section that calculations in condensed matter physics often include entanglement only on short length scales. In fact, whenever a product ansatz is used in quantum mechanical calculations, statistical independence is implicitly presumed, and the entanglement that should result from past interactions is neglected. A product ansatz is often used when dealing with quantum systems and their environment, for instance in models of the measurement process [42] or in the derivation of the Lindblad equation [4].
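The contrast can be shown in a few lines of Python/NumPy (a toy illustration with assumed energy levels and \(k_BT = 1\)): for two subsystems with additive energies, the canonical probabilities factorize exactly into the product of the marginals, whereas the outcome probabilities of an entangled two-qubit state do not.

```python
import numpy as np

# Two subsystems with additive energies E = E_A + E_B (no interaction term), k_B T = 1
e_a = np.array([0.0, 1.0, 2.5])
e_b = np.array([0.0, 0.7])
boltz = np.exp(-(e_a[:, None] + e_b[None, :]))
p_joint = boltz / boltz.sum()                          # canonical probabilities P(i, j)
p_a, p_b = p_joint.sum(axis=1), p_joint.sum(axis=0)    # marginal distributions
factorizes = np.allclose(p_joint, np.outer(p_a, p_b))

# Entangled pure state (|00> + |11>)/sqrt(2): outcome probabilities in the product basis
bell = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2.0)
q_joint = (np.abs(bell) ** 2).reshape(2, 2)
q_a, q_b = q_joint.sum(axis=1), q_joint.sum(axis=0)
correlated = not np.allclose(q_joint, np.outer(q_a, q_b))
print(factorizes, correlated)   # True True: P(0,0) = 1/2, but P_A(0) P_B(0) = 1/4
```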

Such a product ansatz is successful not because it is justified by the Schrödinger equation, but because it is in agreement with the observed properties of the respective systems. It thus appears that cutting entanglement beyond a certain distance is an appropriate procedure if one wants to capture what goes on in finite-temperature systems.

3.2 Probabilities

Probabilities are a fundamental, irreducible concept in statistical mechanics. They are introduced at the beginning of the considerations and derivations performed in statistical mechanics. Often, all the equilibrium properties in statistical mechanics are derived from the basic axiom that in an isolated system in equilibrium all microstates that are compatible with the macroscopic state variables (such as energy, volume, particle number) occur with the same probability, see, e.g., [23].
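As an illustration of how canonical probabilities follow from this axiom, the Python sketch below assumes a hypothetical isolated Einstein solid: one oscillator in contact with a reservoir of 1000 oscillators, sharing 1000 energy quanta in total. Weighting all microstates equally, the probability that the single oscillator holds n quanta is proportional to the number of reservoir microstates left over, and the ratio \(P(n+1)/P(n)\) comes out nearly independent of n, i.e., it has the form of a Boltzmann factor.

```python
from math import comb

def multiplicity(n_osc: int, quanta: int) -> int:
    """Number of microstates of n_osc oscillators sharing `quanta` energy quanta."""
    return comb(quanta + n_osc - 1, quanta)

N_RESERVOIR, Q_TOTAL = 1000, 1000   # hypothetical sizes of the isolated system

# Equal a priori probabilities: P(n) is proportional to the number of reservoir
# microstates compatible with the single oscillator holding n quanta.
weights = [multiplicity(N_RESERVOIR, Q_TOTAL - n) for n in range(8)]
ratios = [weights[n + 1] / weights[n] for n in range(7)]
print([round(r, 4) for r in ratios])   # nearly constant ratio: P(n) ~ exp(-beta*n*hbar*omega)
```

The ratio equals \((Q-n)/(Q-n+N_R-1)\) exactly, so it becomes strictly constant only in the limit of an infinite reservoir; the axiom of equal probabilities is the only probabilistic input.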

The concept of probability is foreign to a deterministic theory such as the many-particle Schrödinger equation. Several authors have tried to ‘derive’ the axiom of equal probabilities from a deterministic theory [10] or to attribute it to our subjective ignorance of the precise microscopic state of the system [22, 25]. They cannot do so consistently without additional assumptions, which amount to encoding all the stochasticity of the trajectory in the initial state [8, 16]. The basic axiom then takes the following form: among all possible states that are compatible with the initial state as specified to a certain limited precision, each has the same probability of being the actual initial state. Only then can one claim that the vast majority of these initial states lead to a homogeneous distribution in phase space and, therefore, to equal probabilities of the microstates in equilibrium. This problem of introducing probabilities into a deterministic theory already exists in classical mechanics, but it becomes much worse in quantum mechanics. The reason is that the deterministic time evolution under a many-particle Schrödinger equation leads to a superposition of all possible classical outcomes (i.e., particle configurations), even when the above arguments about the limited precision of the initial state are applied and decoherence makes the density matrix diagonal with very high probability. For instance, for a gas of atoms one can make plausible arguments that the density matrix of a subsystem loses spatial correlations and, in the position representation, is nonzero only within a narrow stripe around the diagonal [30]. Such a density matrix can be interpreted as a superposition of different classical states of the gas, in each of which the atoms are largely localized.
This situation is similar to the measurement problem: decoherence may yield a diagonal density matrix, but for a single run of the time evolution it yields not just one classical outcome but all of them at once. If physics is to agree with our observation of only one outcome, this is not satisfactory.

It thus seems that the probabilities used in statistical mechanics point to limits of validity of a deterministic time evolution. In fact, the very concept of entropy suggests that the state of a finite-temperature system is specified by only a limited number of bits. In an isolated system in equilibrium, \(S=k_B \ln \varOmega \), with \(\varOmega \) being the number of different microstates, so that the state is specified by \(S/(k_B \ln 2) = \log _2 \varOmega \) bits. In contrast, a wave function requires an infinite number of bits to be fully specified, since it is a complex-valued function.
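For a sense of scale, the entropy can be converted into a number of bits, \(S/(k_B \ln 2) = \log _2 \varOmega \). The Python sketch below does this for a classical monatomic ideal gas using the Sackur–Tetrode formula \(S = N k_B[\ln (V/N\lambda _{th}^3) + 5/2]\) with \(\lambda _{th} = h/\sqrt{2\pi m k_B T}\); the choice of one mole of helium at 298.15 K and 1 bar is an illustrative assumption, and the ideal-gas treatment neglects interactions.

```python
import math

# SI / CODATA values
K_B = 1.380649e-23          # Boltzmann constant, J/K
H = 6.62607015e-34          # Planck constant, J s
N_A = 6.02214076e23         # Avogadro constant, 1/mol
AMU = 1.66053906660e-27     # atomic mass unit, kg


def thermal_wavelength(mass, T):
    return H / math.sqrt(2 * math.pi * mass * K_B * T)


def sackur_tetrode_entropy(N, V, mass, T):
    """Entropy in J/K of a classical monatomic ideal gas (Sackur-Tetrode)."""
    lam = thermal_wavelength(mass, T)
    return N * K_B * (math.log(V / (N * lam**3)) + 2.5)


# Illustrative case: one mole of helium-4 at 298.15 K and 1 bar
T = 298.15
P = 1.0e5
V = N_A * K_B * T / P        # ideal-gas volume, m^3
m_he = 4.002602 * AMU

S = sackur_tetrode_entropy(N_A, V, m_he, T)
bits_total = S / (K_B * math.log(2))   # log2 of the number of microstates
bits_per_atom = bits_total / N_A
print(f"S = {S:.1f} J/K, {bits_total:.2e} bits, {bits_per_atom:.1f} bits per atom")
```

With these inputs the entropy comes out close to 126 J/K, i.e., roughly \(1.3\times 10^{25}\) bits, or about 22 bits per atom: a large but finite amount of information.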

If states are specified only by a limited number of bits, this leads directly to a stochastic time evolution [8, 16, 19] since the initial state does not yet unequivocally specify the full future time evolution. This in turn means that there must be stochastic transitions or ‘collapses’ (which could be continuous in time) that specify which of the possible time evolutions is actually taken.

Stochasticity of the time evolution in turn leads to irreversibility. This becomes most evident from equations for the time evolution of probability distributions or ensembles, such as the Boltzmann equation (for a gas of particles that undergo elastic collisions), the Fokker–Planck equation (for a stochastic reaction system or another classical stochastic process), or the Lindblad equation. Under suitable conditions, all of them converge to a unique stationary distribution, which means that they can be associated with a Lyapunov function that never increases, such as Boltzmann's H-function or the relative entropy with respect to the stationary distribution. In particular, the initial state is forgotten after some time and can no longer be deduced from the later state.
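The decrease of such a Lyapunov function can be illustrated with a discrete-time Markov chain, used here as a simple stand-in for a master or Fokker–Planck equation (the 3-state transition matrix is hypothetical). The sketch tracks the relative entropy \(D(p_t\,\|\,\pi ) = \sum _n p_t(n) \ln [p_t(n)/\pi (n)]\) with respect to the stationary distribution \(\pi \) and propagates two different initial distributions side by side.

```python
import numpy as np

# Hypothetical 3-state Markov chain; column j holds the transition
# probabilities out of state j, so each column sums to 1.
W = np.array([[0.8, 0.1, 0.2],
              [0.1, 0.7, 0.3],
              [0.1, 0.2, 0.5]])


def stationary_distribution(W):
    """Eigenvector of W with eigenvalue 1, normalized to a probability vector."""
    evals, evecs = np.linalg.eig(W)
    k = np.argmin(np.abs(evals - 1.0))
    pi = np.real(evecs[:, k])
    return pi / pi.sum()


def relative_entropy(p, q):
    """Kullback-Leibler divergence D(p || q) in nats (q must be positive)."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))


pi = stationary_distribution(W)
p = np.array([1.0, 0.0, 0.0])   # start with certainty in state 0
q = np.array([0.0, 0.0, 1.0])   # start with certainty in state 2
lyapunov = []
for step in range(30):
    lyapunov.append(relative_entropy(p, pi))
    p = W @ p
    q = W @ q

print(np.round(lyapunov[:5], 4))  # decreases step by step
print(np.abs(p - q).max())        # the two initial conditions merge
```

The relative entropy decreases monotonically, and after a few tens of steps the two initial conditions can no longer be told apart, which is the loss of memory described above.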

We therefore see that the very concept of probabilities conflicts with all three properties of the Schrödinger equation: it limits superposition, determinism, and reversibility. Furthermore, these features of statistical physics mirror what happens in a measurement process. In their textbook on statistical mechanics [25], Landau and Lifshitz suggest that the irreversibility arising in statistical physics and that of the measurement process might in fact have the same origin. Indeed, the methods developed since then in the foundations of statistical mechanics and in quantum foundations are very similar. They are based on decoherence theory and the theory of open quantum systems, and they acknowledge the importance of heat baths with a macroscopic number of degrees of freedom. However, all these methods introduce features that are incompatible with unitary time evolution, as discussed further in the next subsection.

3.3 Maximum entropy principle

The maximum entropy principle is more general than the principle that in an isolated system in equilibrium all microstates have the same probability. It also applies to systems in contact with a heat bath or a particle reservoir. The maximum entropy principle [22] states that in equilibrium the probabilities \(p_n\) of the microstates are such that the entropy \(S=-k_B\sum _n p_n \ln p_n\) is maximized, given the relevant constraints. For a system in contact with a heat bath, this constraint is a fixed mean energy, which is imposed on the system by the temperature of the environment. Maximizing the entropy for a fixed mean energy and denoting the Lagrange multiplier that fixes the mean energy as \(1/k_BT\), one obtains the probability of the microstate n as

\[ p_n = \frac{e^{-E_n/k_BT}}{Z}, \]

with \(E_n\) being the energy of microstate n, and \(Z=\sum _n e^{-E_n/k_BT}\) the partition function. Similarly, imposing a mean energy and a mean particle number on the system leads to the grand canonical probabilities

\[ p_n = \frac{e^{-(E_n-N_n\mu )/k_BT}}{Z_{GK}}, \]

with \(\mu \) being the chemical potential, \(N_n\) the particle number of microstate n, and \(Z_{GK}= \sum _ne^{-(E_n-N_n\mu )/k_BT}\). For an isolated system, there are no constraints beyond normalization and the fixed state variables, which determine the set of accessible microstates; maximizing the entropy then makes all accessible microstates equally probable.
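The maximization can also be checked numerically. The Python sketch below (the level energies and the target mean energy are hypothetical) determines the Lagrange multiplier \(\beta = 1/k_BT\) by bisection so that the canonical distribution \(p_n = e^{-\beta E_n}/Z\) has the prescribed mean energy, and then verifies that perturbations respecting both constraints (normalization and mean energy) lower the entropy.

```python
import numpy as np

E = np.array([0.0, 1.0, 2.0])   # hypothetical level energies (arbitrary units)
U = 0.6                          # imposed mean energy (same units)


def canonical(beta):
    w = np.exp(-beta * E)
    return w / w.sum()           # p_n = exp(-beta E_n) / Z


def mean_energy(beta):
    return float(canonical(beta) @ E)


def entropy(p):
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))   # S / k_B


def solve_beta(U, lo=-50.0, hi=50.0, tol=1e-12):
    """Bisection for the Lagrange multiplier beta = 1/(k_B T).

    The mean energy decreases monotonically with beta, so the bracket
    shrinks towards the unique beta with <E> = U.
    """
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_energy(mid) > U:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


beta = solve_beta(U)
p_star = canonical(beta)

# Perturbations along d keep both constraints: sum(d) = 0 and d @ E = 0.
d = np.array([1.0, -2.0, 1.0])
trial_entropies = [entropy(p_star + eps * d) for eps in (-0.05, -0.01, 0.01, 0.05)]
print(beta, p_star, entropy(p_star), trial_entropies)
```

For three equally spaced levels, the direction \(d = (1,-2,1)\) is, up to scale, the only perturbation that preserves both constraints, so every admissible trial distribution lies on this line, and all of them have lower entropy than the canonical one.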

The principle of maximum entropy essentially means that a system in equilibrium is completely indifferent as to which microstate it is in. This principle is often interpreted as reflecting our ignorance of the precise microstate, but why should the system care about our ignorance when ‘choosing’ its microstate? If anything else unknown to us determined the state, the principle of maximum entropy would not be empirically adequate, and the results derived from it would not agree with observation. Yet equilibrium statistical mechanics, which is based on the probabilities given above, yields a wealth of results that agree with experiment, for instance the behavior of the specific heat at high and low temperatures, the temperature of Bose–Einstein condensation, the spectrum of black-body radiation, and many more.

All this means that a system in equilibrium is determined solely by the macroscopic state variables imposed on it from outside, by the classical world. This is a clear-cut example of top-down causation [11]. Notice again the similarity to the measurement process: the context (i.e., the experimental setup) determines the possible measurement outcomes and their probabilities. Of course, both are also affected by the incoming wave function, but this again has a counterpart in statistical physics: the possible energy eigenstates depend on intrinsic properties, such as the masses and interactions of the particles.

The principle of maximum entropy is in fact applied regularly in the foundations of statistical mechanics and in the theory of open quantum systems, usually without this being mentioned explicitly. Most of these ‘derivations’ use concepts from decoherence theory [17, 30, 32]. They invoke ‘typical’ environmental states, i.e., states chosen at random among all those that are compatible with our knowledge of the system, as mentioned above. This means that these environmental states are determined by nothing but the classical variables that we know of or can control. The ‘derivation’ of the second law of thermodynamics using the eigenstate thermalization hypothesis [6, 39] also employs this principle. Its starting point is the plausible hypothesis that thermodynamic observables evaluated in an eigenstate of a macroscopic system show the properties of thermal equilibrium, such as a uniform density in space. Now, if a special initial condition is chosen, such as all atoms of a gas being in one corner of a box, this initial condition can be written as a superposition of eigenstates. This superposition is special in the sense that it is associated with an atypical density distribution. But to argue that the system will evolve to a uniform density distribution, one must assume that the initial superposition is not special with respect to the future time evolution, but that it is a random or ‘typical’ one among all those that represent the initial density distribution. Only then can one expect that the superposition of eigenstates does not lead to special or ‘unlikely’ future states.

A similar analysis applies to the ‘derivations’ of the Lindblad equation for a quantum system coupled to its environment, with the total system modeled quantum mechanically. Again, the environmental states must be assumed not to be correlated in any special way but to forget the past dynamics, if the Lindblad equation is to result for the dynamics of the reduced density matrix of the quantum system.

To summarize this subsection: the principle of maximum entropy is applied in various ways in the field of foundations of statistical mechanics and of open quantum systems. Whenever ‘typical’ states are invoked, this means essentially that the system (or environment) is determined by nothing else but the factors that are already taken into account.

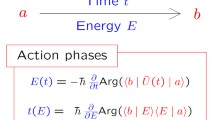

3.4 Thermal wavelength and thermal time

If we take seriously the principles of statistical independence, stochasticity, and maximum entropy on which statistical physics is based, we must conclude that unitary time evolution and linear superposition according to the Schrödinger equation are valid only under restricted conditions and are otherwise limited in length and time scale. In fact, there are many reasons why the most appropriate quantum mechanical description of particles and quasiparticles in finite-temperature systems is a wave packet of limited extension that remains a wave packet under time evolution: (i) the treatment of electrons in conductors as wave packets; (ii) the treatment of particles in MD simulations as objects localized in space; (iii) the requirement that in the classical limit of high temperature the atoms of a gas should become classical particles; (iv) the treatment of the atoms of a gas as wave packets in the argument that Bose–Einstein condensation sets in when these wave packets begin to overlap owing to decreasing temperature or increasing density; and (v) the limited number of bits, as given by the entropy, available for describing the state of a gas or other many-particle system.

However, if wave packets remain wave packets under time evolution, this time evolution cannot be determined exclusively by a Hamiltonian, since this would lead to a spreading of the wave packet in space due to dispersion and scattering events. Therefore, an ongoing process of localization must occur that is not captured by the Schrödinger equation. Such localization processes are indeed implemented in Lindblad equations for open quantum systems [4] and in stochastic collapse models [3].
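A minimal sketch of such a localization process, assuming a Lindblad equation with a single jump operator \(L = \sqrt{\gamma }\,\hat{x}\) (a standard model of decoherence in position; the rate \(\gamma \), the position grid, and the initial wave packet are hypothetical): with the Hamiltonian set to zero to isolate the dissipative term, the master equation reduces to \(\partial _t \rho (x,x') = -\tfrac{\gamma }{2}(x-x')^2 \rho (x,x')\), so coherences between distant positions are suppressed first while the position probabilities \(\rho (x,x)\) remain unchanged. The code integrates the general Lindblad form with a fourth-order Runge–Kutta step and compares the result with this closed-form solution.

```python
import numpy as np


def lindblad_rhs(rho, H, jump_ops, hbar=1.0):
    """d(rho)/dt = -(i/hbar)[H, rho] + sum_k (L rho L^+ - {L^+ L, rho}/2)."""
    drho = -1j / hbar * (H @ rho - rho @ H)
    for L in jump_ops:
        Ld = L.conj().T
        LdL = Ld @ L
        drho = drho + L @ rho @ Ld - 0.5 * (LdL @ rho + rho @ LdL)
    return drho


def rk4_step(rho, dt, H, jump_ops):
    k1 = lindblad_rhs(rho, H, jump_ops)
    k2 = lindblad_rhs(rho + 0.5 * dt * k1, H, jump_ops)
    k3 = lindblad_rhs(rho + 0.5 * dt * k2, H, jump_ops)
    k4 = lindblad_rhs(rho + dt * k3, H, jump_ops)
    return rho + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


# Hypothetical 1D position grid and a broad Gaussian wave packet
x = np.linspace(-5.0, 5.0, 41)
psi = np.exp(-x**2 / (2 * 2.0**2))
psi = psi / np.linalg.norm(psi)
rho0 = np.outer(psi, psi.conj()).astype(complex)

gamma = 0.5                                # hypothetical localization rate
jump_ops = [np.sqrt(gamma) * np.diag(x)]   # L = sqrt(gamma) * x
H = np.zeros((x.size, x.size))             # H = 0 isolates the localization term

dt, steps = 0.01, 200
rho = rho0.copy()
for _ in range(steps):
    rho = rk4_step(rho, dt, H, jump_ops)

# Closed-form solution for H = 0: coherences decay as exp(-gamma (x - x')^2 t / 2)
t = dt * steps
dx2 = (x[:, None] - x[None, :]) ** 2
rho_exact = rho0 * np.exp(-0.5 * gamma * dx2 * t)
print(np.max(np.abs(rho - rho_exact)))
```

Adding a nonzero Hamiltonian (e.g., a hopping term on the grid) to `H` would let dispersion and localization compete; that extension is not shown here.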

The width of thermal wave packets is given by the thermal wavelength. This is presumed, for instance, in the following quick derivation of the Bose–Einstein condensation temperature of an ideal Bose gas: Bose–Einstein condensation sets in when the density of the gas becomes so large that the distance between atoms is of the order of the thermal wavelength. This gives \((V/N)^{1/3} = \lambda _\mathrm{th} = h/\sqrt{2\pi m k_B T} \) and consequently \(T = (N/V)^{2/3}h^2/(2\pi m k_B)\), which, apart from a numerical factor of order 1, is identical to the condensation temperature obtained from the full calculation. The thermal wavelength emerges naturally in the calculation of the canonical partition function of an ideal gas. Its order of magnitude can also be estimated without any calculation from the equipartition theorem, by equating the mean kinetic energy with that of a particle whose de Broglie wavelength is \(\lambda _{th}\): \(\frac{3}{2} k_BT=E=h^2/(2m\lambda _{th}^2)\). The inverse cube of the thermal wavelength, \(n_Q = 1/\lambda _{th}^3\), is called the quantum concentration (see for instance the textbook by Kittel and Kroemer [23]). When the density is so high that the wave packets overlap, bosons tend to occupy the same quantum state. In the opposite case, when the density is so low that the wave packets are not in contact most of the time, the gas can be approximated as a classical ideal gas of well-localized atoms. Similar arguments can be made for fermionic gases: when the density is so large that all the wave packets touch each other, it cannot be increased further because of the Pauli principle, which leads to a Fermi temperature that, up to a constant factor, is identical to the Bose–Einstein condensation temperature. Again, in the limit of very low density, quantum effects become irrelevant, and the Fermi gas can be treated like a classical gas. For thermally equilibrated fermions as well as bosons, specifically quantum mechanical effects thus become important only when the concentration is not small compared with the quantum concentration.
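The claim that the heuristic estimate differs from the full result only by a factor of order 1 can be checked directly. For the ideal Bose gas, condensation sets in when \(n\lambda _{th}^3 = \zeta (3/2) \approx 2.612\), so the ratio of the heuristic to the exact temperature is \(\zeta (3/2)^{2/3} \approx 1.90\), independent of mass and density. The Python sketch below evaluates both expressions and, for comparison, the ratio of the density of room-temperature helium gas to the quantum concentration \(n_Q = 1/\lambda _{th}^3\); the rubidium density of \(10^{20}\,\mathrm{m}^{-3}\) and the helium conditions are illustrative assumptions.

```python
import math

H = 6.62607015e-34             # Planck constant, J s
K_B = 1.380649e-23             # Boltzmann constant, J/K
AMU = 1.66053906660e-27        # atomic mass unit, kg
ZETA_3_2 = 2.612375348685488   # Riemann zeta(3/2)


def thermal_wavelength(m, T):
    return H / math.sqrt(2 * math.pi * m * K_B * T)


def quantum_concentration(m, T):
    return thermal_wavelength(m, T) ** -3     # n_Q = 1 / lambda_th^3


def bec_temperature_estimate(n, m):
    """Heuristic: mean interparticle distance n**(-1/3) equals lambda_th."""
    return n ** (2 / 3) * H**2 / (2 * math.pi * m * K_B)


def bec_temperature_ideal(n, m):
    """Ideal Bose gas: condensation sets in when n * lambda_th**3 = zeta(3/2)."""
    return (n / ZETA_3_2) ** (2 / 3) * H**2 / (2 * math.pi * m * K_B)


# Hypothetical example: rubidium-87 atoms at a density of 1e20 m^-3
m_rb = 86.909 * AMU
n = 1e20
T_c = bec_temperature_ideal(n, m_rb)
ratio = bec_temperature_estimate(n, m_rb) / T_c
print(T_c, ratio)

# Helium-4 gas at 298.15 K and 1 bar compared with its quantum concentration
n_he = 1e5 / (K_B * 298.15)
degeneracy = n_he / quantum_concentration(4.002602 * AMU, 298.15)
print(degeneracy)
```

The rubidium example gives a condensation temperature of roughly 0.4 μK, while helium at room temperature and 1 bar sits more than five orders of magnitude below the quantum concentration, well inside the classical regime.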

For massless particles, such as photons and phonons, the thermal wavelength is \(\pi ^{2/3}\hbar c/k_BT\), with c being the speed of light or of sound, respectively. Interestingly, the density of photons and phonons in thermal equilibrium adjusts itself such that the average distance between these particles is of the same order as this thermal wavelength.
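The statement about the photon density can be made quantitative, assuming that the thermal wavelength of a massless particle is defined, as for massive particles, through the single-particle partition function per polarization, \(Z_1 = V/\lambda _{th}^3\). With \(E = pc\) this gives \(Z_1 = V (k_BT)^3/(\pi ^2 \hbar ^3 c^3)\) and hence \(\lambda _{th} = \pi ^{2/3}\hbar c/k_BT\). Combined with the Planck photon density \(n = (2\zeta (3)/\pi ^2)(k_BT/\hbar c)^3\) for two polarizations, the product \(n\lambda _{th}^3 = 2\zeta (3) \approx 2.40\) is independent of temperature. The Python sketch evaluates this at 300 K (an illustrative choice).

```python
import math

HBAR = 1.054571817e-34        # reduced Planck constant, J s
K_B = 1.380649e-23            # Boltzmann constant, J/K
C = 299792458.0               # speed of light, m/s
ZETA_3 = 1.2020569031595942   # Riemann zeta(3)


def massless_thermal_wavelength(T, c=C):
    """lambda_th defined through Z_1 = V / lambda_th**3 for E = p c."""
    return math.pi ** (2 / 3) * HBAR * c / (K_B * T)


def photon_density(T):
    """Planck photon number density (two polarizations), in m^-3."""
    return 2 * ZETA_3 / math.pi**2 * (K_B * T / (HBAR * C)) ** 3


T = 300.0
lam = massless_thermal_wavelength(T)
n = photon_density(T)
mean_spacing = n ** (-1 / 3)
print(lam, mean_spacing, n * lam**3)   # n * lam**3 = 2 * zeta(3) at any T
```

The mean photon spacing \(n^{-1/3}\) is thus about \(0.75\,\lambda _{th}\) at every temperature (about 12 μm versus 16 μm at 300 K).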

Closely associated with the thermal wavelength is a thermal time proportional to \(\hbar /k_BT\). In the nonrelativistic quantum theory of open systems, it sets the time scale beyond which the dynamics of the system shows the Markov property [41]. This time is of the order of the time that a wave packet with a kinetic energy of the order of \(k_BT\) needs to cover the distance of the thermal wavelength. In field-theoretical treatments of condensed matter physics, the inverse of the thermal time occurs as the Matsubara frequency.
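The statement that the thermal time is of the order of the time a wave packet with kinetic energy of order \(k_BT\) needs to cross one thermal wavelength can be made exact for a specific choice: taking the kinetic energy to be exactly \(k_BT\), so that \(v = \sqrt{2k_BT/m}\), one finds \(\lambda _{th}/v = \sqrt{\pi }\,\hbar /k_BT\), independent of the particle mass. The Python sketch checks this for an electron and a helium atom at 300 K (illustrative choices) and also evaluates the first bosonic Matsubara frequency \(\omega _1 = 2\pi k_BT/\hbar \).

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant, J s
H = 2 * math.pi * HBAR      # Planck constant, J s
K_B = 1.380649e-23          # Boltzmann constant, J/K
AMU = 1.66053906660e-27     # atomic mass unit, kg
M_E = 9.1093837015e-31      # electron mass, kg


def thermal_time(T):
    return HBAR / (K_B * T)


def crossing_time(m, T):
    """Time for a particle with kinetic energy k_B T to travel one thermal wavelength."""
    lam = H / math.sqrt(2 * math.pi * m * K_B * T)
    v = math.sqrt(2 * K_B * T / m)
    return lam / v


def bosonic_matsubara(n, T):
    return 2 * math.pi * n / thermal_time(T)   # omega_n = 2 pi n k_B T / hbar


T = 300.0
ratios = [crossing_time(m, T) / thermal_time(T) for m in (M_E, 4.002602 * AMU)]
print(ratios)   # both equal sqrt(pi), whatever the mass
print(thermal_time(T), bosonic_matsubara(1, T))
```

At 300 K the thermal time \(\hbar /k_BT\) is about \(2.5\times 10^{-14}\) s.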

All the foregoing considerations suggest that the thermal wavelength and thermal time of the degrees of freedom that constitute the heat bath set the length and time scales for quantum coherence and linear superposition for all particles that are part of the heat bath and all those that are in equilibrium with it and exchange energy with it. This view is to be distinguished from decoherence theory, which also gives a loss of quantum mechanical coherence beyond the thermal wavelength and thermal time. However, according to decoherence theory the quantum system and the heat bath are described by a combined Schrödinger equation, and the loss of quantum coherence in the considered quantum system is due to an entanglement with the heat bath. In contrast, the view advocated here is that there is no global wave function for the combination of quantum system and heat bath but that the dynamics of the heat bath is described by a (partially) stochastic time evolution of (quasi-)particle wave packets.

4 Conclusions

Let us come back to the finite-temperature gas mentioned in the Introduction. We have seen that molecular dynamics simulations would treat such a gas quantum mechanically only on short length scales of the order of the thermal wavelength and classically beyond. Similarly, statistical mechanics requires the inclusion of quantum superposition only over distances of the order of the thermal wavelength. This means that representing the gas atoms as wave packets that remain wave packets under time evolution is the most appropriate choice. For solids, the situation is similar: the lattice structure of a crystal presumes localized ions, and theories of electrical resistance all rely on localized conduction electrons. Very generally, statistical independence of subsystems is a starting assumption of statistical mechanics, and this again means that no entanglement is taken into account beyond short distances. The same holds for the product ansatz used in theories of quantum measurement and open quantum systems. All this (and all the other features of condensed matter theory and statistical mechanics described in the previous sections) implies that there are limits to unitary time evolution and quantum superposition in macroscopic, finite-temperature systems.

In fact, only as long as a quantum system does not interact with the rest of the world can it be described by the Schrödinger equation. When a system has finite temperature, it cannot avoid interaction with the rest of the world, as it emits thermal radiation. This is an irreversible process that makes the time evolution of the entire system non-unitary.

Theories and interpretations of quantum mechanics that try to describe the interaction with a heat bath by a unitary time evolution cannot solve the puzzles and contradictions posed by quantum mechanics. Global application of unitary time evolution always leads to superpositions of states and histories (each of which might resemble classical states and histories), and not to a unique, stochastically chosen outcome as observed in experiments on quantum measurement. This is the criticism brought forward by several authors [1, 35] against the claim that decoherence solves the measurement problem or explains the quantum-classical transition.

The most natural conclusion is, therefore, that there are limits of validity to the Schrödinger equation and to linear superposition. In fact, everybody would admit that the Schrödinger equation has limits of validity as it ignores relativistic effects, spin, and the coupling to the electrodynamic vacuum. Nevertheless, many scientists appear to think that these limitations of the Schrödinger equation do not become relevant in condensed matter physics, in particular since there are established methods for including the spin degrees of freedom and transitions that involve the emission or absorption of photons. However, this is an unfounded belief, since all three properties of the Schrödinger equation (linear superposition, determinism, time symmetry) are violated by the methods employed in statistical mechanics and condensed matter theory, as this paper has explained. Such ‘violations’ are obviously needed to describe adequately the phenomena observed in condensed matter.

This should remind us that all our theories in physics are models of reality and not an exact image of reality. They are idealizations that neglect many features of a system to explain the phenomena or properties of interest. Therefore, it should not be surprising that not everything can be described by one theory. Nevertheless, the challenge remains to figure out where the limits of the Schrödinger equation are in condensed matter systems. In the previous sections, we have pointed to the thermal wavelength and the thermal time. However, this applies only to those degrees of freedom that constitute the heat bath or that are in equilibrium with it. Superpositions, entanglement, and spatially extended quantum states can be sustained over considerable time in situations where the interaction with the thermal degrees of freedom is sufficiently weak. This applies to nuclear spins in NMR experiments and electron spins in ESR experiments, to photons that are entangled over many kilometers, or to macroscopic wave functions that constitute the superfluid or superconducting state and are protected by an energy gap from thermal excitations.

In fact, these macroscopic wave functions are not quantum states in the full sense. As mentioned above, they have both classical and quantum features. Their phase is a quantum feature, but their amplitude is proportional to the square root of the particle density, which means that states with different particle numbers are not orthogonal to each other, whereas according to the Fock-space formalism they should be. Furthermore, calculations that involve these macroscopic wave functions are often nonlinear in the wave function, as, for instance, in the Ginzburg–Landau theory of superconductivity or the Gross–Pitaevskii equation for Bose condensates.
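The nonlinearity can be seen in the Gross–Pitaevskii equation in its standard form for a dilute Bose condensate, where \(V_\mathrm{ext}\) is an external potential and \(a_s\) the s-wave scattering length (symbols introduced here only for this illustration). Inserting a sum \(\psi _1+\psi _2\) into the interaction term produces cross terms, so the sum of two solutions is in general not a solution:

```latex
i\hbar \frac{\partial \psi}{\partial t}
  = \left[ -\frac{\hbar^2}{2m}\nabla^2 + V_{\mathrm{ext}}(\mathbf{r})
           + g\,|\psi|^2 \right]\psi,
\qquad g = \frac{4\pi\hbar^2 a_s}{m},

g\,|\psi_1+\psi_2|^2(\psi_1+\psi_2)
  = g\,|\psi_1|^2\psi_1 + g\,|\psi_2|^2\psi_2
  + g\left[\,|\psi_1|^2\psi_2 + |\psi_2|^2\psi_1
  + (\psi_1^*\psi_2 + \psi_1\psi_2^*)(\psi_1+\psi_2)\right].
```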

Crystals are another good example of classical features of a ‘ground state’ of a macroscopic system. They are the ‘vacuum’ for the phonons, just as a superfluid is a vacuum for rotons and phonons, and the BCS state is a vacuum for the Bogoliubov quasiparticles (which can be viewed as unpaired electrons). Condensed matter physics thus shows us again and again that quantum systems depend on their classical context. First, the existence and properties of the considered ‘vacuum’, or medium, in which (quasi-)particles live, themselves depend on classical environmental variables such as temperature and pressure, and this medium itself acquires classical features. Second, the (quasi-)particles themselves depend on the specific medium in which they occur. In view of this strong dependence of quantum systems on the classical context, a reductionist view that takes quantum mechanics as the foundation of everything is untenable. We physicists need to learn to look at top-down influences in addition to bottom-up influences.

Biological systems are a particularly intriguing example of the interaction between classical and quantum physics. It is still an open question over which spatial and temporal scales quantum excitations occur in biological systems. Since these systems are nonequilibrium systems with a complex structure, there is no a priori reason why all these excitations should be confined to the thermal length and time scales. The emerging field of quantum biology will certainly provide important insights into the limits of quantum superposition in the future.

References

Adler, S.L.: Why decoherence has not solved the measurement problem: a response to P.W. Anderson. Stud. Hist. Philos. Sci. Part B Stud. Hist. Philos. Mod. Phys. 34(1), 135–142 (2003)

Ballentine, L.E.: The statistical interpretation of quantum mechanics. Rev. Mod. Phys. 42, 358–381 (1970)

Bassi, A., Lochan, K., Satin, S., Singh, T.P., Ulbricht, H.: Models of wave-function collapse, underlying theories, and experimental tests. Rev. Mod. Phys. 85(2), 471 (2013)

Breuer, H.-P., Petruccione, F.: The Theory of Open Quantum Systems. Oxford University Press, Oxford (2002)

Chibbaro, S., Rondoni, L., Vulpiani, A.: Reductionism, Emergence and Levels of Reality, Ch. 6. Springer, Berlin (2014)

Deutsch, J.M.: Quantum statistical mechanics in a closed system. Phys. Rev. A 43(4), 2046 (1991)

Drossel, B.: Connecting the quantum and classical worlds. Annalen der Physik 529(3), 1600256 (2017)

Drossel, B.: Ten reasons why a thermalized system cannot be described by a many-particle wave function. Stud. Hist. Philos. Sci. Part B Stud. Hist. Philos. Mod. Phys. 58, 12–21 (2017)

Drossel, B., Ellis, G.: Contextual wavefunction collapse: an integrated theory of quantum measurement. New J. Phys. 20(11), 113025 (2018)

Eisert, J., Friesdorf, M., Gogolin, C.: Quantum many-body systems out of equilibrium. Nat. Phys. 11(2), 124–130 (2015)

Ellis, G.: How Can Physics Underlie the Mind? Top-down Causation in the Human Context. Springer, Heidelberg (2016)

Ellis, G.F.R.: On the limits of quantum theory: contextuality and the quantum-classical cut. Ann. Phys. 327(7), 1890–1932 (2012)

Everett, H., III: “Relative state” formulation of quantum mechanics. Rev. Mod. Phys. 29(3), 454 (1957)

Fuchs, C.A.: QBism, the perimeter of quantum Bayesianism. arXiv preprint arXiv:1003.5209 (2010)

Gisin, N.: Collapse. What else? In: Gao, S. (ed.) Collapse of the Wave Function: Models, Ontology, Origin, and Implications, pp. 207–224. Cambridge University Press, Cambridge (2018)

Gisin, N.: Indeterminism in physics, classical chaos and Bohmian mechanics. Are real numbers really real? arXiv preprint arXiv:1803.06824 (2018)

Gogolin, C., Eisert, J.: Equilibration, thermalisation, and the emergence of statistical mechanics in closed quantum systems. Rep. Prog. Phys. 79(5), 056001 (2016)

Grabowski, P.E.: A review of wave packet molecular dynamics. In: Frontiers and Challenges in Warm Dense Matter, pp. 265–282. Springer, Berlin (2014)

Grangier, P., Auffèves, A.: What is quantum in quantum randomness? Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 376(2123), 20170322 (2018)

Griffiths, R.B.: Consistent histories and the interpretation of quantum mechanics. J. Stat. Phys. 36(1–2), 219–272 (1984)

Hollowood, T.J.: Decoherence, discord, and the quantum master equation for cosmological perturbations. Phys. Rev. D 95(10), 103521 (2017)

Jaynes, E.T.: Information theory and statistical mechanics. Phys. Rev. 106(4), 620 (1957)

Kittel, C., Kroemer, H.: Thermal Physics. Macmillan, London (1980)

Kohn, W., Sham, L.J.: Self-consistent equations including exchange and correlation effects. Phys. Rev. 140(4A), A1133 (1965)

Landau, L.D., Lifshitz, E.M.: Course of Theoretical Physics. Elsevier, Amsterdam (2013)

Laughlin, R.B., Pines, D.: The theory of everything. Proc. Natl. Acad. Sci. USA 97(1), 28–31 (2000)

Leggett, A.J.: On the nature of research in condensed-state physics. Found. Phys. 22(2), 221–233 (1992)

Marx, D., Hutter, J.: Ab initio molecular dynamics: theory and implementation. Mod. Methods Algorithms Quantum Chem. 1, 301–449 (2000)

Mátyus, E.: Pre-Born–Oppenheimer molecular structure theory. arXiv preprint arXiv:1801.05885 (2018)

Popescu, S., Short, A.J., Winter, A.: Entanglement and the foundations of statistical mechanics. Nat. Phys. 2(11), 754–758 (2006)

Primas, H.: Chemistry, Quantum Mechanics and Reductionism: Perspectives in Theoretical Chemistry, vol. 24. Springer, Heidelberg (2013)

Reimann, P., Evstigneev, M.: Quantum versus classical foundation of statistical mechanics under experimentally realistic conditions. Phys. Rev. E 88(5), 052114 (2013)

Rovelli, C.: Relational quantum mechanics. Int. J. Theor. Phys. 35(8), 1637–1678 (1996)

Rovelli, C.: Space is blue and birds fly through it. Philos. Trans. R. Soc. A 376, 20170312 (2018)

Schlosshauer, M.: Decoherence, the measurement problem, and interpretations of quantum mechanics. Rev. Mod. Phys. 76(4), 1267 (2005)

Schwabl, F.: Advanced Quantum Mechanics. Springer, Berlin, Heidelberg (2005)

Schwabl, F., Brewer, W.D.: Statistical Mechanics. Advanced Texts in Physics. Springer, Berlin (2006)

Schwabl, F.: Quantum Mechanics. Springer, Berlin, Heidelberg (2007)

Srednicki, M.: Chaos and quantum thermalization. Phys. Rev. E 50(2), 888 (1994)

Tuckerman, M.E., Martyna, G.J.: Understanding modern molecular dynamics: techniques and applications. J. Phys. Chem. B 104(2), 159–178 (2000)

Weiss, U.: Quantum Dissipative Systems, vol. 13. World Scientific, Singapore (2012)

Zurek, W.H.: Decoherence, einselection, and the quantum origins of the classical. Rev. Mod. Phys. 75(3), 715 (2003)

Cite this article

Drossel, B. What condensed matter physics and statistical physics teach us about the limits of unitary time evolution. Quantum Stud.: Math. Found. 7, 217–231 (2020). https://doi.org/10.1007/s40509-019-00208-3
