Abstract

A substantial proportion of classroom time involves exposing students to a variety of assessment tasks. As students process these tasks, they develop beliefs about the importance, utility, value, and difficulty of the tasks. This study aimed to derive a model describing the multivariate relationship between students’ perceptions of the assessment tasks and of the classroom assessment environment as a function of gender. Participants were 411 students selected, by means of a cluster sampling procedure, from the second cycle of the basic education grades at Muscat public schools in Oman. As defined by McMillan (Educational research: Fundamentals for the consumer, 2012, pp. 176–177), the research design was descriptive, with correlational and comparative aspects. Results revealed statistically significant gender differences in the perceptions of the assessment tasks and of the classroom assessment environment, favoring female students. Results also showed that, for both males and females, a learning-oriented assessment environment tended to be associated with high degrees of congruence with instruction, authenticity, student consultation, and diversity. However, the relationship between a performance-oriented assessment environment and perceptions of the assessment tasks differed between male and female classrooms. Implications for instruction and assessment, as well as recommendations for future research, are discussed.



Introduction

The Conceptual Framework of the Study

Of increasing interest to educational assessment researchers is the role of classroom assessment environment in motivating students to learn (Brookhart 1997). The present study aimed at describing the relationship between students’ perceptions of the assessment tasks and classroom assessment environment. Theoretically, the conceptual foundation of the study is based on a synthesis of previous work done in classroom assessment by Ames (1992), Brookhart (1997), McMillan and Workman (1998), and Stiggins and Chappuis (2005). As suggested by Brookhart (2004), theory relevant to studying classroom assessment comes from different fields such as theories of learning and motivation, sociology, and social learning theory. For example, based on achievement goal theory, Ames (1992) argued that the following classroom assessment practices are likely to lead to a learning-oriented assessment environment: (a) designing assessment tasks that include challenge, variety, novelty, and active involvement; (b) giving students opportunities to make choices and decisions regarding their learning; (c) providing private recognition and rewards that focus on individual student effort and improvement; (d) creating small groups of heterogeneous abilities that encourage working effectively with others on learning tasks and developing a feeling of belongingness; (e) conducting evaluation practices that are private, assess progress, improvement, and mastery, and avoid social comparisons; and (f) allowing for time on the assessment task to vary with the nature of the task and student needs.

In 1997, Brookhart offered a theoretical framework for the role of classroom assessment in student motivation and achievement. The framework integrated classroom assessment literature and social-cognitive theories of learning and motivation. In this framework, Brookhart postulated that students’ perceptions of the assessment tasks in terms of difficulty, importance, interest, utility, complexity, and value communicate certain characteristics of the classroom assessment environment to students, which in turn might influence their motivational beliefs and achievement. Building on Brookhart’s (1997) theoretical framework and other theories of learning and motivation, McMillan and Workman (1998) demonstrated that the following assessment practices are likely to encourage students to perceive their classroom assessment environment as being instrumental to learning: (a) being clear about how learning will be evaluated, (b) providing specific feedback following an assessment activity, (c) using mistakes to show students how learning can be improved, (d) using moderately difficult assessments, (e) using many assessments rather than a few major tests, (f) using authentic assessment tasks, (g) using pre-established criteria for evaluating student work, (h) providing incremental assessment feedback, and (i) providing scoring criteria before administering the assessment task.

Along similar lines, Stiggins and Chappuis (2005) described four conditions that together may foster positive perceptions of the assessment environment. These conditions state that classroom assessments should focus on clear purposes, provide accurate reflections of achievement, provide frequent descriptive feedback on work improvement rather than judgmental feedback, and involve students in the assessment process. Armed with the aforementioned conceptual framework, this study aimed at developing a model describing the relationships between students’ perceptions of the assessment tasks and classroom assessment environment as a function of gender for Omani students in the second cycle of the basic education grades. Following is a review of literature in these areas.

Perceptions of Assessment Tasks

A substantial proportion of classroom time involves exposing students to a variety of assessment tasks (Mertler 2003). Educators have long recognized that the assessment tasks used in the classroom communicate important messages to students about the value, importance, and usefulness of the tasks (Black and Wiliam 1998; Linnenbrink and Pintrich 2001, 2002; McMillan and Workman 1998). The characteristics of the assessment tasks as perceived by students are central to understanding student motivation and achievement-related outcomes (Alkharusi 2008, 2010, 2011; Dorman et al. 2006; Watering et al. 2008). Hence, students’ perceptions of the assessment tasks deserve recognition and investigation.

Research has shown that classroom assessment tasks can be evaluated from students’ perspectives along a variety of dimensions. For example, based on a sample of 658 science students in English secondary schools, Dorman and Knightley (2006) developed a 35-item inventory measuring students’ perceptions of the assessment tasks along five dimensions: congruence with planned learning, authenticity, student consultation, transparency, and diversity. Congruence with planned learning refers to the extent to which students perceive that the assessment tasks align with the subject’s learning objectives and activities. Authenticity refers to the extent to which students perceive that the assessment tasks are related to their everyday lives. Student consultation refers to the extent to which students are involved and consulted in the assessment process. Transparency refers to the extent to which students are clearly informed about the purposes and forms of the assessment. Diversity refers to the extent to which students perceive that they can complete the assessment tasks at their own speed.

Dorman et al. (2006) provided evidence that assessment tasks with low degrees of congruence with planned learning, authenticity, and transparency could have a detrimental effect on the confidence of students in successfully performing academic tasks. In their study of upper secondary Bruneian students’ perceptions of assessment tasks, Dhindsa et al. (2007) found that although students perceived that their classroom assessment tasks aligned with what they learned in the classes and had transparency, there were low levels of student consultation, authenticity, and diversity. Both Dorman et al. (2006) and Dhindsa et al. (2007) argued for more research identifying perceived characteristics of the assessment tasks supportive of a classroom environment that is conducive to increased student learning.

Classroom Assessment Environment

The assessment tasks are typically designed for students by the classroom teacher. The overall sense or meaning that students make of the various assessment tasks constitutes the classroom assessment environment (Brookhart and DeVoge 1999). Brookhart (1997) described the assessment environment as a classroom context experienced by students as the teacher establishes assessment purposes, assigns assessment tasks, sets performance criteria and standards, gives feedback, and monitors outcomes. Brookhart and her colleagues pointed out that each classroom has its own “assessment ‘character’ or environment” that is perceived by the students and springs from the teacher’s assessment practices (Brookhart 2004, p. 444; Brookhart and Bronowicz 2003).

Several researchers have studied classroom assessment environment in relation to student achievement-related outcomes. For example, Church et al. (2001) found that students’ perceptions of the assessment environment as being interesting and meaningful were positively related to adoption of mastery goals, whereas perceptions of the assessment environment as being difficult and focusing on grades rather than learning were negatively related to adoption of mastery goals and positively related to adoption of performance goals. Likewise, Wang (2004) found that after controlling for student gender, students’ perceptions of the assessment environment as being learning oriented contributed positively to their adoption of mastery goals, whereas students’ perceptions of the assessment environment as being test oriented contributed negatively to their adoption of performance goals. Similarly, Alkharusi (2009) reported that students’ perceptions of the assessment environment as being learning oriented were positively related to students’ self-efficacy and mastery orientations, whereas students’ perceptions of the assessment environment as being hard and emphasizing grades contributed negatively to students’ self-efficacy and mastery orientations.

However, the measurement of students’ perceptions of the assessment environment was not clearly specified in the previous studies. In an attempt to quantify students’ perceptions of the classroom assessment environment, Alkharusi (2011) therefore developed a scale theoretically grounded in achievement goal theory. The findings showed that students’ perceptions of the assessment environment centered on two facets: learning oriented and performance oriented. The learning-oriented assessment environment focused on assessment practices that enhance student learning and mastery of content, such as giving students a variety of meaningful assessment tasks of moderate difficulty, giving them opportunities to improve their performance, and providing them with informative assessment feedback. The performance-oriented assessment environment focused on assessment practices that give students difficult and less meaningful assessment tasks with unattainable standards and criteria, emphasize grades rather than learning, and compare students’ performances normatively. In light of the conceptual framework of the current study outlined by Ames (1992), Brookhart (1997), McMillan and Workman (1998), and Stiggins and Chappuis (2005), the theoretical framework of Dorman and Knightley’s (2006) Perceptions of Assessment Tasks Inventory (PATI), and previous research (e.g., Alkharusi 2008, 2009; Dorman et al. 2006), students are expected to perceive their assessment environment as learning oriented in classes whose assessment tasks have a high degree of congruence with planned learning, authenticity, student consultation, transparency, and diversity. Conversely, students are expected to perceive their assessment environment as performance oriented in classes whose assessment tasks have a low degree of congruence with planned learning, authenticity, student consultation, transparency, and diversity.

Gender

Previous research on students’ perceptions of the classroom assessment tasks and assessment environment has suggested that students’ gender might need to be considered. Specifically, female students tend to report more positive perceptions of their classroom environment than male students (Alkharusi 2010, 2011; Anderman and Midgley 1997; Meece et al. 2003). Student gender has also been found to moderate the relationship between assessment environment and motivation-related outcomes. For example, Wang (2004) found that performance goals were positively related to perceptions of the classroom assessment environment as both learning and test oriented for male students, but not for female students. Similarly, mastery goals were positively related to perceptions of the classroom assessment environment as learning oriented for male students, but not for female students. However, both Alkharusi (2013) and Dhindsa et al. (2007) found no gender differences in students’ perceptions of the assessment tasks. These mixed findings call for further examination of the role of student gender in the perceptions of the assessment tasks and assessment environment.

Gender stereotypes and differential gender-role socialization patterns are often used to explain gender differences in student perceptions and other achievement-related outcomes (Alkharusi 2008, 2010; Kenney-Benson et al. 2006; Lupart et al. 2004). In the context of the present study, however, Omani public schools in the second cycle of the basic education grades are segregated by gender: male and female students attend separate schools, male students are taught only by male teachers, and female students are taught only by female teachers. As noted above, the classroom assessment environment is typically created by the teacher for the students (Brookhart 1997), so any gender differences observed in this setting may reflect differences between male and female teachers’ assessment practices as well as differences between male and female students. The gender-segregated nature of public schools in Oman is therefore critical to understanding potential gender differences in students’ perceptions of the assessment tasks and assessment environment.

Although much has been written about the role of students’ perceptions of the classroom assessment tasks and assessment environment in student motivation and achievement-related outcomes (e.g., Alkharusi 2008, 2010; Brookhart 2004; Brookhart et al. 2006; Cauley and McMillan 2010; Nolen 2011; Rodriguez 2004), research investigating which perceptions of the assessment tasks are most relevant to a specific classroom assessment environment is limited. The current study aimed to develop a model of the classroom assessment environment to gain a better understanding of the relationship between students’ perceptions of the classroom assessment tasks and assessment environment. In light of the past studies reviewed above, the present study also investigated gender differences with respect to the perceptions of the classroom assessment tasks and assessment environment.

Purpose of the Study and Research Questions

As suggested above, several classroom assessment educators (e.g., Brookhart 1997, 2004; McMillan and Workman 1998) have considered what a theory of classroom assessment might look like. Such a theory should be able to inform teachers about which features of classroom assessment tasks are conducive to an assessment environment that enhances student motivation and learning. However, there is a paucity of research on the association between perceived characteristics of the assessment tasks and the classroom assessment environment. The current study therefore attempted to fill this gap by investigating the relationship between students’ perceptions of the assessment tasks and the classroom assessment environment.

Specifically, the study aimed to develop a model that describes the potentially meaningful multivariate relationship between students’ perceptions of the assessment tasks and the classroom assessment environment. The model was expected to show which perceptions of the assessment tasks are most relevant to a specific classroom assessment environment. Previous research suggests that developing such a descriptive model also requires examining gender differences in students’ perceptions of the assessment tasks and classroom assessment environment, and this study attempted to meet that need as well. Hence, the study was guided by the following research questions:

(1) Are there statistically significant gender differences with respect to students’ perceptions of the assessment tasks and classroom assessment environment?

(2) How do students’ perceptions of the assessment tasks relate to their perceptions of the classroom assessment environment?

Methods

Research Design

As defined by McMillan (2012, pp. 176–177), the research design employed in this study was descriptive, with correlational and comparative aspects. The correlational part involves studying the multivariate relationship between students’ perceptions of the assessment tasks and classroom assessment environment. The comparative part involves examining gender differences with respect to students’ perceptions of the assessment tasks and classroom assessment environment. Because the design is non-experimental, causal relationships cannot be established from the findings of the study.

Participants and Procedures

The target population of this study was students in the second cycle of the basic education grades at Muscat public schools in Oman. Because a list of all students could not be obtained from the Ministry of Education in Oman, a cluster sampling procedure was employed, using a list of all public schools in Muscat as the sampling frame. The list contained 36 male and 36 female schools, from which a random sample of 10 male and 10 female schools was selected. Then, one grade level of the second cycle of the basic education grades was randomly selected from each school, and all students in that grade were included in the study. This resulted in a sample of 585 Omani students (365 females and 220 males) being surveyed. Valid responses were obtained from 411 Omani students (259 females and 152 males), an overall response rate of about 70 %. Their ages ranged from 12 to 17 years, with an average of 15 and a standard deviation (SD) of 1.24. Permission was requested from the Ministry of Education and school principals to collect data from the students during a regularly scheduled class meeting. The students were informed that a study about their perceptions of the classroom assessment environment was being conducted. They were told that they were not obligated to participate and that, if they chose to participate, their responses would remain anonymous and confidential. They were also told that participation would not influence their grades or their relations with the teacher in any way.
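To make the two-stage cluster procedure and the response-rate arithmetic concrete, the following Python sketch draws 10 of 36 schools per gender and then one intact grade per school. The school IDs, the grade labels (assumed here to run from 5 to 10), and the random seed are hypothetical illustrations, not the study’s actual sampling frame; only the counts (36, 10, 585, 411, and the gender breakdowns) come from the text.

```python
import random

# Hypothetical sampling frame mirroring the counts in the text:
# 36 male and 36 female public schools in Muscat (IDs are invented).
FRAME = {
    "male": [f"M{i:02d}" for i in range(1, 37)],
    "female": [f"F{i:02d}" for i in range(1, 37)],
}
# Assumption: grade labels for the second cycle of basic education.
GRADES = list(range(5, 11))


def draw_cluster_sample(n_per_gender=10, seed=1):
    """Stage 1: sample schools within each gender; stage 2: one grade per school."""
    rng = random.Random(seed)
    sample = {}
    for gender, schools in FRAME.items():
        chosen = rng.sample(schools, n_per_gender)  # schools without replacement
        # Every student in the selected grade is surveyed (the grade is the cluster).
        sample[gender] = {school: rng.choice(GRADES) for school in chosen}
    return sample


def response_rate(valid, surveyed):
    """Percentage of surveyed students who returned valid responses."""
    return 100.0 * valid / surveyed


if __name__ == "__main__":
    print(draw_cluster_sample())
    print(round(response_rate(411, 585), 1))  # overall
    print(round(response_rate(259, 365), 1))  # females
    print(round(response_rate(152, 220), 1))  # males
```

The overall rate is 411/585 ≈ 70.3 %; the same arithmetic gives about 71.0 % for females (259/365) and 69.1 % for males (152/220).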

Students who wished to participate were asked to complete a self-report questionnaire, described in the Instrument section below. It contained three main sections: demographic information (gender and age), perceptions of the assessment tasks, and perceptions of the assessment environment. The questionnaire was administered by research assistants during a scheduled class meeting. Administration took about one class period and was preceded by brief instructions on how to complete the questionnaire.

Instrument

The instrument was a self-report questionnaire with three main sections: demographic information, perceptions of the assessment tasks, and perceptions of the assessment environment. The questionnaire items were subjected to a content validation process by a panel of seven experts in educational measurement and psychology from Sultan Qaboos University and the Ministry of Education. The experts were asked to judge the clarity of wording of each item, its appropriateness for use with the targeted participants, and its relevance to the construct being measured. Their feedback was used to refine the items. The internal consistency reliability of the whole questionnaire was .91, as measured by Cronbach’s α; the internal consistency reliability of each section, also measured by Cronbach’s α, is reported in the respective sections below.
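Because every reliability figure in this section is a Cronbach’s α, a minimal Python/NumPy sketch of the coefficient may help readers check values on their own data. It uses the standard formula α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₓ), where k is the number of items, σ²ᵢ the item variances, and σ²ₓ the variance of the total score, with sample variances throughout; the demonstration matrix is invented and does not come from the study.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score)),
    using sample variances (ddof=1).
    """
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = items.var(axis=0, ddof=1)        # variance of each item
    total_var = items.sum(axis=1).var(ddof=1)    # variance of the summed score
    return k / (k - 1) * (1.0 - item_vars.sum() / total_var)


if __name__ == "__main__":
    # Invented 5-point Likert responses: 6 students x 4 items.
    demo = np.array([
        [4, 5, 4, 5],
        [3, 3, 4, 3],
        [5, 5, 5, 4],
        [2, 3, 2, 2],
        [4, 4, 3, 4],
        [1, 2, 2, 1],
    ])
    print(round(cronbach_alpha(demo), 2))
```

Note that α is not bounded below by zero: items that covary negatively can yield a negative coefficient.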

With respect to factorial validity, responses to the items of the questionnaire were subjected to principal-component analyses, which are detailed in the following sections. Although some items have factor loadings as low as .30, loadings of this size are comparable to those reported for the original versions of the instruments in previous studies, as noted in the following sections. In addition, the size of the loadings might have been depressed by the homogeneity of the scores in the sample; therefore, a low cutoff (.30) was used for interpreting the factors, as suggested by Tabachnick and Fidell (2013).
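The following sketch illustrates the kind of analysis described here: principal-component loadings from the item correlation matrix, an orthogonal varimax rotation, and a .30 cutoff for interpreting loadings. It is a generic NumPy implementation written for illustration (the function names are hypothetical), not the software the study used, and it does not reproduce the study’s results.

```python
import numpy as np


def pca_loadings(data, n_components):
    """Unrotated principal-component loadings from the item correlation matrix."""
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)            # eigh returns ascending order
    order = np.argsort(eigvals)[::-1][:n_components]   # keep the largest components
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Loadings = eigenvectors scaled by the square roots of their eigenvalues.
    return eigvecs * np.sqrt(eigvals), eigvals


def varimax(loadings, max_iter=100, tol=1e-8):
    """Orthogonal varimax rotation (Kaiser's criterion, SVD-based iteration)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Gradient target of the varimax criterion (gamma = 1).
        target = rotated ** 3 - rotated @ np.diag((rotated ** 2).sum(axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt                               # nearest orthogonal matrix
        new_criterion = s.sum()
        if criterion and new_criterion < criterion * (1 + tol):
            break                                       # no meaningful improvement
        criterion = new_criterion
    return loadings @ rotation


def salient(loadings, cutoff=0.30):
    """Boolean mask of loadings at or above the interpretive cutoff."""
    return np.abs(loadings) >= cutoff
```

Because varimax is an orthogonal rotation, each item’s communality (its row sum of squared loadings) is unchanged; only the distribution of variance across components shifts.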

Demographic Information

The demographic information of the questionnaire covered gender and age.

Perceptions of Assessment Tasks

This section of the questionnaire included 35 items from Dorman and Knightley’s (2006) PATI. The items measure students’ perceptions of assessment tasks in terms of congruence with planned learning (7 items; α = .73; e.g., “I am assessed on what the teacher has taught me”), authenticity (7 items; α = .75; e.g., “My assessment tasks in this class are meaningful”), student consultation (7 items; α = .74; e.g., “I am asked about the types of assessment I would like to have in this class”), transparency (7 items; α = .85; e.g., “I am told in advance when I am being assessed”), and diversity (7 items; α = .63; e.g., “I am given a choice of assessment tasks”). Responses were obtained on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree).

Alkharusi (2013) translated the PATI from English to Arabic and tested the validity and reliability of the translated version of the PATI for use with Omani students. With regard to validity, Alkharusi (2013) reported that a principal-components factor analysis with varimax rotation on the 35 items of the Arabic version of the PATI showed that together the five factors accounted for 43.78 % of the total variance. The first factor accounted for 10.27 % of the variance (eigenvalue = 2.32) and consisted of the seven transparency items, with loadings ranging from .49 to .68. The second factor accounted for 9.80 % of the variance (eigenvalue = 2.11) and consisted of the seven authenticity items, with loadings ranging from .37 to .67. The third factor accounted for 9.26 % of the variance (eigenvalue = 2.09) and consisted of the seven diversity items, with loadings ranging from .44 to .62. The fourth factor accounted for 7.42 % of the variance (eigenvalue = 1.97) and consisted of the seven congruence with planned learning items, with loadings ranging from .30 to .69. The fifth factor accounted for 7.04 % of the variance (eigenvalue = 1.69) and consisted of the seven student consultation items, with loadings ranging from .36 to .62. With regard to reliability, Alkharusi (2013) reported that internal consistency coefficients for the measures of congruence with planned learning, authenticity, student consultation, transparency, and diversity were .71, .72, .65, .66, and .63 as indicated by Cronbach’s α, respectively.

In the current study, the Arabic version of the PATI was used. The principal-components factor analysis was also conducted on the 35 items of the Arabic version of the PATI. Both varimax and oblimin rotation methods yielded similar results. Based on the analysis, together the five factors accounted for 42.36 % of the total variance. The first factor accounted for 24.46 % of the variance (eigenvalue = 8.32) and consisted of the seven transparency items, with loadings ranging from .32 to .67. The second factor accounted for 6.22 % of the variance (eigenvalue = 2.12) and consisted of the seven diversity items, with loadings ranging from .34 to .66. The third factor accounted for 4.75 % of the variance (eigenvalue = 1.61) and consisted of the seven authenticity items, with loadings ranging from .42 to .69. The fourth factor accounted for 3.65 % of the variance (eigenvalue = 1.24) and consisted of the seven student consultation items, with loadings ranging from .42 to .60. The fifth factor accounted for 3.27 % of the variance (eigenvalue = 1.11) and consisted of the seven congruence with planned learning items, with loadings ranging from .36 to .71. The score reliabilities of the current sample seem comparable to those reported by Dorman and Knightley (2006) and Alkharusi (2013). Specifically, internal consistency coefficients in this study for the measures of congruence with planned learning, authenticity, student consultation, transparency, and diversity were .71, .72, .66, .76, and .73 as indicated by Cronbach’s α, respectively. Internal consistency reliability of the whole section of the 35 items of the PATI was .91 as measured by Cronbach’s α. Each measure was constructed by averaging its corresponding items.
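Each subscale score is the mean of its items. The sketch below assumes, purely for illustration, that the 35 PATI responses are stored in consecutive blocks of seven items per dimension; the actual item order of the instrument is not given here, so the `PATI_SUBSCALES` mapping is hypothetical.

```python
import numpy as np

# Hypothetical item-to-subscale map: assumes the 35 items are stored in
# consecutive blocks of seven, one block per PATI dimension.
PATI_SUBSCALES = {
    "congruence": range(0, 7),
    "authenticity": range(7, 14),
    "consultation": range(14, 21),
    "transparency": range(21, 28),
    "diversity": range(28, 35),
}


def score_subscales(responses, subscales=PATI_SUBSCALES):
    """Average each respondent's items within every subscale.

    Averaging (rather than summing) keeps scores on the 1-5 response metric.
    """
    responses = np.asarray(responses, dtype=float)
    return {name: responses[:, list(cols)].mean(axis=1)
            for name, cols in subscales.items()}
```

Averaging also means that subscales with different numbers of items would remain on the same 1 to 5 scale, which keeps means such as those in Table 1 directly interpretable against the response anchors.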

Assessment Environment

This section of the questionnaire included 18 items from Alkharusi’s (2011) Perceptions of the Classroom Assessment Environment Scale (PCAES). This scale was designed and administered in Arabic. The items measure students’ perceptions of the classroom assessment environment as being learning oriented (9 items; α = .82; e.g., “In this class, the teacher helps us identify the places where we need more effort in future”), and performance oriented (9 items; α = .75; e.g., “The tests and assignments in this class are difficult to students”). Responses were obtained on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). Alkharusi (2011) tested factorial validity of the PCAES by conducting a principal-components analysis with varimax rotation based on a sample of 450 Omani students from the second cycle of the basic education grades. As reported by Alkharusi (2011), together the two factors accounted for 41.90 % of the total variance. The first factor accounted for 29.19 % of the variance (eigenvalue = 4.67) and consisted of the nine items of the learning-oriented assessment environment, with loadings ranging from .43 to .76. The second factor accounted for 12.71 % of the variance (eigenvalue = 2.03) and consisted of the nine items of the performance-oriented assessment environment, with loadings ranging from .36 to .84. In addition, Alkharusi (2011) examined criterion-related validity by correlating the scores of PCAES with the academic achievement scores. Results showed that perceptions of the assessment environment as being learning oriented correlated positively with academic achievement, r(448) = .31, p < .001; whereas perceptions of the assessment environment as being performance oriented correlated negatively with academic achievement, r(448) = −.20, p < .05.

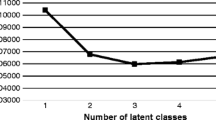

In this study, the PCAES was also administered in Arabic. Its factorial validity was examined by conducting a principal-components analysis with varimax rotation. Results showed that together the two factors accounted for 32.09 % of the total variance. The first factor accounted for 17.69 % of the variance (eigenvalue = 3.18) and consisted of the nine items of the learning-oriented assessment environment, with loadings ranging from .45 to .71. The second factor accounted for 14.40 % of the variance (eigenvalue = 2.59) and consisted of the nine items of the performance-oriented assessment environment, with loadings ranging from .36 to .70. Internal consistency coefficients for the measures of perceived learning- and performance-oriented assessment environment were .76 and .68 as indicated by Cronbach’s α, respectively. Internal consistency reliability of the whole section of the 18 items of the PCAES was .70 as measured by Cronbach’s α. Each measure was constructed by averaging its corresponding items.
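Because the analysis works on the item correlation matrix, whose total variance equals the number of items, the percentage of variance attributed to an unrotated component is its eigenvalue divided by the number of items. The short check below applies this relation to the two PCAES eigenvalues reported above (18 items); the function name is illustrative.

```python
def percent_of_variance(eigenvalue, n_items):
    """Share of total standardized variance carried by one principal component.

    With a correlation matrix the total variance equals the number of items,
    so an unrotated component with eigenvalue lambda accounts for lambda / p.
    """
    return 100.0 * eigenvalue / n_items


if __name__ == "__main__":
    for ev in (3.18, 2.59):  # eigenvalues reported for the 18 PCAES items
        print(round(percent_of_variance(ev, 18), 2))
```

The results, 17.67 % and 14.39 %, agree with the reported 17.69 % and 14.40 % to within the rounding of the eigenvalues.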

Data Analysis

In relation to the aforementioned purposes of the study, the following statistical procedures were employed:

(1) Multivariate analyses of variance (MANOVAs) were conducted to examine gender differences with respect to students’ perceptions of the assessment tasks and classroom assessment environment.

(2) Pearson product-moment correlation coefficients were computed to examine bivariate relationships between perceptions of the assessment tasks and classroom assessment environment.

(3) A canonical correlation analysis was conducted to explore multivariate relationships between perceptions of the assessment tasks and classroom assessment environment.

Results

Multivariate Analyses of Variance

A MANOVA was conducted to assess the extent to which male and female students differ in their perceptions of the assessment tasks in terms of congruence with planned learning, authenticity, student consultation, transparency, and diversity. Table 1 presents the means (M) and SDs for males and females on the perceptions of the assessment tasks. Results indicated a statistically significant multivariate effect of gender on the perceptions of the assessment tasks, F(5, 405) = 11.35, p < .001, Wilks’ Λ = .88. Gender accounted for approximately 12 % (η² = .123) of the variability in the perceptions of the assessment tasks. The univariate analyses indicated statistically significant effects of gender on congruence with planned learning, F(1, 409) = 26.13, p < .001, η² = .06; authenticity, F(1, 409) = 12.38, p < .001, η² = .03; student consultation, F(1, 409) = 7.24, p < .01, η² = .02; and transparency, F(1, 409) = 47.81, p < .001, η² = .11. As shown in Table 1, female students reported more positive perceptions of the assessment tasks than male students with respect to congruence with planned learning, authenticity, student consultation, and transparency.
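The multivariate test reported here can be sketched from first principles. For two groups, Wilks’ Λ = |E| / |E + H|, where E and H are the within-groups and between-groups sums-of-squares-and-cross-products matrices, and F = [(1 − Λ)/Λ] · (N − p − 1)/p is exact with (p, N − p − 1) degrees of freedom; with N = 411 students and p = 5 outcomes this gives the F(5, 405) reported above. The function below is an illustrative NumPy sketch, not the study’s analysis code.

```python
import numpy as np


def two_group_manova(y, groups):
    """One-way MANOVA for two groups via Wilks' lambda.

    y: (n, p) outcome matrix; groups: length-n array with exactly two labels.
    Returns Wilks' lambda, the exact F, and its degrees of freedom.
    """
    y = np.asarray(y, dtype=float)
    groups = np.asarray(groups)
    labels = np.unique(groups)
    if labels.size != 2:
        raise ValueError("this sketch handles exactly two groups")
    n, p = y.shape
    grand_mean = y.mean(axis=0)
    E = np.zeros((p, p))  # within-groups SSCP matrix
    H = np.zeros((p, p))  # between-groups SSCP matrix
    for label in labels:
        yg = y[groups == label]
        dev = yg - yg.mean(axis=0)
        E += dev.T @ dev
        diff = (yg.mean(axis=0) - grand_mean)[:, None]
        H += yg.shape[0] * diff @ diff.T
    wilks = np.linalg.det(E) / np.linalg.det(E + H)
    # With two groups, this transformation of Wilks' lambda to F is exact.
    df1, df2 = p, n - p - 1
    F = (1 - wilks) / wilks * df2 / df1
    return wilks, F, df1, df2
```

With a single outcome (p = 1) the function reduces to the ordinary one-way ANOVA F, which is a convenient sanity check.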

Another MANOVA was conducted to examine gender differences in the perceptions of the classroom assessment environment. Table 2 presents the M and SDs for males and females on the perceptions of the classroom assessment environment. Results indicated a statistically significant multivariate effect of gender on the perceptions of the classroom assessment environment, F(2, 408) = 16.90, p < .001, Wilks’ Λ = .92. Gender accounted for 7.6 % (η² = .076) of the variability in the perceptions of the classroom assessment environment. The univariate analyses indicated statistically significant effects of gender on the learning-oriented assessment environment, F(1, 409) = 12.07, p < .01, η² = .03, and on the performance-oriented assessment environment, F(1, 409) = 17.67, p < .001, η² = .04. As shown in Table 2, female students tended to perceive their classroom assessment environment as more learning oriented than male students did, whereas male students tended to perceive theirs as more performance oriented than female students did.
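The effect sizes in this subsection follow from the reported test statistics. A minimal sketch, assuming the usual definitions: with two groups, the multivariate η² equals 1 − Wilks’ Λ, and a univariate (partial) η² can be recovered from an F ratio as F·df_effect / (F·df_effect + df_error). The function names are illustrative.

```python
def multivariate_eta_squared(wilks):
    """For a one-way design with two groups, eta^2 = 1 - Wilks' lambda."""
    return 1.0 - wilks


def partial_eta_squared(F, df_effect, df_error):
    """Univariate (partial) eta squared recovered from an F ratio."""
    return F * df_effect / (F * df_effect + df_error)


if __name__ == "__main__":
    print(round(partial_eta_squared(12.07, 1, 409), 3))  # learning oriented
    print(round(partial_eta_squared(17.67, 1, 409), 3))  # performance oriented
    print(round(multivariate_eta_squared(0.924), 3))
```

For example, F(1, 409) = 12.07 and 17.67 give η² ≈ .029 and .041, matching the reported .03 and .04, and Λ ≈ .924 (reported as .92) corresponds to the reported η² = .076.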

Bivariate Correlation Analyses

The MANOVAs reported above showed statistically significant gender differences with respect to the perceptions of the assessment tasks and classroom assessment environment. Therefore, the bivariate correlations between the perceptions of the assessment tasks and classroom assessment environment were computed separately for males and females. Tables 3 and 4 present these correlations for males and females, respectively. As shown in Tables 3 and 4, the pattern of bivariate correlations between perceptions of the assessment tasks and perceived assessment environment differed between males and females. In male classrooms, learning- and performance-oriented assessment environments were positively correlated with each other; in female classrooms, by contrast, there was no statistically significant relationship between the two types of assessment environment. In male classrooms, both types of assessment environment correlated positively with the perceived features of the assessment tasks, but the correlations were stronger for the learning-oriented than for the performance-oriented assessment environment. In female classrooms, the learning-oriented assessment environment had statistically significant, moderate positive correlations with the perceived features of the assessment tasks, whereas the performance-oriented assessment environment had a statistically significant weak negative correlation with congruence with planned learning and a statistically significant weak positive correlation with student consultation. These gender differences in the bivariate correlations suggested multiple patterns, or differential relationships, between the two sets of variables.
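The gender-specific analysis amounts to computing the same correlation matrix within each group. The sketch below does this with NumPy and adds the usual t statistic for testing ρ = 0, t = r·√((n − 2)/(1 − r²)) with n − 2 degrees of freedom. The function names and group labels are illustrative assumptions, not part of the study.

```python
import numpy as np


def correlations_by_group(x, y, groups):
    """Pearson correlations between every column of x and every column of y,
    computed separately within each group.

    Returns {label: (r_matrix, n)} with r_matrix of shape (x_cols, y_cols).
    """
    x, y, groups = np.asarray(x, float), np.asarray(y, float), np.asarray(groups)
    k = x.shape[1]
    out = {}
    for label in np.unique(groups):
        mask = groups == label
        # Full correlation matrix of [x | y]; keep the x-by-y block.
        full = np.corrcoef(np.hstack([x[mask], y[mask]]), rowvar=False)
        out[label] = (full[:k, k:], int(mask.sum()))
    return out


def t_for_r(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    r = np.asarray(r, float)
    return r * np.sqrt((n - 2) / (1 - r ** 2))
```

Here x would hold the five assessment-task scores and y the two assessment-environment scores, giving a 5 × 2 block of correlations per gender, which corresponds to the layout of Tables 3 and 4.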

As shown in Tables 3 and 4, the five dimensions of the assessment tasks (congruence with planned learning, authenticity, student consultation, transparency, and diversity) are intercorrelated. According to the conceptual framework of the study (Ames 1992; Brookhart 1997; McMillan and Workman 1998; Stiggins and Chappuis 2005) and the theoretical framework underlying Dorman and Knightley’s (2006) PATI, these dimensions are not expected to be independent. Theoretically, when these aspects of the assessment tasks are strongly emphasized in a classroom, students are likely to perceive their assessment environment as learning oriented (Alkharusi 2008). Conversely, when these aspects receive little emphasis, students are likely to perceive their assessment environment as performance oriented (Alkharusi 2008).

Canonical Correlation Analyses

To examine the underlying patterns of the relationships between the perceptions of the assessment tasks and the perceived assessment environment, canonical correlation analyses were conducted separately on the male and female data. The perceptions of the assessment tasks were treated as the predictor set and the perceived assessment environment as the criterion set. For the male data, the full model across all canonical variates was statistically significant, F(10, 290) = 28.52, p < .001, Wilks’ Λ = .25, indicating a relationship between the two variable sets. The analysis yielded two pairs of canonical variates with squared canonical correlations of .73 and .07, respectively. The dimension reduction analysis indicated that both pairs should be interpreted: F(10, 290) = 28.52, p < .001 for the first pair (i.e., the full model) and F(4, 146) = 2.66, p < .05 for the second pair. Table 5 presents the standardized canonical coefficients relating the perceptions of the assessment tasks to the perceived assessment environment for male students. The first pair of canonical variates shared 73 % of their variance, whereas the second pair shared 7 %.
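Wilks’ Λ for a set of canonical roots is the product of (1 − Rc²) over those roots, so the squared canonical correlations of .73 and .07 imply a full-model Λ of .27 × .93 ≈ .25. The sketch below converts each Λ in the dimension reduction sequence to Rao’s F approximation. It assumes N = 152 male students (the count given in the limitations), five task scales, and two environment scales; the function names are illustrative, and the article does not state which software or approximation the authors used. Because it starts from rounded Rc² values, the F statistics differ slightly from those reported, but the degrees of freedom match exactly.

```python
import math

def wilks_lambda(rc2):
    """Wilks' lambda: product of (1 - Rc^2) over the canonical roots supplied."""
    lam = 1.0
    for r2 in rc2:
        lam *= 1.0 - r2
    return lam

def dimension_reduction(rc2, p, q, n):
    """Rao's F approximation for the tests of roots k..end, k = 1, 2, ...

    rc2: squared canonical correlations, largest first
    p, q: number of variables in each set; n: number of cases
    Returns a list of (lambda, F, df1, df2) tuples.
    """
    m = n - 1.5 - (p + q) / 2            # based on the full set sizes
    results = []
    for k in range(len(rc2)):
        lam = wilks_lambda(rc2[k:])       # roots that remain after removing k
        pk, qk = p - k, q - k             # effective set sizes for this test
        df1 = pk * qk
        denom = pk ** 2 + qk ** 2 - 5
        s = math.sqrt((pk ** 2 * qk ** 2 - 4) / denom) if denom > 0 else 1.0
        df2 = m * s - df1 / 2 + 1
        root = lam ** (1 / s)
        f = (1 - root) / root * df2 / df1
        results.append((lam, f, df1, df2))
    return results

# Male data: N = 152, five task scales, two environment scales.
for lam, f, df1, df2 in dimension_reduction([0.73, 0.07], p=5, q=2, n=152):
    print(f"Wilks = {lam:.3f}, F({df1}, {df2:.0f}) = {f:.2f}")
```

Applied to the female results reported below (N = 259; Rc² = .65 and .12), the same function gives degrees of freedom of (10, 504) and (4, 253), matching the values reported for female students.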

As also shown in Table 5, the perceived characteristics of the assessment tasks associated with the first canonical variate were congruence with planned learning, authenticity, student consultation, and diversity, whereas the perceived assessment environment correlated with the first canonical variate was the learning-oriented assessment environment. Taken as a pair, in male classrooms, high degrees of congruence with planned learning, authenticity, student consultation, and diversity were associated with an assessment environment that is more oriented toward learning. The second canonical variate from the perceived features of the assessment tasks was composed of congruence with planned learning, authenticity, student consultation, transparency, and diversity, and the corresponding canonical variate from the assessment environment side was composed of the performance-oriented assessment environment. More specifically, in male classrooms, high degrees of authenticity, student consultation, and diversity, together with low degrees of congruence with planned learning and transparency, were associated with an assessment environment that is more oriented toward performance.
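The canonical variates interpreted above come from an eigenanalysis of the correlation matrices. When the criterion set has only two variables, as the assessment environment set does here, the squared canonical correlations are the two eigenvalues of the 2 × 2 matrix Ryy⁻¹ Ryx Rxx⁻¹ Rxy, where Rxx holds the correlations among the task perceptions, Ryy those between the two environment scales, and Rxy the cross-correlations. The pure-Python sketch below illustrates that computation under those assumptions; it is not the authors’ code, the helper names are hypothetical, and the toy matrices in the usage lines are invented for checking rather than taken from Tables 3 and 4.

```python
import math

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(col) for col in zip(*a)]

def solve(a, b):
    """Return a^{-1} b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def squared_canonical_correlations(rxx, ryy, rxy):
    """Squared canonical correlations when the Y set has exactly two variables."""
    m = solve(ryy, matmul(transpose(rxy), solve(rxx, rxy)))  # 2 x 2 matrix
    half_trace = (m[0][0] + m[1][1]) / 2
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    disc = math.sqrt(max(half_trace ** 2 - det, 0.0))  # guard against rounding
    return half_trace + disc, half_trace - disc

# Toy check: no correlations within either set, so the roots are simply .5^2 and .3^2.
rxx = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
ryy = [[1, 0], [0, 1]]
rxy = [[0.5, 0.0], [0.0, 0.3], [0.0, 0.0]]
print(squared_canonical_correlations(rxx, ryy, rxy))  # approximately (0.25, 0.09)
```

The closed-form 2 × 2 eigenvalue step keeps the sketch dependency-free; with a larger criterion set, a general symmetric eigen-solver would be the natural replacement.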

The first canonical variate extracted 90 % of the variance in the perceptions of the assessment tasks and 65 % of the variance in the perceptions of the assessment environment. The second canonical variate extracted 10 % and 35 %, respectively. Taken together, the two variates from the perceptions of the assessment tasks accounted for 49 % of the variance in the perceptions of the assessment environment, and the two variates from the perceptions of the assessment environment accounted for 67 % of the variance in the perceptions of the assessment tasks.
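The cross-set totals in this paragraph are redundancy indices: for each root, the proportion of variance a variate extracts within its own set is weighted by that root’s squared canonical correlation, and the products are summed over roots. The minimal sketch below, with illustrative variable names, recomputes the male figures from the rounded values reported above; the results (about .50 and .66) agree with the reported 49 % and 67 % within rounding.

```python
def redundancy(variance_extracted, rc2):
    """Redundancy index: sum over roots of (variance extracted within one set) x Rc^2."""
    return sum(pv * r2 for pv, r2 in zip(variance_extracted, rc2))

rc2 = [0.73, 0.07]               # squared canonical correlations, male data
tasks_extracted = [0.90, 0.10]   # task variance extracted by each task variate
env_extracted = [0.65, 0.35]     # environment variance extracted by each environment variate

env_from_tasks = redundancy(env_extracted, rc2)    # environment variance accounted for by task variates
tasks_from_env = redundancy(tasks_extracted, rc2)  # task variance accounted for by environment variates
print(round(env_from_tasks, 3), round(tasks_from_env, 3))  # 0.499 0.664
```

Because each term is weighted by Rc², a redundancy index can never exceed the largest squared canonical correlation, which makes it a useful sanity check on reported totals.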

For the female data, the full model across all canonical variates was statistically significant, F(10, 504) = 40.15, p < .001, Wilks’ Λ = .31, indicating a relationship between the two variable sets. The analysis yielded two pairs of canonical variates with squared canonical correlations of .65 and .12, respectively. The dimension reduction analysis indicated that both pairs should be interpreted: F(10, 504) = 40.15, p < .001 for the first pair (i.e., the full model) and F(4, 253) = 8.44, p < .001 for the second pair. Table 6 presents the standardized canonical coefficients relating the perceptions of the assessment tasks to the perceived assessment environment for female students. The first pair of canonical variates shared 65 % of their variance, whereas the second pair shared 12 %.

As also shown in Table 6, the perceived characteristics of the assessment tasks associated with the first canonical variate were congruence with planned learning, authenticity, student consultation, and diversity, whereas the perceived assessment environment correlated with the first canonical variate was the learning-oriented assessment environment. Taken as a pair, in female classrooms, high degrees of congruence with planned learning, authenticity, student consultation, and diversity were associated with an assessment environment that is more oriented toward learning. The second canonical variate from the perceived features of the assessment tasks was composed of congruence with planned learning, student consultation, and diversity, and the corresponding canonical variate from the assessment environment side was composed of the performance-oriented assessment environment. More specifically, in female classrooms, high degrees of student consultation and diversity, together with a low degree of congruence with planned learning, were associated with an assessment environment that is more oriented toward performance.

The first canonical variate extracted 74 % of the variance in the perceptions of the assessment tasks and 50 % of the variance in the perceptions of the assessment environment. The second canonical variate extracted 17 % and 50 %, respectively. Taken together, the two variates from the perceptions of the assessment tasks accounted for 38 % of the variance in the perceptions of the assessment environment, and the two variates from the perceptions of the assessment environment accounted for 50 % of the variance in the perceptions of the assessment tasks.

Discussion

Classroom assessment and its role in student motivation and achievement have received considerable attention in recent years. Until recently, however, little research had examined how students’ perceptions of the assessment tasks relate to their perceptions of the classroom assessment environment. This study addressed this topic with two main goals: (a) to examine gender differences in the perceptions of the assessment tasks and classroom assessment environment and (b) to assess the nature of the relationships between the perceptions of the assessment tasks and the classroom assessment environment. Investigating students’ perceptions of the assessment process should give teachers and other educators insight into possible ways of creating classroom environments conducive to student learning and development. In addition, understanding the classroom assessment process from the students’ perspective, in terms of accuracy, meaningfulness, and fairness, is important for understanding the consequential validity of the assessment process (Schaffner et al. 2000).

Consistent with earlier research by Alkharusi (2011), Anderman and Midgley (1997), and Meece et al. (2003), the results of the current study showed that female students generally held more positive perceptions of the assessment tasks and assessment environment than male students. In addition, the correlation analyses showed gender-specific patterns in the relationships between the perceptions of the assessment tasks and the classroom assessment environment. More specifically, learning- and performance-oriented assessment environments were positively correlated with each other in male classrooms, whereas there was no significant relationship between the two in female classrooms. This suggests that the two types of assessment environment may represent distinct assessment climates in female classrooms, whereas both may operate simultaneously in male classrooms. Moreover, in male classrooms both types of assessment environment correlated positively with the perceived features of the assessment tasks, whereas in female classrooms the learning-oriented assessment environment correlated positively with these features and the performance-oriented assessment environment had a weak negative correlation with congruence with planned learning and a weak positive correlation with student consultation. These findings underscore the importance of examining gender differences in the classroom assessment process in future research, in order to identify which assessment features facilitate learning for different groups of students. Classroom observations and interviews might shed more light on the differential effects of gender on the assessment process.

Results from the canonical correlation analyses yielded two roots, each accounting for an interpretable amount of variance in the perceptions of the assessment tasks and assessment environment. For both males and females, the first canonical root was defined by a learning-oriented assessment environment and by perceptions of the assessment tasks in terms of congruence with planned learning, authenticity, student consultation, and diversity. Interpretation of this root suggests that the following features of the assessment process are likely to promote a learning-oriented assessment environment: (a) assessment tasks that align with the objectives of instruction and are meaningful and relevant to students’ real lives and (b) students who are consulted and informed about the assessment tasks and are given assessment tasks suited to their abilities. However, male and female students differed on the second root, which was defined solely by the performance-oriented assessment environment on the assessment environment side. In the males’ model, the performance-oriented assessment environment was associated with low congruence with planned learning, low transparency, high authenticity, high student consultation, and high diversity. In the females’ model, it was associated with low congruence with planned learning, high student consultation, and high diversity. These results suggest that male classrooms accommodate multiple forms of assessment environment. This may reflect the complex educational goals operating in males’ achievement settings. Perhaps the assessment process in male classes stimulates desires for competence in both an intrapersonal and a normative sense. Further research is needed to test whether the simultaneous adoption of learning- and performance-oriented assessment environments is associated with more desirable outcomes than the adoption of a single assessment environment.

Several limitations of the present study call for cautious interpretation of the results. First, the shared variance among the perceptions of the assessment tasks might have masked more basic features of the assessment tasks that are the true correlates of a specific assessment environment. A systematic bias might also have affected the results. On the one hand, what students report about their perceptions of classroom assessment might not match the perceptions they form while actually engaging in an assessment task. On the other hand, students may not be able to assess their perceptions of classroom assessment accurately. Classroom observations and interviews with students and teachers may help clarify these issues. Second, the study was descriptive in nature, so causal relationships between assessment tasks and assessment environment cannot be assumed; establishing them requires additional research. Future researchers might consider some form of experimental research to test the relationships found in the present study. A third limitation concerns generalizability: because participants were drawn from public schools in Muscat, the results may not generalize to all public schools in the country. Future research should consider a more representative sample selected from different educational governorates across the country. A fourth limitation concerns the imbalance between male and female participants (152 vs. 259). Although the response rates were about 69 and 70 % for males and females, respectively, and the assumption of homogeneity of variance–covariance matrices was not rejected for the current sample, future research should consider equal sample sizes of males and females.

Nonetheless, the study, as it stands, has some ecological validity. It describes important relationships between day-to-day classroom assessment tasks and the classroom assessment environment, which should help teachers identify the practices needed to create an assessment environment that maximizes student learning. For example, the models imply that congruence between instructional objectives and assessment tasks matters for effective learning. Specifically, the models suggest that congruence with planned learning might facilitate the development of a learning-oriented assessment environment, which should promote desirable motivational and achievement-related outcomes, and might impede the development of a performance-oriented assessment environment, which could reduce the likelihood of successful academic performance.

Implications of the Study

Teachers rely on day-to-day classroom assessment to enhance their instruction and its potential impact on student motivation and learning. The findings of this study have several implications for instruction and assessment. First, teachers should be aware that alignment between instructional objectives and assessment tasks is essential for desirable student motivation and learning outcomes. Second, the assessment tasks themselves should emphasize authenticity, linking instruction and assessment in meaningful ways. Third, teachers should involve students in the assessment process to motivate them to learn. In this regard, teachers should change their “view of assessment as something that is being done to students to something that is being done with and for the students” (Klenowski 2009, p. 89). This can partly be accomplished through effective instructional conversations between teachers and students about the instructional objectives and the assessment process (Ruiz-Primo 2011). Finally, continuous professional development programs should be developed to encourage teachers to formulate assessment practices capable of creating an assessment environment conducive to student learning.

References

Alkharusi, H. (2008). Effects of classroom assessment practices on students’ achievement goals. Educational Assessment, 13, 243–266.

Alkharusi, H. (2009). Classroom assessment environment, self-efficacy, and mastery goal orientation: A causal model. INTI Journal, Special issue on teaching and learning, 104–116.

Alkharusi, H. (2010). Literature review on achievement goals and classroom goal structure: Implications for future research. Electronic Journal of Research in Educational Psychology, 8, 1363–1386.

Alkharusi, H. (2011). Development and datametric properties of a scale measuring students’ perceptions of the classroom assessment environment. International Journal of Instruction, 4, 105–120.

Alkharusi, H. (2013). Canonical correlational models of students’ perceptions of assessment tasks, motivational orientations and learning strategies. International Journal of Instruction, 6, 21–38.

Ames, C. (1992). Achievement goals and the classroom motivational climate. In D. H. Schunk & J. Meece (Eds.), Student perceptions in the classroom (pp. 327–348). Hillsdale, NJ: Erlbaum.

Anderman, E. M., & Midgley, C. (1997). Changes in achievement goal orientations, perceived academic competence, and grades across the transition to middle-level schools. Contemporary Educational Psychology, 22, 269–298.

Black, P., & Wiliam, D. (1998). Inside the black box: Raising standards through classroom assessment. Phi Delta Kappan, 80, 139–148.

Brookhart, S. M. (1997). A theoretical framework for the role of classroom assessment in motivating student effort and achievement. Applied Measurement in Education, 10, 161–180.

Brookhart, S. M. (2004). Classroom assessment: Tensions and intersections in theory and practice. Teachers College Record, 106, 429–458.

Brookhart, S. M., & Bronowicz, D. L. (2003). ‘I don’t like writing. It makes my fingers hurt’: Students talk about their classroom assessment. Assessment in Education: Principles, Policy and Practice, 10, 221–242.

Brookhart, S. M., & DeVoge, J. G. (1999). Testing a theory about the role of classroom assessment in student motivation and achievement. Applied Measurement in Education, 12, 409–425.

Brookhart, S. M., Walsh, J. M., & Zientarski, W. A. (2006). The dynamics of motivation and effort for classroom assessment in middle school science and social studies. Applied Measurement in Education, 19, 151–184.

Cauley, K. M., & McMillan, J. H. (2010). Formative assessment techniques to support student motivation and achievement. The Clearing House, 83, 1–6.

Church, M. A., Elliot, A. J., & Gable, S. L. (2001). Perceptions of classroom environment, achievement goals, and achievement outcomes. Journal of Educational Psychology, 93, 43–54.

Dhindsa, H. S., Omar, K., & Waldrip, B. (2007). Upper secondary Bruneian science students’ perceptions of assessment. International Journal of Science Education, 29, 1261–1280.

Dorman, J. P., Fisher, D. L., & Waldrip, B. G. (2006). Classroom environment, students’ perceptions of assessment, academic efficacy and attitude to science: A LISREL analysis. In D. Fisher & M. S. Khine (Eds.), Contemporary approaches to research on learning environment: Worldviews (pp. 1–28). Singapore: World Scientific Publishing.

Dorman, J. P., & Knightley, W. M. (2006). Development and validation of an instrument to assess secondary school students’ perceptions of assessment tasks. Educational Studies, 32, 47–58.

Kenney-Benson, G. A., Pomerantz, E., Ryan, A. M., & Patrick, H. (2006). Sex differences in math performance: The role of children’s approach to schoolwork. Developmental Psychology, 42, 11–26.

Klenowski, V. (2009). Australian indigenous students: Addressing equity issues in assessment. Teaching Education, 20, 77–93.

Linnenbrink, E. A., & Pintrich, P. R. (2001). Multiple goals, multiple contexts: The dynamic interplay between personal goals and contextual goal stresses. In S. Volet & S. Jarvela (Eds.), Motivation in learning contexts (pp. 251–270). Amsterdam: Pergamon.

Linnenbrink, E. A., & Pintrich, P. R. (2002). Motivation as an enabler for academic success. School Psychology Review, 31, 313–327.

Lupart, J. L., Cannon, E., & Telfer, J. A. (2004). Gender differences in adolescent academic achievement, interests, values and life-role expectations. High Ability Studies, 15, 25–42.

McMillan, J. H. (2012). Educational research: Fundamentals for the consumer (6th ed.). Boston: Pearson.

McMillan, J. H., & Workman, D. J. (1998). Classroom assessment and grading practices: A review of the literature. ERIC Document Reproduction Service No. ED453263.

Meece, J. L., Herman, P., & McCombs, B. L. (2003). Relations of learner-centered teaching practices to adolescents’ achievement goals. International Journal of Educational Research, 39, 457–475.

Mertler, C. A. (2003, October). Preservice versus inservice teachers’ assessment literacy: Does classroom experience make a difference? Paper presented at the meeting of the Mid-Western Educational Research Association, Columbus, OH.

Nolen, S. B. (2011). The role of educational systems in the link between formative assessment and motivation. Theory into Practice, 50, 319–326.

Rodriguez, M. C. (2004). The role of classroom assessment in student performance on TIMSS. Applied Measurement in Education, 17, 1–24.

Ruiz-Primo, M. A. (2011). Informal formative assessment: The role of instructional dialogues in assessing students’ learning. Studies in Educational Evaluation, 37, 15–24.

Schaffner, M., Burry-Stock, J. A., Cho, G., Boney, T., & Hamilton, G. (2000). What do kids think when their teachers grade? Paper presented at the Annual Meeting of the American Educational Research Association.

Stiggins, R., & Chappuis, J. (2005). Using student-involved classroom assessment to close achievement gaps. Theory into Practice, 44, 11–18.

Tabachnick, B. G., & Fidell, L. S. (2013). Using multivariate statistics (6th ed.). Boston: Pearson.

Wang, X. (2004). Chinese EFL students’ perceptions of classroom assessment environment and their goal orientations in the college English course. Unpublished Master’s Thesis, Queen’s University, Kingston, ON.

Watering, G., Gijbels, D., Dochy, F., & Rijt, J. (2008). Students’ assessment preferences, perceptions of assessment and their relationships to study results. Higher Education, 56, 645–658.

Acknowledgments

This research was supported by a grant (RC/EDU/PSYC/12/01) from The Research Council in Oman. The funding source had no involvement in the conduct of the research or the preparation of the article.

Cite this article

Alkharusi, H., Aldhafri, S., Alnabhani, H. et al. Modeling the Relationship Between Perceptions of Assessment Tasks and Classroom Assessment Environment as a Function of Gender. Asia-Pacific Edu Res 23, 93–104 (2014). https://doi.org/10.1007/s40299-013-0090-0
