Abstract

Numerous studies have reported on the thermoregulation and hydration challenges athletes face in team and individual sports during exercise in the heat. Comparatively little research, however, has been conducted on American Football players. Therefore, the purpose of this article is to review data collected in laboratory and field studies and discuss the thermoregulation, fluid balance, and sweat losses of American Football players. American Football presents a unique challenge to thermoregulation compared with other sports because of the encapsulating nature of the required protective equipment, the large body size of players, and preseason practice occurring during the hottest time of year. Epidemiological studies report disproportionately higher rates of exertional heat illness and heat stroke in American Football than in other sports. Specifically, larger players (e.g., linemen) are at increased risk for heat ailments compared with smaller players (e.g., backs) because of greater body mass index, increased body fat, lower surface area to body mass ratio, lower aerobic capacity, and the stationary nature of the position, which can reduce heat dissipation. A consistent finding across studies is that larger players exhibit higher sweating rates than smaller players. Mean sweating rates from 1.0 to 2.9 L/h have been reported for college and professional American Football players, with several studies reporting 3.0 L/h or more in some larger players. Sweat sodium concentration of American Football players does not seem to differ from that of athletes in other sports; however, given the high volume of sweat loss, the potential for sodium loss is higher in American Football than in other sports. Despite high sweating rates in American Football players, the observed disturbances in fluid balance have generally been mild (mean body mass loss ≤2 %).
The majority of field-based studies have been conducted in the northeastern United States, with few studies in other geographical regions (e.g., the southeast). Further, few studies have examined the body core temperature of American Football players during preseason practice, especially at the high school level. Future field-based research in American Football at various levels of competition in hotter geographical regions of the United States is warranted.


Larger American Football players (e.g., linemen) are at increased risk for exertional heat illness and heat stroke compared with smaller players (e.g., backs) because of greater body mass index, increased body fat, lower surface area to body mass ratio, lower aerobic capacity, and the stationary nature of the position, which can reduce heat dissipation.

In general, American Football players exhibit higher sweating rates than athletes in other sports. Sweat sodium concentration of American Football players does not seem to differ from other sports; however, given the high volume of sweat loss, the potential for sodium loss is higher in American Football than in other sports.

Coaches, athletic trainers, and team personnel should be aware of the unique thermoregulation, fluid balance, and sweat loss challenges American Football players face during preseason practice and monitor players more diligently, particularly large linemen during the first 3–4 days of practice.

1 Introduction

Exercise in the heat presents a challenge to maintaining thermal homeostasis and markedly impairs exercise capacity [1]. Multiple factors can disrupt thermal balance, including exercise intensity, the ambient environment, hypohydration, and clothing [1]. Studies have documented the influence of hot environments on body core temperature (Tc), sweating rates, and fluid balance during practice and game play in various team and individual sports [2–4]. However, most of these reports have examined athletes who are considerably smaller than American Football players. American Football presents unique challenges for thermoregulation and fluid balance because of the large body size of players, the high-intensity nature of the sport, uniform requirements, and preseason training and early competition occurring during the hottest part of the year. Numerous reports have documented disproportionately higher rates of exertional heat illness (EHI) and exertional heat stroke (EHS) in American Football players than in athletes in other sports [5–7]. Therefore, there is a need to understand the thermoregulatory challenges these athletes face at various levels of competition. The intent of this review is to provide an overview of laboratory and field-based studies and discuss the thermoregulation, fluid balance, and sweat losses of American Football players. Specifically, we discuss the thermoregulatory challenges American Football players face during the preseason, the inhibiting nature of the required protective equipment, the increased risk for EHI and EHS among American Football players compared with athletes in other sports, and why larger American Football players are at greater risk of EHI and EHS. EHI, which includes exercise-associated muscle cramps, heat syncope, heat exhaustion, and EHS, will be discussed in relation to preseason American Football practice.
Sweating rates, body mass losses, fluid intakes, and sweat sodium concentrations will also be reviewed. Finally, practical applications, gaps in the literature, and recommendations for future avenues of research with American Football players will be presented.

2 Methods of Literature Search

The literature search was conducted using the PubMed and EBSCO databases. Multiple search phrases pertaining to “American Football,” “thermoregulation,” “heat illness,” “heat stroke,” “sweating rates,” and “fluid balance” were used to identify relevant articles. Bibliographies of relevant articles were searched to identify potential additional studies. The search period was from database inception to January 2016. Selection criteria were broad in order to encompass a wide array of studies examining thermoregulation, fluid balance, and sweating rates of American Football players. Titles and abstracts were reviewed against general inclusion criteria: English language, full-length articles published in peer-reviewed journals, and healthy youth and adult athletes. An overview of the studies is provided in Tables 1 and 2.

3 Exertional Heat Illness and Heat Stroke in American Football

3.1 Exertional Heat Illness Rates

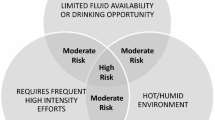

Thermal homeostasis is maintained when heat gain and heat dissipation are in balance. The body takes multiple measures to keep Tc within a relatively narrow range, predominantly through redistribution of blood flow and activation of sweat glands [1]. A number of factors can challenge thermal homeostasis, including exercise intensity, the ambient environment, and the clothing ensemble [1]. Exercise in the heat makes it particularly difficult to keep Tc within a range the individual athlete can tolerate, because the capacity to dissipate heat diminishes as ambient temperature and humidity increase. When heat production exceeds heat dissipation, the surplus is stored, Tc rises, and the athlete's risk of EHI and EHS increases. EHS is defined as a Tc of >40.5 °C associated with central nervous system disturbances and multiple organ system failure [13].
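The balance between heat gain and heat dissipation is conventionally written as the heat balance equation, S = M − W ± R ± C − E, where S is heat storage, M metabolic rate, W external work, R and C radiant and convective exchange, and E evaporative heat loss (respiratory exchange is ignored here for simplicity). The Python sketch below is illustrative only: the inputs are hypothetical, and the conversion to a rate of temperature rise assumes an average specific heat of body tissue of about 3.47 kJ·kg⁻¹·°C⁻¹ with stored heat spread evenly through the body mass, so it approximates mean body temperature rather than Tc itself.

```python
# Heat balance sketch: S = M - W + R + C - E, with R and C signed
# (positive = heat gained from the environment, negative = heat lost).
# All numeric inputs below are hypothetical.

SPECIFIC_HEAT_BODY = 3.47  # kJ per kg per degree C, approximate average for body tissue


def heat_storage_watts(metabolic_w, work_w, radiation_w, convection_w, evaporation_w):
    """Body heat storage S (W); evaporation is always a loss and is subtracted."""
    return metabolic_w - work_w + radiation_w + convection_w - evaporation_w


def body_temp_rise_per_hour(storage_w, body_mass_kg):
    """Approximate rise in mean body temperature (degrees C per hour) if S stays constant."""
    stored_kj_per_hour = storage_w * 3600 / 1000
    return stored_kj_per_hour / (body_mass_kg * SPECIFIC_HEAT_BODY)


# Hypothetical practice: 900 W metabolic rate, 150 W external work,
# small radiant and convective gains on a hot day, 600 W lost by evaporation.
storage = heat_storage_watts(900, 150, 50, 20, 600)
rise = body_temp_rise_per_hour(storage, body_mass_kg=120)
print(f"S = {storage} W, mean body temperature rise ~ {rise:.1f} C/h")
```

With these hypothetical inputs, 220 W of stored heat in a 120 kg player corresponds to roughly 1.9 °C per hour if nothing changes, which shows why even a modest, sustained mismatch between heat production and dissipation matters over a long practice.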

EHI has been estimated to affect 9000 high school athletes each year [6]. Kerr et al. [6] analyzed data from 2005 to 2011 from the National High School Sports-Related Injury Surveillance System and showed that EHI occurred at a rate of 1.2 per 100,000 athlete exposures. American Football players accounted for 74.4 % of EHIs, at a rate of 4.42 per 100,000 athlete exposures [6]. The highest percentages of EHIs occurred among offensive linemen (35.7 %), followed by defensive linemen (16.9 %) and linebackers (9.7 %) [6]. American Football players were 11.4 times more likely to develop EHI than athletes in all other sports combined [6]. The next highest proportions of EHI events occurred in girls’ volleyball (4.8 %), girls’ soccer (3.0 %), and boys’ wrestling (3.0 %) [6]. Most American Football EHI events occurred in the southeastern region of the United States, in August (60.3 %), and during the preseason (90.4 %) [6]. Huffman et al. [9] reported that 60 % of all EHIs in high school American Football players occurred during the preseason. The authors also noted that the majority of EHIs took place >1 h into practice [9]. Similarly, Yard et al. [10] showed that most EHIs occurred either 1–2 h (46.6 %) or >2 h (37.2 %) after practice had begun. Kerr et al. [6] reported a similar percentage (36.7 %) of EHIs occurring >2 h into the practice session for American Football players. During a single American Football season in the southeastern United States, Cooper et al. [11] reported 139 EHIs among five universities. Exercise-associated muscle cramps accounted for 70 % of the EHIs, while heat exhaustion and heat syncope accounted for 23 and 7 %, respectively [11]. The vast majority of these EHI incidents (88 %) occurred in August [11]. No cases of EHS were reported in the study [11].
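The incidence figures above are expressed per 100,000 athlete exposures (AEs), where one AE is conventionally one athlete taking part in one practice or competition. The sketch below shows how such a rate, and a rate ratio of the kind behind comparisons such as "11.4 times more likely", can be computed; the event and exposure counts are hypothetical and are not the surveillance data of Kerr et al. [6].

```python
def rate_per_100k(events: int, athlete_exposures: int) -> float:
    """Incidence rate per 100,000 athlete exposures (AEs)."""
    return events / athlete_exposures * 100_000


def rate_ratio(events_a: int, exposures_a: int, events_b: int, exposures_b: int) -> float:
    """How many times higher the rate in group A is than the rate in group B."""
    return rate_per_100k(events_a, exposures_a) / rate_per_100k(events_b, exposures_b)


# Hypothetical counts for illustration only (not data from any cited study).
football_events, football_aes = 90, 2_000_000
other_events, other_aes = 40, 10_000_000

football_rate = rate_per_100k(football_events, football_aes)  # about 4.5 per 100,000 AEs
other_rate = rate_per_100k(other_events, other_aes)           # about 0.4 per 100,000 AEs
ratio = rate_ratio(football_events, football_aes, other_events, other_aes)

print(f"football: {football_rate:.2f}, other sports: {other_rate:.2f}, ratio: {ratio:.2f}")
```

Expressing incidence per exposure rather than per athlete corrects for sports that simply hold more practices, which matters when comparing preseason American Football with other sports.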

3.2 Exertional Heat Stroke Fatalities

Exercise in the heat has also led to numerous EHS deaths in high school, college, and professional sports. American Football fatalities have been tracked by the American Football Coaches Association since 1931, but heat stroke deaths were not routinely monitored until 1960 [7]. The National Center for Catastrophic Sport Injury Research (NCCSIR) reported 140 EHS deaths in American Football from 1960 to 2014 [7]. Since the mid-1990s, 54 American Football players have died from EHS, with 90 % of fatalities occurring during practice. Of these 54 deaths, 77 % occurred at the high school level [7]. Fatal EHS cases have doubled within the last 15 years, with 41 cases from 2000 to 2014 compared with 20 deaths from 1985 to 1999 [7]. Although governing bodies have made major efforts, setting forth policies and recommendations to help reduce heat illness among American Football players during preseason practice, improvements are still clearly needed [9, 17]. For example, the National Athletic Trainers’ Association (NATA) has recommended that all states adopt appropriate heat acclimatization guidelines [12]. This is particularly warranted at the high school level, the population in which the majority of EHS fatalities have occurred [7].

4 Thermoregulation in American Football Players

4.1 Anthropometry

The NATA guidelines list several non-environmental risk factors for EHI, including body mass index (BMI) [13]. Specifically, obese players (BMI ≥ 30 kg/m²) are at greater risk for EHI [13]. Multiple studies have reported on the BMI profiles of American Football players [14–20]. Malina et al. [21] investigated 653 youth American Football players (9–14 years) and found that 45 % were classified as either overweight or obese according to their BMI. Kaiser et al. [14] assessed the morphological profiles of 65 Division I freshman American Football players and showed that their average BMI (29.8 kg/m²) placed them in the overweight to obese range. They reported similar findings for professional American Football players (30.1 kg/m²). However, the players’ body fat percentages in this study (13 % professional and 15 % collegiate) [14] were within an acceptable range for health status [22]. Recent studies using more sophisticated techniques to measure body composition (i.e., dual-energy X-ray absorptiometry) report that collegiate and professional American Football linemen have greater fat mass and BMI than non-linemen (e.g., quarterbacks and wide receivers) [15, 16, 23]. Havenith et al. [24] have suggested that increased body fat mass may exert an additional effect on the response to heat stress, because carrying the extra fat mass raises metabolic rate compared with lean individuals [24].
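BMI is body mass divided by height squared (kg/m²). The sketch below applies the standard adult cut-points (overweight 25.0–29.9 kg/m², obese ≥30 kg/m²) to two hypothetical players; the masses and heights are illustrative assumptions. As the Kaiser et al. [14] data suggest, BMI cannot distinguish muscle from fat, so a muscular player with a modest body fat percentage can still fall in the obese category. Youth players, such as those studied by Malina et al. [21], are normally classified with age- and sex-specific percentiles instead, which this sketch does not implement.

```python
def bmi(mass_kg: float, height_m: float) -> float:
    """Body mass index (kg/m^2)."""
    return mass_kg / height_m ** 2


def adult_bmi_category(value: float) -> str:
    """Standard adult BMI cut-points; not appropriate for children and adolescents."""
    if value < 18.5:
        return "underweight"
    if value < 25.0:
        return "normal weight"
    if value < 30.0:
        return "overweight"
    return "obese"


# Hypothetical players (mass in kg, height in m), for illustration only.
for name, mass, height in [("lineman", 136.0, 1.91), ("back", 93.0, 1.83)]:
    value = bmi(mass, height)
    print(f"{name}: BMI {value:.1f} kg/m^2 -> {adult_bmi_category(value)}")
```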

The rise in EHS fatalities is likely multifactorial, but an increase in player size (BMI) has been proposed as one possible explanation [5]. Several studies have reported an increase in players’ BMI over time [17–20], and multiple studies support BMI as a predisposing factor for heat ailments [5, 6, 13]. Of the 58 documented hyperthermia-related deaths of American Football players between 1980 and 2009, Grundstein et al. [5] reported that 79 % of the players were large, with a BMI of >30 kg/m², and 86 % of those individuals played the lineman position. All deaths occurred under high (23–28 °C) or extreme (>28 °C) environmental conditions, as defined by the American College of Sports Medicine (ACSM) using the wet bulb globe temperature (WBGT) [5].
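Outdoor WBGT is conventionally computed as a weighted sum of the natural wet bulb temperature (Tnwb), the black globe temperature (Tg), and the dry bulb air temperature (Tdb): WBGT = 0.7·Tnwb + 0.2·Tg + 0.1·Tdb. The sketch below computes it and applies the two ACSM categories quoted above (high, 23–28 °C; extreme, >28 °C). The readings are hypothetical, and the label returned below 23 °C is a placeholder rather than an ACSM category name.

```python
def outdoor_wbgt(t_nwb: float, t_globe: float, t_dry: float) -> float:
    """Outdoor WBGT (degrees C) from natural wet bulb, black globe, and dry bulb readings."""
    return 0.7 * t_nwb + 0.2 * t_globe + 0.1 * t_dry


def risk_category(wbgt_c: float) -> str:
    """Map WBGT to the two categories quoted in the text; 'below high' is a placeholder."""
    if wbgt_c > 28.0:
        return "extreme"
    if wbgt_c >= 23.0:
        return "high"
    return "below high"


# Hypothetical afternoon readings on a humid practice field.
wbgt = outdoor_wbgt(t_nwb=25.0, t_globe=45.0, t_dry=32.0)
print(f"WBGT = {wbgt:.1f} C -> {risk_category(wbgt)}")
```

Because the wet bulb term carries 70 % of the weight, humid days can reach the extreme category even when air temperature alone looks moderate.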

Other critical risk factors for EHS in large players include excess body fat percentage, decreased aerobic capacity, and a lower surface area to body mass ratio [5]. Aerobic capacity, typically expressed as VO2max (the maximal capacity for oxygen consumption by the body during maximal exertion [27]), has been shown to vary by position among American Football players at the college [25] and professional [26] levels. Linemen have been reported to have a lower VO2max (43.5–55.9 mL/kg/min) than backs (52.4–60.2 mL/kg/min) [25, 26]. The lower surface area to mass ratio of larger players is a main contributing factor to their higher Tc [28, 29]: a smaller relative surface area diminishes heat dissipation, while a larger body mass produces more heat [28, 29]. Further, the stationary nature of the lineman position, which is usually played by larger American Football players, could reduce heat dissipation compared with smaller players in more mobile positions [30, 31]. Other reported contributing factors in EHS deaths include hot and/or humid environmental conditions and the time of year (preseason). Because body size (BMI) and extreme environmental conditions can both affect players’ health and safety, it is imperative that coaches and athletic trainers understand the risks these factors pose for heat-related illness.
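The surface area to mass argument can be made concrete with a body surface area (BSA) estimate. The sketch below uses the DuBois and DuBois formula, BSA (m²) = 0.007184 × mass(kg)^0.425 × height(cm)^0.725, which is one common estimate among several; the lineman and back dimensions are hypothetical. The heavier player ends up with a larger absolute BSA but less surface area per kilogram of heat-producing mass.

```python
def dubois_bsa(mass_kg: float, height_cm: float) -> float:
    """Body surface area (m^2) by the DuBois and DuBois (1916) formula."""
    return 0.007184 * mass_kg ** 0.425 * height_cm ** 0.725


def area_to_mass_cm2_per_kg(mass_kg: float, height_cm: float) -> float:
    """Surface area to mass ratio, in cm^2 per kg."""
    return dubois_bsa(mass_kg, height_cm) * 10_000 / mass_kg


# Hypothetical body dimensions (mass in kg, height in cm), for illustration only.
players = {"lineman": (140.0, 193.0), "back": (88.0, 180.0)}
for name, (mass, height) in players.items():
    print(f"{name}: BSA {dubois_bsa(mass, height):.2f} m^2, "
          f"{area_to_mass_cm2_per_kg(mass, height):.0f} cm^2/kg")
```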

4.2 The American Football Uniform and Heat Stress

The American Football uniform covers 70 % of the skin surface area and can impede heat loss through convection, radiation, and evaporation [32]. Evaporation of sweat from the skin is the main mechanism for cooling the body and accounts for 80 % of heat loss during exercise [33]. Once the evaporation required to maintain thermal balance exceeds the maximal evaporation possible in the ambient environment, heat storage becomes inevitable, especially during high-intensity exercise [34]. Clothing can reduce the capacity for evaporative heat loss by creating a microenvironment that is hotter and more humid than the ambient environment [34, 35]. The semi-encapsulating American Football uniform increases thermal insulation by inhibiting both dry and evaporative heat exchange with the environment. By reducing heat dissipation, the uniform could potentially increase players’ risk for heat ailments. Therefore, it is critical to understand the impact of the American Football uniform on thermoregulation.
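Whether heat stress is compensable can be framed as a comparison between the evaporation required for heat balance (Ereq) and the maximal evaporation the environment allows (Emax). In a simplified form, Emax is the skin-to-air water vapor pressure gradient divided by the total evaporative resistance of the clothing and surrounding air layer, multiplied by BSA. The sketch below uses the Tetens approximation for saturation vapor pressure and assumes fully wetted skin at 35 °C; the two evaporative resistance values are hypothetical placeholders chosen to show the direction of the effect, not measured values for shorts or an American Football uniform.

```python
import math


def saturation_vapor_pressure_kpa(temp_c: float) -> float:
    """Saturation water vapor pressure over liquid water (kPa), Tetens approximation."""
    return 0.6108 * math.exp(17.27 * temp_c / (temp_c + 237.3))


def e_max_watts(skin_c: float, air_c: float, rel_humidity: float,
                evap_resistance: float, bsa_m2: float) -> float:
    """Maximal evaporative heat loss (W) from fully wetted skin.

    evap_resistance: total evaporative resistance of clothing plus the
    boundary air layer, in m^2 * kPa / W.
    """
    p_skin = saturation_vapor_pressure_kpa(skin_c)
    p_air = rel_humidity * saturation_vapor_pressure_kpa(air_c)
    return (p_skin - p_air) / evap_resistance * bsa_m2


def heat_stress_type(e_req: float, e_max: float) -> str:
    """Compensable if the environment can evaporate as much sweat as balance requires."""
    return "compensable" if e_req <= e_max else "uncompensable"


# Hypothetical conditions: 32 C air, 60 % RH, 2.4 m^2 player, 350 W required evaporation.
E_REQ = 350.0
for label, resistance in {"shorts (hypothetical)": 0.015,
                          "full uniform (hypothetical)": 0.035}.items():
    e_max = e_max_watts(35.0, 32.0, 0.60, resistance, 2.4)
    print(f"{label}: Emax {e_max:.0f} W -> {heat_stress_type(E_REQ, e_max)}")
```

Raising evaporative resistance, as the full uniform does [40], shrinks Emax, so the same Ereq that is compensable in light clothing can become uncompensable, with the surplus stored as heat.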

Nearly 50 years ago, the first study of American Football uniforms showed that they restricted heat loss at moderate environmental temperatures [36]. In 1969, Mathews et al. [37] performed a follow-up study in which participants exercised for 30 min at a moderate environmental temperature wearing either a full American Football uniform or shorts. Peripheral blood flow, heart rate, and sweating rate were all greater with the full uniform than with shorts. The uniform also inhibited evaporative cooling, with a final Tc of 39.0 °C compared with 38.4 °C with shorts at the end of the exercise bout. These early studies were foundational and clearly showed the added thermal strain of the American Football uniform. They were conducted in response to 12 EHS deaths of high school and college American Football players from 1959 to 1962 [36, 37], which the authors attributed to multiple factors, including exercising in a humid environment, wearing the full uniform, and restricted fluid intake. The majority of fatalities occurred within the first 2 days of practice, in players not acclimatized to the heat. Mathews et al. [37] recommended preventive measures, including ad libitum drinking and heat acclimatization. This study provided warnings about the American Football uniform more than 30 years before the National Collegiate Athletic Association (NCAA) policy for preseason American Football practice [38].

In 2002, thresholds for identifying levels of uncompensable heat stress were established for American Football uniforms under a variety of environmental conditions [39]. The ACSM used data from this study to develop guidelines for wearing uniforms during exercise in the heat [8]. McCullough and Kenney [40] provided quantitative data modeling heat exchange while wearing an American Football uniform, showing a progressive increase in evaporative resistance and thermal insulation with the full uniform compared with shorts or a partial uniform [40]. These results were later confirmed by Armstrong et al. [32], who showed that in a hot environment a full modern American Football uniform caused greater thermal and cardiovascular strain than a partial uniform or control clothing (shorts and socks). Elevated Tc with the full uniform has been shown to persist during recovery periods because the uniform retards heat loss [31, 37]. Johnson et al. [41] also showed a higher Tc with a full compared with a partial modern uniform. Interestingly, however, players were unable to perceive the greater thermal strain. This is an important point for athletic trainers, as the perceptual responses of American Football players may not reflect their thermal strain while exercising in the heat [41].

In 2003, the NCAA implemented a policy for preseason American Football practice to help players acclimatize to the heat and to reduce the heat illness commonly associated with the first 7–10 days of practice. The policy provides guidelines for the progression of equipment before full pads can be worn, the duration of practice, the number of practice sessions per day, and the rest time between sessions on days with twice daily practices [38]. Before the 2003 NCAA policy, no guidelines existed for progression through the first weeks of preseason practice; progression was left to the coach’s discretion.

4.3 Field-Based Studies

Prior to the 2003 NCAA policy, Godek et al. [42, 43] were the first to examine thermoregulation in American Football players (Division II) in a field-based study. The study compared Tc in American Football players and cross-country runners exercising in a hot and humid environment (Table 1). A variety of American Football positions were selected (i.e., linemen, tight ends, linebackers, running backs, and defensive backs), representing a wide range of body types. Players practiced twice a day for 8 days, except on day 7, when the team participated in an intrasquad scrimmage. American Football players wore no pads on day 1 and thereafter wore half pads in the morning and full pads in the afternoon (days 2–6 and 8). Both the American Football players and the cross-country runners continued their normal training sessions throughout the study. Ambient temperature and humidity during morning and afternoon practices were similar on days 4 and 8. Tc was significantly higher on day 4 than on day 8 in both American Football players and runners [42]. American Football players showed a higher Tc with pads (either half or full) than with no pads [42]. A significantly higher Tc was also reported with full pads in the afternoon than with half pads in the morning [43] (Table 1). However, the mean difference in Tc between full and half pads was small (0.2 °C, Table 1), and the authors noted that it had little biological or clinical value [43]. Tc in American Football players fluctuated continually throughout practice depending on exercise intensity and rest periods. Despite hot and humid conditions (Table 1), no player approached a Tc of 40 °C or showed signs or symptoms of EHS.

In 2006, Yeargin et al. [44] investigated the new NCAA heat acclimatization policy in Division I American Football players during preseason practice [38]. Over an 8-day period, the authors tested 11 players from various positions. Post-practice Tc, measured as players were leaving the field, ranged from 37.2 to 40.7 °C. Over the 8 days, post-practice Tc exceeded 39 °C in 19 measurements, 79 % of which occurred in players with a BMI of >30 kg/m². The authors noted that Tc was <39 °C for players with a mean BMI of ≤32 kg/m², compared with a Tc of >39 °C for players with a BMI of ≥34 kg/m². A weak but significant relation (r = 0.23) was shown between Tc and BMI; however, no relation was found between position and hyperthermia. The greatest physiological strain occurred in the first 2 days of practice, which accounted for 45 % of all Tc readings of >39.0 °C. Despite practices occurring in a warm, humid environment (28.1 °C WBGT and 65 % relative humidity, averaged over the first 2 days of practice), players were able to acclimatize gradually to the heat, with no reports of EHI under the new NCAA policy (i.e., a progressive increase in equipment, practice duration, and the number of practices throughout the week).
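One way to see why r = 0.23 is described as weak is to square it: the coefficient of determination, r², gives the proportion of variance in one variable statistically accounted for by the other. The sketch below implements the Pearson correlation from its definition and applies r² to the reported value; the paired BMI and Tc data in the second example are hypothetical.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson product-moment correlation coefficient of paired samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def shared_variance(r: float) -> float:
    """Coefficient of determination: proportion of variance shared by the two variables."""
    return r ** 2


# Reported Tc-BMI correlation from Yeargin et al. [44]: roughly 5 % shared variance.
print(f"r = 0.23 -> r^2 = {shared_variance(0.23):.3f}")

# Hypothetical BMI (kg/m^2) and post-practice Tc (C) pairs, for illustration only.
bmi_values = [27.0, 29.5, 31.0, 33.5, 35.0]
tc_values = [38.4, 38.1, 38.9, 38.6, 39.2]
print(f"hypothetical r = {pearson_r(bmi_values, tc_values):.2f}")
```

In other words, BMI was statistically associated with post-practice Tc, but most of the variation in Tc came from other factors.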

Previous field studies have not reported a relation between player position and hyperthermia [42–45], potentially because of the low number of players in each position. Godek et al. [31] addressed this in a field study comparing Tc between National Football League (NFL) linemen (i.e., offensive and defensive linemen) and backs (i.e., wide receivers, running backs, corner backs, tight ends, and linebackers) during twice daily preseason practices. They observed a significantly greater Tc in NFL linemen than in backs (Table 1), with linemen showing higher Tc during individual drills and team and live scrimmages. Because of the intermittent nature of the sport, Tc fluctuated throughout practice, rising during activity and decreasing during breaks, as shown in previous studies [42]. Waligum and Paolone [29] also reported higher Tc in linemen than in backs in a laboratory-based study. As discussed in Sect. 4.1, the differences in Tc between linemen and backs are multifactorial. Potential factors include anthropometric differences (i.e., BMI, percentage of body fat, body surface area, and surface area to mass ratio) and position-specific demands that increase metabolic heat production or reduce heat dissipation [31, 46, 47], an explanation first proposed by Godek et al. [31].

One limitation of most field-based studies is the lack of data quantifying exercise intensity among players [31, 42, 44, 48]. A recent field study by DeMartini et al. [30] showed that linemen engaged in more isometric work and covered less distance at lower velocities than backs, although this does not necessarily indicate a lower metabolic rate or heat production in linemen [30]. For example, although backs covered greater distances at higher velocities, average heart rate over the total practice was the same for backs and linemen [30]. Given the stationary nature of the lineman position, it is plausible that reduced heat dissipation is a primary factor, among others, in their higher Tc. Deren et al. [46, 47] demonstrated this in a series of studies in which linemen and non-linemen exercised at a fixed metabolic heat production per unit of body surface area (350 W·m⁻²) for 60 min at 32 °C. Linemen showed a significantly higher Tc and sweating rate despite a 25 % lower heat production per unit mass. This suggests that linemen have a compromised potential for heat dissipation, via a diminished sweating efficiency due to lower skin wettedness and lower sweat gland density [46].
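Fixing heat production per unit of body surface area, as in the Deren et al. protocol, automatically gives the player with the lower surface area to mass ratio a lower heat production per kilogram. The sketch below shows the arithmetic at 350 W·m⁻² for two hypothetical players; the BSA and mass values are assumptions, so the resulting percentage difference is illustrative and is not the 25 % reported in the study.

```python
HEAT_PER_AREA = 350.0  # W per m^2 of body surface area, the fixed target described in the text

# Hypothetical body surface area (m^2) and body mass (kg), for illustration only.
players = {"lineman": (2.6, 135.0), "back": (2.1, 85.0)}

results = {}
for name, (bsa_m2, mass_kg) in players.items():
    total_w = HEAT_PER_AREA * bsa_m2  # absolute metabolic heat production (W)
    per_kg = total_w / mass_kg        # heat production per unit mass (W/kg)
    results[name] = (total_w, per_kg)
    print(f"{name}: {total_w:.0f} W total, {per_kg:.2f} W/kg")

diff = 1 - results["lineman"][1] / results["back"][1]
print(f"lineman heat production per kg is {diff:.0%} lower")
```

Even with lower heat production per kilogram, linemen in those studies still reached a higher Tc, which is why the authors pointed to impaired heat dissipation rather than greater heat production.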

In a follow-up field study, Deren et al. [47] reported that self-generated air movement was significantly lower in linemen than in backs during preseason practice, so that convective and evaporative heat loss in linemen were lower during wind sprints, individual drills, and team scrimmages. Air flow, whether from self-generated movement or from the ambient environment (i.e., wind), carries warm air away from the body via convective currents and can also aid sweat evaporation by increasing evaporative capacity relative to still air [49]. Because linemen move at lower velocities, less ambient air circulates through their microenvironment, reducing convective and evaporative heat loss [50]. Deren et al. [47] proposed using mechanical misting fans to artificially induce air flow and moisture, increasing convective and evaporative cooling for offensive and defensive linemen during stationary drills or breaks in practice [46, 47]. Earlier laboratory-based studies [28, 29] are consistent with recent laboratory and field studies [46, 47] in showing that heat dissipation is diminished in linemen compared with backs [29, 46, 47] and in larger versus smaller subjects [28]. Investigators have also compared Tc between offensive and defensive linemen [48]; however, no difference was found during twice daily preseason practices [48], potentially because the two positions share similar characteristics.
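The effect of air movement on dry and evaporative heat loss can be sketched with an empirical convective heat transfer coefficient. The code below assumes one commonly used approximation for a person in moving air, hc ≈ 8.3·v^0.6 W·m⁻²·°C⁻¹ (v in m/s, intended for speeds above roughly 0.2 m/s), and derives the evaporative coefficient from the Lewis relation (he ≈ 16.5·hc W·m⁻²·kPa⁻¹). It ignores clothing entirely, and the air speeds assigned to a stationary lineman and a sprinting back are hypothetical.

```python
LEWIS_RELATION = 16.5  # degrees C per kPa, ratio of evaporative to convective coefficients


def convective_coefficient(air_speed_ms: float) -> float:
    """Approximate convective heat transfer coefficient (W/m^2/C) for moving air.

    The empirical fit is meant for speeds above about 0.2 m/s, so slower
    speeds are clamped to 0.2 m/s in this sketch.
    """
    return 8.3 * max(air_speed_ms, 0.2) ** 0.6


def dry_and_evaporative_loss(air_speed_ms, skin_c, air_c, p_skin_kpa, p_air_kpa, bsa_m2):
    """Return (convective loss, maximal evaporative loss) in watts for bare, wet skin."""
    h_c = convective_coefficient(air_speed_ms)
    h_e = LEWIS_RELATION * h_c
    convection = h_c * (skin_c - air_c) * bsa_m2
    evaporation_max = h_e * (p_skin_kpa - p_air_kpa) * bsa_m2
    return convection, evaporation_max


# Hypothetical: skin 35 C (5.62 kPa), air 32 C at 60 % RH (about 2.85 kPa).
# Both players get the same 2.4 m^2 BSA so that only relative air speed differs.
for label, speed in {"stationary lineman": 0.5, "sprinting back": 3.0}.items():
    c, e = dry_and_evaporative_loss(speed, 35.0, 32.0, 5.62, 2.85, 2.4)
    print(f"{label}: convection {c:.0f} W, Emax {e:.0f} W")
```

Both loss terms scale with hc, so a roughly sixfold increase in relative air speed roughly triples convective and maximal evaporative heat loss under this approximation, which is the mechanism the misting-fan proposal targets.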

Considering the success of the NCAA policy for the progression of preseason practice in American Football [44], it is notable that not every level of competition has adopted or implemented appropriate guidelines. In 2009, the NATA published preseason heat acclimatization guidelines for secondary school athletes [12]. However, numerous states still have inadequate recommendations in place at the high school level for heat acclimatization and progression through preseason practices [51]. Only 14 states have implemented all of the heat acclimatization guidelines set forth by the NATA, with the remaining states falling short [51]. Limited research exists on Tc during preseason practice in high school players. Yeargin et al. [52] examined Tc in adolescent American Football players (Table 1) over 10 days of preseason practice in late August. Players practiced once a day on days 1–5 and 8–10 and twice a day on days 6 and 7. There was a main effect of time (days) on maximum Tc, which was higher on days 1–3 and lower on days 6 and 7. This agrees with previous field-based studies showing greater physiological strain during the first 2–3 days of practice in collegiate players [42, 44]. Maximal daily Tc was correlated with maximum WBGT, in line with previous research [44]. The authors provided a model in which adolescent American Football players can safely participate in preseason practice without reaching an excessive Tc (i.e., Tc remained <40 °C) [52].

4.4 Work to Rest Ratio

Work to rest ratio should also be considered in relation to environmental conditions during the preseason [13]. Tc in American Football players has been shown to fluctuate during practice with periods of activity and rest, exercise intensity being one of the primary factors influencing Tc [31]. NATA guidelines recommend adjusting the work to rest ratio on the basis of environmental conditions and exercise intensity [13]. As discussed in Sect. 4.2, elevated Tc with the full uniform has been shown to persist during recovery periods (i.e., rest breaks) because the uniform retards heat loss [37, 53]. Therefore, athletes should be permitted and encouraged to remove equipment (e.g., helmets) during rest breaks [13]. The NATA further recommends that breaks be planned in advance and located in the shade, with athletes given enough time to consume fluids [13].

5 Sweating Rate and Body Fluid Balance

5.1 Sweating Rate

As mentioned previously (Sect. 4.2), thermoregulatory sweating occurs during exercise and/or heat stress as a means of dissipating body heat and regulating Tc. With sweating, heat is transferred from the body to water (sweat) on the surface of the skin; when this water gains sufficient heat, it is converted to a gas (water vapor), thereby removing heat from the body [54]. Many intrinsic (e.g., genetics, aerobic capacity, body size, and heat acclimatization) and extrinsic (e.g., environmental temperature/humidity, practice intensity, and clothing/protective equipment) factors modify the rate of sweating, so the variability in sweat loss between athletes can be considerable [55]. Metabolic heat production is a major determinant of the rise in Tc, and sweating rate therefore increases in proportion to exercise intensity [56]. Large body size [31, 57–62], high environmental temperatures [56], and wearing clothing or protective equipment [32, 37, 63] can also increase heat strain and sweating rate [55, 64].
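The cooling power of sweat follows from the latent heat of vaporization of water at skin temperature, roughly 2.43 kJ per gram. The sketch below converts a sweating rate into evaporative heat loss, assuming 1 L of sweat weighs about 1 kg and letting the caller specify the fraction that actually evaporates rather than dripping off; the rates shown are hypothetical.

```python
LATENT_HEAT_J_PER_G = 2426.0  # approximate latent heat of vaporization of sweat at skin temperature


def evaporative_cooling_watts(sweat_rate_l_per_h: float, fraction_evaporated: float = 1.0) -> float:
    """Heat removed (W) by evaporating sweat; assumes 1 L of sweat is about 1000 g."""
    grams_per_second = sweat_rate_l_per_h * 1000.0 / 3600.0
    return grams_per_second * fraction_evaporated * LATENT_HEAT_J_PER_G


# Hypothetical examples: 1.0 L/h fully evaporated, and 2.0 L/h with half dripping off.
print(f"{evaporative_cooling_watts(1.0):.0f} W")       # about 674 W
print(f"{evaporative_cooling_watts(2.0, 0.5):.0f} W")  # same evaporated volume, same cooling
```

Only the evaporated portion contributes: doubling sweat output while half of it drips off yields the same cooling as the smaller, fully evaporated rate.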

The commonly cited range of sweating rates across a wide range of individuals during exercise is 0.5–2.0 L/h [55]. However, sweating rates above this range have been observed in American Football players. Several studies of collegiate and professional American Football teams have reported mean sweating rates of ~2.0 L/h or more [31, 65, 66], and sweating rates of 3.0 L/h or more have been reported in some larger adult players [45, 65, 67, 68]. Several studies have compared sweating rates among groups of athletes to determine whether differences in body size, playing position, level of play, age, uniform configuration, and other factors are associated with differences in sweat loss. The following sections discuss the impact of these factors on sweating rate in American Football players (see Table 2 for a summary).
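In field settings, sweating rate is commonly estimated from the change in body mass over a session, corrected for fluid consumed and urine excreted, treating 1 kg of mass loss as about 1 L of sweat. The sketch below shows that calculation, together with percentage body mass loss, for a hypothetical practice; it ignores smaller contributions such as respiratory water loss and metabolic mass loss, which more careful protocols may account for.

```python
def sweating_rate_l_per_h(pre_mass_kg, post_mass_kg, fluid_intake_l, urine_output_l, duration_h):
    """Whole-body sweating rate (L/h), treating 1 kg of mass change as about 1 L of sweat."""
    sweat_loss_l = (pre_mass_kg - post_mass_kg) + fluid_intake_l - urine_output_l
    return sweat_loss_l / duration_h


def percent_body_mass_loss(pre_mass_kg, post_mass_kg):
    """Body mass change as a percentage of pre-practice mass."""
    return (pre_mass_kg - post_mass_kg) / pre_mass_kg * 100.0


# Hypothetical 2.5 h practice for a 140 kg lineman.
rate = sweating_rate_l_per_h(140.0, 138.2, fluid_intake_l=3.5, urine_output_l=0.3, duration_h=2.5)
loss = percent_body_mass_loss(140.0, 138.2)
print(f"sweating rate {rate:.1f} L/h, body mass loss {loss:.1f} %")
```

This hypothetical player sweats 2.0 L/h yet loses only about 1.3 % of body mass because most of the sweat is replaced by drinking, the same pattern of high sweating rates with mild body mass loss summarized in the Abstract.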

5.2 Body Size

Several authors have discussed the impact of body size (body mass or body surface area) on thermoregulation [31, 57–62]. It is well established that heavier athletes produce and store more heat at a given absolute work rate (e.g., running velocity) than their lighter counterparts [57–59]. It therefore stands to reason that body size significantly affects thermoregulatory responses such as sweating rate. Accordingly, a significant direct relation between sweating rate and body surface area [31, 60, 61] or body mass [60, 62] is commonly reported in American Football studies. In addition, the large differences in sweating rate reported between American Football and other sports usually disappear once differences in body mass or body surface area are taken into account (i.e., when sweating rate is expressed relative to body mass in kg or body surface area in m²) [55, 65, 67].
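Normalizing sweating rate to body size is a simple division. The sketch below compares a hypothetical 140 kg lineman with a hypothetical 70 kg athlete from another sport; the absolute rates differ about twofold, but the mass-relative rates are similar, which is the pattern described above. All values are assumptions for illustration.

```python
def relative_sweating_rate_ml_per_kg_h(sweat_rate_l_per_h: float, body_mass_kg: float) -> float:
    """Sweating rate expressed per kilogram of body mass (mL/kg/h)."""
    return sweat_rate_l_per_h * 1000.0 / body_mass_kg


# Hypothetical athletes: (absolute sweating rate in L/h, body mass in kg)
athletes = {"lineman": (2.9, 140.0), "other-sport athlete": (1.4, 70.0)}
for name, (rate, mass) in athletes.items():
    print(f"{name}: {rate:.1f} L/h absolute, "
          f"{relative_sweating_rate_ml_per_kg_h(rate, mass):.1f} mL/kg/h")
```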

5.3 Playing Position and Level of Play

As discussed previously (Sect. 4.1), there are considerable differences in body size and composition between certain playing positions, such as linemen and backs. In addition to the effects of body size, a higher fat mass also increases body heat storage by inhibiting heat dissipation [57]. Given the impact of body size and composition on heat strain, it is not surprising that linemen have been shown to exhibit significantly higher sweating rates than backs during summer training [60, 61, 68]. For example, in one study of professional players, sweating rate was significantly higher in linemen (2.25 ± 0.68 L/h) and linebackers/quarterbacks (1.98 ± 0.48 L/h) than in backs (1.40 ± 0.45 L/h) [68]. Only one study has compared sweating rates in American Football players at different levels of play: Godek et al. [61] tested collegiate and professional players matched for body mass, height, and body surface area under the same outdoor environmental conditions and found no differences in sweating rates between levels. In addition to body size, exercise intensity is another important determinant of sweating rate. One laboratory study has compared regional sweating rates of linemen and backs cycling at a fixed metabolic heat production per unit body surface area [46]. In this study, linemen exhibited significantly greater sweating rates on the forehead, chest, shoulder, and forearm than backs. Interestingly, sweating efficiency was lower in the linemen, as their Tc was higher despite their greater sweating rates [46]. More work in this area is needed to better understand the effect of player position and position-specific factors on heat strain, thermoregulatory sweating, and fluid replacement needs.
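The finding that linemen sweated more yet stayed hotter hinges on sweating efficiency, defined here (one common definition, assumed for this sketch) as the fraction of secreted sweat that evaporates rather than dripping off the skin, since dripped sweat provides no evaporative cooling. The sketch below, with hypothetical sweat and drip volumes, shows how a higher sweating rate combined with lower efficiency can still yield less evaporated sweat, and therefore less cooling.

```python
def sweating_efficiency(sweat_produced_l: float, sweat_dripped_l: float) -> float:
    """Fraction of produced sweat that evaporates rather than dripping off."""
    return (sweat_produced_l - sweat_dripped_l) / sweat_produced_l


def evaporated_volume_l(sweat_produced_l: float, sweat_dripped_l: float) -> float:
    """Volume of sweat that evaporated (L); only this portion cools the body."""
    return sweat_produced_l - sweat_dripped_l


# Hypothetical 1 h values (L): (sweat produced, sweat dripped off).
players = {"lineman": (2.4, 1.32), "back": (1.6, 0.40)}
for name, (produced, dripped) in players.items():
    print(f"{name}: efficiency {sweating_efficiency(produced, dripped):.2f}, "
          f"evaporated {evaporated_volume_l(produced, dripped):.2f} L")
```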

5.4 Age

As shown in Table 2, the sweating rates of youth American Football players are generally lower than those of collegiate and professional players. For instance, during summer training, the mean sweating rate in youths ranged from 0.6 to 1.3 L/h across three studies [52, 62, 69], whereas adults exhibited mean sweating rates of 1.0–2.9 L/h across nine studies [31, 43, 60, 61, 65, 66, 68, 70, 71]. In addition, Yeargin et al. [52] found that sweating rate (averaged across 10 days of preseason training) was significantly lower in younger (14–15 years, 0.6 ± 0.4 L/h) than in older (16–17 years, 0.8 ± 0.3 L/h) heat-acclimatized high school players. These age/maturation differences in sweating rate could be due in part to unmatched exercise intensities within and between studies. Nonetheless, laboratory studies controlling for exercise intensity have also reported age/maturation differences in sweating rate [72–75], and the lower sweating rates in younger/pre-pubertal athletes remain even after accounting for differences in body mass or body surface area [67, 72–74].

5.5 Practice Versus Game

Only one American Football study has compared sweating rates during practices and games. McDermott et al. [62] tested 9- to 15-year-old players over the course of a 5-day summer camp and found that mean sweating rate during games (1.30 ± 0.57 L/h) was about twice that during practices (0.65 ± 0.35 L/h). The difference could be attributed to differences in exercise intensity or work-to-rest ratio, since ratings of perceived exertion (Borg 6–20 scale) were significantly higher during games (19 ± 2) than during practices (16 ± 2) [62]. Additional work is needed to determine how the hydration needs of American Football players at all levels of play differ between practices and games.

5.6 Uniform Configuration

As discussed previously (Sect. 4.2), protective equipment impairs heat loss because of its high insulation and low water vapor permeability and therefore increases thermal strain [63]. In addition, the extra weight of the equipment increases metabolic heat production [37, 63]. Consequently, wearing American Football pads during exercise has been shown to increase the thermoregulatory sweating response [32, 37]. Armstrong et al. [32] compared sweating rates of men wearing a full uniform, a partial uniform (without helmet and shoulder pads), and control clothing (shorts, socks, and shoes only) while performing a standardized, laboratory-based American Football-simulated protocol in the heat (33 °C). In this study, sweating rate was significantly higher with the full (2.05 ± 0.34 L/h) and partial (1.86 ± 0.25 L/h) uniforms than with the control clothing (1.24 ± 0.16 L/h). However, sweating rate does not appear to differ between uniform configurations: Armstrong et al. [32] found no difference between full and partial uniforms. Similarly, in a field study, Godek et al. [43] reported no differences in collegiate American Football players’ sweating rates when wearing full (2.01 ± 0.66 L/h) versus half pads (1.97 ± 0.53 L/h) during summer training. These results are not surprising, since neither study found differences in players’ Tc between uniform configurations [32, 43].

5.7 Fluid Balance

When sweat output exceeds fluid intake, a body water deficit (hypohydration) accrues, which can lead to impaired circulatory and thermoregulatory function during endurance exercise. For example, laboratory studies have shown that ≥3 % hypohydration causes a decrease in plasma volume and therefore a decrease in stroke volume and a compensatory increase in heart rate to maintain a given cardiac output [76–78]. In addition, ≥3 % hypohydration has been shown to delay the onset and decrease the sensitivity of the sweating and skin blood flow response to hyperthermia [79–81]. The physiological strain associated with hypohydration is exacerbated by greater body water deficits and when exercise is performed in hot-humid environments [76, 82]. For more details on the physiology and performance effects of hypohydration, the reader is referred to a recent comprehensive review [83].

Despite high sweating rates in American Football players, the observed disturbances in fluid balance have generally been mild (see Table 2). For example, most field studies with professional [31, 60, 68], collegiate [43, 44, 70, 71], and high school [69] American Football players reported mean body mass losses of ≤2 %. These results suggest that the drinking breaks in most studies provided athletes with enough fluid to offset most of their sweat losses. In fact, some studies in youth players have shown better in-practice hydration (i.e., replacing sweat losses during practice) than off-field rehydration habits (i.e., before and between practices) [52, 62]. This finding is interesting because youth athletes often underestimate their actual sweat losses; it may be explained by a combination of adequate fluid availability and relatively low sweating rates, which make it easier to drink enough to offset losses during practice [52]. Given the structure of the game, which includes player substitutions, frequent stoppages of play (timeouts, breaks after each quarter, etc.), and most college and NFL athletes playing only one side of the ball (offense or defense), American Football players should have ample opportunity to consume fluids.
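The body mass losses summarized above are usually expressed as a percentage of pre-practice body mass. A minimal sketch of that calculation follows, assuming nude (or consistently clothed) body mass measured before and after practice and treating 1 kg of mass change as roughly 1 L of body water. The category boundaries simply mirror the 2 % and 3 % figures and the body mass gain discussed in Sect. 5.7; they are illustrative labels, not a clinical rule, and the function names are hypothetical.

```python
def percent_body_mass_change(pre_kg: float, post_kg: float) -> float:
    """Percent change in body mass from pre- to post-practice (negative = loss)."""
    if pre_kg <= 0:
        raise ValueError("pre-practice mass must be positive")
    return (post_kg - pre_kg) / pre_kg * 100.0


def describe_change(pct: float) -> str:
    """Illustrative labels based on the thresholds discussed in Sect. 5.7."""
    if pct > 0:
        return "body mass gain (drinking exceeded sweat losses)"
    loss = -pct
    if loss <= 2.0:
        return "mild deficit (<=2 %)"
    if loss < 3.0:
        return "deficit >2 % but <3 %"
    return "deficit >=3 % (level linked to circulatory and thermoregulatory strain in lab studies)"


if __name__ == "__main__":
    # Hypothetical pre/post practice masses for one player.
    pct = percent_body_mass_change(pre_kg=120.0, post_kg=118.2)
    print(f"{pct:.1f} %: {describe_change(pct)}")  # -1.5 %: mild deficit (<=2 %)
```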

Only one study, by Horswill et al. [66], has reported mean pre- to post-practice losses of >2 % body mass (see Table 2). This was one of the few studies in which athletes remained in full pads for the duration of practice. In addition, the testing location (southeastern United States) provided some of the highest levels of heat stress (WBGT 29.4–32.2 °C) among field studies to date. These athletes’ sweat losses may therefore have been so rapid that fluid intake could not keep pace [66].

A few studies have also tracked baseline (pre-practice) body mass across consecutive days of summer training camp [43, 44, 69]. These studies showed that mild body mass deficits accrued after the first day of training but did not worsen over the remainder of camp. That is, fluid intake between practice sessions was apparently sufficient to avoid cumulative hypohydration over consecutive days of training. For example, in collegiate players, baseline body mass decreased by 0.8, 1.5, 0.9, 1.1, and 0.5 kg (i.e., ~0.4–1.3 %) on days 2, 3, 4, 6, and 8, respectively, relative to day 1 [43]. Similarly, Stover et al. [69] reported that the pre-practice body mass of high school players was consistently ~0.5 kg (i.e., 0.6 %) lower on days 2–5 of training camp than on day 1. Playing position also does not appear to affect fluid balance significantly: athletes with higher sweating rates consumed more fluid than their counterparts who sweated less, so body mass deficits did not differ between linemen and backs [60, 61].
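Tracking baseline body mass across camp amounts to comparing each day's pre-practice mass with day 1. The sketch below does this and applies one simple, hypothetical criterion for cumulative hypohydration (a deficit that grows on every successive measured day). The 115 kg day-1 mass is an assumption chosen for illustration; the later masses are set so that the day-by-day deficits match the collegiate values reported by Godek et al. [43].

```python
def baseline_deficits(pre_mass_kg: dict[int, float]) -> dict[int, tuple[float, float]]:
    """Deficit relative to day 1 for each later day, as (kg, % of day-1 mass)."""
    day1 = pre_mass_kg[1]
    return {
        day: (day1 - mass, (day1 - mass) / day1 * 100.0)
        for day, mass in sorted(pre_mass_kg.items())
        if day != 1
    }


def deficit_keeps_growing(deficits: dict[int, tuple[float, float]]) -> bool:
    """Hypothetical cumulative-hypohydration flag: deficit rises on every measured day."""
    kg = [deficits[day][0] for day in sorted(deficits)]
    return len(kg) > 1 and all(later > earlier for earlier, later in zip(kg, kg[1:]))


if __name__ == "__main__":
    # Assumed 115 kg on day 1; later masses give deficits of 0.8, 1.5, 0.9, 1.1, 0.5 kg.
    masses = {1: 115.0, 2: 114.2, 3: 113.5, 4: 114.1, 6: 113.9, 8: 114.5}
    deficits = baseline_deficits(masses)
    for day, (kg, pct) in deficits.items():
        print(f"day {day}: -{kg:.1f} kg ({pct:.1f} %)")
    print("growing deficit:", deficit_keeps_growing(deficits))
```

Under this assumed day-1 mass, the deficits span roughly 0.4–1.3 % and do not grow from day to day, consistent with the pattern described above.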

Another potential concern related to fluid balance is drinking in excess of sweat losses, which can increase the risk of symptomatic exercise-associated hyponatremia (i.e., dilution of blood sodium to <125–130 mmol/L) [84]. Hyponatremia is a serious, potentially life-threatening condition and has been reported in American Football [84–86]. Thus, hydration education and behaviors should aim to prevent significant fluid imbalances, including overdrinking (body mass gain) as well as underdrinking (≥3 % body mass loss). Knowing individual sweating rates (under various training and environmental conditions), monitoring body mass changes and urine output (volume and concentration), and attending to thirst can help inform personalized fluid replacement strategies [55, 84].
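Individual sweating rates of the kind referred to above are commonly estimated in the field from a simple mass balance: body mass lost, plus fluid consumed, minus urine produced, divided by exercise time. The sketch below assumes that body mass is measured nude or in the same minimal clothing before and after practice (sweat trapped in pads would otherwise be missed), that 1 kg of mass loss corresponds to about 1 L of sweat, and that respiratory water and metabolic mass losses are small enough to ignore. The function names and example values are hypothetical.

```python
def sweating_rate_l_per_h(pre_kg: float, post_kg: float, fluid_in_l: float,
                          urine_out_l: float, hours: float) -> float:
    """Whole-body sweating rate from a body mass balance (1 kg assumed ~ 1 L)."""
    if hours <= 0:
        raise ValueError("practice duration must be positive")
    sweat_loss_l = (pre_kg - post_kg) + fluid_in_l - urine_out_l
    return sweat_loss_l / hours


def percent_of_losses_replaced(fluid_in_l: float, sweat_loss_l: float) -> float:
    """Share of sweat losses offset by drinking; above 100 % means intake exceeded sweat loss."""
    return fluid_in_l / sweat_loss_l * 100.0


if __name__ == "__main__":
    # Hypothetical 2 h practice: 1.4 kg mass loss, 2.0 L drunk, 0.2 L urine.
    rate = sweating_rate_l_per_h(110.0, 108.6, fluid_in_l=2.0, urine_out_l=0.2, hours=2.0)
    print(f"sweating rate: {rate:.2f} L/h")  # 1.60 L/h
    print(f"replaced: {percent_of_losses_replaced(2.0, rate * 2.0):.0f} %")
```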

6 Sweat Sodium Concentration

Thermoregulatory sweat is composed of water and electrolytes, predominantly sodium and chloride. The total amount of sodium lost in sweat depends on the rate and duration of sweating as well as on sweat sodium concentration. Mean whole-body sweat sodium concentration during exercise across a variety of athletes is ~35–40 mmol/L, but it ranges from ~10 mmol/L to as high as ~90 mmol/L [55, 87]. This considerable inter-individual variability is due to multiple factors, including genetics, diet, heat acclimatization status, and sweating rate [55]. The gold-standard method for measuring whole-body sweat sodium concentration is the whole-body washdown technique [88]. In field studies, however, regional (e.g., forearm) sweat collections have been used as a more practical means of estimating sweat sodium concentration, and regression equations [87, 89] can be used to predict whole-body values from regional measurements.

Only a few studies have measured the sweat sodium concentration of American Football players during summer training [52, 67, 68], and it does not seem to differ significantly from that of athletes in other sports. For example, Baker et al. [67] found no difference in mean forearm sweat sodium concentration between competitive/professional athletes in American Football (44 ± 19 mmol/L) and those in other team/skill sports, such as baseball (36 ± 18 mmol/L), basketball (46 ± 17 mmol/L), tennis (40 ± 19 mmol/L), and soccer (36 ± 13 mmol/L). In addition, Godek et al. [68] measured forearm sweat sodium concentration in professional players and found no differences between backs/receivers and quarterbacks/linebackers (group mean 50 ± 16 mmol/L; range 15–99 mmol/L). Finally, in agreement with other work [72] on the impact of age/maturation on sweat composition, Yeargin et al. [52] found that forearm sweat sodium concentration was ~30 % lower in 14- to 15-year-old (27.3 ± 17.2 mmol/L) than in 16- to 17-year-old (40.4 ± 19 mmol/L) players during summer training, although this difference did not reach statistical significance. To the best of our knowledge, no studies have yet measured sweat sodium concentration during the competitive season or determined intra-individual variability with changes in exercise intensity, weather, heat acclimatization or training status, or other factors that change over the course of an American Football season.

Although sweat sodium concentrations observed in American Football players are similar to those in other sports, total sweat sodium losses would be expected to be higher in American Football because of the very high sweating rates commonly observed, particularly in larger players (e.g., linemen) [67]. However, the impact of these sodium losses in American Football players is currently unclear, and there are insufficient data to inform clear recommendations on how much sodium needs to be replaced to maintain physiological or performance outcomes. It has been suggested that sodium should be consumed during exercise when total sodium losses reach 3–4 g or more [90, 91]. For an American Football player with a whole-body sweat sodium concentration of 40 mmol/L (920 mg/L) and a sweating rate of 2 L/h (i.e., ~1.8 g sodium/h), it would take 1.6–2.2 h of training to lose 3–4 g of sodium. Since American Football practices commonly last ~2 h or more, many adult players would be expected to lose ≥3–4 g of sodium per practice during summer training camps. Indeed, Godek et al. [68] reported total sodium losses of 9.8 ± 6.0 g (mean ± standard deviation) on days when professional American Football players practiced for 4.5 h during the preseason.
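The worked example above (40 mmol/L, 2 L/h, 3–4 g of sodium) can be reproduced with a few lines of arithmetic. The sketch below converts sweat sodium concentration from mmol/L to mg/L using the molar mass of sodium (about 22.99 mg/mmol, which the text rounds to 23 to obtain 920 mg/L) and assumes that sweating rate and sweat sodium concentration stay constant over the session; the function names are hypothetical.

```python
NA_MG_PER_MMOL = 22.99  # molar mass of sodium (mg per mmol)


def sodium_loss_g_per_h(sweat_na_mmol_per_l: float, sweat_rate_l_per_h: float) -> float:
    """Hourly sweat sodium loss in grams, assuming constant rate and concentration."""
    return sweat_na_mmol_per_l * NA_MG_PER_MMOL * sweat_rate_l_per_h / 1000.0


def hours_to_lose(target_g: float, sweat_na_mmol_per_l: float, sweat_rate_l_per_h: float) -> float:
    """Training time needed to lose target_g grams of sodium in sweat."""
    return target_g / sodium_loss_g_per_h(sweat_na_mmol_per_l, sweat_rate_l_per_h)


if __name__ == "__main__":
    per_h = sodium_loss_g_per_h(40.0, 2.0)
    print(f"sodium loss: {per_h:.2f} g/h")  # 1.84 g/h
    for target in (3.0, 4.0):
        # prints 1.6 h for 3 g and 2.2 h for 4 g
        print(f"{target:.0f} g lost after {hours_to_lose(target, 40.0, 2.0):.1f} h")
```

Because both inputs vary widely between players, the same two functions can be rerun with an individual's measured sweating rate and sweat sodium concentration rather than these group-level example values.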

The potential benefits of, or rationale for, sodium ingestion during exercise include replacement of sweat electrolyte losses [55] and better maintenance of sodium balance [92, 93] and plasma volume [91]. However, well-controlled studies using intermittent high-intensity exercise that measure the impact of sodium loss or replacement in American Football are currently lacking. There is some indication that higher sweat sodium losses may occur in players with a history of heat-related whole-body muscle cramps [71]; however, high sweat sodium losses are not consistently found in all cramp-prone players [66]. More work is needed to understand the impact of sodium losses on hydration and/or performance outcomes during exercise. It is clear, however, that sodium ingestion significantly improves post-exercise rehydration. Ingesting sodium with water stimulates more complete rehydration, including better restoration of plasma volume and whole-body fluid balance, than ingesting plain water [94–98]. The increase in blood sodium concentration and osmolality with sodium ingestion stimulates renal water reabsorption, such that urine output is inversely related to the sodium content of the ingested fluid [94]. Rapid and complete restoration of fluid balance via sodium and water ingestion is particularly important during twice-daily practice sessions. In most other situations, water and sodium can be replaced through normal eating and drinking practices (i.e., with meals) [91].

7 Study Limitations, Gaps in the Literature, and Future Directions

Considering the disproportionate death rates from EHS in American Football compared with other sports, it is notable that only five field-based studies have investigated Tc responses in American Football players (Table 1). Further, only one study has investigated Tc responses among high school American Football players [52]. This is surprising, considering that deaths from EHS have been tracked routinely in American Football since the 1960s and that the largest proportion of EHS deaths has occurred in high school players [7]. Work remains to be done at the high school level, as not all states have yet adopted appropriate preseason heat-acclimatization policies. High school athletic directors should consider adopting such policies for preseason practice even if adequate policies are not in place in their state.

Another gap in the literature is that very few studies have been conducted in the hottest regions of the United States (i.e., the southeast, south, and southwest). Of the five field-based studies investigating Tc responses, only one was conducted in the southeastern United States (i.e., Florida) [48], with the majority of work done in the northeast [31, 42–44, 52]. Understanding the challenges players face in hotter regions of the United States will provide valuable insight into the risks associated with preseason practice in these areas. More research is also needed across a range of player ages and regions of the United States to gain a more comprehensive understanding of the fluid intake behaviors of American Football players. In addition, future work is warranted to inform clear recommendations on how much sodium needs to be replaced during training to maintain physiological or performance outcomes in American Football.

8 Conclusion

Field-based studies have been conducted during the preseason with American Football players competing at the high school, college, and professional levels. A consistent finding across studies is a significant relationship between body size (surface area or body mass) and sweating rate, such that larger players (e.g., linemen and linebackers) exhibit higher sweating rates than smaller players (e.g., backs). Wearing an American Football uniform, whether full or half pads, leads to significantly higher sweating rates than wearing standard athletic clothing (e.g., shorts). Mean sweating rates ranged from 0.6 to 1.3 L/h in studies with youth American Football players and from 1.0 to 2.9 L/h in college and professional players. Despite these high sweating rates, the observed disturbances in fluid balance have generally been mild (mean body mass loss ≤2 %). Sweat sodium concentration of American Football players does not seem to differ from that of athletes in other sports, but given the high volume of sweat loss, the potential for sodium loss is higher in American Football than in other sports. It is well established that sodium ingestion plays an important role in stimulating more complete rehydration after exercise, particularly during twice-daily training sessions. However, future work is needed to better understand how much sodium needs to be replaced during training to maintain physiological or performance outcomes in American Football. In general, youth players exhibit lower sweating rates and lower sweat sodium concentrations than adult American Football players.

Epidemiological studies report disproportionately higher rates of EHI and EHS in American Football than in other sports, with the majority of cases occurring during preseason practice at the hottest time of the year. Field- and laboratory-based studies agree that larger individuals are at greater risk for heat ailments early in the preseason, specifically within the first 3–4 days of practice. Larger players are at increased risk for heat ailments compared with smaller players because of greater BMI, increased body fat, a lower surface area to body mass ratio, lower aerobic capacity, and the stationary nature of the positions they may play, which can reduce heat dissipation. Coaches, athletic trainers, and team personnel should be aware of the unique thermoregulation, fluid balance, and sweat loss challenges American Football players face during preseason practice and should monitor players more diligently, particularly large linemen.

References

Racinais S, Alonso J, Coutts AJ, et al. Consensus recommendations on training and competing in the heat. Sports Med. 2015;45:925–38.

Grantham J, Cheung SS, Connes P, et al. Current knowledge on playing football in hot environments. Scand J Med Sci Sports. 2010;20:161–7.

Maughan RJ, Shirreffs SM. IOC consensus conference on nutrition in sport, 25–27 October 2010, International Olympic Committee, Lausanne, Switzerland. J Sports Sci. 2011;29(Suppl 1):S1.

Bergeron MF. Hydration and thermal strain during tennis in the heat. Br J Sports Med. 2014;48(Suppl 1):i12–7.

Grundstein AJ, Ramseyer C, Zhao F, et al. A retrospective analysis of American football hyperthermia deaths in the United States. Int J Biometeorol. 2012;57:11–20.

Kerr ZY, Casa DJ, Marshall SW, et al. Epidemiology of exertional heat illness among U.S. high school athletes. Am J Prev Med. 2013;44:8–14.

Kucera KL, Klossner D, Colgate B, et al. National Center for Catastrophic Sport Injury Research. Annual survey of football injury research, 1931–2014, University of North Carolina at Chapel Hill, 2014. https://nccsir.unc.edu/files/2013/10/Annual-Football-2014-Fatalities-Final.pdf. Accessed Jan 2016.

Armstrong LE, Casa DJ, Millard-Stafford M, et al. American College of Sports Medicine position stand. Exertional heat illness during training and competition. Med Sci Sports Exerc. 2007;39(3):556–72.

Huffman EA, Yard EE, Fields SK, et al. Epidemiology of rare injuries and conditions among United States high school athletes during 2005–2006 and 2006–2007 school years. J Athl Train. 2008;43:624–30.

Yard EE, Gilchrist J, Haileyesus T, et al. Heat illness among high school athletes-U.S., 2005–2009. J Safety Res. 2010;41:471–4.

Cooper ER, Ferrara MS, Broglio SP. Exertional heat illness and environmental conditions during a single football season in the southeast. J Athl Train. 2006;41:332–6.

Casa DJ, Csillan D. Preseason heat-acclimatization guidelines for secondary school athletics. J Athl Train. 2009;44:332–3.

Casa DJ, DeMartini JK, Bergeron MF, et al. National Athletic Trainers’ Association position statement: exertional heat illnesses. J Athl Train. 2015;50:986–1000.

Kaiser GE, Womack JW, Green JS, et al. Morphological profiles for first-year National Collegiate Athletic Association Division I football players. J Strength Cond Res. 2008;22:243–9.

Bosch TA, Burruss TP, Weir NL, et al. Abdominal body composition differences in NFL football players. J Strength Cond Res. 2014;28:3313–9.

Dengel DR, Bosch TA, Burruss TP, et al. Body composition and bone mineral density of National Football League players. J Strength Cond Res. 2014;28:1–6.

Kraemer WJ, Torine JC, Silvestre R, et al. Body size and composition of National Football League players. J Strength Cond Res. 2005;19:485–9.

Wang MQ, Downey GS, Perko MA, et al. Changes in body size of elite high school football players: 1963–1989. Percept Mot Skills. 1993;76:379–83.

Noel MB, VanHeest JL, Zaneteas P, et al. Body composition in Division I football players. J Strength Cond Res. 2003;17:228–37.

Secora CA, Latin RW, Berg KE, et al. Comparison of physical and performance criteria of NCAA Division I football players: 1987 and 2000. J Strength Cond Res. 2004;18:286–91.

Malina RM, Morano PJ, Barron M, et al. Overweight and obesity among youth participants in American football. J Pediatr. 2007;151:378–82.

Gallagher D, Heymsfield SB, Heo M, et al. Healthy percentage body fat ranges: an approach for developing guidelines based on body mass index. Am J Clin Nutr. 2000;72:694–701.

Melvin MN, Smith-Ryan AE, Wingfield HL, et al. Muscle characteristics and body composition of NCAA division I football players. J Strength Cond Res. 2014;28:3320–9.

Havenith G, Coenen JM, Kistemaker L, et al. Relevance of individual characteristics for human heat stress response is dependent on exercise intensity and climate type. Eur J Appl Physiol Occup Physiol. 1998;77:231–41.

Smith DP, Byrd RJ. Body composition, pulmonary function and maximal oxygen consumption of college football players. J Sports Med Phys Fitness. 1976;16:301–8.

Wilmore JH, Haskell WL. Body composition and endurance capacity of professional football players. J Appl Physiol. 1972;33:564–7.

Howley ET, Bassett DR, Welch HG. Criteria for maximal oxygen uptake: review and commentary. Med Sci Sports Exerc. 1995;27:1292–301.

Epstein Y, Shapiro Y, Brill S. Role of surface area to mass ratio and work efficiency in heat intolerance. J Appl Physiol. 1983;54:831–6.

Wailgum TD, Paolone AM. Heat tolerance of college football linemen and backs. Phys Sportsmed. 1984;12:81–6.

DeMartini JK, Martschinske JL, Casa DJ, et al. Physical demands of National Collegiate Athletic Association Division I football players during preseason training in the heat. J Strength Cond Res. 2011;25:2935–43.

Godek SF, Bartolozzi AR, Burkholder R, et al. Core temperature and percentage of dehydration in professional football linemen and backs during preseason practices. J Athl Train. 2006;41:8–14.

Armstrong LE, Johnson EC, Casa DJ, et al. The American football uniform: uncompensable heat stress and hyperthermic exhaustion. J Athl Train. 2010;45:117–27.

Pascoe DD, Shanley LA, Smith EW. Clothing and exercise I: biophysics of heat transfer between the individual, clothing and environment. Sports Med. 1994;18:38–54.

Gavin TP. Clothing and thermoregulation during exercise. Sports Med. 2003;33:941–7.

Davis JK, Bishop PA. Impact of clothing on exercise in the heat. Sports Med. 2013;43:695–705.

Fox EL, Mathews DK, Kaufman WS, et al. Effects of football equipment on thermal balance and energy cost during exercise. Res Q. 1966;37:332–9.

Mathews DK, Fox EL, Tanzi D. Physiological responses during exercise and recovery in a football uniform. J Appl Physiol. 1969;26:611–5.

NCAA Membership Service Staff. 2005–2006 NCAA Division I Manual. Indianapolis: The National Collegiate Athletic Association. NCAA legislation 17.11.2.3 five day acclimatization period, Division I-A and Division I-AA.

Kulka TJ, Kenney WL. Heat balance limits in football uniforms: how different uniform ensembles alter the equation. Phys Sportsmed. 2002;30:29–39.

McCullough EA, Kenney WL. Thermal insulation and evaporative resistance of football uniforms. Med Sci Sports Exerc. 2003;35:832–7.

Johnson EC, Ganio MS, Lee EC, et al. Perceptual responses while wearing an American football uniform in the heat. J Athl Train. 2010;45:107–16.

Godek S, Godek JJ, Bartolozzi AR. Thermal responses in football and cross-country athletes during their respective practices in a hot environment. J Athl Train. 2004;39:235–40.

Godek SF, Godek JJ, Bartolozzi AR. Hydration status in college football players during consecutive days of twice-a-day preseason practices. Am J Sports Med. 2005;33:843–51.

Yeargin SW, Casa DJ, Armstrong LE, et al. Heat acclimation and hydration status of American football players initial summer workouts. J Strength Cond Res. 2006;20:463–70.

Godek SF, Bartolozzi AR. Hydration and core temperature in a football player during preseason: a case study. Athl Ther Today. 2004;9:64–70.

Deren TM, Coris EE, Bain AR, et al. Sweating is greater in NCAA football linemen independently of heat production. Med Sci Sports Exerc. 2012;44:244–52.

Deren TM, Coris EE, Casa DJ, et al. Maximum heat loss potential is lower in football linemen during an NCAA summer training camp because of lower self-generated air flow. J Strength Cond Res. 2014;28:1656–63.

Coris EE, Mehra S, Walz SM, et al. Gastrointestinal temperature trends in football linemen during physical exertion under heat stress. South Med J. 2009;102:569–74.

Pascoe DD, Bellingar TA, McCluskey BS. Clothing and exercise. II. Influence of clothing during exercise/work in environmental extremes. Sports Med. 1994;18:94–108.

Holmer I, Nilsson H, Havenith G, et al. Clothing convective heat exchange proposal for improved prediction in standards and models. Ann Occup Hyg. 1999;43:329–37.

Korey Stringer Institute. Heat acclimatization guidelines by state. http://ksi.uconn.edu/high-school-state-policies/heat-acclimatization-policies/. Accessed Jan 2016.

Yeargin SW, Casa DJ, Judelson DA, et al. Thermoregulatory responses and hydration practices in heat-acclimatized adolescents during preseason high school football. J Athl Train. 2010;45:136–46.

Hitchcock KM, Millard-Stafford ML, Phillips JM, et al. Metabolic and thermoregulatory responses to a simulated American football practice in the heat. J Strength Cond Res. 2007;21:710–7.

Wenger CB. Heat of evaporation of sweat: thermodynamic considerations. J Appl Physiol. 1972;32:456–9.

Sawka MN, Burke LM, Eichner ER, et al. American College of Sports Medicine position stand. Exercise and fluid replacement. Med Sci Sports Exerc. 2007;39:377–90.

Gagnon D, Jay O, Kenny GP. The evaporative requirement for heat balance determines whole-body sweat rate during exercise under conditions permitting full evaporation. J Physiol. 2013;591:2925–35.

Buresh R, Berg K, Noble J. Heat production and storage are positively correlated with measures of body size/composition and heart rate drift during vigorous running. Res Q Exerc Sport. 2005;76:267–74.

Dennis SC, Noakes TD. Advantages of a smaller body mass in humans when distance running in warm humid conditions. Eur J Appl Physiol Occup Physiol. 1999;79:280–4.

Marino FE, Mbambo Z, Kortekaas E, et al. Advantages of smaller body mass during distance running in warm, humid environments. Pflugers Arch. 2000;441:359–67.

Godek SF, Bartolozzi AR, Burkholder R, et al. Sweat rates and fluid turnover in professional football players: a comparison of National Football League linemen and backs. J Athl Train. 2008;43:184–9.

Godek SF, Bartolozzi AR, Peduzzi C, et al. Fluid consumption and sweating in National Football League and collegiate football players with different access to fluids during practice. J Athl Train. 2010;45:128–35.

McDermott BP, Casa DJ, Yeargin SW, et al. Hydration status, sweat rates, and rehydration education of youth football campers. J Sport Rehabil. 2009;18:535–52.

McLellan TM, Daanen HA, Cheung SS. Encapsulated environment. Compr Physiol. 2013;3:1363–91.

Sawka MN, Leon LR, Montain SJ, Sonna LA. Integrated physiological mechanisms of exercise performance, adaptation, and maladaptation to heat stress. Compr Physiol. 2011;1:1883–928.

Godek SF, Bartolozzi AR, Godek JJ. Sweat rate and fluid turnover in American football players compared with runners in a hot and humid environment. Br J Sports Med. 2005;39:205–11.

Horswill CA, Stofan JR, Lacambra M, et al. Sodium balance during U.S. football training in the heat: cramp-prone vs. reference players. Int J Sports Med. 2009;30:789–94.

Baker LB, Barnes KA, Anderson MA, et al. Normative data for regional sweat sodium concentration and whole-body sweating rate in athletes. J Sports Sci. 2015;12:1–11.

Godek SF, Peduzzi C, Burkholder R, et al. Sweat rates, sweat sodium concentrations, and sodium losses in 3 groups of professional football players. J Athl Train. 2010;45:364–71.

Stover EA, Zachwieja J, Stofan J, et al. Consistently high urine specific gravity in adolescent American football players and the impact of an acute drinking strategy. Int J Sports Med. 2006;27:330–5.

Stofan JR, Osterberg KL, Horswill CA, et al. Daily fluid turnover during preseason training in U.S. college football. Int J Sport Nutr Exerc Metab. 2007;17:340–51.

Stofan JR, Zachwieja JJ, Horswill CA, et al. Sweat and sodium losses in NCAA football players: a precursor to heat cramps? Int J Sport Nutr Exerc Metab. 2005;15:641–52.

Meyer F, Bar-Or O, MacDougall D, et al. Sweat electrolyte loss during exercise in the heat: effects of gender and maturation. Med Sci Sports Exerc. 1992;24:776–81.

Falk B, Bar-Or O, Calvert R, et al. Sweat gland response to exercise in the heat among pre-, mid-, and late-pubertal boys. Med Sci Sports Exerc. 1992;24:313–9.

Inbar O, Morris N, Epstein Y, et al. Comparison of thermoregulatory responses to exercise in dry heat among prepubertal boys, young adults and older males. Exp Physiol. 2004;89:691–700.

Rowland T. Thermoregulation during exercise in the heat in children: old concepts revisited. J Appl Physiol. 2008;105:718–24.

Montain SJ, Coyle EF. Influence of graded dehydration on hyperthermia and cardiovascular drift during exercise. J Appl Physiol. 1992;73:1340–50.

Gonzalez-Alonso J, Mora-Rodriguez R, Below PR, et al. Dehydration reduces cardiac output and increases systemic and cutaneous vascular resistance during exercise. J Appl Physiol. 1995;79:1487–96.

Sawka MN, Young AJ, Francesconi RP, et al. Thermoregulatory and blood responses during exercise at graded hypohydration levels. J Appl Physiol. 1985;59:1394–401.

Fortney SM, Nadel ER, Wenger CB, et al. Effect of blood volume on sweating rate and body fluids in exercising humans. J Appl Physiol. 1981;51(6):1594–600.

Fortney SM, Wenger CB, Bove JR, et al. Effect of hyperosmolality on control of blood flow and sweating. J Appl Physiol. 1984;57(6):1688–95.

Nadel ER, Fortney SM, Wenger CB. Effect of hydration state of circulatory and thermal regulations. J Appl Physiol. 1980;49(4):715–21.

Sawka MN, Young AJ, Francesconi RP, et al. Thermoregulatory and blood responses during exercise at graded hypohydration levels. J Appl Physiol. 1985;59:1394–401.

Cheuvront SN, Kenefick RW. Dehydration: physiology, assessment, and performance effects. Compr Physiol. 2014;4:257–85.

Hew-Butler T, Rosner MH, Fowkes-Godek S, et al. Statement of the Third International Exercise-Associated Hyponatremia Consensus Development Conference, Carlsbad, California. Clin J Sport Med. 2015;25:303–20.

Dimeff RJ. Seizure disorder in a professional American football player. Curr Sports Med Rep. 2006;5:173–6.

Herfel R, Stone CK, Koury SI, et al. Iatrogenic acute hyponatraemia in a college athlete. Br J Sports Med. 1998;32:257–8.

Baker LB, Stofan JR, Hamilton AA, et al. Comparison of regional patch collection vs. whole body washdown for measuring sweat sodium and potassium loss during exercise. J Appl Physiol. 2009;107:887–95.

Shirreffs SM, Maughan RJ. Whole body sweat collection in humans: an improved method with preliminary data on electrolyte content. J Appl Physiol. 1997;82:336–41.

Patterson MJ, Galloway SD, Nimmo MA. Variations in regional sweat composition in normal human males. Exp Physiol. 2000;85:869–75.

Coyle EF. Fluid and fuel intake during exercise. J Sports Sci. 2004;22:39–55.

Shirreffs SM, Sawka MN. Fluid and electrolyte needs for training, competition, and recovery. J Sports Sci. 2011;29:S39–46.

Montain SJ, Cheuvront SN, Sawka MN. Exercise associated hyponatremia: quantitative analysis to understand the aetiology. Br J Sports Med. 2006;40:98–106.

Vrijens DM, Rehrer NJ. Sodium-free fluid ingestion decreases plasma sodium during exercise in the heat. J Appl Physiol. 1999;86:1847–51.

Maughan RJ, Leiper JB. Sodium intake and post-exercise rehydration in man. Eur J Appl Physiol Occup Physiol. 1995;71:311–9.

Maughan RJ, Owen JH, Shirreffs SM, Leiper JB. Post-exercise rehydration in man: effects of electrolyte addition to ingested fluids. Eur J Appl Physiol Occup Physiol. 1994;69:209–15.

Shirreffs SM, Aragon-Vargas LF, Keil M, et al. Rehydration after exercise in the heat: a comparison of 4 commonly used drinks. Int J Sport Nutr Exerc Metab. 2007;17:244–58.

Wemple RD, Morocco TS, Mack GW. Influence of sodium replacement on fluid ingestion following exercise-induced dehydration. Int J Sport Nutr. 1997;7:104–16.

Ray ML, Bryan MW, Ruden TM, et al. Effect of sodium in a rehydration beverage when consumed as a fluid or meal. J Appl Physiol. 1998;85:1329–36.

Du Bois D, Du Bois EF. A formula to estimate the approximate surface area if height and weight be known. Arch Intern Med. 1916;17:863–71.

Ethics declarations

Funding

No sources of funding were used to assist in the preparation of this review.

Conflicts of interest

Jon Davis, Corey Ungaro, Kelly Barnes, Lindsay Baker, and John Stofan are employees of the Gatorade Sports Science Institute, a division of PepsiCo. The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of PepsiCo, Inc.

About this article

Cite this article

Davis, J.K., Baker, L.B., Barnes, K. et al. Thermoregulation, Fluid Balance, and Sweat Losses in American Football Players. Sports Med 46, 1391–1405 (2016). https://doi.org/10.1007/s40279-016-0527-8

DOI: https://doi.org/10.1007/s40279-016-0527-8