Abstract

In countries such as Australia, the UK and Canada, decisions on whether to fund new health technologies are commonly informed by decision analytic models. While the impact of making inappropriate structural choices/assumptions on model predictions is well noted, there is a lack of clarity about the definition of key structural aspects, the process of developing model structure (including the development of conceptual models) and uncertainty associated with the structuring process (structural uncertainty) in guidelines developed by national funding bodies. This forms the focus of this article. Building on the reports of good modelling practice, and recognising the fundamental role of model structuring within the model development process, we specified key structural choices and provided ideas about model structuring for the future direction. This will help to further standardise guidelines developed by national funding bodies, with potential impact on transparency, comprehensiveness and consistency of model structuring. We argue that the process of model structuring and structural sensitivity analysis should be documented in a more systematic and transparent way in submissions to national funding bodies. Within the decision-making process, the development of conceptual models and presentation of all key structural choices would mean that national funding bodies could be more confident of maximising value for money when making public funding decisions.

Within the decision-making process, decision analytic models are frequently used to inform decisions on whether to fund health technologies.

The impact of inappropriate structural choices on model predictions is well noted. However, current guidelines mainly provide generic advice regarding the model structuring process.

To make better-informed public funding decisions, we provided some recommendations to further optimise and standardise the guidelines on issues around model structuring and the characterisation of structural uncertainty, with consequential impact on the accuracy, transparency and comparability of model-based economic evaluations.

1 Introduction

In several countries (e.g. the UK and Australia) public funding decisions are informed by an evaluation of whether the expected additional benefits generated by a new health technology justify its additional cost. Several decision-making bodies, such as the Pharmaceutical Benefits Advisory Committee (PBAC) in Australia and the National Institute for Health and Care Excellence (NICE) in the UK, use decision analytic models to generate economic evidence [1, 2].

The accuracy of estimates of costs and outcomes obtained from decision analytic models is driven by the model development process. Within this process, choices/assumptions are made to inform two key steps: (1) specification of model structural aspects (i.e. model structuring process) such as health states/events included in the model; and (2) identification of the numerical value of model inputs such as baseline risks, treatment effects and utility values (i.e. model populating process) [3]. Due to imperfect information, these processes are inevitably uncertain (termed structural and parameter uncertainty, respectively).

In decision making, both the structuring and populating processes (including the specification of uncertainties associated with these processes) should be fully transparent and evidence based so that decision makers can understand their potential impact on the results of economic evaluation [4]. National funding bodies such as PBAC and NICE have developed guidelines to enhance comparability across submissions and to reflect best practice resulting in better informed public funding decisions. Whilst these guidelines fully address the issues around model input estimates and characterising uncertainty around the true value of model parameters (i.e. parameter uncertainty), it is perhaps surprising that there has been relatively less consistent and specific methodological guidance regarding model structuring practices, including any assessment and characterisation of structural uncertainty [5,6,7,8].

To clarify the concept of model structuring, consider a simple representation of a model expressed as Y = S(P), where Y represents model outputs, P denotes model input estimates and S represents aspects (assumptions) that impose a structural relationship between the model inputs and the model outputs (e.g. using linear predictor functions to estimate model outputs given the estimates of model inputs). The choice of model inputs and how they are to be related are inextricably linked to the choice of structural aspects [9, 10]. This indicates the importance of the process of deciding on what structural aspects/assumptions to include (or exclude) (e.g. whether to include depression as an important health state in a frailty model). The model structuring process is considered to be one of the key stages in the development of a decision analytic model [4]. A relevant point is about uncertainty associated with the choice of structural aspects (assumptions). Structural uncertainty, according to Strong and Oakley [11], arises because we are uncertain about the true structural relationship between the model output (Y) and a set of quantities which form model inputs (P), and this can be quantified as the difference between the model output (at its true inputs) and the true target quantity (the structural error). Parameter sensitivity analysis [e.g. probabilistic sensitivity analysis (PSA)] deals with uncertainty around the true values of P once a set of structural aspects has been chosen, implicitly assuming no uncertainty associated with the choice of S itself. This means that if the process of selecting structural aspects is not appropriate, the PSA cannot characterise all key sources of uncertainty and may provide misleading results. Due to lack of perfect information about the true characterisation of S, structural decisions are inevitably uncertain.
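The distinction between the two sources of uncertainty can be written compactly. The notation below is only a sketch that extends Y = S(P) with symbols that do not appear in the original text: P* for the true (unknown) input values, Z for the true target quantity, δ for the structural error, p(P) for the joint distribution assigned to the inputs in a PSA, and K for the number of PSA draws. The sign convention for δ is an assumption.

```latex
\begin{align}
Y &= S(P) \\
\delta &= Z - S(P^{*}) \\
Y^{(k)} &= S\!\left(P^{(k)}\right), \qquad P^{(k)} \sim p(P), \quad k = 1, \dots, K
\end{align}
```

The third line describes a PSA: every draw passes through the same S, so the spread of the Y^(k) reflects uncertainty in P only and carries no information about δ.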

In recent years, the literature on the impact of choices around model structure on model predictions has grown rapidly [12,13,14,15]. It is well noted that including inappropriate structural aspects (or excluding relevant and important ones), even if we use the true value of inputs, can lead to biased model predictions and, hence, poorly informed policy decisions [16, 17]. For example, Frederix et al. [14] compared 11 model-based evaluations of anastrozole and tamoxifen in patients with early breast cancer, all models using the same clinical trial as a principal source of effectiveness data. They found that alternative choices about structural aspects (e.g. alternative health states, time dependency in estimation of transition probabilities) can result in widely divergent estimates of outcome measures (e.g. life-years gained). Le [18] examined the impact of alternative health states and transitions between them in Markov models for the treatment of advanced breast cancer (a Markov model including stable disease, responding to therapy, disease progressing and death vs. a similar model excluding the responding to therapy state). The study found a wide range of cost-effectiveness results, suggesting the need to investigate the impact of structural choices on model-based economic evaluations in a more systematic way.

In the absence of sufficient and good-quality guidance, analysts may use different approaches (often subjective) to make structural decisions and deal with structural uncertainty. These inconsistencies can potentially produce discrepancies in model predictions, undermining funding decisions. This forms the focus of this article.

Building on reports on good modelling practice, including those of the International Society for Pharmacoeconomics and Outcomes Research–Society for Medical Decision Making [ISPOR-SMDM] Modeling Good Research Practices Task Force [19] and the Technical Support Document from the NICE Decision Support Unit (DSU) [20], we review and critique the literature relating to the model structuring process (including model conceptualisation) and draw conclusions.

This article represents a contribution to good practice for modelling with a focus on the public funding decision-making process. We first provide an overview of the available literature concerning model structuring, clarifying what exactly model structuring means (Sect. 2). Key structural aspects are then specified (Sect. 3), and uncertainty associated with structural choices discussed (Sect. 4). In the absence of a specific definition of structural aspects, this section provides a framework to define and specify key structural choices, enhancing the transparency and consistency of the model structuring process through improved communications between key stakeholders (including sponsors of new health technologies, evaluators of submissions to funding bodies and policy makers). We also review the guidelines developed by some major national funding bodies to clarify the extent to which good practices have been adhered to in these guidelines (Sect. 5), and suggest ideas about how model structuring can be presented in a more systematic way in the future, including the characterisation of uncertainty associated with choice of alternative structural aspects (Sect. 6).

We argue that an appropriate model structuring process should inform the most appropriate base-case analysis. The process will also inform alternative plausible structural choices as potential sources of structural uncertainty, which should be characterised.

It is important to note that this review is not intended to address every aspect of model structuring (e.g. how to identify and use evidence to inform structural aspects), nor to prescribe a set of comprehensive guidelines. Instead, we focus on some key issues within the process of model structuring and identify areas for further methodological improvements in the decision-making process. Also, it is not intended to systematically review guidelines developed by national funding bodies. While the article focuses on decision-making bodies such as the PBAC, NICE, the Canadian Agency for Drugs and Technologies in Health (CADTH), and the French national guidelines, the discussion can be applied more widely to other national funding bodies that use economic evaluation to inform funding decisions, e.g. the Medical Services Advisory Committee (MSAC) in Australia.

2 Model Structuring: Definitions

The aim of model structuring is to first systematically identify the best available evidence to inform the most appropriate model structural aspects (e.g. clinical evidence to inform the progression of the condition under study). The process, by informing alternative plausible structural choices, also helps identify areas of structural uncertainty [21,22,23].

A key issue is how structural aspects are defined and identified [3]. Lack of clarity about structural aspects of a model can make it difficult to have a meaningful discussion about model structuring. In decision analytic modelling, structural aspects have been given varying interpretations, broadly defined as “the assumptions imposed by the modelling framework” [24], “the assumptions inherent in the decision model” [22], “the assumptions we make about the structure of the model” [25], “uncertainty about the correct functional forms for combining the inputs” [26] and “…modelling approach, structural assumptions, model type” [3]. These definitions provide some sense of what structural aspects are, but only in the most general terms. Bojke et al. [12] and Claxton et al. [21] provided some examples of structural choices, including the choice of events/states, alternative statistical models to estimate input parameters (including extrapolation issues), the choice of evidence (assessment of applicability) and the choice of relevant comparators. Examples provided by Bilcke et al. [27] include the choice of disease states, dynamic or static transition probabilities, and type of functions used to extrapolate data. Recently, more specific examples of structural aspects were provided by Drummond et al. [4]. They defined a few common structural issues: events and patient risk profile included in the model, the durability of the effectiveness of an intervention, the possibility of the occurrence of events of interest (once vs. several times), and the choice between time-varying and constant probabilities.

Although some examples of structural aspects/assumptions are included in the existing literature, a consensus on the specification of all key structural choices has yet to be reached. There is a need to compile and represent structural aspects in a more systematic and consistent way. Moreover, as discussed in more detail in Sect. 3, some examples of model structural aspects do not truly reflect structural aspects. For example, the choice of relevant comparators is often considered as part of the process of defining and understanding the decision problem (also known as problem conceptualisation) [6, 19, 25].

3 Model Structural Aspects

Choices about key structural aspects pertain to three categories: (1) important health states/events and patient attributes (represented by conceptual modelling); (2) other structural choices/assumptions that determine how inputs are estimated and are related; and (3) choice of modelling technique (implementation platform) [28, 29]. These choices can affect other aspects of modelling including input data requirements (i.e. model populating).

3.1 Conceptual Modelling

Model conceptualisation is broadly defined as the abstraction of reality at an appropriate level of simplification [28]. In economic evaluation, the conceptualisation process is considered as a central element of model structuring. It is about how the model appropriately reflects the natural history of the disease and the impact of competing technologies on the disease progression (including treatment-related important side effects) [6]. It is well noted that the process of model conceptualisation should not be driven by data availability to ensure the conceptual model is truly representative of the condition under study [19].

Model conceptualisation is represented by two key choices:

1. Relevant and ‘significant’ health states/events (including the choice of relevant surrogate endpoints if needed). ‘Significant’ states/events are defined with respect to the impact of the health state/event on disease progression (i.e. the strength of relationship between the disease and the health state/event), as well as its impact on associated costs and/or economically important health outcomes (e.g. life expectancy and quality of life). For example, breast cancer progression can be represented by three states (disease free, relapse, death). However, if there is some evidence pointing to ‘significant’ differences between the disaggregated events (from a clinical and economic perspective), the relapse state should be disaggregated into two states (locoregional relapse, distant metastasis). Further, by the same token, the distant metastasis state can be disaggregated into two states (bone and visceral) resulting in a five-state model. The potential impact of the exclusion of relevant and significant health states/events on model predictions has been previously discussed. For example, to estimate costs and effects of eight antidepressant drugs, a depression model included the possibility of ‘response’ as the only outcome measure [30]. Given the recurring nature of depression (with possibility of experiencing depressive episodes, response, remission and recovery), this model structure is likely to bias results in favour of interventions with higher rates of response, which may be the more or the less effective treatment overall [31].

-

2.

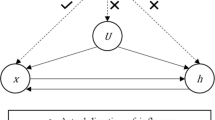

Within the model conceptualisation process, another key structural choice is about ‘important’ patient attributes that influence disease progression (risk of health states/events) or intervention effect. The important attributes may include (1) socio-demographic risk factors such as age and sex (e.g. the impact of age on the progression of frailty); (2) clinical risk profile such as the level of glycosylated haemoglobin (HbA1c) as an important factor predicting the risk of a number of diabetes-related complications; and (3) history of prior clinical events which can influence the risk of experiencing subsequent states/events in the model (i.e. as important predictor of subsequent events). An example is the inclusion of the number of previous depressive episodes as an important predictor of subsequent depressive episodes (i.e. increasing the probability of recurrence with every previous depressive episode) [31].
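To make the role of the chosen health states concrete, the following minimal Python sketch runs a cohort through the three-state breast cancer structure mentioned in the first choice above (disease free, relapse, death). It is an illustration only: the transition probabilities, the annual cycle length and the 10-cycle horizon are hypothetical values, not estimates from any study, and the function names are invented for this example.

```python
# Minimal Markov cohort sketch. All numbers are hypothetical.
STATES = ["disease_free", "relapse", "death"]

# Hypothetical annual transition probabilities; each row sums to 1.
TRANSITIONS = {
    "disease_free": {"disease_free": 0.85, "relapse": 0.10, "death": 0.05},
    "relapse": {"disease_free": 0.00, "relapse": 0.70, "death": 0.30},
    "death": {"disease_free": 0.00, "relapse": 0.00, "death": 1.00},
}


def run_cohort(transitions, start, cycles):
    """Return the state occupancy at cycle 0 and after each later cycle."""
    trace = [dict(start)]
    for _ in range(cycles):
        current = trace[-1]
        nxt = {state: 0.0 for state in STATES}
        for origin, share in current.items():
            for dest, prob in transitions[origin].items():
                nxt[dest] += share * prob
        trace.append(nxt)
    return trace


def life_years(trace):
    """Sum the proportion alive over cycles 1..n.

    No half-cycle correction and no discounting, to keep the sketch short.
    """
    return sum(1.0 - occupancy["death"] for occupancy in trace[1:])


start = {"disease_free": 1.0, "relapse": 0.0, "death": 0.0}
trace = run_cohort(TRANSITIONS, start, cycles=10)
print(round(life_years(trace), 3))
```

Disaggregating relapse into locoregional relapse and distant metastasis would mean adding states and rows to `TRANSITIONS`, which shows how a structural choice directly changes the inputs that must then be populated.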

3.2 Other Key Structural Choices

The model structuring process also involves a number of other key structural choices/assumptions that have an impact on model predictions. These choices impose structural relationships between model inputs such that the impact of input parameters on model estimates is influenced by these choices.

1. Choices about the duration of treatment effects beyond the follow-up period: this includes a one-time benefit representing no treatment effect after trial follow-up (i.e. parallel survival curves), a continuous benefit (i.e. divergent survival curves) and a rebound effect (i.e. convergent survival curves, e.g. patients in the treatment arm receiving a prosthesis die faster than the control patients beyond trial follow-up) [4]. The choice of assumption about the durability of the effectiveness of an intervention relative to comparators in the evaluation of zidovudine in patients with HIV infection (one-time benefit vs. continuous effect) has been shown to considerably impact model predictions [32]. Reporting the cost effectiveness of implantable cardioverter defibrillators compared with drug therapy in patients with cardiac arrhythmia, Hlatky et al. [33] found that an assumption of convergent survival versus parallel survival resulted in incremental cost-effectiveness ratios (ICERs) of US$91,500/quality-adjusted life-year (QALY) and US$44,900/QALY, respectively.

2. Choices about the relationship between time and transition probabilities: this includes time dependency of probabilities, as the parameter value changes over time (constantly or variously) or remains fixed over the time horizon of the evaluation. If the choice is inappropriately made, it may produce misleading results. In a depression model, for example, the analyst must decide whether the transition probability from the depression state to death (suicide) increases as the time the patient has been in the depression state increases, and if so whether it increases constantly or variously [34].

3. Choices about the effect of the treatment on transitions between states/events included in the model: this choice is about whether we assume the treatment effect can act on some or all forward transitions (representing disease progression), some or all backward transitions (representing prevention), or both. In a depression model, for example, assumptions can be made about the effect of treatment on transition from ‘remission’ to ‘depressive episode’ or on both forward and backward transitions (e.g. reducing the risk of transition from ‘remission’ to ‘depressive episode’). Price et al. [35] have demonstrated that the choice of alternative assumptions regarding varying treatment effects on forward and backward transitions in a model for asthma could alter estimates of cost effectiveness.

4. Choices about statistical methods for estimating health outcomes after the observation period (relating to measures of disease progression): an important element of model-based economic evaluations is the use of survival analysis to estimate long-term transition probabilities. This is because of a temporal gap between the time horizon of available sources (most often short-term) and the appropriate model’s analytic horizon. There are alternative methods of survival analysis including semi-parametric (Cox proportional hazards) and, more commonly, parametric modelling methods such as exponential and Weibull, as well as more flexible models (e.g. piecewise models). Studies have shown that the choice of survival functions can have a significant impact on the results of economic evaluations [36]. This is particularly so when a substantial amount of extrapolation beyond a follow-up period is needed [37]. For example, evaluating submissions to NICE, Connock et al. [38] found that the choice of a log-logistic model (selected by the manufacturer for the base-case evaluation) doubled the overall survival gain relative to that derived from a Weibull model, with significant impact on cost-effectiveness results. The authors highlighted the need for a consistent and evidence-based process without which there is a risk of biased economic evaluations.

5. Choices about the approaches used to estimate QALYs (preferred outcome measures to inform funding decisions) and costs. An important example is about how utility values are estimated. Various instruments exist to measure utility values including direct elicitation methods (e.g. visual analogue scale [VAS]) and indirect elicitation methods (e.g. EuroQol [EQ]-5D) (see Drummond et al. [4] and Ara and Brazier [39] for details). It is well noted that the use of different instruments may result in different utility values for the same condition. For example, a recent study examined the utility values of frail people in nursing homes and found a significant difference using VAS (0.65) and EQ-5D (0.26) [40]. A relevant issue is about alternative approaches used to estimate joint health state values when data are available only for relevant single conditions (e.g. a patient with diabetes who experiences myocardial infarction). These include, but are not limited to, non-parametric approaches such as additive, multiplicative and minimum methods [41]. It has been reported that using different approaches can produce very different estimates of utility values, potentially affecting model predictions [42]. To clarify the issue, suppose that there are two health events (A and B) with utility values of 0.70 and 0.63, respectively. Using different approaches, the joint utility value if a patient experiences both events is 0.33 (additive), 0.44 (multiplicative) and 0.63 (minimum estimator). Regarding cost measures, the choice of statistical methods to estimate costs (e.g. generalised linear models vs. standard linear models) can be considered as another structural choice in model-based evaluations with a potential impact on cost estimates, and hence the results of economic evaluation [25, 43].
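The joint utility figures in the fifth choice above can be reproduced with a few lines of Python. This is a minimal sketch in which the function names are invented for illustration and the baseline utility without either event is assumed to be 1.0 (full health), an assumption that is consistent with the quoted values but not stated explicitly in the text.

```python
def additive(u_a, u_b):
    """Subtract both utility decrements from an assumed baseline of 1.0."""
    return 1.0 - ((1.0 - u_a) + (1.0 - u_b))


def multiplicative(u_a, u_b):
    """Multiply the two single-condition utilities (baseline assumed 1.0)."""
    return u_a * u_b


def minimum(u_a, u_b):
    """Take the lower of the two single-condition utilities."""
    return min(u_a, u_b)


u_a, u_b = 0.70, 0.63  # utility values for events A and B from the text
joint = {
    "additive": additive(u_a, u_b),
    "multiplicative": multiplicative(u_a, u_b),
    "minimum": minimum(u_a, u_b),
}
for name, value in joint.items():
    print(f"{name}: {value:.2f}")
# additive: 0.33
# multiplicative: 0.44
# minimum: 0.63
```

The three estimators span almost a twofold range for the same pair of inputs, which is why the choice between them is treated here as a structural choice rather than a matter of parameter values.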

3.3 Choice of Modelling Technique (Implementation Platform)

The choice of appropriate modelling technique and factors influencing the choice have been previously discussed in the literature [16, 19, 44]. Ideally, the choice of modelling technique should be informed by choices around other structural aspects. If, for example, the model structuring process specifies several (time-dependent) states/events with a number of important patient attributes, then individual sampling models such as individual-based state-transition models or discrete event simulations (DESs) are preferred choices (over, for example, Markov models). Pennington et al. [45] developed DESs to include additional structural assumptions to evaluate costs and effects of smoking cessation strategies and found a significant change in the results when compared with a Markov model. In practice, due to different reasons (e.g. data availability to populate the model according to the structure specified during model structuring), some structural aspects might be excluded (or simplified), and hence a less complex modelling technique might be needed.

4 Uncertainty Associated with Model Structuring

In previous sections we outlined the model structuring process and specified key structural choices. In this section we discuss uncertainty associated with this process and briefly present the main approaches to characterise this type of uncertainty.

Using a transparent and evidence-based process, model structuring generates a conceptual model including all significant health states/events (and surrogate endpoints), transition between them and important patient attributes, and specifies key structural choices/assumptions. However, due to lack of perfect information, uncertainty exists around some of the structural choices.

Structural uncertainty is associated with both the conceptual model and key structural choices/assumptions. Within the model structuring process, alternative choices/assumptions that are plausible, but uncertain given the available evidence, should be specified. It is important to note that not all structural aspects are considered as sources of structural uncertainty. For example, although the choice on the type of modelling techniques is considered as part of model structuring, it is not a source of uncertainty. The choice is clearly informed by the conceptual model and other structural aspects.

Similar to other sources of uncertainty (e.g. parameter uncertainty), if alternative plausible choices/assumptions exist, there is a need to characterise structural uncertainty appropriately using suitable approaches. Details of the main approaches to deal with structural uncertainty have been discussed elsewhere, including scenario analysis, model selection, model averaging, parameterisation and discrepancy [5, 12]. Briefly, scenario analysis involves the presentation of alternative models based on alternative plausible structural choices/assumptions. This approach puts the burden of assessing the relative plausibility of alternative structures on funding bodies. Model selection involves ranking alternative models according to some measures of prediction performance or goodness-of-fit, and selecting the best approximating model. A major limitation of this approach is that it considers the model with the best predictive ability as the most credible model, effectively assuming no structural uncertainty associated with the model. In the model averaging approach, weights are assigned to alternative models based on their predictive performance against the observed data or derived using expert elicitation methods [46]. The adequacy of weights assigned to alternative scenarios is frequently mentioned as one of the key limitations associated with model averaging. In the parameterisation approach, some sources of structural uncertainty can be expressed as extra uncertain parameters in the model. By assigning probability distributions (derived from prior information or expert elicitation), uncertain structural aspects can be incorporated into PSA, thereby characterising both parameter and structural uncertainty. The model discrepancy approach assumes that each alternative model is only an approximation of reality. The focus of this approach is on quantifying judgements about model discrepancy, that is the difference between the model prediction and reality (i.e. the true value of the target that is being modelled).
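Three of these approaches (scenario analysis, model averaging and parameterisation) can be contrasted in a short Python sketch. Everything in it is a hypothetical illustration: the two structures (a one-time versus a continuous treatment benefit), the annual QALY gain distribution, the follow-up and extrapolation periods, and the plausibility weights are invented, and the outcome is a deliberately simplified incremental QALY figure rather than the output of a full decision model.

```python
import random
import statistics


def one_time_benefit(effect, years_followup, years_extrap):
    """Hypothetical structure A: effect applies during trial follow-up only
    (years_extrap is accepted but deliberately ignored)."""
    return effect * years_followup


def continuous_benefit(effect, years_followup, years_extrap):
    """Hypothetical structure B: effect persists through the extrapolated period."""
    return effect * (years_followup + years_extrap)


STRUCTURES = {"one_time": one_time_benefit, "continuous": continuous_benefit}
WEIGHTS = {"one_time": 0.6, "continuous": 0.4}  # hypothetical plausibility weights


def draw_effect(rng):
    """Sample a hypothetical annual QALY gain (parameter uncertainty)."""
    return rng.gauss(0.05, 0.01)


def psa(structure, n_draws, rng):
    """Conventional PSA: sample the parameter, hold the structure fixed."""
    return [structure(draw_effect(rng), 3, 7) for _ in range(n_draws)]


def parameterised_psa(n_draws, rng):
    """Parameterisation: the structure is sampled alongside the parameter."""
    names = list(STRUCTURES)
    probs = [WEIGHTS[name] for name in names]
    draws = []
    for _ in range(n_draws):
        name = rng.choices(names, weights=probs)[0]
        draws.append(STRUCTURES[name](draw_effect(rng), 3, 7))
    return draws


rng = random.Random(1)

# Scenario analysis: report each structure separately.
scenario_means = {
    name: statistics.mean(psa(func, 5000, rng)) for name, func in STRUCTURES.items()
}

# Model averaging: combine the scenario results using the weights.
averaged = sum(WEIGHTS[name] * mean for name, mean in scenario_means.items())

# Parameterisation: one PSA that carries both sources of uncertainty.
combined = parameterised_psa(5000, rng)

print(scenario_means, round(averaged, 3), round(statistics.stdev(combined), 3))
```

In this sketch the spread of `combined` is wider than the spread of either single-structure PSA, because it also reflects uncertainty about which structure holds. The result remains only as credible as the weights, which is the limitation noted above for model averaging.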

5 Summary of Guidelines Developed by National Funding Bodies

In this section we summarise current methodological guidance relating to model structuring (and structural uncertainty) published by a number of major national funding bodies, including those from the UK, Canada, Australia and France (representing the European Union) (see Table 1).

The NICE guidelines [47] request “full documentation and justification of structural assumptions” (including “assumptions used to extrapolate the impact of treatment”) and state that “disease states should reflect the underlying biological process of the disease in question and the impact of interventions”. The guidelines acknowledge the importance of uncertainty around the “structural assumptions” and provide a few examples of structural uncertainty, including “different states of health” and “alternative methods of estimation”. To characterise structural uncertainty, the NICE guidelines recommend scenario analysis of a range of plausible choices/assumptions.

The recently updated CADTH guidelines [48] make specific reference to model conceptualisation as a means of determining an appropriate model structure. Specifically, the process involves “the development of a model structure that is defined by specific states or events and the relationships among them.” They maintain that “the model structure must be clinically relevant” and “consistent with the current knowledge of the biology of the health condition.” The guidelines define structural uncertainty as “those components of the decision problem that are not captured by the parameters (e.g., the clinical pathway with and without treatment, model structure, time dependency, and the functional forms chosen for model inputs)”, and recommend that “structural uncertainty should be addressed through scenario analyses.”

Compared with previous versions, the recently updated PBAC guidelines include an increased focus on model structuring (e.g. model conceptualisation and extrapolation issues) [49]. The PBAC guidelines highlight the importance of the model structure capturing “all relevant health states or clinical events along the disease or condition pathway”. The guidelines also request that it be considered “whether, for a given patient, an event experienced in the model should influence the risk of experiencing subsequent events” (as one of the examples of structural aspects). To characterise structural uncertainty, the PBAC guidelines state that multiple plausible structures should be defined and recommend that analysts “parameterise structural assumptions where there is sufficient clinical evidence or expert opinion to do so. Alternatively, use scenario analyses to assess the impact of assumptions around the structure of the economic model.”

The French national guidelines ask for justification of model structure (e.g. health states/events and their timing) [50]. The guidelines highlight the importance of structural sensitivity analysis and define structural uncertainty as uncertainty relating to the choice of the type of model, the selection of states, patterns of intervention, alternative methods for extrapolating health outcomes after the end of the observation period, the cycle length in a Markov model, etc. The guidelines recommend scenario analysis and model averaging (if alternative scenarios lead to different funding decisions) to characterise structural uncertainty.

Although the current guidelines developed by major national funding bodies acknowledge the role of model structuring and structural uncertainty, they only provide vague direction regarding these issues. For example, it seems that the CADTH guidelines refer to model structure as representing only the states/events included in the model. The review of guidelines also demonstrates that recommendations for the model structuring process (including the conceptualisation process) are highly non-specific. In terms of structural sensitivity analysis, some guidelines, e.g. the French guidelines, consider the choice of modelling technique as a source of uncertainty. As discussed previously, this choice is driven by other structural aspects. The PBAC guidelines provide more specific guidance on formal approaches to characterise structural uncertainty.

Overall, although there have been important revisions in the updated versions of some national guidelines, their brevity and vagueness may lead to inconsistencies in how model structuring is presented and evaluated in the decision-making process.

6 Future Direction

Within the decision-making process, there remains scope for improvements to explicitly and systematically present and justify all key structural aspects of a model (e.g. explicit demonstration of the model conceptualisation process) and characterise structural uncertainty associated with structural choices.

One way to address these issues is a two-step process, with the first step involving the use of the best available evidence to inform all key structural aspects in a more explicit and systematic way. As noted earlier, it is beyond the scope of this article to discuss how to identify and select evidence and methods to inform the structural choices specified here (e.g. methods to choose survival functions), and there are a number of published methodological guidelines and recommendations reflecting good practices to inform model structural aspects. For example, the NICE DSU describes a systematic approach to guide the extrapolation process (including a Survival Model Selection for Economic Evaluations Process Chart) [51]. A report by the ISPOR-SMDM Modeling Good Research Practices Task Force [19] and the Technical Support Document from the NICE DSU [20] provide detailed practical guidance on model conceptualisation in decision analytic modelling. Key sources to inform the conceptual model include published clinical literature (including natural history studies and clinical guidelines), literature on relevant economic evaluations (focusing on the structure of the existing models) and expert opinion (e.g. Delphi process) [52]. Model validity is another important issue within the model development process [53]. Expert opinion can be used to assess the face validity of the conceptual model and structural assumptions.

In submissions to national funding bodies, the conceptual model development process and all other underlying structural choices/assumptions should be explicitly reported and justified (i.e. written documentation of the proposed model structural aspects, the process used to identify evidence to inform structural aspects, factors influencing the choices/assumptions, expert consultations, and graphical presentations such as influence diagrams) [19]. A key part of this process is to ensure that all structural choices and assumptions reflect the best available evidence. Failure to reflect the current best knowledge of the disease under study, and failure to use good practice guidelines and recommendations to inform the choices about key structural aspects, are considered important sources of modeller’s bias and should be avoided [54]. By reducing the risk of modeller’s bias, it is expected that this first step will facilitate the development of a robust base-case model structure addressing the following questions:

- What structural aspects are considered to be relevant, and hence included in the final model, and why?

- What structural aspects are excluded from the final model and why? (For example, because of a lack of reliable evidence and resources such as time. This means that the final base-case model can be a subset of the conceptual model.) What is the expected impact of such exclusions on the results of economic evaluation?

- What are alternative plausible structural choices/assumptions?

The focus of the second step is then on any uncertainty associated with the model structuring process. Ideally, the uncertainty associated with all alternative plausible structural assumptions should be formally quantified. However, it is important to recognise the difficulty associated with this process in decision making, including requirements for additional resources and time. The choice of the approach to characterise structural uncertainty also depends on the preferences of appraisal committees such as PBAC (e.g. acceptance of expert opinion, quantitative or qualitative approaches to judge the potential impact of alternative plausible assumptions on model predictions).

The impact of alternative plausible structural assumptions on model predictions should be fully presented. If it is judged that alternative structural choices/assumptions lead to substantially different model predictions, a formal approach to characterise the uncertainty should be used. Recent frameworks for addressing structural uncertainty have recommended parameterisation as a preferred approach [12, 15, 27]. In the absence of data to inform an appropriate probability distribution (i.e. no prior information and no sufficiently reliable expert beliefs), or when a particular structural aspect is not meaningfully parameterisable, scenario analysis is recommended.
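The distinction between parameterisation and scenario analysis can be sketched with a toy example. The code below is illustrative only: the structural aspect (whether a treatment effect persists beyond the observation period), the `persistence` parameter, the Beta distribution and every numerical value are assumptions introduced here, not methods or data from the cited frameworks. Under parameterisation, the structural assumption is expressed as an uncertain parameter and propagated by simulation; under scenario analysis, the model is simply re-run at fixed alternative assumptions.

```python
import random
import statistics


def incremental_qalys(persistence, annual_gain=0.10,
                      years_observed=2, years_extrapolated=8):
    """Toy model (hypothetical values): the annual QALY gain observed in the
    trial is scaled by `persistence` (0 to 1) over the extrapolated period."""
    observed = annual_gain * years_observed
    extrapolated = persistence * annual_gain * years_extrapolated
    return observed + extrapolated


def scenario_analysis(scenarios):
    """Re-run the model at each fixed structural assumption."""
    return {name: incremental_qalys(value) for name, value in scenarios.items()}


def parameterised_analysis(alpha, beta, n_draws=10_000, seed=1):
    """Treat `persistence` as uncertain with an assumed Beta(alpha, beta)
    distribution and propagate it through the model by Monte Carlo sampling.
    Returns the mean and an approximate 95% interval of incremental QALYs."""
    rng = random.Random(seed)
    draws = [incremental_qalys(rng.betavariate(alpha, beta))
             for _ in range(n_draws)]
    cut_points = statistics.quantiles(draws, n=40)  # steps of 2.5%
    return statistics.mean(draws), cut_points[0], cut_points[-1]


# Scenario analysis: no distribution is available, so extremes are compared.
print(scenario_analysis({"effect stops at end of trial": 0.0,
                         "effect fully maintained": 1.0}))

# Parameterisation: a distribution is available (here a hypothetical Beta(2, 2)).
print(parameterised_analysis(alpha=2, beta=2))
```

The parameterised version yields a single distribution of results that can be combined with parameter uncertainty in a probabilistic analysis, whereas the scenario version leaves the decision maker to weigh the alternative results informally.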

Within the decision-making process, the development of conceptual models and presentation of all key structural choices in a more systematic way (including uncertainty associated with this process) can result in better informed public funding decisions.

7 Conclusion

This article provides an overview of key structural aspects of a model, including the characterisation of uncertainty associated with structural choices/assumptions. Of note is our attempt to harmonise the terminology around the key structural aspects, which can potentially improve communications about model structuring between key stakeholders within the decision-making process. Model structuring should follow the same process for any type of intervention (e.g. medical devices, vaccines, pharmaceuticals), and the structuring process should reflect what is clinically and economically relevant for a health condition at a particular point in time. Model structuring should not rely on the approach previously used and validated in the literature, as new evidence may emerge that changes our understanding of the disease process and the way in which costs and outcomes develop over time.

In recent years, the literature on the potential impact of alternative structural choices/assumptions on model predictions has been growing, yet guidelines developed by national funding bodies (such as PBAC and NICE) provide limited procedural guidance on how to systematically present the model structuring process within the evaluation process. We have discussed the potential consequences of poorly structured decision analytic models, and provided some recommendations which are intended to improve transparency, comprehensiveness and consistency of model structuring. National funding bodies should work with evaluation groups and manufacturers (sponsors of new health technologies) to further optimise and standardise the guidelines on issues around model structuring and structural sensitivity analysis.

Our proposal is not intended to impose restrictions on current modelling practice within the decision-making process, but rather to offer suggestions for a more transparent and evidence-based practice. While the focus of this article has been on a limited number of national funding bodies, the discussion can be applied to other national funding bodies that use model-based economic evaluation to inform funding decisions and to which the same limitations apply.

References

Buxton MJ, Drummond MF, Van Hout BA, Prince RL, Sheldon TA, Szucs T, et al. Modelling in economic evaluation: an unavoidable fact of life. Health Econ. 1997;6:217–27.

Caro JJ, Briggs AH, Siebert U, et al. Modeling good research practices–overview: a report of the ISPOR-SMDM modeling good research practices task force-1. Med Decis Making. 2012;32:667–77.

Cooper NJ, Sutton AJ, Ades AE, Paisley S, Jones DR, Economic WGUE. Use of evidence in economic decision models: practical issues and methodological challenges. Health Econ. 2007;16:1277–86.

Drummond MF, Sculpher MJ, Claxton K, et al. Methods for the economic evaluation of health care programmes. 4th ed. Oxford: Oxford University Press; 2015.

Haji Ali Afzali H, Karnon J. Exploring structural uncertainty in model-based economic evaluations. Pharmacoeconomics. 2015;33:435–43.

Tappenden P. Conceptual modelling for health economic model development. HEDS Discussion Paper 12/05. 2012. http://eprints.whiterose.ac.uk/74464/1/HEDSDP1205.pdf. Accessed 1 Dec 2015.

Ghabri S, Cleemput I, Josselin JM. Towards a new framework for addressing structural uncertainty in health technology assessment guidelines. Pharmacoeconomics. 2018;36:127–30.

Andronis L, Barton P, Bryan S. Sensitivity analysis in economic evaluation: an audit of NICE current practice and a review of its use and value in decision-making. Health Technol Assess. 2009;13:iii, ix–xi, 1–61.

Cooper NJ, Coyle D, Abrams KR, et al. Use of evidence in decision models: an appraisal of health technology assessments in the UK to date. J Health Serv Res Policy. 2005;10:245–50.

Draper D. Assessment and propagation of model uncertainty. J R Stat Soc Series B Stat Methodol. 1995;57:45–97.

Strong M, Oakley JE. When is a model good enough? Deriving the expected value of model improvement via specifying internal model discrepancies. SIAM/ASA J Uncertain Quantif. 2014;2:106–25.

Bojke L, Claxton K, Sculpher M, et al. Characterizing structural uncertainty in decision analytic models: a review and application of methods. Value Health. 2009;12:739–49.

Brisson M, Edmunds WJ. Impact of model, methodological, and parameter uncertainty in the economic analysis of vaccination programs. Med Decis Making. 2006;26:434–46.

Frederix GWJ, van Hasselt JGC, Schellens JHM, Hovels A, Raaijmakers JAM, Huitema ADR, et al. The impact of structural uncertainty on cost-effectiveness models for adjuvant endocrine breast cancer treatments: the need for disease-specific model standardization and improved guidance. Pharmacoeconomics. 2014;32:47–61.

Jackson CH, Bojke L, Thompson SG, Claxton K, Sharples LD. A framework for addressing structural uncertainty in decision models. Med Decis Making. 2011;31:662–74.

Brennan A, Chick SE, Davies R. A taxonomy of model structures for economic evaluation of health technologies. Health Econ. 2006;15:1295–310.

Squires H, Chilcott J, Akehurst R, Burr J, Kelly MP. A systematic literature review of the key challenges for developing the structure of public health economic models. Int J Public Health. 2016;61:289–98.

Le QA. Structural uncertainty of Markov models for advanced breast cancer: a simulation study of lapatinib. Med Decis Making. 2016;36:629–40.

Roberts M, Russell LB, Paltiel AD, et al. Conceptualizing a model: a report of the ISPOR-SMDM modeling good research practices task force-2. Med Decis Making. 2012;32:678–89.

Kaltenthaler E, Tappenden P, Paisley S, Squires H. Identifying and reviewing evidence to inform the conceptualisation and population of cost-effectiveness models. NICE DSU Technical Support Document 13. Sheffield: School of Health and Related Research, University of Sheffield; 2011 [December 2015]. http://www.nicedsu.org.uk. Accessed 9 May 2018.

Claxton K, Palmer S, Longworth L, Bojke L, Griffin S, McKenna C, et al. Informing a decision framework for when NICE should recommend the use of health technologies only in the context of an appropriately designed programme of evidence development. Health Technol Assess. 2012;16:1–323.

Briggs AH, Weinstein MC, Fenwick EA, Karnon J, Sculpher MJ, Paltiel AD, et al. Model parameter estimation and uncertainty: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force–6. Value Health. 2012;15:835–42.

Weinstein MC, O’Brien B, Hornberger J, Jackson J, Johannesson M, McCabe C, et al. Principles of good practice for decision analytic modeling in health-care evaluation: report of the ISPOR task force on good research practices-modeling studies. Value Health. 2003;6:9–17.

Briggs AH, Claxton K, Sculpher MJ. Decision modelling for health economic evaluation. Oxford: Oxford University Press; 2006.

Gray AM, Clarke PM, Wolstenholme JL, et al. Applied methods of cost effectiveness analysis in health care. New York: Oxford University Press; 2011.

Gold MR. Cost-effectiveness in health and medicine. New York: Oxford University Press; 1996.

Bilcke J, Beutels P, Brisson M, Jit M. Accounting for methodological, structural, and parameter uncertainty in decision-analytic models: a practical guide. Med Decis Making. 2011;31:675–92.

Kaltenthaler E, Tappenden P, Paisley S, Squires H. NICE DSU Technical Support Document 13: identifying and reviewing evidence to inform the conceptualisation and population of cost-effectiveness models. NICE Decision Support Unit Technical Support Documents. London: NICE; 2011.

Haji Ali Afzali H, Karnon J, Merlin T. Improving the accuracy and comparability of model-based economic evaluations of health technologies for reimbursement decisions: A methodological framework for the development of reference models. Med Decis Making. 2013;33:333–42.

Sullivan PW, Valuck R, Saseen J, MacFall HM. A comparison of the direct costs and cost effectiveness of serotonin reuptake inhibitors and associated adverse drug reactions. CNS Drugs. 2004;18:911–32.

Haji Ali Afzali H, Karnon J, Gray J. A critical review of model-based economic studies of depression: modelling techniques, model structure and data sources. Pharmacoeconomics. 2012;30:461–82.

Schulman KA, Lynn LA, Glick HA, Eisenberg JM. Cost effectiveness of low-dose zidovudine therapy for asymptomatic patients with human immunodeficiency virus (HIV) infection. Ann Intern Med. 1991;114:798–802.

Hlatky MA, Owens DK, Sanders GD. Cost-effectiveness as an outcome in randomized clinical trials. Clin Trials. 2006;3:543–51.

Haji Ali Afzali H, Karnon J, Gray J. A proposed model for economic evaluations of major depressive disorder. Eur J Health Econ. 2012;13:501–10.

Price MJ, Welton NJ, Briggs AH, Ades AE. Model averaging in the presence of structural uncertainty about treatment effects: influence on treatment decision and expected value of information. Value Health. 2011;14:205–18.

O’Mahony JF, Newall AT, van Rosmalen J. Dealing with time in health economic evaluation: methodological issues and recommendations for practice. Pharmacoeconomics. 2015;33:1255–68.

Wooldridge JM. Econometric analysis of cross section and panel data. 2nd ed. Cambridge (MA): MIT Press; 2010.

Connock M, Hyde C, Moore D. Cautions regarding the fitting and interpretation of survival curves: examples from NICE single technology appraisals of drugs for cancer. Pharmacoeconomics. 2011;29:827–37.

Ara R, Brazier JE. Populating an economic model with health state utility values: moving toward better practice. Value Health. 2010;13:509–18.

Buckinx F, Reginster JY, Petermans J, Croisier JL, Beaudart C, Brunois T, et al. Relationship between frailty, physical performance and quality of life among nursing home residents: the SENIOR cohort. Aging Clin Exp Res. 2016;28:1149–57.

Ara R, Wailoo A. Using health state utility values in models exploring the cost-effectiveness of health technologies. Value Health. 2012;15:971–4.

Ara R, Wailoo A. Estimating health state utility values for joint health conditions: a conceptual review and critique of the current evidence. Med Decis Making. 2013;33:139–53.

Basu A, Manca A. Regression estimators for generic health-related quality of life and quality-adjusted life years. Med Decis Making. 2012;32:56–69.

Barton P, Bryan S, Robinson S. Modelling in the economic evaluation of health care: selecting the appropriate approach. J Health Serv Res Policy. 2004;9:110–8.

Pennington B, Filby A, Owen L, Taylor M. Smoking cessation: a comparison of two model structures. Pharmacoeconomics. 2018. https://doi.org/10.1007/s40273-018-0657-y (Epub 2018 May 8).

Bojke L, Claxton K, Bravo-Vergel Y, Sculpher M, Palmer S, Abrams K. Eliciting distributions to populate decision analytic models. Value Health. 2010;13:557–64.

National Institute for Health and Care Excellence (NICE). Single technology appraisal: user guide for company evidence submission template. UK; 2015. https://www.nice.org.uk/process/pmg24/chapter/cost-effectiveness. Accessed 10 Apr 2018.

CADTH. Guidelines for the economic evaluation of health technologies: Canada 2017. https://www.cadth.ca/dv/guidelines-economic-evaluation-health-technologies-canada-4th-edition. Accessed 10 Apr 2018.

Pharmaceutical Benefits Advisory Committee (PBAC). Guidelines for preparing a submission to the Pharmaceutical Benefits Advisory Committee 2016. https://pbac.pbs.gov.au/information/printable-version-of-guidelines.html. Accessed 10 Apr 2018.

Haute Autorité de Santé (HAS). Choices in methods for economic evaluation. Saint-Denis La Plaine: Department of Economics and Public Health Assessment (HAS); 2012.

Latimer N. Survival analysis for economic evaluations alongside clinical trials—extrapolation with patient-level data. NICE DSU Technical Support Document 14. Sheffield: School of Health and Related Research, University of Sheffield; 2013 [December 2015]. http://www.nicedsu.org.uk. Accessed 9 May 2018.

Husbands S, Jowett S, Barton P, Coast J. How qualitative methods can be used to inform model development. Pharmacoeconomics. 2017;35:607–12.

Haji Ali Afzali H, Gray J, Karnon J. Model performance evaluation (validation and calibration) in model-based studies of therapeutic interventions for cardiovascular diseases: a review and suggested reporting framework. Appl Health Econ Health Policy. 2013;11:85–93.

Tappenden P, Chilcott JB. Avoiding and identifying errors and other threats to the credibility of health economic models. Pharmacoeconomics. 2014;32:967–79.

Ethics declarations

Author Contributions

HHAA conceptualised and drafted the manuscript, and all co-authors reviewed and suggested changes. All authors reviewed and approved the final draft.

Funding

The Alan Williams Fellowship Award (Centre for Health Economics, the University of York) received by Hossein Haji Ali Afzali contributed to the conceptualisation of this article. This study was also supported by the National Health and Medical Research Council (NHMRC) of Australia via funding provided for the Centre of Research Excellence in Frailty and Healthy Ageing.

Conflict of Interest

Hossein Haji Ali Afzali is a member of the Evaluation Sub-Committee of the Medical Services Advisory Committee and Jonathan Karnon is a member of the Economic Sub-Committee of the Pharmaceutical Benefits Advisory Committee. Laura Bojke has no conflicts of interest to declare.


Cite this article

Haji Ali Afzali, H., Bojke, L. & Karnon, J. Model Structuring for Economic Evaluations of New Health Technologies. PharmacoEconomics 36, 1309–1319 (2018). https://doi.org/10.1007/s40273-018-0693-7
