Abstract

Introduction

Reporting of harms in randomized control trials is often inconsistent and inadequate.

Objective

To assess the quality of harms reporting in randomized control trials evaluating the efficacy of antibiotics used to treat pediatric acute otitis media and to investigate whether connections to pharmaceutical companies or the publication of the CONSORT-Harms extension influenced the quality of harms reporting.

Study design and setting

We considered randomized control trials that evaluated the efficacy and safety of antibiotic treatment for uncomplicated acute otitis media in children aged 0–19 years. We evaluated the quality of harms reporting using a 19-item checklist addressing the recommendations endorsed in the CONSORT-Harms extension.

Results

A total of 160 studies met our inclusion criteria. Overall quality of reporting relating to harms was low; on average, studies adhered to 55.2% of the checklist items on the quality of harms reporting. The reporting of methods relating to the measurement of harms was particularly lacking; studies adhered to an average of only 33.2% of these checklist items. The overall quality of reporting did not change after the publication of the CONSORT-Harms extension. The overall quality of reporting did not differ significantly in reports with or without declared connections to pharmaceutical companies (mean quality score of 56.8% vs 52.0%, respectively).

Conclusions

Harms reporting in pediatric randomized trials, especially the reporting of methods used to collect harms data, remains inadequate.

The quality of harms reporting in randomized trials of antibiotics for acute otitis media in children remains inadequate.

On average, trials adhered to approximately half of the recommendations endorsed in the CONSORT-Harms extension.

1 Introduction

Despite the importance of medication adverse events in clinical decision making, the reporting of harms in randomized control trials is often inconsistent and inadequate [1,2,3,4,5,6,7,8,9,10,11]. The amount of space allocated to the reporting of harms in randomized control trials is often disproportionally low [3, 12, 13]. One meta-analysis found that one-fifth of pediatric randomized control trials failed to report any data on adverse events [2]. In an effort to improve the quality of harms reporting in randomized control trials, the CONSORT (Consolidated Standards of Reporting Trials) group released an extension (with ten recommendations) in 2004 specifically focused on the reporting of harms-related outcomes [14].

The objective of our study was to assess the methodological quality of harms reporting in randomized control trials of antibiotics used to treat pediatric acute otitis media based on the recommendations of the CONSORT-Harms extension. In addition, we sought to investigate whether publication of the CONSORT-Harms extension or connections to a pharmaceutical company influenced the quality of harms reporting. We chose acute otitis media because it is the most common indication for which antibiotics are prescribed in children [15].

2 Materials and methods

We considered published randomized control trials that evaluated adverse events of oral or intramuscular antibiotics used to treat uncomplicated acute otitis media in children 0–19 years of age. We performed an electronic search of MEDLINE (via PubMed) for reports published between 26 November 1948 and 20 April 2015 (see Electronic Supplementary Material for search strategy). We limited the search to human reports published in English. We identified additional reports by reviewing the reference lists of important review articles retrieved from the electronic search.

Two authors independently assessed the titles, abstracts and, if necessary, the full text of each report found using the search strategy to determine which reports satisfied the inclusion criteria. We required included reports to be randomized trials of oral or intramuscular antibiotics (compared to other antibiotics or placebo) used to treat children aged 0–19 years old with uncomplicated acute otitis media. Included reports must have evaluated harms, discussed a plan to collect harms data, or presented harms data in the results. We excluded reports that evaluated topical antibiotics or alternative (non-antimicrobial) therapies (except placebo), children with complications of acute otitis media (e.g., mastoiditis), and children with severe comorbid medical conditions. Disagreements were resolved by discussion.

Two authors (SWH, NS) extracted data from each included report using a standardized data extraction form. Uncertainties in the data extraction were resolved by discussion. When possible, we contacted authors for clarification. When more than one publication of a report existed, we used the publication with the most complete patient data in the analyses.

Using the detailed descriptions in the CONSORT-Harms extension [14], we developed a 19-item checklist of the items most relevant to the reporting of adverse events related to the use of antibiotics in children with acute otitis media (Table in Electronic Supplementary Material).

We also collected additional data on whether the reports had connections to pharmaceutical companies. We considered reports as having connections to pharmaceutical companies if the published report indicated that any of the authors or funding sources were affiliated with a pharmaceutical company. To assess the impact of the CONSORT-Harms extension, we compared the 16 reports that were published at least 1 year after the publication of the CONSORT-Harms extension (i.e., after 16 November 2005) to reports published before this date.
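The two grouping rules described above can be written down as simple predicates. The following is a minimal sketch, not the authors' extraction procedure: the function names and the boolean flags (one per author or funding source, recording whether an affiliation with a pharmaceutical company was declared) are assumptions introduced for illustration. Only the cutoff date comes from the text.

```python
from datetime import date
from typing import Iterable

# Cutoff stated in the text: reports published after 16 November 2005
# are treated as published after the CONSORT-Harms extension.
CUTOFF = date(2005, 11, 16)


def published_after_extension(publication_date: date) -> bool:
    """True if the report falls in the post-extension comparison group."""
    return publication_date > CUTOFF


def has_pharma_connection(author_flags: Iterable[bool],
                          funder_flags: Iterable[bool]) -> bool:
    """True if any author or any funding source was recorded as affiliated
    with a pharmaceutical company (hypothetical boolean coding)."""
    return any(author_flags) or any(funder_flags)


# Hypothetical report: published in 2008, one industry funding source.
print(published_after_extension(date(2008, 3, 1)))       # True
print(has_pharma_connection([False, False], [True]))     # True
```

Because the second rule relies on what the published report declares, an undisclosed affiliation would be coded as no connection under this sketch.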

Previous studies have calculated a quality score by assigning a point to each checklist item followed [1,2,3, 5,6,7, 10]. Because not all checklist items were applicable to every report, we did not find this method to be suitable for our study. Thus, we calculated a quality score to quantify a report’s overall quality of harms reporting; this variable was defined as the percent of applicable checklist items that were adequately addressed. We weighted checklist items equally, which is consistent with previous literature on the quality of harms reporting [1,2,3, 5,6,7, 10].
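The quality score defined above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' data extraction form: the item codes (`"yes"`, `"no"`, `"na"`) and the function name `quality_score` are hypothetical.

```python
from typing import Optional, Sequence


def quality_score(items: Sequence[str]) -> Optional[float]:
    """Percent of applicable checklist items that were adequately addressed.

    Each entry is a hypothetical code: "yes" (adequately addressed),
    "no" (applicable but not addressed), or "na" (not applicable).
    Returns None when no item is applicable.
    """
    applicable = [code for code in items if code != "na"]
    if not applicable:
        return None
    met = sum(1 for code in applicable if code == "yes")
    # Every applicable item carries equal weight.
    return 100.0 * met / len(applicable)


# Hypothetical report: 19 items, 3 not applicable, 8 of the remaining 16 met.
example = ["yes"] * 8 + ["no"] * 8 + ["na"] * 3
print(quality_score(example))  # 50.0
```

Dividing by the number of applicable items, rather than by 19, is what distinguishes this score from a simple point count: a report is not penalized for items that could not apply to it.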

We used Chi-squared tests to assess whether the number of reports following each checklist item differed between reports published before and after the publication of the CONSORT-Harms extension, and between reports with and without declared connections to pharmaceutical companies. To compare quality scores, we used a two-sample t test. STATA 14.0 software was used to perform all analyses.
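For readers who want to see what the two tests named above compute, here is a minimal sketch using only the Python standard library. It is not the authors' Stata code. The choice of a Pearson chi-squared statistic without continuity correction and of a pooled-variance t statistic are assumptions, as are the function names and the example numbers, which are not data from this study.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def chi_squared_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-squared test (no continuity correction) for the table
    [[a, b], [c, d]]; returns (statistic, p-value) with 1 degree of freedom.
    Assumes every row and column total is non-zero."""
    n = a + b + c + d
    row1, row2, col1, col2 = a + b, c + d, a + c, b + d
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom the upper-tail probability is erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


def pooled_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Two-sample t statistic with pooled variance; returns (t, df).
    Assumes each group has at least two values and non-zero pooled variance."""
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / df
    t = (mean(x) - mean(y)) / math.sqrt(pooled_var * (1 / nx + 1 / ny))
    return t, df


# Hypothetical counts: rows are report groups, columns are
# "followed the item" / "did not follow the item".
stat, p = chi_squared_2x2(10, 20, 20, 10)
print(round(stat, 3), round(p, 4))

# Hypothetical quality scores (percent) for two groups of reports.
t, df = pooled_t([50.0, 60.0, 55.0], [45.0, 58.0, 52.0])
print(round(t, 3), df)
```

The t statistic would still need to be referred to a t distribution with `df` degrees of freedom to obtain a p-value; that step is omitted here because the standard library does not provide the distribution.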

3 Results

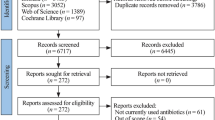

Of the 1833 articles found through the search strategy, we retrieved and reviewed 273 full text articles (Fig. 1). Of these, 160 randomized control trials met our inclusion criteria and were included in our analysis (listed in Electronic Supplementary Material).

The number of studies that adhered to each of the 19 checklist items is shown in Table 1. The mean quality score was 55.2%; that is, studies reported an average of 55.2% of the relevant checklist items. Overall, 35% of reports (56 out of 160) followed fewer than half of the items; no reports followed all 19 items.

The reporting of methods used to collect harms data was particularly lacking; studies reported an average of only 33.2% of checklist items related to the methods used to collect harms (items 3–9). How each adverse event was defined, how recurrent events were handled, and how withdrawal of subjects from the study due to adverse events was adjudicated were reported particularly poorly in most studies.

The quality of reporting in the results section was better than in the methods section; studies adhered to 70% of checklist items related to the reporting of adverse event data (items 10–17). Only two checklist items had low adherence rates: reporting data on severe harms and the number of times the adverse events occurred.

The mean quality score did not change significantly after the publication of the CONSORT-Harms extension (55.0% vs 56.4%, respectively) (Table 2). Reports published after the publication of the CONSORT-Harms extension did, however, report data on severe adverse events significantly more often than those published before (p = 0.04).

The mean quality score did not differ significantly in reports with or without declared connections to pharmaceutical companies (56.8% vs 52.0%, respectively). Reports with declared connections to pharmaceutical companies did, however, cite prior harms literature in their discussions significantly more often than those without declared connections (p = 0.02).

4 Discussion

We found that the quality of harms reporting in randomized control trials of antibiotics used for the treatment of pediatric acute otitis media is inadequate; 35% of reports followed fewer than half of the checklist items from the CONSORT-Harms extension. One previous meta-analysis studying harms reporting after the publication of the CONSORT-Harms extension in 107 pediatric randomized control trials of systemic medications (excluding probiotics, hormone replacement, and vaccines) also concluded that the quality of harms reporting was lacking; the included reports followed a mean of 3 out of 10 of the CONSORT recommendations [2]. The higher adherence rate observed in our study might be attributed to the fact that we selected randomized control trials that reported harms data or established harms as an objective, which has been shown to increase the quality of harms reporting [3]. Previous studies of treatment harms reporting in trials of adults have yielded similar results; the reported mean/median adherence to the recommendations in the CONSORT-Harms extension ranges from 41% to 63% regardless of the subject area (epilepsy (49%) [7], hypertension (41%) [1], pain (53–61%) [9, 10], cancer (57–63%) [6, 8], and ophthalmology (60%) [5]). The mean/median adherence to the recommendations in the CONSORT-Harms extension in two studies of reports published in high-impact journals was similar (56% [4] and 58–67% [3]). This manuscript focused on quality of reporting; quality of reporting should not be conflated with the underlying validity of the data.

The reporting of the methods of harms data collection was the area needing the most improvement. Specifically, reports need to (1) define each of the adverse events, (2) explain how (and by whom) it was determined whether an adverse event was attributable to the study product, (3) explain how recurrent adverse events were handled, and (4) specify the rules used to withdraw patients from the study. Although the reporting of adverse events data was better than the reporting of methods, reports should make an effort to include data on the frequency of severe adverse events and the number of times the adverse events occurred.

We found minimal improvements in the quality of harms reporting in reports published after publication of the CONSORT-Harms extension; only item 11, reporting data on frequency of severe adverse events, improved. However, the number of reports published after the CONSORT-Harms extension was small (n = 14), which limited our ability to fully evaluate this association. Studies in other fields have shown that after the publication of the CONSORT-Harms extension there was either no improvement [5, 7], only improvements in the reporting of specific recommendations [10], or modest improvements in overall reporting [3, 9]. The modest improvements in the quality of harms reporting may signify a slow uptake of the CONSORT-Harms extension’s recommendations.

Reports with declared connections to pharmaceutical companies were more likely to cite prior harms literature in their discussion sections. Although not statistically significant, there was a trend towards improvement in three other areas (methods list and define adverse events studied, methods state rules for withdrawals, and results report data on withdrawals). These trends are supported by previous literature which shows that reports with connections to pharmaceutical companies often have higher quality harms reporting than those not connected to pharmaceutical companies [2,3,4, 6, 7, 9, 10].

There are limitations to our study that should be considered when interpreting the results. Only one database (MEDLINE) was searched and the protocol was not registered. For the purposes of assessing the quality of harms reporting, we adapted the ten CONSORT recommendations to form a 19-item checklist. This specific checklist is not validated; however, all checklist items were created using the original CONSORT-Harms extension for harms reporting. Similar checklists of different lengths have been previously used in the literature [1, 6,7,8]. Calculating a mean quality score may not have taken into account the relative importance of items with respect to one another; nevertheless, we found it to be a useful summary statistic. We were also limited in our comparison of reports with and without connections to pharmaceutical companies, as some funding sources or affiliations may not have been disclosed in the published reports.

5 Conclusion

Overall, we conclude that the quality of harms reporting in randomized control trials of antibiotics used to treat pediatric acute otitis media is inadequate. In particular, the methods used in the collection of harms data are often not adequately described in the methods sections of the reports. Inadequate reporting of harms limits the ability of clinicians and researchers to critically evaluate the adverse events data presented in randomized control trials. Future studies would benefit from following the recommendations in the CONSORT-Harms extension.

Abbreviations

- CONSORT: Consolidated Standards of Reporting Trials
- RCTs: Randomized control trials

References

1. Bagul NB, Kirkham JJ. The reporting of harms in randomized controlled trials of hypertension using the CONSORT criteria for harm reporting. Clin Exp Hypertens. 2012;34(8):548–54.

2. de Vries TW, van Roon EN. Low quality of reporting adverse drug reactions in paediatric randomised controlled trials. Arch Dis Child. 2010;95(12):1023–6.

3. Haidich AB, Birtsou C, Dardavessis T, Tirodimos I, Arvanitidou M. The quality of safety reporting in trials is still suboptimal: survey of major general medical journals. J Clin Epidemiol. 2011;64(2):124–35.

4. Maggi CB, Griebeler IH, Dal Pizzol Tda S. Information on adverse events in randomised clinical trials assessing drug interventions published in four medical journals with high impact factors. Int J Risk Saf Med. 2014;26(1):9–22.

5. O’Day R, Walton R, Blennerhassett R, Gillies MC, Barthelmes D. Reporting of harms by randomised controlled trials in ophthalmology. Br J Ophthalmol. 2014;98(8):1003–8.

6. Peron J, Maillet D, Gan HK, Chen EX, You B. Adherence to CONSORT adverse event reporting guidelines in randomized clinical trials evaluating systemic cancer therapy: a systematic review. J Clin Oncol. 2013;31(31):3957–63.

7. Shukralla AA, Tudur-Smith C, Powell GA, Williamson PR, Marson AG. Reporting of adverse events in randomised controlled trials of antiepileptic drugs using the CONSORT criteria for reporting harms. Epilepsy Res. 2011;97(1–2):20–9.

8. Sivendran S, Latif A, McBride RB, Stensland KD, Wisnivesky J, Haines L, et al. Adverse event reporting in cancer clinical trial publications. J Clin Oncol. 2014;32(2):83–9.

9. Smith SM, Chang RD, Pereira A, Shah N, Gilron I, Katz NP, et al. Adherence to CONSORT harms-reporting recommendations in publications of recent analgesic clinical trials: an ACTTION systematic review. Pain. 2012;153(12):2415–21.

10. Williams MR, McKeown A, Pressman Z, Hunsinger M, Lee K, Coplan P, et al. Adverse event reporting in clinical trials of intravenous and invasive pain treatments: an ACTTION systematic review. J Pain. 2016;17(11):1137–49.

11. Pitrou I, Boutron I, Ahmad N, Ravaud P. Reporting of safety results in published reports of randomized controlled trials. Arch Intern Med. 2009;169(19):1756–61.

12. Ioannidis JP, Contopoulos-Ioannidis DG. Reporting of safety data from randomised trials. Lancet. 1998;352(9142):1752–3.

13. Ioannidis JP, Lau J. Completeness of safety reporting in randomized trials: an evaluation of 7 medical areas. JAMA. 2001;285(4):437–43.

14. Ioannidis JP, Evans SJ, Gotzsche PC, O’Neill RT, Altman DG, Schulz K, et al. Better reporting of harms in randomized trials: an extension of the CONSORT statement. Ann Intern Med. 2004;141(10):781–8.

15. Hersh AL, Shapiro DJ, Pavia AT, Shah SS. Antibiotic prescribing in ambulatory pediatrics in the United States. Pediatrics. 2011;128(6):1053–61.

Ethics declarations

Funding

No sources of funding were used to assist in the preparation of this study. This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Conflict of interest

Stephanie Hum, Nader Shaikh and Su Golder have no conflicts of interest that are directly relevant to the content of this study.

Financial disclosures

The authors have no financial relationships relevant to this article to disclose.

Cite this article

Hum, S.W., Golder, S. & Shaikh, N. Inadequate harms reporting in randomized control trials of antibiotics for pediatric acute otitis media: a systematic review. Drug Saf 41, 933–938 (2018). https://doi.org/10.1007/s40264-018-0680-0

DOI: https://doi.org/10.1007/s40264-018-0680-0