Abstract

High-resolution imaging technology emerged to match the visual characteristics of the human eye. This paper proposes a high-resolution reconstruction method for ghost imaging via SURF-NSML. A series of low-resolution images obtained by the ghost imaging system are registered with the Speeded Up Robust Features (SURF) method and fused with the New Sum of Modified Laplacian (NSML) algorithm to obtain high-resolution images. The method requires no spectroscopic devices, filters or similar components, which simplifies the experimental equipment, and the signal is received by a bucket detector, which greatly increases the utilization of the experimental equipment. Reconstruction experiments on natural-scene and medical images show that the method, which combines the device platform with a software algorithm, reconstructs the target image with better visual characteristics and richer detail, and can help advance research on image reconstruction.

1 Introduction

With the wide application of imaging technology across many fields, the demand for image resolution keeps rising. Improving resolution through hardware alone is expensive, however, and during image acquisition the inherent resolution limit, blur and noise of the optical system often keep image quality below practical requirements. It is therefore necessary to obtain higher-resolution images through image processing algorithms. High-resolution (HR) reconstruction is one such approach: it refers to the process of reconstructing the original HR image [1, 2] from one or several low-resolution (LR) images.

Image registration plays an indispensable role in HR image reconstruction. The Scale Invariant Feature Transform (SIFT) [3] is a robust scale-invariant feature description method that has been applied to face recognition, image mosaicking, image registration and other fields [4]. However, the SIFT algorithm processes a large amount of data, has high time complexity and is slow. To address these shortcomings, an image registration method based on Speeded Up Robust Features (SURF) was proposed. Magdy et al. [5] used the SURF method to extract feature points and obtained the matching point pairs of two images by nearest-neighbor matching. The transformation between the images is then estimated by combining Random Sample Consensus (RANSAC) [6] with the least squares method, which finally yields the registered image. An image fusion algorithm can then be applied to the registered images to obtain a high-resolution image. Image fusion combines the collected image data, through image processing and computer technology, into a single high-quality image, which improves the utilization of image information and the accuracy and reliability of computer interpretation. Among image fusion algorithms, the Sum of Modified Laplacian (SML) algorithm reflects the edge feature information of the image and can clearly represent image details at every scale and resolution [7]. By comparing the corresponding scale images of the two source images, the prominent details of the source images can be merged into the fused image, which enriches its information and achieves a better fusion effect.

In the field of imaging, however, how to simplify the experimental equipment and improve the efficiency of the imaging system while preserving imaging quality also remains a problem to be solved. Ghost imaging, a new imaging method, uses quantum entanglement or spatial intensity correlation to image an object; it breaks with the traditional imaging concept of linear optics [8,9,10] and has attracted extensive attention in recent years. Non-classical light sources and classical thermal light sources were initially used in ghost imaging experiments [11]. With further research the technology developed rapidly [12,13,14], from pseudo-thermal ghost imaging [15], computational ghost imaging [16], compressed sensing ghost imaging [17], differential ghost imaging [18] and blind ghost imaging [19] to quantum ghost imaging [20]. Liansheng et al. [21] proposed a two-layer watermarking scheme based on computational ghost imaging and singular value decomposition. Dongfeng et al. [22] proposed a polarization-multiplexing ghost imaging technique that obtains multiple polarimetric information simultaneously with a single detector. Fei et al. [23] proposed a quantum circuit implementation of the ghost imaging experiment, in which the speckle patterns and phase mask are encoded using the quantum representation of images.

This paper proposes a high-resolution reconstruction method for ghost imaging via SURF-NSML. First, a series of low-resolution images is obtained from multiple measurements by the ghost imaging system. These LR images are then registered with the Speeded Up Robust Features (SURF) method and fused with the New Sum of Modified Laplacian (NSML) algorithm to obtain an initial HR image. Finally, the initial HR image is further optimized by an image super-resolution reconstruction strategy based on flattening to obtain a high-resolution image with better visual characteristics. Two natural-scene images and two medical images were reconstructed, and the SSIM and RMSE indexes were used to verify and analyze the reconstruction method.

2 HR reconstruction method of ghost imaging via SURF-NSML

The HR reconstruction method of ghost imaging via SURF-NSML proposed in this paper is shown in Fig. 1. It consists of four parts: acquisition of low-resolution images, image registration via Speeded Up Robust Features, fusion reconstruction via New Sum of Modified Laplacian, and high-resolution image optimization.

2.1 Acquisition of low resolution images

In this method, a ghost imaging system is used to obtain a series of low-resolution images. In the ghost imaging system, a bucket detector with no spatial resolution records the light intensity after diffraction by the object. The laser illuminates a spatial light modulator (SLM), such as a digital micromirror device (DMD), at normal incidence, and the light field modulated by the SLM illuminates the target image \(T\left( {x,y} \right)\). \(I_{i} \left( {x,y} \right)\) is the distribution of the light field reaching the target. The light intensity reflected from the object is collected by the bucket detector.

The light intensity value is obtained according to the Fresnel propagation function, and the calculation formula is as follows:

In the imaging process, the ghost imaging platform is operated M times, and a series of low-resolution images is reconstructed with the correlation function from the light intensity values and modulation matrices obtained in each operation. The calculation formula is as follows:

where N is the number of measurements collected by the platform in each operation, so that each image is reconstructed from N SLM patterns. \(\left\langle {B_{M} } \right\rangle\) is the average light intensity value of the \(M{\text{th}}\) operation, and \(T_{M}\) is the LR image reconstructed in the \(M{\text{th}}\) operation.
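The acquisition and correlation steps described above can be sketched numerically. The example below is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the bucket value is the plain sum \(B_{i} = \sum_{x,y} I_{i}(x,y)\,T(x,y)\) (Fresnel propagation is ignored) and that each LR image is the correlation \(T_{M}(x,y) = \frac{1}{N}\sum_{i=1}^{N} \left( B_{i} - \left\langle B_{M} \right\rangle \right) I_{i}(x,y)\). The function names, the random patterns and the 16 × 16 test target are hypothetical.

```python
import numpy as np

def simulate_bucket_signals(target, patterns):
    """Bucket value per pattern: total intensity of pattern * target.

    Assumption: no propagation model, the detector simply sums all pixels.
    patterns has shape (N, H, W), target has shape (H, W).
    """
    return np.tensordot(patterns, target, axes=([1, 2], [0, 1]))

def correlate_reconstruct(bucket, patterns):
    """Correlation estimate: mean over i of (B_i - <B>) * I_i(x, y)."""
    fluctuation = bucket - bucket.mean()
    return np.tensordot(fluctuation, patterns, axes=(0, 0)) / len(bucket)

# Hypothetical test scene: a bright rectangle on a dark background.
rng = np.random.default_rng(0)
target = np.zeros((16, 16))
target[4:12, 6:10] = 1.0

# One "operation" of the platform: N random modulation patterns.
patterns = rng.random((4000, 16, 16))
bucket = simulate_bucket_signals(target, patterns)
lr_image = correlate_reconstruct(bucket, patterns)
```

Repeating the last three lines M times with fresh patterns would give the series of LR images that the later registration and fusion stages consume.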

2.2 Image registration via SURF

After a series of LR images has been obtained through the ghost imaging system, the SURF algorithm is used for image registration in order to estimate the shift between the images accurately. SURF determines the matching degree by calculating the Euclidean distance between two feature points: the shorter the Euclidean distance, the better the two feature points match. The sign of the trace of the Hessian matrix is also checked. If the traces of two feature points have the same sign, the two features have contrast changes in the same direction; if the signs differ, the contrast changes of the two feature points are in opposite directions. The image registration process based on SURF is shown in Fig. 2.

The overall procedure of SURF feature detection and description is as follows:

Step 1 Feature detection

Feature point detection is based on scale space theory. For a point \(\hat{x} = \left( {x,y} \right)\) in image \(I\left( {x,y} \right)\), the Hessian matrix at scale \(\delta\) is defined as

\[H\left( {\hat{x},\delta } \right) = \left[ {\begin{array}{*{20}c} {L_{xx} \left( {\hat{x},\delta } \right)} & {L_{xy} \left( {\hat{x},\delta } \right)} \\ {L_{xy} \left( {\hat{x},\delta } \right)} & {L_{yy} \left( {\hat{x},\delta } \right)} \\ \end{array} } \right]\]

where \(L_{xx}\), \(L_{xy}\) and \(L_{yy}\) are the results of convolving the corresponding second derivatives of the Gaussian filter (for example \(\frac{{\partial^{2} }}{{\partial x^{2} }}g\left( \delta \right)\) for \(L_{xx}\)) with the image \(I\) at point \(\hat{x}\), and \(g\left( \delta \right) = \frac{1}{{2\pi \delta^{2} }}e^{{ - \left( {x^{2} + y^{2} } \right)/\left( {2\delta^{2} } \right)}}\).

Here, the scale image pyramid is constructed with a method similar to SIFT, and candidate feature points are selected at the extrema of the Hessian response. Interpolation is then carried out in scale space and image space to obtain stable positions and scale values for the feature points.
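The detection response in Step 1 can be illustrated with a short sketch. It is only an assumption-laden example: it samples the Gaussian second derivatives directly and convolves them with the image through the FFT (with circular boundaries), whereas SURF itself approximates these filters with box filters on an integral image. The function names and the `sigma` parameter (standing in for the scale \(\delta\)) are hypothetical.

```python
import numpy as np

def gaussian_second_derivative_kernels(sigma):
    """Sampled second derivatives g_xx, g_yy, g_xy of a 2-D Gaussian."""
    radius = int(np.ceil(3 * sigma))
    y, x = np.mgrid[-radius:radius + 1, -radius:radius + 1].astype(float)
    g = np.exp(-(x**2 + y**2) / (2 * sigma**2)) / (2 * np.pi * sigma**2)
    g_xx = (x**2 / sigma**4 - 1 / sigma**2) * g
    g_yy = (y**2 / sigma**4 - 1 / sigma**2) * g
    g_xy = (x * y / sigma**4) * g
    return g_xx, g_yy, g_xy

def convolve_same(image, kernel):
    """Circular 2-D convolution via FFT with the kernel centred on each pixel."""
    padded = np.zeros_like(image, dtype=float)
    kh, kw = kernel.shape
    padded[:kh, :kw] = kernel
    # Move the kernel centre to index (0, 0) so the output is not shifted.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))

def hessian_determinant(image, sigma):
    """det(H) = L_xx * L_yy - L_xy^2 at every pixel for one scale."""
    g_xx, g_yy, g_xy = gaussian_second_derivative_kernels(sigma)
    l_xx = convolve_same(image, g_xx)
    l_yy = convolve_same(image, g_yy)
    l_xy = convolve_same(image, g_xy)
    return l_xx * l_yy - l_xy**2
```

Candidate feature points would then be taken at local maxima of this response across positions and scales.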

Step 2 Determine the main direction

To ensure rotation invariance, the Haar wavelet responses in the x and y directions are first calculated for the points in a neighborhood of radius 6s centered on the feature point (s is the scale value of the feature point). Gaussian weight coefficients are assigned to these responses, so that responses near the feature point contribute more and responses far from it contribute less. Then, the responses within a sector of 60° are summed to form a new vector, and the direction of the longest such vector is selected as the main direction of the feature point. Applying this procedure to each feature point from Step 1 gives the main direction of every feature point.
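The 60° sector search in Step 2 can be sketched as follows. This is a minimal illustration that assumes the Gaussian-weighted Haar responses `dx` and `dy` have already been computed; the function name, the number of sector positions (`steps`) and the sliding scheme are assumptions made for the example.

```python
import numpy as np

def dominant_orientation(dx, dy, window=np.pi / 3, steps=72):
    """Slide a sector of width `window` around the circle, sum the responses
    that fall inside it, and return the angle of the longest summed vector."""
    angles = np.arctan2(dy, dx)
    best_length, best_angle = -1.0, 0.0
    for start in np.linspace(-np.pi, np.pi, steps, endpoint=False):
        # Angular offset of each response from the sector start, in [0, 2*pi).
        offset = np.mod(angles - start, 2 * np.pi)
        inside = offset < window
        sum_x, sum_y = dx[inside].sum(), dy[inside].sum()
        length = np.hypot(sum_x, sum_y)
        if length > best_length:
            best_length, best_angle = length, np.arctan2(sum_y, sum_x)
    return best_angle
```

The returned angle (in radians) is the direction to which the descriptor window in Step 3 would be rotated.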

Step 3 Descriptor formation

With the feature point as the center, the coordinate axes are first rotated to the main direction, and a square region with a side length of 20s is selected along the main direction. The window is divided into 4 × 4 sub-regions. In each sub-region, the wavelet responses are calculated and weighted to increase robustness to geometric transformation. The absolute values of the responses in each sub-region are then summed to form a description vector, and the vector is normalized so that it has a certain robustness to illumination changes.

Step 4 Feature matching

After the feature points of the reference image and the image to be registered have been obtained by the SURF method, feature matching is carried out, as shown in Fig. 3. The feature vector contains information about the neighborhood of the feature point, so the nearest-neighbor matching method can find potential matching pairs without calculating additional information. In this paper, nearest-neighbor vector matching is adopted to find all potential matching pairs by calculating the Euclidean distance between the feature points extracted from the two images.
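A minimal sketch of the matching step is given below. It assumes that descriptors are stored as rows of NumPy arrays and that the sign of the Hessian trace is available for each feature point, as described in Sect. 2.2; the function name and the optional `max_distance` cut-off are hypothetical.

```python
import numpy as np

def match_nearest_neighbour(desc_a, desc_b, sign_a, sign_b, max_distance=np.inf):
    """For each descriptor in A, find the closest descriptor in B by Euclidean
    distance, considering only candidates with the same Hessian-trace sign."""
    diff = desc_a[:, None, :] - desc_b[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))          # shape (len(A), len(B))
    # Pairs with opposite contrast direction can never match.
    dist[sign_a[:, None] != sign_b[None, :]] = np.inf
    matches = []
    for i in range(len(desc_a)):
        j = int(np.argmin(dist[i]))
        if dist[i, j] < max_distance:                # also rejects all-inf rows
            matches.append((i, j))
    return matches
```

The resulting list still contains mismatches in general, which is why the parameter estimation in Step 5 is made robust to outliers.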

Step 5 Parameters estimation and resampling

Random Sample Consensus (RANSAC) is combined with the least squares method to calculate the transformation between the images and to remove the influence of mismatches. Finally, the image to be registered is resampled to obtain the registration result in the same coordinate system as the reference image.
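The combination of RANSAC and least squares in Step 5 can be sketched as below. The paper does not state which transformation model is estimated, so the affine model used here is an assumption, as are the function names, the iteration count and the inlier threshold. Resampling is not shown.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares affine map: dst is approximated by [src, 1] @ params."""
    design = np.hstack([src, np.ones((len(src), 1))])
    params, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return params                                    # shape (3, 2)

def apply_affine(params, pts):
    return np.hstack([pts, np.ones((len(pts), 1))]) @ params

def ransac_affine(src, dst, n_iter=200, threshold=1.0, seed=0):
    """Fit on random 3-point samples, keep the largest consensus set,
    then refit on all inliers with least squares."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(n_iter):
        sample = rng.choice(len(src), size=3, replace=False)
        candidate = fit_affine(src[sample], dst[sample])
        residual = np.linalg.norm(apply_affine(candidate, src) - dst, axis=1)
        inliers = residual < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 3:
        raise ValueError("not enough inliers to estimate the transformation")
    return fit_affine(src[best_inliers], dst[best_inliers]), best_inliers
```

Here `src` and `dst` are the coordinates of the matched point pairs from Step 4, one pair per row.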

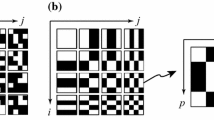

2.3 Fusion reconstruction via NSML

After the series of low-resolution images has been registered, NSML is used for image fusion to obtain the initial high-resolution reconstructed image. The improved New Sum of Modified Laplacian (NSML) image fusion algorithm is adopted here to obtain better visual characteristics, more detailed information and a more prominent fusion effect. The traditional SML computes the variable-step Laplace operator value of each pixel in the horizontal and vertical directions only; the NSML algorithm adds the four directions along the diagonals. The algorithm is defined as follows:

Here, \({\text{step}}\) is the variable step size, the \({\text{ML}}\) accumulation window has size \(\left( {2N + 1} \right) \times \left( {2N + 1} \right)\), and the settings of \({\text{step}}\) and \(N\) depend mainly on the noise. The NSML fusion algorithm discriminates sharpness well: the sharper an image, the larger the corresponding \({\text{NSML}}\) value. Let \(F_{1}\), \(F_{2}\) and \(F\) denote the pixel values of the two source images and the fused image at the same position, and let \({\text{NSML}}_{1}\) and \({\text{NSML}}_{2}\) denote the \({\text{NSML}}\) sharpness of \(F_{1}\) and \(F_{2}\), respectively. The fusion rule based on the NSML algorithm is then as follows:
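The sharpness measure and fusion rule described in this section can be sketched as follows. This is a hedged illustration, not the authors' exact definition: it assumes the modified Laplacian is the sum of absolute second differences along the horizontal, vertical and two diagonal lines with equal weights, that NSML sums these values over the \(\left( {2N + 1} \right) \times \left( {2N + 1} \right)\) window, and that the fused pixel is taken from the source with the larger NSML value (ties go to the first image). All function names are hypothetical.

```python
import numpy as np

def modified_laplacian(img, step=1):
    """Modified Laplacian along the horizontal, vertical and diagonal lines."""
    padded = np.pad(img.astype(float), step, mode="edge")
    h, w = img.shape

    def shifted(dy, dx):
        return padded[step + dy: step + dy + h, step + dx: step + dx + w]

    c, s = shifted(0, 0), step
    return (np.abs(2 * c - shifted(0, -s) - shifted(0, s))       # horizontal
            + np.abs(2 * c - shifted(-s, 0) - shifted(s, 0))     # vertical
            + np.abs(2 * c - shifted(-s, -s) - shifted(s, s))    # main diagonal
            + np.abs(2 * c - shifted(-s, s) - shifted(s, -s)))   # anti-diagonal

def nsml(img, step=1, n=1):
    """Sum the modified Laplacian over a (2n+1) x (2n+1) window per pixel."""
    ml = np.pad(modified_laplacian(img, step), n, mode="edge")
    h, w = img.shape
    total = np.zeros((h, w))
    for dy in range(2 * n + 1):
        for dx in range(2 * n + 1):
            total += ml[dy:dy + h, dx:dx + w]
    return total

def fuse(f1, f2, step=1, n=1):
    """Choose-max rule: keep the source pixel with the larger NSML value."""
    return np.where(nsml(f1, step, n) >= nsml(f2, step, n), f1, f2)
```

Fusing more than two registered LR images could be done by applying `fuse` pairwise, although the paper does not say how the series is combined.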

2.4 Image high-resolution optimization

In this step, the initial HR image is further optimized by an image super-resolution reconstruction strategy based on flattening, that is, histogram equalization. Specifically, for an image \(F\left( {x,y} \right)\) of size \(m \times n\), the gray value of the pixel at any position \(\left( {x,y} \right)\) \(\left( {1 \le x \le m,1 \le y \le n} \right)\) is \(f\left( {x,y} \right)\) \(\left( {0 \le f\left( {x,y} \right) \le L - 1} \right)\), where \(L\) is the total number of gray levels. According to the probability density function of the gray levels in the image, the occurrence probability of each gray level can be expressed as:

Here, \(\delta \left( x \right) = \left\{ {\begin{array}{*{20}c} {1,x = 0} \\ {0,x \ne 0} \\ \end{array} } \right.\). Histogram equalization then uses the cumulative distribution function to apply the following transformation to the input gray levels.

The function \(f\left( i \right)\) \(\left( {0 \le i < L} \right)\) represents the mapping between the gray level \(i\) before optimization and the gray level \(i^{\prime} = f\left( i \right)\) after optimization. The grayscale mapping commonly used in histogram equalization is:

The above transformation produces an image whose gray levels are relatively balanced and cover the whole range [0,1]. After histogram equalization, the contrast and average brightness of the image are significantly improved, the histogram is spread over the whole brightness scale, and the image quality is improved.
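The optimization step can be sketched with the textbook form of histogram equalization. The code assumes the occurrence probability \(p\left( i \right) = \frac{1}{mn}\sum_{x}\sum_{y} \delta \left( {f\left( {x,y} \right) - i} \right)\), the cumulative distribution \(c\left( i \right) = \sum_{k = 0}^{i} p\left( k \right)\), and the mapping \(i^{\prime} = \mathrm{round}\left( {\left( {L - 1} \right)c\left( i \right)} \right)\), which rescales the normalized range [0,1] back to integer gray levels. This scaling and the function name are assumptions, not necessarily the authors' exact expression.

```python
import numpy as np

def equalize_histogram(img, levels=256):
    """Histogram equalization of an integer image with values in [0, levels-1]."""
    hist = np.bincount(img.ravel(), minlength=levels)
    p = hist / img.size                  # occurrence probability of each level
    cdf = np.cumsum(p)                   # cumulative distribution c(i)
    mapping = np.round((levels - 1) * cdf).astype(img.dtype)
    return mapping[img]                  # look up the new level of every pixel
```

Because the mapping is a monotone lookup table, the ordering of gray levels is preserved while the occupied range is stretched.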

3 Result analysis

The algorithm in this paper yields high-resolution images and is suitable for reconstructing both natural-scene images and medical images. Generally speaking, the larger the sampling interval, the lower the spatial resolution and the poorer the imaging quality, with a mosaic effect in severe cases; the smaller the sampling interval, the higher the spatial resolution and the better the image quality. Therefore, to obtain images with high spatial resolution, our imaging method uses fully sampled information for reconstruction. The reconstruction results are shown in Table 1.

The reconstructions of the natural-scene and medical images were evaluated with two reference-based image evaluation indexes, structural similarity (SSIM) and root mean squared error (RMSE), and the proposed algorithm was compared with images reconstructed by ghost imaging (GI) and by Sum of Modified Laplacian ghost imaging (SML-GI).

3.1 Feasibility analysis

Structural similarity (SSIM) was adopted to verify the feasibility of the scheme. SSIM measures the degree of similarity between the image under evaluation and the original image, and it agrees well with the subjective perception of the human eye. Larger values are better, and the maximum value is 1. The structural information is described by luminance \(l\), contrast \(c\) and structure \(s\), which are measured by the means \(\mu_{x} ,\mu_{y}\), the standard deviations \(\delta_{x} ,\delta_{y}\) and the covariance \(\delta_{xy}\), respectively. The expression is then:

\(C_{1} ,C_{2} ,C_{3}\) are small positive constants. When \(\alpha = \beta = \gamma = 1\), the SSIM expression in formula (10) can be simplified as:
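For reference, the widely used definition of SSIM, written with the symbols of this section, is given below. It is offered as the standard form rather than as a verbatim copy of the authors' formulas, and the simplification additionally assumes the usual choice \(C_{3} = C_{2} /2\), which is not stated in the text.

```latex
\begin{aligned}
\mathrm{SSIM}(x,y) &= \left[ l(x,y) \right]^{\alpha} \left[ c(x,y) \right]^{\beta} \left[ s(x,y) \right]^{\gamma}, \\
l(x,y) &= \frac{2\mu_{x}\mu_{y} + C_{1}}{\mu_{x}^{2} + \mu_{y}^{2} + C_{1}}, \quad
c(x,y) = \frac{2\delta_{x}\delta_{y} + C_{2}}{\delta_{x}^{2} + \delta_{y}^{2} + C_{2}}, \quad
s(x,y) = \frac{\delta_{xy} + C_{3}}{\delta_{x}\delta_{y} + C_{3}}, \\
\mathrm{SSIM}(x,y) &= \frac{\left( 2\mu_{x}\mu_{y} + C_{1} \right)\left( 2\delta_{xy} + C_{2} \right)}{\left( \mu_{x}^{2} + \mu_{y}^{2} + C_{1} \right)\left( \delta_{x}^{2} + \delta_{y}^{2} + C_{2} \right)}
\quad \text{for } \alpha = \beta = \gamma = 1,\; C_{3} = C_{2}/2 .
\end{aligned}
```

With \(C_{3} = C_{2} /2\), the product \(c \cdot s\) reduces to \(\left( {2\delta_{xy} + C_{2} } \right)/\left( {\delta_{x}^{2} + \delta_{y}^{2} + C_{2} } \right)\), which gives the last line.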

SSIM is used to evaluate the degree of similarity between the resulting image and the ideal image, as shown in Fig. 4.

①-LR, ②-LR, ③-LR, ④-LR, ⑤-LR and ⑥-LR denote low-resolution images obtained by the imaging system; six of the series are selected here as examples. \(I{\text{-HR}}\) denotes the initial high-resolution image obtained after the fusion algorithm, and \(O{\text{-HR}}\) denotes the optimized high-resolution image finally obtained by the algorithm in this paper. As can be seen from Fig. 4, the images obtained by fusion reconstruction with this method have higher SSIM values than the low-resolution images obtained directly by the imaging system, and the reconstruction is closer to the ideal image. Taking natural image 1 and medical image 2 as examples, the SSIM values of the reconstruction results obtained by this method are 0.9953 and 0.9927, both close to 1. The method is applicable not only to natural images but also to medical images, which supports its general applicability.

3.2 Fidelity analysis

To evaluate the fidelity of the image quality, the root mean squared error (RMSE) is used. Its purpose is to quantify the difference between the fused image and the ideal image. The calculation formula is as follows:

where \(M \times N\) represents the size of the image, and \(V_{i,j}\) and \(V_{i,j}^{\prime }\) represent the pixel values of the original image and the reconstructed image, respectively. A small RMSE value means that the fused image is close to the ideal image, which indicates that the method performs well. The RMSE values of the reconstruction results of natural image 1 and medical image 2 at different stages are shown in Figs. 5 and 6.
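The fidelity index can be sketched in a few lines. The code assumes the standard definition \({\text{RMSE}} = \sqrt {\frac{1}{MN}\sum_{i = 1}^{M} \sum_{j = 1}^{N} \left( {V_{i,j} - V_{i,j}^{\prime } } \right)^{2} }\), which matches the symbols defined above; the function name is hypothetical.

```python
import numpy as np

def rmse(reference, reconstructed):
    """Root mean squared error between two images of the same size."""
    ref = np.asarray(reference, dtype=float)
    rec = np.asarray(reconstructed, dtype=float)
    if ref.shape != rec.shape:
        raise ValueError("images must have the same shape")
    return float(np.sqrt(np.mean((ref - rec) ** 2)))
```

The values reported in this section are below 1, which suggests the images were normalized before the RMSE was computed, although the paper does not state the normalization.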

As can be seen from Fig. 5, natural image 1 reconstructed by the proposed method has an RMSE of 0.1034, whereas the lowest RMSE among the low-resolution images obtained by the imaging system is 0.3411; the proposed method thus reduces the RMSE by 0.2377, and the reconstructed natural image is of better quality. In Fig. 6, the lowest RMSE of the low-resolution images obtained by the imaging system is 0.3673 and the highest is 0.6618, while the RMSE of medical image 2 reconstructed by the proposed method is 0.0964, a reduction of 0.2709–0.5654. Figures 5 and 6 show that the fused image of the proposed method is closer to the ideal image and better matches human visual perception.

3.3 Comparative analysis of experiments

To further verify the advantages of the algorithm presented in this paper, several reconstruction methods are compared. The experimental platform is shown in Fig. 7. A 10UJ-5KHZ-532 nm laser is used, with a spectral width of 0.2 nm and a jitter error of the photosynchronous trigger signal of 0.4 ns. The light source illuminates the target object via a mirror (natural and medical images are taken as the target images here, with a size of 64 × 64 pixels and a physical size of 2.26 × 2.26 cm). The light reflected from the object passes through the lens onto the DMD modulator (Model V-7001). The light reflected from the DMD surface is received by the detector, a photomultiplier tube (PMT, Model H10721.01), and then transmitted to the acquisition card of the computer (Model M2I.2030. exp); finally, the image is formed on the computer by the correlation reconstruction algorithm. The experimental setup needs no spectroscopic or filter devices, which simplifies it, and the signal is received by a bucket detector, which greatly increases the utilization of the experimental equipment.

The images reconstructed by the different methods were evaluated with the SSIM and RMSE indexes, as shown in Table 2.

Table 2 shows that, for both natural and medical images, the reconstruction results of the proposed method have higher SSIM values and lower RMSE values than those of the GI and SML-GI algorithms. The reconstructed image therefore has better structural similarity with the ideal image, is closer to it in visual perception, and reproduces the target image with better visual characteristics and higher quality.

4 Conclusion

To obtain high-resolution images, this paper proposes a high-resolution reconstruction method for ghost imaging via SURF-NSML. First, a series of low-resolution images is produced by the ghost imaging system. These low-resolution images are then registered with the Speeded Up Robust Features (SURF) method and fused with the New Sum of Modified Laplacian (NSML) algorithm to obtain an initial high-resolution (HR) image. Finally, the initial HR image is further optimized with the image super-resolution reconstruction strategy based on flattening to obtain an HR image with better visual characteristics and more detailed information. This HR image reconstruction method requires no spectroscopic devices, filters or similar components, which simplifies the experimental equipment, and the signal is received by a bucket detector, which greatly increases the utilization of the experimental equipment. Natural-scene and medical images were reconstructed and evaluated with the SSIM and RMSE indexes, and the results were compared with those of the GI and SML-GI algorithms. The comparison shows that this method, which combines the device platform with a software algorithm, reconstructs the target image with higher quality and can help advance research on image reconstruction.

References

[1] S. Rohani, D. Allen, B. Gare, High-resolution imaging of the human incudostapedial joint using synchrotron-radiation phase-contrast imaging. J. Microsc. 277, 61–70 (2020)

[2] L. Haochang, Y. Zhao, Y. Guang, High resolution imaging based on photo-emission electron microscopy excited by deep ultraviolet laser. Acta Physica Sinica Chin. Edition 69, 096801 (2020)

[3] D. Lowe, Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60, 91–110 (2004)

[4] H. Hong, H. Qiqiang, L. Xiaojun, Hierarchical spatial pyramid max pooling based on SIFT features and sparse coding for image classification. IET Comput. Vis. 7, 144–150 (2013)

[5] S. Magdy, Y. Abouelseoud, M. Mikhail, Privacy preserving search index for image databases based on SURF and order preserving encryption. IET Image Process 14, 874–881 (2020)

[6] Y. Toda, H.H. Yz, T. Matsuno, Adaptive evolution strategy sample consensus for 3D reconstruction from two cameras. Artif. Life Robot. 25, 466–474 (2020)

[7] S. Xiaolong, W. Zhengyong, F. Yaoqing, Fast image fusion based on sum of modified Laplacian. Comput. Eng. Appl. 51, 193–197 (2015)

[8] M. Paul-Antoine, P.A. Morris, T. Ermes, Experimental limits of ghost diffraction: Popper’s thought experiment. Sci. Rep. 8, 13183 (2018)

[9] P. Moreau, E. Toninelli, T. Gregory, Ghost imaging using optical correlations. Laser Photon. Rev. 12, 1700143 (2018)

[10] P. Ryczkowski, C.G. Amiot, J.M. Dudley, Experimental demonstration of spectral domain computational ghost imaging. Sci. Rep. 11, 8403 (2021)

[11] W. Le, Z. Shengmei, Fast reconstructed and high-quality ghost imaging with fast Walsh-Hadamard transform. Photon. Res. 4, 240–244 (2016)

[12] Y. Yu, C. Wang, J. Liu, Ghost imaging with different frequencies through non-degenerated four-wave mixing. Opt. Express 24, 18290 (2016)

[13] Y. Ya, W. Chengyuan, L. Jun, Ghost imaging with different frequencies through non-degenerated four-wave mixing. Opt. Express 24, 18290–18296 (2016)

[14] Y. Hualong, Z. Leihong, Z. Dawei, Non-imaging target recognition algorithm based on projection matrix and image Euclidean distance by computational ghost imaging. Opt. Laser Technol. 137, 106779 (2021)

[15] Z. Xiaonan, F. Xiquan, W. Bowen, Multipath effect constraint of pseudo-thermal light source in ghost imaging. Opt. Commun. 425, 185–189 (2018)

[16] W. Heng, W. Ruizhou, L. Changsheng, Influence of intensity fluctuations on Hadamard-based computational ghost imaging. Opt. Commun. 454, 124490 (2020)

[17] X. Wang, Y. Tao, F. Yang, An effective compressive computational ghost imaging with hybrid speckle pattern. Opt. Commun. 454, 124470 (2019)

[18] Y. O-Oka, S. Fukatsu, Differential ghost imaging in time domain. Appl. Phys. Lett. 6, 111 (2017)

[19] A.M. Paniagua-Diaz, I. Starshynov, N. Fayard, Blind ghost imaging. Optica 6(4), 460 (2019)

[20] P.A. Moreau, E. Toninelli, P.A. Morris, Resolution limits of quantum ghost imaging. Opt. Express 26, 7528–7536 (2018)

[21] S. Liansheng, C. Yin, T. Ailing, An optical watermarking scheme with two-layer framework based on computational ghost imaging. Opt. Lasers Eng. 107, 38–45 (2018)

[22] S. Dongfeng, Z. Jiamin, H. Jian, Polarization-multiplexing ghost imaging. Opt. Lasers Eng. 102, 100–105 (2018)

[23] Y. Fei, C. Kehan, A.M. Iliyasu, Circuit-based modular implementation of quantum ghost imaging. IEEE Access 8, 23054–23068 (2020)

Acknowledgements

This research is supported by the National Natural Science Foundation of China (NSFC), grant 61775140.

Ethics declarations

Conflict of interest

We declare that we do not have any conflicts of interest related to the submitted work.

Cite this article

Ye, H., Kang, Y., Wang, J. et al. High resolution reconstruction method of ghost imaging via SURF-NSML. J. Korean Phys. Soc. 80, 964–971 (2022). https://doi.org/10.1007/s40042-022-00464-4
