Abstract

Electronic shopping is strongly influenced by the online reviews that customers post about product quality. Some fraudulent actors see this as an opportunity to write spam reviews that promote or demote a product’s reputation. Detecting such reviews is therefore essential for protecting the interests of users. To date, a number of studies have proposed ways to detect spam reviews and to provide genuine resources for customers and businesses. However, we found a few limitations in existing supervised approaches. First, most supervised approaches rely on manual labelling of reviews as spam or non-spam; because spam and genuine reviews look alike, manual labels cannot be considered authoritative. Second, the scarcity of spam reviews leads to a data imbalance problem. Third, computing similarities among reviews is computationally expensive. In this work, we propose a novel and robust spam review detection system that efficiently employs three features: (i) the sentiments of a review and its comments, (ii) a content-based factor, and (iii) rating deviation. To address the aforementioned limitations, we investigated these features only for a suspicious review list, which retains only those reviews that received comments from peer users. The proposed system achieved an F\(_1\)-score of 91%. It can be a valuable asset in spam detection, as it can be used as a stand-alone system to purify product review datasets.

1 Introduction

Online reviews of products and services play a significant role for both manufacturers and consumers, as they hold a large volume of user opinions and experiences [13, 24, 33, 39, 42, 46, 49]. A product with a high proportion of positive reviews attracts more customers and hence increases sales [41]. Conversely, a high proportion of negative reviews harms a product’s standing and leads to financial loss [15, 50]. Some fraudulent pretenders (Footnote 1) see this as an opportunity to mislead the system or its customers by posting spam reviews, either to elevate unpopular products or businesses or to demote popular, good-quality ones [2, 3, 22]. To accomplish this, they engage groups of individuals, also called spammers, to create not only synthetic positive reviews for their own products but also damaging negative reviews for competing products [32]. Customers often select the products with more positive opinions and are therefore misled by these untruthful reviews [12]. This affects the reputation of both the products and the e-commerce site, as customers may avoid purchasing from the website.

Reviews are usually short documents that shopping sites use to give customers an opportunity to rate and comment on products they have purchased. The objective is to determine whether a given review is spam or genuine. The task can be modelled as a two-class classification problem (Roy et al. [40]; Singh et al. [45]). Most existing works use a supervised machine learning approach to build the classifier. In this direction, researchers have mainly focused on extracting factors that improve classifier performance. The three major factors investigated, derived from review content, are (i) similarity among reviews [1, 16, 17, 21, 27], (ii) the author’s activeness [6, 24], and (iii) the author’s rating behaviour [6, 13]. Besides feature engineering, obtaining labelled data for training the classifier has also been a main concern. Without labels, a supervised model cannot be trained for a new task. Suppose we want to train a model to recognize spam reviews, and we have millions of reviews, some spam and some genuine. Something, either a human or a machine, must first teach the model which reviews are spam and which are genuine. Most existing works tried to label spam reviews that a human could detect. However, because spam and genuine reviews look alike, it is difficult for a person to categorise a review as spam or non-spam. Spammers can carefully craft a spam review that reads just like any innocent review, using the same language as an authentic reviewer. Moreover, in the current Internet era, where thousands of reviews are generated every day, manual labelling is very costly and time consuming [30]. For example, Mukherjee et al. [32] managed to label just 2431 reviews, which took them around 8 weeks. Therefore, one of the research challenges of review spam detection is the shortage of labelled datasets. To the best of our knowledge, for the product review domain there is only one publicly available dataset with a true gold standard (Footnote 2) [36, 37].

Most earlier studies used one of two labelling methods: (i) manual labelling, or (ii) an artificial corpus created through crowd-sourcing websites. In the former method, several researchers [28, 29, 32] employ two or more experts to label reviews manually as spam or non-spam and then calculate the agreement between the evaluators using Cohen’s Kappa (a measure of the degree of consistency between two or more raters). Based on the agreement, they decide whether to accept the labelling. However, deciding whether a review is spam or non-spam is not a trivial task for a human reader.

Another group of researchers tried to collect spam and genuine reviews from crowd-sourcing websites by paying people to write reviews artificially. Amazon Mechanical Turk is an example of such a crowd-sourcing website. The problem with these synthetic reviews is that they do not represent real spam reviews. According to Mukherjee et al. [34], the Turkers’ work was not of good quality because they lacked domain knowledge and put little effort into writing spam reviews, having little to gain from doing so.

In this paper, we investigate the problem of labelling reviews and propose a novel technique for annotating spam reviews automatically. According to techrepublic.com [48], in existing spam detection methods roughly 70% of the work lies in pre-processing and labelling the reviews. We therefore need a robust automated system for review labelling, so that the overall time taken by the system is reduced.

This paper uses the comment section of a review to label the review as either spam or non-spam. Some e-commerce sites have recently started accepting comments from other customers on posted reviews (as shown in Fig. 1). A comment may express the customer’s agreement with the review, a query about it, or disagreement with it. Other customers, including the reviewer, can reply to such a query. Users can also press “report abuse” if they find a comment inappropriate. A review usually receives a number of comments. Our assumption is that for genuine reviews, most comments carry a sentiment similar to that of the review, whereas for spam reviews most users may reject the reviewer’s view, so most comments carry a sentiment opposite to that of the review. In this work, we calculate the sentiment variation between a review and all of its comments. If the calculated variation is greater than a threshold value, we label the review as spam; otherwise, we label it as non-spam.

The main contributions of the paper can be summarized as follows. First, we exploit comment data to label reviews as spam or non-spam. Using the labelled data, we train a machine learning model to classify other, unlabelled data as spam or non-spam. To the best of our knowledge, this is the first effort to use the comment data associated with a review for labelling reviews as spam or non-spam. Second, we find that the labelled dataset is highly imbalanced, with spam as the minority class. To mitigate the effect of this imbalance, we use two over-sampling techniques to make the classes comparable. Finally, we employ several machine learning algorithms to design a spam detection model.

The remainder of the paper is organized as follows: Sect. 2 discusses related work; Sect. 3 presents the methodology of the proposed work; Sect. 4 reports the experimental results, which are discussed in Sect. 5. Finally, we conclude in Sect. 6.

2 Literature review

Spam detection has been studied extensively for e-mails and web pages [8, 10, 35]. In the customer review domain, spam detection research has begun to attract attention in recent years with the rapid growth of e-commerce [22, 31, 38]. Manually distinguishing spam reviews from genuine reviews is not a trivial task [20]. Hence, a number of supervised approaches have been proposed in the literature to separate spam reviews from genuine ones automatically [6]. In this section, we present a brief review of some notable research in this area. We divide the previous work into two categories: (i) detecting spam reviews, and (ii) detecting spammers.

2.1 Detecting spam reviews

According to Xie et al. [49], singleton reviews (Footnote 3) have a greater impact on a product’s rating and popularity. The authors used correlated temporal patterns for singleton spam review detection and discovered that singleton reviews are a significant source of spam reviews. Mukherjee et al. [33] raised the issue of pseudo fake reviews (Footnote 4), which are used extensively for spam detection. They compared fake review detection accuracy on pseudo fake reviews and on real fake reviews, and found 67.8% accuracy for fake review detection on Yelp’s real-life data. Li et al. [24] considered the posting order of reviews when detecting fake reviews. They first analysed six time-sensitive features based on review content and reviewer behaviour, and then applied two methods, one threshold-based and one supervised, to detect fake reviews as early as possible. Their findings showed that the supervised method achieved high precision and recall on fake reviews when training samples were available, whereas the threshold-based method achieved good accuracy without training samples. Crawford et al. [6] presented a comprehensive survey of spam review detection using various machine learning techniques. The survey explored the performance of various approaches to the classification and detection of review spam and also highlighted the scarcity of data in online review spam. It proposed several new research goals, one of which was the effect of big data analytics on review spam detection. Heydari et al. [13] raised three deficiencies inherent in supervised spam detection approaches: the data imbalance problem, rating deviation manipulated by spammers, and the complexity of content-based methods. To overcome these deficiencies, they proposed a pattern recognition technique in which the deficiencies were investigated within suspicious time intervals. They employed the author’s activeness, rating behaviour and the context similarity of reviews in each captured interval. They then calculated a spam score for all three factors and used it to decide whether a review was spam. Their approach also had a few limitations. First, the labelling of the dataset as spam or non-spam was not clear. Second, the authors focused only on selected time intervals for spam detection, although the presence of spam reviews in non-captured intervals cannot be ruled out.

Previous approaches mostly focused on conventional discrete features derived from linguistic and psychological cues. However, these methods were limited in their ability to preserve the contextual meaning of a sentence or document from a discourse viewpoint, which reduced performance. Ren and Ji [39] explored a deep neural network model that learns document-level representations for detecting spam opinions. First, the model used a convolutional neural network to learn sentence representations from a continuous bag-of-words model. Then, a gated recurrent neural network was used to model discourse information and to obtain a document representation from the combined sentence representations. Finally, these document vectors were used as input features to detect spam opinions. Ren and Ji [39] identified three advantages of using a neural network structure for spam detection over traditional approaches, including the following. First, feature extraction was automatic, which not only captured complex semantic information but also reduced human effort. Second, with word embeddings the raw text can be fed directly to the neural network, which alleviates the scarcity of annotated data to some extent. A similar, comparative approach was proposed in [23] for deceptive spam detection using document representation and feature combination. The authors verified that feature combination with unigrams outperformed feature combination with the proposed sentence-weighted neural network on a domain-independent dataset, whereas on a cross-domain dataset the effect was the opposite.

2.2 Detecting spammers

Some works first focus on finding spammers and then mark all the reviews written by them as spam. Jindal et al. [18] identified unusual review patterns [43] to detect suspicious reviewer behaviour. They defined a set of expectations and proposed corresponding unexpectedness measures. Each rule and group that exhibited unexpectedness was considered abnormal reviewer behaviour indicating spam activity. Lu et al. [28] proposed a factor graph model to detect fake reviews and fake reviewers simultaneously. They built a set of labelled fake reviews from an Amazon dataset and defined features between reviews and reviewers. They also identified the importance of each derived feature in detecting fake reviews and reviewers. Mukherjee et al. [32] proposed a novel collaborative method to detect spammer groups. The authors first discovered a set of candidate groups using frequent itemset mining, from which a labelled set of spammer groups was produced. Then, they used several behavioural models of groups, individual reviewers and the products they reviewed to detect fake reviewer groups. The authors suggested that spotting a fake reviewer group is considerably easier than spotting an individual fake reviewer or fake review.

A summary of the relevant research on spam review detection is presented in Table 1.

Most works that used a supervised approach for spam detection labelled the data manually. As the rest of this paper shows, labelling data automatically on the basis of sentiment mining gives better classifier accuracy.

3 Research methodology

This section describes the proposed methodology in detail. The flow diagram of the proposed approach is shown in Fig. 2. The five main phases of the proposed system are (i) data collection, (ii) data pre-processing, (iii) feature extraction, (iv) data labelling, and (v) classification. Each of them is explained in the following subsections.

3.1 Data collection

A total of 39,382 online product reviews were collected from Amazon.in using scraper software developed in Python by the authors. The examined products were taken from electronics categories: mobile phones, headphones and power banks. Data were collected from June 2017 to July 2017. For power banks, we collected 5080 reviews, which together received 55 comments. For mobile phones, we collected 29,126 reviews and 2322 comments. Similarly, for headphones we collected 5176 reviews and 62 comments. The number of comments is very low compared with the number of reviews for each product. There are two possible reasons for this. First, the comment section was introduced on Amazon only recently (in late 2016–2017), whereas the products have existed for longer. Second, and more importantly, a large portion of the reviews consist of just one or two lines that convey no information about the quality of the product, so other users do not read or comment on them. The collected data were grouped into review data and comment data. For review data we extracted the following fields: review id, review text, helpfulness votes, star rating, and number of comments. Similarly, for comment data we extracted the comment id, the comment text and the id of the review associated with each comment.

A detailed description of the data collection is shown in Table 2.

3.2 Data pre-processing

As the research aims to label reviews based on the sentiment variation between a review and its comments, we removed from the training review list those reviews that did not receive any comment from peers. The procedure for deriving the list of training reviews from the raw reviews scraped from Amazon is shown in Algorithm 1. After removing such reviews, the dataset size was reduced from 29,332 reviews to 2439 reviews. In addition, we removed the tags present in the scraped review texts and comment texts. Some reviews contained Unicode characters and product snapshots; we also cleaned this unwanted information from the dataset used in this study.
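The filtering and cleaning steps described above can be sketched as follows. This is a minimal illustration and not a reproduction of Algorithm 1: the record layout (`review_id`, `review_text`, `comment_text`), the helper names `clean_text` and `build_training_list`, and the choice to drop all non-ASCII characters are assumptions made for the example.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")  # matches markup tags such as <br> or <span ...>

def clean_text(text):
    """Decode HTML entities, strip tags, drop non-ASCII characters, tidy spaces."""
    text = html.unescape(text)
    text = TAG_RE.sub(" ", text)
    # Assumption: "Unicode characters" are removed by keeping ASCII only.
    text = text.encode("ascii", "ignore").decode("ascii")
    return " ".join(text.split())

def build_training_list(reviews, comments):
    """Keep only reviews that received at least one comment.

    reviews:  list of dicts with hypothetical keys 'review_id', 'review_text'
    comments: list of dicts with hypothetical keys 'review_id', 'comment_text'
    """
    comments_by_review = {}
    for c in comments:
        cleaned = clean_text(c["comment_text"])
        comments_by_review.setdefault(c["review_id"], []).append(cleaned)

    training = []
    for r in reviews:
        if r["review_id"] in comments_by_review:
            training.append({
                **r,
                "review_text": clean_text(r["review_text"]),
                "comments": comments_by_review[r["review_id"]],
            })
    return training
```

Grouping the comments by review id first keeps the filter to a single pass over each list, which matters when most reviews have no comments at all.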

3.3 Feature extraction

An important step was to extract effective features for training and testing the proposed spam detection system. We extracted seven features from the review data and comment data: (i) Rating, (ii) Review_Sentiment, (iii) Comment_Sentiment, (iv) Number_Comment, (v) Helpful_Votes, (vi) Avg_Cosine_Similarity and (vii) Rating_Deviation. A detailed description of each feature is given in Table 3.

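We used cosine similarity to calculate the Avg_Cosine_Similarity between reviews. In general, the cosine similarity between two documents indicates how similar they are. In our case, we took two reviews, say \(r_1\) and \(r_2\), and calculated the similarity between them, repeating this for every pair of reviews in the review list. The similarity between any two reviews can be found using the formula below, given in its standard form with \(\mathbf{v}_1\) and \(\mathbf{v}_2\) denoting the term vectors of \(r_1\) and \(r_2\):

\[\text{sim}(r_1, r_2) = \frac{\mathbf{v}_1 \cdot \mathbf{v}_2}{\lVert \mathbf{v}_1 \rVert \, \lVert \mathbf{v}_2 \rVert}\]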

Cosine similarity scores two documents on a scale of 0 to 1, where 0 means “no match” and 1 means “100% match”. For example, a similarity score of 1 between two reviews means that they are exactly alike.
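As an illustration of the pairwise computation, the sketch below scores reviews with cosine similarity over raw word counts. The whitespace tokenisation, the word-count vectors, and the definition of the per-review average (mean similarity to every other review) are assumptions for the example; the precise definition used for Avg_Cosine_Similarity is the one in Table 3.

```python
import math
from collections import Counter

def cosine_similarity(text_a, text_b):
    """Cosine similarity between two texts using word-count vectors."""
    a = Counter(text_a.lower().split())
    b = Counter(text_b.lower().split())
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text matches nothing
    return dot / (norm_a * norm_b)

def avg_cosine_similarity(reviews):
    """For each review, the mean similarity to all other reviews (assumed definition)."""
    scores = []
    for i, review in enumerate(reviews):
        others = [cosine_similarity(review, other)
                  for j, other in enumerate(reviews) if j != i]
        scores.append(sum(others) / len(others) if others else 0.0)
    return scores
```

Because every pair of reviews is compared, the cost grows quadratically with the number of reviews, which is the expense that restricting the analysis to a suspicious review list is meant to contain.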

3.3.1 Feature selection

The feature set used for training the classifier was different from the one used in testing, because training requires labelled reviews, and the labels in turn are based on the review sentiments and comment sentiments. The following features were extracted for training: (i) Rating, (ii) Review_Sentiment, (iii) Comment_Sentiment, (iv) Number_Comment, (v) Helpful_Votes, (vi) Avg_Cosine_Similarity and (vii) Rating_Deviation.

In a real-life scenario, we cannot wait until a review receives at least 5 comments from peer reviewers before checking its authenticity. Instead, we need an automated system that can detect spam reviews as soon as a review appears on the product’s review page. Therefore, for testing we selected features that are independent of review comments, so that the proposed system generalizes to existing reviews as well as new ones. Hence, we selected the following features for testing the system: (i) Rating, (ii) Helpful_Votes, (iii) Avg_Cosine_Similarity and (iv) Rating_Deviation.

3.4 Data labelling

In this work we propose the automatic labelling of reviews as spam or non-spam. In contrast, most earlier works used human labelling [1, 14]. The automatic labelling procedure is described below.

3.4.1 Automatic labelling

Automatic labelling of reviews as spam or non-spam is a two-step process. First, we performed sentiment analysis of the review text and its associated comment texts; then we labelled each review based on the difference between these sentiments. Sentiment analysis categorises the sentiment of a text as positive, negative or neutral. Most sentiment analysis tools report an overall polarity score and a subjectivity score for the text. Polarity is calculated from the number of positive, negative and neutral terms present in a document. It ranges from \(-1\) to \(+1\), where a negative sign (−) indicates negative sentiment and a positive sign (+) indicates positive sentiment; a polarity score of zero (0) means neutral sentiment. Similarly, the subjectivity of a sentence depends on its context and ranges from 0 to 1, where 0 denotes a neutral sentence and 1 an opinionated sentence. We developed Python code to calculate the sentiment of reviews, using the SentiWordNet package available in NLTK (Natural Language Toolkit) to compute the sentiment score. For each review, the system calculated its total positive (+) score and total negative (−) score, and the sum of the two gave the overall sentiment score. We calculated a sentiment score for each comment in the same way and then computed the average sentiment score over all comments associated with a review. We then checked the variation between the review sentiment and the average sentiment of its comments. If the variation was greater than the threshold t, we labelled the review as spam. Here we followed a voting-based principle for decision making: if more than a fraction t of the commenters opposed the reviewer’s opinion of the product’s quality, we considered the review spam. We set t to 0.6 in our experiments. The procedure for labelling the reviews is shown in Algorithm 2.
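The thresholding step can be sketched as below. This is an illustration under stated assumptions rather than a copy of Algorithm 2: sentiment scores are taken as already computed, the variation is assumed to be the absolute difference between the review score and the mean comment score, and the function name `label_review` is hypothetical.

```python
def label_review(review_score, comment_scores, t=0.6):
    """Label a review from its sentiment and the sentiments of its comments.

    review_score:   overall sentiment score of the review
    comment_scores: sentiment scores of the comments on that review
    t:              variation threshold (0.6 in the experiments)
    """
    if not comment_scores:
        raise ValueError("a review needs at least one comment to be labelled")
    avg_comment_score = sum(comment_scores) / len(comment_scores)
    # Assumption: variation is measured as an absolute difference.
    variation = abs(review_score - avg_comment_score)
    return "spam" if variation > t else "non-spam"
```

For example, a strongly positive review (0.8) whose comments average slightly negative (about −0.1) has a variation of roughly 0.9 and is labelled spam, whereas a review whose comments share its sentiment is labelled non-spam.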

3.4.2 Human labelling

Three human evaluators were recruited to examine the same 1332 reviews used in the automatic evaluation. Each evaluator was given an independent copy of the 1332 reviews and asked to annotate each review according to their expertise. If at least two of the three evaluators gave the same label to a review, we took that as the final label. For example, if two evaluators annotated a review as spam and one annotated it as non-spam, we considered the review spam. The label for each review was determined in this way.
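The two-out-of-three rule amounts to a majority vote, which can be sketched as follows (the function name `majority_label` is hypothetical).

```python
from collections import Counter

def majority_label(labels):
    """Return the label chosen by at least two of the three evaluators."""
    if len(labels) != 3:
        raise ValueError("expected exactly three evaluator labels")
    label, votes = Counter(labels).most_common(1)[0]
    if votes < 2:
        raise ValueError("no label was chosen by at least two evaluators")
    return label
```

With only two possible labels, three evaluators always produce a majority, so the second check only matters if more label values are introduced.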

3.4.3 Agreement measurement

To assess the agreement between automatic labelling and human labelling, the Cohen’s Kappa statistic [5] was used. Cohen’s Kappa was computed to evaluate the consistency of the two labelling methods. The results lie between 0.65 and 0.73, which indicates substantial agreement. The judgments of the two labelling methods were therefore consistent and effective. As a product receives many reviews and it is impossible for human evaluators to judge them all, we selected automatic labelling for the further experiments.
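For reference, the sketch below computes the unweighted form of Cohen’s Kappa for two label sequences, that is, observed agreement corrected for the agreement expected by chance. Treating the automatic labels and the human labels as two raters, and using the unweighted rather than the weighted statistic, are assumptions of this example.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Unweighted Cohen's Kappa between two equally long label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and equally long")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of the raters' marginal label frequencies.
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    categories = count_a.keys() | count_b.keys()
    expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)
```

Values between roughly 0.61 and 0.80 are conventionally read as substantial agreement, which is the band in which the reported range of 0.65 to 0.73 falls.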

3.5 Classification

We used supervised learning to detect spam reviews, treating the task as a classification problem that separates reviews into two classes. Three classification approaches were employed in the experiments: the Gradient Boosting (GB) classifier [9], the Random Forest (RF) classifier [25], and the Support Vector Machine (SVM) [47]. Classification was performed on 1332 reviews from Amazon.in. Because in real life the number of spam reviews is much smaller than the number of non-spam reviews, we took care of the data imbalance between the two classes. We performed the experiments in two phases: (i) classification with imbalanced data, and (ii) classification with balanced data.

4 Result

In this section, we perform experiments on the real dataset scraped from Amazon to validate the effectiveness of the approach presented in Sect. 3. The experiments were implemented in Python and conducted on a Linux operating system with an Intel processor and 8 GB of memory. The performance metrics used for classification were precision, recall, \(F_{1}\)-score and the Receiver Operating Characteristic (ROC) curve [7]. The first three can be evaluated using the following equations, given here in their standard form:

\[\text{Precision} = \frac{TP}{TP + FP}\]

\[\text{Recall} = \frac{TP}{TP + FN}\]

\[F_{1}\text{-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}\]

where True Positives (TP) are the correctly predicted positive values, meaning that the actual class is yes and the predicted class is also yes, e.g. the review is actually spam and the classifier predicts spam. True Negatives (TN) are the correctly predicted negative values, meaning that the actual class is no and the predicted class is also no, e.g. the review is actually non-spam and the classifier predicts non-spam. False positives and false negatives occur when the actual class contradicts the predicted class. False Positives (FP) occur when the actual class is no and the predicted class is yes, e.g. the review is actually non-spam but the classifier predicts spam. False Negatives (FN) occur when the actual class is yes but the predicted class is no, e.g. the review is actually spam but the classifier predicts non-spam.
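As a worked illustration of these definitions, the sketch below computes precision, recall and \(F_1\)-score for the target class from its confusion counts. The counts in the usage note are hypothetical and are not taken from the experiments; returning 0.0 when a denominator is zero is a convention assumed here.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1-score from confusion counts of the target class."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1
```

For instance, `precision_recall_f1(8, 2, 8)` gives a precision of 0.8 and a recall of 0.5, so the \(F_1\)-score is about 0.62. A classifier that never predicts the target class has TP = FP = 0, and all three values are reported as 0.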

In a ROC curve, the true positive rate is plotted as a function of the false positive rate for different cut-off points of the classifier. A ROC curve that passes through the upper left corner gives the best result; that is, the closer the ROC curve is to the upper left corner, the higher the overall accuracy of the test [51].
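The construction of a ROC curve can be sketched as follows: the cut-off is swept from the highest score downwards, and each cut-off contributes one (false positive rate, true positive rate) point. The area under the curve is then obtained with the trapezoidal rule. The function names and the encoding of the positive class as 1 are assumptions of this example.

```python
def roc_points(y_true, scores):
    """ROC points (fpr, tpr) obtained by lowering the cut-off step by step.

    y_true: 1 for the positive class, 0 for the negative class
    scores: classifier scores, higher meaning more likely positive
    """
    positives = sum(y_true)
    negatives = len(y_true) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("both classes must be present")
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        cutoff = ranked[i][0]
        # Items sharing the same score cross the cut-off together.
        while i < len(ranked) and ranked[i][0] == cutoff:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / negatives, tp / positives))
    return points

def area_under_curve(points):
    """Trapezoidal area under a curve given as ordered (x, y) points."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

A classifier that ranks every positive above every negative yields a curve through the upper left corner, (0, 1), and an area of 1.0.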

4.1 Classification with imbalance data

We checked the distribution of reviews after labelling them as spam or non-spam and found that the data were highly imbalanced between the two classes. Out of 1332 reviews, only 55 were labelled as spam and the remaining 1277 were non-spam. We performed the experiments on this imbalanced dataset, giving the features extracted from it as input to the classifiers. We split the dataset in a 3:1 ratio for training and testing the system. First, we performed the experiment with the RF classifier. The classification result is shown in Table 4.
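A 3:1 split of such an imbalanced label set can be sketched as below. Whether the original split was stratified by class is not stated, so stratification, the fixed random seed and the function name `stratified_split` are all assumptions of this example.

```python
import random

def stratified_split(labels, test_fraction=0.25, seed=0):
    """Split item indices into train and test sets, class by class.

    Each class contributes about `test_fraction` of its items to the test
    set, so the minority class is represented in both parts.
    """
    rng = random.Random(seed)
    indices_by_class = {}
    for index, label in enumerate(labels):
        indices_by_class.setdefault(label, []).append(index)

    train, test = [], []
    for indices in indices_by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)
```

Applied to 55 spam and 1277 non-spam labels, this sketch yields 999 training items and 333 test items, 14 of which are spam.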

In Table 4, our target class is Class 0, the spam class, whereas Class 1 refers to non-spam. The precision and recall values for Class 0 were 0.62 and 0.38, which are quite low. For Class 1, however, precision and recall were 0.95 and 0.98 respectively. The confusion matrix for Table 4 is shown in Fig. 3. The confusion matrix shows how the data are distributed between the classes. As can be seen from Fig. 3, Class 0 has just 13 reviews, whereas Class 1 has 151 reviews. This imbalanced dataset results in poor accuracy.

Later we repeated the experiment for the GB classifier and SVM. The results are shown in Table 5.

As can be seen from Table 5, precision for the target Class 0 increased to 0.88 with the GB classifier, which is quite good, but recall remained low at 0.44. Table 5 also shows the results for SVM. The SVM results on the imbalanced data were the worst, as it could not classify any review as spam.

4.2 Classification with balance data

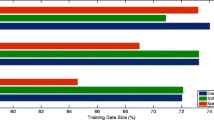

Next, we performed the experiment with balanced data. To balance the data we used two over-sampling techniques: (i) SMOTE [4] and (ii) ADASYN [11]. We then applied the classification approaches RF, GB and SVM in combination with SMOTE and ADASYN, forming the following six cases for the experiments:

- Case 1: RF with SMOTE
- Case 2: RF with ADASYN
- Case 3: GB with SMOTE
- Case 4: GB with ADASYN
- Case 5: SVM with SMOTE
- Case 6: SVM with ADASYN
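To make the over-sampling idea concrete, the sketch below implements the core step of SMOTE [4]: a synthetic minority sample is placed at a random position on the line segment between a minority sample and one of its k nearest minority neighbours. It is a simplified illustration and not the implementation used in the experiments; the function name, the default k and the fixed seed are assumptions. ADASYN [11] follows the same interpolation idea but generates more synthetic samples for minority points that are harder to learn.

```python
import math
import random

def smote(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic samples from minority-class feature vectors.

    minority: list of equally long lists of floats (minority-class samples)
    n_new:    number of synthetic samples to create
    k:        number of nearest minority neighbours to choose from
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest minority neighbours of the base sample (Euclidean distance).
        neighbours = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: math.dist(base, minority[j]),
        )[:k]
        other = minority[rng.choice(neighbours)]
        gap = rng.random()  # position along the segment base -> other
        synthetic.append([b + gap * (o - b) for b, o in zip(base, other)])
    return synthetic
```

Because each synthetic point lies between two existing minority samples, the new samples stay within the region already occupied by the minority class instead of merely duplicating existing rows.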

The results for all six cases on the testing datasets are shown in Tables 6, 7 and 8. Table 6 shows the results of RF after applying over-sampling. Similarly, Tables 7 and 8 show the results of GB and SVM, respectively, after applying over-sampling.

As can be seen from Table 6, the F\(_1\)-score with SMOTE is just 0.55, but with ADASYN it is 0.91. That is, the ADASYN over-sampling technique performed better and gave a higher F\(_1\)-score for the RF classifier.

Similarly, Table 7 shows that the F\(_1\)-score is 0.59 for Case 3 but 0.88 for Case 4. That is, the GB classifier also performed better with ADASYN over-sampling. Table 8 shows the results for the SVM classifier with SMOTE and ADASYN. In both Case 5 and Case 6, SVM performance is comparatively low, with F\(_1\)-scores of 0.49 and 0.65 respectively.

Therefore, the best performance for our target Class 0 was obtained in Case 2: the RF classifier with ADASYN over-sampling classified the spam reviews with an F\(_1\)-score of 0.91. The GB classifier with ADASYN over-sampling (Case 4) followed with an \(F_1\)-score of 0.88 (see Table 7). The confusion matrices of Case 2 and Case 4 are shown in Figs. 4 and 5.

We also measured the performance of the best cases, Case 2 and Case 4, in terms of the area under the ROC curve, as shown in Figs. 6 and 7.

5 Discussion

The three main highlights of the current research are: (i) automatic labelling of reviews as spam or non-spam using a sentiment mining approach, (ii) feature selection for the training and testing cases, and (iii) balancing the data across the two classes, spam and non-spam.

To the best of our knowledge, this is the first attempt to use the comment data associated with a review for labelling spam reviews. Most earlier works used manual labelling for this purpose [18, 32], and some authors [17, 26] used high-quality gold-standard labelled data. The current system calculated the sentiment variation between each review and its associated comments to label the reviews as spam or non-spam. Because it is very unlikely that all comments would oppose the review sentiment, we set the variation threshold t to 0.6. That is, if the sentiment difference was greater than 0.6, the review was labelled as spam. We also performed human labelling to check the agreement between automatic and human labelling. A Cohen’s Kappa analysis showed that the two labelling methods were in good agreement. We chose automatic labelling over human labelling because human capacity is limited and manual labelling is more time consuming, whereas an automated system can label thousands of reviews in very little time.

We used three classification approaches, RF, GB and SVM, to validate our proposed model. We performed the experiments in two phases: (i) classification with imbalanced data, and (ii) classification with balanced data. The imbalanced dataset yielded poor \(F_1\)-scores of 0.48 and 0.58 for the RF and GB classifiers respectively. The SVM results were the worst on our imbalanced dataset, as it could not classify any review as spam. We obtained the best result for RF with the ADASYN over-sampling technique, where the \(F_1\)-score was 0.91 for our target class, spam (Table 6).

To show the performance improvement, we compared our proposed approach with three earlier works, Lu et al. [28], Li et al. [24], and Heydari et al. [13], as shown in Table 9. Although various related works are available, to the best of our knowledge none of them considered the comment section of reviews when preparing their datasets; hence, we could not apply our proposed model to their datasets. We compared the approaches on the basis of the \(F_1\)-score. Lu et al. [28] used a factor graph model between reviews and reviewers and achieved an \(F_1\)-score of 0.3961 on a dataset of electronic products from Amazon. Li et al. [24] obtained an \(F_1\)-score of 0.851 on an Amazon dataset with the threshold-based spam detection method. Similarly, Heydari et al. [13] obtained an \(F_1\)-score of 0.86 on a dataset of the cameras and photos categories from Amazon.

6 Conclusion, limitations and future work

We introduced a novel sentiment mining approach for detecting spam reviews. For supervised spam detection, we needed labelled reviews to train the classifiers RF, GB and SVM. The labelling was done automatically, based on the sentiment variation between each review and its associated comments. We set the sentiment variation threshold to 0.6. That is, if 60% or more of the users commenting on a review showed the opposite sentiment, the review fell into the suspicious review list. Two over-sampling techniques were used to balance the classes before classifying reviews as spam or non-spam. We obtained the best results for RF with ADASYN over-sampling, with an F\(_1\)-score of 0.91. These results were slightly better than those of several existing models in terms of classifier accuracy.

Although the results are quite promising, the proposed system has a few limitations. First, we kept in the suspicious review list only those reviews that had received at least five comments from peer users. That means we did not consider the authenticity of reviews that did not meet this criterion. Second, the experiments were performed only on electronic products from Amazon. Future experiments can include products from other categories and from other e-commerce websites to make the system more general. Third, comment data were scarce: only a few reviews had received comments. In future, as the comment section becomes more popular, more reviews may start receiving comments, which might remove the need for over-sampling techniques in spam detection.

Notes

Fraudulent pretenders are those who tend to control and optimize the overall customer opinion about any product or service.

If a review is the only review written by its reviewer, we call it a singleton review.

Pseudo fake reviews are synthetic or non-genuine reviews generated through Amazon Mechanical Turk.

References

Algur SP, Patil AP, Hiremath P, Shivashankar S (2010) Conceptual level similarity measure based review spam detection. In: International conference on signal and image processing (ICSIP), pp 416–423

Banerjee S, Chua AY (2014) A theoretical framework to identify authentic online reviews. Online Inf Rev 38(5):634–649

Banerjee S, Chua AY, Kim J-J (2015) Using supervised learning to classify authentic and fake online reviews. In: Proceedings of the 9th international conference on ubiquitous information management and communication. ACM

Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP (2002) Smote: synthetic minority over-sampling technique. J Artif Intell Res 16:321–357

Cohen J (1968) Weighted kappa: nominal scale agreement provision for scaled disagreement or partial credit. Psychol Bull 70(4):213

Crawford M, Khoshgoftaar TM, Prusa JD, Richter AN, Al Najada H (2015) Survey of review spam detection using machine learning techniques. J Big Data 2(1):23

Davis J, Goadrich M (2006) The relationship between precision-recall and roc curves. In: Proceedings of the 23rd international conference on machine learning. ACM, pp 233–240

Drucker H, Wu D, Vapnik VN (1999) Support vector machines for spam categorization. IEEE Trans Neural Netw 10(5):1048–1054

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29(5):1189–1232

Gyöngyi Z, Garcia-Molina H, Pedersen J (2004) Combating web spam with trustrank. In: Proceedings of the Thirtieth international conference on Very large data bases. VLDB Endowment, vol 30, pp 576–587

He H, Bai Y, Garcia EA, Li S (2008) Adasyn: adaptive synthetic sampling approach for imbalanced learning. In: IEEE international joint conference on neural networks, 2008. IJCNN 2008. (IEEE world congress on computational intelligence), pp 1322–1328

Heydari A, Ali Tavakoli M, Salim N, Heydari Z (2015) Detection of review spam: a survey. Expert Syst Appl 42(7):3634–3642

Heydari A, Tavakoli M, Salim N (2016) Detection of fake opinions using time series. Expert Syst Appl 58:83–92

Hiremath P, Algur SP, Shivashankar S (2009) Web based quality assessment of customer reviews using quartile measure. Int J Recent Trends Eng 1(1):194–199

Ho-Dac NN, Carson SJ, Moore WL (2013) The effects of positive and negative online customer reviews: do brand strength and category maturity matter? J Mark 77(6):37–53

Jindal N, Liu B (2007) Review spam detection. In: Proceedings of the 16th international conference on world wide web. ACM, pp 1189–1190

Jindal N, Liu B (2008) Opinion spam and analysis. In: Proceedings of the 2008 international conference on web search and data mining. ACM, pp 219–230

Jindal N, Liu B, Lim E-P (2010) Finding unusual review patterns using unexpected rules. In: Proceedings of the 19th ACM international conference on information and knowledge management. ACM, pp 1549–1552

Kim S, Lee S, Park D, Kang J (2017) Constructing and evaluating a novel crowdsourcing-based paraphrased opinion spam dataset. In: Proceedings of the 26th international conference on world wide web. International world wide web conferences steering committee, pp 827–836

Kumar A, Singh JP, Rana NP (2017) Authenticity of geo-location and place name in tweets. In: Twenty-third Americas conference on information systems, Boston, pp 1–9

Lau RY, Liao S, Kwok RCW, Xu K, Xia Y, Li Y (2011) Text mining and probabilistic language modeling for online review spam detecting. ACM Trans Manag Inf Syst 2(4):1–30

Li J, Ott M, Cardie C, Hovy EH (2014) Towards a general rule for identifying deceptive opinion spam. In ACL 1:1566–1576

Li L, Qin B, Ren W, Liu T (2017) Document representation and feature combination for deceptive spam review detection. Neurocomputing 254:33–41

Li Y, Lin Y, Zhang J, Li J, Zhao L (2015) Highlighting the fake reviews in review sequence with the suspicious contents and behaviours. J Inf Comput Sci 12(4):1615–1627

Liaw A, Wiener M et al (2002) Classification and regression by randomforest. R news 2(3):18–22

Lim E-P, Nguyen V-A, Jindal N, Liu B, Lauw HW (2010) Detecting product review spammers using rating behaviors. In: Proceedings of the 19th ACM international conference on Information and knowledge management. ACM, pp 939–948

Lin Y, Zhu T, Wu H, Zhang J, Wang X, Zhou A (2014) Towards online anti-opinion spam: spotting fake reviews from the review sequence. In: IEEE/ACM international conference on advances in social networks analysis and mining (ASONAM), pp 261–264

Lu Y, Zhang L, Xiao Y, Li Y (2013) Simultaneously detecting fake reviews and review spammers using factor graph model. In: Proceedings of the 5th annual ACM web science conference. ACM, pp 225–233

Markines B, Cattuto C, Menczer F (2009) Social spam detection. In: Proceedings of the 5th International Workshop on Adversarial Information Retrieval on the Web. ACM, pp 41–48

MightyAi (2017) https://mty.ai/blog/5-reasons-you-shouldnt-label-training-data-in-house/. Accessed 5 Nov 2017

Mukherjee A, Kumar A, Liu B, Wang J, Hsu M, Castellanos M, Ghosh R (2013) Spotting opinion spammers using behavioral footprints. In: Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, pp 632–640

Mukherjee A, Liu B, Glance N (2012) Spotting fake reviewer groups in consumer reviews. In: Proceedings of the 21st international conference on World Wide Web. ACM, pp 191–200

Mukherjee A, Venkataraman V, Liu B, Glance N (2013a) Fake review detection: classification and analysis of real and pseudo reviews. Technical Report UIC-CS-2013–03, University of Illinois at Chicago, Tech. Rep

Mukherjee A, Venkataraman V, Liu B, Glance NS (2013b) What yelp fake review filter might be doing? In: ICWSM

Ntoulas A, Najork M, Manasse M, Fetterly D (2006) Detecting spam web pages through content analysis. In: Proceedings of the 15th international conference on World Wide Web. ACM, pp 83–92

Ott M, Cardie C, Hancock J (2012) Estimating the prevalence of deception in online review communities. In: Proceedings of the 21st international conference on World Wide Web. ACM, pp 201–210

Ott M, Choi Y, Cardie C, Hancock JT (2011a) Finding deceptive opinion spam by any stretch of the imagination. In: Proceedings of the 49th annual meeting of the association for computational linguistics: human language technologies, vol 1. Association for Computational Linguistics, pp 309–319

Ott M, Choi Y, Cardie C, Hancock JT (2011b) Finding deceptive opinion spam by any stretch of the imagination. In: Proceedings of the 49th annual meeting of the association for computational linguistics: human language technologies, vol 1. Association for Computational Linguistics, pp 309–319

Ren Y, Ji D (2017) Neural networks for deceptive opinion spam detection: an empirical study. Inf Sci 385:213–224

Roy PK, Ahmad Z, Singh JP, Alryalat MAA, Rana NP, Dwivedi YK (2017) Finding and ranking high-quality answers in community question answering sites. Glob J Flex Syst Manag 19:1–16

Saini S, Saumya S, Singh JP (2017) Sequential purchase recommendation system for e-commerce sites. In: IFIP international conference on computer information systems and industrial management. Springer, pp 366–375

Saumya S, Singh JP, Baabdullah AM, Rana NP, Dwivedi YK (2018) Ranking online consumer reviews. Electron Commer Res Appl 29:78–89

Saumya S, Singh JP, Kumar P (2016) Predicting stock movements using social network. In: Conference on e-Business, e-Services and e-Society. Springer, pp 567–572

Savage D, Zhang X, Yu X, Chou P, Wang Q (2015) Detection of opinion spam based on anomalous rating deviation. Expert Syst Appl 42(22):8650–8657

Singh JP, Dwivedi YK, Rana NP, Kumar A, Kapoor KK (2017) Event classification and location prediction from tweets during disasters. Ann Oper Res, pp 1–21

Singh JP, Irani S, Rana NP, Dwivedi YK, Saumya S, Roy PK (2017) Predicting the helpfulness of online consumer reviews. J Bus Res 70:346–355

Suykens JA, Vandewalle J (1999) Least squares support vector machine classifiers. Neural Process Lett 9(3):293–300

TechRepublic (2017) Is ‘data labeling’ the new blue-collar job of the AI era? https://www.techrepublic.com/article/is-data-labeling-the-new-blue-collar-job-of-the-ai-era/. Accessed 5 Nov 2017

Xie S, Wang G, Lin S, Yu PS (2012) Review spam detection via temporal pattern discovery. In: Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, pp 823–831

Zhu F, Zhang X (2010) Impact of online consumer reviews on sales: the moderating role of product and consumer characteristics. J Mark 74(2):133–148

Zweig MH, Campbell G (1993) Receiver-operating characteristic (roc) plots: a fundamental evaluation tool in clinical medicine. Clin Chem 39(4):561–577

Acknowledgements

The authors would like to acknowledge the Ministry of Electronics and Information Technology (MeitY), Government of India for the financial support during research work through Visvesvaraya Ph.D. Scheme for Electronics and IT.

Cite this article

Saumya, S., Singh, J.P. Detection of spam reviews: a sentiment analysis approach. CSIT 6, 137–148 (2018). https://doi.org/10.1007/s40012-018-0193-0

DOI: https://doi.org/10.1007/s40012-018-0193-0